?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

The understanding and acquisition of a language in a real-world environment is an important task for future robotics services. Natural language processing and cognitive robotics have both been focusing on the problem for decades using machine learning. However, many problems remain unsolved despite significant progress in machine learning (such as deep learning and probabilistic generative models) during the past decade. The remaining problems have not been systematically surveyed and organized, as most of them are highly interdisciplinary challenges for language and robotics. This study conducts a survey on the frontier of the intersection of the research fields of language and robotics, ranging from logic probabilistic programming to designing a competition to evaluate language understanding systems. We focus on cognitive developmental robots that can learn a language from interaction with their environment and unsupervised learning methods that enable robots to learn a language without hand-crafted training data.

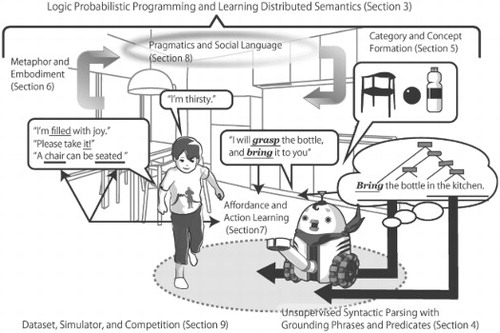

GRAPHICAL ABSTRACT

1. Introduction

Language acquisition and understanding are still linked to a wide range of challenges in robotics, despite significant progress and achievements in artificial intelligence (AI) through recent advances in machine learning during this decade. These advances have especially been in deep learning, fields related to language (such as machine translation), speech recognition, image recognition, image captioning, distributed semantic representation learning, and parsing. Although several studies demonstrated that an agent without pre-existing linguistic knowledge can learn to understand linguistic commands solely with deep learning and a labeled dataset, problems still abound. The actual linguistic phenomena that future service robots interacting with human users naturally in their daily lives will have to deal with are complex, diverse, dynamic, and highly uncertain. The goal of this study is to expose the scientific and engineering challenges clearly at the intersection of robotics and natural language processing (NLP) that must be solved to develop future service robots.

There are many reasons why language is still challenging in AI and robotics, as we describe later in this paper. Further exploration of this field should be conducted based on a clear understanding of the difficulties of the challenges. We would argue that the ad-hoc implementation of language skills in robots and an at-random exploration of the language system cannot lead to an appropriate understanding of the language used by humans, or to the creation of robots that can deal with language in the same way as humans. This is because language itself is dynamic, systemic, cognitive, and a social phenomenon.

The goal of this survey paper is to clarify the frontier, i.e. the challenges, in language and robotics by surveying achievements of the related research communities and linguistic phenomena that have been mostly ignored in robotics to date. Accordingly, we first conduct a background review of this research field and share our ideas on language and robotics, including thoughts on the importance of this research field. This is not only for building future intelligent robots, but also for understanding human intelligence and language phenomena. The definition of language may depend on the relevant academic field. In this paper, we use the term language to represent a natural language that we humans use in our daily lives, e.g. English or Japanese. Although it is sometimes argued that other animals have language-like systems, e.g. the syntactic structure of the songs of certain birds, this study focuses on human language. A language is a type of social sign system, such as gestures or traffic signs. Nonlinguistic social signs can be used as signs for social communication; e.g. nonverbal communication has been studied in social robotics for decades [Citation1–5]. However, we have excluded such signs from the scope of this survey and focused on natural language, which has syntax, semantics, and lexicons.

The remainder of this paper is organized as follows. Section 2 introduces the background of the research field of language and robotics, and points to seven distinct topics in the field. These seven topics are described in Sections 3–9, respectively, i.e. logic probabilistic programming and learning distributed semantics, unsupervised syntactic parsing with grounding phrases and predicates, category and concept formation, metaphorical expressions, affordance and action learning, pragmatics and social language, and resources and criteria for evaluation, i.e. dataset, simulator, and competition. Finally, Section 10 concludes this paper with a discussion and future perspectives.

2. Background

This section introduces the background for the research field of language and robotics and highlights seven distinct challenges in the field.

2.1. Language in robotics

Robots interacting with human users using speech signals try to behave correctly based on speech commands from the users in a real-world environment, which is full of uncertainty. To deal with the problems related to language understanding in a real-world environment, robot audition and vision have been studied to improve the robustness of automatic speech recognition and scene understanding for decades [Citation6–13]. However, challenges still abound in this field. Several studies have attempted to enable robots to understand the meaning of sentences. However, many applications still use manual rules, which only enable robots to understand a very small proportion of language. They also often have difficulty in dealing with uncertainty in language, e.g. treating speech recognition errors and the ambiguity of expressions.

The number of studies on language learning by robots has been increasing recently. One representative approach is based on deep learning. Several studies successfully enabled artificial agents to understand simple sentences using neural networks by composing linguistics, visuals, and other information based on supervised learning or reinforcement learning (RL) [Citation14–16]. Another representative approach has been taken in the field of symbol emergence in robotics [Citation17,Citation18]. Many types of unsupervised learning systems that can discover words, categories, and motions from sensor–motor information based on hierarchical Bayesian models have been developed. We must specifically highlight achievements in object category and concept formation by robots with cognitive scientists and linguists, as many parts of category formation discussed in cognitive science, typically nominal category formation, have already been modeled and reproduced in robotics (see Section 5). Of course, there are many types of categories and concepts. However, that which has been learned is still a very limited set of linguistic phenomena. For example, current robots cannot learn logic and reasoning, use metaphorical expressions, or infer a variety of concepts from abstract and functional words using a bottom-up approach.

To create robots that can communicate naturally with people in a real-world environment, e.g. offices and houses, we need to develop methods that enable them to process uttered sentences robustly in a real-world environment, despite inevitable uncertainties. We need to recognize that language is not a material object or a set of objective signals, but rather a dynamic symbol system in which the meaning of signs depends on the context and is understood subjectively. Further, language needs to be understood from a social viewpoint. Considering mutual (or shared) belief systems is indispensable to develop a theory of language understanding in communication [Citation19].

Symbol grounding is a long-standing problem in AI and robotics [Citation20], even though several researchers pointed out that the definition of the problem itself is somewhat misleading [Citation17,Citation18,Citation21,Citation22]. In all cases, it is important for a robot to ground symbols in their sensor–motor information, i.e. the perceptual world.Many studies related to the symbol grounding problem attempted to ground ‘words'. However, the meaning of a sentence is not the sum of the meanings of its words. Robots must ground the meaning of phrases and sentences. For this purpose, unsupervised learning of syntactic parsing with grounding phrases and predicates is also important. The meanings of words and phrases are not only determined by what they represent, but also by their relationship with other words. This is called distributional semantics. Therefore, learning distributional semantics is also crucial.

As we addressed in this section, creating a robot capable of ultimately communicating naturally with humans in a real-world environment is a great scientific challenge. To make progress in this field, we need to define appropriate tasks, and have an appropriate dataset. Considering the reality of the communication and collaboration to which the language contributes, these cannot be described as one-shot input–output information processing steps; instead, they involve continuous interaction. Therefore, a static dataset prepared by recording a series of interactions is insufficient for the study. However, using actual robots is not cost-efficient. Therefore, having a suitable simulator is important to accelerate such studies. This point is also addressed in the following section.

2.2. Robotics for language

The language that we, human beings, use is far more complex and structured than the sign systems that other animals use. The linguistic capability enables us to collaborate with other agents, i.e. leads to multi-agent coordination, and to form social norms and structures. Such magnificent capabilities gave humans the highest position among the species on earth. This language capability can be regarded as the fruit of our evolution and adaptation to the real-world environment. We can argue that language is meaningful in terms of adaptation and competition in a real-world environment. Furthermore, we can argue that the main functions of language are to enable people to communicate with each other and to represent objects, actions, events, emotions, intentions, and phenomena in the real-world, including the physical and social environment, to survive and prosper.

Therefore, it is crucially important to explore how language can help agents to adapt to the environment and collaborate with others to grasp its central function. However, researchers in the field of classical linguistics have not been able to address these problems, as stereotypical linguists have been focusing on written sentences alone. In studies of linguistics and NLP, real-world sensor–motor information has rarely been involved. However, currently, we can use robots with sensor–motor systems to experience multimodal information and perform real-world tasks. Employing robots will expand the horizon of linguistics. Cognitive linguistics introduced the notion of embodiment, leading to significant progress [Citation23–25]. However, so far, actual embodied systems have not been employed as ‘materials and methods’ in the study of language, despite embodiment and real-world uncertainty being crucial for intelligence. We believe that including robots in the study of language will broaden the horizon of cognitive linguistics as well.

The field of linguistics has mainly been focused on the language spoken or written by adults, i.e. learned and used language. NLP has mainly dealt with correct written sentences. However, humans can not only use but also learn a language. In the developmental process, input data received during the learning process is not written text data, but rather speech signals with multimodal sensor–motor information including haptic, visual, auditory, and motor information. Language learning needs to be performed in a real-world environment that is full of uncertainty. It is unrealistic to assume that an infant can acquire complete linguistic knowledge from speech signals alone. To model language acquisition, we need to deal with real-world information, including at least sensor–motor information. This means that at the minimum, a robot would be required for further study of the language.

In understanding a language, real-world multimodal information is essential as well. When one says, ‘please take it', to another person while pointing at an object, visual information is used to reduce uncertainty in the interpretation of ‘it’ (exophora). This indicates that many sentences require additional information, i.e. context, for interpretation. In practice, most context cannot be captured by considering written sentences alone. In various situations, the existence of real-world, i.e. embodied, information is essential to language understanding.

NLP has mainly been handling written text. NLP has led to many achievements, of course, as many linguistic phenomena and problems could be addressed using written text alone. However, many open questions, which cannot be solved solely with written text, remain in NLP. The NLP research community is also expanding in the direction of studies involving multimodal sensor information, e.g. multimodal machine translation, image and video captioning, and visual question answering [Citation26–29]. A new academic challenge regarding NLP using sensor–motor information in real-world environments, namely, language and robotics, is now being introduced.

Having an embodied system is crucial to the modeling of many linguistic phenomena. For example, the meaning of metaphors is based on cross-modal inference. Metaphors cannot be understood without the notion of embodiment. Robotics will be able to provide an appropriate model for metaphors by leveraging its multimodal servomotor information.

Affordance learning is also crucial for language understanding. The concept of a tool is linked to actions. For example, ‘chair’ cannot be defined without referring to the ‘sit down’ or ‘be seated’ action. Affordance learning has been studied in cognitive robotics in the past decade [Citation30]. This demonstrates that there is scope for robotics to contribute to language understanding.

2.3. Scope of survey on frontiers of language and robotics

We have pointed out several challenges and important topics in this section. Many challenges still abound at the frontier of language and robotics. To create a robot that can learn and understand language through natural interactions with human participants, as does a human infant, we must tackle the following problems.

Logic probabilistic programming and learning distributed semantics (Section 3)

Unsupervised syntactic parsing with grounding phrases and predicates (Section 4)

Category and concept formation (Section 5)

Metaphor and embodiment (Section 6)

Affordance and action learning (Section 7)

Pragmatics and social language (Section 8)

Dataset, simulator, and competition (Section 9)

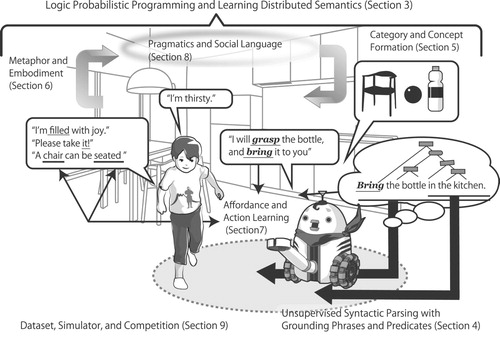

Although these are the topics that must be studied, they do not cover all the problems in language and robotics. We have intentionally chosen the topics that are related to language understanding and acquisition and have been relatively lacking in the context of studies of robotics and NLP to highlight its frontiers. We have excluded the topics that have been intensively studied. For example, we have excluded robot audition and vision studies. In addition, we have excluded nonverbal communication in social robotics as well, because we focus on natural language in this survey. Figure summarizes the issues in an illustration. We describe their current status and more detailed challenges in the following sections.

3. Probabilistic logic programming and distributed representations

As exemplified by Sherlock Holmes, humans can predict what happens next, or what happened before, by combining observed facts with their knowledge of the world. The ability to draw a conclusion with reasoning, henceforth, inference, is one of the crucial components of future intelligent robots [Citation31].

For example, in Figure , the robot brings a bottle, responding to the user utterance ‘I'm thirsty ’. In order to achieve this, the robot needs to infer that it should bring the bottle, based on the user utterance and problem-solving knowledge, e.g. ‘a bottle of water can solve thirstiness', and ‘there is a bottle of water in the kitchen'. Even if the robot can recognize the sentence spoken and has knowledge, it may not be capable of doing this if it does not have the ability to derive a conclusion by combining these types of information.

Conventional studies of logic programming (LP) have been focused on this issue. However, developing methods that enable a robot to learn logic programs and distributed representations on a large-scale knowledge base via sensor–motor experiences in a real-world environment is still a challenge.

This section discusses the latest advances in reasoning in two areas, LP and distributed representations in NLP, and points to future challenges.

3.1. Probabilistic logic programming

3.1.1. Logic programming

LP is essentially a declarative programming paradigm based on formal logic. LP has its roots in automated theorem proving, where the purpose is to test whether or not a logic program Γ can prove a logical formula, or query, ψ, i.e. or

. For computational efficiency, the language used in logic programs is typically restricted to a subset of first-order logic (e.g. Horn clauses [Citation32]). From a reasoning perspective, LP serves as an inference engine. Hobbs et al. [Citation33] propose an Interpretation as Abduction framework, where natural language understanding is formulated as abductive theorem proving. In this context, a logic program Γ is a commonsense knowledge base (e.g.

) and a query ψ will be a question that is of interest (e.g.

).

Typically, reasoning involves a wide variety of inferences (e.g. coreference resolution, word sense disambiguation, etc.), where the inferences are dependent on each other. Thus, it is difficult to define the types of inferences that should precede in advance algorithmically. Although there is a wide variety of approaches to implementing reasoning, ranging from conventional, feature-based machine learning classifiers such as logistic regression to modern deep learning techniques, LP provides an elegant solution to this issue, by virtue of its declarative nature. In declarative programming, all that is required to solve a problem is to provide general knowledge around problem-solving; procedures on how to actually solve this specific problem are not needed. For example, for Sudoku, we would write rules such as ‘for all ', where

represents a cell value at the ith row and jth column, and then simply run the inference engine. In the literature, a number of LP formalisms have been proposed such as Prolog, Answer Set Programming [Citation34], where the expressivity of logic programs and their logical semantics, etc., are different from each other.

3.1.2. Reasoning with uncertainty

Conventional LP cannot represent uncertainty in knowledge. For example, a rule such as ‘If it rains, John will be absent from school with the probability of 60%’ is not representable in LP. To solve this problem, probabilistic logic programming (PLP), a probabilistic extension of LP, has been developed. A wide variety of formalisms has been proposed, such as PRISM [Citation35], stochastic logic programming [Citation36], and ProbLog [Citation37]. In the field of statistical relational learning, certain logic-based formalisms have also been proposed such as Markov logic [Citation38] and probabilistic soft logic [Citation39]. Most of the popular PLP formalisms to date are based on distribution semantics (DS), proposed by Sato [Citation35].

We briefly provide an overview of DS. DS introduces a probabilistic semantics for logic programs. The idea is as follows. We assume that a logic program Π consists of facts F and rules R (i.e. ). Consider a probabilistically sampled subset of facts

, according to some probability distribution, and a logic program

. We then derive a logical consequence, e.g. in terms of a minimal model. After we repeat the sampling many times, we obtain a set of logical consequences (or interchangeably, interpretation or truth assignment) from the sampled program in terms of some LP semantics, e.g. minimal model semantics. In DS, the probability mass is distributed over these logical consequences. Let

be a set of all such logical consequences, namely

. In DS,

is set to be 1. The probability function of I can be designed arbitrarily.

One instantiation of DS is ProbLog [Citation37]. The basic syntax of ProbLog resembles that of Prolog. As an LP semantics, ProbLog employs a well-founded model [Citation40] and assumes that the program given is a locally stratified normal logic programs; under a locally stratified LP, a well-founded model coincides with the minimal model and the stable model. One important extension is probabilistic fact denoted by p::f, which means that fact f is selected with probability p and not selected with probability 1−p. A ProbLog program consists of three components: (i) facts F, (ii) probabilistic facts , and (iii) rules R. Assuming probabilistic independence over the probabilistic facts, the probability of interpretation I is defined as follows:

(1)

(1) where

is a family of sets of probabilistic facts that has I as a logical consequence, that is,

. ProbLog also has several strong, built-in mechanisms to perform efficient inference, e.g. computing a marginal probability and a maximum a posteriori (MAP) inference, and learn its probabilistic parameters [Citation41].

3.2. Reasoning with distributed representations

Representing the meaning of words, phrases, or even sentences using low-dimensional dense vectors is shown to be effective in a wide range of NLP tasks, such as machine translation, textual entailment, and question answering [Citation42–45, etc.]. Such representations are called distributed representations. The benefit of distributed representations is that they allow us to estimate the proximity of meaning based on vector similarity. In the field of reasoning, researchers started to leverage distributed representations in the reasoning process. This section discusses two recent advances in reasoning leveraging distributed representations: automated theorem proving and knowledge base embedding.

3.2.1. PLP with distributed representations

One weakness of PLP is that a symbol used in logic programs is assumed to represent a unique concept. Consider the logic program {grandfather

happy}. Under the above assumption, PLP does allow us to deduce happy given that grandfather holds; however, given that grandpa holds, it does not allow us to deduce happy, regardless of the conceptual similarity between these two symbols. If we could exhaustively enumerate ontological axioms in the world, i.e. grandpa

grandfather in this case, then this would not be a problem. However, this assumption is impractical from a knowledge engineering perspective.

To overcome this weakness, researchers embed PLP, or other logic-based formalisms, into a continuous space using advances in distributed representations [Citation46–52, etc.], which is an active topic in both the NLP and machine learning research communities. For instance, Rocktaschel and Riedel [Citation52] propose a Prolog-style theorem prover, i.e. selective linear definite clause (SLD) derivation, in a continuous space. Given a goal (or query), SLD derivation subsequently attempts to prove the goal (or subgoal) by unifying it with the head of a rule in the knowledge base. However, as mentioned previously, the unification of a goal grandfatherOf(John, Bob) and head grandpaOf(John, Bob) fails even when these are semantically similar.

To solve this problem, Rocktaschel and Riedel [Citation52] employ soft unification based on the similarity of predicates and constants instead of hard pattern matching. The proposed theorem prover returns a score representing how successful the proof is, instead of whether the proof is successful or not. Specifically, predicates and constants occurring in a knowledge base are embedded into a continuous space by assigning a low-dimensional dense vector to them. When proving a goal, the similarity between a goal and a rule head in the knowledge base is calculated. Because unification is always successful, the proof process is performed up to depth d. The vectors representing predicates and constants are tuned such that a query that can be proven by the knowledge base receives a higher score.

3.2.2. Embedding knowledge into continuous space

Embedding a knowledge base into a continuous space has received much attention in recent years [Citation53–56]. The most basic and simple form of knowledge embedding methods is TransE [Citation57].

We assume that a knowledge base contains a set of triplets (h,r,t), where h,t represent an entity and r represents a relation between the entities (e.g. (Tokyo, is_capital_of, Japan)). TransE represents each entity and relation as a point in a n-dimensional continuous space. The core idea of TransE is that for each triplet in a knowledge base, the corresponding vector representations

can be learned by minimizing the following loss function:

(2)

(2) where γ is a margin,

represent a set of triplets contained, or not contained, in a knowledge base K, respectively, and

.

(or L1 norm), represents the quality of triplet

based on the corresponding vectors. During training, for a triplet

contained in a knowledge base,

becomes closer to

.

These learned distributed representations can be used for automated question answering, for instance. For example, consider a question ‘Where was Obama born?'. Let the distributed representation of Obama and is_born_in be . Let the distributed representation of an arbitrary entity t be

. To answer the question, we need to find an entity t that maximizes

. Compared to simple pattern matching, the advantage here is flexibility—the system can answer the question in a situation where (Obama, is_born_in, ·) does not exist in the knowledge base, but similarly related instances such as (Obama, is_given_birth_in, ·) do.

3.3. Challenges in PLP and distributed representations

Despite recent advances in the community, many obstacles remain to integrate logical inference within robots in the real world.

First, in the work we have seen so far, the model of the world is not grounded in the real world. Second, the vocabulary set, i.e. predicates and terms, used for representing observations and a knowledge base is predefined by the user. Third, the knowledge acquisition bottleneck is still present. The use of distributed representations partially solves this issue, i.e. through ontological knowledge; however, a separate mechanism is required for other types of knowledge, e.g. relations between events such as causal relations. Therefore, the following challenges remain as open questions:

Developing a method for logical inference where the model of the world is grounded on continuous and constantly changing real-world sensory inputs.

Developing a mechanism to associate new concepts emerging from inputs with existing predicates, i.e. ‘packing’ similar concepts from robots' perceptions and ‘labeling’ them.

Enhancing purely symbolic logical inference with causal inference in the physical world, e.g. using a physics simulator.

4. Unsupervised syntactic parsing with grounding phrases and predicates

To conduct the logical inferences described earlier, syntactic parsing should be perfected in advance to be suitable for real-world communication. For example, the robot in Figure is inferring the latent syntactic structure of the sentence given, and understands it needs to bring ‘the bottle’, not ‘the kitchen'. Syntactic parsing is indispensable for semantic parsing, semantic role identification, and other semantics-driven tasks in NLP. Syntactic parsing can essentially be categorized as follows, in the current practice of NLP [Citation58]: (a) dependency parsing, (b) constituent parsing, such as context-free grammars (CFG), tree adjoining grammars (TAG), and (c) combinatory categorial grammars (CCG).

Dependency parsing has become widespread by virtue of its simplicity and universality, and downstream tasks are often designed assuming dependencies. However, as it can be converted into a specific form of CFG [Citation59], constituent parsing such as CFG is still a key issue. Furthermore, because CCG can also handle information that cannot be dealt with using other formalisms (such as λ-calculus expressions), CCG has attracted much attention of late and much research has emerged around CCG, following [Citation60].

4.1. Unsupervised and supervised parsing

Learning a grammar is straightforward if equipped with a corpus of annotated ground truth trees, i.e. data labels for training a syntactic parser. However, in the realm of robotics, we need the unsupervised learning of grammars to be flexible and to fit the utterances of users. Indeed, aside from developmental considerations, users often speak in a way that cannot be handled by a predefined grammar, which is usually based on written text prepared in a different environment. Such colloquial expressions are especially characteristic of robotics. Unsupervised models are also necessary for semi-supervised learning as a prerequisite of using existing grammars [Citation61,Citation62]. Below, we describe the current status of the aforementioned three approaches to parsing.

4.1.1. Dependency parsing

The most basic model of unsupervised dependency parsing was first introduced by Klein and Manning [Citation63] and was referred to as the dependency model with valenceFootnote1 (DMV). DMV is a statistical generative model that yields a sentence by iteratively generating a dependent word in a random direction from the source word in a probabilistic fashion. DMV has been improved markedly through many studies since [Citation64–66]. For example, Headden et al. [Citation64] introduced enhanced smoothing and lexicalization based on the Bayesian treatment of equivalent probabilistic CFG. Spitkovsky et al. [Citation65] controlled the data fed to the inference algorithm from straightforward to complex, mimicking a baby learning a language, and yielded better accuracy than a simple batch inference. Jiang et al. [Citation66] recently leveraged neural networks to deal with possible correlations in grammar rules, defining the state of the art as an extension of a basic DMV.

However, dependency parsing has limitations: the most prominent issue is that large syntactic structures such as relative clauses or compositional sentences cannot be recognized. For example, ‘that…’ in ‘it is true that…’ contains a sentence, but dependency parsing just attaches ‘that’ to the head of the sentence and cannot recognize the fact that the term ‘that’ is not a pronoun here but introduces a relative clause. For this purpose, constituent parsing is a better alternative, as described below.

4.1.2. Constituent parsing

Constituent parsing is a general term referring to a model that assigns hierarchical phrase structures to a sentence. The most basic of these is CFG and its probabilistic extension, probabilistic CFG (PCFG) [Citation67]. For example, PCFG decomposes a sentence (S) into a noun (N) and a verb phrase (VP), VP in turn decomposes into a verb (V) and N, and finally N and V are substituted with actual words such as ‘she plays music'. This approach has a long history in the field of NLP, and many extensions and inference methods have been proposed [Citation58,Citation67].

However, unsupervised learning of PCFG is a notoriously difficult problem, because we usually only need to find few valid parses of a sentence within possibilities, where N is the length of the sentence and K is the number of nonterminal symbols, i.e. syntactic categories. Therefore, inference in unsupervised PCFG induction is quite prone to being trapped by local maxima, and thus has been avoided for a long time. Johnson et al. [Citation68] recently proposed a novel MCMC sampling scheme for this problem that avoids local maxima by using a Bayesian inference on PCFG induction. For simplicity, these studies on PCFG parsing assumed part-of-speech (POS) tags, i.e. preterminals, as input. Pate and Johnson [Citation69] showed that using a word itself instead of a POS improves parsing accuracy; Levy et al. [Citation70] employed a sequential Monte Carlo method for the online inference of grammars, which resembles actual situations found in robotics research.

4.1.3. CCGs

Although CFG recognizes phrase structures, it is still limited, because these structures only have a symbolic meaning. For example, a rule S NP VP merely states that the symbol ‘NP’ can be decomposed into a pair of symbols ‘NP’ and ‘VP', which has nothing to do with the fact that the actual words it governs are nouns or verbs. Therefore, the CFG analysis of sentence inevitably becomes a type of hierarchical POS tagging, i.e. a combinatorial process to yield preterminals such as N or V.

CCG [Citation60] is a formalism that does not suffer from this issue: all phrase structures in CCG are functions and derived from the bottom. For example, because VPs require a noun phrase (NP) to be an S, a VP is actually denoted by Footnote2 instead of a distinct, and meaningless, symbol VP. An NP is also an artifact that possibly takes a determiner (DT) to function as an N, thus denoted by

; therefore a VP is finally denoted as

. CCG was introduced to NLP around 2002 [Citation71], and supervised learning was readily available. By contrast, the unsupervised learning of CCG was only introduced in 2013 [Citation72] using a framework of hierarchical Dirichlet processes (HDP) [Citation73]. From a statistical perspective, it has a clear advantage over an unsupervised PCFG also using HDP [Citation74], in that it simply utilizes an infinite-dimensional vector of probabilities as opposed to a matrix of infinite × infinite dimensions. Martínez-Gómez et al. [Citation75] recently introduced ccg2lambda, which combines CCG parsing with a λ-calculus to enable an inference on textual entailment. It also has the advantage of handling ambiguities by virtue of a statistical formulation using logistic regression.

4.2. Semantic parsing and grounding

Once these syntactic analyses of the sentence are available, we can associate them with external information. This process is sometimes called ‘grounding’ and is also studied in the field of NLP [Citation76]. The term ‘grounding’ is related to the symbol grounding problem [Citation20]. However, the symbol grounding problem does not concern the interpretation of symbols, i.e. semiosis, or language understanding [Citation18]. The ‘grounding’ here could be rephrased as ‘language understanding using sensory–motor information'. Semantic parsing and ‘grounding’ by robots, i.e. sensory–motor systems, that can associate syntactic structure with dynamic information, is important for language acquisition and understanding in a real-world environment.

In a discrete case, Poon [Citation77] leverages a travel planning database called ATIS and associates nodes and edges in a syntactic tree with the database. This is essentially a nested hidden Markov model (HMM), based on unsupervised semantic parsing [Citation78] that automatically clusters each predicate in a tree by maximizing the likelihood of a sentence computed by a Markov logic network. It has the clear advantage of abstracting away various possible linguistic expressions with respect to the database; however, the database must be given in advance and usually has a narrow scope. Because of its discrete nature, this approach cannot discern subtle differences in linguistic expression and adjust the actual behavior of the robots accordingly.

In a continuous case, there is an abundance of research connecting linguistic expressions with images [Citation79–82]. As an example more closely inspired by robotics, [Citation83] and its extension [Citation84] aim to discriminate which predicates are applicable to a given object, such as ‘lift’ and ‘move', but not ‘sing', for a box. To solve this problem, the former employs consensus decoding and the latter uses a mixed observability Markov decision process (MDP) leveraging sensory information. Although these works connect linguistic expression with sensory information, this content, such as imagery, is usually static and the objective is discriminative. Moreover, the candidate predicates are known in advance, and thus the approach does not cover broad linguistic expressions in general.

Note that we are not insisting on ‘grounding’ any word or phrase on the sensory–motor information provided by robots, i.e. external information. Sensory–motor information provides the cognitive system of a robot with observations, e.g. visual, auditory, and haptic information. However, many words representing abstract concepts cannot be directly ‘grounded’ on such sensory–motor information. For example, we cannot determine a proper probabilistic distribution of sensory–motor information for ‘in', ‘freedom’, or ‘the'. Even a verb can be considered as an abstract concept. ‘Through’ can represent different trajectories or a controller depending on target objects. Even though ‘in’ is abstract, ‘in front of the door’ seems concrete and more conducive to an association with sensory–motor information. Semantic parsing with real-world information and finding a way to handle abstract concepts is an important challenge.

4.3. Challenges in unsupervised syntactic parsing with grounding phrases and predicates

Studies involving the unsupervised learning of syntactic parsing in robotics are still in the preliminary stage. Attamimi et al. developed an integrative robotics system that can learn word meaning and grammar in an unsupervised manner [Citation85]. However, this approach relies on HMM, which does not have a hierarchical structure, for modeling grammar. Aly et al. introduced an unsupervised CCG to enable a robot to find the syntactic role of each word in a situated human–robot interaction [Citation86].

In connection with the topics described in this section, we identified the following challenges:

Enabling robust unsupervised parsing of colloquial or nonstandard sentences with the help of multimodal information obtained from robots.

Associating syntactic structures and substructures (such as those in CCG) with sensor–motor information for grounded language interpretation and generation.

Developing a machine learning algorithm to associate the predicates in semantic parsing with a distributed meaning representation in robots, organized based on sensor–motor information.

5. Category and concept formation

The ability to categorize and to conceptualize is essential to living creatures. A creature may not to survive if it is not capable of distinguishing beneficial items from harmful ones including, with regard to food, whether it is edible or not. Humans have categorized objects, actions, events, emotions, intentions, and phenomena. In addition, we label these using language. Thus, it is reasonable to say that language reflects the way we think and perceive the world around us, and considering linguistic categories is an effective way to comprehend the human ability to categorize and the way robots should operate in this respect. To understand human language, a robot needs to be able to categorize objects and events, and to handle concepts. In Figure , the robot grasps the concepts of ‘chair', ‘bottle’, and ‘ball', and can understand utterances from the user. Of course, the robot needs to understand ‘grasp', ‘bring’, ‘thirsty’, and ‘joy’ as well. During this decade, unsupervised categorization and concept formation have been studied in robotics [Citation17]. This section describes the foundation of category and concept formation, the current state of robotics, and future challenges.

5.1. Linguistic categories

5.1.1. Similarities and differences

A linguistic category represents ‘the conceptualization of a collection of similar experiences thatare meaningful and relevant to us [Citation87]'. The existence of such categories proves that humans have the ability to find similarities between objects. For instance, whether a car is manufactured by Toyota, Honda, or Renault, we can categorize it as a ‘car’ as long as it fits the conceptualized form of a ‘car'. However, if a vehicle accommodates a group of people, then we would think of it as a ‘bus'. This leads to the following questions: Can a robot categorize ‘similar’ items into a group? Can a robot draw a line between linguistic categories such as a ‘car’ and a ‘bus?’

It should be noted here that the lines between categories are by no means definitive. While traditional semantics presupposes binary features (see [Citation88] for an overview), current trends in cognitive semantics consider a prototype with both central and peripheral members in each category (cf. [Citation89]).

We should also point out that categories are related to language [Citation90]. English speaking people distinguish a ‘bush', i.e. short tree, from a ‘tree’ in a daily context, but Japanese people usually do not. Conversely, there is only one word for ‘flatfish’ in English, whereas Japanese people delineate between two types of flatfish, namely ‘karei’ and ‘hirame'. Separate cultures and communities may consider distinct categories and concepts. This implies that there may even be separate categories for robots, as they have a completely different sensory system from human beings.

5.1.2. Taxonomy and partonomy

Linguistic categories often exhibit hierarchical relations [Citation87]. This type of lexical relation is called taxonomy. For example, ‘cucumbers', ‘cabbages’, and ‘onions’ are considered members of the ‘vegetable’ category. Similarly, ‘dogs', ‘cats’, and ‘horses’ are grouped in the category of ‘animals', These superordinate terms are usually abstract notions; no specific entity is labeled as a ‘vegetable’ or an ‘animal'. This leads to another question: Can a robot form abstract categories on top of concrete groups?

Another type of lexical relation is called partonomy, where one word denotes a part of another. Winston et al. [Citation91] proposes six types of partonomic relations, including a component and integral object, e.g. a ‘cup’ and a ‘handle'; a member and a collection, e.g. a ‘tree’ and a ‘forest'; and a material and an object, e.g. ‘steel’ and a ‘bike'. This naturally leads to the following question: Can a robot identify components, or ingredients, of an object?

5.1.3. Semantic network via frame

Words have semantic relations in relation to a frame or scene [Citation92,Citation93]. For example, a ‘menu', ‘dish’, ‘knife’, and ‘fork’ are linked through a designated ‘restaurant’ frame, and a ‘plane', ‘train’, ‘hotel’, and ‘camera’ are linked through a ‘travel’ frame. The important distinction from other types of linguistic categories is that words can be categorized based on human activity and social customs. The link between ‘hotel’ and ‘camera’ is by no means linguistic but rather it is situational and even subjective. A question then arises as to whether a robot can understand these semantic networks.

Note that a word can belong to several frames; ‘knife’ can be viewed as a member of the restaurant frame when used for eating, whereas it can also be a weapon and linked to words such as ‘sword’ and ‘arrow’ when used in the context of fighting.

5.1.4. Abstract concepts and ad-hoc categories

In many studies discussing concepts and categories, we tend to exhibit a nominal bias, i.e. we tend to think of nouns. Many concepts and categories corresponding to nouns, e.g. objects, places, and movements, are observable and a statistical categorization method can be a constructive model with a categorization and conceptualization capability. However, forming abstract concepts, e.g. ‘in', a preposition, ‘use', a verb, and ‘democracy', a conceptual word, requires other mechanisms and is important in many senses [Citation94]. In daily language, we tend to use an abundance of abstract words. Therefore, enabling a robot to grasp abstract concepts is also important [Citation95,Citation96].

Moreover, many categories are not static but dynamic. People can form so-called ad-hoc categories instantly based on the situations they face [Citation97]. From this viewpoint, categorization may even be considered as a type of inference. How to model the learning capability for ad-hoc categories in the cognitive system of a robot is another question.

5.2. Multimodal categorization

5.2.1. Concept formation by robots

To enable robots to implement concept formation, several studies assume that concepts are internal representations related to words or phrases that enable robots to infer categories into which they may classify sensory information. Regarding categorization, image classification using deep neural networks has been widely studied [Citation98–102]. These studies use a large amount of labeled data and achieve very accurate object recognition. However, humans do not use such labeled data and it is important that the concepts are formed in an unsupervised manner. Studies on unsupervised image classification have also been conducted [Citation103–108]. However, the importance of multimodality in concept formation has been recognized [Citation109] and the difficulty in forming human-like concepts using single modality has been acknowledged. In studies using multimodal information, methods to learn relationships among modalities by using nonnegative matrix factorization and neural networks have been proposed [Citation110–117]. In these studies, the learned latent space can be interpreted as representing concepts.

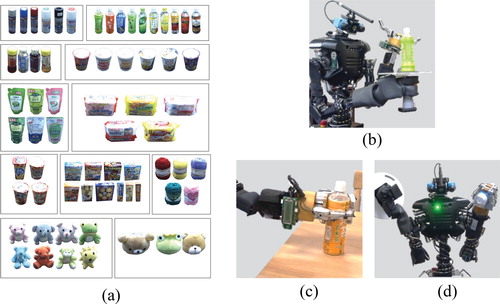

Several methods have been proposed to classify multimodal information into categories in an unsupervised manner using stochastic models. Methods based on latent Dirichlet allocation (LDA) [Citation118], initially proposed for unsupervised document classification and LDA, were extended to a multimodal LDA (MLDA) [Citation119] for the classification of multimodal information. Here, category z is learned by classifying multimodal information acquired through the sensors of a robot. The concepts are represented in a continuous space linked to a probability distribution

, and concept formation is equivalent to learning the parameters of this distribution. Using MLDA, the robot classified visual, haptic, and auditory information obtained by observing (Figure (b)), grasping (Figure (c)), and shaking (Figure (c)) the objects shown in Figure (a), and was able to form the basic-level categories shown in Figure (a). The probability distribution

can be computed using learned parameters and the multimodal information that maximizes this probability is considered to represent the prototype of the category:

Because this probability distribution is continuous, this concept model can represent not only a central member but also a peripheral member.

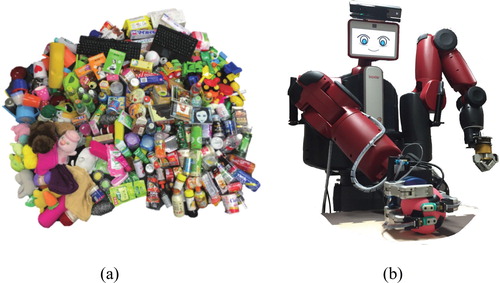

Figure 2. Object concept formation in a robot: (a) objects used in the experiment, and obtaining visual, haptic, and auditory information by (b) observing, (c) grasping, and (d) shaking objects.

Other nominal concept formation methods have also been developed. For example, locational, or spatial, concept formation methods have been proposed Taniguchi et al. [Citation120,Citation121].

5.2.2. Word meaning acquisition

The benefit of using multimodal information is that it makes it possible to infer abstract information from observations. For example, in neural network studies, relationships between modalities are learned, and they can therefore be inferred, to an extent, from other information. In MLDA, this inference is also possible by computing , which is the probability that modal information

is derived from other information.

Moreover, considering as words that represent object features and are taught by humans, the robot can acquire word meanings [Citation122]. The robot can recall the multimodal information

that can be represented by the word. The robot is considered to have understood word meanings through its own body. It has also been suggested that humans understand word meanings through their bodies [Citation123,Citation124] and MLDA partially implements this capability in robots.

Furthermore, a stochastic model [Citation125] enabling the robot to learn the parameters of a language model in the speech recognizer was proposed by introducing a nested Pitman–Yor language model [Citation126] into MLDA. Using this model, the robot can form an object concept and learn speech recognition similarly to infants by using multimodal information obtained from objects and teaching utterances given by humans. Moreover, by connecting the recognized words and concepts, the robot can also acquire word meanings. The robot on which this model is installed (Figure (b)) obtained multimodal information from the objects shown in Figure (a); meanwhile, a human user taught object features to the robot. Finally, the robot was able to recognize unseen objects with an 86% accuracy and teaching utterances with a 72% accuracy.

5.2.3. Hierarchical concept formation

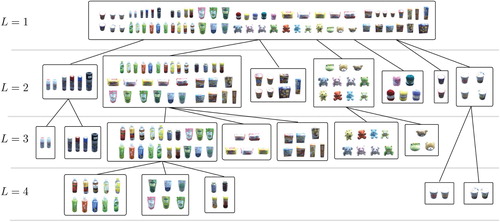

Concepts have a hierarchical structure and hence studies on the hierarchical classification of images using labeled data have been conducted [Citation127,Citation128]. However, as we mentioned earlier, learning concepts using multimodal information in an unsupervised manner is important. To implement such a hierarchical concept, a nested Chinese restaurant process [Citation129] was introduced into MLDA [Citation130]. Using multimodal information obtained by observing, grasping, and shaking objects as well as the studies discussed in the previous section, concepts were classified into categories and hierarchical relationships were estimated. As a result, the hierarchical structure shown in Figure was estimated by the robot, and one can see that hierarchical relationships based on feature similarities are captured. Applying this model to localization in the concept formation problem, Hagiwara et al. proposed a hierarchical spatial formation method [Citation131].

Moreover, studies to detect parts of objects [Citation132,Citation133] and faces [Citation134–136] are conducted regarding partonomy. However, in these studies, supervised learning based on visual information is utilized, and, currently, unsupervised learning based on multimodal information has not yet been implemented. A future challenge for robots is to learn partonomy using multimodal information in an unsupervised manner.

5.2.4. Integrated concept

Machine learning methods for forming various concepts and, furthermore, for learning relationships between them has been proposed. Moreover, as the transition in these concepts can be viewed as grammar, the proposed method enables robots to learn grammar using a bottom-up approach [Citation85]. The multimodal information obtained from scenes where individuals manipulate objects is classified by MLDAs and the individual, object, motion, and localization concepts are formed. Furthermore, another MLDA is placed on top of these MLDAs to learn the relationships between concepts. Using this model, for example, the meaning of motion can change based on simultaneous observations of objects. We consider that a type of semantic network has been implemented. However, this is a very limited, and inflexible network with a structure that changes depending on the context, as explained in Section 5.1.3. Concepts change depending on the context, situation, and purpose, as seen in the ad-hoc category [Citation97]. We need to change our perspective on concepts and no longer consider them as static objects, but rather as dynamic processes.

5.3. Challenges in category and concept formation

So far, we have shown that significant progress in category and concept formation is being made in current robotics. However, previous studies have mainly focused on nominal categories and concepts, e.g. objects, location, and movement, which are concrete and observable. Therefore, some of the remaining challenges are as follows:

Inventing a mechanism for representing abstract concepts including emotions, e.g. anger, happiness, and sadness, and social concepts, e.g. democracy, freedom, and society.

Inventing unsupervised machine learning methods to represent verbs, e.g. grasp, throw, and kick, and functional words, e.g. in, on, and over, from sensor–motor information.

Identifying and implementing the process of categorical extension, especially for polysemous words, e.g. gift as a present and gift as a talent.

Enabling a robot to spontaneously form ad-hoc categories to achieve a given goal within a certain context [Citation97].

Inventing a model for the distributed representation of concepts that can be used for logical inference, as discussed in Section 3.

6. Metaphorical expressions

Daily conversations, which a future service robot is expected to face, are full of metaphorical expressions. In Figure , ‘I'm filled with joy’ embeds a metaphor in which emotion is compared to a liquid. Even though metaphors play a crucial role in semantics, very few related studies have been performed in robotics.

6.1. Metaphor as a cognitive process

Before the advent of cognitive linguistics, metaphors were only viewed as linguistic ornaments, outside of the main scope of linguistic studies. However, since Lakoff and Johnson [Citation137], metaphors have been regarded as one of the important linguistic phenomena that reflect our way of thinking. They claim that, by analyzing language, we can find conceptual metaphors that reside at the core of the human conceptual system.

Conceptual metaphors are pervasive in language. As such, we are rarely conscious of their existence, but metaphors are the instruments that enable us to form abstract notions. Using metaphors, we understand and experience abstract concepts in the context of others. For example, when people are happy, they might say ‘I'm filled with joy.’ This sentence seems natural enough and may not sound metaphorical, but the subject for the verb ‘fill’ in the literal sense is usually liquid, whereas ‘joy’ is not. Here, we clearly understand ‘joy', as the target domain, which is invisible and intangible, in terms of a ‘liquid', as the source domain, and this expression is underpinned by a conceptual metaphor ‘emotions are liquids.’ The existence and pervasiveness of this metaphor is proven by its effectiveness in expressions such as ‘she is overflowing with love’ and ‘my anger is welling up.’ Thus, human beings are capable of metaphorical understanding, among other basic cognitive abilities. It should also be noted that the conventionality of this metaphor has led to the categorical extension of the verb ‘fill’ and dictionaries have an entry for the meaning of ‘be filled with emotions.’ If robots are to simulate the human cognitive process, then metaphors may play a vital role not only in terms of the cognitive ability to understand abstract notions, but also as a device for extending linguistic categories (see Section 5.1). It seems, however, that almost no attempts have been made in this respect in the field of robotics, and hence the mechanism supporting metaphorical understanding and extension requires further research.

6.2. Metaphor and embodied experiences

The process of metaphorical understanding involves two domains. One is the target domain, which is usually an abstract notion. The other is the source domain, which is concrete and a concept that we can observe or experience. The properties of the source domain are mapped onto the target domain and linguistic expressions accordingly. Fauconnier and Turner [Citation138] provides an actual model of conceptual blending. Some researchers in the field of cognitive linguistics and cognitive science argue that bodily experiences are embedded in the source domain [Citation23–25] claims that ‘abstract thoughts grow out of concrete embodied experiences, typically sensory–motor experiences.’ For example, in the ‘purposes (target domain) are destinations (source domain)’ metaphor that can be found in a sentence such as ‘he'll ultimately be successful, but he isn't there yet', the underlying sensory–motor experience, according to Feldman [Citation25], is ‘reaching a destination', and thus we can easily understand the metaphorical expression based on our own physical experiences. In addition, Grady [Citation139] claims that seemingly abstract metaphors can be decomposed into more basic elements identified there as primary metaphors, such as ‘more is up’ and ‘affection is warmth', with an experiential basis or experiential co-occurrences.

These findings have significant implications in robotics. If metaphors are grounded in embodied experiences, the body of a robot itself is an important interface for understanding the world. In other words, having a body is a considerable advantage for forming concepts as a robot. It also opens a way to connect concrete and abstract notions. Because metaphors may be used as devices for understanding abstract notions, robots may be able to understand abstract meaning based on embodied experiences, just as humans do. However, again, little research has been conducted on the topic, and hence whether a human-like body is advantageous to understanding abstract notions remains an open question.

6.3. Metaphor resources and universality

Several linguistic endeavors have aimed at identifying the various types of metaphors used in the human language. This effort ended up as an attempt to understand how we perceive and conceptualize the world, and identify the types of bodily experiences embedded using the inventory of basic primary metaphors. This included constructing dictionaries of metaphors [Citation140–142]. Among them, Seto et al. [Citation143] is particularly noteworthy in that it tries to uncover the polysemy of English words in terms of metaphors and metonymies. This has the potential to reveal how meaning emerges from very basic building blocks and thus provide a useful model for categorical extension. MetaNet [Citation144] is an online resource for metaphors based on frame semantics [Citation145]. The aim of the MetaNet project is to systematically analyze metaphors in a computational way; it now provides more than 600 conceptual metaphors, e.g. anger as a fire, happy as being up, and machines as people, with links to FrameNet frames [Citation144]. There have also been some attempts to identify metaphors using linguistic resources [Citation146], but the task was challenging, as metaphors are deeply interweaved into the language.

Comparing resources across several languages (cf. [Citation147]), it appears that many metaphors are surprisingly universal. Meanwhile, naturally, differences should be considered [Citation148,Citation149]. The universality of metaphors can be attributed to the universality of human bodily experiences, but robots may form completely different metaphors because of their physical differences. This may imply that having the body type of a human is one of the necessary conditions for robots to have a cognitive system similar to that of a human.

6.4. Challenges in metaphorical expressions

As pointed out above, there have been few endeavors in the field of robotics centered on metaphors and embodiment, despite their importance and the potential benefits. This could be due to the difficulty in implementing metaphorical thinking and the fact that robotics has not advanced enough to cope with metaphors. Nevertheless, we believe it is worth listing some of the challenges here, and we summarize them as follows:

Clarifying the computational process of categorical extension through metaphors, e.g. from ‘being physically drained’ to ‘emotionally drained.’

Inventing a computational way to understand abstract concepts based on bodily experience, e.g. using an experience tied to ‘reaching somewhere’ to understand the concept of ‘accomplishing something’ and thus, expanding the meaning of ‘reach.’

Inventing a computational mechanism for understanding creative uses of metaphors, e.g. ‘streaming is killing cable’ to mean ‘streaming is displacing cable.’

Metaphorical expressions are also one of the actively researched topics in cognitive linguistics and developmental psychology. Hence, new insights may come from related fields and interdisciplinary cooperation may be a key to unlocking new possibilities in the field.

7. Affordance and action learning

Language and actions are deeply related to each other. We can talk about ourselves and the actions of others using sentences, understand language instructions, and act accordingly. From a neuroscientific perspective, the motor and auditory areas are connected to each other, enabling, for example, the autonomous activation of the corresponding motor signals when auditory stimuli tied to a specific word are provided [Citation150,Citation151]. This type of phenomenon may be useful to the understanding of the meaning of verbs. Furthermore, to understand the language, constraints imposed by the physical body and the concept of possible actions, which is related to the idea of affordance, play an important role [Citation30]. Moreover, grammar learning is based on temporal information, namely the order of words, and it seems to be deeply related to an action-planning ability [Citation152,Citation153]. This section provides an overview of the research area of affordance and action learning, which are key to the understanding and generation of language based on embodiment.

7.1. Affordance learning

7.1.1. Affordance and functions of objects

The concept of an object is not only determined by multimodal information that can be directly observed via sensor systems, such as perception, but also by the functions of an object. Multimodal categorization, which was introduced in Section 5, is insufficient to explain object concept formation. Therefore, the functions of an object are important attributes of an object concept. The same can be said about robots [Citation154–156]. For example, considering the identification of a chair, what is important to us is the ability to sit down on it, whereas its appearance is not essential. Of course, we do have knowledge of the appearance of chairs and their usual location; however, whether we can sit on them is ultimately the decisive factor. In particular, it is considered that a function of a tool is perceived by an action–effect relationship. Such action-oriented perception learning is often referred to as affordance learning in cognitive robotics [Citation154–156].

Affordance is a concept proposed by Gibson, a psychologist who promoted ecological psychology [Citation157]. According to this concept, the meaning of the environment is not held in the human brain but instead exists as a set of possible actions by the human body. In other words, the meaning is naturally defined by the environment and the body facing the environment.

By contrast, for an object (chair) recognition using a deep neural network, the situation is completely different. For identification using a neural network learning from a large number of chair images, appearance is essential. Of course, functionality could correlate with visual information, but this does not necessarily hold. Affordance is an important concept in the quest for human intelligence, and a perfect example of the fact that intelligence and the body cannot be separated. Hence, affordance is a key concept in intelligent robotics.

7.1.2. Affordance learning

In studies on affordance in robotics, researchers often focus on ways to improve the performance of a robot using the concept of affordance. The other important area of interest regarding affordance in robotics is the issue of how to make robots learn, i.e. acquire, affordance. Here, we introduce several studies from the perspective of learning affordance.

The first work centered on the usage of affordance in navigation and obstacle avoidance for autonomous mobile robots. Sahin et al. proposed a model of affordance that links the motion of the robot to changes in the visual sensor [Citation158]. With this model, the robot can take actions to avoid obstacles naturally.

The second study contributed a learning tool to be used by robots. Stoytchev proposed a method that enables a robot to learn affordance in connection with a tool by gripping and moving a T-shaped tool in order to pick a target item [Citation159,Citation160]. This allowed robots to pick items with a high probability using the T-shaped tool.

The third work provided an example of learning more complex tools. Nakamura et al. defined the change in the object affected by tools such as scissors and staples as a function of the tool [Citation161]. Then, a method to learn the relationship between the local features of the tool and an action was proposed using Bayesian networks. Here, the robot could associate, for instance, the functionality of a tool with certain visual features and potential action. It should be noted that this affordance learning is deeply related to the multimodal categorization described in Section 5.

Many of these studies demonstrate that robots can acquire affordance in practice and that robots make better decisions using affordance.

7.2. Action learning

Action learning in robotics is often referred to as learning from demonstration (LfD) or programming by demonstration (PbD), which focuses on the issue of programming a robot motion [Citation162–164]. Action leaning is important in language and robotics, because it enables robots to understand the meaning of verbs by acting in the real world. In other words, there is no point in using a robot in the first place unless it can learn actions and form concepts in the actual, physical world. A robot capable of learning the mapping between language and actions, leading to the essential understanding of verbs, becomes very useful in practice, as language instructions can then be used to direct work.

7.2.1. Imitation learning

There are various types of action learning. In general, they can be divided roughly into two types according to the existence of supervision. In the presence of supervision, learning is sometimes referred to as imitation learning. In robotics, imitation learning often refers to the regeneration of the trajectories of the instructor, also known as LfD. In this case, the problem reduces to the modeling of the demonstrations, i.e. trajectories, of the instructor.

It should be noted that mimicking the trajectories of an expert is not essential for action learning. An important aspect of an action is its function, and one must be able to reproduce this function inherently. However, considering imitation learning by children, it is initially difficult to notice and to imitate the functional aspects of the actions. Instead, by imitating the trajectory, children eventually uncover its functional underpinnings through their own actions. In other words, it appears that it is also key to simulate trajectories initially in imitation learning. The problem currently revolves around the concept of a unit of action. In other words, we must segment continuous actions into meaningful units. From the perspective of mapping to language, segmented actions lead to discrete symbols, which facilitate the connection between the action and a word. To categorize the segmented unit actions, a Gaussian process hidden semi-Markov model (GP-HSMM) was proposed in [Citation165], with the ability to segment time series using the idea of state transitions in a hidden semi-Markov model (HSMM).

The segmentation of continuous signals is also important in speech signal segmentation. Taniguchi et al. proposed the concept of a double articulation analyzer (DAA) in order to carry out the segmentation and categorization simultaneously based on the double articulation property of speech signals [Citation166]. The DAA was implemented based on a hierarchical Bayesian formulation. The segmentation of actions, i.e. trajectories, and speech signals is highly significant in the relationship between actions and language [Citation167]. Indeed, each segmented word, i.e. verb, may be connected to a corresponding discrete action, leading to a word acquisition process that is extremely easy to understand.

In order to act based on the learned discrete actions, robots need to have a decision-making process. This is usually a matter of policy learning, and RL can be used for this. Learned discrete actions are selected based on the learned policy.

There are other types of imitation learning. In fact, Schaal discussed imitation learning from the perspective of efficient motor learning, the connection between action and perception, and modular motor control in the form of movement primitives [Citation168]. In [Citation169], a recurrent neural network with parametric bias (RNNPB) model was used to enable the identification and imitation of motions by robots. These were pioneering works on sensor–motor learning based on imitation; however, language was not involved.

Recent developments in deep learning technologies have opened a new direction in imitation learning. More specifically, generative adversarial imitation learning (GAIL) [Citation170] has been proposed based on generative adversarial networks (GAN). This approach is made possible by the fact that the output of the discriminator network can be seen as a reward for the action imitated.

Although language is not involved in GAIL, the idea has huge potential for action and language learning.

From a language learning perspective, Hatori et al. [Citation14] succeeded in building an interactive system in which the user can use unconstrained spoken language instructions to pick up a common object using an NLP technology based on deep learning. Actions are not learned but predefined in the study. However, the challenge will be to combine action learning and spoken language instruction understanding for picking tasks.

7.2.2. Reinforcement learning (RL)

RL can handle policy learning [Citation171,Citation172]. Thus, the problem mentioned above regarding the decision-making process can be solved by RL. RL techniques are roughly divided into two categories, the model-free and model-based methods. Q-learning is a well-known model-free RL method, which is suitable for discrete actions. Recent advances in deep learning have contributed to the improved performance of Q-learning using functional approximation. Deep Q-network (DQN) is the most famous method in this line of research [Citation173]. Owing to the end-to-end nature of deep learning, policy learning with continuous actions generalizes action learning, i.e. the modeling and segmentation of trajectories, and RL can be combined naturally through end-to-end learning. Deep deterministic policy gradient (DDPG) [Citation174] is one of the most frequently used algorithms for this purpose. In robotics, Levine developed a method that can be used to learn policies by mapping raw image observations directly to the torque of the motors of the robot [Citation175]. The authors demonstrated that the joint end-to-end training of the perception and control systems achieves better performance than the separate training of each component. From the perspective of connecting actions and language, end-to-end learning does not provide an explicit structure. However, an interesting approach to language acquisition by a computer agent in a simulation environment has been proposed by DeepMind Lab using deep reinforcement learning [Citation176]. The authors present an agent that learns to interpret language in a simulated 3D environment, where it is rewarded for the successful execution of written instructions. A combination of reinforcement and unsupervised learning enables the agent to learn to relate linguistic symbols to emergent perceptual representations of its physical surroundings and to pertinent sequences of actions. Although the actions contemplated in the study, such as pick-ups, are limited and relatively simple, the approach has potential for creating agents with a genuine understanding of language.

By contrast, model-based RL tries to capture the dynamics of the environment [Citation177]. Therefore, the agent can use the model to learn the policy. Although current performance is limited, model-based RL may potentially have advantages making it more applicable to complex tasks in the real world compared to model-free RL. From a language learning perspective, there have been few attempts to apply model-based RL to the language learning task, which could be a solution.

7.2.3. Syntax and actions

In generative grammar, the basic design of language capability in humans is assumed to comprise the following three modules: (1) the sensor–motor system, which is related to externalization such as utterance and gestures; (2) the conceptual and intentional system linked to the concept of semantics; and (3) the syntactic computational system, i.e. syntax, which connects the sensor–motor system to the conceptual and intentional system.

From the perspective of evolutionary linguistics, it can be argued that the syntactic operation system precedes hierarchical and sequential object manipulation abilities (action grammar [Citation178]), typically observed in the use and creation of tools. One must also insist that the conceptual and intentional interface and lexicon arise from this syntactic operation system [Citation153,Citation179]. In other words, the claim is that the syntactic operation system is derived from the sensor–motor system, i.e. syntactic bootstrapping [Citation180,Citation181]. These evolutionary linguistic viewpoints are very important for language and robotics, because affordance and action learning using robots is indispensable for acquiring language in a true sense.

7.3. Challenges in affordance and action learning

In this section, we described several studies on affordance and action learning in robotics with a close connection to language learning. From the above evolutionary linguistic viewpoint, language, especially syntax, has a strong relationship with affordance and action planning. Unfortunately, there are few existing studies focusing on learning affordance, actions, and language using real robots. Therefore, a future challenge will be to study the links among affordance, actions, and language. However, there have certainly been some pioneering attempts. In [Citation182], the authors proposed a bidirectional mapping between whole-body motion and language using deep recurrent networks. Yamada et al. proposed paired recurrent autoencoders, translating robot actions and language in a bidirectional way [Citation183]. Although the network architectures are different, these recent studies both share the concept of end-to-end learning. These deep-learning-based studies yielded promising results; however, the end-to-end learning approach has a limitation, as it does necessarily clarify the structure and the relationship between language and actions, as we explained earlier. Moreover, affordance is not explicitly taken into consideration in these studies. Therefore, achieving a comprehensive understanding of the language system, taking affordance and action learning into account, is a major challenge.

The challenges in this section can be summarized as follows:

Developing a synchronous method for learning action and affordance for language use and understanding.

Developing a computational model that forms a joint representation of action planning and syntax learning, i.e. a computational model realizing syntactic bootstrapping.

Inventing an action learning method that leads to the emergence of the concept of a verb.

The use of robots with physical bodies is an indispensable step toward this goal.

8. Pragmatics and social language

In daily conversations, spoken sentences do not always mean ‘what they literally mean.’ For example, in Figure , a person says ‘I'm thirsty.’ His utterance is a type of request to the robot rather than a declaration of his appetitive state. In many cases, language use cannot be handled without pragmatics.

There are three representative theories currently supported in pragmatics: (1) speech act theory [Citation184], (2) a theory of implicature by Grices [Citation185], and (3) relevance theory [Citation186]. These theories have provided many reasonable explanations, analyses, suggestions, and implications regarding language use, and have had a great influence on several academic disciplines. However, pragmatics and the social aspect of language have rarely been taken into consideration in robotics.

These theories tend to analyze the phenomena of language use based on reductionism, and have not yet been successful in dealing with holistic properties linked to the interdependency between foreground spoken stimuli and background beliefs. Explaining and analyzing the phenomenon in terms of language alone may lead to a limitation. Another issue may be that these approaches manipulate languages, but do not have any consideration of the body, nor its surroundings.

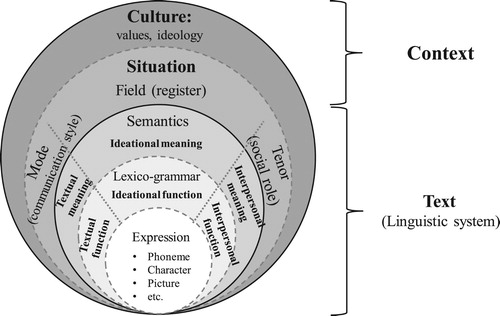

In addition, in terms of the social aspect of language, meaning is determined by use in a social context; here, context is linked to the culture and situation of a specific social group.

Considering these issues, it is important to equip the robot with knowledge of pragmatics and a sense for the use of language in a social context.

8.1. Pragmatics

8.1.1. Pragmatics in AI