ABSTRACT

This study investigates object picking with a focus on object arrangement patterns. Objects stored in distribution warehouses or stores are arranged in regular patterns, and the grasping strategy for object picking is selected according to the object arrangement pattern. However, object arrangement patterns have not been modeled for object picking. In this study, we represent objects as polyhedral primitives, such as cuboids or hexagonal cylinders, and model object arrangements by considering the occlusion patterns of the object model surfaces and whether the adjacent object occluding each surface is moveable. We define grasp patterns based on combinations of the grasp surfaces and discuss the grasping strategy when the grasp surfaces are occluded by adjacent objects. We then introduce a newly developed gripper for picking arranged objects. The gripper comprises a suction gripper and a two-fingered gripper. The suction gripper has a telescopic arm and a swing suction cup. The two-fingered gripper mechanism combines a Scott Russell linkage and a parallel link. This mechanism is advantageous for reaching into narrow spaces and inserting fingers between objects. We demonstrate the picking up of arranged objects using the gripper.

1. Introduction

Object picking is a fundamental task in the field of robotics. The most fundamental robotic manipulation task is bin picking, which refers to the robotic task of picking up an object placed randomly among other objects. Bin picking in a factory can use the computer-aided design (CAD) models of the target object. Many previous studies have focused on vision-guided bin picking, in which the 3D scan data of the object are matched to the CAD model, and the object pose is estimated from the match [Citation1–6]. Kirkegaard adopted this approach and used the harmonic shape contexts feature for pose estimation [Citation2]. Buchholz used random sample matching (RANSAM) and the iterative closest point (ICP) algorithm to localize objects [Citation5].

Until recently, object picking was realized only in factory settings [Citation1–9], but more recent studies have investigated object picking in homes, warehouses and stores [Citation10–18]. However, most object picking tasks cannot use the CAD models, and simplified approximation models such as shape primitives [Citation10], superquadrics [Citation11], and bounding boxes [Citation12] are used.

The Amazon Picking Challenge (APC) 2015 and 2016 and the Amazon Robotics Challenge (ARC) 2017 were organized to encourage the advancement of state-of-the-art object picking tasks in warehouses. In APC and ARC, different items were placed randomly in a storage receptacle, and participating teams attempted to pick up and store the items with robots multiple times [Citation19–22]. The APC and ARC triggered the advancement of randomized picking tasks, and a significant amount of research on randomized picking has since been carried out [Citation23–29]. Several studies on randomized picking have used deep learning techniques [Citation19–22, Citation24–29].

In randomized picking, objects adjacent to the target are regarded as obstacles, and grasping strategies avoid or move the obstacles. Berenson studied grasp planning in a complex environment involving obstacles [Citation13]. Dogar proposed moving obstacles by pushing [Citation16]. Harada used learning algorithms to estimate whether the pick-up task would succeed when a finger touched an adjacent object [Citation9].

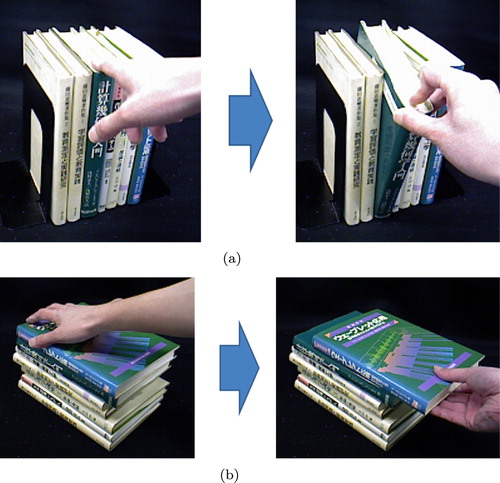

To achieve efficient storage and create attractive product displays, objects stored in distribution warehouses or stores are arranged in regular patterns, where similar items are placed, stacked, or lined up together. When objects are arranged in regular patterns, parts of the surfaces of the objects remain occluded. This limits the graspable areas on objects, and it becomes necessary to manipulate objects to reveal occluded surfaces. For instance, Figure 1(a) shows books lined up in a bookstand. In this case, the surfaces to be grasped by the fingers are the left and right sides of the book, which remain occluded by adjacent books. As seen in Figure 1(a), the book is tilted to reveal the hidden sides for grasping. Figure 1(b) shows a stack of books, where the top and bottom surfaces are used for grasping, and the bottom surface remains occluded. As shown in Figure 1(b), the book is slid forward to reveal the hidden surface for grasping. This illustrates how picking up objects arranged in regular patterns requires picking strategies corresponding to the patterns in which the objects are arranged. Object arrangement patterns constrain the position and posture of the objects, which can be regarded as virtual segments. Objects in arrangements are regarded as object groups placed in the virtual segments. Thus, object picking tasks for arranged objects must consider the arrangement patterns in addition to the shapes of individual objects contained in the virtual segments. So far, object shapes have been modeled for object picking. However, object arrangements have not been modeled. In this study, we accomplish object picking by focusing on the arrangement patterns of objects. First, we represent objects as polyhedral primitives and model object arrangements using the surface occlusion patterns.
Then, we define the grasp patterns based on combinations of the grasp surfaces of the objects and discuss the grasping strategy when the grasp surfaces are occluded by adjacent objects. In addition, we demonstrate picking up arranged objects using a physical robot with a newly developed gripper. To the best of our knowledge, this is the first study on object picking focusing on object arrangement patterns.

Figure 1. Non-prehensile manipulation may be performed for object picking according to the object arrangement pattern. (a) Tilting an aligned book for picking. (b) Sliding the top book for picking.

The rest of the paper is organized as follows. In Section 2, we describe object arrangement models, and in Section 3, we outline the definitions of grasp patterns and explain the grasping strategies. In Section 4, we describe a picking experiment for arranged objects and conclude the paper in Section 5.

2. Modeling of an object arrangement pattern

The grasping strategy for object picking is selected according to whether the gripper can access the grasping surfaces on the target objects. We model an object arrangement pattern with the occlusion pattern of the object surface. To describe the object arrangement pattern, we define the following surfaces.

Accessible surface: A surface on the target object that a gripper can access without interference from adjacent objects or the surroundings.

Occluded surface: A surface occluded by adjacent objects or the surroundings, which a gripper cannot access for grasping. The occluded surfaces are all surfaces of the object other than the accessible surfaces.

Grasp surface: A surface on the target object that a gripper can potentially access for grasping. The grasp surface is defined depending on the features of the gripper. For a two-fingered gripper, the grasp surface is a pair of opposing surfaces of the object that fit within the finger stroke, and for a vacuum gripper, the grasp surface is a flat surface of the target object.

2.1. Object model for describing object arrangement patterns

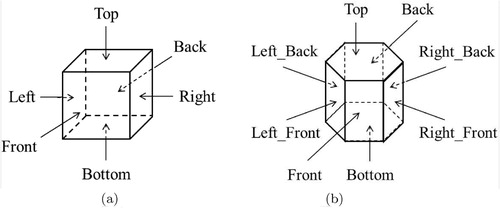

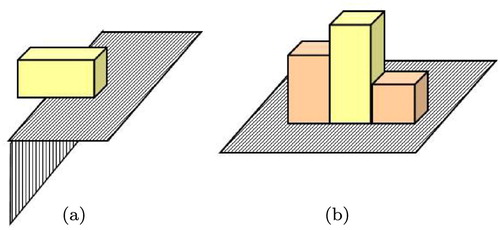

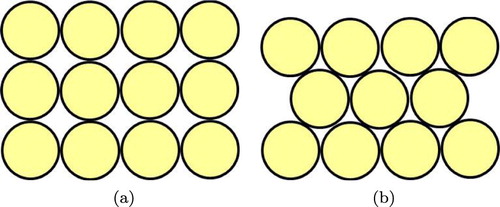

There is a wide variety of objects in warehouses and stores, and CAD models of these objects usually cannot be obtained. We therefore model an object with a simplified shape primitive for describing the object arrangement pattern. Figure 2 depicts two typical patterns of objects lined up on a flat surface. Figure 2(a) shows objects lined up in the front, back, left, and right directions; many objects are arranged in this pattern. In such cases, objects come into contact with surrounding objects at up to four places, and objects can be modeled as cuboids. Figure 2(b) shows circular objects lined up closely, with up to six points of contact with surrounding objects. In this case, we model objects as hexagonal prisms.

Figure 2. Two arrangement patterns of circular objects on a plane. (a) Arrangement pattern 1, (b) Arrangement pattern 2.

Objects stored in warehouses or stores are placed on shelves, with similar objects grouped together. The surface of an object seen head-on from the front of the shelf is regarded as the front of the object. The surfaces of the object model are labeled as in Figure 3. The notations are used in the following order.

(1)

(2)

2.2. Describing an object arrangement pattern

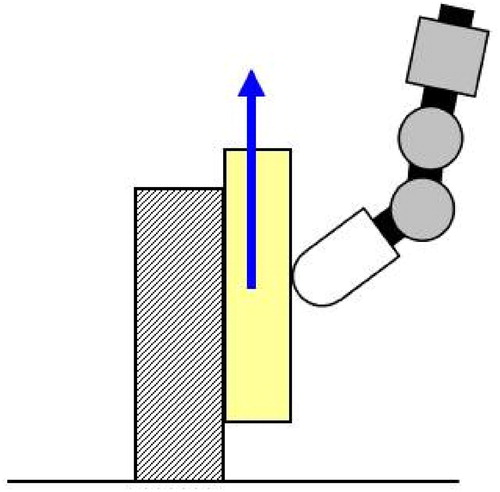

The grasping strategy for object picking is selected depending on which grasp surfaces of the object are occluded by adjacent objects. Additionally, different grasping strategies can be selected depending on whether the adjacent object occluding the grasp surface can be moved. For instance, if the adjacent object can be moved, the occluded grasp surface can be revealed by simply moving the adjacent object. Conversely, if the adjacent object is a fixed structure such as a wall, the occluded grasp surface can be revealed by moving the target object while pushing it up against the adjacent object, as shown in Figure 4. Therefore, we model the object arrangement pattern by considering the occlusion pattern and whether the adjacent objects occluding the surfaces are moveable. Thus, the object arrangement pattern is described as follows:

$$A = (a_1, a_2, \ldots, a_n), \quad a_i \in \{0, 1, 2\} \tag{3}$$

where $a_i$ expresses whether surface $i$ is occluded by an adjacent object and, if so, whether that adjacent object is moveable: $a_i = 0$ if surface $i$ is an accessible surface, $a_i = 1$ if surface $i$ is an occluded surface and the adjacent object occluding that surface can be moved, and $a_i = 2$ if surface $i$ is an occluded surface and the adjacent object occluding that surface cannot be moved. Therefore, there are $3^n$ object arrangement patterns, where $n$ is the number of surfaces in the object model. The following ternary number is introduced to identify the object arrangements.

(4)

If Id = 0, the object is floating in the air, and or

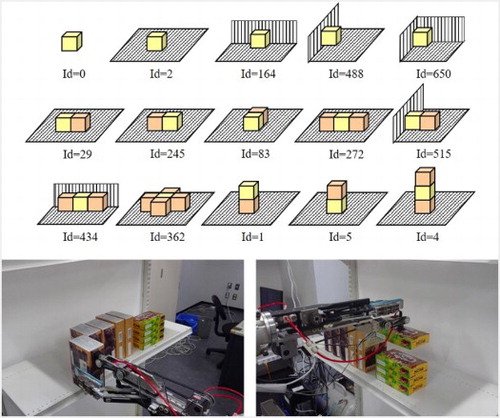

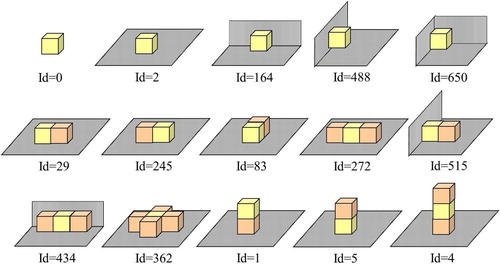

represents the object being placed inside a box. Figure shows representative object arrangement patterns of the cuboid model.

Figure 5. Representative object arrangement patterns of the cuboid model. The yellow cuboid is a target object, orange objects are moveable objects, and gray faces represent immovable objects.
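As a minimal sketch of how such an identifier can be handled, the following Python snippet converts between an Id and its ternary digits. It assumes that the digit values 0, 1, and 2 stand for accessible, occluded by a moveable object, and occluded by an immovable object, in the order the three cases are listed above, and it uses the Id values quoted in this paper (0, 1, 2, 29, 245, and 272). The function names are hypothetical, and the mapping from digit position to a particular surface is not assumed here, apart from the observation that Id = 2 (an object resting on a desk) sets only the least significant digit.

```python
def id_to_digits(arrangement_id, n_surfaces=6):
    """Return the ternary digits of an arrangement Id, least significant first."""
    digits = []
    for _ in range(n_surfaces):
        arrangement_id, digit = divmod(arrangement_id, 3)
        digits.append(digit)
    return digits


def digits_to_id(digits):
    """Inverse of id_to_digits: rebuild the Id from least-significant-first digits."""
    return sum(d * 3 ** k for k, d in enumerate(digits))


if __name__ == "__main__":
    # Id values quoted in the paper for the cuboid model (six surfaces).
    for ident in (0, 1, 2, 29, 245, 272):
        print(ident, id_to_digits(ident))
```

Under this reading, Id = 29 and Id = 245 each differ from Id = 272 by exactly one digit, which matches their description as the end objects and the middle object of the same row.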

In some cases, only a part of the surface is occluded in an object arrangement, or there is a gap between the target and the adjacent object. This is regarded as an intermediate state between the surface being fully occluded and not being occluded at all. For instance, Figure 6(a) depicts the case where a part of the object protrudes from the desk. In this case, the object arrangement is regarded as an intermediate state between Id = 0 and Id = 2, as shown in Figure 5. Figure 6(b) shows a target object in the middle that is taller than the objects on its left and right, where the left object is taller than the right object. In this case, the object arrangement is regarded as an intermediate among three states in Figure 5, one of which is Id = 272. Whether a gripper can grasp the partially occluded surfaces depends on the size of the exposed area or the size of the gap between the surface and the adjacent objects, and on mechanical features of the gripper such as gripper type, shape, grip force, and control accuracy.

3. Picking arranged objects

In this section, we present a strategy for picking up arranged objects.

3.1. Grasp pattern

The grasp surface of an object is determined by the mechanical features of the gripper, such as gripper type, shape, and stroke. Moreover, even for the same gripper there are several grasping methods, depending on which surface of the object is grasped. The combination of grasp surfaces on the model when an object is grasped is called the grasp pattern and is described as follows:

(5)

(5) where n is the number of surfaces in the object model.

is a binary number that represents whether the face is a grasp surface. If the i-th face is a grasp surface,

, else,

. The order of the face is the same as that in Equations (Equation1

(1)

(1) ) or (Equation2

(2)

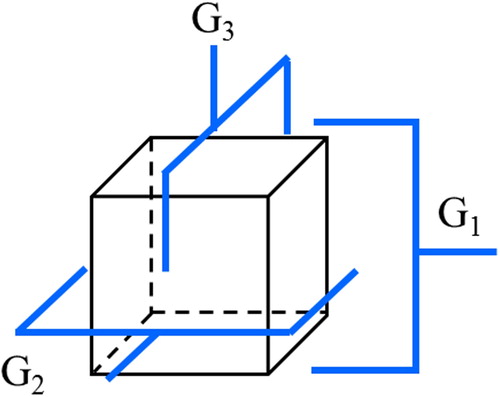

(2) ). Figure shows grasp patterns of a cuboid model when a two-fingered gripper can grasp all faces of the object within the finger stroke. Then, the following three grasp patterns can be defined:

(6)

(6)

3.2. Grasping strategy for picking up arranged objects

A grasping strategy is an action sequence for picking up an object from an arrangement. To pick up an object, the following conditions must be satisfied:

(7)

(8)

(9)

These conditions are written in terms of the outward normal vector of each surface k and the direction in which the object is picked up. Equation (7) expresses the situation where no moveable object is on top of the target object, and Equation (8) describes the situation where no adjacent object is present in the direction in which the object is picked up. Equation (9) expresses the situation where all grasp surfaces are accessible. The gripper must also be able to approach the object, so there must be no adjacent objects in the approach trajectory. If the direction of approach is opposite to the direction of picking up, this requirement is met whenever Equation (8) is satisfied. When Equations (7)–(9) are all satisfied, the graspable condition holds. If a grasp pattern that satisfies the graspable condition exists, the object can be directly grasped and picked up using that grasp pattern. Otherwise, a different grasping strategy is required to pick up an arranged object. The following is a description of the typical grasping strategies:

S1: Remove the adjacent objects. In this case, the adjacent objects should be movable and graspable with the gripper. If Equations (Equation7

S2: Grasp through a gap. Insert a finger between the target object and the adjacent object to access the occluded grasp surface and grasp it. In this case, the object arrangement state can be regarded as an intermediate state. Whether the finger can be inserted into the gap depends on the size of the gap, the size of the finger, and the control accuracy of the robot. The gap must be detected by tactile or visual sensors. The control accuracy required is determined by the gap size: the narrower the gap, the more difficult it is to insert a finger into it.

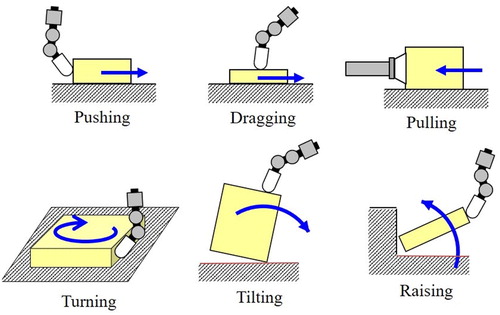

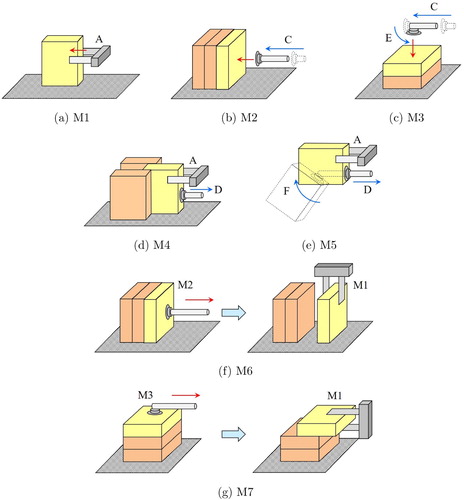

S3: Non-prehensile manipulation. Apply non-prehensile manipulation to the accessible surfaces of the target object to expose the occluded grasp surfaces, then grasp the target. Non-prehensile manipulation translates or rotates an object without grasping it. Figure 8 shows representative non-prehensile manipulations in which a finger touches one surface of the object. Pushing and dragging are sliding manipulations executed by contact between the finger and the side or top face of the object, respectively. Pulling is a sliding manipulation executed by sucking the side face of the object with a vacuum gripper. Turning is a rotating manipulation around the vertical axis, executed by contact between the finger and the side face of the object. Tilting is a manipulation that tilts an object with the finger in contact with the top face of the object. Raising is a manipulation that raises an object with the finger pushing the object against a wall. The non-prehensile manipulation technique is selected according to the object arrangement pattern and gripper type.
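The graspable condition described by Equations (7)–(9) can be illustrated with a short Python sketch that follows the prose descriptions of the three conditions rather than their exact algebraic form. The surface names, the unit normals, the state constants, and the function `is_graspable` are assumptions introduced only for this illustration.

```python
# Occlusion states (assumed encoding): accessible, occluded by a moveable
# object, occluded by an immovable object.
ACCESSIBLE, MOVEABLE, IMMOVABLE = 0, 1, 2

# Illustrative outward unit normals for a cuboid model (assumed frame:
# x toward the front, y toward the left, z upward).
NORMALS = {
    "front": (1, 0, 0), "back": (-1, 0, 0),
    "left": (0, 1, 0), "right": (0, -1, 0),
    "top": (0, 0, 1), "bottom": (0, 0, -1),
}


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def is_graspable(arrangement, grasp_surfaces, pick_direction):
    """Check the three conditions described in the text for one grasp pattern."""
    # Condition described for Eq. (7): no moveable object on top of the target.
    no_moveable_on_top = arrangement["top"] != MOVEABLE
    # Condition described for Eq. (8): no adjacent object in the pick-up direction.
    path_clear = all(
        arrangement[name] == ACCESSIBLE
        for name, normal in NORMALS.items()
        if dot(normal, pick_direction) > 0
    )
    # Condition described for Eq. (9): every grasp surface is accessible.
    grasp_accessible = all(arrangement[name] == ACCESSIBLE for name in grasp_surfaces)
    return no_moveable_on_top and path_clear and grasp_accessible


# Middle object of a row on a shelf: neighbours on both sides, shelf below.
middle = {"front": 0, "back": 0, "left": 1, "right": 1, "top": 0, "bottom": 2}
# Top object of a vertical stack: only a moveable object below it.
top_of_stack = {"front": 0, "back": 0, "left": 0, "right": 0, "top": 0, "bottom": 1}

if __name__ == "__main__":
    print(is_graspable(middle, {"left", "right"}, (0, 0, 1)))   # side pinch
    print(is_graspable(middle, {"front"}, (1, 0, 0)))           # front suction
    print(is_graspable(top_of_stack, {"top"}, (0, 0, 1)))       # top suction
```

In this sketch a side pinch of the middle object fails because both grasp surfaces are occluded, whereas suction on the front face with a forward pick-up direction passes all three checks.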

The object picking task for arranged objects can be expressed as a state transition diagram in which each node is an object arrangement pattern as described in Section 2.1. Actions are assigned to the state transitions between nodes. The grasping strategy can be generated by obtaining the action sequence along which the object state transitions from the arranged state to a state that satisfies the graspable condition. In the generation of the grasping strategy, the path of the state transitions and the actions between nodes depend on the object type and on mechanical features of the gripper such as gripper type, shape, finger stroke, and grip force or suction force. Thus, the grasping strategy should be generated by considering both the objects and the gripper. When transitioning between two object arrangement patterns, the graspable condition may be satisfied in an intermediate state before the occluded grasp surfaces are completely exposed. Whether the intermediate state satisfies the graspable condition depends on the conditions described in Section 2.2. Therefore, it is necessary to evaluate in advance the size of the grasp surfaces required to grasp the object with a real gripper.
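The state transition view can be sketched as a small graph search. In the following Python example, the nodes are arrangement Ids that appear in this paper (272, 29, 245, and 2), while the action labels, the `TRANSITIONS` table, and the `plan` function are hypothetical and serve only to show how an action sequence could be read off the diagram. A breadth-first search returns a shortest action sequence from the initial arrangement to any state in a given set of goal states.

```python
from collections import deque

# Hypothetical transition table: state Id -> list of (action, next state Id).
# Removing a neighbour of the middle object (Id = 272) leaves it in the same
# arrangement as an end object of the row (Id = 29 or Id = 245).
TRANSITIONS = {
    272: [("remove left neighbor", 29), ("remove right neighbor", 245)],
    29: [("remove right neighbor", 2)],
    245: [("remove left neighbor", 2)],
    2: [],
}


def plan(start, goal_states):
    """Breadth-first search for a shortest action sequence to a goal state."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        state, actions = queue.popleft()
        if state in goal_states:
            return actions
        for action, next_state in TRANSITIONS.get(state, []):
            if next_state not in visited:
                visited.add(next_state)
                queue.append((next_state, actions + [action]))
    return None  # no goal state is reachable


if __name__ == "__main__":
    # Goal: both sides exposed so that a two-fingered side pinch is possible.
    print(plan(272, {2}))
```

A practical planner would also need the object- and gripper-dependent feasibility of each action, which this sketch leaves out.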

4. Experiment on picking up arranged objects

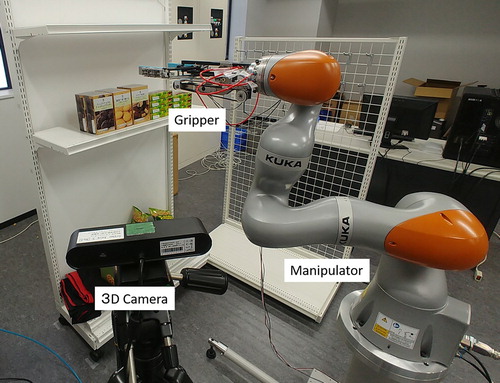

Here, we demonstrate examples of picking up arranged objects with a physical robot. The object picking system comprises a manipulator and a 3D camera, as shown in Figure 9. The manipulator was a robotic arm (LBR iiwa 14 R820, KUKA Robotics) equipped with a newly developed gripper at its tip, and the 3D camera was the Astra S (Orbbec 3D Tech. Intl. Inc.).

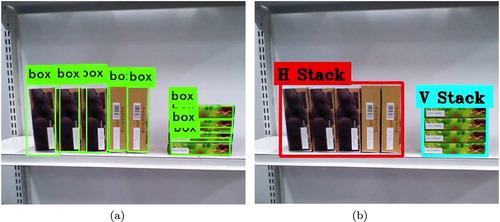

In the experiment, the object models were given to the system, and real objects lined up horizontally and vertically on a shelf, as shown in Figure 10, were picked up. Horizontal and vertical arrangements are typical arrangement patterns.

Picking up arranged objects, as when refilling shelves with products, may involve repeatedly picking the same type of object. Therefore, a grasping strategy that can be applied to many objects is given preference in strategy selection. Grasping strategy selection must consider in which directions the target object can be moved to change the object arrangement state. To describe the motion of the target object, we introduce a right-handed coordinate system whose axes are aligned with the Front and Right directions of the object, and express the motion of the object as translations and rotations along each axis.

In Figure 10, the object arrangement patterns of the objects at the left end, in the middle, and at the right end of the left stack are denoted by Id = 29, Id = 272, and Id = 245, respectively. All objects in the left stack share a common set of directions in which they can move, and the left end and right end objects can each move in additional directions. If the robot picks up all objects from the left or right of the stack, the same grasping strategy can be applied to all objects in the stack.

In the right stack, only the top object satisfies the condition of Equation (7). The object arrangement pattern of the top object is Id = 1, and it can move in several directions. In this case, the robot should pick up objects from the top to the bottom, and the same grasping strategy can be applied to all objects.

A concrete grasping strategy for object picking depends on the gripper type. We now describe the gripper developed for object picking in this study.

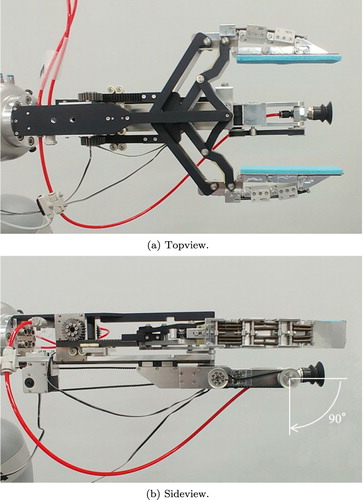

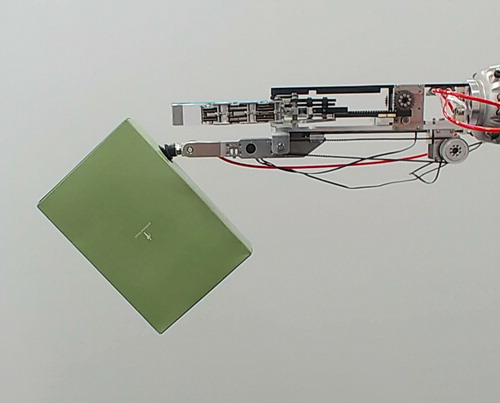

4.1. Developed gripper

As shown in Figure 11, the developed gripper comprises a two-fingered gripper and a suction gripper. To reach into narrow spaces of shelves and pick up a wide variety of objects, the gripper base should be narrow and the finger stroke should be wide. Moreover, to allow a finger of the two-fingered gripper to be inserted between objects, parallel rectilinear motion of both fingers when opening and closing is desirable. To date, many two-fingered grippers have been developed using a rack and pinion, feed screw, or parallel link mechanism. Two-fingered grippers using a rack and pinion mechanism or a feed screw mechanism can open and close their fingers through parallel rectilinear motion, but the base of such a gripper is wider than the finger stroke width. This is disadvantageous for reaching into narrow spaces. In contrast, a two-fingered gripper using a parallel link mechanism allows for a compact gripper base, but it does not allow for linear motion of the fingertips when opening and closing the fingers. Thus, with such grippers, grasp planning is difficult because the length from the base to the fingertip changes with the finger stroke width. In this study, the two-fingered gripper mechanism was developed by combining a Scott Russell linkage and a parallel link. This mechanism allows for a compact gripper base while also enabling parallel, rectilinear motion of the fingers when opening and closing. The fingers of the two-fingered gripper are 115 mm long from the base to the fingertip, the finger stroke is 0–150 mm, and the width of the gripper base is 64 mm. A suction gripper was also developed for the object picking system. The suction gripper has a telescopic arm, and as shown in Figure 11(b), the suction cup can swing over a range of 0–90°. The telescopic stroke of the suction gripper is 0–148 mm, and when the gripper is extended, the suction cup protrudes 33 mm from the tip of the two-fingered gripper. The diameter of the suction cup is 30 mm. Moreover, the suction gripper has a pressure sensor to detect contact between the suction cup and an object. Overall, the gripper length is 411 mm from the bottom of the base to the fully extended suction cup. Several grippers combining a two-fingered gripper and a suction gripper have been developed previously [Citation30, Citation31]. However, since the fingertip trajectory of these grippers is non-linear, strategies for inserting a finger between arranged objects are difficult to adopt.

4.2. Design of the grasping strategy with the developed gripper

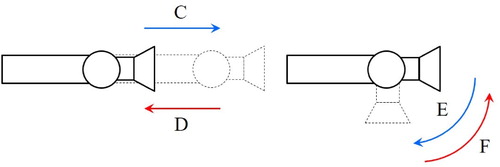

In the initial condition of the gripper, the fingers of the two-fingered gripper are open, the suction arm is contracted, and the suction cup is straight. The basic actions of the gripper are as follows:

(A): pinching with the two-fingered gripper

(B): object suction by the suction gripper

(C): extension of the suction arm

(D): contraction of the suction arm

(E): rotating the suction cup to 90°

(F): returning the suction cup to 0°

Figure 12 shows the basic actions of the suction gripper. By combining these basic actions, the following strategies can be adopted (as shown in Figure 13).

M1: A

M2: C+B

M3: C+E+B

M4: C+B+D+A

M5: C+E+B+F+D+A

M6: M2+M1

M7: M3+M1

M1 involves moving the gripper to the grasping point through the motion of the arm and pinching the object with the two-fingered gripper (Figure 13(a)). M2 involves extending the suction arm, bringing the suction cup into contact with the object through the motion of the arm while sucking air, and grasping the object through suction (Figure 13(b)). The contact between the suction cup and the object is detected by the pressure sensor. M3 involves extending the suction arm, rotating the suction cup by 90°, bringing the suction cup into contact with the object through the motion of the arm while sucking air, and grasping the object through suction (Figure 13(c)). The contact between the suction cup and the object is again detected by the pressure sensor. This grasping strategy is selected when the top of the object is exposed. M4 involves strategy M2, followed by pulling the object between the two fingers by contracting the suction arm and then pinching the object (Figure 13(d)). M5 involves strategy M3, followed by returning the suction cup to 0°, pulling the object between the two fingers by contracting the suction arm, and pinching the object (Figure 13(e)). M4 and M5 grasp the object with both the two-fingered gripper and the suction gripper to achieve a stable grasp. M6 and M7 begin with M2 and M3, respectively. The motion of the arm then reveals the occluded surface, after which the object is temporarily released and then grasped with the two-fingered gripper (Figure 13(f,g)). Therefore, these grasping strategies involve a regrasping action.
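The composition of strategies M1–M7 from the basic actions (A)–(F) can be written down as a small lookup table. The following Python sketch is only an illustration; the names `STRATEGIES` and `expand` are hypothetical. It expands the composite strategies M6 and M7 into their gripper actions. Note that it counts gripper actions only: the arm motions, and the release that precedes regrasping in M6 and M7, are not represented, so the lengths below are not the full motion step counts discussed in the text.

```python
# Strategy definitions as listed in the text; entries may refer to basic
# actions (single letters) or to other strategies (M2, M3, M1).
STRATEGIES = {
    "M1": ["A"],
    "M2": ["C", "B"],
    "M3": ["C", "E", "B"],
    "M4": ["C", "B", "D", "A"],
    "M5": ["C", "E", "B", "F", "D", "A"],
    "M6": ["M2", "M1"],
    "M7": ["M3", "M1"],
}


def expand(name):
    """Recursively expand a strategy into its sequence of basic gripper actions."""
    steps = []
    for item in STRATEGIES[name]:
        if item in STRATEGIES:
            steps.extend(expand(item))
        else:
            steps.append(item)
    return steps


if __name__ == "__main__":
    for name in STRATEGIES:
        print(name, "+".join(expand(name)))
```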

The grasping strategy should be developed according to the objects and the gripper. Using the developed gripper, grasping strategies for picking up the arranged objects shown in Figure 10 were developed. The grasping strategy with the lowest number of motion steps that can be applied to a large number of objects was prioritized.

First, we designed the grasping strategies for picking up the horizontally stacked objects, as shown on the left in Figure 10. The objects cannot be directly grasped by the two-fingered gripper, as the left face, the right face, or both are occluded by adjacent objects. In contrast, the front and top faces of every object, as well as the left face of the far left object and the right face of the far right object, are exposed and are thus graspable with the suction gripper. However, when grasping the left or right face with the suction gripper, the object may topple sideways when the suction cup is pushed onto the surface. Further, the top face of the object is difficult to sense visually with the camera. Thus, the front face is the ideal grasp surface for the suction gripper, and a strategy that moves the object along the front-back direction for grasping was selected. The applicable strategies in this case are M2, M4, and M6. When grasping is performed only with the suction gripper, as in strategy M2, the object tilts because the suction cup is soft, as shown in Figure 14. Moreover, as grasping strategy M6 involves regrasping, the number of motion steps increases. Thus, the M4 strategy was employed, as it uses both the two-fingered gripper and the suction gripper and can therefore achieve a stable grasp.

Next, we designed the grasping strategies for picking up the vertically stacked objects, as shown on the right in Figure 10. The object cannot be grasped directly by the two-fingered gripper, as the bottom face is occluded by the object beneath it. Thus, the suction gripper is needed to pick up the objects. For the suction gripper, the grasp surfaces are the front, right, and left faces, as well as the top face of the top object. In this case, the front, right, and left faces of the objects are small in the vertical direction and therefore require highly precise positioning when placing the suction gripper. Thus, the ideal grasp surface for the suction gripper is the top face, and a strategy that moves the object in one of two directions for grasping was selected. In this scenario, strategies M3 and M7 are applicable. As the target object was light, M3 is the ideal strategy owing to the fewer motion steps involved. In the case of heavy objects that cannot be grasped using the suction gripper alone, grasping strategy M7 must be applied.

4.3. Experiments

The grasping points for each grasp pattern of the two-fingered gripper and the suction gripper are set in the object model in advance. In the experiment, the object arrangement states are recognized first. The robot selects the grasping strategy based on the recognition result, and the gripper and the grasp pattern used for picking are determined. Next, the position and posture of each individual object in the object arrangement are estimated, and the robot obtains the grasping points in the world frame using the object model. The robot then picks up the objects by applying the selected grasping strategy.

The recognition of the object arrangement was performed using the technique described in [Citation32], which segments the regions that share the same object arrangement state and identifies the object arrangement. Specifically, segmentation was performed by inputting two-dimensional RGB images of the scene, detecting individual objects with a bounding box (BB) through general object detection, defining a four-dimensional feature vector whose elements are the central position, width, and height of the BB, and clustering the feature vectors through density-based spatial clustering of applications with noise (DBSCAN) [Citation33]. Then, the object BBs in the same cluster were converted into 1-of-K vectors using self-organizing maps (SOM) [Citation34], and their sum was used to create a bag-of-words [Citation35]. A support vector machine (SVM) [Citation36] was used to identify horizontal and vertical stacks in the segmentation. Figure 15(a) shows the general object detection results for boxed objects, and Figure 15(b) shows the segmentation results for the identification of arranged object regions and arrangement patterns.
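The clustering step can be illustrated with a compact, pure-Python version of DBSCAN applied to bounding-box features. This is a sketch under stated assumptions, not the implementation used in [Citation32]: the feature layout `(cx, cy, w, h)`, the example boxes, and the parameter values `eps` and `min_pts` are all made up for illustration, and no feature scaling is applied.

```python
import math


def dbscan(points, eps, min_pts):
    """Minimal DBSCAN: returns one cluster label per point, -1 for noise."""
    labels = [None] * len(points)

    def neighbors(i):
        # Indices of all points within eps of point i (including i itself).
        return [j for j in range(len(points)) if math.dist(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        nb = neighbors(i)
        if len(nb) < min_pts:
            labels[i] = -1  # provisionally noise; may become a border point later
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in nb if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reachable from a core point: border point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nb_j = neighbors(j)
            if len(nb_j) >= min_pts:
                queue.extend(nb_j)  # j is a core point: keep expanding the cluster
    return labels


# Hypothetical bounding boxes as (center x, center y, width, height) in pixels:
# three tall boxes in a row, then three flat boxes stacked vertically.
boxes = [
    (20, 50, 40, 100), (62, 50, 40, 100), (104, 50, 40, 100),
    (300, 15, 100, 30), (300, 47, 100, 30), (300, 79, 100, 30),
]

if __name__ == "__main__":
    print(dbscan(boxes, eps=50, min_pts=2))
```

Because neighbouring boxes in the same arrangement have similar sizes and nearby centers, density-based chaining groups each row or stack into one cluster without fixing the number of clusters in advance.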

Figure 15. Recognition of the arranged objects and stacks. (a) Detection of the arranged objects and (b) recognition of the object arrangement.

Next, the position and posture of each individual object were estimated. The arranged objects vary in position according to the arrangement pattern, but they are lined up in virtually the same posture. Using this fact, the 3D point cloud and the object model were compared, and the position and posture of the object were estimated. The 3D shape of the object was obtained from the object model. In the case of horizontally stacked objects, the point cloud in the BB of the end object was matched with the model, and in the case of vertically stacked objects, the point cloud in the BB of the top object was matched. The position and posture were obtained using the 3D feature termed the Shape Index [Citation37, Citation38]. To estimate the position and posture of the second and subsequent objects, the previously obtained object position was translated in the horizontal direction along the front of the object arrangement region for horizontally stacked objects, and along the vertical direction for vertically stacked objects. The position and posture were then estimated in the same way, by matching the point cloud in the BB with the model. This reduces the computing cost of estimating the position and posture of the second and subsequent objects in the same arrangement pattern.

By identifying the object arrangement pattern and estimating the position and posture of each object included in the object arrangement, the grasping strategy and its grasping point were obtained. Then, the robot started the object picking task by applying the grasping strategy. The procedure for object picking is as follows. First, the gripper moves to the starting point according to the selected grasping strategy. In our experiment, the starting point was on the line that passes through the grasping point perpendicular to the grasp surface. The distance from the grasping point was set to a constant value in advance. Then, the robot grasps the object by applying the selected grasping strategy. After grasping the object, the robot moves to an escape point, which is the same as the starting point. Finally, the robot brings the object to the predetermined destination point.
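The starting point described above lies at a fixed distance from the grasping point along the normal of the grasp surface. A minimal Python sketch of this computation follows; the function name `starting_point`, the parameter name `standoff`, and the example coordinates are hypothetical, and the normal is assumed to point outward from the grasp surface.

```python
import math


def starting_point(grasp_point, surface_normal, standoff):
    """Offset the grasping point by `standoff` along the outward surface normal."""
    norm = math.sqrt(sum(c * c for c in surface_normal))
    if norm == 0:
        raise ValueError("surface normal must be non-zero")
    return tuple(p + standoff * c / norm for p, c in zip(grasp_point, surface_normal))


if __name__ == "__main__":
    # Grasping point on a front face whose outward normal points along +x;
    # the normal does not need to be normalised beforehand.
    print(starting_point((0.5, 0.0, 0.3), (2.0, 0.0, 0.0), 0.1))
```

Since the escape point coincides with the starting point in the experiment, the same value can be reused after the grasp.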

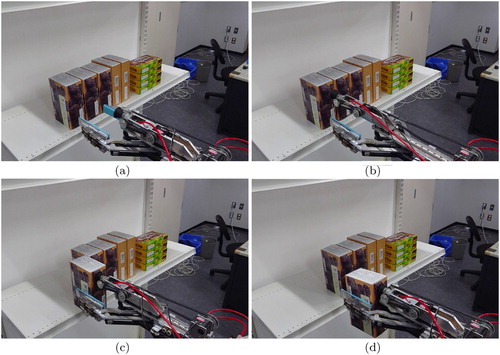

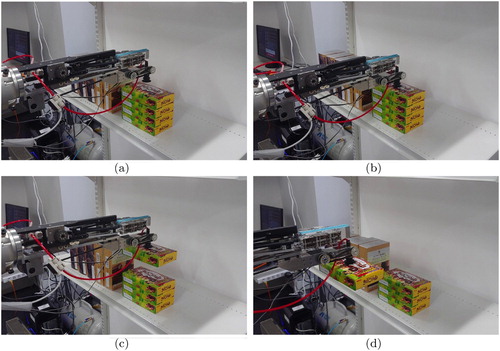

Figures 16 and 17 depict object picking when objects are placed in horizontal and vertical stacks, respectively, with the grasping strategies selected based on the 3D camera recognition results (strategies M4 and M3, respectively).

In the experiment on picking up a horizontally stacked object, the gripper moved to the starting point for picking the object on the far left (Figure 16(a)), and the suction cup made contact with the front of the object at the grasping point (Figure 16(b)). Then, the suction arm contracted to pull out the object, and the object was grasped with the two-fingered gripper (Figure 16(c)), moved to the escape point (Figure 16(d)), and then moved to the destination point.

In the experiment on picking up a vertically stacked object, the gripper moved to the starting point for picking the top object (Figure (a)). The suction gripper approached the grasping point on the top surface of the object with the suction cup facing downwards and sucked the object (Figure (b)). The object was then moved to the escape point (Figure (c)) and finally to the destination (Figure (d)).

5. Conclusions

In this paper, we described object picking with a focus on object arrangement patterns. First, we defined the accessible surface, occluded surface, and grasp surface from the viewpoint of finger access to the object surfaces and grasping of the object. We represented a variety of objects as polyhedral primitives, such as cuboids or hexagonal cylinders, and modeled object arrangements by considering the combination of occluded surfaces of the object model and whether the adjacent object occluding a surface is moveable. Moreover, we defined grasp patterns as combinations of grasp surfaces, derived the graspable condition for arranged objects, and discussed the grasping strategy for picking up an arranged object.

In addition, we introduced a newly developed gripper for picking arranged objects. The gripper comprises a suction gripper and a two-fingered gripper. The suction gripper has a telescopic arm and a swing suction cup. The two-fingered gripper mechanism combines a Scott Russell linkage and a parallel link. This mechanism is advantageous for reaching into narrow spaces and inserting the fingers between objects. We described the design of the grasping strategy for picking up horizontally and vertically arranged cuboidal objects using the newly developed gripper and conducted an experiment on picking up the objects. The basic actions of the gripper can expose the occluded surfaces of an object placed in other arrangement states, so a grasping strategy for picking up objects in those states may be generated by combining these basic actions. In the experiment, the grasping strategy was developed manually. Automatic generation of the grasping strategy is a challenging problem that involves both task planning and grasp planning. In the future, we plan to conduct research on the automatic generation of the grasping strategy.

The object arrangement patterns in the experiment are relatively simple but typical. Objects stored in warehouses or stores may be arranged in more complex patterns in which only parts of the object surfaces are occluded, for instance, when there are gaps between objects or when objects are stacked in a tilted pile. In the future, we also plan to investigate picking up various types of objects arranged in complicated patterns with the aim of automating robotic tasks in distribution warehouses or stores.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes on contributors

Kazuyuki Nagata

Kazuyuki Nagata received his B.S. and Ph.D. in Engineering from Tohoku University, Japan, in 1986 and 1999, respectively. He joined the Tohoku National Industrial Research Institute (TNIRI), Ministry of International Trade and Industry, in 1986, was assigned to the Electrotechnical Laboratory (ETL) in 1991, and was assigned to the Planning Headquarters of the National Institute of Advanced Industrial Science and Technology (AIST) in 2001. He is currently a senior research scientist at AIST. His current research interests include mechanics of robot hands, robotic manipulation, and grasping.

Takao Nishi

Takao Nishi received his Ph.D. in Agriculture from Okayama University in 1999. After working at the National Institute of Advanced Industrial Science and Technology (AIST), he has been a specially appointed associate professor at the Graduate School of Engineering Science, Osaka University since 2020. His research interests include computer vision, intelligent robotic systems, and agricultural machinery.

References

- Berger M, Bachler G, Scherer S. Vision guided bin picking and mounting in a flexible assembly cell. In: Logananthara R, Palm G, Ali M editors. Intelligent Problem Solving. Methodologies and Approaches. IEA/AIE 2000. Lecture Notes in Computer Science. Vol. 1821, Berlin, Heidelberg: Springer; 2000. p. 109–117.

- Kirkegaard J, Moeslund TB. Bin-picking based on harmonic shape contexts and graph-based matching. In: International Conference on Pattern Recognition (ICPR); 2006. p. 581–584.

- Fuchs S, Haddadin S, Keller M, et al. Cooperative bin-picking with Time-of-Flight camera and impedance controlled DLR lightweight robot III. In: IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS); Taipei, Taiwan; 2010. p. 4862–4867.

- Choi C, Taguchi Y, Tuzel O, et al. Voting-based pose estimation for robotic assembly using a 3D sensor. In: IEEE International Conference on Robotics and Automation (ICRA); Saint Paul, MN, USA; 2012. p. 1724–1731.

- Buchholz D, Futterlieb M, Winkelbach S, et al. Efficient bin-picking and grasp planning based on depth data. In: IEEE International Conference on Robotics and Automation (ICRA); Karlsruhe, Germany; 2013. p. 3230–3235.

- Harada K, Yoshimi T, Kita Y. Project on development of a robot system for random picking -grasp/manipulation planner for a dual-arm manipulator. In: IEEE/SICE International Symposium on System Integration (SII); Tokyo, Japan; 2014. p. 583–589.

- Dupuis DC, Leonard S, Baumann MA, et al. Two-fingered grasp planning for randomized bin-picking. In: Robotics: Science and Systems (RSS) 2008 Manipulation Workshop; 2008.

- Domae Y, Okuda H, Taguchi Y, et al. Fast graspability evaluation on single depth maps for bin picking with general grippers. In: IEEE International Conference on Robotics and Automation (ICRA); Hong Kong, China; 2014. p. 1997–2004.

- Harada K, Wan W, Tsuji T, et al. Initial experiments on learning-based randomized bin-picking allowing finger contact with neighboring objects. In: IEEE International Conference on Automation Science and Engineering; Fort Worth (TX), USA; 2016. p. 1196–1202.

- Miller AT, Knoop S, Christensen HI, et al. Automatic grasp planning using shape primitives. In: IEEE International Conference on Robotics and Automation (ICRA); Taipei, Taiwan; 2003. p. 1824–1829.

- Goldfeder C, Allen PK, Lackner C, et al. Grasp planning via decomposition trees. In: IEEE International Conference on Robotics and Automation (ICRA); Roma, Italy; 2007. p. 4679–4684.

- Huebner K, Ruthotto S, Kragic D. Minimum volume bounding box decomposition for shape approximation in robot grasping. In: IEEE International Conference on Robotics and Automation (ICRA); Pasadena, CA, USA; 2008. p. 1628–1633.

- Berenson D, Diankov R, Nishiwaki K, et al. Grasp planning in complex scenes. In: IEEE-RAS International Conference on Humanoid Robots (Humanoids); 2008. p. 42–48.

- Nagata K, Miyasaka T, Nenchev DN, et al. Picking up an indicated object in a complex environment. In: IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS); Taipei, Taiwan; 2010. p. 2109–2116.

- Rao D, Le QV, Phoka T, et al. Grasping novel objects with depth segmentation. In: IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS); Taipei, Taiwan; 2010. p. 2578–2585.

- Dogar MR, Hsiao K, Ciocarlie M, et al. Physics-based grasp planning through clutter. In: Robotics: Science and Systems (RSS); 2012.

- Shiraki Y, Nagata K, Yamanobe N, et al. Modeling of everyday objects for semantic grasp. In: IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN); Edinburgh, UK; 2014. p. 750–755.

- Pas AT, Platt R. Using geometry to detect grasp poses in 3D point clouds. In: The International Symposium on Robotics Research (ISRR); Sestri Levante, Italy; 2015.

- Jonschkowski R, Eppner C, Hofer S, et al. Probabilistic multi-class segmentation for the Picking Challenge. In: IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS); Daejeon, Korea; 2016. p. 1–7.

- Zeng A, Yu KT, Song S, et al. Multi-view self-supervised deep learning for 6D pose estimation in the Amazon Picking Challenge. In: IEEE International Conference on Robotics and Automation (ICRA); Preprint 2017. Available from arXiv:1609.09475 [cs.CV].

- Schwarz M, Lenz C, Garcia GM, et al. Fast object learning and dual-arm coordination for cluttered stowing, picking, and packing. In: IEEE International Conference on Robotics and Automation (ICRA); Brisbane, Australia; 2018.

- Zeng A, Song S, Yu KT, et al. Robotic pick-and-place of novel objects in clutter with multi-affordance grasping and cross-domain image matching. In: IEEE International Conference on Robotics and Automation (ICRA); Brisbane, Australia; Preprint 2018. Available from arXiv:1710.01330 [cs.RO].

- Mitash C, Boularias A, Bekris KE. Improving 6D pose estimation of objects in clutter via physics-aware monte carlo tree search. In: IEEE International Conference on Robotics and Automation (ICRA); Brisbane, Australia; Preprint 2018. Available from arXiv:1710.08577 [cs.RO].

- Lenz I, Lee H, Saxena A. Deep learning for detecting robotic grasps. In: International Conference on Learning Representations (ICLR); Preprint 2013. Available from arXiv:1301.3592 [cs.LG].

- Mahler J, Liang J, Niyaz S, et al. Dex-Net 2.0: Deep learning to plan robust grasps with synthetic point clouds and analytic grasp metrics. In: Robotics: Science and Systems (RSS); Preprint 2017. Available from arXiv:1703.09312 [cs.RO].

- Yan X, Hsu J, Khansari M, et al. Learning 6-DOF grasping interaction via deep 3D geometry-aware representations. In: IEEE International Conference on Robotics and Automation (ICRA); Brisbane, Australia; Preprint 2018. Available from arXiv:1708.07303 [cs.RO].

- Mahler J, Matl M, Liu X, et al. Dex-Net 3.0: Computing robust vacuum suction grasp targets in point clouds using a new analytic model and deep learning. In: IEEE International Conference on Robotics and Automation (ICRA); Brisbane, Australia; Preprint 2018. Available from arXiv:1709.06670 [cs.RO].

- Hatori J, Kikuchi Y, Kobayashi S, et al. Interactively picking real-world objects with unconstrained spoken language instructions. In: IEEE International Conference on Robotics and Automation (ICRA); Brisbane, Australia; Preprint 2018. Available from arXiv:1710.06280 [cs.RO].

- Fang K, Bai Y, Hinterstoisser S, et al. Multi-task domain adaptation for deep learning of instance grasping from simulation. In: IEEE International Conference on Robotics and Automation (ICRA); Brisbane, Australia; 2018. Available from arXiv:1710.06422 [cs.LG].

- Hasegawa S, Wada K, Niitani Y, et al. A three-fingered hand with a suction gripping system for picking various objects in cluttered narrow space. In: IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS); Vancouver, BC, Canada; 2017. p. 1164–1171.

- Kang L, Seo JT, Kim SH, et al. Design and implementation of a multi-function gripper for grasping general objects. Appl Sci. 2019;9(24):5266. https://doi.org/10.3390/app9245266.

- Asaoka T, Nagata K, Nishi T, et al. Detection of object arrangement patterns using images for robot picking. ROBOMECH J. 2018;5(1):1–23.

- Ester M, Kriegel HP, Sander J, et al. A density-based algorithm for discovering clusters in large spatial databases with noise. In: International Conference on Knowledge Discovery and Data Mining (ICKDDM); 1996. p. 226–231.

- Kohonen T. The self-organizing map. Proc IEEE. 1990;78(9):1464–1480.

- Liu D, Sun DM, Qiu ZD. Bag-of-words vector quantization based face identification. In: Proceedings of International Symposium on Electronic Commerce and Security (ISECS) Vol. 2; 2009. p. 29–33.

- Suykens JAK, Vandewalle J. Least squares support vector machine classifiers. Neural Process Lett. 1999;9(3):293–300.

- Koenderink JJ, van Doorn AJ. Surface shape and curvature scales. Image Vis Comput. 1992;10:557–564.

- Feldmar J, Ayache N. Rigid, affine and locally affine registration of free-form surfaces. Int J Comput Vis. 1996;18:99–119.