ABSTRACT

Extensive research has been conducted to realize highly expressive android faces. In contrast, there has been little discussion of which android faces are mechanically better and how advanced each android is, because a fair and meaningful standard index of expressiveness does not exist. Therefore, in this study, we propose a numerical index and three types of visualization methods for facial deformation expressiveness to investigate the mechanical performance of android faces compared to humans. We used a data set of three-dimensional displacement vectors of over 100 facial points on the faces of two androids and three human males to calculate the expressiveness, which reflects how diversely each facial point could move. As a result, we found that the expressiveness of the androids was significantly lower than that of humans, especially in the lower face. The expressiveness of the androids was only approximately 20% of that of humans even along the most expressive axis, and two orders of magnitude lower than that of humans in the three-dimensional evaluation. Thus, our method revealed that recently developed androids lack the mechanical performance to move facial skin widely. This method enables us to reasonably discuss future directions for realizing next-generation androids with expressiveness similar to that of humans.

1. Introduction

Improving facial expressiveness is crucial in android robotics because the deformable facial surface used for communication distinguishes android robots from other humanoid robots. Multiple research teams have attempted to build more expressive android faces using ingenious facial mechanisms [Citation1–19], on the premise that the social effects of androids can be enhanced by improving the motion performance of the face, a remarkable deformable display device for emotional and social cues. For example, androids can exhibit stronger emotional states if they move their faces more widely. Furthermore, they can distinctly communicate several emotions and social signals by clearly generating different facial movements.

However, little research has examined which android faces are mechanically better, and how advanced each android is in terms of expressiveness, on the basis of a fair and meaningful standard index. Traditionally, the number of actuators installed in an android face and their locations are often highlighted and compared among different androids [Citation10,Citation13,Citation18–21]. This comparison is helpful for discussing which facial deformation patterns, such as the action units defined in the Facial Action Coding System [Citation22], android designers intend to implement. However, it is insufficient for evaluating how well these patterns are realized on the facial surface because skin deformations are determined not only by the number and locations of actuators but also by the properties of the soft skin, such as its thickness, material, and elasticity, as pointed out by Tadesse and Priya [Citation15]. Expressiveness should be evaluated based on the realized surface deformations because humans observe the deformation patterns that are produced by the hidden actuators and spread over the facial surface. Moreover, when different androids realize the same deformation patterns and thus the same motion performance, the android with fewer actuators should be rated higher from an efficiency point of view, as suggested by Lin et al. [Citation18]. Although impression evaluation has also been adopted for androids [Citation3,Citation7,Citation8,Citation10,Citation11,Citation17,Citation18,Citation23,Citation24], it is insufficient as an index of expressiveness because the evaluation values vary considerably among human evaluators, which makes it difficult to specify the problematic facial regions where expressiveness is low. In addition, numerically comparing the motions and shape changes of the facial surface between android and human faces [Citation9,Citation10,Citation16,Citation17,Citation25–27] is considered effective for discussing the similarity, or human likeness, of each facial expression, but not the expressiveness that each face possesses.

To effectively improve facial expressiveness in androids, a numerical index of expressiveness is required to fairly and strictly examine the amount of expressiveness that each face and facial region possesses. Based on this expressiveness index, we can investigate the achievement level of expressiveness in recently developed androids compared to that of human faces (e.g. we can say ‘the facial expressiveness of this android is 80% of that of humans on average’). Furthermore, we can determine ways to improve the facial deformation mechanism of each android and examine where its expressiveness is insufficient (e.g. we can say ‘an additional facial mechanism such as this is necessary to improve the expressiveness by 10% near the mouth region’).

However, it is difficult to define expressiveness for each region or point on every face. Given that the facial expressiveness of humans and androids is often mentioned in remarks such as ‘this face is very expressive’ or ‘this new android is more expressive than the others,’ facial expressiveness is a familiar perspective on the motion performance of faces. Nevertheless, there is no clear and practical definition of the expressiveness of faces as display devices that convey a wide variety of information through surface deformation. Although the intensity of facial motions, which has been examined extensively [Citation11,Citation14,Citation15,Citation26], should be considered a component of facial expressiveness, it does not reflect the repertoire of facial motions. Similarly, the realism of facial motions as human facial expressions is an essential feature of androids but does not reflect the repertoire of facial motions. The number of typical facial motions an android face can produce appears to be an appropriate representation of the repertoire of facial motions [Citation1,Citation4,Citation7,Citation8,Citation10,Citation12,Citation28]. However, the expressiveness should be low when several motions in the repertoire are similar.

Therefore, in this study, we define facial expressiveness based on the intensity and similarity of the repertoire of facial motions. Then, we propose a calculation method to obtain a numerical index value for the expressiveness of facial deformation, which can be applied to both androids and humans. Furthermore, we developed three types of visualization methods, i.e. the expressiveness map, expressiveness profile, and representative expressiveness octahedron, to investigate the properties of expressiveness for each face. We evaluated a total of five faces, comprising two android faces and three human faces, with the proposed index to verify its effectiveness by comparing their mechanical performance and efficiency, specifying the expressive and inexpressive facial regions, and determining ways to improve android faces.

2. Method

2.1. Requirements and concept for determining the expressiveness index

In this section, we discuss the six requirements for determining the expressiveness index of a face.

The index should not be influenced by the static properties of the facial surface: Static properties such as size, shape, and skin color should not influence the evaluation of expressiveness in order to precisely evaluate the performance of the facial movement.

The index should be fairly applicable to any facial mechanism: This is necessary to compare the expressiveness among androids having different facial mechanisms, including actuators, transmissions, and skin materials.

The index should be applicable both to androids and humans: This is necessary to evaluate the absolute achievement level of android technologies.

The index should be independent of human likeness: This is crucial in order to accept androids whose facial motions are not human-like, but expressive.

The index should be applicable to all facial regions: This is necessary to specify the expressive/inexpressive regions on faces.

The index should be useful for investigating the expressiveness from different perspectives: This is necessary to determine the improvement direction of the expressiveness in androids.

Based on the above requirements, we propose an expressiveness index that is evaluated at every facial point as the maximum length, area, and volume of an octahedron approximating the movable region of that point. This index satisfies the first four requirements because it focuses on the variations of the skin movements produced on the facial surfaces of androids and humans. Furthermore, the fifth requirement is satisfied by measuring the movements of multiple skin points on android and human faces. Moreover, to satisfy the sixth requirement, we evaluate the movement variations of a skin point along three orthogonal evaluation axes corresponding to the three orthogonal diagonals of the approximate octahedron. Details of the expressiveness index are explained in Section 2.3.

2.2. Data

The proposed expressiveness was calculated according to the variations in the three-dimensional movements of each facial skin point using a data set of three-dimensional displacement vectors of over 100 skin points on the right halves of the faces of two androids (child- and female-type) and three human male adults, measured with an optical motion capture system in a previous study [Citation27]. Figure 1 shows the appearance of the androids and the locations of the measurement points (silver markers) on the faces of the child-type android (left: Affetto [Citation21]), the female-type android (middle: A-lab Co., Ltd., A-lab Female Android Standard Model), and a human male adult (right). The child- and female-type androids differ in the number of degrees of freedom (DoFs) for facial expression, especially in the lower face: the child-type android has 15 DoFs (5 in the upper face and 10 in the lower face) for testing various facial expressions in exploratory research, whereas the female-type android has 8 DoFs (5 in the upper face and 3 in the lower face) to reduce its market price. The previous study [Citation27] measured the displacements of the facial points by tracking the three-dimensional positions of hemispherical infrared reflective markers, 3 mm in diameter, with six infrared cameras (OptiTrack Flex13). These markers were attached to the right halves of the faces at intervals of approximately 10 mm, which was the minimum spacing at which the motion capture system could track the markers correctly.

Figure 1. Locations of the measurement points, namely infrared reflective markers for the motion capturing, on faces of child-type android (left), female-type android (middle), and a human (right) (modified from [Citation27]).

![Figure 1. Locations of the measurement points, namely infrared reflective markers for the motion capturing, on faces of child-type android (left), female-type android (middle), and a human (right) (modified from [Citation27]).](/cms/asset/bdad14cd-ae5f-4be1-ba6b-5820fe81b50f/tadr_a_2103389_f0001_oc.jpg)

The differences in the shape and size of three adult males and two androids were compensated using thin-plate spline warping [Citation29], a non-linear smooth transformation of multivariate data, to appropriately compare the expressiveness of each facial region among these faces. More precisely, the geometric arrangements of facial parts were normalized so that the nine representative facial points (the nose root, outer and inner corners of the eyes, top of the nose, earlobe root, corners of the mouth, tops of the upper and lower lips, and top of the chin) matched among these five faces. Hereafter, we refer to the child- and female-type androids as A1 and A2, and the three humans as H1, H2, and H3.

The displacement vectors were measured for almost every independent movement unit of the androids and humans. The displacement vectors of A1 and A2 were measured for 15 and 8 types of independent facial movements, respectively, each produced to its limit by an individual actuator. Although androids A1 and A2 have 16 and 9 movement units (degrees of freedom) produced by 16 and 9 actuators in total, respectively, we excluded the one facial movement used to open the jaw widely because the skin movements associated with it were exceptionally extensive. On the other hand, 43 independent facial movements were examined for H1, H2, and H3, who were instructed to exhibit each facial movement with their maximum effort. We chose these movements from the 44 independent movements defined in the Facial Action Coding System [Citation22]. The movement excluded here was the one that opens the jaw. Therefore, we obtained a set of 15, 8, and 43 displacement vectors for each facial point on the faces of the child-type android (A1), the female-type android (A2), and the three humans (H1, H2, and H3), respectively. Tables 1, 2, and 3 summarize the measured movements in A1, A2, and the humans (H1, H2, and H3), respectively. The numbers for the facial movements listed in Table 3 correspond to the action unit numbers defined in the Facial Action Coding System.

Table 1. Measured movements in the child-type android (A1).

Table 2. Measured movements in the female-type android (A2).

Table 3. Measured facial movements in humans (H1, H2, and H3).

This set of displacement vectors on each point reflects the diversity in the movement of that point, that is, it reflects the expressiveness of each point on a face. We defined the $j$th displacement vector of the $i$th skin point as $\mathbf{v}_{i,j}$ ($i = 1, \ldots, N$; $j = 1, \ldots, M$), where $N$ and $M$ are the numbers of skin points and facial movements measured for individual androids and humans, respectively.

2.3. Calculation of the expressiveness

2.3.1. Evaluation axis

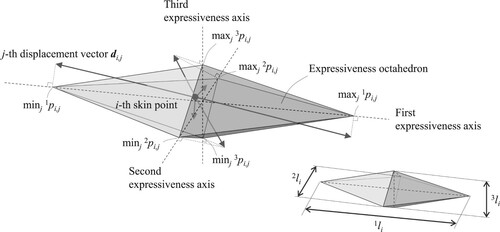

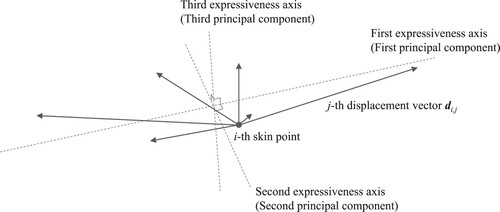

We defined a dedicated three-dimensional coordinate system for each facial point (hereafter called an ‘expressiveness coordinate system’) to calculate its expressiveness by analyzing the variations specific to its displacement vectors. As shown in Figure 2, the expressiveness coordinate system was defined as the orthogonal coordinate system whose first, second, and third axes coincide with the first, second, and third principal components of the end points of the displacement vectors, respectively. The initial position of a skin point and its displacement vectors are represented as a black dot and black arrows in this figure.

Figure 2. Expressiveness coordinate system defined according to the displacement vectors on a skin point.

Here, the principal components are the orthogonal axes that pass through the average point of the displacement vector endpoints. The first principal component is the axis along which the variance of the vector endpoints is maximized, whereas the second principal component is the axis orthogonal to the first along which the remaining variance is maximized. The plane defined by the first and second principal components therefore maximizes the variance of the vector endpoints projected onto it. The third principal component is the axis normal to this plane.

The first, second, and third axes of the expressiveness coordinate system are referred to as the first, second, and third expressiveness axes, respectively, and can be used to investigate the variations in facial movements at each facial point. For example, different types of facial movements are differentiated most prominently along the direction of the first expressiveness axis. Additionally, based on the contribution ratio of the first principal component, we investigated whether the displacement vectors were distributed one-dimensionally or multi-dimensionally.
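As an illustration, the construction of the expressiveness axes for a single skin point can be sketched in Python as follows. This is a minimal sketch under stated assumptions rather than the implementation used in this study: the names `jacobi_eigen` and `expressiveness_axes` are hypothetical, the covariance is normalized by the number of vectors, and the eigen-decomposition is a plain cyclic Jacobi rotation written with the standard library only. Because principal component analysis is invariant to translation, the displacement vectors themselves are passed in place of their end points.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]


def _matmul(a: Mat3, b: Mat3) -> Mat3:
    """Product of two 3x3 matrices."""
    return [[sum(a[r][k] * b[k][c] for k in range(3)) for c in range(3)]
            for r in range(3)]


def _transpose(a: Mat3) -> Mat3:
    return [[a[c][r] for c in range(3)] for r in range(3)]


def jacobi_eigen(sym: Mat3, max_sweeps: int = 50) -> Tuple[List[float], List[Vec3]]:
    """Eigenvalues and unit eigenvectors of a symmetric 3x3 matrix,
    sorted so that the largest eigenvalue comes first."""
    a = [row[:] for row in sym]
    v: Mat3 = [[1.0 if r == c else 0.0 for c in range(3)] for r in range(3)]
    scale = sum(x * x for row in a for x in row)
    for _ in range(max_sweeps):
        off = sum(a[p][q] ** 2 for p in range(3) for q in range(p + 1, 3))
        if off <= 1e-24 * scale:  # off-diagonal part is negligible
            break
        for p in range(3):
            for q in range(p + 1, 3):
                if a[p][q] == 0.0:
                    continue
                # Rotation angle that zeroes the (p, q) element.
                diff = a[q][q] - a[p][p]
                phi = math.pi / 4 if diff == 0.0 else 0.5 * math.atan(2.0 * a[p][q] / diff)
                c, s = math.cos(phi), math.sin(phi)
                j: Mat3 = [[1.0 if r == k else 0.0 for k in range(3)] for r in range(3)]
                j[p][p], j[q][q], j[p][q], j[q][p] = c, c, s, -s
                a = _matmul(_transpose(j), _matmul(a, j))
                v = _matmul(v, j)  # columns of v accumulate the eigenvectors
    order = sorted(range(3), key=lambda k: a[k][k], reverse=True)
    values = [a[k][k] for k in order]
    vectors = [(v[0][k], v[1][k], v[2][k]) for k in order]
    return values, vectors


def expressiveness_axes(displacements: Sequence[Vec3]) -> Tuple[List[Vec3], float]:
    """Return the three expressiveness axes (unit vectors) of one skin point and
    the contribution ratio of the first principal component."""
    n = len(displacements)
    mean = [sum(d[k] for d in displacements) / n for k in range(3)]
    cov = [[sum((d[r] - mean[r]) * (d[c] - mean[c]) for d in displacements) / n
            for c in range(3)] for r in range(3)]
    values, axes = jacobi_eigen(cov)
    total = sum(values)
    ratio = values[0] / total if total > 0.0 else 0.0
    return axes, ratio
```

A contribution ratio close to one indicates that the displacement vectors of the point are distributed almost along a single line, whereas a lower ratio indicates a two- or three-dimensional distribution.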

2.3.2. Expressiveness octahedron

The existence region of the displacement vectors was approximated with an octahedron, referred to as the ‘expressiveness octahedron,’ whose three diagonals corresponded to the expressiveness axes. We defined the diagonal lengths along the first, second, and third expressiveness axes of the $i$th skin point as $d_{i,1}$, $d_{i,2}$, and $d_{i,3}$, respectively. Furthermore, we determined these lengths as the distribution widths of the displacement vectors along each expressiveness axis, as shown in Figure .

The distribution widths were calculated based on the principal component scores of the displacement vectors, defined as the value of each displacement vector projected onto each expressiveness axis, namely, the principal component. We defined the principal component scores of the $j$th displacement vector of the $i$th skin point against the first, second, and third principal components as $s_{i,j,1}$, $s_{i,j,2}$, and $s_{i,j,3}$, respectively. Then, we calculated the diagonal lengths $d_{i,1}$, $d_{i,2}$, and $d_{i,3}$ as the differences between the maximum and minimum principal component scores:

$$d_{i,k} = \max_{j} s_{i,j,k} - \min_{j} s_{i,j,k} \qquad (k = 1, 2, 3)$$

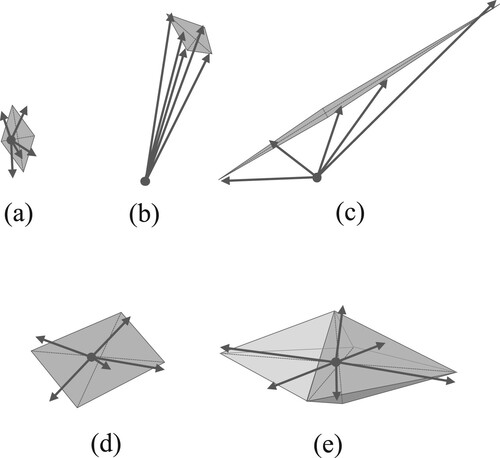

Figure shows examples of the expressiveness octahedron for five facial skin points (black dots) with different variations of displacement vectors (black arrows). The shape and size of the expressiveness octahedron differ depending on the existence region of the displacement vectors: the octahedron is small when all displacement vectors are small (Figure (a)) or when all displacement vectors are similar (Figure (b)). Conversely, the octahedron is large when the displacements are large and differ in one, two, and three directions (Figure (c–e), respectively). Note that the octahedrons in Figure (a–d) are depicted as almost flat.

2.3.3. 1D, 2D, and 3D expressiveness

We calculated three different types of expressiveness, namely one-dimensional (1D), two-dimensional (2D), and three-dimensional (3D) expressiveness, for each point from its expressiveness octahedron. The expressiveness can be calculated from the lengths of the three diagonals of the expressiveness octahedron. The 1D expressiveness of the $i$th skin point was calculated as the diagonal length along the first expressiveness axis, namely $d_{i,1}$. The 2D expressiveness was calculated as the area of the cross-section of the expressiveness octahedron in the plane defined by the first and second expressiveness axes, namely $d_{i,1} d_{i,2} / 2$. Lastly, the 3D expressiveness was calculated as the volume of the expressiveness octahedron, namely $d_{i,1} d_{i,2} d_{i,3} / 6$.
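The calculation described above can be sketched for one skin point as follows. This is a minimal sketch, assuming that the principal component scores are already available as (first, second, third) triples, and that the area and volume follow the standard formulas for a rhombus and for an octahedron with three mutually orthogonal diagonals. The function names `diagonal_lengths` and `expressiveness` are hypothetical.

```python
from typing import Sequence, Tuple

Scores = Tuple[float, float, float]


def diagonal_lengths(scores: Sequence[Scores]) -> Tuple[float, float, float]:
    """Distribution width (maximum minus minimum score) along each expressiveness axis."""
    d1 = max(s[0] for s in scores) - min(s[0] for s in scores)
    d2 = max(s[1] for s in scores) - min(s[1] for s in scores)
    d3 = max(s[2] for s in scores) - min(s[2] for s in scores)
    return d1, d2, d3


def expressiveness(d1: float, d2: float, d3: float) -> Tuple[float, float, float]:
    """1D, 2D, and 3D expressiveness of an octahedron with orthogonal diagonals d1, d2, d3."""
    length = d1                    # diagonal along the first axis
    area = d1 * d2 / 2.0           # rhombus spanned by the first and second diagonals
    volume = d1 * d2 * d3 / 6.0    # octahedron spanned by all three diagonals
    return length, area, volume


# Hypothetical scores of four displacement vectors of one skin point (in mm).
example_scores = [(-2.0, 0.0, 0.0), (2.0, 1.0, 0.0), (0.0, -1.0, 0.5), (0.0, 0.0, -0.5)]
example_diagonals = diagonal_lengths(example_scores)
example_expressiveness = expressiveness(*example_diagonals)
```

With lengths in millimetres, the three values carry the units mm, mm², and mm³, which is why they are compared separately rather than combined into one score.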

2.4. Visualization methods for expressiveness

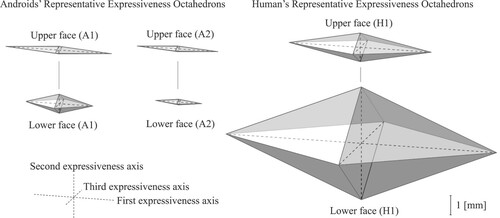

We proposed three different types of visualization methods for 1D, 2D, and 3D expressiveness: the ‘expressiveness map,’ ‘expressiveness profile,’ and ‘representative expressiveness octahedron.’ The expressiveness map is a spatial distribution plot of the expressiveness on a face, illustrated as a two-dimensional color map. The expressiveness profile, presented as a line plot, is a compressed representation of the spatial distribution of expressiveness along the facial midline. The representative expressiveness octahedron, drawn as a single octahedron, is an even more compressed representation of the expressiveness in a facial region.

First, the spatial resolution of expressiveness was enhanced to obtain an expressiveness map with a higher resolution over the face. Specifically, we performed natural neighbor interpolation [Citation30] on the displacement vectors to calculate lattice point data at intervals of 0.5 mm on the coronal plane, which is the two-dimensional plane that divides the head into front and back halves. We defined the coronal plane as the x–y plane, where the origin is the top of the chin and the y-axis points towards the top of the head. As a result, the y-axis corresponds to the facial midline in the expressiveness map.

Second, we calculated the average value of the expressiveness at each y position to obtain the expressiveness profile. Because the averaged expressiveness varies along the facial midline, the line plot of the averaged values along the y-axis shows which facial regions, such as the forehead, eye, cheek, mouth, and jaw regions, have higher expressiveness.
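The averaging step behind the expressiveness profile can be sketched as follows. This is a minimal sketch, assuming that the interpolated map is available as (x, y, expressiveness) triples on a regular lattice so that points in the same row share exactly the same y value. The function name `expressiveness_profile` is hypothetical, and the use of the population standard deviation is an assumption, since the text does not state which form was used.

```python
from collections import defaultdict
from statistics import mean, pstdev
from typing import Dict, Iterable, List, Tuple


def expressiveness_profile(
    lattice: Iterable[Tuple[float, float, float]]
) -> List[Tuple[float, float, float]]:
    """Return (y, mean expressiveness, standard deviation) for each lattice row,
    ordered from the chin (small y) towards the top of the head (large y)."""
    by_y: Dict[float, List[float]] = defaultdict(list)
    for _x, y, value in lattice:
        by_y[y].append(value)  # group lattice points that share a y position
    return [(y, mean(values), pstdev(values)) for y, values in sorted(by_y.items())]


# Hypothetical lattice data: two points at y = 10.0 mm and one at y = 10.5 mm.
example_profile = expressiveness_profile(
    [(0.0, 10.0, 2.0), (0.5, 10.0, 4.0), (0.0, 10.5, 6.0)]
)
```

Averaging over a range of y positions instead of a single row gives regional values, such as those for the lower and upper faces.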

Lastly, we calculated the average expressiveness in facial regions to obtain a representative expressiveness octahedron. By drawing a single octahedron for each facial region based on the region's averaged expressiveness, we were able to obtain the regional characteristics of expressiveness intuitively.
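The text does not spell out how the diagonal lengths of a representative octahedron are obtained from the regional averages. One plausible reading, assuming the rhombus-area and octahedron-volume relations (area = d1·d2/2 and volume = d1·d2·d3/6), is to invert those relations, as sketched below. The function name `representative_diagonals` is hypothetical, and this inversion is an assumption rather than the confirmed procedure of this study.

```python
from typing import Tuple


def representative_diagonals(e1: float, e2: float, e3: float) -> Tuple[float, float, float]:
    """Diagonal lengths of an octahedron whose length, cross-sectional area, and
    volume equal the averaged 1D, 2D, and 3D expressiveness (e1, e2, e3)."""
    d1 = e1
    d2 = 2.0 * e2 / e1 if e1 > 0.0 else 0.0  # from e2 = d1 * d2 / 2
    d3 = 3.0 * e3 / e2 if e2 > 0.0 else 0.0  # from e3 = e2 * d3 / 3
    return d1, d2, d3


# Hypothetical regional averages (mm, mm^2, mm^3), not values from this study.
example_diagonals = representative_diagonals(4.0, 4.0, 4.0 / 3.0)
```

Note that diagonals recovered in this way generally differ from the averages of the per-point diagonal lengths, because the average of a product is not the product of the averages.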

3. Results

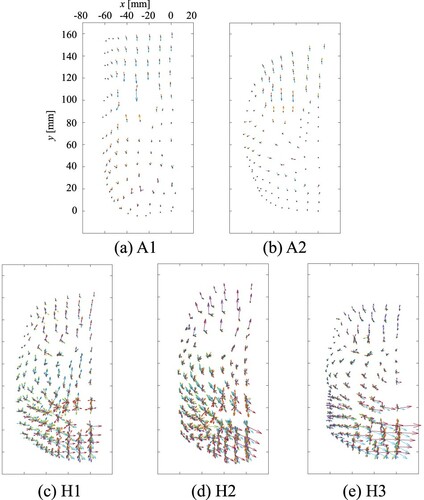

3.1. Displacement vectors

Figure 5 shows the variations of the displacement vectors at each facial point for A1, A2, H1, H2, and H3 on the x–y plane. The displacement vectors for all measured facial movement patterns are superimposed in these figures. Each arrow represents one displacement vector, and arrows of the same color were measured for the same facial movement. The jaw, mouth, cheek, nose, eye, and forehead regions are located at approximately y = 10, 30, 50, 70, 100, and 120 mm, respectively. The vertical line x = 0 is approximately the facial midline.

Figure 5. Variations of displacement vectors in the two androids (A1 and A2) and three humans (H1, H2, and H3).

The figure shows how the displacement vectors vary at each facial point. For example, the vectors were longer in the forehead region (around y = 120) than in the cheek region (around y = 50), whereas the vector orientations were more similar to one another in the forehead region than in the mouth region (around y = 30). Additionally, the variations of the displacement vectors differed between androids and humans: the vectors were prominently longer in humans than in androids.

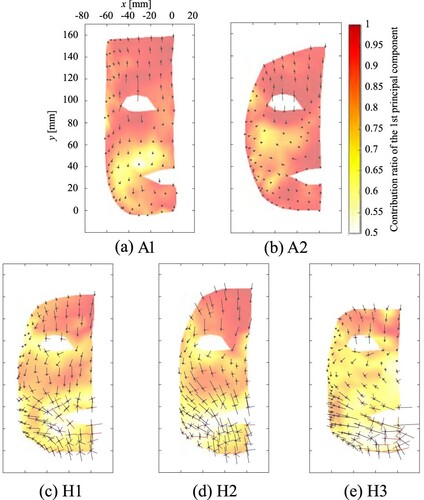

3.2. Expressiveness axes

Figure 6 shows the directions of the expressiveness axes at each facial point of A1, A2, H1, H2, and H3 on the x–y plane. The first, second, and third expressiveness axes are indicated as black, red, and blue line segments, respectively. The lengths of these line segments were determined based on the three diagonal lengths of the expressiveness octahedron at each facial point. The heat maps show the contribution ratio of the first principal component. The red and yellow regions indicate that the ratio was approximately 1 and below 0.7, respectively.

Figure 6. Spatial distributions of the contribution ratio of the first principal components in two androids (A1 and A2) and three humans (H1, H2, and H3).

The ratio was approximately one in the forehead region in both androids and humans. This indicates that the endpoints of the displacement vectors were distributed almost on a straight line at each facial point in this region; in other words, different movements of the skin point were expressed almost one-dimensionally. Conversely, the ratio was below 0.7 in several regions, especially around the mouth, indicating that different movements were expressed two- or three-dimensionally in these regions.

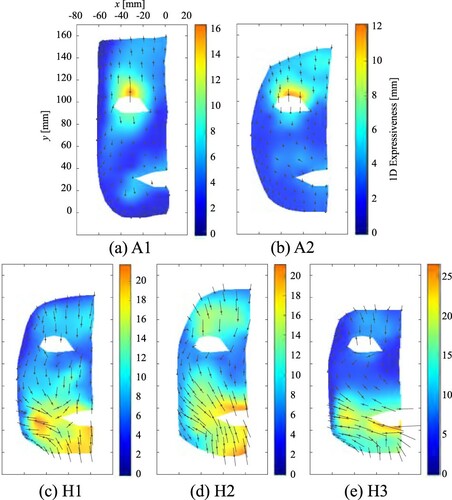

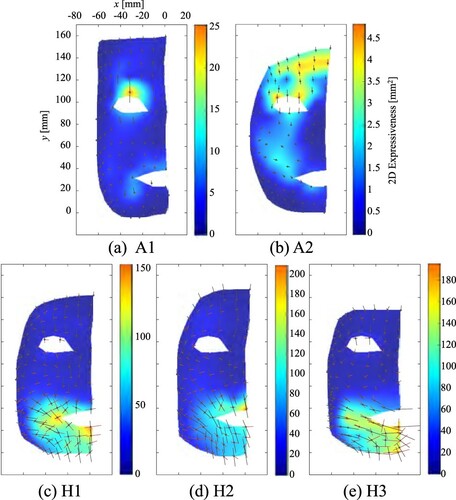

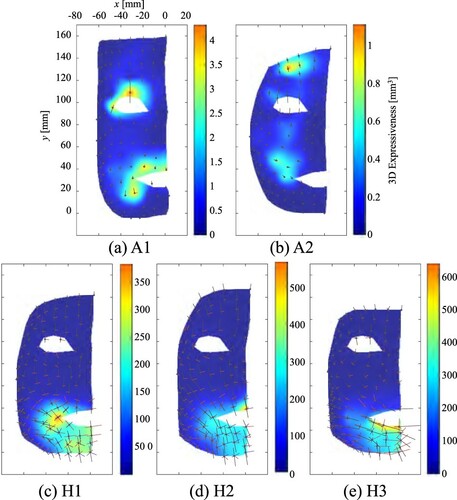

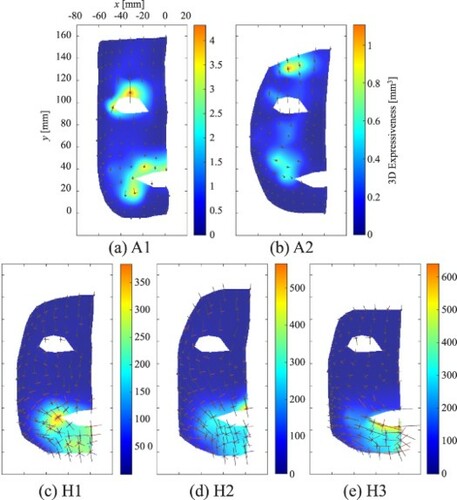

3.3. Expressiveness map

Figures 7, 8, and 9 show the 1D, 2D, and 3D expressiveness maps, respectively. The line segments depicted on the facial points represent the diagonals of the expressiveness octahedrons used to calculate the expressiveness at each facial point. For example, only the diagonals along the first expressiveness axes are depicted in the 1D expressiveness map, whereas the diagonals along the first, second, and third expressiveness axes are depicted in the 3D expressiveness map.

The spatial distributions of the calculated expressiveness are depicted as color maps, where the orange and blue regions represent the regions of higher and lower expressiveness in a face, respectively. The ranges of these color maps differ among faces, that is, the maximum value of each color bar was determined based on the maximum expressiveness score in each face, which is summarized in Table 4.

Table 4. Maximum expressiveness score in each face.

From the figures, we can see which facial regions are more expressive in each face. For example, the upper eyelid region (around y = 110) was most expressive in the androids (A1 and A2) in the 1D expressiveness map. Conversely, the mouth regions (around y = 30) were the most expressive regions in humans (H1, H2, and H3) in the 1D expressiveness map. Additionally, it is noteworthy that a common blue region with a relatively low 1D expressiveness was observed in the central region of the human faces (H1, H2, and H3), which was identified as the continuous region comprising the nose, lower eye, and outer eye corner regions, located at approximately .

Furthermore, we observed that the most expressive regions were not always the same among the 1D, 2D, and 3D expressiveness maps, even within the same face. For example, the upper eye region was the most expressive in A2 in the 1D expressiveness map, whereas the upper forehead region was the most expressive in the 3D expressiveness map.

3.4. Expressiveness profile

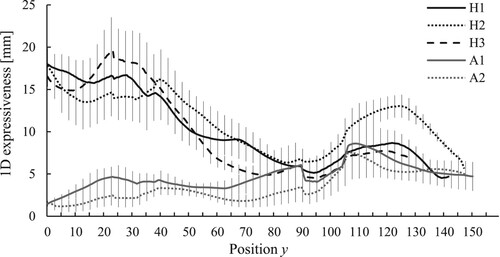

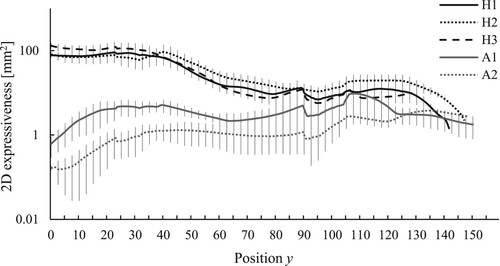

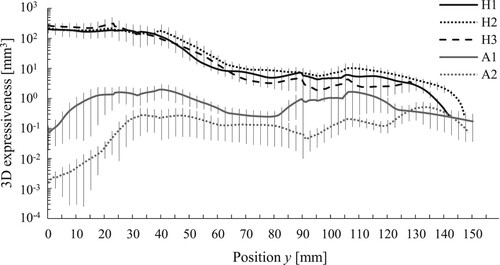

Figures 10, 11, and 12 show the 1D, 2D, and 3D expressiveness profiles, respectively. The horizontal axis indicates the position along the y-axis, whereas the vertical axis indicates the average expressiveness score at each y position. These graphs also depict the standard deviations of expressiveness. Figures 11 and 12 are semilogarithmic graphs.

While the expressiveness profiles were similar between the two androids and among the three humans, they differed between humans and androids, especially in the lower face regions, whose y positions were approximately below 60. The 1D, 2D, and 3D expressiveness profiles of humans exhibited higher peaks in the lower face regions than in the upper face regions. In contrast, the expressiveness profiles of androids were relatively flat and exhibited their highest peaks in the upper face regions.

Table 5 summarizes the averages of expressiveness in the lower and upper faces for each face. The standard deviations are listed in parentheses. Herein, we set the boundary between the upper and lower faces at the line y = 60 mm, which passes through the top of the nose.

Table 5. Average and standard deviations of expressiveness in the lower and upper faces.

We also found that the difference in expressiveness between androids and humans was prominent in the lower face, and that this difference increased with the number of expressiveness dimensions. Specifically, on average, the 1D expressiveness scores of A1 and A2 were approximately 25% and 17% of those of humans, respectively. Additionally, the scores of the androids were one and two orders of magnitude lower than those of humans in 2D and 3D expressiveness, respectively. In contrast, the difference in the upper face between androids and humans was relatively small, especially in 1D expressiveness.

Furthermore, we observed a difference in expressiveness between A1 and A2. The 1D, 2D, and 3D expressiveness of A1 was higher than that of A2 in both the upper and lower faces. In particular, on average, the 3D expressiveness of A1 was eleven times larger than that of A2 in the lower face, as shown in Table 5.

3.5. Representative expressiveness octahedron

Figure 13 shows the representative expressiveness octahedrons of the lower and upper faces for A1, A2, and H1. Table 6 summarizes the diagonal lengths of each octahedron, calculated according to the averaged expressiveness shown in Table 5. Because the diagonal lengths of H1, H2, and H3 were similar, only the representative expressiveness octahedron of H1 is depicted in Figure 13.

Figure 13. Comparison of the shape and size of the representative expressiveness octahedrons of the two androids (A1 and A2) and a human (H1).

Table 6. Diagonal lengths of the representative expressiveness octahedrons.

We observed that the representative expressiveness octahedron of the lower face of H1 was remarkably thick and large. In contrast, the octahedron of the upper face of H1 was small and elongated. Its diagonal lengths along the first, second, and third expressiveness axes were one-half, one-third, and one-quarter of those of the lower face octahedron, respectively, as shown in Table 6.

Although H1's upper face octahedron was smaller than its lower face octahedron, it was still larger than the upper face octahedrons of A1 and A2: its diagonal length along the first expressiveness axis was approximately 1.5 times longer than theirs. Furthermore, the upper face octahedrons of the androids were elongated in one direction and longer than their lower face octahedrons.

4. Discussion

4.1. Usefulness of the proposed index

The proposed evaluation index of facial deformation expressiveness depicted how and where the variations in the displacements of the facial points were quantitatively inferior in androids compared to humans. Considering the scores of 1D, 2D, and 3D expressiveness corresponded approximately to the maximum length, area, and volume of the existence region of displacement vectors, respectively, we could easily determine the amount of insufficiency in the displacements for androids to achieve expressiveness similar to humans. For example, according to Table , the maximum displacement length should be increased approximately by 5–10 mm for androids to have a level of 1D expressiveness similar to humans.

We also proposed three visualization methods of expressiveness, which were useful for investigating the characteristics of facial expressiveness. First, the expressiveness map was effective for investigating which facial regions have higher or lower expressiveness on each face. Second, the expressiveness profile was effective for comparing the expressiveness contours of different faces. A semilogarithmic graph was used for the 2D and 3D expressiveness profiles because the 2D and 3D expressiveness of humans in the lower face was orders of magnitude higher than that of the androids. Third, the size and shape of the representative expressiveness octahedrons were used to compare the expressiveness among different faces.

The proposed method can be applied to any android to evaluate and compare mechanical performance based on the proposed expressiveness index. Furthermore, we can calculate the efficiency of the mechanical design for facial expressions by dividing the expressiveness in any facial region by the number of actuators installed in that region. This design efficiency indicates how efficiently the installed actuators realize the expressiveness on average. Here, the lower face 1D expressiveness of A1 and A2 was 3.5 and 2.4, respectively, as shown in Table 5, while the numbers of actuators for the facial movements in the lower face were 10 (numbers 6–15 in Table 1) and 3 (numbers 6–8 in Table 2), respectively. In this case, the design efficiency of the 1D expressiveness in the lower face is 0.35 for A1 and 0.8 for A2. Consequently, we can say that the mechanical design of A2 is 2.3 times more efficient than that of A1 in the lower face. The lower efficiency of A1 in the lower face is presumably because the skin displacements produced by several actuators are similar or relatively small, and therefore several actuators do not significantly increase the variations of skin movements. To date, several types of android robots have been created with different kinds of facial mechanisms. Still, it has been difficult to state clearly how a newly developed android is novel and more advanced than the others in mechanical performance. The proposed expressiveness index can create a favorable situation in which android engineers can clearly claim that their new design increases the mechanical performance of android faces effectively and efficiently. This contributes to promoting effective competition in android robotics.
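The design efficiency arithmetic above can be reproduced with a few lines of Python. This is a minimal sketch: the function name `design_efficiency` is hypothetical, and the inputs are the lower face 1D expressiveness values and actuator counts quoted in the text.

```python
def design_efficiency(regional_expressiveness: float, actuator_count: int) -> float:
    """Expressiveness of a facial region divided by the number of actuators in it."""
    if actuator_count <= 0:
        raise ValueError("actuator_count must be positive")
    return regional_expressiveness / actuator_count


# Lower face 1D expressiveness and actuator counts quoted in the text.
efficiency_a1 = design_efficiency(3.5, 10)  # about 0.35
efficiency_a2 = design_efficiency(2.4, 3)   # about 0.8
efficiency_ratio = efficiency_a2 / efficiency_a1  # about 2.3
```

The same function applies to 2D and 3D expressiveness, although the resulting efficiencies then carry different units and should not be compared across dimensions.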

The most significant advantage of the proposed index is that android robot researchers can obtain a stable and objective evaluation score for their android face. Since the proposed index depends on the ‘mechanical’ properties of a face and not on ‘psychological’ factors of human evaluators, researchers can have a rigorous discussion on the mechanical design of android faces. The hardware performance of android faces can be expected to improve with this new evaluation index, just as the performance of display monitors has improved with physical evaluation indices such as color gamut and screen luminance.

4.2. Characteristics of expressiveness determined in androids and humans

Herein, we summarize the characteristics of expressiveness determined in androids and humans in this study. First, we observed that human faces exhibit three-dimensional expressiveness in both the upper and lower faces. The expressiveness was particularly prominent in the lower face, where it was on average 26 times higher than that in the upper face. This higher expressiveness was attributed to the particular subcutaneous structure in the lower face, that is, the skin surface in the lower face can move widely in any direction owing to the presence of a thick deformable fat layer that supports it and is not fixed to the bone.

Second, the maximum 1D, 2D, and 3D expressiveness of H1, H2, and H3 were found to be 26.4 mm, 208.5 mm², and 640.2 mm³, respectively. These values of expressiveness can serve as numerical targets for developing future androids with expressiveness similar to that of humans. The skin materials and structures should be improved to accommodate these levels of skin surface movement.

Third, we found that the expressiveness of the upper faces in androids tends to be one-dimensional. On average, the 1D expressiveness was 1.7 times higher in upper faces than in lower faces. According to Figure , this high 1D expressiveness can be attributed to the fact that the upper eyelids can move widely.

Fourth, the 1D, 2D, and 3D expressiveness in the lower faces was found to be 4–6 times, one order of magnitude, and two orders of magnitude higher in humans than in androids, respectively. This indicates that android designers should first improve the 1D expressiveness 4- to 6-fold, although this alone is insufficient to achieve human-like 2D and 3D expressiveness.

Fifth, we found that the 1D expressiveness of the upper face, whose expressiveness axes were almost aligned with the y-axis, was comparable between androids and humans. In contrast, the 2D and 3D expressiveness was one order of magnitude lower in androids than in humans. This indicates that the 2D expressiveness can be enhanced by improving facial movements that move skin points along the x-axis in the upper face. For example, facial motions that bring the eyebrows or lower eyelids inward are candidates.

Sixth, human faces were found to have common regions with relatively low expressiveness between the upper and lower faces. These regions are candidate regions where a soft android skin can be fixed to a hard inner shell representing a human skull. Given that android skin is too soft to maintain its default shape, it is necessary to prepare several supporting points on the inner shell. However, expressiveness could be lost around the points where the skin is fixed. Therefore, the existence of low-expressiveness regions in human faces is favorable for android designers.

4.3. Limitation

One limitation of the proposed method is the considerable time and cost required to set up the measurement environment for skin displacement vectors, namely a 3D motion capture system. This system requires a set of expensive infrared cameras and reflective markers in exchange for accurately obtaining 3D motion data of facial points. Moreover, the positions and optical parameters of the cameras, the intervals between reflective markers, and the lighting conditions must be carefully determined for the motion capture system.

However, displacement vectors obtained with other measurement environments can also be used to calculate the proposed expressiveness because the calculation method is independent of the measurement method. One alternative is to calculate displacement vectors called ‘optical flows’, which are estimated from the displacement of each pixel between consecutive frames of an image sequence [Citation31]. This method is more convenient than 3D motion capture systems because it requires only one standard camera and no facial markers. On the other hand, the calculated expressiveness is expected to be significantly lower than the actual value in several facial regions because of the inaccuracy of motion estimation based on optical flow, as explained in a survey of optical flow methods [Citation31]. For example, the optical flows will be smaller than the actual displacements around the lateral facial regions because the displacements there are nearly perpendicular to the camera image plane. Also, the optical flows around the cheek and forehead regions might be zero even when these regions are moving because their appearance is uniform, especially in androids. Therefore, when optical flows are used to calculate the expressiveness, the accuracy of the estimated displacement vectors should be carefully evaluated in each facial region. The displacement vector data used in this study, namely 3D motion capture data with facial markers, is an appropriate ground truth for this accuracy evaluation.
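The underestimation around lateral facial regions can be illustrated with a small sketch. It assumes an idealized orthographic camera looking along the z-axis, so that only the component of a 3D displacement lying in the image plane is visible to a single camera. The function name `apparent_displacement` and the two sample vectors are hypothetical and are not taken from the data set used in this study.

```python
import math


def apparent_displacement(displacement, view_axis=(0.0, 0.0, 1.0)):
    """Length of the part of a 3D displacement that lies in the image plane.

    Assumes an orthographic camera whose viewing direction is `view_axis`;
    perspective effects and estimation noise are ignored.
    """
    norm = math.sqrt(sum(c * c for c in view_axis))
    unit = [c / norm for c in view_axis]
    # Component of the displacement along the viewing direction (invisible).
    along = sum(d * u for d, u in zip(displacement, unit))
    # Remaining component, parallel to the image plane (visible).
    in_plane = [d - along * u for d, u in zip(displacement, unit)]
    return math.sqrt(sum(c * c for c in in_plane))


def length(vector):
    return math.sqrt(sum(c * c for c in vector))


# Hypothetical displacements in mm: one parallel to the image plane,
# one mostly along the viewing direction (as on a lateral facial region).
frontal = (0.0, 5.0, 0.0)
lateral = (0.0, 1.0, 4.9)

for label, vec in (("frontal", frontal), ("lateral", lateral)):
    print(f"{label}: actual {length(vec):.2f} mm, "
          f"apparent {apparent_displacement(vec):.2f} mm")
```

Under these assumptions, both points move by roughly the same distance, yet the lateral one appears to move far less. This is why expressiveness computed from single-camera optical flow would need region-wise validation against 3D data.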

Another limitation concerns three aspects of the proposed expressiveness. First, we cannot discuss the asymmetry of the expressiveness because this study focused only on half faces. Since it is well known that human faces are asymmetric, the expressiveness may be asymmetric as well. If we reveal how the asymmetry of expressiveness in human faces contributes to conveying human-likeness or friendliness to others, this asymmetry can be effectively incorporated into android face design. Second, we cannot discuss how the proposed mechanical expressiveness corresponds to the expressiveness perceived by human observers. If we know the relationship between mechanical and perceived expressiveness, we can determine the design target of the mechanical expressiveness that satisfies the design requirement of the perceived expressiveness. We will investigate this relationship in future work by examining how humans evaluate the expressiveness of android faces with different mechanical expressiveness. Third, the proposed expressiveness evaluates the variation of each facial point's movements independently; therefore, it does not evaluate the variation of producible deformation patterns over the entire facial surface. Even when androids or humans have the same scores in the proposed expressiveness, those that can move several facial regions more independently can exhibit a larger number of facial deformations. Considering that humans cannot express some of the facial motions listed in Table completely independently, another type of expressiveness index should be proposed to evaluate the variation of deformation patterns that any combination of actuators or muscles can produce.

5. Conclusion

In this study, we proposed a numerical index for the facial deformation expressiveness in androids and humans. Based on the proposed index, we investigated the difference in facial expressiveness among faces and facial regions. As a result, we determined that the expressiveness of two recently developed androids was approximately 25% and 17% of that of humans in the lower faces, even in one-dimensional evaluation, wherein the variation of facial point movements was calculated along the axis with maximized range. The proposed method revealed how recent androids lack mechanical performance in terms of moving the facial skin widely. Moreover, we were able to numerically discuss the future directions of hardware improvement of android faces to realize next-generation androids with expressiveness similar to that of humans.

Author Contributions

H. I. designed the study, analyzed the data, and prepared the manuscript.

Acknowledgements

The author thanks the members of the Emergent Robotics Laboratory from the Department of Adaptive Machine Systems at the Graduate School of Engineering at Osaka University for their helpful comments.

Disclosure statement

The author declares that this study received funding from A-Lab, Co., Ltd. The funder was not involved in the study design, collection, analysis, or interpretation of data, the writing of this article, or the decision to submit it for publication.

Additional information

Notes on contributors

Hisashi Ishihara

Hisashi Ishihara received M.S. and Ph.D. degrees in engineering from Osaka University in 2009 and 2014, respectively. He has been an associate professor at Osaka University, Osaka, Japan, since 2019. He was a PRESTO/Sakigake researcher at the Japan Science and Technology Agency from 2016 to 2020. His research field is android robotics.

References

- Kobayashi H, Tsuji T, Kikuchi K. Study of a face robot platform as a Kansei medium. In: Proceedings of the 26th Annual Conference of the IEEE Industrial Electronics Society; Vol. 1; Nagoya, Japan; 2000. p. 481–486.

- Hanson D, Pioggia G, Dinelli S, et al. Identity emulation (IE): bio-inspired facial expression interfaces for emotive robots. In: AAAI Mobile Robot; Edmonton, Alberta, Canada; 2002.

- Kobayashi H, Ichikawa Y, Senda M, et al. Realization of realistic and rich facial expressions by face robot. In: Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems; Vol. 2; Las Vegas, NV, USA; 2003. p. 1123–1128.

- Wu W, Men Q, Wang Y. Development of the humanoid head portrait robot system with flexible face and expression. In: Proceedings of IEEE International Conference on Robotics and Biomimetics; Shenyang, China; 2004. p. 757–762.

- Pioggia G, Ahluwalia A, Carpi F, et al. FACE: facial automaton for conveying emotions. Appl Bionics Biomech. 2004;1(2):91–100.

- Hashimoto T, Senda M, Kobayashi H. Realization of realistic and rich facial expressions by face robot. In: Proceedings of the IEEE Conference on Robotics and Automation. TExCRA Technical Exhibition Based; Minato, Japan; 2004. p. 37–38.

- Berns K, Hirth J. Control of facial expressions of the humanoid robot head ROMAN. In: Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems; Beijing, China; 2006. p. 3119–3124.

- Hashimoto T, Hiramatsu S, Kobayashi H. Development of face robot for emotional communication between human and robot. In: Proceedings of the IEEE International Conference on Mechatronics & Automation; Luoyang, China; 2006. Available from: http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.127.8768.

- Hashimoto M, Yokogawa C, Sadoyama T. Development and control of a face robot imitating human muscular structures. In: Proceedings of the International Conference on Intelligent Robots and Systems; Beijing, China; 2006. p. 1855–1860.

- Hashimoto T, Hiramatsu S, Kobayashi H. Dynamic display of facial expressions on the face robot made by using a life mask. In: Proceedings of the 8th IEEE-RAS International Conference on Humanoid Robots; Daejeon, Korea; 2008. p. 521–526.

- Allison B, Nejat G, Kao E. The design of an expressive humanlike socially assistive robot. J Mech Robot. 2009;1(1):Article ID 011001.

- Lee DW, Lee TG, So B, et al. Development of an android for emotional expression and human interaction. In: Proceedings of the Seventeenth World Congress the International Federation of Automatic Control; Seoul, Korea; 2008. p. 4336–4337.

- Ishihara H, Yoshikawa Y, Asada M. Realistic child robot ‘Affetto’ for understanding the caregiver-child attachment relationship that guides the child development. In: Proceedings of the International Conference on Development and Learning; Frankfurt am Main, Germany; 2011. p. 1–5.

- Tadesse Y, Hong D, Priya S. Twelve degree of freedom baby humanoid head using shape memory alloy actuators. J Mech Robot. 2011;3(1):Article ID 011008.

- Tadesse Y, Priya S. Graphical facial expression analysis and design method: an approach to determine humanoid skin deformation. J Mech Robot. 2012;4(2):Article ID 021010.

- Chihara T, Wang C, Niibori A, et al. Development of a head robot with facial expression for training on neurological disorders. In: Proceedings of the 2013 IEEE International Conference on Robotics and Biomimetics (ROBIO); Shenzhen, China; 2013. p. 1384–1389.

- Yu Z, Ma G, Huang Q. Modeling and design of a humanoid robotic face based on an active drive points model. Adv Robot. 2014;28(6):379–388.

- Lin CY, Huang CC, Cheng LC. An expressional simplified mechanism in anthropomorphic face robot design. Robotica. 2016;34(3):652–670.

- Asheber WT, Lin CY, Yen SH. Humanoid head face mechanism with expandable facial expressions. Int J Adv Robot Syst. 2016;13(1):29.

- Glas DF, Minato T, Ishi CT, et al. ERICA: the ERATO intelligent conversational android. In: Proceedings of the 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN); NY, USA; 2016. p. 22–29.

- Ishihara H, Wu B, Asada M. Identification and evaluation of the face system of a child android robot affetto for surface motion design. Front Robot AI. 2018 Oct;5:119.

- Ekman P, Friesen WV, Hager JC. Facial action coding system (FACS). Salt Lake City (UT): Paul Ekman Group LLC; 2002.

- Lazzeri N, Mazzei D, Greco A, et al. Can a humanoid face be expressive? A psychophysiological investigation. Front Bioeng Biotechnol. 2015;3:64.

- Becker-Asano C, Ishiguro H. Evaluating facial displays of emotion for the android robot Geminoid F. In: Proceedings of the IEEE Workshop on Affective Computational Intelligence (WACI); Paris, France; 2011. p. 1–8.

- Cheng LC, Lin CY, Huang CC. Visualization of facial expression deformation applied to the mechanism improvement of face robot. Int J Soc Robot. 2013;5(4):423–439.

- Baldrighi E, Thayer N, Stevens M, et al. Design and implementation of the bio-Inspired facial expressions for medical mannequin. Int J Soc Robot. 2014;6(4):555–574.

- Ishihara H, Iwanaga S, Asada M. Comparison between the facial flow lines of androids and humans. Front Robot AI. 2021;8:Article ID 540193. DOI:10.3389/frobt.2021.540193

- Nakaoka S, Kanehiro F, Miura K, et al. Creating facial motions of cybernetic human HRP-4C. In: Proceedings of the 9th IEEE-RAS International Conference on Humanoid Robots; Paris, France; 2009. DOI:10.1109/ichr.2009.5379516

- Duchon J. Splines minimizing rotation-invariant semi-norms in Sobolev spaces. In: Constructive theory of functions of several variables. Berlin Heidelberg: Springer; 1977. p. 85–100.

- Sibson R. A brief description of natural neighbour interpolation. In: Interpreting multivariate data. 1981. Available from: https://ci.nii.ac.jp/naid/10022185042/.

- Shah STH, Xuezhi X. Traditional and modern strategies for optical flow: an investigation. SN Appl Sci. 2021;3:289. DOI:10.1007/s42452-021-04227-x