ABSTRACT

This article is a longitudinal bibliometric study of articles from six major universities published over 130 years of the Journal of the American Chemical Society (JACS). The Scopus API (Application Programming Interface) was used to download bibliographic metadata in multiple tables to create a relational database for automated data extraction and manipulation scripts to analyze publishing and citation indicators. The study examined several correlations between average number of institutional, non-institutional, single- and multiple-authored, and international authors per paper and citation counts; open access trends; and page count indicators. Also reported are the characteristics of highly cited papers and the average number of citations for articles.

Introduction

The online availability of detailed bibliographic data covering extended runs of journal titles, particularly through the Web of Science and Scopus, has greatly benefited bibliometric analysis and study (Fortunato et al. 2018; Garfield 1955; Visser, van Eck, and Waltman 2021). For example, Scopus embarked on a program to retrospectively add metadata entries for earlier volumes of major journal titles dating back to their first volume. The detailed bibliographic metadata for articles within individual journal titles and in journal title groupings in specific disciplines provides the raw data for comprehensive bibliometric analyses.

The relationships between scholarly bibliographic elements, such as co-authorship indicators, author collaborations, and citation counts, have become increasingly complex because of the rapid growth of multiple-authored and multiple-institution works and the increasing emphasis on international research collaboration (Abt 2007a; Greene 2007; Kennedy 2003; Velez-Estevez et al. 2022; Wagner, Whetsell, and Leydesdorff 2017). In addition, conducting precise bibliometric analyses over these metadata elements has been difficult because the digital representations of the bibliographic data in Abstracting and Indexing (A&I) services, particularly author affiliation data, are incomplete and sometimes inaccurate (Marx 2011; Van Raan 2005; He, Geng, and Campbell-Hunt 2009).

This paper reports on a longitudinal study that analyzed the publications authored by six major research institutions in the United States over 130 years of the Journal of the American Chemical Society (JACS). The six institutions were selected to represent a mix of research foci and generate a representative picture of high-quality university chemical research. The goal of the study was to examine those complex scholarly publishing relationships, including authorship and co-authorship patterns; multiple-institutional and international authorship; the relationships between citation data and authorship; page count data; and open access availability. Identifying and understanding these publication indicators and their relationships is valuable to subject specialists and science librarians and to historians of science.

The Scopus database contains JACS content back to volume 1 in 1879. The bibliometric analysis in this study was done at both the individual institution level and in aggregate over the combined six institutions, with a focus on the combined dataset. Faculty research at the six selected institutions (Harvard University, California Institute of Technology, Massachusetts Institute of Technology (MIT), University of California at Berkeley, University of Illinois at Urbana-Champaign (UIUC), and Stanford University) represents a strong cross-section of chemistry research in the U.S. The Scopus Application Programming Interface (API) and the enhanced user interface were used to extract and manipulate a variety of bibliographic data points from the publications of the six universities.

Since its inception in 1879, JACS has been the flagship journal of the American Chemical Society. JACS has steadily increased in size from publishing 97 items in 1880 to 2,432 items in 2021. JACS has been one of the most often read and cited science and technology journals (Bonn 1963). It has the distinction of being the first journal used for a citation analysis when Gross and Gross (1927) examined citations to various journals from the 1926 volume of JACS to determine which key journals should be available in academic science library collections. Additionally, since the Journal Impact Factor (JIF) was introduced in 1955 by Eugene Garfield, JACS has exhibited a high impact factor value, which has averaged over 16 during the past 5 years.

Early volumes of JACS did not typically provide author affiliation information. Affiliations did not begin to be listed with the author information until around 1891. Before then, affiliations were occasionally given in parentheses at the end of the article or within the text, but these were not indexed by Scopus in its conversion project.

Literature review

There have been numerous studies reporting author, citation, and other publication metrics and relationships derived from analyses of multiple publication years of science journal titles, including JACS. The previous studies on JACS and the field of chemistry have been performed over a sample of JACS articles or over a limited number of years of articles and not over the corpus of JACS publishing from its inception in 1879 to the current year.

Based on an analysis of articles indexed in Chemical Abstracts, Price (1963) predicted that there would cease to be single-authored articles in chemistry journals by 1980. Price pointed out that three-author papers were growing faster than two-author papers and four-author papers were growing faster than three-author papers and that, if the trend held, “more than half of all papers will be in this category by 1980 and we shall move steadily toward an infinity of authors per paper” (Price, p. 89).

Abt (2007a) examined the growth of multinational papers in 16 scientific fields and found that the average number of authors per paper has been increasing steadily. In 2005, the average in the 16 fields was 5.53. The range among the 16 areas was from 2.83 authors per paper in mathematics to 9.41 in physics. Using Price’s predictions as a jumping-off point, Abt (2007b) derived an exponential model for the growth in authorship in four fields of science: chemistry, physics, astronomy, and biology. In this model, the number of single-authored papers declines dramatically but never reaches zero.

Additional author-based studies over chemistry and scientific journals have also been conducted. Stefaniak (1982) reviewed the growth of multiple-authored articles in Polish chemistry and physics articles published between 1978 and 1980. This analysis showed that 31% of articles in chemistry journals and 51% in physics journals were single-authored works. The average number of authors differed across the five chemistry subfields examined: biochemistry (2.41), organic chemistry (2.48), macromolecular chemistry (2.14), applied chemistry (2.08), and physical chemistry (2.17).

Herbstein (1993) examined 34 publications of senior chemistry faculty at the Technion-Israel Institute of Technology between 1980 and 1990. Only 15% of the articles were single-authored. Herbstein also noted that international collaborations tended to have more authors.

Other studies have looked broadly at authorship over multiple disciplines and subject areas. Larivière et al. (2015) examined a dataset of 32.5 million papers and 515 million citations from the Web of Science covering 1900 to 2011. In 1900, papers with one author accounted for 87% and 97% of all papers in natural and medical sciences (NMS), and social sciences and humanities (SSH), respectively, whereas the percentages in 2011 were 7% and 38%, respectively. In the early years after 1900, the decrease in the proportion of single-author papers in NMS was due to the increase of papers with two authors. The proportion of two-author papers has decreased since the beginning of the 1960s because of the increase of papers with more than two authors.

Qin (1994) reviewed the types of collaboration within multiple-authored articles published in The Philosophical Transactions of the Royal Society of London written between 1901 and 1991. Using sample articles taken from 5-year intervals in the Science Citation Index (now Web of Science), Qin derived various collaboration types: within an institution; interdepartmental at the same institution; multiple-institutional within the same country; and international. The proportion of multiple-authored articles increased from 25.5% in 1901 to 58.6% by 1991.

Other studies also noted the increase in multiple-authored works. Cronin, Shaw, and La Barre (2004), while analyzing acknowledgment statements in JACS articles between 1900 and 1999, also investigated the increase in multiple-authored articles. Using a sample of 2.6% of the total JACS articles (excluding reviews, editorials, and other document types), they noted the number of single- vs. multiple-authored articles. In their sample of 2,866 articles, only 344 (12%) were single-authored.

Butcher and Jeffrey (2005) studied the trend toward more industry-academia collaborations in water treatment research and found a decrease in the number of single-authored papers and an increase in the number of papers with four or more authors over the last 35 years. The proportion of industry-academic collaborative papers involving five or more authors was greater than those involving two, three, or four authors during the previous five years. Of the 1,678 papers reviewed, only a small percentage (13%) were single-authored, and the largest increase in collaboration came after 1997. They measured an increase in co-authors in four dominant countries: United Kingdom, United States, Japan, and France.

Multiple-authorship and citation counts

The growth of multiple-authored papers has led to studies examining the relationship between the number of authors and other article research indicators such as citation counts. Numerous studies have found that multiple-authorship increases the frequency of being cited by other articles (Glänzel et al. 2006; Larivière et al. 2015; Lawani 1986). A higher number of authors is often associated with a higher number of citations. Larivière et al. (2015) found that an increase in the number of authors leads to an increase in impact and is not due simply to self-citations. Wuchty, Jones, and Uzi (2007) processed 19.9 million papers from the Web of Science over five decades and 2.1 million patents to demonstrate that research teams in all fields typically produce more frequently cited research than individuals, and this advantage has been increasing over time. They found that single authors produced the papers of singular distinction in the 1950s but by 2000 the highest cited work was being done by groups.

Figg et al. (2012) selected original research articles from six leading biology and medical journals from the years 1975, 1985, and 1995 and recorded the number of authors, the number of institutions represented, and the number of times the 1995 articles were cited in future years. They found significant correlations between the number of authors and the number of institutions with the number of times an article was cited.

Other studies found the relationship between the number of authors and citation counts to be more nuanced. Rousseau (2001) noted that multiple-authored articles often have higher citation frequencies than single-authored articles, but the relationship does not hold under all circumstances and for all domains of science. Citation behavior within the field of chemistry was dependent on the specific subfield. Smart and Bayer (1986) looked at 10-year citation rates for single- and multiple-authored papers in the leading journals of three applied science fields and found little evidence of any incremental advantage to collaboration, at least as measured by citations. They did, however, note other benefits to collaboration. Franceschet and Costantini (2010) noted that several previous studies provided no support for a link between collaboration and citation. They looked at citation and peer review impact and, while they found a general tendency toward author collaboration producing higher citations, they discovered notable counterexamples, including physics, biology, and chemistry. In chemistry, papers with at most three authors were the most cited, although some papers with eight or more authors were judged as high quality by peer reviewers.

Using a dataset of 1,765 articles submitted to Angewandte Chemie International Edition (AC-IE), Bornmann et al. (2012) found a significant correlation of higher citations for four factors: each paper’s h index for citing references, language of the journal with more expectations for English or multiple language articles, prestige of the authors, and articles in specific sub-disciplines of physical, organic, and analytic chemistry. No statistically significant correlation was found between citation counts and number of authors on individual papers.

International collaborations

In the same vein as the studies proposing significant relationships between multiple-authored articles and citation counts, other studies, using a variety of statistical methods, have found that papers generated from international collaborations produced higher citation rates than domestic collaborations (Fan et al. 2022; Kato and Ando 2013; Sugimoto et al. 2017).

Velez-Estevez et al. (2022) noted the growth and impact of international research collaborations and determined that the topic, themes, and structure of research in Library and Information Science affected the differences in impact between international and domestic collaborations. Wagner, Whetsell, and Leydesdorff (2017) measured the increases in international collaborative articles from previous studies begun in 1990. They found that internationally coauthored papers in the Web of Science database rose from 10% in 1990 to 25% in 2011. Using the field weighted citation impact (FWCI), a nation-to-nation collaboration network, and a regression model, they found significant positive relationships between international collaborations and research impact in four of the six subject disciplines they studied. Wuchty, Jones, and Uzi (2007), in the 19.9 million papers analyzed from the Web of Science over five decades, also emphasized the growing role of collaborative scientific research, including international collaborations.

Other studies have found the relationship to be more complex. Abramo, D’Angelo, and Di Costa (2021) found that cited publications increase with the level of internationalization in all disciplinary areas except art and humanities, biomedical research, economics, law, and political and social sciences. Glänzel and Schubert (2001) examined the publication patterns between 1995 and 1997 of the chemistry journals in the Web of Science and found that international co-authorship resulted in publications with higher citation impact than purely domestic papers, although the differences between countries varied a great deal and there were exceptions on the national level for several countries. They indicated that the relationship between international collaboration and a high number of citations is weaker than one might expect.

A rigorous study of the publication patterns of 65 biomedical scientists by He, Geng, and Campbell-Hunt (2009) found that within-university collaboration was just as strongly related to a paper’s quality as international collaboration. The authors strongly suspected that the importance and contribution of local collaborations was severely understated in previous studies. Rousseau and Ding (2016) found that American scientists publishing in Nature and Science did not benefit, in terms of number of citations, from writing with international collaborators. Persson (2010) found general positive effects of international collaboration on citation levels, but at the level of research specialties these effects were weaker or absent. He suggested that international collaboration may be a less important factor contributing to high impact in various research specialties but a stronger influence in contributing to high impact in papers from small countries.

Salisbury, Smith, and Faustin (2022) looked at the relationship between Altmetric Attention Scores (AAS) and article views with several publication indicators within selected JACS articles. They examined all 2018 to 2020 JACS articles and communications. While they discovered no correlation between AAS and the number of citations, references, page counts, number of authors, or number of country affiliations, they did find a weak correlation between number of authors and the number of citations, but no correlation between international authorship and citations. They found that 98.57% of the JACS articles examined were cited and 5.6% were cited over 100 times.

Citation analysis

Other studies have focused on examining citation patterns during a given time period within specific journals. Ghosh and Neufeld (1974) used the Science Citation Index to review 222 JACS articles published in January and February 1965 to determine the number of uncited articles after six years. The distribution of citations among four sub-disciplines (physical, inorganic, organic, and biological chemistry) and document type (communication or article) was also computed. Each paper, except for one communication, was cited by 1971. The average number of citations per paper was 24.9.

Van Noorden (2017), refuting earlier studies that suggested a high rate of uncitedness, found that of all biomedical science papers published in 2006 only 4% were uncited by 2016. In chemistry, that number is 8% and in physics, it is near 11%. For the literature as a whole (39 million research papers across all disciplines recorded in the Web of Science from 1900 to the end of 2015), some 21% have not yet been cited. Unsurprisingly, most of these uncited papers appear in little-known journals; almost all papers in well-known journals are cited.

Tahamtan, Afshar, and Ahamdzadeh (2016) reviewed the factors affecting citations and identified three general categories with 28 total factors that influence the number of citations received by a scholarly work. The citation impact was affected by a variety of influences but the quality of the paper, the journal impact factor, the number of authors, the visibility of the work, and the international cooperation elements stood out as strong predictors.

Methodology

The Scopus database was used for this study, primarily for its inclusion of all JACS documents dating back to volume one and the robust feature set of the Scopus API. At the time of the study (12/02/2022), Scopus contained 198,909 JACS records and Web of Science had 190,314 records. A Google Scholar search for the journal title retrieved more than 902,000 records (with citations removed) and 503,000 after limiting to the period 1890–2022. Google Scholar is well known for its inability to de-duplicate records, which could account for its large record count.

The authors had developed a suite of scripts that use the Scopus API for earlier projects and were able to adapt and extend these scripts for the current study.

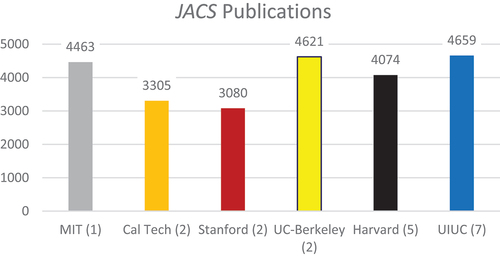

For the present study, a review of the top eight institutions with the highest number of JACS publications in Scopus showed the following:

Cross-referencing these institutional publication counts with the US News and World Report rankings of the top graduate school programs in chemistry gave the following:

#1 Massachusetts Institute of Technology (MIT)

#2 California Institute of Technology (Cal Tech)

#2 Stanford University

#2 University of California – Berkeley (UCB)

#5 Harvard University

#7 University of Illinois at Urbana-Champaign (UIUC)

#7 Northwestern University

#7 Princeton University

#7 University of Chicago

For this analysis, the authors selected the top five programs and the University of Illinois at Urbana-Champaign (UIUC), since it had produced the highest number of JACS publications among the 7th-ranked schools and there was significant interest in including it in this comparative study. In addition, Scopus had just performed a re-indexing of the author affiliation fields in all UIUC records. The accompanying figure shows the number of JACS publications by each institution and their US News and World Report rank.

Scopus uses an institution affiliation ID hierarchy with an overarching Affiliation ID. Each institution’s overarching Scopus Affiliation ID was searched, and the results were limited to the exact source title using the EXACTSRCTITLE field (Example: AF-ID (“University of California Berkeley” 60025038) AND (LIMIT-TO (EXACTSRCTITLE , “Journal of The American Chemical Society”))). A Scopus API script was used to search and download the corpus of JACS articles authored by researchers at each of the six selected universities.
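The search step described above can be sketched roughly as follows. This is a minimal illustration, not the authors' script: it only assembles a query string and a request URL for the Scopus Search API and sends nothing. The function names and paging values are hypothetical, and a real request would also need an Elsevier API key (normally passed in an `X-ELS-APIKey` header).

```python
from urllib.parse import urlencode

# Scopus Search API endpoint; this sketch builds URLs only and makes no request.
SCOPUS_SEARCH_URL = "https://api.elsevier.com/content/search/scopus"

JOURNAL = "Journal of the American Chemical Society"


def build_query(affiliation_id: str, journal: str = JOURNAL) -> str:
    """Combine an affiliation ID with an exact source-title restriction."""
    return f'AF-ID({affiliation_id}) AND EXACTSRCTITLE("{journal}")'


def build_request_url(affiliation_id: str, start: int = 0, count: int = 25) -> str:
    """Build one page of a search request; the paging values are illustrative."""
    params = {"query": build_query(affiliation_id), "start": start, "count": count}
    return f"{SCOPUS_SEARCH_URL}?{urlencode(params)}"


# 60025038 is the UC Berkeley affiliation ID quoted in the example above.
print(build_query("60025038"))
print(build_request_url("60025038"))
```

Running the same builder once per institution would mirror the six separate searches, with the `start` value advanced to page through each result set.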

The six individual institutions were searched separately, and selected elements of the structured XML-based metadata text retrieved from the search results were processed and put into six separate tables in a relational database. Processing the six institutions separately allowed the calculation of the institutional and non-institutional components of the average number of authors in multiple-authored works. The six institutional tables contain records with column data comprised of API retrieved data: Scopus EID number, a list of all authors, a combined author affiliation list, a separate author ID and associated Affiliation ID pairs blob, the article title, source journal, date, volume, and issue, the number of citations to the article (cited-by count), links back to the record in Scopus, the article DOI, an indication of open access and an open access descriptive label, the document type, funding data, ISSN, ISBN, and other bibliographic data. Once downloaded, it was noted that there were date variations for the first article published by each institution: Cal Tech 1912; Harvard 1900; MIT 1893; Stanford 1897; UC-Berkeley 1903; and UIUC 1900. The authors considered a consistent start year, but since the number of publications was small it was decided to use all records from the initial searches. Many of the 1890s articles in JACS did not have an author affiliation listed in the articles so it is possible that some early articles by authors from the six selected institutions do not appear in the database tables.

Additional separate columns for each record in the tables were created from scripts and added to the records. These additional columns calculated the following: the total number of authors for each article; the number of authors from the specific institution represented by the table; the number of authors that were not from the particular institution; the page count for each article; text indicating whether the article was Gold, Green, or Hybrid open access; the number of non-U.S. coauthors; a 0–1 field indicating if at least one of the coauthors was from an institution outside of the United States; and a list of the non-U.S. countries of the coauthors, if applicable. The primary reason for generating the initial six separate tables was to be able to derive the institutional and non-institutional author counts for each article for later processing and analysis.
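As an illustration of how such derived columns might be computed, the sketch below works from a list of author and affiliation pairs for one article. It is an assumption-laden simplification: the `Author` class, the field names, the one-affiliation-per-author model, and the page count taken from first and last page numbers are all hypothetical and are not described in the study.

```python
from dataclasses import dataclass


@dataclass
class Author:
    author_id: str
    affiliation_id: str
    country: str  # country of the affiliation, e.g. "United States"


def derive_columns(authors, home_affiliation_ids, first_page, last_page):
    """Compute per-article derived fields for one institution's table."""
    total = len(authors)
    # Authors whose affiliation belongs to the institution this table represents.
    institutional = sum(a.affiliation_id in home_affiliation_ids for a in authors)
    non_us_countries = sorted({a.country for a in authors if a.country != "United States"})
    return {
        "total_authors": total,
        "institutional_authors": institutional,
        "non_institutional_authors": total - institutional,
        "page_count": last_page - first_page + 1,  # assumes numeric page ranges
        "non_us_coauthors": sum(a.country != "United States" for a in authors),
        "has_non_us_author": int(bool(non_us_countries)),  # the 0-1 field
        "non_us_countries": non_us_countries,
    }


authors = [
    Author("a1", "60025038", "United States"),
    Author("a2", "60025038", "United States"),
    Author("a3", "hypothetical-id", "Germany"),
]
print(derive_columns(authors, {"60025038"}, first_page=100, last_page=104))
```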

A composite table of JACS metadata records from the six selected institutions was also constructed. This all-six-institutions table initially contained 24,201 records. Of these, 520 were duplicate records for articles with authors from two or more of the six institutions. For these duplicates, the entry kept in the table was the institution record with the highest number of institutional authors and/or the corresponding author, on the assumption that this record represented the “home” or primary publishing institution. That process reduced the all-six-institutions table to 23,681 records.
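One way to picture this merge is the sketch below, which keeps a single record per Scopus EID and prefers the record with the most institutional authors. The function name and record fields are hypothetical, the corresponding-author criterion is not modeled, and ties simply keep the first record seen, which is an assumption rather than the study's rule.

```python
def merge_institution_tables(tables):
    """Keep one record per EID, preferring the most institutional authors.

    `tables` maps an institution name to a list of record dicts that carry
    'eid' and 'institutional_authors'. Ties keep the first record seen.
    """
    best = {}
    for institution, records in tables.items():
        for record in records:
            current = best.get(record["eid"])
            if current is None or (
                record["institutional_authors"] > current["institutional_authors"]
            ):
                best[record["eid"]] = {**record, "home_institution": institution}
    return list(best.values())


tables = {
    "Institution A": [
        {"eid": "e1", "institutional_authors": 1},
        {"eid": "e2", "institutional_authors": 2},
    ],
    "Institution B": [{"eid": "e1", "institutional_authors": 3}],
}
merged = merge_institution_tables(tables)
print(len(merged), [r["home_institution"] for r in merged])
```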

From this database structure, a macro script operating over each of the institutional tables and the all-six-institutions table was developed. That script executed a series of SQL queries on the institution tables that produced custom report tables, arranged by decade, that contain a set of desired data points for the institutional and the all-institution groups. The decade-by-decade displays showed the average number of authors per article for articles in that decade; the average number of institutional authors; the average number of non-institutional authors for the articles in the decade; the highest number of authors for any articles in the decade; the percentage of single-authored and multiple-authored articles in the decade; the average number of pages per article; an open access indicator and description; the number of open access articles and the percentage of articles that were open access in the decade; the percentage of articles with at least one non-U.S. author; the collaboration constant of the articles by decade; the number of citations in the highest cited article in the decade; the number of uncited articles; and the average citation count for articles in the decade.
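A decade report of this kind could be produced with a single grouped query. The sketch below uses SQLite through Python's `sqlite3` module purely for illustration; the study does not name its database engine, and the table name, column names, and sample rows are hypothetical. The decade boundaries follow the labels used in the text (1890–1899, 1900–1910, 1911–1920, and so on, ending with 2021–2022).

```python
import sqlite3

DECADE_SQL = """
SELECT
    CASE
        WHEN year <= 1899 THEN '1890-1899'
        WHEN year <= 1910 THEN '1900-1910'
        WHEN year >= 2021 THEN '2021-2022'
        ELSE printf('%d-%d', ((year - 1) / 10) * 10 + 1, ((year - 1) / 10) * 10 + 10)
    END AS decade,
    COUNT(*) AS total_publications,
    AVG(total_authors) AS avg_authors,
    MAX(total_authors) AS max_authors,
    100.0 * SUM(total_authors = 1) / COUNT(*) AS pct_single_authored,
    AVG(cited_by) AS avg_citations,
    SUM(cited_by = 0) AS uncited
FROM articles
GROUP BY decade
ORDER BY MIN(year)
"""


def decade_report(rows):
    """Load (eid, year, total_authors, cited_by) rows and aggregate by decade."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE articles (eid TEXT, year INTEGER, total_authors INTEGER, cited_by INTEGER)"
    )
    conn.executemany("INSERT INTO articles VALUES (?, ?, ?, ?)", rows)
    report = conn.execute(DECADE_SQL).fetchall()
    conn.close()
    return report


# Hypothetical sample rows, not data from the study.
sample = [("e1", 1895, 1, 0), ("e2", 1905, 2, 10), ("e3", 1910, 1, 4), ("e4", 2015, 6, 30)]
for line in decade_report(sample):
    print(line)
```

The remaining indicators, such as page counts or open access percentages, would follow the same pattern as extra aggregate columns.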

In addition, columns were created and stored in the decades display to assist in the statistical analysis. These included by decade: the variance, the sum of the element values for several of the indicator values, and the standard deviations for several of the field values, such as the average number of authors, average page counts, the average of the institutional affiliated authors, and the average non-institutional authors. These were used to perform t-test and analysis of variance calculations. In addition, several correlation analyses were carried out over pairs of indicator data elements stored in the original all-six-institutions table.
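For the correlation analyses over pairs of indicators, a plain-Python sketch is given below. It assumes a Pearson product-moment coefficient, which the text does not specify, and the sample values are hypothetical rather than drawn from the study's tables.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient for two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with at least 2 values")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a sequence is constant")
    return covariance / sqrt(var_x * var_y)


# Hypothetical author counts and citation counts for five articles.
authors_per_paper = [1, 2, 3, 5, 8]
citations = [4, 9, 7, 20, 31]
print(round(pearson_r(authors_per_paper, citations), 3))
```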

Document type information

The Scopus API returns, for each record, one of the seven document types: Article, Review, Letter, Note, Editorial, Erratum, and Retracted. These correspond roughly to the JACS document types with Review replacing Perspective, Letter replacing Communication, and Erratum taking the place of the JACS Additions/Corrections.

Table 1 shows the breakdown of document types for the all-six-institutions combined file.

Table 1. Document types and average number of citations for the combined six Institutions.

For this analysis, articles with the document types Note, Erratum, and Retracted were not included. More granular document type refinements for correlation calculations, for example, to the document type Article only or to the combined types Article and Review, were accomplished in the SQL statement that generates the base recordset for the correlation. After removal of the three document types, the all-six-institutions table contained 23,336 records.

Results

Tables 2 and 3 show the decade-by-decade breakdown of author, citation, and other publication metrics over all six institutions. Additional columns containing the variance and standard deviation data used in the statistical analyses were calculated by script and stored in the table. Those columns are not shown below. Table 4 shows the UIUC author indicators and Table 5 shows Harvard University publication statistics.

Table 2. Author statistics for composite file of all six institutions.

Table 3. Publication statistics for composite file of all six institutions.

Table 4. UIUC author statistics.

Table 5. Harvard publication statistics.

The bibliographic database tables constructed above provide a framework for accomplishing the expressed goals of the study: to examine the complex scholarly publishing relationships, including authorship and co-authorship patterns, multiple-institution information, and international authorship; the relationships between citation data and author, open access, and page count; and open access availability within the 130 years of JACS articles written by authors from the six selected institutions. They also provide a foundation for the study’s focus on examining decade-by-decade authorship patterns and characteristics, international collaboration, the relationships between citations and number of authors and between citations and international co-authorship, and open access data, both in aggregate and between institutions.

Total publications

Tables 2 and 3 contain data from the combined six institutions, and the total number of publications for each decade is displayed in a common column labeled Total Publications.

Several institutions had a decrease in the number of articles published in JACS during the 1960s. One factor that may have changed the number of articles submitted to JACS in the late 1950s and 1960s is the emergence of new and specialized ACS journals during this period. Examples of these discipline-specific journals include Journal of Agricultural and Food Chemistry, 1953; Journal of Chemical & Engineering Data, 1956; Journal of Medicinal Chemistry, 1959; Journal of Chemical Information & Modeling, 1961; Inorganic Chemistry, 1962; Biochemistry, 1962; Environmental Science & Technology, 1967; Macromolecules, 1968; and Accounts of Chemical Research, 1968.

Average number of authors per paper by decade

Looking at Table 2 for the all-six-institutions indicator values, the third column, the Average Number of Authors per article in the designated decade, shows an overall increase: it begins with an average of 1.52 for 1890–1899, dips to 1.49 for 1900–1910, and then rises to an average of 5.74 authors per paper in the last full decade of 2011–2020. The 2021–2022 sample set of articles shows an average of 6.87 authors per paper. Three of the six institutions reached an average of over two authors in the 1930s and three during the 1940s. All six institutions average between six and eight authors per article for the 2021–2022 timeframe.

The difference between the average number of authors per paper for the 1890–1899 sample and the 2021–2022 period is statistically significant (t = 24.007, df = 419, p < .001) as are the differences between the full decade 2011–2020 average with each of the other decades, including the shortened 2021–2022 period (t = 5.541, df = 475, p < .001). Several of the early decade pair averages are not significant at p = .05, including 1921–1930 and 1911–1920 (t = 1.325, df = 650, p < .20) and 1941–1950 and 1951–1960 (t = 1.089, df = 2835, p < .30). The succeeding pair of 1961–1970 and 1951–1960 are significantly different (t = 10.293, df = 4073, p < .001). Performing pairwise comparisons of the average number of authors per paper for the 14 decades in the study revealed four pairs that were not significantly different.
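A comparison of two decade averages can be computed directly from stored summary values (mean, variance, and article count). The sketch below assumes a two-sample t-test with a pooled variance estimate; the text does not state which variant was used, although whole-number degrees of freedom are what a pooled test produces. The input values are hypothetical and are not taken from the study's tables.

```python
from math import sqrt


def pooled_t(mean1, var1, n1, mean2, var2, n2):
    """Two-sample t statistic with a pooled variance estimate.

    var1 and var2 are sample variances (n - 1 denominator).
    Returns (t, degrees_of_freedom).
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * var1 + (n2 - 1) * var2) / df
    standard_error = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error, df


# Hypothetical summary values for two decades.
t, df = pooled_t(mean1=2.0, var1=1.0, n1=10, mean2=1.0, var2=1.0, n2=10)
print(round(t, 3), df)
```

The resulting statistic would then be compared against a t distribution with the returned degrees of freedom to obtain the p value.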

To test whether a significant difference is present in the average number of authors per paper across the 14 decades, an analysis of variance test was performed. The calculated F ratio (F = 795.77) was significant at alpha = .01, indicating that the differences in the average number of authors are significant.

This study of articles written by faculty from six prominent research universities in a major chemistry journal over 130 years of publishing has observed the same significant growth in the average number of authors per paper that has been reported widely in the literature. Although the increase in multiple-authored works has not progressed to the stage predicted by Price (1963), the situation does more closely follow the model for the increase in multiple-authored articles put forth by Abt (2007b).
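The F ratio for a one-way analysis of variance can likewise be derived from per-decade summary values. The following is a minimal sketch under that assumption; the function name and the three sample groups are hypothetical and do not reproduce the study's figures.

```python
def anova_f(groups):
    """One-way ANOVA F ratio from per-group (n, mean, sample variance) tuples.

    Returns (F, df_between, df_within).
    """
    total_n = sum(n for n, _, _ in groups)
    grand_mean = sum(n * mean for n, mean, _ in groups) / total_n
    # Variation of the group means around the grand mean.
    ss_between = sum(n * (mean - grand_mean) ** 2 for n, mean, _ in groups)
    # Variation of the observations inside each group.
    ss_within = sum((n - 1) * var for n, _, var in groups)
    df_between = len(groups) - 1
    df_within = total_n - len(groups)
    f_ratio = (ss_between / df_between) / (ss_within / df_within)
    return f_ratio, df_between, df_within


# Hypothetical (article count, mean authors, variance) for three decades.
print(anova_f([(3, 1.0, 1.0), (3, 2.0, 1.0), (3, 3.0, 1.0)]))
```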

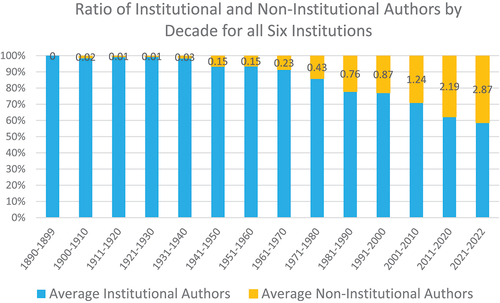

Average number of institutional and non-institutional authors per paper by decade

shows columns for the Average Number of Institutional Authors and the Average Number of Non-Institutional Authors. These values break the increase in the average number of authors per paper into two components: the increase from the average number of authors affiliated with the institution and the increase from the average number of authors not affiliated with the particular institution. These institutional and non-institutional totals and averages are available because each record in the combined database table is from one of the six specific institution tables. For the duplicate articles where two or more of the coauthors are from two or more of the six institutions, the combined table contains the data from the particular institution record that contains the highest number of local institution authors.

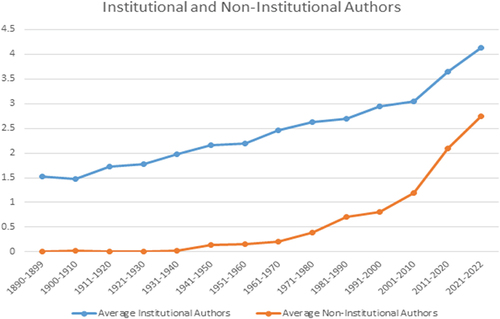

As the average number of authors per paper has grown over the decades, the average number of institutional authors and the average number of non-institutional authors have both increased. shows the growth of non-institutional authors in the ratio that institutional and non-institutional authors make up in the total average number of authors per paper.

illustrates the rate of growth of the average institutional and average non-institutional components that make up the average number of authors per paper. For the first seven decades, the slope of the institutional author increase was higher than the non-institutional, but over the last four decades the slope of the increase of the average non-institutional authors has been higher than the institutional authors, showing the increase in the non-institutional component.

Figure 3. Average number of institutional and non-institutional authors across all six institutions.

The average number of institutional authors does vary by institution. Cal Tech currently has the lowest average number of institutional authors at 3.22 and UC-Berkeley is high among the six institutions at an average of 4.94 institutional authors per paper. The growth in the institutional component of the increase may be due to the formation of more formal teams or groups within or across university departments. These teams typically consist of multiple faculty members, postdocs, and graduate students (Larivière et al. Citation2015; Wuchty, Jones, and Uzzi Citation2007).

After the initial dip in the average institutional authors per paper from 1890–1899 to 1900–1910, the average values increase, with a jump occurring in the 2001–2010 decade. The differences between the 2021–2022 and 2011–2020 periods (t = 3.43, df = 486, p < .001), the 2011–2020 and 2001–2010 decades (t = 11.777, df = 4371, p < .001), the 2001–2010 and 1991–2000 decades (t = 2.295, df = 5350, p < .05), and the 1991–2000 and 1981–1990 decades (t = 4.777, df = 3722, p < .001) are all statistically significant. Several of the earlier decade pairs do not show significant differences.

The average number of non-institutional authors per paper does not rise above 0.5 until the 1981–1990 decade and does not rise above 1.0 until the 2001–2010 decade. Nevertheless, the differences in the averages between 2021–2022 and 2011–2020 (t = 3.327, df = 470, p < .001), 2011–2020 and 2001–2010 (t = 12.652, df = 3964, p < .001), and 2001–2010 and 1991–2000 (t = 7.612, df = 5104, p < .001) are all significant. Some of the earlier decades do not show continuous increases or show very small stepwise differences. The overarching analysis of variance test across the 14 decades found a calculated F ratio (F = 330.871, alpha = .01) indicating a significant difference in the average number of non-institutional authors.

The number of institutional and non-institutional authors for each paper was calculated by a script that matched the Scopus author IDs (AU-ID) with the Scopus affiliation IDs (AF-ID). There were some difficulties with this. Scopus has attempted to normalize the AF-ID system by creating parent AF-IDs that subsume several child AF-IDs connected with a particular institution. This has been done more successfully at some institutions than others.
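
The matching step described above might look roughly like the following sketch. It is not the authors' script: it assumes each paper has already been reduced to a mapping from author ID to that author's affiliation IDs, and that an author counts as institutional when any of their AF-IDs belongs to the institution's parent or child AF-IDs. All identifiers shown are hypothetical placeholders, not real Scopus IDs.

```python
def count_authors(author_affiliations, institution_af_ids):
    """Return (institutional, non_institutional) author counts for one paper.

    author_affiliations: dict mapping an author ID (AU-ID) to the set of
        affiliation IDs (AF-IDs) listed for that author on the paper.
    institution_af_ids: set holding the institution's parent AF-ID plus any
        child AF-IDs treated as the same institution.
    """
    institutional = sum(
        1
        for af_ids in author_affiliations.values()
        if af_ids & institution_af_ids  # any shared AF-ID marks a local author
    )
    return institutional, len(author_affiliations) - institutional


# Hypothetical IDs, for illustration only.
home_ids = {"AF-PARENT", "AF-CHILD-1"}
paper = {
    "AU-1": {"AF-PARENT"},
    "AU-2": {"AF-CHILD-1", "AF-OTHER"},
    "AU-3": {"AF-OTHER"},
}
print(count_authors(paper, home_ids))  # (2, 1)
```

Keeping the parent and child AF-IDs in one set means that an incomplete parent-child mapping shows up as an undercount of institutional authors, which is the kind of discrepancy that had to be checked by hand.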

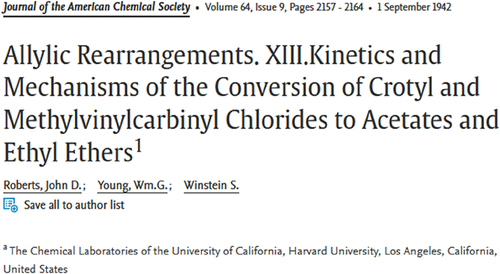

Another issue has to do with retrospective conversion errors in Scopus’s identification of author affiliations. In some cases, the affiliation information for authors was merged into one combined affiliation blob with an AF-ID that contained a combination of institutions. An example of this is shown below.

Example of merged author affiliations in 1942 JACS record in Scopus.

Original database record from Scopus API:

Although the parent–child AF-ID relationship led to the successful retrieval of the article under the home institution, the parsing algorithm assigned incorrect institutional and non-institutional author counts to these records. The numbers of these types of errors varied across the six institutions. Cal Tech had 283 incorrect records; Harvard had 111; MIT had 147; Stanford had 120; UC-Berkeley had 181; and UIUC had 25. The Scopus API can retrieve author affiliations from the standard Search endpoint and can also retrieve author affiliation history from an Affiliations endpoint. Using a combination of a separate affiliation search script and manual inspection of the source document at the JACS website, these records were corrected.

Author affiliation data have, historically, been problematic in bibliometric studies. Marx (Citation2011) noted that the early databases such as Science Citation Index, INSPEC, and others prior to 1950 often did not provide full author address information and more often only for the first author or corresponding author. Larivière et al. (Citation2015) pointed out that inter-institutional and international collaboration data are available only from 1973 onward in Web of Science and author and affiliation data are linked only from 2007 onward. Van Raan (Citation2005) discussed the issues connected with the variations of affiliation naming structures, especially for larger organizations and universities. He pointed out that one of the major issues with performing accurate and scalable bibliometrics on author indicators has been the inconsistent and incomplete nature of the affiliation data provided by major database vendors. Buznik et al. (Citation2004) noted the problem of matching affiliations between databases given that authors provided variations of regional branches, universities, or research institutions in their affiliation entries.

Dodson (Citation2020) noted that Scopus has a larger title coverage than Web of Science and has improved author affiliation searching. However, it was discovered that the affiliation search from the search interface often included some sub-affiliations that were not obvious until processing some of the author affiliations by the Affiliation ID (AF-ID). Searching the AF-ID in Scopus is only possible via the Advanced Search function.

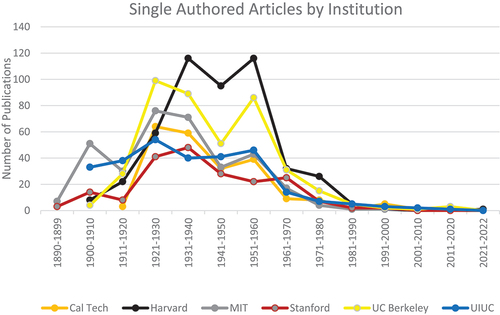

Single-authored and multiple-authored articles

From , there were only 12 (0.19%) single-authored papers in the 6,301 JACS papers published by the study’s six institution cohort in the period 2001 to 2022. Four of these were Review articles and one was a Letter. shows the number of single-authored papers per decade for each institution.

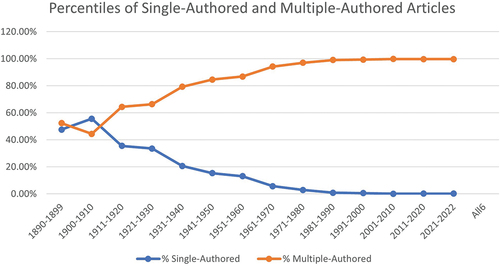

shows the percentage of single-authored and multiple-authored papers across all six institutions by decade in the study.

Figure 5. Percentages of single-authored and multiple-authored articles for all institutions by decade.

The all-institutions dataset reached an average of two authors per paper in the 1931–1940 or 1941–1950 decades. Most of the institutions had a decline in the total number of publications due to World War II.

The numbers in this study on single and multiple authors support Abt’s (Citation2007b) model of the decline of single-authored chemistry articles. Phillips’ (Citation1955) analysis of single-authored articles published in JACS since 1918 indicated a decline of single-authored articles from 45% in 1918 to only 14% in 1950 despite a significant increase in the total number of articles published each year. Phillips referred to chemists who published more than two single-authored articles per year as “lone wolves.” This decline in single-authored articles is consistent with Stefaniak’s (Citation1982) analysis of Polish chemistry and physics articles, which indicated that less than one-third of all chemistry articles were single-authored.

Notable is how irregularly the number of single-authored articles varies across institutions. For example, Harvard had very high spikes in single-authored articles in the 1930s and 1950s. Qin (Citation1994) also measured a similar irregularity in single-authored articles in a study of Philosophical Transactions of the Royal Society of London from 1901 to 1991, noting that 70% of single-authored articles were historical, observational, theoretical, or generalized in nature.

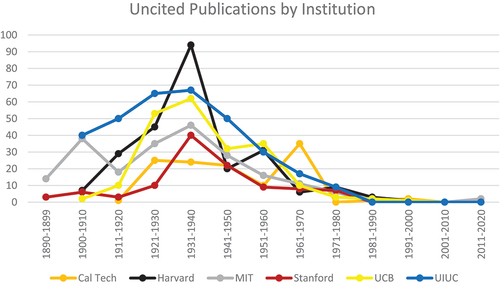

Citation characteristics

shows the Average Number of Citations for papers published in each decade. This number has increased over time with a peak at 139.22 during 2001–2010. Some of the variation and, in particular, the smaller numbers from 1900 to 1960 may be at least partially due to Scopus’s incomplete coverage of publications from the early 1900s. This issue is being addressed in the Scopus retrospective conversion process, but journal coverage is more comprehensive for the periods after 1970.

Interestingly, five of the six institutions have their highest average citation count during the 2001–2010 decade; the exception is Harvard, which had its highest in the 1990s. also has a column indicating the Highest Citations number for papers from any institution in each specific decade.

also contains a column for the Number of Uncited Papers for all six institutions in each decade. This number peaked at 312 in the 1930s but was reduced to double digits in the 1960s. shows the uncited articles by decade for each of the six institutions. From 1981 to 2020 only seven of the 10,529 articles are uncited, comprising 0.07% of the total. For Stanford University and UIUC, all their publications from 1981 to 2020 have been cited. This is consistent with Ghosh and Neufeld’s (Citation1974) analysis of 222 JACS articles published in January to February 1965 where only one article was not cited during the six-year study.

Van Noorden (Citation2017) noted, in a Nature feature, that “almost all papers in well-known journals do get cited.” In addition to the prestige of JACS, the international reputation of many of the authors, and the quality of the highest-ranking graduate programs in chemistry in the United States, the “Matthew Effect” – where heavily cited articles are cited more often – is also in effect (Merton Citation1968). In this sample set, self-citations have not been eliminated, but previous studies have determined that the percentage of self-citations, while a factor in multiple-authored works, does not significantly alter statistical analyses (Abramo, D’Angelo, and Di Costa Citation2021; Larivière et al. Citation2015; Smart and Bayer Citation1986; Wuchty, Jones, and Uzzi Citation2007).

To test whether the data compiled in our study corroborate the previously reported high correlations between multiple authorship and citation counts and international collaboration and citations, two different tests were performed over the data. Pearson correlation calculations were applied over the values in two selected columns: the number of authors column and the number of citations column, or the number of international authors and the citations column in the table of 23,336 records containing metadata of articles from faculty at all six institutions.

Multiple authorship and citation analysis

The first correlation looked at the author count column and the cited-by count column taken from the Scopus API record. Since the recordset used for processing the correlation is generated by a SQL command over the table, tests could be conducted over various time periods of the 1890 to 2022 corpus and with results limited to several document types. For this analysis of multiple-authored papers and citation counts, the values for the number of authors range from 1 to 49 and the cited-by counts range from 0 to 8,360 citations.
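
The workflow described above, a SQL query that selects a recordset followed by a Pearson correlation over two columns, can be sketched as follows. This is an illustration under assumptions, not the authors' code: the table name `papers`, the column names, and the toy rows are hypothetical, and an in-memory SQLite database stands in for the study's relational database.

```python
import sqlite3
from math import sqrt


def pearson_r(xs, ys):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def author_citation_r(conn, first_year, last_year):
    """Return (r, N) for author count vs. cited-by count in a year window."""
    rows = conn.execute(
        "SELECT author_count, cited_by_count FROM papers "
        "WHERE pub_year BETWEEN ? AND ?",
        (first_year, last_year),
    ).fetchall()
    authors = [row[0] for row in rows]
    citations = [row[1] for row in rows]
    return pearson_r(authors, citations), len(rows)


# Hypothetical schema and rows, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE papers (pub_year INTEGER, author_count INTEGER, "
    "cited_by_count INTEGER)"
)
conn.executemany(
    "INSERT INTO papers VALUES (?, ?, ?)",
    [(1995, 1, 10), (2005, 2, 20), (2010, 3, 30), (2020, 9, 0)],
)
r, n = author_citation_r(conn, 1890, 2017)
print(r, n)
```

Changing the bounds passed to the query, or adding a document-type condition to the WHERE clause, restricts the recordset without touching the correlation code.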

The closing year for citations was limited to 2017 to ensure that later articles had an adequate half-life and reasonable time to accrue citations. The Pearson r values (with the time period covered and the sample size N) for comparing the number of authors and citation counts:

The results are all significant at p < .05. These r values all constitute weak or very weak correlations and demonstrate that, in this sample of articles from JACS, the number of authors shows a very weak correlation with citation counts. Limiting the analysis to later years or article and review document types does not result in higher correlations.

In this sample set of JACS publications by researchers at six prominent universities, a side-by-side comparison of the number of authors on a paper and the number of times the paper is cited does not yield a high correlation. The papers with the highest number of authors – where the number of authors is between 1 and 49 – are not consistently among the most cited. There are 92 papers with 15 or more authors and only three of them have been cited 300 or more times. By contrast, 22 of the single-authored papers have been cited 300 times or more and 397 of the papers with three or fewer authors have been cited at least 300 times. Adding to the number of authors does not appear to result in higher correlations. The conclusion is that JACS papers from the six institutions in the study with a larger number of authors do not have a higher number of citations.

It is important to note that the sample set used in this study is a distinguished subset of all the JACS articles from 1890 to 2022 and is comprised of the publications of prominent faculty from the six major research universities. This may have skewed the multiple-author and citations correlation given the highly cited work of many of these individual or institutional group authors.

There are also alternate ways to look at the relationship between citation counts and co-authorship. One approach used in previous studies has been to examine the average number of citations of single-authored and multiple-authored works. This involves examining the highly cited papers or partitioning the set into two or more groups – often the single-authored and papers with two or more authors.

Working with SQL commands on the all-six-institution table provides several additional analyses. The 4,550 papers in the corpus with 100 citations or more show an average of 3.97 authors per paper. The 462 papers with 400 citations or more have an average of 4.09 authors; the 38 papers with 1,500 or more citations have an average of 4.65 authors; and the 11 papers with 2,500 or more citations average 3.82 authors per paper. So, the highly cited papers tend to converge on an average of four authors per paper.
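
A query of the kind described above could be expressed as in the sketch below, which returns the number of papers at or above a citation threshold and their average author count. The table name, column names, and rows are hypothetical and are not taken from the study's database.

```python
import sqlite3


def authors_for_highly_cited(conn, min_citations):
    """Return (paper_count, average_authors) for papers at or above a threshold.

    average_authors is None when no paper meets the threshold.
    """
    return conn.execute(
        "SELECT COUNT(*), AVG(author_count) FROM papers "
        "WHERE cited_by_count >= ?",
        (min_citations,),
    ).fetchone()


# Hypothetical schema and rows, for illustration only.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE papers (author_count INTEGER, cited_by_count INTEGER)")
db.executemany(
    "INSERT INTO papers VALUES (?, ?)",
    [(3, 150), (5, 450), (4, 90), (1, 2000)],
)
for threshold in (100, 400, 1500, 2500):
    print(threshold, authors_for_highly_cited(db, threshold))
```

Looping over the thresholds reproduces the layout of the comparison in the text, one count and one average per citation cutoff.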

Another approach is to look at the average number of citations for papers with a specific number of authors or a range of number of authors. There are 1,830 single-authored articles published between 1900 and 2017 and they average 27.74 citations. Looking at 1970 to 2017, there are 123 single-authored papers averaging 70.79 citations per paper. The 6,424 papers with four or more authors written between 1900 and 2017 average 109.89 citations per paper. For 1970 to 2017, the papers with four or more authors have been cited an average of 119.19 times. These averages represent a significant difference and show that the multiple-authored papers have a higher average number of citations.

As modern scholarship has evolved, many papers are structured with contributions from one or more graduate students and postdocs along with the work of the supervising faculty member. This may entail a three- or four-person group from the same institution all focused on a dissertation level project or a specific laboratory study. This can be contrasted with publications emanating from multiple authors or groups representing several institutions and working on different aspects of the project workflow.

Looking at relatively recent JACS papers published by the six universities between 1980 and 2017, there are 4,358 papers with one to three authors all from the same institution. These papers have been cited an average of 115.67 times. The contrasting set of 2,880 papers with four or more authors affiliated with two or more institutions shows an average of 115.25 citations per paper. The two averages are essentially equivalent. They compare the modern equivalent of the single-authored paper in the field of chemistry with multiple-authored papers reporting the collaborative work of researchers at two or more institutions.

International authors

The rightmost column in shows the Percent of International Authors within each decade, that is, the percent of papers that contain a non-U.S. coauthor. This indicator has grown from 0.09% in 1921–1930 to 30.05% in 2021–2022. The progression holds for all six institutions, each of which is over 25% for the 2021–2022 timeframe.

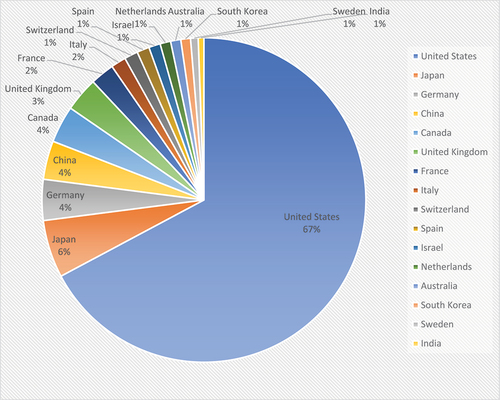

JACS has always had international contributions, although the earliest publications from the United Kingdom, Germany, and Canada and others between 1879 and 1900 were predominantly reports on foreign patents and meeting announcements. An analysis of the top countries publishing in JACS was compiled from the Scopus Advanced search. shows the 16 countries with over 1,000 contributions to JACS since 1879.

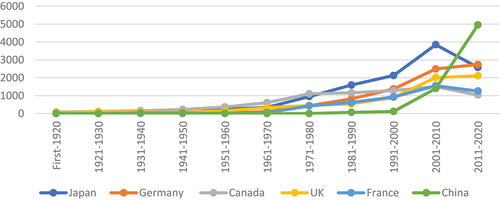

The growth in JACS publications through 2020 by several major non-U.S. countries is shown in . These top five contributors are Japan, Germany, Canada, China, and the United Kingdom. Chinese authors have produced a surge of publications starting in the early 2000s and have far outpaced other countries in the past 10 years. China is quickly gaining on the United States in total contributions to JACS: from 2021 to October 2022, the United States had 2,053 contributions and China had 1,524.

International collaborations and citation counts

As described in the literature review, a number of studies have examined the comparative relationships between papers possessing an international coauthor and their article citation counts.

In this study, two international coauthor indicator fields were generated. These two indicators were used to perform Pearson correlation analyses against the cited-by count field in each paper in the composite table of all six institutions.

The composite table of 23,336 records contains a column that indicates the number of non-U.S. coauthors on each paper. This field was generated by matching Scopus author IDs against Scopus affiliation ID text labels that contained a country address outside the U.S. This procedure was complicated by the somewhat frequent occurrence of authors with one or more U.S. affiliations and one or more non-U.S. affiliations. In a number of situations, an author had an affiliation in one of the six contributing universities and also in one or more different non-U.S. institutions. This may have been from authors on a sabbatical or a visiting appointment at a U.S. university and one or more home appointments outside the U.S. A script was written to identify the exact number of non-U.S. contributors to a paper. For verification, the institutional and non-institutional author count fields were used as a check on the upper bound of non-U.S. coauthors on a paper.
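
The article does not spell out how authors with both U.S. and non-U.S. affiliations were counted. The sketch below shows one possible rule, stated here purely as an assumption: an author counts as non-U.S. only when none of their affiliation countries is the United States. It also includes the upper-bound check mentioned above, since non-U.S. coauthors cannot outnumber non-institutional authors when all six institutions are in the U.S. The label variants, function names, and IDs are hypothetical.

```python
# Hypothetical spellings of the U.S. country label in affiliation text.
US_LABELS = {"United States", "USA", "U.S.A."}


def count_non_us_authors(author_countries):
    """Count authors whose affiliations are all outside the U.S.

    author_countries: dict mapping an author ID to the set of country names
        found in that author's affiliation labels.
    Assumption: any U.S. affiliation makes the author U.S.-based.
    """
    return sum(
        1
        for countries in author_countries.values()
        if countries and not (countries & US_LABELS)
    )


def within_upper_bound(non_us_count, non_institutional_count):
    """Sanity check: non-U.S. authors cannot exceed non-institutional authors."""
    return non_us_count <= non_institutional_count


# Hypothetical paper with a dual-affiliation author (AU-2).
paper_countries = {
    "AU-1": {"United States"},
    "AU-2": {"United States", "Germany"},
    "AU-3": {"Japan"},
}
non_us = count_non_us_authors(paper_countries)
print(non_us, within_upper_bound(non_us, non_institutional_count=2))  # 1 True
```

A different counting rule, for example treating any non-U.S. affiliation as international, would change only the condition inside `count_non_us_authors`.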

A total of 1,907 records, or 8.17% of the all-six-combined table, had one or more non-U.S. authors. According to the Percent of International Authors indicator, the percentage has grown in recent years to 18.21% in the 2001–2010 decade, 24.81% in 2011–2020, and 30.05% in the latest two years. This is a larger percentage than Glänzel and De Lange (Citation2002) modeled, but this study’s sample set encompassed 130 years of publication.

A separate field was also added to the all-six-combined table to indicate the presence or absence of a non-U.S. collaborator based on a scan of the affiliation fields.

Pearson correlation analyses were carried out similar to the analyses described in the Multiple-Authors section above. The first correlation looked at the Number of Non-U.S. Authors column and the cited-by count column. Using SQL commands, correlation tests could be conducted over various time periods of the 1890 to 2022 corpus and results could be limited to selected document types. The values for the number of non-U.S. collaborators range from 0 to 26 and the cited-by counts again range from 0 to 8,360 citations.

The closing year for citations was limited to 2017 to ensure that later articles had an adequate half-life and reasonable time to accrue citations. The Pearson r values (with the time period covered and the sample size N) for comparing the international collaboration, in the form of the number of non-U.S. coauthors, and citation counts:

The results are all significant at p < .05. These r values all represent very weak correlations and demonstrate that, in this sample of articles from JACS, the number of non-U.S. authors shows a very weak correlation with citation counts. And limiting the analysis to later years or article and review document types does not result in higher correlations.

Using the alternate indicator of the presence or absence of a non-U.S. collaborator yielded similar correlations, as expected.

For this selected sample of papers in a major chemistry journal, there was no meaningful correlation between the number of or presence of non-U.S. authors and the paper’s citation counts. Again, the fact that the sample set contains the publications of internationally known and highly cited individual faculty from six major research-oriented universities may have skewed the correlation. This is supported by several studies that examined the publications of chemists. A study of Italian university faculty’s research collaboration habits by Abramo, D’Angelo, and Murgia (Citation2017) found that chemistry researchers had a propensity to collaborate at the intramural and university level. Glänzel and De Lange (Citation2002) have also noted that, in some cases in the natural sciences, the international author and citations relationship is the same as the relationship between teams of domestic authors and citation counts.

Page counts

The number of pages per article was not included in the download of records, so a script was written to parse the page count from the Pages field. Some errors were found in the Scopus records; a few returned counts of over 1,000 pages. Some of these errors originated in the JACS information, while others were transposed or slightly incorrect numbers in the pages information. Records were sorted by page count and the authors reviewed both the Scopus records and the actual PDFs of the documents to correct the records. We also spot-checked some articles with page counts of more than 30 pages to be sure they were correct.
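
A page-count parser along these lines might be sketched as follows. It assumes the Pages field holds a range such as "1234-1240" or a single page number, which the article does not confirm; the function names are hypothetical. The 1,000-page review limit echoes the implausible counts mentioned above.

```python
import re

# Matches "first-last" with either a hyphen or an en dash between the numbers.
_PAGE_RANGE = re.compile(r"^\s*(\d+)\s*[-–]\s*(\d+)\s*$")


def page_count(pages_field):
    """Return the number of pages, or None when the field cannot be parsed."""
    if not pages_field:
        return None
    match = _PAGE_RANGE.match(pages_field)
    if match:
        first, last = int(match.group(1)), int(match.group(2))
        if last < first:  # transposed or abbreviated range: leave for review
            return None
        return last - first + 1
    if pages_field.strip().isdigit():  # a single page number
        return 1
    return None


def needs_review(count, limit=1000):
    """Flag unparseable fields and implausibly long articles."""
    return count is None or count > limit


for field in ("1234-1240", "5", "1240-1234", "1-2500"):
    pages = page_count(field)
    print(field, pages, needs_review(pages))
```

Returning None instead of guessing keeps doubtful records in the manual review pile, which matches the correction workflow described in the text.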

shows the average page counts by decade for all institutions in the Average Number of Pages column. The average page counts do not constitute a normal distribution over time. The first few decades have a high average page count, up to 11.78 pages for 1911–1920. The page counts then drop steadily from 1921 to 1971–1980 and then slowly rise to 7.4 pages for 2011–2020. For 2021–2022, the page count average is at 9.1 pages among all six institutions.

The differences in average page counts between the 2011–2020 and 2001–2010 decades are statistically significant (t = 17.53, df = 5648, p < .001), as are others, e.g., 1981–1990 and 1971–1980 (t = 2.58, df = 4183, p < .02). Several of the differences in average page count between decades are not statistically significant; for example, the difference between the 1911–1920 and 1900–1910 averages is not significant (t = 0.069, df = 382), so the null hypothesis is retained.

Open access

The Scopus API returns, for each record, a somewhat confusing indication of the article’s open access (OA) status and text indicating whether the article OA status is of the Gold, Green, or Hybrid category. Both tags need to be used to determine OA status. displays a column that shows the number of OA papers per decade and the percent of papers in that decade that are OA. The early percentages are very high: 95.24% of the articles in the 1890–1899 timeframe are OA and 83.51% of the 1900–1910 are OA with the lion’s share of these deposited OA into Zenodo.

After 1920, the percent of OA papers drops precipitously until 2001–2010 when it rises to 28.68% and then increases in 2011–2020 to 64.95%. shows the number and percentage of OA documents for each institution from 2001 to 2022.

Table 6. 2001 to October 2022 open access documents by institution.

Subramanyam collaboration ratio

Subramanyam (Citation1983) outlined a degree of collaboration metric C = Nm / (Nm + Ns), where

C = degree of collaboration;

Nm = number of multiple-authored publications;

Ns = number of single-authored publications.

shows the collaboration degree measure for each decade over all institutions. Subramanyam’s Collaboration ratio (C) measured over .90 by the 1961–1970 decade.
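
As a worked example of the ratio, the sketch below applies it to figures reported earlier in this article: 12 single-authored papers among the 6,301 JACS papers from 2001 to 2022. The function name is hypothetical.

```python
def collaboration_degree(multiple_authored, single_authored):
    """Subramanyam's degree of collaboration, C = Nm / (Nm + Ns)."""
    total = multiple_authored + single_authored
    if total == 0:
        raise ValueError("no publications in the period")
    return multiple_authored / total


# Counts reported above for 2001 to 2022 across all six institutions.
single = 12
total_papers = 6301
c = collaboration_degree(total_papers - single, single)
print(round(c, 4))  # 0.9981
```

A value this close to 1 reflects how rare single-authored papers have become in the most recent period.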

Conclusion

This paper reports on a longitudinal study of publication indicators from researchers at six prominent U.S. research universities and articles published over 130 years in JACS, a major chemistry journal. The metadata from articles published in JACS by these six institutions was downloaded using the Scopus API and the data was deposited in multiple tables in a relational database. The study developed a suite of automated data extraction and manipulation mechanisms that facilitate bibliometric analyses of publishing and citation indicators. The methodologies and algorithms developed here can serve as a model for other longitudinal journal studies and for comparing historical runs of multiple journal titles.

The goal of the study was to examine several scholarly publishing indicators within a sample set of 130 years of publications within JACS. The study examined single-authorship, multiple-authorship, and institutional publication patterns; the relationships between multiple-authorship and international co-authorship with citation counts; page count data; and open access availability on a decade-by-decade basis from 1890 to 2022. The relationships between these indicators have become increasingly complex and have been the subject of much study.

The average number of authors per paper has increased in a statistically significant manner, consistent with the model proposed by Abt (Citation2007b). At the same time, the two components making up that average – the average number of institutional authors and the average number of non-institutional authors – have also both increased, with the average number of non-institutional authors growing at a faster rate over the last 30 years.

The often-studied relationships between multiple-authored papers and citation counts, and between international collaboration and citation counts, showed only very weak correlations in this study. Limiting the analysis to later years or article and review document types did not result in higher correlations. The sample set containing the publications of internationally known and highly cited individual faculty from six major research-oriented universities may not be representative of all JACS articles and may have skewed the correlation. However, examining the highly cited papers showed that they tend to converge on an average of four authors per paper. And looking at the average number of citations for papers with a specific number of authors or a range of number of authors suggested that papers with four or more authors had a higher number of citations per paper than the single-authored papers.

As modern scholarship has evolved, the definition of single author paper has changed. Many papers are structured with contributions from one or more graduate students and postdocs along with the work of the supervising faculty member. This three- or four-person team from the same institution, and all focused on a dissertation level project or a specific laboratory study, is in many ways the new single author.

Further testing of all the relationships between authors and citations within other journals or within disciplines other than chemistry would be valuable and informative. It would also be useful to repeat the study within a multidisciplinary environment.

Overall, this analysis provides an interesting picture of JACS aggregate bibliometric characteristics over publications of chemistry researchers from the six selected major universities.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Correction Statement

This article has been republished with minor changes. These changes do not impact the academic content of the article.

References

- Abramo, G., C. A. D’Angelo, and F. Di Costa. 2021. On the relation between the degree of internationalization of cited and citing publications: A field level analysis, including and excluding self-citations. Journal of Informetrics 15 (1):101101. doi:10.1016/j.joi.2020.101101.

- Abramo, G., C. A. D’Angelo, and G. Murgia. 2017. The relationship among research productivity, research collaboration, and their determinants. Journal of Informetrics 11 (4):1016–30. doi:10.1016/j.joi.2017.09.007.

- Abt, H. A. 2007a. The frequencies of multinational papers in various sciences. Scientometrics 72:105–15. doi:10.1007/s11192-007-1686-z.

- Abt, H. A. 2007b. The future of single-authored papers. Scientometrics 73 (3):353–58. doi:10.1007/s11192-007-1822-9.

- Bonn, G. S. 1963. Most-Used science and technology periodicals seen in study. Chemical & Engineering News 41 (19):68. doi:10.1021/cen-v041n019.p068.

- Bornmann, L., H. Schier, W. Marx, and H. D. Daniel. 2012. What factors determine citations counts of publications in chemistry besides their quality? Journal of Informetrics 6 (1):11–18. doi:10.1016/j.joi.2011.08.004.

- Butcher, J., and P. Jeffrey. 2005. The use of bibliometric indicators to explore industry–academia collaboration trends over time in the field of membrane use for water treatment. Technovation 25 (11):1273–80. doi:10.1016/j.technovation.2004.06.003.

- Buznik, V. M., I. V. Zibareva, V. N. Plottukh-Peletskii, and N. I. Sorokin. 2004. Bibliometric Analysis of the Journal of Structural Chemistry. Journal of Structural Chemistry 45 (6):1096–106. doi:10.1007/s10947-005-0100-z.

- Cronin, B., D. Shaw, and K. La Barre. 2004. Visible, less visible, and invisible work: Patterns of collaboration in 20th century chemistry. Journal of the American Society for Information Science and Technology 55 (2):160–68. doi:10.1002/asi.10353.

- Dodson, D. 2020. Big in the Big Ten: Highly cited works and their characteristics. Science and Technology Libraries 39 (4):353–68. doi:10.1080/0194262x.2020.1780539.

- Fan, L., L. Guo, X. Wang, L. Xu, and F. Liu. 2022. Does the author’s collaboration mode lead to papers’ different citation impacts? An empirical analysis based on propensity score matching. Journal of Informetrics 16 (4):101350. doi:10.1016/j.joi.2022.101350.

- Figg, W. D., L. Dunn, D. J. Liewehr, S. M. Steinberg, P. W. Thurman, C. Barrett, and J. Birkinshaw. 2012. Scientific collaboration results in higher citation rates of published articles. Pharmacotherapy 26 (6):759–67. doi:10.1592/phco.26.6.759.

- Fortunato, S., C. T. Bergstrom, K. Börner, J. A. Evans, D. Helbing, S. Milojević, A. M. Petersen, F. Radicchi, R. Sinatra, B. Uzzi, et al. 2018. Science of science. Science 359 (6379):eaao0185. doi:10.1126/science.aao0185.

- Franceschet, M., and A. Costantini. 2010. The effect of scholar collaboration on impact and quality of academic papers. Journal of Informetrics 4 (4):540–53. doi:10.1016/j.joi.2010.06.003.

- Garfield, E. 1955. Citation indexes for science: A new dimension in documentation through association of ideas. Science 122 (3159):108–11. July 15, 1955. http://garfield.library.upenn.edu/essays/v6p468y1983.pdf.

- Ghosh, J. S., and M. L. Neufeld. 1974. Uncitedness of articles in the Journal of the American Chemical Society. Information Storage and Retrieval 10 (11–12):365–69. doi:10.1016/0020-0271(74)90043-6.

- Glänzel, W., K. Debackere, B. Thijs, and A. Schubert. 2006. A concise review on the role of author self-citations in information science, bibliometrics and science policy. Scientometrics 67 (2):263–77. doi:10.1007/s11192-006-0098-9.

- Glänzel, W., and C. De Lange. 2002. A distributional approach to multinationality measures of international scientific collaboration. Scientometrics 54 (1):75–89. doi:10.1023/A:10156684505035.

- Glänzel, W., and A. Schubert. 2001. Double effort = double impact? A critical view at international co-authorship in chemistry. Scientometrics 50 (2):199–214. doi:10.1023/A:1010561321723.

- Greene, M. 2007. The demise of the lone author. Nature 450 (7173):1165. doi:10.1038/4501165a.

- Gross, P. L. K., and E. M. Gross. 1927. College libraries and chemical education. Science 66 (1713):385–89. doi:10.1126/science.66.1713.385.

- He, Z.-L., X.-S. Geng, and C. Campbell-Hunt. 2009. Research collaboration and research output: A longitudinal study of 65 biomedical scientists in a New Zealand university. Research Policy 38 (2):306–17. doi:10.1016/j.respol.2008.11.011.

- Herbstein, F. H. 1993. Measuring ‘publications output’ and ‘publications impact’ of faculty members of a university chemistry department. Scientometrics 28 (3):349–73. doi:10.1007/BF02026515.

- Kato, M., and A. Ando. 2013. The relationship between research performance and international collaboration in chemistry. Scientometrics 97 (3):535–53. doi:10.1007/s11192-013-1011-y.

- Kennedy, D. 2003. Editorial: Multiple authors, multiple problems. Science 301 (5634):733. doi:10.1126/science.301.5634.733.

- Larivière, V., Y. Gingras, C. R. Sugimoto, and A. Tsou. 2015. Team size matters: Collaboration and scientific impact since 1900. Journal of the Association for Information Science and Technology 66 (7):1323–32. doi:10.1002/asi.23266.

- Lawani, S. M. 1986. Some bibliometric correlates of quality in scientific research. Scientometrics 9 (1–2):13–25. doi:10.1007/BF02016604.

- Marx, W. 2011. Special features of historical papers from the viewpoint of bibliometrics. Journal of the American Society for Information Science and Technology 62 (3):433–39. doi:10.1002/asi.21479.

- Merton, R. K. 1968. The Matthew effect in science. Science 159 (3810):56–63. doi:10.1126/science.159.3810.56.

- Persson, O. 2010. Are highly cited papers more international? Scientometrics 83 (2):397–401. doi:10.1007/s11192-009-0007-0.

- Phillips, J. P. 1955. The individual in chemical research. Science 121 (3139):311–12. doi:10.1126/science.121.3139.311.c.

- Price, D. J. D. S. 1963. Little science, big science, 87–89. New York: Columbia University Press.

- Qin, J. 1994. An investigation of research collaboration in the sciences through the Philosophical Transactions 1901–1991. Scientometrics 29 (2):219–38. doi:10.1007/BF02017974.

- Rousseau, R. 2001. Are multi-authored articles cited more than single-authored ones? Are collaborations with authors from other countries more cited than collaborations within the country? A case study. In Proceedings of the Second Berlin Workshop on Scientometrics and Informetrics, ed. F. Havemann, R. Wagner-Döbler, and H. Kretschmer, 173–76. http://hdl.handle.net/1942/822.

- Rousseau, R., and J. Ding. 2016. Does international collaboration yield a higher citation potential for US scientists publishing in highly visible interdisciplinary journals? Journal of the Association for Information Science and Technology 67 (4):1009–13. doi:10.1002/asi.23565.

- Salisbury, L., J. J. Smith, and F. Faustin. 2022. Altmetric attention score and its relationships to the characteristics of the publications in the Journal of the American Chemical Society. Science & Technology Libraries 1–18. doi:10.1080/0194262X.2022.2099505.

- Smart, J. C., and A. E. Bayer. 1986. Author collaboration and impact: A note on citation rates of single and multiple authored articles. Scientometrics 10 (5–6):297–305. doi:10.1007/BF02016776.

- Stefaniak, B. 1982. Individual and multiple authorship of papers in chemistry and physics. Scientometrics 4 (4):331–37.

- Subramanyam, K. 1983. Bibliometric studies of research collaboration: A review. Journal of Information Science 6 (1):35–42. doi:10.1177/016555158300600105.

- Sugimoto, C. R., N. Robinson-Garcia, D. S. Murray, A. Yegros-Yegros, R. Costas, and V. Larivière. 2017. Scientists have most impact when they’re free to move. Nature 550:29–31. doi:10.1038/550029a.

- Tahamtan, I., A. S. Afshar, and K. Ahamdzadeh. 2016. Factors affecting number of citations: A comprehensive review of the literature. Scientometrics 107 (3):1195–225. doi:10.1007/s11192-016-1889-2.

- Van Noorden, R. 2017. The science that’s never been cited. Nature 552 (7684):162–64. doi:10.1038/d41586-017-08404-0.

- Van Raan, A. F. J. 2005. Fatal attraction: Conceptual and methodological problems in the ranking of universities by bibliometric methods. Scientometrics 62 (1):133–43. doi:10.1007/s11192-005-0008-6.

- Velez-Estevez, A., P. Garcia-Sanchez, J. A. Moral-Munoz, and M. J. Cobo. 2022. Why do papers from international collaborations get more citations? A bibliometric analysis of library and information science papers. Scientometrics 127 (12):7517–55. doi:10.1007/s11192-022-04486-4.

- Visser, M., N. J. van Eck, and L. Waltman. 2021. Large-scale comparison of bibliographic data sources: Scopus, Web of Science, Dimensions, Crossref, and Microsoft Academic. Quantitative Science Studies 2 (1):20–41. doi:10.1162/qss_a_00112.

- Wagner, C. S., T. A. Whetsell, and L. Leydesdorff. 2017. Growth of international collaboration in science: Revisiting six specialties. Scientometrics 110 (3):1633–52. doi:10.1007/s11192-016-2230-9.

- Wuchty, S., B. F. Jones, and B. Uzzi. 2007. The increasing dominance of teams in production of knowledge. Science 316 (5827):1036–39. doi:10.1126/science.1136099.