Abstract

Problem, research strategy, and findings

The integration of artificial intelligence (AI) into urban planning presents potential ethical challenges, including concerns about bias, transparency, accountability, privacy, and misinformation. As planners rely more on AI for decision making, the potential for these systems to perpetuate biases, obscure decision-making processes, and infringe on privacy becomes more pronounced, potentially undermining public trust and excluding marginalized communities. We reviewed existing literature on AI ethics in urban planning, examining biases, transparency, accountability, and privacy issues. Our methodology synthesized findings from various studies, reports, and theoretical frameworks to highlight ethical concerns in AI-driven urban planning. Recommendations for ethical AI implementation emphasize transparency, inclusive data sets, public engagement, and robust ethical guidelines. Our research identified critical ethical concerns in AI-driven urban planning. Bias in AI systems can lead to unequal outcomes, disproportionately affecting marginalized communities. Transparency issues arise from the black box nature of AI, complicating understanding and trust in AI-driven decisions. Privacy concerns are heightened due to extensive data collection and potential misuse, raising the risk of surveillance and data breaches. Limitations include the availability of specific literature focused on AI ethics for urban planning and the evolving nature of AI technologies, suggesting a need for ongoing research and adaptive strategies. Human oversight and continuous monitoring are essential to ensure ethical practices, with an emphasis on community engagement and public education to foster trust and inclusivity.

Takeaway for practice

Urban planners should adopt a proactive approach to mitigate ethical risks associated with AI. Ensuring transparency, involving diverse community groups, and maintaining robust data privacy measures are crucial. Prioritizing public engagement and education will help to demystify AI technologies and build public trust. Addressing these ethical concerns allows planners to leverage AI’s potential while safeguarding equity, privacy, and accountability in urban development.

The machine does not isolate man from the great problems of nature but plunges him more deeply into them.

—Antoine de Saint-Exupéry

The quote by Antoine de Saint-Exupéry, a French writer and pioneering aviator best known for his novella The Little Prince, reflects his views on the impact of technology on human engagement with the natural world and existential issues. The statement frames the following discussion about the ethical implications of artificial intelligence (AI) in urban planning. As urban planners increasingly use AI to address complex urban challenges, they find themselves confronting not only the technological complexities of AI but also a diverse mix of moral and ethical questions (Phillips & Jiao, 2023). This convergence of advanced technology with human-centric urban environments underscores the urgent need for a comprehensive and critical exploration of AI’s influences on urban landscapes and society. This integration is fraught with ethical considerations, including privacy, equity, and accountability, which are viewed with caution and sometimes fear.

In the rapidly evolving landscape of urban technology, emerging AI tools have the potential to significantly affect urban planning and management. Considerable growth has been observed in areas like smart cities, the internet of things (IoT), and digital twins, contributing to the digitalization of urban environments. Other current cases of urban planners using AI include sentiment analysis of public comments, computer vision for neighborhood conditions assessments and school district boundary adjustment, and optimization processes to improve public transportation routes (Sanchez, 2023a; Ye et al., 2023a). On a broader scale, these technologies are expected to face barriers to adoption, and planners should proactively identify those challenges and seek to understand potential negative disruptive effects (Ye et al., 2023b). In this article we explore some of the anticipated challenges urban planners face when adopting AI technology. AI encompasses a wide array of tools rather than a singular platform or application, and it has already been integrated into various software types commonly used by planners, including word processing, email, spreadsheets, and GIS. We rely on a simple definition of AI for this article and the issues that are being discussed, where AI is seen as a set of methods that mimic human intelligence through computerized instructions, rules, and processes.

As in other fields, AI in urban planning is expected to encounter an array of ethical challenges and risks, notably regarding human control and the potential for misuse. As AI systems increasingly influence decision making in urban governance, questions about bias, transparency, and accountability must be considered (Yigitcanlar et al., 2020). We discuss these issues as they relate to AI in urban planning, with a focus on addressing the ethical implications. By exploring several aspects of ethics related to planning practice and AI, we seek to highlight the balance between harnessing AI’s capabilities and safeguarding the ethical and social values integral to urban planning. One of the challenges we encountered was that there were very few current publications with a specific focus on AI ethics for practicing planners. Our search using Web of Science, Scopus, and Google Scholar to find such publications (using the terms urban planning and AI and ethics) returned only six publications. These publications covered a much broader territory than AI ethics for planners but helped shape our discussion (see Luusua et al., 2023; Peng et al., 2023; Phillips & Jiao, 2023; Sanchez et al., 2022; Son et al., 2023; Yigitcanlar et al., 2020).

Given the rapid growth in data collection, accelerated computer processing speeds, and greater accessibility of AI applications, planners are now asking how (or whether) applying advanced analytical approaches, including the concept of “urban AI” as described by Cugurullo (2020), can enhance planning practice. Urban AI encompasses the integration of AI into the fabric of urban environments, transforming cities into more autonomous, self-regulating systems. This underscores a shift from mere data analysis to the proactive, AI-driven management of urban spaces, suggesting a future where cities not only predict but adapt to changes autonomously. The novelty of AI and urban AI methods for planners presents both opportunities and challenges that will require time to understand their impacts fully (Allam & Dhunny, 2019). Though the potential of AI for planning has been acknowledged since the 1960s and 1970s, its practical application was limited by the lack of urban system data and inadequate computing power (Batty, 2021; Sanchez, 2023b). Today, however, the landscape is dramatically different. Enhanced data collection capabilities allow for the monitoring of movement patterns, land use changes, real estate transactions, and energy usage, providing a rich data set for urban AI applications to optimize and autonomize urban planning processes (Barkham et al., 2022). This evolving scenario, propelled by advancements in information technology, sets the stage for urban planners to explore the potential of urban AI in creating self-sufficient, intelligently managed urban ecosystems, aligning with Cugurullo’s vision of the future of urban planning.

AI and big data are increasingly becoming part of our everyday lives. This includes data being used to make decisions, such as medical diagnoses, credit reporting, and consumer recommendations (Sanchez, 2023b). In the realm of urban planning and development, smart city initiatives deploy data collection sensors that monitor human activities across various scales, amassing vast amounts of data about us, often without our awareness (Chang, 2021; Cugurullo, 2020; Zuboff, 2019). Other significant concerns and warnings have been expressed, such as bias embedded in search engine results and algorithms (Noble, 2018) and how big data and algorithms harm the poor, reinforcing racism and inequality (Eubanks, 2018; O’Neil, 2016). The potential reliance on AI for critical decision making in urban planning raises questions that include accuracy, bias, and displacement of human expertise. As the profession moves toward AI-driven urban planning, it is important to address these concerns to ensure that the development of our cities remains inclusive, sustainable, and reflective of the needs of stakeholders.

In this article, we identify specific areas of concern where the appropriateness of a new generation of analytical methods will be the subject of debate. This article draws on limited (but hopefully growing) literature with a specific focus on ethical concerns related to the application of AI to urban planning practice. Future research will likely result from experiences documented in the field of planning over time because of increasing AI implementation. Besides contributing to the growing academic literature, one of our objectives is to address the needs of the planning profession. Professional organizations like the American Planning Association (APA) have been putting substantial energy and resources into strategizing about how planners can prepare for AI. This includes a recent series of reports directed to planning professionals on digitalization for planning (see Gomez & DeAngelis, 2022), AI and planning (see Andrews et al., 2022; Sanchez, 2023a), and generative AI (Daniel, 2023). We hope that this article will lead to further research, discussion, and debate. We conclude by providing recommendations on how to proceed with caution as the profession gains experience with these new processes and outcomes.

Ethics for Planners

The role of ethics in urban planning has several dimensions, encompassing the responsibility to guide urban development and management in a manner that is equitable, just, and sustainable. Urban planning, by its very nature, affects the lives of many, and the decisions influenced by planners have long-lasting impacts on communities. This responsibility necessitates a strong ethical framework to navigate the complexities and challenges inherent in the field. Many ethical dilemmas planners face stem from the need to balance diverse and often conflicting interests (Wachs, 1985). Urban planning is not merely about land use and infrastructure development; it involves mediating among different stakeholders, each with its own set of values, needs, and objectives. Ethical planning requires a conscientious approach to conflicts, striving for solutions that are fair and just, especially in the face of competing demands.

At the heart of decision making in urban development lies the power dynamics that permeate the planning process. Forester (1989) discussed the challenges planners face in advocating for equitable outcomes, especially when confronted with powerful entities whose interests may conflict with those of the wider community. Forester underscored the importance of ethical courage and the need for planners to stand firm in their principles, even when it means challenging established power structures. In addition, Fainstein (2010) argued for a model of urban development that prioritizes equity, democracy, and diversity. This includes the ethical imperative for planners to create cities that not only function efficiently but are also socially just and inclusive, particularly for marginalized and disadvantaged communities.

Ethics in urban planning further includes a commitment to environmental stewardship and sustainability (Wheeler, 2013). Planners are tasked with making decisions that have long-term implications for the environment and future generations. This involves balancing current development needs with the preservation of natural resources and the health of ecosystems. Furthermore, public participation and engagement are essential ethical components in urban planning (Brenman & Sanchez, 2012). However, it remains a challenge for planners to ensure that community members have opportunities to be involved in the planning process, particularly those from underrepresented or marginalized groups. This commitment to inclusivity and transparency is intended to build trust, incorporate diverse perspectives into planning decisions, and avoid adverse impacts on groups such as people of color.

Challenges

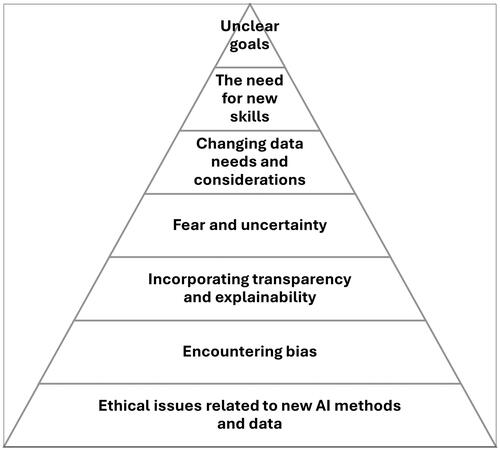

A variety of challenges will influence the speed and efficiency with which urban planners can implement AI. In discussing the future of AI for urban planning, Sanchez (2023b) identified seven challenges that could be encountered as part of the innovation adoption process (see ). The challenges urban planners will face include fear and uncertainty, the demand for new skills, evolving data needs, unclear objectives, issues of transparency and explainability, the presence of bias, and ethical dilemmas. These are not distinct from each other but interwoven and dynamic. This interrelation stems from the inherently complex nature of AI systems and the many ways in which they may affect urban planning practices. For instance, fear and uncertainty about AI can be directly linked to a lack of transparency and explainability in AI algorithms, making it difficult for planners to trust and effectively use these systems. Similarly, the need for new skills is intertwined with changing data needs and considerations, because urban planners must not only understand how to work with new types of data but also how to do so in a way that aligns with ethical principles and minimizes bias. Acknowledging the interconnectedness of these challenges is crucial for developing holistic strategies that address not just the technical aspects of AI adoption but also the human and ethical dimensions, ensuring that AI serves as a tool for enhancing urban planning outcomes.

Of these challenges, we focus here on those associated with ethical issues. Although ethical issues are intertwined with the other challenges, they have emerged as primary concerns given the perceived impacts of AI decisions on community plans and quality of life. We next discuss these ethical concerns as they relate to urban planning and AI. This includes five primary areas related to ethical concerns: bias, privacy, equity and inclusivity, accountability and transparency, and misinformation and disinformation. Like the challenges mentioned above, each is not completely distinct from the other, but they represent aspects that have been identified thus far in the literature on AI ethics. We then provide a range of considerations directed to planners who may be at risk from AI-generated outputs being used in the plan-making process. We conclude by highlighting the major points while acknowledging there are many unknowns that make it difficult to provide firm conclusions other than to extend what has already been observed.

Bias

Bias in AI can arise in various forms, frequently stemming from skewed data sets or assumptions embedded in algorithms (Du et al., 2023). In addition, missing data, such as census undercount data or low public survey participation rates, lead to representation bias in AI systems, primarily because these systems rely on data to learn, make predictions, and inform decision-making processes (Shahbazi et al., 2023). When certain populations are undercounted or missing from the data set, the AI models developed using these incomplete data inherit these gaps, leading to skewed outputs. These biases can lead to unequal outcomes in urban development, disproportionately affecting certain communities or failing to adequately represent the diverse needs of urban and other populations. The challenge, therefore, is to ensure that AI systems in urban planning are developed and implemented with an awareness of potential biases. This involves not only scrutiny of the data used to train these systems, such as large language models (LLMs) and other generative AI applications, but also continuous monitoring and adjustment of algorithms to address biases and outcomes as they emerge. Engagement and consultation with diverse community groups and stakeholders to understand their unique needs and perspectives can also inform more equitable AI development.

Research on human bias, particularly implicit biases, emerged from well-established theories of learning and memory as well as experimental psychology and neuroscience and revealed that even without conscious awareness, people harbor biases against races, genders, ages, classes, and nationalities (Lovell et al., 2023; Nordell, 2021). Early concerns highlighted how these biases manifest in ways that are discrepant from facts, such as associating American symbols more with White faces than with Asian faces, despite all being U.S. citizens (Fiske & Taylor, 2013). The extent to which these human biases can infiltrate AI systems and cause detrimental outcomes has become a topic of discussion in society in the last few years (Ferrer et al., 2021; Roselli et al., 2019). These hazards are important considerations for all organizations considering the adoption of AI systems. Systems trained on large databases and human behavior seem to inevitably replicate pre-existing biases. Overcoming, reducing, eliminating, and ameliorating these biases and their adverse effects on people is very difficult. In the United States this is evident in failures in the enforcement of civil rights laws and diversity programs and initiatives that have been in place for several decades.

AI methods have the potential to intensify human bias by systematically introducing these biases into sensitive application domains. Algorithms are susceptible to bias in several ways, even when sensitive factors like gender, ethnicity, or sexual orientation are accounted for (Roselli et al., 2019). It can be extremely difficult to account for and correct for non-merit factors. Even large amounts of accumulated training data can be incomplete or inaccurate, reflecting previous poor decisions, social conditions, the vestiges of past discrimination, and biased analyses resulting from historical conditions. One question is whether AI can assist in identifying and minimizing the effects of human biases if properly trained. We are all susceptible to and responsible for combating bias. Unfortunately, some ideological adherents do not believe bias exists (Sue, 2010), some choose to perpetuate bias and its effects, and some seek to increase non-rational differences among groups. An agnostic or colorblind approach contributes nothing to solving social problems. However, some expect that computer algorithms can be trained to detect even subtle forms of bias (Mayson, 2018). Given the subtlety and societally embedded nature of some forms of bias and their manifestation, it seems unlikely that bias can be eliminated.

Not only does bias harm those discriminated against but it also limits people’s participation in the economy and society. As a result of fostering distrust and delivering skewed results, bias lowers AI’s potential for use in government, business, and society in general. Bias is irrational, illogical, and not the result of random processes. Whereas software systems can be programmed to follow the rules of formal logic, assumptions and premises must be ascertained and examined for veracity and credibility, for which there are few resources or applications. In theory, random decisions can be substituted for biased human (and machine) decision making. However, true randomness is notoriously difficult to achieve.

Solutions to combating bias involve expanding research into AI prejudice in planning and public policy, as well as general education, to carefully consider the many ways in which AI may supplement our current methods of making decisions. It is important to detect, resolve, and document any instances where we witness bias in AI. However, defining and assessing fairness is very difficult in the public realm (Brenman & Sanchez, 2012). Researchers have devised technical definitions of fairness, such as mandating that models have similar outcome values across socioeconomic groups (Corbett-Davies & Goel, 2018). However, defining fairness by outcomes invites the objection from some conservatives that such definitions demand equality of outcomes rather than just equality of opportunity. Different fairness criteria typically cannot be satisfied at the same time, which is a considerable challenge.
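To make the tension between fairness criteria concrete, the following minimal sketch compares two common group-level measures on a small set of made-up decisions. The records, group labels, and function names are hypothetical and introduced only for illustration; they do not come from the studies cited above. The first measure compares the share of positive decisions across groups (an outcome-based criterion, often called demographic parity), and the second compares the share of positive decisions among people whose actual outcome was positive (often called equal opportunity).

```python
from collections import defaultdict


def selection_rates(records):
    """Share of positive decisions per group (outcome-based criterion)."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, decision, _outcome in records:
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def true_positive_rates(records):
    """Share of positive decisions among people whose actual outcome was positive."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, decision, outcome in records:
        if outcome == 1:
            totals[group] += 1
            positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def gap(rates):
    """Largest difference between any two groups' rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical records: (group, model decision, actual outcome), 1 = positive.
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 0, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 0, 1), ("B", 0, 0),
]

print("selection-rate gap:", gap(selection_rates(records)))
print("true-positive-rate gap:", gap(true_positive_rates(records)))
```

In this toy data set both groups receive positive decisions at the same rate, so the outcome-based criterion is met, yet qualified members of group B are approved only half as often as qualified members of group A. Which gap matters more is a policy judgment, not a technical one.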

Researchers have made strides in strategies that can improve how AI systems match fairness requirements and metrics, whether by preprocessing data, reviewing results, or embedding appropriate and transparent rules as part of the data training process (Corbett-Davies & Goel, 2018). Counterfactual fairness is a method that ensures a model’s conclusions would remain the same even if sensitive characteristics like race, gender, or sexual orientation were modified (Kusner et al., 2017). This approach can be used in complex situations in which some impacts from sensitive qualities that affect outcomes are viewed as fair, whereas others are viewed as unfair. The model could be used, for instance, to ensure that an applicant’s race had no bearing on whether they were approved for a mortgage while still allowing the lender to include race as demographic information for later reporting.
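The intuition behind the mortgage example can be illustrated with a simplified attribute-flip check: change only the sensitive attribute on each record and see whether the decision changes. This is a deliberately reduced stand-in, not the causal-model approach of Kusner et al., and it will not detect bias that operates through proxies such as neighborhood or ZIP code. The two decision rules, the income thresholds, and the applicant records below are hypothetical.

```python
def flip_test(model, applicants, attribute, values):
    """Return applicants whose decision changes when only `attribute` is varied."""
    changed = []
    for applicant in applicants:
        # Score a copy of the record under each possible value of the attribute.
        decisions = {model({**applicant, attribute: value}) for value in values}
        if len(decisions) > 1:
            changed.append(applicant)
    return changed


def income_only_model(applicant):
    """Hypothetical rule that ignores race entirely."""
    return applicant["income"] >= 50_000


def biased_model(applicant):
    """Hypothetical rule that applies a higher threshold to one group."""
    threshold = 50_000 if applicant["race"] == "X" else 60_000
    return applicant["income"] >= threshold


# Made-up applicant records; race is retained as a field for reporting.
applicants = [
    {"income": 55_000, "race": "X"},
    {"income": 70_000, "race": "Y"},
    {"income": 40_000, "race": "Y"},
]

print(flip_test(income_only_model, applicants, "race", ["X", "Y"]))
print(flip_test(biased_model, applicants, "race", ["X", "Y"]))
```

The first rule passes the check because race can stay in the record without influencing the decision. The second rule is flagged for the applicant whose income falls between the two thresholds.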

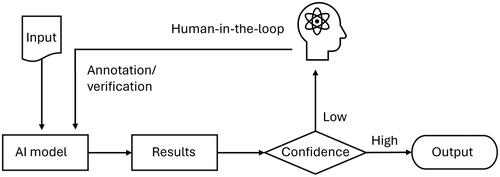

It is also necessary to determine when a system is deemed fair for use by deciding under what circumstances automated decision making can be permitted (Araujo et al., 2020). In some cases, human-in-the-loop algorithms or AI system responses, which include human intervention or review, will be needed to maintain control or oversight, especially in unusual circumstances that may not have significant machine intelligence to draw upon. These issues call for interdisciplinary approaches from planners, engineers, designers, ethicists, and social scientists. This can be especially challenging in the case of planning, where political forces play a significant role in decision making.

There are some basic steps to address potential ethical concerns relating to bias. Planners should keep up to date on the ethical dimensions of rapidly evolving urban technologies, including AI, which are being continuously uncovered as more and more applications are being implemented. New forms and effects of discrimination are being discovered. An example is the recent discovery that houses occupied and owned by Blacks are often given lower appraisals than identical houses occupied and owned by Whites (Faber, 2020). In addition, planners can create accountable procedures that help reduce prejudice when they use AI, including the use of technical tools or operational techniques such as oversight committees or external evaluations. Oversight and external evaluation have their challenges, along with the enforcement of guidelines (Katyal, 2019). Much depends on who is doing the oversight and evaluation and whether the findings of the oversight group are adopted or merely treated as recommendations.

Ongoing organizational discussions about potential biases in all plans or analyses can increase awareness to determine whether decisions were fair. Research has been conducted on how to hold individuals to higher standards using more sophisticated techniques for checking for bias in machines (Schwartz et al., 2022). This can include comparing the outcomes of algorithm performance with human decisions and using explainability approaches that, depending on the AI technique used and the level of detail needed, can help identify factors that influenced the model’s results (Belle & Papantonis, 2021). The question can be raised as to whether accepting the fallibility of human decision making as a standard is sufficient. Human-in-the-loop systems can offer suggestions that staff can verify or select from (see ). Humans can intervene to help interpret results and explain levels of confidence in these algorithms. Probabilities, Bayesian concepts, and margins of error can be made explicit. Individuals representing different disciplines such as planning, engineering, architecture, and computer science can confer in the event of contentious or difficult-to-explain algorithmic performance. The nature of public policy decisions, however, is that there are many external influences and undue influence by small groups.

Privacy

As urban planners increasingly use AI for their planning analyses, potential ethical concerns begin to surface, with privacy being one of the most prominent (Machin et al., 2021). Privacy in urban planning encompasses the safeguarding of individuals’ and groups’ rights to control personal and group information, particularly within the context of urban spaces and their interactions within them (Braun et al., 2018). It involves respecting the boundaries of personal and group space and ensuring that individual and group data are collected, stored, and used in a manner that respects their autonomy. With AI-driven planning analyses, vast amounts of data may be required for comprehensive insights. However, this reliance on data collection brings forth a wide range of privacy concerns that must be addressed responsibly (Xia et al., 2023).

One of the most pronounced privacy concerns in AI-driven urban planning revolves around the extensive data collection necessary for analyses such as traffic analysis. Urban planners gather data from various sources, including public records, sensor networks, social media, and mobile applications. These data might encompass information about residents, visitors, and users as well as customers’ movements, transportation habits, and even their social interactions. Although high-resolution, disaggregate data are indispensable for comprehending urban dynamics, the data simultaneously pose potential issues related to surveillance and infringements on individual and group privacy (Kitchin, 2016). For instance, the implementation of smart city technologies, such as surveillance cameras equipped with facial recognition, has the capacity for constant monitoring of individuals in public spaces (Kashef et al., 2021). Facial recognition has been shown to sometimes generate false identification for decision purposes such as arrest. Surveillance can violate individuals’ privacy and freedom rights, especially if they are unaware that they are being watched, their activities are being recorded, or decisions are being made solely based on automated surveillance. Striking a balance between data collection for insightful analysis and respecting individuals’ privacy and freedom rights becomes an ethical question for planners in these cases. Legal issues can arise that go beyond ethics into curtailment of rights.

The growing amount of data collected for AI-driven urban planning analyses requires secure storage and protection against data breaches and misuse (Xia et al., 2023). Given the potential sensitivity of the information involved, a breach can have severe repercussions on individuals’ privacy and overall safety. Unauthorized access to data can lead to identity theft, stalking, or other malicious activities, profoundly harming the affected individuals. Urban planners and planning organizations bear the ethical responsibility to institute strict data security measures that ensure the information collected remains confidential, inaccessible to unauthorized parties, and not misused. Such security measures include encryption, access controls, periodic security audits, well-defined protocols for responding swiftly to data breaches, and institutional review boards. Not only is this an ethical imperative, but it is also a legal requirement in many jurisdictions (Smallwood, 2019).

To mitigate privacy concerns, there are methods for de-identification and anonymization to eliminate personally identifiable information from data sets. However, it is essential to recognize that de-identification is not infallible, and there remains a risk of re-identification if sufficient information is available (Lauradoux et al., 2023). Moreover, with the rise of external data sources and increasingly sophisticated AI algorithms, the possibility of re-identification attacks becomes more substantial. Planning organizations will need to recognize the limitations of de-identification and commit to ongoing research and development efforts to enhance privacy protection methods. In addition, adopting best practices for data anonymization can help reduce the risk of re-identification, thereby preserving individuals’ privacy.
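One simple way to see why removing names does not guarantee anonymity is to count how many records share the same combination of indirectly identifying fields (quasi-identifiers). The sketch below applies this idea, commonly discussed under the name k-anonymity, to a few made-up survey rows. The field names, zone codes, and the threshold k are assumptions chosen for illustration, and passing such a check does not by itself rule out re-identification through linkage with external data.

```python
from collections import Counter


def group_sizes(rows, quasi_identifiers):
    """Count how many rows share each combination of quasi-identifier values."""
    return Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)


def smallest_group_size(rows, quasi_identifiers):
    """The data set is k-anonymous only for k up to this value."""
    return min(group_sizes(rows, quasi_identifiers).values())


def risky_rows(rows, quasi_identifiers, k):
    """Rows whose quasi-identifier combination appears fewer than k times."""
    sizes = group_sizes(rows, quasi_identifiers)
    return [
        row for row in rows
        if sizes[tuple(row[q] for q in quasi_identifiers)] < k
    ]


# Hypothetical de-identified travel survey rows (names already removed).
rows = [
    {"zone": "Z1", "age_band": "30-39", "commute": "bus"},
    {"zone": "Z1", "age_band": "30-39", "commute": "car"},
    {"zone": "Z1", "age_band": "60-69", "commute": "walk"},
    {"zone": "Z2", "age_band": "30-39", "commute": "car"},
    {"zone": "Z2", "age_band": "30-39", "commute": "bike"},
]
quasi_identifiers = ["zone", "age_band"]

print(smallest_group_size(rows, quasi_identifiers))
print(risky_rows(rows, quasi_identifiers, k=2))
```

Here the single respondent aged 60 to 69 in zone Z1 is unique on zone and age band alone, so anyone who knows those two facts about a neighbor could learn that person's commute mode. Coarsening the age bands or merging zones would raise the smallest group size at some cost in analytical detail.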

Respecting individuals’ privacy rights extends to informed consent, where individuals should be fully informed about the data being collected, their purpose, and how they will be used. In many AI-driven urban planning initiatives, residents may not receive adequate information or the opportunity to provide meaningful consent (Yigitcanlar et al., 2021). This lack of transparency can erode trust between the community and planning organizations, perhaps undermining the legitimacy of data collection efforts and leading to opposition. To address this privacy concern, urban planners should also prioritize transparency in data collection practices (Engin et al., 2020). Residents should be made aware of the types of data being collected and the precise purposes for which they will be used. Providing clear and accessible privacy policies and mechanisms for residents to opt in or out of data collection initiatives can help residents make informed decisions about their participation. This will often require distributing information in languages other than English and providing access to people with cognitive impairments.

Data ownership in the context of AI-driven methods is a complex and evolving issue. Individuals may not always have control over the data generated by their interactions with urban environments, such as data collected from IoT devices or shared on social media platforms. This raises concerns when data are collected without explicit consent and then used for decision making that affects individuals’ lives. Urban planners will need to confront questions about data ownership and control. This includes considering who owns the data and whether individuals should have more control over how their data are used. Initiatives that empower individuals to manage and control their data can be a step toward addressing privacy concerns and fostering a sense of ownership over their personal information.

Beyond privacy, the ethical use of data in AI-driven urban planning encompasses broader considerations. Planners must ensure that data are used for the betterment of the community and do not result in harm to vulnerable populations or disproportionately benefit certain groups. The adoption of ethical guidelines and codes of conduct can serve as valuable tools to guide planners in making responsible decisions about data usage. Beyond guidelines and codes, there must be monitoring, implementation, and enforcement mechanisms.

Equity and Inclusivity

Equity and inclusion within urban planning refer to the principles of fairness and social justice in the development and management of cities and communities (Moroni, 2020). They involve ensuring that all individuals, regardless of their background or circumstances, have access to essential and basic services, opportunities, and resources within the urban environment (Sharma, 2023). AI in urban planning has the potential to either reinforce existing inequalities or reduce them. AI algorithms used for urban planning will likely rely on historical data for training models. These historical data may carry biases inherited from past discriminatory practices and systemic inequalities (Veale & Binns, 2017). When AI algorithms are applied to decision-making processes, they may inadvertently perpetuate and exacerbate these biases. For example, if historical data favor investment in affluent neighborhoods over marginalized ones, AI algorithms may recommend resource allocation that continues to neglect underserved communities, leading to biased decision making, as previously discussed.

Addressing this issue requires planners and organizations to be vigilant in identifying and mitigating biases within AI systems. This may involve refining algorithms, diversifying training data, implementing regular audits to ensure fairness and equity in decision-making processes, and having historical knowledge of harms that have come before. In addition, engaging with communities to understand their unique needs and perspectives may increase in importance. Those with limited access to digital devices, the internet, or digital literacy may find themselves excluded from the benefits of AI-driven planning initiatives, particularly those for public participation. To address this concern, urban planners need to consider how to ensure equitable access to AI-driven services and information. Strategies may include providing digital literacy training, improving broadband infrastructure in underserved areas, and designing AI interfaces that are user friendly and accessible to diverse populations. By bridging the digital divide, planners can work to see that AI-driven planning benefits all residents, visitors, customers, clients, and users.
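As an illustration only, the kind of regular fairness audit described above could begin with a simple comparison of outcome rates across groups. The following Python sketch is not drawn from any agency's practice. The field names (`area_type`, `funded`), the sample records, and the 0.8 review threshold (borrowed from the four-fifths rule of thumb used in U.S. employment-selection guidance, not from a planning standard) are all assumptions made for the example.

```python
from collections import defaultdict


def selection_rates(records, group_key, outcome_key):
    """Return the share of records in each group with a positive outcome."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in records:
        group = row[group_key]
        totals[group] += 1
        if row[outcome_key]:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive outcome, so rates are equal
    return min(rates.values()) / highest


# Hypothetical AI recommendations for neighborhood investment.
recommendations = [
    {"area_type": "affluent", "funded": True},
    {"area_type": "affluent", "funded": True},
    {"area_type": "affluent", "funded": True},
    {"area_type": "affluent", "funded": False},
    {"area_type": "underserved", "funded": True},
    {"area_type": "underserved", "funded": False},
    {"area_type": "underserved", "funded": False},
    {"area_type": "underserved", "funded": False},
]

REVIEW_THRESHOLD = 0.8  # assumed cutoff for triggering human review

rates = selection_rates(recommendations, "area_type", "funded")
ratio = disparity_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD

print(rates, round(ratio, 2), needs_review)
```

A low ratio does not by itself establish unfair treatment. In this sketch it only flags a set of recommendations for closer review by planners and affected communities.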

When properly managed, AI technologies can significantly improve public participation by providing innovative tools for gathering input and feedback from residents and others. However, there is a risk that relying too heavily on AI-driven engagement processes may exclude those who lack access to technology or are not comfortable using digital platforms (Afzalan et al., 2017). Urban planners and planning organizations will need to strike a balance between leveraging AI for community engagement and ensuring that traditional, in-person engagement methods remain accessible and inclusive. This includes conducting outreach in multiple languages, providing accommodations for individuals with disabilities, and fostering a culture of inclusion where all voices are valued, regardless of their mode of expression (Brenman & Sanchez, 2012).

One of the unintended consequences of AI-driven planning could be forms of gentrification and displacement. As AI identifies areas for redevelopment or investment, it may inadvertently accelerate the process of raising property values and rents, pushing out long-standing residents and businesses (Lorinc, 2022). This can lead to the displacement of vulnerable communities and the loss of cultural diversity within neighborhoods. Urban planners should proactively develop strategies to mitigate these effects, such as affordable housing initiatives, tenant protections, and community land trusts. In addition, urban planners should prioritize the preservation of cultural heritage and the voices of those at risk of displacement in their planning processes (Anguelovski et al., 2019).

To truly harness the potential of AI in urban planning for the betterment of all community members, a deep commitment to equity and inclusivity is paramount. This commitment necessitates a shift in perspective, recognizing that technological solutions must be deliberately designed and implemented to address the unique needs of diverse populations, including those historically marginalized. Urban planners and AI developers must engage directly with these communities, employing a co-creation approach to ensure that AI-driven solutions are grounded in the real-world experiences and challenges of those they aim to serve. This engagement should not be superficial but deeply integrated into every stage of the planning process, from conceptualization to implementation and evaluation.

Furthermore, inclusivity in this context means going beyond mere access to ensuring that AI applications are comprehensible, usable, and beneficial across different socioeconomic backgrounds, ages, and abilities. Urban planners must advocate for and develop AI tools that are inherently accessible, with interfaces and interactions designed for a broad spectrum of users. Efforts to improve digital literacy, alongside the deployment of AI solutions, will be critical in ensuring that the digital divide does not widen the gap between different community segments.

Accountability and Transparency

As public servants, urban planners must treat accountability as paramount, regardless of the methods they use. AI systems potentially add a layer of complexity because of the vast amounts and types of data that may be used. When AI-informed decisions lead to unanticipated outcomes, it can be challenging to determine who is responsible. Is it the developers of the AI, the data providers, the urban planners who implemented it, or political leadership? This ambiguity can lead to a lack of accountability. For example, if an AI system recommends reducing public transportation in a certain area, resulting in reduced accessibility for lower-income residents, who is to blame? Without clear lines of accountability, such decisions can exacerbate social inequalities.

The complexity of AI algorithms may further complicate this issue. AI models, especially those based on machine learning, are often described as black boxes owing to their opaque analytical processes. This opacity makes it difficult for planners and stakeholders to understand how particular predictions or decisions were reached. In urban planning, where decisions can have far-reaching consequences on community development, infrastructure, and public welfare, this lack of understanding is a significant concern as it relates to the distribution of services and benefits.

Transparency is closely linked to accountability. For urban planners to be held accountable, the processes and reasoning behind AI-driven decisions must be transparent. However, achieving transparency in AI systems is challenging. Many AI algorithms are proprietary, and their internal workings are closely guarded secrets of the companies that developed them. Even when the algorithms are open for inspection, their complexity can make them incomprehensible to those without specialized knowledge. This lack of transparency can lead to distrust among the public, especially in communities directly affected by urban planning decisions. Moreover, the data used by AI in urban planning can raise additional transparency issues. AI systems are only as good as the data they are trained on. If these data are biased, the AI’s decisions may perpetuate and amplify these biases. Transparency about data sources, data quality, and the potential for bias is crucial for ethical AI use in urban planning.

Another concern is the potential for AI to reinforce existing power structures within planning organizations and communities (Afzalan & Muller, 2018). AI-driven planning tools can be expensive and require specialized knowledge to operate, potentially centralizing power in the hands of those who have access to these resources. This centralization can lead to a less-inclusive planning process, with community members and smaller stakeholders having less influence over decisions that directly affect them. To address these issues, urban planners and planning organizations will need a multifaceted approach. One critical step is the development and enforcement of ethical guidelines for AI use in urban planning. These guidelines should emphasize accountability, requiring clear lines of responsibility for decisions made with the assistance of AI. They should also promote transparency, in terms of both the algorithms used and the data they operate on. Making these aspects of AI systems accessible and understandable to nonexperts can help build trust and facilitate more inclusive decision-making processes. Cities such as San José (CA), Tempe (AZ), and Boston (MA) have drafted guidelines that outline frameworks along these lines.

Related to openness, transparency, and accountability, urban planners should consider enhanced participatory approaches involving community members in the planning process. This involvement can help identify potential biases in data and decision making, ensuring that the AI’s recommendations do not inadvertently harm certain groups. Moreover, it can help demystify AI, making it a tool for the community rather than a force acting upon it. Addressing these concerns requires a concerted effort from all stakeholders involved in the urban planning process. By developing ethical guidelines, promoting transparency, ensuring accountability, and engaging with the community, urban planners can use new AI methods while mitigating their potential ethical pitfalls. But it is important to point out that this is true for all aspects of a planner’s responsibilities and not limited to AI-based approaches.

The use of AI algorithms in urban planning may be opaque, making it challenging for residents to understand how certain recommendations or decisions were made. A lack of transparency can erode trust in the planning process, particularly among marginalized communities that may already have a history of being excluded from decision making. To address this concern, planners and organizations should prioritize transparency in their use of AI. Transparency should be emphasized throughout the planning process (Hersperger & Fertner, 2021). This means not only providing clear explanations of how algorithms work but also involving the community in algorithm development and decision making. Transparency can help residents understand the rationale behind planning decisions and hold planners accountable for their actions.

The challenge of explaining AI methods and results to the public is a critical aspect of fostering trust and inclusivity. As urban planners increasingly rely on AI to inform their decisions, it becomes imperative to develop strategies for effectively communicating the complexities and outcomes of these technologies to a nonspecialist audience. This includes creating clear, accessible explanations of how AI algorithms function, the data they use, and the rationale behind their recommendations. Simplifying the technical jargon and employing visual aids or interactive tools can help bridge the gap between complex AI processes and public understanding. Moreover, urban planners should actively seek opportunities for public education about and engagement with AI, organizing workshops, forums, or online platforms where community members can ask questions, provide feedback, and learn about the AI tools being used in their cities. By prioritizing the explanation of AI methods and results in a transparent and comprehensible manner, planners can ensure that all community members, regardless of their technical expertise, feel informed, involved, and empowered within the urban planning process.

Misinformation and Disinformation

Another major ethical problem involves the deployment of AI in disseminating false, harmful, and misleading information. Bad actors may use machine learning models to produce factually incorrect information regarding contentious urban development issues and spread it on social media channels (Hollander et al., 2020). This has already been seen in U.S. politics. The extent to which social media played a role in propagating false information during the 2016 presidential election serves as an example of this on a much larger scale (Shu et al., 2017). The spread of misinformation will likely continue on social media platforms, however, with or without the intervention of AI technologies. Slowing the spread of misinformation on social media platforms is a complex and multifaceted challenge that requires a multipronged approach. Overall, it will require a combination of fact-checking, algorithm adjustments, education, community engagement, moderation, and legal measures (Anderson & Rainie, 2017). More forceful and contentious measures include censoring or banning social media platforms such as TikTok, as proposed by the U.S. Congress in 2024.

Misinformation, the unintentional spread of false information, and disinformation, the deliberate dissemination of misleading or false information, pose unique challenges in the context of AI-driven urban planning. One of the primary issues is the quality and veracity of the data fed into AI systems. AI algorithms, particularly those based on machine learning, are heavily dependent on large data sets to make predictions and decisions. If the underlying data are flawed, outdated, or biased, the AI’s outputs can be inaccurate or misleading, leading to misinformation. The risk of disinformation arises when there is a deliberate attempt to influence urban planning processes or decisions using false or manipulated data. This can occur for various reasons, such as political gain, financial profit, or sabotage. For example, a developer might manipulate data to make a site appear more suitable for development than it is, influencing planning decisions to their advantage. Such acts not only undermine the planning process but also erode public trust in the authorities and the systems they use.

Another aspect of this challenge is the complexity and opacity of many AI systems. The black box nature of some AI models makes it difficult for planners and stakeholders to understand certain outputs. This opacity can be exploited to spread disinformation, because it is challenging to verify or contest decisions made by an AI system whose reasoning is not transparent. For urban planning, where decisions can have significant impacts on communities, this lack of transparency and accountability can lead to distrust. To address these challenges, urban planners and planning organizations need to take a proactive and multifaceted approach. First, ensuring the quality and integrity of the data used by AI systems is crucial. This involves not only using reliable and up-to-date data sources but also regularly auditing and validating the data for accuracy and bias, for example through human-in-the-loop evaluation. Planners should be aware of the sources of their data, understand the limitations, and consider the potential for manipulation.

Although AI offers potential benefits for the urban planning process, the risks of misinformation and disinformation cannot be ignored. Addressing these risks requires diligence, transparency, and a commitment to ethical practices. By focusing on data integrity, transparency in decision making, public engagement, and creation and adherence to ethical standards, urban planners and planning organizations can mitigate the risks of misinformation and disinformation. This is true at any level or with any type of analysis whether AI is involved or not. This approach not only will enhance the effectiveness and fairness of urban planning but will also help maintain public trust in the systems and institutions responsible for shaping our cities.

How Humans Control AI Technology

Recognizing the limitations of AI underscores the necessity for human control and oversight. The relationship between AI and its human supervisors is emphasized in various facets of AI deployment and operation. First, the human-in-the-loop paradigm underscores the critical role of human experts in guiding, intervening, and even correcting AI output and decisions. In AI applications within urban planning, AI may produce preliminary recommendations based on data analysis, but these should be refined or adjusted by human planners to account for contextual nuances that the AI might overlook or errors that the AI system might have produced. It should be noted, however, that relying on the human in the loop may assume a level of expertise, consistency, and dependability beyond what we can reasonably expect from humans.

The training phase of AI, especially within supervised learning models, further accentuates the need for human control. During this phase, AI learns from data curated, cleaned, and labeled by human experts. It is through this iterative process, where humans delineate right from wrong and correct from incorrect and create rules-based structures, that AI models gain their decision-making capabilities. In addition, though AI operates on algorithms, the nuances of its operations are determined by parameters and hyperparameters set by human operators. This offers a means for experts to fine-tune the behavior and outcomes of AI, ensuring its alignment with specific urban planning objectives, like the population prediction example mentioned earlier.

The use of AI in urban planning obligates planners to play multiple roles, transitioning from mere beneficiaries of AI’s analytical capabilities to stewards of its ethical and effective deployment. Planners, while leveraging AI’s insights, retain the crucial responsibility of contextualizing these insights within the landscape of the urban fabric, societal nuances, and historical precedents. Planners who are assigned these new roles face the challenge of acting as gatekeepers in society. AI, for all its computational agility, may at times provide solutions that, although theoretically optimal, are misaligned with the lived experiences and aspirations of urban populations. It is here that the planner’s expertise and attention to ethics and morals become indispensable in moderating and refining AI’s recommendations to ensure alignment with holistic urban objectives.

The ethical dimensions of AI’s incorporation into urban planning further accentuate the planner’s role as a gatekeeper. In a domain where decisions have profound sociospatial ramifications, the onus lies on urban planners to ensure that AI-driven solutions uphold the highest ethical standards, safeguarding against biases and championing inclusivity. By setting and enforcing ethical parameters, urban planners can mitigate the risk of AI perpetuating systemic biases or overlooking marginalized communities. The AICP Code of Ethics and Professional Conduct states that, most important, planners “serve the public interest” with “integrity,” “proficiency,” and “knowledge.” Though the Code of Ethics does not address specific planning methodologies, some of its themes do apply to the use of AI, particularly those related to the adoption of an open data ethic (Schweitzer & Afzalan, 2017).

Recommendations for Ethical AI Implementation in Urban Planning

The earlier discussion of the ethical implications of AI in urban planning underscores the importance of comprehensive and balanced preparation. To ensure that AI technologies benefit urban planning activities and outcomes, it is important to note that the planning profession has emphasized the need for ethical standards that apply to all areas of the plan-making process. Based on this discussion, the following recommendations are proposed:

Establish clear ethical guidelines and standards: Urban planners and policymakers should develop and adhere to comprehensive ethical guidelines for AI implementation. These guidelines should address privacy, data security, transparency, and fairness. Planners should learn from the lessons and best practices of their peers, especially in the early stages of AI adoption. Drawing from best practices in cities with established clear standards can help prevent biases and protect citizen and community rights.

Prioritize transparency and accountability: AI systems should be designed to be as transparent as possible, allowing stakeholders to understand and question the decision-making processes. This includes clear documentation of AI algorithms and methodologies.

Ensure inclusive and diverse data sets: To mitigate bias, it is crucial to use diverse and inclusive data sets that accurately represent the entire population and users of an urban area. This involves actively seeking data that include underrepresented communities, ensuring equitable AI outcomes. Planning analyses of all types, whether in AI models or not, should meet this standard.

Foster public engagement and participation: Encourage public participation in AI-driven urban planning projects. This can be achieved through community consultations, surveys, and participatory design sessions, allowing residents and others to voice their concerns and preferences.

Conduct regular ethical audits and reviews: Implement regular audits of AI systems to evaluate their ethical implications, effectiveness, and alignment with city and area goals. Ethical committees or review boards can provide oversight and ensure ongoing compliance with ethical standards. Most, if not all, public agencies, as well as the planning profession, already have such mechanisms in place that should incorporate knowledge of new technologies. Unfortunately, these committees and boards do not always make the best use of their roles. Because of this, our recommendations for AI use might go beyond current practice. We are comfortable with this. It is better to stay ahead of technological developments rather than play catch-up later.

Promote interdisciplinary collaboration: Encourage collaboration between technologists, urban planners, ethicists, and community groups. This interdisciplinary approach can provide a holistic view of the implications of AI in urban planning and foster solutions that are both technologically sound and ethically responsible.

Invest in AI literacy and education: Investing in AI literacy for both professionals and the public can lead to better understanding and engagement with AI technologies. Educational programs can demystify AI and foster informed discussions about its role in urban development. This will also be an important aspect of all oversight and review functions, to be knowledgeable about data and methodologies.

Develop robust privacy and data security policies: Robust policies on data privacy and security are essential and will become increasingly important. This includes implementing strong cybersecurity measures and clear policies on data usage and sharing.
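To make the recommendation on inclusive data sets more concrete, one rough check is to compare each group’s share of a data set with its share of the population the data set is meant to represent. The Python sketch below is only illustrative. The group labels, the counts, the population shares, and the 5-percentage-point tolerance are hypothetical values chosen for the example, not figures from any study.

```python
def representation_gaps(sample_counts, population_shares):
    """Return each group's sample share minus its population share."""
    total = sum(sample_counts.values())
    if total == 0:
        raise ValueError("sample_counts must contain at least one record")
    return {
        group: sample_counts.get(group, 0) / total - pop_share
        for group, pop_share in population_shares.items()
    }


def underrepresented(gaps, tolerance=0.05):
    """List groups whose sample share falls short by more than the tolerance."""
    return sorted(group for group, gap in gaps.items() if gap < -tolerance)


# Hypothetical responses to an online engagement survey.
survey_counts = {"renters": 120, "owners": 380}

# Hypothetical shares of the same groups in the wider community.
community_shares = {"renters": 0.45, "owners": 0.55}

gaps = representation_gaps(survey_counts, community_shares)
flagged = underrepresented(gaps)

print(flagged)
```

In this example renters make up 24% of responses but 45% of the community, so they are flagged. A check of this kind can prompt targeted outreach before the data are used to train or inform a model.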

At this early stage, urban planners should emphasize the need for education about the many dimensions of AI (Sanchez et al., 2022). Such an understanding not only facilitates optimized AI applications but also equips planners to discern instances where human knowledge or expertise should prevail. The allure of AI’s data processing capabilities, although tempting, should not overshadow the intrinsic human touch, characterized by context and empathy, which has been central to effective urban planning. Equally important is the collaboration between urban planners and AI experts. Such interdisciplinary synergy can fine-tune AI tools to cater specifically to urban challenges, ensuring relevance and utility. Moreover, this cross-pollination of expertise can bridge the existing knowledge gap and ease the uncertainty that clouds AI’s integration into urban planning.

Conclusions

Though AI holds great promise for enhancing urban analytical methods, recognizing its ethical challenges is crucial. In this article, we identify areas of ethical concerns and recommend establishing clear ethical guidelines, prioritizing transparency and accountability, ensuring inclusive data sets, and fostering public engagement. Regular ethical audits, interdisciplinary collaboration, AI literacy, and robust privacy policies are also strongly recommended. These recommendations are aimed at achieving a balance where AI can be used effectively in urban planning while ensuring that ethical standards are upheld and the rights and needs of all urban residents are considered. Ultimately, the successful integration of AI in urban planning will depend on a concerted effort from technologists, policymakers, urban planners, and the public to navigate these ethical complexities and work toward creating more equitable, efficient, and sustainable urban environments by using these tools.

A key takeaway from the preceding discussion is that warnings about the use of AI ultimately echo existing concerns regarding human performance and trustworthiness (De Cremer & Kasparov, 2022). As complex as some AI models are, it should be noted that the human brain is the ultimate black box (O’Gieblyn, 2022). Humans often exaggerate or are unaware of the reasons why they made a particular decision or why they selected specific alternatives. The term hallucination is frequently used to characterize misinformation assembled by LLMs; however, humans do this as a matter of course, and these instances are casually dismissed. There is no difference, but it is implied that LLMs are somehow less trustworthy. It can be very difficult to detect the source or type of bias in human thought, whereas the data and logic used by computers can be carefully deconstructed and analyzed. There are, however, exceptions to this, including deep neural networks, known for being black boxes where outputs are very difficult to predict or explain.

Though there are concerns about rapid technology changes, the adoption cycle is often less disruptive than expected because it tends to be a gradual process that occurs incrementally (Rogers et al., 2014; Rosário & Dias, 2022). In the case of planning, consider the adoption of GIS, possibly the most significant technological advancement for planners in the past few decades (Drummond & French, 2008; Sanchez et al., 2022). The use of GIS has progressed from the creation and updating of maps by specialized GIS technicians to relatively sophisticated spatial analysis methods now available to anyone with access to a smartphone or web-based GIS platforms. This has taken place over several years and has transformed planning practice with few (if any) negative impacts on the profession. It remains to be seen whether the adoption of AI by planners will follow a similar path. The planning profession is generally risk averse and not a highly technical field, so we suspect a relatively gradual process.

The promises and potential of AI are both intriguing and absorbing, but organizations should carefully prepare before investing significant resources in AI. It is important to prepare for change, and some interruption is to be expected. We are accustomed to our routines; therefore, all types of change take time and have their challenges. As we describe here, it is important to create a clear vision and goals for AI use and to develop a thorough understanding of what AI offers. Upon adoption, it is also important to track performance and evaluate the value and advantages of AI deployment as well as its possible harms. Throughout the process of adopting AI, planners should be aware of the potential for ethical concerns related to its use as new types of data and analysis emerge for AI applications.

Finally, we return to Antoine de Saint-Exupéry’s quote. He suggested that technology, rather than distancing humans from the natural world and humankind, actually deepens our involvement with it. For instance, machines like airplanes do not just transport us above the Earth; they offer new viewpoints and understanding of our planet and our role within it. This deeper engagement does not simplify our problems but exposes their complexity and interconnectedness. He also touches on the moral and ethical responsibilities that accompany technological power. As our capabilities grow, so do the ethical dilemmas regarding our impacts on the world around us. This perspective is a departure from the notion that technology enables humans to dominate or control nature. Instead, it suggests that technology pushes us to better understand humankind. Ultimately, there are many unknowns, and the record shows that we are not skilled at predicting the future, especially when it comes to technological change. It is the planner’s role to use all available facts and proceed in a way that best serves the public.

RESEARCH SUPPORT

This work is partially based upon work supported by the National Science Foundation under Grant Nos. 2122054 and 2232533, the start-up Grant 241117–40000 from Texas A&M University’s Department of Landscape Architecture and Urban Planning, and the Harold Adams Endowed Professorship. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author and do not necessarily reflect the views of the National Science Foundation and Texas A&M University.

Correction Statement

This article has been corrected with minor changes. These changes do not impact the academic content of the article.

Additional information

Notes on contributors

Thomas W. Sanchez

THOMAS W. SANCHEZ, AICP ([email protected]) is a professor of landscape architecture and urban planning at Texas A&M University and serves as the chair of the APA Education Committee.

Marc Brenman

MARC BRENMAN ([email protected]) is a retired federal and state civil rights executive and former executive director of the Washington State Human Rights Commission.

Xinyue Ye

XINYUE YE ([email protected]) is the Harold Adams Endowed Professor in Urban Informatics and Geospatial AI at Texas A&M University, where he directs the Center for Geospatial Sciences, Applications, and Technology established by the Texas A&M Board of Regents.

Notes

1 This quote is sometimes attributed to Isaac Asimov.

REFERENCES

- Afzalan, N., & Muller, B. (2018). Online participatory technologies: Opportunities and challenges for enriching participatory planning. Journal of the American Planning Association, 84(2), 162–177. https://doi.org/10.1080/01944363.2018.1434010

- Afzalan, N., Sanchez, T. W., & Evans-Cowley, J. (2017). Creating smarter cities: Considerations for selecting online participatory tools. Cities, 67, 21–30. https://doi.org/10.1016/j.cities.2017.04.002

- Allam, Z., & Dhunny, Z. A. (2019). On big data, artificial intelligence and smart cities. Cities, 89, 80–91. https://doi.org/10.1016/j.cities.2019.01.032

- Anderson, J., & Rainie, L. (2017). The future of truth and misinformation online. Pew Research Center.

- Andrews, C., Cooke, K., Gomez, A., Hurtado, P., Sanchez, T. W., Shah, S., & Wright, N. (2022). AI in planning: Opportunities and challenges and how to prepare. Conclusions and recommendations from APA’s “AI in planning” foresight community. American Planning Association.

- Anguelovski, I., Irazábal‐Zurita, C., & Connolly, J. J. (2019). Grabbed urban landscapes: Socio‐spatial tensions in green infrastructure planning in Medellín. International Journal of Urban and Regional Research, 43(1), 133–156. https://doi.org/10.1111/1468-2427.12725

- Araujo, T., Helberger, N., Kruikemeier, S., & De Vreese, C. H. (2020). In AI we trust? Perceptions about automated decision-making by artificial intelligence. AI & Society, 35(3), 611–623. https://doi.org/10.1007/s00146-019-00931-w

- Barkham, R., Bokhari, S., & Saiz, A. (2022). Urban big data: City management and real estate markets. In P. M. Pardalos, S. T. Rassia, & A. Tsokas (Eds.), Artificial intelligence, machine learning, and optimization tools for smart cities: Designing for sustainability (pp. 177–209). Springer.

- Batty, M. (2021). Science and design in the age of COVID-19. Environment and Planning B: Urban Analytics and City Science, 48(1), 3–8.

- Belle, V., & Papantonis, I. (2021). Principles and practice of explainable machine learning. Frontiers in Big Data, 4, 688969. https://doi.org/10.3389/fdata.2021.688969

- Braun, T., Fung, B. C., Iqbal, F., & Shah, B. (2018). Security and privacy challenges in smart cities. Sustainable Cities and Society, 39, 499–507. https://doi.org/10.1016/j.scs.2018.02.039

- Brenman, M., & Sanchez, T. W. (2012). Planning as if people matter: Governing for social equity. Island Press.

- Chang, V. (2021). An ethical framework for big data and smart cities. Technological Forecasting and Social Change, 165, 120559. https://doi.org/10.1016/j.techfore.2020.120559

- Corbett-Davies, S., & Goel, S. (2018). The measure and mismeasure of fairness: A critical review of fair machine learning. arXiv Preprint arXiv:1808.00023.

- Cugurullo, F. (2020). Urban artificial intelligence: From automation to autonomy in the smart city. Frontiers in Sustainable Cities, 2, 38. https://doi.org/10.3389/frsc.2020.00038

- Daniel, C. (2023). ChatGPT: Implications for planning. PAS QuickNotes 101. American Planning Association.

- De Cremer, D., & Kasparov, G. (2022). The ethical AI—paradox: Why better technology needs more and not less human responsibility. AI and Ethics, 2(1), 1–4. https://doi.org/10.1007/s43681-021-00075-y

- Drummond, W. J., & French, S. P. (2008). The future of GIS in planning: Converging technologies and diverging interests. Journal of the American Planning Association, 74(2), 161–174. https://doi.org/10.1080/01944360801982146

- Du, J., Ye, X., Jankowski, P., Sanchez, T. W., & Mai, G. (2023). Artificial intelligence enabled participatory planning: A review. International Journal of Urban Sciences, 28(2), 183–210. https://doi.org/10.1080/12265934.2023.2262427

- Engin, Z., van Dijk, J., Lan, T., Longley, P. A., Treleaven, P., Batty, M., & Penn, A. (2020). Data-driven urban management: Mapping the landscape. Journal of Urban Management, 9(2), 140–150. https://doi.org/10.1016/j.jum.2019.12.001

- Eubanks, V. (2018). Automating inequality: How high-tech tools profile, police, and punish the poor. St. Martin’s Press.

- Faber, J. W. (2020). We built this: Consequences of New Deal era intervention in America’s racial geography. American Sociological Review, 85(5), 739–775. https://doi.org/10.1177/0003122420948464

- Fainstein, S. S. (2010). The just city. Cornell University Press.

- Ferrer, X., van Nuenen, T., Such, J. M., Cote, M., & Criado, N. (2021). Bias and discrimination in AI: A cross-disciplinary perspective. IEEE Technology and Society Magazine, 40(2), 72–80. https://doi.org/10.1109/MTS.2021.3056293

- Fiske, S. T., & Taylor, S. E. (2013). Social cognition: From brains to culture. Sage.

- Forester, J. (1989). Planning in the face of power. University of California Press.

- Gomez, A., & DeAngelis, J. (2022). Digitalization and implications for planning. American Planning Association.

- Hersperger, A. M., & Fertner, C. (2021). Digital plans and plan data in planning support science. Environment and Planning B: Urban Analytics and City Science, 48(2), 212–215. https://doi.org/10.1177/2399808320983002

- Hollander, J. B., Potts, R., Hartt, M., & Situ, M. (2020). The role of artificial intelligence in community planning. International Journal of Community Well-Being, 3(4), 507–521. https://doi.org/10.1007/s42413-020-00090-7

- Kashef, M., Visvizi, A., & Troisi, O. (2021). Smart city as a smart service system: Human-computer interaction and smart city surveillance systems. Computers in Human Behavior, 124, 106923. https://doi.org/10.1016/j.chb.2021.106923

- Katyal, S. K. (2019). Private accountability in the age of artificial intelligence. UCLA Law Review, 66, 54.

- Kitchin, R. (2016). The ethics of smart cities and urban science. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences, 374(2083), 20160115. https://doi.org/10.1098/rsta.2016.0115

- Kusner, M. J., Loftus, J., Russell, C., & Silva, R. (2017). Counterfactual fairness. In I. Guyon, U. Von Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, & R. Garnett (Eds.), Advances in neural information processing systems (Vol. 30, pp. 1–11). Curran Associates, Inc.

- Lauradoux, C., Curelariu, T., & Lodie, A. (2023, February 6). Re-identification attacks and data protection law. https://ai-regulation.com/re-identification-attacks-and-data-protection-law/

- Lorinc, J. (2022). Dream states: Smart cities, technology, and the pursuit of urban utopias. Coach House Books.

- Lovell, R. E., Klingenstein, J., Du, J., Overman, L., Sabo, D., Ye, X., & Flannery, D. J. (2023). Using machine learning to assess rape reports: Sentiment analysis detection of officers “signaling” about victims’ credibility. Journal of Criminal Justice, 88, 102106. https://doi.org/10.1016/j.jcrimjus.2023.102106

- Luusua, A., Ylipulli, J., Foth, M., & Aurigi, A. (2023). Urban AI: Understanding the emerging role of artificial intelligence in smart cities. AI & Society, 38(3), 1039–1044. https://doi.org/10.1007/s00146-022-01537-5

- Machin, J., Batista, E., Martínez-Ballesté, A., & Solanas, A. (2021). Privacy and security in cognitive cities: A systematic review. Applied Sciences, 11(10), 4471. https://doi.org/10.3390/app11104471

- Mayson, S. G. (2018). Bias in, bias out. Yale Law Journal, 128, 2218.

- Moroni, S. (2020). The role of planning and the role of planners: Political dimensions, ethical principles, communicative interaction. Town Planning Review, 91(6), 563–576. https://doi.org/10.3828/tpr.2020.85

- Noble, S. U. (2018). Algorithms of oppression. New York University Press.

- Nordell, J. (2021). The end of bias: A beginning: The science and practice of overcoming unconscious bias. Metropolitan Books.

- O’Gieblyn, M. (2022). God, human, animal, machine: Technology, metaphor, and the search for meaning. Anchor.

- O’Neil, C. (2016). Weapons of math destruction: How big data increases inequality and threatens democracy. Broadway Books.

- Peng, Z. R., Lu, K. F., Liu, Y., & Zhai, W. (2023). The pathway of urban planning AI: From planning support to plan-making. Journal of Planning Education and Research. Advance online publication. https://doi.org/10.1177/0739456X231180568

- Phillips, C., & Jiao, J. (2023, May). Artificial intelligence & smart city ethics: A systematic review [Paper presentation]. 2023 IEEE International Symposium on Ethics in Engineering, Science, and Technology (ETHICS) (pp. 1–5). IEEE. https://doi.org/10.1109/ETHICS57328.2023.10154961

- Rogers, E. M., Singhal, A., & Quinlan, M. M. (2014). Diffusion of innovations. In D. W. Stacks, M. B. Salwen, & K. C. Eichhorn (Eds.), An integrated approach to communication theory and research (pp. 432–448). Routledge.

- Rosário, A. T., & Dias, J. C. (2022). Sustainability and the digital transition: A literature review. Sustainability, 14(7), 4072. https://doi.org/10.3390/su14074072

- Roselli, D., Matthews, J., & Talagala, N. (2019, May). Managing bias in AI [Paper presentation]. In Companion Proceedings of the 2019 World Wide Web Conference (pp. 539–544). https://doi.org/10.1145/3308560.3317590

- Sanchez, T. W. (2023a). Planning on the verge of AI, or AI on the verge of planning. Urban Science, 7(3), 70. https://doi.org/10.3390/urbansci7030070

- Sanchez, T. W. (2023b). Planning with artificial intelligence (Planning Advisory Service Report 604). American Planning Association.

- Sanchez, T. W., Shumway, H., Gordner, T., & Lim, T. (2022). The prospects of artificial intelligence in urban planning. International Journal of Urban Sciences, 27(2), 179–194. https://doi.org/10.1080/12265934.2022.2102538

- Schwartz, R., Vassilev, A., Greene, K., Perine, L., Burt, A., & Hall, P. (2022). Towards a standard for identifying and managing bias in artificial intelligence (NIST Special Publication 1270). National Institute of Standards and Technology. https://doi.org/10.6028/NIST.SP.1270

- Schweitzer, L. A., & Afzalan, N. (2017). 09 F9 11 02 9D 74 E3 5B D8 41 56 C5 63 56 88 C0: Four reasons why AICP needs an open data ethic. Journal of the American Planning Association, 83(2), 161–167. https://doi.org/10.1080/01944363.2017.1290495

- Shahbazi, N., Lin, Y., Asudeh, A., & Jagadish, H. V. (2023). Representation bias in data: A survey on identification and resolution techniques. ACM Computing Surveys, 55(13s), 1–39. https://doi.org/10.1145/3588433

- Sharma, R. (2023). Promoting social inclusion through social policies. Capacity Building and Youth Empowerment, 76, 617.

- Shu, K., Sliva, A., Wang, S., Tang, J., & Liu, H. (2017). Fake news detection on social media: A data mining perspective. ACM SIGKDD Explorations Newsletter, 19(1), 22–36. https://doi.org/10.1145/3137597.3137600

- Smallwood, R. F. (2019). Information governance: Concepts, strategies and best practices. John Wiley & Sons.

- Son, T. H., Weedon, Z., Yigitcanlar, T., Sanchez, T. W., Corchado, J. M., & Mehmood, R. (2023). Algorithmic urban planning for smart and sustainable development: Systematic review of the literature. Sustainable Cities and Society, 94, 104562. https://doi.org/10.1016/j.scs.2023.104562

- Sue, D. W. (Ed.). (2010). Microaggressions and marginality: Manifestation, dynamics, and impact. John Wiley & Sons.

- Veale, M., & Binns, R. (2017). Fairer machine learning in the real world: Mitigating discrimination without collecting sensitive data. Big Data & Society, 4(2), 205395171774353. https://doi.org/10.1177/2053951717743530

- Wachs, M. (Ed.). (1985). Ethics in planning. Rutgers University Press.

- Wheeler, S. (2013). Planning for sustainability: Creating livable, equitable and ecological communities. Routledge.

- Xia, L., Semirumi, D. T., & Rezaei, R. (2023). A thorough examination of smart city applications: Exploring challenges and solutions throughout the life cycle with emphasis on safeguarding citizen privacy. Sustainable Cities and Society, 98, 104771. https://doi.org/10.1016/j.scs.2023.104771

- Ye, X., Du, J., Han, Y., Newman, G., Retchless, D., Zou, L., Ham, Y., & Cai, Z. (2023a). Developing human-centered urban digital twins for community infrastructure resilience: A research agenda. Journal of Planning Literature, 38(2), 187–199. https://doi.org/10.1177/08854122221137861

- Ye, X., Newman, G., Lee, C., Van Zandt, S., & Jourdan, D. (2023b). Toward urban artificial intelligence for developing justice-oriented smart cities. Journal of Planning Education and Research, 43(1), 6–7. https://doi.org/10.1177/0739456X231154002

- Yigitcanlar, T., Corchado, J. M., Mehmood, R., Li, R. Y. M., Mossberger, K., & Desouza, K. (2021). Responsible urban innovation with local government artificial intelligence (AI): A conceptual framework and research agenda. Journal of Open Innovation: Technology, Market, and Complexity, 7(1), 71. https://doi.org/10.3390/joitmc7010071

- Yigitcanlar, T., Desouza, K. C., Butler, L., & Roozkhosh, F. (2020). Contributions and risks of artificial intelligence (AI) in building smarter cities: Insights from a systematic review of the literature. Energies, 13(6), 1473. https://doi.org/10.3390/en13061473

- Yigitcanlar, T., Kankanamge, N., Regona, M., Ruiz Maldonado, A., Rowan, B., Ryu, A., DeSouza, K., Corchado, J. M., Mehmood, R., & Li, R. Y. M. (2020). Artificial intelligence technologies and related urban planning and development concepts: How are they perceived and utilized in Australia? Journal of Open Innovation: Technology, Market, and Complexity, 6(4), 187. https://doi.org/10.3390/joitmc6040187

- Zuboff, S. (2019). Surveillance capitalism and the challenge of collective action. New Labor Forum, 28(1), 10–29. https://doi.org/10.1177/1095796018819461