?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

We consider a generalization of standard vector optimization which is called vector optimization with variable ordering structures. The problem class under consideration is characterized by a point-dependent proper cone-valued mapping: here, the concept of K-convexity of the incorporated mapping plays an important role. We present and discuss several properties of this class such as the cone of separations and the minimal variable K-convexification. The latter one refers to a general approach for generating a variable ordering mapping for which a given mapping is K-convex. Finally, this approach is applied to a particular case.

1. Introduction

In this paper, we present some properties of a K-convex mapping in variable ordering settings, that is, a vector mapping such that

(1)

(1) for all

and all

, where C is a convex set and

is a proper cone-valued mapping. This class of mappings arises in vector optimization with a variable ordering structure, given as

(2)

(2) which consists in finding a point

such that

for all

(see [Citation1]). We refer to Definition 2.3, where a proper cone-valued mapping, or synonymously variable ordering cone mapping, is defined. The K-convexity of a mapping has been extensively studied in standard vector optimization, in particular, in the context of convergence results for numerical solution methods [Citation2–5]. The K-convexity of F in (Equation2

(2)

(2) ) allows to give a sufficient first-order optimality condition [Citation1]. Furthermore, for the (generalized) concept of vector optimization with variable ordering structures, recent results on the convergence of solution methods can be found in [Citation1,Citation6]. We also mention [Citation7], where a variable ordering setting for set-valued optimization was introduced and studied.

Several applications of Problem (Equation2(2)

(2) ) arise, e.g. in medical diagnosis and portfolio optimization [Citation8,Citation9]; for a summary of these applications, we refer to [Citation10, Section 1.3.1]. We briefly expose here an application in medical diagnosis. After obtaining information from images, the data are transformed into another presentation and, based on that, the diagnosis is made. In order to determine the best transformation from the original data to the desired pattern, different criteria for measuring can be used which lead to the optimization of a vector of functions. It is well known that the solution of that model, which uses a classical weighting technique, may yield inadequate results. However, if the set of weights is point-dependent, then better results are reported [Citation10].

The goal of this paper is twofold. Firstly, we define the so-called cone of separations and relate its properties to K-convexity; in particular, under certain assumptions, the set of K-convex mappings is reduced to the class of affine functions. Secondly, we introduce a particular cone-valued mapping that provides a theoretical approach for obtaining K-convex mappings. This particular mapping is called minimal variable K-convexification. We study several of its properties, for example, the Lipschitz continuity of related cone generator mappings.

This paper is organized as follows. Section 2 contains basic notation and definitions as well as some preliminary results. Section 3 presents the concept of the cone of separations. Section 4 discusses the main results of this paper: a special cone-valued mapping, the minimal variable K-convexification, is defined and corresponding properties are shown. In Section 5, this approach is applied to a particular case, and Section 6 gives some conclusions.

2. Notations and preliminary results

Throughout this paper, we will use the following standard notations. The inner product in is denoted by

and the Euclidian norm by

. The ball and the sphere centred at

with radius r>0 are

and

, respectively. The set

represents the set of k-times continuously differentiable mappings

with derivative

and Hessian

at a point

. For a set

, let

,

,

and

denote the set of its interior points, its convex hull, its boundary and its closure, respectively.

The following definition recalls some well-known notations related to cones (see, e.g. [Citation11]).

Definition 2.1

A non-empty set

is called a cone if

for all

and all real numbers

.

A cone K is called solid if

.

A cone K is called pointed if

.

A cone K is called proper if it is solid, pointed and a convex closed set.

The dual cone

of a cone K is the set

Let

be a given set. The intersection of all convex cones containing the set A is called the convex conic hull of A and is denoted by

.

A set A is called a generator of a cone K if

.

It is well known that a proper cone in defines a partial ordering in

(see, e.g. [Citation11, p. 43]). The following lemma summarizes some known results on cones.

Lemma 2.1

A convex closed cone

is pointed, if and only if there exist

and a real number

such that

for all

.

A cone

is proper if and only if

is proper.

Let

be convex closed cones and assume that

is pointed. Then, we have

if and only if there exists

such that

for all

,

, and

for all

.

Proof.

For the proof of (i), (ii) and (iii), see [Citation11, Subsection 2.6.1], [Citation12, Subsection 2.7.2] and [Citation13, Theorem 3.22], respectively.

In the following, we will sometimes consider a set-valued mapping and its graph

The next definition recalls two well-known concepts [Citation14,Citation15].

Definition 2.2

Let two nonempty sets

be given. The directed Hausdorff distance between A and B is given by

Moreover, the Hausdorff distance between A and B is defined as

A set-valued mapping

is called Lipschitz continuous on C if there exists a real number

such that

for all

.

As mentioned above, in this paper, we are interested in variable ordering cone mappings, which are a particular class of set-valued mappings and whose definition is recalled in the following, see [Citation1,Citation10].

Definition 2.3

Let a convex set , a set-valued mapping

and a mapping

be given.

K is said to be a cone-valued mapping if

is a cone for all

.

K is said to be a proper cone-valued mapping (or, synonymously, variable ordering cone mapping) if

is a proper cone for all

.

Assume that K is a cone-valued mapping and that

is closed and convex for all

. The mapping F is called K-convex on C if

(3)

(3) for all

and all

.

Our interest in proper cone-valued mappings is motivated by the fact that they provide a variable ordering structure, see [Citation1,Citation10]. That is why we also call them variable ordering cone mappings. In the concluding lemma of this section, we characterize K-convexity for the differentiable case.

Lemma 2.2

[Citation1,Citation6]

Let the set C and the mappings K and F be given as in the previous definition. Furthermore, assume that , K is a cone-valued mapping such that

is closed and convex for all

, and that F is continuously differentiable on an open neighbourhood of C. Then , we have the following:

If

(4)

(4) for all

, then F is K-convex on C.

If F is K-convex on C and

is closed, then (Equation4

(4)

(4) ) holds for all

.

3. Cone of separations on

Throughout this section, we assume the following.

is a proper cone-valued mapping and

is closed.

is a K-convex mapping (on

).

are arbitrarily chosen points.

According to Lemma 2.2, these assumptions imply that

(5)

(5) As already mentioned, the motivation of this paper is closely related to the consideration of proper cone-valued mappings. These mappings have the property that

,

are closed cones. Obviously, the supposed closedness of

implies already the closedness of

,

. In order to avoid confusion concerning the motivation of this paper, we will sometimes assume that

is closed and use the notation of a proper cone-valued mapping. The following definition is basic for this section.

Definition 3.1

The set

is called the cone of separations for

.

Obviously, it holds that whenever

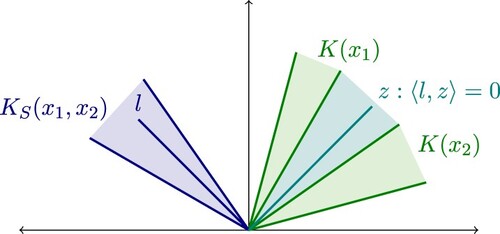

. In Figure , a cone of separations is illustrated assuming m = 2 and

.

The goal of this section is to relate some properties of the mapping F to those of the cone of separations. The next lemma presents some properties of .

Lemma 3.1

If

, then the hyperplane

separates the cones

and

.

is a convex, closed and pointed cone.

If

, then

is a proper cone.

It is

(6)

(6)

Proof.

(ii) It is easily seen that is convex and closed. Suppose for a moment that

is not pointed, that is, there exists

with

From the previous expression, it follows that

for all

and all

. However, this is not possible since

and

are solid and, therefore, span the whole space

.

(iii) By (ii), we only have to show that is solid. By Lemma 2.1(iii) and since

and

are pointed, there exist

such that

for all

and all

. Hence,

The statements (i) and (iv) are obvious.

The first theorem in this section relates to the Jacobian of F.

Theorem 3.1

If , then

.

Proof.

Let and suppose that

. By definition, we have

(7)

(7) for all

and

. Hence, by the K-convexity of F, (Equation5

(5)

(5) ) and (Equation7

(7)

(7) ), we get for all

that

and, therefore,

Now, let a real number

be arbitrarily chosen. Substituting

, we get

By

, letting

yields a contradiction since the left-hand side of the latter inequality is finite, while its right-hand-side becomes unbounded. This completes the proof.

As a consequence, we obtain the following corollary.

Corollary 3.1

For all

and all

we obtain

If

is a solid cone, then

.

Proof.

(i) By (Equation5(5)

(5) ), the K-convexity of F implies

for all

. Combining this with (Equation7

(7)

(7) ) it follows that

and therefore,

(8)

(8) By Theorem 3.1, we obtain the desired result.

(ii) Since is a solid cone, there exist

and a real number

such that

Then, there exist linearly independent vectors

and by Theorem 3.1, we have

The linear independence of

implies

.

We conclude this section by presenting its main result.

Theorem 3.2

Let be an open and connected set. If there exists

such that

for all

, then

is an affine mapping.

Proof.

By Lemma 3.1(iii), is a solid cone for all

and by Corollary 3.1(ii), we get the system

whose solution is

for some

.

Note that the fact that F has to be an affine mapping, under the assumptions of Theorem 3.2, is a surprising result given the flexibility of variable order structures.

4. Minimal variable K-convexification

In this section, we study a particular cone-valued mapping which provides a theoretical approach to obtain K-convex mappings. For a more practical approach, we refer to [Citation16], where Bishop-Phelps and simplicial cones are used. Throughout this section assume the following:

is a convex set with

.

is a continuously differentiable mapping on an open neighbourhood of C.

Definition 4.1

The set

is defined as the family of all cone-valued mappings

with the following properties:

is closed and convex for all

.

for all

.

The mapping

is defined by

(9)

(9) and it is called the minimal variable K-convexification of F on C.

It is easily seen that is a convex and closed cone for all

, and that, by Lemma 2.2(i), F is

-convex on C. Note that

need not to be pointed. According to the previous definition, the minimality of

is defined with respect to all

.

Lemma 2.2(i) implies that F is K-convex on C for all . Moreover, Lemma 2.2(ii) yields that

whenever the assumptions of Lemma 2.2 are fulfilled, that is, whenever F is K-convex on C,

is closed and

is convex for every

.

The next lemma presents a specific form for the minimal variable K-convexification of F on C. For this define the mapping by

and let

.

Lemma 4.1

For all we have

.

Proof.

By (ii) in Definition 4.1, we have for all and all

that

and therefore,

for all

. Since

is a convex and closed cone, we get

for all

.

On the other hand, is a closed and convex cone for all

and

for all

. Therefore,

for all

which completes the proof.

Following [Citation1], one is furthermore interested in conditions which are related to the existence of a proper cone such that

(10)

(10) for all

. The following lemma presents a necessary condition for this property.

Lemma 4.2

Assume that there exists a proper cone such that (Equation10

(10)

(10) ) holds for all

. Then, there exists

such that the function

given as

is convex on C.

Proof.

Since for all

, by (ii) in Definition 4.1, we have

(11)

(11) for all

. Applying Lemma 2.1(i), there exist

and

such that

(12)

(12) for all

. By (Equation11

(11)

(11) ) and (Equation12

(12)

(12) ), we get

for all

. Obviously, the latter means that

is convex.

Our next goal is to present a sufficient condition for the existence of a proper cone such that (Equation10

(10)

(10) ) holds for all

. For this, we assume in the remainder of this section that the set C is compact and that the mapping F is twice continuously differentiable on an open neighbourhood of C. Furthermore, for

define the function

where

.

Lemma 4.3

Assume that there exists a vector such that

is positive definite (denoted by

) for all

. Then, there exist real numbers

such that

for

whenever

.

Proof.

Suppose contrarily that there exist two sequences such that

and that

(13)

(13) Note that

, since C is compact. Obviously, we have

A componentwise (

denote the components of F) second-order Taylor expansion separately for each of the both parts of the latter fraction yields

(14)

(14) By

, the right-hand side in (Equation14

(14)

(14) ) is finite which contradicts (Equation13

(13)

(13) ).

The next theorem presents a sufficient condition for the existence of a proper cone such that (Equation10

(10)

(10) ) holds for all

. The cone

, as defined in (Equation16

(16)

(16) ), will be later used in Lemma 4.5 as well as in Proposition 4.2.

Theorem 4.1

Assume that there exists a vector such that

for all

. Then, there exists a proper cone

such that

for all

.

Proof.

By Lemma 4.3, there exist real numbers and

such that

for

whenever

. Furthermore, since

for all

, it follows that

for all

with

. Hence, the fraction

is defined for all

with

, and the compactness of C implies that there exists a real number M>1 such that

(15)

(15) for all

with

. Now, define the set

(16)

(16) Since M>1, it is easily seen that

and, therefore,

is solid. Moreover,

is obviously a proper cone. By (Equation15

(15)

(15) ), we have

for all

with

and, therefore,

for all

. Finally, by Lemma 4.1, we get

for all

which completes the proof.

Note that if m = 2 and the assumptions of Theorem 4.1 hold, then we can obtain a formula for as follows. Let

with

, where w is given as in Theorem 4.1. Moreover, for

let

(17)

(17)

(18)

(18) Then, we have

(19)

(19) Obviously, finding

and

is not trivial and a global optimization method must be used (see, e.g. [Citation17, Subsection 1.1.4]).

Example 4.1

Let n = m = 2, and

Take

and fix

. By using (Equation17

(17)

(17) ) and (Equation18

(18)

(18) ), we compute

,

for

and list the corresponding results in the following table:

Table

Next, we deal with the Lipschitz continuity of two generators of . For this we recall the assumptions given just before Lemma 4.3, namely, that C is a compact set and F is twice continuously differentiable. We start with the following lemma.

Lemma 4.4

Let

be nonempty compact sets. Then, there exist

and

such that

as well as

for all

.

There exists

such that

for all

.

Let

. Then, it holds that

and the equality holds if and only if

.

Proof.

The compactness of A and B implies (i). Since is continuously differentiable and C is compact, it holds that

is Lipschitz continuous on

. Hence, there exists

such that

for all

. For proving (iii) consider

Now, we prove the Lipschitz continuity of the two generators.

Proposition 4.1

Let the set-valued mapping be given as

Then, G is Lipschitz continuous on C.

Proof.

Obviously, we only have to show that there exists such that

for all

. Since

is a compact set for all

, by Lemma 4.4 (i), it follows that there exists

such that

(20)

(20) for all

. Moreover, there exist

,

,

with

(21)

(21) Choosing

(22)

(22) and substituting (Equation21

(21)

(21) ) and (Equation22

(22)

(22) ) into (Equation20

(20)

(20) ), we get

(23)

(23) Then, the statement follows by applying the triangle inequality and Lemma 4.4 (ii).

The generator used in the next result is in general not Lipschitz continuous on C. However, we present a sufficient condition for its Lipschitz continuity on a subset of C.

Lemma 4.5

Let , M>1 and the cone

be given as in (Equation16

(16)

(16) ). Assume that

,

,

and

Then, we have

Proof.

Let Φ be an orthogonal matrix whose first row is w. Then,

As mentioned just before Lemma 4.5, the next result yields a sufficient condition for a generator to be Lipschitz continuous (only) on a subset . Here, we have to ‘cut out’ a subset from

. We refer also to Remark 4.1, where we discuss how, under certain conditions, this result could be used.

Proposition 4.2

Let be a compact convex set. Assume that for some

it holds that

for all

, and that there exists

such that

(24)

(24) for all

. Then, the set-valued mapping

, given as

is Lipschitz continuous on A.

Proof.

We will prove that G is locally Lipschitz continuous for any arbitrarily chosen . Then, the Lipschitz continuity of G on A follows from the convexity and the compactness of A. By Theorem 4.1, there exists a proper cone

such that

where

is given as in (Equation16

(16)

(16) ) for certain M>1. Let

be given as in Lemma 4.4 (ii). Choose

By Lemma 4.4 (ii), we have

(25)

(25) whenever

for

. Next, we will show that

(26)

(26) Analogously to the proof of Proposition 4.1, by (Equation24

(24)

(24) ), we get

(27)

(27) with

(28)

(28) Note that in (Equation28

(28)

(28) ), the two inequalities follow from (Equation24

(24)

(24) ) and (Equation25

(25)

(25) ), respectively. By Lemma 4.4 (iii) and (Equation27

(27)

(27) ), we get

(29)

(29) Moreover, by Lemma 4.5 and (Equation28

(28)

(28) ), it follows that

(30)

(30) Finally, applying the triangle inequality, Lemma 4.4 (ii) and using (Equation30

(30)

(30) ) in (Equation29

(29)

(29) ), we obtain (Equation26

(26)

(26) ).

Remark 4.1

Proposition 4.2 can be exploited in the following way. Let be a set for which the assumptions of Proposition 4.2 are fulfilled. Choose a cone-valued mapping

with

where

is a fixed cone satisfying

for

and

for

. Define the set-valued mapping

Since

is bounded, the Hausdorff distance is a metric for the family of compact subsets of

(see, e.g. [Citation15, Section 4C]). A moment of reflection shows that

is continuous on C whenever its codomain is endowed with this metric. Moreover, by Proposition 4.2, it follows that

is Lipschitz continuous on A and, by definition, that

is Lipschitz continuous on

. Consequently,

is Lipschitz continuous on C. We will come back to this approach at the end of Section 5. Of course, such a choice of

is not always possible. Necessary or sufficient conditions for its existence will be considered in future research.

5. A particular case

In this section, we study a particular case and apply to it the general approach described in the previous sections. Specifically, we consider an interval and a mapping

,

. Moreover, we assume that

,

, where

and

,

denote the first and second derivative at

, respectively. For this case, we obtain a particular expression for

. First, we define four auxiliary functions.

Lemma 5.1

Let and define

Then,

is strictly decreasing, and

is strictly increasing.

Proof.

For we get

for some

. Since

,

, it holds that

is strictly increasing. Analogously, we obtain that

is strictly decreasing.

Since , Lemma 5.1 yields for

that

for all

. Moreover, by Lemma 5.1, both

and

are injective functions. Therefore, the functions

and

, given by

are well defined. The following two results concern the relationship between the latter two functions and

.

Lemma 5.2

The right derivatives of and

at x = 0 are

Proof.

We show it for . Since

, we have

(31)

(31) After applying L'Hôpital's rule twice we get the desired result. The proof runs analogously for

.

Lemma 5.3

If is an increasing function on

, then

is concave and

is convex.

Proof.

For showing the convexity of , we prove that

(32)

(32) for all

. For

and

, after a straightforward calculation, we get that

Since

, in order to obtain (Equation32

(32)

(32) ), we use that

By Cauchy's mean value theorem, the latter inequality holds since

, and

is an increasing function on

. The concavity of

is obtained analogously.

In the remainder of this section, we assume that is increasing on

. The next result is a consequence of Lemma 5.3.

Corollary 5.1

Let . Then, we have

for all

.

Proof.

If , then for

we have

. By the convexity of

, Lemma 5.2 implies

Hence, we obtain

and, analogously, for

that

A combination of these two results delivers for

that

and a corresponding result for

. This completes the proof.

In the next result, we see the advantage of considering . We show that in this case, the minimal variable K-convexification of F on

can easily and directly be calculated.

Theorem 5.1

Let the mappings and

be given as

and define the set-valued mapping

. Then,

.

Proof.

We distinguish two cases.

Case 1: Let . Then, we have

Hence, it holds that

(33)

(33) Next, we prove that

(34)

(34) Note that

. Now, choose

. Therefore, there exists

such that

. From Corollary 5.1, it follows for

that

and that

Thus, there exists

such that

A moment of reflection shows that the latter equation yields

(35)

(35) Note that in (Equation35

(35)

(35) ) the coefficients of

and

are nonnegative since

,

,

and

. Thus, (Equation34

(34)

(34) ) is shown. By Lemma 4.1, (Equation33

(33)

(33) ) and (Equation34

(34)

(34) ), we get

.

Case 2: Let (analogously for

). Applying L'Hôpital's rule twice, we get

and by

, we get

Moreover, letting

in Corollary 5.1, we obtain

(36)

(36) Using (Equation36

(36)

(36) ) we deduce an expression which is analogous to (Equation35

(35)

(35) ). The remaining part of the proof runs as in Case 1.

Example 5.1

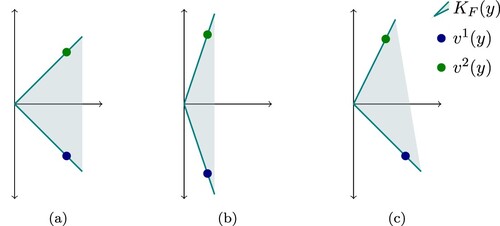

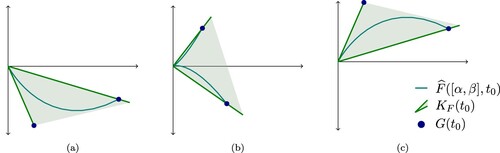

Let ,

and

. By using Theorem 5.1, we have

for

, which is illustrated in Figure .

Figure 3. Application of Theorem 5.1 for with

and

. (a)

, (b)

and (c)

.

Note that the set-valued mapping in Theorem 5.1 is not Lipschitz continuous. Indeed, since

the function

is not continuous. However, we can find a cone-valued mapping

close to

such that its generator mapping is Lipschitz continuous. As an example, fix

, define

and set

Analogously to Proposition 4.2, it can be shown that

is Lipschitz continuous on

. Moreover, it is easy to check that

for all

and that

for all

. This latter construction represents an application of the strategy described in Remark 4.1 to this particular case considered in the current section.

6. Final remarks

In this paper, we considered vector optimization problems with variable ordering structures. They are related to K-convex mappings where K refers to a proper cone-valued mapping. This model has several applications, e.g. in medical diagnosis and portfolio optimization. We defined the cone of separations and showed that under certain assumptions the corresponding K-convex mapping needs to be affine. Note that this is a somehow counter-intuitive property and its geometric meaning need still to be discussed in the future. Moreover, we introduced the concept of the minimal variable K-convexification , presented sufficient conditions for the images of this cone-valued mapping to be contained in a proper cone and proved the Lipschitz continuity of some corresponding generator mappings. Note that we described a theoretical approach for generating a variable ordering mapping for which a given mapping is K-convex. We applied this approach to a particular case; the construction of further applications will be a topic for further research.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Bello Cruz JY, Bouza Allende G. A steepest descent-like method for variable order vector optimization problems. J Optim Theory Appl. 2014;162:371–391.

- Graña Drummond LM, Iusem AN. A projected gradient method for vector optimization problems. Comput Optim Appl. 2004;28(1):5–29.

- Graña Drummond LM, Svaiter BF. A steepest descent method for vector optimization. J Comput Appl Math. 2005;175(2):395–414.

- Luc DT. Theory of vector optimization. New York (NY): Springer; 1989.

- Luc DT, Tan NX, Tinh PN. Convex vector functions and their subdifferential. Acta Math. 1998;23(1):107–127.

- Bello Cruz JY, Bouza Allende G. On inexact projected gradient methods for solving variable vector optimization problems. Optim Eng. 2020. Available from: https://doi.org/10.1007/s11081-020-09579-8

- Durea M, Strugariu R, Tammer C. On set-valued optimization problems with variable ordering structure. J Global Optim. 2015;61:745–767.

- Engau A. Variable preference modeling with ideal-symmetric convex cones. J Global Optim. 2008;42:295–311.

- Wacker M, Deinzer F. Automatic robust medical image registration using a new democratic vector optimization approach with multiple measures. In: Yang GZ, editor. Medical image computing and computer-assisted intervention – MICCAI. New York: Springer; 2009.

- Eichfelder G. Variable ordering structures in vector optimization. New York (NY): Springer; 2014.

- Boyd S, Vandenberghe L. Convex optimization. Cambridge: Cambridge University Press; 2009.

- Dattorro J. Convex optimization & Euclidean distance geometry. Palo Alto (CA): Meboo Publishing USA; 2013.

- Jahn J. Vector optimization: theory, applications and extensions. New York (NY): Springer; 2011.

- Mordukhovich BS. Variational analysis and generalized differentiation. New York (NY): Springer; 2006.

- Rockafellar RT, Wets RJ. Variational analysis. New York (NY): Springer; 1998.

- Bouza Allende G, Hernández Escobar D, Rückmann J-J. Generation of K-convex test problems in variable ordering settings. Rev Inv Oper. 2018;39(3):463–479.

- Pintér JD. Global optimization in action. New York (NY): Springer; 1996.