?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

This work is focussed on computing the Lipschitz upper semicontinuity modulus of the argmin mapping for canonically perturbed linear programs. The immediate antecedent can be traced out from Camacho J et al. [2022. From calmness to Hoffman constants for linear semi-infinite inequality systems. Available from: https://arxiv.org/pdf/2107.10000v2.pdf], devoted to the feasible set mapping. The aimed modulus is expressed in terms of a finite amount of calmness moduli, previously studied in the literature. Despite the parallelism in the results, the methodology followed in the current paper differs notably from Camacho J et al. [2022] as far as the graph of the argmin mapping is not convex; specifically, a new technique based on a certain type of local directional convexity is developed.

1. Introduction and motivation

The main goal of the present paper is to compute the upper semicontinuity modulus of the optimal set (argmin) mapping associated with the parameterized linear programming (LP, in brief) problem given by

(1)

(1) where

is the decision variable, regarded as a column-vector, the prime stands for transposition,

is fixed for each

, and the pair

, with

, is the parameter to be perturbed around a nominal one

. In this way we are dealing with the so-called canonical perturbations (tilt perturbations of the objective function together with the right-hand side – RHS – of the constraints). We consider the feasible set and the optimal set mappings

and

defined as

(2)

(2)

(3)

(3) From now on we identify each problem π with the corresponding parameter

accordingly,

denotes the nominal problem. The space of variables,

, is endowed with an arbitrary norm

, whose dual norm is denoted by

, i.e.

. The parameter space

is endowed with the norm

(since c is identified with the linear functional

, where

.

The current paper is mainly oriented to the computation of the Lipschitz upper semicontinuity modulus of at the nominal parameter

, denoted by

following [Citation1]; see Section 2 for the formal definitions. At this moment let us comment that

provides a semi-local measure of the stability (in fact, a rate of deviation) of the optimal set around the nominal problem

. The term ‘semi-local’ refers to the fact that only parameters π around

are considered, while all elements of

are taken into account. A point-based formula (only depending on the nominal data

for the aimed

is provided in Theorem 4.2 (see also Theorem 4.1) in terms of a finite amount of calmness moduli (of a local nature) of certain feasible set mappings coming from adding new constraints to the system in (Equation1

(1)

(1) ). These calmness moduli can be computed through the point-based formula given in [Citation2, Theorem 4] and recalled in [Citation3, Theorem 3]. The reader is referred to monographs on variational analysis as [Citation4–7] for details about calmness and other Lipschitz-type properties and to [Citation8] for other stability criteria in linear optimization.

The theory of parametric linear optimization goes back to the early 1950s (see, e.g. [Citation9, Citation10]). Some years later a systematic development of stability theory in LP with canonical perturbations emerged. One direction of research was the analysis of semicontinuity and Lipschitz continuity properties based on approaches from variational analysis like Berge's theory or Hoffman's error bounds. In the current parametric context both and

are polyhedral multifunctions; i.e. their graphs,

and

, are finite unions of convex polyhedra. In fact

is a convex polyhedral cone, while the union of polyhedra constituting

comes from considering the different choices of subsets of active indices involved in the Karush–Kuhn–Tucker (KKT) optimality conditions. Hence, as a consequence of a classical result by Robinson [Citation11], both

and

are Lipschitz upper semicontinuous (see Section 2.2 for the formal definition) at any

and

, respectively, provided that

and

are nonempty. The current paper borrows the terminology from [Citation5] or [Citation1], although the Lipschitz upper semicontinuity property, introduced in [Citation11] as upper Lipschitz continuity, has been also popularized under the name outer Lipschitz continuity (see the reference book [Citation4]). In the context of RHS perturbations (where only b is perturbed, c remains fixed) a well-known result establishes that both

and

are Lipschitz continuous relative to their domains (see, e.g. [[Citation12, p. 232],[Citation13, Chapter IX (Sec. 7)],[Citation4, Chapter 3C]]). At this moment we point out that the case of ‘fully perturbed’ problems, when all data (

and

,

) are perturbed, entails notable differences regarding the Lipschitz upper semicontinuity as it is emphasized in Remark 2.3.

The immediate antecedent to this work can be found in [Citation3, Theorems 4 and 6], where the Lipschitz upper semicontinuity modulus of at

,

, is analysed.

Remark 1.1

There exists a striking resemblance between the formula of obtained from [Citation3, Theorems 4 and 6] and the new one, established in Theorem 4.2, of

. Just to show the similar appearance, here we gather both formulae:

where

denotes the calmness modulus (see again Section 2 for the definition) of

at

and

the corresponding calmness modulus of

at

, and

and

are two nonempty finite subsets of extreme points of certain subsets of

and

introduced in (Equation11

(11)

(11) ) and (Equation16

(16)

(16) ), respectively. Despite these formal similarities between the two results, let us emphasize that they both follow different methodologies, mainly due to the fact that

is convex (hence the last part of Theorem 2.1 below applies), while

is not, even when fixing

and allowing only for RHS perturbations. Indeed, as commented above,

is a finite union of convex polyhedra.

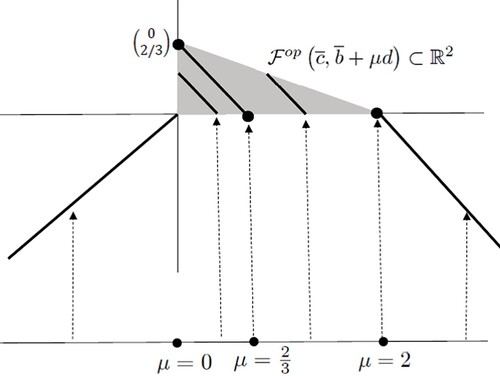

To overcome the drawback coming from the lack of convexity of , the paper appeals to a weaker form of this property. Specifically, Theorem 3.1 shows that a certain local directional convexity property of the graph of

is preserved; indeed, when parameter c remains fixed (

) and b is perturbed in the way

for a fixed direction

and a small

. In order to illustrate this idea, let us consider the following example, where

stands for the convex hull of

.

Example 1.1

Let us consider the problem in

(4)

(4) with

in other words, we are perturbing

in the directions

with

.

It can be easily checked that (see Figure )

In particular

and

belong to

, while the middle point

does not.

Figure 1. Local directional convexity in Example 1.1.

Now we summarize the structure of the paper. Section 2 contains some notation and preliminary results used later on. Section 3 formalizes the announced local directional convexity of . The main result of this section, Theorem 3.1, is applied in Section 4 to establish Lemma 4.1, which constitutes a key step in the process of computing

, leading to Theorem 4.2. Finally, Section 5 includes some conclusions and perspectives. In particular, the so-called Hoffman stability modulus is recalled, which is known to coincide with the Lipschitz upper semicontinuity modulus under the convexity of the graph. This is the case of

, but no longer of

(see Example 5.1). The reader is referred to [Citation3, Citation14–18] for extra details about Hoffman constants from a global (instead of semi-local) approach.

2. Preliminaries

This section introduces some necessary notation and results which are used later on. Given ,

, we use the standard notation

and

for the conical convex hull and linear hull of X respectively, with the convention

(the zero vector of

). Provided that X is convex,

stands for the set of extreme points of X.

Consider a generic multifunction between metric spaces Y and X (with both distances denoted by d). Recall that the graph and the domain of

are respectively given by

and

. Mapping

is said to be calm at

if there exist a constant

and neighbourhoods V of

and U of

such that

(5)

(5) where

, provided that

and

, with the usual convention

and

. Since we are concerned with nonnegative constants, we understand that

. It is well-known (see, e.g. [Citation4, Theorem 3H.3 and Exercise 3H.4]) that neighbourhood V appearing in the definition of calmness is redundant; formally, the calmness of

at

is equivalent to the existence of a constant

and a (possibly smaller) neighbourhood U of

such that

(6)

(6) The latter property is known as the metric subregularity of

at

(recall that

. The calmness modulus of

at

, denoted by

, is the infimum of constants κ such that (Equation5

(5)

(5) ) (equivalently (Equation6

(6)

(6) )) holds for some associated neighbourhoods; this modulus can be written as:

(7)

(7) where

and

is understood as the supremum (maximum, indeed) of all possible sequential upper limits (i.e. with

being replaced with elements of sequences

converging to

as

).

2.1. Lipschitz upper semicontinuity of multifunctions

The current work is focussed on a semi-local Lipschitz-type property: A multifunction is said to be Lipschitz upper semicontinuous (see, e.g. [[Citation1, Definition 2.1(iii)],[Citation3, Section 3]]) at

if there exist a constant

and a neighbourhood V of

such that

(8)

(8) The associated Lipschitz upper semicontinuity modulus at

, denoted by

, is defined as the infimum of constants κ satisfying (Equation8

(8)

(8) ) for some associated V. Clearly V is not redundant here. The following result provides a limiting expression for

.

Proposition 2.1

[Citation3, Proposition 2(i)]

Let be a multifunction between metric spaces and let

, then

The following result establishes the relationship between calmness and Lipschitz upper semicontinuity for generic multifunctions. See Section 5 for additional comments including the so-called Hoffman stability, defined in (Equation22(22)

(22) ).

Theorem 2.1

[Citation3, Corollary 2 and Theorem 4]

Let be a multifunction between metric spaces and let

. We have

(9)

(9) Moreover, equality holds in (Equation9

(9)

(9) ) if Y is a normed space, X is a reflexive Banach space,

is a nonempty convex set, and

is closed.

Remark 2.1

2.2. Lipschitz upper semicontinuity of the feasible set mapping

This subsection deals with the feasible set mapping introduced in (Equation2

(2)

(2) ), which has a closed convex graph and, so, Theorem 2.1 allows us to write

(10)

(10) Going further, the next theorem provides a more computable expression for

as far as it involves a finite amount of calmness moduli. It appeals to the following set of extreme points:

(11)

(11) For details about this construction the reader is addressed to [Citation19, p. 142]. In addition to the following theorem, set

is also a key tool in [Citation20] to provide a point-based formula for the calmness modulus of the optimal value function in linear optimization. This set is known to be always nonempty and finite.

Theorem 2.2

[Citation3, See Theorems 4 and 6]

Let . Then

(12)

(12)

In the sequel, for any ,

represents the set of active indices at x, defined as

In particular, it is appealed to in the definition of the following family of sets appearing in the next remark: given

,

is formed by all subsets

such that system

is consistent (in the variable

in other words,

,

lives in some hyperplane which leaves the remaining coefficient vectors

and the origin,

, in one of its two associated open half-spaces.

Remark 2.2

It is worth mentioning that any calmness modulus, , at any

appearing in (Equation12

(12)

(12) ) can be computed through the point-based formula given in [Citation2, Theorem 4] (see also [Citation3, Theorem 3], as stated in the introduction), which is recalled here for completeness:

(13)

(13) where

represents the distance associated with the dual norm

.

Remark 2.3

The fact of considering RHS perturbations is crucial in our analysis. Observe that in the case when the 's are also perturbed the corresponding feasible set mapping is no longer Lipschitz upper semicontinuous in general. Just consider the example in

with only one constraint:

. Then, for each

, we have

which entails

. Roughly speaking, the previous situation arises from the semi-local nature of this property (i.e. the fact that it involves the whole feasible sets associated with perturbed parameters). Indeed, other variational properties of local character (dealing with parameters and points near the nominal ones) do not change so drastically. For instance, this is the case of the calmness property (see, [Citation2, Theorem 5]).

3. Local directional convexity of

This is an instrumental section oriented to tackle our problem of computing . As

is not convex, we are not allowed to apply the last part of Theorem 2.1, and this fact entails notable differences with respect to the methodology followed in [Citation3]. Specifically, this section is devoted to establish a certain directional-type convexity of the graph of

around

with a fixed

, where only local directional RHS perturbations of the constraints are considered; see Figure for a geometrical motivation. Formally, associated with our nominal problem

, any scalar

, and any direction

with

, we consider the local directional optimal set mapping

given by

(14)

(14) The following lemmas constitute key tools for establishing Theorem 3.1. To start with, we introduce some extra notation: given

,

denotes the so-called minimal KKT subsets of indices at π, defined as

(15)

(15) provided that x is any optimal point of π. For convenience, sometimes

is alternatively denoted by

for

. Let us observe that

is correctly defined since the expression in (Equation15

(15)

(15) ) indeed does not depend on x as it was commented in [Citation20, Remark 2]. Finally, we introduce the counterpart of

when dealing with optimization problems,

(16)

(16) The reader is referred to [Citation20, Section 2.2] for additional details about this set of extreme points. Standard arguments of linear optimization yield

for

.

Lemma 3.1

[Citation20, Lemma 2]

Let converge to

. Then

where

stands for the Painlevé–Kuratowski upper/outer limit as

(see, e.g. [Citation6, Citation7]).

Lemma 3.2

Let . Then there exists

such that for every

with

we have

.

Proof.

Reasoning by contradiction, suppose that there exists a sequence such that

and

for all

. Since

(finite) for all r, we may assume (by taking a subsequence if necessary) that

is constant, say

for all r.

Applying Lemma 3.1, let and, without loss of generality (for an appropriate subsequence, without relabelling), write

, for some

,

For each r,

entails

, which yields

. Moreover,

and D is minimal with respect to this property since it is for any r (recall

). Hence, we attain the contradiction

.

Theorem 3.1

Let and

be as in Lemma 3.2. Then

is convex for all

with

.

Proof.

Let ,

with

. Let us see that

for

. If

, then

and

belong to the same convex set

, and hence clearly

for all

.

From now on, let us assume . First observe that

for all

because of the convexity of

. We distinguish two cases:

Case 1 . Fix any

. Observe that in this case

. Let us prove that

. Take

(because of Lemma 3.2 and the choice of ε). In particular,

. Therefore

since, for any

,

Hence, as we are not perturbing

, KKT optimality conditions ensure

.

Case 2 . Fix again any

and let us see that

.

Start by choosing an arbitrary and define

Reasoning as in the previous case, with

and

playing the role of

and λ, respectively, we deduce

Appealing to the convexity of the previous optimal set, define

A routine computation yields the existence of scalars

and

such that

Indeed, they are unique and their explicit expressions are

Since

, there exists

, in particular,

. Hence, for any

, taking into account the fact that

, one has

In this way,

and again the KKT optimality conditions yield

. In other words,

.

The following example illustrates the previous results.

Example 3.1

Example 1.1 revisited

Let us consider the parameterized problem (Equation4(4)

(4) ) in

with

and let us see that the statement of Lemma 3.2 fulfils by taking

. First observe that

(17)

(17) Reasoning by contradiction, assume that there exists

such that

,

and

. It is clear that

according to KKT conditions (

). Then, by distinguishing cases we attain a contradiction: assume that

, then

and

, which yields

which contradicts

. Hence

. Then, necessarily

(since

, but again

and

yield the contradiction

.

From (Equation17(17)

(17) ) one easily derives

(18)

(18) Indeed, given any

one can check

Therefore, for any

, with

, and

, we have

(19)

(19) which clearly entails the convexity of

for each

with

and each

.

Finally, observe that (Equation17(17)

(17) ) is no longer true for

since

However, (Equation18

(18)

(18) ) still holds by replacing

with

, since the only way to preclude

is having

which implies

; although the description of

would be different from that of (Equation19

(19)

(19) ) when

.

4. Lipschitz upper semicontinuity of the optimal set mapping

This section tackles the final goal of the current paper: the computation of the Lipschitz upper semicontinuity modulus for the optimal set mapping introduced in (Equation3

(3)

(3) ). First, let us see that perturbations of c are redundant when looking for the aimed modulus. Formally, we consider

given by

Proposition 4.1

[Citation20, Lemma 4]

There exists such that

whenever

satisfy

.

Corollary 4.1

Let . We have

(20)

(20)

Proof.

Inequality follows directly from the definitions. Appealing to Propositions 2.1 and 4.1 we have

From now on, appealing to the local directional convexity of the graph of under RHS perturbations, we adapt some arguments used in [Citation3] to the current setting. The following technical lemma uses Theorem 3.1 to provide an upper bound on the variation rate of optimal solutions with respect to RHS perturbations.

Lemma 4.1

Let . Let

be as in Lemma 3.2, take

with

and let

be a projection (a best approximation) of x in

. Then

Proof.

The case is trivial from

. Assume

and let

. With the notation (Equation14

(14)

(14) ),

and

, which entails, for each

, by applying Theorem 3.1,

Moreover, from [Citation3, Lemma 1] we have that

is also a best approximation of

in

i.e.

. Consequently,

Hence, letting

we obtain the aimed inequality.

Proposition 4.2

Let , then

Proof.

Inequality ‘’ comes from Theorem 2.1. Let us prove the nontrivial inequality

. Take an

as in Lemma 3.2 (and Theorem 3.1). We can write (recall Corollary 4.1)

where we have applied Lemma 4.1 and, as in that lemma, for each

, with

,

is a projection of x in

.

Consequently,

Next we show that the supremum in the previous proposition may be reduced to a finite subset of points, indeed to points in . To do that we appeal to the following theorem.

Theorem 4.1

[Citation21, Corollary 4.1]

Let with

. Then

(21)

(21) where, for each

,

is defined by

and

.

Remark 4.1

Observe that each is nothing else but a feasible set mapping of the same type as

but associated with an enlarged system. Consequently, Remark 2.2 also applies here for computing each

, and therefore

.

Finally, by gathering the previous results of this section, we can establish our main goal in the following theorem. We point out that this theorem provides an implementable procedure for computing the aimed as far as it is written in terms of a finite amount of calmness moduli of feasible set mappings (as the previous theorem says) and these calmness moduli can be computed via formula (Equation13

(13)

(13) ).

Theorem 4.2

Let , then

Proof.

Starting from the equalities of Proposition 4.2 and Theorem 4.1, we can write

In the third equality we have used the fact that

for all

(see [Citation21, Proposition 4.1]), while the fourth comes from Theorems 2.1 and 2.2 by taking Remark 4.1 into account, together with the fact that the role played by

in Theorem 2.2 is now played by

The last equality comes again from Theorem 4.1.

5. Conclusions and perspectives

At this moment we recall the parallelism between both formulae of and

, for

, pointed out in Remark 1.1. The first one was established in [Citation3] by strongly appealing to the convexity of

, while a local directional convexity property of

has been used here to develop the second one. Indeed, [Citation3] analyses another Lipschitz-type property, called there Hoffman stability, which is commented in the next subsection.

5.1. On the Hoffman stability of the optimal set

We say that , with Y and X being metric spaces, is Hoffman stable at

if there exists

such that

for all

and all

, or, equivalently, if

(22)

(22) The associated Hoffman modulus at

,

, is the infimum of constants κ satisfying (Equation22

(22)

(22) ) and may be expressed as

Theorem 4 in [Citation3], again appealing to the convexity of

, establishes the equality

which is no longer true for our optimal set mapping

, neither for

(recall that

and

are not convex), as the following example shows.

Example 5.1

Consider the parameterized problem of Example 1.1 with being endowed with the Euclidean norm, and consider the following nominal parameters:

The reader can easily check that

Thus, an ad hoc routine calculation gives

Of course Theorem 4.2 can be alternatively used for this computation.

Now, let us perturb by considering

for

. Then it is easy to check that

for all

and, accordingly,

The computation of and

remains as an open problem for further research.

5.2. Some repercussions on the stability of the optimal value

Let us finish the paper with some comments about perspectives on the behaviour of the optimal value function , given by

(with the convention

when

in our context of finite LP problems

is finite if and only if

. Moreover, a well-known result in the literature establishes the continuity of ϑ restricted to its domain (see, e.g. [Citation22, Theorem 4.5.2] for a proof based on the Berge's theory); i.e. the continuity of

. Going further and regarding the subject of the current paper, the calmness modulus of

provides a quantitative measure of the stability of the optimal value (indeed, a rate of variation with respect to perturbations of the data). Specifically, for

this calmness modulus is given by

This calmness modulus is analysed in [Citation20], where point-based formulae for this quantity are provided in two stages: firstly, under RHS perturbations and, in a second stage, under canonical perturbations. That paper is focussed on a dual approach and formulae obtained there involve the maximum and minimum norms of dual optimal solutions (vectors of KKT multipliers) associated with the minimal KKT subsets of indices,

.

At this moment we point out the fact that appealing to we may follow a primal approach to the estimation of

. The situation is particularly easy in the case of RHS perturbations as commented in the next lines: given

we have

(23)

(23) where

is any optimal solution of

and

is any projection of x on

. From (Equation23

(23)

(23) ), we obtain

Adding perturbations of c and trying to reproduce inequalities of the form (Equation23

(23)

(23) ) in the context of canonical perturbations yields a different scenario, where the size of primal optimal solutions could play an important role, but this lies out of the scope of the present paper.

Acknowledgments

The authors wish to thank the anonymous referees for their valuable critical comments, which have definitely improved the original version of the paper.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

References

- Uderzo A. On the quantitative solution stability of parameterized set-valued inclusions. Set-Valued Var Anal. 2021;29(2):425–451.

- Cánovas MJ, López MA, Parra J, et al. Calmness of the feasible set mapping for linear inequality systems. Set-Valued Var Anal. 2014;22(2):375–389.

- Camacho J, Cánovas MJ, Parra J. From calmness to Hoffman constants for linear semi-infinite inequality systems. Available from: https://arxiv.org/pdf/2107.10000v2.pdf

- Dontchev AL, Rockafellar RT. Implicit functions and solution mappings: a view from variational analysis. New York: Springer; 2009.

- Klatte D, Kummer B. Nonsmooth equations in optimization: regularity, calculus, methods and applications. Dordrecht: Kluwer Academic; 2002. (Nonconvex optimization and its applications; vol. 60).

- Mordukhovich BS. Variational analysis and generalized differentiation, I: basic theory. Berlin: Springer; 2006.

- Rockafellar RT, Wets RJ-B. Variational analysis. Berlin: Springer; 1998.

- Goberna MA, López MA. Linear semi-infinite optimization. Chichester: Wiley; 1998.

- Gass S, Saaty T. Parametric objective function (part 2)-generalization. J Oper Res Soc Am. 1955;3:395–401.

- Saaty T, Gass S. Parametric objective function (part 1). J Oper Res Soc Am. 1954;2:316–319.

- Robinson SM. Some continuity properties of polyhedral multifunctions. Math Progr Study. 1981;14:206–214.

- Klatte D. Lipschitz continuity of infima and optimal solutions in parametric optimization: the polyhedral case. In: Guddat J, Jongen HTh, Kummer B, et al., editors. Parametric optimization and related topics. Berlin: Akademie; 1987. p. 229–248.

- Dontchev A, Zolezzi T. Well-posed optimization problems. Berlin: Springer; 1993. (Lecture Notes in Mathematics; vol. 1543.

- Azé D, Corvellec J-N. On the sensitivity analysis of Hoffman constants for systems of linear inequalities. SIAM J Optim. 2002;12(4):913–927.

- Hoffman AJ. On approximate solutions of systems of linear inequalities. J Res Natl Bur Stand. 1952;49(4):263–265.

- Klatte D, Thiere G. Error bounds for solutions of linear equations and inequalities. Z Oper Res. 1995;41:191–214.

- Peña J, Vera JC, Zuluaga LF. New characterizations of Hoffman constants for systems of linear constraints. Math Program. 2021;187(1–2):79–109.

- Zălinescu C. Sharp estimates for Hoffman's constant for systems of linear inequalities and equalities. SIAM J Optim. 2003;14(2):517–533.

- Li W. Sharp Lipschitz constants for basic optimal solutions and basic feasible solutions of linear programs. SIAM J Control Optim. 1994;32(1):140–153.

- Gisbert MJ, Canovas MJ, Parra J, et al. Calmness of the optimal value in linear programming. SIAM J Optim. 2018;28(3):2201–2221.

- Cánovas MJ, Henrion R, López MA, et al. Outer limit of subdifferentials and calmness moduli in linear and nonlinear programming. J Optim Theory Appl. 2016;169(3):925–952.

- Bank B, Guddat J, Klatte D, et al. Non-linear parametric optimization. Berlin: Akademie; 1982 and Birkhäuser: Basel; 1983.