Abstract

Introducing undergraduates to the peer-review process helps them understand how scientific evidence is evaluated and communicated. In a large biology course, technical writing was taught through mock peer-review. Students learned to critique original research through a multistep framework, calibrating their assessment skills with class standards. We hypothesized students’ assessment of their own and their peers’ writing becomes more accurate as they gain academic experience, and that novice students are more overconfident in their writing abilities. Counter to many instructors’ assumptions, our results indicated that regardless of level of academic experience, students’ self-assessed grades were higher than peer-assessed grades (p < 0.01). Both self- and peer-assessed grades were significantly higher than the instructor’s grade (p < 0.01). Overall, the peer-review exercise was positively viewed by students. Most students found the peer-review process beneficial, and 88% reported taking it seriously, despite knowing they would receive instructor’s feedback at the final stage of the writing exercise. Ultimately, the peer-review process provides an effective way to teach technical writing to undergraduates, but instructors should not assume that returning students with greater academic experience are better judges of their own performance. This study illustrates that all students are equally challenged to accurately assess the quality of their own work.

Introduction

The ability to communicate scientific discoveries through technical writing has been emphasized as a crucial part of undergraduate biology education (National Research Council 2003; Coil et al. 2010; Brewer and Smith 2011). Undergraduate courses implement writing exercises to enhance scientific communication skills (Libarkin and Ording 2012), to enhance critical thinking skills (Quitadamo and Kurtz 2007), and to improve students’ understanding of the scientific method (Coil et al. 2010; Reynolds and Thompson 2011). Comprehension and critical analysis of scientific information (Round and Campbell 2013), along with gaining scientific literacy and developing the ability to discern research-supported information from peer-reviewed journals, are important skills (Miller 1998; Anelli 2011).

Scientific writing through peer-review is one aspect of science that is often new to college students who may not have read peer-reviewed research articles before attending university. While advanced undergraduate courses often use primary literature as a pedagogical tool to teach information and science literacy (Porter 2005; Kararo and McCartney 2019), using primary literature in introductory courses can be challenging for novice students who are inexperienced with the organization, conventions and peer-review process that underlie this form of technical writing (Round and Campbell 2013; Sandefur and Gordy 2016). For instructors of introductory courses, technical writing exercises are one way to teach students about primary literature. However, technical writing assignments can be very time consuming both to produce and to provide feedback on. As a result, larger courses may avoid this type of assessment because a few instructors cannot provide adequate feedback on each paper.

One way to reduce the time burden on instructors to provide incremental feedback on written assignments is to engage students in a mock peer-review exercise, in which students assess classmates’ writing anonymously as peer-reviewers. This process also provides an opportunity to engage students in formal self-assessment of their writing. However, students can struggle to accurately evaluate the strengths and weaknesses of their work when asked to self-assess (Lindblom-Ylänne, Pihlajamäki, and Kotkas 2006). The tendency to inaccurately judge and inflate one's ability - most pronounced among unaware or unskilled non-experts - has been dubbed the Dunning-Kruger effect (Kruger and Dunning 1999).

Difficulty accurately self-assessing one’s work has real-world implications (Dunning, Heath, and Suls 2004). For example, at one company 42% of engineers unrealistically thought their work ranked in the top 5% among their peers (Zenger 1992). This biased self-evaluation could lead many people to mistakenly think that they are top performers and that there is no room for improvement in their work (Dunning, Heath, and Suls 2004; Ehrlinger et al. 2008). The Dunning-Kruger effect could be at play in an introductory biology class, in which novice students may be overconfident in their writing ability due to less academic experience. This lack of expertise may lead them to inaccurately gauge whether their writing is satisfactory or fails to meet expectations.

Here we report on an exercise that challenges undergraduates to communicate through technical writing by replacing traditional laboratory reports with a semester-long, comprehensive writing exercise, mirroring the scientific peer-review process. Not only did it aim to teach essential communication and comprehension skills, but it also exposed students to the rigorous peer-review process that scientists go through to publish their work. The peer-review process, in which anonymous peers provide feedback and critique, is known to have many benefits and challenges in the classroom (Adachi, Tai, and Dawson 2018; Huisman et al. 2019). One noted benefit is that it can be used as a low-stakes way to improve students’ writing before formal grading.

Utilizing a detailed grading rubric and requiring self-assessment during the exercise can facilitate one-to-one comparisons of self and peer-assessed grades and can curb students’ non-expert biases. Investigating how students’ experience level influenced their ability to accurately self-assess, we hypothesized that students’ assessment of their own and their peers’ work becomes more accurate as they gain academic experience, and that novice students are overconfident in their own writing ability due to inexperience. We also hypothesized that these differences can be detected in high granularity, even between first and second-semester freshmen. Asking how self-assessed and peer-assessed grades compare to an instructor’s assessment, we hypothesized that all students inaccurately self-assess their scientific writing compared to peer and instructor assessments. Furthermore, we aimed to evaluate students’ perceived value of the peer-review exercise, examining differences in opinion among students with different levels of college experience.

Materials and methods

Course background and goals of peer-review exercise

The undergraduate students in this study were enrolled between 2015 and 2018 in Cornell University’s largest introductory biology course - Investigative Biology (BioG 1500) - an inquiry-based introductory class with lecture and laboratory components. The course objectives were to provide laboratory experience with emphasis on the processes of scientific investigation and to promote collaboration, communication and scientific literacy. The broad goals of this laboratory course are to teach transferrable skills, especially critical thinking and problem-solving (Deane-Coe, Sarvary, and Owens 2017).

The self and peer-assessment of original writing in the style of journal articles served to help accomplish three of the syllabus’ stated outcomes: that students would be able to (1) design hypothesis-based experiments, choose appropriate statistical test(s), analyse data, and interpret results, (2) find relevant scientific information using appropriate library tools, and to communicate effectively using both written and oral formats, and (3) think through a scientific process with peers and understand the ethics, benefits and challenges of collaborative work.

Peer-review exercise’s format

A rubric that communicated class standards and facilitated assessment was developed prior to the study and utilized for assessment. It was validated by students and laboratory instructors over four semesters and tested for clarity and usability. The final rubric that was developed was used by both students and instructors involved in this study. The rubric broke apart the sections of a scientific paper (abstract, introduction, methods, etc.) and prompted evaluation of these sections as “satisfactory”, “acceptable but below standards”, or “absent or not acceptable” for points that added up to 100.
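As an illustration only, the tallying of a three-level rubric into a 100-point grade could be sketched as follows. The section names beyond those listed above, the point weights per section, and the fraction of credit awarded at each level are all assumptions made for this sketch; they are not the course's actual rubric.

```python
# Hypothetical section weights that sum to 100 (assumed values, not the course rubric).
SECTION_POINTS = {
    "abstract": 10,
    "introduction": 20,
    "methods": 20,
    "results": 25,
    "discussion": 25,
}

# Assumed fraction of a section's points awarded at each rubric level.
LEVEL_CREDIT = {
    "satisfactory": 1.0,
    "acceptable but below standards": 0.5,
    "absent or not acceptable": 0.0,
}


def rubric_grade(ratings):
    """Return a 0-100 grade from a mapping of section name -> rubric level."""
    missing = set(SECTION_POINTS) - set(ratings)
    if missing:
        raise ValueError(f"unrated sections: {sorted(missing)}")
    return sum(
        SECTION_POINTS[section] * LEVEL_CREDIT[ratings[section]]
        for section in SECTION_POINTS
    )


# Made-up ratings for one paper.
ratings = {
    "abstract": "satisfactory",
    "introduction": "satisfactory",
    "methods": "acceptable but below standards",
    "results": "satisfactory",
    "discussion": "absent or not acceptable",
}
print(rubric_grade(ratings))  # 65.0
```

Because self, peer and instructor assessments all use the same mapping, the resulting grades can be compared one-to-one.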

To prepare students to write and evaluate papers during the mock peer-review exercise, a series of activities was used to teach them about the content of scientific papers and train them to evaluate papers with the rubric. For example, in-class activities included dissecting and discussing actual peer-reviewed papers in groups. In addition, students were given guidance regarding the grading categories during class activities led by instructors, helping students practice grading accurately with the rubric. Specifically, students used the grading rubric to evaluate example papers - of varied quality - that were written by previous students, and the grades were shared and discussed with the entire class.

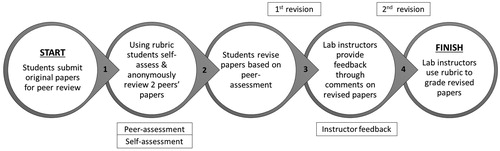

After these preparatory lessons during the first part of the semester, students took part in a peer-review exercise that had four steps (Figure 1). During this exercise every student acted as an anonymous reviewer for two papers that were written by their peers. Papers were assigned randomly by the class’s learning management system (Turnitin in Blackboard). The reviews followed the double-blind model: students did not know who reviewed their papers nor whose papers they were reviewing. Students were asked to assess peers’ papers using the same rubric that would later be used by their laboratory instructors to grade their final papers and to provide qualitative feedback as comments. At the same time, students were required to self-assess their papers with the grading rubric. The peer-review and self-assessment were incentivized with 3% participation credit. After peer and self-assessment, students revised their papers before their instructor provided feedback, which consisted of qualitative comments only. Using their laboratory instructor’s feedback, students revised their papers for a second time before submitting them for instructors to grade with the rubric. The final version of the paper counted for 12% of a student’s course grade.

Data collection

Data were anonymous - de-identified of names and student identification numbers - prior to analysis. The first dataset consisted of a total of 1,354 students, across two fall semesters (2015 and 2016) and two spring semesters (2017 and 2018). The average number of students each semester was 338.5, and according to a Kruskal-Wallis rank sum test there was no statistically significant difference in class size among semesters (p = 0.39).

These anonymized data, containing self and peer-assessed grades, were used to compare students with different levels of academic experience. This was made possible by the inclusion of each student’s year of matriculation, which we refer to as their level of academic experience, their self-assessed grade, their peer-assessed grades, and the semester and year of course enrolment. The average of two peer-assessed grades was used in analyses and is hereafter referred to as the ‘peer-assessed grade’, because preliminary analyses of data showed that the two peer-assessed grades were not significantly different from each other (Wilcoxon paired rank-sum test, p = 0.06). Students with missing data were excluded from analyses. The exclusion criteria were receiving only one peer-assessed grade, providing no self-assessed grade, or clearly not following the rubric, as indicated by non-numerical grade responses.
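The averaging and exclusion rules described above can be sketched in a few lines. This is a minimal illustration, not the authors' pipeline (the study's analyses were run in R); the record layout and the function name `prepare_record` are hypothetical.

```python
def prepare_record(self_grade, peer_grades):
    """Return (self_grade, mean_peer_grade), or None if the record is excluded."""
    # Exclude students without a self-assessed grade or without exactly two peer grades.
    if self_grade is None or len(peer_grades) != 2:
        return None
    try:
        self_value = float(self_grade)
        peer_values = [float(g) for g in peer_grades]
    except (TypeError, ValueError):
        # Non-numerical responses indicate that the rubric was not followed.
        return None
    # The 'peer-assessed grade' is the average of the two peer grades.
    return self_value, sum(peer_values) / 2


# Made-up raw records (hypothetical layout).
raw = [
    {"self": "85", "peers": ["80", "78"]},
    {"self": "90", "peers": ["88"]},          # only one peer grade
    {"self": None, "peers": ["70", "75"]},    # no self-assessed grade
    {"self": "good", "peers": ["60", "65"]},  # non-numerical response
]

prepared = (prepare_record(r["self"], r["peers"]) for r in raw)
cleaned = [p for p in prepared if p is not None]
print(cleaned)  # [(85.0, 79.0)]
```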

To test if instructor grades would differ from the self and peer-assessed grades, a subset of 80 papers was selected. This subset of papers included an equal number of papers written by freshmen and sophomore students in fall and spring semesters, who were the largest group of students (). The papers selected were graded by the instructor using the same rubric and were at the first submission stage (). It is established that teachers can have implicit biases that affect student performance (Hoffmann and Oreopoulos 2009; Killpack and Melón 2016). However, in this instance the instructor who graded the papers did so blind to factors, like gender and ethnicity, that can lead to outright discrimination and was also highly trained and experienced using the rubric; therefore, we take their assessment as accurate.

Table 1. Number of students and their academic level by semester in the subset of data that was also graded by an instructor.

Poll Everywhere, a classroom technology that allows for interactive audience participation (Sarvary and Gifford 2017), was used at the end of spring and fall semesters to survey students’ opinions about the peer-review exercise. This second data set was used to gauge what aspects of the peer-review process students thought were helpful. Students across nine semesters - Fall 2013, 2015, 2016, 2017, 2018 and Spring 2014, 2016, 2017, 2018 - were asked “How did the peer-review help you?” (N = 2,606). Students in the Spring and Fall 2018 semesters were asked an additional yes or no question about the benefit of peer-review to them (N = 612), and an additional true or false question regarding whether they took peer-review seriously (N = 524).

Statistical analyses

All statistical analyses were performed using R software (v 3.5.3) in RStudio (R Core Team 2019). Pearson correlation coefficient tests were utilized to test for correlation between students’ self-assessed and peer-assessed grades (α = 0.05). Wilcoxon rank-sum tests were used to test for significant differences between the self-assessed and peer-assessed grades of students (α = 0.05). To test whether the difference between self-assessed and peer-assessed grades was explained by students’ level of college experience, Wilcoxon rank-sum tests were used to compare the difference between the self-assessed and peer-assessed grades of freshmen to that of upperclassmen, and of first-semester freshmen to that of second-semester freshmen (α = 0.05). For our study, we defined all non-freshmen students, including sophomores, as upperclassmen.
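To make the quantities in this paragraph concrete, the following stdlib-only Python sketch computes a Pearson correlation coefficient, the per-student gap between self- and peer-assessed grades, and the rank-sum statistic in its Mann-Whitney U form for comparing those gaps between two groups. It is an illustration with made-up grades, not the authors' R code, and it computes test statistics only (no p-values).

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length samples with at least 2 values")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def rank_sum_u(x, y):
    """Mann-Whitney U for sample x: number of (x, y) pairs with x > y, ties count 0.5."""
    return sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in x for b in y)


# Made-up grades for illustration only.
self_grades = [80, 90, 70, 85]
peer_grades = [75, 88, 72, 80]

r = pearson_r(self_grades, peer_grades)

# Per-student gap: a positive value means the student graded themselves higher.
gaps = [s - p for s, p in zip(self_grades, peer_grades)]
print(gaps)  # [5, 2, -2, 5]

# Compare gaps between two hypothetical groups (e.g. freshmen vs upperclassmen).
freshmen_gaps, upperclass_gaps = gaps[:2], gaps[2:]
u = rank_sum_u(freshmen_gaps, upperclass_gaps)
```

A U value near half the number of pairs suggests the two groups' gaps are similarly distributed; turning U into a p-value requires the null distribution, which this sketch leaves to a statistics package.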

For the subset of data that was also graded by an instructor, Pearson correlation coefficient tests were used to test for correlation among self-assessed, peer-assessed and instructor-assessed grades. In this data set, Wilcoxon paired rank sum tests were used to compare instructor-assessed grades to peer and self-assessed grades, and to compare self-assessed and peer-assessed grades (α = 0.05).
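For paired grades on the same paper, the statistic behind a paired Wilcoxon test can be sketched as below. This assumes the "Wilcoxon paired rank sum test" named above corresponds to the Wilcoxon signed-rank test, with zero differences dropped and tied absolute differences given average ranks; the grades are made up and no p-value is computed.

```python
def signed_rank_statistic(x, y):
    """Return (W_plus, W_minus), the rank sums of positive and negative paired differences."""
    if len(x) != len(y):
        raise ValueError("paired samples must have equal length")
    # Drop pairs with no difference, as in the classic signed-rank procedure.
    diffs = [a - b for a, b in zip(x, y) if a != b]
    # Indices of the differences, ordered by absolute size.
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        # Find the run of tied absolute differences starting at position i.
        j = i
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    w_plus = sum(rank for rank, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(rank for rank, d in zip(ranks, diffs) if d < 0)
    return w_plus, w_minus


# Made-up self- and instructor-assessed grades for four papers.
self_grades = [82, 75, 90, 68]
instructor_grades = [72, 75, 85, 70]
print(signed_rank_statistic(self_grades, instructor_grades))  # (5.0, 1.0)
```

A large imbalance between the two rank sums indicates that one assessor's grades are systematically higher than the other's.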

Students’ responses to Poll Everywhere questions, related to their opinions of the peer-review exercise, were examined using descriptive statistics (e.g. percent of total responses). To test if academic experience level influenced students’ perceptions of what was helpful about the peer-review exercise, students’ responses to the question “How did the peer-review help you?” were analysed using a Chi-squared test of independence (i.e. contingency analysis) (α = 0.05). Similarly, response data from the Spring and Fall 2018 semesters to the yes or no and true or false questions were evaluated using Chi-squared tests of independence, in which observed and expected responses were compared across students’ academic experience levels (α = 0.05). Descriptive statistics were also used to examine the composition of students’ academic level (e.g. percent of total number of students) in peer-review data. A Kruskal-Wallis rank sum test was used to test for a significant difference in the number of students in each semester (α = 0.05).
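The Chi-squared test of independence compares observed response counts with the counts expected if responses were unrelated to academic level. A minimal sketch of the statistic and its degrees of freedom follows; the counts are hypothetical, no continuity correction is applied, and the p-value lookup is left to a statistics package.

```python
def chi_squared_independence(table):
    """Return (statistic, degrees_of_freedom) for a contingency table given as a list of rows."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if row and column categories were independent.
            expected = row_totals[i] * col_totals[j] / total
            statistic += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


# Hypothetical counts: rows = academic level, columns = (yes, no) responses.
observed = [
    [10, 20],
    [20, 10],
]
statistic, dof = chi_squared_independence(observed)
print(round(statistic, 3), dof)  # 6.667 1
```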

Results

Students’ self and peer-assessed grades differ

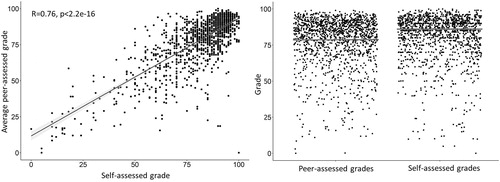

Students’ self and peer-assessed grades generally agreed with each other and there was a significant positive correlation (r = 0.76, P < 2.2e-16, N = 1,354) (Figure 2). Nonetheless, students’ self and peer-assessed grades were significantly different (P = 1.5e-8) (Figure 2). Students’ self-assessed grades were on average 3% greater than the peer-assessed grades that they received. The average peer-assessed grade was 77.7% ± 17.4 StDev and the average self-assessed grade was 80.8% ± 16.2 StDev.

Figure 2. There was a strong positive correlation between students’ peer- and self-assessed grades (N = 1,354) (left). Regardless of their level of experience, students’ self-assessed grades were significantly higher than their peer-assessed grades (P = 1.5e-8). The horizontal lines indicate the mean grade (right).

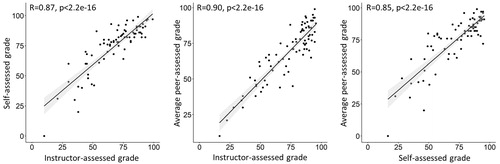

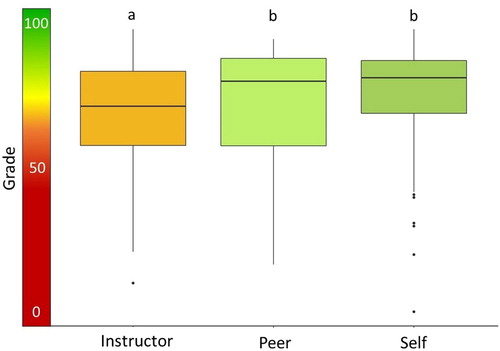

In the subset of the data that was composed of papers that were also graded by the instructor (N = 80), the three grades generally agreed with each other. There were significant positive correlations between: instructor and self-assessed grades (r = 0.86, P < 2.2e-16), instructor and peer-assessed grades (r = 0.90, P < 2.2e-16), and self and peer-assessed grades (r = 0.85, P < 2.2e-16) (). However, we found that the instructor-assessed grade was significantly different from both self-assessed (P = 3.3e-7) and peer-assessed grades (P = 1.2e-4) (). The median instructor-assessed grade was 72% (26 IQR), while the medians of the peer and self-assessed grades were 80.75% (30.6 IQR) and 82% (18.5 IQR) respectively.

Academic experience and self-assessment accuracy

Across the four semesters in which data were collected to analyse peer and self-assessment, there were 1,354 students. More than half of the students were freshmen (64%), while upperclassmen (sophomores, juniors and seniors) made up the rest. In general, there were more non-freshmen enrolled in fall semesters than in spring semesters, which were dominated by freshmen (Table 2).

Table 2. The number of students and their academic level by semester.

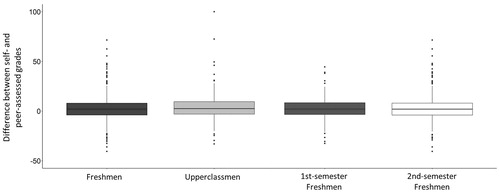

Academic level of students (freshman, sophomore, junior, senior) did not predict how close self-assessed grades were to peer-assessed grades. There was no significant difference between freshmen (n = 861) and upperclassmen (n = 493) in the gap between self and peer-assessed grades (p = 0.26) (Figure 5). Furthermore, there was no significant difference in this gap between first-semester freshmen (n = 307) and second-semester freshmen (n = 554) (p = 0.49) (Figure 5). Students overestimated their own performance compared to the assessment by their peers at all academic levels, as both freshmen and upperclassmen students’ self-assessed grades were greater than their peer-assessed grades (2.9% and 3.5% higher respectively).

Figure 5. There were no significant differences between self- and the peer-assessed grades of freshmen and upperclassmen (n = 861 and n = 493 respectively), or between first- and second-semester freshmen (n = 307 and n = 554 respectively).

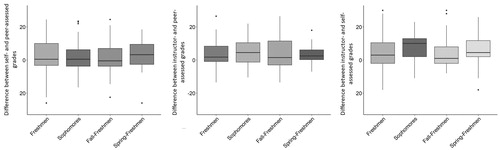

As in the larger dataset (Figure 5), in the subset of papers that were also graded by an instructor, we found that experience level did not predict grading accuracy. There were no significant differences between freshmen and sophomores when we looked at the differences between peer and self-assessed grades (p = 0.67), self and instructor-assessed grades (p = 0.27), or instructor and peer-assessed grades (p = 0.42) (Figure 6). When novice fall-semester freshmen were compared to spring-semester freshmen there were no significant differences in the calculated gap between self and peer-assessed grades (p = 0.79), self and instructor-assessed grades (p = 0.34), or instructor and peer-assessed grades (p = 0.90) (Figure 6).

Figure 6. Subset of the data with self-, peer-, and instructor-assessed grades (N = 80). Data with self- and peer-assessed grades (left), peer- and instructor-assessed grades (centre), and self- and instructor-assessed grades (right) are shown. There were no significant differences between freshmen and sophomores, or between fall- and spring-semester freshmen in any of the comparisons (p > 0.05, n = 20).

Students’ perceptions of the peer-review exercise

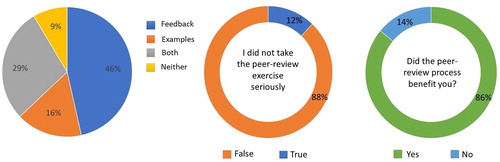

Students viewed the peer-review exercise as beneficial to their writing, as reported across nine semesters by their responses to the Poll Everywhere question “How did the peer-review help you?” (N = 2,606) (Figure 7). These data showed that 91% of the students found the peer-review process helpful in some way, with 46% of the students identifying the feedback that they received as most helpful. Only 16% of the students indicated that just seeing peers’ papers during the peer-review process was helpful to them, while almost a third of students (29%) said that both aspects of the peer-review exercise were helpful. Less than 10% of students said that neither the feedback nor the chance to see an example paper written by their peers was helpful (Figure 7). Chi-squared test results indicated that what students found helpful about the peer-review activity was independent of their academic level of experience (p = 0.14, χ² = 27.903).

Figure 7. Students’ perspectives of the peer review exercise. Percent of total student responses to the question “How did the peer-review help you?” (N = 2,606) (left). Students’ perception of the exercise was also assessed with questions about how seriously they took the peer-review exercise (N = 524) (centre) and if it was beneficial (N = 612) (right).

Looking at the combined responses of students in the Spring and Fall 2018 semesters (N = 524), 88% of students identified the statement “Since I knew that my lab instructor was going to give feedback on the paper, I did not take the peer-review activity seriously. Why should I listen to peers, when an “expert” is going to provide feedback anyway?” as false. This indicates that most students took the peer-review activity, and the feedback they received from it, seriously (Figure 7). However, Chi-squared test results showed that students’ academic experience level was not independent of their response (p = 0.002, χ² = 22.074). Specifically, more second-semester freshmen than first-semester freshmen indicated that they did not take the activity seriously, 17% and 7% respectively. In the Spring and Fall 2018 semesters, 86% of students responded yes to the question “Did the peer-review process benefit you?” (Figure 7). Chi-squared test results indicated that students’ responses to this question were independent of their academic level of experience (p = 0.17, χ² = 10.331).

Discussion

Investigative Biology is a large, inquiry-based laboratory gateway course at Cornell University, with the goal to train the next generation of scientists. Scientific communication (i.e. disseminating discoveries to fellow scientists) through peer-reviewed scholarly publications is an important step of the scientific process. Each student enrolled in Investigative Biology participated in the peer-review process, regardless of their academic experience level. It is expected that novice and experienced undergraduates behave differently in a higher education learning environment, and the general assumption is that increased academic experience benefits students’ performance and behavior. For example, Investigative Biology was a part of a multi-college study that showed that predictions about teaching practices by novice freshmen and first-generation students were less accurate than those of returning students who had more academic experience (Meaders et al. 2019).

In our study, however, we found that novice students were no less accurate in their self-assessment than their more academically experienced peers. There were no differences in assessment accuracy between first and second-semester freshmen. Our assumption, that more academically experienced sophomores, juniors and seniors were more accurate assessors of technical writing than freshmen, was false. The Dunning-Kruger effect (Kruger and Dunning 1999), that novice students may be overconfident in their writing ability due to less academic experience, was not detected when first-semester freshmen were compared to second-semester freshmen, or when freshmen were compared to upperclassmen. However, the Dunning-Kruger effect is detected at a larger scale, and it applies to undergraduates at all academic levels, as they all inaccurately judge and inflate their writing skills. Our findings show that when the task at hand is something as sophisticated and novel as technical writing to communicate science, students at all academic experience levels struggle to accurately evaluate their own work. Instructors of diverse courses should not assume that freshmen overestimate the quality of their work because of their lack of college experience, and instructors should not assume that returning students with greater academic experience are better judges of their own performance.

While students had difficulties assessing their own work accurately compared to the reviews they received from their peers, the peers’ assessments were also an overestimation of the quality of the work. In this study, students used the same rubric to recognize peers’ writing errors and the errors in their own writing but applied the rubric criteria more leniently to their own work than their peers did. However, both self and peer-assessment were overestimated compared to the instructor’s assessment of the same work using the same rubric. This indicates that while instructors can explicitly communicate their expectations and be transparent about the assessment criteria, students may not be able to use these assessment tools accurately when applying them to their own or to their peers’ work. This can lead students to criticize the instructor as a “harsh grader”, “unfair” or “biased”.

The peer-review process can have many benefits in the classroom (Adachi, Tai, and Dawson 2018; Huisman et al. 2019). However, in order to achieve these benefits both parties (reviewers and reviewees) must take the process seriously. Similar to the role of the third reviewer in a real-life peer-review process, the laboratory instructors provided feedback on each student’s paper after the peer-review, but before the final submission. Despite knowing that an expert (instructor) would give feedback on the paper, a great majority of the students in the study said that they took the peer-review process seriously (88%). Students’ overall positive perception of the usefulness of peer-review in the classroom is important for successful implementation into the curriculum, especially as a low-stakes assignment. When students understand the benefits of peer-review, they can be effectively involved in multiple rounds of feedback without placing additional time constraints on the instructor.

While instructors in the course felt some concern about students losing agency in this inquiry-based class over their own work because of exposure to their peers’ papers, this was not supported by the self-evaluation. Only 16% of the students said the main benefit of the peer-review process was seeing another student’s work. Overall, there is evidence that the benefits of peer-review outweigh these concerns. Peer-review is not only an essential skill for scientists; recent work has also shown that students participating in peer-review exercises can receive a grade boost. This is especially true when the process involves training students to grade their peers’ work and when the exercise utilizes computer-mediated assessment (Li et al. 2020). In Investigative Biology both of these indicators of a high-quality peer-review exercise were met. Consequently, students, regardless of level of academic experience, viewed the process as beneficial.

Concluding remarks and directions for future research

The integrity, reproducibility and trust in scientific publications depend on the rigor and quality of peer-review (Lee and Moher 2017). Peer-review is the first line of defense against scientific misinformation; therefore, exposing undergraduates to the peer-review process and helping them fine-tune their skills is an investment in the future. In addition to these big picture benefits, there are further benefits to using a peer-review exercise in the undergraduate curriculum. In courses that are designed to teach the scientific process, students must learn skills to communicate technical information in writing. Teaching this can be a very time-consuming process, especially in large courses where instructors need to provide valuable feedback multiple times during the semester to many students. As shown in this study, peer-review can be an effective solution for improving student writing; however, it has some limitations that instructors need to be aware of.

In Investigative Biology students indicated that they found the peer-review process useful and took it seriously. They understood that the process was designed to help them improve their own writing skills through a structured feedback process. The key to this structure was in the clear guidelines and well-developed rubric used. The peer-review process used was fully transparent and students used the peer-review rubric multiple times in the class before they needed to review their own and their peers' work. Even so, instructor-assessed grades were significantly lower than peer and self-assessed grades. This clearly indicates that peer-assessment should not replace instructor feedback. Furthermore, when it comes to self-assessment, we found that academic experience does not directly translate to ability to accurately assess their own or their peers’ work. Students with more years of experience still may not have acquired this specific skill, which is complex. Due to this inaccuracy, if peer-review is implemented, it should remain a low-stakes exercise. Instructors should be aware of their own biases and be cognizant that on sophisticated writing tasks, even advanced students can struggle to accurately assess the quality of work.

As shown with tasks such as memorizing (Dunlosky and Rawson 2012), more interventions during peer-review could help students improve their ability to judge their work accurately. Interventions, such as using peer-review to teach technical writing, are powerful pedagogical tools, and learning how they can be fine-tuned to become more effective requires instructors to tease apart a variety of factors, other than level of academic experience, that affect student performance.

Acknowledgements

We thank the BIOG 1500 team of teaching assistants, administrators and coordinators. We are especially grateful to Irena Horvatt for her help with data management and de-identification. In addition, we are grateful for Cornell’s Center for Teaching Excellence’s Scholarship of Teaching and Learning Fellowship.

Disclosure statement

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article. The authors received no financial support for the research, authorship, and/or publication of this article.

References

- Adachi, C., J. H.-M. Tai, and P. Dawson. 2018. “Academics’ Perceptions of the Benefits and Challenges of Self and Peer Assessment in Higher Education.” Assessment & Evaluation in Higher Education 43 (2): 294–306. doi:10.1080/02602938.2017.1339775.

- Anelli, C. 2011. “Scientific Literacy: What is It, Are we Teaching It, and Does It Matter.” American Entomologist 57 (4): 235–244. doi:10.1093/ae/57.4.235.

- Brewer, C. A., and D. Smith. 2011. “Vision and Change in Undergraduate Biology Education: A Call to Action.” Chapter 2: 10–19. American Association for the Advancement of Science.

- Coil, D., M. P. Wenderoth, M. Cunningham, and C. Dirks. 2010. “Teaching the Process of Science: Faculty Perceptions and an Effective Methodology.” CBE Life Sciences Education 9 (4): 524–535. doi:10.1187/cbe.10-01-0005.

- Deane-Coe, K. K., M. A. Sarvary, and T. G. Owens. 2017. “Student Performance along Axes of Scenario Novelty and Complexity in Introductory Biology: Lessons from a Unique Factorial Approach to Assessment.” CBE—Life Sciences Education 16 (1): ar3. doi:10.1187/cbe.16-06-0195.

- Dunlosky, J., and K. A. Rawson. 2012. “Overconfidence Produces Underachievement: Inaccurate Self Evaluations Undermine Students’ Learning and Retention.” Learning and Instruction 22 (4): 271–280. doi:10.1016/j.learninstruc.2011.08.003.

- Dunning, D., C. Heath, and J. M. Suls. 2004. “Flawed Self-Assessment: Implications for Health, Education, and the Workplace.” Psychological Science in the Public Interest: A Journal of the American Psychological Society 5 (3): 69–106. doi:10.1111/j.1529-1006.2004.00018.x.

- Ehrlinger, J., K. Johnson, M. Banner, D. Dunning, and J. Kruger. 2008. “Why the Unskilled Are Unaware: Further Explorations of (Absent) Self-Insight among the Incompetent.” Organizational Behavior and Human Decision Processes 105 (1): 98–121. doi:10.1016/j.obhdp.2007.05.002.

- Hoffmann, F., and P. Oreopoulos. 2009. “A Professor like Me: The Influence of Instructor Gender on College Achievement.” Journal of Human Resources 44 (2): 479–494. doi:10.1353/jhr.2009.0024.

- Huisman, B., N. Saab, P. van den Broek, and J. van Driel. 2019. “The Impact of Formative Peer Feedback on Higher Education Students’ Academic Writing: A Meta-Analysis.” Assessment & Evaluation in Higher Education 44 (6): 863–880. doi:10.1080/02602938.2018.1545896.

- Kararo, M., and M. McCartney. 2019. “Annotated Primary Scientific Literature: A Pedagogical Tool for Undergraduate Courses.” PLoS Biology 17 (1): e3000103. doi:10.1371/journal.pbio.3000103.

- Killpack, T. L., and L. C. Melón. 2016. “Toward Inclusive STEM Classrooms: What Personal Role Do Faculty Play?” CBE—Life Sciences Education 15 (3): es3. doi:10.1187/cbe.16-01-0020.

- Kruger, J., and D. Dunning. 1999. “Unskilled and Unaware of It: How Difficulties in Recognizing One's Own Incompetence Lead to Inflated Self-Assessments.” Journal of Personality and Social Psychology 77 (6): 1121–1134. doi:10.1037/0022-3514.77.6.1121.

- Lee, C. J., and D. Moher. 2017. “Promote Scientific Integrity via Journal Peer Review Data.” Science (New York, NY) 357 (6348): 256–257. doi:10.1126/science.aan4141.

- Li, H., Y. Xiong, C. V. Hunter, X. Guo, and R. Tywoniw. 2020. “Does Peer Assessment Promote Student Learning? A Meta-Analysis.” Assessment & Evaluation in Higher Education 45 (2): 193–211. doi:10.1080/02602938.2019.1620679.

- Libarkin, J., and G. Ording. 2012. “The Utility of Writing Assignments in Undergraduate Bioscience.” CBE Life Sciences Education 11 (1): 39–46. doi:10.1187/cbe.11-07-0058.

- Lindblom-Ylänne, S., H. Pihlajamäki, and T. Kotkas. 2006. “Self-, Peer- and Teacher-Assessment of Student Essays.” Active Learning in Higher Education 7 (1): 51–62. doi:10.1177/1469787406061148.

- Meaders, C. L., E. S. Toth, A. K. Lane, J. K. Shuman, B. A. Couch, M. Stains, M. R. Stetzer, E. Vinson, and M. K. Smith. 2019. “‘What Will I Experience in My College STEM Courses?’ An Investigation of Student Predictions about Instructional Practices in Introductory Courses.” CBE Life Sciences Education 18 (4): ar60. doi:10.1187/cbe.19-05-0084.

- Miller, J. D. 1998. “The Measurement of Civic Scientific Literacy.” Public Understanding of Science 7 (3): 203–224. doi:10.1088/0963-6625/7/3/001.

- National Research Council. 2003. BIO 2010: Transforming Undergraduate Education for Future Research Biologists. Washington, D.C.: The National Academies Press.

- Porter, J. R. 2005. “Information Literacy in Biology Education: An Example from an Advanced Cell Biology Course.” Cell Biology Education 4 (4): 335–343. doi:10.1187/cbe.04-12-0060.

- Quitadamo, I. J., and M. J. Kurtz. 2007. “Learning to Improve: Using Writing to Increase Critical Thinking Performance in General Education Biology.” CBE Life Sciences Education 6 (2): 140–154. doi:10.1187/cbe.06-11-0203.

- R Core Team. 2019. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing. https://www.R-project.org/.

- Reynolds, J. A., and R. J. Thompson, Jr. 2011. “Want to Improve Undergraduate Thesis Writing? Engage Students and Their Faculty Readers in Scientific Peer Review.” CBE Life Sciences Education 10 (2): 209–215. doi:10.1187/cbe.10-10-0127.

- Round, J. E., and A. M. Campbell. 2013. “Figure Facts: Encouraging Undergraduates to Take a Data-Centered Approach to Reading Primary Literature.” CBE Life Sciences Education 12 (1): 39–46. doi:10.1187/cbe.11-07-0057.

- Sandefur, C. I., and C. Gordy. 2016. “Undergraduate Journal Club as an Intervention to Improve Student Development in Applying the Scientific Process.” Journal of College Science Teaching 45 (4): 52–58. doi:10.2505/4/jcst16_045_04_52.

- Sarvary, M. A., and K. M. Gifford. 2017. “The Benefits of a Real-Time Web-Based Response System for Enhancing Engaged Learning in Classrooms and Public Science Events.” Journal of Undergraduate Neuroscience Education: JUNE: A Publication of FUN, Faculty for Undergraduate Neuroscience 15 (2): E13–E16.

- Zenger, T. R. 1992. “Why Do Employers Only Reward Extreme Performance? Examining the Relationships among Performance, Pay, and Turnover.” Administrative Science Quarterly 37 (2): 198–219. doi:10.2307/2393221.