Abstract

Learning is determined not just by the curriculum but also by how it is assessed. This article analyses the role played by the quality of assessment tasks in learning in undergraduate courses. Over two successive academic years, data were collected on students' views of the assessment activities and practices they had experienced in business and economics subjects, with the aim of examining what influenced their perception of assessment tasks. A causal relationship model was developed that included key variables such as participation, self-regulation, learning transfer, strategic learning, feedback and empowerment (learner control), and it was validated using partial least squares structural equation modeling (PLS-SEM). The relationships between assessment task quality and these variables were explored. Feedback, participation, empowerment and self-regulation were identified as mediators of the effect of assessment task quality on learning. The results highlight how assessment practices in higher education can be enhanced through improvements in assessment design, and suggestions are offered for future lines of research that will allow a better understanding of the effectiveness of assessment processes.

Introduction

When educators design teaching activities, they usually focus on questions such as: what do I have to do so that students learn? What activities do I have to organise? Biggs and Tang (2011) pointed out that this differs considerably from the students' point of view: students plan their activities on the basis of how they are going to be assessed, so for them activities only make sense if they are consistent with what is going to be assessed and with the way in which it is going to be assessed. Educators would therefore ease communication and mutual understanding between their intentions and students' expectations if they adopted the same perspective as students, that is, if they planned using what Wiggins and McTighe (1998) called backward design, making the curriculum design process revolve around what students need to be able to do. Of course, this is only likely to be effective if the assessment itself is well designed.

Designing the assessment process involves making decisions about its purposes, the intended learning outcomes, its context, how feedback will be organised and, of course, which assessment tasks will be undertaken (Bearman et al. 2014, 2016). Assessment tasks are central because it is on them that learners' performances are judged. While these judgements are made formally by assessors, over the course as a whole they are also made by learners themselves, by their peers or by other agents, and they are communicated either through oral or written comments and recommendations, or through grades.

Different approaches to assessment in higher education, such as those developed by Carless (2015) or Sambell, McDowell, and Montgomery (2013), pay particular attention to assessment tasks in order to promote deep approaches to learning among students. Building on these contributions, Rodríguez-Gómez and Ibarra-Sáiz (2015) made assessment tasks a dynamic starting point for what they term student empowerment, that is, students taking control of their own learning process. However, is assessment task design in itself so decisive? To what extent do students value the usefulness of assessment for their learning? Which elements or aspects of assessment processes and practices are the most differentiating from the learner's perspective? What kinds of assessment practice might best facilitate learning overall?

These questions form the basis of our research, although this paper focuses on answering the first two. First, we analyse whether the quality of assessment tasks is directly related to students' perceptions of their strategic learning and learning transfer, thereby providing a predictive model of learning based on the interrelationships among a set of variables involved in the assessment process. Second, we seek to provide an instrument that facilitates the analysis and understanding of learners' perceptions of assessment practices in higher education.

Assessment approach and development of hypotheses

Assessment as learning and empowerment

Educators have to consider several approaches and multiple elements when designing assessment. Each of the existing approaches to assessment in higher education emphasizes some of these elements over others and rests on different theoretical conceptions or practices (McArthur 2018). For example, Boud and Soler (2016) underline the importance of the longer-term influence of assessment on the learner; Carless (2015) emphasizes the importance of assessment tasks, the development of self-assessment capacity and student participation in feedback; and Whitelock (2010) emphasizes guidelines for action and the role of technologies in assessment. The theoretical basis of each of these approaches has been documented and evidence of their benefits published, but there is little evidence on how students perceive the interactions between the different constituent elements of each approach.

Influenced by the ideas of these authors, Rodríguez-Gómez and Ibarra-Sáiz (2015) developed what they termed the assessment as learning and empowerment approach. This approach identifies three main challenges (student involvement, feedback and task quality) and ten fundamental principles or rules that guide assessment. In addition, it provides a set of key statements or declarations that regulate assessment, and actions that help to design and implement it.

Research suggests that participation and involvement should be encouraged throughout a course to empower students and thus improve their ability to shape their own learning experiences (Baron and Corbin 2012). Drawing on Freire (1971, 2012), we conceive empowerment as the opportunity to encourage discussion, reflection and actions with transformative potential, which requires active participation from learners (Fangfang and Hoben 2020). Specifically, in the context of assessment, empowerment is conceived as "learners sharing, if they want, in decisions about assessment" (Leach, Neutze, and Zepke 2001, 293). Empowerment in assessment requires enabling spaces that allow learners, as individuals and as social beings, separately and in groups, to take control and value their own work and that of their peers, to debate and criticise the assessment system, and to suggest and negotiate different assessment practices.

From the perspective of the assessment as learning and empowerment approach, the design of assessment tasks is based on the principles of challenge, reflection and transversality. Conceiving the assessment task as a challenge requires tasks that give students opportunities to tackle challenging, motivating outputs that progressively require them to apply high-level skills and performances. Basing assessment on the principle of reflection means that tasks encourage reflective, analytical and critical thinking through meaningful activities in which students assess their own and others' work and actions, and thus make judgements. Finally, the principle of transversality means that assessment should be carried out in a coherent, interrelated and integrated manner within the course, programme, subject or theme, avoiding the segmentation and disconnection of learning.

In addition, the assessment as learning and empowerment approach treats assessment tasks as the focal point of a whole series of variables that characterise the wider assessment process. When designing assessment tasks, decisions are taken on important aspects such as learner participation in the assessment process or how the information from the assessment process will be used, since these largely determine students' self-regulation and, consequently, the transfer of learning beyond the immediate tasks.

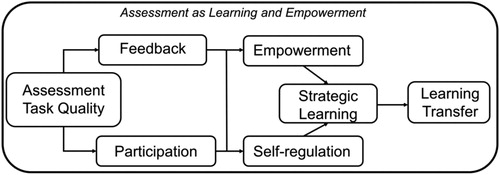

Table 1 summarizes the constructs that, interacting with one another, make up this assessment approach, and highlights key references that have served as the basis for these conceptualizations.

Table 1. Constructs definition.

Research model and hypotheses

The model to be tested proposes that students' perceptions of learning transfer (that is, application beyond the immediate task or course context) are determined by strategic learning, which in turn is determined by feedback, participation, self-regulation and empowerment, all of these variables being dependent on the quality of the assessment tasks. Figure 1 illustrates this model, indicating the relationships between all constructs.

On the basis of this theoretical model and the contributions presented above, the following hypotheses are proposed:

H1: Learning transfer is expected to be positively related to empowerment (H1a), self-regulation (H1b) and strategic learning (H1c).

H2: Strategic learning is expected to be positively related to empowerment (H2a), feedback (H2b), participation (H2c) and self-regulation (H2d).

H3: Empowerment is expected to be positively related to feedback (H3a), assessment task quality (H3b) and participation (H3c).

H4: Self-regulation is expected to be positively related to feedback (H4a), assessment task quality (H4b) and participation (H4c).

H5: Assessment task quality is expected to be positively related to feedback (H5a) and participation (H5b).

H6: The relationship between assessment task quality and strategic learning is expected to be mediated by feedback (H6a), participation (H6b), empowerment (H6c) and self-regulation (H6d).

H7: The relationship between assessment task quality and learning transfer is expected to be mediated by feedback (H7a), participation (H7b), empowerment (H7c) and self-regulation (H7d).

Methodology

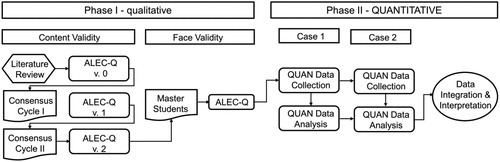

A mixed methodology was employed, using an exploratory sequential design (qual → QUAN) (Creswell 2015) in which the emphasis is placed on the quantitative phase (Figure 2). In the first phase of the research, the ALEC_Q (Assessment as Learning and Empowerment Climate Questionnaire; Online Resource 1) was designed and validated. In the second phase, questionnaires were administered to obtain the opinions of 769 university students who were studying different subjects in the final year of the degree in business administration and management at a Spanish university.

Each of the four subjects organised assessment differently, and these can be grouped into three assessment styles. The first subject was characterised by summative assessment, based essentially on the results of a final test. Two other subjects used formative assessment, in which continuous assessment was carried out during the course and students received feedback on their performance. The final subject was characterised by participatory assessment, since it used self-assessment and peer assessment formatively in the different assessment tasks during the course.

Data were collected at the end of the first semester of the academic years 2017/18 (Case 1) and 2018/19 (Case 2). Because the questionnaires were answered at the end of the semester, students had experienced the whole assessment process and could therefore give their opinion on it.

The perception questionnaire ALEC_Q

The constructs and measurement indicators of the ALEC_Q were developed from a review of the literature and subsequently validated by experts. Different methods of content validation by expert judges were reviewed (Johnson and Morgan 2016), and the group consensus method was used to avoid voting systems. The definitions of the constructs were revised at the end of each cycle, and the indicators were specified during the discussion process. Finally, to analyse face validity, the questionnaire was presented to a group of master's students so that its clarity and ease of understanding could be improved.

The questionnaire was structured in seven dimensions (Table 2) and consisted of 40 Likert-type items with a 0-10 response scale, distributed across the dimensions. It was administered in Spanish, and completing it took about 15-20 min.

Table 2. ALEC_Q Questionnaire Structure.

It is important to emphasize that, from the outset of this research, a formative measurement model was chosen, since the indicators that make up each construct are not interchangeable; each of them captures a specific aspect of the construct's domain. As Coltman et al. (2008) point out, the choice between a formative and a reflective model must be explicitly justified on theoretical grounds and should be empirically testable. This avoids oversimplifying the measurement of constructs and increases the rigour of the theory and its relevance for decision making.

Participants

Five experts in assessment and 15 master's students participated in the qualitative phase of the study. In the quantitative phase, 769 questionnaires were collected, 55.9% from women and 44.1% from men (Table 3). The students expressed their views on the activities and the assessment process followed in the subjects they were studying in their final year. The assessment processes and activities of four subjects were evaluated: human resources management (HR), operations management (OP), project management (PM) and market research (MR). All were taught in the fourth year of the Business Administration and Management (ADE) degree at the University of Cadiz, Spain.

Table 3. Demographic characteristics.

Data analysis

The model was estimated using partial least squares structural equation modeling (PLS-SEM) with the SmartPLS 3 software (Ringle, Wende, and Becker 2015). To confirm the formative nature of the constructs, a confirmatory tetrad analysis (CTA-PLS) was employed.

Results

Evaluation of the measurement model

First, to verify the formative nature of the constructs empirically, a CTA-PLS was carried out (Online Resource 2). Convergent validity was assessed through a redundancy analysis for each construct. In all cases, path coefficients above the established minimum of 0.70 were obtained (Hair et al. 2017), so the convergent validity of the formative constructs is supported (Online Resource 3).

The variance inflation factor (VIF) values show that collinearity does not reach critical levels (threshold value of 5) in any of the formative constructs and is therefore not an issue for the estimation of the PLS path model. When the significance and relevance of the indicators were analysed, some indicators had weights that were not statistically significant but loadings greater than 0.5; following the rules of thumb of Hair et al. (2017), all indicators were therefore retained (Online Resource 4).
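To make the collinearity check concrete, the sketch below computes VIF values for the indicators of one formative construct, assuming the raw indicator scores are available as Python lists. SmartPLS reports these values itself; the helper names (`pearson`, `invert`, `vif`) and the five-student data are hypothetical illustrations, not the study's procedure or data. It relies on the identity that the diagonal of the inverse indicator correlation matrix equals 1 / (1 - R²), where R² comes from regressing each indicator on the others.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    # Augment the matrix with the identity: [A | I].
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    # The right half now holds the inverse.
    return [row[n:] for row in aug]


def vif(indicators):
    """VIF of each indicator of one formative construct.

    The diagonal of the inverse correlation matrix equals 1 / (1 - R_j^2),
    where R_j^2 comes from regressing indicator j on the other indicators.
    """
    k = len(indicators)
    corr = [[pearson(indicators[i], indicators[j]) for j in range(k)]
            for i in range(k)]
    inv = invert(corr)
    return [inv[j][j] for j in range(k)]


# Hypothetical scores of five students on two indicators (r = 0.8).
item_a = [1, 2, 3, 4, 5]
item_b = [2, 1, 4, 3, 5]
values = vif([item_a, item_b])
print([round(v, 3) for v in values])  # 1 / (1 - 0.8**2) for both indicators
print(all(v < 5 for v in values))     # below the threshold of 5 used in the text
```

With only two indicators the VIF reduces to 1 / (1 - r²); the matrix inverse generalises this to any number of indicators without fitting each auxiliary regression separately.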

Evaluation of the structural model

Collinearity among the predictor constructs is not a critical issue in the structural model, as VIF values are clearly below the threshold of 5 (Online Resource 5).

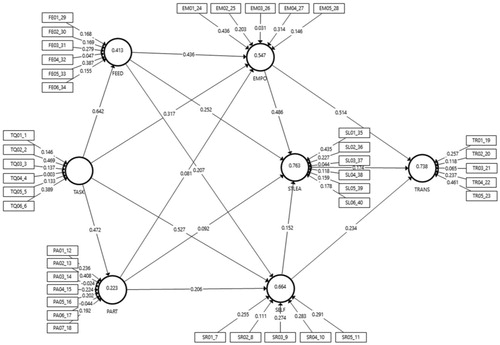

Following the guidelines of Hair et al. (2017), consistent bootstrapping (5,000 resamples) was carried out to check the statistical significance of the path coefficients (t-statistics and confidence intervals). Table 4 shows the statistical results, which support hypotheses H1, H2, H3, H4 and H5 (p < .001), although H3c is supported only at the p < .10 level.
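The logic of the bootstrap significance test can be sketched in plain Python. This is a simplified illustration under stated assumptions: it resamples cases and re-estimates a single standardized path (which, with one predictor, equals the correlation between the two construct scores), rather than re-running the full PLS or consistent PLS algorithm as SmartPLS does. The function name `bootstrap_path` and the simulated scores are hypothetical; the t-value is the original estimate divided by the bootstrap standard error, and the interval is the percentile interval.

```python
import math
import random
import statistics


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def bootstrap_path(x, y, n_boot=5000, alpha=0.05, seed=1):
    """Case-resampling bootstrap of a standardized single-predictor path.

    Returns the original estimate, the bootstrap t-value (estimate divided
    by the bootstrap standard error) and a percentile confidence interval.
    """
    rng = random.Random(seed)
    n = len(x)
    estimate = pearson(x, y)
    boot = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # draw n cases with replacement
        boot.append(pearson([x[i] for i in idx], [y[i] for i in idx]))
    boot.sort()
    se = statistics.stdev(boot)
    lower = boot[math.floor(alpha / 2 * n_boot)]
    upper = boot[math.ceil((1 - alpha / 2) * n_boot) - 1]
    return estimate, estimate / se, (lower, upper)


# Simulated (not the study's) construct scores for 200 hypothetical students.
gen = random.Random(42)
task = [gen.gauss(0, 1) for _ in range(200)]
feedback = [0.6 * t + gen.gauss(0, 0.8) for t in task]
est, t_value, ci = bootstrap_path(task, feedback, n_boot=1000)
print(round(est, 3), round(t_value, 1), [round(c, 3) for c in ci])
```

The study used 5,000 resamples; the example uses 1,000 only to keep the run short.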

Table 4. Structural model results using t values and percentile bootstrap 95% confidence interval (n = 5,000 subsamples).

The results indicate that the effect sizes (f²) of assessment task quality on feedback and empowerment, and of empowerment on strategic learning, are large (f² ≥ .35). Medium effect sizes (f² ≥ .15) are found for the effect of assessment task quality on participation (.287), of empowerment on learning transfer and of feedback on empowerment. In the remaining cases the effect sizes are small (.02 ≤ f² < .15).
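The effect size f² compares the R² of an endogenous construct with and without a given predictor: f² = (R²_included - R²_excluded) / (1 - R²_included). The minimal sketch below applies that formula together with Cohen's conventional thresholds (.02, .15, .35); the function names and the R² values are hypothetical, not taken from the study.

```python
def f_squared(r2_included, r2_excluded):
    """Cohen's f^2 for one predictor construct.

    r2_included: R^2 of the endogenous construct with the predictor in the model.
    r2_excluded: R^2 after re-estimating the model without that predictor.
    """
    return (r2_included - r2_excluded) / (1 - r2_included)


def size_label(f2):
    """Cohen's conventional thresholds: .02 small, .15 medium, .35 large."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


# Hypothetical R^2 values, not the study's estimates.
f2 = f_squared(0.60, 0.50)
print(round(f2, 2), size_label(f2))  # 0.25 medium
```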

The predictive power of the model can be analysed through the coefficient of determination (R²). The empowerment, strategic learning and self-regulation constructs directly explain 73.8% of the variance (R²) in learning transfer, with the task quality, feedback and participation constructs contributing indirectly. The strongest effect on transfer is exerted by empowerment, followed by self-regulation and strategic learning. For strategic learning, 76.3% of the variance is explained by empowerment, feedback, self-regulation and participation, to which the indirect effect of assessment task quality must be added. Overall, the results indicate strong predictive power, since the coefficients of determination for both strategic learning and learning transfer exceed 0.70. Furthermore, the research model achieves an SRMR of 0.05, which indicates an appropriate fit given the usual cut-off of 0.08.
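The SRMR summarises the discrepancy between the observed correlation matrix and the one implied by the model. Below is a minimal sketch, assuming both matrices are already available (in practice the implied matrix comes from the estimated PLS model); the matrices are made up, and this version averages over the p(p+1)/2 non-redundant elements including the diagonal, which is one common definition.

```python
import math


def srmr(observed, implied):
    """Standardized root mean square residual.

    Compares an observed and a model-implied correlation matrix over the
    p(p+1)/2 non-redundant elements (lower triangle including the diagonal).
    """
    p = len(observed)
    residuals = [(observed[i][j] - implied[i][j]) ** 2
                 for i in range(p) for j in range(i + 1)]
    return math.sqrt(sum(residuals) / len(residuals))


# Hypothetical 3x3 correlation matrices, not the study's data.
obs = [[1.0, 0.50, 0.40],
       [0.50, 1.0, 0.30],
       [0.40, 0.30, 1.0]]
imp = [[1.0, 0.45, 0.42],
       [0.45, 1.0, 0.33],
       [0.42, 0.33, 1.0]]
value = srmr(obs, imp)
print(round(value, 3), value < 0.08)  # compare with the usual 0.08 cut-off
```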

To assess the predictive relevance of the path model, the construct cross-validated redundancy estimates (Q²) were examined (Online Resource 6). All Q² values for the endogenous constructs are clearly above zero. Strategic learning has the highest Q² value (0.499), followed by learning transfer, self-regulation, empowerment, feedback and, finally, participation. These results provide clear support for the model's predictive relevance regarding the endogenous latent variables.
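Q² is obtained by blindfolding: data points are omitted, predicted from the model, and the prediction errors are compared with the observations themselves, Q² = 1 - SSE/SSO. The sketch below shows only that final computation, assuming the omitted (standardized) observations and their predictions have already been pooled across omission rounds; the omission procedure itself is not reproduced, and the function name `q_squared` and the values are hypothetical.

```python
def q_squared(omitted_observed, omitted_predicted):
    """Stone-Geisser Q^2 = 1 - SSE / SSO.

    omitted_observed: standardized values of the data points left out during
        blindfolding (pooled over all omission rounds).
    omitted_predicted: the model's predictions for those same points.
    """
    sse = sum((o - p) ** 2 for o, p in zip(omitted_observed, omitted_predicted))
    sso = sum(o ** 2 for o in omitted_observed)  # standardized data have mean 0
    return 1 - sse / sso


# Hypothetical values: predictions that recover half of each observation.
obs = [1.0, -1.0, 2.0, -2.0]
pred = [0.5, -0.5, 1.0, -1.0]
print(q_squared(obs, pred))       # 0.75
print(q_squared(obs, [0.0] * 4))  # 0.0: no better than predicting the mean
```

A Q² above zero therefore means the model predicts the omitted points better than simply using the mean.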

Regarding the q² effect sizes, a medium value is reached for the effect of feedback on participation, and small values for the effects of feedback on empowerment and strategic learning, and of empowerment on strategic learning and learning transfer.
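The q² effect size follows the same logic as f², but is based on the Q² values obtained with and without a given predictor construct. In the form commonly used in the PLS-SEM literature:

```latex
q^2 = \frac{Q^2_{\text{included}} - Q^2_{\text{excluded}}}{1 - Q^2_{\text{included}}}
```

Values of .02, .15 and .35 are conventionally read as small, medium and large predictive relevance of the omitted construct.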

Mediation analysis

This study presents a multiple mediation model in which the relationships between assessment task quality and both strategic learning and learning transfer are mediated by several variables simultaneously. Multiple mediation analysis allows all mediators to be considered at the same time in one model (Hair et al. 2017), giving a better representation of the mechanisms through which an exogenous construct (assessment task quality) affects endogenous constructs (strategic learning, learning transfer).

The mediation hypotheses (H6-H7) were tested using the analytical approach proposed by Nitzl, Roldán, and Cepeda (2016). Following Williams and MacKinnon (2008), the indirect effects were tested using bootstrapping.
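The bootstrap test of an indirect effect can be illustrated with a single mediator. This is a deliberately simplified sketch: it estimates a (task to mediator) and b (mediator to outcome, controlling for task) by ordinary least squares on simulated observed scores, whereas the study estimated a multiple mediation model in PLS-SEM. The function names and the simulated TASK, FEED and STLEA scores are hypothetical; an indirect effect is treated as significant when the percentile interval for a*b excludes zero.

```python
import math
import random


def cov(x, y):
    """Sample covariance of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)


def indirect_effect(x, m, y):
    """a*b in a single-mediator model estimated by OLS.

    a: slope of M regressed on X.
    b: coefficient of M when Y is regressed on both X and M.
    """
    vx, vm = cov(x, x), cov(m, m)
    cxm, cxy, cmy = cov(x, m), cov(x, y), cov(m, y)
    a = cxm / vx
    b = (cmy * vx - cxy * cxm) / (vm * vx - cxm ** 2)
    return a * b


def bootstrap_indirect(x, m, y, n_boot=5000, alpha=0.05, seed=1):
    """Point estimate of a*b and its percentile bootstrap interval."""
    rng = random.Random(seed)
    n = len(x)
    boot = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases
        boot.append(indirect_effect([x[i] for i in idx],
                                    [m[i] for i in idx],
                                    [y[i] for i in idx]))
    boot.sort()
    lower = boot[math.floor(alpha / 2 * n_boot)]
    upper = boot[math.ceil((1 - alpha / 2) * n_boot) - 1]
    return indirect_effect(x, m, y), (lower, upper)


# Simulated scores: TASK -> FEED (a = 0.6), FEED -> STLEA (b = 0.5), direct 0.2.
gen = random.Random(7)
task = [gen.gauss(0, 1) for _ in range(300)]
feed = [0.6 * t + gen.gauss(0, 1) for t in task]
stlea = [0.2 * t + 0.5 * f + gen.gauss(0, 1) for t, f in zip(task, feed)]
ab, (lower, upper) = bootstrap_indirect(task, feed, stlea, n_boot=1000)
print(round(ab, 3), round(lower, 3), round(upper, 3))
```

Testing the product a*b directly with a bootstrap interval, rather than testing a and b separately, is the idea behind the procedure the text attributes to Williams and MacKinnon (2008), since the sampling distribution of a product of coefficients is generally not normal.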

Our study first analyses the mediating effect that feedback, empowerment, participation and self-regulation exert on the relationship between assessment task quality and strategic learning (H6). The results (Online Resource 7) confirm that the total indirect effect of assessment task quality on strategic learning is 0.630 (t = 28.668, p < .01). The specific indirect effects show that the relationship between assessment task quality and strategic learning is mediated by feedback (H6a), both in simple mediation (TASK->FEED->STLEA, t = 6.161, p < .01) and in multiple mediation (TASK->FEED->EMPO->STLEA, t = 6.090, p < .01; TASK->FEED->SELF->STLEA, t = 0.020, p < .01). Mediation by participation (H6b) is also significant, although in this case multiple mediation is significant only at the 10% level (TASK->PART->EMPO->STLEA, t = 0.019, p < .10). Likewise, self-regulation (H6d) has a simple mediating role (TASK->SELF->STLEA, t = 0.154, p < .01), as well as a multiple mediating role in combination with participation or feedback. Finally, mediation by empowerment (H6c) is confirmed, both on its own and in combination with participation and feedback.

To analyse the strength of mediation, the variance accounted for (VAF) was calculated, as suggested by Cepeda, Nitzl, and Roldán (2017). The effect of feedback represents 25.70% of the total effect of the assessment task on strategic learning; empowerment represents 24.48%, self-regulation 12.72% and participation 6.93%.
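The VAF calculation is simple arithmetic: each specific indirect effect is divided by the total effect (the direct effect plus all indirect effects). A minimal sketch with hypothetical path estimates and function names, not the values reported above:

```python
def total_effect(direct, indirects):
    """Total effect = direct effect + sum of all specific indirect effects."""
    return direct + sum(indirects)


def vaf(indirect, total):
    """Variance accounted for: share of the total effect carried by an indirect path."""
    return indirect / total


# Hypothetical path estimates, not the study's values.
specific = {"feedback": 0.15, "empowerment": 0.10, "participation": 0.05}
total = total_effect(direct=0.20, indirects=specific.values())
for name, effect in specific.items():
    print(name, f"{100 * vaf(effect, total):.2f}%")
```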

Second, we analysed the mediating effect of feedback, participation, empowerment and self-regulation on the relationship between assessment task quality and learning transfer (H7; see Online Resource 8). The total indirect effect of the assessment task on learning transfer is 0.613 (t = 4.784, p < .01). The specific indirect effects show that this relationship is mediated by feedback (H7a), and all cases of multiple mediation are significant; the largest is multiple mediation in conjunction with empowerment (0.144), which represents 23.46% of the total indirect effect. The relationship between assessment task quality and learning transfer is also mediated by empowerment (H7c), with a strength of 26.57%, and self-regulation (H7d), with a strength of 20.12%. Mediation through participation (H7b) is weak, reaching at most 3.71%.

Discussion

This study aimed, first, to analyse whether students' perceptions of the quality of assessment tasks are related to learning transfer (incorporating knowledge and experience from other subjects, modules or the real world; using different communication strategies; using strategies useful in academic and professional contexts) and to verify the interrelationships among the set of variables that characterise assessment as learning and empowerment. Second, it aimed to offer an instrument that enables analysis of university students' perceptions of assessment practices. The results suggest a series of implications and point to future lines of research.

Theoretical implications

One of the main contributions of this work is the confirmation of a model that establishes the relationships among the variables that characterise assessment as learning and empowerment. The results show that the hypothesized model accounts for a large part of the relationships among the variables involved. They show, on the one hand, that the perceived quality of the assessment tasks is directly related to feedback and participation and, on the other, that feedback, participation, empowerment and self-regulation play a mediating role in assessment processes.

The hypothesis that empowerment, self-regulation and strategic learning are related to learning transfer (H1) was confirmed. Likewise, the positive relationship of empowerment, feedback, participation and self-regulation with strategic learning (H2) was supported. Similarly, there is clear evidence of the positive relationship of empowerment with feedback and assessment task quality (H3), of self-regulation with assessment task quality and participation (H4), and of assessment task quality with feedback and participation (H5). Finally, the hypotheses concerning the mediating role of feedback, empowerment, participation and self-regulation (H6 and H7) were tested and supported.

In line with the contributions of Carless et al. (2017), Gore et al. (2009), Ibarra-Sáiz, Rodríguez-Gómez, and Boud (2020), Kyndt et al. (2011) and Sadler (2016), the results of this study show that students perceive the design of assessment tasks as relevant and important. They want tasks to be challenging, eminently practical and connected with professional reality, and to allow them to demonstrate a deep understanding of fundamental concepts and ideas by producing complex outputs.

Limitations and future research

From a methodological perspective, this research has certain limitations that suggest directions for future research. First, the study was carried out in a specific context and is based on the perceptions of students in the final year of their degree in economics and business. This makes it difficult to generalize the results to other contexts within higher education. Second, the research used a mixed design in which control over the intervening variables is limited, so, as Stone-Romero and Rosopa (2008) note, the inferences that can be drawn from the mediation model are limited. Finally, the measuring instrument is based on the students' own perceptions, an aspect that could be improved through the use of complementary or alternative measuring instruments.

In this paper, results have been presented from a global perspective, but a deeper and more detailed analysis of possible differences in students' perceptions of the different assessment systems they evaluated would be valuable, for example, examining how students' appraisals differ across assessment processes and activities. Such an analysis, which could be enriched with qualitative techniques, would provide a better understanding of assessment processes and of the active role of students in them.

Finally, as a line of future research, the constructs considered in this research and their interrelations need to be reviewed and updated, incorporating aspects that will be of great importance in the near future, such as the development of evaluative judgement (Boud 2020) and a deeper understanding of the role of feedback and of the nature of assessment tasks (Ibarra-Sáiz and Rodríguez-Gómez 2020). In any case, this necessary in-depth analysis will have to be carried out from an approach that considers the student as a learner, in a context that promotes empowerment and in which he or she plays an active part in assessment decision-making.

Conclusion

This study has confirmed the relationships among the constructs that make up the assessment as learning and empowerment approach, as well as the importance of assessment task design. It also provides an instrument that can facilitate replication in other contexts and proposes future lines of research through which assessment and learning in higher education could be improved.

On the basis of these results, there is a clear need to emphasise and facilitate the role of educators as designers of challenging, rigorous, realistic, transversal and useful assessment tasks for learning. As Rodríguez-Gómez and Ibarra-Sáiz (2015) have pointed out, designing assessment tasks that are challenging and meaningful for students and that provoke high-level reflective, analytical and critical thinking requires a change in the mentality of both educators and students. The study by Ibarra-Sáiz and Rodríguez-Gómez (2020), the review by Pereira, Flores, and Niklasson (2016) and the challenges identified by Boud (2020) on key aspects of assessment in higher education are encouraging in this regard, as they indicate the changes taking place in assessment practices and the evolution towards a more learner-centred approach. However, as this study has shown, it is necessary to continue deepening our knowledge of assessment practices in which student learning is the centre of attention, and of the changes required at micro (classroom), meso (curriculum) and macro (university) levels.

Open sources

All online resources are available at Mendeley repository: http://dx.doi.org/10.17632/c6grcc4tcc.1

Acknowledgements

This work was made possible by the TransEval Project (Ref. R + D + i 2017/01) funded by the University of Cadiz, the FLOASS Project (Ref. RTI2018-093630-B-I00) funded by the Spanish Ministry of Science, Innovation and Universities, and the support of the UNESCO Chair on Evaluation and Assessment, Innovation and Excellence in Education.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Notes on contributors

María Soledad Ibarra-Sáiz

María Soledad Ibarra-Sáiz. Senior Lecturer in Educational Assessment and Evaluation at the University of Cadiz. Director of the UNESCO Chair on Evaluation and Assessment, Innovation and Excellence in Education. Director of the EVALfor Research Group-SEJ509 Assessment & Evaluation in Training Contexts from the Andalusian Programme of Research, Development and Innovation (PAIDI). She develops her research mainly in the field of assessment and evaluation in higher education. She has been principal investigator on more than 10 European, international and national projects, whose results have been published in various articles, book chapters and contributions to international conferences. She is currently the main co-researcher of the FLOASS Project-Learning outcomes and learning analytics in higher education: An action framework from sustainable assessment (RTI2018-093630-B-I00), in which 6 Spanish universities participate.

Gregorio Rodríguez-Gómez

Gregorio Rodríguez-Gómez. Professor of Educational Research Methods at the University of Cadiz. He is the coordinator of the strategic area Studies and research in assessment and evaluation of the UNESCO Chair on Evaluation and Assessment, Innovation and Excellence in Education. Founding member of the EVALfor Research Group-SEJ509 Assessment and evaluation in training contexts. His research interest is focused on research methods and assessment and evaluation in higher education. He is currently co-researcher of the FLOASS Project-Learning outcomes and learning analytics in higher education: A framework for action from sustainable assessment (RTI2018-093630-B-I00). Author of articles, book chapters and contributions to international conferences. He has been President of the Interuniversity Association for Pedagogical Research (AIDIPE). He is currently President of the RED-U Spanish University Teaching Network.

David Boud

David Boud is Alfred Deakin Professor and Director of the Centre for Research in Assessment and Digital Learning at Deakin University (Melbourne) and Emeritus Professor at the University of Technology Sydney. He is also Professor of Work and Learning at Middlesex University (London). He has published on teaching, learning and assessment in higher and professional education. His current work focuses on assessment for learning in higher education, academic formation and learning in the workplace. He is one of the most highly cited scholars worldwide in the field of higher education. He has been a pioneer in developing learning-centred approaches to assessment across the disciplines, especially in building assessment skills for long-term learning (Developing Evaluative Judgement in Higher Education, Routledge 2018) and in designing new approaches to feedback (Feedback in Higher and Professional Education, Routledge 2013). Re-imagining University Assessment in a Digital World is to be published by Springer in 2020.

References

- Ajjawi, R., and D. Boud. 2018. “Examining the Nature and Effects of Feedback Dialogue.” Assessment & Evaluation in Higher Education 43 (7): 1106–1119. doi:10.1080/02602938.2018.1434128.

- Alkharusi, H. A., S. Aldhafri, H. Alnabhani, and M. Alkalbani. 2014. “Modeling the Relationship between Perceptions of Assessment Tasks and Classroom Assessment Environment as a Function of Gender.” The Asia-Pacific Education Researcher 23 (1): 93–104. doi:10.1007/s40299-013-0090-0.

- Ashwin, P., D. Boud, K. Coate, F. Hallett, E. Keane, K. L. Krause, B. Leibowitz, et al. 2015. Reflective Teaching in Higher Education. London: Bloomsbury.

- Baron, P., and L. Corbin. 2012. “Student Engagement: Rhetoric and Reality.” Higher Education Research & Development 31 (6): 759–772. doi:10.1080/07294360.2012.655711.

- Bearman, M., P. Dawson, D. Boud, M. Hall, S. Bennett, E. Molloy, and G. Joughin. 2014. “Guide to the Assessment Design Decisions Framework.” http://www.assessmentdecisions.org/guide/.

- Bearman, M., P. Dawson, D. Boud, S. Bennett, M. Hall, and E. Molloy. 2016. “Support for Assessment Practice: Developing the Assessment Design Decisions Framework.” Teaching in Higher Education 21 (5): 545–556. doi:10.1080/13562517.2016.1160217.

- Biggs, J., and C. Tang. 2011. Teaching for Quality Learning at University: What the Student Does. 4th ed. Berkshire, UK: McGraw-Hill-SRHE & Open University Press.

- Boud, D. 2020. “Challenges in Reforming Higher Education Assessment: A Perspective from Afar.” RELIEVE - Revista Electrónica de Investigación y Evaluación Educativa 26 (1). doi:10.7203/relieve.26.1.17088.

- Boud, D., and E. Molloy. 2013. “Rethinking Models of Feedback for Learning: The Challenge of Design.” Assessment & Evaluation in Higher Education 38 (6): 698–712. doi:10.1080/02602938.2012.691462.

- Boud, D., and R. Soler. 2016. “Sustainable Assessment Revisited.” Assessment & Evaluation in Higher Education 41 (3): 400–413. doi:10.1080/02602938.2015.1018133.

- Carless, D. 2015. “Exploring Learning-Oriented Assessment Processes.” Higher Education 69 (6): 963–976. doi:10.1007/s10734-014-9816-z.

- Carless, D., S. M. Bridges, C. K. Y. Chan, and R. Glofcheski, eds. 2017. Scaling up Assessment for Learning in Higher Education. Singapore: Springer.

- Cepeda Carrión, G., C. Nitzl, and J. L. Roldán. 2017. “Mediation Analyses in Partial Least Squares Structural Equation Modeling: Guidelines and Empirical Examples.” In Partial Least Squares Path Modeling: Basic Concepts, Methodological Issues and Applications, edited by H. Latan and R. Noonan, 173–195. Cham, Switzerland: Springer. doi:10.1007/978-3-319-64069-3.

- Coltman, T., T. M. Devinney, D. F. Midgley, and S. Venaik. 2008. “Formative versus Reflective Measurement Models: Two Applications of Formative Measurement.” Journal of Business Research 61 (12): 1250–1262. doi:10.1016/j.jbusres.2008.01.013.

- Creswell, J. W. 2015. A Concise Introduction to Mixed Methods Research. Thousand Oaks, CA: Sage.

- Dawson, P., M. Henderson, P. Mahoney, M. Phillips, T. Ryan, D. Boud, and E. Molloy. 2019. “What Makes for Effective Feedback: Staff and Student Perspectives.” Assessment & Evaluation in Higher Education 44 (1): 25–36. doi:10.1080/02602938.2018.1467877.

- Falchikov, N. 2005. Improving Assessment through Student Involvement. Practical Solutions for Aiding Learning in Higher Education and Further Education. London: RoutledgeFalmer.

- Fangfang, G., and J. L. Hoben. 2020. “The Impact of Student Empowerment and Engagement on Teaching in Higher Education: A Comparative Investigation of Canadian and Chinese Post-Secondary Settings.” In Student Empowerment in Higher Education: Reflecting on Teaching Practice and Learner Engagement, edited by S. Mawani and A. Mukadam, 153–166. Berlin: Logos Verlag Berlin.

- Francis, R. A. 2008. “An Investigation into the Receptivity of Undergraduate Students to Assessment Empowerment.” Assessment & Evaluation in Higher Education 33 (5): 547–557. doi:10.1080/02602930701698991.

- Freire, P. 1971. Unusual Ideas about Education. Paris: UNESCO - International Commission on the Development of Education.

- Freire, P. 2012. Pedagogía del Oprimido. 2nd ed. Madrid: Siglo XXI.

- Gore, J., J. Ladwig, W. Elsworth, and H. Ellis. 2009. Quality Assessment Framework: A Guide for Assessment Practice in Higher Education. Callaghan, NSW, Australia: The Australian Learning and Teaching Council. The University of Newcastle.

- Gulikers, J. T. M., T. J. Bastiaens, and P. A. Kirschner. 2004. “A Five-Dimensional Framework for Authentic Assessment.” Educational Technology Research and Development 52 (3): 67–85. doi:10.1007/BF02504676.

- Gulikers, J. T. M., T. J. Bastiaens, P. A. Kirschner, and L. Kester. 2006. “Relations between Student Perception of Assessment Authenticity, Study Approaches and Learning Outcomes.” Studies in Educational Evaluation 32 (4): 381–400. doi:10.1016/j.stueduc.2006.10.003.

- Hair, J. F., G. T. M. Hult, C. M. Ringle, and M. Sarstedt. 2017. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM). London: Sage.

- Häkkinen, P., S. Järvelä, K. Mäkitalo-Siegl, A. Ahonen, P. Näykki, and T. Valtonen. 2017. “Preparing Teacher-Students for Twenty-First-Century Learning Practices (PREP 21): A Framework for Enhancing Collaborative Problem-Solving and Strategic Learning Skills.” Teachers and Teaching 23 (1): 25–41. doi:10.1080/13540602.2016.1203772.

- Hawe, E., and H. Dixon. 2017. “Assessment for Learning: A Catalyst for Student Self-Regulation.” Assessment & Evaluation in Higher Education 42 (8): 1181–1192. doi:10.1080/02602938.2016.1236360.

- Henderson, M., T. Ryan, and M. Phillips. 2019. “The Challenges of Feedback in Higher Education.” Assessment & Evaluation in Higher Education 44 (8): 1237–1252. doi:10.1080/02602938.2019.1599815.

- Hortigüela Alcalá, D., D. Palacios Picos, and V. López Pastor. 2019. “The Impact of Formative and Shared or Co-Assessment on the Acquisition of Transversal Competences in Higher Education.” Assessment & Evaluation in Higher Education 44 (6): 933–945. doi:10.1080/02602938.2018.1530341.

- Ibarra-Sáiz, M. S., and G. Rodríguez-Gómez. 2014. “Participatory Assessment Methods: An Analysis of the Perception of University Students and Teaching Staff.” Revista de Investigación Educativa 32 (2): 339–361. doi:10.6018/rie.32.2.172941.

- Ibarra-Sáiz, M. S., and G. Rodríguez-Gómez. 2020. “Evaluating Assessment. Validation with PLS-SEM of ATAE Scale for the Analysis of Assessment Tasks.” RELIEVE - e-Journal of Educational Research, Assessment and Evaluation 26 (1). doi:10.7203/relieve.26.1.17403.

- Ibarra-Sáiz, M. S., G. Rodríguez-Gómez, and D. Boud. 2020. “Developing Student Competence through Peer Assessment: The Role of Feedback, Self-Regulation and Evaluative Judgement.” Higher Education 80 (1): 137–156. doi:10.1007/s10734-019-00469-2.

- Johnson, R. L., and G. B. Morgan. 2016. Survey Scales: A Guide to Development, Analysis, and Reporting. London: The Guilford Press.

- Kickert, R., M. Meeuwisse, K. M. Stegers-Jager, G. V. Koppenol-Gonzalez, L. R. Arends, and P. Prinzie. 2019. “Assessment Policies and Academic Performance within a Single Course: The Role of Motivation and Self-Regulation.” Assessment & Evaluation in Higher Education 44 (8): 1177–1190. doi:10.1080/02602938.2019.1580674.

- Kyndt, E., F. Dochy, K. Struyven, and E. Cascallar. 2011. “The Perception of Workload and Task Complexity and Its Influence on Students’ Approaches to Learning: A Study in Higher Education.” European Journal of Psychology of Education 26 (3): 393–415. doi:10.1007/s10212-010-0053-2.

- Leach, L., G. Neutze, and N. Zepke. 2001. “Assessment and Empowerment: Some Critical Questions.” Assessment & Evaluation in Higher Education 26 (4): 293–305. doi:10.1080/0260293012006345.

- McArthur, J. 2018. Assessment for Social Justice: Perspectives and Practices within Higher Education. London: Bloomsbury Academic.

- McDonald, F., J. Reynolds, A. Bixley, and R. Spronken-Smith. 2017. “Changes in Approaches to Learning over Three Years of University Undergraduate Study.” Teaching & Learning Inquiry 5 (2): 65–79. doi:10.20343/teachlearninqu.5.2.6.

- Nielsen, S., and E. H. Nielsen. 2015. “The Balanced Scorecard and the Strategic Learning Process: A System Dynamics Modeling Approach.” Advances in Decision Sciences 2015: 1–20. doi:10.1155/2015/213758.

- Nitzl, C., J. L. Roldán, and G. Cepeda. 2016. “Mediation Analysis in Partial Least Squares Path Modeling: Helping Researchers Discuss More Sophisticated Models.” Industrial Management & Data Systems 116 (9): 1849–1864. doi:10.1108/IMDS-07-2015-0302.

- Panadero, E., and M. Alqassab. 2019. “An Empirical Review of Anonymity Effects in Peer Assessment, Peer Feedback, Peer Review, Peer Evaluation and Peer Grading.” Assessment & Evaluation in Higher Education 44 (8): 1253–1278. doi:10.1080/02602938.2019.1600186.

- Panadero, E., H. Andrade, and S. Brookhart. 2018. “Fusing Self-Regulated Learning and Formative Assessment: A Roadmap of Where We Are, How We Got Here, and Where We Are Going.” The Australian Educational Researcher 45 (1): 13–31. doi:10.1007/s13384-018-0258-y.

- Pereira, D., M. A. Flores, and L. Niklasson. 2016. “Assessment Revisited: A Review of Research in Assessment and Evaluation in Higher Education.” Assessment & Evaluation in Higher Education 41 (7): 1008–1032. doi:10.1080/02602938.2015.1055233.

- Pitt, E. 2017. “Student Utilisation of Feedback: A Cyclical Model.” In Scaling up Assessment for Learning in Higher Education, edited by D. Carless, S. M. Bridges, C. K. Y. Chan, and R. Glofcheski, 145–158. Singapore: Springer.

- Ringle, C. M., S. Wende, and J. M. Becker. 2015. SmartPLS 3. Bönningstedt: SmartPLS. http://www.smartpls.com.

- Rodríguez-Gómez, G., and M. S. Ibarra-Sáiz. 2015. “Assessment as Learning and Empowerment: Towards Sustainable Learning in Higher Education.” In Sustainable Learning in Higher Education. Developing Competencies for the Global Marketplace, edited by M. Peris-Ortiz and J. M. Merigó Lindahl, 1–20. Innovation, Technology, and Knowledge Management. Cham: Springer International Publishing. doi:10.1007/978-3-319-10804-9_1.

- Sadler, D. R. 2016. “Three In-Course Assessment Reforms to Improve Higher Education Learning Outcomes.” Assessment & Evaluation in Higher Education 41 (7): 1081–1099. doi:10.1080/02602938.2015.1064858.

- Sambell, K., L. McDowell, and C. Montgomery. 2013. Assessment for Learning in Higher Education. London: Routledge.

- Stone-Romero, E. F., and P. J. Rosopa. 2008. “The Relative Validity of Inferences about Mediation as a Function of Research Design Characteristics.” Organizational Research Methods 11 (2): 326–352. doi:10.1177/1094428107300342.

- Strijbos, J., N. Engels, and K. Struyven. 2015. “Criteria and Standards of Generic Competences at Bachelor Degree Level: A Review Study.” Educational Research Review 14: 18–32. doi:10.1016/j.edurev.2015.01.001.

- Tan, K. H. K. 2012. Student Self-Assessment: Assessment, Learning and Empowerment. Singapore: Research Publishing. doi:10.1080/02602938.2013.769198.

- Whitelock, D. 2010. “Activating Assessment for Learning: Are We on the Way with Web 2.0?” In Web 2.0-Based E-Learning: Applying Social Informatics for Tertiary Teaching, edited by M. J. W. Lee and C. McLoughlin, 319–342. Hershey, PA: IGI Global.

- Wiggins, G., and J. McTighe. 1998. Understanding by Design. Alexandria, VA: Association for Supervision and Curriculum Development.

- Williams, J., and D. P. MacKinnon. 2008. “Resampling and Distribution of the Product Methods for Testing Indirect Effects in Complex Models.” Structural Equation Modeling: A Multidisciplinary Journal 15 (1): 23–51. doi:10.1080/10705510701758166.