Abstract

E-assessments are becoming increasingly common and progressively more complex. Consequently, the way in which these longer, more complex questions are designed and marked is critical. This article uses the NUMBAS e-assessment tool to investigate best practice for creating longer questions and their mark schemes on surveying modules taken by engineering students at Newcastle University. Automated marking enables calculation of follow through marks when incorrect answers are used in subsequent parts. However, awarding follow through marks with no further penalty for solutions being fundamentally incorrect leads to non-normally distributed marks. Consequently, it was found that follow through marks should be awarded at 25% or 50% of the total available to produce a normal distribution. Appropriate question design is vital to enable automated method marking in longer style e-assessment, with questions being split into multiple steps. Longer calculation questions split into too few parts led to all-or-nothing style questions and subsequently bi-modal mark distributions, whilst questions separated into too many parts provided too much guidance to students and so did not adequately assess the learning outcomes, leading to unnaturally high marks. To balance these factors, we found that longer questions should be split into approximately 3–4 parts, although this is application dependent.

Introduction

The concept of e-assessment has been around since the 1920s, when machines were used to mark multiple choice questions (Skinner 1958). Since then, the increase in the use of technology and advances in automated marking mean that e-assessment has become a cost-effective and convenient way to assess large student cohorts (Audette 2005). Furthermore, the introduction of the World Wide Web in the 1990s meant that e-assessment could become web-based, making it a more popular tool (Llamas-Nistal et al. 2013), mainly for multiple choice and/or short answer right or wrong questions (Stödberg 2012). However, more recently e-assessments have become increasingly sophisticated and complex (Boyle and Hutchison 2009).

Students often prefer e-assessment, with Donovan, Mader, and Shinsky (2007) reporting survey results in which 84% of students preferred e-assessment over paper assessment. Reasons for students liking e-assessment include the friendly interfaces, the use of games and simulations for some tests, and a more recreational environment for learning (Ridgway, McCusker, and Pead 2004). Williams and Wong (2009) showed that students prefer flexibility, i.e. when an assessment is not at a specific time and place, which can be facilitated by e-assessments. Furthermore, the flexibility of e-assessment is particularly beneficial in circumstances such as the Covid-19 pandemic, when assessment had to be undertaken remotely due to social distancing measures and the closure of university buildings. However, fully flexible e-assessment depends on students having access to their own computer or tablet and a fast, stable internet connection, although this is an ever-diminishing problem given the prevalence of such devices as tools for everyday tasks.

For assessors, the key benefits of e-assessment relate to the automation of marking. Firstly, automated (instantaneous) marking can save assessor time and therefore offers a potentially large cost saving for educational institutions. Secondly, depending on the type of assessment, the complexity of the mark scheme and the size of the cohort, marking consistency can be difficult to maintain by hand, a difficulty that automated marking overcomes. Finally, the level of personal feedback given to each student is severely limited when traditional assessments are given to large cohorts. Automated marking allows all work to be marked instantaneously and consistently, and can show students exactly where marks were gained and lost and, if desired by the assessor, what answers should have been provided, enhancing the personal feedback available. For degree courses with large numbers of students, these three factors are important for maintaining the timeliness and quality of assessment marking and feedback.

Despite the clear benefits of automated marking through e-assessment, there are several challenges compared with setting more traditional hand-marked written assessments (whether paper or digital). The first is that electronic platforms are continually evolving, and time must be committed to the set up and design of assessments, which can take longer than for a traditional assessment. Thus one has to weigh the benefits of automated marking against the assessment longevity, for example whether (with some minor modifications) the assessment can be used for multiple cohorts of students and/or in future years. The second challenge is that students can be unfamiliar with recent e-assessment platforms, so it is often advisable to provide similar practice or mock assessments before any formal assessment takes place, which adds to the assessor’s workload. Additionally, it can be challenging to set and automatically mark assessments of a more complex nature than multiple choice or standard right/wrong single response questions. In science, technology, engineering and mathematics (STEM) disciplines, the use of longer computational questions is common. Students are provided with a particular question or problem which may only require a single final answer (or set of inter-related final answers), but a series of tasks or interim computations must be undertaken to obtain the correct final answer. In such questions, the facility to award method marks to a student who has not correctly computed the final answer is important. Hence, the investigation and implementation of automated marking in e-assessment with appropriate method or follow through marks is the focus of this article.

There are two aspects to method marking that must be considered for automated marking of longer computational questions: (1) follow through marks and (2) breakdown of a longer question into interim steps. Follow through marking (FTM) is the principle of awarding marks for question parts that rely on previously answered values, where the previous value is incorrect. Students are therefore penalised for answering the early part of the question incorrectly, but not necessarily penalised for all subsequent dependent incorrect answers. The concept of awarding follow through marks is commonplace within several mathematically based subjects and at a range of levels from school through to university. FTM can be applied when marking by hand but is more labour intensive, as the marker must compute what the correct answer should have been given the incorrect input value. However, with the emergence of e-assessment the awarding of accurate follow through marks has become easier.

There appears to be very little literature on how FTM should be applied, either in e-assessment or when marking by hand. One approach, advocated by Ashton and Beevers (2002), proposes that students should not be penalised twice for the same mistake in a single question and thus, after the initial error has been penalised, all subsequent sections can be marked entirely correct if the only error is the use of an initial incorrect value. Such a lack of subsequent penalty may be acceptable in theoretical or conceptual assessments where the demonstration of the correct method is key. However, in subjects with a practical application where (cross) checks on the final answers are often possible, as is the case in many aspects of engineering, it may not be appropriate to award full marks for subsequent question parts that are fundamentally incorrect and readily identifiable by the student through checking procedures. For example, if the question involved the assessment of the structural integrity of a building, a final answer could be catastrophically incorrect but still be awarded near full marks with such an approach. Consequently, in this article we assess the effect of applying different FTM approaches and investigate the most appropriate way to apply FTM on some engineering problems, including the appropriateness of the penalty level.

In automated marking, student working can take any format and length and hence is not usually submitted (Ridgway, McCusker, and Pead 2004), making apportioning method marks difficult. A particular challenge to address is how to enable automated method marking without providing too much guidance to the student on how to tackle longer computational questions, to ensure that the desired student learning outcomes of identifying the necessary interim steps to obtain the final answer are realised. Therefore, in this article, we investigate how to enable the automated award of method marks in longer computational questions by breaking down the question into a series of steps and interim answers, and attempt to evaluate how many such steps are appropriate.

NUMBAS

To develop long computational e-assessments a highly flexible tool was required, which allowed complex questions to be designed with random variables, automated marking procedures and the ability to award follow through marks. Consequently, NUMBAS, a versatile open access e-learning software developed at Newcastle University in the School of Mathematics, Statistics and Physics (Perfect 2015), was selected. It was initially developed to allow students to undertake a series of diagnostic tests to assess their mathematics ability and performance when commencing their degree programme. However, it is now widely used for e-assessment with automated marking by multiple schools at Newcastle University, including for engineering subjects. Outside of Newcastle it has been used at a range of other universities such as Kingston University, Cork Institute of Technology and the University of Pretoria (Denholm-Price and Soan 2014; Rowlett 2014; Carroll et al. 2017; Loots, Fakir, and Roux 2017; Alruwais, Wills, and Wald 2018), as well as in UK schools from primary education to A-level teaching.

One benefit of NUMBAS is that it can be utilised to design a wide range of question types with unique values given to each student. The use of NUMBAS allows fast and accurate implementation of FTM, whereby questions can easily be designed to incorporate a student’s previous incorrect (part) answers into subsequent answers. NUMBAS was used throughout all the assessments in this article to evaluate how to award follow through marks and design longer questions with multiple steps.

Follow through marking

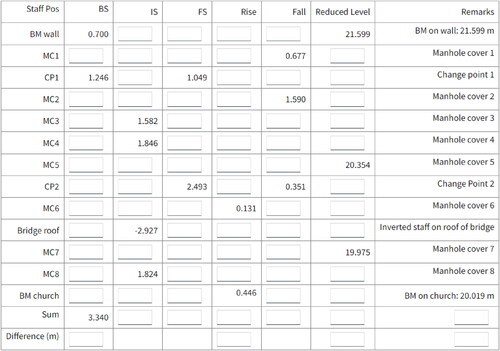

To assess the potential impact of FTM, a summative assessment completed by 57 Newcastle University undergraduate students was examined. The assessment consisted of two equally weighted questions on spirit levelling within surveying, which is a means of determining differences in heights of points above a datum such as mean sea level, a common requirement in construction and flood modelling, for example. The first question tested the student’s ability to correctly calculate a levelling loop using the rise and fall technique (e.g. Uren and Price 2018, 12–51). The submission consisted of a large table, shown in Figure 1, with students required to enter values in 30 of the empty boxes. The majority of the required answers followed on from previous answers, so FTM could be used if mistakes were made in earlier parts of the question. The second question focussed on the computation of a two peg test to assess whether a levelling instrument was correctly adjusted. This involved computing height differences between two pegs in two different instrument set up scenarios and using the difference to assess the error with the instrument (e.g. Uren and Price 2018, 12–51). This question had four parts, with the first three parts following on from each other, and was therefore eligible for FTM.
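To make the chain of dependent answers in a rise and fall computation concrete, the following is a minimal Python sketch rather than the assessment's actual NUMBAS implementation. The function names and staff readings are hypothetical, and the sketch assumes one backsight and one foresight per instrument set up with no intermediate sights. Each reduced level depends on the one before it, which is why an early slip propagates through the rest of the table.

```python
def rise_and_fall(start_level, readings):
    """Reduce a line of levels by the rise and fall method.

    readings is a list of (backsight, foresight) staff readings in metres,
    one pair per instrument set up (no intermediate sights, by assumption).
    Returns the reduced level of every point, beginning with start_level.
    """
    levels = [start_level]
    for backsight, foresight in readings:
        change = backsight - foresight  # positive is a rise, negative a fall
        levels.append(levels[-1] + change)
    return levels


def arithmetic_check(start_level, readings, levels, tol=1e-9):
    """Sum of backsights minus sum of foresights must equal last minus first level."""
    sum_bs = sum(bs for bs, _ in readings)
    sum_fs = sum(fs for _, fs in readings)
    return abs((sum_bs - sum_fs) - (levels[-1] - start_level)) < tol


# Hypothetical readings for a small closed loop starting at 50.000 m.
readings = [(1.500, 1.200), (0.800, 1.400), (1.250, 0.950)]
levels = rise_and_fall(50.000, readings)
misclosure = levels[-1] - levels[0]  # a closed loop should return to its start
print([round(level, 3) for level in levels], round(misclosure, 3))
```

The arithmetic check is the kind of cross-check on a final answer that a student can carry out independently of the marking.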

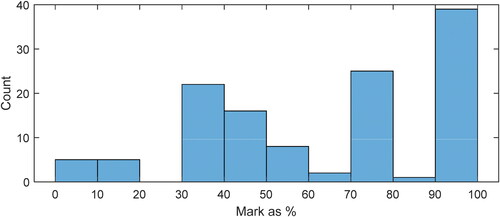

FTM was trialled for the two-question levelling assessment under the following criteria: (1) 100% FTM, which simulates an existing convention of applying no subsequent penalty for using incorrect values, (2) 0% FTM, which is equivalent to no follow through marks being awarded, (3) 25% FTM, (4) 50% FTM and (5) 75% FTM. The 25%, 50% and 75% FTM criteria apply varied penalties for the use of incorrect values, awarding fewer marks than to students who achieve the fully correct solution. For example, suppose a student gets part A, worth four marks, incorrect and part B, also worth four marks, incorrect, but the only reason part B is incorrect is the use of the incorrect value from part A. For part B they will be awarded four marks with 100% FTM, three marks with 75% FTM, two marks with 50% FTM, one mark with 25% FTM and no marks with 0% FTM. A comparison of the impact of the different FTM criteria in terms of marks for the 57 students attempting the levelling assessment has been tabulated (Table 1) and plotted as histograms (Figure 2).
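The marking rule in the example above can be sketched in a few lines of Python. This is an illustrative sketch only: the function name, its arguments and the tolerance are assumptions made here, not part of NUMBAS or of the mark scheme described in the article. The follow through answer is the value the correct method yields when fed the student's own earlier answer.

```python
import math


def mark_dependent_part(student_answer, correct_answer, follow_through_answer,
                        part_marks, ftm_fraction, tol=1e-6):
    """Mark a part that depends on an earlier answer.

    follow_through_answer is what the correct method gives when it is
    applied to the student's own (possibly wrong) earlier answer.
    """
    if math.isclose(student_answer, correct_answer, abs_tol=tol):
        return float(part_marks)          # fully correct
    if math.isclose(student_answer, follow_through_answer, abs_tol=tol):
        return part_marks * ftm_fraction  # right method, wrong input value
    return 0.0                            # wrong for some other reason


# Hypothetical part B worth four marks: the correct answer is 10.0, but the
# student's wrong part A value carried through the correct method gives 12.0.
for fraction in (1.0, 0.75, 0.5, 0.25, 0.0):
    awarded = mark_dependent_part(12.0, 10.0, 12.0, 4, fraction)
    print(f"{fraction:.0%} FTM: {awarded} of 4 marks")
```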

Figure 2. Histograms for a range of follow through marking (FTM) approaches applied to the levelling assessment.

Table 1. Impact of different follow through marking (FTM) weightings on the levelling assessment marks, which are listed as marks out of 100.

The distribution of marks on an assessment can vary, especially when the number of students is low. However, in fair and effective assessments more students will achieve a mark near the group mean, with gradually fewer students obtaining marks either side of this mean. This distribution can be approximated by a normal distribution and, although low cohort numbers can affect the accuracy of this approximation, the effectiveness of the assessments in this article will be judged on their ability to produce normally distributed cohort marks. To validate this expectation of normally distributed marks, the overall module marks for students on two Newcastle University stage 1 surveying modules, with an average of 30 and 108 students each, were evaluated over 10 years. Nineteen of the 20 overall module mark profiles considered followed a normal distribution at 95% confidence according to a Shapiro-Wilk normality test (Shapiro and Wilk 1965): the only mark profile failing this test was for a module delivery in 2020 at the height of the Covid-19 pandemic, when assessments had to be drastically changed with only a few weeks’ notice.

The marks for the levelling assessment under each of the five conditions were analysed to evaluate whether they were normally distributed according to the Shapiro-Wilk test. In this test, the null hypothesis is that the data are normally distributed: the larger the P value, the less evidence there is against normality, and P values greater than 0.05 were taken here to indicate normally distributed data at 95% confidence. This test showed that using 75% FTM and 100% FTM produced non-normally distributed results, with marks being skewed towards the higher end with means of ∼65% (Table 1). However, 0%, 25% and 50% FTM all produced marks which were normally distributed, with means between 60% and 63% (Table 1). The 0% FTM approach does provide a normal distribution of marks but does not distinguish between those students who make one early mistake and those who make multiple mistakes, which was important for our levelling assessment, and therefore we discounted the 0% FTM approach. Table 1 and Figure 2 show that weighting the FTM too highly resulted in skewed mark distributions, tending to elevated scores. Consequently, we concluded that 25% or 50% FTM should be applied. For the levelling assessment question, we used 25% FTM and this convention has subsequently been applied to further assessments within surveying at Newcastle University, including those discussed in the remainder of this article.
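The decision rule described above can be reproduced with a short Python sketch. The article does not state which software was used for the test, so the use of SciPy's `scipy.stats.shapiro` here is an assumption, as are the helper name and the example marks, which are invented purely for illustration and are not the cohort data.

```python
from scipy import stats


def shapiro_wilk_decision(marks, alpha=0.05):
    """Run a Shapiro-Wilk test and apply the P > alpha rule used in the text.

    Returns (p_value, is_consistent_with_normal).
    """
    statistic, p_value = stats.shapiro(marks)
    p_value = float(p_value)
    return p_value, p_value > alpha


# Hypothetical marks out of 100 (not data from the levelling assessment).
example_marks = [35, 42, 48, 51, 55, 58, 60, 61, 63, 64, 66, 68, 70, 73, 77, 82, 90]
p_value, consistent = shapiro_wilk_decision(example_marks)
print(f"P = {p_value:.3f}; treated as normally distributed: {consistent}")
```

Running the same function on the marks recomputed under each FTM weighting would give one P value per weighting for comparison against the 0.05 threshold.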

Question design in e-assessment

When designing e-assessments it is crucial to consider that questions designed to be marked by hand do not necessarily translate well into e-assessment questions. This is especially true for longer style questions that require multiple stages to be completed by the student as is common within engineering assessment. The difficulty in how to award method marks automatically in e-assessment for those multiple stages increases the design complexity for longer style questions.

The main approach described in the literature to allow students to be awarded method marks during longer style questions is to split the question into multiple smaller parts (McGuire et al. 2002; Ashton et al. 2006). In these studies students were given two options: (1) answer the question in one large part with the potential of achieving full marks, or (2) have the question broken down into steps with a penalty applied (approximately 25%) as a consequence of being provided with more guidance. However, this approach requires the student to predict their ability to answer the question correctly or accept the help of multiple steps. Additionally, these studies were based on invigilated examinations where student collusion is not possible. For coursework assessments the potential for collusion is greater: only one student in a group needs to accept a capped mark in return for the additional help, after which the rest of the group can see the multiple steps and potentially obtain full marks, and hence there is a potential challenge of maintaining assessment integrity. In our study, students were assessed via coursework with completion required over several weeks. Therefore, to achieve a fair and consistent assessment all students had to receive the same amount of guidance and opportunity to be awarded method marks. Consequently, the assessments in this article gave all students the same questions and number of steps, although with unique quasi-randomly generated values, with no further hints available. Hence, the challenge was to ascertain the correct number of steps.

To evaluate the impact of varying the number of interim steps provided on coursework style assessments, the marks from automatically marked e-assessments on civil engineering and geospatial engineering degrees at Newcastle University were analysed. Each assessment was unique, with randomly generated variables for each student to reduce the potential for cheating and collusion (Alin, Arendt, and Gurell 2022). The first two assessments that were considered centred on the topics of trilateration (a method of determining the Easting and Northing of a point by measuring horizontal distances to two or more points of known Easting and Northing) and horizontal polar coordinate computations, whereby the Easting and Northing of a point are computed if the bearing and distance are known from another point of known Easting and Northing (e.g. Schofield and Breach 2007, 6–11). In both assessments, the main question required the calculation of Easting and Northing coordinates of the point of interest with no intermediate steps given to the students. This allowed no method marks to be awarded and provided no guidance to the students on the required approach to compute the solution.
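For readers unfamiliar with the polar coordinate computation, the standard relationship can be sketched as follows. This is an illustrative Python sketch with a hypothetical function name and example values; it assumes a whole circle bearing measured clockwise from grid north, and it is not the assessment question itself.

```python
import math


def polar_to_grid(easting, northing, bearing_deg, distance):
    """Coordinates of a new point from a known point, a bearing and a distance.

    bearing_deg is a whole circle bearing in degrees, clockwise from grid north.
    """
    bearing = math.radians(bearing_deg)
    new_easting = easting + distance * math.sin(bearing)
    new_northing = northing + distance * math.cos(bearing)
    return new_easting, new_northing


# Hypothetical example: 100 m due east of a station at (1000 m E, 2000 m N).
e, n = polar_to_grid(1000.0, 2000.0, 90.0, 100.0)
print(round(e, 3), round(n, 3))
```

Although the computation is short, a student who does not know the relationship has no route to partial credit when only the final Easting and Northing are requested.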

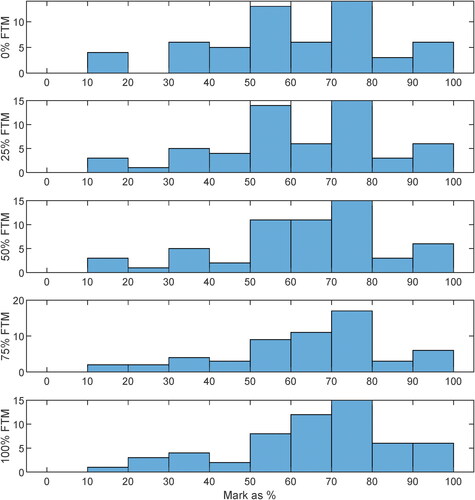

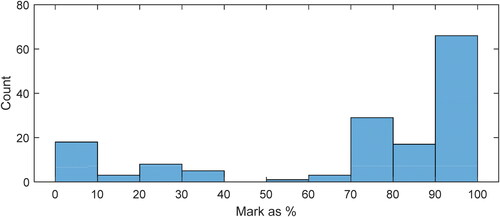

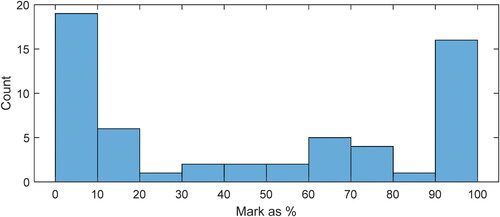

Assessment A on trilateration was undertaken by 150 students, who achieved a mean mark of 72% with a standard deviation of 35%. Assessment B on polar coordinate computation was completed by 58 students and had a mean of 46% and a standard deviation of 42%. For both of these assessments, the results are polarised, as highlighted by the large standard deviations (see Figures 3 and 4). Effectively, providing no intermediate steps resulted in bi-modal distributions, with the students who could answer the questions correctly achieving close to full marks and those who could not achieving close to zero marks. As discussed earlier, fair and effective assessment will have an approximately normal mark distribution, unlike the bi-modal distributions in Figures 3 and 4.

Figure 3. Histogram of student marks for coursework Assessment A – Trilateration (no interim steps) undertaken by 150 civil engineering and geospatial engineering students at Newcastle University.

Figure 4. Histogram of student marks for coursework Assessment B – Polar Coordinate Computation (no interim steps) undertaken by 58 geospatial engineering students at Newcastle University.

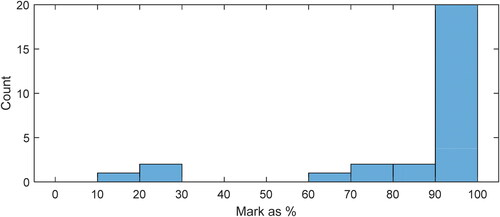

A subsequent assessment was designed to combat polarised assessment marks by providing intermediate steps. Assessment C was aimed at testing the student’s ability to solve a question about resection (a technique by which the Easting and Northing of an unknown point may be determined by observing horizontal angles to three or more points of known Easting and Northing) and, similarly to Assessments A and B, students were provided with simulated observation data from known stations. However, unlike in Assessments A and B, and to attempt to account for a resection computation being more complicated than a trilateration or polar coordinate computation, in Assessment C nine intermediate steps were provided to the students to allow them to achieve method marks even if the final correct answer was not obtained. If an error was made in an earlier part of the question, 25% FTM was applied. Assessment C was taken by 28 geospatial engineering students at Newcastle University: the mean mark awarded was 87%, the standard deviation of the marks was 25%, and 24 of the 28 students achieved marks greater than 70% (Figure 5). This demonstrated that providing students with so many intermediate steps led to unnaturally high marks, with the distribution not being normal (Figure 5). By providing nine steps the students were heavily guided on the correct approach to solving the question, meaning they were not adequately assessed on their independent understanding and hence could not demonstrate independent attainment of the intended learning outcomes.

Figure 5. Histogram of student marks for Assessment C – Resection (nine interim steps) undertaken by 28 geospatial engineering students at Newcastle University.

A further assessment question was designed to attempt to find a balance between the concerns raised by providing too few and too many steps. Assessment D tested the students on the topic of mapping scale factors. This required students to compute the physical ground distance between two points given their Ordnance Survey National Grid Easting and Northing. To do this the students had to compute the mapping scale factor at each point and use this together with the height above mean sea level of the points to find the ground distance (Schofield and Breach 2007, 306–311), rather than the distance indicated directly on the map. In this assessment the question was split into three intermediate steps to help guide the students and allow method marks to be awarded. The assessment was completed by 123 civil engineering students at Newcastle University and produced a mean mark of 64% with a standard deviation of 30%. The results show that there is still a large cluster of students getting close to full marks and some students who get close to zero marks. However, there is a more even spread of students achieving marks in the mid-range from 30% to 80%.
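The kind of multi-step computation tested in Assessment D can be illustrated with a simplified Python sketch. Everything here is an assumption made for illustration rather than the method or the three steps set in the assessment: the function names are hypothetical, the local scale factor uses a common spherical approximation, the Earth radius is a rounded assumed value, and the scale factor and height are simply averaged over the two end points.

```python
import math

F0 = 0.9996012717   # National Grid scale factor on the central meridian
E0 = 400_000.0      # Easting of the central meridian (false origin), metres
R = 6_380_000.0     # assumed mean Earth radius in metres (rounded)


def local_scale_factor(easting):
    """Approximate local scale factor at a given National Grid Easting."""
    return F0 * (1.0 + (easting - E0) ** 2 / (2.0 * R ** 2))


def ground_distance(e1, n1, h1, e2, n2, h2):
    """Approximate ground distance between two grid points with heights h1, h2."""
    grid_distance = math.hypot(e2 - e1, n2 - n1)          # distance on the map
    scale = (local_scale_factor(e1) + local_scale_factor(e2)) / 2.0
    mean_height = (h1 + h2) / 2.0
    elevation_factor = R / (R + mean_height)              # ground to sea level
    return grid_distance / (scale * elevation_factor)


# Hypothetical points 1 km apart on the grid, roughly 100 m above sea level.
print(round(ground_distance(420_000.0, 560_000.0, 95.0,
                            420_600.0, 560_800.0, 105.0), 3))
```

The sketch separates naturally into a scale factor step, a height correction step and a final distance step, which shows how a question of this type lends itself to a small number of interim answers.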

Conclusions

The introduction of e-assessment using the NUMBAS software has led to a number of benefits within engineering at Newcastle University, notably marking consistency and substantially reduced staff marking time, while using random variables in the questions has enabled each student to attempt their own individualised assessment with data/values unique to them, hence reducing the opportunity for plagiarism. Collusion is not totally eliminated, in that one student can still provide another with their solutions and hence share the method, but the computations themselves must be carried out separately for every student’s individualised assignment. The automated marking has enabled instantaneous individual student feedback, with each student getting access to how every mark was awarded, which led to more than 60% of students stating (when surveyed) that they preferred using NUMBAS over paper-based assessment, compared with only 19% who preferred the paper-based assessment.

Designing e-assessment questions is challenging: it is important to balance the awarding of follow through marks alongside the design of longer style questions. It was found that while awarding follow through marks can mean students are not penalised too heavily for early and often fundamental mistakes, awarding full follow through marks is not necessarily appropriate when checks on their final answers are possible, and leads to non-normally distributed marks. The award of follow through marks at 100% or 75% resulted in non-normally distributed results while 0%, 25% and 50% all resulted in normal distributions. 0% follow through marks was discounted due to the inability to distinguish between a single error and multiple errors. Consequently, we suggest follow through marks be awarded at 25% or 50% of the original available mark.

Question design in automated marking e-assessment was found to be different from paper-based assessment and an important factor in the marks students achieved in a particular assessment. The awarding of method marks is difficult within automatically-marked e-assessments and can only be done effectively by splitting longer questions into a series of smaller intermediate steps. Our analysis of four assessments undertaken by civil engineering and geospatial engineering students at Newcastle University showed that using too few steps resulted in polarised mark distributions with students getting very high or very low marks and little in between. Too many intermediate steps guided the students too heavily and resulted in artificially high marks. Therefore, for the surveying and mapping application considered here, approximately three intermediate steps allowed a balance of awarding some method marks while providing an appropriate level of student guidance. For other applications and question types, the exact number of intermediate steps may change, and this needs to be determined empirically, including refinement over time.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Notes on contributors

Christopher Pearson

Dr Christopher Pearson is a research associate in the School of Engineering at Newcastle University. He teaches engineering and offshore surveying to undergraduate students on courses such as Geospatial Engineering, Civil Engineering, Earth Science and Town Planning. His primary research interests are GNSS geodesy and pedagogical approaches within surveying.

Nigel Penna

Dr Nigel Penna is a senior lecturer in the School of Engineering at Newcastle University. He teaches surveying, geodesy and mathematical techniques to undergraduate GIS, Geospatial Engineering, Civil Engineering, Physical Geography and Town Planning students. His principal research interests are GNSS geodesy and its scientific and engineering applications.

References

- Alin, P., A. Arendt, and S. Gurell. 2022. “Addressing Cheating in Virtual Proctored Examinations: Toward a Framework of Relevant Mitigation Strategies.” Assessment & Evaluation in Higher Education 47: 1–14. doi:10.1080/02602938.2022.2075317

- Alruwais, N., G. Wills, and M. Wald. 2018. “Advantages and Challenges of Using e-Assessment.” International Journal of Information and Education Technology 8 (1): 34–37. doi:10.18178/ijiet.2018.8.1.1008

- Ashton, H. S., and C. E. Beevers. 2002. “Extending Flexibility in an Existing on-Line Assessment System.” In Proceedings 6th International CAA Conference, Leicestershire, UK: Loughborough University.

- Ashton, H. S., C. E. Beevers, A. A. Korabinski, and M. A. Youngson. 2006. “Incorporating Partial Credit in Computer-Aided Assessment of Mathematics in Secondary Education.” British Journal of Educational Technology 37 (1): 93–119. doi:10.1111/j.1467-8535.2005.00512.x

- Audette, B. 2005. “Beyond Curriculum Alignment: How One High School is Using Student Assessment Data to Drive Curriculum and Instruction Decision Making.” http://assets.pearsonglobalschools.com/asset_mgr/legacy/200727/2005_07Audette_420_1.pdf.

- Boyle, A., and D. Hutchison. 2009. “Sophisticated Tasks in e-Assessment: What Are They and What Are Their Benefits?” Assessment & Evaluation in Higher Education 34 (3): 305–319. doi:10.1080/02602930801956034

- Carroll, T., D. Casey, J. Crowley, K. Mulchrone, and Á. Ní Shé. 2017. “Numbas as an Engagement Tool for First-Year Business Studies Students.” MSOR Connections 15 (2): 42–50. doi:10.21100/msor.v15i2.410

- Denholm-Price, J., and P. Soan. 2014. “Mathematics eAssessment Using Numbas: Experiences at Kingston with a Partially ‘Flipped’ Classroom.” In HEA STEM Annual Learning and Teaching Conference 2014, Edinburgh, 30 April–1 May.

- Donovan, J., C. Mader, and J. Shinsky. 2007. “Online vs. Traditional Course Evaluation Formats: Student Perceptions.” Journal of Interactive Online Learning 6 (3): 158–180.

- Llamas-Nistal, M., M. J. Fernández-Iglesias, J. González-Tato, and F. A. Mikic-Fonte. 2013. “Blended e-Assessment: Migrating Classical Exams to the Digital World.” Computers & Education 62: 72–87. doi:10.1016/j.compedu.2012.10.021

- Loots, I., R. Fakir, and A. Roux. 2017. “An Evaluation of the Challenges and Benefits of e-Assessment Implementation in Developing Countries: A Case Study from the University of Pretoria.” Cape Town, 418–420.

- McGuire, G. R., M. A. Youngson, A. A. Korabinski, and D. McMillan. 2002. “Partial Credit in Mathematics Exams: A Comparison of Traditional and CAA Exams.” In Proceedings of the 6th International CAA Conference, 223–230. Loughborough: Loughborough University.

- Perfect, C. 2015. “A Demonstration of Numbas, an e-Assessment System for Mathematical Disciplines.” In CAA Conference, 1–8. http://www.numbas.org.uk/wp-content/uploads/2015/07/caa2015_numbas.pdf.

- Ridgway, J., S. McCusker, and D. Pead. 2004. Literature Review of e-Assessment. Bristol, UK: Nesta Future Lab.

- Rowlett, P. 2014. “Development and Evaluation of a Partially-Automated Approach to the Assessment of Undergraduate Mathematics.” In Proceedings of the 8th British Congress of Mathematics Education, edited by S. Pope, 295–302. London: British Society for Research into Learning Mathematics.

- Schofield, W., and M. Breach. 2007. Engineering Surveying. Boca Raton, FL: CRC Press.

- Shapiro, S. S., and M. B. Wilk. 1965. “An Analysis of Variance Test for Normality (Complete Samples).” Biometrika 52 (3–4): 591–611. doi:10.2307/2333709

- Skinner, B. F. 1958. “Teaching Machines: From the Experimental Study of Learning Come Devices Which Arrange Optimal Conditions for Self-Instruction.” Science (New York, N.Y.) 128 (3330): 969–977.

- Stödberg, U. 2012. “A Research Review of e-Assessment.” Assessment & Evaluation in Higher Education 37 (5): 591–604. doi:10.1080/02602938.2011.557496

- Uren, J., and B. Price. 2018. Surveying for Engineers. London: Bloomsbury Publishing.

- Williams, J. B., and A. Wong. 2009. “The Efficacy of Final Examinations: A Comparative Study of Closed-Book, Invigilated Exams and Open-Book, Open-Web Exams.” British Journal of Educational Technology 40 (2): 227–236. doi:10.1111/j.1467-8535.2008.00929.x