Abstract

Prospective graduate students are usually required to have attained an undergraduate degree in a related field and high prior grades to gain admission. There is consensus that some relatedness between the students’ undergraduate and graduate programs is required for admission. We propose a new measurement for this relatedness using cosine similarity, a method that has been tried and tested in fields such as bibliometric sciences and economic geography. We used this measurement to calculate the relatedness between a student’s undergraduate and graduate program, and tested the effect of this measure on study success. Our models show that there is an interaction effect between undergraduate grades and cognitive relatedness on graduate grades. For bachelor students with high cognitive relatedness, the relationship between bachelor grades and master grades is about twice as strong compared to bachelor students with low cognitive relatedness. This is an important finding because it shows that undergraduate grades, the most common admission instrument in higher education, have limited usefulness for students with relatively unrelated undergraduate programs. Admissions officers need to carefully assess their admission instruments for such students and rely less on grades when it comes to the decision to admit students.

Introduction

In recent years, the number of students applying to graduate and master programs has drastically increased (Darolia, Potochnick, and Menifield 2014; Smyth 2016). This trend, combined with growing student mobility, has made student populations larger and more diverse than ever (Beine, Noël, and Ragot 2014; Schwager et al. 2015). This greatly complicates the decisions that admission officers have to make: they need to assess more students with more diverse educational backgrounds, which can lead to students being wrongfully accepted or rejected (van Ooijen-van der Linden et al. 2017). To prevent this from happening, empirical studies and meta-analyses have studied the predictive validity of various admission instruments (e.g. Kuncel, Kochevar, and Ones 2014; Kuncel, Hezlett, and Ones 2001; Westrick et al. 2015b). The majority of these studies focus on admission instruments that study how cognitive skills predict student success, often measured as degree attainment or average grades.

A cognitive admission instrument that is commonly used in practice but receives little scientific attention is the relatedness between previous education and the educational program the student is applying to. This is remarkable since it is widely accepted that domain-specific knowledge influences student success (Dochy 1994). Cognitive relatedness (also known as cognitive proximity) is a concept that has received much attention in disciplines that study innovation and learning, such as economic geography, bibliometrics and management. It expresses the similarity in knowledge base between individuals, groups and organizations (Nooteboom 2000; Boschma 2005). In the case of graduate student admissions, the term refers to the relatedness between the undergraduate program of students and the graduate program they apply for. Cognitive relatedness is a particularly important admission instrument in graduate programs because prospective students often come from a wide variety of undergraduate programs. This contrasts with applications to undergraduate programs, wherein students come from high schools with more comparable curricula. A student applying to a graduate program usually needs to have a degree in an undergraduate program or major in a related subject to prove that they possess the knowledge to graduate within a certain amount of time. However, the influence of the relatedness of the students’ knowledge base on their performance in graduate programs has not yet been studied. The cognitive relatedness of previous education might also affect the predictive value of commonly used admission indicators, such as grade attainment, as the knowledge base that previous grades represent becomes less relevant as cognitive relatedness decreases. Cognitive relatedness between undergraduate and graduate programs can influence student success both directly and indirectly.

The student admission literature has dedicated some attention to the relatedness of prior knowledge and educational background to student success. For example, Hailikari, Nevgi, and Lindblom-Ylänne (2007) concluded in their study on mathematics students that ‘the knowledge students bring to the course has a significant facilitating effect on learning’ (p. 330) and is a strong predictor of the students’ grades. Arzuman, Ja’afar, and Fakri (2012) found that students with a background in biology perform better in graduate medical programs than other students. Dochy, de Rijdt, and Dyck (2002) report that the amount and quality of prior knowledge explains a large part of the variance of students’ grades. These studies show that the relatedness of prior knowledge is most likely a relevant admission criterion. However, all these studies compared groups of students with one specific educational background to groups without this background. They did not test the effect of how much prior knowledge or education is related to the follow-up program for which success is measured. Such a binary approach does not capture the great variety in the cognitive relatedness of prior education programs to graduate programs for which students apply. A more detailed measure is needed. In this article, we test such a measure in the form of the cosine similarity between graduate and undergraduate programs (Van Eck and Waltman 2009). Cosine similarity is a common measure from bibliometric science to measure the cognitive relatedness between two fields of knowledge, usually expressed by patents (Alstott et al. 2017) or scientific publications (Rafols 2014). We apply this measure in the context of higher education studies by answering the following research question: How does the cognitive relatedness between previous education and graduate education predict the success of graduate students?

To answer this question, we collected data about master applications from a multidisciplinary geoscience faculty at a large university in the Netherlands. Our results show that the more related a bachelor’s program is to a master’s program, the more predictive grades achieved in the bachelor’s program are for grades obtained in the master’s program. Previous grades become a less valid admission instrument as cognitive relatedness decreases. This is an important finding, as grades in bachelor’s programs are very commonly used as an admission instrument. Admissions officers need to account for the cognitive relatedness between a student’s bachelor’s and master’s programs when using grades to predict student success. We add methodological novelty to the field of higher education research by applying concepts from economic geography and methods from bibliometric sciences to quantify this cognitive relatedness. These concepts and methods can also be applied in other educational contexts, such as diversity of student teams and cohorts and teacher–student relatedness.

Theoretical framework

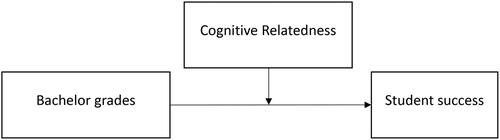

Figure 1 displays our main concepts and how we hypothesize their relationships.

Student success

In this article, we define student success as ‘academic achievement, engagement in educationally purposeful activities, satisfaction, acquisition of desired knowledge, skills, and competencies, persistence, and attainment of educational objectives’ (Kuh et al. 2007, p. 7). Most often, students are admitted to programs when they are expected to succeed. One’s conception of what a successful student is influences the way in which students are selected and which admission instruments are used. In higher education, student success has traditionally been associated with academic success, which is the achievement of desired learning outcomes (see, for instance, Kuh et al. 2011). This is often operationalized by degree attainment, time needed to obtain a degree or grades (de Boer and Van Rijnsoever 2022). These are also the measures that we will employ in this study. Grades are the most commonly used indicator, as they are the primary method of indicating how well the student performed in tests and coursework and reflect students’ self-regulatory competencies (Galla et al. 2019). Grades are awarded to students throughout the program, providing consistent insight into the applicant’s cognitive skills.

Cognitive relatedness

The cognitive relatedness of prior education can influence one’s success in master’s programs. To understand the concept of cognitive relatedness, we first need to discuss prior knowledge. This concept can be defined as ‘the whole of a person’s actual knowledge that: (a) is available before a certain learning task, (b) is structured in schemata, (c) is declarative and procedural, (d) is partly explicit and partly tacit, (e) and is dynamic in nature and stored in the knowledge base’ (Dochy 1994, p. 4699). Studies have shown that having prior knowledge in an area facilitates the learning of related new knowledge (Dochy, Segers, and Buehl 1999; Kaplan and Murphy 2000). Students with prior knowledge generally reflect more on their learning processes and are better able to contextualize new content and prioritize learning goals (Taub et al. 2014). Prior knowledge also has a positive effect on students’ grades and test scores (Dochy, Segers, and Buehl 1999; Hailikari, Katajavuori, and Lindblom-Ylanne 2008; Lin and Liou 2019). In the student admissions context, prior knowledge is normally measured in a binary manner: the student either has or does not have it. However, relatedness thinking in management, economic geography, and science, technology and innovation studies can provide a more fine-grained measurement. These fields use the concept of cognitive relatedness, which can be defined as the similarities in the way actors perceive, interpret, understand and evaluate the world (Wuyts et al. 2005, p. 278). In other words, it refers to the extent to which two actors share the same knowledge base (Nooteboom 2000).

One could apply this view on relatedness between education programs. Many master’s programs require students to have a bachelor’s degree in a related subject. Cognitive relatedness expresses how much the subject is related to the master’s program. The higher this relatedness, the better the students are able to absorb and interpret the new knowledge offered in master’s programs. This will increase the chances of success in the program in terms of receiving higher grades and the ability to attain a degree more quickly. This led to our first hypothesis:

H1: Cognitive relatedness has a positive influence on master student success in terms of: (a) degree attainment, (b) timely graduation, (c) average grades attained in the master’s program, and (d) grades attained in the first year of the master’s program.

Bachelor’s program grades

Grades have been one of the most common admission instruments (Steenman 2018) and a consistently good predictor of student success (Kuncel, Credé, and Thomas 2005, 2007; Westrick et al. 2015b). This is because, independent of the content of the bachelor’s program, grades reflect self-regulatory competencies (Galla et al. 2019). Grades further reflect the students’ overall cognitive ability and intelligence (Kuncel, Credé, and Thomas 2007). All are needed for students to graduate. Hence, we formulated the following hypothesis:

H2: Bachelor’s program grades have a positive influence on success as a master’s program student in terms of: (a) degree attainment, (b) timely graduation, (c) average grades attained in the master’s program, and (d) grades attained in the first year of the master’s program.

The interaction between cognitive relatedness and bachelor’s program grades

In addition to these two direct effects, we also expect an interaction effect between cognitive relatedness and bachelor’s program grades when it comes to predicting student success. This is because grades in cognitively related bachelor programs indicate the degree to which the student is knowledgeable about the contents of the master’s program. Students who have received high grades in a strongly related bachelor’s program are thus better able to absorb and interpret the new knowledge offered in their master’s program. Students with high grades in their bachelor’s program could be more motivated to pursue master’s programs in the same area because they want to continue learning in an area where they have previously been successful (Sojkin, Bartkowiak, and Skuza 2012). Hence, we formulated the following hypothesis:

H3: Cognitive relatedness positively affects the relationship between bachelor’s program grades and success as a master’s program student in terms of: (a) degree attainment, (b) timely graduation, (c) average grades attained in the master’s program, and (d) grades attained in the first year of the master’s program.

Control variables

In this study, we also include three control variables that can, according to the existing literature, have an effect on our dependent and independent variables.

First, we controlled for the educational institutes the student attended before applying to their master’s program. The literature shows that student success is not only explained by the skills and knowledge of the students (Pritchard and Wilson 2003) but also depends on their academic and social experience (Tinto, Goodsell, and Russo 1993). Academic experiences include interaction with staff and faculty both inside and outside the classroom, as well as formal and informal activities or interactions with peers during their time at university (Townsend and Wilson 2006). These experiences contribute to a student’s sense of belonging to the institute, which has been found to have a positive influence on student retention. There is evidence in the academic literature for the so-called ‘transfer shock’, where students who enter university from a different kind of institute need time to adjust, resulting in lower grades (House 1989; Rhine, Milligan, and Nelson 2000). Master’s students who completed their bachelor’s degree at the same institution have a higher sense of belonging compared to new entrants as they are already familiar with the institutional routines and faculty staff, which has a positive influence on student success. They are also likely to have high cognitive relatedness, given that their master’s program often overlaps with, and builds on, their bachelor’s program.

Finally, we controlled for the demographic variables age and gender. Age and gender have a well-reported influence on student success, with multiple studies finding that older students and female students perform better (Ofori 2000; Cuddy, Swanson, and Clauser 2008; Adam et al. 2015). We also know that demography has an influence on the cognitive area, and therefore, cognitive relatedness, in which students are active. For example, male students are still overrepresented in STEM programs (Alon and Diprete 2015; Gomez Soler, Abadía Alvarado, and Bernal Nisperuza 2020). The completion of study programs varies in length between academic disciplines, which means that cognitive relatedness has a relationship with student age, as students from disciplines that take longer to graduate are older when they start with their master’s program.

Methods

We sought and gained approval for the study and data management plan from the ethics committee of our faculty. Only data relevant to the research was extracted. All data were anonymized.

Data collection

We collected data about student applications at a large multidisciplinary faculty at a university in the Netherlands. We did this for four cohorts of students who started their program between 2014 and 2017. All students included in the sample started one of 10 two-year, English-taught master’s programs offered at the faculty. The programs are in the fields of geology, geochemistry, geophysics, hydrology, economic geography, geographical planning, marine science, innovation science, sustainability, sustainable business and energy, and therefore cover the natural sciences or the social sciences to varying degrees.

To be considered, students are required to have enough domain-specific knowledge. They can show this by having completed a related bachelor’s program and, in some cases, an additional tailor-made pre-master’s program. Students provided the admission office with a list of grades attained during their bachelor’s degree, which was also used to evaluate the amount of domain-specific knowledge. Finally, students provided their resume, a personal application essay, and a letter of recommendation. Each admission decision was made on a case-by-case basis.

The time window between 2014 and 2017 provided sufficient time for students to complete their master’s programs by the time data collection began. After data cleaning, the sample consisted of 2701 students from all over the world. Of these students, 650 dropped out at some point in their program. Despite our efforts, we were unable to fully complete the data for each student. The number of students who finished their master’s program and for whom all data were available is 1154. The number of students who entered the program and for whom we were able to reconstruct a complete admission file was 1307.

Measurement

Student success

We measured student success in four ways. First, whether students exited their program with a degree. We call this binary variable ‘degree attainment’. Second, timely graduation. This was measured by the number of days it took the student to complete the master’s program. We then multiplied the number of days by −1 so that a higher value corresponds to higher study success. The third and fourth measures consist of the students’ attained grades during the program. Students progress at different speeds throughout their program and often need to adjust to a new master’s program and institute (Myles and Cheng 2003; Arzuman, Ja’afar, and Fakri 2012). Therefore, it is important to measure grades at various points in the study program. As an indicator for grades, we used the average grade of the student during the entire master’s program and their average grades during the first year. All programs fit most of their coursework into the first year, whereas the second year consists mostly of internships and a thesis.

Cognitive relatedness

A methodological innovation of this study is that we rely on the cosine similarity measure from bibliometrics to measure cognitive relatedness. The cosine similarity is the most commonly used similarity measure in the field of bibliometrics (and information sciences), due to its applicability to information retrieval (Van Eck and Waltman 2009). It has been a staple in this academic discipline since the 1960s (Salton 1963). In the context of relatedness of knowledge, it has been mostly used to calculate citation similarities between individual scientists, academic departments and institutes, and even entire academic fields. It is commonly applied to data about scientific publications (Rafols 2014), but it has also been applied to patents (Alstott et al. 2017). Cosine similarity has also found use in other academic disciplines such as medicine, data science and text mining (Li and Han 2013; Ye 2015). As higher education programs are based on one or more scientific disciplines, such a measure is a welcome addition to the methodological toolkit used in higher education, as it can be used to calculate similarities between academic programs, courses or students. We used cosine similarity to measure cognitive relatedness between the subject categories that correspond to bachelor’s and master’s programs of students in the dataset.

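Mathematically, the cosine similarity equals the ratio between the number of times two objects (A and B) are observed together and the geometric mean of the number of times A and B are observed individually (Van Eck and Waltman 2009). Cosine similarity is calculated as follows:

$$\cos(A, B) = \frac{c_{AB}}{\sqrt{s_A \, s_B}}$$

where $c_{AB}$ is the number of times A and B are observed together, and $s_A$ and $s_B$ are the number of times A and B are observed individually.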

To calculate the cosine similarity between academic disciplines, we utilized the Web of Science (WoS), a database that hosts academic articles, conference proceedings and books. WoS is hosted by the publisher Thomson Reuters and is considered one of the most important multidisciplinary bibliographic databases (Wang and Waltman 2016). WoS is useful in this study because every article or book in its collection is assigned to at least one of 263 subject categories, such as geological engineering, management and environmental sciences. The bachelor’s and master’s programs of each student were manually linked to one of these 263 categories by the first author. We determined the contents of the bachelor and master programs with a qualitative study of the program descriptions and, in case of the master programs, a short interview with the program director. Based on this, the first author assigned a WoS category to each bachelor or master program, which was then checked by the second author. The program was therefore the unit of analysis. In the vast majority of cases, the authors quickly reached consensus on which category to assign to which program. For some, this was more difficult. We therefore created two more cognitive relatedness variables using our second and third choice assigned categories, and ran these as robustness checks. These models produced no different outcomes compared to our main model, which implies our main cognitive relatedness measure is quite robust.

The next step was to construct a co-citation matrix, which lists how often articles from one WoS category cite every other category. The base data for this matrix was provided by the Centre for Science and Technology Studies in Leiden, the Netherlands. This results in a 263 by 263 matrix that contains the co-citation scores for each combination of categories. For every individual category, we computed a vector that lists all the co-citation scores of that single category. We then took the co-citation vector of the category that was linked to the students’ bachelor programs and the co-citation vector that was linked to the students’ master programs. We calculated the cosine similarity between these two vectors, which returned a value between 0 and 1. This value represents how similar the citation patterns of the bachelor’s program and master’s program categories are. If the cosine similarity is equal to 1, the citation patterns are identical. The closer this value is to zero, the lower the cognitive relatedness between bachelor’s and master’s programs.
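The vector step described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names, the four-column toy matrix and all counts are invented for the example (the real matrix is 263 by 263), and returning 0 for an all-zero vector is an assumed convention.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors of counts."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # assumed convention for a category with no co-citations
    return dot / (norm_u * norm_v)


# Hypothetical toy rows of a co-citation matrix (invented counts, 4 columns
# instead of 263). Each row is the co-citation vector of one category.
COCITATION = {
    "geology": [120, 80, 5, 2],
    "geochemistry": [80, 150, 10, 3],
    "management": [2, 3, 40, 200],
}


def cognitive_relatedness(bachelor_category, master_category, matrix=COCITATION):
    """Cosine similarity between the co-citation vectors of two categories."""
    return cosine_similarity(matrix[bachelor_category], matrix[master_category])


if __name__ == "__main__":
    print(cognitive_relatedness("geology", "geochemistry"))
    print(cognitive_relatedness("geology", "management"))
```

Because co-citation counts are non-negative, the result always lies between 0 and 1, which matches the range reported in the text. In the toy data, the two earth-science rows point in nearly the same direction and score much higher than the geology and management pair.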

Control variables

The average bachelor grades of students who completed a Dutch bachelor’s program were extracted from the faculty’s data storage application. For the 843 international students, the acquisition of this data was more complex. Application data for international students were stored in PDF files that listed the full academic transcript of each student in a bachelor’s program. A research assistant manually examined every file and extracted the average grades in the bachelor’s program. An additional difficulty for international students was that grading curves and educational systems vary by country. Information from the Dutch organization Nuffic, which provides information on educational systems across the world, was used to transform international grades into a Dutch equivalent between 1 and 10.

The age and gender of each student were known to the faculty as part of the admission process. Gender was measured as a binary variable, with men coded as 1 and women as 2. The age of the students was measured by their age in years at the starting day of the master’s program. We measured the students’ academic and institutional backgrounds with a categorical variable by looking at the type of degree the student used to enter the master’s program, identifying six categories: (1) the student entered with a bachelor’s degree attained at the same faculty as their master’s education, (2) with a bachelor’s degree attained at the same university, (3) with a bachelor’s degree attained in the same country, (4) with a bachelor’s degree from a foreign country, (5) from a university of applied sciences, or (6) after completing a pre-master’s program. Pre-master’s programs are tailor-made six-month programs intended for bachelor students from universities of applied sciences or other Dutch universities.

Analyses

We estimated three separate models for each of the four dependent variables, resulting in 12 models in total. The first model of each set of three (Models 1, 4, 7 and 10) only includes the control variables. In Models 2, 5, 8 and 11, we added the independent variables as main effects. These models test Hypotheses 1a–d and 2a–d. In Models 3, 6, 9 and 12, we added the interaction term between the independent variables, which tests Hypotheses 3a–d, respectively. Models 1, 2 and 3 have degree attainment, a binary variable, as the dependent variable. Therefore, we estimated a binary logit model. For Models 4 to 12, we estimated an ordinary least squares (OLS) multiple linear regression, as the dependent variables tested in these models are continuous.
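As a reading aid, the three model steps can be written out in a generic form. The notation below is assumed for illustration and does not come from the article: $S_i$ is a continuous success measure for student $i$, $D_i$ is degree attainment, $CR_i$ is cognitive relatedness, $BG_i$ is the average bachelor’s grade and $Z_i$ collects the control variables.

```latex
% Assumed notation, not taken from the article:
% S_i = continuous success measure, D_i = degree attainment (0/1),
% CR_i = cognitive relatedness, BG_i = average bachelor's grade,
% Z_i = vector of control variables.
\begin{align}
\text{Controls only:}\quad
  S_i &= \beta_0 + \gamma^{\top} Z_i + \varepsilon_i \\
\text{Main effects:}\quad
  S_i &= \beta_0 + \beta_1 CR_i + \beta_2 BG_i + \gamma^{\top} Z_i + \varepsilon_i \\
\text{Interaction:}\quad
  S_i &= \beta_0 + \beta_1 CR_i + \beta_2 BG_i
         + \beta_3 \,(CR_i \times BG_i) + \gamma^{\top} Z_i + \varepsilon_i \\
\text{Binary logit (degree attainment):}\quad
  \ln \frac{P(D_i = 1)}{1 - P(D_i = 1)} &= \beta_0 + \beta_1 CR_i + \beta_2 BG_i
         + \beta_3 \,(CR_i \times BG_i) + \gamma^{\top} Z_i \\
\text{Slope of bachelor's grades:}\quad
  \frac{\partial S_i}{\partial BG_i} &= \beta_2 + \beta_3 \, CR_i
\end{align}
```

Under this specification, Hypotheses 1 and 2 correspond to $\beta_1 > 0$ and $\beta_2 > 0$ in the main effects models, and Hypothesis 3 corresponds to $\beta_3 > 0$: the slope of bachelor’s grades grows with cognitive relatedness.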

Results

Model fit

We used the adjusted R² to evaluate the model fit for the OLS regression models (Table 1). For Models 8, 9, 11 and 12, the adjusted R² was greater than 0.2, which means these models fit well. For Models 4, 5 and 6, the adjusted R² was higher than 0.1, which was an acceptable model fit. However, for Models 7 and 10, the adjusted R² was quite low (< 0.1). This is not problematic, as these models only contained control variables. An inspection of the model residuals revealed that these were normally distributed. We also made a scatterplot of the residual and predicted values of the OLS models to evaluate them for heteroscedasticity: there was reason to assume that heteroscedasticity occurred. We used variance inflation factors (VIF) to check for multicollinearity. All VIFs for noninteraction variables were below 2.7, which indicated no problematic levels of multicollinearity. As expected, the interaction variables had a much higher VIF, as these variables were, by definition, multicollinear to the variables that made up the interaction effect.
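For readers less familiar with these two diagnostics, the sketch below states their standard textbook definitions in Python. The function names and the example inputs are hypothetical and are not values from the study.

```python
def adjusted_r2(r2, n_obs, n_predictors):
    """Adjusted R-squared: penalizes R-squared for the number of predictors.

    r2           -- ordinary R-squared of the fitted model
    n_obs        -- number of observations
    n_predictors -- number of predictors, excluding the intercept
    """
    if n_obs - n_predictors - 1 <= 0:
        raise ValueError("need more observations than predictors + 1")
    return 1.0 - (1.0 - r2) * (n_obs - 1) / (n_obs - n_predictors - 1)


def variance_inflation_factor(r2_j):
    """VIF of predictor j, where r2_j is the R-squared obtained by
    regressing predictor j on all other predictors."""
    if not 0.0 <= r2_j < 1.0:
        raise ValueError("r2_j must be in [0, 1)")
    return 1.0 / (1.0 - r2_j)


if __name__ == "__main__":
    # Hypothetical inputs, chosen only to show the arithmetic.
    print(adjusted_r2(0.25, n_obs=101, n_predictors=5))
    print(variance_inflation_factor(0.5))
```

Read this way, a VIF of 2.7 means that roughly 63% of the variance of a predictor is explained by the other predictors, since 1 − 1/2.7 ≈ 0.63.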

Table 1. Regression results for Models 1 to 12. For Models 1 to 3, we report the McFadden R². For Models 4 to 12, we report the adjusted R².

Hypothesis tests

None of the models revealed a significant direct relationship between cognitive relatedness and study success. These models thus give no support to Hypotheses 1a–d. This means that cognitive relatedness does not have a direct effect on the students’ chance of attaining their degree (and by extension, of dropping out), nor on the speed at which they attain their degree or the grades they attain during their master’s program. However, the main effects models (2, 5, 8 and 11) reveal that there is a positive and significant relationship between average bachelor’s program grades and all measures of study success, which lends support to Hypotheses 2a–d. Students with higher grades in their bachelor’s program are more likely to graduate, and they graduate sooner and with higher grades. However, the relationship is much stronger for predicting grades than for predicting (timely) degree attainment. This is to be expected, as bachelor’s and master’s grades are similar measures. Models 3, 6, 9 and 12 further refine our findings. There was a strong positive interaction effect between cognitive relatedness and average bachelor’s program grades in the models that predicted grades in master’s programs (Models 9 and 12). This means that average bachelor grades attained in cognitively strongly related programs are a better predictor for master’s program grades than those attained in cognitively less related programs. This finding is in line with Hypotheses 3c and 3d. We did not find this interaction effect in Models 3 and 6. This is likely because the main effect of average bachelor’s grades was far weaker for the (timely) degree attainment variables. Hence, we did not find support for Hypotheses 3a and 3b.

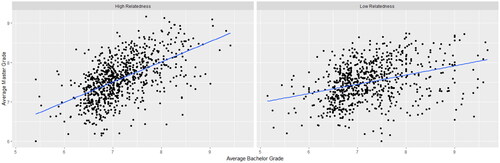

To further illustrate the significant interaction effect, we divided our sample into two groups. One group contained students with low cognitive relatedness (below 0.5), and the other group contained students with cognitive relatedness above 0.5. For each group, we ran the OLS models to predict master grades with the average bachelor grades and the control variables. Table 2 displays the results of these regressions. We see that the estimate for average bachelor grade is around twice as high for students with high cognitive relatedness as it is for students with low relatedness. Thus, if students have high cognitive relatedness, a one-point increase in bachelor’s program grades is associated with roughly twice the increase in master’s program grades. Figure 2 plots the relationships between the average bachelor grades and the master grades for both groups. The plot on the left shows the relationship for the group of students with high relatedness, and the plot on the right shows the students with low relatedness. The adjusted R² shows us that model fit is better for the models for students with high cognitive relatedness. Furthermore, we see that the coefficient for bachelor grade is higher in these models as well. This means that average bachelor’s grade is a much better predictor for students with high cognitive relatedness than for students with low cognitive relatedness (Table 2 and Figure 2).
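The split-sample comparison can be sketched as follows. This is a simplified illustration under stated assumptions, not the models reported in Table 2: it fits a bivariate regression without the control variables, the records are invented, the field names are hypothetical, and assigning a relatedness of exactly 0.5 to the high group is an arbitrary choice.

```python
def ols_slope(x, y):
    """Slope of a simple (one-predictor) least-squares regression of y on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx


def split_sample_slopes(records, threshold=0.5):
    """Slope of master on bachelor grades, separately for students below
    and at-or-above the relatedness threshold."""
    groups = {"low": [], "high": []}
    for r in records:
        key = "low" if r["relatedness"] < threshold else "high"
        groups[key].append(r)
    return {
        key: ols_slope([r["bachelor"] for r in rows], [r["master"] for r in rows])
        for key, rows in groups.items()
    }


# Invented records for illustration only (grades on the Dutch 1-10 scale).
RECORDS = [
    {"relatedness": 0.9, "bachelor": 6.5, "master": 6.9},
    {"relatedness": 0.8, "bachelor": 7.0, "master": 7.2},
    {"relatedness": 0.7, "bachelor": 8.0, "master": 7.8},
    {"relatedness": 0.3, "bachelor": 6.5, "master": 6.95},
    {"relatedness": 0.2, "bachelor": 7.0, "master": 7.1},
    {"relatedness": 0.4, "bachelor": 8.0, "master": 7.4},
]

if __name__ == "__main__":
    print(split_sample_slopes(RECORDS))
```

The invented records are constructed so that the slope in the high-relatedness group is twice that in the low-relatedness group, mirroring the pattern described in the text. A fuller version would add the control variables as extra predictors in each group’s regression.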

Figure 2. Plot of correlations between master and bachelor grades for groups with low and high cognitive relatedness.

Table 2. Regression results for additional interaction effects.

Control variables

When looking at the demographic control variables, Models 7 to 12 show a significant effect of gender. Female students attain higher grades than males, which is in line with results from earlier studies (Jacob 2002; Buchmann and DiPrete 2006). Models 1–3 show a significant negative effect for age, which means that older students are less likely to attain their degrees. Students with a background from the same university are less likely to attain master’s degrees, but graduate more quickly than students from other backgrounds. Students from the Netherlands also graduate faster compared to students in other groups, a result that could be because they are used to the Dutch educational system (Kurysheva et al. 2022). International students and students from universities of applied sciences attain significantly lower grades, both in the first year and over the course of the entire program. However, international students graduate earlier, perhaps because they pay a higher tuition fee. Alternatively, this result could be due to international students tending to enroll in master’s programs while on a scholarship. Students who entered after completing a pre-master’s program have slightly higher average grades than the other groups (significant at the 10% level). Pre-master’s programs may help bridge the gap between the previous program and the master’s program.

Discussion and conclusion

We posed the following research question: How does the cognitive relatedness between previous education and graduate education predict the success of graduate students? We have modelled and discussed four different measures of study success and studied cognitive relatedness as a direct and as an interaction effect with bachelor’s program grades. Our models showed no direct significant effect between cognitive relatedness and study success. However, we do find that there is an interaction effect between cognitive relatedness and the students’ average bachelor’s program grade. Cognitive relatedness does not predict study success in itself, but having high cognitive relatedness increases the predictive validity of bachelor’s program grades for predicting master’s program grades.
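For readers less familiar with interaction effects, one common way to write such a specification is sketched below. It is a generic textbook form and not necessarily the exact model estimated in this study. All symbols are introduced here for illustration only: $M_i$ is the master’s program grade of student $i$, $B_i$ the average bachelor’s program grade, $R_i$ the cognitive relatedness, and $\mathbf{X}_i$ the control variables.

```latex
\begin{align}
M_i &= \beta_0 + \beta_1 B_i + \beta_2 R_i + \beta_3 (B_i \times R_i)
       + \boldsymbol{\gamma}^{\top} \mathbf{X}_i + \varepsilon_i \\
\frac{\partial M_i}{\partial B_i} &= \beta_1 + \beta_3 R_i
\end{align}
```

Under this reading, a positive $\beta_3$ means that the slope of master’s program grades on bachelor’s program grades rises with cognitive relatedness.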

Theoretical implications

Our findings add critical new knowledge to the academic field of student admissions. We introduce the concept of cognitive relatedness from bibliometric science to create a robust and non-categorical criterion for measuring the degree to which study programs are related to each other. The finding that bachelor’s program grades are about twice as predictive for highly related programs as for weakly related programs gives a clear indication that cognitive relatedness matters. The aspect of bachelor grades that represents self-regulatory competences and general cognitive abilities is about half as predictive of grades in a master’s program as the aspect that represents field-specific prior knowledge about the master’s program. This means there are clear limitations to using bachelor’s program grades as a predictor of grades in master’s programs. This is a critical finding, as bachelor’s program grades are one of the most widely studied admission instruments. We recommend that future researchers exercise caution when using bachelor’s program grades as variables and that they replicate this finding. The interaction effect between bachelor’s program grades and cognitive relatedness only influences master’s program grades; it does not affect degree attainment or the time it takes for a student to graduate. Students with a low cognitive relatedness are not at greater risk of dropping out, encountering study delays or attaining lower grades in their master’s programs. We recommend that future researchers test whether the interaction effect between cognitive relatedness and grades obtained during prior education also holds true for grades obtained in high schools.

This study further enriches the student admission literature with new methodological tools from bibliometric science using cosine similarity as a measure of cognitive relatedness (Van Eck and Waltman Citation2009). This indicator can be useful for understanding the learning processes and outcomes of students with different knowledge backgrounds.
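As a minimal sketch of the indicator itself, cosine similarity between two programs can be computed as below. The vector representation is an assumption made for illustration: each program is described by hypothetical weights over a shared list of subject categories, which is not necessarily how the program profiles in this study were constructed. The function name and example values are likewise hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length numeric vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        # Assumption: an empty profile is treated as unrelated.
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical subject-category weights for three programs.
bachelor_a = [3, 1, 0, 0]
bachelor_b = [0, 0, 2, 5]
master = [4, 2, 0, 0]

related = cosine_similarity(bachelor_a, master)
unrelated = cosine_similarity(bachelor_b, master)
print(related, unrelated)
```

For non-negative weights the measure ranges from 0 (no shared categories) to 1 (identical profiles up to scale), so it does not depend on how many courses or publications a program contains.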

Limitations

The literature on student admission is predominantly focused on predicting study success using cognitive performance. In this article, we also measure student success in a relatively traditional and cognitive way by measuring grades, degree attainment and timely graduation. We call upon future researchers to test these relationships with other, noncognitive measures of study success.

As with most studies on student admission, this study suffers from admission bias. Students with very low cognitive relatedness are often not admitted to the program because admission officers consider that their prior knowledge does not overlap enough with the contents of the program. This admission bias probably does not influence our main finding: the interaction effect. Allowing students with a lower cognitive relatedness to enter would not alter the composition of the group of students with a high relatedness or change the fact that, for these students, grades are a stronger predictor of study success.

Our data were collected at a single faculty of a single institute, which raises questions of generalizability. We expect our results to generalize to other disciplines, faculties and institutes because the faculty spans a number of disciplines from the social and natural sciences. We controlled for these disciplines with education program dummies, and found they do not significantly influence our main findings. Moreover, we replicate findings from other disciplines (Sandow et al. Citation2002; Halberstam and Redstone Citation2005; Kulatunga-Moruzi and Norman Citation2002; Allen et al. Citation2016). These observations make it likely that our findings will hold up in other contexts as well, but this is a suitable topic for further research.

Practical implications

We suggest that admissions officers who use bachelor grades as admission instruments look critically at the content of the prior education of students. For students with low cognitive relatedness, bachelor grades are less suitable as admission instruments. We encourage admissions officers for master’s programs to have a solid grasp of the relatedness of various bachelor’s programs. They can achieve this by doing a qualitative check on the relatedness of their program and the applicant’s bachelor’s program. Designing a tool that shows the relatedness of various study programs would be very helpful in this regard. The database used in this study can serve as a point of departure and we are happy to provide our code to admissions officers. Admissions officers can also use other admission instruments that measure the cognitive skills of students: the Graduate Record Examinations (GRE) or Graduate Management Admission Test (GMAT) are valid alternatives (Kuncel, Credé, and Thomas Citation2005, Citation2007; de Boer and Van Rijnsoever Citation2022).

Finally, admissions officers can choose not to rely on admission instruments that predict grades obtained in a master’s program. However, grades obtained in a master’s program are probably one of the best indicators of study success in the cognitive domain, and much of the evidence for predicting study success in master’s programs is based on them. At the same time, admitting applicants with a low relatedness can be beneficial to both students and teachers, as diversity and interdisciplinarity are important and lead to higher study success. With a careful admission procedure, using cognitive relatedness should not hinder this, and students can enjoy the benefits of a multidisciplinary classroom.

References

- Adam, J., M. Bore, R. Childs, J. Dunn, J. Mckendree, D. Munro, and D. Powis. 2015. “Predictors of Professional Behaviour and Academic Outcomes in a UK Medical School.” Medical Teacher 37 (9): 868–880. doi:10.3109/0142159X.2015.1009023.

- Allen, R. E., C. Diaz, K. Gant, A. Taylor, and I. Onor. 2016. “Preadmission Predictors of On-Time Graduation in a Doctor of Pharmacy Program.” American Journal of Pharmaceutical Education 80 (3): 43. doi:10.5688/AJPE80343.

- Alon, S., and T. A. DiPrete. 2015. “Gender Differences in the Formation of a Field of Study Choice Set.” Sociological Science 2: 50–81. doi:10.15195/v2.a5.

- Alstott, J., G. Triulzi, B. Yan, and J. Luo. 2017. “Mapping Technology Space by Normalizing Patent Networks.” Scientometrics 110 (1): 443–479. doi:10.1007/s11192-016-2107-y.

- Arzuman, H., R. Ja’afar, and N. M. R. M. Fakri. 2012. “The Influence of Pre-Admission Tracks on Students’ Academic Performance in a Medical Programme.” Education for Health (Abingdon, England) 25 (2): 124–127. doi:10.4103/1357-6283.103460.

- Beine, M., R. Noël, and L. Ragot. 2014. “Determinants of the International Mobility of Students.” Economics of Education Review 41: 40–54. doi:10.1016/j.econedurev.2014.03.003.

- Boschma, R. 2005. “Proximity and Innovation.” Regional Studies 39 (1): 61–74. doi:10.1080/0034340052000320887.

- Buchmann, C., and T. A. DiPrete. 2006. “The Growing Female Advantage in College Completion.” American Sociological Review 71 (4): 515–541. doi:10.1177/000312240607100401.

- Crisp, G., and I. Cruz. 2009. “Mentoring College Students: A Critical Review of the Literature Between 1990 and 2007.” Research in Higher Education 50 (6): 525–545. doi:10.1007/sl.

- Cuddy, M. M., D. B. Swanson, and B. E. Clauser. 2008. “A Multilevel Analysis of Examinee Gender and USMLE Step 1 Performance.” Academic Medicine 83 (Supplement): S58–S62. doi:10.1097/ACM.0b013e318183cd65.

- Darolia, R., S. Potochnick, and C. E. Menifield. 2014. “Assessing Admission Criteria for Early and Mid-Career Students.” Education Policy Analysis Archives 22: 101. doi:10.14507/epaa.v22.1599.

- de Boer, T., and F. Van Rijnsoever. 2022. “In Search of Valid Non-Cognitive Student Selection Criteria.” Assessment & Evaluation in Higher Education 47 (5): 783–800. doi:10.1080/02602938.2021.1958142.

- Dochy, F., C. de Rijdt, and W. Dyck. 2002. “Cognitive Prerequisites and Learning.” Active Learning in Higher Education 3 (3): 265–284. doi:10.1177/1469787402003003006.

- Dochy, F. J. R. C. 1994. Prior Knowledge and Learning, International Encyclopedia of Education (Second edition, pp. 4698–4702). Oxford: Pergamon Press.

- Dochy, F., M. Segers, and M. M. Buehl. 1999. “The Relation Between Assessment Practices and Outcomes of Studies.” Review of Educational Research 69 (2): 145–186. doi:10.3102/00346543069002145.

- Galla, B. M., E. P. Shulman, B. D. Plummer, M. Gardner, S. J. Hutt, J. P. Goyer, S. K. D’Mello, A. S. Finn, and A. L. Duckworth. 2019. “Why High School Grades Are Better Predictors of On-Time College Graduation Than Are Admissions Test Scores.” American Educational Research Journal 56 (6): 2077–2115. doi:10.3102/0002831219843292.

- Gomez Soler, S. C., L. K. Abadía Alvarado, and G. L. Bernal Nisperuza. 2020. “Women in STEM.” Higher Education 79 (5): 849–866. doi:10.1007/s10734-019-00441-0.

- Hailikari, T., N. Katajavuori, and S. Lindblom-Ylanne. 2008. “The Relevance of Prior Knowledge in Learning and Instructional Design.” American Journal of Pharmaceutical Education 72 (5): 113. doi:10.5688/aj7205113.

- Hailikari, T., A. Nevgi, and S. Lindblom-Ylänne. 2007. “Exploring Alternative Ways of Assessing Prior Knowledge, Its Components and Their Relation to Student Achievement.” Studies in Educational Evaluation 33 (3–4): 320–337. doi:10.1016/j.stueduc.2007.07.007.

- Halberstam, B., and F. Redstone. 2005. “The Predictive Value of Admissions Materials on Objective and Subjective Measures of Graduate School Performance in Speech-Language Pathology.” Journal of Higher Education Policy and Management 27 (2): 261–272. doi:10.1080/13600800500120183.

- House, J. D. 1989. “The Effect of Time of Transfer on Academic Performance of Community College Transfer Students.” Journal of College Student Development 30 (2): 144–147.

- Jacob, B. A. 2002. “Where the Boys Aren’t.” Economics of Education Review 21 (6): 589–598. doi:10.1016/S0272-7757(01)00051-6.

- Kaplan, A. S., and G. L. Murphy. 2000. “Category Learning with Minimal Prior Knowledge.” Journal of Experimental Psychology. Learning, Memory, and Cognition 26 (4): 829–846. doi:10.1037/0278-7393.26.4.829.

- Kuh, G. D., J. Kinzie, J. A. Buckley, B. K. Bridges, and J. C. Hayek. 2007. Piecing Together the Student Success Puzzle. Google Books. https://books.google.nl/books?hl=en&lr=&id=E2Y15q5bpCoC&oi=fnd&pg=PR7&dq=student+success+defined&ots=ZxINljxrKN&sig=rvxsWCBH_MWjvBh4p4-oRMMQuwc#v=onepage&q=studentsuccessdefined&f=false

- Kuh, G. D., J. Kinzie, J. H. Schuh, and E. J. Whitt. 2011. “Fostering Student Success in Hard Times.” Change: The Magazine of Higher Learning 43 (4): 13–19. doi:10.1080/00091383.2011.585311.

- Kulatunga-Moruzi, C., and G. R. Norman. 2002. “Validity of Admissions Measures in Predicting Performance Outcomes.” Teaching and Learning in Medicine 14 (1): 34–42. doi:10.1207/S15328015TLM1401_9.

- Kuncel, N. R., R. Kochevar, and D. Ones. 2014. “A Meta-Analysis of Letters of Recommendation in College and Graduate Admissions.” International Journal of Selection and Assessment 22 (1): 101–107. doi:10.1111/ijsa.12060.

- Kuncel, N. R., M. Credé, and L. L. Thomas. 2005. “The Validity of Self-Reported Grade Point Averages, Class Ranks, and Test Scores.” Review of Educational Research 75 (1): 63–82. https://www.jstor.org/stable/pdf/3516080.pdf?refreqid=excelsior%3Ae3b600fc750bae83a1c4a89972b01feb. doi:10.3102/00346543075001063.

- Kuncel, N. R., M. Credé, and L. L. Thomas. 2007. “A Meta-Analysis of the Predictive Validity of the Graduate Management Admission Test (GMAT) and Undergraduate Grade Point Average (UGPA) for Graduate Student Academic Performance.” Academy of Management Learning & Education 6 (1): 51–68. https://www.jstor.org/stable/40214516. doi:10.5465/amle.2007.24401702.

- Kuncel, N. R., M. Credé, L. L. Thomas, D. M. Klieger, S. N. Seiler, and S. E. Woo. 2005. “A Meta-Analysis of the Validity of the Pharmacy College Admission Test (PCAT) and Grade Predictors of Pharmacy Student Performance.” American Journal of Pharmaceutical Education 69 (3): 51–347. doi:10.5688/aj690351.

- Kuncel, N. R., S. A. Hezlett, and D. S. Ones. 2001. “A Comprehensive Meta-Analysis of the Predictive Validity of the Graduate Record Examinations.” Psychological Bulletin 127 (1): 162–181. doi:10.1037/0033-2909.127.1.162.

- Kurysheva, A., N. Koning, C. M. Fox, H. V. M. van Rijen, and G. Dilaver. 2022. “Once the Best Student Always the Best Student?” International Journal of Selection and Assessment 30 (4): 579–595. doi:10.1111/ijsa.12397.

- Li, B., and L. Han. 2013. “Distance Weighted Cosine Similarity Measure for Text Classification.” Lecture Notes in Computer Science 8206: 611–618. doi:10.1007/978-3-642-41278-3_74.

- Lin, J. J. H., and P.-Y. Liou. 2019. “Assessing the Learning Achievement of Students from Different College Entrance Channels.” Assessment & Evaluation in Higher Education 44 (5): 732–747. doi:10.1080/02602938.2018.1532490.

- Myles, J., and L. Cheng. 2003. “The Social and Cultural Life of Non-Native English Speaking International Graduate Students at a Canadian University.” Journal of English for Academic Purposes 2 (3): 247–263. doi:10.1016/S1475-1585(03)00028-6.

- Nooteboom, B. 2000. “Learning by Interaction.” Journal of Management and Governance 4 (1/2): 69–92. doi:10.1023/A:1009941416749.

- Ofori, R. 2000. “Age and ‘Type’ of Domain Specific Entry Qualifications as Predictors of Student Nurses’ Performance in Biological, Social and Behavioural Sciences in Nursing Assessments.” Nurse Education Today 20 (4): 298–310. doi:10.1054/nedt.1999.0396.

- Pritchard, M. E., and G. S. Wilson. 2003. “Using Emotional and Social Factors to Predict Student Success.” Journal of College Student Development 44 (1): 18–28. doi:10.1353/csd.2003.0008.

- Rafols, I. 2014. “Knowledge Integration and Diffusion.” Measuring Scholarly Impact (pp. 169–190). Cham: Springer.

- Rhine, T. J., D. M. Milligan, and L. R. Nelson. 2000. “Alleviating Transfer Shock.” Community College Journal of Research and Practice 24 (6): 443–453. doi:10.1080/10668920050137228.

- Salton, G. 1963. “Associative Document Retrieval Techniques Using Bibliographic Information.” Journal of the ACM 10 (4): 440–457. doi:10.1145/321186.321188.

- Sandow, P. L., A. C. Jones, D. D. S. Chuck, W. Courts, J. Watson, D. D. S. R. E., and Peek, Frank. 2002. “Correlation of Admission Criteria with Dental School Performance and Attrition.” Journal of Dental Education 66 (3): 385–392. doi:10.1002/j.0022-0337.2002.66.3.tb03517.x.

- Schwager, I. T. L., U. R. Hülsheger, B. Bridgeman, and J. W. B. Lang. 2015. “Graduate Student Selection.” International Journal of Selection and Assessment 23 (1): 71–79. doi:10.1111/ijsa.12096.

- Smyth, G. 2016. “Collation of Data on Applicants, Offers, Acceptances, Students and Graduates in Veterinary Science in Australia 2001–2013.” Australian Veterinary Journal 94 (1–2): 4–11. doi:10.1111/avj.12396.

- Sojkin, B., P. Bartkowiak, and A. Skuza. 2012. “Determinants of Higher Education Choices and Student Satisfaction.” Higher Education 63 (5): 565–581. doi:10.1007/s10734-011-9459-2.

- Steenman, S. 2018. Alignment of Admission. Amersfoort: Wilco BV.

- Taub, M., R. Azevedo, F. Bouchet, and B. Khosravifar. 2014. “Can the Use of Cognitive and Metacognitive Self-Regulated Learning Strategies be Predicted by Learners’ Levels of Prior Knowledge in Hypermedia-Learning Environments?” Computers in Human Behavior 39: 356–367. doi:10.1016/j.chb.2014.07.018.

- Tinto, V., A. Goodsell, and P. Russo. 1993. “Building Community among New College Students.” Liberal Education 79 (4): 16–21.

- Townsend, B. K., and K. Wilson. 2006. “A Hand Hold for A Little Bit.” Journal of College Student Development 47 (4): 439–456. doi:10.1353/csd.2006.0052.

- Van Eck, N. J., and L. Waltman. 2009. “How to Normalize Cooccurrence Data?” Journal of the American Society for Information Science and Technology 60 (8): 1635–1651. doi:10.1002/asi.21075.

- van Ooijen-van der Linden, L., M. J. van der Smagt, L. Woertman, and S. F. Te Pas. 2017. “Signal Detection Theory as a Tool for Successful Student Selection.” Assessment & Evaluation in Higher Education 42 (8): 1193–1207. doi:10.1080/02602938.2016.1241860.

- Wang, Q., and L. Waltman. 2016. “Large-Scale Analysis of the Accuracy of the Journal Classification Systems of Web of Science and Scopus.” Journal of Informetrics 10 (2): 347–364. doi:10.1016/j.joi.2016.02.003.

- Westrick, P. A., H. Le, S. B. Robbins, J. M. R. Radunzel, and F. L. Schmidt. 2015b. “College Performance and Retention.” Educational Assessment 20 (1): 23–45. doi:10.1080/10627197.2015.997614.

- Wuyts, S., M. G. Colombo, S. Dutta, and B. Nooteboom. 2005. “Empirical Tests of Optimal Cognitive Distance.” Journal of Economic Behavior & Organization 58 (2): 277–302. doi:10.1016/j.jebo.2004.03.019.

- Ye, J. 2015. “Improved Cosine Similarity Measures of Simplified Neutrosophic Sets for Medical Diagnoses.” Artificial Intelligence in Medicine 63 (3): 171–179. doi:10.1016/j.artmed.2014.12.007.