Abstract

This study offers a critical examination of university policies developed to address recent challenges presented by generative AI (GenAI) to higher education assessment. Drawing on Bacchi’s ‘What’s the problem represented to be’ (WPR) framework, we analysed the GenAI policies of 20 world-leading universities to explore what are considered problems in this AI-mediated assessment landscape and how these problems are represented in policies. Although miscellaneous GenAI-related problems were mentioned in these policies (e.g. reliability of AI-generated outputs, equal access to GenAI), the primary problem represented is that students may not submit original work for assessment. In the current framing, GenAI is often viewed as a type of external assistance separate from the student’s independent efforts and intellectual contribution, thereby undermining the originality of their work. We argue that such a problem representation fails to acknowledge how the rise of GenAI further complicates the process of producing original work and what originality means at a time when knowledge production becomes increasingly distributed, collaborative and mediated by technology. Therefore, a critical silence in higher education policies concerns the evolving notion of originality in the digital age, and a more inclusive approach to addressing the originality of students’ work is required.

Introduction

Since the emergence of powerful generative artificial intelligence (GenAI) tools such as ChatGPT, universities worldwide have been working on policies to regulate the use of these tools among students in assessment (Chan 2023a; Russell Group 2023). By GenAI, we refer to an artificial intelligence technique capable of generating a variety of new content including but not limited to texts, videos and images (Cao et al. 2023). From a post-structural viewpoint, these GenAI policies are not merely responses to GenAI-related problems that exist ‘out there’ in higher education. Instead, they shape how problems are understood by prioritising certain representations over others. The way problems are represented in GenAI policies not only manifests a university’s preferred logic for governing emerging technologies but also reveals deeper assumptions underlying assessment in higher education.

This line of thinking echoes what Bacchi (2010) termed the ‘problem questioning’ paradigm in policy research. According to Bacchi (2012), ‘what we say we want to do about something indicates what we think needs to change and hence how we constitute the “problem”’ (4). For example, ‘at-risk youths’ are often viewed in school policies as a problem that ‘needs to be solved’ (Loutzenheiser 2015). The problem of ‘at-risk youths’ can be framed as individuals’ failure or as a structural issue caused by inadequate resources to support youths. The two different problem framings will lead to very different solutions (e.g. punishing ‘at-risk youths’ or enhancing youth support). Therefore, rather than accepting policies at face value, the ‘problem questioning’ paradigm encourages critical scrutiny of what problems are represented and how they are represented through policy discourses (Tawell and McCluskey 2021). By doing so, this paradigm also opens possibilities for exploring alternative problem representations that challenge taken-for-granted presuppositions.

Following the ‘problem questioning’ paradigm, this study aims to critically examine higher education policies on GenAI and assessment. In particular, it seeks to understand what are considered problems in higher education assessment amid the rise of GenAI and how these problems are represented. The underlying assumptions, potential silences and effects of such problem representations will also be explored. Bacchi’s ‘What’s the problem represented to be’ (WPR) approach, which includes six questions central to the ‘problem questioning’ paradigm, provides the methodological tool to anchor our analysis.

GenAI and the changing assessment landscape in higher education

Early research on GenAI and assessment following the release of ChatGPT has focused on its capability to accomplish assessment tasks such as examinations with multiple-choice or open-ended questions (Bommarito and Katz 2022; Gilson et al. 2022). Others have explored whether AI-generated outputs can be successfully distinguished by humans and AI detection tools (Gao et al. 2022; Cingillioglu 2023). Despite some variations in research findings, there is a prevailing consensus that GenAI exhibits satisfactory competence in passing certain professional examinations and generating coherent texts.

On the one hand, these results point to many new opportunities GenAI can bring to higher education assessment. These opportunities include but are not limited to generating feedback through ChatGPT (Dai et al. 2023), conducting automated essay scoring (Mizumoto and Eguchi 2023), and creating personalised assessment with GenAI assistance (Cotton, Cotton, and Shipway 2023).

On the other hand, groundbreaking advancements in GenAI have also presented significant challenges to the field of assessment. Many people are concerned that academic misconduct is on the rise because students can bypass their learning process by submitting AI-generated work as their own for assessment, and there is a lack of reliable AI detection tools (Lodge, Thompson, and Corrin 2023). Without proper regulations, these students may gain an unfair advantage over their peers in assessment (Cotton, Cotton, and Shipway 2023). There are also concerns about equitable access to GenAI tools (Sullivan, Kelly, and McLaughlan 2023), data safety in GenAI use (Yan et al. 2023), biases in AI algorithms (Sullivan, Kelly, and McLaughlan 2023; Yan et al. 2023), a lack of AI literacy training for university teachers and students (Chan 2023a), and the spread of fraudulent information generated by AI (Rudolph, Tan, and Tan 2023).

With regard to the use of GenAI in assessment, many grey areas without established guidelines have surfaced (Chan 2023b). For example, is it academic misconduct if a student independently contributes to the intellectual content but uses GenAI to polish the writing? Should students’ work completed without GenAI assistance be considered more ‘favourable’ and ‘independent’? These questions not only highlight the importance of revisiting key concepts in assessment (e.g. assessment security and validity) but also point to the need for reviewing and redesigning assessment in higher education to better prepare students for a world with AI (Lodge, Thompson, and Corrin 2023).

University policies on GenAI and assessment

These challenges place significant pressure on universities to ‘bring order’ to the disrupted assessment landscape through policymaking. Although many AI-related guidelines were published before the release of ChatGPT, most remain generic and do not specifically address issues in higher education (Schiff 2022; Nguyen et al. 2023). Schiff (2022) reviewed 24 national AI policy strategies and found that the use of AI in education is largely absent from the policy discourses. Previous policies have also not accounted for the depth and scope of influence brought by recent technological breakthroughs. As GenAI tools are rapidly being used at scale among students, Chan (2023a) argued that ‘more work is still to be done in order to formulate more comprehensive and focused policy documents on AI in education’ (6).

The lack of a robust policy framework on GenAI use in higher education makes it harder for universities to address GenAI-related challenges in assessment quickly and effectively. Initially, GenAI use was prohibited among students in some universities, but this policy was later criticised as unsustainable and counterproductive in cultivating AI-literate citizens for the future (Sullivan, Kelly, and McLaughlan 2023). Amid these criticisms, a large number of opinion articles and research papers have been published (e.g. Chan 2023a; Lodge, Thompson, and Corrin 2023; Rudolph, Tan, and Tan 2023). For example, Chan (2023a) proposed a GenAI policy framework for university teaching and learning based on survey data on ChatGPT use. The framework highlights three dimensions – pedagogical, ethical and operational – for higher education stakeholders to consider in policymaking. Many international organisations, such as the United Nations Educational, Scientific and Cultural Organization (UNESCO 2023) and the Organisation for Economic Co-operation and Development (OECD 2023), have also published guidelines on the regulation of GenAI in education. Yet, these guidelines tend to focus on wider issues related to GenAI’s impact on education (e.g. national regulations, digital poverty) rather than on the specific problems faced by higher education assessment.

As the understanding of GenAI evolves, many universities have reviewed and developed their GenAI policies to guide more responsible use of the technology. A notable example is the GenAI policy guidelines co-designed by 24 Russell Group universities in the UK, which emphasise how universities should support students and staff to become AI-literate while attending to issues of privacy and plagiarism (Russell Group 2023). In relation to higher education assessment, the Tertiary Education Quality and Standards Agency in Australia (TEQSA 2023) proposed five guiding principles underlying assessment reform in the GenAI age, such as adopting a programmatic approach to assessment and promoting assessment that emphasises authentic engagement with AI.

These policy documents and guidelines represent a valuable data source for understanding how universities around the world conceive and approach the impacts, benefits and risks associated with GenAI in assessment. However, no research to date has reviewed these university-level policies on GenAI, and critical angles are especially lacking. Most of the ongoing policy discussions revolve around the ‘what works’ agenda, such as whether universities have prohibited or allowed the use of GenAI in students’ work, whether AI detection tools should be in use and the potential effectiveness of certain policy recommendations (e.g. University World News 2023; South China Morning Press 2023).

‘Problem questioning’ in policy research and the WPR framework

The ‘what works’ agenda represents a traditional approach to policy analysis, which assumes that policies are designed in response to pre-existing problems (Bacchi 2010). The focus of analysis is often on the effects of problem-solving and the problems themselves are left unexamined (Tawell and McCluskey 2021). Important as it is, this approach views GenAI policies as functional and overlooks how problems can be socially constructed to reflect certain interests and perspectives.

The ‘problem questioning’ paradigm challenges this approach and contends that policies ‘give shape and meaning to the “problems” they purport to “address”’ (Bacchi and Eveline 2010, 111, emphasis original). How problems are represented in policies matters because the representations carry implications for what is considered ‘problematic’ and in need of ‘fixing’. These implications not only shape our worldviews but also influence society in political ways by determining whose voice to include or leave out (Bacchi 2012). Therefore, it is not enough to simply analyse university GenAI policies for how well they solve predefined problems in assessment – we also need to question how certain problems came to be seen as problems in the first place.

To facilitate critical problem questioning, Bacchi (2009) proposed the ‘What’s the problem represented to be’ (WPR) framework, which was inspired by Foucault’s work on problematisation. The framework consists of six questions (see Table 1). The WPR framework is well-suited for this study because it provides concrete steps and questions to operationalise the problematisation of GenAI policies. Its emphasis on unpacking problem constructions aligns with our research goal to surface the implicit assumptions lodged within GenAI policies.

Table 1. The WPR framework (adapted from Bacchi and Goodwin 2016, 20).

Current study

The overarching goal of this study is to provide a critical analysis of the representation of problems in university policies regarding the use of GenAI in assessment. Specifically, we are interested in these research questions:

What’s the major problem represented to be in university policies on the use of GenAI in assessment?

What deep-seated assumptions underlie this problem representation?

What is left unproblematic and silenced in this problem representation?

What potential effects may be produced by this problem representation?

These research questions draw upon four of the six questions in the WPR framework (see Table 1, Questions 1, 2, 4 and 5). In applying this framework to policy studies, multiple scholars have noted that researchers are free to select ‘which question(s) to investigate, the order of investigation, or the format of investigation’ based on their research needs (Woo 2022, 649; see also Nieminen and Eaton 2023). Due to the space limit in a single research paper, this study is unable to present a comprehensive genealogy of identified problems (i.e. Question 3 in WPR). As this study analyses policy texts alone, investigating how certain problem representations are disseminated falls outside its scope (i.e. Question 6 in WPR).

Method

Sampling

Since it is infeasible for this study to analyse all available GenAI policies issued by universities worldwide, we adopted purposive sampling to focus on the top 20 universities recognised by the 2024 QS World University Rankings. Among these 20 universities, 10 are located in North America, five in Europe, three in Australia and two in Asia.

Although there are controversies surrounding such university rankings (e.g. bias towards research, commercialisation), policies from these universities often attract extensive public attention and serve as models to other institutions in policymaking. Therefore, our sampling approach allows us to analyse information-rich cases whose problem representations are most likely to exert influence beyond their own university and reveal dominant thinking surrounding GenAI in higher education assessment.

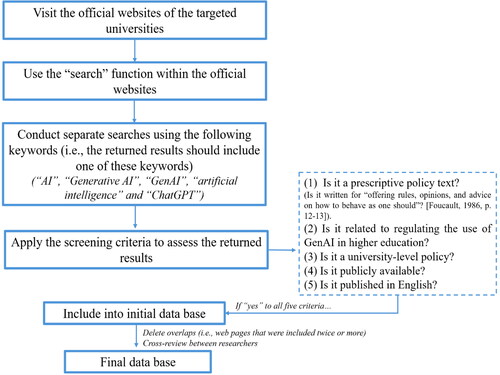

Data collection

We searched the official websites of the 20 top-ranked universities for their policies on the use of GenAI in assessment (see Figure 1 for the detailed data collection procedure). A major guideline directing our inclusion of policies is Foucault’s definition of ‘prescriptive texts’ – i.e. texts that are ‘written for offering rules, opinions, and advice on how to behave as one should’ (1986, 12–13). The inclusion and exclusion criteria for policy texts are outlined in Table 2.

Table 2. Inclusion and exclusion criteria.

The search was performed in early November 2023, roughly a year after the release of ChatGPT. A separate data file was created for each university – all webpages containing GenAI policies on a university’s website were converted into PDF format and saved in its data file. An Excel sheet was also generated to catalogue the web links to all the included policy texts. This research provides timely insights into GenAI policies following a year of intense discussions in higher education around AI technologies and their role in assessment.

Two research assistants were involved in the search. One examined universities ranked 1–10, while the other focused on universities ranked 11–20. After the individual search, the two research assistants reviewed each other’s results to validate the data. The author cross-checked a sample (around 30%) of their work. Discrepancies were resolved by referring back to the university websites and through discussions.

Data analysis

The data analysis began with open coding of the included documents to capture the main contents delivered in these policies. Subsequently, we performed the analysis guided by the four targeted questions in the WPR framework (see Table 3). While the WPR framework sets up a straightforward guideline, our analysis was not linear but involved an iterative cycle of examination, reflection and refinement.

Table 3. Data analysis.

Findings

Among the 20 targeted universities, 19 have published publicly available policies regulating the use of GenAI in assessment. To avoid singling out specific universities and assigning blame, we will use codes (e.g. University 01) to refer to universities in our presentation and discussion.

What’s the major problem represented to be? (RQ1)

This study identified miscellaneous problem representations in universities’ GenAI policies regarding assessment, which include concerns around students’ work originality, the need to redesign assessment, data safety and fairness issues. Based on how they are framed within the policy texts, these problems are further categorised into six groups with different orientations (Table 4).

Table 4. Problem representations regarding GenAI use in assessment.

The major problem represented is that, in the presence of GenAI, students may not submit their own original work for assessment. Terms such as ‘original work’, ‘own work’ and ‘authors’ are frequently mentioned in the policies. Students are reminded that AI-generated content should not be considered their original work and that they are held accountable for including any such content for assessment. Failure to submit original work, as indicated in most policies, constitutes a severe violation of academic integrity. For example:

students must be the authors of their own work. Content produced by AI platforms, such as ChatGPT, does not represent the student’s own original work so would be considered a form of academic misconduct. (University 02)

Submitting work and assessments created by someone or something else, as if it was your own, is plagiarism and is a form of cheating and this includes AI-generated content. (University 06)

Students may not misrepresent as their own work any output generated by or derived from generative AI. (University 18)

In addition, there are recurring calls for teachers to reconsider their assessment design to reduce ‘cheating problems’. University 16’s ‘AI Guidance’ explicitly states that ‘Changes in assignment design and structure can substantially reduce students’ likelihood of cheating’. University 06 advises teachers to ensure that their assessment measures ‘higher-order skills that cannot yet be well replicated by AI’ or ‘the use of AI content generation tools is not advantageous/useful’ to complete the assessment task. Teachers are also recommended to use ‘authenticity interviews’ or ‘oral exams’ to verify whether students independently completed their work. One notable example is from University 06 which states that ‘a random selection of students’ should be invited by their department to attend an ‘authenticity interview’. During the interview, students will be asked to clarify their subject knowledge and how they approached their submitted assignments.

What deep-seated assumptions underlie this representation of the problem, and what is left unproblematic? (RQs 2 and 3)

The originality of students’ work, as well as the associated concerns about academic misconduct, is represented as a significant problem in university policies worldwide. We argue that such problem representation reveals deep-seated beliefs held by the higher education community about how to understand and approach ‘originality’ in assessment.

Original work and non-AI-assisted work

In our policy analysis, we found that students’ use of GenAI is viewed as a threat to the originality of their work. This assumes that the involvement of GenAI constitutes a type of external assistance, which is separate from the student’s independent efforts and intellectual contribution. For example, University 20 discusses the capability of detection machines to ‘differentiate between ChatGPT text and text that is actually original’, which implies a clear distinction. In some policies, using GenAI is treated no differently from outsourcing the coursework to a third party (e.g. friends, ghostwriters):

Just as students may not turn in someone else’s work as their own, students may not misrepresent as their own work any output generated by or derived from generative AI. (University 18)

Absent a clear statement from a course instructor, use of or consultation with generative AI shall be treated analogously to assistance from another person. (University 05)

Original work and academic misconduct

In our policy analysis, we found that the originality of students’ work is mainly framed as a concern of plagiarism or academic misconduct. For example, University 02 explicitly wrote that ‘Content produced by AI platforms… does not represent the student’s own original work so would be considered a form of academic misconduct’. Several universities ask students to sign an ‘originality declaration form’ to make sure ‘they [you] do not present AI-generated output as their [your] original work’ (University 14). Even before the recent surge in attention towards GenAI, the close connection between work originality and academic misconduct was evident in the ‘originality score’ produced by Turnitin and other plagiarism detection machines to indicate similarities between students’ work and a database of previous work (Eshet 2023). The engrained value orientation towards original work is intertwined with dishonest behaviours among students. For example, Johnson-Eilola and Selber (2007) observed that students sometimes intentionally refuse to make attributions to source texts because they also recognise the primary value placed on producing original work that is 100% self-generated.

While the current framing addresses valid concerns in GenAI use, it presents a very limited understanding of originality from a surveillance angle. In these long-held practices in higher education, originality is reduced to a quantifiable number (e.g. originality score) or a box-ticking exercise (e.g. signing an originality declaration form). There is very little problematisation of what makes a student’s work ‘original’ throughout our dataset. One exception is University 07, which provides some elaborations on how humans generate original text and argues that this process is similar to how GenAI produces text (e.g. ‘Arguably, to a non-vanishing degree, humans are doing the same thing [as AI] when generating what is considered original text: we write based on associations, and our associations while writing come from what we previously heard or read from other humans’). And yet these elaborations did not lead to a more sophisticated understanding of ‘original work’. Rather, the university still concludes that submitting AI-generated content for assessment is similar to ghostwriting, which should be regarded as a form of academic misconduct.

What potential effects may be produced by this problem representation? (RQ4)

Bacchi (2009) noted three types of effects that could be generated by a certain problem representation – discursive, subjectification and lived effects. Discursive effects denote the ways in which policy discourse shapes our understanding of what is considered relevant or important. Subjectification effects point to the power of policy discourse in shaping individual identities and subjectivities. Lived effects draw attention to the tangible consequences experienced by individuals in their daily lives. Since this study only examines policy texts, we discuss the potential effects of the represented problems rather than empirical ones.

In our analysis, we found that GenAI is presented as a threat to the originality of students’ work. Discursively, this representation implies that students’ work without any AI involvement is more ‘original’ and hence more aligned with the values underpinning academic integrity. Although multiple universities have advised teachers to create assessment designs that allow students and AI to collaborate in work production, the highlighted distinction between ‘AI-assisted work’ and ‘original work’ in policies may still construct a hierarchy – work that is thoroughly ‘human’ is understood to be more original whereas AI-collaborated work is useful but less valued. In this context, many emerging forms of human-AI collaborative work could be sidelined due to a lack of ‘originality’. In terms of subjectification, this representation may stigmatise students who use GenAI regardless of how they use it, assuming that their work is less authentic or valuable. As for lived effects, students may become hesitant about, if not resistant to, leveraging such technology in their learning.

We also found that the represented problem of work originality is mainly approached from the angle of academic misconduct. Discursively, this problem representation positions students as potentially untrustworthy and inclined to submit work that is not their own. One important observation pertains to the framing of specific guidelines (e.g. redesigning assessment, conducting oral examinations) within university policies. Some policies frame them as important not because they hold the potential to enhance student learning, but because they deter students from academic misconduct. For example, University 16 advises teachers to reconsider their assessment design because this can ‘reduce students’ likelihood of cheating’. As Adler-Kassner, Anson, and Howard (2008) rightly noted, ‘invoking “better ways to prevent plagiarism” serves only to strengthen the assumption that students are looking to plagiarize’ (235). Far too often the representations of students and technology convey a conception of education that is oriented around ‘catching’ students rather than engaging them. As a consequence, students may feel distrusted rather than empowered amidst the recent technological development. Teachers are subjectified less as educators and more as ‘gatekeepers’ who guard against academic misconduct in the digital age.

Discussion

Our analysis shows that miscellaneous GenAI-related problems have been implicitly or explicitly targeted in the policies of 20 leading universities, and the originality of students’ work is the predominant concern. These findings are not surprising as they have been mentioned, to varying extents, in opinion articles and media reports. What is particularly intriguing and worthy of further deliberation is how the problem of originality is represented in GenAI policies and its associated repercussions and implications.

The current policy framing – which views GenAI involvement as external to students’ individual efforts and intellectual contributions – reflects an engrained mindset and value orientation towards what constitutes original work. In the field of writing, Johnson-Eilola and Selber (2007) noted that there is an implied hierarchy where the best of writers’ work is always associated with the idea of a ‘lone genius in the attic slaving away on a piece of written work’ (376). Almost two decades later, this idea still subtly influences how the originality of work is interpreted and constructed by institutional policies. The distinction drawn between ‘AI-assisted work’ and ‘original work’ in university policies can inadvertently reinforce the traditional hierarchy that places greater value on solely self-generated work. The potential drawback of this is that students may become hesitant to use GenAI even for legitimate learning purposes. Students who choose to use GenAI in assessment could be stigmatised and perceived as less independent and capable.

Against this backdrop, we argue that there is a critical silence in higher education policies about the evolving notion of originality in the digital age. On the one hand, the process of producing original work is becoming more convoluted given recent technological development. Eaton (2023) wrote about how we have come to a post-plagiarism era where hybrid human-AI writing will become the new normal. She argued that AI-generated texts are not static but are often ‘edited, revised, reworked, and remixed’ by humans, making it difficult to draw the line between human and AI contributions (3). The integration of GenAI into various productivity tools (e.g. Microsoft Office, Google Workspace) only makes this process more intertwined, and Chan (2023b) noted that students may not even be aware of their use of AI in work production. On the other hand, the rise of GenAI further complicates what originality means in students’ work. While originality is traditionally regarded as ‘individualistic’ and ‘attributed to individual persons’ (Nakazawa, Udagawa, and Akabayashi 2022, 705), knowledge production in the digital age is an increasingly distributed and collaborative process. With continued advancements in technology, AI will be increasingly woven into our creativity and problem-solving process (Wu et al. 2023).

These observations point to a need for more nuanced conceptualisations of originality in higher education policies and practices. One potential avenue is to develop a situated conceptualisation of originality, which identifies key dimensions for assessing originality based on the disciplinary context and learning goal. What is considered original is often dependent on a given field or domain (Guetzkow, Lamont, and Mallard 2004) – for instance, creative writing courses often require students to produce highly innovative content while disciplines such as law may involve the use of templates and standardised language. Therefore, the definition of originality, as well as its connection to broader concerns of academic misconduct and student learning, will be dependent on ‘what is being offloaded, by whom, and for what purposes in the learning process’ (Lodge, Thompson, and Corrin 2023, 4). This will not only allow for a more nuanced understanding of originality but will also provide clarity on what specific aspects of students’ work should be original to ensure that students demonstrate particular learning outcomes on their own.

Another important finding is that higher education policies tend to frame the problem of work originality from the angle of academic misconduct. We argue that a more inclusive approach that extends beyond viewing originality through the narrow lens of surveillance should be reflected in future higher education policies. Moving forward, higher education policies can reframe originality from a collaborative perspective and situate it along a continuous spectrum. For example, a narrow framing of originality would expect students working on a scientific research project to complete all necessary stages on their own without any AI assistance. However, by reframing originality on a continuous spectrum, we can explore different degrees of collaboration between students and AI. The students may leverage GenAI to help analyse large datasets and generate initial hypotheses, after which they exercise evaluative judgement to assess and refine the AI-generated insights (Bearman and Ajjawi 2023; Luo and Chan 2023). The end product is a collaborative effort between the student and the AI, blending their respective strengths to produce an original piece of work.

Implications

This study makes contributions at the level of both knowledge and practice. At the knowledge level, the study represents the first of its kind to critically analyse GenAI policies across universities globally. This provides valuable insights into how issues around GenAI are currently prioritised and framed in higher education and exposes important areas that require further attention in policymaking. While originality as a concept often remains unquestioned, our discussion enriches the much-needed theoretical toolkit for future studies to further conceptualise and research originality in the age of AI.

Understanding the problem representation in university GenAI policies is crucial to underpin further moves at a practical level. This study evokes critical reflection on what makes a student’s work original in the presence of AI, prompting higher education policymakers and practitioners to review, rethink and revise current policy discourses around GenAI use and work originality. One recommended approach is to organize consultation meetings, seminars and debates to further challenge and deepen the concept of originality rather than seeing it as enshrined in the policies. Partnering with students in these consultations to understand how they approach GenAI and understand work originality will be desirable.

A more inclusive approach needs to be reflected in the policies to address issues around originality in students’ work. Rather than stressing originality from a surveillance angle, policies can place more emphasis on the available support to students in producing original work that is meaningful to their learning. It would also be important to acknowledge broader structural factors that influence the originality of students’ work, such as cultural traditions on technology use and the accessibility of GenAI in the university. Open communication and collaboration with students and faculty members should be stressed over compliance in developing policies to foster a culture of trust and care. Policymakers could invite student feedback on their policy development to demonstrate partnership over policing. A stronger emphasis on transparency and two-way dialogue among higher education leaders, staff and students may lead to governance perceived as more equitable and learning-centred by all stakeholders.

Limitations, future studies and conclusions

This study critically reviewed the GenAI policies of 20 leading universities worldwide via Bacchi’s WPR framework. We found that the primary problem represented in university policies concerns the originality of students’ work in the age of AI, which is predominantly framed as a matter of academic misconduct. Building on these findings, we elaborated on the evolving notion of originality in an AI-mediated assessment landscape.

To conclude, we reflect on some limitations of this study and outline potential directions for future studies. First, it is important to acknowledge that the policy analysis in this study is influenced by our own positionality as researchers. Our inclination towards adopting a critical perspective, as well as our research background in assessment for learning (rather than ‘assessment of learning’ or ‘education surveillance’), inevitably shapes our analysis. Therefore, our analysis represents only one potential interpretation of GenAI policies and is not meant to undermine the efforts invested in developing them. To broaden the range of perspectives, we encourage researchers from other fields (e.g. sociology, psychology, economics) to contribute their insights on this topic. It will also be interesting to conduct comparative policy studies on universities in different geographical or socioeconomic contexts. Rather than focusing on top universities as measured by QS Rankings, future research could expand the scope and size of policy samples to capture a broader spectrum of institutional policies.

Second, as this study focuses only on policy texts, the potential policy effects discussed here need to be corroborated by empirical evidence. At present, there is a large number of opinion articles on GenAI and assessment, but empirical research is severely lacking. We recommend more empirical studies on how teachers and students interpret and respond to GenAI policies to provide rigorous data on the policy effects.

Finally, GenAI technology is developing at an unprecedented speed and higher education policies are also being changed and updated constantly. In view of this fast-moving field, the policies under analysis should not be read as fixed or static. This paper is not a ‘definitive diagnosis’ of university GenAI policies but part of an ongoing process of shaping and improving these policies towards more ethical, effective and meaningful use of technology in assessment. We also note that our discussion of originality is still rather preliminary – there is much to be explored in this evolving landscape and we encourage researchers to delve deeper into the theoretical foundation of originality in the age of AI.

Acknowledgment

We would like to thank the three reviewers who provided very constructive feedback to enhance the paper quality.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Adler-Kassner, L., C. Anson, and R. M. Howard. 2008. “Framing Plagiarism.” In Originality, Imitation, and Plagiarism: Teaching Writing in the Digital Age, edited by C. Eisner and M. Vicinus, 231–246. Ann Arbor, MI: University of Michigan Press.

- Bacchi, C. 2009. Analysing Policy: What’s the Problem Represented to Be? Frenchs Forest: Pearson Education.

- Bacchi, C. 2010. “Policy as Discourse: What Does It Mean? Where Does It Get Us?” Discourse: Studies in the Cultural Politics of Education 21 (1): 45–57. doi:10.1080/01596300050005493.

- Bacchi, C. 2012. “Why Study Problematizations? Making Politics Visible.” Open Journal of Political Science 02 (01): 1–8. doi:10.4236/ojps.2012.21001.

- Bacchi, C., and J. Eveline. 2010. “Approaches to Gender Mainstreaming: What’s the Problem Represented to Be?” In Mainstreaming Politics: Gendering Practices and Feminist Theory, edited by C. Bacchi and J. Eveline, 111–138. Adelaide: University of Adelaide Press.

- Bacchi, C., and S. Goodwin. 2016. Poststructural Policy Analysis: A Guide to Practice. New York: Springer.

- Bearman, M., and R. Ajjawi. 2023. “Learning to Work with the Black Box: Pedagogy for a World with Artificial Intelligence.” British Journal of Educational Technology 54 (5): 1160–1173. doi:10.1111/bjet.13337.

- Bommarito, M., and D. M. Katz. 2022. “GPT Takes the Bar Exam.” arXiv Preprint.

- Cao, Y., S. Li, Y. Liu, Z. Yan, Y. Dai, P. S. Yu, and L. Sun. 2023. “A Comprehensive Survey of AI-Generated Content (AIGC): A History of Generative AI from GAN to ChatGPT.” arXiv Preprint.

- Chan, C. K. Y. 2023a. “A Comprehensive AI Policy Education Framework for University Teaching and Learning.” International Journal of Educational Technology in Higher Education 20 (1): 38. doi:10.1186/s41239-023-00408-3.

- Chan, C. K. Y. 2023b. “Is AI Changing the Rules of Academic Misconduct? An in-Depth Look at Students’ Perceptions of AI-Giarism.” arXiv Preprint.

- Cingillioglu, I. 2023. “Detecting AI-Generated Essays: The ChatGPT Challenge.” The International Journal of Information and Learning Technology 40 (3): 259–268. doi:10.1108/IJILT-03-2023-0043.

- Cotton, D. R. E., P. A. Cotton, and J. R. Shipway. 2023. “Chatting and Cheating: Ensuring Academic Integrity in the Era of ChatGPT.” Innovations in Education and Teaching International. doi:10.1080/14703297.2023.2190148.

- Dai, W., J. Lin, F. Jin, T. Li, Y. S. Tsai, D. Gašević, and G. Chen. 2023. “Can Large Language Models Provide Feedback to Students? A Case Study on ChatGPT.” EdArxiv.

- Eaton, S. E. 2023. “Postplagiarism: Transdisciplinary Ethics and Integrity in the Age of Artificial Intelligence and Neurotechnology.” International Journal for Educational Integrity 19 (1): 23. doi:10.1007/s40979-023-00144-1.

- Eshet, Y. 2023. “The Plagiarism Pandemic: Inspection of Academic Dishonesty during the COVID-19 Outbreak Using Originality Software.” Education and Information Technologies. doi:10.1007/s10639-023-11967-3.

- Foucault, M. 1986. The Use of Pleasure: The History of Sexuality. New York: Vintage.

- Gao, C. A., F. M. Howard, N. S. Markov, E. C. Dyer, S. Ramesh, Y. Luo, and A. T. Pearson. 2022. “Comparing Scientific Abstracts Generated by ChatGPT to Original Abstracts Using an Artificial Intelligence Output Detector, Plagiarism Detector, and Blinded Human Reviewers.” BioRxiv.

- Gilson, A., C. Safranek, T. Huang, V. Socrates, L. Chi, R. A. Taylor, and D. Chartash. 2022. “How Does ChatGPT Perform on the Medical Licensing Exams? The Implications of Large Language Models for Medical Education and Knowledge Assessment.” MedRxiv.

- Guetzkow, J., M. Lamont, and G. Mallard. 2004. “What is Originality in the Humanities and the Social Sciences?” American Sociological Review 69 (2): 190–212. doi:10.1177/000312240406900203.

- Johnson-Eilola, J., and S. A. Selber. 2007. “Plagiarism, Originality, Assemblage.” Computers and Composition 24 (4): 375–403. doi:10.1016/j.compcom.2007.08.003.

- Lodge, J. M., K. Thompson, and L. Corrin. 2023. “Mapping Out a Research Agenda for Generative Artificial Intelligence in Tertiary Education.” Australasian Journal of Educational Technology 39 (1): 1–8. doi:10.14742/ajet.8695.

- Loutzenheiser, L. W. 2015. “Who Are You Calling a Problem?: Addressing Transphobia and Homophobia through School Policy.” Critical Studies in Education 56 (1): 99–115. doi:10.1080/17508487.2015.990473.

- Luo, J., and C. K. Y. Chan. 2023. “Conceptualising Evaluative Judgement in the Context of Holistic Competency Development: Results of a Delphi Study.” Assessment & Evaluation in Higher Education 48 (4): 513–528. doi:10.1080/02602938.2022.2088690.

- Mizumoto, A., and M. Eguchi. 2023. “Exploring the Potential of Using an AI Language Model for Automated Essay Scoring.” Research Methods in Applied Linguistics 2 (2): 100050. doi:10.1016/j.rmal.2023.100050.

- Nakazawa, E., M. Udagawa, and A. Akabayashi. 2022. “Does the Use of AI to Create Academic Research Papers Undermine Researcher Originality?” AI 3 (3): 702–706. doi:10.3390/ai3030040.

- Nguyen, A., H. N. Ngo, Y. Hong, B. Dang, and B.-P. T. Nguyen. 2023. “Ethical Principles for Artificial Intelligence in Education.” Education and Information Technologies 28 (4): 4221–4241. doi:10.1007/s10639-022-11316-w.

- Nieminen, J. H., and S. E. Eaton. 2023. “Are Assessment Accommodations Cheating? A Critical Policy Analysis.” Assessment & Evaluation in Higher Education. doi:10.1080/02602938.2023.2259632.

- Organization for Economic Cooperation and Development [OECD]. 2023. Opportunities, Guidelines and Guardrails on Effective and Equitable Use of AI in Education. Paris: OECD Publishing. https://www.oecd.org/education/ceri/Opportunities,%20guidelines%20and%20guardrails%20for%20effective%20and%20equitable%20use%20of%20AI%20in%20education.pdf

- Rudolph, J., S. Tan, and S. Tan. 2023. “ChatGPT: Bullshit Spewer or the End of Traditional Assessments in Higher Education?” Journal of Applied Learning and Teaching 6 (1): 1–22.

- Russell Group. 2023. New principles on use of AI in education. https://russellgroup.ac.uk/news/new-principles-on-use-of-ai-in-education/

- University World News. 2023. Oxford and Cambridge ban ChatGPT over plagiarism fears. https://www.universityworldnews.com/post.php?story=20230304105854982

- United Nations Educational, Scientific and Cultural Organization [UNESCO]. 2023. Guidance for generative AI in education and research. https://www.unesco.org/en/articles/guidance-generative-ai-education-and-research

- Schiff, D. 2022. “Education for AI, Not AI for Education: The Role of Education and Ethics in National AI Policy Strategies.” International Journal of Artificial Intelligence in Education 32 (3): 527–563. doi:10.1007/s40593-021-00270-2.

- South China Morning Post. 2023. Hong Kong universities relent to rise of ChatGPT, AI tools for teaching and assignments, but keep eye out for plagiarism. https://www.scmp.com/news/hong-kong/education/article/3233269/hong-kong-universities-relent-rise-chatgpt-ai-tools-teaching-and-assignments-keep-eye-out-plagiarism

- Sullivan, M., A. Kelly, and P. McLaughlan. 2023. “ChatGPT in Higher Education: Considerations for Academic Integrity and Student Learning.” Journal of Applied Learning & Teaching 6 (1): 1–10.

- Tawell, A., and G. McCluskey. 2021. “Utilising Bacchi’s What’s the Problem Represented to Be? (WPR) Approach to Analyse National School Exclusion Policy in England and Scotland: A Worked Example.” International Journal of Research & Method in Education 45 (2): 137–149. doi:10.1080/1743727X.2021.1976750.

- Tertiary Education Quality and Standards Agency [TEQSA]. 2023. Assessment reform for the age of artificial intelligence. https://www.teqsa.gov.au/guides-resources/resources/corporate-publications/assessment-reform-age-artificial-intelligence

- Woo, E. 2022. “What is the Problem Represented to Be in China’s World-Class University Policy? A Poststructural Analysis.” Journal of Education Policy 38 (4): 644–664. doi:10.1080/02680939.2022.2045038.

- Wu, Z., D. Ji, K. Yu, X. Zeng, D. Wu, and M. Shidujaman. 2023. “AI Creativity and the Human-AI Co-Creation Model.” In Human-Computer Interaction. Theory, Methods and Tools, edited by M. Kurosu. Switzerland: Springer.

- Yan, L., L. Sha, L. Zhao, Y. Li, R. Martinez-Maldonado, G. Chen, X. Li, Y. Jin, and D. Gašević. 2023. “Practical and Ethical Challenges of Large Language Models in Education: A Systematic Scoping Review.” British Journal of Educational Technology 55 (1): 90–112. doi:10.1111/bjet.13370.