Abstract

Introduction

Systematic reviews (SR) and systematic reviews with meta-analysis (SRMA) can constitute the highest level of research evidence. Such evidence syntheses are relied upon heavily to inform the clinical knowledge base and to guide clinical practice for meningioma. This review evaluates the reporting and methodological quality of published meningioma evidence syntheses to date.

Methods

Eight electronic databases/registries were searched to identify eligible meningioma SRs with and without meta-analysis published between January 1990 and December 2020. Articles concerning spinal meningioma were excluded. Reporting and methodological quality were assessed against the following tools: Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA), A MeaSurement Tool to Assess systematic Reviews (AMSTAR 2), and Risk Of Bias in Systematic reviews (ROBIS).

Results

A total of 116 SRs were identified, of which 57 were SRMAs (49.1%). The mean PRISMA score for SRMA was 20.9 out of 27 (SD 3.9, 77.4% PRISMA adherence) and for SR without meta-analysis was 13.8 out of 22 (SD 3.4, 62.8% PRISMA adherence). Thirty-eight studies (32.8%) achieved greater than 80% adherence to PRISMA. Methodological quality assessment against AMSTAR 2 revealed that 110 (94.8%) studies were of critically low quality. Only 21 studies (18.1%) were judged to have a low risk of bias against ROBIS.

Conclusion

The reporting and methodological quality of meningioma evidence syntheses was poor. Established guidelines and critical appraisal tools may be used as an adjunct to aid methodological conduct and reporting of such reviews, in order to improve the validity and transparency of research which may influence clinical practice.

Introduction

Meningioma is the most common primary intracranial tumour in adults. Evidence syntheses in the form of SRs and SRMAs are increasingly utilised to provide clinicians with a wider clinical knowledge base and to guide decision making across neurosurgery, especially for meningioma. However, retrospective studies of variable quality often underpin such work. A previous assessment of neurosurgical meta-analyses found methodological conduct and reporting to be suboptimal.Citation1 For a disease which relies so heavily on evidence syntheses, sound methodological quality and comprehensive and transparent reporting are of paramount importance.Citation2

Efforts have been made to address the reporting quality, methodological quality and risk of bias (RoB) of SRs. Notably, PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) provides SRMA reporting recommendations for authors (published 2009 and updated 2021).Citation3 AMSTAR 2 (A MeaSurement Tool to Assess systematic Reviews)Citation2 and ROBIS (Risk Of Bias In Systematic reviews)Citation4 are widely recognised tools that may be used to assess the methodological quality and RoB of SRs respectively. AMSTAR 2Citation2 provides a 16-item framework for the appraisal of the methodological quality of SRs. ROBIS,Citation4 completed in three phases, may also be used to assess methodological quality, but has a particular emphasis on RoB. Whilst none of these tools is exclusive or mandatory, they are acknowledged as useful.

The aim of this review of reviews was to make a post hoc assessment of both the reporting and methodological quality (including RoB), of published meningioma SRs and SRMAs, using adaptations of tools and guidelines currently available.

Materials and methods

Information sources

PubMed, EMBASE, CINAHL and Epistemonikos were examined through a detailed search strategy from 1 January 1990 until 31 December 2020. The following electronic registries were examined with the search term ‘meningioma’: Cochrane Central Register of Controlled Trials, Database of Abstracts of Reviews of Effects (DARE), Joanna Briggs Institute and PROSPERO. The year 1990 was chosen as the start date because very few SRs were likely to have been performed before then.Citation5 The complete PubMed search strategy is provided as an example in supplementary appendix 1.

Design and eligibility criteria

The Cochrane Collaboration definition of a systematic review was used to assess eligibility and required that the following criteria were met: 1) a research question is posed, 2) a systematic literature search is performed, 3) specific eligibility criteria are stated and 4) an attempt is made to analyse data or draw conclusions from collated studies.Citation6 SRs were considered to include meta-analysis if stated as such, or if study-level data were combined using recognised meta-analytical methods (rather than averages of study-level data only).Citation7 Only full-text reviews concerning cranial meningioma written in the English language were included (due to limitations of translation services). The full eligibility criteria are described in supplementary appendix 2.

Study selection and data extraction

The online platform Rayyan was used for de-duplication and screening of titles and abstracts.Citation8 Screening was performed by two reviewers independently (SG and AMG). All potentially eligible full-text articles were then assessed for inclusion by the same two review authors (SG and AMG). Disagreements were resolved between the two reviewers and, if resolution was not possible, discussed with the senior author (CPM). Reference lists of all screened full-text articles were hand-searched to identify any articles not found through electronic database and registry searches.

Data was extracted by a single review author (SG, AMG, MAM, CSG, GER, ACS, AII, CPM). A second review author (SG, AMG, MAM, CSG, GER, ACS, AII, CPM) checked every data point in discussion with the primary data extractor. In addition, every allocated review typology, question format, AMSTAR 2 critical domain, and overall RoB as per ROBIS were also checked by two senior authors (AII, CPM) in discussion with the data extractor and data checker, to ensure agreement by at least four authors in total.

Data was extracted into a piloted spreadsheet in Microsoft Excel utilising all available information provided by the authors, including supplementary files. Demographic data included study details (journal, 2020 impact factor, country of first author, year of publication, title), number of databases searched, and whether meta-analysis had been performed.

Assessment of review typology and question format

Nine types of SR have previously been described and each is associated with a specific question format.Citation9 For example, an effectiveness review assesses the effectiveness of an intervention and the question format would be PICO (population, intervention, comparators, outcomes).Citation9 Review type and question format were assigned by the study team for each included review, and subsequently compared to the typology and question format assigned by the authors themselves, when stated. A summary of review typologies, associated question formats and explanations is provided in supplementary appendix 3.

Assessment of methodological quality

Methodological quality was assessed against AMSTAR 2, a 16-domain tool appraising the conduct of study selection, data collection and appraisal, findings and funding for studies of healthcare interventions.Citation2 Annotations for interpretation across a wider range of systematic review typologies were added for several items and are described in supplementary appendix 4.

Domains 11, 12 and 15 were considered inapplicable to SRs without meta-analysis and were not considered when assigning a confidence rating to the reviews. Domains 2, 4, 7, 9, 11, 13 and 15 are stated to be ‘critical’ according to the tool and deemed to substantially influence the validity of the review.Citation2 For this study, the total number of critical domains considered for SRMAs was 7 (Domains 2, 4, 7, 9, 11, 13, 15) and 5 for systematic reviews without meta-analysis (Domains 2, 4, 7, 9, 13). Domains were scored as yes, no or partial yes according to the guidance document. For the purposes of this study, partial yes was considered as a yes when considering whether a critical domain was met or not.

Based on the number of critical and non-critical domains not met (according to AMSTAR 2 guidance), an overall confidence rating can be ascribed to each SR. Confidence ratings included: critically low (more than one critical domain not met), low (one critical domain not met), moderate (more than one non-critical domain not met) and high (no more than one non-critical domain not met).
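
The rating rules above can be sketched in code. The following is a minimal illustration only, not the study team's actual spreadsheet logic: the function name `amstar2_confidence` and the score labels are hypothetical, and treating "partial yes" as met for non-critical domains as well as critical ones is an assumption (the text states this only for critical domains).

```python
from typing import Mapping

# Critical domains as listed in the Methods; names below are illustrative.
CRITICAL_SRMA = frozenset({2, 4, 7, 9, 11, 13, 15})
CRITICAL_SR = frozenset({2, 4, 7, 9, 13})
# Domains treated as inapplicable to SRs without meta-analysis.
NOT_APPLICABLE_SR = frozenset({11, 12, 15})


def amstar2_confidence(scores: Mapping[int, str], has_meta_analysis: bool) -> str:
    """Return an overall confidence rating from per-domain scores.

    `scores` maps domain number (1-16) to "yes", "partial yes" or "no".
    Assumption: "partial yes" counts as met for every domain.
    """
    critical = CRITICAL_SRMA if has_meta_analysis else CRITICAL_SR
    applicable = [
        d for d in range(1, 17)
        if has_meta_analysis or d not in NOT_APPLICABLE_SR
    ]
    unmet = {d for d in applicable if scores[d] == "no"}
    critical_unmet = len(unmet & critical)
    noncritical_unmet = len(unmet - critical)

    if critical_unmet > 1:
        return "critically low"
    if critical_unmet == 1:
        return "low"
    if noncritical_unmet > 1:
        return "moderate"
    return "high"
```

Under these assumptions, a review that fails both Domain 2 (protocol) and Domain 7 (list of excluded studies) is rated critically low regardless of its performance elsewhere, which is the pattern that drove most ratings in this study.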

Assessment of risk of bias

The ROBIS tool was used to assess RoB of reviews and involves 3 phases.Citation4 Phase 1 (‘Assessing Relevance’) was not conducted as this is optional and relevance was assessed during study selection. Phase 2 involves four domains (‘eligibility criteria’, ‘study selection’, ‘data collection and appraisal’, ‘synthesis and findings’). All domains were deemed applicable to SRMAs. However, Items 4.4, 4.5, 4.6 within domain 4 were interpreted to be inapplicable to SRs without meta-analysis; non-compliance with these items was not penalised by the study team. Each domain in Phase 2 was scored as high, low, or unclear RoB. High concern was graded if 2 or more criteria were marked as high or unclear concern. Phase 3 involves generating an overall RoB rated either high or low; low quality was scored if two or more concerns were identified in a domain within Phase 2. A description of this tool with annotations is provided in supplementary appendix 5.

Assessment of reporting quality

Reporting quality was assessed against the PRISMA guidelines (2009), encompassing 27 different items. Five items (Items 14, 15, 16, 22 and 23) were considered inapplicable to SRs without meta-analysis. For this study, the maximum PRISMA score was therefore 27 for a SRMA and 22 for a SR without meta-analysis. A single point was awarded for each item judged to have been achieved when evidenced within the manuscript or supplementary materials. These scores were converted to percentages to reflect adherence to the guideline. Individual PRISMA items and their application within this study are provided in supplementary appendix 6.
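
As an illustration of the scoring described above, the conversion from items met to percentage adherence might look like the sketch below. The function name `prisma_adherence` and its return shape are hypothetical and are not taken from the study; only the item numbers and maximum scores come from the text.

```python
from typing import Iterable, Tuple

# Items considered inapplicable to SRs without meta-analysis (from the Methods).
SRMA_ONLY_ITEMS = frozenset({14, 15, 16, 22, 23})


def prisma_adherence(items_met: Iterable[int], has_meta_analysis: bool) -> Tuple[int, int, float]:
    """Return (score, maximum score, percentage adherence) for one review.

    One point is awarded per applicable PRISMA item judged to be met.
    Items marked as met but inapplicable to the review type are ignored.
    """
    applicable = set(range(1, 28))  # PRISMA 2009 items 1-27
    if not has_meta_analysis:
        applicable -= SRMA_ONLY_ITEMS
    score = len(set(items_met) & applicable)
    maximum = len(applicable)
    return score, maximum, 100.0 * score / maximum
```

For example, a SRMA meeting 21 of the 27 items would have an adherence of about 77.8%, whereas a SR without meta-analysis is scored out of 22.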

Statistical analysis

Categorical data are reported as frequencies and proportions. Continuous variables and outcomes were assessed for normality using the Kolmogorov–Smirnov test. Normally distributed data are expressed as mean values (standard deviation [SD]), and non-normally distributed data are presented as median (interquartile range [IQR]). Statistical analyses were conducted with SPSS Version 27 (IBM corporation).

Results

Study characteristics

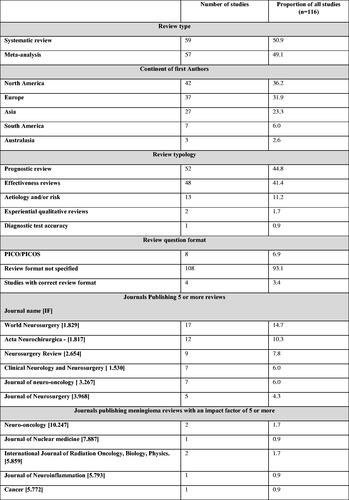

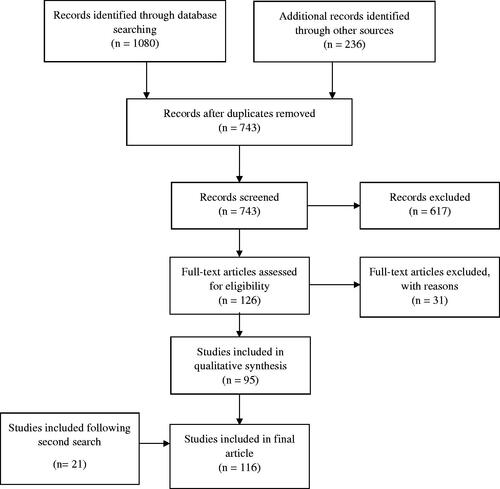

One hundred and sixteen articles published between 1 January 1990 and 31 December 2020 were included: 59 SRs and 57 SRMAs. The search, screening, and selection results are summarised in Figure 1.

Figure 1. PRISMA flow chart. One thousand and eighty titles were identified following the initial searches. Screening of titles and abstracts resulted in 126 studies undergoing full-text review. Ninety-five articles were included for analysis. A repeat search of all databases identified a further 110 new titles to be screened, resulting in a further 21 articles included for analysis. The total number of articles included for analysis was 116.

Since 1990, publication of meningioma SRs has steadily increased. More than 50% (N = 62) were published between 2018 and 2020 (supplementary appendix 7). One-third of articles were published by authors with a primary affiliation in the U.S.A. (N = 39, 33.6%). SRs without meta-analysis most commonly originated from the U.S.A. (25 of 59, 42.4%), whilst SRMAs most commonly originated from China (19 of 57, 33.3%). Reviews were published in 48 different journals with a median 2020 impact factor of 1.83 [IQR 1.76–3.27]. Included reviews were published most frequently in World Neurosurgery (N = 17, 14.7%).

Prognostic reviews were most common (N = 52, 44.8%), followed by effectiveness reviews (N = 48, 41.4%), and reviews exploring aetiology and/or risk (N = 13, 11.2%). Only eight reviews (6.9%) described the question format used, and only four of these reviews (3.4%) applied a question format that was appropriate to the review typology of that article. Review characteristics are summarised in .

Individual study characteristics for SRs without meta-analysis are provided in supplementary appendix 8 and for SRMAs in supplementary appendix 9.

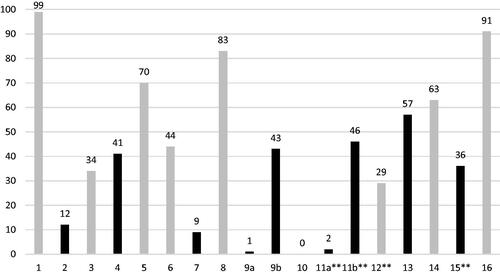

Quality of methodology as per AMSTAR 2

Out of the seven critical domains contributing to the downgrading of AMSTAR 2 confidence ratings, the two most frequent reasons were: 1) failure to state whether review methods were established prior to the conduct of the review (N = 104, 89.7%) and 2) failure to provide justifications for the exclusion of studies at the time of screening (N = 107, 92.2%). Only 14 studies (12.1%) provided a comprehensive search strategy (Item 4). One hundred and ten reviews (94.8%) were classified as critically low quality, five (4.3%) as low quality, and only one as high quality. A graphical summary of mean AMSTAR 2 adherence rates per domain is provided in the accompanying figure and individual AMSTAR 2 criteria adherence rates in supplementary appendix 10. Individual domain scores and overall confidence ratings for each included review can be seen in supplementary appendices 8 and 9.

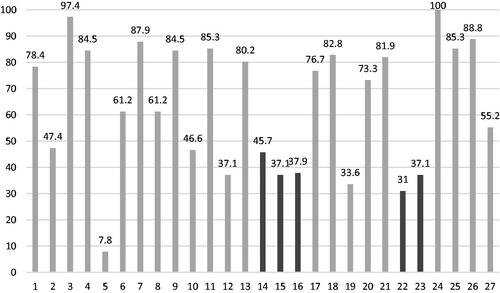

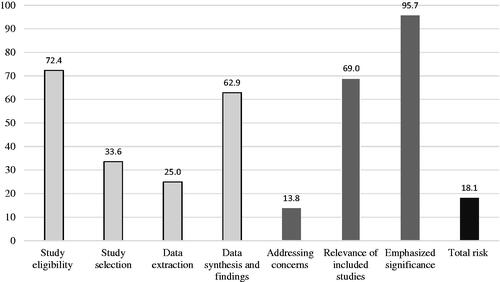

Risk of bias assessment as per ROBIS

Only 21 (18.1%) reviews were classified as ‘low RoB’ against ROBIS. The ‘data extraction’ Phase 2 domain resulted in the greatest number of reviews concluding with ‘high’ concern (N = 87, 75.0%), whilst least concern was associated with the ‘eligibility criteria’ domain (N = 84, 72.4%). Only 16 articles (13.8%) addressed, in their discussion, the concerns that had been identified in the individual domains. Domain adherence rates are shown in Figure 4 and individual results can be seen in supplementary appendix 11.

Figure 4. Compliance of studies with ROBIS. The first four columns, shown in the lightest shade, denote the proportion of studies assessed as having a low risk of bias within the domains of: eligibility for inclusion of individual studies, selection process used in the review, extraction of data from individual studies and synthesis of extracted data. The positive responses to the Phase 3 signalling questions are then represented in darker shades. Signalling questions addressed whether: the reviewers mentioned concerns raised by the four domains in ROBIS, the included studies were relevant to the review question, and the review emphasised results based on statistical significance as opposed to relevance. The last column denotes the proportion of articles that scored a low risk of bias after assessment by the tool.

Quality of reporting as per PRISMA

The mean PRISMA reporting score of SRs without meta-analysis was 13.8 out of a maximum of 22 (SD 3.4, PRISMA adherence 62.8%) and 20.9 out of a maximum of 27 (SD 3.9, PRISMA adherence 77.4%) for SRMAs.

Nine reviews (7.8%) stated that a protocol had been written prior to commencement of the review (Item 5). Forty-three reviews (37.1%) conducted RoB assessments for individual studies (Item 12), and 39 reviews (33.6%) presented the results of this assessment (Item 19). Almost half of the reviews (N = 55, 47.4%) provided a structured abstract (Item 2), and 64 reviews (55.2%) presented information on funding sources (Item 27). Regarding SRMA PRISMA recommendations, 36 studies (63.2% of meta-analyses) reported bias across studies (Item 22). A graphical summary of mean PRISMA adherence rates is provided in the accompanying figure and individual PRISMA item adherence rates are summarised in supplementary appendix 12.

Discussion

Systematic review is an important and accepted methodology for the assimilation of research evidence to inform the clinical knowledge base and guide clinical practice. Intracranial meningioma SRs should not only be completed with a high degree of methodological quality, but also reported comprehensively to facilitate transparent interpretation of new knowledge in meningioma clinical practice. The robustness of the results of such syntheses is limited by: 1) the existing literature, and 2) the methodological approach and reporting of the review by the review authors. Meningioma clinical knowledge and practice are increasingly influenced by such evidence syntheses. Of particular note, meningioma clinical practice is not often informed by controlled clinical trials, with effectiveness reviews of low-quality retrospective cohort data often utilised to guide clinical decision making.

A previous review of methodological and reporting quality of a cohort of neurosurgical SRMAs demonstrated adherence rates less than 50% when assessed against AMSTAR and PRISMA, although the AMSTAR tool was an earlier version which did not incorporate a final confidence rating.Citation1 This methodological review demonstrates very poor methodological quality of meningioma evidence syntheses when judged against AMSTAR 2 and ROBIS, and poor adherence against PRISMA reporting guidelines. This study highlights common methodological and reporting deficiencies in the meningioma literature and suggests areas for improvement for systematic reviewers in general.

Review typology and question format

Defining review type is pivotal in guiding the rest of the review process, not least because it influences the review question format to be used. For example, prognostic reviews do not require interventions to be defined, as would be done if the PICOS question format was used for an effectiveness review. Instead, a prognostic review question format of PEO (population, exposure of interest, outcome) may be more appropriate. Historically, most SRs were concerned with the effectiveness of an intervention, which may explain why many reviewers utilise the PICOS question format. However, more recently, reviewers have tackled a wider range of questions such as quality of life and prognostication. Munn et al.Citation9 serve as one particular introductory guide to review typologies and appropriate question formats. This study demonstrated that 93% of systematic review authors did not describe the question format used. Of the eight that did, only four utilised a question format appropriate to the review typology. Whilst this may not introduce bias, it does constitute a fundamental methodological flaw and warrants greater consideration.

Quality of methodology and risk of bias

Quality assessment of a review’s methodology is integral to accurate critical appraisal and balanced decision making. The methodological quality of included meningioma reviews was critically low, with a majority having a high RoB. Nonetheless, most authors successfully provided information regarding the research question (AMSTAR 2 Item 1, ROBIS Domain 1) and conflicts of interest (AMSTAR 2 Item 16).

Major methodological flaws included failure to follow a protocol that was written and verified prior to the conduct of the review (AMSTAR 2 Item 2) and failure to provide a list of excluded studies, with justifications for exclusion at the time of title/abstract screening (AMSTAR 2 Item 7). Review protocols serve to prevent duplication of effort, allow researchers to anticipate and resolve potential issues prior to conduct and provide a record of the reviewers’ intentions, thereby mitigating reporting bias in their results. Ideally, research protocols should follow the PRISMA-P recommended guidelines and be verified independently through publication in a peer-reviewed journal or, alternatively, added to registries such as PROSPERO or the Research Registry.Citation10–13 Furthermore, journals could mandate protocol registration as a prerequisite for publication. Providing a list of excluded studies and the reason for exclusion at the title/abstract screening stage (not only at full-text analysis) may be an unrealistic expectation considering that some reviews require the screening of thousands of records. Ultimately, this was the reason why so many reviews were graded as critically low in this study.

Most reviews failed to provide eligibility criteria rationales (AMSTAR 2 Item 4). Publication restrictions are commonplace, especially English language restrictions. There may be acceptable reasons for restrictions, such as financial limitations and inaccessibility of translation services, but providing these rationales would ultimately improve the reliability of a review.

None of the reviews reported on funding sources of all included papers (AMSTAR 2 Item 10). When interventions are in question, which is the case for effectiveness reviews, industry funding of included studies may be a source of bias and thus introduce bias to the review’s conclusions.Citation14 Major deficiencies were found in the method of assessing bias within individual studies (AMSTAR 2 Item 12, ROBIS Domain 3) and across studies (publication bias) (AMSTAR 2 Item 15, ROBIS Domain 4). Measuring RoB within an individual study allows exclusion of low-quality studies, subsequently increasing the reliability of the synthesised results. Systematic reviewers should use an appropriate study-specific RoB assessment tool such as the Joanna Briggs Institute (JBI) critical appraisal checklist.Citation15 Publication bias should also be evaluated in SRMAs to minimise heterogeneity arising from confounders and may be assessed using funnel plot analysis, and its statistical significance determined through application of Begg’s and Egger’s tests.Citation16
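
To make the funnel plot asymmetry assessment concrete, the sketch below implements the regression described by Egger et al.Citation16 in plain Python: each study's standardised effect (effect divided by its standard error) is regressed on its precision (the reciprocal of the standard error), and an intercept far from zero suggests small-study effects. The names `egger_regression` and `EggerResult` are hypothetical, the data in the usage note are invented for illustration, and a p-value (from a t distribution with n − 2 degrees of freedom) is deliberately omitted to keep the example dependency-free.

```python
import math
from typing import NamedTuple, Sequence


class EggerResult(NamedTuple):
    intercept: float      # asymmetry estimate; near 0 for a symmetric funnel
    slope: float          # estimate of the underlying effect size
    intercept_se: float   # standard error of the intercept
    t_statistic: float    # intercept / intercept_se (nan if the SE is zero)


def egger_regression(effects: Sequence[float], std_errors: Sequence[float]) -> EggerResult:
    """Ordinary least squares of (effect / SE) on (1 / SE)."""
    n = len(effects)
    if n != len(std_errors) or n < 3:
        raise ValueError("need at least three studies with matching standard errors")
    x = [1.0 / se for se in std_errors]                 # precision
    y = [e / se for e, se in zip(effects, std_errors)]  # standardised effect
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("standard errors must not all be identical")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residual_ss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    residual_var = residual_ss / (n - 2)
    intercept_se = math.sqrt(residual_var * (1.0 / n + mean_x ** 2 / sxx))
    t_stat = intercept / intercept_se if intercept_se > 0 else math.nan
    return EggerResult(intercept, slope, intercept_se, t_stat)
```

A call such as `egger_regression([0.42, 0.31, 0.55, 0.20, 0.65], [0.10, 0.15, 0.30, 0.08, 0.40])` returns the intercept and its t-statistic, which a reviewer could then compare against a t distribution. In practice an established meta-analysis package would normally be preferred over hand-written code.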

Quality of reporting

The PRISMA guidelines serve to improve the quality of reporting; a lack of reporting transparency impairs the readers’ ability to assess the relative strengths and weaknesses of a review.Citation3,Citation17 Average adherence to PRISMA in this study (70%) compared favourably to PRISMA adherence in similar studies within plastic surgery (59%) and orthopaedic surgery (68%).Citation18,Citation19 SRMAs had better reporting quality than SRs without meta-analysis (77.4% versus 62.8%), which may be due to meta-analyses requiring more extensive planning and a greater awareness of required methodology.

Compliance with the PRISMA items on study rationales (Item 3), information sources (Item 7), limitations (Item 25) and conclusions (Item 26) was high. However, PRISMA items related to providing the study type in the title (Item 1) and structured abstracts (Item 2) had low adherence rates. Informative titles should be used as they are known to improve the identification of such reviews in the future.Citation20 Although word count limits are imposed by journals, abstracts should aim to report information such as background with objectives, study selection criteria, appraisal and synthesis methods, results with limitations and a conclusion, in accordance with the PRISMA Abstracts Checklist.Citation17

Several authors failed to report eligibility criteria (Item 6) and search strategies (Item 8). Eligibility criteria provide authors the opportunity to narrow their search by consideration of criteria such as study type, participants, intervention and outcomes.Citation6 Full search strategies should be provided to allow for reproducibility of searches.Citation3 Almost half of the articles failed to declare funding (Item 27). Any financial support should be disclosed to allow readers to make a clear judgement regarding the influence a funding source may have on the results.

Recommendations

Efforts should be directed towards improving SR quality wherever possible. We offer the following specific recommendations to mitigate against common flaws, based on the findings of this study:

Early inclusion of a research methodologist may be of benefit to increase methodological quality, lower RoB and promote comprehensive reporting.

Dedicated resources for SR methodology should be utilised such as the Cochrane Handbook, which details the process of conducting evidence syntheses.Citation6

Review typology should be considered and an appropriate question format generated.Citation9

A protocol should be written a priori and can be published in a peer-reviewed journal or registered in an appropriate repository such as PROSPERO or the research registry.Citation12,Citation13

A rationale should be provided for study eligibility criteria.

Review abstracts should aim to summarise the following, as applicable: background with objectives, study selection criteria, appraisal and synthesis methods, results with limitations and a conclusion as outlined by the PRISMA 2020 abstracts checklist.Citation17

RoB in individual studies should be assessed and reported. Study-specific tools such as the JBI checklist for cross-sectional studies and RoB 2 for RCTs may be employed.Citation15

Publication bias assessment should be conducted and reported. This may be done in a SRMA through funnel plot analysis and application of Begg’s and Egger’s tests.Citation16

Alongside recommendations made to authors, journal editors could endorse use of these tools. PRISMA is the most notable guideline amongst the three, with more than 50 journal endorsements across multiple specialties.Citation21 Unfortunately, aside from Neurosurgery, none of the other top 10 neurosurgery or neuro-oncology journals formally endorse the PRISMA statement.Citation21

Limitations

However useful for the critical appraisal of SRs, the tools applied in this study, and our conclusions of poor methodological and reporting quality, do not gauge the clinical impact of such work. Therefore, we do not suggest that the identified reviews lack merit.

Deciding whether articles were, as a minimum, a SR was a subjective assessment, but was guided by the Cochrane definition of what constitutes a systematic review. This may have led to the inclusion of ineligible reviews or the exclusion of eligible ones. Only English language articles were considered and, to a degree, our results therefore reflect quality within the English language literature. Annotations made to the tools by the study team for SRs without meta-analysis were also subjective; they aimed to allow fair assessment of a wider selection of evidence syntheses, but may have contributed bias.

PRISMA was originally produced for the reporting of effectiveness reviews: it requires authors to state their objective using PICOS (Item 4), which may explain why authors do not use other more appropriate question formats. The AMSTAR 2 tool has been designed to appraise SRs of studies of healthcare interventions; however, we adapted this tool to be used for all SR types (e.g. prognostic reviews), which may have contributed to lower confidence ratings. We used PRISMA (2009), ROBIS (2016) and AMSTAR 2 (2017) post hoc to assess all reviews, and whilst we conducted this study under the expectation of review authors not being aware of such tools, SRs published prior to guideline introduction would invariably have contributed lower scores.Citation4,Citation5,Citation17 An updated PRISMA statement (PRISMA 2020) was released at the conclusion of this study. It further elaborates on the criteria required to fulfil the original 27 domains in the 2009 guidelines but, fundamentally, does not alter the reporting quality of the existing literature and its assessment in this study.Citation17

Conclusion

This review of reviews has demonstrated the need to improve the methodological and reporting quality of meningioma evidence syntheses. Systematic reviewers are encouraged to utilise dedicated resources intended to guide review methodology. The aforementioned established critical appraisal tools and reporting guidelines may be used as adjuncts to enhance the methodological and reporting quality of their work, with the hope of ultimately improving research standards and clinical management derived from such evidence syntheses.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Klimo P, Thompson CJ, Ragel BT, Boop FA. Methodology and reporting of meta-analyses in the neurosurgical literature. J Neurosurg 2014;120:796–810.

- Shea BJ, Reeves BC, Wells G, et al. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ 2017;358:j4008.

- Liberati A, Altman DG, Tetzlaff J, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ 2009;339:b2700.

- Whiting P, Savović J, Higgins JPT, et al.; ROBIS Group. ROBIS: A new tool to assess risk of bias in systematic reviews was developed. J Clin Epidemiol 2016;69:225–34.

- Smith V, Devane D, Begley CM, Clarke M. Methodology in conducting a systematic review of systematic reviews of healthcare interventions. BMC Med Res Methodol 2011;11:15.

- Cochrane Handbook for Systematic Reviews of Interventions; 2021. Available from: https://training.cochrane.org/handbook [last accessed 2 Aug 2021].

- Shorten A, Shorten B. What is meta-analysis? Evid Based Nurs 2013;16:3–4.

- Ouzzani M, Hammady H, Fedorowicz Z, Elmagarmid A. Rayyan—a web and mobile app for systematic reviews. Syst Rev 2016;5:210.

- Munn Z, Stern C, Aromataris E, Lockwood C, Jordan Z. What kind of systematic review should I conduct? A proposed typology and guidance for systematic reviewers in the medical and health sciences. BMC Med Res Methodol 2018;18:5.

- Moher D, Shamseer L, Clarke M, et al.; PRISMA-P Group. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst Rev 2015;4:1.

- The BMJ. Correlation and regression; 2020. Available from: https://www.bmj.com/about-bmj/resources-readers/publications/statistics-square-one/11-correlation-and-regression [last accessed 12 Sep 2021].

- PROSPERO; 2021. Available from: https://www.crd.york.ac.uk/prospero/ [last accessed 15 Sep 2021].

- Research Registry. Research Registry; 2021. Available from: https://www.researchregistry.com/ [last accessed 15 Sep 2021].

- Khan NR, Saad H, Oravec CS, et al. A review of industry funding in randomized controlled trials published in the neurosurgical literature – the elephant in the room. Neurosurgery 2018;83:890–7.

- Farrah K, Young K, Tunis MC, Zhao L. Risk of bias tools in systematic reviews of health interventions: an analysis of PROSPERO-registered protocols. Syst Rev 2019;8:280.

- Egger M, Davey Smith G, Schneider M, Minder C. Bias in meta-analysis detected by a simple, graphical test. BMJ 1997;315:629–34.

- Page MJ, McKenzie JE, Bossuyt PM, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 2021;372:n71.

- Gagnier JJ, Kellam PJ. Reporting and methodological quality of systematic reviews in the orthopaedic literature. J Bone Joint Surg Am 2013;95:e771–7.

- Lee S-Y, Sagoo H, Whitehurst K, et al. Compliance of systematic reviews in plastic surgery with the PRISMA statement. JAMA Facial Plast Surg 2016;18:101–5.

- O’Donohoe TJ, Dhillon R, Bridson TL, Tee J. Reporting quality of systematic review abstracts published in leading neurosurgical journals: a research on research study. Neurosurgery 2019;85:1–10.

- PRISMA; 2021. Available from: http://www.prisma-statement.org/Endorsement/PRISMAEndorsers [last accessed 17 May 2021].