ABSTRACT

It is known that people covertly attend to threatening stimuli even when it is not beneficial for the task. In the current study we examined whether overt selection is affected by the presence of an object that signals threat. We demonstrate that stimuli that signal the possibility of receiving an electric shock capture the eyes more often than stimuli signalling no shock. Capture occurred even though the threat-signalling stimulus was neither physically salient nor task relevant at any point during the experiment. Crucially, even though fixating the threat-related stimulus made it more likely to receive a shock, results indicate that participants could not help but do so. Our findings indicate that a stimulus merely signalling the possibility of receiving a shock is prioritised in selection, and exogenously captures the eyes even when this ultimately results in the execution of the threat (i.e. receiving a shock). Oculomotor capture was particularly pronounced for the fastest saccades, which is consistent with the idea that threat influences visual selection at an early stage of processing, when selection is mainly involuntary.

In order to adequately deal with threat, it is important that potential danger is immediately detected and acted upon. In line with the idea that a bias in the processing of threat is adaptive and has survival value (LeDoux, 1996), numerous studies have demonstrated that threatening stimuli are attended even when this is not beneficial for the task, or not part of the task set (e.g. Koster, Crombez, Van Damme, Verschuere, & De Houwer, 2004). Neuroimaging studies have demonstrated that neural activation associated with threat-related stimuli is obligatory and can even proceed without spatial attention (Vuilleumier, Armony, Driver, & Dolan, 2001; Whalen et al., 1998; but see Pessoa, McKenna, Gutierrez, & Ungerleider, 2002).

Studies investigating biased processing have shown that pictures displaying threat (e.g. spiders or angry faces) receive attentional priority (for a review see Vuilleumier, 2005). For example, the time to find a threat-related target presented among neutral distractors is shorter compared to a neutral target (e.g. Soares, Esteves, Lundqvist, & Öhman, 2009). Similarly, search times for a neutral target are longer when one of the distractors is threat-related compared to when all distractors are neutral (e.g. Devue, Belopolsky, & Theeuwes, 2011). Spatial cueing studies have demonstrated that response times on valid trials, when the cue is presented at the same location as the target, are faster for threat-related compared to neutral cues (e.g. Yiend & Mathews, 2001).

Studies using aversive conditioning have demonstrated that a cue signalling the possibility of an aversive tone resulted in faster response times on valid and slower response times on invalid trials (Koster et al., 2004). Notebaert, Crombez, Van Damme, De Houwer, and Theeuwes (2011) showed that search times were facilitated when the target was presented near the stimulus signalling the possibility of an electric shock. Whether this latter study demonstrated true attentional capture causing modulation in search time is, however, unclear, as the location of the target and the threat-related stimulus could be the same in this study such that there were no actual costs to attend the threat-related stimulus. To overcome this drawback, Schmidt, Belopolsky, and Theeuwes (2015a) used a task in which a threatening distractor never coincided with the target, implying that attending it would always harm performance. During an initial fear-conditioning phase, the colour of one of the non-target stimuli was associated with the delivery of a shock while another colour was associated with the absence of the shock. In a subsequent test phase, in which no shocks were delivered, participants had to covertly search for a circle among diamonds and report the orientation of a line inside that circle. All shapes had the same colour except that on some trials, one of the diamonds had a colour that was associated with a shock during the fear-conditioning phase. Even though there was no incentive to attend the colour associated with the shock, this distractor impaired performance more than an equally salient object not associated with a shock, providing evidence that threatening stimuli can capture attention.

Schmidt, Belopolsky, and Theeuwes (2015b) also investigated the effect of threat on eye movements and showed that observers were faster in generating a saccade to the location previously occupied by a fear-conditioned than to a neutral stimulus. Similarly, in a study by Mulckhuyse, Crombez, and Van der Stigchel (2013) participants had to saccade to a stimulus above or below fixation while ignoring a distractor. This distractor either had a colour conditioned to an aversive noise or a colour that was not aversively conditioned. The results showed that the distractor attracted the eyes more often when it was of a threat-related colour relative to a no-threat colour.

Even though these previous studies have demonstrated that threat-signalling stimuli bias covert and overt selection, it should be realised that the effects reported can likewise be explained in terms of top-down processes or differences in selection history (i.e. how often a threat-signalling stimulus had been selected in the past; Awh, Belopolsky, & Theeuwes, 2012) rather than a mandatory attentional prioritisation due to the threat signal. Indeed, in all the experiments investigating threat, participants were instructed to attend the threat-related stimulus either because it was part of the task (Koster et al., 2004; Schmidt, Belopolsky, & Theeuwes, 2015b) or because it was part of the pre-experiment conditioning phase (Mulckhuyse et al., 2013; Notebaert et al., 2011; Schmidt, Belopolsky, & Theeuwes, 2015a). Because attending the threatening stimulus was encouraged and trained, it is possible that the reported selection biases are due to top-down learning effects. In the current study, participants were never instructed nor required to attend the threatening stimulus. Attending the threat-related stimulus was neither part of the task set (participants always had to ignore the threatening stimulus) nor was there a pre-experiment conditioning phase which could have encouraged attending the threatening stimulus. Any effect of a threatening stimulus can thus only be explained in terms of exogenous capture.

The current study not only discouraged attending the threat-related stimulus by never rendering it the target, it even incentivised not attending the stimulus: if participants made a saccade to the threatening stimulus, there was a higher probability of receiving an electric shock. Participants were furthermore informed that if their response was quick enough, they could avoid receiving a shock. Therefore, if anything, to avoid receiving (more) shocks, the best available strategy was to never attend the threat-signalling stimulus and saccade as quickly as possible to the target.

In the present study we used the oculomotor capture paradigm (Theeuwes, Kramer, Hahn, Irwin, & Zelinsky, 1999), in which participants were required to saccade to the shape singleton (e.g. a diamond among circles) and fixate it. The presence of one particularly coloured non-target shape (e.g. a blue shape) signalled the possibility of receiving a shock. When another designated colour was present (e.g. a red shape) it was certain that no shock would be delivered. Importantly, this task-irrelevant, shock-signalling stimulus was embedded in a heterogeneous display, rendering it physically not more salient than any other stimulus in the display. Therefore, based on its visual features, the shock-signalling stimulus did not stand out from the other stimuli (Itti & Koch, 2001).

Method

Participants

Eighteen students of the Vrije Universiteit Amsterdam (14 females, mean age ±24) with reported normal or corrected-to-normal vision participated in the experiment. The sample size was estimated using G*Power (Faul, Erdfelder, Lang, & Buchner, 2007) and based on the effect size obtained from a pilot study and a reward study using a similar paradigm (Failing, Nissens, Pearson, Le Pelley, & Theeuwes, 2015). The effect size of search time was dz = 0.8903 in the pilot study and dz = 0.8542 in the reward study (experiment 2), with α error probability = .05 and power = .90 resulting in a required sample size estimate of 16 and 17 participants, respectively. The experiment was approved by the Ethics Committee (Vaste Commissie Wetenschap en Ethiek, VCWE) of the Vrije Universiteit Amsterdam.
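The sample size estimates above can be approximated with a short calculation. The sketch below is not G*Power's exact noncentral-t routine; it assumes a two-sided paired t-test and uses the normal approximation with a small-sample correction term. The function name `approx_paired_n` is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def approx_paired_n(dz: float, alpha: float = 0.05, power: float = 0.90) -> int:
    """Approximate sample size for a two-sided paired t-test.

    Assumption: normal approximation plus the correction term z_alpha^2 / 2.
    This only approximates the exact noncentral-t computation.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_power = NormalDist().inv_cdf(power)          # quantile for the target power
    n = ((z_alpha + z_power) / dz) ** 2 + z_alpha ** 2 / 2
    return ceil(n)


for label, dz in [("pilot study", 0.8903), ("reward study", 0.8542)]:
    print(label, approx_paired_n(dz))
```

Under these assumptions the approximation yields 16 and 17 participants for the two effect sizes, in agreement with the reported estimates.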

Stimuli

The experiment was conducted in a sound-attenuated and dimly lit room. Event timings were controlled by an Intel(R) Core(TM) i5-3470 3.20 GHz processor. Visual stimuli were created in OpenSesame (Mathôt, Schreij, & Theeuwes, 2012) and presented on a Samsung Syncmaster 2233RZ monitor with a resolution of 1680 × 1050 pixels and a 120 Hz refresh rate. Eye movements were registered using the Eyelink 1000 Tower mount system (1000 Hz temporal resolution, <0.01° gaze resolution and gaze position accuracy of <0.5°). An automatic algorithm detected saccades using minimum velocity and acceleration criteria of 35°/s and 9500°/s², respectively. The electric stimulation was a 400 V electric stimulus with a duration of 200 ms delivered to the left ankle. Two ECG-electrodes were connected to a Digitimer DS7A constant current stimulator (Hertfordshire, U.K.), which is devised for percutaneous electrical stimulation of subjects in clinical and biomedical research settings. The intensity of the current was calibrated to an “unpleasant but painless” level for each participant individually. This ensured that the shock was experienced by each participant independently of the later instructed shock-associated feature.

The experimental task was based on the oculomotor capture paradigm (Theeuwes et al., 1999). The search display consisted of six filled shapes on a black background which were presented at equal distances on an imaginary circle (10.1° radius) around the centre of the screen. Each shape was uniquely coloured. The full set of colours used in this study was as follows: blue, CIE: x = .150, y = .079, 3.4 cd/m²; red, CIE: x = .613, y = .349, 9.9 cd/m²; green, CIE: x = .271, y = .603, 23.4 cd/m²; yellow, CIE: x = .417, y = .500, 33.8 cd/m²; pink, CIE: x = .327, y = .240, 20.0 cd/m²; brown, CIE: x = .535, y = .408, 15 cd/m²; cyan, CIE: x = .207, y = .324, 26.6 cd/m². The six shapes comprised either one diamond and five circles, or one circle and five diamonds. The unique shape was defined as the target. In each display one of the non-target shapes was coloured either red or blue and was defined as the signalling stimulus in experimental blocks (henceforth called the distractor). The colours of the remaining shapes were randomly sampled from the remaining set of colours without replacement.

Procedure and design

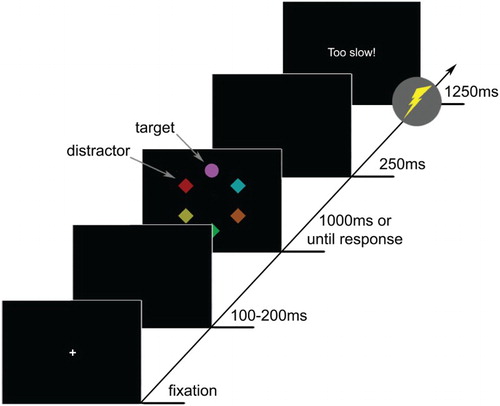

Each trial consisted of a fixation display, search display and feedback display (Figure 1). Each trial started with the presentation of a fixation cross. Once drift correction was successful (i.e. participant fixating within 2° of the centre of the screen) the display turned blank for 100–200 ms. Following the blank display, the search display consisting of the six uniquely coloured shapes was displayed for 1000 ms or until response. Then the display blanked for 250 ms, followed by the feedback display for 1250 ms. Participants were asked to fixate the shape singleton (target) as quickly and accurately as possible and continue fixating it until the search display disappeared. A region of interest (ROI) with a radius of 3.5° was defined around the target. Once participants had fixated within this target ROI for more than 100 ms, a correct response was registered.

Figure 1. Sequence and time course of trial events. Participants had to make a saccade and fixate the unique shape (target; e.g. the circle) for 100 ms. One of the non-target shapes (distractor) could be red or blue indicating whether there was the possibility of getting a shock (e.g. red) or not (e.g. blue) on that trial. Shocks were delivered together with the onset of the feedback display.

Participants were informed that the presence of either a red or a blue non-target shape in the display would indicate whether they could receive a shock on that particular trial or not. Participants were also informed that they would not receive a shock if they managed to fixate the target before a variable latency limit. This variable latency limit was based on the third quartile of all response times (i.e. times taken to fixate the target for 100 ms on each trial) from the previous block. The latency limit was calculated for each participant individually to promote quick responses throughout the experiment. During experimental blocks, the feedback display read “too slow” if participants were slower than the latency limit or if no response was registered within 1000 ms (timeout). Shock delivery during experimental trials occurred together with the onset of the feedback display.
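The latency limit described above can be sketched as follows. This is a minimal illustration, not the experiment code: the quantile interpolation method is an assumption (Python's default), timeouts are represented as `None`, and the function names and the `"ok"` label are hypothetical.

```python
from statistics import quantiles


def latency_limit(previous_block_rts_ms):
    """Third quartile of the previous block's response times (ms).

    quantiles(..., n=4) returns the three quartile cut points; the default
    'exclusive' interpolation is an assumption, not taken from the paper.
    """
    return quantiles(previous_block_rts_ms, n=4)[2]


def feedback(rt_ms, limit_ms):
    """Return 'too slow' for a timeout (rt_ms is None) or a response slower
    than the limit; otherwise return the hypothetical label 'ok'."""
    if rt_ms is None or rt_ms > limit_ms:
        return "too slow"
    return "ok"


# Hypothetical response times from a previous block.
limit = latency_limit([300, 320, 340, 360, 380, 400, 420])
print(limit, feedback(350, limit), feedback(450, limit), feedback(None, limit))
```

Because the limit is recomputed from each participant's own previous block, it tightens as that participant speeds up.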

Counterbalanced across participants, either the red or blue colour signalled the possibility of a shock. That is, for half of the participants the presence of a red distractor indicated that a shock might be delivered on that trial if they were too slow to respond correctly (threat trials), while the presence of a blue distractor indicated that there was no possibility of a shock (safe trials). For the other half of the participants this relationship was reversed. In each block, half of the trials were threat and the other half were safe trials. A shock was delivered only when participants were too slow on threat trials, with a maximum of one shock every eight threat trials. Participants received no more than 3 shocks per block and no more than 18 shocks throughout the whole experiment. The experiment comprised one block of practice and six experimental blocks of 48 trials each, yielding a total of 336 trials.

Discarded data

Timeouts (1.4% of all trials) were excluded from analyses. Trials in which participants engaged in anticipatory saccades, that is, trials with first saccade latency below 80 ms (2.7%), and trials in which the time between the start of the drift correction and the presentation of the first blank display exceeded 7000 ms (3.1%) were also discarded. This left 92.05% of the original data.

Results

Time to fixate the target

First, we determined whether the presence of a physically non-salient and task-irrelevant stimulus signalling the possibility of receiving a shock interfered with target search and whether that effect changed over time. We therefore analysed the time it took the participants’ eyes to reach the target (i.e. search time). The data were submitted to a within-subject ANOVA with factors trial type (threat vs. safe) and block (1–6). Note that here and in all following analyses using an ANOVA, the Greenhouse–Geisser correction was applied to control for a potential violation of sphericity (uncorrected degrees of freedom are reported for clarity). The ANOVA showed a significant main effect of trial type, F(1,17) = 26.477, p < .001, and block, F(5,85) = 12.901, p < .001,

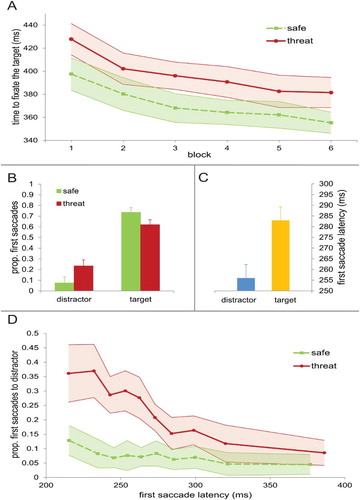

but no interaction, F(5,85) = .199, p > .250. It took participants on average longer to reach the target when the distractor signalled the possibility of receiving a shock (397 ms) compared to when it signalled a safe trial (371 ms). The absence of the trial type × block interaction indicates that there was no significant change in this pattern over the course of the experiment ((a)). The main effect of block indicates a decrease of search time over blocks reflecting general task learning.

Figure 2. (a) Time it takes to fixate the target for both distractor types across blocks. Error bars in this and all other figures represent within-subject 95% confidence intervals. (b) The proportion of first saccades going either to the distractor or to the target for both distractor types separately. (c) The mean latencies of first saccades either going to the distractor or target collapsed over both distractor types. (d) The proportion of first saccades going to the distractor as a function of first saccade latency for both distractor types separately.

First saccade latencies and destinations

The results above are consistent with the idea that the distractor was more likely to capture the eyes when it signalled the possibility of a shock than when it did not signal a shock. This difference in search time might have been caused by participants delaying their first saccade when a shock-signalling distractor was present – possibly to reduce conflict arising from the distractor’s presence. To test this alternative explanation we examined the latency of the first saccade for the two trial types over blocks. An ANOVA with trial type (threat vs. safe) and block (1–6) as factors showed no main effect of trial type, F(1,17) = .005, p > .250, a main effect of block, F(5,85) = 12.516, p < .001, and no trial type × block interaction, F(5,85) = 1.266, p > .250. This suggests that participants did not delay their first saccades on trials with a distractor signalling the possibility of a shock compared to trials with a distractor signalling safety, but speeded up their first saccades over the course of the experiment.

In order to investigate whether the difference in search time between threat and safe trials is driven by a difference in oculomotor capture by the shock- and safe-signalling distractor, we analysed the direction of the first saccades. A first saccade was defined as going in the direction of the target or in the direction of the distractor when the endpoint had an angular deviation of less than 30° to the left or the right (i.e. half the distance between objects) from the centre of the target or distractor on the imaginary circle around the central fixation point (Theeuwes et al., 1999). An ANOVA with trial type (threat vs. safe) and direction (target vs. distractor) as factors showed a main effect of direction, F(1,17) = 88.939, p < .001, with a higher proportion of first saccades going towards the target compared to the distractor (67.8% vs. 15.3%). There was no main effect of trial type, F(1,17) = 8.539, p = .10, but there was a trial type × direction interaction, F(1,17) = 27.398, p < .001.
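The 30° criterion for assigning a first saccade to the target or the distractor can be illustrated with a short sketch. It assumes endpoint coordinates expressed relative to central fixation in a standard mathematical frame (x to the right, y upwards, angles counter-clockwise); the function names and the `"other"` label are hypothetical.

```python
import math


def angular_deviation(a_deg, b_deg):
    """Smallest absolute difference between two directions, in degrees."""
    return abs((a_deg - b_deg + 180.0) % 360.0 - 180.0)


def classify_first_saccade(end_x, end_y, target_deg, distractor_deg, max_dev=30.0):
    """Label a saccade endpoint by the object whose direction it falls within.

    target_deg and distractor_deg are the angular positions of the two
    objects on the imaginary circle around fixation.
    """
    endpoint_deg = math.degrees(math.atan2(end_y, end_x))
    if angular_deviation(endpoint_deg, target_deg) < max_dev:
        return "target"
    if angular_deviation(endpoint_deg, distractor_deg) < max_dev:
        return "distractor"
    return "other"


# Endpoint 20° away from a target at 0°, with the distractor at 120°.
x = 10.1 * math.cos(math.radians(20))
y = 10.1 * math.sin(math.radians(20))
print(classify_first_saccade(x, y, target_deg=0, distractor_deg=120))
```

With six objects spaced 60° apart, the 30° windows around neighbouring objects do not overlap, so each endpoint receives at most one object label.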

Next, we examined the interaction effect by analysing the difference between threat and safe trials in the proportion of first saccades going to the target and distractor separately. The analysis revealed that a greater proportion of first saccades went towards the distractor on threat compared to safe trials (23.3% vs. 7.4%; Figure 2b), t(17) = 5.306, p < .001, d = 1.4, 95% CI [9.6, 22.3]. Also, a smaller proportion of first saccades went towards the target on threat compared to safe trials (62.0% vs. 73.5%), t(17) = 4.751, p < .001, d = 0.77, 95% CI [6.4, 16.7].
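The relation between the reported mean difference, t value and confidence interval for the distractor comparison can be made explicit with a small worked calculation. The sketch assumes a paired t-test with 17 degrees of freedom and uses the tabulated two-sided 95% critical value of 2.110; the function name is hypothetical.

```python
T_CRIT_DF17 = 2.110  # two-sided 95% critical value of t with 17 df (table value)


def ci_from_t(mean_diff, t_value, t_crit=T_CRIT_DF17):
    """Recover the 95% CI of a paired difference from its mean and t value."""
    standard_error = mean_diff / t_value  # because t = mean_diff / SE
    half_width = t_crit * standard_error
    return mean_diff - half_width, mean_diff + half_width


# Distractor-directed first saccades: 23.3% (threat) vs. 7.4% (safe), t(17) = 5.306.
low, high = ci_from_t(23.3 - 7.4, 5.306)
print(round(low, 1), round(high, 1))
```

The recovered interval is close to the reported one; small deviations are to be expected because the inputs are rounded percentages.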

To investigate whether particularly early eye movements were affected by the presence of a threat- or safe-signalling distractor, we analysed the difference in latency of first saccades going towards the distractor compared to first saccades going towards the target. An ANOVA with trial type (threat vs. safe) and direction (target vs. distractor) as factors showed a main effect of direction, F(1,17) = 60.104, p < .001, revealing that latencies were generally shorter for first saccades when going to the distractor (256 ms) than when going to the target (283 ms; Figure 2c). There was no main effect of trial type, F(1,17) = .009, p = .926, and no interaction between trial type and direction, F(1,17) = 4.391, p = .051.

Time course of oculomotor capture

In order to investigate the time course of the effect of the presence of a shock- or safe-signalling distractor on first saccade destination in more detail, we analysed the onset times of the first saccades using the Vincentizing procedure. We calculated the mean saccade latencies and the proportion of first saccades going towards the distractor separately for each trial type and each decile of each of the individual saccade latency distributions.
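The binning step of the Vincentizing procedure can be sketched as follows for a single participant and trial type. This is a minimal illustration under stated assumptions: trials are given as hypothetical `(latency_ms, went_to_distractor)` pairs, and bins are formed by rank so that their sizes differ by at most one trial.

```python
from statistics import mean


def vincentize(trials, n_bins=10):
    """Bin one participant's trials (one trial type) by first saccade latency.

    Returns one (mean_latency, proportion_to_distractor) pair per bin,
    ordered from the fastest to the slowest saccades.
    """
    ordered = sorted(trials, key=lambda trial: trial[0])
    n = len(ordered)
    summary = []
    for b in range(n_bins):
        # Integer arithmetic yields bins whose sizes differ by at most one.
        chunk = ordered[b * n // n_bins:(b + 1) * n // n_bins]
        if not chunk:
            continue  # fewer trials than bins
        latencies = [latency for latency, _ in chunk]
        hits = [1 if to_distractor else 0 for _, to_distractor in chunk]
        summary.append((mean(latencies), mean(hits)))
    return summary


# Hypothetical data: the five fastest saccades go to the distractor.
example = [(200 + 10 * i, i < 5) for i in range(20)]
for mean_latency, proportion in vincentize(example):
    print(mean_latency, proportion)
```

Averaging the per-bin values across participants then gives the group-level curves for each trial type.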

The proportions of first saccades towards the distractor were submitted to an ANOVA with factors trial type (threat vs. safe) and decile (1st–10th). There was a main effect of decile, F(9,153) = 10.598, p < .001, with the proportion of first saccades towards the distractor decreasing as first saccade latency increased. A main effect of trial type, F(1,17) = 28.355, p < .001, reflected a greater proportion of first saccades towards the threat-signalling than the safe-signalling distractor. Importantly, a significant interaction, F(9,153) = 6.040, p < .001, indicated that the influence of trial type was more reliably observed for short first saccade latencies than for longer latencies (Figure 2d). A trend analysis revealed that the difference in the proportion of first saccades going towards the distractor between the trial types decreased linearly over deciles, F(1,17) = 18.200, p = .001.

We also compared the difference in the proportion of first saccades going towards the distractor between threat and safe trials for each decile separately using paired samples t-tests. Significantly more first saccades went to the distractor in the threat compared to the safe trials for deciles 1 to 8, but not for deciles 9 and 10 (decile 1: t(17) = 4.067, p = .001, d = 1.32, 95% CI [11.2, 31.4]; decile 2: t(17) = 4.507, p < .001, d = 1.46, 95% CI [15.3, 42.1]; decile 3: t(17) = 5.236, p < .001, d = 1.47, 95% CI [13.0, 30.6]; decile 4: t(17) = 4.171, p = .001, d = 1.36, 95% CI [11.1, 33.8]; decile 5: t(17) = 4.905, p < .001, d = 1.20, 95% CI [11.7, 29.4]; decile 6: t(17) = 3.024, p = .008, d = 0.92, 95% CI [3.8, 21.1]; decile 7: t(17) = 2.423, p = .027, d = 0.73, 95% CI [1.2, 16.8]; decile 8: t(17) = 3.145, p = .006, d = 0.71, 95% CI [3.1, 15.7]; decile 9: t(17) = 2.071, p = .054, 95% CI [−0.1, 14.3]; decile 10: t(17) = 1.679, p = .111, 95% CI [−1.0, 9.2]). This suggests that the effect of trial type is particularly pronounced for the fastest first saccades (see Figure 2d).

Discussion

There is ample evidence that attentional orienting to threat is mandatory and automatic. Here we tested the attentional bias towards a stimulus that signals threat under extreme conditions. We show that the eyes are captured by a threat-signalling stimulus even when it was always physically non-salient and task-irrelevant, and when looking at it was harmful. Capture by such a stimulus was the largest for the fastest eye movements. As such, our data show convincing evidence that stimuli that are threatening have a mandatory and involuntary effect on the allocation of attention which cannot be controlled in a top-down effortful fashion. Indeed, in our experiment looking at the stimulus that signals the shock actually increased the probability of receiving it. Even though it may seem that looking at the threatening stimulus allows a way to control the threat, in the current experiment this was an inadequate strategy as looking at the threatening stimulus increased the chances of receiving a shock.

Our findings strongly suggest that attending and looking at the stimulus conveying threat is truly automatic. This is in line with brain imaging studies that have shown amygdala activation in response to fear-arousing faces even under conditions of inattention (e.g. Vuilleumier et al., 2001) and studies showing that neglect patients can detect emotional stimuli presented in their neglected visual field (e.g. Lucas & Vuilleumier, 2008). However, others have shown that without attention, emotionally laden stimuli are not processed. For example, in conditions of high perceptual load there was no distracting effect on performance by pictures of mutilated bodies (Erthal et al., 2005). Similarly, Yates, Ashwin, and Fox (2010) found no interference from fear-conditioned angry faces in conditions of high perceptual load. Even though we did not manipulate perceptual load as such, our display consisting of multi-coloured elements should be considered to be high on perceptual load, as the threat-signalling stimulus did not stand out from the display (i.e. it was one out of six colours). Even under these circumstances of high perceptual load, the coloured element signalling threat grabbed the eyes, providing strong evidence for true exogenous automatic capture.

Our findings are consistent with major emotion theories which assume that threatening stimuli are processed preferentially. For example, Mathews and Mackintosh (1998) assume a Threat Evaluation System that automatically encodes the level of threat already very early in the processing stream. Similarly, Öhman and Mineka (2001) propose a module for fear learning and elicitation that is activated by stimuli that are threatening. Our data, showing an effect of the threatening stimulus on the fastest eye movements (<250 ms), are consistent with the view that threat is detected immediately and automatically.

The present findings add to the growing body of evidence suggesting that threat-related stimuli influence visual selection at a very early stage (e.g. Mulckhuyse et al., 2013; Schmidt, Belopolsky, & Theeuwes, 2015b). However, in previous studies investigating threat on visual selection, selecting the threat-related stimuli was either instructed or necessary for successful task completion at some point during the experiment (e.g. Mulckhuyse et al., 2013; Schmidt, Belopolsky, & Theeuwes, 2015b). In contrast, in the present study selecting the threat-related stimulus was never necessary nor beneficial for task performance. In fact, selecting this stimulus made it more likely to obtain a shock. The fact that we observe modulations in visual selection by a threat-related stimulus particularly on very early saccades is consistent with the idea that threat influences visual selection already at a very early stage, when selection is mainly driven by involuntary selection processes (Theeuwes, 2010). Only at later stages does top-down control gradually start to have an influence on the selection process. Accordingly, the finding that the proportion of first saccades towards the threat-related stimulus decreases with increasing first saccade latency can be explained by an increased influence of top-down search goals on visual selection. In other words, the later the saccade, the more top-down control can be exerted, effectively suppressing the threat-related stimulus and promoting selection of the target stimulus.

In summary, we show that a task-irrelevant and non-salient stimulus signalling threat captures the eyes even if selecting it makes it much more likely to receive a shock. The capture effect was particularly pronounced for the fastest saccades which supports the idea that threat involuntarily affects selection at a very early stage of processing. These findings extend and elucidate previous evidence of attentional and oculomotor modulations by threat-related stimuli.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Awh, E., Belopolsky, A., & Theeuwes, J. (2012). Top-down versus bottom-up attentional control: A failed theoretical dichotomy. Trends in Cognitive Sciences, 16(8), 437–443. http://dx.doi.org/10.1016/j.tics.2012.06.010

- Devue, C., Belopolsky, A. V., & Theeuwes, J. (2011). The role of fear and expectancies in capture of covert attention by spiders. Emotion, 11(4), 768–775. http://dx.doi.org/10.1037/a0023418

- Erthal, F., De Oliveira, L., Mocaiber, I., Pereira, M., Machado-Pinheiro, W., Volchan, E., & Pessoa, L. (2005). Load-dependent modulation of affective picture processing. Cognitive, Affective, & Behavioral Neuroscience, 5(4), 388–395. http://dx.doi.org/10.3758/CABN.5.4.388

- Failing, M., Nissens, T., Pearson, D., Le Pelley, M., & Theeuwes, J. (2015). Oculomotor capture by stimuli that signal the availability of reward. Journal of Neurophysiology, 114(4), 2316–2327. http://dx.doi.org/10.1152/jn.00441.2015

- Faul, F., Erdfelder, E., Lang, A. G., & Buchner, A. (2007). G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39(2), 175–191. http://dx.doi.org/10.3758/bf03193146

- Itti, L., & Koch, C. (2001). Computational modelling of visual attention. Nature Reviews Neuroscience, 2(3), 194–203. http://dx.doi.org/10.1038/35058500

- Koster, E. H. W., Crombez, G., Van Damme, S., Verschuere, B., & De Houwer, J. (2004). Does imminent threat capture and hold attention? Emotion, 4(3), 312–317. http://dx.doi.org/10.1037/1528-3542.4.3.312

- LeDoux, J. E. (1996). The emotional brain. New York, NY: Simon & Schuster.

- Lucas, N., & Vuilleumier, P. (2008). Effects of emotional and non-emotional cues on visual search in neglect patients: Evidence for distinct sources of attentional guidance. Neuropsychologia, 46(5), 1401–1414. http://dx.doi.org/10.1016/j.neuropsychologia.2007.12.027

- Mathews, A., & Mackintosh, B. (1998). A cognitive model of selective processing in anxiety. Cognitive Therapy and Research, 22(6), 539–560. http://dx.doi.org/10.1023/a:1018738019346

- Mathôt, S., Schreij, D., & Theeuwes, J. (2012). OpenSesame: An open-source, graphical experiment builder for the social sciences. Behavior Research Methods, 44(2), 314–324. http://dx.doi.org/10.3758/s13428-011-0168-7

- Mulckhuyse, M., Crombez, G., & Van der Stigchel, S. (2013). Conditioned fear modulates visual selection. Emotion, 13(3), 529–536. http://dx.doi.org/10.1037/a0031076

- Notebaert, L., Crombez, G., Van Damme, S., De Houwer, J., & Theeuwes, J. (2011). Signals of threat do not capture, but prioritize, attention: A conditioning approach. Emotion, 11(1), 81–89. http://dx.doi.org/10.1037/a0021286

- Öhman, A., & Mineka, S. (2001). Fears, phobias, and preparedness: Toward an evolved module of fear and fear learning. Psychological Review, 108(3), 483–522. http://dx.doi.org/10.1037/0033-295x.108.3.483

- Pessoa, L., McKenna, M., Gutierrez, E., & Ungerleider, L. G. (2002). Neural processing of emotional faces requires attention. Proceedings of the National Academy of Sciences, U.S.A., 99(17), 11458–11463. http://dx.doi.org/10.1073/pnas.172403899

- Schmidt, L. J., Belopolsky, A. V., & Theeuwes, J. (2015a). Attentional capture by signals of threat. Cognition & Emotion, 29(4), 687–694. http://dx.doi.org/10.1080/02699931.2014.924484

- Schmidt, L. J., Belopolsky, A. V., & Theeuwes, J. (2015b). Potential threat attracts attention and interferes with voluntary saccades. Emotion, 15(3), 329–338. http://dx.doi.org/10.1037/emo0000041

- Soares, S. C., Esteves, F., Lundqvist, D., & Öhman, A. (2009). Some animal specific fears are more specific than others: Evidence from attention and emotion measures. Behaviour Research and Therapy, 47(12), 1032–1042. http://dx.doi.org/10.1016/j.brat.2009.07.022

- Theeuwes, J. (2010). Top–down and bottom–up control of visual selection. Acta Psychologica, 135(2), 77–99. http://dx.doi.org/10.1016/j.actpsy.2010.02.006

- Theeuwes, J., Kramer, A. F., Hahn, S., Irwin, D. E., & Zelinsky, G. J. (1999). Influence of attentional capture on oculomotor control. Journal of Experimental Psychology: Human Perception and Performance, 25(6), 1595–1608. http://dx.doi.org/10.1037/0096-1523.25.6.1595

- Vuilleumier, P. (2005). How brains beware: Neural mechanisms of emotional attention. Trends in Cognitive Sciences, 9(12), 585–594. http://dx.doi.org/10.1016/j.tics.2005.10.011

- Vuilleumier, P., Armony, J. L., Driver, J., & Dolan, R. J. (2001). Effects of attention and emotion on face processing in the human brain: An event-related fMRI study. Neuron, 30(3), 829–841. http://dx.doi.org/10.1016/s0896-6273(01)00328-2

- Whalen, P. J., Rauch, S. L., Etcoff, N. L., McInerney, S. C., Lee, M. B., & Jenike, M. A. (1998). Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. Journal of Neuroscience, 18(1), 411–418.

- Yates, A., Ashwin, C., & Fox, E. (2010). Does emotion processing require attention? The effects of fear conditioning and perceptual load. Emotion, 10(6), 822–830. http://dx.doi.org/10.1037/a0020325

- Yiend, J., & Mathews, A. (2001). Anxiety and attention to threatening pictures. The Quarterly Journal of Experimental Psychology Section A, 54(3), 665–681. http://dx.doi.org/10.1080/713755991