ABSTRACT

Adults perceive emotional expressions categorically, with discrimination being faster and more accurate between expressions from different emotion categories (i.e. blends with two different predominant emotions) than between two stimuli from the same category (i.e. blends with the same predominant emotion). The current study sought to test whether facial expressions of happiness and fear are perceived categorically by pre-verbal infants, using a new stimulus set that was shown to yield categorical perception in adult observers (Experiments 1 and 2). These stimuli were then used with 7-month-old infants (N = 34) using a habituation and visual preference paradigm (Experiment 3). Infants were first habituated to an expression of one emotion, then presented with the same expression paired with a novel expression either from the same emotion category or from a different emotion category. After habituation to fear, infants displayed a novelty preference for pairs of between-category expressions, but not within-category ones, showing categorical perception. However, infants showed no novelty preference when they were habituated to happiness. Our findings provide evidence for categorical perception of emotional expressions in pre-verbal infants, while the asymmetrical effect challenges the notion of a bias towards negative information in this age group.

Categorical perception (CP) occurs when continuous stimuli are perceived as belonging to distinct categories; equal-sized physical differences between stimuli are judged as smaller or larger depending on whether the stimuli belong to the same or different categories (Harnad, Citation1987). This phenomenon has long been established in the perception of speech sounds (Liberman, Harris, Hoffman, & Griffith, Citation1957; Pisoni, Citation1971) and colours (Bornstein & Korda, Citation1984; Daoutis, Franklin, Riddett, Clifford, & Davies, Citation2006; Franklin, Clifford, Williamson, & Davies, Citation2005; Pilling, Wiggett, Özgen, & Davies, Citation2003). For example, the continuum of colour is parsed into categories with colour terms, and given the same chromatic difference, two colours from different categories (e.g. “green” and “red”) are easier for observers to distinguish than two colours from the same category (e.g. two shades of green).

CP has also been shown to occur for emotional stimuli, such as facial expressions. Using computer-generated line drawings of faces displaying different emotions, Etcoff and Magee (Citation1992) morphed two expressions to create a continuum of emotional expressions. They tested observers’ identification of the morphed expressions along the continuum and discrimination of pairs of these stimuli. They found that participants were better at discriminating pairs of expressions perceived to be from different emotion categories than pairs of expressions judged to be from the same emotion category. Their findings provided the first evidence for CP of emotional expressions, a finding that has since been replicated using photographs and a wider range of emotions (e.g. Calder, Young, Perrett, Etcoff, & Rowland, Citation1996; Campanella, Quinet, Bruyer, Crommelinck, & Guerit, Citation2002; de Gelder, Teunisse, & Benson, Citation1997; Young et al., Citation1997), as well as in auditory perception of emotions (Laukka, Citation2005).

Some theories have argued that top-down processes underlie CP, with linguistic or conceptual categories reflected in perceptual judgments (e.g. Fugate, Gouzoules, & Barrett, Citation2010; Regier & Kay, Citation2009; Roberson & Davidoff, Citation2000). However, this explanation has been called into question, particularly given research showing that CP is already present in pre-verbal infants as young as 4 months (e.g. Bornstein, Kessen, & Weiskopf, Citation1976; Eimas, Siqueland, Jusczyk, & Vigorito, Citation1971; Franklin & Davies, Citation2004). Though CP in pre-verbal infants is well established for the perception of colours and phonemes, few studies have been conducted in the domain of emotion perception, and the results have been inconsistent.

The first study conducted on CP of emotional expressions in pre-verbal infants tested 7-month-olds’ CP on the emotion continuum of happiness-fear (Kotsoni, de Haan, & Johnson, Citation2001). The study started with a boundary identification procedure in which 7-month-old infants were repeatedly presented with a prototypical expression of happiness as an anchor, paired with morphs blended with varying degrees of fear and happiness. Since it is well established that infants have a novelty preference (Fantz, Citation1964), the category boundary was set at the point at which infants started to prefer looking at the novel blended expression compared to the prototypical expression. Using this boundary, infants were then habituated to either a morphed expression from the fear category or from the happiness category and were then tested on their discrimination between the habituated expression and a novel expression taken either from the same emotion category or from a different category. Infants showed a novelty preference for the novel between-category expression, but not for the novel within-category expression. This suggests that they were able to discriminate the between-category pairs of emotional expressions from each other, but not the within-category pairs. Kotsoni and colleagues’ results thus provided the first evidence for CP of emotional expressions in infants. However, the CP effect in this study was only observed after infants were habituated to an expression of happiness, not to an expression of fear. This one-directional effect could be explained by the one-directional procedure used to establish the category boundary, as only a prototypical expression of happiness, but not fear, was used as an anchor. Some previous studies have observed an unequal natural preference for positive versus negative emotional stimuli in infants (Peltola, Hietanen, Forssman, & Leppänen, Citation2013; Vaish, Grossmann, & Woodward, Citation2008). 
Considering that the two emotions differ in valence, using only one of them as an anchor in the category boundary identification might result in a biased category boundary.

Using the same discrimination paradigm, a recent study investigated infants’ CP on two other emotion continua, happiness-anger and happiness-sadness (Lee, Cheal, & Rutherford, Citation2015). Though they did not report the results of each category of habituation separately (e.g. happiness versus anger), they reported that 6-month-old infants did not show evidence for CP, but that 9-month-olds and 12-month-olds did for the happiness-anger continuum, though not for the happiness-sadness continuum. However, this study did not identify category boundaries empirically. Instead, the categories were created based on the assumption that the boundary likely lies near the midpoint of the morphed continuum (i.e. 50% of one emotion and 50% of the other). Yet, at least for adult perception, it has been established that the category boundary does not always lie at the midpoint; research on CP of emotional facial expressions has shown that category boundaries can be influenced by the specific models and emotions employed (Cheal & Rutherford, Citation2015). For example, one study found that a morph with 60% happiness and 40% sadness was judged as an expression of sadness rather than happiness, due to asymmetry in the intensity of emotions displayed in the prototypical expressions (Calder et al., Citation1996). Therefore, it may be precarious to determine the category boundaries on a theoretical rather than an empirical basis. In Lee and colleagues’ study, the variability across continua in general, and the null finding on the happiness-sadness continuum specifically, could thus be due to an imprecise category boundary for some comparisons.

Contrasting with the results of Lee et al. (Citation2015), an ERP study testing 7-month-olds did find evidence for CP on the happiness-sadness continuum (Leppänen, Richmond, Vogel-Farley, Moulson, & Nelson, Citation2009). Specifically, attention-sensitive ERPs did not differentiate between two within-category expressions, whereas a clear discrimination among between-category expressions was observed. This study is the only test of CP of emotional expressions in infants to date that used category boundaries based on adults’ judgment, and the results lend support to the notion that CP of emotional expressions does occur when category boundaries are set empirically using adult judgments. However, the behavioural measures in this ERP study were not a direct examination of CP. Specifically, in order to hold the novel stimulus consistent during the ERP recordings, only one emotion was used to test each category type (i.e. infants were habituated to happiness in the within-category condition and to sadness in the between-category condition). This design therefore makes it difficult to draw strong conclusions about CP.

In sum, all previous studies testing CP of emotional expressions in pre-verbal infants have yielded some positive findings, though the specific patterns of results have been somewhat inconsistent. As noted, one limitation of the previous studies is that the procedures used to establish the category boundaries have been variable and potentially problematic. Identifying the category boundaries correctly is crucial in the investigation of categorical perception as an accurate category boundary ensures valid pairing of stimuli that are within-category or between-category.

In adult CP studies, participants are typically given two labels that describe the endpoints of a continuum, and are asked to label each midpoint of the continuum using one of the two labels. The point of the continuum where the majority of perceivers change label is considered the category boundary (e.g. Calder et al., Citation1996; Cheal & Rutherford, Citation2015; de Gelder et al., Citation1997; Etcoff & Magee, Citation1992; Young et al., Citation1997). Such a labelling procedure is not possible with pre-verbal infants. Studies on infants’ CP of colour and speech typically establish the category boundaries based on adult judgements before using the stimuli to test CP in infants (e.g. Bornstein et al., Citation1976; Eimas et al., Citation1971; Franklin et al., Citation2008; Franklin & Davies, Citation2004; McMurray & Aslin, Citation2005; Pilling et al., Citation2003).

One advantage of sampling adults is that the data gathered are more reliable than those collected from infant subjects. Due to the frequent fussiness and limited attention span of infants (which limits the number of possible trials), data collected with infants are inevitably noisy. In adult CP experiments, stimuli are presented in a random order, usually repeatedly, to ensure reliable responses. In contrast, using a habituation procedure with infants risks identifying a biased boundary, because habituation has to start from one end of the emotion continuum. Habituation trials then move sequentially towards the other end of the continuum, which may cause the category boundary to shift from its true location due to order and anchoring effects (Nelson, Morse, & Leavitt, Citation1979; Russell & Fehr, Citation1987). This might be what led to the uni-directional findings in previous research (e.g. Kotsoni et al., Citation2001).

A disadvantage of the use of adult judges, however, is that it assumes that the location of the category boundaries for a given set of stimuli is stable across ages. For emotional facial expressions, this has not been empirically tested with infants, but it has been shown that 3-year-olds have the same category boundary as adults given the same emotion stimuli (Cheal & Rutherford, Citation2011). In other perceptual domains, such as colours and phonemes, category boundaries have been shown to be consistent across age groups including pre-verbal infants (e.g. Eimas et al., Citation1971; Franklin et al., Citation2008; Franklin & Davies, Citation2004; McMurray & Aslin, Citation2005). These findings suggest ontogenetic consistency in category boundaries, and led us to adopt the approach of identifying category boundaries using adult judges.

The current study set out to conceptually replicate the original demonstration of CP in pre-verbal infants by Kotsoni et al. (Citation2001). Rather than establishing the category boundaries with infants, we deployed the standard procedure used in infant CP research on colour and sounds, that is, establishing the category boundaries using adults’ judgments before testing them with infants. In a series of three experiments, we first employed a standard adult naming procedure to identify the emotion categories on two morphed continua of happiness and fear using a new set of emotional facial expression stimuli (Experiment 1). We then used these stimuli to demonstrate CP of emotional facial expressions in adult perceivers (Experiment 2). Finally, we used the best stimulus pairs from the adult experiments to test whether CP occurs for emotional expressions in 7-month-old infants (Experiment 3). Ethical approval was obtained from the Ethical Committee at the Department of Psychology at the University of Amsterdam for all experiments. Sample sizes were determined based on feasibility.

Experiment 1

Method

Participants

A total of 62 adults (31 females, Mage = 30 years) took part in this experiment. Participants were recruited online via social media and received no compensation for participation.

Stimuli

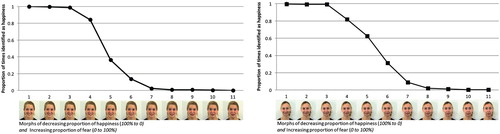

Prototypical facial expressions of fear and happiness were taken from The Amsterdam Dynamic Facial Expression Set (ADFES; Van Der Schalk, Hawk, Fischer, & Doosje, Citation2011). Two Caucasian female models were selected (F02 and F03). For each model, the two facial expressions were morphed using the Sqirlz 2.1 (Xiberpic.com) software to create continua of expressions with a mix of fear and happiness in different proportions. Intermediate exemplars at every 10% point were created (see ). The resulting morphs were edited in Photoshop to make them look more natural. Only small changes were made, such as removing shadows in the background and editing blurred parts of the shoulders, teeth, and hair. Any changes that were made were applied to all morphs on the continuum.

Design and procedure

The experiment was administered online using Qualtrics (http://www.qualtrics.com). Participants first saw a welcome screen and a digital consent form. After agreeing to participate, they were asked to enter their age and gender before proceeding to receive instructions about the task.

During the experiment, participants were presented with one image at a time and were asked to indicate which emotion was expressed on the image, given two response alternatives: “happiness” and “fear”. The position of the response alternatives was counter-balanced between participants. Participants always saw the image, the question, and the options concurrently.

There were 11 different expressions (2 prototypical expressions and 9 morphs) for each of the two models. Each expression was presented 4 times, making a total of 88 trials for each participant. The experiment was divided into four blocks. Each block consisted of 22 unique trials shown in a random order, and the two faces were shown interchangeably. The experiment was self-paced and participants could only continue to the next trial once they had responded to the previous trial. It took about 15 min for participants to complete the task.
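The trial structure described above can be sketched in Python as a check on the arithmetic (11 expressions x 2 models x 4 blocks = 88 trials). This is only an illustration: the experiment itself was run in Qualtrics, and the function name, the model labels "A" and "B", and the seeding are assumptions made for the example.

```python
import random


def build_identification_blocks(n_blocks=4, seed=None):
    """Return n_blocks lists, each holding the 22 unique stimuli in random order."""
    rng = random.Random(seed)
    # 11 expressions (morph 1 = prototypical happiness, morph 11 = prototypical
    # fear) for each of the two models gives 22 unique stimuli.
    stimuli = [(model, morph) for model in ("A", "B") for morph in range(1, 12)]
    blocks = []
    for _ in range(n_blocks):
        block = stimuli[:]      # every block contains each stimulus exactly once
        rng.shuffle(block)      # random order, so the two faces are interleaved
        blocks.append(block)
    return blocks


blocks = build_identification_blocks(seed=0)
print(sum(len(block) for block in blocks))  # total number of trials
```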

Results and discussion

For each stimulus, the proportion of trials that were judged as “happiness” was calculated for each participant for each trial type. As illustrated in (left panel), for Model A, morphs 1–4 were perceived most of the time as happiness, while morphs 5–11 were perceived most of the time as fear. For Model B (, right panel), morphs 1–5 were perceived most of the time as expressions of happiness, while morphs 6–11 were perceived most of the time as expressions of fear.

Considering the series of morphs as a continuum of two emotions transitioning from one into another, there should be a point where participants’ responses change from being predominantly one response to being predominantly the other. This point can be considered a boundary separating the two emotion categories: all expressions to the left of the boundary are more likely to be perceived as happiness and all expressions to the right of the boundary are more likely to be perceived as fear.

For Model A, the boundary occurred between morph 4 and morph 5. Morph 4 was perceived in 84% of the trials to be happiness while morph 5 was perceived in 36% of the trials to be happiness. Here, there was a clear distinction between morphs to the left of the mid-point and those to the right, in terms of their likelihood of being judged as a particular emotion. For Model B, the boundary occurred between morphs 5 and 6. This boundary was, however, not clear-cut, because the morphs adjacent to the boundary points were not judged with a clear preference for one emotion. Specifically, morph 5 was perceived as happiness in only 63% of the trials, compared to 83% of the morph adjacent to the boundary point for Model A. This suggests that there was considerable inconsistency in participants’ perception of the morphs closest to the boundary point for Model B.
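The boundary rule used here (the point at which the majority response switches from “happiness” to “fear”) can be sketched as a short Python function. The helper `find_boundary` is hypothetical, and only the values for morphs 4 and 5 of Model A (0.84 and 0.36) are taken from the text; all other proportions in the example are invented placeholders.

```python
def find_boundary(prop_happy):
    """Return the (left, right) morph numbers between which the majority
    response switches from "happiness" to "fear", or None if it never does.

    prop_happy: proportions of "happiness" responses, ordered from morph 1
    (prototypical happiness) to morph 11 (prototypical fear).
    """
    for i in range(len(prop_happy) - 1):
        if prop_happy[i] > 0.5 and prop_happy[i + 1] <= 0.5:
            return (i + 1, i + 2)  # morph numbers are 1-indexed
    return None


# Placeholder identification curve for Model A. Only morphs 4 and 5
# (0.84 and 0.36) are reported values; the rest are made up for illustration.
model_a = [0.99, 0.98, 0.95, 0.84, 0.36, 0.15, 0.08, 0.04, 0.02, 0.01, 0.01]
print(find_boundary(model_a))
```

A rule of this kind locates a boundary but says nothing about how sharp it is, which is why the proportions on either side of the boundary are also inspected.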

It is notable that the category boundary for Model A (between morph 4 and morph 5) and that for Model B (between morph 5 and morph 6) do not occur at the same point on the continuum. The difference in boundary locations could be caused by the differences in the intensity of emotions displayed in the prototypical (end-point) emotional expressions of the different models, or by differences in the facial morphology of the models (Cheal & Rutherford, Citation2015). Additionally, there are individual differences in the judgements of emotional morphs close to the category boundaries. This is particularly evident for Model B, given the less clear-cut boundary observed. Since a considerable number of participants judged the morph closest to the category boundary to be a different emotion than the majority did, these participants may in fact have a different perceptual category boundary for these stimuli. We therefore predicted that, when applied to a discrimination task in Experiment 2, stimuli with Model A would elicit CP, while stimuli with Model B, for which the boundary was less clear-cut, would be less likely to result in CP.

Experiment 2

Experiment 2 sought to establish adult CP of emotional facial expressions using the stimuli from Experiment 1. Specifically, we tested whether observers’ ability to discriminate between facial expressions would differ depending on whether two expressions were from the same emotion category or different ones, given the same amount of physical distance. Adopting the procedure employed in previous studies on adult CP of emotional facial expressions (e.g. Calder et al., Citation1996; Etcoff & Magee, Citation1992), we used a delayed match-to-sample (i.e. X-AB discrimination) task to test whether CP would occur for these stimuli.

Method

Participants

Participants were 34 students from the University of Amsterdam (20 females, Mage = 23 years), participating voluntarily or in return for partial course credits. One participant was excluded due to low accuracy (more than 2 standard deviations lower than the mean and no different from chance rate; inclusion of this participant did not change the pattern of results). The final sample consisted of 33 participants.

Stimuli and apparatus

The stimuli used in this experiment were a sub-set of those used in Experiment 1 (see Design and Procedure). Tests were run on lab PCs controlled by E-Prime (Schneider, Eschmann, & Zuccolotto, Citation2002). Responses were entered using standard keyboards.

Design and procedure

The experiment started with 6 practice trials, using morphs of a model from the ADFES database that was not used for the experimental trials. In each experimental trial, a target stimulus (“X”) was presented in the centre of the screen, disappearing after 1000 ms. Immediately afterwards, the target was presented together with a distractor next to it. Participants were asked to indicate which of the two images, the one to the left or the one to the right (“A” or “B”), was identical to what they had seen on the previous screen (i.e. the target, “X”). They entered their response with a key press. The inter-trial interval was self-paced and no feedback was provided.

The target was always paired with a distractor one step apart on the morphing continuum. For example, morph 4 would be paired with either morph 5 or morph 3 in a given trial. Prototypical expressions and the morphs closest to the prototypes were not included, as previous research has found a ceiling effect for discrimination of equivalent images (Calder et al., Citation1996). This resulted in 6 types of morph pairs for each model. Based on the results from Experiment 1, morph pair 4 and 5 was taken as a between-category comparison for Model A, and morph pair 5 and 6 as a between-category comparison for Model B; all the other morph pairs were within-category comparisons. Each pair was presented 8 times with each morph having an equal likelihood of being the target. The positions of the two images on the screen (left or right) were counterbalanced. Each participant completed 96 experimental trials (8 times per trial type x 6 types of trials x 2 models). The order of all trials was randomised. Participants were instructed to respond as quickly and as accurately as possible.
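As a sketch of this design, the 96 trials can be generated as follows. The experiment itself was run in E-Prime; the Python code below is only an illustration, and the way the 8 presentations per pair are split (2 targets x 2 target positions x 2 repetitions) is an assumption that is consistent with, but not stated in, the description above.

```python
import random
from itertools import product

# Category boundaries from Experiment 1 (the two morphs straddling the boundary).
BOUNDARIES = {"A": (4, 5), "B": (5, 6)}


def build_xab_trials(seed=None):
    """Build 2 models x 6 adjacent pairs x 8 presentations = 96 X-AB trials."""
    rng = random.Random(seed)
    trials = []
    for model, boundary in BOUNDARIES.items():
        for low in range(3, 9):  # adjacent pairs 3-4, 4-5, ..., 8-9
            pair = (low, low + 1)
            category = "between" if pair == boundary else "within"
            # Assumed split: 2 targets x 2 target positions x 2 repetitions = 8.
            for target, side, _rep in product(pair, ("left", "right"), range(2)):
                distractor = pair[0] if target == pair[1] else pair[1]
                trials.append({
                    "model": model,
                    "target": target,
                    "distractor": distractor,
                    "target_side": side,
                    "category": category,
                })
    rng.shuffle(trials)  # the order of all trials is randomised
    return trials


trials = build_xab_trials(seed=1)
print(len(trials))
```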

Results

The most widely used criterion for categorical perception is whether better discrimination occurs for pairs of stimuli from different perceptual categories than for pairs of stimuli from the same perceptual category, given the same amount of physical change (Calder et al., Citation1996). Better discrimination can be operationalised as higher accuracy or faster response speed; here both of these criteria were examined. First, a repeated-measures ANOVA was performed with Model (A vs B) and Category (within vs between) as within-subject factors. A significant interaction effect (Model*Category) was observed for both accuracy, F(1,32) = 11.92, p < 0.01, 95% CI [0.46, 1.94], d = 1.20, and response time, F(1,32) = 7.53, p < 0.01, 95% CI [0.23, 1.68], d = 0.96. This is consistent with findings from Experiment 1 that Model A and Model B have different category boundaries. Therefore, subsequent analyses for the two models were conducted separately.

Following the analysis procedure from Calder et al. (Citation1996), two comparisons between the category pair types were made. The between-category pairs were first compared to within-category pairs that lie well within the categories (i.e. the tails), and then to all of the within-category pairs. Calder and colleagues found that discrimination was easier for pairs of morphs closer to the endpoints of a continuum. The former test should thus be more likely to reveal differences (i.e. providing an easier test) than the latter.

Model A.

Accuracy

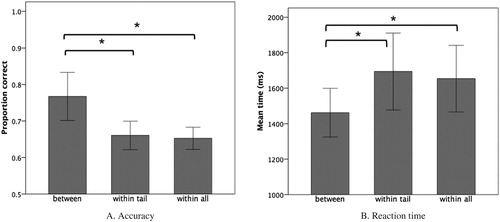

An accuracy rate for each trial type was calculated for each participant, with accuracy defined as the proportion of trials on which the target was correctly identified. The accuracy rate of the between-category comparison trials (morph 4 paired with morph 5) was compared to that of the within-category trials (all other trial types). We first compared the between-category trials with the tail, that is, within-category trials that lie well within each category (morph 3 paired with morph 4 on the happiness side and morph 8 paired with morph 9 on the fear side). A paired-sample t-test showed a significant difference in accuracy when comparing the between-category trials (M = 0.77, SD = 0.18) to the within-category trials in the tail (M = 0.66, SD = 0.11), t (32) = 3.95, p < 0.001, 95% CI [0.05, 0.16], d = 0.67. A paired-sample t-test comparing the between-category pairs to all the within-category pairs (M = 0.65, SD = 0.09) also revealed a significant difference in accuracy, t (32) = 3.64, p < 0.001, 95% CI [0.05, 0.18], d = 0.63. This shows that, as predicted, participants were significantly more accurate in discriminating between-category pairs of images than within-category pairs of images, that is, they showed CP for these stimuli (see Figure 2).

Figure 2. Accuracy (panel A: proportion correct) and reaction time (panel B: mean time in ms) of different trial types for Model A. In both plots, the leftmost bars show performance for between-category trials, the middle bars show performance for within-category pairs that lie well within the categories (i.e. the tails), and the rightmost bars show performance for all of the within-category pairs.

Reaction time

The average reaction time for each trial type was calculated for every participant. Then, we conducted the same comparisons between the two types of category pairs as for the accuracy measure. A paired-sample t-test showed a significant difference in reaction times for between-category pairs (M = 1462 ms, SD = 387 ms) compared to within-category pairs in the tail (M = 1694 ms, SD = 612 ms), that is, within-category pairs that were well within each category, t (32) = 3.62, p < 0.001, 95% CI [101.55, 362.87], d = 0.63. The difference was again significant when the between-category pair trials were compared to all the within-category pairs (M = 1654 ms, SD = 529 ms), t (32) = 3.02, p < 0.005, 95% CI [62.27, 321.45], d = 0.53. These results (see Figure 2) show that participants were faster at judging between-category pairs of expressions than within-category pairs, demonstrating that the enhanced accuracy for between-category trials was not due to a speed-accuracy trade-off.
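For readers who want to reproduce this kind of comparison, a paired-sample t-test and a standardised effect size can be computed from per-participant scores as sketched below. The text does not state how d was calculated; the sketch assumes it is the mean difference divided by the standard deviation of the differences, which implies d = t / √n. The function name and the example scores are hypothetical.

```python
import math
import statistics


def paired_t(x, y):
    """Paired-sample t-test on two equal-length lists of per-participant scores.

    Returns (t, df, d), where d is the mean difference divided by the standard
    deviation of the differences (an assumed definition, see the lead-in).
    """
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)          # sample SD (n - 1 denominator)
    t = mean_diff / (sd_diff / math.sqrt(n))   # standard paired t statistic
    d = mean_diff / sd_diff                    # equals t / sqrt(n)
    return t, n - 1, d


# Made-up accuracy scores for four participants, for illustration only.
between = [0.8, 0.7, 0.9, 0.6]
within = [0.6, 0.6, 0.7, 0.5]
t, df, d = paired_t(between, within)
print(round(t, 2), df, round(d, 2))
```

Under this assumption, the t values reported for Model A with n = 33 correspond to t / √n of roughly 0.69, 0.63, 0.63 and 0.53, close to the reported effect sizes.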

Model B.

Accuracy

The same analysis procedure was followed for Model B. First, the between-category pair (morph 5 paired with morph 6) was compared to the within-category pairs in the tail, that is, those that lie well within each category (morphs 3 and 4 on the happiness side and morphs 8 and 9 on the fear side). A paired-sample t-test showed no difference in accuracy between the two types of trials, t (32) = 1.33, p = 0.19, 95% CI [−0.11, 0.02], d = 0.28. The between-category pairs were then compared to the average of all the within-category pairs. A paired-sample t-test again showed no significant difference in accuracy, t (32) = 1.37, p = 0.18, 95% CI [−0.11, 0.02], d = 0.07.

Reaction time

The same comparisons for reaction time were then performed. Paired-sample t-tests did not show significant differences in reaction time when comparing between-category pairs and within-category pairs in the tail, t (32) = 0.75, p = 0.46, 95% CI [−71.66, 155.48], d = 0.27, nor were there significant differences when comparing between-category pairs and the average of all within-category pairs, t (32) = 0.76, p = 0.45, 95% CI [−118.99, 54.13], d = 0.27. The results in terms of both accuracy and reaction times thus showed no evidence of CP for the stimuli of Model B.

Discussion

Using the category boundaries established in Experiment 1, Experiment 2 tested adult participants’ ability to discriminate pairs of emotional expressions. Measuring performance by both accuracy and reaction time, we found CP effects with Model A, but not with Model B of our stimuli. For Model A, participants were both more accurate and faster when discriminating between two between-category expressions, meaning that it was easier to discriminate them from each other compared to the within-category pairs, given equal physical distance (i.e. one morph step). Our results replicate findings from previous research on CP of emotional facial expressions in adults using other stimuli (Calder et al., Citation1996; de Gelder et al., Citation1997; Etcoff & Magee, Citation1992).

Morphs of Model B did not elicit CP effects. This is likely due to a blurred category boundary for these stimuli. Research shows that there are individual differences in identifying where a category boundary lies, which can interact with model identity (Cheal & Rutherford, Citation2015). As shown in the results from Experiment 1, the category boundary for Model B was less clear-cut than that of Model A. If participants vary considerably in their judgments of where the boundary is for a given continuum, pairings of between-category and within-category stimuli may consequently not be valid. This result highlights the importance of establishing empirically the existence and location of a category boundary for a given set of stimuli. The results of Experiment 1 and 2 yielded a set of verified between-category and within-category stimulus pairs with Model A, which were used to test for CP in pre-verbal infants in Experiment 3.

Experiment 3

In order to test whether pre-verbal infants perceive emotions categorically, we employed a discrimination task suited to infants, based on a habituation and visual preference paradigm used by Kotsoni et al. (Citation2001). They used a visual-preference apparatus that allowed the experimenter to observe the infants through a peephole in the centre of the presentation screen while the stimuli were presented. Two experimenters manually timed the trials by noting the infants’ looking times, judged by the reflection of the stimulus over the infant’s pupils. In the current study, we employed eye-tracking in order to maximise measurement accuracy, and to reduce the influence of possible human errors. Additionally, to make sure that habituation would occur for every infant, we used a subject-controlled habituation phase (e.g. Cohen & Cashon, Citation2001; Lee et al., Citation2015), meaning that the habituation stimulus was presented repeatedly until that individual infant had habituated to it.

If infants perceive emotions categorically, they should look more at a novel expression than the habituated expression, but only for between-category pairs. If infants do not show CP, they should spend the same amount of time looking at the novel expression and the habituated expression in both within-category pairs and between-category pairs.

Method

Participants

Participants were recruited via the municipality of Amsterdam. Letters were sent out to parents who had recently had a baby, inviting them to take part in scientific research at the University of Amsterdam. Thirty-four healthy Dutch infants between the ages of 6.5 and 7.5 months (12 females, Mage = 7.1 months) participated in this experiment. Two of the participants were mixed-race; all others were Caucasian. Another 15 infants were tested but excluded due to calibration failures (N = 3) or fussiness during test (N = 12). All the infants were born full term and had no known visual or hearing impairments. All parents provided written informed consent for their child to participate and they received a small present in appreciation of their participation.

All parents of the participating infants reported having no family history of depression. Additionally, the main caregivers filled in the Depression Anxiety and Stress Scale (DASS; Lovibond & Lovibond, Citation1995) and all scored within the normal range.

Stimuli and apparatus

Because the morphed emotional expressions of Model B did not elicit CP effects in adults, only emotional expressions from Model A were used in the experiment. Consistent with previous research (e.g. Kotsoni et al., Citation2001), morphs two steps apart (20% physical distance) were used.

The experiment was programmed and presented in SR Research Experiment Builder (SR Research Ltd., Mississauga, Canada). The programme controls an EyeLink® eye-tracker with a sampling rate of 1000 Hz and an average accuracy of 0.25°–0.5°. The eye-tracker was located under a PC screen (size 34 × 27.5 cm; 1280 × 1024 pixels) where the stimuli were presented. A target sticker was placed on the participants’ foreheads as a reference point so that the eye-tracker could track the position of the head when participants moved during the experiment.

Design and procedure

We created two conditions, a happiness and a fear condition. Half of the infants (i.e. 17 infants) were habituated to an expression identified by adults as happiness (morph 4), and then presented with the same expression paired with either another happiness expression two morph steps away (morph 2, within-category comparison), or paired with an expression of fear, two morph steps away in the other direction (morph 6, between-category comparison). The other half of the infants were habituated to an expression of fear (morph 5), and then presented with this expression paired with either morph 7 (within-category comparison) or with morph 3 (between-category comparison).

Infants sat in a car seat on the lap of their caregiver about 60 cm in front of the screen. The experiment started with a three-point calibration, in which an attention getter was shown in three locations in order to record the location of the pupil and corneal reflection at specific points for each infant. After the eye-tracker was calibrated, the experiment commenced. Infants first saw a pre-test attention-getter (a cartoon image) to check that they were looking at the screen. The habituation trials then began. Infants were presented with two identical to-be-habituated images side by side on a black background. Each image measured 345 × 460 pixels on the screen, and the two images were 205 pixels apart. The infants were presented with the same images repeatedly until they habituated. Habituation was considered to have occurred when infants’ average looking time on three consecutive trials was less than 50% of the average looking time of the three longest trials, a commonly used habituation criterion (e.g. Cohen & Cashon, 2001; Lee et al., 2015; Woodward, 1998). The habituation phase ended when the habituation criterion was met or after 20 trials.
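The habituation criterion described above can be sketched in a few lines. This is a minimal illustration, not the Experiment Builder implementation used in the study: the function names are hypothetical, and it assumes that the three longest trials are drawn from all habituation trials completed so far and that the three consecutive trials are the most recent ones.

```python
from statistics import mean


def is_habituated(looking_times, window=3, ratio=0.5):
    """Return True if the mean of the last `window` trials is below
    `ratio` times the mean of the `window` longest trials so far.

    ASSUMPTION: the longest trials are taken from all trials seen so far.
    """
    if len(looking_times) < window:
        return False
    recent = mean(looking_times[-window:])
    baseline = mean(sorted(looking_times, reverse=True)[:window])
    return recent < ratio * baseline


def trials_to_habituation(looking_times, max_trials=20):
    """Return the trial number at which the criterion is first met,
    or None if it is not met within `max_trials`."""
    for n in range(1, min(len(looking_times), max_trials) + 1):
        if is_habituated(looking_times[:n]):
            return n
    return None


# Hypothetical looking times in seconds, for illustration only.
example = [10, 12, 8, 9, 4, 3, 2]
print(trials_to_habituation(example))
```

Under this reading, the criterion can be met after as few as four trials, which is consistent with the minimum number of habituation trials reported in the Results.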

Four test trials then followed. Between-category pairs of expressions and within-category pairs of expressions were each presented twice, with the location on screen counterbalanced. The order of the four test trials was pseudo-randomized so that the first two trials consisted of one between-category pair and one within-category pair. Before the end of the experiment, the pre-test image appeared again, to check whether the infant was still looking at the screen.
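One way to generate a test order that satisfies these constraints is sketched below. The function name and the exact randomisation scheme are assumptions; the text specifies only that each pair type appeared twice, that location was counterbalanced, and that the first two trials contained one pair of each type.

```python
import random


def make_test_trial_order(rng=None):
    """Return four (pair_type, novel_side) test trials.

    ASSUMPTION: "location counterbalanced" means the novel expression
    appears once on the left and once on the right for each pair type.
    """
    rng = rng or random.Random()
    sides = {}
    for pair in ("between", "within"):
        order = ["left", "right"]
        rng.shuffle(order)
        sides[pair] = order

    trials = []
    for block in range(2):
        # Each block of two trials holds one between- and one within-category pair.
        half = [(pair, sides[pair][block]) for pair in ("between", "within")]
        rng.shuffle(half)
        trials.extend(half)
    return trials


print(make_test_trial_order(random.Random(1)))
```

Building the order in two blocks guarantees the constraint on the first two trials by construction, rather than by shuffling and rejecting invalid orders.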

Every trial started with an animated fixation-cue in the centre of the screen (an expanding and contracting bulls-eye) accompanied by sounds in order to get the infant’s attention. Once the infant was looking at the screen, the stimuli were presented. Stimulus presentation was controlled by a button press on the experimenter’s computer in the control room next door, where the test space could be observed through a camera.

A trial automatically ended when the infant looked away from the screen for more than 1500 ms or after 20 s. A trial was recycled (i.e. presented again) if the infant looked at the screen for less than 1000 ms before they looked away, or if the infant did not look at the screen at all within 4000 ms of the trial starting (see Cohen & Cashon, 2001). These criteria applied to both the habituation and test phase. A short animation attention-getter (lasting about 3 s) was presented every four trials to keep the infants’ attention on the screen. The eye-tracker tracked the gaze of the infant’s right eye throughout the experiment. The test took 5–10 min to complete.
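The trial-termination and recycling rules can be expressed as a small function over a list of on-screen looks. This is only a sketch: the function name and data layout are hypothetical, and it assumes that the 1000 ms minimum refers to looking accumulated before the look-away that ends the trial.

```python
MAX_TRIAL_MS = 20_000  # a trial ends after 20 s
LOOK_AWAY_MS = 1_500   # or after a look-away longer than 1500 ms
MIN_LOOK_MS = 1_000    # recycle if less looking than this was accumulated
NO_LOOK_MS = 4_000     # recycle if there was no look within 4000 ms


def evaluate_trial(looks):
    """looks: chronologically sorted, non-overlapping (start_ms, end_ms)
    on-screen looks, timed from trial onset."""
    if not looks or looks[0][0] >= NO_LOOK_MS:
        return {"end_ms": NO_LOOK_MS, "recycle": True}

    looked_ms = 0
    for i, (start, end) in enumerate(looks):
        if start >= MAX_TRIAL_MS:
            break
        end = min(end, MAX_TRIAL_MS)
        looked_ms += end - start
        if end == MAX_TRIAL_MS:
            break
        next_start = looks[i + 1][0] if i + 1 < len(looks) else float("inf")
        if next_start - end > LOOK_AWAY_MS:
            # The trial ends once the look-away has exceeded the limit.
            return {"end_ms": min(end + LOOK_AWAY_MS, MAX_TRIAL_MS),
                    "recycle": looked_ms < MIN_LOOK_MS}

    # ASSUMPTION: the same minimum-looking rule applies at the 20 s timeout.
    return {"end_ms": MAX_TRIAL_MS, "recycle": looked_ms < MIN_LOOK_MS}


print(evaluate_trial([(200, 5000), (5800, 9000)]))
```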

Data processing

Gaze data were exported using EyeLink® Data Viewer (SR Research Ltd., Mississauga, Canada). In Spatial Overlay View, we selected two rectangular interest areas covering the locations of the images and extending 55 pixels beyond the edges of each image. Dwell Time on each interest area was then defined as the looking time for each expression within a trial. Total Dwell Time on both interest areas was defined as total looking time on screen.
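For readers who want to reproduce the interest areas outside Data Viewer, the geometry can be sketched as follows. The image size, spacing, screen resolution and 55-pixel margin come from the text; the assumption that the image pair was centred on the screen, and the sample-counting approximation of dwell time (one sample per millisecond at 1000 Hz), are illustrative and may differ from how Data Viewer computes Dwell Time.

```python
from dataclasses import dataclass

SCREEN_W, SCREEN_H = 1280, 1024  # screen resolution reported in the text
IMG_W, IMG_H = 345, 460          # on-screen image size reported in the text
GAP = 205                        # horizontal distance between the two images
MARGIN = 55                      # interest areas extend this far beyond each image


@dataclass(frozen=True)
class Rect:
    left: float
    top: float
    right: float
    bottom: float

    def padded(self, margin):
        return Rect(self.left - margin, self.top - margin,
                    self.right + margin, self.bottom + margin)

    def contains(self, x, y):
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def interest_areas():
    # ASSUMPTION: the pair of images is centred on the screen.
    x0 = (SCREEN_W - (2 * IMG_W + GAP)) / 2
    y0 = (SCREEN_H - IMG_H) / 2
    left_img = Rect(x0, y0, x0 + IMG_W, y0 + IMG_H)
    right_img = Rect(x0 + IMG_W + GAP, y0, x0 + 2 * IMG_W + GAP, y0 + IMG_H)
    return left_img.padded(MARGIN), right_img.padded(MARGIN)


def dwell_times(samples, sample_ms=1.0):
    """samples: iterable of (x, y) gaze positions in screen pixels."""
    left, right = interest_areas()
    left_ms = sum(sample_ms for x, y in samples if left.contains(x, y))
    right_ms = sum(sample_ms for x, y in samples if right.contains(x, y))
    return {"left": left_ms, "right": right_ms, "total": left_ms + right_ms}
```

With these numbers the two padded interest areas do not overlap: a strip of 95 pixels remains between them, so gaze on the screen midline is assigned to neither expression.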

Results

To check if participants in the two conditions were comparable, we conducted a t-test on age and a chi-square test on sex. Both the t-test, t(32) = 0.37, p = 0.63, 95% CI [−0.15, 0.22], d = 0.13, and the chi-square test, χ²(1) = 0.52, p = 0.47, 95% CI [−0.43, 0.93], d = 0.25, revealed no significant differences between participants in the two groups. We therefore concluded that the two participant groups were comparable in age and sex and proceeded to the following analysis.

All infants reached the habituation criterion within 20 trials. It took an average of 8.9 trials (SD = 4.0) for infants to habituate to an emotional expression, with a minimum of 4 trials and a maximum of 18 trials. There was no significant difference between the number of trials taken to habituate to a happy expression (M = 8.6, SD = 4.4) compared to a fearful expression (M = 9.4, SD = 3.8), t(32) = 0.59, p = 0.37, 95% CI [−3.66, 2.13], d = 0.21.

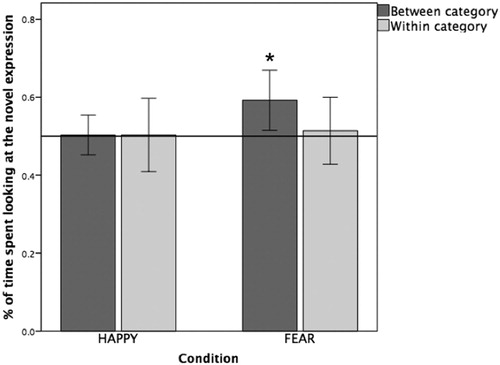

If infants perceive emotions categorically, they should show discrimination between pairs of facial expressions that belong to different emotion categories, but not between pairs of expressions from the same emotion category. To test this hypothesis, we followed the analysis procedure of Kotsoni et al. (2001). First, we examined whether infants could discriminate between within-category pairs of facial expressions. We therefore compared infants’ proportion of looking time for the novel expression (relative to the habituated expression) in the within-category test trials to the proportion of looking time expected by chance (0.5). The results showed that infants did not look at the novel expressions in a within-category pair longer than would be predicted by chance, both after habituating to a happy expression, t(16) = 0.07, p = 0.09, 95% CI [0.09, 0.10], d = 0.04, and after habituating to a fearful expression, t(16) = 0.35, p = 0.74, 95% CI [−0.07, 0.10], d = 0.18. This suggests that, as expected, infants did not discriminate between within-category pairs of facial expressions. We then performed the same comparison for between-category test trials. This test showed that infants did look longer at the novel expression after habituation to the fearful expression, t(16) = 2.53, p < 0.05, 95% CI [0.01, 0.17], d = 1.27, but not after habituation to the happy expression, t(16) = 0.13, p = 0.90, 95% CI [−0.05, 0.05], d = 0.07 (see Note 1). These results are illustrated in the figure above.
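The comparison against chance amounts to a one-sample t-test on each infant's proportion of looking at the novel expression. The sketch below uses only the Python standard library and hypothetical data; the function names are illustrative. The effect-size formula d = 2t/√df is an inference from the reported values (for example, 2 × 2.53 / √16 ≈ 1.27), not something the text states.

```python
from math import sqrt
from statistics import mean, stdev


def novelty_proportion(novel_ms, familiar_ms):
    """Proportion of looking time directed at the novel expression."""
    return novel_ms / (novel_ms + familiar_ms)


def one_sample_t(values, mu=0.5):
    """Return (t, df) for a one-sample t-test of `values` against `mu`."""
    n = len(values)
    t = (mean(values) - mu) / (stdev(values) / sqrt(n))
    return t, n - 1


def cohens_d_from_t(t, df):
    # ASSUMPTION: d = 2t / sqrt(df), inferred from the reported statistics.
    return 2 * t / sqrt(df)


# Hypothetical per-infant proportions, for illustration only.
proportions = [0.6, 0.4, 0.7, 0.5, 0.8]
t, df = one_sample_t(proportions)
print(round(t, 3), df)
```

A proportion reliably above 0.5 indicates a novelty preference, which is the index of discrimination used in this paradigm.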

Discussion

These results show that infants are able to discriminate between-category pairs of emotional expressions, but not within-category pairs of emotional expressions. The effect is characterised by preferential looking at a novel expression from a different emotion category (i.e. happiness) as compared to the emotion category of the habituated expression (i.e. fear). This provides support for CP of emotional facial expressions in pre-verbal infants.

However, we only observed the effect in one direction, that is, in the fear condition, but not in the happiness condition. This is contrary to a previous study that found CP of emotional facial expressions in infants after they were habituated to happy, but not fearful facial expressions (Kotsoni et al., 2001). Kotsoni and colleagues explained their results as possibly resulting from the “negativity bias”, a preference for negative stimuli that has been observed in this age group. This bias has been explained as being due to infants attending to negative stimuli because they are less frequently encountered in their daily life (de Haan & Nelson, 1998; Nelson & Dolgin, 1985; Vaish et al., 2008; Yang, Zald, & Blake, 2007). According to Kotsoni and colleagues, infants’ spontaneous preference for fearful faces may have interacted with their general preference for novel stimuli (i.e. the between-category happy faces), making the infants in their study look at both faces. However, our results could not be explained by this line of reasoning because the infants in our study did not prefer looking at fearful, as compared to happy, faces. An important difference between the current study and that of Kotsoni and colleagues is the method of habituation. It may be that the 4 trials of fixed familiarisation used for habituation in Kotsoni and colleagues’ study were not sufficient for infants to habituate fully to the fearful expression, due to its high baseline novelty. In the current study, we employed a subject-controlled habituation procedure in order to ensure that infants were fully habituated to the initial image before moving on to the test phase. It is worth noting that the average number of trials taken to habituate in our study (8.9) was more than twice the number of trials used in the fixed familiarisation procedure by Kotsoni and colleagues (4 trials). Therefore, it is possible that the fixed familiarisation phase did not allow enough time for infants to habituate to the emotional expressions in Kotsoni and colleagues’ study.

In the current study, infants did not take longer to habituate to a fearful expression than to a happy expression. This suggests that the baseline novelty of these two expressions may in fact not differ for 7-month-old infants. This would argue against a negativity bias in baseline preferences in this age group. Infants in our study did show a novelty preference for the between-category expression (happiness) after habituation to fear. However, after habituation to happiness, they looked at the novel between-category expression (fear) for as long as the habituated expression (happiness). Is this evidence for a “positivity bias”? Research has shown that 4-month-old and 7-month-old infants in fact avoid looking at facial expressions of anger and fear compared to expressions of happiness (Hunnius, de Wit, Vrins, & von Hofsten, 2011). After initial rapid detection, adults also respond to threat-related emotional facial expressions (e.g. fear and anger) with avoidance (Becker & Detweiler-Bedell, 2009; Lundqvist & Ohman, 2005; Van Honk & Schutter, 2007). Note that this avoidant looking pattern for fearful expressions does not necessarily yield a prediction of a baseline preference for either positive or negative emotional expressions; the preference for happiness over fear may only be evident when the two expressions are displayed side by side, forming a contrast. Indeed, in the current study, the habituation data revealed no difference in the number of trials taken to habituate to happiness as opposed to fear. This pattern would benefit from replication and further examination in future studies.

General discussion

The current study reported three experiments examining the CP of emotional facial expressions on the continuum happiness-fear in adults and 7-month-old infants. After identifying the category boundaries using a naming procedure and demonstrating CP with these stimuli in adults, we employed a habituation and visual-preference paradigm to test infants’ CP of emotional facial expressions. Our findings provide evidence for the CP of emotion in pre-verbal infants.

For the first time in the study of CP of emotional facial expressions in pre-verbal infants, we established category boundaries based on adults’ perceptual judgments before using the stimuli to test infants. One advantage of having validated the stimuli in adults before using them to test CP in infants was that this avoided the use of an emotion continuum with blurred category boundaries: the results in Experiment 1 for Model B revealed a blurred category boundary, and the stimuli with Model B did not elicit CP in adults in Experiment 2. This fits with previous research demonstrating variability in category boundary points and patterns across stimuli (Calder et al., 1996; Cheal & Rutherford, 2015). These differences between stimuli are a factor that has typically not been taken into account in infant emotion CP research.

Previous studies either did not establish the boundary empirically, or drew inferences based on infants’ behaviours (e.g. Kotsoni et al., 2001; Lee et al., 2015). If the category boundary is assumed to occur at a point of the continuum where it does not in fact occur perceptually, what are assumed to be between- and within-category pairings of expressions may not correspond to the actual categories. This could explain previous inconsistencies in the findings on infant CP of emotional facial expressions. The current study opted to establish category boundaries beforehand using adult judges, a standard procedure employed in infant CP research on colour and speech (e.g. Eimas et al., 1971; Franklin et al., 2008; Franklin & Davies, 2004; McMurray & Aslin, 2005). The advantage of using adult judges is that it allows the category boundary to be established robustly and directly, based on a large number of data points. Given that the category boundaries identified by adult judges have previously been shown to match those of infants (e.g. Eimas et al., 1971; Franklin et al., 2008; Franklin & Davies, 2004; McMurray & Aslin, 2005), establishing category boundaries with adult judges provided more reliable estimates. One disadvantage of this approach, however, is that it ignores individual differences in judgements of category boundaries. Although there is convergence among most individuals, our data from Experiment 1 showed that some participants judged the locations of category boundaries to be slightly different from the majority. Future research with an interest in individual differences should take this into account.

Another factor that may have contributed to the inconsistencies in previous findings on CP of emotions in infants is the variation in the emotion categories and ages examined. Research has indicated that the development of emotion recognition in infants is dependent on the amount of exposure to and the relevance of a specific emotion at a given point during development (e.g. de Haan, Belsky, Reid, Volein, & Johnson, 2004; Pollak & Kistler, 2002; Pollak & Sinha, 2002). It is therefore possible that CP of different emotions may be observed at different points of the developmental trajectory. For example, Lee et al. (2015) only observed CP of emotion on the continuum happiness-anger in 9- and 12-month-olds, but not 6-month-olds. Future research may benefit from investigating a wider range of emotion continua and infants of different ages.

Consistent with Kotsoni et al. (2001), the current research tested pre-verbal infants of around 7 months. Infants of this age have limited verbal knowledge. Previous research has shown that most children do not produce their first word until their first birthday (Thomas, Campos, Shucard, Ramsay, & Shucard, 1981). In terms of comprehension, 6–9-month-olds have been shown to understand only a small number of concrete words referring to familiar objects such as foods and body parts (Bergelson & Swingley, 2012). Emotion labels are generally not mentioned as one of the words 8–16-month-olds might understand or produce in the frequently used parental questionnaire to assess their vocabulary (i.e. communicative development inventory; Fenson et al., 2007). Therefore, it is highly unlikely that the infants in the current study had access to verbal labels matching the displayed emotions during our looking task. This suggests that CP does not require language to occur. This, however, does not imply that CP is independent of linguistic or conceptual knowledge once the individual has acquired such knowledge.

Our findings are consistent with accounts arguing that CP is not dependent on language modulating discrimination in a top-down manner (e.g. Dailey, Cottrell, Padgett, & Adolphs, 2002; Sauter, Le Guen, & Haun, 2011; Yang, Kanazawa, Yamaguchi, & Kuriki, 2016). For example, Dailey et al. (2002) demonstrated that without any language input, a trained neural network is able to identify facial expressions in a similar fashion to human beings. Rather than depending on language, CP occurs in very young infants across a range of different perceptual domains before the development of language or complex concepts. It is notable that emotional facial expressions may be perceptually more complex than speech sounds and colours, which vary on fewer perceptual dimensions. It is therefore particularly remarkable that CP occurs for emotional facial expressions in pre-verbal infants.

Disclosure statement

No potential conflict of interest was reported by the authors.

Notes

1 Given the asymmetrical CP found for infants in Experiment 3, we sought to establish whether CP for adults was also asymmetrical. We therefore re-analysed the adult data for Model A (for which CP had been found) from Experiment 2. In order to maximise the similarity to the infant analysis, between-category trials (i.e. trials with morphs 4 and 5) were compared to within-category trials for the single nearest trial type (i.e. trials with morphs 3 and 4 for within-category Happiness, and trials with morphs 5 and 6 for within-category Fear). CP was found for accuracy (i.e. participants being more accurate for between- as compared to within-category trials) both for Happiness, t(32) = 2.85, p = 0.008, 95% CI [0.05, 0.30], d = 0.50, and for Fear, t(32) = 2.40, p = 0.02, 95% CI [0.03, 0.31], d = 0.42. CP was found for reaction times (i.e. participants being faster for between- as compared to within-category trials) for Happiness, t(32) = 2.41, p = 0.02, 95% CI [47.93, 563.74], d = 0.42, but not for Fear, t(32) = 1.11, p = 0.24, 95% CI [−445.49, 130.60], d = 0.19, though the means were in the expected direction (between-category trials mean: 1609 ms; within-category trials mean: 1767 ms). These results show that CP for emotional facial expressions is not asymmetrical in adults.

References

- Becker, M. W., & Detweiler-Bedell, B. (2009). Early detection and avoidance of threatening faces during passive viewing. Quarterly Journal of Experimental Psychology, 62(7), 1257–1264.

- Bergelson, E., & Swingley, D. (2012). At 6–9 months, human infants know the meanings of many common nouns. Proceedings of the National Academy of Sciences, 109(9), 3253–3258.

- Bornstein, M. H., Kessen, W., & Weiskopf, S. (1976). Color vision and hue categorization in young human infants. Journal of Experimental Psychology: Human Perception and Performance, 2(1), 115–129.

- Bornstein, M. H., & Korda, N. O. (1984). Discrimination and matching within and between hues measured by reaction times: Some implications for categorical perception and levels of information processing. Psychological Research, 46(3), 207–222.

- Calder, A. J., Young, A. W., Perrett, D. I., Etcoff, N. L., & Rowland, D. (1996). Categorical perception of morphed facial expressions. Visual Cognition, 3(2), 81–118.

- Campanella, S., Quinet, P., Bruyer, R., Crommelinck, M., & Guerit, J. M. (2002). Categorical perception of happiness and fear facial expressions: An ERP study. Journal of Cognitive Neuroscience, 14(2), 210–227.

- Cheal, J. L., & Rutherford, M. D. (2011). Categorical perception of emotional facial expressions in preschoolers. Journal of Experimental Child Psychology, 110(3), 434–443.

- Cheal, J. L., & Rutherford, M. D. (2015). Investigating the category boundaries of emotional facial expressions: Effects of individual participant and model and the stability over time. Personality and Individual Differences, 74, 146–152.

- Cohen, L. B., & Cashon, C. H. (2001). Do 7-month-old infants process independent features or facial configurations? Infant and Child Development, 10(1-2), 83–92.

- Dailey, M. N., Cottrell, G. W., Padgett, C., & Adolphs, R. (2002). EMPATH: A neural network that categorizes facial expressions. Journal of Cognitive Neuroscience, 14(8), 1158–1173.

- Daoutis, C. A., Franklin, A., Riddett, A., Clifford, A., & Davies, I. R. (2006). Categorical effects in children’s colour search: A cross-linguistic comparison. British Journal of Developmental Psychology, 24(2), 373–400.

- de Gelder, B., Teunisse, J. P., & Benson, P. J. (1997). Categorical perception of facial expressions: Categories and their internal structure. Cognition and Emotion, 11(1), 1–23.

- de Haan, M., Belsky, J., Reid, V., Volein, A., & Johnson, M. H. (2004). Maternal personality and infants’ neural and visual responsivity to facial expressions of emotion. Journal of Child Psychology and Psychiatry, 45, 1209–1218.

- de Haan, M., & Nelson, C. A. (1998). Discrimination and categorization of facial expressions of emotion during infancy. In A. Slater (Ed.), Perceptual development: Visual, auditory and speech perception in infancy (pp. 287–309). Hove: Psychology Press.

- Eimas, P. D., Siqueland, E. R., Jusczyk, P., & Vigorito, J. (1971). Speech perception in infants. Science, 171(3968), 303–306.

- Etcoff, N. L., & Magee, J. J. (1992). Categorical perception of facial expressions. Cognition, 44(3), 227–240.

- Fantz, R. L. (1964). Visual experience in infants: Decreased attention to familiar patterns relative to novel ones. Science, 146(3644), 668–670.

- Fenson, L., Bates, E., Dale, P. S., Marchman, V. A., Reznick, J. S., & Thal, D. J. (2007). MacArthur-Bates communicative development inventories. Baltimore: Paul H. Brookes Publishing Company.

- Franklin, A., Clifford, A., Williamson, E., & Davies, I. (2005). Color term knowledge does not affect categorical perception of color in toddlers. Journal of Experimental Child Psychology, 90(2), 114–141.

- Franklin, A., & Davies, I. R. (2004). New evidence for infant colour categories. British Journal of Developmental Psychology, 22(3), 349–377.

- Franklin, A., Drivonikou, G. V., Bevis, L., Davies, I. R., Kay, P., & Regier, T. (2008). Categorical perception of color is lateralized to the right hemisphere in infants, but to the left hemisphere in adults. Proceedings of the National Academy of Sciences, 105(9), 3221–3225.

- Fugate, J. M. B., Gouzoules, H., & Barrett, L. F. (2010). Reading chimpanzee faces: Evidence for the role of verbal labels in categorical perception of emotion. Emotion, 10(4), 544–554.

- Harnad, S. (1987). Categorical perception: The groundwork of cognition. Cambridge: Cambridge University Press.

- Hunnius, S., de Wit, T. C., Vrins, S., & von Hofsten, C. (2011). Facing threat: Infants’ and adults’ visual scanning of faces with neutral, happy, sad, angry, and fearful emotional expressions. Cognition and Emotion, 25(2), 193–205.

- Kotsoni, E., de Haan, M., & Johnson, M. H. (2001). Categorical perception of facial expressions by 7-month-old infants. Perception, 30(9), 1115–1125.

- Laukka, P. (2005). Categorical perception of vocal emotion expressions. Emotion, 5(3), 277–295.

- Lee, V., Cheal, J. L., & Rutherford, M. D. (2015). Categorical perception along the happy–angry and happy–sad continua in the first year of life. Infant Behavior and Development, 40, 95–102.

- Leppänen, J. M., Richmond, J., Vogel-Farley, V. K., Moulson, M. C., & Nelson, C. A. (2009). Categorical representation of facial expressions in the infant brain. Infancy, 14(3), 346–362.

- Liberman, A. M., Harris, K. S., Hoffman, H. S., & Griffith, B. C. (1957). The discrimination of speech sounds within and across phoneme boundaries. Journal of Experimental Psychology, 54(5), 358–368.

- Lovibond, S. H., & Lovibond, P. F. (1995). Manual for the depression anxiety stress scales (2nd ed.). Sydney: Psychology Foundation.

- Lundqvist, D., & Ohman, A. (2005). Emotion regulates attention: The relation between facial configurations, facial emotion, and visual attention. Visual Cognition, 12(1), 51–84.

- McMurray, B., & Aslin, R. N. (2005). Infants are sensitive to within-category variation in speech perception. Cognition, 95(2), B15–B26.

- Nelson, C. A., & Dolgin, K. G. (1985). The generalized discrimination of facial expressions by seven-month-old infants. Child Development, 56(1), 58–61.

- Nelson, C. A., Morse, P. A., & Leavitt, L. A. (1979). Recognition of facial expressions by seven-month-old infants. Child Development, 50(4), 1239–1242.

- Peltola, M. J., Hietanen, J. K., Forssman, L., & Leppänen, J. M. (2013). The emergence and stability of the attentional bias to fearful faces in infancy. Infancy, 18(6), 905–926.

- Pilling, M., Wiggett, A., Özgen, E., & Davies, I. R. (2003). Is color “categorical perception” really perceptual? Memory & Cognition, 31(4), 538–551.

- Pisoni, D. B. (1971). On the nature of categorical perception of speech sounds (doctoral dissertation). University of Michigan, Ann Arbor, MI.

- Pollak, S. D., & Kistler, D. J. (2002). Early experience is associated with the development of categorical representations for facial expressions of emotion. Proceedings of the National Academy of Sciences, 99(13), 9072–9076.

- Pollak, S. D., & Sinha, P. (2002). Effects of early experience on children’s recognition of facial displays of emotion. Developmental Psychology, 38(5), 784–791.

- Regier, T., & Kay, P. (2009). Language, thought, and color: Whorf was half right. Trends in Cognitive Sciences, 13(10), 439–446.

- Roberson, D., & Davidoff, J. (2000). The categorical perception of colors and facial expressions: The effect of verbal interference. Memory & Cognition, 28(6), 977–986.

- Russell, J. A., & Fehr, B. (1987). Relativity in the perception of emotion in facial expressions. Journal of Experimental Psychology: General, 116(3), 223–237.

- Sauter, D. A., Le Guen, O., & Haun, D. B. M. (2011). Categorical perception of emotional facial expressions does not require lexical categories. Emotion, 11(6), 1479–1483.

- Schneider, W., Eschmann, A., & Zuccolotto, A. (2002). E-Prime user’s guide. Pittsburgh, PA: Psychology Software Tools.

- Thomas, D. G., Campos, J. J., Shucard, D. W., Ramsay, D. S., & Shucard, J. (1981). Semantic comprehension in infancy: A signal detection analysis. Child Development, 52(3), 798–803.

- Vaish, A., Grossmann, T., & Woodward, A. (2008). Not all emotions are created equal: The negativity bias in social-emotional development. Psychological Bulletin, 134(3), 383–403.

- Van Der Schalk, J., Hawk, S. T., Fischer, A. H., & Doosje, B. (2011). Moving faces, looking places: Validation of the Amsterdam dynamic facial expression Set (ADFES). Emotion, 11(4), 907–920.

- Van Honk, J., & Schutter, D. J. (2007). Vigilant and avoidant responses to angry facial expressions. In E. Harmon-Jones, & P. Winkielman (Eds.), Social neuroscience: Integrating biological and psychological explanations of social behavior (pp. 197–223). New York: Guilford Press.

- Woodward, A. L. (1998). Infants selectively encode the goal object of an actor’s reach. Cognition, 69(1), 1–34.

- Yang, J., Kanazawa, S., Yamaguchi, M. K., & Kuriki, I. (2016). Cortical response to categorical color perception in infants investigated by near-infrared spectroscopy. Proceedings of the National Academy of Sciences, 113, 2370–2375.

- Yang, E., Zald, D. H., & Blake, R. (2007). Fearful expressions gain preferential access to awareness during continuous flash suppression. Emotion, 7(4), 882–886.

- Young, A. W., Rowland, D., Calder, A. J., Etcoff, N. L., Seth, A., & Perrett, D. I. (1997). Facial expression megamix: Tests of dimensional and category accounts of emotion recognition. Cognition, 63(3), 271–313.