ABSTRACT

Facial mimicry has long been considered a main mechanism underlying emotional contagion (i.e. the transfer of emotions between people). A closer look at the empirical evidence, however, reveals that although these two phenomena often co-occur, the changes in emotional expressions may not necessarily be causally linked to the changes in subjective emotional experience. Here, we directly investigate this link, by testing a model in which facial activity served as a mediator between the observed emotional displays and subsequently felt emotions (i.e. emotional contagion). Participants watched videos of different senders displaying happiness, anger, or sadness, while their facial activity was recorded. After each video, participants rated their own emotions and assessed the senders’ likeability and competence. Participants both mimicked and reported feeling the emotions displayed by the senders. Moreover, their facial activity partially explained the association between the senders’ emotional displays and self-reported emotions, thereby supporting the notion that facial mimicry may be involved in emotional contagion.

The transfer of affective states between people has been given different names, such as emotional contagion (Hatfield, Cacioppo, & Rapson, 1994), emotional transfer (Parkinson, 2011), affective linkage (Elfenbein, 2014), or the social induction of affect (Epstude & Mussweiler, 2009). Hatfield et al. (1994) promoted the term emotional contagion, which evokes the notion of a rapid, unconscious transmission of affect. Based on a review of literature and building on the ideas formulated by Lipps (1907), they proposed a two-step process. First, the receiver imitates the sender’s emotional display in emotional mimicry. Second, facial feedback from such mimicry elicits the corresponding emotional state in the receiver (Söderkvist, Ohlén, & Dimberg, 2018). On this account, mimicry is a cause of emotional contagion.

Yet, the evidence for the causal role of mimicry, although persuasive, was indirect. In a typical study, participants view pictures or videos of emotional faces while their facial activity is measured. After each picture or video (or a sequence thereof) participants report their own feelings as an indicator of emotional contagion. Such studies demonstrated that emotional mimicry and contagion co-occurred (e.g. Hsee, Hatfield, Carlson, & Chemtob, 1990; Lundqvist & Dimberg, 1995), that is, participants’ facial behaviour as well as self-reported emotional experiences corresponded to the facial expressions of the senders. This co-occurrence of mimicry and emotional contagion was then interpreted to show that the former was the cause of the latter (Hatfield et al., 1994).

However, subsequent studies have challenged this view (Hess & Blairy, 2001; Van der Schalk et al., 2011). For instance, Hess and Blairy (2001) found evidence of both mimicry and emotional contagion, but mediation analyses did not support the notion that these two processes were significantly related. In a similar vein, studies that tested the correlation between the relevant facial behaviour and self-reported feelings in response to photographed or video-taped senders did not find any significant link between mimicry and contagion (e.g. Van der Schalk et al., 2011). The only exception is the study by Sato, Fujimura, Kochiyama, and Suzuki (2013) who used path analysis to demonstrate that, when participants were exposed to dynamic expressions, facial mimicry predicted the valence of both felt and recognised emotions. This, according to the authors, confirms that facial mimicry influences emotional contagion. However, the study did not examine the effects specific to particular emotions, which seems critically important because emotional displays may evoke not only convergent but also divergent reactions (e.g. angry faces may elicit not only anger, but also disgust or fear; Lundqvist & Dimberg, 1995; Van der Schalk et al., 2011).

Taken together, the majority of studies that went beyond testing for co-occurrence of emotional contagion and emotional mimicry did not support the notion that these phenomena are causally related. At the same time, a closer examination of previous studies shows that they were either limited to correlations between facial behaviour and self-reported feelings or tested a model in which emotional contagion served as a mediator between emotional mimicry and emotion recognition. Put differently, null findings reported in these studies do not directly challenge the notion that mimicry provides a link between emotions displayed by the sender and those reported by the receiver. Therefore, in the current study we addressed this link explicitly by testing a model in which facial mimicry served as a mediator between the sender’s emotional display and the receiver’s feelings.

In addition, testing the mimicry-contagion link in the context of an emotion recognition task may cause interference between the emotion recognition task and participants’ feelings. Specifically, assigning the senders’ expression to a particular emotion category may make this category more accessible and thus shape the receivers’ interpretation of their own feelings (Gawronski & Bodenhausen, 2005). Moreover, emotional states displayed by the senders typically represent basic emotions and thus asking participants to recognise these expressions is a very easy task. Such easy tasks make processing fluent and thus often elicit positive affective reactions, regardless of the valence of a perceived facial display (Olszanowski, Kaminska, & Winkielman, 2018). This may explain why in the aforementioned experiments, which relied on emotion recognition tasks (e.g. Hess & Blairy, 2001; Van der Schalk et al., 2011), the effects observed for self-reported emotions following exposure to negative emotional displays (e.g. anger or sadness) were not very robust. It is possible that these effects were cushioned by fluency-based positive affective reactions evoked by the easiness of emotion recognition tasks.

The present study

Our primary aim in this research was to examine the role of facial mimicry for emotional contagion by treating mimicry as a mediator in the relationship between the sender’s emotional display and the receiver’s emotional experience. Second, we do not ask participants to recognise emotions expressed by the senders (instead, participants rate the senders’ social characteristics such as likeability and competence). Replacing an emotion recognition task with questions about the sender’s likeability and competence enables us to circumvent (or at least reduce) the problems related to the interference between emotion categorisation and self-reported feelings. Specifically, participants do not focus on the sender’s emotional displays (which makes emotion categories stand out) but on the overall impression of the sender (i.e. his/her physical appearance; Zebrowitz & Montepare, 2008). Of course, it is possible that social evaluations may also activate emotion categories (e.g. likeability taps into approach/avoidance), but such general categories do not overlap with discrete emotion categories we use (Mauss & Robinson, 2009). In addition, testing the effects of emotional mimicry on social evaluations provides an important extension to the facial feedback hypothesis (Kaiser & Davey, 2017).

Third, we use new stimuli to evoke emotional mimicry and contagion. Studies on emotional mimicry generally use short videos or pictures of basic emotions (e.g. Hess & Blairy, 2001; Lundqvist & Dimberg, 1995; Van der Schalk et al., 2011), whereas studies limited to emotional contagion use videos of spontaneous emotional behaviour lasting at least 30 s (e.g. Hsee et al., 1990). Based on this observation, we created movies that combine these features. Specifically, we used video clips that lasted 35 s and presented basic, unambiguous emotional expressions, thereby bridging the gap between emotional mimicry and emotional contagion research paradigms.

Fourth, our mediation analyses employ a multilevel (ML) approach, because our data, similarly to other studies on emotional mimicry, have a multilevel structure (i.e. each participant is exposed to twelve videos). The ML approach provides unbiased estimates of indirect and total effects (Bauer, Preacher, & Gil, 2006) by recognising the existence of data hierarchies and controlling for residual components that may be related due to repeated trials or differences between stimuli (e.g. facial features of different senders).

Method

Participants

A power analysis with G*Power 3.1.3 (Faul, Erdfelder, Lang, & Buchner, 2007) indicated N = 54 to detect an effect size of d = .50 (i.e. a medium effect as defined by Cohen, 1988), with a probability of 1–β = .95, α = .05, and lower-bound estimate of non-sphericity correction at ε = .075. Assuming some data loss due to technical problems with the EMG, we recruited 68 participants (48 women) who participated in the study in exchange for partial course credit and coffee vouchers (equivalent of approx. USD 5). EMG recordings of 9 participants could not be used due to technical problems (e.g. relatively high level of errors and artifacts in the signal). Thus, the EMG analyses were based on the remaining 59 participants (41 women).

Stimuli

Twelve video clips showing happiness, anger, and sadness displayed by Caucasian models (two men and two women) served as stimulus material (Wróbel & Olszanowski, 2019) (see Appendix 1).

Measures

Self-reported emotional state

We used a modified Polish version of the Differential Emotion Scale (DES; Izard, Dougherty, Bloxom, & Kotsch, 1974) to measure self-reported emotions. It contains twelve adjectives referring to anger (angry, irritated, mad), happiness (happy, cheerful, delighted), sadness (sad, downhearted, blue), and fear (anxious, fearful, tense). We decided to measure all four emotions because, as already mentioned, emotional reactions in response to emotional displays may be convergent or divergent. Participants rated the degree to which they felt these emotions using a 7-point scale ranging from not at all to extremely. Reliabilities ranged from α = .83 to α = .95.

Facial EMG

Muscle activity was measured by bipolar placement of single-use Ag/AgCl electrodes on the left side of the face. A ground electrode was attached to the middle of the forehead, directly below the hairline. We measured activity of the corrugator supercilii (which lowers the eyebrows), zygomaticus major (which pulls up lip corners), and depressor anguli oris (which lowers lip corners) and then calculated facial activity indexes specific to happiness, anger, and sadness. These indexes were based on the assumption that facial mimicry is defined as the imitation of the emotional expressions of others (that is, the presence of facial mimicry implies a pattern of facial activity in response to the emotional display of others). Put differently, mimicry cannot be analysed on a muscle-by-muscle basis but rather needs to capture the joint movement of the muscles involved in facial expressions (see Hess et al., 2017; Hess & Blairy, 2001). Specifically, for both anger and sadness, mimicry should be indexed by significantly higher levels of corrugator supercilii than zygomaticus major activity. Mimicry to happy displays should be indexed by significantly higher levels of zygomaticus major than corrugator supercilii. To capture this pattern, we calculated facial activity indexes by subtracting the activity of corrugator supercilii from the activity of zygomaticus major. Additionally, in order to differentiate between anger and sadness more precisely, we calculated an alternative facial activity index for sadness by subtracting the activity of depressor from the activity of zygomaticus major. However, given that the electrode placement for depressor is liable to pick up the EMG signal from adjacent areas (Hess et al., 2017), analyses involving sadness mimicry would rely on the more reliable facial activity index.
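The difference score described above can be illustrated with a minimal Python sketch. It assumes that the muscle scores have already been standardised per trial; the function name and the example values are hypothetical and are not taken from the study's analysis scripts.

```python
def facial_activity_index(zygomaticus, corrugator):
    """Per-epoch difference score: zygomaticus major minus corrugator supercilii.

    Positive values indicate a smile-like pattern,
    negative values a frown-like pattern.
    """
    if len(zygomaticus) != len(corrugator):
        raise ValueError("both muscles need the same number of epochs")
    return [z - c for z, c in zip(zygomaticus, corrugator)]


# Hypothetical standardised scores for three 0.5-second epochs of one trial.
index = facial_activity_index([0.5, 1.0, 1.5], [0.5, -0.5, -1.0])
print(index)  # [0.0, 1.5, 2.5]
```

The alternative sadness index would follow the same pattern with depressor anguli oris scores passed in place of the corrugator scores.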

EMG was measured using a BioPac MP150, digitised with 24-bit resolution, sampled at 2 kHz, and recorded on a PC. Offline, the signal was visually inspected for artifacts due to excessive muscle movements, filtered with a 20–400 Hz bandpass filter and a 50-Hz notch filter, and transformed into positive values using the root mean square in AcqKnowledge 5.0 software. For the analyses, the signal was averaged from a 0.5-second baseline (prior to the stimulus onset) up to 35 s of the stimulus presentation divided into 0.5-second epochs, which resulted in a baseline and 70 data points for each trial. The data were standardised within each participant and each trial, using the standard deviation of the signal over the entire 35 s of the trial and the trial baseline as the mean. Importantly, this approach to EMG data standardisation allows for treating the trial baseline as “point 0” and signal deviation as a relative change in muscle activity. As such, values not significantly different from 0 are indicative of no changes in muscle activation, whereas positive or negative values can be interpreted as a relative increase or decrease in muscle tension during the trial, respectively.
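The epoching and standardisation steps can be sketched as follows. This is only an illustration under stated assumptions: the function names are hypothetical, the standard deviation is taken over the epoch means (the text does not say whether raw samples or epoch means were used), and the population formula is assumed. Filtering and rectification are taken as already done.

```python
from statistics import mean, pstdev

SAMPLE_RATE_HZ = 2000  # sampling rate reported in the text
EPOCH_S = 0.5          # epoch length reported in the text


def epoch_means(signal, sample_rate_hz=SAMPLE_RATE_HZ, epoch_s=EPOCH_S):
    """Average a rectified EMG trace over consecutive, non-overlapping epochs."""
    n = int(sample_rate_hz * epoch_s)  # samples per epoch
    return [mean(signal[i:i + n]) for i in range(0, len(signal) - n + 1, n)]


def standardise_trial(baseline, trial_epochs):
    """Express each epoch as its distance from the baseline mean in trial-SD units.

    Assumption: the SD is computed over the epoch means of the trial
    using the population formula.
    """
    centre = mean(baseline)
    spread = pstdev(trial_epochs)
    if spread == 0:
        raise ValueError("flat signal: standard deviation is zero")
    return [(value - centre) / spread for value in trial_epochs]


# A 35-second trial sampled at 2 kHz yields 70 half-second epochs.
print(len(epoch_means([0.0] * 70_000)))            # 70
# Toy trial: baseline mean 1.0, trial SD 1.0.
print(standardise_trial([1.0, 1.0], [0.0, 2.0]))   # [-1.0, 1.0]
```

With this scaling, a value of 0 corresponds to the baseline level, so positive and negative scores read directly as relative increases or decreases in muscle activity.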

Social evaluations

To test a potential effect of facial feedback on the evaluations of the senders, participants rated each face on two scales referring to the basic dimensions of social perception, that is, communion (Does this person seem likeable?) and agency (Does this person seem competent?) (Abele & Wojciszke, 2014). Ratings were made on a 7-point scale, anchored with labels no and yes.

Procedure

The experiment was presented as a study on making judgments about other people. We informed participants that we would attach electrodes to their skin to assess cognitive and physiological effort they put in the task (see Lundqvist & Dimberg, 1995, for a similar cover story). After participants signed the informed consent forms, electrodes were attached and they were seated in front of a 30'' monitor. They watched the 12 video clips in random order. Each trial was preceded by a 2-second fixation cross and followed by the self-reported emotions scale and the two questions regarding the likeability and competence of the person shown. The videos were separated by a 10-second ITI.

Results

Emotional contagion

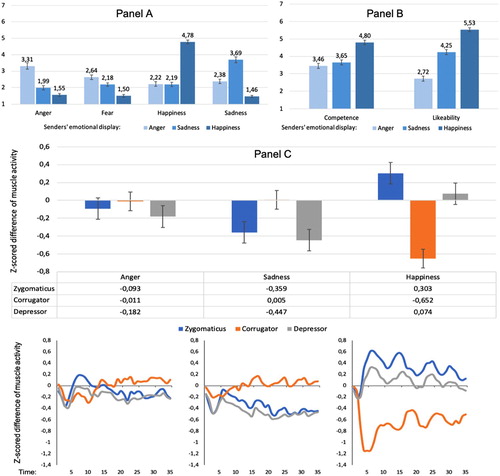

To test whether the videos elicited convergent emotions, we conducted repeated measures ANOVAs with the factor self-reported emotions (happiness, sadness, fear, anger) separately for the three emotions displayed by the senders (for means and standard errors, see Figure 1, Panel A). The analysis revealed significant main effects for anger, F(3, 67) = 11.64, p < .001, $\eta_p^2$ = .15, happiness, F(3, 67) = 393.80, p < .001, $\eta_p^2$ = .86, and sadness, F(3, 67) = 38.00, p < .001, $\eta_p^2$ = .36. Contrast comparisons (p < .05) indicated that after exposure to anger, participants reported significantly more anger than fear, happiness or sadness. Similarly, after being exposed to happy displays, participants reported significantly more happiness than anger, fear or sadness. Finally, after being exposed to sadness, participants reported feeling significantly more sadness than anger, fear and happiness.

Figure 1. Panel A – Participants’ self-reported emotions after exposure to the senders’ emotional displays. Scores range from 1 to 7 with higher scores indicating more intense self-reported emotion. Error bars represent standard errors of the mean. Panel B – Evaluations of the senders’ social characteristics as a function of their emotional displays. Scores range from 1 to 7, with higher scores indicating more favourable evaluations. Error bars represent standard errors of the mean. Panel C – Facial EMG scores for zygomaticus, corrugator and depressor muscles as a function of the senders’ emotional displays. The upper plot shows mean changes for the 35-second stimulus presentation with error bars representing standard errors of the mean. Bottom plots show mean signal across stimulus presentation time.

Facial mimicry

To examine participants’ facial reactions in response to the presented facial displays, we used multilevel modelling (MLM) with maximum likelihood (for means and standard errors see Figure 1, Panel C). The MLM analyses were performed in SPSS 25. The fixed effects structure included the sender’s emotional display (angry, sad, happy); the random effects included intercepts fit across participants (to control for the differences in muscle reactivity between them) and across faces of the senders (to control for their facial features). Our analytic strategy consisted of two steps. First, we analysed the effects of the senders’ emotional displays on the activity of each facial muscle separately, to estimate the reliability of facial EMG and to find out which facial activity index would be more reliable in the case of sadness (see Appendix 2). Second, we analysed the effects of the senders’ emotional displays on facial activity patterns (i.e. facial activity index calculated as a difference score between activation of relevant facial muscles).

The analysis confirmed that participants’ facial activity depended on the senders’ emotional expressions, F(2,649) = 26.78, p < .001. The facial activity index was higher after exposure to happy faces than after exposure to angry (M = .96; SE = .17 vs. M = −.08; SE = .17) and sad faces (M = −.36; SE = .17). Both differences were significant at p < .001. We observed no significant difference between facial activity in response to sadness and facial activity in response to anger.

Social evaluations

To assess the influence of the senders’ emotional displays on social evaluations, we conducted a 2 (social dimension: competence, likeability) by 3 (displayed emotion: anger, happiness, sadness) repeated measures ANOVA on the face evaluations (see Figure 1, Panel B). The analysis revealed a main effect of the displayed emotion, F(2,67) = 124.06, p < .001, $\eta_p^2$ = .65. Happy faces were rated as most likeable and competent, as compared to angry and sad faces. Angry faces were rated as less likeable than sad faces, but the two displays did not differ in terms of competence ratings. Moreover, likeability ratings were, in general, higher than competence ratings, F(2,67) = 5.73; p < .03, $\eta_p^2$ = .08. The interaction between social dimension and displayed emotion was also significant, F(2,67) = 51.85, p < .001, $\eta_p^2$ = .44. Whereas faces displaying happiness and sadness were rated as more likeable than competent, angry faces were rated as more competent than likeable.

Mediation

To test whether facial activity served as a causal link between the senders’ emotional displays and emotional contagion, we conducted mediation analyses using the multilevel approach recommended by Bauer et al. (2006). Mediation analyses were performed with the use of MLmed macro for SPSS (Rockwood & Hayes, 2017) with ML estimation. We treated the sender’s emotional display as the predictor (X; see Note 1), facial activity index as the mediator (M), and self-reported emotion as the dependent variable (Y). The model structure included fixed within-group effect of X, and random effects of X on Y and M, of M on Y and M intercepts.
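The coding of the predictor described in the note (the emotion of interest coded 1, all other displayed emotions coded 0) can be sketched in a few lines of Python. The function name and the example trial order are hypothetical.

```python
def dummy_code(displayed_emotions, target):
    """Return 1 for trials showing the target emotion and 0 for all other trials."""
    return [1 if emotion == target else 0 for emotion in displayed_emotions]


# Hypothetical sequence of four trials for one participant.
trials = ["happiness", "anger", "sadness", "happiness"]
print(dummy_code(trials, "happiness"))  # [1, 0, 0, 1]
print(dummy_code(trials, "anger"))      # [0, 1, 0, 0]
```

Each emotion therefore gets its own X variable and its own mediation model, with the remaining two emotions serving as the reference category.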

Table 1 provides estimates of fixed effects for the analysed paths. The analysis confirmed that exposure to the senders’ smiles predicted facial activity (i.e. the overall stronger activation of zygomaticus and reduced activity of corrugator). Further, facial activity predicted self-reported happiness. A direct link between the senders’ smiles and self-reported happiness was also significant. This direct effect was significantly reduced when the influence of facial activity was controlled for, thus confirming that the relationship between the senders’ displays of happiness and self-reported happiness can be partially explained by facial activity. In the case of the senders’ sad and angry displays, we observed a reverse facial activity pattern. Specifically, the activation of the corrugator supercilii was relatively stronger than the activation of the zygomaticus major. This activation, in turn, predicted self-reported sadness and anger. Facial activity also influenced self-reported sadness and anger directly but these direct links were significantly reduced when the indirect effects of facial activity on these self-reported emotions were controlled for.

Table 1. Mediational and indirect effects of the relationship between the sender’s emotional display and the receivers’ self-reported emotions through facial muscle activation: Estimates of fixed effects (paths a, b and c’) and indirect effects with 95% confidence intervals (computed using 10,000 Monte Carlo simulations).
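For readers unfamiliar with Monte Carlo confidence intervals for an indirect effect, the general idea can be sketched as below. This is a simplified illustration, not the MLmed procedure: it treats paths a and b as independent normal estimates and ignores the covariance between random slopes that a multilevel model would add. The function name and all numeric inputs are hypothetical and are not the study's estimates.

```python
import random


def monte_carlo_indirect_ci(a, se_a, b, se_b, draws=10_000, level=0.95, seed=0):
    """Percentile confidence interval for the product a*b from simulated draws.

    Simplifying assumption: a and b are independent and normally distributed
    around their point estimates.
    """
    rng = random.Random(seed)
    products = sorted(
        rng.gauss(a, se_a) * rng.gauss(b, se_b) for _ in range(draws)
    )
    lower = products[round(draws * (1 - level) / 2)]
    upper = products[round(draws * (1 + level) / 2) - 1]
    return a * b, lower, upper


# Hypothetical path estimates and standard errors.
point, lower, upper = monte_carlo_indirect_ci(a=0.5, se_a=0.05, b=0.4, se_b=0.05)
print(f"indirect = {point:.2f}, 95% CI [{lower:.3f}, {upper:.3f}]")
```

An interval that excludes zero is read as support for an indirect effect; the percentile approach is used because the product of two normal estimates is generally not normally distributed.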

Additional mediation analyses for social evaluations as dependent variables demonstrated that facial activity partially explained the relationship between the sender’s emotional displays and likeability and competence ratings (see Appendix 3).

Discussion

The goal of the current research was to take a new look at the old question regarding the role of facial mimicry in emotional contagion. To our knowledge, this is the first study to directly test the mediating role of mimicry for emotional contagion. Overall, our findings revealed that participants displayed and felt emotions corresponding to those of the senders. Further, and more importantly, facial activity partially explained the relationship between the observed and felt emotions, which suggests that facial mimicry may be involved in emotional contagion. Moreover, as demonstrated by our additional analyses, the effects of facial activity went beyond the receiver’s feelings and spread to social evaluations.

These results were most pronounced when participants were exposed to happiness displays. Here, the receivers reported more happiness after exposure to happy senders than after exposure to angry or sad senders. Moreover, the activation of their facial muscles clearly fit into a pattern typical of happiness expressions (i.e. the activity of zygomaticus major increased, while the activity of corrugator supercilii decreased). These results are in line with the notion that, when provided with no additional information about the senders, people typically react to smiles with matching emotional expressions because smiles, in general, signal affiliative intentions (Fischer & Hess, 2017). This refers in particular to smiles representing basic expressions of happiness because such expressions are indicative of psychological closeness and approach motivation (Rychlowska et al., 2017). What our results add to this observation is that such smiles may also elicit happy feelings in the receiver through facial muscle activity, thereby supporting the core assumption of the emotional contagion theory (Hatfield et al., 1994; Prochazkova & Kret, 2017). Moreover, smile mimicry may contribute to judging the sender as more likeable and competent.

Importantly, a similar pattern of results emerged when we analysed participants’ reactions to the senders’ anger and sadness. Here, participants also reported emotions consistent with those of the senders and their facial activity was in line with self-reported emotions. Even more crucially, those corresponding emotions were elicited through facial muscle activity. However, compared to participants’ reactions to smiles, the mediating role of facial activity in sadness and anger contagion was somewhat less clear-cut. First of all, the mediational effect for anger was marginal. The main reason for this might be that anger mimicry was relatively weak, which is in line with the general assumption that people avoid imitating non-affiliative emotional expressions (Fischer & Hess, 2017). Second, regardless of whether participants were exposed to sadness or anger, we based the analysis on the same facial activity index (i.e. the contrast between the zygomaticus major and corrugator supercilii muscles), because additional measurement of depressor activity turned out to be unreliable. Thus, although facial activity patterns were in line with general expectations, the precise differentiation between facial reactions to sadness and anger became difficult. Third, we found that neither exposure to anger nor exposure to sadness led to increased activity of the corrugator supercilii (we only observed decreased zygomaticus major activity). Therefore, although the analysis based on the difference score between the zygomaticus and corrugator muscles supports the idea that sadness and anger contagion may be triggered by facial mimicry, these results should be treated with some caution.

We should also note that regardless of which emotional expression was analysed, facial activity only partially explained the link between the senders’ emotional displays and participants’ self-reported emotions. This suggests that emotional contagion probably involves additional mediating mechanisms such as, for instance, social appraisal (Parkinson, 2011). These mechanisms may operate simultaneously with emotional mimicry. It is also possible that the impact of automatic, “reflex-like” facial activity on self-reported feelings was cushioned by reflective processing because emotional self-reports are often influenced by deliberate processes (Van der Schalk et al., 2011).

At the same time, the question remains why our results are in marked contrast to the majority of previous studies that directly tested the causal link between facial mimicry and emotional contagion and reported null findings (e.g. Hess & Blairy, 2001; Van der Schalk et al., 2011). One explanation is that, unlike previous studies, we used videos of basic emotional expressions that lasted longer than 30 s. This enabled us to integrate emotional contagion and emotional mimicry research paradigms by giving participants more time to observe the senders, which possibly made the assessment of their own feelings easier. Additionally, when testing the mimicry-contagion link, most studies relied on emotion recognition tasks that, as already mentioned, could have influenced participants’ self-reported feelings.

Taken together, our results provide an empirical demonstration of the role of facial activity in the transfer of happiness, sadness, and anger. As such, they bridge the gap between theoretical models of emotional contagion and empirical findings. We believe that these findings are of key importance because the null results reported by other studies might have discouraged some authors from further explorations of the link between facial mimicry and emotional contagion.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Funding

Notes

1 Dummy-coded to contrast the displayed emotion of interest (1) against other emotions (0), e.g. happiness was contrasted against anger and sadness.

References

- Abele, A., & Wojciszke, B. (2014). Communal and agentic content in social cognition: A dual perspective model. Advances in Experimental Social Psychology, 50, 198–255.

- Bauer, D. J., Preacher, K. J., & Gil, K. M. (2006). Conceptualizing and testing random indirect effects and moderated mediation in multilevel models: New procedures and recommendations. Psychological Methods, 11, 142–163. doi: 10.1037/1082-989X.11.2.142

- Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Hillsdale, NJ: Lawrence Erlbaum Associates.

- Elfenbein, H. A. (2014). The many faces of emotional contagion: An affective process theory for affective linkage. Organizational Psychology Review, 4, 326–362. doi: 10.1177/2041386614542889

- Epstude, K., & Mussweiler, T. (2009). What you feel is how you compare: How comparisons influence the social induction of affect. Emotion, 9(1), 1–14. doi: 10.1037/a0014148

- Faul, F., Erdfelder, E., Lang, A. G., & Buchner, A. (2007). G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39, 175–191. doi: 10.3758/BF03193146

- Fischer, A., & Hess, U. (2017). Mimicking emotions. Current Opinion in Psychology, 17, 151–155. doi: 10.1016/j.copsyc.2017.07.008

- Gawronski, B., & Bodenhausen, G. V. (2005). Accessibility effects on implicit social cognition: The role of knowledge activation and retrieval experiences. Journal of Personality and Social Psychology, 89, 672–685. doi: 10.1037/0022-3514.89.5.672

- Hatfield, E., Cacioppo, J. T., & Rapson, R. L. (1994). Emotional contagion. Cambridge: Cambridge University Press.

- Hess, U., Arslan, R., Mauersberger, H., Blaison, C., Dufner, M., Denissen, J. J., & Ziegler, M. (2017). Reliability of surface facial electromyography. Psychophysiology, 54, 12–23. doi: 10.1111/psyp.12676

- Hess, U., & Blairy, S. (2001). Facial mimicry and emotional contagion to dynamic emotional facial expressions and their influence on decoding accuracy. International Journal of Psychophysiology, 40, 129–141. doi: 10.1016/S0167-8760(00)00161-6

- Hsee, C. K., Hatfield, E., Carlson, J. G., & Chemtob, C. (1990). The effect of power on susceptibility to emotional contagion. Cognition and Emotion, 4, 327–340. doi: 10.1080/02699939008408081

- iMotions Biometric Research Platform 7.1. (2018). iMotions A/S, Copenhagen, Denmark.

- Izard, C. E., Dougherty, F. E., Bloxom, B. M., & Kotsch, N. E. (1974). The differential emotions scale: A method of measuring the subjective experience of discrete emotions. Unpublished manuscript, Vanderbilt Univ., Nashville, Tenn.

- Kaiser, J., & Davey, G. C. L. (2017). The effect of facial feedback on the evaluation of statements describing everyday situations and the role of awareness. Consciousness and Cognition, 53, 23–30. doi: 10.1016/j.concog.2017.05.006

- Lipps, T. (1907). Das Wissen von fremden Ichen. Psychologische Untersuchungen, 1, 694–722.

- Lundqvist, L.-O., & Dimberg, U. (1995). Facial expressions are contagious. Journal of Psychophysiology, 9, 203–211.

- Mauss, I. B., & Robinson, M. D. (2009). Measures of emotion: A review. Cognition and Emotion, 23, 209–237. doi: 10.1080/02699930802204677

- Olszanowski, M., Kaminska, O. K., & Winkielman, P. (2018). Mixed matters: Fluency impacts trust ratings when faces range on valence but not on motivational implications. Cognition and Emotion, 32, 1032–1051. doi: 10.1080/02699931.2017.1386622

- Olszanowski, M., Pochwatko, G., Kuklinski, K., Scibor-Rylski, M., Lewinski, P., & Ohme, R. (2015). Warsaw set of emotional facial expression pictures: A validation study of facial display photographs. Frontiers in Psychology, 5, 1516. doi: 10.3389/fpsyg.2014.01516

- Parkinson, B. (2011). Interpersonal emotion transfer: Contagion and social appraisal. Social and Personality Psychology Compass, 5, 428–439. doi: 10.1111/j.1751-9004.2011.00365.x

- Prochazkova, E., & Kret, M. E. (2017). Connecting minds and sharing emotions through mimicry: A neurocognitive model of emotional contagion. Neuroscience and Biobehavioral Reviews, 80, 99–114. doi: 10.1016/j.neubiorev.2017.05.013

- Rockwood, N. J., & Hayes, A. F. (2017, May). MLmed: An SPSS macro for multilevel mediation and conditional process analysis. Poster presented at the annual meeting of the Association of Psychological Science (APS), Boston, MA.

- Rychlowska, M., Jack, R. E., Garrod, O. G. B., Schyns, P. G., Martin, J. D., & Niedenthal, P. M. (2017). Functional smiles: Tools for love, sympathy, and war. Psychological Science, 28, 1259–1270. doi: 10.1177/0956797617706082

- Rymarczyk, K., Biele, C., Grabowska, A., & Majczynski, H. (2011). EMG activity in response to static and dynamic facial expressions. International Journal of Psychophysiology, 79, 330–333. doi: 10.1016/j.ijpsycho.2010.11.001

- Sato, W., Fujimura, T., Kochiyama, T., & Suzuki, N. (2013). Relationships among facial mimicry, emotional experience, and emotion recognition. PLoS One, 8, e57889. doi: 10.1371/journal.pone.0057889

- Söderkvist, S., Ohlén, K., & Dimberg, U. (2018). How the experience of emotion is modulated by facial feedback. Journal of Nonverbal Behavior, 42, 129–151. doi: 10.1007/s10919-017-0264-1

- Stöckli, S., Schulte-Mecklenbeck, M., Borer, S., & Samson, A. C. (2018). Facial expression analysis with AFFDEX and FACET: A validation study. Behavior Research Methods, 50, 1446–1460. doi: 10.3758/s13428-017-0996-1

- Tassinary, L. G., Cacioppo, J. T., & Vanman, E. J. (2007). The skeletomotor system: Surface electromyography. In J. T. Cacioppo, L. G. Tassinary, & G. G. Berntson (Eds.), Handbook of psychophysiology (pp. 267–299). New York, NY: Cambridge University Press. doi: 10.1017/CBO9780511546396.012

- Van der Schalk, J., Fischer, A., Doosje, B., Wigboldus, D., Hawk, S., Rotteveel, M., & Hess, U. (2011). Convergent and divergent responses to emotional displays of ingroup and outgroup. Emotion, 11, 286–298. doi: 10.1037/a0022582

- Weyers, P., Muhlberger, A., Hefele, C., & Pauli, P. (2006). Electromyographic responses to static and dynamic avatar emotional facial expressions. Psychophysiology, 43, 450–453. doi: 10.1111/j.1469-8986.2006.00451.x

- Wróbel, M., & Olszanowski, M. (2019). Emotional reactions to dynamic morphed facial expressions: A new method to induce emotional contagion. Annals of Psychology. doi: 10.18290/rpsych.2019.22.1-6

- Zebrowitz, L. A., & Montepare, J. M. (2008). Social psychological face perception: Why appearance matters. Social and Personality Psychology Compass, 2, 1497–1517. doi: 10.1111/j.1751-9004.2008.00109.x

Appendices

Appendix 1

The videos were created using FantaMorph 5.0 software, based on pictures taken from the Warsaw Set of Emotional Facial Expression Pictures (WSEFEP; Olszanowski et al., 2015). We used morphing because this technique allows for good control of the duration and intensity of emotional expressions, even though the videos it produces may look somewhat unnatural. This reduced naturalness, however, does not challenge stimulus validity, because emotional mimicry has been shown to occur in response to not only real faces but also avatars (e.g. Weyers, Muhlberger, Hefele, & Pauli, 2006) or still pictures (Rymarczyk, Biele, Grabowska, & Majczynski, 2011).

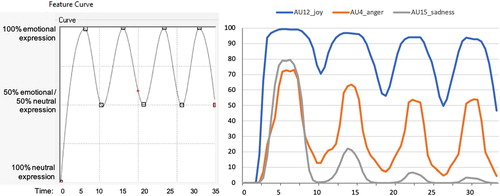

When selecting the photographs, we relied on the FACS scores for AU12 (lip corner puller), AU15 (lip corner depressor), and AU4 (brow lowerer) as well as the ratings of independent judges provided for each picture. The emotion expressions (anger, sadness, and happiness) were morphed with each model’s neutral (calm) face to create dynamic facial displays changing from neutral to emotional. Each video lasted 35 s and started with a 2-s still image of a neutral face that changed gradually to an emotional one within 3 s. After reaching the apex (i.e. full emotional display), the face returned to a blended expression (50% neutral and 50% emotional) over 3 s and then changed to emotional again, reaching the apex after another 3 s. Such transformations were repeated four times. The video finished with a still image of a blended face. The exact morphing sequence is shown in the figure above (left panel).
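The morphing timeline described above can be sketched as a piecewise-linear intensity curve. This is only an illustration: the linear interpolation between keyframes, the exact keyframe times after the first apex, and the length of the final hold on the blended face are assumptions chosen to fit the 35-s duration, and the names `KEYFRAMES` and `emotion_intensity` are hypothetical.

```python
from bisect import bisect_right

# (time in seconds, proportion of the emotional display) keyframes.
# The 2-s neutral start, 3-s transitions, 50% blended face and four apexes
# follow the description in the text; the final hold up to 35 s is assumed.
KEYFRAMES = [
    (0.0, 0.0),   # still neutral face
    (2.0, 0.0),   # morphing starts
    (5.0, 1.0),   # first apex
    (8.0, 0.5),   # blended face
    (11.0, 1.0),  # second apex
    (14.0, 0.5),
    (17.0, 1.0),  # third apex
    (20.0, 0.5),
    (23.0, 1.0),  # fourth apex
    (26.0, 0.5),  # back to the blended face
    (35.0, 0.5),  # assumed still blended image until the end
]


def emotion_intensity(t: float) -> float:
    """Linearly interpolate the emotional-display proportion at time t (s)."""
    times = [k[0] for k in KEYFRAMES]
    if t <= times[0]:
        return KEYFRAMES[0][1]
    if t >= times[-1]:
        return KEYFRAMES[-1][1]
    i = bisect_right(times, t) - 1          # index of the segment containing t
    (t0, v0), (t1, v1) = KEYFRAMES[i], KEYFRAMES[i + 1]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


# Sample the curve once per second, e.g. to plot it next to AU scores.
curve = [emotion_intensity(float(s)) for s in range(36)]
```

A curve of this kind can serve as the reference signal when checking whether automatically scored AU activity tracks the intended intensity of the display.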

In total, we produced twelve videos (4 senders × 3 emotional expressions). The videos were pre-tested (n = 5) to check whether viewers realised that the frames between the neutral faces and the full emotional displays were digitally generated (none of the viewers was aware of it). Moreover, to ensure that facial actions remained visible after morphing and that the videos showed the activity of critical facial action units (AUs), all videos were analysed using iMotions 7.0 software (2018) equipped with AFFDEX algorithms (Stöckli, Schulte-Mecklenbeck, Borer, & Samson, 2018). The analysis confirmed that the changes in the main AUs of interest were visible and that the morphed faces displayed the intended emotions (see the figure above, right panel). We should note, though, that although the displays reached exactly the same apex (i.e. 100% of emotional display) four times, consecutive peaks and troughs obtained relatively lower scores. This inconsistency may be attributed to the limitations of the algorithm-based analysis performed by iMotions. Its neural network compares relative positions of 34 facial landmarks (e.g. brows, lips) within one second of the video. As a result, slower changes or changes that start when an emotion is already partially displayed might not be accurately detected by the software (especially when these changes are rather subtle, as in the case of sadness).

The videos are available at: https://www.emotional-face.org.

Appendix 2

The MLM procedure used a fixed effects structure that included the sender’s emotional display (3 levels: angry vs. sad vs. happy), while random effects included intercept fit across participants (to control for the differences in muscle reactivity between them) and faces of the senders (to control for their facial features).

Analysis of zygomaticus major muscle activation revealed a significant effect of emotional display, F(2, 649) = 12.08, p < .001. More specifically, exposure to smiling senders resulted in higher activity of the zygomaticus muscle than exposure to angry senders (p < .005) and sad senders (p < .001). Moreover, zygomaticus activation in response to anger was slightly higher than in response to sadness (p < .05). Additionally, we performed one-sample t-tests comparing the averaged signal value against 0 to see whether muscle activity increased or decreased relative to its initial tension (i.e. prior to the trial). The analysis revealed that zygomaticus activation increased while participants watched happy faces (t(58) = 2.27, p < .03, d = .29) and decreased when participants watched sad faces (t(58) = -2.65, p < .01, d = .35). Zygomaticus activity in response to angry faces was not significantly different from 0 (t(58) = -0.76, p = .45, d = .10).
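The one-sample tests against 0 reported here can be illustrated with a minimal sketch. It assumes that Cohen's d is computed as the absolute mean divided by the sample standard deviation, in which case d = |t| / √n. With n = 59 (df = 58), the sad-face result gives 2.65 / √59 ≈ .35, which agrees with the reported value. The data and the function name `one_sample_t` are hypothetical.

```python
import math
import statistics


def one_sample_t(values, mu=0.0):
    """Return (t, df, d) for a one-sample t-test of the mean against mu."""
    n = len(values)
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)            # sample SD (n - 1 denominator)
    t = (mean - mu) / (sd / math.sqrt(n))
    d = abs(mean - mu) / sd                  # Cohen's d, reported as a magnitude
    return t, n - 1, d


# Hypothetical baseline-corrected activity scores, one per participant.
scores = [0.4, -0.1, 0.3, 0.2, -0.2, 0.5, 0.1, 0.0, 0.3, -0.1]
t, df, d = one_sample_t(scores)
print(f"t({df}) = {t:.2f}, d = {d:.2f}")
```

A positive t indicates activity above the pre-trial level and a negative t indicates activity below it, while d expresses the size of the change regardless of its direction.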

The corrugator supercilii muscle activation also differed across the senders’ emotional displays, F(2, 649) = 16.99, p < .001. Exposure to happy faces resulted in the lowest activation of the corrugator muscle, as compared to exposure to angry (p < .001) and sad faces (p < .001). Additionally, one-sample t-tests showed that when participants were exposed to smiles, the activity of the corrugator muscle decreased during stimulus presentation (t(58) = -5.34, p < .001, d = .70), while for angry and sad faces, there was no significant change from baseline (t(58) = -0.11, p = .91, d = .01 and t(58) = 0.04, p = .96, d = .01, respectively).

Depressor activation also differed across the senders’ emotional displays, F(2, 649) = 7.67, p < .002. Here, the lowest activation was observed for sad faces as compared to angry faces (p < .05) and, marginally, happy faces (p = .055). One-sample t-tests demonstrated that depressor activity was significantly reduced in response to sad faces (t(58) = -3.11, p < .004, d = .41). We found no change in overall depressor activity in response to angry and happy faces (t(58) = -1.59, p = .12, d = .21 and t(58) = 0.56, p = .58, d = .07, respectively). This pattern of results was somewhat atypical and inconsistent with previous research (i.e. depressor activity should increase rather than decrease in response to sadness displays; Tassinary, Cacioppo, & Vanman, 2007). Moreover, as shown in Panel C, this pattern closely mirrored the one observed for zygomaticus major, which suggests crosstalk between depressor and zygomaticus. Therefore, we decided to use the difference score between zygomaticus and corrugator as a facial activity index for sadness.

Appendix 3

We conducted a series of mediation analyses with social evaluations as dependent variables. Specifically, we tested for an indirect effect of the facial activity index on likeability and competence ratings. First, we regressed the facial activity index onto the senders’ emotional displays, which were dummy-coded to contrast the displayed emotion of interest (1) against the other emotions (0), e.g. happiness was contrasted against anger and sadness (path a). Next, we regressed competence and likeability evaluations onto the facial activity index (path b). Finally, we regressed competence and likeability evaluations onto the senders’ emotional displays, examining both direct (path c) and indirect effects (path c’). The results supported the notion that facial activity mediates the relationship between the sender’s emotional display and likeability and competence ratings. Specifically, a more smile-like pattern of muscle activation in response to the senders’ emotional displays partially explained higher likeability and competence ratings.
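The logic of these regression steps can be illustrated with a simplified, single-level sketch. It uses ordinary least squares on made-up data and ignores the multilevel structure of the actual analyses, so it is not the procedure used in the study. All variable names and values are hypothetical, and the indirect effect is computed as the product a × b.

```python
import statistics


def _centered(v):
    m = statistics.fmean(v)
    return [x - m for x in v]


def _dot(u, v):
    return sum(p * q for p, q in zip(u, v))


def slope(x, y):
    """OLS slope of y regressed on a single predictor x (with intercept)."""
    xc, yc = _centered(x), _centered(y)
    return _dot(xc, yc) / _dot(xc, xc)


def slopes2(x, m, y):
    """OLS slopes of y regressed on x and m together (with intercept)."""
    xc, mc, yc = _centered(x), _centered(m), _centered(y)
    sxx, smm, sxm = _dot(xc, xc), _dot(mc, mc), _dot(xc, mc)
    sxy, smy = _dot(xc, yc), _dot(mc, yc)
    det = sxx * smm - sxm ** 2               # must be non-zero
    return (smm * sxy - sxm * smy) / det, (sxx * smy - sxm * sxy) / det


# Hypothetical data: 1 = happy display, 0 = angry or sad display.
display = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
# Hypothetical facial activity index (higher = more smile-like pattern).
index = [0.8, 0.5, 0.9, 0.6, 0.1, -0.2, 0.0, 0.2, -0.1, 0.1, -0.3, 0.0]
# Hypothetical likeability ratings.
liking = [5.5, 4.8, 6.0, 5.0, 3.9, 3.2, 3.5, 4.1, 3.0, 3.8, 2.9, 3.6]

a = slope(display, index)                    # display -> facial activity
total_effect = slope(display, liking)        # display -> rating, no mediator
direct_effect, b = slopes2(display, index, liking)  # both predictors at once
indirect_effect = a * b                      # part carried by facial activity

print(f"a = {a:.3f}, b = {b:.3f}")
print(f"total = {total_effect:.3f}, direct = {direct_effect:.3f}, "
      f"indirect = {indirect_effect:.3f}")
```

In this single-level linear case the total effect equals the sum of the direct and indirect effects, so partial mediation shows up as a direct effect that is smaller than the total effect but still different from zero.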