ABSTRACT

Accurate perception of the emotional signals conveyed by others is crucial for successful social interaction. Such perception is influenced not only by sensory input, but also by the knowledge we have about other people’s emotions. This study addresses whether knowing that another person’s emotional state is congruent or incongruent with their displayed emotional expression (“genuine” and “fake”, respectively) affects the neural mechanisms underpinning the perception of their facial emotional expressions. We used a visual adaptation paradigm to investigate this question in three experiments employing increasing adaptation durations. The adapting stimuli were photographs of facial expressions of joy and anger, displayed by professional actors and purported to reflect congruency or incongruency between felt and expressed emotion. A Validity checking procedure ensured that participants had correct knowledge about this (in)congruency. Following all tested adaptation periods, adaptation aftereffects were significantly smaller when participants knew that the displayed expression was incongruent with the felt emotion. This study shows that knowledge about the congruency between felt and expressed emotion modulates facial expression aftereffects. We argue that this reflects the fact that the neural substrate responsible for the perception of facial expressions of emotion incorporates the presumed felt emotion underpinning the expression.

Can we perceive a facial expression at face value, or do prior knowledge about the person’s emotional state of mind, or contextual cues, affect how we perceive their expression? There are indications that (social) contextual information can influence facial expression perception (e.g. Bublatzky et al., 2020; Wieser & Brosch, 2012). For example, emotional attributions induced by the dynamics of a perceived facial expression alter the perception of that expression (Jellema et al., 2011; Palumbo & Jellema, 2013; Palumbo et al., 2015). These findings resonate with a longstanding debate on the “purity” of perception and the influence of cognition on perceptual experience (see Firestone & Scholl, 2016, for a discussion).

Humans have developed complex neural systems for perceiving and understanding socially meaningful stimuli. Traditionally, models of social perception suggested that these systems operate in a unidirectional, hierarchical, bottom-up fashion (Adolphs, 2009; Allison et al., 2000), in which information provided by the social stimulus is fed forward to higher-level networks that activate knowledge-related cognitive processes. In this view, the cognitive processes are informed by the lower-level perceptual processes but do not feed back to alter perception. More recent findings, however, have challenged this view. For instance, people often update their perceptions and interpretations of facial expressions based on situational context and real-time information (e.g. Hudson & Jellema, 2011; Teufel et al., 2009), their own bodily state (i.e. embodied simulation; Gallese, 2007), and prior conceptual knowledge (e.g. beliefs about the observed agent; Niedenthal et al., 2010). Integrating such perceptual, somatosensory and conceptual information contributes to an accurate interpretation of the observed behaviour (Krumhuber et al., 2019).

Genuine and faked expressions

Accurate interpretation of an expressed emotion, such as being able to tell whether it matches a person’s underlying emotion (a genuine expression) or not (a faked expression), is an important social tool. These two types of expression carry very different meanings and induce different social responses. Whereas genuine expressions signal the agent’s underlying mental and emotional states, faked expressions do not, and may be used to steer social exchanges by eliciting a desired behaviour in others (e.g. Cole, 1986; Rychlowska et al., 2017). Faked expressions may therefore elicit a very different affective response in the observer, and trigger a different appraisal, than genuine expressions (Niedenthal et al., 2010). Because the function of a faked expression is usually to convince the observer that the expressed emotion is actually felt, faked expressions are often convincing reproductions of genuine ones, which makes judging their authenticity difficult (Bartlett et al., 2014; Calvo et al., 2012; Dawel et al., 2015). Despite many morphological and physical similarities between the two types of expression, subtle differences between them provide cues to authenticity. For example, symmetry (Ekman et al., 1981), regularity (Hess et al., 1989), intensity (Gunnery et al., 2013; Hess et al., 1995) and the presence of physical signs of arousal (Levenson, 2014) have been identified as factors differentiating static displays of genuine and faked expressions, with genuine expressions showing more of each than faked ones.

Adaptation studies

One way in which the influence of contextual factors on basic perceptual processes has been studied is the visual adaptation paradigm (De La Rosa et al., 2014). Visual adaptation is widely used to probe the mechanisms underlying the perception of visual stimuli (Fox & Barton, 2007; Leopold et al., 2001; Webster, 2011, 2015; Webster et al., 2004). Adaptation results from prolonged exposure to a specific stimulus and can bias, as well as enhance, the perception of subsequent stimuli (Barraclough et al., 2016; Clifford et al., 2007). Such perceptual biases are referred to as aftereffects; perception is typically biased towards the opposite of the adapted stimulus. Adaptation aftereffects are usually interpreted as reflecting the sensitivity of the underlying perceptual processing mechanisms to the manipulated stimulus properties, in line with electrophysiological recordings of units selectively sensitive to the adapting stimulus (Barraclough et al., 2009). The characteristics of such aftereffects allow the properties of the visual mechanisms that process complex social stimuli, including facial expressions, to be studied (Campbell & Burke, 2009; Engell et al., 2010; Fox & Barton, 2007).

The current study

The current study explored whether prior knowledge about whether another person’s facial emotional expression matches, or does not match, their presumed emotion influences the perception of that expression. When there is such a match we call the expression “genuine”; when there is no match we call it “fake”. We chose to investigate this question using a visual adaptation paradigm, because such paradigms are well suited to examining the influence of higher-level factors on perceptual processes (e.g. Teufel et al., 2009). In both genuine and fake happy expressions the mouth forms a U-shape, whilst in both genuine and fake angry expressions the eyebrows are lowered and the mouth forms an inverted U-shape. From a low-level perceptual perspective, adaptation should therefore produce similar results in the genuine and fake conditions, provided the expressions are of equal expressive intensity. This scenario therefore lends itself well to assessing whether the observer’s prior knowledge about the actor’s emotion influences the mechanisms involved in perceiving the actor’s facial expression.

We reasoned that if the neural substrate for the perception of emotional facial expressions incorporates knowledge about the underlying emotion, then this knowledge should affect visual adaptation to facial expressions. More specifically, we hypothesised that adaptation to “faked” expressions of happiness and anger would result in smaller aftereffects than adaptation to “genuine” expressions, as an agent displaying faked happiness is most likely not happy and an agent displaying faked anger is most likely not angry. Given that the faked expressions used in this study, if anything, showed more extreme facial articulations than their genuine counterparts, such a result would be hard to explain by purely low-level perceptual adaptation. Rather, it would suggest that the neural substrate subserving facial expression perception is modulated by knowledge of the actors’ emotion.

We tested this premise with three adaptation durations, as it is well documented that perceptual adaptation is influenced by the duration of the adapting stimulus, with stronger aftereffects following longer adaptation (e.g. Leopold et al., 2005; Wincenciak et al., 2016). This is a key manipulation of the study. In contrast, little is known about the strength and time course of modulatory effects on the perceptual processing of social stimuli (e.g. Teufel et al., 2009; Teufel, Alexis, et al., 2010; Teufel, Fletcher, et al., 2010; Wiese et al., 2012). In Experiment 1, the adaptation period was 500 ms; such brief adaptation has been shown to induce perceptual aftereffects for various facial characteristics (e.g. Fang et al., 2007; Kovacs et al., 2007). In Experiment 2, we employed a longer, more typical adaptation duration, with the adapting stimulus displayed for 5 s (Leopold et al., 2005). Longer adaptation durations generally lead to stronger and more robust aftereffects (Leopold et al., 2005; Rhodes et al., 2011). In Experiment 3, we further increased the duration of the adapting stimulus to 8 s.

Judging the authenticity of an expression can, however, be difficult, especially when exposure duration is limited (Calvo et al., 2013). Given that genuine and faked expressions share similar physical characteristics, both types of expression might be expected to elicit typical, repulsive aftereffects following a brief presentation to naïve participants (i.e. participants without prior knowledge of whether an expression is “genuine” or “faked”). We therefore ensured that participants had correct knowledge about the (in)congruency between each actor’s felt and expressed emotion by inserting a brief Validity checking procedure at the start of each trial, directly preceding the adaptation phase.

Methods

Participants

A total of 61 undergraduate students from the University of Hull took part in the experiments. Six participants failed to comply with the task requirements and were excluded from the analysis (see Results section for details), resulting in the following final samples: Experiment 1: 18 participants (12 females, 6 males, age M = 21.7 years, SD = 3.4); Experiment 2: 20 participants (16 females, 4 males, age M = 21.8 years, SD = 6.4); Experiment 3: 17 participants (13 females, 4 males, age M = 19.3 years, SD = 0.7). All participants had normal or corrected-to-normal vision, were naïve to the purpose of the study and provided written consent prior to the experiment. Participants received course credits for taking part. The study was approved by the Ethics Committee of the Department of Psychology, University of Hull, and was performed in accordance with the ethical standards laid down in the 1990 Declaration of Helsinki.

Stimuli

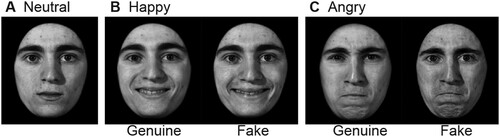

Stimuli consisted of custom-made digital colour photographs of neutral facial expressions, and of “genuine” and “faked” facial expressions of happiness and anger, posed by four professional actors (2 females and 2 males, aged between 20 and 30 years; all members of the Accademia “Arvamus” in Rome, Italy). The actors were trained in the Stanislavski technique (Gosselin et al., 1995; Stanislawski, 1975), which helps an actor recall the emotions and circumstances needed for a role and thereby induces psychological processes such as emotional experiences and subconscious behaviour. However, although the method aims to produce “authentic” facial emotional expressions, it should be stressed that the resulting facial expressions were still deliberate and posed, and should not be confused with facial expressions that occur when one responds spontaneously to an emotion-eliciting stimulus.

For the faked expressions, actors followed the procedure described by Duclos and Laird (2001). For faked happy expressions, actors were first trained to relax the muscles around the eyes, as in the neutral expression, to avoid the natural contraction of the orbicularis oculi. They were asked to maintain this pose for 15 s while lifting the lip corners to smile. In contrast to faked happy expressions, there is virtually no literature on the features of faked expressions of anger (see Dawel et al., 2017, for a discussion). The actors were trained to display faked anger by clenching their teeth and pressing the upper lip against the lower lip, while relaxing the muscles above the eyes to avoid the natural contraction of the depressor supercilii (which lowers the eyebrows and pulls them together); this resulted in reduced squinting of the eyes. They were again asked to maintain this pose for 15 s.

The main reason for choosing happiness and anger, rather than happiness and sadness, as the adapting emotions is that happiness and anger (i) are both approach-oriented (whereas sadness is avoidance-oriented), (ii) have clearly positive and negative valence, respectively, and (iii) have commonly portrayed faked versions. According to the circumplex model of affect (Russell, 1980), which represents emotions as values on the continuous dimensions of arousal and pleasantness, happiness and anger are similar in arousal (with sadness evoking less arousal) but differ maximally in pleasantness. Furthermore, the extent to which their distinctive physical features differ geometrically from the neutral test expression is fairly similar, whereas the sadness expression is considerably less expressive (Calvo & Marrero, 2009).

All expressions were photographed at peak intensity. The images were then edited in Adobe Photoshop (Adobe Systems, San Jose, California, U.S.A.) to align them horizontally, equalise luminance and contrast, and convert them to greyscale bitmaps. Finally, a black oval mask covering any external facial features was applied to the images (Figure 1).

Figure 1. Example of stimuli used for the adaptation experiments. (A) Neutral expression, (B) Happy expression, genuine and faked, (C) Angry expression, genuine and faked.

Calibration of the genuine and faked adaptation stimuli

Since the genuine and fake expressions – of either happiness or anger – of each identity were physically different, it was important to ensure that any properties other than the “authenticity” of the portrayed emotion could not explain differences in adaptation magnitude we might find between genuine and fake expressions. Two crucial properties in this respect are the perceived intensity and the low-level features of the expressions.

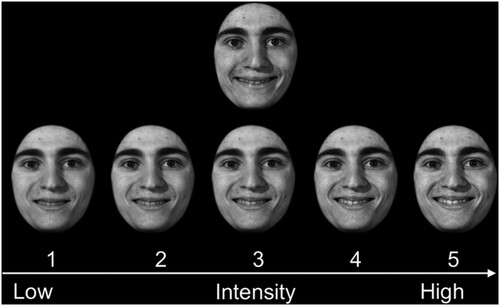

Perceived intensity. Genuine expressions may be perceived as more intense than faked expressions (Gunnery et al., 2013; Gunnery & Ruben, 2016; Hess et al., 1995; Zloteanu et al., 2018). As adaptation aftereffects depend on the strength of the adapting stimulus (Webster, 2011, 2015), differences in perceived intensity could affect the magnitude of the aftereffects. We therefore matched the genuine and faked expressions of happiness and anger for perceived intensity in a separate experiment. Twenty-five participants (21 females, 4 males; age M = 20.8 years, SD = 3.7) who did not take part in the adaptation experiments were presented with a target face in the top row, showing a faked expression at peak intensity (100%), and five test faces in the bottom row. The test faces were morphs, created with Sqirlz Morph 2.0, between the original genuine expression (peak, 100%) and a neutral expression. Their intensity ranged from 60% (a morph containing 60% expression and 40% neutral) to 100% (the unmorphed genuine expression) in 10% steps, and they were arranged in order of increasing intensity from left to right (Figure 2). Participants were asked to choose the test face that matched the intensity of the target face by pressing the corresponding number on the keyboard. Stimuli remained on the screen until a response was made, and trials were separated by 5-s inter-trial intervals. Participants completed 8 trials in total (4 actors × 2 emotions). On average, the 100% faked angry expression was judged to be of similar intensity to the 78% (SD = 0.9%) genuine angry expression, and the 100% faked happy expression to the 81% (SD = 2.1%) genuine happy expression.
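The mapping from response keys to morph intensities, and the averaging that yields matched values such as 78% or 81%, can be sketched as follows. This is a minimal illustration under stated assumptions: the key-to-position mapping follows the left-to-right layout described above, all helper names are hypothetical, and the weights describe only the nominal expression/neutral proportions of a morph (morphing software also warps facial geometry, which a simple weighted blend does not capture).

```python
from statistics import mean

# Assumed mapping: test faces shown left to right are answered with keys 1-5,
# i.e. {1: 60, 2: 70, 3: 80, 4: 90, 5: 100} percent genuine expression.
MORPH_LEVELS = {key: 60 + 10 * (key - 1) for key in range(1, 6)}


def key_to_intensity(key: int) -> int:
    """Percentage of the genuine expression in the chosen test morph (hypothetical helper)."""
    if key not in MORPH_LEVELS:
        raise ValueError(f"response key must be 1-5, got {key}")
    return MORPH_LEVELS[key]


def matched_intensity(responses: list[int]) -> float:
    """Mean genuine-morph intensity judged equal to the 100% faked target."""
    return mean(key_to_intensity(k) for k in responses)


def mix_weights(intensity_pct: float) -> tuple[float, float]:
    """Nominal (expression, neutral) proportions of a morph, e.g. 60% -> (0.6, 0.4)."""
    w = intensity_pct / 100
    return w, 1 - w
```

For instance, hypothetical responses of 3, 3, 4, 2 and 3 for one actor would average to a matched intensity of 80%.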

Figure 2. Expressive intensity matching task. Illustration of a single trial. Top face displays a faked expression of happiness at maximum intensity (100%). Bottom images represent morphs ranging from 60% Happiness and 40% Neutral (1) to 100% Happiness and 0% Neutral (5), in steps of 10%. Participants had to select the face from the bottom row that matched the top row face in expressive intensity.

For each actor (n = 4) and each expression, the genuine expression morphs perceived to be as intense as the 100% faked expressions were selected for the adaptation experiments. The faked adapting stimuli were always the 100% intensity expressions. For examples of genuine and fake stimuli matched for intensity, see Figure 1.

This procedure ensured that, if anything, our faked expressions would be more intense than the genuine ones. Had the faked expressions been less intense than the genuine ones, that difference alone could have produced the smaller aftereffects for faked expressions predicted by our main hypothesis. By using maximally intense faked expressions and matching them against a range of genuine expressions of varying intensity, we adopted a conservative approach that avoids this risk.

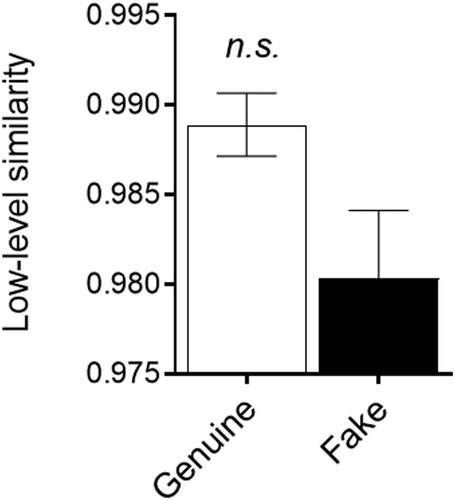

Low-level properties. The facial expressions selected in the perceptual calibration procedure were next subjected to a GIST analysis (Oliva & Torralba, 2001) to check whether, for each identity, the difference in low-level properties between the fake and neutral expressions was similar to that between the genuine and neutral expressions. The GIST descriptor measures the energy of each image through filters at four spatial frequencies, each with eight orientations (32 filters), across sixteen (4 × 4) spatial locations, producing 512 values that describe each image. First, we validated the ability of GIST descriptors to capture the physical differences between faces by comparing the low-level similarity among face images of the same identity with that among face images of different identities. Images of the same identity were significantly more similar in their low-level properties than images of different identities (t(48) = 5.57, p < 0.001). We then computed the GIST descriptors of each neutral, genuine and fake expression, and correlated the descriptor of each neutral face with those of the corresponding genuine and fake expression faces (a higher correlation indicating greater low-level similarity). Finally, we compared the magnitude of low-level similarity between neutral and genuine expressions with that between neutral and fake expressions (Figure 3). The difference was not significant (genuine and neutral: M = 0.99, SD = 0.005; fake and neutral: M = 0.98, SD = 0.01; t(14) = 2.06, p = 0.059), although numerically the fake expressions differed more from the neutral expressions than the genuine expressions did.
Thus, if anything, the fake expressions should induce larger adaptation aftereffects on the basis of their low-level properties. Given our hypothesis that faked expressions induce smaller adaptation aftereffects, selecting these stimuli meant that we adopted a conservative approach to the role of top-down modulation of face emotion aftereffects.
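To make the 512-value descriptor and the correlation-based similarity concrete, here is a simplified GIST-like sketch using NumPy. It is not Oliva and Torralba’s reference implementation (which also prefilters the image and uses its own filter parameters); the log-Gabor bandwidth, the centre frequencies and all function names are illustrative assumptions. What it does reproduce is the structure described above: 4 scales × 8 orientations = 32 filters, each summarised over a 4 × 4 grid, giving 32 × 16 = 512 values, with similarity taken as the Pearson correlation between two descriptors.

```python
import numpy as np

N_SCALES, N_ORIENTATIONS, GRID = 4, 8, 4  # 32 filters, 16 spatial cells -> 512 values


def filter_bank(size: int) -> list[np.ndarray]:
    """Frequency-domain log-Gabor-style filters, one per (scale, orientation).
    Bandwidths and centre frequencies are illustrative assumptions."""
    fy = np.fft.fftfreq(size)[:, None]   # cycles/pixel along rows
    fx = np.fft.fftfreq(size)[None, :]   # cycles/pixel along columns
    radius = np.hypot(fx, fy)
    radius[0, 0] = 1e-6                  # avoid log(0) at the DC component
    theta = np.arctan2(fy, fx)
    bank = []
    for s in range(N_SCALES):
        f0 = 0.25 / 2**s                 # centre frequency halves at each coarser scale
        radial = np.exp(-np.log(radius / f0) ** 2 / (2 * np.log(0.55) ** 2))
        for o in range(N_ORIENTATIONS):
            # angular distance to this filter's orientation, wrapped to (-pi, pi]
            d = np.angle(np.exp(1j * (theta - o * np.pi / N_ORIENTATIONS)))
            angular = np.exp(-d**2 / (2 * (np.pi / N_ORIENTATIONS) ** 2))
            bank.append(radial * angular)
    return bank


def gist_descriptor(image: np.ndarray) -> np.ndarray:
    """512-value GIST-like descriptor of a square greyscale image."""
    size = image.shape[0]
    if image.shape != (size, size) or size % GRID:
        raise ValueError("image must be square with a side divisible by 4")
    spectrum = np.fft.fft2(image - image.mean())
    cell = size // GRID
    features = []
    for h in filter_bank(size):
        energy = np.abs(np.fft.ifft2(spectrum * h))            # response magnitude
        cells = energy.reshape(GRID, cell, GRID, cell).mean(axis=(1, 3))  # 4 x 4 means
        features.append(cells.ravel())
    return np.concatenate(features)


def low_level_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Pearson correlation between two images' descriptors (higher = more similar)."""
    return float(np.corrcoef(gist_descriptor(a), gist_descriptor(b))[0, 1])
```

In the design above, each neutral face would be compared with its genuine and its fake counterpart via `low_level_similarity`, and the two sets of correlations compared statistically.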

Figure 3. Mean (± 1SEM) similarity in low-level properties between neutral and genuine expressions (Genuine) and between neutral and fake expressions (Fake), n.s. p > 0.05.

Morphological properties. To further assess the morphological characteristics of the authentic and faked expressions, we extracted the facial Action Units (AUs) associated with expressions of happiness and anger using OpenFace 2.0 (Baltrušaitis et al., 2016, 2018). Any facial expression can be represented as a combination of facial AUs (Ekman & Friesen, 1978; Ekman et al., 2002). OpenFace detects the presence and intensity of such AUs using computer vision and machine learning algorithms. The AUs indicative of genuine expressions are generally well defined (e.g. Ekman et al., 1981; Hess & Kleck, 1990). A genuine smile involves the activation of AU12 (lip corner puller; zygomaticus major) and AU6 (cheek raiser; orbicularis oculi), whereas a faked smile involves the activation of AU12 but reduced activation of AU6. Genuine anger involves the activation of AU4 (brow lowerer; depressor supercilii, corrugator), AU5 (upper lid raiser; levator palpebrae superioris), AU7 (lid tightener; orbicularis oculi, pars palpebralis) and AU23 (lip tightener; orbicularis oris). However, the AUs indicative of posed anger are less well defined, and to our knowledge there are no reliable AU markers that differentiate genuine from posed anger. The results, aggregated across the four actor identities, are presented in Table 1.
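Aggregating AU presence and intensity across the four actors can be sketched as below. This is a hedged illustration, not the authors’ pipeline: it assumes OpenFace-style CSV columns named like `AU12_c` (presence, 0/1) and `AU12_r` (intensity), possibly with leading spaces in the header, and one CSV per still image; the function names are hypothetical.

```python
import csv
import io
from statistics import mean

# AUs discussed in the text: happiness (AU6, AU12) and anger (AU4, AU5, AU7, AU23)
AUS = ["AU04", "AU05", "AU06", "AU07", "AU12", "AU23"]


def read_aus(csv_text: str) -> dict[str, tuple[float, float]]:
    """Parse the first data row of an OpenFace-style CSV into {AU: (presence, intensity)}.
    Column naming (AUxx_c / AUxx_r) is an assumption about the output format."""
    reader = csv.DictReader(io.StringIO(csv_text), skipinitialspace=True)
    row = {key.strip(): value for key, value in next(reader).items()}
    return {au: (float(row[f"{au}_c"]), float(row[f"{au}_r"])) for au in AUS}


def aggregate(per_image: list[dict[str, tuple[float, float]]]) -> dict[str, tuple[float, float]]:
    """Across images (e.g. the four actors): proportion present and mean intensity per AU."""
    return {
        au: (mean(img[au][0] for img in per_image), mean(img[au][1] for img in per_image))
        for au in AUS
    }
```

Separate aggregates for the genuine and faked images of each emotion would then give the kind of summary reported in Table 1.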

Table 1. Presence and intensity of AUs.

Design and procedure

All experiments were controlled by a PC running E-Prime (Psychology Software Tools, Inc., Pittsburgh). Stimuli were displayed in the centre of a 22” CRT monitor (Philips 202P40, 1600 × 1200 pixels, 100 Hz refresh rate). Participants sat approximately 50 cm from the screen and entered their responses on the keyboard.

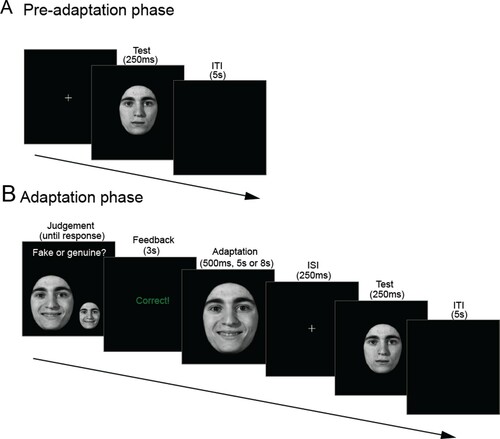

Pre-adaptation phase

All adaptation experiments began with a pre-adaptation phase (Keefe et al., 2016), which served as a baseline for calculating the adaptation aftereffects (see Figure 4(A)). In this block, participants judged the emotion portrayed in the “neutral” expressions of the four actors. Each trial started with a brief fixation cross (250 ms), followed by a neutral facial expression presented for 250 ms. Participants responded on a 5-point Likert scale ranging from slightly angry (1) via neutral (3) to slightly happy (5) (Palumbo et al., 2015). Following the response, the screen remained blank for 5 s. Each test image was displayed twice in random order (8 trials in total). The pre-adaptation phase was included because participants vary in how they perceive the expression designated as “neutral”, with some perceiving it as slightly happy or slightly angry. The pre-adaptation phase removed this inter-individual variability by allowing us to calibrate the “neutral” expression for each participant individually. The scale endpoints were labelled “slightly” angry and “slightly” happy because the test faces were essentially neutral and would not be mistaken for full-blown happy or angry expressions (Palumbo et al., 2015); points 2 and 4 represented even smaller deviations from neutral.

Adaptation phase

Directly following the pre-adaptation phase, 4 practice trials of the adaptation experiment were completed (2 actors × 2 emotions), followed by 16 randomised experimental trials: 4 actors × 2 facial expressions (happiness, anger) × 2 intentions (faked, genuine).

Validity checking procedure. Each trial began with a Validity checking procedure in which participants judged whether a target face (used as the adaptor in the subsequent adaptation phase) portrayed a genuine or a faked expression. Once they had responded, participants received feedback on whether their judgement was correct or incorrect (see Figure 4(B)). The rationale for the Validity checking procedure was to ensure that participants learned, through an active internal evaluation process, whether the expression displayed during the subsequent adaptation phase was genuine or faked. Participants who are not aware that some expressions are faked may fail to detect them as such, assuming instead that the faked expressions are somewhat peculiar or exceptional versions of genuine expressions. Simply telling participants beforehand that the target expression will be either faked or genuine does not mean they will be able to make the correct attribution in every case, as the differences between faked and genuine expressions are often subtle. Labelling the target face “fake” or “genuine” would mean that participants did not make the judgement themselves, and such a label could easily be ignored in a repetitive adaptation paradigm like ours. These problems would have been even more acute had we used one and the same photograph for the genuine and fake expressions of each emotion per actor, instead of photographs of actors deliberately posing faked expressions, and simply told participants which was which. In that case, in addition to ignoring the labels over time out of boredom, participants would also quickly have lost confidence in them.

The current procedure ensured that participants established their knowledge of, or belief about, the authenticity of the agent’s expression (genuine or fake) through an active internal evaluation, which we hypothesised to be more impactful and enduring. Because judging the authenticity of an expression can be quite difficult (e.g. Dawel et al., 2015, 2017; McLellan et al., 2010), and we wanted participants to get it right on their first attempt, they simultaneously saw a small comparison face next to the target face, depicting the same actor with the same expression but the opposite congruency (e.g. if the target face displayed genuine happiness, the comparison face displayed faked happiness). This juxtaposition of fake and genuine expressions allowed participants to make a correct judgement on their first attempt in 97% of all trials. The target face was presented centrally and the comparison face in the bottom right corner of the display. Participants responded by pressing one of two marked keys on the keyboard, and both stimuli remained on the screen until a response was made. Feedback (“correct” or “incorrect”) was displayed for 3 s directly after the response. If participants misattributed the authenticity of the target face, this phase of the trial was repeated until the correct answer was given; the initial evaluation was incorrect in only 3% of all trials, and the second attempt was always correct. Importantly, without the active Validity checking procedure we would not have known participants’ beliefs about the congruency between the agents’ expressed and felt emotion: none, some or all of them could have formed the correct belief, which would have made the results impossible to interpret.

Adaptation task. Following correct labelling, the trial continued with the presentation of an adapting face (500 ms in Experiment 1, 5 s in Experiment 2 and 8 s in Experiment 3). The adapting face was always identical, in both expression and congruency between expressed and felt emotion, to the target face in the directly preceding Validity checking procedure. The adapting face was followed by a brief fixation cross (250 ms) and then by a smaller test face, which depicted the same actor portraying a neutral expression and was presented for 250 ms. The test face was smaller than the adapting face to minimise the potential impact of adaptation to low-level, retinotopic, image-based characteristics of the stimulus. Face aftereffects typically transfer across substantial changes in stimulus size (Leopold et al., 2001), as they reflect higher-level, object-based representations of the face. Participants indicated their judgement of the emotion expressed in the test face on a 5-point Likert scale identical to that used in the pre-adaptation phase, ranging from slightly angry (1) via neutral (3) to slightly happy (5). Using emotion labels to assess adaptation aftereffects is standard practice in emotion adaptation studies (e.g. Webster et al., 2004; Wincenciak et al., 2016). Once the response was registered, the screen remained black for a further 5 s before the next trial began.

Analysis

As the magnitude of expression aftereffects depends on actor identity (Fox & Barton, 2007; Wincenciak et al., 2016), we calculated the aftereffects separately for each actor and then averaged them per condition. Ratings of the neutral test face on the 5-point Likert scale in the pre-adaptation phase were subtracted from ratings of the same test face, on the same scale, following adaptation to happy or angry (genuine and faked) expressions. Negative values therefore indicate that, following adaptation, the test face appeared angrier than at baseline, and positive values that it appeared happier.
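The per-actor baseline correction and averaging described above can be sketched as follows. The data layout (baseline ratings per actor, and one post-adaptation rating per actor and condition) and the function name are hypothetical, and averaging the two pre-adaptation ratings of each test face into a single baseline is an assumption; trials removed during data reduction simply do not appear in the input.

```python
from statistics import mean


def aftereffects(
    pre: dict[str, list[float]],
    post: dict[tuple[str, str, str], float],
) -> dict[tuple[str, str], float]:
    """pre:  {actor: [baseline ratings of that actor's neutral test face]}
    post: {(actor, emotion, congruency): rating after adaptation}
    Returns {(emotion, congruency): mean aftereffect across actors}.
    Negative = test face looked angrier than baseline; positive = happier."""
    baseline = {actor: mean(ratings) for actor, ratings in pre.items()}
    per_condition: dict[tuple[str, str], list[float]] = {}
    for (actor, emotion, congruency), rating in post.items():
        # aftereffect for this actor = post-adaptation rating minus that actor's baseline
        per_condition.setdefault((emotion, congruency), []).append(rating - baseline[actor])
    return {cond: mean(values) for cond, values in per_condition.items()}
```

Computing the difference per actor before averaging keeps actor-specific baselines from leaking into the condition means.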

Results

Data reduction

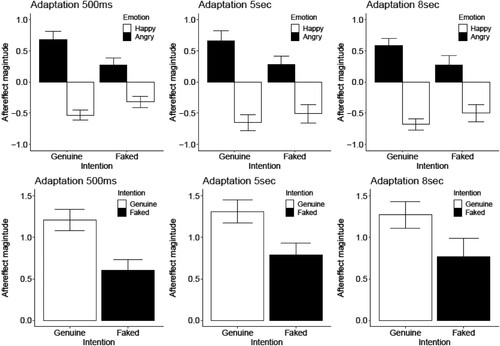

Given that adaptation decays over time, trials with reaction times exceeding 3500 ms were treated as outliers and removed from the analysis to ensure that participants remained adapted (6.5% of trials across all experiments). Although facial expression aftereffects (for genuine expressions) are well documented (Campbell & Burke, 2009; Fox & Barton, 2007; Webster, 2011, 2015; Webster et al., 2004), two participants showed a strong non-repulsive aftereffect (similar to object priming). The reason for this is unclear, but they may not have complied with the task requirements and judged the adapting rather than the test stimulus. Additionally, four participants showed no adaptation in either the genuine or the fake expression condition. Again, the reason is unclear; these participants may not have complied with the task requirements or may not have attended to the adapting faces (cf. Rhodes et al., 2011). Our main research interest lies in differences in adaptation aftereffects between the genuine and fake conditions, not in adaptation aftereffects per se, and analyses with and without these participants did not differ significantly. These four participants, together with the two who showed significant priming (mean values exceeding 2 SD of the mean aftereffect), were excluded from the analysis (6/61 participants in total: 3 in Experiment 1, none in Experiment 2 and 3 in Experiment 3). Excluding such outliers who fail to show facial expression aftereffects is standard practice (Rammsayer & Verner, 2014; Yang et al., 2010). The final samples comprised 18 participants in Experiment 1, 20 in Experiment 2 and 17 in Experiment 3. Aftereffect values (AE) were entered into a two-way repeated measures analysis of variance (ANOVA) with the factors Congruency (“genuine” or “fake”) and Emotion (happy or angry).
The results of Experiments 1, 2 and 3 are illustrated in Figure 5.

Figure 5. Adaptation aftereffects. Top panels: Aftereffect magnitude following happy and angry adaptation for the experiments with 500 ms, 5 s and 8 s adaptation durations (from left to right). Aftereffects were calculated by subtracting the rating of the test face obtained in the pre-adaptation phase from the rating obtained after the happy or angry adaptation phase. Positive values indicate that the test face appeared happier, negative values that it appeared angrier. Bottom panels: For illustrative purposes, the difference between the happy and angry aftereffects is shown for the Genuine and Faked conditions. This difference was calculated directly by subtracting the ratings of the test face following happy adaptation from the ratings following angry adaptation; positive values therefore indicate typical, repulsive aftereffects, in which the test stimulus appears less like the adapting stimulus. Error bars indicate SEM.
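The sign conventions in Figure 5 can be made explicit in a short sketch. Assuming ratings are coded so that positive values mean happier (as the caption states), a repulsive aftereffect yields a positive difference only when ratings after happy adaptation are subtracted from ratings after angry adaptation. Because the pre-adaptation baseline cancels in that difference, it equals the sum of the absolute happy and angry aftereffects (ΣAE in the Results) whenever both aftereffects are repulsive. The function names are hypothetical, and the baseline of 0 together with the Experiment 1 group means are used purely for illustration.

```python
def aftereffect(rating_after, rating_pre):
    """Top-panel aftereffect: post-adaptation rating minus pre-adaptation rating.
    Positive = test face looked happier, negative = angrier."""
    return rating_after - rating_pre


def angry_minus_happy(rating_after_angry, rating_after_happy):
    """Bottom-panel difference, taken directly between the two adaptation
    conditions, so the pre-adaptation baseline cancels. Positive = repulsive."""
    return rating_after_angry - rating_after_happy


def sum_abs_ae(ae_happy, ae_angry):
    """Sum of the absolute happy and angry aftereffects (Sigma-AE)."""
    return abs(ae_happy) + abs(ae_angry)


# Illustrative values: an assumed neutral baseline of 0 and the Experiment 1 group means.
pre, after_happy, after_angry = 0.0, -0.43, 0.47
ae_h = aftereffect(after_happy, pre)
ae_a = aftereffect(after_angry, pre)
diff = angry_minus_happy(after_angry, after_happy)
print(ae_h, ae_a, round(diff, 2), round(sum_abs_ae(ae_h, ae_a), 2))
```

If either aftereffect were attractive (priming-like) rather than repulsive, the direct difference and ΣAE would no longer coincide, which is one reason the sign convention matters when reading the bottom panels.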

Experiment 1: 500 ms adaptation period

The analysis showed a typical adaptation effect, with a significant main effect of Emotion (F(1,17) = 91.38, p < .001, η² = 0.52): neutral test faces were judged as slightly angry (M = −0.43) following happy adaptation and as slightly happy (M = 0.47) following angry adaptation. Importantly, the interaction between Emotion and Congruency was significant (F(1,17) = 10.54, p < .01, η² = 0.11), reflecting that in the genuine condition the sum of the absolute values of the happy and angry aftereffects (ΣAE = 1.21) was about twice as large as in the faked condition (ΣAE = 0.59). There was no main effect of Congruency on the judgement of test stimuli (F(1,17) = 1.91, p = .184, η² = 0.01).

Experiment 2: 5 s adaptation period

As in Experiment 1, with a longer-duration adaptor we observed a significant main effect of Emotion (F(1,19) = 137.64, p < .001, η² = 0.41): neutral test stimuli were judged as slightly angry (M = −0.58) following happy adaptation and as slightly happy (M = 0.47) following angry adaptation. As before, the critical interaction between Emotion and Congruency was significant (F(1,19) = 6.21, p < .05, η² = 0.04). The combined effects of happy and angry adaptation in the genuine condition (ΣAE = 1.31) were about 1.7 times as large as in the faked condition (ΣAE = 0.78). Finally, as in Experiment 1, there was no main effect of Congruency on the judgement of test stimuli (F(1,19) = 1.88, p = .186, η² = 0.01).

Experiment 3: 8 s adaptation period

As in Experiments 1 and 2, the 8 s adapting stimulus produced a typical adaptation effect, with a significant main effect of Emotion (F(1,16) = 34.43, p < .001, η² = 0.50): neutral test stimuli were judged as slightly angry (M = −0.59) following happy adaptation and as slightly happy (M = 0.43) following angry adaptation. The interaction between Emotion and Congruency was again significant (F(1,16) = 10.80, p < .01, η² = 0.06); in the genuine condition the combined effects of happy and angry adaptation (ΣAE = 1.27) were about 1.6 times as large as in the faked condition (ΣAE = 0.77). There was no main effect of Congruency on the judgement of test stimuli (F(1,16) = 1.55, p = .552, η² = 0.01).
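The genuine-to-faked ratios of ΣAE can be computed directly from the values reported for the three experiments. The sketch below (names hypothetical) divides the genuine ΣAE by the faked ΣAE for each experiment and, as a rough consistency check, compares the average of the two ΣAE values with |M_happy| + |M_angry| from the Emotion main effect. The two should agree, up to rounding, only if ΣAE was computed from condition means whose happy and angry aftereffects were all repulsive; that is an assumption, not something stated in the text.

```python
# Sigma-AE (genuine, faked) and Emotion main-effect means (happy, angry) as reported.
REPORTED = {
    "Experiment 1 (500 ms)": {"sigma_ae": (1.21, 0.59), "means": (-0.43, 0.47)},
    "Experiment 2 (5 s)": {"sigma_ae": (1.31, 0.78), "means": (-0.58, 0.47)},
    "Experiment 3 (8 s)": {"sigma_ae": (1.27, 0.77), "means": (-0.59, 0.43)},
}


def summarise(entry):
    """Return (genuine/faked ratio, mean of the two Sigma-AE values,
    |M_happy| + |M_angry|)."""
    genuine, faked = entry["sigma_ae"]
    m_happy, m_angry = entry["means"]
    ratio = genuine / faked
    # If all condition means are repulsive, averaging Sigma-AE over the two
    # congruency conditions should recover |M_happy| + |M_angry|.
    implied = (genuine + faked) / 2
    from_means = abs(m_happy) + abs(m_angry)
    return ratio, implied, from_means


for name, entry in REPORTED.items():
    ratio, implied, from_means = summarise(entry)
    print(f"{name}: genuine/faked = {ratio:.2f}; "
          f"mean Sigma-AE = {implied:.3f} vs |M_happy| + |M_angry| = {from_means:.2f}")
```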

Discussion

We investigated whether having knowledge (actively obtained and verified) of the congruency between an agent’s expressed and felt emotion affects the well-documented facial expression aftereffect. The results of three experiments suggest that knowledge of emotion-expression congruency affects the perception of happy and angry facial emotional expressions: significantly smaller adaptation aftereffects were obtained for faked than for genuine expressions (matched in intensity) following brief (500 ms), intermediate (5 s) and long (8 s) adaptation durations.

The markedly smaller aftereffects following adaptation to faked expressions, as compared to genuine expressions, can be interpreted as support for the premise that prior knowledge of the congruency between the facial expression displayed by an agent and the emotion felt by that agent (congruence between expression and emotion: genuine expression; incongruence between expression and emotion: faked expression) interacts with basic visual processes (e.g. Teufel, Fletcher, et al., 2010; Wiese et al., 2012). In real-world social perception, such influences may even play a dominant role (Calvo & Nummenmaa, 2016).

Social contextual modulation of adaptation aftereffects has been reported for observed biological actions (De La Rosa et al., 2014) and contingent actions (Fedorov et al., 2018), but has been far less studied for facial expression aftereffects.

It is somewhat surprising that we did not find any differences in the magnitude of the face aftereffects between the three experiments. Aftereffect magnitude was broadly comparable across experiments, suggesting that the duration of face exposure had no discernible effect on the aftereffects measured here. Typically, aftereffect magnitude increases with the duration of exposure to the adapting stimulus (e.g. Barraclough et al., 2009; Barraclough & Jellema, 2011; Leopold et al., 2005). We might therefore have expected the largest aftereffects (for both faked and genuine expressions) in Experiment 3 (8 s) and the smallest in Experiment 1 (500 ms). The insensitivity to adapting stimulus duration seen in our experiments could perhaps be explained by adaptation that is induced quickly and saturates early, even with brief (500 ms) exposure. This would result in maximal aftereffects with brief adapting face exposure (Experiment 1), and no further increase in aftereffect magnitude with longer durations. Such effects have been reported previously (e.g. Fang et al., 2007; Kovacs et al., 2007). Another potential explanation for these results is the impact of the Validity checking procedure at the start of each trial. Here, participants were exposed to both the adapting stimulus and its alternative; however, the adapting face was much larger and was the focus of the participant’s attention. Exposure to the adapting face during the attribution task may have produced some degree of adaptation during this period. Some reports (e.g. Carbon et al., 2007; Carbon & Ditye, 2011) show that face aftereffects can be induced quickly and can last for long periods of time, in some cases more than 24 h. Adaptation induced by the larger face during the attribution task may thus have saturated, so that varying the adaptation duration across Experiments 1–3 would have had little effect on aftereffect magnitude.
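The two accounts of the duration insensitivity (early saturation, and additional adaptation during the Validity checking procedure) can be illustrated with a toy model. The sketch assumes, purely for illustration, that adaptation builds up exponentially towards an asymptote with time constant τ and ignores decay between exposures; this is not a model fitted in the study, and the time constants and the 3 s of extra exposure are hypothetical values. With a slow build-up the 500 ms condition reaches only a fraction of the 8 s aftereffect, whereas a fast build-up makes the three durations nearly equivalent, and extra exposure before the timed period markedly reduces the differences between them.

```python
import math

DURATIONS_S = (0.5, 5.0, 8.0)  # adaptation durations of Experiments 1-3


def build_up(duration_s, tau_s, asymptote=1.0, pre_exposure_s=0.0):
    """Hypothetical exponential build-up: AE(t) = A * (1 - exp(-(t + t0) / tau)),
    where t0 is extra exposure (e.g. during the validity check) added before
    the timed adaptation period. Decay between exposures is ignored."""
    t = duration_s + pre_exposure_s
    return asymptote * (1.0 - math.exp(-t / tau_s))


def relative_profile(tau_s, pre_exposure_s=0.0):
    """Aftereffect at each duration relative to the 8 s value."""
    values = [build_up(d, tau_s, pre_exposure_s=pre_exposure_s) for d in DURATIONS_S]
    return [v / values[-1] for v in values]


for label, tau, pre in [("slow build-up", 2.0, 0.0),
                        ("fast build-up", 0.1, 0.0),
                        ("slow build-up + 3 s pre-exposure", 2.0, 3.0)]:
    print(label, [round(p, 2) for p in relative_profile(tau, pre)])
```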

A mechanism that might have contributed to the effects observed in the current experiments is embodiment. In the embodiment approach, visual processing of emotional information relies on the activation of neural states in the observer that would normally be active when the observers experience that emotion themselves (Gallese, 2007; Niedenthal, 2007; Niedenthal et al., 2009). Embodiment might contribute to recognising the faked versus genuine nature of facial expressions, as it could enable individuals to experience emotional contagion, in which they automatically simulate the observed emotional expression. Indeed, individuals’ ability to recognise genuine and faked smiles has been shown to depend on their degree of emotional contagion (Krumhuber et al., 2014; Manera et al., 2013; Rychlowska et al., 2014). However, we did not measure participants’ emotional contagion in the current study, so the contribution of embodiment to the observed effects cannot be quantified here.

Perceptual explanation

We argue that the differences in aftereffects observed in the present study are unlikely to result from low-level perceptual differences between the fake and genuine stimuli, for the following reasons. (1) Although genuine and faked expressions of happiness and anger share the main morphological features, such as the U-shaped mouth of a smile, genuine expressions are typically more intense than faked expressions (Gunnery et al., 2013; Gunnery & Ruben, 2016; Hess et al., 1995; Zloteanu et al., 2018). We therefore controlled for the perceived intensity of the adapting expressions in the calibration procedure. Furthermore, the difference in perceived intensity between genuine and faked expressions is more apparent when the expressions are presented in dynamic form rather than in the static form used here (Zloteanu et al., 2018). (2) The GIST analysis performed on the genuine and faked expressions selected for equal intensity levels confirmed that their low-level features did not differ significantly. The faked expressions tended to differ from the neutral expressions more than the genuine expressions did, which, if anything, should have enhanced the aftereffect magnitude for the faked relative to the genuine expressions. Yet we observed significantly smaller aftereffects for the faked expressions. Further, perceptual and attentional factors that can influence adaptation, such as the time spent fixating the eye region or the scan path, do not account for variation in individuals’ ability to judge the authenticity of expressions (Manera et al., 2011).
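The GIST comparison mentioned in point (2) summarises each image by the energy of oriented, band-pass filter responses pooled over a coarse spatial grid (Oliva & Torralba, 2001). The text does not report the implementation or parameters used, so the NumPy sketch below is a simplified GIST-like descriptor under assumed settings (four scales, eight orientations, log-Gabor filters, a 4 × 4 grid, square grayscale images whose side is divisible by the grid size); all names are hypothetical and it is not the pipeline used in the study.

```python
import numpy as np


def gabor_bank(size, n_scales=4, n_orients=8, bandwidth=0.55):
    """Frequency-domain log-Gabor filters on a square size x size grid.
    Parameters are illustrative, not those of Oliva & Torralba (2001)."""
    fy = np.fft.fftfreq(size)[:, None]
    fx = np.fft.fftfreq(size)[None, :]
    radius = np.hypot(fx, fy)
    radius[0, 0] = 1.0                      # placeholder to avoid log(0) at DC
    theta = np.arctan2(fy, fx)
    bank = []
    for s in range(n_scales):
        f0 = 0.25 / 2 ** s                  # centre frequencies one octave apart
        radial = np.exp(-np.log(radius / f0) ** 2 / (2 * np.log(bandwidth) ** 2))
        radial[0, 0] = 0.0                  # remove the DC component
        for o in range(n_orients):
            # Wrapped angular distance to this filter's preferred orientation.
            d = np.angle(np.exp(1j * (theta - o * np.pi / n_orients)))
            angular = np.exp(-d ** 2 / (2 * (np.pi / n_orients) ** 2))
            bank.append(radial * angular)
    return bank


def gist_descriptor(image, grid=4, **bank_kwargs):
    """Mean filter-response energy in each cell of a grid x grid layout,
    concatenated over all scales and orientations."""
    img = np.asarray(image, dtype=float)
    n = img.shape[0]
    if img.shape != (n, n) or n % grid:
        raise ValueError("expects a square image whose side is divisible by grid")
    spectrum = np.fft.fft2(img - img.mean())
    features = []
    for h in gabor_bank(n, **bank_kwargs):
        energy = np.abs(np.fft.ifft2(spectrum * h))
        cells = energy.reshape(grid, n // grid, grid, n // grid).mean(axis=(1, 3))
        features.append(cells.ravel())
    return np.concatenate(features)


def gist_distance(a, b, **kwargs):
    """Euclidean distance between the GIST-like descriptors of two images."""
    return float(np.linalg.norm(gist_descriptor(a, **kwargs) - gist_descriptor(b, **kwargs)))
```

Given hypothetical grayscale arrays `neutral`, `genuine` and `faked` for the same actor, comparing `gist_distance(faked, neutral)` with `gist_distance(genuine, neutral)` corresponds to the comparison with the neutral expressions described above; the statistical test applied to the descriptors in the study is not reproduced here.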

One could argue that the faked happy and angry expressions do not represent prototypical facial expressions and that, therefore, their aftereffects will not represent prototypical expression categories either. Because participants were offered a choice between prototypical expression categories (happy and angry) for their response, the aftereffects of the faked expressions might not match these categories. Participants might then opt for a point on the 5-point Likert scale closer to the neutral point, rather than the more extreme happy or angry options. However, the faked expressions, if anything, showed more intense articulation of facial features, especially of the mouth, than the genuine expressions, which should repel the aftereffect further away from the neutral option. Moreover, it could be argued that our faked happy and angry expressions are similar enough to their genuine counterparts to fall within the same prototypical happy and angry categories. Faked and genuine expressions are notoriously difficult to distinguish (Dawel et al., 2015, 2017; McLellan et al., 2010), and without prior instruction that half of the expressions were faked, most participants would most likely not have noticed this and would have believed that all expressions were genuine (the default assumption). We therefore believe that such a perceptual explanation is not sufficient to explain the current results. Finally, the differences in the aftereffects are unlikely to result from differences in participants’ visual experience with genuine versus faked expressions. Although this is difficult to quantify, it is likely that in daily life we encounter similar numbers of genuine and faked expressions (Iwasaki & Noguchi, 2016).

Limitations

The expressions in the congruent and incongruent conditions physically differed from each other. This means that the current data do not unequivocally demonstrate that prior knowledge caused the difference in adaptation aftereffects, as we cannot exclude the possibility that perceptual processes contributed to the results. Another limitation is that the genuine expressions were, to some extent, posed rather than spontaneous. Future studies could address these issues by using different, more naturalistic expression-induction methods, and by making the physical differences between the faked and genuine expressions even more subtle. The range of response categories (happy and angry) was also somewhat limited, and could be widened in future studies by including other prototypical emotions. This may help to better characterise the nature of the perceived aftereffect.

To further test the claim that prior knowledge of the congruency between expressed and felt emotion modulated the adaptation aftereffects, future studies could include a condition in which the current face stimuli are presented while participants are unaware that half of the expressions reflect an incongruency between felt and expressed emotion. We predict that such a condition would reveal no difference in the strength of adaptation aftereffects between genuine and faked expressions (provided that participants indicate in a debrief that they did not notice the manipulation). Such an outcome would suggest that prior knowledge, rather than low-level morphological differences, played a key role in producing the current results.

In conclusion, the current study is the first to argue that prior knowledge about the congruency between a facial emotional expression and the underlying felt emotion modulates facial expression aftereffects. We propose that these adaptation effects show that knowledge regarding the congruency between expressed and felt emotion modulates the neural circuitry responsible for the perception and recognition of emotional facial expressions, and forms an integral part of the representation of the observed expressions.

Acknowledgments

Special thanks to the Academia “Arvamus” in Rome and its artists for their contribution in the creation of the stimuli. J.W. and T.J. designed the study; J.W. conducted the experiments and analysed the data; L.P. created the stimuli; G.E. performed the GIST analysis; all authors contributed to the writing of the manuscript.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

References

- Adolphs, R. (2009). The social brain: Neural basis of social knowledge. Annual Review of Psychology, 60(1), 693–716. https://doi.org/10.1146/annurev.psych.60.110707.163514

- Allison, T., Puce, A., & McCarthy, G. (2000). Social perception from visual cues: Role of the STS region. Trends in Cognitive Sciences, 4(7), 267–278. https://doi.org/10.1016/S1364-6613(00)01501-1

- Baltrušaitis, T., Robinson, P., & Morency, L.-P. (2016). OpenFace: An open source facial behavior analysis toolkit. In 2016 IEEE Winter Conference on Applications of Computer Vision (WACV).

- Baltrušaitis, T., Zadeh, A., Lim, Y. C., & Morency, L.-P. (2018). OpenFace 2.0: Facial behavior analysis toolkit. In 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018).

- Barraclough, N., & Jellema, T. (2011). Visual aftereffects for walking actions reveal underlying neural mechanisms for action recognition. Psychological Science, 22(1), 87–94. https://doi.org/10.1177/0956797610391910

- Barraclough, N. E., Keith, R. H., Xiao, D.-K., Oram, M. W., & Perrett, D. I. (2009). Visual adaptation to goal-directed hand actions. Journal of Cognitive Neuroscience, 21(9), 1806–1820. https://doi.org/10.1162/jocn.2008.21145

- Barraclough, N. E., Page, S. A., & Keefe, B. D. (2016). Visual adaptation enhances action sound discrimination. Attention, Perception, & Psychophysics, 79, 320–332. https://doi.org/10.3758/s13414-016-1199-z

- Bartlett, M. S., Littlewort, G. C., Frank, M. G., & Lee, K. (2014). Automatic decoding of facial movements reveals deceptive pain expressions. Current Biology, 24(7), 738–743. https://doi.org/10.1016/j.cub.2014.02.009

- Bublatzky, F., Kavcioğlu, F., Guerra, P., Doll, S., & Junghöfer, M. (2020). Contextual information resolves uncertainty about ambiguous facial emotions: Behavioral and magnetoencephalographic correlates. NeuroImage, 215, 116814. https://doi.org/10.1016/j.neuroimage.2020.116814

- Calvo, M. G., Fernández-Martín, A., & Nummenmaa, L. (2012). Perceptual, categorical, and affective processing of ambiguous smiling facial expressions. Cognition, 125(3), 373–393. https://doi.org/10.1016/j.cognition.2012.07.021

- Calvo, M. G., & Marrero, H. (2009). Visual search of emotional faces: The role of affective content and featural distinctiveness. Cognition & Emotion, 23(4), 782–806. https://doi.org/10.1080/02699930802151654

- Calvo, M. G., Marrero, H., & Beltrán, D. (2013). When does the brain distinguish between genuine and ambiguous smiles? An ERP study. Brain and Cognition, 81(2), 237–246. https://doi.org/10.1016/j.bandc.2012.10.009

- Calvo, M. G., & Nummenmaa, L. (2016). Perceptual and affective mechanisms in facial expression recognition: An integrative review. Cognition & Emotion, 30(6), 1081–1106. https://doi.org/10.1080/02699931.2015.1049124

- Campbell, J., & Burke, D. (2009). Evidence that identity-dependent and identity-independent neural populations are recruited in the perception of five basic emotional facial expressions. Vision Research, 49(12), 1532–1540. https://doi.org/10.1016/j.visres.2009.03.009

- Carbon, C.-C., & Ditye, T. (2011). Sustained effects of adaptation on the perception of familiar faces. Journal of Experimental Psychology: Human Perception and Performance, 37(3), 615–625. https://doi.org/10.1037/a0019949

- Carbon, C.-C., Strobach, T., Langton, S. R. H., Harsányi, G., Leder, H., & Kovács, G. (2007). Adaptation effect of highly familiar faces: Immediate and long lasting. Memory & Cognition, 35(8), 1966–1976. https://doi.org/10.3758/bf03192929

- Clifford, C. W., Webster, M. A., Stanley, G. B., Stocker, A. A., Kohn, A., Sharpee, T. O., & Schwartz, O. (2007). Visual adaptation: Neural, psychological and computational aspects. Vision Research, 47(25), 3125–3131. https://doi.org/10.1016/j.visres.2007.08.023

- Cole, P. M. (1986). Children’s spontaneous control of facial expression. Child Development, 57(6), 1309–1321. https://doi.org/10.2307/1130411

- Dawel, A., Palermo, R., O’Kearney, R., & McKone, E. (2015). Children can discriminate the authenticity of happy but not sad or fearful facial expressions, and use an immature intensity-only strategy. Frontiers in Psychology, 6, 462. https://doi.org/10.3389/fpsyg.2015.00462

- Dawel, A., Wright, L., Irons, J., Dumbleton, R., Palermo, R., O’Kearney, R., & McKone, E. (2017). Perceived emotion genuineness: Normative ratings for popular facial expression stimuli and the development of perceived-as-genuine and perceived-as-fake sets. Behavior Research Methods, 49(4), 1539–1562. https://doi.org/10.3758/s13428-016-0813-2

- De La Rosa, S., Streuber, S., Giese, M., Bülthoff, H. H., & Curio, C. (2014). Putting actions in context: Visual action adaptation aftereffects are modulated by social contexts. PLoS One, 9(1), e86502. https://doi.org/10.1371/journal.pone.0086502

- Duclos, S. E., & Laird, J. D. (2001). The deliberate control of emotional experience through control of expressions. Cognition & Emotion, 15(1), 27–56. https://doi.org/10.1080/02699930126057

- Ekman, P., & Friesen, W. V. (1978). Facial action coding system: A technique for the measurement of facial movement. Consulting Psychologists Press.

- Ekman, P., Friesen, W. V., & Hager, J. C. (2002). The facial action coding system: A technique for the measurement of facial movement. Consulting Psychologists Press.

- Ekman, P., Hager, J. C., & Friesen, W. V. (1981). The symmetry of emotional and deliberate facial actions. Psychophysiology, 18(2), 101–106. https://doi.org/10.1111/j.1469-8986.1981.tb02919.x

- Engell, A. D., Todorov, A., & Haxby, J. V. (2010). Common neural mechanisms for the evaluation of facial trustworthiness and emotional expressions as revealed by behavioural adaptation. Perception, 39(7), 931–941. https://doi.org/10.1068/p6633

- Fang, F., Murray, S. O., & He, S. (2007). Duration-dependent fMRI adaptation and distributed viewer-centered face representation in human visual cortex. Cerebral Cortex, 17(6), 1402–1411. https://doi.org/10.1093/cercor/bhl053

- Fedorov, L. A., Chang, D.-S., Giese, M. A., Bülthoff, H. H., & De la Rosa, S. (2018). Adaptation aftereffects reveal representations for encoding of contingent social actions. Proceedings of the National Academy of Sciences, 115(29), 7515–7520. https://doi.org/10.1073/pnas.1801364115

- Firestone, C., & Scholl, B. J. (2016). Cognition does not affect perception: Evaluating the evidence for “top-down” effects. Behavioral and Brain Sciences, 39, E229. https://doi.org/10.1017/S0140525X15000965

- Fox, C. J., & Barton, J. J. S. (2007). What is adapted in face adaptation? The neural representations of expression in the human visual system. Brain Research, 1127(1), 80–89. https://doi.org/10.1016/j.brainres.2006.09.104

- Gallese, V. (2007). Before and below ‘theory of mind’: Embodied simulation and the neural correlates of social cognition. Philosophical Transactions of the Royal Society B: Biological Sciences, 362(1480), 659–669. https://doi.org/10.1098/rstb.2006.2002

- Gosselin, P., Kirouac, G., & Doré, F. Y. (1995). Components and recognition of facial expression in the communication of emotion by actors. Journal of Personality and Social Psychology, 68(1), 83. https://doi.org/10.1037/0022-3514.68.1.83

- Gunnery, S. D., Hall, J. A., & Ruben, M. A. (2013). The deliberate Duchenne smile: Individual differences in expressive control. Journal of Nonverbal Behavior, 37(1), 29–41. https://doi.org/10.1007/s10919-012-0139-4

- Gunnery, S. D., & Ruben, M. A. (2016). Perceptions of Duchenne and non-Duchenne smiles: A meta-analysis. Cognition & Emotion, 30(3), 501–515. https://doi.org/10.1080/02699931.2015.1018817

- Hess, U., Banse, R., & Kappas, A. (1995). The intensity of facial expression is determined by underlying affective state and social situation. Journal of Personality and Social Psychology, 69(2), 280. https://doi.org/10.1037/0022-3514.69.2.280

- Hess, U., Kappas, A., McHugo, G. J., Kleck, R. E., & Lanzetta, J. T. (1989). An analysis of the encoding and decoding of spontaneous and posed smiles – The use of facial electromyography. Journal of Nonverbal Behavior, 13(2), 121–137. https://doi.org/10.1007/BF00990794

- Hess, U., & Kleck, R. E. (1990). Differentiating emotion elicited and deliberate emotional facial expressions. European Journal of Social Psychology, 20(5), 369–385. https://doi.org/10.1002/ejsp.2420200502

- Hudson, M., & Jellema, T. (2011). Resolving ambiguous behavioral intentions by means of involuntary prioritization of gaze processing. Emotion, 11(3), 681. https://doi.org/10.1037/a0023264

- Iwasaki, M., & Noguchi, Y. (2016). Hiding true emotions: Micro-expressions in eyes retrospectively concealed by mouth movements. Scientific Reports, 6(1), 22049. https://doi.org/10.1038/srep22049

- Jellema, T., Pecchinenda, A., Palumbo, L., & Tan, G. E. (2011). Biases in the perception and affective valence of neutral facial expressions induced by the immediate perceptual history. Visual Cognition, 19(5), 616–634. https://doi.org/10.1080/13506285.2011.569775

- Keefe, B. D., Wincenciak, J., Jellema, T., Ward, J. W., & Barraclough, N. E. (2016). Action adaptation during natural unfolding social scenes influences action recognition and inferences made about actor beliefs. Journal of Vision, 16(9), 9. https://doi.org/10.1167/16.9.9

- Kovacs, G., Zimmer, M., Harza, I., & Vidnyanszky, Z. (2007). Adaptation duration affects the spatial selectivity of facial aftereffects. Vision Research, 47(25), 3141–3149. https://doi.org/10.1016/j.visres.2007.08.019

- Krumhuber, E., Lai, Y., Rosin, P. L., & Hugenberg, K. (2019). When facial expressions do and do not signal minds: The role of face inversion, expression dynamism, and emotion type. Emotion, 19(4), 746–750. https://doi.org/10.1037/emo0000475

- Krumhuber, E. G., Likowski, K. U., & Weyers, P. (2014). Facial mimicry of spontaneous and deliberate Duchenne and non-Duchenne smiles. Journal of Nonverbal Behavior, 38(1), 1–11. https://doi.org/10.1007/s10919-013-0167-8

- Leopold, D. A., O'Toole, A. J., Vetter, T., & Blanz, V. (2001). Prototype-referenced shape encoding revealed by high-level after effects. Nature Neuroscience, 4(1), 89–94. https://doi.org/10.1038/82947

- Leopold, D. A., Rhodes, G., Muller, K.-M., & Jeffery, L. (2005). The dynamics of visual adaptation to faces. Proceedings of the Royal Society of London B, 272(1566), 897–904. https://doi.org/10.1098/rspb.2004.3022

- Levenson, R. W. (2014). The autonomic nervous system and emotion. Emotion Review, 6(2), 100–112. https://doi.org/10.1177/1754073913512003

- Manera, V., Del Giudice, M., Grandi, E., & Colle, L. (2011). Individual differences in the recognition of enjoyment smiles: No role for perceptual-attentional factors and autistic-like traits. Frontiers in Psychology, 2, 143. https://doi.org/10.3389/fpsyg.2011.00143

- Manera, V., Grandi, E., & Colle, L. (2013). Susceptibility to emotional contagion for negative emotions improves detection of smile authenticity. Frontiers in Human Neuroscience, 7, 6. https://doi.org/10.3389/fnhum.2013.00006

- McLellan, T., Johnston, L., Dalrymple-Alford, J., & Porter, R. (2010). Sensitivity to genuine versus posed emotion specified in facial displays. Cognition & Emotion, 24(8), 1277–1292. https://doi.org/10.1080/02699930903306181

- Niedenthal, P. M. (2007). Embodying emotion. Science, 316(5827), 1002–1005. https://doi.org/10.1126/science.1136930

- Niedenthal, P. M., Mermillod, M., Maringer, M., & Hess, U. (2010). The simulation of smiles (SIMS) model: Embodied simulation and the meaning of facial expression. Behavioral and Brain Sciences, 33(6), 417–U465. https://doi.org/10.1017/S0140525X10000865

- Niedenthal, P. M., Mondillon, L., Winkielman, P., & Vermeulen, N. (2009). Embodiment of emotion concepts. Journal of Personality and Social Psychology, 96(6), 6. https://doi.org/10.1037/a0015574

- Oliva, A., & Torralba, A. (2001). Modeling the shape of the scene: A holistic representation of the spatial envelope. International Journal of Computer Vision, 42(3), 145–175. https://doi.org/10.1023/A:1011139631724

- Palumbo, L., Burnett, H. G., & Jellema, T. (2015). Atypical emotional anticipation in high-functioning autism. Molecular Autism, 6(1), 1–17. https://doi.org/10.1186/s13229-015-0039-7

- Palumbo, L., & Jellema, T. (2013). Beyond face value: Does involuntary emotional anticipation shape the perception of facial expressions? PLoS One, 8(2), e56003. https://doi.org/10.1371/journal.pone.0056003

- Rammsayer, T. H., & Verner, M. (2014). The effect of nontemporal stimulus size on perceived duration as assessed by the method of reproduction. Journal of Vision, 14(5), 17. https://doi.org/10.1167/14.5.17

- Rhodes, G., Jeffery, L., Evangelista, E., Ewing, L., Peters, M., & Taylor, L. (2011). Enhanced attention amplifies face adaptation. Vision Research, 51(16), 1811–1819. https://doi.org/10.1016/j.visres.2011.06.008

- Russell, J. A. (1980). A circumplex model of affect. Journal of Personality and Social Psychology, 39(6), 1161–1178. https://doi.org/10.1037/h0077714

- Rychlowska, M., Canadas, E., Wood, A., Krumhuber, E. G., Fischer, A., & Niedenthal, P. M. (2014). Blocking mimicry makes true and false smiles look the same. PLoS One, 9(3), e90876. https://doi.org/10.1371/journal.pone.0090876

- Rychlowska, M., Jack, R. E., Garrod, O. G., Schyns, P. G., Martin, J. D., & Niedenthal, P. M. (2017). Functional smiles: Tools for love, sympathy, and war. Psychological Science, 28(9), 1259–1270. https://doi.org/10.1177/0956797617706082

- Stanislawski, K. S. (1975). La formation de l’acteur [Building a character]. Payot.

- Teufel, C., Alexis, D. M., Clayton, N. S., & Davis, G. (2010). Mental-state attribution drives rapid, reflexive gaze following. Attention, Perception, & Psychophysics, 72(3), 695–705. https://doi.org/10.3758/app.72.3.695

- Teufel, C., Alexis, D. M., Todd, H., Lawrance-Owen, A. J., Clayton, N. S., & Davis, G. (2009). Social cognition modulates the sensory coding of observed gaze direction. Current Biology, 19(15), 1274–1277. https://doi.org/10.1016/j.cub.2009.05.069

- Teufel, C., Fletcher, P. C., & Davis, G. (2010). Seeing other minds: Attributed mental states influence perception. Trends in Cognitive Sciences, 14(8), 376–382. https://doi.org/10.1016/j.tics.2010.05.005

- Webster, M. A. (2011). Adaptation and visual coding. Journal of Vision, 11(5), 3. https://doi.org/10.1167/11.5.3

- Webster, M. A. (2015). Visual adaptation. Annual Review of Vision Science, 1(1), 547–567. https://doi.org/10.1146/annurev-vision-082114-035509

- Webster, M. A., Kaping, D., Mizokami, Y., & Duhamel, P. (2004). Adaptation to natural facial categories. Nature, 428(6982), 557–561. https://doi.org/10.1038/nature02420

- Wiese, E., Wykowska, A., Zwickel, J., & Müller, H. J. (2012). I see what you mean: How attentional selection is shaped by ascribing intentions to others. PLoS One, 7(9), e45391. https://doi.org/10.1371/journal.pone.0045391

- Wieser, M. J., & Brosch, T. (2012). Faces in context: A review and systematization of contextual influences on affective face processing. Frontiers in Psychology, 3, 471. https://doi.org/10.3389/fpsyg.2012.00471

- Wincenciak, J., Ingham, J., Jellema, T., & Barraclough, N. E. (2016). Emotional actions are coded via two mechanisms: With and without identity representation. Frontiers in Psychology, 7, 693. https://doi.org/10.3389/fpsyg.2016.00693

- Yang, E., Hong, S. W., & Blake, R. (2010). Adaptation aftereffects to facial expressions suppressed from visual awareness. Journal of Vision, 10(12), 24. https://doi.org/10.1167/10.12.24

- Zloteanu, M., Krumhuber, E. G., & Richardson, D. C. (2018). Detecting genuine and deliberate displays of surprise in static and dynamic faces. Frontiers in Psychology, 9, 1184. https://doi.org/10.3389/fpsyg.2018.01184