ABSTRACT

Affective aspects of a stimulus can be processed rapidly and before cognitive attribution, acting much earlier for verbal stimuli than previously considered. To identify emotion-specific mechanisms, event-related brain potentials (ERPs) evoked by six basic emotions – anger, disgust, fear, happiness, sadness, and surprise – expressed in facial expressions or word meaning were analysed relative to emotionally neutral stimuli in a sample of 116 participants. Brain responses in the occipital and left temporal regions elicited by sadness in facial expressions or words were indistinguishable from responses evoked by neutral faces or words. Confirming previous findings, facial fear elicited an early and strong posterior negativity. Instead of the expected parietal positivity, both happy faces and words produced significantly more negative responses compared to neutral. Surprise in facial expressions and words elicited a strong early response in the left temporal cortex, which could be a signature of appraisal. The results of this study are consistent with the view that both types of affective stimuli, facial emotions and word meaning, set off rapid processing and that responses occur very early in the processing stage.

In everyday life, we organise meaningful information around emotion categories. It is the aggregation of information, along with resolving any conflicts, that makes us arrive at an emotion category that best coincides with the sensory input and previously acquired experience. How to disentangle and conceptualise complex cognitive-emotional behaviours is still debated (Pessoa, 2008), but it is clear that perceiving and contemplating rapidly presented emotional information involves largely involuntary visceral, motor, and brain processes, which causally affect how emotional information is processed (Niedenthal, 2007). These processes may not be easily accessible by questionnaire-based methods and require other techniques, including various forms of brain imaging. Considering the role of emotions in social interaction, the ability to accurately perceive emotions from a person's facial expressions is expected to be vitally important (Lange et al., 2022), and facial expressions are often considered to receive preferential processing when compared to emotional words (Beall & Herbert, 2008). This also explains why facial expressions, along with emotional words, are among the most popular tools for studying affective information processing.

1.1. Emotions in words and faces

Using two different modes of presenting emotions – words and facial expressions – is a productive technique for separating components of emotion processing (Bayer & Schacht, 2014; Frühholz et al., 2011; Herbert et al., 2013; Rellecke et al., 2011; Schacht & Sommer, 2009). While facial expressions can be directly read from images, the emotional content of words can only be accessed after language processing. Unlike spoken words, written words are acquired relatively late in life. Because understanding word meaning is a sophisticated process, analysis of emotional meaning must be delayed relative to reading emotion from facial expressions. Because differences in word semantics start to appear in ERPs around 250 ms, reaching maximum values 400 ms after stimulus onset (Kutas & Federmeier, 2011), they are clearly delayed when compared to the first differences in ERPs in response to facial expressions.

1.2. Dimensions of emotions expressed in faces and words

Perceived similarities and differences among emotions can define the dimensions along which emotions vary. According to an influential theory, human emotions can be represented in a two-dimensional space with pleasure-displeasure and degree-of-arousal coordinates (Russell, 1980). There is evidence that the two dimensions characterise both facial expressions (Russell & Bullock, 1985) and emotion-denoting words (Russell et al., 1989). However, this does not mean that ERPs characterising recognition of emotions in faces or words have a similar structure. It has been repeatedly observed that ERPs for words have a positive bias, consisting of a stronger response to words denoting positive emotions (Bayer & Schacht, 2014; Herbert et al., 2008; Kissler et al., 2009; Schacht & Sommer, 2009). In the case of pictures, however, negative stimuli seem to attract preferential processing, resulting in augmented emotion effects for negative as compared to neutral or positive stimuli (Carretié et al., 2001; Kreegipuu et al., 2013; Olofsson et al., 2008).

Appraisal theories of emotion maintain that emotions are also driven by the results of cognitive appraisals and that the feeling cannot be adequately represented by two dimensions (Fontaine et al., 2007; Scherer & Fontaine, 2019). In addition to valence and arousal, dimensions of power and novelty are needed (Gillioz et al., 2016). However, these additional dimensions may not be required if the stimuli used have reduced variability on one of these dimensions. In other words, a high-dimensional semantic space may have different configurations adapting to the needs of a current task (Cowen & Keltner, 2021). For instance, facial expressions may form a space in which specific emotions, more than valence or arousal, are relevant in organising emotional experience, expression, and neural processing. As a research strategy, this suggests choosing specific emotions as basic categories for the analysis, instead of opting for a rigid contrast between positive and negative emotional content.

1.3. Appraisal in affective processing

While affective neuroscience emphasises the automatic mechanisms that can be routinely activated prior to cognitive processes, it cannot be ruled out that automatic affective mechanisms also exist that arise either during or after the semantic analysis of word meaning. It is generally agreed that emotions are not simply an initial psychophysiological response to a triggering event, but also involve a subsequent mental process known as appraisal (Ellsworth, 2013; Moors, 2010; Scherer, 1999; Scherer et al., 2001). Although appraisal is typically conceptualised as cognitive control over emotions, analysis of its neural substrate reveals that many subcortical emotion-generative systems are involved (Ochsner & Gross, 2005; Öner, 2018). Although appraisal is mainly considered a deliberate cognitive operation, it has also been noted that automaticity can be developed, making it a more mechanical early process (Ellsworth, 2013; Moors, 2010; Thiruchselvam et al., 2011).

Automatic processing of emotions from faces seems to differ from the comprehension of word meaning, which is performed exclusively by learned and symbolic operations. Recent neurolinguistic studies have challenged the notion that affective processing does not apply to language, and especially to semantics. Numerous examples have shown that emotions interact with semantic processing, which could occur in different brain regions (Hinojosa et al., 2020; van Berkum, 2019). Emotions and the triggers igniting them, such as facial expressions, existed long before language developed, but emotions can become linked to new triggers via learning (van Berkum, 2019). With the help of emotional conditioning, for example, the non-natural signs studied by linguistics can also instigate automatic affective processes in the brain (van Berkum, 2019). These affective processes, van Berkum notes (p. 8), are not necessarily intensive. For example, affective evaluation is a very subtle process that is not easy to notice (Zajonc, 1980). Affective judgements may be fairly independent of, and precede in time, the sorts of cognitive operations with which language is usually analysed. Thus, it is possible that emotional meaning activates affective judgements, which have their signatures in brain potentials.

1.4. Regions of interest

Complex networks of regions work together in the brain to process sensory information and enable perception and recognition. Early processing of visual information occurs mainly in the occipital lobe, with areas in the occipito-temporal cortex initially responding to facial expression analysis (Haxby et al., 2000). The meta-analysis by Lindquist et al. (2012) points to the anterior and medial temporal lobe, amygdala, insula and anterior midcingulate cortex as some of the consistently activated areas across studies assessing the experience or perception of emotional categories. The processing of words includes sublexical, phonological and lexico-semantic processing, generally considered to take place in the mid-fusiform gyrus, dorsal and inferior supramarginal gyrus and middle and anterior temporal regions (see Hinojosa et al., 2020 for an overview). Lindquist et al. (2012) also report the left and right anterior lobes and right superior temporal cortex as areas commonly activated.

Enhanced ERPs in emotional face-processing tasks have been recorded from parietal, parietal-occipital, occipito-temporal and temporal areas (see Citron, 2012 for a review). Similarly, enhanced ERP amplitudes in emotional word-processing tasks have been recorded from central (Schacht & Sommer, 2009), centro-parietal (Bayer & Schacht, 2014; Schacht & Sommer, 2009), parietal-temporal (Bayer & Schacht, 2014), occipito-temporal (Kissler et al., 2007, 2009) and occipito-parietal (Bayer & Schacht, 2014; Ponz et al., 2014) areas. While the occipital, parietal, and temporal areas are commonly activated by both visual and verbal stimuli, studies on emotional word processing incorporate central regions more frequently.

1.5. Research questions

The current study aimed to investigate three questions of interest. Firstly, we analysed differences in ERPs between stimuli representing one of the six basic emotions (anger, happiness, sadness, surprise, fear, disgust) and neutral stimuli as an indicator of specific emotion processing. Secondly, we presented participants with either pictures depicting facial expressions of six emotions or printed words conveying the emotion label (such as ‘anger,’ ‘happiness’, ‘sadness,’ etc.). Because previous studies have demonstrated a negative bias for facial expressions and a positive bias for emotion words, one of the aims was to test these biases. Thirdly, to differentiate between temporal variations in information processing in brain regions, we recorded ERPs from three regions of interest: the occipital, central, and left temporal areas. Given that emotion words require linguistic analysis, we anticipated that relatively delayed ERPs recorded from the left temporal area, linked with language understanding and symbolic information processing, would be involved. Likewise, based on previous research on recording sites, we expected the discrimination between emotional and neutral content for verbal stimuli to be more prominent in the central region. To our knowledge, we are the first to compare the electrocortical processing of emotional stimuli for the six basic emotions for both facial expressions and words.

2. Methods

2.1. Participants

119 healthy volunteers participated in the study. The data of 3 participants were excluded due to technical errors, and the final sample consisted of 116 participants (45 men; M = 25.02; SD = 6.34; age range: 18–49 years) with normal or corrected-to-normal eyesight. The required sample size (105) was calculated a priori with the G*Power (Faul et al., 2009) ANOVA (repeated measures, within factors) model, using α error probability (0.05), power (1 − β error probability; 0.85), effect size (f; 0.15), number of groups (7), number of measurements (2), correlation among repeated measures (0.5) and nonsphericity correction (ϵ; 1). The sample consisted of 91 Estonian, 17 Russian, and 8 English-speaking participants. The experiment was carried out in the primary language of Estonian and Russian-speaking participants. English-speaking participants had completed the TOEFL iBT, IELTS Academic, Cambridge English or PTE Academic test. 107 participants described themselves as right-handed, 8 as left-handed, and 1 as ambidextrous (main hand right). A preliminary ANOVA on the self-reported task confidence ratings of the groups based on sex, primary language, and handedness showed no significant differences, and all groups were included in the final analyses. Participation was reimbursed with a 15 EUR department store gift card. The study was approved by the Research Ethics Committee of the University of Tartu per the ethical standards of the Code of Ethics of the World Medical Association (Declaration of Helsinki), and a written signed consent form was obtained from all participants.

2.2. Measures and procedure

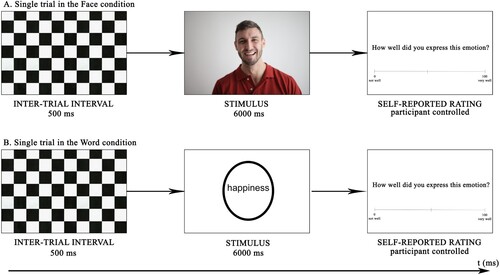

The recordings were conducted in an electrically shielded quiet room in the Laboratory of Experimental Psychology at the University of Tartu. Stimuli were presented in Psychtoolbox (MATLAB, MathWorks) on a standard PC monitor (LCD, 19" diagonal). Throughout the experiment, subjective reports, EEG, skin conductance responses (SCR), and video recordings of participants' facial expressions were collected. Due to the scope of the current paper, aspects of the subjective reports, video material, and SCR will not be analysed further. Figure 1 illustrates the time course of a single trial in both conditions.

Figure 1. The general set-up of the face (A) and word (B) condition describing the time interval of a single trial in milliseconds (ms). The study consisted of two blocks: in block one, 42 depictions of an emotional or neutral face were shown, and in block two, 42 emotional or neutral words were presented, each for 6 s. The picture depicting a face is an illustrative copyright-free stock photo and was not used in the experiment.

The facial expression stimuli were taken from the JACFEE dataset, a widely validated set of basic emotions including images of Japanese and Caucasian individuals (Matsumoto & Ekman, 1988) expressing six basic emotions (anger, disgust, happiness, sadness, surprise, and fear) as well as neutral facial expressions. The word stimuli included the words ‘anger’, ‘disgust’, ‘fear’, ‘happiness’, ‘sadness’, ‘surprise’, and ‘neutral’. The face condition block consisted of 7 (6 types of emotion and neutral) × 6 (different variants in one emotion category) stimuli. Different variants of pictures in an emotion category were used to attain variability in emotional expressions, avoid habituation and minimise the impact of low-level visual features such as the presence of teeth. The word condition block consisted of 7 (6 types of emotion and neutral) × 6 repetitions. There were 42 trials for each condition. All emotion stimulus responses were compared to a neutral stimulus, which was a neutral face in the facial expression condition and the word ‘neutral’ in the word condition.

Stimuli were semi-randomised (one set of 7 stimuli was shown in random order). In the first block, the participants viewed a target face picture for 6 s, mirrored the emotion displayed, and reported the difficulty of the task on a scale of 0–100. In the second block, the participants viewed a target word picture for 6 s, expressed the emotion, and reported the difficulty of the task on a scale of 0–100. The face condition was always presented before the word condition to avoid participants assigning a categorical label to the facial expression when first exposed to a categorical label (the word in the word condition). Self-reported ratings were used to evaluate task difficulty. A black and white grid was shown in-between pictures for 500 ms to reduce the sequential effects from rapid image playback.
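The semi-randomisation described above can be sketched as follows. This is only an illustration, not the Psychtoolbox code used in the experiment: the function name `semi_randomised_block`, the seed, and the assumption that each block is built from six consecutive shuffled sets of the seven categories (which yields the 42 trials per condition reported in the text) are introduced here for the example.

```python
import random

# The seven stimulus categories named in the text.
CATEGORIES = ["anger", "disgust", "fear", "happiness", "sadness", "surprise", "neutral"]
N_SETS = 6  # assumption: 7 categories x 6 sets = 42 trials per block


def semi_randomised_block(categories, n_sets, rng):
    """Build a trial list from consecutive sets; each set holds every
    category exactly once, in an independently shuffled order."""
    trials = []
    for _ in range(n_sets):
        one_set = list(categories)
        rng.shuffle(one_set)  # random order within the set only
        trials.extend(one_set)
    return trials


rng = random.Random(2024)  # hypothetical seed, for reproducibility of the sketch
block = semi_randomised_block(CATEGORIES, N_SETS, rng)
print(len(block))   # number of trials in the block
print(block[:7])    # the first shuffled set
```

Shuffling within sets rather than across the whole block guarantees that no category can appear more than twice in a row and that every category is evenly spread over the block.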

Conventionally, words bearing emotional connotations are used when measuring the emotional processing of semantic concepts. Direct opposition of facial expressions of basic emotions with words with strong emotional associations, such as ‘kiss’ or ‘kill’, may not be an adequate method of comparison, however. It has been shown that emotion-label and emotion-laden words elicit different cortical responses at both early (N170) and late (LPC) stages (Zhang et al., 2017). Traditionally, differentiating between basic emotions in studies is done by choosing appropriate labels. We aimed not to group an emotion face directly with an emotion word (thus, participants did not have to label emotional faces); however, to avoid differentiating levels of valence and arousal between the face and word condition, we opted to use emotion-label words (i.e. ‘happiness’, ‘sadness’, etc.) for a more direct comparison between the JACFEE faces and verbal stimuli.

2.3. EEG recordings and pre-processing

EEG signals were recorded using a BioSemi ActiveTwo active electrode system consisting of 64 scalp electrodes, two reference electrodes behind the earlobes, and four ocular electrodes (above and below the left eye and near the outer canthi of both eyes). The EEG signals were recorded with a band-pass filter of 0.16–100 Hz and a sampling rate of 512 Hz, with electrodes placed according to the international 10–20 system (Homan et al., 1987). The EEG data were analysed offline using BrainVision Analyzer 2.1 (Brain Products GmbH). The data were referenced to the earlobe electrodes, corrected for eye blinks (Gratton et al., 1983), and filtered using a Butterworth Zero Phase filter (0.16–30 Hz; 24 dB/oct). Data segments from … to … were extracted, and baseline correction was made at 0–50 ms post-stimulus onset. The average EEG activity in each interval for each electrode for each stimulus for each participant was calculated, and data points at 0 μV and higher than 100 μV were removed, resulting in a loss of 2.15% of raw data. ERPs were calculated by averaging the segments from the six stimulus repetitions from all participants (6 × 116), resulting in 696 trials per stimulus category per condition. ERPs were average-mastoid referenced.
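The baseline correction and amplitude screening described above can be illustrated with a short sketch. It is not the BrainVision Analyzer pipeline used in the study; the function names are hypothetical, the baseline window is assumed to be 0–50 ms, and "higher than 100 μV" is interpreted here as an absolute amplitude above 100 μV, which is an assumption.

```python
SAMPLING_RATE_HZ = 512          # sampling rate reported in the text
N_PARTICIPANTS = 116
N_REPETITIONS = 6
TRIALS_PER_CATEGORY = N_PARTICIPANTS * N_REPETITIONS  # 696 per category and condition


def sample_times_ms(n_samples, rate_hz=SAMPLING_RATE_HZ):
    """Time stamps in ms for samples starting at stimulus onset (t = 0)."""
    return [i * 1000.0 / rate_hz for i in range(n_samples)]


def baseline_correct(segment, times_ms, start_ms=0.0, end_ms=50.0):
    """Subtract the mean amplitude in the baseline window from every sample."""
    baseline = [v for v, t in zip(segment, times_ms) if start_ms <= t <= end_ms]
    mean_baseline = sum(baseline) / len(baseline)
    return [v - mean_baseline for v in segment]


def reject_amplitudes(values_uv, limit_uv=100.0):
    """Drop data points that are exactly 0 uV or exceed the limit.
    Assumption: the limit applies to the absolute amplitude."""
    return [v for v in values_uv if v != 0.0 and abs(v) <= limit_uv]


times = sample_times_ms(308)                      # roughly 0-600 ms at 512 Hz
corrected = baseline_correct([5.0] * 308, times)  # a flat segment becomes all zeros
print(reject_amplitudes([0.0, 12.5, -140.0, 99.0, 101.0]))
print(TRIALS_PER_CATEGORY)
```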

A singular value decomposition principal component analysis (PCA) based on a correlation matrix was conducted to identify individual clusters of electrocortical activity. Three distinct clusters of cortical activity were observed -- one in the occipital and occipital-parietal region (referred to as the occipital region), one in the central and central-parietal area (referred to as the central region) and one in the left temporal region. No hemispatial lateralisation was observed in the occipital-parietal and central electrode areas. The first cluster that emerged as an area of interest was concentrated around the visual cortex (represented by electrodes P7, P5, P3, P1, PO7, PO3, POz, Oz, O1, P2, P4, P6, P8, PO4, PO8, O2), the second cluster involved the central region electrodes (C5, C3, C1, Cz, C2, C4, C6, CP5, CP3, CP1, CPz, Pz, CP2, CP4 and CP6), and the third cluster concerned the language processing area in the left temporal region (electrodes F7, FT7, T7, TP7 and P9).
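For readers who want to reproduce the pooling into regions of interest, the electrode clusters listed above can be written down as a simple lookup table. The sketch below does not perform the PCA itself; it only averages channel waveforms within each reported cluster, and the function name `roi_average` and the input layout (a dictionary from channel label to a list of amplitudes) are assumptions made for the example.

```python
# Electrode clusters as listed in the text.
ROIS = {
    "occipital": ["P7", "P5", "P3", "P1", "PO7", "PO3", "POz", "Oz", "O1",
                  "P2", "P4", "P6", "P8", "PO4", "PO8", "O2"],
    "central": ["C5", "C3", "C1", "Cz", "C2", "C4", "C6", "CP5", "CP3",
                "CP1", "CPz", "Pz", "CP2", "CP4", "CP6"],
    "left_temporal": ["F7", "FT7", "T7", "TP7", "P9"],
}


def roi_average(amplitudes, channels):
    """Average the waveforms of the given channels sample by sample.

    amplitudes: dict mapping channel label -> list of amplitudes (uV),
                all lists of equal length (hypothetical input layout).
    Channels missing from the data are skipped.
    """
    present = [amplitudes[ch] for ch in channels if ch in amplitudes]
    if not present:
        raise ValueError("none of the ROI channels are present in the data")
    n_samples = len(present[0])
    return [sum(wave[t] for wave in present) / len(present)
            for t in range(n_samples)]


# Toy example with two left temporal channels and two samples each.
toy = {"F7": [1.0, 3.0], "T7": [3.0, 5.0]}
print(roi_average(toy, ROIS["left_temporal"]))
```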

2.4. Transparency and openness

We report how we determined our sample size, all data exclusions, all manipulations, and all measures in the study, and we follow JARS guidelines (Kazak, 2018). All data, analysis code and research materials are available at https://osf.io/9yjgk. Data were analysed using R, version 4.2.2 (R Core Team, 2022) and the packages tidyverse (Wickham et al., 2019), lmerTest (Kuznetsova et al., 2017), emmeans (Lenth, 2021), eegkit (Helwig, 2018), and ERP (Causeur et al., 2019). This study's design and analysis were not pre-registered.

3. Results

3.1. Behavioral data

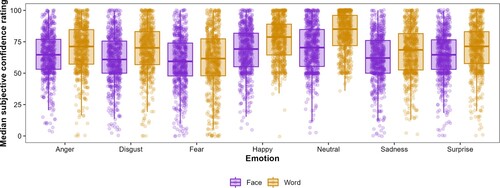

Self-reported confidence ratings for task success were lower in the face condition than in the word condition, as reflected in a main effect of condition. Self-reported confidence was also influenced by emotion (F(1,9117) = 177.09, p < .0001, f = 0.06) and by the interaction of emotion and condition (F(1,9117) = 16.72, p < .0001, f = 0.006). A boxplot with median confidence ratings and interquartile range can be seen in Figure 2.

Figure 2. Median self-reported confidence ratings of task success (0–100) for the face and word condition. The lower and upper hinges correspond to the first and third quartiles (the 25th and 75th percentiles).

A post hoc test on emotion with a Bonferroni correction for multiple testing revealed that, according to the subjectively reported confidence ratings, expressing all emotions besides fear was significantly easier in the word condition. Expressing anger was reported higher in the word (M = 69.61; SD = 18.85) than in the face condition (M = 64.13; SD = 17.73), t(660) = 7.22, p < .0001; disgust was reported higher in the word (M = 68.71; SD = 19.54) than in the face condition (M = 61.39; SD = 19.64), t(660) = 9.17, p < .0001; happiness was reported higher in the word (M = 76.36; SD = 16.33) than in the face condition (M = 67.87; SD = 18.55), t(660) = 12.1, p < .0001; neutral was reported higher in the word (M = 82.06; SD = 15.65) than in the face condition (M = 69.93; SD = 18.87), t(660) = 17.74, p < .0001; sadness was reported higher in the word (M = 67.14; SD = 19.44) than in the face condition (M = 61.93; SD = 19.16), t(660) = 7.1, p < .0001; and surprise was reported higher in the face (M = 69.67; SD = 19.1) than in the word condition (M = 64.21; SD = 18.17), t(660) = 7.36, p < .0001. The only emotion with no significant difference in confidence ratings of expression between the face (M = 58.88; SD = 20.51) and word condition (M = 60.48; SD = 23.65) was fear, t(660) = 1.85, p = .064. The confidence rating of expressing fear was also the lowest in both conditions.

3.2. Event-related brain potentials

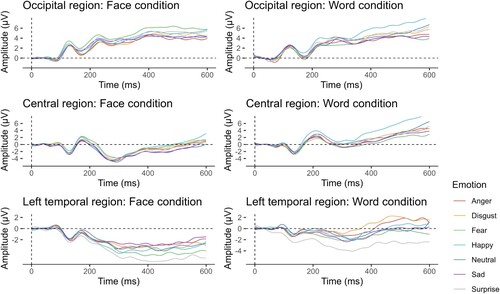

We used a linear mixed model with restricted maximum likelihood estimation, Satterthwaite's approximation for degrees of freedom, and participant as a random effect to determine the general effect of emotion on the amplitude of the event-related potentials. For all statistical tests, the level of significance was set to 0.05. There was a significant main effect of emotion on the amplitude of the event-related potentials. Figure 3 depicts the general temporal progress of the ERP waves.

Figure 3. Grand average ERPs averaged in two conditions (face and word condition) and three pooled regions of interest (occipital, occipital-parietal and parietal marked as occipital, central and central-parietal marked as central, and left temporal). Grand average waves averaged into ROIs from all participants (N = 116) are time-locked to stimulus onset with the face condition depicted on the left and the word condition on the right. ERPs are average-mastoid referenced.

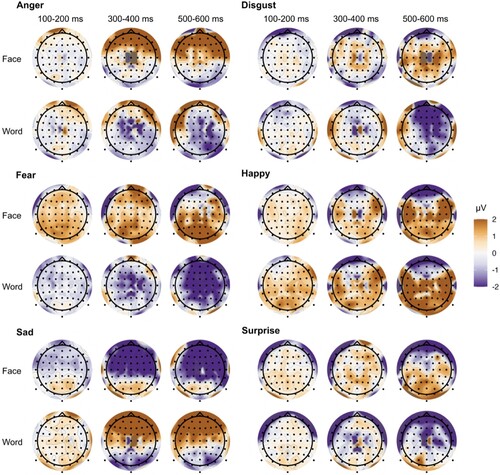

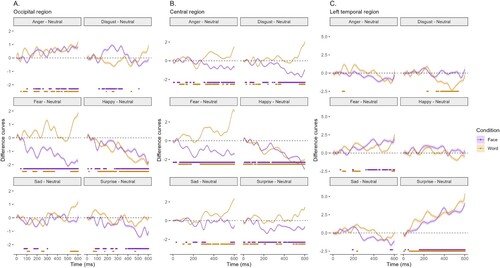

Additional point-wise significance analyses were conducted to investigate the initial moment at which ERPs discriminate events carrying emotional content from events that are neutral towards emotional meaning. Data depicting the time interval of interest (0–600 ms) of individual average ERP amplitudes were divided into 2 ms intervals, and a time-varying coefficient multivariate regression model with fixed-time covariates was implemented to test for the point-wise significance of an effect in a linear model. The model, including terms for the effect to be tested (emotion as a main effect and participant as random effect), was compared to a null model obtained by setting the parameters specifying the effect to zero (participant as random effect). The risk of erroneous identification of a significant time point, considering multiple comparisons and the high temporal dependence of residuals in linear model testing of EEG data, was controlled by the Benjamini–Hochberg (BH) procedure (Benjamini & Hochberg, 1995). The false discovery rate (FDR) was controlled at a preset level (α = 0.05). Pairs of emotion and neutral were composed, the neutral condition was subtracted from each emotion, and difference waves were calculated to observe average differences of emotional content from the neutral stimuli (Figure 4). 301 pairwise F-tests were run on each emotion-minus-neutral value in the occipital, central and left temporal ROIs. Due to the number of tests run, all pairwise F-test statistics, contrast effect confidence intervals, values, and FDR- and FWER-controlling multiple testing corrected p-values using the Benjamini–Hochberg (BH) procedure can be found in the OSF repository (https://osf.io/9yjgk).
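The two generic building blocks of this analysis, emotion-minus-neutral difference waves and the Benjamini–Hochberg step-up rule, can be sketched in a few lines. This is a textbook illustration only: it does not reproduce the time-varying coefficient model or the treatment of temporal dependence from the R package named in the text, and the function names and toy p-values are hypothetical.

```python
# 0-600 ms in 2 ms steps gives the 301 time points tested per contrast.
TIME_POINTS_MS = list(range(0, 601, 2))


def difference_wave(emotion_erp, neutral_erp):
    """Point-wise emotion-minus-neutral difference of two equally long ERPs."""
    return [e - n for e, n in zip(emotion_erp, neutral_erp)]


def benjamini_hochberg(p_values, alpha=0.05):
    """Return a list of booleans marking which tests are declared significant
    while controlling the false discovery rate at level alpha."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Step-up rule: find the largest rank k with p_(k) <= (k / m) * alpha.
    max_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * alpha:
            max_rank = rank
    significant = [False] * m
    # Every test ranked at or below max_rank is declared significant.
    for rank, idx in enumerate(order, start=1):
        if rank <= max_rank:
            significant[idx] = True
    return significant


print(len(TIME_POINTS_MS))
print(difference_wave([1.0, 2.0], [0.5, 3.0]))
print(benjamini_hochberg([0.01, 0.04, 0.03, 0.20]))  # toy p-values
```

Because the rule is step-up, a p-value that misses its own rank threshold can still be declared significant when a larger p-value further down the ordering meets its threshold.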

Figure 4. Emotion-minus-neutral ERP difference curves with significant F-test results (5% significance level) controlled for multiple comparisons and temporal dependence for faces and words in the (A) occipital, (B) central, and (C) left temporal regions.

Figure 4 shows the emotion-minus-neutral ERP differences for faces and words in the occipital, central and left temporal regions. The significant differences in the F-tests (5% significance level) are shown at the bottom of each graph for both faces and words. Figure 5 depicts the topographical distribution of emotion-minus-neutral ERPs at three time periods of interest.

3.3. Results by emotion categories

Disgust and sad. In the face condition, ERP differences elicited by disgust emerged steadily at 148–345 ms in the occipital and 343–600 ms in the central region, but not in the left temporal region. For the disgust word, most significant responses appeared in the left temporal region. ERP differences elicited by the sad face emerged inconsistently and for very short durations in the occipital, central, and left temporal regions (for example, at 73–103 ms in the occipital or 198–234 ms in the central region). The word ‘sad’ elicited a positive deflection at 527–600 ms in the occipital and at 282–600 ms in the central region. No significant difference in the left temporal region was observed.

Anger. An angry face elicited an ERP in the occipital region 168–230 ms and 318–600 ms. In the central region, the significant differences were fragmented in the beginning and produced more stable negative differences in 305–600 ms. The word ‘angry’ produced somewhat consistent differences from the word ‘neutral’ from 65 ms onward in both the occipital and central region; however, only a fragmented difference was observed in the left temporal region (92–112 ms).

Fear. From about 70 ms after the onset of a fearful face, a very strong negative deflection of the ERP registered from the occipital and central region began to develop. In the left temporal region, a stable positive response emerged from 323 ms and continued until the end of the segment. On the contrary, the visual ERP in response to the word ‘fear’ was positive when compared to the ERP to the word ‘neutral’, and produced fragmented differences at 60–96 ms, 160–196 ms, 262–370 ms, and from 530 ms until the end of the segment. Similarly, the word ‘fear’ elicited two large positive ERP differences in the occipital region at 58–180 ms and 266–600 ms. In the left temporal region, there were two small significantly more positive segments at 94–122 ms and 192–264 ms for the word ‘fear’ than for ‘neutral’.

Happy. Both the happy face and the word ‘happiness’ produced a strong negative ERP compared to the neutral face and word meaning in the occipital and central regions. No significant differences for the ‘happy’ face or word ‘happy’ compared to neutral were found in the left temporal region.

Surprise. Surprise in the face elicited an ERP in the occipital region, which was significantly more negative than the ERP in response to a neutral face, with consistent differences emerging at 380 ms. The response to the word ‘surprise’ was fragmented. The same can be observed in the central region, where the face condition produced a significantly negative ERP, most consistent at 146–600 ms. The response to the word ‘surprise’ was fragmented, with significant positivity emerging at 66–142 ms and 487–600 ms. A surprising finding emerged in the left temporal region, where the surprised face and the word ‘surprise’ produced a significantly stronger positive ERP response when compared to the neutral face or word. The differences in the face condition emerged around 156–600 ms and for the word condition at 60–600 ms.

4. Discussion

The present study aimed to examine differences in ERPs between emotional and neutral stimuli, compare emotional processing for facial expressions and words, and differentiate between processing areas by analysing data from the occipital, central, and left temporal areas. Emotion processing for facial expressions and words was successfully differentiated from neutral, and the processing revealed by ERPs differed for the specific emotions expressed either in facial expressions or word meaning, indicating both rapid and language-mediated processing. Self-reported confidence ratings indicated that all emotions, except fear, were more easily expressed in the word than in the face condition. Using a categorical label for emotion expression was significantly easier than simply mimicking a specified facial expression, a finding common in the previous literature (Palermo et al., 2013), although the result was not replicated for the elicitation of fear.

4.1. Affective processing

Affective processing means that emotion stimuli can induce congruent affective states, awareness of which could serve as an additional indicator of the emotional value of the presented stimuli. It is well-documented that pleasant or unpleasant pictures automatically induce specific affective reactions such as changes in heart rate and skin conductance (Codispoti et al., 2001). This study, together with numerous previous ones (Batty & Taylor, 2003; Bayer & Schacht, 2014; Frühholz et al., 2011; Herbert et al., 2013; Kreegipuu et al., 2013; Rellecke et al., 2011; Schacht & Sommer, 2009; Schupp et al., 2004; Uusberg et al., 2013), demonstrates that brain potentials can characterise automatic or relatively autonomous affective processes specific to a particular emotion category. We found remarkably different response patterns, which diverge from one another starting from very early phases of processing and continuing to relatively late stages involving language processing. Our results are therefore congruent with findings that emotional connotation can enhance cortical responses at all stages of emotional visual information processing.

4.2. Disgust and sad

Although disgust has been recognised as a basic emotion, it must also be a product of culture and learning because it is apparently absent in young children (Widen & Russell, 2013). Our study found that ERPs can effectively distinguish disgust from neutral very early in the processing stage. The differentiation of disgust from neutral was evident in the occipital and central regions between 148–345 ms and was particularly pronounced in the central area between 343–600 ms, a finding in line with previous studies (Batty & Taylor, 2003). Expressions of disgust are often mistaken for those of anger (Pochedly et al., 2012), but both the use of variability in facial expression stimuli and differences in self-reports and ERP responses indicate that the discrimination of these emotions from neutral was successful. The results regarding the emotion-label word ‘disgust’ showed only sparse differences from neutral in the occipital and central regions and a clear difference in the left temporal region at 320–538 ms. Our results could indicate more semantic processing of the kind usually associated with the N400 component, but this does not explain why no stable differences in the occipital and central regions were observed. A potential explanation is that the disgust emotion-label word is not as salient a stimulus as disgust-laden words.

Previous studies have observed ERP components differentiating sad faces from neutral at 170 ms (Batty & Taylor, 2003), but no stable differences were found in our study. The bias for negative stimuli is well documented in clinical patients, where EEG studies have shown a greater ERP response for sad targets compared to neutral (Bistricky et al., 2014), specifically for groups with risk factors for depression. It is possible that the parts of the neural circuitry observable by electrodes for processing sadness in non-clinical populations are not very different from those used for the analysis of faces in general, without a specified emotional expression. When facial expressions of different styles are projected into semantic space, the closest expression to neutral is sad (Hahn et al., 2019). The sad face may thus not be readily distinguishable from neutral unless there is a reason to be particularly alert to it, as observed in clinical populations.

4.3. Fear and happy: posterior negativity and parietal positivity

One of the key findings in emotion studies is the presence of a strong and early negative ERP in response to negative facial emotions, particularly fear (Bayer & Schacht, 2014; Carretié et al., 2001; Kreegipuu et al., 2013; Olofsson et al., 2008; Rellecke et al., 2011; Schupp et al., 2004), as well as a later positive response to words denoting positive emotions (Bayer & Schacht, 2014; Herbert et al., 2008; Kissler et al., 2009; Schacht & Sommer, 2009). The results of this study by and large support these findings and provide additional details. The ERPs elicited by facial fear produced the largest deflection from zero, starting as early as around 70 ms, and the word ‘fear’ elicited a strong positivity in the occipital and central regions. It has generally been proposed that processing pictorial material requires much greater attentional effort than processing words (Tempel et al., 2013). Alternatively, brain circuits responsible for detecting fear in faces could involve the visual areas more intensively than the brain regions that participate in language processing. ERPs recorded from the occipital and central cortices detected differences between verbal ‘fear’ and ‘neutral’ stimuli: like the substantial ERP deviations observed in response to facial fear, the word ‘fear’ also produced a departure of the ERP from neutral. This negativity may be a brain signature of an appraisal process. It is not only fear in the face that triggers an early warning system; a word's emotional meaning can also set off an automatic process of affective evaluation. Although appraisal is typically thought of as a cognitive and deliberate process, automaticity can be developed in appraisal, and some spontaneous processes can be recruited through emotional conditioning (Ellsworth, 2013; Moors, 2010).

The word ‘happiness’ produced a significant ERP deflection as early as about 80 ms after stimulus onset, coinciding with the time when a happy facial expression evoked a significant response. While semantic word processing is typically associated with the N400 ERP component (Kutas & Federmeier, 2011), previous studies have demonstrated both early and late ERP sensitivity to emotional words (Herbert et al., 2008; Kissler et al., 2007). Some studies suggest that emotion categories inferred from visual stimuli can be differentiated at latencies as short as, or shorter than, those required for object recognition (Kawasaki et al., 2001). Our results suggest that the emotional content of words, at least for some emotional categories, can be accessed as fast as that of facial expressions.

4.4. Anger and surprise: central effects and temporal positivity

Research suggests preferential attentional and perceptual processing of threatening facial expressions (Kuldkepp et al., 2013; Rellecke et al., 2011; Schacht & Sommer, 2009; Schupp et al., 2004), marking them as particularly salient stimuli. We observed differences in response to angry faces in the occipital and central areas, consistent with previous research (Schacht & Sommer, 2009; Schupp et al., 2004). The rapid discrimination of angry faces from neutral supports the notion that the detection of angry expressions involves enhanced neural processing and automatic change detection, and thus such stimuli seem to be prioritised in early visual processing. Our study found that this preferential processing also applies to happy and fearful faces. The word ‘angry’ produced a neural response similar to that of the face stimulus in the occipital and central regions, indicating early processing. While semantic processing of words is typically observed around 400 ms after stimulus onset, some studies have found that emotional connotations can be detected as early as 120 ms (Kissler et al., 2006). This suggests that a word's emotional connotation could be directly connected to the abstract representation of its visual form, and that emotional content amplifies early stages of semantic analysis in much the same way as an instructed attention-enhancing processing task would.

Surprise falls between happiness and fear in the semantic space of emotions (Adolphs et al., 1996). It can be primed positively or negatively (Kim et al., 2004), and it is possible that positive and negative surprise are two distinct emotions (Vrticka et al., 2014). The main components that differentiate surprise from all other emotions are novelty and unpredictability (Soriano et al., 2015). Surprise is often accurately recognised in facial expression studies and, when mistaken, is confused most frequently with fear but rarely with happiness (Mill et al., 2009). Visual ERPs evoked by surprise in the face were similar to the strong negative deflection observed in response to fear, which seems to suggest either that negative-valence surprises are more expected than positive ones or that surprise is initially coded as negative (Petro et al., 2018). Our study revealed a robust deviation in the processing of the word ‘surprise’ in the left temporal region from an early stage until the end of the segment. Soriano et al. (2015) argue that while all other basic emotions can be effectively described on a valence-arousal scale, surprise requires additional dimensions (novelty and power) to be incorporated into existing scales, and the relationship between valence and arousal is regulated by the perceived uncertainty of valence (Brainerd, 2018). Studies on emotion-producing words often use a gross contrast of positive and negative words and neglect specific emotional categories. It is possible that the perceived ambiguity of the word ‘surprise’ required additional processing of the stimulus. The positive deflection in response to the word ‘surprise’ could be indicative of affective reappraisal, the primary function of which may be to increase alertness to unexpected events.

4.5. Limitations

Due to the nature of the experimental set-up, we were not able to collect direct measurements of how the emotional stimuli were perceived. It should be taken into consideration that, while responding to the category label was perceived as easier than copying a facial expression, the self-reported confidence rating for task success may not offer the most direct means of comparing emotional faces and words. Consequently, the differences revealed in the self-reports could be linked to the differences between mimicking an expression and translating a word into an emotion and then attempting to generate the associated expression. Thus, these differences may not stem solely from the differences between perceiving emotional faces and words.

5. Conclusions

The processing of different emotions was found to activate dissimilar mechanisms that provided an emotional processing of the situation. Self-reported confidence ratings of copying a facial expression or responding to a categorical emotional label were not associated with early ERP processing. Using a category label was almost always perceived as easier (with the exception of fear), but this did not translate into ERP responses. Brain responses to sad faces or words in the occipital and left temporal regions did not differ from those to neutral stimuli. As previously documented, the presentation of a fearful face elicited an early and strong posterior negativity. Contrary to expectations, both the happy face and the happy word elicited significantly more negative responses compared to neutral stimuli, in the form of parietal negativity. Both forms of surprise elicited a strong positive response in the left temporal brain region, which could reflect an appraisal process. The distinctive outcomes of the surprise stimuli indicate that in the study of emotional stimulus processing, it could be useful to utilise a broader range of emotional categories instead of relying solely on a valence-based division. The responses to happy, fearful, and angry emotional stimuli appeared early and were stable for both faces and words, which indicates that emotional content is processed rapidly in both mediums. The results of this study are consistent with the view that both types of affective stimuli, facial emotions and word meanings, are able to set off rapid processing of emotional stimuli, acting as early for words as they do for faces. This indicates that both mediums have a similar potential to carry emotional meaning in communication.

Acknowledgments

We wish to thank Svetlana Bolotova, Dariia Temirova, Jelena Gorbova and Ikechukwu Ofodile for assisting in data collection, Egils Avots for help with the set-up of the experimental program and Stênio Foerster for his valuable input. We also thank the reviewers for their thorough comments and insights.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

The data that support the findings of this study are openly available at OSF at https://doi.org/10.17605/OSF.IO/9YJGK, reference number 9YJGK.

Correction Statement

This article has been corrected with minor changes. These changes do not impact the academic content of the article.

References

- Adolphs, R., Damasio, H., Tranel, D., & Damasio, A. R. (1996). Cortical systems for the recognition of emotion in facial expressions. The Journal of Neuroscience, 16(23), 7678–7687. https://doi.org/10.1523/JNEUROSCI.16-23-07678.1996

- Batty, M., & Taylor, M. J. (2003). Early processing of the six basic facial emotional expressions. Cognitive Brain Research, 17(3), 613–620. https://doi.org/10.1016/S0926-6410(03)00174-5

- Bayer, M., & Schacht, A. (2014). Event-related brain responses to emotional words, pictures, and faces: A cross-domain comparison. Frontiers in Psychology, 5, 1106. https://doi.org/10.3389/fpsyg.2014.01106

- Beall, P. M., & Herbert, A. M. (2008). The face wins: stronger automatic processing of affect in facial expressions than words in a modified stroop task. Cognition and Emotion, 22(8), 1613–1642. https://doi.org/10.1080/02699930801940370

- Benjamini, Y., & Hochberg, Y. (1995). Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society: Series B (Methodological), 57(1), 289–300. https://doi.org/10.1111/j.2517-6161.1995.tb02031.x

- Bistricky, S. L., Atchley, R. A., Ingram, R., & O'Hare, A. (2014). Biased processing of sad faces: An ERP marker candidate for depression susceptibility. Cognition and Emotion, 28(3), 470–492. https://doi.org/10.1080/02699931.2013.837815

- Brainerd, C. J. (2018). The emotional-ambiguity hypothesis: a large-scale test. Psychological Science, 29(10), 1706–1715. https://doi.org/10.1177/0956797618780353

- Carretié, L., Mercado, F., Tapia, M., & Hinojosa, J. A. (2001). Emotion, attention, and the 'negativity bias', studied through event-related potentials. International Journal of Psychophysiology, 41(1), 75–85. https://doi.org/10.1016/S0167-8760(00)00195-1

- Causeur, D., Sheu, C.-F., Chu, M.-C., & Rufini, F. (2019). ERP: Significance analysis of event-related potentials data.

- Citron, F. M. (2012). Neural correlates of written emotion word processing: A review of recent electrophysiological and hemodynamic neuroimaging studies. Brain and Language, 122(3), 211–226. https://doi.org/10.1016/j.bandl.2011.12.007

- Codispoti, M., Bradley, M. M., & Lang, P. J. (2001). Affective reactions to briefly presented pictures. Psychophysiology, 38(3), 474–478. https://doi.org/10.1111/psyp.2001.38.issue-3

- Cowen, A. S., & Keltner, D. (2021). Semantic space theory: A computational approach to emotion. Trends in Cognitive Sciences, 25(2), 124–136. https://doi.org/10.1016/j.tics.2020.11.004

- Ellsworth, P. C. (2013). Appraisal theory: old and new questions. Emotion Review, 5(2), 125–131. https://doi.org/10.1177/1754073912463617

- Faul, F., Erdfelder, E., Buchner, A., & Lang, A.-G. (2009). Statistical power analyses using G*Power 3.1: tests for correlation and regression analyses. Behavior Research Methods, 41(4), 1149–1160. https://doi.org/10.3758/BRM.41.4.1149

- Fontaine, J. R. J., Scherer, K. R., Roesch, E. B., & Ellsworth, P. C. (2007). The world of emotions is not two-dimensional. Psychological Science, 18(12), 1050–1057. https://doi.org/10.1111/j.1467-9280.2007.02024.x

- Frühholz, S., Jellinghaus, A., & Herrmann, M. (2011). Time course of implicit processing and explicit processing of emotional faces and emotional words. Biological Psychology, 87(2), 265–274. https://doi.org/10.1016/j.biopsycho.2011.03.008

- Gillioz, C., Fontaine, J. R. J., Soriano, C., & Scherer, K. R. (2016). Mapping emotion terms into affective space: further evidence for a four-dimensional structure. Swiss Journal of Psychology, 75(3), 141–148. https://doi.org/10.1024/1421-0185/a000180

- Gratton, G., Coles, M. G., & Donchin, E. (1983). A new method for off-line removal of ocular artifact. Electroencephalography and Clinical Neurophysiology, 55(4), 468–484. https://doi.org/10.1016/0013-4694(83)90135-9

- Hahn, P., Castillo, S., & Cunningham, D. W. (2019). Fitting the style: The semantic space for emotions on stylized faces. In CASA '19: Proceedings of the 32nd International Conference on Computer Animation and Social Agents (pp. 21–24). ACM.

- Haxby, J. V., Hoffman, E. A., & Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends in Cognitive Sciences, 4(6), 223–233. https://doi.org/10.1016/S1364-6613(00)01482-0

- Helwig, N. E. (2018). eegkit: Toolkit for electroencephalography data.

- Herbert, C., Junghofer, M., & Kissler, J. (2008). Event related potentials to emotional adjectives during reading. Psychophysiology, 45(3), 487–498. https://doi.org/10.1111/j.1469-8986.2007.00638.x

- Herbert, C., Sfaerlea, A., & Blumenthal, T. (2013). Your emotion or mine: Labeling feelings alters emotional face perception – an ERP study on automatic and intentional affect labeling. Frontiers in Human Neuroscience, 7, 378. https://doi.org/10.3389/fnhum.2013.00378

- Hinojosa, J. A., Moreno, E. M., & Ferré, P. (2020). Affective neurolinguistics: Towards a framework for reconciling language and emotion. Language, Cognition and Neuroscience, 35(7), 813–839. https://doi.org/10.1080/23273798.2019.1620957

- Homan, R. W., Herman, J., & Purdy, P. (1987). Cerebral location of international 10-20 system electrode placement. Electroencephalography and Clinical Neurophysiology, 66(4), 376–382. https://doi.org/10.1016/0013-4694(87)90206-9

- Kawasaki, H., Adolphs, R., Kaufman, O., Damasio, H., Damasio, A. R., Granner, M., Bakken, H., Hori, T., & Howard, M. A. (2001). Single-neuron responses to emotional visual stimuli recorded in human ventral prefrontal cortex. Nature Neuroscience, 4(1), 15–16. https://doi.org/10.1038/82850

- Kazak, A. E. (2018). Editorial: journal article reporting standards. American Psychologist, 73(1), 1–2. https://doi.org/10.1037/amp0000263

- Kim, H., Somerville, L. H., Johnstone, T., Polis, S., Alexander, A. L., Shin, L. M., & Whalen, P. J. (2004). Contextual modulation of amygdala responsivity to surprised faces. Journal of Cognitive Neuroscience, 16(10), 1730–1745. https://doi.org/10.1162/0898929042947865

- Kissler, J., Assadollahi, R., & Herbert, C. (2006). Emotional and semantic networks in visual word processing: Insights from ERP studies. In Progress in Brain Research (Vol. 156, pp. 147–183). Elsevier.

- Kissler, J., Herbert, C., Peyk, P., & Junghofer, M. (2007). Buzzwords: early cortical responses to emotional words during reading. Psychological Science, 18(6), 475–480. https://doi.org/10.1111/j.1467-9280.2007.01924.x

- Kissler, J., Herbert, C., Winkler, I., & Junghofer, M. (2009). Emotion and attention in visual word processing – an ERP study. Biological Psychology, 80(1), 75–83. https://doi.org/10.1016/j.biopsycho.2008.03.004

- Kreegipuu, K., Kuldkepp, N., Sibolt, O., Toom, M., Allik, J., & Näätänen, R. (2013). vMMN for schematic faces: Automatic detection of change in emotional expression. Frontiers in Human Neuroscience, 7, 714. https://doi.org/10.3389/fnhum.2013.00714

- Kuldkepp, N., Kreegipuu, K., Raidvee, A., Näätänen, R., & Allik, J. (2013). Unattended and attended visual change detection of motion as indexed by event-related potentials and its behavioral correlates. Frontiers in Human Neuroscience, 7, 476. https://doi.org/10.3389/fnhum.2013.00476

- Kutas, M., & Federmeier, K. D. (2011). Thirty years and counting: finding meaning in the N400 component of the event-related brain potential (ERP). Annual Review of Psychology, 62(1), 621–647. https://doi.org/10.1146/psych.2011.62.issue-1

- Kuznetsova, A., Brockhoff, P. B., & Christensen, R. H. B. (2017). lmerTest package: Tests in linear mixed effects models. Journal of Statistical Software, 82(13), 1–26. https://doi.org/10.18637/jss.v082.i13

- Lange, J., Heerdink, M. W., & van Kleef, G. A. (2022). Reading emotions, reading people: Emotion perception and inferences drawn from perceived emotions. Current Opinion in Psychology, 43(1), 85–90. https://doi.org/10.1016/j.copsyc.2021.06.008

- Lenth, R. V. (2021). emmeans: Estimated marginal means, aka least-squares means. R package version 1.6.1. https://CRAN.R-project.org/package=emmeans

- Lindquist, K. A., Wager, T. D., Kober, H., Bliss-Moreau, E., & Barrett, L. F. (2012). The brain basis of emotion: A meta-analytic review. The Behavioral and Brain Sciences, 35(3), 121–143. https://doi.org/10.1017/S0140525X11000446

- Matsumoto, D., & Ekman, P. (1988). Japanese and Caucasian facial expressions of emotion (JACFEE). San Francisco, CA: Intercultural and Emotion Research Laboratory, Department of Psychology, San Francisco State University.

- Mill, A., Allik, J., Realo, A., & Valk, R. (2009). Age-related differences in emotion recognition ability: A cross-sectional study. Emotion, 9(5), 619–630. https://doi.org/10.1037/a0016562

- Moors, A. (2010). Automatic constructive appraisal as a candidate cause of emotion. Emotion Review, 2(2), 139–156. https://doi.org/10.1177/1754073909351755

- Niedenthal, P. M. (2007). Embodying emotion. Science, 316(5827), 1002–1005. https://doi.org/10.1126/science.1136930

- Ochsner, K. N., & Gross, J. J. (2005). The cognitive control of emotion. Trends in Cognitive Sciences, 9(5), 242–249. https://doi.org/10.1016/j.tics.2005.03.010

- Olofsson, J. K., Nordin, S., Sequeira, H., & Polich, J. (2008). Affective picture processing: An integrative review of ERP findings. Biological Psychology, 77(3), 247–265. https://doi.org/10.1016/j.biopsycho.2007.11.006

- Öner, S. (2018). Neural substrates of cognitive emotion regulation: A brief review. Psychiatry and Clinical Psychopharmacology, 28(1), 91–96. https://doi.org/10.1080/24750573.2017.1407563

- Palermo, R., O'Connor, K. B., Davis, J. M., Irons, J., & McKone, E. (2013). New tests to measure individual differences in matching and labelling facial expressions of emotion, and their association with ability to recognise vocal emotions and facial identity. PLOS ONE, 8(6), e68126. https://doi.org/10.1371/journal.pone.0068126

- Pessoa, L. (2008). On the relationship between emotion and cognition. Nature Reviews Neuroscience, 9(2), 148–158. https://doi.org/10.1038/nrn2317

- Petro, N. M., Tong, T. T., Henley, D. J., & Neta, M. (2018). Individual differences in valence bias: fMRI evidence of the initial negativity hypothesis. Social Cognitive and Affective Neuroscience, 13(7), 687–698. https://doi.org/10.1093/scan/nsy049

- Pochedly, J. T., Widen, S. C., & Russell, J. A. (2012). What emotion does the 'facial expression of disgust' express?. Emotion, 12(6), 1315–1319. https://doi.org/10.1037/a0027998

- Ponz, A., Montant, M., Liegeois-Chauvel, C., Silva, C., Braun, M., Jacobs, A. M., & Ziegler, J. C. (2014). Emotion processing in words: A test of the neural re-use hypothesis using surface and intracranial EEG. Social Cognitive and Affective Neuroscience, 9(5), 619–627. https://doi.org/10.1093/scan/nst034

- Rellecke, J., Palazova, M., Sommer, W., & Schacht, A. (2011). On the automaticity of emotion processing in words and faces: Event-related brain potentials evidence from a superficial task. Brain and Cognition, 77(1), 23–32. https://doi.org/10.1016/j.bandc.2011.07.001

- Russell, J. A. (1980). A circumplex model of affect. Journal of Personality and Social Psychology, 39(6), 1161–1178. https://doi.org/10.1037/h0077714

- Russell, J. A., & Bullock, M. (1985). Multidimensional scaling of emotional facial expressions: Similarity from preschoolers to adults. Journal of Personality and Social Psychology, 48(5), 1290–1298. https://doi.org/10.1037/0022-3514.48.5.1290

- Russell, J. A., Lewicka, M., & Niit, T. (1989). A cross-cultural study of a circumplex model of affect. Journal of Personality and Social Psychology, 57(5), 848–856. https://doi.org/10.1037/0022-3514.57.5.848

- Schacht, A., & Sommer, W. (2009). Emotions in word and face processing: Early and late cortical responses. Brain and Cognition, 69(3), 538–550. https://doi.org/10.1016/j.bandc.2008.11.005

- Scherer, K. R. (1999). Appraisal theory. In T. Dalgleish & M. J. Power (Eds.), Handbook of cognition and emotion (pp. 637–663). John Wiley & Sons Ltd.

- Scherer, K. R., & Fontaine, J. R. J. (2019). The semantic structure of emotion words across languages is consistent with componential appraisal models of emotion. Cognition and Emotion, 33(4), 673–682. https://doi.org/10.1080/02699931.2018.1481369

- Scherer, K. R., Schorr, A., & Johnstone, T. (2001). Appraisal processes in emotion: Theory, methods, research. Series in affective science. Oxford University Press.

- Schupp, H. T., Öhman, A., Junghöfer, M., Weike, A. I., Stockburger, J., & Hamm, A. O. (2004). The facilitated processing of threatening faces: An ERP analysis. Emotion, 4(2), 189–200. https://doi.org/10.1037/1528-3542.4.2.189

- Soriano, C. M., Fontaine, J. R. J., & Scherer, K. R. (2015). Surprise in the GRID. Review of Cognitive Linguistics. Published Under the Auspices of the Spanish Cognitive Linguistics Association, 13(2), 436–460. https://doi.org/10.1075/rcl

- Tempel, K., Kuchinke, L., Urton, K., Schlochtermeier, L. H., Kappelhoff, H., & Jacobs, A. M. (2013). Effects of positive pictograms and words: An emotional word superiority effect?. Journal of Neurolinguistics, 26(6), 637–648. https://doi.org/10.1016/j.jneuroling.2013.05.002

- Thiruchselvam, R., Blechert, J., Sheppes, G., Rydstrom, A., & Gross, J. J. (2011). The temporal dynamics of emotion regulation: An EEG study of distraction and reappraisal. Biological Psychology, 87(1), 84–92. https://doi.org/10.1016/j.biopsycho.2011.02.009

- Uusberg, A., Uibo, H., Kreegipuu, K., & Allik, J. (2013). EEG alpha and cortical inhibition in affective attention. International Journal of Psychophysiology, 89(1), 26–36. https://doi.org/10.1016/j.ijpsycho.2013.04.020

- van Berkum, J. J. A. (2019). Language comprehension and emotion: Where are the interfaces, and who cares? In G. I. de Zubicaray & N. O. Schiller (Eds.), The Oxford Handbook of Neurolinguistics (pp. 1–34). Oxford University Press.

- Vrticka, P., Lordier, L., Bediou, B., & Sander, D. (2014). Human amygdala response to dynamic facial expressions of positive and negative surprise. Emotion, 14(1), 161–169. https://doi.org/10.1037/a0034619

- Wickham, H., Averick, M., Bryan, J., Chang, W., McGowan, L., François, R., Grolemund, G., Hayes, A., Henry, L., Hester, J., Kuhn, M., Pedersen, T., Miller, E., Bache, S., Müller, K., Ooms, J., Robinson, D., Seidel, D., Spinu, V., …Yutani, H. (2019). Welcome to the tidyverse. Journal of Open Source Software, 4(43), 1686. https://doi.org/10.21105/joss

- Widen, S. C., & Russell, J. A. (2013). Children's recognition of disgust in others. Psychological Bulletin, 139(2), 271–299. https://doi.org/10.1037/a0031640

- Zajonc, R. B. (1980). Feeling and thinking: Preferences need no inferences. American Psychologist, 35(2), 151–175. https://doi.org/10.1037/0003-066X.35.2.151

- Zhang, J., Wu, C., Meng, Y., & Yuan, Z. (2017). Different neural correlates of emotion-label words and emotion-laden words: An ERP study. Frontiers in Human Neuroscience, 11, 455. https://doi.org/10.3389/fnhum.2017.00455