ABSTRACT

Humans recognise reddish-coloured faces as angry. However, does facial colour also affect “implicit” facial expression perception of which humans are not explicitly aware? In this study, we investigated the effects of facial colour on implicit facial expression perception. The experimental stimuli were “hybrid faces”, in which the low-frequency component of the neutral facial expression image was replaced with the low-frequency component of the facial expression image of happiness or anger. In Experiment 1, we confirmed that the hybrid face stimuli were perceived as neutral and, therefore, supported implicit facial expression perception. In Experiment 2, the hybrid face stimuli were adjusted to natural and reddish facial colours, and their friendliness ratings were compared. The results showed that the expression of happiness was rated as more friendly than the expression of anger. In addition, the expression of happiness was rated as friendlier when the low-frequency happy component was red, but the friendliness rating of the expression of anger did not change when it was presented in red. In Experiment 3, we affirmed the implicit facial expression perception even in reddish colours. These results suggest that facial colour modulates the perception of implicit facial expressions in hybrid facial stimuli.

Human faces are social stimuli with important meanings. Facial information processing in daily life does not rely on shape alone; facial surface information, such as facial colour and reflection characteristics, also plays an important role in face recognition. Previous studies have reported relationships between facial colour and perceived facial expression. Further, physiological studies have demonstrated an impact of emotional state on human facial colour. For example, Drummond studied how facial expressions affect facial blood flow; his findings supported the existence of “flushes with joy” (Drummond, 1994) and “flushes with rage” (Drummond & Quah, 2001). Additionally, several studies have indicated that facial colour also influences the perception of facial expressions (Kato et al., 2022; Minami et al., 2018; Thorstenson et al., 2022), such that reddish-coloured faces are judged as angrier or happier than faces with other tones (Nakajima et al., 2017). Thus, facial colour is a research topic of interest because it affects not only the perception of facial expression but also perceptions of age, health condition, and attractiveness (Jones et al., 2004; Stephen et al., 2009; Tarr et al., 2001).

Such studies have examined facial colour in relation to “explicit” facial expressions, namely expressions that are clearly visible on the face. However, we are particularly concerned with the “implicit” effect of facial colour on facial expression perception, which may offer deeper insight into emotional processing in the human visual system. Implicit emotions can be defined as emotions processed without conscious supervision or explicit intentions. That is, emotional processing can occur implicitly, without individuals being consciously aware of their engagement in such processing (Koole & Rothermund, 2011). Thus, such emotional information may involve facial colour at an unconscious level. Unlike explicit emotions, implicit emotions are often expressed indistinctly and may sometimes cause uncertainty for the perceiver. Although not clearly expressed, these latent emotions are still conveyed through various facial features, and these features can be perceived unconsciously. Bar et al. (2006) stated that first impressions can be formed quickly based on information available within the first 39 ms. These perceptions are created by structural aspects of the face that carry information about an individual’s emotional state or mood. Additionally, Winkielman and Berridge (2004) suggested that individuals are capable of recognising others’ emotional expressions at an implicit or subconscious level. Therefore, studying implicit emotional expression has several advantages for understanding facial expressions more universally.

To test the implicit processing of facial expressions, we focused on “hybrid” faces, which have been used as stimuli to study the hidden emotions conveyed by the face (Laeng et al., 2010). In these faces, a facial expression is shown in the low spatial frequency component of the image, while the remaining frequency components are those of a neutral expression of the same face. In a previous study, participants judged these hybrid faces as having neutral expressions, but they rated hybrid happy faces (i.e. neutral faces with the low-frequency content of a happy face) as friendlier than hybrid angry faces (Laeng et al., 2010). In an electroencephalography study of emotional hybrid faces, Prete et al. (2015) confirmed that these hybrid faces could elicit emotion-related and face-related components of event-related potentials such as P1, N170, and P2. Their findings indicate that hybrid faces are appropriate stimuli for studying implicit emotions.

Therefore, in this study, we employed “hybrid faces” to elicit an effect of the implicit facial expression and added facial colour (made the faces reddish) to the emotional hybrid faces to investigate whether such colour affected the perception of implicit happy and angry expressions. We hypothesised that facial colour influences not only the perception of explicit facial expressions but also implicit facial expressions, which are hidden on the face.

Experiment 1: Emotion labelling

This experiment was conducted to evaluate whether the specific hidden expressions in hybrid faces were considered neutral, as in a previous study (Laeng et al., 2010).

Participants

Thirty-four participants (16 women and 18 men; mean age = 22.9 years, SD = 1.5) completed this experiment. The sample size was determined based on a previous study (Prete et al., 2018) that recruited 30 participants. The participants did not know the purpose of the experiment. According to self-report, all participants had normal or corrected-to-normal visual acuity, and none had colour blindness. They were all students at the Toyohashi University of Technology and provided written informed consent. The experimental procedures were approved by the Committee for Human Research at Toyohashi University of Technology, and the experiment was conducted strictly in accordance with the approved guidelines of the committee and with the Declaration of Helsinki. This study was not preregistered.

The data of four participants (one female) were excluded from the sample because their ratings deviated from the mean by (1) more than two standard deviations for at least one expression, following Prete et al. (2018), or (2) more than one standard deviation for more than one expression, a stricter criterion intended to address outliers across multiple emotional expressions.
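The two exclusion rules can be expressed compactly. The following is a minimal sketch rather than the authors' analysis script: the data layout (one mean rating per participant and expression), the function name, and the use of the sample standard deviation are assumptions made for illustration.

```python
from statistics import mean, stdev


def excluded_participants(ratings):
    """Return the ids of participants flagged by either exclusion rule.

    `ratings` maps a participant id to {expression: mean rating}.
    This layout is hypothetical; the article does not describe its data format.
    """
    expressions = sorted({e for r in ratings.values() for e in r})

    # Group mean and sample SD for each expression (sample SD is an assumption).
    stats = {}
    for e in expressions:
        values = [r[e] for r in ratings.values()]
        stats[e] = (mean(values), stdev(values))

    flagged = []
    for pid, r in ratings.items():
        # Absolute deviation from the group mean, in SD units.
        z = [abs(r[e] - stats[e][0]) / stats[e][1]
             for e in expressions if stats[e][1] > 0]
        beyond_two = sum(v > 2 for v in z)  # rule 1: > 2 SD on at least one expression
        beyond_one = sum(v > 1 for v in z)  # rule 2: > 1 SD on more than one expression
        if beyond_two >= 1 or beyond_one > 1:
            flagged.append(pid)
    return flagged
```

Because the group mean and standard deviation are computed with the outlying participants included, a single extreme value inflates the standard deviation; whether the original analysis recomputed the statistics after exclusion is not stated.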

Apparatus

Participants observed the stimuli in full-screen mode, centred on a 32-inch liquid crystal display (Display++, Cambridge Research Systems Ltd., Rochester, Kent, UK) with a resolution of pixels. The display was linearly calibrated using built-in gamma correction; the white point, measured with a spectroradiometer (SR-3AR, TOPCON, Japan), had chromaticity x = .276, y = .289 and luminance Y = 125.2 cd/m². Each participant was required to sit on a chair in a dark booth with moderate lighting, with their head fixed on a chin rest at 78 cm from the display.

Stimuli

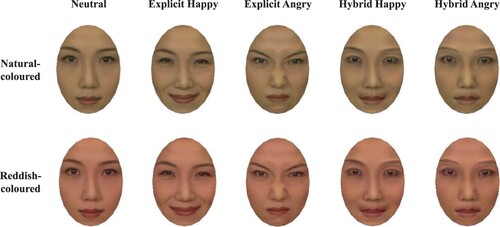

Figure 1 shows examples of the stimuli. The stimuli were created from photographs contained in the ATR Facial Expression Image Database (Ogawa et al., 1997), a database of emotional and neutral RGB colour face images. Photographs of the frontal view of three female and three male faces with happy, angry, and neutral expressions were selected.

Figure 1. Example Images of the Stimuli.

Note. The top and bottom rows indicate the natural- and reddish-coloured faces, respectively.

The spatial frequency content of each face image was filtered using MATLAB software (MathWorks, Natick, MA, USA) to generate hybrid faces. The original emotional (happy and angry) and neutral images were filtered into low and high spatial-frequency versions, with cutoffs specified in cycles/image (Laeng et al., 2010). Then, the high-pass neutral face was combined with the low-pass emotional component of the same individual's face to obtain two hybrid faces containing either a happy or an angry expression (examples in the top row of Figure 1).
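The construction of a hybrid face can be illustrated with a small sketch. It is not the MATLAB procedure used here: a spatial-domain box blur stands in for the frequency-domain filter, images are plain 2-D lists of grey values, and the `radius` parameter is a hypothetical substitute for the cutoff in cycles/image.

```python
def box_blur(image, radius):
    """Low-pass a 2-D list of floats with a mean filter.

    The window is (2 * radius + 1) pixels wide and is truncated at the image
    borders, so edge pixels average over in-bounds neighbours only.
    """
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += image[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def hybrid(neutral, emotional, radius):
    """Combine the low-pass part of `emotional` with the high-pass part of `neutral`.

    The high-pass part is taken as the neutral image minus its own low-pass version.
    """
    low_emotional = box_blur(emotional, radius)
    low_neutral = box_blur(neutral, radius)
    h, w = len(neutral), len(neutral[0])
    return [[low_emotional[y][x] + (neutral[y][x] - low_neutral[y][x])
             for x in range(w)]
            for y in range(h)]
```

With this decomposition, passing the same image as both arguments returns the original image, which is a convenient sanity check on the low-pass and high-pass split.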

We then collected 30 images as stimuli for this experiment, namely 18 original face images with explicit expressions (happy, angry, or neutral) and 12 hybrid faces with hidden expressions (happy or angry). External features such as ears, hairline, and neck were removed from the face images, and the images were cropped to an elliptical shape using MATLAB. In addition, the images were normalised for mean luminance and root-mean-square contrast using MATLAB with the SHINE toolbox (Willenbockel et al., 2010). The mean ± SD CIELAB values of the natural-coloured stimuli were L* = 48.92 ± 8.18, a* = 7.49 ± 3.96, and b* = 20.69 ± 6.71.
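The normalisation step can be sketched as a linear rescaling of pixel luminance. This is an illustration only, not the SHINE toolbox: root-mean-square contrast is taken here to be the standard deviation of luminance (one common definition), the target values are hypothetical, and no clipping to the displayable range is applied.

```python
from statistics import fmean, pstdev


def match_mean_and_rms(pixels, target_mean, target_rms):
    """Rescale luminance values so their mean and SD equal the targets.

    `pixels` is a flat list of luminance values for the face region.
    """
    m = fmean(pixels)
    s = pstdev(pixels)
    if s == 0:
        # A uniform image has no contrast to rescale; return the target mean.
        return [float(target_mean)] * len(pixels)
    return [(p - m) / s * target_rms + target_mean for p in pixels]
```

Applying the same targets to every image equates the set for mean luminance and contrast, so that differences in ratings are less likely to reflect these low-level properties.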

Procedure

Each trial began with a blank inter-stimulus interval screen for one second, followed by a fixation cross for one second, after which a face stimulus was presented. The participants were asked to judge the stimulus as one of three emotional expressions (1: happy, 2: neutral, or 3: angry) using a numerical keypad. Each participant was required to sit on a chair in a dark booth with moderate lighting, with their head fixed on a chin rest at a distance of 78 cm from the display. Stimulus size was defined in degrees of visual angle (and the corresponding size in pixels). Each stimulus was displayed on the screen until the participant pressed a key, and then a new trial was presented. It was explained to each participant that they would see a group of faces, but not that some faces contained hidden expressions. The participants were given verbal instructions before the experiment (no text instructions were shown on the screen). We also provided a printed guideline listing the response options and the corresponding numerical keypad keys to help the participants check their responses easily. Each stimulus was presented three times, and the experiment consisted of 90 trials. The experiment took approximately 15 min to complete and was controlled using MATLAB with Psychtoolbox-3 (Brainard, 1997; Kleiner et al., 2007; Pelli, 1997).
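The relationship between physical stimulus size, viewing distance, and visual angle can be written out as follows. This is a generic sketch: only the 78 cm viewing distance comes from the procedure above, and the function names are illustrative.

```python
import math


def visual_angle_deg(size_cm, distance_cm):
    """Visual angle (degrees) subtended by an object of `size_cm` at `distance_cm`."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def size_for_angle_cm(angle_deg, distance_cm):
    """Physical size (cm) needed to subtend `angle_deg` at `distance_cm`."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)
```

For example, `size_for_angle_cm(angle, 78.0)` gives the on-screen size in centimetres for a desired angle at the viewing distance used here; converting that size to pixels additionally requires the display's pixel pitch.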

Results and discussion

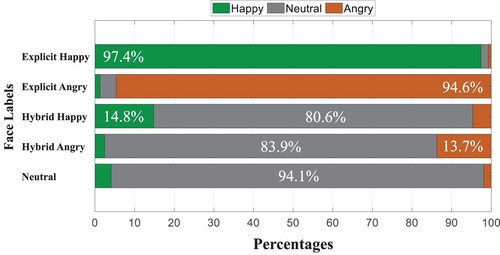

Figure 2 shows the average selection proportions of emotional labels for each facial expression. For explicit facial expressions, the correct label was selected far more often than for hybrid faces, for both happiness and anger. For hybrid faces, the correct label was selected only infrequently, and these faces were mostly labelled as neutral. A binomial test with a 33% chance level of selection confirmed that the correct label for hybrid faces was selected less than 33% of the time for both happiness and anger. These results suggest that the hybrid stimuli did not convey a specific clear expression, although emotional expressions were present in the hybrid faces. That is, emotions were processed while viewing these faces, but they appear to have been weakened at the conscious level. Although the stimuli were RGB colour images, our findings replicated the results of a previous study that used grayscale images (Laeng et al., 2010).
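A one-sided binomial test against a chance level of one third can be sketched with the exact lower-tail probability. The counts in the usage note are hypothetical, and how trials were pooled across participants for the reported test is not stated, so this illustrates the test itself rather than reproducing the analysis.

```python
from math import comb


def binom_lower_tail(k, n, p):
    """Exact P(X <= k) for X ~ Binomial(n, p).

    Used as the one-sided p-value for 'selected less often than chance'.
    """
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k + 1))
```

As a usage example, `binom_lower_tail(40, 540, 1 / 3)` would give the probability of observing 40 or fewer correct labels in 540 trials if responses were at chance; both numbers are placeholders chosen for illustration.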

Experiment 2: Friendliness rating

This experiment was carried out to clarify whether happy hybrid faces are rated as more friendly than angry hybrid faces and to investigate the effect of facial colour on the expressions in hybrid faces. Experiment 2 was conducted after Experiment 1 following a 10-minute break.

Participants

The participants were the same as in Experiment 1.

Apparatus

The apparatus was the same as in Experiment 1.

Stimuli

We selected the 12 natural-coloured hybrid faces used in Experiment 1. The colour of each stimulus was adjusted to be more reddish to investigate the effect of facial colour on the expressions in the hybrid faces. In a previous study, the maximum value of the CIELAB parameter a* was increased by 12 units to determine the influence of reddish-coloured faces on the perception of expression (Nakajima et al., 2017). Thus, we also increased the a* of the natural-coloured hybrid faces by 12 units using MATLAB to generate 12 new reddish-coloured hybrid images (examples in the bottom row of Figure 1). This manipulation was applied only to facial areas. In addition, for each type of facial colour, the images were normalised for mean luminance and root-mean-square contrast using MATLAB with the SHINE toolbox (Willenbockel et al., 2010). In total, 24 images were used in this experiment. The mean ± SD CIELAB values of the reddish-coloured stimuli were L* = 48.94 ± 8.19, a* = 18.58 ± 6.20, and b* = 20.59 ± 6.81. Notably, the values deviated slightly from the targets because of the luminance normalisation.
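The a* shift can be illustrated for a single pixel. This sketch assumes sRGB primaries and a D65 white point, which differ from the calibrated display described in the Apparatus section, and it clips out-of-gamut results; the function names are illustrative, and the 12-unit default is the only value taken from the text.

```python
WHITE = (0.95047, 1.0, 1.08883)  # D65 reference white (assumption)
M = ((0.4124564, 0.3575761, 0.1804375),
     (0.2126729, 0.7151522, 0.0721750),
     (0.0193339, 0.1191920, 0.9503041))
M_INV = ((3.2404542, -1.5371385, -0.4985314),
         (-0.9692660, 1.8760108, 0.0415560),
         (0.0556434, -0.2040259, 1.0572252))
DELTA = 6 / 29


def srgb_to_linear(c):
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c):
    return 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055


def _f(t):
    return t ** (1 / 3) if t > DELTA ** 3 else t / (3 * DELTA ** 2) + 4 / 29


def _f_inv(t):
    return t ** 3 if t > DELTA else 3 * DELTA ** 2 * (t - 4 / 29)


def rgb_to_lab(rgb):
    """Convert an sRGB triplet in [0, 1] to CIELAB (L*, a*, b*)."""
    lin = [srgb_to_linear(c) for c in rgb]
    xyz = [sum(m * c for m, c in zip(row, lin)) for row in M]
    fx, fy, fz = (_f(v / w) for v, w in zip(xyz, WHITE))
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def lab_to_rgb(lab):
    """Convert CIELAB back to sRGB in [0, 1], clipping out-of-gamut values."""
    L, a, b = lab
    fy = (L + 16) / 116
    fx, fz = fy + a / 500, fy - b / 200
    xyz = [w * _f_inv(f) for f, w in zip((fx, fy, fz), WHITE)]
    lin = [sum(m * c for m, c in zip(row, xyz)) for row in M_INV]
    return tuple(linear_to_srgb(min(1.0, max(0.0, c))) for c in lin)


def redden(rgb, delta_a=12.0):
    """Increase a* by `delta_a` units while keeping L* and b* unchanged."""
    L, a, b = rgb_to_lab(rgb)
    return lab_to_rgb((L, a + delta_a, b))
```

Applying `redden` only to pixels inside a face mask would correspond to restricting the manipulation to facial areas. When a shifted colour falls outside the gamut, clipping makes the realised a* change smaller than the requested one.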

Procedure

The procedure was the same as in Experiment 1, except the participants were asked to rate “how friendly” the stimulus appeared, using a numerical keypad with a five-point scale (1: most unfriendly, 2: unfriendly, 3: neutral, 4: friendly, or 5: most friendly). The experiment was composed of 72 trials. Nothing was mentioned to the participants regarding colour or hidden expressions.

Results and discussion

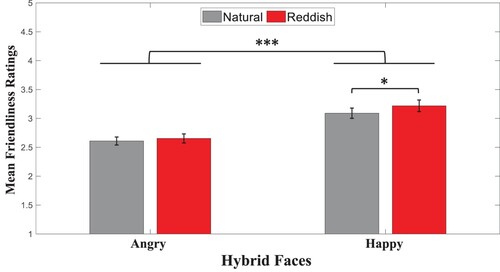

The data from four participants were excluded from the sample based on the criteria of Experiment 1. Figure 3 shows the mean friendliness rating for each hybrid face type. We performed a two-way repeated-measures analysis of variance for the expression and colour conditions. The main effect of expression was significant, such that happy hybrid faces were rated as more friendly than angry hybrid faces in both natural and reddish colours. A previous study reported that hybrid faces with positive and negative emotions received ratings toward the friendly and unfriendly ranges of the scale, respectively (Laeng et al., 2010). Although there was no significant main effect of colour, there was a significant interaction. A simple main effect in the happy hybrid faces showed that happy hybrid faces with a reddish colour were rated as more friendly than were naturally coloured faces. These findings are consistent with previous studies reporting that feelings of happiness cause the face of the individual expressing the feeling to become reddish (Alkawaz et al., 2015; Drummond, 1994).
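For a 2 × 2 fully within-participant design such as this one, each effect has one degree of freedom, so its F ratio equals the squared one-sample t statistic of the corresponding per-participant contrast. The sketch below uses that equivalence; the data layout and function names are assumptions, and it is not the software used for the reported analysis.

```python
from math import sqrt
from statistics import mean, stdev


def contrast_f(scores):
    """F(1, n - 1) for a one-df within-participant contrast (squared one-sample t)."""
    n = len(scores)
    sd = stdev(scores)
    if sd == 0:
        raise ValueError("contrast scores have zero variance; F is undefined")
    t = mean(scores) / (sd / sqrt(n))
    return t * t


def rm_anova_2x2(data):
    """F ratios for expression, colour, and their interaction.

    `data` holds one tuple per participant with mean friendliness ratings in the
    order (happy natural, happy reddish, angry natural, angry reddish).
    """
    expression = [(hn + hr) - (an + ar) for hn, hr, an, ar in data]
    colour = [(hr + ar) - (hn + an) for hn, hr, an, ar in data]
    interaction = [(hr - hn) - (ar - an) for hn, hr, an, ar in data]
    return {
        "expression": contrast_f(expression),
        "colour": contrast_f(colour),
        "interaction": contrast_f(interaction),
    }
```

Each F ratio is evaluated against an F distribution with 1 and n − 1 degrees of freedom, where n is the number of retained participants. The simple main effect of colour within happy faces corresponds to the same computation applied to the per-participant difference between reddish and natural happy ratings.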

Figure 3. Mean Friendliness Ratings for each Hybrid Face Type.

Note. The horizontal axis indicates each face condition. The vertical axis indicates the mean friendliness ratings. Error bars indicate standard errors and asterisks indicate significant differences by analysis of variance.

Although a previous study stated that reddish-coloured faces enhance anger perception (Nakajima et al., 2017), our results showed that angry hybrid faces with a reddish colour were not rated as less friendly than those with a natural colour. Prete et al. (2018) also used the task of rating “how friendly” a face appeared to quantify the emotional aftereffects of both happy and angry hybrid faces. They reported that the perceptual aftereffect for happy hybrid faces tended to be significant, whereas no such effect was found for angry hybrid faces. Therefore, we suggest that the friendliness ratings of angry hybrid faces were not affected despite the change in facial colour.

Experiment 3: Emotion labelling for reddish faces

Experiment 2 showed the effect of reddish colour on the perception of implicit facial expressions. However, we wondered whether the emotion labelling results would be the same using reddish stimuli in the paradigm of Experiment 1. Thus, we conducted Experiment 3.

Participants

We recruited 19 new participants (6 women and 13 men), none of whom had participated in Experiments 1 or 2. The data of three participants (one female) were excluded from the sample using the same criteria as in Experiment 1.

Apparatus

Same as in Experiment 1.

Stimuli

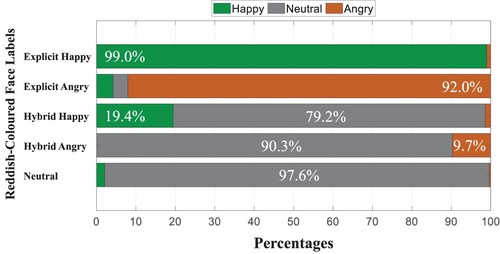

Same as in Experiment 1, except that the reddish face stimuli were used.

Procedure

Same as Experiment 1.

Results and discussion

The average selection proportions of emotional labels were computed for each facial expression. Neutral was selected as the expression for 79.2% of happy hybrid faces and 90.3% of angry hybrid faces. In addition, a binomial test with a 33% chance level of selection confirmed that the average proportion of selecting the correct label for hybrid faces was less than 33% for both happiness and anger (p < .001). These results showed that, similar to Experiment 1, the participants could not correctly judge the facial expressions of the hybrid faces even when the faces were reddish. Taken together with Experiment 2, this indicates that implicit facial expression perception of a reddish face affects friendliness ratings even when the faces are perceived as neutral.

General discussion

In Experiment 1, we asked participants to select emotional labels for face stimuli. Our findings showed that the hybrid faces were judged to have neutral expressions, as the angry or happy label was assigned to such faces only infrequently (Figure 2). Then, in Experiment 2, we used five-point ratings of friendliness, similar to previous studies (Laeng et al., 2010; Prete et al., 2015, 2018), to show that happy hybrid faces with a reddish colour were rated as more friendly than those with a natural colour (Figure 3), even though the reddish hybrid faces were perceived as neutral in Experiment 3. These results suggest that facial expression perception based on facial colour occurs at an implicit level.

According to Laeng et al. (2010), detecting hidden facial expressions in a low spatial frequency range is enough to create a robust first impression of an individual’s personality. Specifically, on the friendliness scale, hybrid faces with negative expressions such as anger were rated in the unfriendly range, whereas those with positive expressions such as happiness were rated in the friendly range. Our findings support Laeng et al.’s results in that we found a difference in friendliness ratings between hybrid faces with positive and negative expressions. Moreover, we found that the friendliness ratings for different expressions were very close to each other because the emotional expressions in hybrid faces were detected implicitly at a subliminal level (Laeng et al., 2010; Prete et al., 2015). Thus, we replicated the results of previous studies while manipulating facial colour in both the emotion labelling and friendliness rating tasks, suggesting that facial colour influences both explicit and implicit processing of facial expressions in the visual system.

Our findings show that reddish colour affects the impression of happy expressions in hybrid faces in terms of friendliness (Figure 3) and emotion labelling (comparing the labelling results of Experiments 1 and 3). Drummond (1994) found that changes in blood flow in the forehead and cheek support the popular notion that the face “flushes with joy”. Alkawaz et al. (2015) also found a robust connection between facial colour triggered by blood haemoglobin oxygenation and emotional expressions based on texture. Their results indicated that happiness makes a face slightly red, which supports our findings.

However, our results show that reddish colour does not affect the impression of angry expressions in hybrid faces. Several explanations may account for this finding. First, this study employed friendliness ratings to measure the effect of two specific expressions (happy and angry) at the implicit stage. We speculate that friendliness and happiness are well correlated, whereas friendliness and anger are not. Thus, for example, if the scale were changed to a liking scale (like versus dislike), reddish angry hybrid faces might be liked less, i.e. disliked more. Previous studies have also reported the unique influence of angry expressions on approach-avoidance behaviour (Paulus & Wentura, 2016; Thorstenson & Pazda, 2021). Therefore, further investigation is required to clarify this interesting aspect of angry expressions.

Second, this finding may arise because interference among different cerebral areas, when they process contrasting emotional information at different spatial frequencies, reduces the emotional aftereffect. Specifically, when looking at a hybrid face, emotional information at low frequencies is processed through a rapid subcortical route (Tamietto & de Gelder, 2010). In contrast, neutral information at high frequencies is processed via a slow cortical route (Livingstone & Hubel, 1988). Based on these findings, Prete et al. (2018) explained that when looking at an angry hybrid face, cortical activity interferes with subcortical activity, even though these separate pathways are activated by different inputs and have contrasting information structures. Here, we speculate that neutral expressions could have been perceived as positive information in this study. That is, the aforementioned interference causes a reduction in sensitivity to negative emotions. Thus, we suggest that a change in facial colour does not influence the friendliness ratings of negative hybrid faces, as evidenced by the lack of an emotional aftereffect.

This study has a few limitations. First, we used only Asian faces as visual stimuli. Nakakoga et al. (2019) suggested that the effect of facial colour on expression does not differ among cultures, but they also reported that this result was only confirmed in Asia. Second, Elfenbein and Ambady (2002) demonstrated that the accuracy of emotion recognition is higher when emotions are expressed and recognised by members of the same race. Our findings suggest that the effect of facial colour on implicit facial expressions could help us understand facial expressions and their processing at a more universal level. Therefore, future studies should investigate the effect of facial colour on implicit facial expressions in other ethnic groups.

Conclusion

Although Experiment 1 and Experiment 3 reported that the hybrid faces with hidden expressions were labelled as neutral, Experiment 2 showed that the hybrid faces with happy expressions were rated as more friendly than the hybrid faces with angry expressions. Importantly, happy hybrid faces with a reddish colour were rated as more friendly than happy hybrid faces with natural colour. Therefore, we conclude that facial colour affects implicit facial expressions in positive hybrid faces.

Data availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Alkawaz, M. H., Mohamad, D., Saba, T., Basori, A. H., & Rehman, A. (2015). The correlation between blood oxygenation effects and human emotion towards facial skin colour of virtual human. 3D Research, 6, 13. https://doi.org/10.1007/s13319-015-0044-9

- Bar, M., Neta, M., & Linz, H. (2006). Very first impressions. Emotion, 6(2), 269–278. https://doi.org/10.1037/1528-3542.6.2.269

- Brainard, D. H. (1997). The psychophysics toolbox. Spatial Vision, 10(4), 433–436. https://doi.org/10.1163/156856897X00357

- Drummond, P. D. (1994). The effect of anger and pleasure on facial blood flow. Australian Journal of Psychology, 46(2), 95–99. https://doi.org/10.1080/00049539408259479

- Drummond, P. D., & Quah, S. H. (2001). The effect of expressing anger on cardiovascular reactivity and facial blood flow in Chinese and Caucasians. Psychophysiology, 38(2), 190–196. https://doi.org/10.1017/S004857720199095X

- Elfenbein, H. A., & Ambady, N. (2002). On the universality and cultural specificity of emotion recognition: A meta-analysis. Psychological Bulletin, 128(2), 203–235. https://doi.org/10.1037/0033-2909.128.2.203

- Jones, B. C., Little, A. C., Burt, D. M., & Perrett, D. I. (2004). When facial attractiveness is only skin deep. Perception, 33(5), 569–576. https://doi.org/10.1068/p3463

- Kato, M., Sato, H., & Mizokami, Y. (2022). Effect of skin colors due to hemoglobin or melanin modulation on facial expression recognition. Vision Research, 196, 108048. https://doi.org/10.1016/J.VISRES.2022.108048

- Kleiner, M., Brainard, D., Pelli, D., Ingling, A., Murray, R., & Broussard, C. (2007). What’s new in psychtoolbox-3. Perception, 36, 14. https://hdl.handle.net/11858/00-001M-0000-0013-CC89-F.

- Koole, S. L., & Rothermund, K. (2011). “I feel better but I don’t know why”: The psychology of implicit emotion regulation. Cognition and Emotion, 25(3), 389–399. https://doi.org/10.1080/02699931.2010.550505

- Laeng, B., Profeti, I., Sæther, L., Adolfsdottir, S., Lundervold, A. J., Vangberg, T., Øvervoll, M., Johnsen, S. H., & Waterloo, K. (2010). Invisible expressions evoke core impressions. Emotion, 10(4), 573–586. https://doi.org/10.1037/a0018689

- Livingstone, M., & Hubel, D. (1988). Segregation of form, color, movement, and depth: Anatomy, physiology, and perception. Science, 240(4853), 740–749. https://doi.org/10.1126/science.3283936

- Minami, T., Nakajima, K., & Nakauchi, S. (2018). Effects of face and background color on facial expression perception. Frontiers in Psychology, 9, 1012. https://doi.org/10.3389/fpsyg.2018.01012

- Nakajima, K., Minami, T., & Nakauchi, S. (2017). Interaction between facial expression and color. Scientific Reports, 7, 41019. https://doi.org/10.1038/srep41019

- Nakakoga, S., Nihei, Y., Kinzuka, Y., Haw, C. K., Shahidan, W. N. S., Nor, H. M., Yvonne-Tee, G. B., Abdullah, Z. B., Imura, T., Shirai, N., Nakauchi, S., & Minami, T. (2019). Facial color effect on recognition of facial expression: A comparison among Japanese and Malaysian adults and school children. Perception, 48, 147.

- Ogawa, T., Oda, M., Yoshikawa, S., & Akamatsu, S. (1997). Evaluation of facial expressions differing in face angles: Constructing a database of facial expressions. IEICE Technical Report, 97(388), 47–52.

- Paulus, A., & Wentura, D. (2016). It depends: Approach and avoidance reactions to emotional expressions are influenced by the contrast emotions presented in the task. Journal of Experimental Psychology: Human Perception and Performance, 42(2), 197–212. https://doi.org/10.1037/xhp0000130

- Pelli, D. G. (1997). The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision, 10(4), 437–442. https://doi.org/10.1163/156856897X00366

- Prete, G., Capotosto, P., Zappasodi, F., Laeng, B., & Tommasi, L. (2015). The cerebral correlates of subliminal emotions: An electroencephalographic study with emotional hybrid faces. European Journal of Neuroscience, 42(11), 2952–2962. https://doi.org/10.1111/EJN.13078

- Prete, G., Laeng, B., & Tommasi, L. (2018). Modulating adaptation to emotional faces by spatial frequency filtering. Psychological Research, 82(2), 310–323. https://doi.org/10.1007/S00426-016-0830-X

- Stephen, I. D., Law Smith, M. J., Stirrat, M. R., & Perrett, D. I. (2009). Facial skin coloration affects perceived health of human faces. International Journal of Primatology, 30(6), 845–857. https://doi.org/10.1007/s10764-009-9380-z

- Tamietto, M., & de Gelder, B. (2010). Neural bases of the non-conscious perception of emotional signals. Nature Reviews Neuroscience, 11(10), 697–709. https://doi.org/10.1038/nrn2889

- Tarr, M. J., Kersten, D., Cheng, Y., & Rossion, B. (2001). It’s Pat! Sexing faces using only red and green. Journal of Vision, 1(3), 337. https://doi.org/10.1167/1.3.337

- Thorstenson, C. A., McPhetres, J., Pazda, A. D., & Young, S. G. (2022). The role of facial coloration in emotion disambiguation. Emotion, 22(7), 1604–1613. https://doi.org/10.1037/EMO0000900

- Thorstenson, C. A., & Pazda, A. D. (2021). Facial coloration influences social approach-avoidance through social perception. Cognition and Emotion, 35(5), 970–985. https://doi.org/10.1080/02699931.2021.1914554

- Willenbockel, V., Sadr, J., Fiset, D., Horne, G. O., Gosselin, F., & Tanaka, J. W. (2010). Controlling low-level image properties: The SHINE toolbox. Behavior Research Methods, 42(3), 671–684. https://doi.org/10.3758/BRM.42.3.671

- Winkielman, P., & Berridge, K. C. (2004). Unconscious emotion. Current Directions in Psychological Science, 13(3), 120–123. https://doi.org/10.1111/J.0963-7214.2004.00288.X