ABSTRACT

The ability to quickly and accurately recognise emotional states is adaptive for numerous social functions. Although body movements are a potentially crucial cue for inferring emotions, few studies have examined the perception of body movements made in naturalistic emotional states. The current research focuses on the use of body movement information in the perception of fear expressed by targets in a virtual heights paradigm. Across three studies, participants made judgments about the emotional states of others based on motion-capture body movement recordings of those individuals actively engaged in walking a virtual plank at ground-level or 80 stories above a city street. Results indicated that participants were reliably able to differentiate between height and non-height conditions (Studies 1 & 2), were more likely to spontaneously describe target behaviour in the height condition as fearful (Study 2), and made fear estimates that were highly calibrated with the fear ratings from the targets (Studies 1-3). Findings show that VR height scenarios can induce fearful behaviour and that people can perceive fear in minimal representations of body movement.

Imagine seeing a stranger walking down the same side of an otherwise empty street. What sources of information will be used to make inferences about that stranger when the only information available is what can be readily observed? We might infer that the stranger is friendly or unfriendly based on characteristics such as facial expression (Niedenthal et al., 2005), gestures (Ambady et al., 1999; Otta et al., 1994), eye gaze (Frith & Frith, 2007), gait (Sakaguchi & Hasegawa, 2006; Thoresen et al., 2012), clothing (Gillath et al., 2012; Oh et al., 2020), or attractiveness (Eagly et al., 1991). Humans have evolved numerous strategies for drawing inferences about others from visual characteristics (Ambady & Skowronski, 2008; Olivola & Todorov, 2010). Oftentimes, inferences about others are predicated on an estimation of their emotional state and accurate recognition of a conspecific's emotional state has clear evolutionary advantages. For example, recognising fear or anger expressed in the behaviour of others may signal danger, activating our fight, flight, or freeze response. Although the movement of bodies can be a rich source of information about emotional states, it has received much less attention in the emotion perception literature. Indeed, information from the movement of a body can be especially important when other visual cues (e.g. faces, appearance, identity) are obscured or too distant to be perceived clearly (Thoresen et al., 2012). The current research examines the perception of fear in others using motion-capture displays of individuals experiencing either a high-fear or a low-fear virtual reality experience.

Perceiving emotion in behaviour

Keeping with the example of the stranger on the street, further imagine that the stranger's behaviour leads you to perceive that they are afraid. This inference is likely to affect your behaviour, in turn. Is there something of which you should also be fearful? Are you the fear-inducing agent? From an evolutionary perspective, being able to communicate emotions such as fear via the body – as well as being able to accurately perceive those emotional cues in others – serves several adaptive functions. First, social fear learning allows individuals to learn to avoid an aversive event not by experiencing it directly, but by observing the social cues of conspecifics which signal a threat (Debiec & Olsson, 2017). Across species, organisms are able to engage in social fear learning, which helps individuals to identify and avoid danger without directly experiencing harm (Olsson & Phelps, 2007). Second, the accurate perception of fear also serves a predictive function. If we can identify the emotional state of another human, we have more information with which to assess the course of action that person might take. This is particularly important for emotions such as fear or anger, which signal the presence of a potential danger (Walk & Homan, 1984). Finally, perceiving emotions in others also enables us to empathise with and assist them, an important role in any social species (de Gelder, 2006).

Emotions in behaviour can be measured using vocal characteristics (i.e. prosody – rhythm, stress, and intonation), facial expression, and whole-body behaviour (Mauss & Robinson, 2009). However, the majority of non-verbal behaviour research has focused more heavily on perceiving emotional states from facial expressions and voice with far fewer investigations examining the diagnostic value of bodies in the emotion inference process (Van den Stock et al., 2007). Even when bodies are included in these studies, they are often included in a way that focuses attention on facial expressions and vocal characteristics. That is, static images of bodies (i.e. body postures) are often used as contextual cues that facilitate or inhibit inferences made from those other sources (Van Der Zant et al., 2021).

In terms of examining bodies as sources of emotional information, some research has examined how bodily displays of emotion affect the perception of emotional facial expressions. For example, Van den Stock and colleagues (2007) presented photographs of posed body expressions of various emotions (e.g. angry, sad, fearful) along with either matching or non-matching facial expressions of emotions. Participants were tasked with identifying the facial expression. They found that participants demonstrated greater accuracy in perceiving facial expressions when the emotion signalled by the body was consistent with that of the face. Additionally, the more ambiguous the facial expression, the greater influence the body had on identifying the emotion. The finding that bodily representations of emotion can help in the identification of ambiguous emotional expressions was replicated in work by Aviezer et al. (2012). Specifically, when ambiguously happy faces were put on unhappy bodies (and vice versa), the images were judged in line with the bodily representation (see also Wang et al., 2017). These findings highlight the importance of bodily cues in interpreting emotions conveyed by the face, but do not examine the use of body information, in isolation, as a source of emotional information.

A key limitation of many previous investigations is the fact that the experimental stimuli are presented as static images (Coulson, 2004) rather than incorporating dynamic movement. This is partially due to the way in which bodies are being used in these studies – as context cues that influence other features. But bodies move, and the manner in which they move conveys substantial information (Atkinson et al., 2007). Indeed, previous research has reliably demonstrated that perception of emotions is enhanced for dynamic, compared to static, displays (Ambadar et al., 2005; Atkinson et al., 2004; Nelson & Mondloch, 2017; Van Der Zant & Nelson, 2021). In an intriguing use of dynamic body movements, Van Der Zant and Nelson (2021) presented static images or short dynamic videos of tennis players immediately after winning or losing an important point in a match. The players were presented as just faces, just bodies (from waist up), or bodies and faces combined. Participants were tasked with judging whether the player had won or lost the previous point. Both body-only and face + body conditions resulted in greater accuracy than the face-only condition. Additionally, accuracy was higher for the dynamic displays than for the static displays.

Research using dynamic point-light displays of motion finds that perceivers are able to identify a variety of characteristics of recorded figures including identity (Cutting & Kozlowski, 1977; Troje et al., 2005), gender and age (Kozlowski & Cutting, 1977; Montepare et al., 1987), activity engaged in (Alaerts et al., 2011), and status (Schmitt & Atzwanger, 1995). In terms of emotion recognition, Dittrich and colleagues (1996) presented participants with both fully lit and point-light displays of dancers who had been instructed to portray joy, surprise, anger, disgust, fear, or grief. Participants were able to correctly identify the emotion the dancer was attempting to portray above chance in both conditions. Although the actions in the Dittrich research were artistic representations of emotional experiences rather than actual expressions of emotional states, similar research has examined inferences about emotion from point-light displays of gait. For instance, Halovic and Kroos (2018a; 2018b) showed point-light display gait videos of targets attempting to display various emotions (e.g. happiness, sadness, fear, anger) and had participants identify the emotion displayed. The researchers identified parameters of physical movement from the displays that distinguished between the different emotions (e.g. pace, stride length, arm swing) and noted that these differed somewhat from what participants reported using as visible cues – even though participants were accurate in identifying the intended emotion. These studies, which treat body movement in and of itself as a source of information about emotional states, come closer to showing how behaviour might be observed “in the wild.”

However, a second limitation is that much of the existing research uses actors to convey a particular emotion either with their faces or with their bodies. In the previous examples, the “emotional” stimuli were created by asking people to produce behaviour that represents an emotional state either through dance (Dittrich et al., 1996) or communicated through their gait (Halovic & Kroos, 2018a; 2018b). If asked to make a “sad” face, one might produce a frown. But is that facial expression an accurate representation of how our facial expressions change when feeling sadness, or is the frown just an easily identifiable means of communicating sadness to another person? The behaviour may reflect only what people think an emotion looks like rather than what would actually be expressed if the emotion were being felt in the moment. In the Halovic and Kroos (2018b) study, participants said that happy gaits had “more bounce” to the steps (because that matched their idea of a happy gait) even though no evidence for “bouncing” was found when extracting gait characteristics from the movement data. These beliefs about what emotions “look” like may result in exaggerated or stereotyped behaviours that can easily be identified because they are clearer symbols of emotion than what may be present in real life (Barrett, 2011). Indeed, Barrett and colleagues (2019; Barrett et al., 2011) have argued quite convincingly that naturalistic facial expressions are in fact poorly interpreted without context.

The nature of these reproductions brings up an important distinction between behaviour that is a direct manifestation of emotion (i.e. expressive actions) and non-emotional actions that are performed in an emotional manner (Atkinson, 2013). For example, anger may involve the clenching of the jaw and hands and the flexing of muscle; these bodily changes represent expressive actions. Anger, however, could also be inferred from the performance of normally non-emotional actions such as slamming a door when closing it or aggressively handing documents to a colleague. When asked to act in a manner that represents an emotional state, individuals are likely to produce behaviour that combines expressive and non-expressive actions. Walking angrily might involve clenched fists and a body-forward posture (expressive actions) but also involve exaggerated stomping in the gait which would serve as a symbol of anger.

A quick image search online will produce ample demonstrations of exaggerated poses of “fear.” Common features of these poses include wide eyes, open mouth (often screaming), hands raised protectively (or covering the face), palms out, fingers splayed, and body thrown back (i.e. away from the fear-inducing object). This stereotyped depiction of the emotional state likely contains some combination of real expressive actions and more symbolic gestures. Much of the research that has focused on the congruency between bodily represented emotion and facial expressions has used exactly these stereotyped facial and body poses of fear, anger, or sadness (e.g. Aviezer et al., 2012; Martinez et al., 2016; Mondloch et al., 2013; Van den Stock et al., 2007). It is much rarer to find stimuli that were produced by individuals who were actually experiencing the emotion. One interesting exception comes from Li et al. (2016). Li and colleagues recorded individuals walking while in a neutral mood and then again later after watching either a series of anger-inducing or happiness-inducing film clips (Yan et al., 2014). Although this study did not involve human perceivers inferring emotional states from the gait presentations, their machine learning algorithm was only able to accurately distinguish “neutral” from “emotional” gaits. The ability to distinguish between happiness and anger was relatively poor. This inability to distinguish between oppositely-valenced emotions suggests that either the emotional information conveyed by gait is far less obvious than when those behaviours are acted, and/or that humans are especially attuned to interpreting these less-clear bodily signals of emotional state. This finding may also reflect the fact that the affect induced by laboratory-based exposure to affective images (e.g. IAPS) and videos is often very weak (El Basbasse et al., 2023).

The current research

In the current research, we recorded the movement of individuals while completing a fear-inducing virtual reality (VR) “plank-walking” experience. Half of these individuals perceived that they were walking on a plank at ground-level (low-fear condition) and half that the plank was suspended eighty stories up the side of a building (high-fear condition). Several studies using this simulation demonstrate that walking the plank at height is associated with subjective fear, increased heart rate and sweating (skin conductance; Maymon et al., 2023) and with a rightward shift in frontal alpha activity – a marker of negative emotional state (El Basbasse et al., 2023). The targets' walking behaviour during the VR-based study was captured via tracker points and turned into short videos showing the four points (i.e. each foot, the controller, and the headset) for use in the current studies. We believe that these videos have a significant advantage over the stimuli used in previous studies because the fear is actually felt by the target individuals. This allows us to examine how people interpret the natural bodily expression of fear rather than an actor's interpretation of what they think fear looks like. That is, we believe that the advantage of this approach is that the behaviours represent expressive actions rather than symbolic gestures (Atkinson, 2013).

Participants in the current studies watched these motion-capture videos and were asked to identify which condition the target was in (height or ground; Studies 1 & 2), to rate the intensity of fear being shown (Studies 1-3), or to name the emotion being felt by the target (Study 2). Participants in Study 3 were asked to identify the features of the body movements that they used in making their fear estimates. It was expected that participants: (a) would be able to identify the target's condition at a level greater than chance; (b) would be accurate in perceiving the intensity of fear shown by the targets (measured as a correlation with targets’ own ratings of fear); and (c) would be more likely to spontaneously describe motion-captures at height as representing fear than they would those on the ground.

Study 1

Methods

Participants and design

Forty-three students (32 female; Mage = 20.60 years, SDage = 5.73) enrolled in an Introductory Psychology course at Victoria University of Wellington participated for partial completion of a research participation requirement. No participants were excluded from analysis. The sample size for Study 1 was based on lab practice of 40 participants per meaningful cell. Although power was not calculated a priori, analysis of achieved power using G*Power (version 3.1.9.7) indicates that the sample size was more than adequate to detect the effects reported below. All studies reported in this paper were approved by the Human Ethics Committee of Victoria University of Wellington. Materials and data for all studies reported in this paper are available at the following link: https://osf.io/vhjfe/?view_only=a259a8747e0e487c898de453bf83e8e5.

Materials

Thirty participants from an earlier study (Maymon et al., 2023, Experiment 2) were recorded while engaged in a virtual reality study. In that earlier study, participants walked along a wooden plank (which was also physically present in the room) in a virtual environment that either showed the plank emerging out the side of a tall building (i.e. high plank condition), or at street level (i.e. low plank condition). Participants walked the length of the plank at their own pace and their motion was recorded in real-time using Brekel OpenVR Recorder. Specifically, each participant had HTC Vive trackers attached to each foot to record the position of the feet in 4D (i.e. movements within the 3D environment over time). Position over time was likewise recorded for the hand-held controller and the VR headset (HTC Vive), both of which contain position trackers (see ). These data were then imported into Autodesk MotionBuilder to create the video stimuli for this study. The video clips were rendered beginning from the moment that the target participants started walking along the plank until the moment they reached the end. Thus, the videos involve direct motion-capture during the plank-walking task.

Additionally, participants reported their subjective level of fear at multiple timepoints during the VR experience and measures of physiological arousal (i.e. heartrate and skin conductance) were recorded throughout. As reported by Maymon et al. (2023), participants in the height condition, compared to those in the non-height condition, reported feeling significantly greater fear. Measures of physiological arousal also showed much greater increases in both heartrate and skin conductance for participants in the height condition. The evidence that participants were experiencing realistic fear is further supported by studies that use similar height-based VR simulations (Cleworth et al., 2012; Coelho et al., 2009; El Basbasse et al., 2023; Gromer et al., 2019; Kisker et al., 2019; Nielsen et al., 2022).

Procedure

The study was conducted in a computer laboratory in which 5–15 participants per session completed the tasks on computers (separated by dividers). All tasks were presented using Qualtrics software (Qualtrics, Provo UT). All participants completed two tasks at their own pace. Prior to seeing the videos, participants read a description of the previous study. Specifically, they were told that the video clips were taken of people who were walking along a wooden plank that appeared to be at either street level or suspended 80 stories above the street on the side of a building.

In the first task (i.e. the “High/Low” task), participants viewed each of the 30 video clips (in random order) and selected whether they believed the person in the video clip was in the High plank or the Low plank condition. This judgment was made by clicking either a “high” or “low” radio button beneath each video (see ). After completion of the High/Low Task, participants viewed the same 30 video clips (in a different random order) and rated how much fear they thought each person was showing. This judgment was made on a five-point scale from 1 = “none at all” to 5 = “a great deal.” After completion of the fear rating task, participants were debriefed and thanked for their participation. All analyses reported in this paper were conducted using jamovi version 2.2 (The jamovi project, 2019).

Results and discussion

High/low task

Responses were coded as either correct or incorrect and the overall percentage of correct responses was submitted to a one-sample t-test with 0.50 as the comparison value (i.e. 50% representing random guessing in the two-choice task). Overall, participants correctly identified the condition 72.7% of the time (SD = 11.7%), which was significantly better than chance, t(42) = 12.70, p < .001, [95% CI: lower = .191, upper = .263], d = 1.94. Participants correctly identified videos from the high plank condition 76.4% of the time, and videos from the low plank condition 69.1% of the time (both > 50%, p < .001). Overall, correct identification of targets in the height condition was significantly better than for targets in the non-height condition, t(42) = 2.40, p = .021, d = .366. These findings clearly demonstrate that participants were able to correctly identify whether motion-captures represented high- or low-fear conditions.
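As a rough check on the one-sample test described above, the test statistic and effect size can be approximated from the reported summary values alone. The sketch below is illustrative only: the function name is hypothetical, the inputs are the rounded mean and SD from the text, and Cohen's d is assumed to be the mean difference divided by the sample SD. Because the inputs are rounded, the result should land close to, but not exactly on, the reported t(42) = 12.70.

```python
import math

def one_sample_t_from_summary(mean, sd, n, mu0):
    """Return (t, df, cohen_d) for a one-sample t-test from summary statistics."""
    se = sd / math.sqrt(n)      # standard error of the mean
    t = (mean - mu0) / se       # test statistic against the comparison value
    d = (mean - mu0) / sd       # Cohen's d for a one-sample design (assumed formula)
    return t, n - 1, d

# Study 1 High/Low task, using the rounded values reported in the text.
t, df, d = one_sample_t_from_summary(mean=0.727, sd=0.117, n=43, mu0=0.50)
print(f"t({df}) = {t:.2f}, d = {d:.2f}")
```

The same function could be applied to the Study 2 accuracy values, again with the caveat that rounded inputs give approximate results.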

Fear ratings

Fear ratings were submitted to a paired-samples t-test comparing ratings for high versus low conditions. Targets in the high plank condition were judged to be showing more fear (M = 3.55, SD = .44) than targets in the low plank condition (M = 1.99, SD = .39), t(42) = 26.60, p < .001, [95% CI: lower = 1.44, upper = 1.68], d = 4.06. This finding demonstrates that, as expected, participants perceived greater fear for targets in the high plank condition than those in the low plank condition even when these target conditions were not explicitly labelled as such.
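The effect size for the paired comparison can be related to the test statistic in a simple way. The following sketch assumes that the reported d is the paired-design effect size (often written d_z), which equals t divided by the square root of the number of pairs. The text does not state which formula the software applied, so this is an assumption, although it does reproduce the reported value. The function name is hypothetical.

```python
import math

def paired_effect_size(t, n_pairs):
    """Cohen's d_z for a paired design, recovered from t and the number of pairs."""
    return t / math.sqrt(n_pairs)

# Study 1 fear ratings: t(42) = 26.60 with 43 participants.
d_z = paired_effect_size(t=26.60, n_pairs=43)

# The implied SD of the high-minus-low difference scores follows from d_z.
mean_diff = 3.55 - 1.99
sd_diff = mean_diff / d_z

print(f"d_z = {d_z:.2f}, mean difference = {mean_diff:.2f}, implied SD of differences = {sd_diff:.2f}")
```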

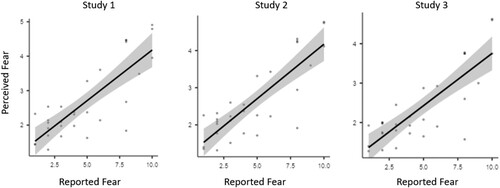

In order to examine how accurately participants were able to judge the self-reported level of fear felt by the target in the video, we calculated a correlation between the average judged rating of fear (i.e. made by the participants in this study) and the actual reported level of fear taken at the time that the target was on the plank (i.e. self-reported feelings of fear while on the plank). This analysis revealed that participants were very good at judging the self-reported levels of fear felt by the targets, r(28) = .80, p < .001 (see ). Further examination of these ratings by condition indicates that perceived fear ratings were significantly correlated with self-reported fear in the high plank (r(10) = .59, p < .05) but not in the low plank (r(16) = .30, p = .22) condition.
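The calibration analysis described above has two steps: average the perceivers' ratings for each target, then correlate those averages with the targets' self-reports. A minimal sketch follows. The data are invented for illustration and are not the study's data, and the function names are hypothetical. With 30 targets the degrees of freedom would be 30 - 2 = 28, matching the r(28) notation in the text.

```python
import math

def mean_rating_per_target(ratings):
    """ratings[p][t] is perceiver p's rating of target t; returns one mean per target."""
    n_targets = len(ratings[0])
    return [sum(row[t] for row in ratings) / len(ratings) for t in range(n_targets)]

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical example: 3 perceivers rating 5 targets on the 1-5 fear scale.
perceiver_ratings = [
    [1, 2, 3, 4, 5],
    [2, 2, 3, 3, 5],
    [1, 1, 2, 4, 4],
]
self_reported_fear = [1, 2, 2, 4, 5]   # hypothetical target self-reports

judged = mean_rating_per_target(perceiver_ratings)
r = pearson_r(judged, self_reported_fear)
df = len(judged) - 2                    # degrees of freedom for the correlation
print(f"r({df}) = {r:.2f}")
```

Averaging across perceivers before correlating means the unit of analysis is the target, which is why the degrees of freedom depend on the number of targets rather than the number of participants.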

Study 2

Study 2 was a replication and extension of Study 1 in which we replicated the High/Low and Fear Rating tasks, but as between-subjects tasks. It was expected that the results of Study 2 would replicate those of Study 1. We also added a third condition in which participants were shown the motion-captures and asked to write down whatever emotion they thought the target was showing (i.e. open-ended response). It was expected that participants would be significantly more likely to spontaneously describe motion-captures from the high plank condition as fearful than the motion-captures from the low plank condition.

This new condition was included to examine the possibility that participants are using the body to judge the intensity of the emotion but that the body, in itself, does not convey the discrete emotion. Ekman and Friesen (1967) suggest that emotional facial expressions are more important for emotional classification (i.e. what is the emotional state?) and the body is more important for judging emotional intensity (i.e. how strong is that state?). Because participants in the fear-rating task have had the emotion label provided for them, perhaps the body provides only a sense of intensity. In the new condition, participants were only told that the recordings were made while individuals were in a VR environment with no mention of the height manipulation. By including this condition, we can see whether the limited information about body movements, by itself, and without context, is sufficient to elicit a perception of fear.

Methods

Participants

One hundred students (79 female; Mage = 19.40 years, SDage = 3.37) enrolled in an Introductory Psychology course at Victoria University of Wellington participated for partial completion of a research participation requirement. Participants completed the study online using Qualtrics software. No participants were excluded from analysis. Power analyses conducted with G*Power (version 3.1.9.7) indicated that a sample size of 19 would be sufficient to achieve an 80% likelihood of detecting a large effect size (.70, which is more conservative than the observed effect sizes in Study 1) with traditional alpha (.05). As there were three unique conditions, this meant a minimal sample size of 57 was recommended. The materials were the same as those used in Study 1.

Procedure

Participants in Study 2 completed one of three tasks. One third of the participants (N = 34) completed the High/Low Task as in Study 1. A second third (N = 35) of the participants completed the Fear Rating task as in Study 1. Finally, the last third (N = 31) completed an Emotion Description task in which they wrote down in a dialogue box whatever emotion they believed the person in the video clip was feeling. In this task, participants watched the 30 video clips (in random order) and were asked “What emotion do you think this person is experiencing?” Importantly, no mention was made about fear or heights as participants were told that the videos were made “ … in an earlier study in which participants walked around in a Virtual Reality simulation.” After completing one of these tasks, participants were debriefed and thanked for their participation.

Results and discussion

High/low task

The percentage of correct categorisations was submitted to a one-sample t-test with .50 as the comparison value. Replicating Study 1, participants correctly classified targets as belonging to the high or the low plank conditions 73.20% of the time (SD = 10.40%), which was significantly better than chance, t(33) = 13.01, p < .001 [95% CI: lower = .196, upper = .269], d = 2.23. Accuracy for the high (M = 78.90%) and the low (M = 67.50%) plank conditions was better than chance (both p < .001). Similar to Study 1, participants were more accurate in correctly identifying targets in the height condition than targets in the non-height condition, t(33) = 3.38, p = .002, d = .58.

Fear rating

As in Study 1, judgments of fear were submitted to a paired-samples t-test. Participants judged targets in the high plank condition to be more fearful (M = 3.65, SD = 0.43) than those in the low plank condition (M = 1.99, SD = 0.39), t(34) = 26.9, p < .001 [95% CI: lower = 1.54, upper = 1.79], d = 4.55. Further replicating the ratings data from Study 1, the correlation between average judged fear and actual fear was r(28) = .83, p < .001 indicating that participants were well calibrated in their estimates (see ). Replicating the pattern from Study 1, the correlation between perceived and self-reported fear was significant in the height (r(10) = .64, p < .05), but not the non-height (r(16) = .35, p = .15) condition.

Emotion description

The open-ended responses were clustered into eight categories: (a) Fear (e.g. fear, scared, fright/frightened, afraid, terrified, panicked), (b) Anxious (e.g. anxious/anxiety, worry/worried, nervous, tense), (c) Cautious (e.g. caution, hesitance/hesitation, uncertain, uncertainty, carefulness), (d) Curiosity (e.g. curious/curiosity, intrigue, wonder, awe, amazed), (e) Confidence (e.g. confident/confidence, determined, certain/certainty, focused, brave, strong), (f) Excitement (e.g. excited, happy, energetic, joy, amused), (g) Calm (e.g. calm, relaxed, chill, content, neutral, relieved), and (h) Sad (e.g. sad, depressed, lost, weary, down, regret, bored). These eight clusters accounted for 87.96% of the total responses. As can be seen in Table 1, all of the comparisons between high and low plank conditions were significant. Participants were more likely to spontaneously describe the motion-capture display as showing fear, anxiety, and caution when the target was in the high plank condition than when the target was in the low plank condition. In fact, these three clusters accounted for almost 71% of all responses describing high plank targets. Responses for the low plank targets, by comparison, showed a much greater variability in the terms used.

Table 1. Mean percentage of emotion categories spontaneously mentioned by condition.
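To make the clustering step concrete, the sketch below shows one way that open-ended responses might be mapped onto categories and converted to percentages. It is not the coding procedure used in the study, which is not described at this level of detail. The word stems are adapted from four of the eight clusters listed above, while the prefix-matching rule, the "Other" bucket, the function names, and the example responses are all assumptions made for illustration.

```python
import re
from collections import Counter

# Stems adapted from four of the eight reported clusters.
# The prefix-matching rule and the "Other" bucket are illustrative assumptions.
CLUSTER_STEMS = {
    "Fear": ["fear", "scared", "fright", "afraid", "terrified", "panicked"],
    "Anxious": ["anxi", "worr", "nervous", "tense"],
    "Cautious": ["cauti", "hesita", "uncertain", "careful"],
    "Calm": ["calm", "chill", "content", "neutral", "relieved"],
}

def classify(response):
    """Return the first cluster with a stem that begins any word in the response."""
    for word in re.findall(r"[a-z]+", response.lower()):
        for cluster, stems in CLUSTER_STEMS.items():
            if any(word.startswith(stem) for stem in stems):
                return cluster
    return "Other"

def cluster_percentages(responses):
    """Percentage of responses falling into each cluster."""
    counts = Counter(classify(r) for r in responses)
    return {cluster: 100 * n / len(responses) for cluster, n in counts.items()}

# Hypothetical responses, not taken from the study's data.
example = ["scared", "Nervous", "very cautious", "calm", "puzzled"]
percentages = cluster_percentages(example)
print(percentages)
```

Matching on word prefixes rather than substrings avoids counting "intense" as "tense", but a simple rule like this would still need human review (for example, "fearless" would be coded as Fear).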

Study 3

The results from Study 2 replicated the findings from Study 1 and also demonstrated that participants were more likely to spontaneously describe the motion-capture videos from the high plank condition as fearful even when given no context about the specific situation. In Study 3 we examined the strategies that participants use in judging fear in our motion-capture videos. Halovic and Kroos (2018b) found that participants in their study reported using actors’ head movements and walking pace as diagnostic of fear. Interestingly, participants in their study suggested that both slower walking and faster walking indicated greater fear. The authors suggested that perhaps different actors interpreted fear as either “fearful approach” (which would result in slower walking) or as “fearful escape” (which would result in faster walking). Their kinematic analysis, however, indicated that what the fearful actors were doing was walking faster, but taking shorter steps.

Analysis of our own motion-capture data shows that targets in the high plank (i.e. fear) condition did take shorter steps (21.81 cm) on average than targets in the low plank condition (25.74 cm) but that this difference was not significant (p = .11). On the other hand, the average number of steps (Mhigh = 13.77, Mlow = 6.90) as well as the amount of time taken to walk the same distance (Mhigh = 15.13 s, Mlow = 5.93 s) were significantly different between the conditions. Overall, high plank targets were taking significantly more, slightly shorter, but significantly slower steps than targets in the low plank condition. In Study 3, we examined whether observers were aware of these motion parameters and used them in their ratings.
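The claim that high plank targets took slower steps can be illustrated by converting the reported means into a stepping rate. This is a rough sketch only: it divides the group mean number of steps by the group mean walking time, which is not the same as averaging each target's own cadence, and the function name is hypothetical.

```python
def cadence(mean_steps, mean_seconds):
    """Approximate stepping rate (steps per second) from group means."""
    return mean_steps / mean_seconds

# Group means reported in the text.
high = cadence(mean_steps=13.77, mean_seconds=15.13)
low = cadence(mean_steps=6.90, mean_seconds=5.93)

print(f"high plank: {high:.2f} steps/s, low plank: {low:.2f} steps/s")
```

On these figures the high plank group steps at roughly 0.91 steps per second against roughly 1.16 for the low plank group, which is consistent with the description of slower stepping at height.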

Methods

Participants and design

Forty individuals (24 female; Mage = 40.15, SDage = 13.34) recruited via Prolific.co participated in the current study and were paid NZ$4.29. No participants were excluded from the analyses. Based on the previous studies we sought a sample size of 40 which had been more than adequate in detecting the effects reported. All materials and measures were presented online using Qualtrics survey software.

Procedure

The fear rating task was the same as in the previous two studies. After completion of the fear rating task, participants were asked to indicate what strategies they used, if any, in determining the level of fear displayed by the targets. This was an open-ended option and participants could type as much or as little as they wished. After the open-ended question about strategies, participants rated five potential characteristics in terms of how important they were in judging fear in the videos. The five items were: length of steps, speed/pace of steps, number of steps, head movements, and arm movements. All were rated on a scale ranging from 1 = “not at all important” to 5 = “very important” (midpoint 3 = “moderately important”). After completing this rating task participants were debriefed and thanked for their participation.

Results

Fear ratings

Judgments of fear were submitted to a paired-samples t-test. Participants judged targets in the high plank condition to be more fearful (M = 3.25, SD = 0.52) than those in the low plank condition (M = 1.81, SD = 0.42), t(39) = 19.4, p < .001 [95% CI: lower = 2.32, upper = 3.81], d = 3.07. The correlation between average judged fear and actual fear was r(28) = .82, p < .001, indicating that participants were well calibrated in their estimates. As in the previous two studies, the correlation between perceived and self-reported fear was significant for targets in the high plank (r(10) = .67, p < .05) but not the low plank (r(16) = .32, p = .19) condition.
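
For readers who wish to reproduce statistics of this kind from the OSF data, the sketch below shows a paired-samples t-test, a within-subjects effect size, and a Pearson correlation using only the Python standard library. It is illustrative only: the function names are hypothetical, the reported analyses were not necessarily run this way, and the formula d = t / sqrt(n) is an assumption about how the effect size was obtained (it is, however, consistent with the reported values).

```python
import math
from statistics import mean, stdev


def paired_t(high, low):
    """Return (t, df, d_z) for paired ratings from the same observers."""
    diffs = [h - l for h, l in zip(high, low)]
    n = len(diffs)
    sd = stdev(diffs)                      # sample SD of the differences
    t = mean(diffs) / (sd / math.sqrt(n))  # paired-samples t statistic
    d_z = mean(diffs) / sd                 # equals t / sqrt(n)
    return t, n - 1, d_z


def pearson_r(xs, ys):
    """Return (r, df) where df = n - 2, as in the r(df) notation."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy), len(xs) - 2


# Consistency check using only values reported in the text.
reported_t, n_observers = 19.4, 40
d_from_t = reported_t / math.sqrt(n_observers)
print(f"d implied by t(39) = 19.4: {d_from_t:.2f}")
```

Because the degrees of freedom for a correlation are n - 2, r(28) corresponds to 30 targets, which matches the 12 high plank and 18 low plank targets implied by r(10) and r(16).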

Strategies

By far the most common self-reported strategies for judging fear related to the pace/speed of the steps (mentioned by 75% of participants) and the length of the steps (mentioned by 53%). The next most common strategies involved the position or movement of the head (mentioned by 30%), the position or movement of the hands/arms (mentioned by 22%), and perceived “hesitancy” (mentioned by 27%). Another 7.5% mentioned pauses, and a final 2.5% mentioned the overall amount of movement. Interestingly, number of steps was not mentioned by any of the participants (although this is captured by the length of steps, as shorter steps necessarily require a greater number to cover the same distance).

In terms of the explicit ratings of importance of the presented characteristics, the three factors related to steps (i.e. Pace, Length, and Number) were all rated as more important (Mpace = 4.25, Mlength = 4.15, Mnumber = 3.77) than either Head (M = 3.07) or Arm (M = 2.65) movements (all p < .05). None of the factors related to steps were significantly different from one another in terms of judged importance.

General discussion

Although the literature on non-verbal behaviour has placed a lot of emphasis on the importance of facial expression when judging emotions, the results of the current studies indicate that a discrete emotion such as fear can be detected through perceptible, measurable differences in actions. Across three studies, participants showed high levels of accuracy in identifying whether targets were in a high- or low-fear condition (Studies 1 & 2) and showed highly calibrated judgments of the fear that targets reported feeling (Studies 1-3). Additionally, participants were more likely to spontaneously describe target behaviour from the high-fear condition, compared to the low-fear condition, as fearful even when they were not told anything about the VR scenario or task (Study 2). Taken together, these studies clearly demonstrate that accurate judgements of emotion can be made based on very minimal body motion information.

A particular strength of the current research was that we tested whether people could perceive actual, self-reported fear. Venture et al. (2014) argue that “It is difficult to provoke emotion naturally during motion-capture session, as it is a dynamic interaction process between cognition and basic emotions (p. 622).” They suggest using actors to produce gaits that are meant to reflect different emotions. This is the approach taken by almost all researchers who have examined these questions. This generally results in a small number of targets (e.g. Venture and colleagues had four actors) and opens up the possibility that some actors are “better” than others (which has been commented upon in multiple papers whenever there are mixed findings). But what does “better” mean in this context? Is the better actor the one that is able to send the clearest signal? That signal might, we argue, be the more exaggerated stereotypic signal, but not one that is commonly encountered in real life.

Of course, until recently it was not possible to place people in dangerous situations to record their fear behaviour. The current work demonstrates that VR-based immersive environments create a context in which people experience realistic fear, as indexed by subjective ratings, physiological responses, and behavioural changes. By creating a fear-inducing virtual environment, we were able to collect motion-capture data on participants who were actually feeling fearful at the time. As these were not actors, and they were not attempting to deliberately display fearful behaviours, the motion data that we extracted represents their emotional behaviour in situ. In terms of the behaviours themselves, there is no issue of one target being “better” than another because all of them are real. Some may be easier to interpret than others, but this natural variability in behaviour should be seen as a feature rather than a problem. This lends ecological validity to these findings, as observers were judging the naturally occurring variability that exists in emotional behaviour.

Given this natural variability, the strength of the relationship between observer ratings of fear and self-ratings of fear by the participants during the event was surprising. Across the three studies, the correlations between observer and self-ratings of fear were above .80, which is considered a “very strong” relationship. It seems, then, that targets were able to accurately signal not only their emotional state, but also the intensity of that emotional state. And perceivers were able to accurately identify both the state and the intensity of that state. The fact that we were able to replicate this positive relationship suggests that there are some behavioural characteristics that are broadly recognisable to people, indexing the subjective intensity of a target's emotional experience. The embodied nature of emotions suggests that perhaps the body serves as a more honest signal than the face. While facial expressions are arguably mostly communicative, something like a cautious gait on an actual high plank could be lifesaving.

The strength and stability of this relationship made us wonder what neural mechanism(s) might support such highly calibrated judgements of another person's subjective emotional state. One possibility is that humans sense the emotions of other humans via selective mirror neurons. In a recent investigation, Carrillo and colleagues (2019) found evidence in rats that mirror neurons within the anterior cingulate cortex selectively activated when observing a conspecific experiencing pain. However, these same neurons did not activate when observing a conspecific expressing fear, indicating that these neurons are selective enough to differentiate between the observation of a conspecific experiencing vs anticipating a painful stimulus. Although substantial work is needed to bridge findings from animal research with theories about emotion recognition in humans, the findings presented in the current studies support the notion that emotion recognition is highly specific.

One important limitation of the current work is the fact that the motions available to the targets were reasonably constrained by having to walk along a plank on the floor. In previous research, the actors walked along a path (e.g. either back-and-forth, or on an L-shaped path) which allowed for a more naturalistic gait. Although all of our motion-capture targets engaged in the same behaviour, constrained to the same spatial dimensions, the task did not allow individuals to use their natural walking gait. Future research using VR-based emotion elicitation should focus on mood inductions that allow for a more natural range of movement within the environment. For example, recording individuals walking down a virtual hallway (e.g. lined with emotion-evoking stimuli) would allow for less constrained movement.

A second limitation of the current research is that we focused solely on fear as the emotional state. That focus was largely based on the fact that the lab was already engaged in developing and using fear-based virtual environments in other research (Maymon et al., 2023). There is every reason to expect that any number of emotions could be evoked within virtual environments. In fact, other researchers have elicited joy, anger, boredom, anxiety, sadness (Felnhofer et al., 2015), and awe (Chirico et al., 2017) using VR. Future research would benefit greatly, then, by expanding the range to include other commonly examined emotional states. By eliciting emotions rather than having actors pretend, we can feel more confident that we are not, in Feldman Barrett’s (2011) terms, continuing to create “a science of emotional symbols” (p. 402, italics in original).

Acknowledgements

Materials, stimuli, and data for all studies are available on OSF.io at the following link: https://osf.io/vhjfe/?view_only=a259a8747e0e487c898de453bf83e8e5

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Ahlström, V., Blake, R., & Ahlström, U. (1997). Perception of biological motion. Perception, 26(12), 1539–1548. https://doi.org/10.1068/p261539

- Alaerts, K., Nackaerts, E., Meyns, P., Swinnen, S. P., & Wenderoth, N. (2011). Action and emotion recognition from point light displays: An investigation of gender differences. PLoS One, 6(6), e20989. https://doi.org/10.1371/journal.pone.0020989

- Ambadar, Z., Schooler, J. W., & Cohn, J. F. (2005). Deciphering the enigmatic face: The importance of facial dynamics in interpreting subtle facial expressions. Psychological Science, 16(5), 403–410. https://doi.org/10.1111/j.0956-7976.2005.01548.x

- Ambady, N., Conner, B., & Hallahan, M. (1999). Accuracy of judgments of sexual orientation from thin slices of behavior.

- Ambady, N., & Skowronski, J. J. (Eds.). (2008). First impressions. Guilford Publications.

- Atkinson, A. P. (2013). Bodily expressions of emotion: Visual cues and neural mechanisms. In J. Armony & P. Vuilleumier (Eds.), The Cambridge handbook of human affective neuroscience (pp. 198–222). Cambridge University Press. https://doi.org/10.1017/CBO9780511843716.012

- Atkinson, A. P., Dittrich, W. H., Gemmell, A. J., & Young, A. W. (2004). Emotion perception from dynamic and static body expressions in point-light and full-light displays. Perception, 33(6), 717–746. https://doi.org/10.1068/p5096

- Atkinson, A. P., Tunstall, M. L., & Dittrich, W. H. (2007). Evidence for distinct contributions of form and motion information to the recognition of emotions from body gestures. Cognition, 104(1), 59–72. https://doi.org/10.1016/j.cognition.2006.05.005

- Aviezer, H., Trope, Y., & Todorov, A. (2012). Holistic person processing: Faces with bodies tell the whole story. Journal of Personality and Social Psychology, 103(1), 20–37. https://doi.org/10.1037/a0027411

- Barrett, L. F. (2011). Was Darwin wrong about emotional expressions? Current Directions in Psychological Science, 20(6), 400–406. https://doi.org/10.1177/0963721411429125

- Barrett, L. F., Adolphs, R., Marsella, S., Martinez, A. M., & Pollak, S. D. (2019). Emotional expressions reconsidered: Challenges to inferring emotion from human facial movements. Psychological Science in the Public Interest, 20(1), 1–68. https://doi.org/10.1177/1529100619832930

- Barrett, L. F., Mesquita, B., & Gendron, M. (2011). Context in emotion perception. Current Directions in Psychological Science, 20(5), 286–290. https://doi.org/10.1177/0963721411422522

- Carrillo, M., Han, M., Migliorati, F., Liu, M., Gazzola, V., & Keysers, C. (2019). Emotional mirror neurons in the rat’s anterior cingulate cortex. Current Biology, 29(8), 1301–1312. https://doi.org/10.1016/j.cub.2019.03.024

- Chirico, A., Ferrise, F., Cordella, L., & Gaggioli, A. (2017). Designing awe in virtual reality: An experimental study. Frontiers in Psychology, 8. https://doi.org/10.3389/fpsyg.2017.02351

- Cleworth, T. W., Horslen, B. C., & Carpenter, M. G. (2012). Influence of real and virtual heights on standing balance. Gait & Posture, 36(2), 172–176. https://doi.org/10.1016/j.gaitpost.2012.02.010

- Coelho, C. M., Waters, A. M., Hine, T. J., & Wallis, G. (2009). The use of virtual reality in acrophobia research and treatment. Journal of Anxiety Disorders, 23(5), 563–574. https://doi.org/10.1016/j.janxdis.2009.01.014

- Coulson, M. (2004). Attributing emotion to static body postures: Recognition accuracy, confusions, and viewpoint dependence. Journal of Nonverbal Behavior, 28(2), 117–139. https://doi.org/10.1023/B:JONB.0000023655.25550.be

- Cutting, J. E., & Kozlowski, L. T. (1977). Recognizing friends by their walk: Gait perception without familiarity cues. Bulletin of the Psychonomic Society, 9(5), 353–356. https://doi.org/10.3758/BF03337021

- de Gelder, B. (2006). Towards the neurobiology of emotional body language. Nature Reviews Neuroscience, 7(3), 242–249. https://doi.org/10.1038/nrn1872

- Debiec, J., & Olsson, A. (2017). Social fear learning: From animal models to human function. Trends in Cognitive Sciences, 21(7), 546–555. https://doi.org/10.1016/j.tics.2017.04.010

- Dittrich, W. H., Troscianko, T., Lea, S. E. G., & Morgan, D. (1996). Perception of emotion from dynamic point-light displays represented in dance. Perception, 25(6), 727–738. https://doi.org/10.1068/p250727

- Eagly, A. H., Ashmore, R. D., & Makhijani, M. G. (1991). What is beautiful is good, but…: A meta-analytic review of research on the physical attractiveness stereotype.

- Ekman, P., & Friesen, W. V. (1967). Head and body cues in the judgment of emotion: A reformulation. Perceptual and Motor Skills, 24(3, PT. 1), 711–724. https://doi.org/10.2466/pms.1967.24.3.711

- El Basbasse, Y., Packheiser, J., Peterburs, J., Maymon, C., Gunturkun, O., Grimshaw, G., & Ocklenburg, S. (2023). Walk the plank! Using mobile EEG to investigate emotional lateralization of immersive fear in virtual reality. Royal Society Open Science.

- Felnhofer, A., Kothgassner, O. D., Schmidt, M., Heinzle, A.-K., Beutl, L., Hlavacs, H., & Kryspin-Exner, I. (2015). Is virtual reality emotionally arousing? Investigating five emotion inducing virtual park scenarios. International Journal of Human-Computer Studies, 82, 48–56. https://doi.org/10.1016/j.ijhcs.2015.05.004

- Frith, C. D., & Frith, U. (2007). Social cognition in humans. Current Biology, 17(16), R724–R732. https://doi.org/10.1016/j.cub.2007.05.068

- Gillath, O., Bahns, A. J., Ge, F., & Crandall, C. S. (2012). Shoes as a source of first impressions. Journal of Research in Personality, 46(4), 423–430. https://doi.org/10.1016/j.jrp.2012.04.003

- Gromer, D., Reinke, M., Christner, I., & Pauli, P. (2019). Causal interactive links between presence and fear in virtual reality height exposure. Frontiers in Psychology, 10. https://doi.org/10.3389/fpsyg.2019.00141

- Halovic, S., & Kroos, C. (2018a). Not all is noticed: Kinematic cues of emotion-specific gait. Human Movement Science, 57, 478–488. https://doi.org/10.1016/j.humov.2017.11.008

- Halovic, S., & Kroos, C. (2018b). Walking my way? Walker gender and display format confounds the perception of specific emotions. Human Movement Science, 57, 461–477. https://doi.org/10.1016/j.humov.2017.10.012

- The jamovi project. (2019). jamovi (Version 1.0) [Computer software]. https://www.jamovi.org

- Kisker, J., Gruber, T., & Schöne, B. (2019). Behavioral realism and lifelike psychophysiological responses in virtual reality by the example of a height exposure. Psychological Research, 65, 68–81. https://doi.org/10.1007/s00426-019-01244-9

- Kozlowski, L. T., & Cutting, J. E. (1977). Recognizing the sex of a walker from a dynamic point-light display. Perception & Psychophysics, 21(6), 575–580. https://doi.org/10.3758/BF03198740

- Li, S., Cui, L., Zhu, C., Li, B., Zhao, N., & Zhu, T. (2016). Emotion recognition using Kinect motion capture data of human gaits. PeerJ, 4, e2364. https://doi.org/10.7717/peerj.2364

- Martinez, L., Falvello, V. B., Aviezer, H., & Todorov, A. (2016). Contributions of facial expressions and body language to the rapid perception of dynamic emotions. Cognition and Emotion, 30(5), 939–952. https://doi.org/10.1080/02699931.2015.1035229

- Mauss, I. B., & Robinson, M. D. (2009). Measures of emotion: A review. Cognition & Emotion, 23(2), 209–237. https://doi.org/10.1080/02699930802204677

- Maymon, C. N., Crawford, M. T., Blackburne, K., Botes, A., Carnegie, K., Mehr, S. A., Meier, J., Murphy, J., Miles, N. L., Robinson, K., Tooley, M., & Grimshaw, G. M. (2023). The presence of fear: How subjective fear, not physiological changes, shapes the experience of presence. Unpublished manuscript.

- Mondloch, C. J., Nelson, N. L., & Horner, M. (2013). Asymmetries of influence: Differential effects of body postures on perceptions of emotional facial expressions. PLoS One, 8(9), e73605. https://doi.org/10.1371/journal.pone.0073605

- Montepare, J. M., Goldstein, S. B., & Clausen, A. (1987). The identification of emotions from gait information. Journal of Nonverbal Behavior, 11(1), 33–42. https://doi.org/10.1007/BF00999605

- Nelson, N. L., & Mondloch, C. J. (2017). Adults’ and children’s perception of facial expressions is influenced by body postures even for dynamic stimuli. Visual Cognition. https://doi.org/10.1080/13506285.2017.1301615

- Niedenthal, P. M., Barsalou, L. W., Winkielman, P., Krauth-Gruber, S., & Ric, F. (2005). Embodiment in attitudes, social perception, and emotion. Personality and Social Psychology Review, 9(3), 184–211. https://doi.org/10.1207/s15327957pspr0903_1

- Nielsen, E. I., Cleworth, T. W., & Carpenter, M. G. (2022). Exploring emotional-modulation of visually evoked postural responses through virtual reality. Neuroscience Letters, 777, 136586. https://doi.org/10.1016/j.neulet.2022.136586

- Oh, D., Shafir, E., & Todorov, A. (2020). Economic status cues from clothes affect perceived competence from faces. Nature Human Behaviour, 4(3), 287–293. https://doi.org/10.1038/s41562-019-0782-4

- Olivola, C. Y., & Todorov, A. (2010). Fooled by first impressions? Reexamining the diagnostic value of appearance-based inferences. Journal of Experimental Social Psychology, 46(2), 315–324. https://doi.org/10.1016/j.jesp.2009.12.002

- Olsson, A., & Phelps, E. A. (2007). Social learning of fear. Nature Neuroscience, 10(9), 1095–1102. https://doi.org/10.1038/nn1968

- Otta, E., Lira, B. B. P., Delevati, N. M., Cesar, O. P., & Pires, C. S. G. (1994). The effect of smiling and of head tilting on person perception. The Journal of Psychology, 128, 323–331. https://doi.org/10.1080/00223980.1994.9712736

- Sakaguchi, K., & Hasegawa, T. (2006). Person perception through gait information and target choice for sexual advances: Comparison of likely targets in experiments and real life. Journal of Nonverbal Behavior, 30(2), 63–85. https://doi.org/10.1007/s10919-006-0006-2

- Schmitt, A., & Atzwanger, K. (1995). Walking fast-ranking high: A sociobiological perspective on pace. Ethology and Sociobiology, 16(5), 451–462. https://doi.org/10.1016/0162-3095(95)00070-4

- Thoresen, J. C., Vuong, Q. C., & Atkinson, A. P. (2012). First impressions: Gait cues drive reliable trait judgements. Cognition, 124(3), 261–271. https://doi.org/10.1016/j.cognition.2012.05.018

- Troje, N. F., Westhoff, C., & Lavrov, M. (2005). Person identification from biological motion: Effects of structural and kinematic cues. Perception & Psychophysics, 67(4), 667–675. https://doi.org/10.3758/BF03193523

- van de Riet, W. A. C., & de Gelder, B. (2008). Watch the face and look at the body!: reciprocal interaction between the perception of facial and bodily expressions. Netherlands Journal of Psychology, 64(4), 143–151. https://doi.org/10.1007/BF03076417

- Van den Stock, J., Righart, R., & de Gelder, B. (2007). Body expressions influence recognition of emotions in the face and voice. Emotion, 7(3), 487–494. https://doi.org/10.1037/1528-3542.7.3.487

- Van Der Zant, T., & Nelson, N. L. (2021). Motion increases recognition of naturalistic postures but not facial expressions. Journal of Nonverbal Behavior, 45(4), 587–600. https://doi.org/10.1007/s10919-021-00372-4

- Van Der Zant, T., Reid, J., Mondloch, C. J., & Nelson, N. L. (2021). The influence of postural emotion cues on implicit trait judgements. Motivation & Emotion, 45(5), 641–648. https://doi.org/10.1007/s11031-021-09889-z

- Venture, G., Kadone, H., Zhang, T., Grèzes, J., Berthoz, A., & Hicheur, H. (2014). Recognizing emotions conveyed by human gait. International Journal of Social Robotics, 6(4), 621–632. https://doi.org/10.1007/s12369-014-0243-1

- Walk, R. D., & Homan, C. P. (1984). Emotion and dance in dynamic light displays. Bulletin of the Psychonomic Society, 22(5), 437–440. https://doi.org/10.3758/BF03333870

- Wang, L., Xia, L., & Zhang, D. (2017). Face-body integration of intense emotional expressions of victory and defeat. PLoS One, 12(2), e0171656. https://doi.org/10.1371/journal.pone.0171656

- Yan, W.-J., Li, X., Wang, S.-J., Zhao, G., Liu, Y.-J., Chen, Y.-H., & Fu, X. (2014). CASME II: An improved spontaneous micro-expression database and the baseline evaluation. PLoS One, 9(1), e86041. https://doi.org/10.1371/journal.pone.0086041