ABSTRACT

Face emotion recognition (FER) ability varies across the population, and autistic traits in the general population have been reported to contribute to this variation. Most previous studies have used photographs of posed facial expressions, whereas real social encounters involve dynamic expressions of varying intensity. We used static photographs and dynamic videos showing peak and partial facial expressions to investigate the influence of dynamism and expression intensity on FER in non-clinical adults who varied in autistic traits. Compared with participants with low autistic traits, those with high autistic traits had lower accuracy for static peak and dynamic partial expressions. In addition, high autistic traits were linked to an accuracy advantage for dynamic compared with static stimuli in both the partial and peak expression conditions, whereas participants with low autistic traits showed this dynamic advantage only for partial expressions. These findings suggest that dynamism and expression intensity differ in their importance for FER across the non-clinical population, in ways that appear linked to self-reported social-communication skills. They also suggest that FER difficulties in autism might relate to the ability to integrate subtle, dynamic information, rather than to static emotion categorisation alone.

One core feature of autism is an anomalous pattern of social communication, including a well-recognised difficulty in face emotion recognition (FER), the ability to detect and recognise nonverbal emotional cues from facial expressions (Yeung, 2022). FER is critical for inferring others’ mental states, which in turn facilitates the interpretation of social and emotional cues (Laycock et al., 2020). Difficulties in face processing could therefore lead to cascading social difficulties by making social environments unpredictable and incomprehensible.

Differences in the activation of “social brain” regions associated with the perception of social information, including FER, have been reported between autistic and non-autistic individuals (Kim et al., 2015). In particular, autistic individuals have shown reduced neural activity in response to facial expressions in the superior temporal sulcus (STS), a region that processes dynamic aspects of faces and inferences about others’ mental states, and in the amygdala, which supports rapid, automatic processing of the emotional or social significance of a stimulus (Kim et al., 2015; Pelphrey et al., 2007; Vandewouw et al., 2021).

Currently, face emotion processing is typically assessed with tasks requiring participants to label emotions from static photographs of facial expressions. This approach has established that autistic individuals have significantly lower static FER accuracy than non-autistic individuals (Yeung, 2022). However, a major limitation of this approach is that it overlooks facial dynamism (motion, speed and direction), which is fundamental to recognising complex emotional information from faces in real life.

A more realistic approach is therefore to assess FER with dynamic displays of emotion (e.g. videos of actors smiling or frowning). In non-autistic individuals, there is evidence of greater FER accuracy for dynamic than for static expressions, a phenomenon known as the dynamic advantage (Krumhuber et al., 2013). fMRI studies have also shown that activity in the fusiform face area, STS and amygdala of non-autistic individuals is more pronounced for dynamic than for static facial expressions (Krumhuber et al., 2013). These findings suggest that static stimuli may fail to capture the neural mechanisms involved in interpreting real, dynamic facial expressions (Krumhuber et al., 2013).

It is less clear whether the expected dynamic advantage is evident in autism. The vast majority of FER studies have used static rather than dynamic displays (Yeung, 2022). Some fMRI studies have found reduced activation in the social brain of autistic individuals for dynamic faces (Pelphrey et al., 2007; Sato et al., 2012), or less differentiation between dynamic faces and flowers (Vandewouw et al., 2020), although these studies did not collect behavioural data on recognition accuracy. Moreover, these studies used morphed dynamic faces, created by artificially blending between static photographs, which could unintentionally alter perception. Studies using real dynamic expressions have found reduced FER accuracy (Fridenson-Hayo et al., 2016) and, using eye-tracking, altered dwell time on the eye region (Stephenson et al., 2016) in autistic compared with non-autistic participants.

Few studies have directly compared emotion recognition in dynamic and static faces. Some have suggested that, relative to non-autistic individuals, FER difficulties in autism are more pronounced for dynamic than for static expressions, implying the absence of a dynamic advantage (Enticott et al., 2014; Rump et al., 2009; Tardif et al., 2007); however, this has not always been replicated (Georgopoulos et al., 2022).

The literature on dynamic faces is mixed, possibly because some studies used less realistic “morphed” dynamic faces (Enticott et al., 2014; Poljac et al., 2013) and because of the heterogeneous nature of autism. In addition, the complexity of FER differences in autism may have been missed because a large proportion of studies used static rather than dynamic face stimuli. It is therefore possible that these difficulties are not confined to emotion labelling, as assessed with static face tasks, but also extend to the ability to process rapidly changing (social) information over time (Krumhuber et al., 2013).

Expression intensity is another important factor affecting FER that is underused in autism research. Many studies assessing FER in autism have used faces displaying exaggerated, peak expressions (e.g. Kessels et al., 2010), whereas everyday social perception typically requires perceiving subtle or partial facial expressions. The few studies that have investigated the influence of expression intensity in autism found that autistic individuals had more pronounced difficulties with subtle expressions (Kessels et al., 2010; Rump et al., 2009). This could be explained by a lack of experience in social situations, where subtle expressions are commonly encountered (Kessels et al., 2010; Poljac et al., 2013). Similarly, autistic participants have recently been shown to have reduced FER when viewing dynamic faces with more realistic, less intense and less stereotypical expressions (Cuve et al., 2021). Such findings could indicate lower perceptual sensitivity to emotion in autism (Song & Hakoda, 2018).

Interestingly, there is evidence of a relationship between dynamism and expression intensity, whereby dynamism has been found to enhance recognition of more subtle expressions in non-autistic individuals (Krumhuber et al., 2013), warranting further exploration of this interaction in autism. Such research would be important for clarifying the nature of the challenges in autism and extending the ecological validity of previous findings that employed only static, peak expression stimuli.

Difficulty processing movement in facial expressions, particularly the transient movement more likely with subtle or partial expressions, could be expected from studies linking autism to anomalies in the fast-conducting magnocellular visual pathway, which processes low spatial but high temporal frequency information and ultimately supports motion perception (Laycock et al., 2020; Sutherland & Crewther, 2010). The magnocellular system also provides a direct connection to the amygdala through the superior colliculus–pulvinar pathway, so anomalies in this system could disrupt automatic emotional responses to critical nonverbal social cues, including dynamic facial expressions (Laycock et al., 2020). Supporting this explanation, autistic traits in the general population are linked to an atypical magnocellular response to dynamic stimuli (Sutherland & Crewther, 2010).

Additionally, autistic individuals have been found to benefit from dynamic facial expressions presented at slower than natural speeds (e.g. Tardif et al., 2007). This literature points to a difficulty with rapid information processing, whereby a rapidly changing visual environment presents information faster than it can be processed in autism, resulting in disorganised and slower emotion interpretation (McPartland et al., 2004; Tardif et al., 2007).

The current study

Most studies have suggested that autism is associated with a generalised difficulty in recognising facial displays of emotion, with the vast majority of this literature using static displays of basic expressions (Yeung, 2022). Furthermore, although there is evidence that the dynamism and intensity of an expression may influence the degree of difficulty in autism, there is also evidence that autistic children process dynamic and subtle emotional expressions as efficiently as non-autistic individuals of the same age (Kessels et al., 2010). Some of these discrepancies may be explained by age differences between samples, the heterogeneity of autism diagnoses, and the different test stimuli used. For example, while more recent studies have recognised the improved validity of dynamic expressions, many used computer-generated videos that dynamically morph between two static images (Kessels et al., 2010; Poljac et al., 2013). Only a few studies have used real videos, rather than morphed dynamism, to examine FER, and these studies generally did not also include a static face comparison (Cassidy et al., 2015; Cuve et al., 2021; Fridenson-Hayo et al., 2016; Stephenson et al., 2016). Where a static comparison was included, studies used morphed dynamism or examined only neural responses without a behavioural FER paradigm (Enticott et al., 2014; Pelphrey et al., 2007; Sato et al., 2012; Vandewouw et al., 2020; Vandewouw et al., 2021). Thus, it is unclear whether the linear progression of facial movements in morphed videos is perceived in the same way as a real moving facial expression. Because real-life facial expressions are exceptionally complex, emotion processing should be considered a process of integrating subtle and dynamic nonverbal cues to produce meaning. The inclusion of realistic dynamism and varying expression intensity may therefore be crucial for a deeper understanding of social challenges in autism.

To begin to investigate these questions, this study adopted a dimensional approach to autism. In this view, autistic traits fall along a continuum, with clinical cases forming one end; the broader autism phenotype describes individuals with autistic traits similar to, although milder than, those observed in individuals diagnosed with autism (Baron-Cohen et al., 2001). Importantly for the current study, the degree of autistic traits in the non-clinical population has been found to be inversely related to FER performance (Poljac et al., 2013), paralleling the emotion recognition difficulties reported in autism (Yeung, 2022).

This study used both dynamic/static and peak/partial facial expressions in a factorial design to investigate the influence of dynamism and expression intensity on FER across the broader autism spectrum. We hypothesised, first, that individuals with more autistic traits would have significantly lower overall FER accuracy than individuals with fewer autistic traits; second, that the dynamic advantage would be significantly less pronounced in individuals with more autistic traits than in those with fewer; and third, that individuals with more autistic traits would show significantly lower FER accuracy than those with fewer autistic traits for dynamic partial expressions, because these expressions, which most closely resemble those encountered in real social encounters, are more transient and preferentially engage the motion processing pathways linked to autism.

Method

Participants

An initial sample of 127 participants was recruited from the general population via social media. Some were university students who received course credit for participation, while the remainder were entered into a draw to win a $75 voucher. All participants provided informed consent, and all procedures adhered to those approved by the RMIT University Human Research Ethics Committee.

Two groups of participants were selected based on self-reported autistic traits measured with the Autism Spectrum Quotient (AQ; Baron-Cohen et al., 2001). Participants were included if they were aged between 18 and 40 years and reported normal or corrected-to-normal vision, and were excluded if they disclosed a diagnosis of a psychological or neurological condition, including autism (this exclusion criterion removed two participants from the low-AQ group and 10 from the high-AQ group). Participants scoring ≤ 13 on the AQ were allocated to the low-AQ group (n = 34) and those scoring ≥ 21 to the high-AQ group (n = 31); these cut-offs correspond to one standard deviation from the mean in previous large samples (Stevenson & Hart, 2017). The final sample of 65 participants was included in the analyses. See Table 1 for group characteristics, which indicate that the AQ groups were well matched for age, gender and education.

Table 1. Descriptive statistics for participant demographics (N = 65).

An a priori power analysis for sample size estimation was initially based on the large effect size (d = 1.38) calculated by Poljac et al. (2013) for overall emotion recognition accuracy in high- versus low-AQ groups. However, because Poljac et al. sampled participants from the top and bottom 5% of the AQ distribution, we conservatively assumed a smaller effect size for the power analysis, d = 0.8. The power analysis indicated that a sample of N = 52 (i.e. n = 26 per AQ group) was needed to detect this effect size, assuming α = .05 and power = .80.
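The article does not state which software or test family was used for the power analysis. Assuming a two-tailed independent-samples t-test, a common closed-form approximation (the normal-approximation sample size plus Guenther's small-sample correction of z²/4 per group) gives the per-group n. The sketch below illustrates that assumption and is not the authors' procedure; exact noncentral-t calculations can differ slightly for other inputs.

```python
from math import ceil
from statistics import NormalDist


def per_group_n(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate per-group n for a two-tailed independent-samples t-test.

    Assumption: normal-approximation formula plus Guenther's small-sample
    correction (z_{1-alpha/2}^2 / 4), which approximates the exact
    noncentral-t answer.
    """
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # two-tailed critical value
    z_beta = z.inv_cdf(power)           # quantile for the desired power
    n = 2 * ((z_alpha + z_beta) / d) ** 2 + z_alpha ** 2 / 4
    return ceil(n)                      # round up to whole participants


# Conservative effect size assumed in the text
n_group = per_group_n(0.8)
print(n_group, 2 * n_group)  # 26 52
```

Under the same approximation, the original d = 1.38 would call for only about 10 participants per group, which illustrates why the more conservative d = 0.8 yields a substantially larger target sample.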

Materials

Autistic traits

The AQ is a 50-item self-report questionnaire used to quantify autistic traits in the non-clinical population (Baron-Cohen et al., 2001). Participants indicated the degree to which they related to each item on a 4-point Likert scale (definitely agree, slightly agree, slightly disagree or definitely disagree). As recommended by the authors, a binary scoring approach was used: each item scored one point if the response indicated an autistic trait, whether “definitely” or “slightly”, and the AQ score was the sum of all item scores (possible range: 0–50). Higher AQ scores indicated more autistic traits. Internal consistency (Cronbach’s α = .79) and test-retest reliability (r = .86) for the AQ have been reported as strong (Stevenson & Hart, 2017).
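The binary scoring rule and the group cut-offs described in the Method can be sketched as follows. This is an illustration only: the set of items keyed in the “agree” direction must be taken from the published AQ scoring key (Baron-Cohen et al., 2001), so here it is a hypothetical parameter, `agree_keyed`, and the response strings are assumed to be the four Likert labels listed above.

```python
from __future__ import annotations

from typing import Optional

# The four Likert options; "definitely" and "slightly" responses count alike
AGREE = {"definitely agree", "slightly agree"}
DISAGREE = {"slightly disagree", "definitely disagree"}


def score_aq(responses: dict[int, str], agree_keyed: set[int]) -> int:
    """Binary AQ score: one point per item answered in the autistic-trait direction.

    responses   -- item number (1-50) mapped to one of the four Likert labels
    agree_keyed -- HYPOTHETICAL parameter: item numbers for which agreement
                   indicates an autistic trait (take these from the published
                   AQ scoring key; all other items are disagree-keyed)
    """
    score = 0
    for item, answer in responses.items():
        trait_answers = AGREE if item in agree_keyed else DISAGREE
        if answer in trait_answers:
            score += 1
    return score


def aq_group(score: int) -> Optional[str]:
    """Allocate a score to a group using the cut-offs given in the text."""
    if score <= 13:
        return "low-AQ"
    if score >= 21:
        return "high-AQ"
    return None  # intermediate scores: not allocated to either group


# Toy example with a made-up key (NOT the real AQ key): items 1-25 agree-keyed
toy_key = set(range(1, 26))
toy = {item: "slightly agree" for item in range(1, 51)}
toy_score = score_aq(toy, toy_key)
print(toy_score, aq_group(toy_score))  # 25 high-AQ
```

Passing the key as an argument keeps the scoring logic separate from the questionnaire content, so the same function works with whichever published key is used.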

Face emotion recognition (FER)

The Gorilla experiment builder (www.gorilla.sc) was used to run the dynamic and static FER tasks. Both tasks presented the faces of five male and five female actors, each portraying eight emotional expressions (happiness, sadness, fear, anger, disgust, surprise, pride and contempt) at two levels of expression intensity (peak and partial), randomly intermixed within each task (10 actors × 8 emotions × 2 intensities = 160 trials per task). Stimuli were taken from the Amsterdam Dynamic Facial Expression Set (ADFES), a dataset of video facial expressions portrayed by actors (van der Schalk et al., 2011). Participants had unlimited time to indicate which of the eight presented emotion labels best described the facial expression observed. Task performance was assessed as the percentage of correct responses.
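The factorial structure of each task (10 actors × 8 emotions × 2 intensities = 160 randomly intermixed trials) can be illustrated with a short sketch. The actor identifiers are hypothetical placeholders rather than ADFES file names, and the shuffle merely stands in for Gorilla's own randomisation, which is not described in the article.

```python
from __future__ import annotations

import itertools
import random
from typing import Optional

EMOTIONS = ["happiness", "sadness", "fear", "anger",
            "disgust", "surprise", "pride", "contempt"]
INTENSITIES = ["peak", "partial"]
# Hypothetical actor IDs (not ADFES file names): five female and five male actors
ACTORS = [f"F{i}" for i in range(1, 6)] + [f"M{i}" for i in range(1, 6)]


def build_trials(task: str, seed: Optional[int] = None) -> list[dict]:
    """One randomly ordered trial list for a task ("static" or "dynamic")."""
    trials = [
        {"task": task, "actor": actor, "emotion": emotion, "intensity": intensity}
        for actor, emotion, intensity in itertools.product(ACTORS, EMOTIONS, INTENSITIES)
    ]
    # Shuffle so that peak and partial trials are randomly intermixed
    random.Random(seed).shuffle(trials)
    return trials


dynamic_trials = build_trials("dynamic", seed=1)
print(len(dynamic_trials))  # 160
```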

Dynamic emotion recognition

The dynamic emotion recognition task included a peak expression condition consisting of 80 videos in which actors posed an initial neutral expression for 500 ms (baseline) before moving to a peak emotional expression, with durations varying by actor and emotion (mean duration = 867 ms, SD = 413 ms). A partial expression condition included the same 80 videos, again with a 500 ms neutral baseline, but ending at the point where the emotion first emerged (mean duration = 275 ms, SD = 175 ms). For each video, this point of emergence was determined by stepping through the frames until one or more facial features had moved. Although this decision was somewhat subjective, partial videos were on average 33% of the duration of the matched peak video. The validity of these decisions is supported by the observation that, across the whole sample, accuracy for partial videos was significantly lower than for peak videos (p < .001) but still above chance (12.5% with eight response options; p < .001). In both conditions, the last frame of the video was then presented for 200 ms (see supplementary information, Figure S1, for an illustration of the trial sequence).
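With eight response options, chance accuracy is 1/8 = 12.5%. The article does not report which test was used for the above-chance comparison. As one illustration, and a swapped-in technique rather than necessarily the authors', an exact one-sided binomial test can ask whether a single participant's accuracy on the 80 partial-video trials exceeds chance; a group-level test of mean accuracy against 12.5% would be another option. The example score below is hypothetical.

```python
from math import comb

CHANCE = 1 / 8  # eight emotion labels, so guessing is correct 12.5% of the time


def p_above_chance(k: int, n: int, p: float = CHANCE) -> float:
    """One-sided exact binomial p-value, P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, x) * p**x * (1 - p) ** (n - x) for x in range(k, n + 1))


# Hypothetical participant: 40 of 80 partial-video trials correct (50%)
print(p_above_chance(40, 80) < 0.001)  # True
```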

Static emotion recognition

The static emotion recognition task presented still images taken from the final frame of each video used in the dynamic task: 80 images from the peak expression videos and 80 from the partial expression videos. The trial format was the same as for the dynamic task, with static faces presented for 800 ms.

Procedure

Participants accessed the study via a web link to Gorilla and completed the tasks online, with access restricted to desktop or laptop computers. After viewing the Participant Information Statement, participants gave informed consent by selecting the “Next” button. Participants first completed the demographics and AQ questionnaires and then completed the dynamic and static FER tasks in counterbalanced order. Each emotion recognition task took approximately 8–10 min, with a break offered halfway through each. The entire testing session took approximately 30 min.

Results

Responses recorded on Gorilla were analysed using IBM SPSS Statistics 26. Accuracy scores on the FER tasks were analysed using a three-way mixed-design analysis of variance (ANOVA), with dynamism (static versus dynamic) and expression intensity (peak versus partial) as within-subjects factors and AQ group (high versus low) as the between-subjects factor. Simple main effects were used to interpret significant interactions. The dynamic advantage was defined as the mean difference in FER accuracy between conditions (dynamic minus static).
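The accuracy scores entering the ANOVA and the dynamic advantage defined above can be sketched as follows: percentage correct is computed per participant for each of the four dynamism × intensity cells, and the dynamic advantage is the dynamic minus static difference within each intensity level. The trial-record format (dicts with `task`, `intensity` and `correct` keys) is an assumption for illustration, and the ANOVA itself, run in SPSS, is not reproduced.

```python
from __future__ import annotations

from collections import defaultdict


def cell_accuracy(trials: list[dict]) -> dict[tuple[str, str], float]:
    """Percentage correct in each (task, intensity) cell for one participant."""
    hits: dict[tuple[str, str], int] = defaultdict(int)
    counts: dict[tuple[str, str], int] = defaultdict(int)
    for trial in trials:
        cell = (trial["task"], trial["intensity"])
        counts[cell] += 1
        if trial["correct"]:
            hits[cell] += 1
    return {cell: 100 * hits[cell] / counts[cell] for cell in counts}


def dynamic_advantage(acc: dict[tuple[str, str], float]) -> dict[str, float]:
    """Dynamic minus static accuracy, computed separately for each intensity."""
    return {
        intensity: acc[("dynamic", intensity)] - acc[("static", intensity)]
        for intensity in ("peak", "partial")
    }


# Toy data (hypothetical): four trials per cell, with n_correct of them correct
toy_trials = []
for task, intensity, n_correct in [("dynamic", "peak", 4), ("static", "peak", 3),
                                   ("dynamic", "partial", 3), ("static", "partial", 1)]:
    toy_trials += [{"task": task, "intensity": intensity, "correct": i < n_correct}
                   for i in range(4)]

print(dynamic_advantage(cell_accuracy(toy_trials)))  # {'peak': 25.0, 'partial': 50.0}
```

A positive value means the participant was more accurate with dynamic faces, so the group means of these differences correspond to the dynamic advantage reported for each AQ group.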

Prior to conducting analyses, the assumptions of normality and homogeneity of variance were tested. No assumption violations, missing values, or extreme outliers were found. A three-way mixed ANOVA revealed a main effect of AQ group (F(1,63) = 7.13, p = .010, ηp² = 0.10), with lower accuracy in the high-AQ group than in the low-AQ group; a main effect of dynamism (F(1,63) = 30.65, p < .001, ηp² = 0.33), where participants had greater accuracy for dynamic than for static faces; and a main effect of expression intensity (F(1,63) = 746.45, p < .001, ηp² = 0.92), where participants had greater accuracy for peak than for partial expressions.

A significant interaction was found between dynamism and expression intensity (F(1,63) = 9.99, p = .002, ηp² = 0.14). Importantly, there was also a significant three-way interaction between dynamism, expression intensity and AQ group (F(1,63) = 5.46, p = .023, ηp² = 0.08). There were no significant two-way interactions involving AQ group (p’s > .22).
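The effect sizes accompanying each F test are consistent with partial eta squared, which for an effect with df1 numerator and df2 error degrees of freedom can be recovered from the F ratio as ηp² = (F × df1) / (F × df1 + df2). The short check below recomputes the reported omnibus values from their F statistics; it is a consistency check on the reported numbers, not part of the original analysis.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)


# F values and effect sizes reported in the text, all with df = (1, 63)
reported = {
    "AQ group": (7.13, 0.10),
    "dynamism": (30.65, 0.33),
    "expression intensity": (746.45, 0.92),
    "dynamism x intensity": (9.99, 0.14),
    "three-way interaction": (5.46, 0.08),
}
for effect, (f, eta_reported) in reported.items():
    eta = partial_eta_squared(f, 1, 63)
    print(f"{effect}: {eta:.2f} (reported {eta_reported:.2f})")
```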

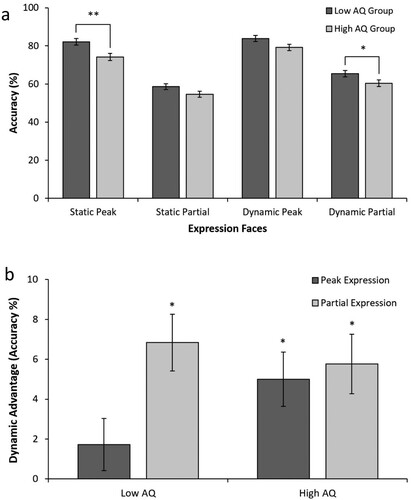

Figure 1(a) shows that dynamic peak expressions yielded the greatest accuracy for both the high-AQ group (M = 79.19, SD = 9.53) and the low-AQ group (M = 83.86, SD = 9.36). Conversely, static partial expressions yielded the lowest accuracy for both the high-AQ group (M = 54.64, SD = 7.30) and the low-AQ group (M = 58.60, SD = 9.79).

Figure 1. (a) Mean percentage accuracy in FER across dynamism and expression intensities between AQ groups. **p < .01; *p < .05. (b) Magnitude of the dynamic advantage between AQ groups for peak and partial expressions. The figure shows the mean difference in percentage accuracy of FER (dynamic minus static), which is referred to as the dynamic advantage. *A significant dynamic advantage for that condition (i.e. mean difference is greater than 0), p < .001. Error bars indicate standard error of the mean.

Simple main effects were used to further investigate the significant three-way interaction (see Figure 1(a)). Examination of the effect of AQ group revealed that the high-AQ group had significantly lower accuracy than the low-AQ group for static peak expressions (F(1,63) = 9.33, p = .003, ηp² = 0.13) and dynamic partial expressions (F(1,63) = 4.58, p = .036, ηp² = 0.07). No group differences were found for static partial (p = .071) or dynamic peak (p = .051) expressions.

Examination of the effect of dynamism (the dynamic advantage) revealed that, for partial expressions, accuracy in the low-AQ group was significantly greater for dynamic than for static expressions (F(1,63) = 22.98, p < .001, ηp² = 0.27). However, for peak expressions, no significant difference between dynamic and static expressions was found in the low-AQ group. For the high-AQ group, accuracy was significantly greater for dynamic than for static expressions for both partial expressions (F(1,63) = 14.90, p < .001, ηp² = 0.19) and peak expressions (F(1,63) = 13.43, p = .001, ηp² = 0.18). The dynamic advantage for peak and partial expressions in each AQ group is compared in Figure 1(b). Finally, examination of the effect of expression intensity revealed significantly greater accuracy for peak than for partial expressions in both the dynamic and static conditions and in both AQ groups (p’s < .001).

Although a chi-squared test indicated that the gender ratio did not differ significantly between AQ groups (p = .08), the higher number of females in the low-AQ than in the high-AQ group could still have influenced the results. However, further analysis suggested that gender was not associated with face dynamism or expression intensity and was unlikely to explain the AQ group differences (see supplementary information).

One limitation of the present design is that stimulus duration is inherently confounded with expression intensity: peak and partial expressions also differed in average stimulus duration (see Method), which makes inferences about expression intensity difficult. However, an image-based analysis of the correlation between video duration and FER accuracy suggested no relationship (see supplementary information), making it more plausible that performance differences between peak and partial expressions reflect the intensity of the expression rather than stimulus duration per se.

Discussion

The current study investigated the influence of dynamism and expression intensity on facial emotion recognition across the broader autism spectrum. Individuals with more autistic traits had lower FER accuracy for both static peak and dynamic partial facial expressions. These results suggest that expression intensity differentially influences FER performance across the autism spectrum depending on the dynamism of the stimuli.

The first hypothesis, that individuals with more autistic traits would have lower FER accuracy than individuals with fewer autistic traits, was supported: high-AQ participants had significantly lower overall accuracy than low-AQ participants. This finding is consistent with past literature reporting reduced static and dynamic FER performance associated with autistic traits (Poljac et al., 2013) and with autism (Yeung, 2022).

The second hypothesis, that the dynamic advantage (i.e. increased accuracy in identifying emotions from dynamic compared with static faces) would be significantly less pronounced in individuals with more autistic traits, was not supported. Past literature has suggested that dynamic expressions can be more difficult to interpret than static expressions for autistic individuals, owing to a relative difficulty with rapid processing (Enticott et al., 2014; Rump et al., 2009; Tardif et al., 2007). Hence, given that non-autistic participants with high AQ scores have been shown to have anomalous magnocellular processing (Sutherland & Crewther, 2010), we expected the high-AQ group to show a reduced or absent dynamic advantage. Instead, consistent with a recent study (Georgopoulos et al., 2022), the high-AQ group did show a dynamic advantage. Unexpectedly, however, this advantage was present for both peak and partial expressions, whereas the low-AQ group showed it only for partial expressions.

The low-AQ group may have shown no dynamic advantage for peak expressions because these exaggerated static expressions already provided sufficient information for interpreting the emotion, such that dynamic movement added no further benefit. Consistent with this interpretation, the low-AQ group did show a dynamic advantage for partial expressions, suggesting that these more difficult static expressions lacked sufficient information, so that dynamism helped participants integrate additional facial information and improved recognition accuracy. Notably, this absence of a dynamic advantage does not appear to reflect a ceiling effect: low-AQ accuracy for peak expressions was 82–84% across the static and dynamic conditions, which is high but not at ceiling. In the high-AQ group, dynamism significantly enhanced FER for both peak and partial expressions, possibly because static expressions lacked sufficient information for these participants to interpret them, or because dynamic expressions provided ample additional information to be decoded. Regardless of interpretation, this unexpected finding reflects a difference in dynamic and static face processing in high-AQ participants that requires further exploration.

The third hypothesis, that individuals with more autistic traits would have lower accuracy for dynamic partial facial expressions than those with fewer autistic traits, was supported. This result is consistent with the disadvantage for dynamic partial expressions reported in autistic populations (Kessels et al., 2010; Rump et al., 2009). This is arguably the most difficult condition, as it requires integrating dynamically moving facial features without the expression reaching a more obvious peak. The high-AQ group also had lower accuracy than the low-AQ group for static peak expressions. However, the groups did not differ for static partial or dynamic peak expressions, possibly because these conditions posed a similar level of processing difficulty for both groups.

Rump et al. (2009) conducted one of the few studies to examine both dynamism and expression intensity, finding that autistic children had greater difficulty with lower intensity expressions. Although the current study used a different paradigm, our findings parallel theirs. However, we also found reduced accuracy for static peak expressions with time-limited presentations, whereas Rump et al. found no such difference when static presentation time was unlimited.

Because FER accuracy differentiated the AQ groups for both the most complex and realistic expressions (i.e. dynamic partial expressions) and the simpler, less realistic expressions (i.e. static peak expressions), the current findings could be explained by a single “face perception” mechanism. Alternatively, two different cognitive and neural mechanisms may be involved. Specifically, while both dynamic partial and static peak expressions require the recognition of an emotion, static recognition arguably relies on a slower, more deliberative, category-based template-matching ability, whereas dynamic recognition requires the ability to interpret rapidly changing information. This distinction is supported by literature demonstrating that static and dynamic face processing rely on different neural mechanisms (Krumhuber et al., 2013; Pitcher et al., 2011). Further research should seek to distinguish between these explanations and determine whether autism is linked to specific differences in the neural mechanisms that process transient moving information, or whether differences in emotion processing mechanisms are sufficient to explain FER differences. Given that past literature has established the speed of facial dynamics as an important factor in dynamic FER in autism (Tardif et al., 2007), investigating the influence of expression intensity with slower dynamic facial expressions may help clarify the nature of emotion recognition challenges in autism.

There are some limitations to the current study that need to be considered. The dynamic videos varied in duration (from 560 to 2120 ms for peak expressions and from 80 to 1280 ms for partial expressions, with partial videos on average 33% of the duration of the matched peak videos), so future studies should control duration to confirm whether the reduced performance of the high-AQ group depended on the brief nature of the dynamic partial expressions. This concern is partly mitigated by follow-up analyses in the present work showing that stimulus duration did not correlate with FER accuracy (see supplementary information). When this association was examined separately for partial and peak dynamic videos, the duration of peak videos was negatively correlated with accuracy. Lower accuracy for longer stimuli is surprising; speculatively, actors may have added a second movement after the initial facial expression, adding complexity or confusion to the intended expression.

To approximate the subtle expressions encountered in real life, this study used partial expressions that did not display subtle emotion per se, but instead showed a movement towards a peak expression that was artificially cut short. Genuine subtle expressions might involve different muscle movements from the partial expressions used here. For example, a person who is only mildly sad might show a small facial expression involving slightly different muscle movements from those produced when an extremely sad expression is stopped part of the way through. The absence of a correlation between partial video duration and accuracy provides some reassurance that the somewhat subjective criteria used to generate the partial videos did not introduce a bias in these stimuli. Another possible limitation is that the ADFES faces used here show actors portraying staged emotional expressions. However, these real videos may still have greater ecological validity than the computer-generated stimuli used in past studies, which artificially morphed between images of different expressions (Kessels et al., 2010; Poljac et al., 2013). Nonetheless, it would be valuable for future studies to investigate whether dynamism and expression intensity have the same effects on FER in autism with more genuine emotional expressions or with live actors.

A further design-related concern is the task order effect we observed. The order of completing the dynamic and static tasks was counterbalanced across the sample. Static FER accuracy was higher when the static task was completed second rather than first, whereas dynamic FER accuracy showed no such effect. The proportion of participants completing the static task first or second was the same in each AQ group. We therefore argue that this practice effect would, if anything, reduce rather than inflate the effects that we report. However, the sample size did not permit participant groups to be further subdivided to test the main analysis separately for each order. This issue therefore warrants further examination, and our main findings should be interpreted with some caution. Nevertheless, static accuracy improved only when participants had already completed the dynamic task. This unexpected learning effect therefore appears to highlight the greater amount of information provided by dynamic faces, consistent with the broader dynamic face advantage (Krumhuber et al., 2013), and could have interesting implications for designing learning and remediation approaches in future studies.
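
The counterbalancing argument above can be illustrated with a toy example. The Python sketch below makes two assumptions purely for illustration: the practice gain is additive and the same size in both AQ groups, and each task order contains equal numbers of participants. All scores are hypothetical. Under these assumptions the raw group difference in static accuracy is unchanged, but within-group variability increases. The standardised effect therefore shrinks. Here that effect is Cohen's d, using a pooled SD that assumes equal group sizes.

```python
from math import sqrt
from statistics import mean, stdev

# Hypothetical static-task accuracies (proportion correct); illustrative only,
# not data from this study.
baseline = {
    "low_AQ": [0.80, 0.82, 0.78, 0.84, 0.81, 0.79],
    "high_AQ": [0.72, 0.74, 0.70, 0.76, 0.73, 0.71],
}
PRACTICE_BOOST = 0.05  # assumed additive gain when the static task is completed second

def apply_order(scores, boost):
    """First half does the static task first (no gain); second half does it second (gain)."""
    half = len(scores) // 2
    return scores[:half] + [s + boost for s in scores[half:]]

def cohens_d(a, b):
    """Mean difference divided by the pooled SD (equal group sizes assumed)."""
    pooled_sd = sqrt((stdev(a) ** 2 + stdev(b) ** 2) / 2)
    return (mean(a) - mean(b)) / pooled_sd

# Same order split in both groups, mirroring the balanced counterbalancing.
observed = {group: apply_order(scores, PRACTICE_BOOST) for group, scores in baseline.items()}

diff_before = mean(baseline["low_AQ"]) - mean(baseline["high_AQ"])
diff_after = mean(observed["low_AQ"]) - mean(observed["high_AQ"])
d_before = cohens_d(baseline["low_AQ"], baseline["high_AQ"])
d_after = cohens_d(observed["low_AQ"], observed["high_AQ"])

print(f"raw group difference: {diff_before:.3f} -> {diff_after:.3f}")
print(f"standardised effect (d): {d_before:.2f} -> {d_after:.2f}")
```

If the practice gain differed between AQ groups, the raw difference would also shift. This toy case therefore only illustrates the balanced scenario the argument relies on.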

Conducting the study online was advantageous because it enabled access to a wider population. However, participants were potentially exposed to more distractions than they would be in a controlled laboratory environment. To mitigate this concern, participants were offered frequent breaks throughout the study to increase engagement and reduce fatigue. As the sample was large enough to detect the effects of dynamism and expression intensity on FER, increased variability or generally noisy data do not appear to have been a major problem. Nevertheless, uncontrolled factors may still have influenced performance, so research in more controlled laboratory settings is warranted.

The use of a non-clinical sample in the current study was also beneficial, as it facilitated access to a broader population and a larger sample. FER abilities were not directly tested in clinically diagnosed autistic participants. However, the AQ allowed us to form a sample with traits reliably similar to, if less pronounced than, those observed in individuals with autism (Baron-Cohen et al., 2001). Studying autistic traits in the general population is not a substitute for research with participants diagnosed with autism. Nonetheless, this approach of modelling autism in the general population can complement such research and guide future work with autistic populations that builds on these foundational findings.

Conclusion and implications

As facial expressions encountered in real life are exceptionally complex, emotion processing should be considered a process of integrating many subtle, rapidly changing nonverbal cues to produce meaning. There is existing evidence of static FER difficulties in autism, but only limited behavioural evidence on emotion recognition performance with real dynamic facial displays. The current study revealed the importance of both dynamism and expression intensity for FER across the broader autism phenotype. These findings highlight the benefits of using more ecologically valid stimuli. We suggest that dynamic partial emotional expressions could provide greater insight into what it is about real-life social interactions that is challenging in autism.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

References

- Baron-Cohen, S., Wheelwright, S., Skinner, R., Martin, J., & Clubley, E. (2001). The autism-spectrum quotient (AQ): Evidence from Asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. Journal of Autism and Developmental Disorders, 31(1), 5–17. https://doi.org/10.1023/A:1005653411471

- Cassidy, S., Mitchell, P., Chapman, P., & Ropar, D. (2015). Processing of spontaneous emotional responses in adolescents and adults with autism spectrum disorders: Effect of stimulus type. Autism Research, 8(5), 534–544. https://doi.org/10.1002/aur.1468

- Cuve, H. C., Castiello, S., Shiferaw, B., Ichijo, E., Catmur, C., & Bird, G. (2021). Alexithymia explains atypical spatiotemporal dynamics of eye gaze in autism. Cognition, 212, 104710. https://doi.org/10.1016/j.cognition.2021.104710

- Enticott, P. G., Kennedy, H. A., Johnston, P. J., Rinehart, N. J., Tonge, B. J., Taffe, J. R., & Fitzgerald, P. B. (2014). Emotion recognition of static and dynamic faces in autism spectrum disorder. Cognition and Emotion, 28(6), 1110–1118. https://doi.org/10.1080/02699931.2013.867832

- Fridenson-Hayo, S., Berggren, S., Lassalle, A., Tal, S., Pigat, D., Bölte, S., Baron-Cohen, S., & Golan, O. (2016). Basic and complex emotion recognition in children with autism: Cross-cultural findings. Molecular Autism, 7(1), 52. https://doi.org/10.1186/s13229-016-0113-9

- Georgopoulos, M. A., Brewer, N., Lucas, C. A., & Young, R. L. (2022). Speed and accuracy of emotion recognition in autistic adults: The role of stimulus type, response format, and emotion. Autism Research, 15(9), 1686–1697. https://doi.org/10.1002/aur.2713

- Kessels, R., Spee, P., & Hendriks, A. (2010). Perception of dynamic facial emotional expressions in adolescents with autism spectrum disorders (ASD). Translational Neuroscience, 1(3), 228–232. https://doi.org/10.2478/v10134-010-0033-8

- Kim, S. Y., Choi, U. S., Park, S. Y., Oh, S. H., Yoon, H. W., Koh, Y. J., Im, W. Y., Park, J. I., Song, D. H., Cheon, K. A., & Lee, C. U. (2015). Abnormal activation of the social brain network in children with autism spectrum disorder: An fMRI study. Psychiatry Investigation, 12(1), 37–45. https://doi.org/10.4306/pi.2015.12.1.37

- Krumhuber, E. G., Kappas, A., & Manstead, A. S. R. (2013). Effects of dynamic aspects of facial expressions: A review. Emotion Review, 5(1), 41–46. https://doi.org/10.1177/1754073912451349

- Laycock, R., Crewther, S. G., & Chouinard, P. A. (2020). Blink and you will miss it: A core role for fast and dynamic visual processing in social impairments in autism spectrum disorder. Current Developmental Disorders Reports, 7(4), 237–248. https://doi.org/10.1007/s40474-020-00220-y

- McPartland, J., Dawson, G., Webb, S. J., Panagiotides, H., & Carver, L. J. (2004). Event-related brain potentials reveal anomalies in temporal processing of faces in autism spectrum disorder. Journal of Child Psychology and Psychiatry, 45(7), 1235–1245. https://doi.org/10.1111/j.1469-7610.2004.00318.x

- Pelphrey, K. A., Morris, J. P., McCarthy, G., & Labar, K. S. (2007). Perception of dynamic changes in facial affect and identity in autism. Social Cognitive and Affective Neuroscience, 2(2), 140–149. https://doi.org/10.1093/scan/nsm010

- Pitcher, D., Dilks, D. D., Saxe, R. R., Triantafyllou, C., & Kanwisher, N. (2011). Differential selectivity for dynamic versus static information in face-selective cortical regions. NeuroImage, 56(4), 2356–2363. https://doi.org/10.1016/j.neuroimage.2011.03.067

- Poljac, E., Poljac, E., & Wagemans, J. (2013). Reduced accuracy and sensitivity in the perception of emotional facial expressions in individuals with high autism spectrum traits. Autism, 17(6), 668–680. https://doi.org/10.1177/1362361312455703

- Rump, K. M., Giovannelli, J. L., Minshew, N. J., & Strauss, M. S. (2009). The development of emotion recognition in individuals with autism. Child Development, 80(5), 1434–1447. https://doi.org/10.1111/j.1467-8624.2009.01343.x

- Sato, W., Toichi, M., Uono, S., & Kochiyama, T. (2012). Impaired social brain network for processing dynamic facial expressions in autism spectrum disorders. BMC Neuroscience, 13(1), 99. https://doi.org/10.1186/1471-2202-13-99

- Song, Y., & Hakoda, Y. (2018). Selective impairment of basic emotion recognition in people with autism: Discrimination thresholds for recognition of facial expressions of varying intensities. Journal of Autism and Developmental Disorders, 48(6), 1886–1894. https://doi.org/10.1007/s10803-017-3428-2

- Stephenson, K. G., Quintin, E. M., & South, M. (2016). Age-related differences in response to music-evoked emotion among children and adolescents with autism spectrum disorders. Journal of Autism and Developmental Disorders, 46(4), 1142–1151. https://doi.org/10.1007/s10803-015-2624-1

- Stevenson, J. L., & Hart, K. R. (2017). Psychometric properties of the autism-spectrum quotient for assessing low and high levels of autistic traits in college students. Journal of Autism and Developmental Disorders, 47(6), 1838–1853. https://doi.org/10.1007/s10803-017-3109-1

- Sutherland, A., & Crewther, D. P. (2010). Magnocellular visual evoked potential delay with high autism spectrum quotient yields a neural mechanism for altered perception. Brain, 133(7), 2089–2097. https://doi.org/10.1093/brain/awq122

- Tardif, C., Lainé, F., Rodriguez, M., & Gepner, B. (2007). Slowing down presentation of facial movements and vocal sounds enhances facial expression recognition and induces facial-vocal imitation in children with autism. Journal of Autism and Developmental Disorders, 37(8), 1469–1484. https://doi.org/10.1007/s10803-006-0223-x

- van der Schalk, J., Hawk, S. T., Fischer, A. H., & Doosje, B. (2011). Moving faces, looking places: Validation of the Amsterdam dynamic facial expression set (ADFES). Emotion, 11(4), 907–920. https://doi.org/10.1037/a0023853

- Vandewouw, M. M., Choi, E., Hammill, C., Arnold, P., Schachar, R., Lerch, J. P., Anagnostou, E., & Taylor, M. J. (2020). Emotional face processing across neurodevelopmental disorders: A dynamic faces study in children with autism spectrum disorder, attention deficit hyperactivity disorder and obsessive-compulsive disorder. Translational Psychiatry, 10(1), 375. https://doi.org/10.1038/s41398-020-01063-2

- Vandewouw, M. M., Choi, E. J., Hammill, C., Lerch, J. P., Anagnostou, E., & Taylor, M. J. (2021). Changing faces: Dynamic emotional face processing in autism spectrum disorder across childhood and adulthood. Biological Psychiatry: Cognitive Neuroscience and Neuroimaging, 6(8), 825–836. https://doi.org/10.1016/j.bpsc.2020.09.006

- Yeung, M. K. (2022). A systematic review and meta-analysis of facial emotion recognition in autism spectrum disorder: The specificity of deficits and the role of task characteristics. Neuroscience & Biobehavioral Reviews, 133, 104518. https://doi.org/10.1016/j.neubiorev.2021.104518