Abstract

Background: We previously developed and psychometrically validated a self-reported memory problem (SRMP) measure using principal component analysis. In the present study, we applied item response theory (IRT) analysis to further examine the construct validity and determine item-level psychometric properties of the SRMP.

Methods: The sample included 530 female breast cancer survivors (61% non-Hispanic White, mean age = 57 years) who were recruited from community-based organizations and large health care systems. We examined the construct validity, item-level psychometric properties, and differential item functioning (DIF) of the SRMP using confirmatory factor analysis (CFA), IRT and logistic regression analysis models.

Results: The CFA confirmed a one-factor structure for the SRMP (comparative fit index [CFI] = 0.996, root-mean-square-error-approximation [RMSEA] = 0.059). As expected, SRMP scores correlated significantly with pain, mood, and fatigue, but not with spiritual health locus of control (SPR). DIF analysis showed no measurement differences across race/ethnicity or age groups.

Conclusion: The CFA and DIF analysis supported the construct validity of the SRMP and its use in an ethnically diverse breast cancer population. These findings provide further evidence of the generalizability for the SRMP, and support its utilization as a psychometrically valid and reliable screening measure of cancer and treatment-related memory difficulties.

Introduction

Cancer and treatment-related neurocognitive dysfunction (CRND) is a debilitating condition that can impact multiple dimensions of psychosocial functioning, activities of daily living, and overall quality of life (QoL). CRND occurs in about 75% of survivors of non-central nervous system cancers (non-CNS) [Citation1–7] and involves mild to moderate difficulties in attention, memory, processing speed and executive functioning [Citation4,Citation8–10]. Difficulties remembering are the most commonly reported cancer-related neurocognitive sequelae [Citation11].

Memory performance is central to successful engagement in normal mental operations and activities of daily living. Studies have shown strong associations between subjective complaints of cognitive difficulties and deficits in daily functioning and QoL [Citation12–14]. The magnitude of CRND is also noteworthy. Individuals in the United States with a history of non-CNS cancer and cancer treatments are 40% more likely to experience memory problems compared with age-matched healthy controls without a history of cancer or cancer treatments [Citation10]. Finding ways to systematically screen cognitive impairments can have a positive health impact for cancer patients/survivors.

CRND is usually determined based on self-reports of cognitive difficulties or objective neuropsychological tests [Citation9–11,Citation15,Citation16]. However, these methods are debatable and commonly yield inconsistent outcomes [Citation9,Citation17,Citation18]. Self-reports are subjective and available objective neuropsychological tests may lack ecological validity for cancer populations [Citation9,Citation15,Citation19]. Consequently, there are no ‘gold standard’ measures for CRND, which underscores the need to develop ecologically validated screening measures [Citation11,Citation15,Citation19,Citation20].

We previously validated the self-reported memory problem (SRMP) measure in a sample of adult-onset non-CNS survivors using classical test theory (CTT) methods that yielded evidence of unidimensionality and high internal consistency [Citation19]. The CTT analysis focused on the internal structure of the SRMP but did not establish criterion-related validity by examining the relationship of the SRMP with other constructs. Additionally, the CTT analysis did not (a) distinguish between examinees’ and test items’ characteristics or (b) establish measurement invariance based on sociodemographics, and (c) it treated reliability or measurement precision as a constant (i.e., CTT considered reliability a property of test scores that applies equally to every participant, whereas reliability can vary depending on the latent trait level in IRT analysis). In the present study, we extend the CTT validation of the SRMP using IRT analysis and differential item functioning (DIF) based on age and race/ethnicity. Item parameter estimates (e.g., item difficulty and discrimination [slope] parameters based on the item characteristic curves) obtained from this study will inform future applications of the SRMP.

Methods

Study population

The sample included 530 female breast cancer survivors, 25–82 years old, identified via community-based organizations (Bridge Breast Network, Sisters Network Inc., and Army of Women) and hospital systems in the Dallas/Fort Worth Metroplex (JPS Center for Cancer Care and Texas Health Resources). Participants identified via hospital systems were ascertained from hospital tumor registries. Most participants resided in the Dallas/Fort Worth Metroplex area, except those recruited from the Army of Women who were located throughout the United States. Participants completed a web-based survey (mostly non-Hispanic whites) from September to October 2015 or a paper survey (mostly African Americans) via postal mail between June 2014 and April 2015. Data were inputted into IBM-SPSS statistical analysis software package. Institutional Review Boards at the JPS Center for Cancer Care, Texas Health Resources, and academic institutions including the University of Alabama, Tuscaloosa, Alabama approved this study.

Measures

We analyzed data from a subset of items that were assessed as part of a screening survey for a larger randomized controlled trial (RCT). The larger RCT included questions about participants’ sociodemographics, medical history, lifestyle variables (i.e., diet, exercise, weight), and health-related QoL. This analysis is based on the QoL data.

SRMP. Participants completed this brief screening measure of global memory problems experienced over the past 2 weeks, and rated SRMP items [(1) my head feels heavy, (2) my brain feels muddled or scrambled, (3) it is difficult to think, (4) I cannot concentrate, and (5) it’s easy for me to forget things] on a Likert-type scale ranging from ‘1 = never’ to ‘5 = Several times a day’. Our previous psychometric validation of the SRMP using CTT in 781 cancer patients revealed adequate internal consistency and test–retest reliability, data factorability based on the Kaiser–Meyer–Olkin test (KMO = 0.87) and Bartlett’s sphericity test (p < .001), a single component under Kaiser’s criterion (eigenvalue [λ] > 1), and item-correlation coefficients ≥ 0.30 [Citation19]. Additionally, the contents of attention and memory often overlap. Therefore, SRMP items are expected to probe cognitive domains (e.g., aspects of attention) that are linked with memory. Previous studies showed significant relationships between attention and working memory [Citation21–23].

Fatigue interference. Participants completed an abbreviated version of the Fatigue Symptom Inventory (FSI) [Citation24,Citation25] that assessed how much fatigue interfered with general activity, walking ability, normal work, relationships with other people, and mood in the past 7 days. Items were rated on a Likert-type response scale ranging from ‘1 = never’ to ‘5 = all the time’. Internal consistency reliability (ICR) for this sample is appropriate (α = 0.93).

Pain interference. Participants completed a 7-item pain interference measure that assessed how much pain interfered with general activity, mood, walking ability, normal work, relations with other people, sleep, and enjoyment of life in the past 7 days. Items were rated on a Likert-type scale ranging from ‘1 = never’ to ‘5 = all the time’. The validity and reliability of the measure were previously established [Citation26]. ICR for this sample is suitable (α = 0.95).

Mood. We assessed mood using the 4-item Patient Health Questionnaire (PHQ-4) – a brief screening measure that quantifies symptoms of anxiety and depression over the past 2 weeks. Items were rated on a Likert-type scale ranging from ‘0 = Not at all’ to ‘3 = Nearly every day’ [Citation27]. The PHQ-4 is commonly used in clinical settings and has high sensitivity and specificity for diagnosing psychiatric outcomes. PHQ-4 scores ≥3 are indicative of anxiety and depression [Citation27]. ICR for this sample was adequate (α = 0.85).

Spiritual health locus of control (SPR). The SPR was developed for African Americans and includes subscales designed to capture four aspects of spiritual beliefs: (1) spiritual life/faith subscale that pertains to being healthy because of living a ‘good’ spiritual life (i.e., Through my faith in God, I can stay healthy), (2) active subscale that relates to the belief that one works with God to stay in good health (i.e., God gives me the strength to take care of myself), (3) passive subscale that relates to the belief that God has complete control over one’s health (i.e., There is no point in taking care of myself when it’s all up to God anyway), and (4) God’s grace subscale that refers to being healthy through prayer and God’s grace (i.e., God works through doctors to heal us). SPR items were rated on a Likert-type scale ranging from ‘1 = strongly disagree’ to ‘5 = strongly agree’. The SPR has appropriate construct and predictive validity, as well as ICR with Cronbach’s alphas ranging from 0.51 (Passive Spirituality subscale) to 0.81 (Spiritual Life/Faith subscale) [Citation28,Citation29].

Socio-demographics and medical data. The socio-demographics and medical data were self-reported by participants and included age (current, and at diagnosis), race/ethnicity, treatment, and disease stage at diagnosis. We categorized age as: Group 1 < 50 years, Group 2 (50–59 years), and Group 3 (60 years or older), and derived years since diagnosis from age at diagnosis and current age.
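The internal consistency reliability values (Cronbach's α) reported for the measures above follow a standard formula. The sketch below shows that formula on a small made-up response matrix; the data and the function name `cronbach_alpha` are illustrative assumptions and are not taken from the study.

```python
from statistics import pvariance

def cronbach_alpha(rows):
    """Cronbach's alpha for complete cases.

    rows: list of respondents, each a list of item scores of equal length.
    """
    k = len(rows[0])                                  # number of items
    item_columns = list(zip(*rows))                   # transpose to per-item columns
    item_var_sum = sum(pvariance(col) for col in item_columns)
    total_var = pvariance([sum(r) for r in rows])     # variance of the sum score
    return k / (k - 1) * (1 - item_var_sum / total_var)

# Made-up responses from five respondents to a four-item scale (illustration only).
toy = [
    [1, 2, 1, 2],
    [2, 2, 3, 3],
    [3, 4, 3, 4],
    [4, 4, 5, 5],
    [5, 5, 4, 5],
]
alpha = cronbach_alpha(toy)
print(f"alpha = {alpha:.3f}")
```

Alpha rises when items covary strongly relative to their individual variances, which is why highly homogeneous short scales can reach values above 0.90.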

Statistical analysis

We conducted an exploratory factor analysis (EFA) in which only one of the five eigenvalues (λ) exceeded 1 (i.e., 3.469, 0.743, 0.365, 0.284 and 0.138); Kaiser’s rule and scree plot inspection therefore supported the unidimensionality of the SRMP. We then completed a confirmatory factor analysis (CFA) to evaluate the internal structure of the SRMP, DIF analysis to assess measurement invariance/equivalence based on age and race/ethnicity, an evaluation of criterion-related and discriminant validity using correlations among similar and different psychological constructs, and item response theory (IRT) analysis to examine item-level psychometrics. We used Mplus and IBM-SPSS for statistical analysis.
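As a quick check on the unidimensionality argument, the reported eigenvalues can be summarized directly. The sketch below applies Kaiser's rule and computes the share of total variance carried by the first factor; the eigenvalues come from the text, while the variable names are illustrative.

```python
# Eigenvalues reported in the text for the five SRMP items.
eigenvalues = [3.469, 0.743, 0.365, 0.284, 0.138]

total = sum(eigenvalues)                             # about 5 for five standardized items
retained = [ev for ev in eigenvalues if ev > 1.0]    # Kaiser's rule: keep eigenvalues > 1
share_first = eigenvalues[0] / total                 # variance share of the first factor
ratio_first_to_second = eigenvalues[0] / eigenvalues[1]

print(f"factors retained by Kaiser's rule: {len(retained)}")
print(f"variance share of first factor: {share_first:.1%}")
print(f"first-to-second eigenvalue ratio: {ratio_first_to_second:.2f}")
```

A single retained eigenvalue that accounts for roughly 69% of the variance, with a first-to-second ratio above 4, is consistent with a one-factor structure.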

Confirmatory factor analysis

We conducted the CFA using Unweighted-Least-Squares-with-Mean-and-Variance adjustment that produced similar parameter estimates as Unweighted-Least-Squares and provided standard errors for the parameter estimates corrected by robust procedures. We treated item scores as ordinal. We estimated thresholds and polychoric correlations using a two-stage maximum likelihood estimation and obtained parameter estimates by minimizing the least squares fit function. We used recommended criteria (CFI ≥ 0.95 and RMSEA ≤ 0.06) to confirm the measurement model fit to the data [Citation30,Citation31].

Differential item functioning analysis

We calculated DIF to evaluate the SRMP performance based on age and race/ethnicity [Citation32]. A series of logistic regression models were computed to determine the presence or absence of DIF based on age groups and racial/ethnic categories:

$$Y_j = \beta_0 + \beta_1 F + \beta_2 G + \beta_3 (F \times G)$$

where $Y_j$ is the response to item j, $F$ is the matching variable (typically the total score on the scale), and G is group membership. G is 0 for the reference group (e.g., the majority group) and 1 for the focal group (e.g., the minority group). A $\beta_2$ that is significantly smaller than ‘0’ indicates that individuals at the same ability level (i.e., the same level of F) have a lower chance of scoring high on item j if they belong to the minority group, suggesting a DIF effect against the minority group. In contrast, a $\beta_2$ that is significantly larger than ‘0’ indicates a DIF effect favoring the minority group.

We fitted five ordinal logistic regression models (one per item) because $Y_j$ is ordinal instead of continuous. Either the total score or the latent trait estimate $\hat{\theta}$ could be used as the matching variable. The groups were race/ethnicity (White vs. Others) and age (<50 years, 50–59 years, and 60+ years). Main parameters of interest were $\beta_2$ (main effect) and $\beta_3$ (interaction effect). A $\beta_2$ significantly different from ‘0’ suggests that even when conditioning on the level of memory problems, scores on item j differ by race/ethnicity or age groups. This would indicate a uniform DIF effect for item j. A significant $\beta_3$ would suggest non-uniform DIF. Whether DIF favors one group or another would depend on the level of the matching variable.
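The difference between uniform and non-uniform DIF can be illustrated numerically. The sketch below assumes a cumulative-logit (proportional odds) form in which the group main effect and the group-by-matching-variable interaction enter the linear predictor for P(Y ≥ k). All coefficient and cutpoint values, and the function names, are hypothetical and are not estimates from this study.

```python
import math

def prob_at_or_above(k, f, g, cutpoints, b1, b2, b3):
    """P(Y >= k) for k = 2..K under a cumulative-logit model.

    The linear predictor is b1*F + b2*G + b3*F*G; cutpoints[k - 2] is the
    (hypothetical) cutpoint separating categories below k from k and above.
    """
    eta = b1 * f + b2 * g + b3 * f * g
    return 1.0 / (1.0 + math.exp(-(eta - cutpoints[k - 2])))

# Hypothetical values for illustration only (not estimates from the study).
CUTPOINTS = [-1.0, 0.5, 1.5, 2.5]   # four cutpoints for a 5-category item
B1 = 1.2                            # effect of the (centered) matching variable F

def group_gap(k, f, b2, b3):
    """Focal-minus-reference difference in P(Y >= k) at the same level of F."""
    focal = prob_at_or_above(k, f, 1, CUTPOINTS, B1, b2, b3)
    reference = prob_at_or_above(k, f, 0, CUTPOINTS, B1, b2, b3)
    return focal - reference

# Uniform DIF: b2 < 0 and b3 = 0, so the focal group scores lower at every F.
uniform_gaps = [group_gap(3, f, b2=-0.8, b3=0.0) for f in (-1.0, 0.0, 1.0)]

# Non-uniform DIF: b2 = 0 and b3 != 0, so the sign of the gap depends on F.
nonuniform_gaps = [group_gap(3, f, b2=0.0, b3=0.5) for f in (-1.0, 0.0, 1.0)]

print("uniform:", [round(x, 3) for x in uniform_gaps])
print("non-uniform:", [round(x, 3) for x in nonuniform_gaps])
```

With a main effect only, the gap keeps the same sign across the matching variable; with an interaction only, it changes sign around the centering point, which is the pattern described as non-uniform DIF.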

Criterion-related validity

To establish criterion-related validity, we calculated Pearson correlation coefficients between SRMP, and measures of pain, mood, fatigue, and SPR. Significant correlations were expected between SRMP and pain, mood and fatigue (i.e., concurrent validity). However, the correlation between SRMP and SPR was expected to be low (i.e., discriminant validity). We selected these variables because of previously reported associations between pain, fatigue, psychological distress and cognitive functioning [Citation33,Citation34]. Studies showed that pain can impact neurocognitive processes [Citation34]. Studies also reported that spirituality is linked to a positive life theme that may influence how one thinks [Citation35]. Although a direct link between spirituality and cognitive operations (e.g., memory) is not established, researchers have reported that spirituality may lead to cognitive biases [Citation35]. While spirituality may influence beliefs, it is not expected to directly affect memory processing. We expected age to influence SRMP scores. Thus, we completed an analysis of variance (ANOVA) with post-hoc comparisons to assess SRMP score differences among different age groups (<50 years, 50–59 years, and 60 years or above).

Item response theory analysis

We established the SRMP unidimensionality using CFA and fitted a unidimensional IRT model to the data. Prior to performing the latent trait analysis, we assessed the data to ensure all response categories contained sufficient sample sizes for stable estimates. This led us to collapse response categories 4 and 5 for item 1 since only two participants endorsed category 5. The unidimensional normal-ogive graded response model (NO-GRM) [Citation36] was fitted to the resulting data (i.e., collapsed categories 4 and 5 for item 1). This analysis was completed in Mplus using delta parameterization [Citation37] and assuming the latent factor had a variance of 1. Additionally, the unidimensional NO-GRM can be mathematically equivalent to the one-factor CFA under certain regularity conditions [Citation38,Citation39]. We present general model fit results under the CFA framework since that approach is often the most familiar to the general audience. However, item information is rarely discussed under the CFA framework. Therefore, we discussed item characteristics (e.g., item parameters and item information) using the IRT framework.

GRM is one of the most widely used IRT models to fit Likert-scale data. Suppose that the item responses for item j are scored 1, 2, 3, …, $K_j$. The cumulative response function of the unidimensional NO-GRM is defined as:

$$P^{*}_{jk}(\theta_i) = P(Y_{ij} \ge k \mid \theta_i) = \Phi\left[a_j(\theta_i - b_{jk})\right], \quad k = 2, \ldots, K_j,$$

for j = 1, 2, …, J, J being the total number of items of the scale (in this case 5), where $\theta_i$ is the latent trait (memory problem) of individual i, and $\Phi$ is the normal CDF. The cumulative response function $P^{*}_{jk}(\theta_i)$ captures the probability of individual i with latent trait $\theta_i$ endorsing response category k or above on item j. When none of the items need to be reverse-coded, an individual with higher latent trait is more likely to endorse high response categories. In the case of SRMP, individuals with more severe memory problems will be more likely to report more frequent occurrences of memory difficulties. The category response function is defined as the difference between two adjacent cumulative response functions:

$$P_{jk}(\theta_i) = P^{*}_{jk}(\theta_i) - P^{*}_{j,k+1}(\theta_i).$$

Here, $P_{jk}(\theta_i)$ is the probability of patient i endorsing exactly response category k on item j. When k = 1, $P_{j1}(\theta_i) = 1 - P^{*}_{j2}(\theta_i)$; when k = $K_j$, $P_{jK_j}(\theta_i) = P^{*}_{jK_j}(\theta_i)$. We obtained parameter estimates and standard errors for $a_j$ (discrimination) and $b_{jk}$ (thresholds). We plotted category response curves for each SRMP item.
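The category response probabilities described above can be computed in a few lines. The sketch below assumes the common normal-ogive parameterization in which the probability of responding in category k or above is Φ[a(θ − b_k)], with a discrimination parameter `a` and ordered thresholds `b`. The parameter values are hypothetical and are not the estimates reported for the SRMP.

```python
from statistics import NormalDist

PHI = NormalDist().cdf   # standard normal CDF

def cumulative_probs(theta, a, b):
    """P(Y >= k | theta) for k = 2..K, given discrimination a and ordered thresholds b."""
    return [PHI(a * (theta - bk)) for bk in b]

def category_probs(theta, a, b):
    """P(Y = k | theta) for k = 1..K as differences of adjacent cumulative probabilities."""
    # Pad with P(Y >= 1) = 1 and P(Y >= K + 1) = 0 so every category is a difference.
    star = [1.0] + cumulative_probs(theta, a, b) + [0.0]
    return [star[k] - star[k + 1] for k in range(len(b) + 1)]

# Hypothetical parameters for one five-category item (illustration only).
a_demo = 1.5
b_demo = [-0.5, 0.5, 1.2, 2.0]

for theta in (-1.0, 0.0, 1.0, 2.0):
    probs = category_probs(theta, a_demo, b_demo)
    print(theta, [round(p, 3) for p in probs])
```

Evaluating `category_probs` over a grid of θ values and plotting each category's column gives the category response curves for an item.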

Results

Study participants

The sample consisted mostly of non-Hispanic White (61%) and African American (33%) breast cancer survivors, with a mean age of 57 years (range: 25–82 years) and about 8 years since initial diagnosis. Participants were diagnosed with localized tumors (60%), tumors that had spread to nearby tissues (30%), and distant metastases (3%). Treatment modalities included surgery (94%), supplemental chemotherapy (62%), radiotherapy (60%), and hormonal therapy (50%).

Confirmatory factor analysis and differential item functioning

The CFA fit the data adequately ($\chi^2$ = 13.5, df = 5, p-value = .02, CFI = 0.996, RMSEA = 0.059). The CFI and RMSEA indicate a close fit of the one-dimensional model. DIF analysis showed the SRMP performed similarly among different race/ethnic and age groups. A logistic regression model was completed for each item, and we tested the group main effect ($\beta_2$) and the group-by-matching-variable interaction ($\beta_3$) for each model. Each item was evaluated for DIF separately. We applied a Bonferroni correction (adjusted significance level = .01) to correct for multiple tests [Citation40]. None of the $\beta_2$ or $\beta_3$ estimates were significantly different from ‘0’ (p > .01). The smallest p-value obtained was p = .044 for item 3. The findings indicate invariance across groups and will enable interpretation of SRMP differences via group means comparison.
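The Bonferroni threshold used here can be reproduced with simple arithmetic. The sketch below assumes that the .01 threshold results from dividing a nominal familywise level of .05 by the five item-level models; the text reports the threshold and the smallest p-value but does not state the nominal level explicitly.

```python
family_alpha = 0.05      # assumed nominal familywise level (not stated in the text)
n_item_models = 5        # one DIF model per SRMP item
per_test_alpha = family_alpha / n_item_models

smallest_p = 0.044       # smallest p-value reported (item 3)
flagged = smallest_p < per_test_alpha

print(f"per-test threshold: {per_test_alpha:.3f}")
print(f"item 3 flagged for DIF: {flagged}")
```

Under this assumption the item 3 result would be significant at the unadjusted .05 level but not after the correction, which matches the conclusion of no DIF.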

Discriminant validity

SRMP was significantly correlated with pain (r = 0.44, p < .01), fatigue interference (r = 0.58, p < .01) and mood (r = 0.54, p < .01), but not with SPR (r = −0.05, p = .40). The ANOVA showed significant differences in SRMP scores based on age (F = 10.51, p < .001). Post-hoc tests revealed significant differences between age Groups 1 (<50 years) and 2 (50–59 years), and between Groups 1 and 3 (60 years or older). There was no significant difference between Groups 2 and 3. Overall, Group 1 had the highest mean SRMP score. The mean SRMP score difference was 1.55 (p = .01) between Groups 1 and 2, and 2.41 (p < .001) between Groups 1 and 3, suggesting that younger survivors reported more memory problems.

Item response theory analysis

Distribution of responses. Participants responded primarily at the lower end of the scale (i.e., ‘never’ and ‘about once a week’) for items 1 (91%), 2 (76%) and 3 (75%). Few participants responded at the higher end of the scale (i.e., ‘nearly every day’ and ‘several times a day’). Responses to items 4 and 5 were distributed more toward the middle of the scale (i.e., ‘about once a week’ and ‘two-to-three times a week’). Response categories 4 (‘nearly every day’) and 5 (‘several times a day’) were collapsed for item 1 because of insufficient responses in category 5. The proportions for the remaining items and response categories were retained. The distribution of item responses is summarized in Table 1. These responses are positively skewed for all SRMP items, which may explain the statistically significant $\chi^2$ test in the CFA.

Table 1. Distribution of item responses.

Parameter estimation. We obtained the factor loadings, threshold estimates and corresponding SEs using Delta Parameterization in Mplus (see Table 2). The factor loadings ranged from 0.60 (‘item 1’) to 0.986 (‘item 3’) and were consistent with the CFA results confirming the unidimensionality of the SRMP. The loading of ‘item 3’ seems high. However, estimation with weighted least squares (WLS) corroborated the results from ULSMV, suggesting their robustness. Threshold parameters increased across the response scale and parameters’ SEs were small (mostly <0.10), indicating that the parameter estimates were precise. These parameter estimates can be translated to the discrimination ($a_j$) and threshold ($b_{jk}$) parameters in the NO-GRM metric (see Table 3). Given the invariance of the IRT model parameters, these parameter estimates can be applied in future analysis estimating latent SRMP scores using maximum likelihood estimation.
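The translation from the factor-analytic metric to the NO-GRM metric can be sketched as follows. The conversion assumes the standard relationship under delta parameterization with a unit-variance factor, where the residual variance is 1 − λ², so that a = λ / √(1 − λ²) and b_k = τ_k / λ. The loadings 0.60 and 0.986 are the extremes reported in the text; the thresholds and the third loading are hypothetical, and the values in Table 3 may differ if a different scaling convention was used.

```python
import math

def factor_to_grm(loading, thresholds):
    """Convert a standardized loading and thresholds (delta parameterization,
    factor variance fixed at 1) to normal-ogive GRM parameters (a, b)."""
    residual_sd = math.sqrt(1.0 - loading ** 2)    # SD of the unique part of y*
    a = loading / residual_sd                      # discrimination
    b = [tau / loading for tau in thresholds]      # category thresholds on the theta scale
    return a, b

# Discrimination implied by the smallest and largest loadings reported in the text.
a_item1, _ = factor_to_grm(0.60, [])
a_item3, _ = factor_to_grm(0.986, [])

# Hypothetical loading and thresholds, for illustration only.
a_demo, b_demo = factor_to_grm(0.80, [0.4, 1.0, 1.6])

print(f"a (loading 0.60)  = {a_item1:.2f}")
print(f"a (loading 0.986) = {a_item3:.2f}")
print(f"demo: a = {a_demo:.3f}, b = {[round(x, 2) for x in b_demo]}")
```

Because the discrimination grows without bound as the loading approaches 1, a loading as high as 0.986 implies a very steep item, which helps explain why such an item contributes information over a narrow range of the latent trait.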

Table 2. Parameter estimates and SEs for fitting the item factor analytic model.

Table 3. Item parameter estimates for the normal-ogive graded response model.

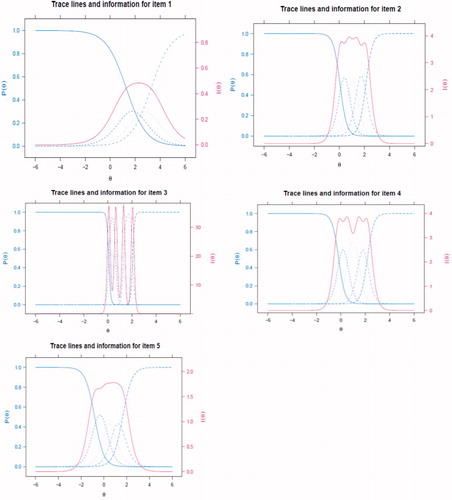

Item category characteristic curves (ICCCs) and item information functions (IIFs). ICCCs were plotted in blue for each item (see Figure 1) and showed the probability of endorsing each response category for each item at different latent trait levels. For example, respondents low on θ (i.e., low memory problems) would be more likely to endorse response category 1 (i.e., ‘never’) for item 4. In fact, when θ < −1 the chance of responding ‘never’ on item 4 is nearly 100%. Respondents with θ slightly above ‘0’ would have a much higher chance of endorsing response category 2. Respondents with θ close to ‘1’ would have the highest chance of endorsing category 3 of item 4, and so forth. The chance of endorsing higher response categories increases with higher θ. The patterns of the ICCCs met our expectations (see Figure 1).

Figure 1. Item category characteristic curves (blue) and item information functions (pink) for the five items of the self-reported memory problems (SRMP) measure.

The IIF describes the information that a specific item provides in differentiating individuals who are either high or low on θ. Item information curves were plotted and are represented in pink (see Figure 1). An item with higher information at a certain θ level allows us to better differentiate people who are higher or lower than that level. Both items 2 and 4 have high information for θ between 0 and 2.5, suggesting that these items are suitable for people with CRND. However, items 2 and 4 provide little information for people with θ = −1. Item 5 provides substantial information for people with θ = −1 (i.e., relatively fewer memory problems).
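To make the idea of item information concrete, the sketch below computes the information function of a graded item as the sum over categories of the squared derivative of the category probability divided by that probability. It assumes the normal-ogive form Φ[a(θ − b_k)] for the cumulative probabilities; the parameter values are hypothetical, chosen only so that the thresholds sit above zero as described for items 2 and 4, and are not the study's estimates.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()

def item_information(theta, a, b):
    """Information of a normal-ogive graded item at theta.

    I(theta) = sum_k (dP_k/dtheta)^2 / P_k, where P_k is the probability of
    responding in category k and the cumulative curves are Phi[a(theta - b_k)].
    """
    # Cumulative probabilities and their derivatives, padded at both ends.
    cdf = [1.0] + [_STD_NORMAL.cdf(a * (theta - bk)) for bk in b] + [0.0]
    slope = [0.0] + [a * _STD_NORMAL.pdf(a * (theta - bk)) for bk in b] + [0.0]
    info = 0.0
    for k in range(len(b) + 1):
        p = cdf[k] - cdf[k + 1]
        dp = slope[k] - slope[k + 1]
        if p > 1e-12:                 # skip categories with essentially zero probability
            info += dp * dp / p
    return info

# Hypothetical item whose thresholds all lie at or above theta = 0.
a_demo = 2.0
b_demo = [0.0, 0.8, 1.6, 2.4]

info_low = item_information(-1.0, a_demo, b_demo)
info_mid = item_information(1.0, a_demo, b_demo)
print(f"information at theta = -1: {info_low:.2f}")
print(f"information at theta = +1: {info_mid:.2f}")
```

An item with thresholds in this range is far more informative around θ = 1 than around θ = −1, which mirrors the pattern reported for items 2 and 4; an item with lower thresholds would show the opposite pattern.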

Discussion

We applied unidimensional IRT analysis to assess item-level psychometrics for the SRMP. The results confirmed previous CTT analysis [Citation19] and revealed that the SRMP has appropriate psychometric properties, measurement invariance and construct validity. Younger breast cancer survivors scored higher on the SRMP, which could be due to younger individuals having higher baseline cognitive functioning, being more attuned to subtle changes in memory performance and experiencing higher levels of distress. Additionally, younger survivors may be engaging in more cognitively demanding activities that can exacerbate their cognitive problems. Interventions to mitigate cancer-related memory problems should consider the effects of age on cognition and on the rehabilitation and recovery of cognitive abilities.

The findings characterize the psychometric properties of each item through item parameter estimates, category characteristic curves and item information curves. Composite scores can often show suitable psychometric properties (e.g., reliability) while a scale-level analysis leaves it unclear whether individual items function appropriately or need to be modified. IRT provides item-level information that allows examination of each item, complements scale-level analysis (i.e., CTT), and strengthens the psychometric evidence for the SRMP. These findings will inform future SRMP applications and strategies to enhance screening/monitoring of memory performance for cancer survivors.

Despite the known deleterious impact of cancer and its treatments on cognition, CRND is not commonly assessed in oncology care. Self-report measures (e.g., SRMP) may be influenced by subjectivity biases. However, individuals have the benefit of self-knowledge and may report impairments that objective tests are not capturing. The SRMP can be used to facilitate screening/monitoring of CRND and inform referral decisions for comprehensive objective testing. Although not representative of objective cognitive dysfunction, self-reports of CRND are important and should be integrated into the treatment process. In fact, studies have reported strong associations between subjective complaints of cognitive difficulties and decreased participation in activities of daily living and QoL [Citation12,Citation13]. Future research should integrate self-reports with objective measures (e.g., blood biomarkers, neuropsychological tests, and neuroimaging) to facilitate the systematic evaluation of CRND. This approach will bridge gaps between self-reports and neuropsychological tests for CRND [Citation15].

Data in the present analysis are cross-sectional and include self-reports. We also used two modes of survey administration. Nevertheless, this study has several strengths. The results demonstrated the construct and discriminant validity of the SRMP in a diverse sample. We used brief measures with established reliability and validity to quantify psychosocial outcomes that reduced participant burden, and we applied robust statistical methods to determine item-level characteristics. Overall, findings of this IRT analysis support the use of the SRMP as a brief screening measure for CRND.

Conclusions

Establishing the psychometric properties of the SRMP addresses a significant research gap. This IRT analysis complements previous CTT validation and supports the use of the SRMP as a reliable and valid screening measure of cancer-related memory sequelae that could inform decisions for follow-up objective testing to determine the presence/severity of CRND. Future studies should examine the relationships between the SRMP and other measures of cognitive performance, establish the ecological validation of objective neuropsychological tests, and facilitate the comparison and integration of self-reports and objective measures for CRND.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Gonzalez BD, Jim HS, Booth-Jones M, et al. Course and predictors of cognitive function in patients with prostate cancer receiving androgen-deprivation therapy: a controlled comparison. J Clin Oncol. 2015;33(18):2021–2027.

- Ahles TA, Saykin AJ, Furstenberg CT, et al. Neuropsychological impact of standard-dose systemic chemotherapy in long-term survivors of breast cancer and lymphoma. J Clin Oncol. 2002;20(2):485–493.

- Falleti MG, Sanfilippo A, Maruff P, et al. The nature and severity of cognitive impairment associated with adjuvant chemotherapy in women with breast cancer: a meta-analysis of the current literature. Brain Cogn. 2005;59:60–70.

- Saykin AJ, Ahles TA, McDonald BC. Mechanisms of chemotherapy-induced cognitive disorders: neuropsychological, pathophysiological, and neuroimaging perspectives. Semin Clin Neuropsychiatry. 2003;8(4):201–216.

- Cruzado JA, López-Santiago S, Martínez-Marín V, et al. Longitudinal study of cognitive dysfunctions induced by adjuvant chemotherapy in colon cancer patients. Support Care Cancer. 2014;22(7):1815–1823.

- Tchen N, Juffs HG, Downie FP, et al. Cognitive function, fatigue, and menopausal symptoms in women receiving adjuvant chemotherapy for breast cancer. J Clin Oncol. 2003;21(22):4175–4183.

- Hess LM, Huang HQ, Hanlon AL, et al. Cognitive function during and six months following chemotherapy for front-line treatment of ovarian, primary peritoneal or fallopian tube cancer: an NRG oncology/gynecologic oncology group study. Gynecol Oncol. 2015;139(3):541–545.

- Correa DD, Ahles TA. Neurocognitive changes in cancer survivors. Cancer J. 2008;14(6):396–400.

- Jean-Pierre P, Johnson-Greene D, Burish TG. Neuropsychological care and rehabilitation of cancer patients with chemobrain: strategies for evaluation and intervention development. Support Care Cancer. 2014;22(8):2251–2260.

- Jean-Pierre P, Winters PC, Ahles TA, et al. Prevalence of self-reported memory problems in adult cancer survivors: a national cross-sectional study. J Oncol Pract. 2012;8(1):30–34.

- Jean-Pierre P, McDonald BC. Neuroepidemiology of cancer and treatment-related neurocognitive dysfunction in adult-onset cancer patients and survivors. Handb Clin Neurol. 2016;138:297–309.

- Kohli S, Griggs JJ, Roscoe JA, et al. Self-reported cognitive impairments in patients with cancer. J Oncol Pract. 2007;3(2):54–59.

- Shilling V, Jenkins V. Self-reported cognitive problems in women receiving adjuvant therapy for breast cancer. Eur J Oncol Nurs. 2007;11(1):6–15.

- Von Ah D, Habermann B, Carpenter JS, et al. Impact of perceived cognitive impairment in breast cancer survivors. Eur J Oncol Nurs. 2013;17(2):236–241.

- Jean-Pierre P. Integrating functional near-infrared spectroscopy in the characterization, assessment, and monitoring of cancer and treatment-related neurocognitive dysfunction. Neuroimage. 2014;85:408–414.

- Lambert M, Ouimet LA, Wan C, et al. Cancer-related cognitive impairment in breast cancer survivors: an examination of conceptual and statistical cognitive domains using principal component analysis. Oncol Rev. 2018;12(2):371.

- Pullens MJ, De Vries J, Roukema JA. Subjective cognitive dysfunction in breast cancer patients: a systematic review. Psychooncology. 2010;19(11):1127–1138.

- Hutchinson AD, Hosking JR, Kichenadasse G, et al. Objective and subjective cognitive impairment following chemotherapy for cancer: a systematic review. Cancer Treat Rev. 2012;38(7):926–934.

- Jean-Pierre P, Morrow GR, Mohile SG, et al. A brief patient self-report screening measure of cancer treatment-related memory problems: latent structure and reliability analysis. Treat Strateg Oncol. 2011;2(1):93–95.

- Jean-Pierre P. Management of cancer-related cognitive dysfunction-conceptualization challenges and implications for clinical research and practice. US Oncol. 2010;6:9–12.

- Kintsch KC, Healy AF, Hegarty M, et al. Models of working memory: eight questions and some general issues. In: Miyake A, Shah P, editors. Models of working memory: mechanisms of active maintenance and executive control. New York (NY): Cambridge University Press; 1999. p. 412–441.

- Miyake A, Shah P. Towards unified theories of working memory: emerging general consensus, unresolved theoretical issues, and future research directions. In: Miyake A, Shah P, editors. Models of working memory: mechanisms of active maintenance and executive control. New York (NY): Cambridge University Press; 1999. p. 442–481.

- Awh E, Vogel EK, Oh SH. Interactions between attention and working memory. Neuroscience. 2006;139(1):201–208.

- Hann DM, Jacobsen PB, Azzarello LM, et al. Measurement of fatigue in cancer patients: development and validation of the Fatigue Symptom Inventory. Qual Life Res. 1998;7(4):301–310.

- Hann DM, Denniston MM, Baker F. Measurement of fatigue in cancer patients: further validation of the Fatigue Symptom Inventory. Qual Life Res. 2000;9(7):847–854.

- Cleeland CS, Ryan KM. Pain assessment: global use of the Brief Pain Inventory. Ann Acad Med Singapore. 1994;23(2):129–138.

- Löwe B, Wahl I, Rose M, et al. A 4-item measure of depression and anxiety: validation and standardization of the patient health questionnaire-4 (PHQ-4) in the general population. J Affect Disord. 2010;122(1–2):86–95.

- Holt CL, Clark EM, Klem PR. Expansion and validation of the spiritual health locus of control scale: factor analysis and predictive validity. J Health Psychol. 2007;12(4):597–612.

- Holt CL, Clark EM, Kreuter MW, et al. Spiritual health locus of control and breast cancer beliefs among urban African American women. Health Psychol. 2003;22(3):294–299.

- Hu L, Bentler P. Cutoff criteria for fit indices in covariance structure analysis: conventional criteria versus new alternatives. Struct Equat Model. 1999;6(1):1–55.

- MacCallum RC, Browne MW, Sugawara HM. Power analysis and determination of sample size for covariance structure modeling. Psychol Meth. 1996;1(2):130–149.

- Zumbo BD. 1999. A handbook on the theory and methods of differential item functioning (DIF): logistic regression modeling as a unitary framework for binary and Likert-type (ordinal) item scores. Ottawa: Directorate of Human Resources Research and Evaluation, Department of National Defense.

- Hae JK, Se JS, Chang HYY, et al. The association between pain and depression, anxiety, and cognitive function among advanced cancer patients in the hospice ward. Korean J Fam Med. 2013;34(5):347–356.

- Spindler M, Koch K, Borisov E, et al. The influence of chronic pain and cognitive function on spatial-numerical processing. Front Behav Neurosci. 2018;12:165.

- Verno KB, Cohen SH, Patrick JH. Spirituality and cognition: does spirituality influence what we attend to and remember? J Adult Dev. 2007;14(1–2):1–5.

- Samejima F. The graded response model. In: van der Linden WJ, Hambleton R, editors. Handbook of modern item response theory. New York (NY): Springer; 1996. p. 85–100.

- Paek I, Cui M, Gubes N, et al. Estimation of an IRT model by Mplus for dichotomously scored responses under different estimation methods. Educ Psychol Meas. 2018;78(4):569–588.

- Takane Y, de Leeuw J. On the relationship between item response theory and factor analysis of discretized variables. Psychometrika. 1987;52(3):393–408.

- Bolt DM. Limited and full-information IRT estimation. In: Maydeu-Olivares A, McArdle J, editors. Contemporary psychometrics. Mahwah, NJ: Lawrence Erlbaum Associates; 2005. p. 27–71.

- Samuels P, Gilchrist M. Statistical hypothesis testing. Technical report. 2014. [cited 2019 Nov 4]. Available from: http://www.statstutor.ac.uk/resources/uploaded/statisticalhypothesistesting2.pdf.