ABSTRACT

This study introduces a model of Bloom's taxonomy aimed at deepening learners’ cognitive thinking. It investigates the process of questioning and reflection within the structured framework of Bloom's taxonomy and explores how these insights can be applied in students’ utilisation of generative Artificial Intelligence. An experiment was conducted in a Year 5 class at an International Baccalaureate Primary school in Hong Kong, involving 25 students. The findings indicate that Creating and Evaluating were the dominant aspects in the students’ questioning and answering process. However, the skill of ‘Applying’ showed a significantly low influence, suggesting a lack of proficiency in applying AI conversations to other learning areas. This research contributes to the field by providing insights into the integration of generative AI within Bloom's taxonomy. Educators and researchers can utilise these findings to enhance critical thinking and learning outcomes through AI integration.

The application of artificial intelligence (AI) in the field of education holds great promise for enhancing the learning process across various domains. According to a review conducted by Chen, Chen, and Lin (2020), AI technologies have been successfully employed in personalised intelligent teaching, assessment and evaluation, smart schools, as well as online and mobile remote education. Notably, there has been significant progress in the development of conversational AI, such as chatbots, which facilitate human-machine interactions through text or voice-based conversations (Adamopoulou and Moussiades 2020). An example of such an application is ChatGPT, which is powered by Generative Pre-trained Transformer (GPT-3), a machine learning software developed by OpenAI. ChatGPT leverages pre-existing data to identify patterns, generate relevant responses to user queries, and provide word and phrase suggestions (Rospigliosi 2023). Its primary function is to facilitate student engagement by posing questions and offering follow-up inquiries for students to consider.

While teaching with conversational AI allows us to explore students’ cognitive thinking skills and their relationship with questioning and reflective abilities, we often overlook a crucial connection to the development of higher-order thinking skills in problem-solving within the educational context. Furthermore, this cognitive relationship has not been adequately investigated within a specific problem-solving framework in a quantifiable manner (Ottenbreit-Leftwich et al. 2021; Sit et al. 2020). On the other hand, although some researchers have developed thinking models to support questioning and reflective thinking using AI tools, these models are mostly confined to specific fields, such as language education (Liu et al. 2023).

To address this research gap, this study proposes the utilisation of Bloom's Taxonomy model to deepen learners’ cognitive thinking by (1) examining the process of questioning and reflection within the structured framework of Bloom's Taxonomy, and (2) determining how the insights gained through questioning and reflective practice can be applied to students’ utilisation of generative artificial intelligence. ChatGPT was selected as the platform for testing this thinking framework due to its ability to facilitate students’ questioning and rethinking process, which lies at the heart of interactive learning (Rospigliosi 2023). In early 2023, while some schools worldwide prohibited the use of generative AI, the International Baccalaureate Organisation published an article in The Times endorsing the use of ChatGPT, a generative AI, and expressing the belief that AI technology will become an integral part of everyday life (Glanville 2023). The IB encourages its students to embrace the opportunities presented by AI tools. However, the pedagogical instructions provided by the IBO were primarily conceptual and lacked practical guidelines. Building upon this context, this study aims to explore the utilisation of AI tools and the application of questioning and reflective thinking among non-university students.

Conceptual framework

Discussions among scholars have revolved around effective strategies for engaging students in interactive learning through questioning and rethinking. As early as the 1970s, researchers introduced the interaction theory, which emphasises the collaborative nature of learning, where learners interact and communicate with others to articulate and share knowledge (Pask 1975). Within this theory, students employ their everyday language to delve into specific subjects and engage in metacognitive reflection during the learning process. The interaction theory has also been integrated into the field of artificial intelligence in education. Harel and Papert (1990) identified three key purposes of questioning in interactive learning: appropriability, evocativeness, and integration. Appropriability refers to students’ ability to personalise the information they receive, ask follow-up questions, and seek clarification. Evocativeness allows students to scaffold their questioning and answering skills, enabling deeper personal reflection. Integration enables students to merge their existing knowledge with new information, fostering conceptual understanding. These theories underscore the significance of developing students’ interactive, knowledge, and metacognitive skills.

However, some students experience anxiety when engaging in authentic communication and receiving feedback through conversational AI. To tackle this issue, scholars propose that scaffolding students’ questioning and feedback-receiving processes within AI platforms can create a less intimidating learning environment (Ji, Han, and Ko 2023). Currently, only a limited number of thinking models are available that scaffold students’ questioning and responding skills when utilising artificial intelligence. Spector and Ma's (2019) framework of critical thinking was used to explore the inquiry process using artificial intelligence; it includes the steps of interpretation, explanation, reasoning, analysis, evaluation, synthesis, reflection, and judgement. Liu et al.'s (2021) RTP AIEW was used to scaffold learners of English as a foreign language in producing summaries, questions, feedback, and reflections during writing. Shin (2021) put forth a learning approach that leverages AI capabilities to memorise information, comprehend relationships, apply skills, and interpret AI responses using general skills. In terms of creativity, Creely (2023) devised a thinking model that explores the intersection of creativity and AI, envisioning diverse applications of human-AI interactions.

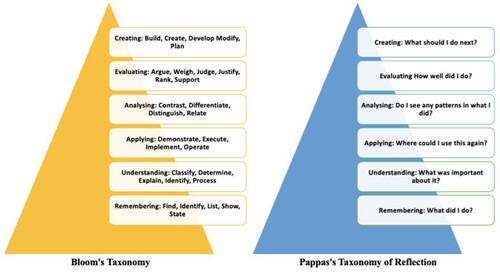

These thinking models only cover a single aspect of subject knowledge or cognitive skills and are fundamentally based on the revised Bloom's Taxonomy. Bloom initially created Bloom's Taxonomy in 1956, establishing six cognitive levels in a hierarchical order. In 2002, Krathwohl further revised the taxonomy by replacing ‘Synthesis’ with ‘Creating’ and identified the following six cognitive skills: remembering, understanding, applying, analysing, evaluating, and creating (Muhayimana, Kwizera, and Nyirahabimana 2022). The hierarchical structure was then divided into two levels: Higher-Order Thinking Skills (creating, evaluating, and analysing) and Lower-Order Thinking Skills (applying, understanding, and remembering). In 2010, Pappas developed the Taxonomy of Reflection to assess students’ ability to utilise reflective thinking skills by integrating Bloom's cognitive levels. Pappas discovered that students’ cognitive styles differ, resulting in variations in their ability to think reflectively (Syamsuddin 2020). Both the revised Bloom's Taxonomy and the Taxonomy of Reflection are employed to delve into students’ mental processes. While there have been numerous studies focusing on the cognitive processes of AI usage, these studies often neglect the development of human affective reactions, such as curiosity and attention to detail, as well as how machines support human social activities (Osbeck and Nersessian 2011; Fu and Zhou 2020). Joksimovic et al. (2023) suggested that monitoring students’ metacognitive processes can enhance their learning. However, research exploring the depth of thinking skills and the frequency of different cognitive levels in questioning and reflecting is lacking (Kubota, Miyazaki, and Matsuba 2021).

To address this gap and recognise the metacognitive relationship when children employ generative artificial intelligence in various aspects, I propose connecting Bloom's Taxonomy with Pappas's Taxonomy of Reflection as a cognitive thinking model for using AI. The communication process consists of four stages: questioning, receiving feedback, reflecting on feedback, and finalising solutions. These stages occur within an authentic problem-solving context. In the questioning stage, students generate and input their questions based on the six levels of the cognitive domain in the revised Bloom's Taxonomy. During the feedback-receiving process, students receive and interpret answers generated by chatbots. After receiving answers from the chatbots, students reflect on the answers, referencing the levels of the Taxonomy of Reflection. Based on their reflection, students generate follow-up questions, referencing the cognitive domain once again. The first three stages repeat in a loop until students are satisfied with the solutions, after which they move on to finalising the solutions.

When exploring the intersection of education and AI, researchers have extensively discussed the impact of AI on students’ learning experiences. Chatbots, in particular, have emerged as a valuable tool with the potential to enhance learning in various ways. Scholars have highlighted the benefits of chatbots in offering personalised learning opportunities, providing on-demand support, and promoting student engagement (Biswas 2023; Fuchs 2023; Maboloc 2024). However, concerns have also been raised regarding the limitations of chatbots, such as their accuracy in providing information and their analytical capabilities, as well as the potential risk of excessive reliance on them (Biswas 2023; Fuchs 2023). Amidst these discussions, Kalla and Smith (2023) have outlined three pedagogical applications of ChatGPT in learning. Firstly, chatbots can be utilised to develop tutoring programmes that cater to individual students’ learning needs. Secondly, they can simulate real-world interactions and respond to students’ inputs, offering immersive learning experiences. Lastly, chatbots can assist students with their academic work, providing guidance and support throughout the learning process. These applications are further supported by Mhlanga's (2023) research, which examined students’ usage of chatbots. A literature review by Casal-Otero et al. (2023) on AI education in school contexts acknowledges the integration of AI across various subject fields. However, it suggests that AI studies should not focus solely on subject-specific knowledge but should also address the development of students’ transferable skills through the use of AI tools. Casal-Otero et al. propose that real problem-solving scenarios incorporating AI tools should serve as the foundation for the learning process.

Building on these perspectives, this study aims to utilise problem-solving scenarios as a basis for students to integrate their questioning and reflecting processes with the revised Bloom's Taxonomy model. By engaging with AI tools within a problem-solving context, students can not only acquire a deeper understanding of subject matter but also enhance their critical thinking and metacognitive skills.

Context

In early 2023, while some schools around the world banned the use of generative AI, the International Baccalaureate Organisation (IBO) published an official document welcoming generative AI tools: ‘Rather than shying away from artificial intelligence (AI), the IB is excited by the opportunities that these tools bring to education to enhance learning experiences and provide additional support to our students’ (IBO 2023). The IB curriculum also tries to help students identify the bias inherent in the content that an AI tool produces and to review it critically. However, how students can review AI-generated content is yet to be explored, since the IB guidelines were mostly conceptual and offered no practical guidance. Meanwhile, IB implementation also depends on local government-level policy documents on students’ learning. In Hong Kong, with the worldwide expansion of the IB curriculum, the IB has gained substantive legitimacy as the international curriculum of choice (Lee, Kim, and Wright 2022), but it is also influenced by the local education system (Dulfer 2019). Some IB schools operate under the Direct Subsidy Scheme (DSS), in which schools receive funding from the government to offer the IB programme and so provide students with an additional curriculum choice. They are required to offer both a local and an IB curriculum (LEGCO 2019). This has led some IB schools to try to balance the educational approaches and curriculum demands of the Hong Kong Education Bureau (Lai, Shum, and Zhang 2014).

In the Hong Kong context, there are no explicit government-level policy documents on AI in education; however, a study conducted by the Hong Kong Legislative Council stressed the necessity of seizing AI opportunities in critical sectors like education to maintain competitiveness and prosperity for 2047 and beyond (Legislative Council 2019). In spite of efforts in policy advocacy and allocation of public resources, the preparedness of individual schools to integrate AI into primary education has fallen short of desired outcomes. UNESCO (2019) has highlighted that schools across various settings encounter difficulties in adopting AI in education. These difficulties manifest at the systemic, school, and student levels, encompassing issues such as infrastructure readiness (Wang and Cheng 2020), students’ lack of intention to use AI coupled with a lack of AI literacy skills, the absence of well-defined curriculum directives from education authorities on the pedagogy of incorporating AI into students’ learning, and the facilitation of students’ critical reflection on AI responses (Chai et al. 2021). The chosen school in this study is a DSS school that implements both the IB and the Hong Kong primary curriculum. It illustrates the struggle of schools that lack pedagogical guidance from the Hong Kong government on AI instruction amid the trend of incorporating AI tools in learning and teaching.

Methods

An experiment was conducted in a Year 5 class in an IB PYP school. The school arranged for the participants to be enrolled in a bi-weekly course covering basic AI knowledge and digital skills. Specifically, the course covered the usage of ChatGPT, applications (e.g. content recommendation and machine learning), and the ethical use of AI. As noted in classroom observations, the participants took part in hands-on use of an AI tool, ChatGPT, and in discussions on its use. In the study, 25 students (13 boys and 12 girls) spent an average of 2.5 hours on AI-related learning activities. They then spent 30 minutes learning about the ChatGPT interface, including how to input prompts and ask follow-up questions. Before the activities, a short online survey was distributed to students to assess their prior understanding of AI, including their experience of AI usage and impression of AI. The survey had four questions in total: one yes-no question asking whether students had used AI tools before, and three open-ended questions about students’ experience, impression and understanding of AI functions. Of the students, 92% had heard of AI, mainly through digital media (i.e. YouTube), but none had used AI tools before. Most considered AI useful for generating images, essays and responses, which they considered beneficial to their study.

The students spent 2 hours participating in the experimental learning with the proposed Bloom's Taxonomy model. Based on the work of Kalla and Smith (2023) and Mhlanga (2023), three themes were emphasised: students’ individualised learning experiences; acting out real-world interactions and responding to students’ input; and aiding students with their academic work. Under each theme, two experiential tasks were created:

- to develop a detailed research plan related to a topic
- to describe how an unfamiliar term functions
- to come across a text in an unfamiliar language
- to draft an email
- to write a narrative text about a memorable adventure for a learning task
- to share a different viewpoint for a learning task

In the experiment, the students were instructed to maintain an online journal throughout the task completion process. The journal entries consisted of three key components: (1) initial questions posed by the students, (2) responses provided by the AI system (referred to as POE), and (3) reflections on the POE's responses. To analyse the collected data, the journals were downloaded as Word files, and the questions, POE responses, and student reflections were compiled into an Excel file. The content analysis methodology was employed to identify and analyse the specific cognitive domains present in the journal entries. The questioning phase of the journals was analysed first. The questions were coded using action verbs associated with the six cognitive levels of the revised Bloom's Taxonomy: remembering, understanding, applying, analysing, evaluating, and creating. A checklist developed by Das, Das Mandal, and Basu (2022), which includes cognitive levels, cognitive domains, and corresponding action verbs, was used as a reference during the coding process. The reflections in the journals were then coded using Pappas's (2020) framework, which aligns with the six cognitive domains used in the questioning phase. This coding process facilitated the categorisation of reflections based on the specific cognitive activities involved. The coding results, along with their frequencies, were recorded in an Excel file, providing an overview of the distribution and prevalence of different cognitive levels and domains within the students’ questioning and reflecting processes.
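The verb-based coding step described above can be illustrated with a minimal Python sketch. It is not the study's procedure, which relied on manual content analysis against the published checklist. The verb lists below are short, hypothetical samples, and the rule of assigning the highest matching level is an assumption made only for this illustration.

```python
import re
from collections import Counter

# Hypothetical, abbreviated verb lists for illustration only. The study used
# the checklist by Das, Das Mandal, and Basu (2022), which is not reproduced here.
# Levels are ordered from highest to lowest cognitive level.
BLOOM_VERBS = {
    "creating": {"create", "design", "write", "compose", "plan", "draft"},
    "evaluating": {"evaluate", "judge", "justify", "critique", "assess"},
    "analysing": {"analyse", "compare", "contrast", "differentiate", "examine"},
    "applying": {"apply", "use", "demonstrate", "solve"},
    "understanding": {"explain", "describe", "summarise", "translate"},
    "remembering": {"define", "list", "name", "recall", "identify"},
}


def code_question(text):
    """Return the highest Bloom level whose sample verb appears in the text."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    for level, verbs in BLOOM_VERBS.items():  # dicts preserve insertion order
        if words & verbs:
            return level
    return "uncoded"  # left for a human coder to resolve


def level_frequencies(questions):
    """Count how many questions fall into each cognitive level."""
    return Counter(code_question(q) for q in questions)


if __name__ == "__main__":
    sample = ["Can you draft an email to my teacher?", "Explain what this term means"]
    print(level_frequencies(sample))
```

A keyword match of this kind can only serve as a first pass; ambiguous or verb-free questions would still need human judgement.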

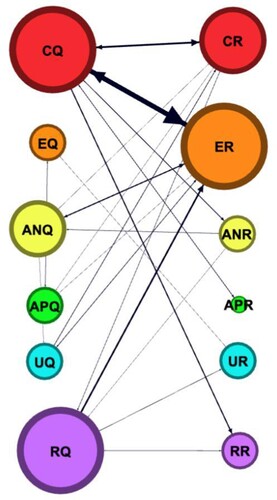

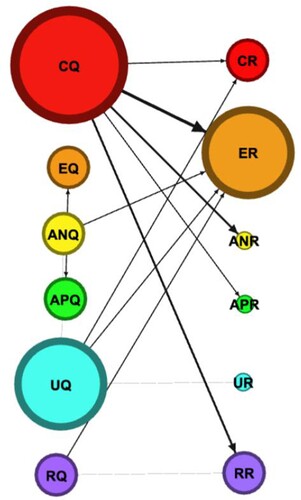

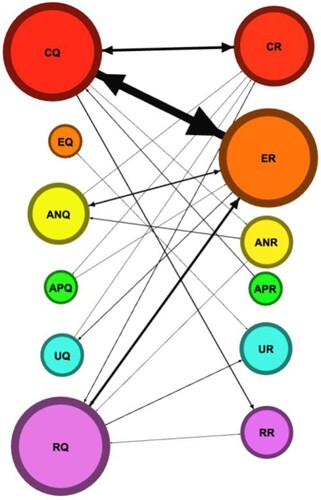

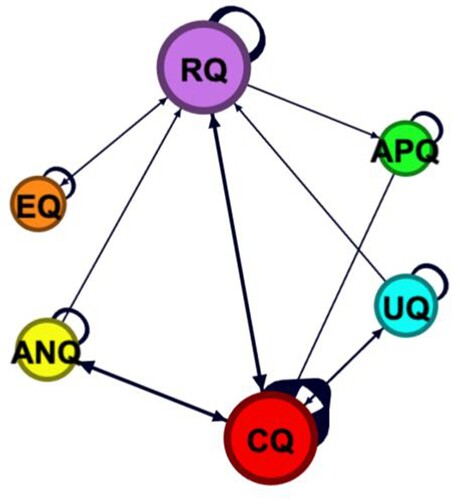

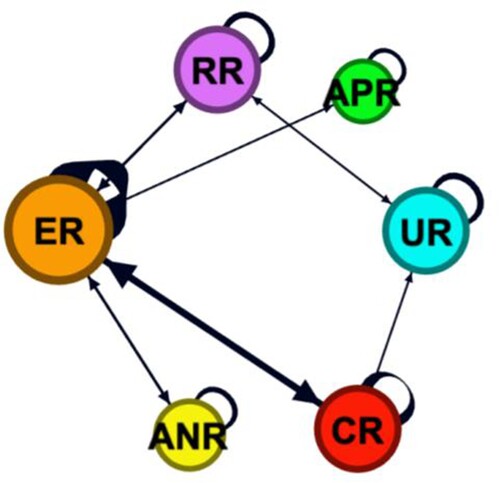

A questioning-reflecting network model was constructed based on the six cognitive dimensions outlined in Bloom's Taxonomy. Each cognitive level was assigned a short-form label: Creating (CQ), Evaluating (EQ), Analysing (ANQ), Applying (APQ), Understanding (UQ), and Remembering (RQ). The same short-form labels were used for the reflecting levels: Creating (CR), Evaluating (ER), Analysing (ANR), Applying (APR), Understanding (UR), and Remembering (RR). By considering the cognitive levels as nodes, the network module structure was identified, revealing the interconnectedness between different cognitive levels within the questioning-reflecting process. The relationships between the overall questioning-reflecting process, one-way questioning-reflecting, and two-way questioning-reflecting processes were also examined. Gephi, a network analysis and visualisation tool, was utilised to analyse the structural characteristics of the overall questioning-reflecting mode and visualise the network. Through this comprehensive analysis, the aim was to gain insights into the cognitive patterns and dynamics present in the students’ questioning and reflecting activities. This analysis sheds light on the interplay between these cognitive processes and provides an understanding of the overall structure of the questioning-reflecting mode.
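As a rough illustration of how such a network can be summarised, the following Python sketch builds a weighted directed graph from coded question-reflection pairs and computes weighted degree, average degree, and density. The toy pairs are invented for the example, the function names are hypothetical, and the metric definitions are common textbook ones for a simple directed graph, which may differ from the conventions Gephi applies.

```python
from collections import Counter


def build_network(pairs):
    """Count each directed question -> reflection edge, e.g. ("CQ", "ER")."""
    return Counter(pairs)


def weighted_degree(edges):
    """Sum of the weights of all edges touching each node (in plus out)."""
    totals = Counter()
    for (src, dst), weight in edges.items():
        totals[src] += weight
        totals[dst] += weight
    return totals


def average_degree(edges):
    """Mean number of distinct edges per node; each edge touches two nodes."""
    nodes = {node for edge in edges for node in edge}
    return 2 * len(edges) / len(nodes) if nodes else 0.0


def density(edges):
    """Simple directed-graph density: distinct edges / (n * (n - 1))."""
    n = len({node for edge in edges for node in edge})
    return len(edges) / (n * (n - 1)) if n > 1 else 0.0


if __name__ == "__main__":
    # Toy data, not taken from the study.
    toy_pairs = [("CQ", "CR"), ("CQ", "CR"), ("CQ", "ER"), ("RQ", "ER")]
    network = build_network(toy_pairs)
    print(weighted_degree(network), average_degree(network), density(network))
```

Edge weights here are simple co-occurrence counts, so a node's weighted degree reflects how often that cognitive level appeared in any coded pair.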

Results

In the study, a total of 120 responses were generated by the 25 participating students. After filtering, 83 valid responses containing both questions and reflections were obtained. Among these responses, approximately 70% (55 responses) consisted of two-way dialogues between students and ChatGPT, while the remainder comprised one-off interactions. The overall interactive model exhibited an average degree of 2.75, indicating that, on average, each question prompted around 2.75 reflections. The graph density of the model was 0.25, suggesting relatively sparse connectivity between questions and reflections. In the one-way questioning-reflecting model, the average degree was slightly lower at 2.33, and the graph density was 4.833, indicating a denser network of connections. In contrast, the two-way questioning-reflecting model had an average degree of 2.5 and a higher graph density of 8.667, suggesting an even more interconnected network of questions and reflections. These metrics provide insights into the typical number of reflections prompted by a single question and the level of connectivity and interdependence between questions and reflections within the interactive model.

Across the three models, it was consistently observed that students employed higher-order thinking in both their questioning and reflecting processes. In the overall model, the collective degree of higher-order questions (Creating, Evaluating, and Analysing) exceeded that of lower-order questions by a factor of 1.4. ‘Creating’ exhibited the highest weighted degree (49.0) in the questioning phase, while ‘Evaluating’ and ‘Creating’ had the highest weighted degrees (47.0 and 15.0, respectively) during reflection. This dominance of ‘Creating’ was consistent across both one-way and two-way interactions. Additionally, ‘Remembering’ and ‘Understanding’ played significant roles in questioning, with degrees of 19.0 and 5.0, respectively. Typically, these cognitive levels manifested as follow-up questions within the overall and two-way models.

Regarding the relationship between questioning and reflecting, it was observed that students generally did not employ the same cognitive levels for both. However, the combination of Creating questions (CQ) and Creating reflections (CR) exhibited the highest degree across all models, encompassing overall, one-way, and two-way interactions, with a degree of 24.0. On the other hand, ANQ-ER (Analysing questions to Evaluating reflections) and RQ-ER (Remembering questions to Evaluating reflections) were more prevalent in two-way interactions, while CQ-ANR (Creating questions to Analysing reflections) and CQ-RR (Creating questions to Remembering reflections) were more commonly observed in one-way interactions. It is worth noting that Applying, both in questioning and reflecting, demonstrated the lowest degree. This observation suggests that students may have lacked the skills to apply their AI conversations to other realms of learning.

In the two-way interaction model, the relationship among cognitive levels in generating questions was investigated. A total of 58 questions were analysed, and the findings revealed that ‘Creating’ was the most significant element within the network. It had the highest degree (9.0) and weighted degree (136.0), indicating its strong connectivity and influence. ‘Creating’ was connected to and attracted the most connections from other thinking elements, as reflected in its highest weighted in-degree (68.0) and weighted out-degree (68.0). This suggests that ‘Creating’ received inputs and influences from a wide range of thinking elements and had strong connections and influence on other cognitive levels in generating questions. Remembering also played an important role in the network, ranking second in both degree and weighted degree. It was noteworthy that Remembering linked with other higher-order thinking levels, such as Creating, Analysing, and Evaluating, as consecutive questions. For example, remembering-creating and creating-remembering pairs had higher weights (4.0 and 3.0), indicating their significance in the questioning process.
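The idea of consecutive questions forming weighted links between cognitive levels can be sketched as follows. This is a hedged illustration in Python: the dialogue sequences are invented, the function names are hypothetical, and counting each adjacent pair as one unit of edge weight is an assumption about how such transitions might be tallied.

```python
from collections import Counter


def transition_weights(dialogues):
    """Count consecutive level pairs within each dialogue's question sequence."""
    weights = Counter()
    for levels in dialogues:
        # zip pairs each coded question with the one that follows it
        weights.update(zip(levels, levels[1:]))
    return weights


def weighted_in_out(weights):
    """Return (weighted in-degree, weighted out-degree) per cognitive level."""
    w_in, w_out = Counter(), Counter()
    for (src, dst), weight in weights.items():
        w_out[src] += weight
        w_in[dst] += weight
    return w_in, w_out


if __name__ == "__main__":
    # Toy sequences of coded questions, not taken from the study.
    toy = [
        ["remembering", "creating", "creating"],
        ["creating", "remembering", "creating"],
    ]
    weights = transition_weights(toy)
    print(weights)
    print(weighted_in_out(weights))
```

Under this tally, a level with high weighted in-degree is one that students frequently move to, and a level with high weighted out-degree is one they frequently move on from.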

In the reflection process, which included 59 valid responses, there was a greater emphasis on evaluating compared to the questioning process. This was evident from the high degree of Evaluating, which ranked significantly higher at 130.0 compared to the second-ranking degree of Creating at 39.0. Additionally, both the weighted in-degree and weighted out-degree of Evaluating were the highest in the network, measuring 65.0 each. These findings indicate that evaluating the ChatGPT resources played a prominent role in students’ interactive communication with ChatGPT. It was observed that most students engaged in consecutive evaluations of the ChatGPT resources during their interactions. The occurrence of Creating and Evaluating questions in sequence suggests a certain connection between them. Specifically, Creating-Evaluating pairs had a degree of 7.0, ranking third in the network, while Evaluating-Creating pairs had a degree of 6.0, ranking fourth. This pattern suggests that students often followed up their creation of questions with an evaluation of the responses received.

Discussion

Based on the analysis conducted on the network of questions and reflections, it was determined that the cognitive level of ‘Creating’ played a significant role, demonstrating strong connectivity and influence within the network. ‘Creating’ exhibited the highest degree and weighted degree, indicating its prominence. It attracted connections from various cognitive levels, implying input and influence from a diverse range of thinking elements. Additionally, ‘Remembering’ also held importance as it was associated with higher-order thinking levels such as creating, analysing, and evaluating in a sequential manner. During the reflection process, the emphasis on evaluating surpassed that of questioning. ‘Evaluating’ emerged as the cognitive level with the highest degree and weighted degree, signifying its pivotal role in assessing the resources provided by ChatGPT. The consecutive occurrence of creating and evaluating questions suggested a connection between these two processes, with students frequently assessing the responses they received after generating their own questions. These findings underscore the significance of higher-order thinking skills, particularly creating and evaluating, in the interactive communication between students and AI tools. Teachers can employ the revised Bloom's taxonomy to effectively support and guide students in utilising AI tools while engaging in questioning and reflection. It is important to note, however, that the study had a limited sample size, necessitating further research with larger and more diverse samples across various age groups and cultural contexts to validate the findings and enhance the framework for integrating thinking skills in AI interactions. The proposed taxonomy model and cognitive framework have the potential to benefit researchers evaluating learning processes using AI in different fields.

Nevertheless, extensive testing is required to establish the model's reliability and explore its applicability in diverse educational settings. The framework serves as a starting point for students to interact with AI tools and suggests strategies for enhancing their questioning and reflection skills within the rapidly evolving landscape of AI technologies and emerging educational futures. Future research should adopt a quantitative approach with larger samples of students to further validate the model, while a comparative approach can investigate variations among different student groups and cultural contexts.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Adamopoulou, E., and L. Moussiades. 2020. “Chatbots: History, Technology, and Applications.” Machine Learning with Applications 2:100006. https://doi.org/10.1016/j.mlwa.2020.100006.

- Biswas, S. 2023. “Role of Chat GPT in Education.” Available at SSRN 4369981.

- Casal-Otero, Lorena, Alejandro Catala, Carmen Fernández-Morante, Maria Taboada, Beatriz Cebreiro, and Senén Barro. 2023. “AI Literacy in K-12: A Systematic Literature Review.” International Journal of STEM Education 10 (1). https://doi.org/10.1186/s40594-023-00418-7.

- Chai, C. S., P.-Y. Lin, M. S.-Y. Jong, Y. Dai, T. K. F. Chiu, and J. Qin. 2021. “Perceptions of and Behavioral Intentions Towards Learning Artificial Intelligence in Primary School Students.” Educational Technology & Society 24 (3): 89–101. https://www.jstor.org/stable/27032858.

- Chen, L., P. Chen, and Z. Lin. 2020. “Artificial Intelligence in Education: A Review.” IEEE Access 8:75264–75278. https://doi.org/10.1109/ACCESS.2020.2988510.

- Creely, E. 2022. “Conceiving Creativity and Learning in a World of Artificial Intelligence: A Thinking Model.” In Creative Provocations: Speculations on the Future of Creativity, Technology & Learning. Creativity Theory and Action in Education, edited by D. Henriksen and P. Mishra, vol. 7. Cham: Springer. https://doi.org/10.1007/978-3-031-14549-0_3.

- Das, S., S. K. Das Mandal, and A. Basu. 2022. “Classification of Action Verbs of Bloom’s Taxonomy Cognitive Domain: An Empirical Study.” Journal of Education 202 (4): 554–566. https://doi.org/10.1177/00220574211002199.

- Dulfer, N. 2019. “Differentiation in the International Baccalaureate Diploma Programme.” Journal of Research in International Education 18 (2): 142–168. https://doi.org/10.1177/1475240919865654.

- Fu, Z., and Y. Zhou. 2020. “Research on Human–AI co-Creation Based on Reflective Design Practice.” CCF Transactions on Pervasive Computing and Interaction 2 (1): 33–41. https://doi.org/10.1007/s42486-020-00028-0.

- Fuchs, K. 2023. “Exploring the Opportunities and Challenges of NLP Models in Higher Education: Is Chat GPT a Blessing or a Curse?” In Frontiers in Education, 1166682, Vol. 8. Frontiers.

- Glanville, M. 2023. “Why ChatGPT is an Opportunity for Schools.” International Baccalaureate®. Accessed 8 March, 2024. https://www.ibo.org/news/news-about-the-ib/why-chatgpt-is-an-opportunity-for-schools/.

- Harel, I., and S. Papert. 1990. “Software Design as a Learning Environment.” Interactive Learning Environments 1 (1): 1–32. https://doi.org/10.1080/1049482900010102.

- IBO. 2023. “Artificial Intelligence (AI) in Learning, Teaching, and Assessment.” International Baccalaureate. Accessed 8 March, 2024. https://www.ibo.org/programmes/artificial-intelligence-ai-in-learning-teaching-and-assessment/#:~:text=At%20the%20IB%2C%20we%20will,examiners%20during%20the%20marking%20process.

- Ji, H., I. Han, and Y. Ko. 2023. “A Systematic Review of Conversational AI in Language Education: Focusing on the Collaboration with Human Teachers.” Journal of Research on Technology in Education 55:48–63. https://doi.org/10.1080/15391523.2022.2142873.

- Kalla, D., and N. Smith. 2023. “Study and Analysis of Chat GPT and its Impact on Different Fields of Study.” International Journal of Innovative Science and Research Technology 8 (3): 7.

- Kubota, S., M. Miyazaki, and R. Matsuba. 2021. “INTED Proceedings.” In INTED2021 Proceedings, 5658–5662. https://doi.org/10.21125/inted.2021.1141.

- Lai, C., M. S. K. Shum, and B. Zhang. 2014. “International Mindedness in an Asian Context: The Case of the International Baccalaureate in Hong Kong.” Educational Research 56 (1): 77–96. https://doi.org/10.1080/00131881.2013.874159.

- Lee, M., H. Kim, and E. Wright. 2022. “The Influx of International Baccalaureate (IB) Programmes Into Local Education Systems in Hong Kong, Singapore, and South Korea.” Educational Review 74 (1): 131–150. https://doi.org/10.1080/00131911.2021.1891023.

- Legislative Council (LEGCO). 2019. “Study of Development blueprints and Growth Drivers of Artificial Intelligence in Selected Places.” Accessed 8 March, 2024. https://www.legco.gov.hk/research-publications/english/1920in01-study-of-development-blueprints-and-growth-drivers-of-artificial-intelligence-in-selected-places-20191023-e.pdf.

- Liu, C., J. Hou, Y.-F. Tu, Y. Wang, and G.-J. Hwang. 2023. “Incorporating a Reflective Thinking Promoting Mechanism Into Artificial Intelligence-Supported English Writing Environments.” Interactive Learning Environments 31 (9): 5614–5632. https://doi.org/10.1080/10494820.2021.2012812.

- Maboloc, Christopher Ryan. 2024. “Chat GPT: The Need for an Ethical Framework to Regulate its use in Education.” Journal of Public Health 46 (1): e152. https://doi.org/10.1093/pubmed/fdad125.

- Mhlanga, D. 2023. “The Value of Open AI and Chat GPT for the Current Learning Environments and the Potential Future Uses.” SSRN Electronic Journal. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4439267.

- Muhayimana, T., L. Kwizera, and M. R. Nyirahabimana. 2022. “Using Bloom’s Taxonomy to Evaluate the Cognitive Levels of Primary Leaving English Exam Questions in Rwandan Schools.” Curriculum Perspectives 42:51–63. https://doi.org/10.1007/s41297-021-00156-2.

- Osbeck, L. M., and N. J. Nersessian. 2011. “Affective Problem Solving: Emotion in Research Practice.” Mind & Society 10 (1): 57–78. https://doi.org/10.1007/s11299-010-0074-1.

- Ottenbreit-Leftwich, A., K. Glazewski, M. Jeon, C. Hmelo-Silver, B. Mott, S. Lee, and J. Lester. 2021. “How do Elementary Students Conceptualize Artificial Intelligence?” In Proceedings of the 52nd ACM Technical Symposium on Computer Science Education (pp. 1261). ACM. https://doi.org/10.1145/3408877.3439642.

- Pappas, P. 2020. A Taxonomy of Reflection: Critical Thinking for Students, Teachers, and Principals (part 1). Copy / Paste. https://peterpappas.com/2010/01/taxonomy-reflection-critical-thinking-students-teachers-principals.html.

- Pask, G. 1975. Conversation, Cognition, and Learning. New York: Elsevier.

- Rospigliosi, P. 2023. “Artificial Intelligence in Teaching and Learning: What Questions Should We Ask of ChatGPT?” Interactive Learning Environments 31 (1): 1–3. https://doi.org/10.1080/10494820.2023.2180191.

- Shin, S. 2021. “A Study on the Framework Design of Artificial Intelligence Thinking for Artificial Intelligence Education.” International Journal of Information and Education Technology 11 (9): 392–397. https://doi.org/10.18178/ijiet.2021.11.9.1540.

- Sit, C., R. Srinivasan, A. Amlani, K. Muthuswamy, A. Azam, L. Monzon, D. S. Poon, et al. 2020. “Attitudes and Perceptions of UK Medical Students Towards Artificial Intelligence and Radiology: A Multicentre Survey.” Insights Into Imaging 11:14. https://doi.org/10.1186/s13244-019-0830-7.

- Spector, J. M., and S. Ma. 2019. “Inquiry and Critical Thinking Skills for the Next Generation: From Artificial Intelligence Back to Human Intelligence.” Smart Learning Environments 6. https://doi.org/10.1186/s40561-019-0088-z.

- Syamsuddin, A. 2020. “Describing Taxonomy of Reflective Thinking for Field Dependent-Prospective Mathematics Teacher in Solving Mathematics Problem.” International Journal of Scientific & Technology Research 9:4418–4421.

- UNESCO. 2019. “Artificial Intelligence in Education: Challenges and Opportunities for Sustainable Development.” https://unesdoc.unesco.org/ark:/48223/pf0000366994.

- Wang, T., and E. C.-K. Cheng. 2020. “Thinking Aloud and Progressing Together: Cultivating Communities of Practice for Supporting Hong Kong K-12 Schools in Embracing Artificial Intelligence.” Paper Presented at The International Conference on Education and Artificial Intelligence 2020 (ICEAI 2020), Hong Kong, People’s Republic of China.