ABSTRACT

The use of virtual reality (VR) in engineering education provides a means of contextualising learning through authentic and engaging scenarios, e.g. touring and operating a chemical plant. This paper presents the design of an immersive VR simulation of an existing pilot plant in the School and its evaluation through a randomised non-inferiority trial comparing student learning with that achieved through a more traditional learning medium. Specifically, the study evaluates information retention (i.e. memory and understanding) when using the VR simulation and compares this with information retention when the same content is presented as a multimedia instructional video. Student self-efficacy in plant operation and design (e.g. equipment function and operation), as well as engagement in the learning activity, is also evaluated. The results indicate that the VR and multimedia-based activities lead to comparable information retention and student self-efficacy. Moreover, students enjoyed the VR experience and welcomed the addition of the resource to their educational materials.

1. Introduction

There has been considerable interest in the use of virtual reality (VR) in education in recent years (Douglas-Lenders, Holland, and Allen 2017), owing to the increased availability of VR technology to consumers. It is recognised that VR can provide exciting opportunities to support teaching and learning (Zyda 2016). The use of this technology in training and education contexts has in most cases been successful (Shen et al. 2017), and although the technology suffers from limitations and implementation challenges, educational goals seem to be achievable through an engaging format. Examples of successful use include military training applications (Pallavicini et al. 2016), simulations of laboratories in engineering contexts (Ren et al. 2015), and workplace learning and professional development scenarios (Cakula, Jakobsone, and Motejlek 2013; Jakobsone, Motejlek, and Cakula 2014).

Initial research on the use of consumer VR in education has mainly focussed on technological aspects. For example, broad overviews of VR-based laboratories have been presented by Potkonjak et al. (2016), who describe trends in the development of VR learning tools (such as headsets and positional tracking components) for science and technology education. Although the authors argue that, in addition to the technical issues, questions also arise concerning the pedagogy of VR, these questions were not addressed. Similarly, Hilfert and König (2016) describe various technological systems for education, but with a focus on their cost and accessibility. However, to drive the use of VR in education, the learning materials need to be evaluated from an educational standpoint, and methods of evaluation are needed that allow effective comparison against traditional learning approaches. A large number of researchers have focussed on the qualitative assessment of student behaviour in a VR learning environment, for example, the feeling of presence (Bailey, Bailenson, and Casasanto 2016) or aspects of social interaction (Pan and Hamilton 2018). Studies of the specific nature of learning in VR, such as effects on student memory or the ability to navigate in unknown locations, are rare, with some notable exceptions such as research on developing spatial ability (Sun, Wu, and Cai 2018) and visual memory (Kisker, Gruber, and Schöne 2021) in VR. To date, there has been relatively little examination of how VR affects a student’s ability to remember information conveyed in a fully immersive 3D virtual environment. Nevertheless, the work of Allcoat and von Mühlenen (2018) suggests that students taught in a VR environment perform better than those who receive traditional learning materials (i.e. textbook and video), although the study does not provide details on how the VR learning materials were constructed or how they differed from the traditional materials.

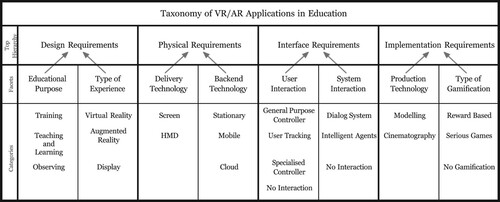

In this study, in order to compare the cognitive effects of VR and a more traditional education format, an experimental method of assessing student information retention has been developed. Specifically, the work compares the retention of verbal information in a VR learning environment with that from a multimedia educational video. A taxonomy for the use of VR in education (Motejlek and Alpay 2021) was used to aid the design of the activities and to specify which kind of VR to employ; see . The taxonomy considers technology-related areas as well as education- and interaction-related matters.

In this taxonomy, ‘educational purpose’ categories were developed through consideration of Bloom’s taxonomy of learning (Bloom et al. 1956) as well as the thematic characterisation of current VR-based educational tools. Bloom’s taxonomy involves three fundamental domains (cognitive, affective, and psychomotor), each of which can be associated with a class of VR applications based on their core design purpose. Thus, in the taxonomy, the educational purpose of training is defined as the development of very specific, practical, psychomotor skills for later real-world application. Examples include VR simulations with haptic devices that simulate medical and dental procedures (Bayram and Caliskan 2021), and indeed mechanical workshop procedures such as welding (Chan et al. 2022). The cognitive domain represents knowledge transfer, application, and higher-order processing, and is best captured by the cognitive process dimension of the revised Bloom’s taxonomy described by Krathwohl (2002). Specifically, this dimension describes cognitive progression through six categories (remember, understand, apply, analyse, evaluate, and create) in order of increasing cognitive demand. VR applications supporting student learning in Higher Education have to date focused on the remember and understand categories, but there is much scope for use across the cognitive domain (see also the discussion in Section 4 below). For the purposes of this work, we refer to this cognitive use of VR as teaching and learning, which is the central focus of the current study. Finally, the affective domain relates to emotions and their connections to underlying student values, ethics, and beliefs. In this work, VR applications designed to profoundly motivate and engage the student in a new subject area or issue are categorised as observing. Examples include applications appearing in public galleries and discovery centres.

Information processing, as tested in this study, falls under the second educational purpose, teaching and learning. The goal of teaching general knowledge is to prepare the student to retain and understand knowledge for use in other contexts. The student is exposed to theory and underlying principles, and such knowledge is expected to be transferable to other situations and environments; cf. the two-dimensional integration of the knowledge dimension with the cognitive process dimension discussed by Krathwohl (2002). Currently, the number of successful VR educational applications is relatively low, as their development is often challenging (Holmes 2007), with success relying on effective scaffolding (Coleman 1998) and on the effective integration of assessment and feedback. However, some successful applications exist in the areas of language teaching (Shih and Yang 2008), general laboratory work (Ren et al. 2015), and agriculture (Laurel 2016).

A number of types of learning resources that achieve the goal of teaching general knowledge well have emerged over the years (BIS 2013). These include educational videos, Khan Academy, Massive Open Online Courses (MOOCs), and similar multimedia resources. With reference to the taxonomy, the type of VR considered in the current work is fully immersive 3D VR, which displays visuals to the user through a head-mounted display (HMD), and is thus distinct from typical online media. The user can interact with the virtual world using tracked controllers, and the virtual world can interact with the student through intelligent agents in the form of non-player characters (NPCs). This potentially creates a highly engaging learning environment; see also below. However, there are currently at least three challenges specific to the use of VR for this educational purpose that are not present in other multimedia learning resources:

- Students cannot read text comfortably in VR.

- Students cannot pause VR in the way that educational videos can be paused.

- Students cannot take notes as fluently (e.g. with a physical pen or keyboard) when immersed in VR (see further discussion below).

To mitigate these challenges in the present application:

- The majority of the information given to the student in VR is spoken by an NPC.

- The information is split into small chunks, and the student can choose which chunk to hear.

Given these advantages and disadvantages of using VR in chemical engineering education, this study aims to answer the following questions:

Do students retain (i.e. remember and understand) the same amount of information when conveyed in either VR or multimedia video?

Do students feel the same level of confidence in their abilities when information is conveyed in either VR or multimedia video?

The specific hypotheses are:

Hypothesis 1: The student retention of verbal information in VR is at least as effective as when using multimedia video.

Hypothesis 2: Student self-efficacy reported after a VR learning experience is comparable to that reported after a multimedia video learning experience.

The chemical plant facility within the Department of Chemical and Process Engineering, University of Surrey, provided a useful case for testing the benefits of a VR application; see Section 2.1.1. Specifically, the motivations for a VR equivalent of the plant include: overcoming the logistical, safety, and cost (materials and supervision) issues associated with student access to and use of industrial equipment; allowing repetitive and self-paced learning of plant operation and design elements; and offering simulated learning scenarios, such as faults and extreme hazard occurrences, that would not be possible or desirable with the real plant.

To answer the above research questions, a non-inferiority test was used. Ordinarily, the null hypothesis states that the measured and expected datasets do not differ, and the alternative hypothesis is accepted when the difference between the two datasets is statistically significant. In a non-inferiority trial, the null hypothesis instead states that the new approach is inferior to the reference approach by at least a pre-specified margin; if this null hypothesis is rejected at the chosen significance level, the alternative hypothesis of non-inferiority is accepted (Walker and Nowacki 2010). A non-inferiority test was chosen because the goal of this study is to demonstrate that the new educational approach using VR is no less effective than the equivalent approach currently employed in the real world, whilst still offering advantages in educational logistics, engagement, and learning experiences that may be impossible in a real industrial environment. Non-inferiority tests use an equivalence margin (see Section 2.1.3) to establish whether one dataset is not inferior to the other. Such tests are commonly used in the medical sciences but are increasingly being employed in psychology and education research (Lakens, Scheel, and Isager 2018).
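Written out for the quiz scores, the hypotheses and decision rule take the following form. This is a sketch in our own notation, assuming a one-sided two-sample t-test at significance level α = 0.05: μ_R and μ_VR are the mean scores of the Real Plant (video) and VR groups, Δ is the equivalence margin (the upper equivalence limit), SEM is the standard error of the difference in means, and t_{1−α,ν} is the critical value of Student's t distribution with ν degrees of freedom.

```latex
\begin{aligned}
H_0 &: \mu_R - \mu_{VR} \ge \Delta \quad \text{(VR inferior by at least the margin)}\\
H_1 &: \mu_R - \mu_{VR} < \Delta \quad \text{(VR non-inferior)}\\[4pt]
t &= \frac{(\bar{x}_R - \bar{x}_{VR}) - \Delta}{\mathrm{SEM}},
\qquad \text{reject } H_0 \text{ if } t < -t_{1-\alpha,\,\nu}\\[4pt]
&\Longleftrightarrow\; (\bar{x}_R - \bar{x}_{VR}) + t_{1-\alpha,\,\nu}\,\mathrm{SEM} < \Delta
\end{aligned}
```

The last line restates the rule as a confidence-interval check: non-inferiority holds when the upper one-sided (1 − α) confidence limit of the difference lies below the margin Δ.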

2. Methodology

The work centres on a Pilot Plant facility within the Department of Chemical and Process Engineering at the University of Surrey. The plant comprises a number of equipment items, including a reaction vessel, heat exchangers, filters, pumps, a carbon dioxide absorption column, and a water deioniser.

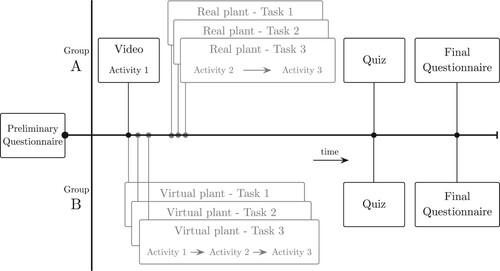

This study was designed to assess the non-inferiority of a VR platform compared with a multimedia video for explaining equipment function. As part of the partially randomised controlled trial, the students were separated into two groups: the Real Plant group (control) and the VR group (experimental). Both groups received educational content with the same subject matter but in a different order. Student performance in a VR activity, and its improvement, depends on prior experience with VR (Grantcharov et al. 2003; Gomoll et al. 2007) and indeed on understanding of the educational content. Thus, to obtain a similar student background in each group, the students first completed a preliminary questionnaire that measured their prior VR experience and their current level of knowledge of the chemical plant. Students with greater prior experience or knowledge were then spread across the two groups.

The Real Plant group first viewed an introductory video containing information about the chemical plant and then performed tasks on the real chemical plant that involved locating certain valves. On both the virtual and the real plant, each task involved identifying the correct valve to perform a certain action on the plant and then locating that valve on the plant. This was followed by a quiz on plant understanding, taken approximately 30 min after viewing the introductory video. The VR group used an interactive VR application that presented them with the same information as the video, and then performed the same tasks in the VR version of the plant. The same quiz as for the Real Plant group was then administered. Both experiments were performed with students individually and took approximately 60 min per student in total. Both groups then completed a self-efficacy questionnaire designed to gauge their perceived learning gain and experience. The tasks did not affect the students’ final scores and served to space out the initial exposure to the information and its testing. A questionnaire was also used to measure the student experience of VR relative to traditional learning materials (i.e. common slide and video content), including any adverse effects. Further questionnaire (and implementation) details are presented below.

2.1. Educational materials

Novel VR educational materials were created and assembled for this study. These included a 3D Pilot Plant simulation and VR (NPC) narration. Further description of these materials is given below; see also for a summary of the research design.

2.1.1. 3D plant

A realistic 3D model of the facility was created as part of this project, and the necessary VR interaction was developed using the Unity3D game engine. In VR, students interacted with an NPC that was responsible for vocalising the narration and describing equipment through 3D imagery. Realism plays a very important role in such training; in our work it was achieved through a combination of structured-light scanning and modelling in the Unity3D and Blender software platforms (see ). A short video illustrating some of the features of the VR experience can be found here: https://youtu.be/VWP0ctoajrA.

The simulation was designed as an intelligent virtual environment (Luck and Aylett 2000). Behaviour trees (Colledanchise and Ögren 2017) were used to programme semi-autonomous non-player characters (NPCs) that interact with the student and provide the narration (see ).

2.1.2. VR narration

The study focuses on information processing which requires the students to understand explanations about the function of the equipment within the chemical plant (e.g. reactor, valves, pumps). Students in the control group listened to the information in the introduction video. Students in the experimental group were presented with the narration in VR format. Information given to the students is general and applicable to the same type of instrument used in different scenarios and different locations. For this reason, it is considered universal knowledge in terms of educational purpose.

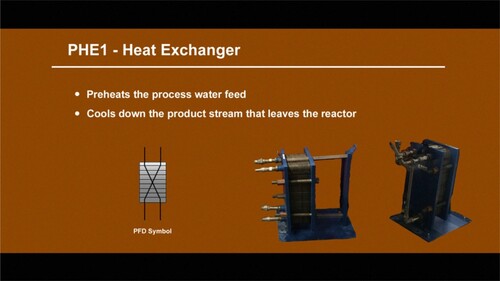

Information about each piece of equipment was broken down into self-contained sentences, each representing one fact about the given instrument. The three most important facts about each instrument were selected, and a short narration containing only these three factual points was produced for each instrument. Thus, for 12 instruments, 36 facts were created and compiled into 12 narrations, one per instrument. These narrations were recorded as NPC narrations for VR and as a background voiceover for the introductory video. Examples of facts and narrations can be seen in Table 1. An example of the NPC providing the narration for a heat exchanger (PHE1) can be seen in Figure 5. Students in the control group viewed the instructional video, whereas students in the experimental group accessed the narration using an interactive board. Information processing was measured using a quiz that the students undertook after performing three tasks on either the real or the virtual plant.

Figure 5. Still image captured from the VR application. Narration: "This is a heat exchanger, PHE1. It preheats the process water feed and cools down the product stream which leaves the reactor".

Table 1. Example of fact and narration.

The same information was conveyed in the video using the identical narration. In addition, similar images of equipment and flow diagram symbology were displayed to the students, but the video necessarily depicted 2D renditions of the equipment, so there was no opportunity for the student to move around an item (see example in and the VR simulation video mentioned above).

2.1.3. Quiz

Students were presented with a quiz that tested their knowledge after they finished the assigned tasks. The quiz is based on the 36 facts constructed for the narration of the information about each instrument.

The first fact about each instrument was not used for the quiz, as it simply presented the name of the instrument. The remaining two facts about each instrument (24 facts in total) were used in the quiz. Twelve randomly selected facts were used as statements describing the correct instrument (true statements), and the other 12 facts were randomly assigned to the wrong instrument (false statements).

During the quiz, the students were shown 12 statements about 6 instruments (two statements per instrument) and tasked with identifying which were true and which were false. Six of these statements concerned the three instruments involved in the student’s three tasks, and the remaining six concerned three other randomly chosen instruments on the plant.
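The item construction described above can be sketched as follows. This is a hypothetical Python illustration of one way to meet the stated counts, not the authors' procedure: the instrument labels and fact strings are placeholders, and the rejection-sampling step that keeps exactly two statements per instrument (with no false fact left on the instrument it actually describes) is our assumption, since the paper does not specify how the wrong instrument was chosen.

```python
import random


def build_statement_bank(facts, rng):
    """Split the quiz facts into true and false statements (hypothetical scheme).

    facts: dict mapping instrument -> its two quiz facts (naming fact excluded).
    Returns dict instrument -> list of (fact, is_true), two per instrument.
    """
    pool = [(owner, fact) for owner, fs in facts.items() for fact in fs]
    rng.shuffle(pool)
    half = len(pool) // 2
    true_part, false_part = pool[:half], pool[half:]

    bank = {inst: [] for inst in facts}
    for owner, fact in true_part:
        bank[owner].append((fact, True))  # shown under its own instrument

    # Free slots so that every instrument ends up with exactly two statements.
    slots = [inst for inst in facts for _ in range(2 - len(bank[inst]))]
    # Reshuffle until no false fact lands on the instrument it describes.
    while True:
        rng.shuffle(slots)
        if all(owner != target for (owner, _), target in zip(false_part, slots)):
            break
    for (owner, fact), target in zip(false_part, slots):
        bank[target].append((fact, False))  # shown under a wrong instrument
    return bank


def draw_quiz(bank, task_instruments, rng):
    """12 statements: the 3 task instruments plus 3 other random instruments."""
    others = [inst for inst in bank if inst not in task_instruments]
    chosen = list(task_instruments) + rng.sample(others, 3)
    return [(inst, fact, truth) for inst in chosen for fact, truth in bank[inst]]


if __name__ == "__main__":
    rng = random.Random(42)
    instruments = [f"I{k}" for k in range(1, 13)]  # placeholder labels
    facts = {i: [f"{i} fact 2", f"{i} fact 3"] for i in instruments}
    bank = build_statement_bank(facts, rng)
    for inst, fact, truth in draw_quiz(bank, ["I1", "I2", "I3"], rng):
        print(inst, "|", fact, "|", truth)
```

Fixing the random seed makes a given quiz reproducible; any scheme that yields 12 true and 12 false statements with two per instrument would serve equally well.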

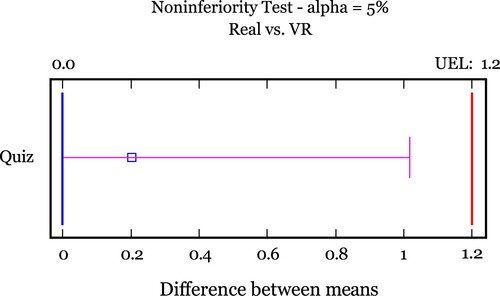

Thus, a student can achieve a maximum of 12 correct answers (points). The equivalence margin used in the non-inferiority testing of the quiz results was set to a difference of 1.2 points (10% of the maximum score), which corresponds to the number of errors a student would have to make for their hypothetical quiz grade to be lowered.

2.1.4. Questionnaires

Two questionnaires were designed and provided to the students.

Preliminary Questionnaire

The preliminary questionnaire was designed to assess three blocking factors: the student’s past use of and experience with VR, knowledge of the function of devices typically found on chemical plants, and the ability to understand process flow diagrams (PFDs). The students were divided into three blocks, and allocation to the control and experimental groups was carried out separately within each block to achieve a similar distribution of student backgrounds in both groups. The complete preliminary questionnaire is given in Appendix 1.
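Allocation within blocks can be sketched as below. This is a hypothetical Python illustration: the paper does not state how students were assigned within each block, so the shuffle-and-alternate rule, the block labels, and the function name `stratified_allocation` are assumptions.

```python
import random
from collections import defaultdict


def stratified_allocation(block_of, rng):
    """Randomly allocate students to two groups separately within each block.

    block_of: dict student_id -> block label from the preliminary questionnaire.
    Returns dict student_id -> "control" or "experimental".
    """
    blocks = defaultdict(list)
    for student, block in block_of.items():
        blocks[block].append(student)

    allocation = {}
    start = 0  # alternates which group receives the extra student of an odd block
    for block in sorted(blocks):
        members = blocks[block]
        rng.shuffle(members)  # random order within the block
        for k, student in enumerate(members):
            allocation[student] = "control" if (k + start) % 2 == 0 else "experimental"
        start += len(members) % 2
    return allocation


if __name__ == "__main__":
    rng = random.Random(7)
    # Hypothetical blocks of 11, 9 and 10 students.
    block_of = {f"S{k}": ("high" if k < 11 else "mid" if k < 20 else "low")
                for k in range(30)}
    alloc = stratified_allocation(block_of, rng)
    print(sum(a == "control" for a in alloc.values()), "control students")
```

Alternating which group receives the extra student from odd-sized blocks keeps each block, and the two groups overall, within one student of balance.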

Self-efficacy Questionnaire – VR

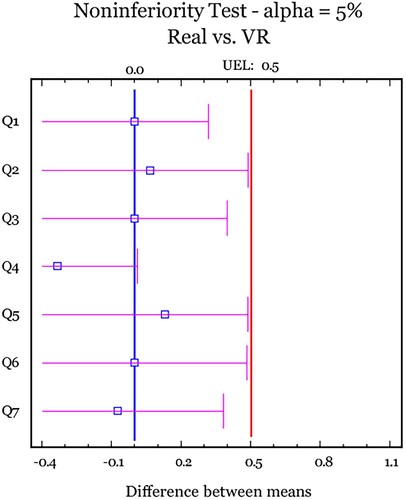

The self-efficacy questionnaire was used to gauge the students’ learning experience with the VR platform. Detrimental effects of the experience were recorded along with the students’ self-efficacy regarding plant layout and function; see Section 3.2 (Table 5) for further details about the questionnaire. Students’ answers to each question in the final questionnaire were compared using the same non-inferiority test as for the quiz (see Section 2.1.3). The equivalence margin was set to 0.5, which means that the answers to a given question are considered non-inferior as long as the mean response of the VR group is not lower than that of the control group by half a point or more on the Likert scale.

2.1.5. Pilot plant introduction video

The control group – group A – viewed the introduction video before they were assigned the tasks to perform. The video was designed using a combination of pre-recorded audio narration and images of the equipment applying multimedia learning principles as specified in the cognitive theory of multimedia learning (Mayer 2005).

Mayer’s cognitive theory assumes that humans have two distinct information processing channels, one for verbal and one for visual information, known as the ‘dual-channel assumption’. Because learners are limited in the amount of visual and auditory information they can understand or retain, it was crucial to create a video that was neither too long nor unstimulating, yet covered the same ‘fact points’ to the same extent as the VR narration. The final educational video was 10 min 4 s long.

3. Results

Fifteen chemical engineering students completed the exercise on the real chemical plant and 15 other students performed the same exercise in VR. These 30 students then completed the quiz and the questionnaire. The study thus used a between-subjects design to answer the research questions. Each hypothesis (see Section 1) was tested in turn using the quiz and questionnaire data.

3.1. Information retention – quiz

In order to test hypothesis 1, i.e.

Hypothesis 1: The student retention of verbal information in VR is at least as effective as when using multimedia video.

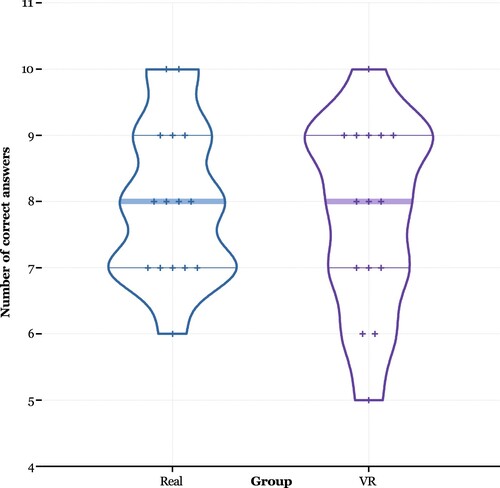

the number of correct answers to the quiz (see Table 2) was recorded and a non-inferiority test performed. A summary of the quiz results can be seen in Table 3. The number of correct answers to the quiz is given in Table 3(a), where n is the number of observations (15 students per group), µ is the mean, and σ is the standard deviation. Table 3(b) shows the number of correct answers for each instrument on the plant. Full results for each student and instrument on the plant are given in Appendix 2 (Table A1 – real plant group, and Table A2 – VR group).

Table 2. Examples of the quiz questions.

Table 3. Summary of the results – Quiz.

shows a violin graph of the number of correct answers of individual students. The distribution of the number of correct answers can be compared for each group.

Table 4 summarises the equivalence analysis, where SEM denotes the standard error, CL the upper confidence limit, and the t-value and p-value were calculated for a one-sided test.

Table 4. Equivalence analysis.

The first null hypothesis is rejected because the p-value is less than 0.05. Non-inferiority of the results of group B (VR) has therefore been demonstrated, and the alternative hypothesis, which states that students’ retention of verbal information in VR is not worse than that from multimedia video by more than the equivalence margin, can be accepted. In other words, students’ retention of verbal information in VR is at least as effective as when using multimedia video.

A graphical representation of the non-inferiority test can be seen in . The same conclusion follows from the upper 95% confidence limit of the difference in means, which is smaller than the upper equivalence limit (UEL).
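The quantities reported in Table 4 (difference of means, SEM, upper one-sided 95% confidence limit, t-value and p-value) can be computed with a short script. The Python sketch below assumes a pooled-variance two-sample t-test with n₁ + n₂ − 2 degrees of freedom, which the paper does not state explicitly. The Student t CDF is obtained from the regularised incomplete beta function (continued-fraction evaluation) so that only the standard library is needed, and the example scores are invented for illustration, not the study data.

```python
import math
import statistics


def _betacf(a, b, x, max_iter=300, eps=3e-14, tiny=1e-300):
    """Continued fraction for the regularised incomplete beta (modified Lentz)."""
    def step(aa, c, d):
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        return (c if abs(c) > tiny else tiny), d

    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c, d = 1.0, 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        c, d = step(m * (b - m) * x / ((qam + m2) * (a + m2)), c, d)  # even term
        h *= d * c
        c, d = step(-(a + m) * (qab + m) * x / ((a + m2) * (qap + m2)), c, d)  # odd term
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def _betainc(a, b, x):
    """Regularised incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def t_cdf(t, df):
    """Student t CDF, using P(|T| > |t|) = I_{df/(df+t^2)}(df/2, 1/2)."""
    tail = 0.5 * _betainc(df / 2.0, 0.5, df / (df + t * t))
    return 1.0 - tail if t >= 0 else tail


def t_ppf(p, df):
    """Inverse t CDF by bisection (adequate for moderate df and p)."""
    lo, hi = -50.0, 50.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if t_cdf(mid, df) < p else (lo, mid)
    return 0.5 * (lo + hi)


def non_inferiority_test(reference, new, margin, alpha=0.05):
    """One-sided pooled t-test of H0: mean(reference) - mean(new) >= margin."""
    n1, n2 = len(reference), len(new)
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * statistics.variance(reference)
                  + (n2 - 1) * statistics.variance(new)) / df
    sem = math.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    diff = statistics.mean(reference) - statistics.mean(new)  # > 0: new scores lower
    upper_cl = diff + t_ppf(1.0 - alpha, df) * sem  # upper one-sided limit
    t_value = (diff - margin) / sem
    p_value = t_cdf(t_value, df)  # small p: difference clearly below the margin
    return {"diff": diff, "sem": sem, "upper_cl": upper_cl, "t": t_value,
            "p": p_value, "non_inferior": p_value < alpha}


if __name__ == "__main__":
    # Invented quiz scores (NOT the study data), 15 students per group.
    reference = [6, 7, 8, 9, 10] * 3
    vr = [5, 7, 8, 8, 10] * 3
    print(non_inferiority_test(reference, vr, margin=1.2))
```

With the real per-student scores, the quiz analysis would correspond to `non_inferiority_test(real_plant_scores, vr_scores, margin=1.2)`, and each self-efficacy item to the same call with `margin=0.5` (variable names hypothetical). Note that `upper_cl < margin` and `p < alpha` are equivalent conditions, which is why the confidence-limit and p-value checks in the text agree. SciPy users could instead call `scipy.stats.ttest_ind` on the shifted data with `alternative="less"`.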

3.2. Self-efficacy – questionnaire

In order to test hypothesis 2, i.e.

Hypothesis 2: Student self-efficacy reported after a VR learning experience is comparable to that reported after a multimedia video learning experience.

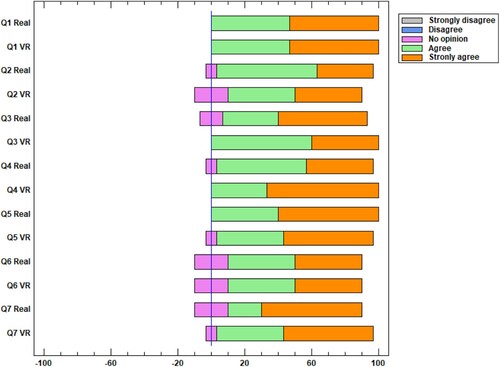

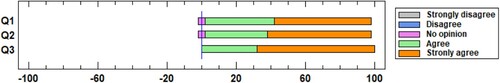

the frequency of each response option for the questionnaire items was recorded and a non-inferiority test was performed. The questions used to measure self-efficacy after the exercise and a summary of the questionnaire responses can be seen in Table 5. Values 1 to 5 represent the points on the standard Likert scale, where 1 denotes Strongly disagree, 2 Disagree, 3 Neither agree nor disagree, 4 Agree, and 5 Strongly agree. (See also Appendix 3 for a complete set of results.)

Table 5. Self-Efficacy (final) questionnaire.

A graphical representation of the results is given by the diverging stacked bar chart in .
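In a diverging stacked bar chart, each Likert item is drawn as one horizontal bar whose disagree segments extend to the left of zero and whose agree segments extend to the right, with the neutral segment split evenly across zero. The Python sketch below computes the segment positions from response counts; `diverging_segments` is an illustrative helper of our own (the drawing itself is left to any charting library), and the example counts are invented.

```python
LIKERT_LABELS = ["Strongly disagree", "Disagree", "Neither agree nor disagree",
                 "Agree", "Strongly agree"]


def diverging_segments(counts):
    """Return (label, left_edge, width) for each category of one Likert item.

    counts: five response counts ordered from 1 (Strongly disagree) to
    5 (Strongly agree). The neutral category is centred on zero.
    """
    if len(counts) != 5:
        raise ValueError("expected counts for a 5-point Likert scale")
    left = -(counts[0] + counts[1] + counts[2] / 2)  # left end of the bar
    segments = []
    for label, width in zip(LIKERT_LABELS, counts):
        segments.append((label, left, width))
        left += width
    return segments


if __name__ == "__main__":
    # Invented counts for one questionnaire item (15 respondents).
    for label, start, width in diverging_segments([0, 1, 2, 7, 5]):
        print(f"{label:<28} start={start:+.1f} width={width}")
```

Counts can be replaced by percentages of respondents when groups of different sizes are plotted side by side.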

A non-inferiority test was performed for each question related to self-efficacy. As stated in Section 2.1.4, the equivalence margin was set to 0.5. The results of the analysis can be seen in Table 6. As the p-value is less than 0.05 in each case, non-inferiority is demonstrated for every question. We therefore reject the second null hypothesis and accept the second alternative hypothesis, which states that students’ self-efficacy reported after the VR experience is not inferior to (i.e. is comparable with) that reported after watching the multimedia video.

Table 6. Self-efficacy non-inferiority test results.

Table 6 shows the minimum and maximum values, mean, standard deviation (SD), difference of the means (diff), standard error of the means (SEM), upper 95% confidence limit (95% CL), upper t-value, and p-value. The results of the non-inferiority test for each question can be seen in .

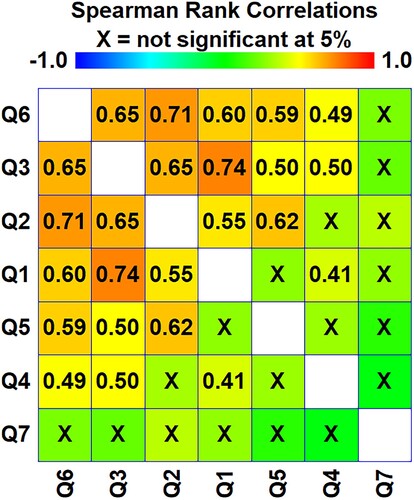

To explore the relationships between questions, a Spearman rank correlation analysis was performed. As the results in show, students’ confidence (Q1, Q2, Q3, Q4, Q5) correlates with how successful they found the video in creating an interesting experience (Q6). However, their answers did not correlate with their opinion of the sound quality of the voice recording.
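Spearman's rank correlation is the Pearson correlation of the ranks, with tied responses (very common on a 5-point Likert scale) assigned the average of the ranks they span. The standard-library Python sketch below illustrates this; the function names are our own and the example responses are invented. SciPy's `scipy.stats.spearmanr` computes the same coefficient together with a p-value.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values receive the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Pearson correlation of average ranks (undefined if an input is constant)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long samples of length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


if __name__ == "__main__":
    # Invented Likert responses (1-5) to two questions from ten students.
    q1 = [4, 5, 3, 4, 5, 2, 4, 5, 3, 4]
    q6 = [4, 5, 3, 3, 5, 2, 4, 4, 3, 5]
    print(round(spearman_rho(q1, q6), 3))
```

Using average ranks for ties, rather than the textbook shortcut 1 − 6Σd²/(n(n² − 1)), keeps the coefficient correct when many students give the same answer.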

3.3. Students’ opinion regarding VR

The students who performed the task on the real plant were also asked to perform the same exercise in VR; the results of 5 students had to be omitted as they did not take part in the VR experiment. In total, 25 students experienced the learning materials in VR. Students were asked for their opinion on the use of VR in chemical engineering education, using the same Likert scale. The results of 3 questions related to the reception of VR as part of the learning materials are presented in Table 7 and visualised in .

Table 7. Students’ opinion about VR.

3.4. Adverse effects

Adverse effects of VR were recorded after the students performed the tasks. Students could choose from a list of 12 possible effects previously developed by Ames, Wolffsohn, and McBrien (2005); the item ‘boredom’ was omitted as not relevant to this research. Six students reported six adverse effects (see Table 8); the most commonly reported problem was tired eyes (4 students). A more severe problem (nausea) was reported in only a single case (4% of the 25 VR participants).

Table 8. Observed adverse effects.

4. Discussion

Chemical engineering educators can benefit from the use of VR in the education process, which can resolve many issues of access to specialised instruments and facilities, as well as the logistics of managing large groups of students in these potentially dangerous spaces. Furthermore, VR provides students with an alternative to passive learning methods such as educational videos and textbooks. The aim of this study was to consider whether student information retention (i.e. remembering and understanding) when information is delivered through a VR platform is comparable to that of a conventional method using video and slide-based information. The work also explored whether the students’ belief in their abilities (self-efficacy) is comparable. The total number of correct quiz answers was 120 for the students performing the experiment on the real plant, a mean of 8 correct answers per student, and 117 for those in VR, a mean of 7.8. The non-inferiority test showed that the results of students in the VR group were not worse, by more than the equivalence margin, than those of students performing the tasks on the real plant, and therefore the amount of retained information is at least comparable. Students in both groups reported comparable self-efficacy, as confirmed by the non-inferiority test. Students in the VR group reported a somewhat stronger belief in their ability to competently justify the use of specific items (67% strongly agree) than the group using the video (40% strongly agree).

The VR learning resource was well received by the students: participants in both groups agreed that VR should be used in chemical engineering education, and 68% of them strongly agreed that VR is a useful learning resource alongside traditional sources. However, six students reported adverse effects, with tired eyes being the most commonly reported problem. The frequency of adverse effects was lower than that measured by Ames, Wolffsohn, and McBrien (2005), which could be due to advances in the technology used for the experiment.

Critical to student engagement, and indeed to effective transfer to real-world practice, is the realism of the digital twin. High-quality visual realism, as demonstrated in this work, can be achieved with current 3D scanning and rendering technologies. However, work is needed to improve the ease of content creation, and thus accessibility to educators without specific programming skills. Likewise, linking visual realism with process model-based operation is not trivial but, for the reasons already described, it is an investment likely to bring considerable educational value. A final challenge for realism is the haptic experience. Although the haptic experience can be critical in psychomotor and related practical skills development, it may be less important in developing student understanding of systems, functions, and processes, or in raising awareness of, for example, hazards, safety considerations, and the relevance of physical principles. Nevertheless, haptic technologies are rapidly evolving, offering exciting future possibilities (see, e.g. Yang et al. (2021) and Giri, Maddahi, and Zareinia (2021)).

The results of this study indicate that it is possible to produce a VR experience that students find useful and that achieves the educational goals normally associated with in-person physical plant and laboratory experiences. As mentioned earlier, the VR platform is not necessarily considered an alternative to real experiences, but rather a complement to them through a self-regulated, flexible, and accessible learning medium. VR also provides opportunities for unique extensions to skills development, including higher-order challenges for critical thinking, e.g. for students to apply, analyse, and evaluate (cf. the revised Bloom’s taxonomy) through scenarios that would not be viable with actual kit or within an actual plant or laboratory environment. Indeed, as part of the information retention approach used in this work, students were asked to apply their understanding to specific actions on the plant, such as opening a valve to achieve a desired outcome.

In summary, as VR technology improves, more numerous, higher-quality, and purpose-specific experiences can be expected, helping to create accessible, safe, and inclusive learning environments for all students. Further studies exploring the effects of VR in other areas of the VR taxonomy, such as psychomotor skills training and motivational and emotive observing, will be needed. Further research on minimising the adverse effects of VR is also needed, especially given the expected growth in the availability and accessibility of such content. Finally, this study was performed only with students of the University of Surrey, so the sample may be relatively homogeneous; performing a similar study at a different institution would increase the generalisability of the results.

5. Conclusions

Immersive VR provides an engaging and accessible learning platform to complement laboratory and industrial plant experiences. This work has demonstrated that learning equivalent to that of a conventional approach is achievable using immersive VR for tuition and exercises. Specifically, a non-inferiority test was used to show that the VR approach is no less effective than an equivalent (but conventional) non-VR learning approach, whilst offering the additional advantages of educational logistics (such as flexible and self-directed learning), engagement, and learning experiences that may be impossible in a real laboratory or industrial environment. Similarly, student self-efficacy related to the learning objectives of the experience was found to be comparable between the VR and non-VR learning approaches. Student feedback on the future use of VR was highly favourable; however, ongoing work is needed to better understand and minimise some reported adverse effects, especially eyestrain.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Jiri Motejlek

Jiri Motejlek received his master’s degree in applied informatics from the Finance University in Prague and a postgraduate degree in higher education of information and communication technologies at Charles University in Prague. He recently attained his PhD degree in the Department of Chemical and Processing Engineering at the University of Surrey. His research interests include virtual reality and its use in higher education.

Esat Alpay

Esat Alpay is Associate Dean (Education) in the Faculty of Engineering and Physical Sciences. He received his BSc (Hons) degree in Chemical Engineering from the University of Surrey, and his PhD from the University of Cambridge. He also holds an MA degree in the Psychology of Education (with distinction) from the Institute of Education (UCL). He has wide interests in the educational support and skills development of undergraduate and postgraduate students.

References

- Allcoat, Devon, and Adrian von Mühlenen. 2018. “Learning in Virtual Reality: Effects on Performance, Emotion and Engagement.” Research in Learning Technology 26: 1–13.

- Ames, Shelly L, James S Wolffsohn, and Neville A McBrien. 2005. “The Development of a Symptom Questionnaire for Assessing Virtual Reality Viewing Using a Head-Mounted Display.” Optometry and Vision Science 82 (3): 168–176. doi:10.1097/01.OPX.0000156307.95086.6

- Bailey, Jakki O, Jeremy N Bailenson, and Daniel Casasanto. 2016. “When Does Virtual Embodiment Change Our Minds?” Presence: Teleoperators and Virtual Environments 25 (3): 222–233. doi:10.1162/PRES_a_00263

- Bayram, Sule Biyik, and Nurcan Caliskan. 2021. “An Innovative Approach in Psychomotor Skill Teaching for Nurses: Virtual Reality Applications.” Journal of Education and Research in Nursing 18 (3): 356–364. doi:10.5152/jern.2021.81542

- BIS (Department for Business, Innovation and Skills). 2013. “The Maturing of the MOOC: Literature Review of Massive Open Online Courses and Other Forms of Online Distance Learning.” Research paper 130, September, 1–123.

- Bloom, Benjamin S, Max D Engelhart, Edward J Furst, Walker H Hill, and David R Krathwohl. 1956. Taxonomy of Educational Objectives, Handbook I: Cognitive Domain. London: Longmans, Green and Co Ltd.

- Cakula, Sarma, Andra Jakobsone, and Jiri Motejlek. 2013. “Virtual Business Support Infrastructure for Entrepreneurs.” Procedia Computer Science 25: 281–288. doi:10.1016/j.procs.2013.11.034

- Chan, Vei Siang, Habibah Norehan Haron, M. I. B. M. Isham, and Farhan Bin Mohamed. 2022. “VR and AR Virtual Welding for Psychomotor Skills: A Systematic Review.” Multimedia Tools and Applications 81: 12459–12493. doi:10.1007/s11042-022-12293-5

- Chen, Yi-Ting, Chi-Hsuan Hsu, Chih-Han Chung, Yu-Shuen Wang, and Sabarish V Babu. 2019. “iVRNote: Design, Creation and Evaluation of an Interactive Note-Taking Interface for Study and Reflection in VR Learning Environments.” 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Mar., 172–180.

- Coleman, Elaine B. 1998. “Using Explanatory Knowledge During Collaborative Problem Solving in Science.” Journal of the Learning Sciences 7 (3–4): 387–427. doi:10.1080/10508406.1998.9672059

- Colledanchise, Michele, and Petter Ögren. 2017. Behavior Trees in Robotics and AI: An Introduction. Boca Raton, FL: Chapman & Hall.

- Douglas-Lenders, Rachel Claire, Peter Jeffrey Holland, and Belinda Allen. 2017. “Building a Better Workforce: A Case Study in Management Simulations and Experiential Learning in the Construction Industry.” Education + Training 59 (1): 2–14. doi:10.1108/ET-10-2015-0095

- Giri, G. S., Y. Maddahi, and K. Zareinia. 2021. “An Application-Based Review of Haptics Technology.” Robotics 10 (1): 29–47. doi:10.3390/robotics10010029

- Gomoll, Andreas H, Robert V O’Toole, Joseph Czarnecki, and Jon J P Warner. 2007. “Surgical Experience Correlates with Performance on a Virtual Reality Simulator for Shoulder Arthroscopy.” The American Journal of Sports Medicine 35 (6): 883–888. doi:10.1177/0363546506296521

- Grantcharov, Teodor P, Linda Bardram, Peter Funch-Jensen, and Jacob Rosenberg. 2003. “Learning Curves and Impact of Previous Operative Experience on Performance on a Virtual Reality Simulator to Test Laparoscopic Surgical Skills.” The American Journal of Surgery 185 (2): 146–149. doi:10.1016/S0002-9610(02)01213-8

- Hilfert, Thomas, and Markus König. 2016. “Low-cost Virtual Reality Environment for Engineering and Construction.” Visualization in Engineering 4 (1): 1–18. doi:10.1186/s40327-015-0029-z

- Holmes, Jeffrey. 2007. “Designing Agents to Support Learning by Explaining.” Computers & Education 48 (4): 523–547. doi:10.1016/j.compedu.2005.02.007

- Jakobsone, Andra, Jiri Motejlek, and Sarma Cakula. 2014. “Customized Work Based Learning Support System for Less Academically Prepared Adults in Online Environment.” 523–528 of: IEEE Global Engineering Education Conference (EDUCON). IEEE.

- Kaufmann, Hannes. 2002. Collaborative Augmented Reality in Education. Dec., 1–4.

- Kisker, Joanna, Thomas Gruber, and Benjamin Schöne. 2021. “Experiences in Virtual Reality Entail Different Processes of Retrieval as Opposed to Conventional Laboratory Settings: A Study on Human Memory.” Current Psychology 40 (9): 1–8. doi:10.1007/s12144-019-00257-2

- Krathwohl, D. R. 2002. “A Revision of Bloom’s Taxonomy: An Overview.” Theory Into Practice 41 (4): 212–218. doi:10.1207/s15430421tip4104_2

- Lakens, Daniël, Anne M Scheel, and Peder M Isager. 2018. “Equivalence Testing for Psychological Research: A Tutorial.” Advances in Methods and Practices in Psychological Science 1 (2): 259–269. doi:10.1177/2515245918770963

- Laurel, Brenda. 2016. “AR and VR: Cultivating the Garden.” Presence: Teleoperators and Virtual Environments 25 (3): 253–266. doi:10.1162/PRES_a_00267

- Luck, Michael, and Ruth Aylett. 2000. “Applying Artificial Intelligence to Virtual Reality: Intelligent Virtual Environments.” Applied Artificial Intelligence 14 (1): 3–32. doi:10.1080/088395100117142

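- Mayer, Richard E. 2005. “Cognitive Theory of Multimedia Learning.” In The Cambridge Handbook of Multimedia Learning, edited by Richard E. Mayer, 31–48. Cambridge: Cambridge University Press.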

- Motejlek, Jiri, and Esat Alpay. 2021. “Taxonomy of Virtual and Augmented Reality Applications in Education.” IEEE Transactions on Learning Technologies 14 (3): 415–429. doi:10.1109/TLT.2021.3092964

- Pallavicini, Federica, Luca Argenton, Nicola Toniazzi, Luciana Aceti, and Fabrizia Mantovani. 2016. “Virtual Reality Applications for Stress Management Training in the Military.” Aerospace Medicine and Human Performance 87 (12): 1021–1030. doi:10.3357/AMHP.4596.2016

- Pan, Xueni, and Antonia F de C Hamilton. 2018. “Why and How to Use Virtual Reality to Study Human Social Interaction: The Challenges of Exploring a New Research Landscape.” British Journal of Psychology 109 (3): 395–417. doi:10.1111/bjop.12290

- Potkonjak, Veljko, Michael Gardner, Victor Callaghan, Pasi Mattila, Christian Guetl, Vladimir M Petrović, and Kosta Jovanović. 2016. “Virtual Laboratories for Education in Science, Technology, and Engineering: A Review.” Computers & Education 95: 309–327. doi:10.1016/j.compedu.2016.02.002

- Ren, Shuo, Frederic D McKenzie, Sushil K Chaturvedi, Ramamurthy Prabhakaran, Jaewan Yoon, Petros J Katsioloudis, and Hector Garcia. 2015. “Design and Comparison of Immersive Interactive Learning and Instructional Techniques for 3D Virtual Laboratories.” Presence: Teleoperators and Virtual Environments 24 (2): 93–112. doi:10.1162/PRES_a_00221

- Shen, Chien-wen, Jung-tsung Ho, Ting-Chang Kuo, and Thai Ha Luong. 2017. “Behavioral Intention of Using Virtual Reality in Learning.” 129–137 of: WWW ’17: 26th International World Wide Web Conference. New York: ACM Press.

- Shih, YaChun, and MauTsuen Yang. 2008. “A Collaborative Virtual Environment for Situated Language Learning Using VEC3D.” Educational Technology & Society 11 (1): 56–68.

- Sun, Rui, Yenchun Jim Wu, and Qian Cai. 2018. “The Effect of a Virtual Reality Learning Environment on Learners’ Spatial Ability.” Virtual Reality 23 (4): 385–398. doi:10.1007/s10055-018-0355-2

- Walker, Esteban, and Amy S Nowacki. 2010. “Understanding Equivalence and Noninferiority Testing.” Journal of General Internal Medicine 26 (2): 192–196. doi:10.1007/s11606-010-1513-8

- Yang, T.-H., J. R. Kim, H. Jin, H. Gil, J.-H. Koo, and H. J. Kim. 2021. “Recent Advances and Opportunities of Active Materials for Haptic Technologies in Virtual and Augmented Reality.” Advanced Functional Materials 31: 2008831. (1–30). doi:10.1002/adfm.202008831

- Zyda, Mike. 2016. “Why the VR You See Now Is Not the Real VR.” Presence: Teleoperators and Virtual Environments 25 (2): 166–168. doi:10.1162/PRES_a_00254

Appendices

Appendix 1. Preliminary Questionnaire

Appendix 2. Complete results – Quiz

Tested students undertook a quiz after completing tasks on the real plant or in VR. The abbreviations used in the tables are:

No: Student’s number

G: Gender

VR: Experience with Virtual Reality block

PK: Previous knowledge block

RV1: Reactor

HV1: Heating Vessel

CV1: Feed Stock Reservoir

PF1A/B: Process Filters

P1A/B: Process Pumps

PHE1: Primary Heat Exchanger

PHE2: Secondary Heat Exchanger

DI1: Deioniser

PV1: Product Vessel

PF2: Polishing Filter

UT19-C1: Adsorption Unit Column

UT19-P1: Adsorption Unit Recirculation Pump

CA: Total Number of Correct Answers

Numbers 1 and 0 are used to capture whether the student’s answer was correct (1) or incorrect (0).
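As a sketch of how the CA column follows from the 1/0 item scores, the function below sums one student’s row. It assumes, hypothetically, one scored quiz item per equipment abbreviation listed above; the actual item layout of Tables A1 and A2 is not reproduced here, and the example student is invented.

```python
# Hypothetical: one scored quiz item per equipment abbreviation; the column
# layout of Tables A1 and A2 is assumed, not taken from the study's data.
ITEMS = ["RV1", "HV1", "CV1", "PF1A/B", "P1A/B", "PHE1", "PHE2",
         "DI1", "PV1", "PF2", "UT19-C1", "UT19-P1"]


def correct_answers(row):
    """Return CA, the total number of correct answers, for one student.

    `row` maps each item abbreviation to 1 (correct) or 0 (incorrect).
    """
    scores = [row[item] for item in ITEMS]
    if any(score not in (0, 1) for score in scores):
        raise ValueError("each item must be scored 1 (correct) or 0 (incorrect)")
    return sum(scores)


# Hypothetical student who answered every item except HV1 correctly
student = dict.fromkeys(ITEMS, 1)
student["HV1"] = 0
ca = correct_answers(student)
```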

The blocks were used to ensure even distribution of students based on their experience with VR and previous knowledge.
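The blocking can be sketched as stratified random allocation: students are grouped by their VR-experience and previous-knowledge blocks, shuffled within each group, and alternately allocated to the real-plant and VR conditions. The field names, seed, and cohort below are hypothetical, and this is one common way to balance groups, not necessarily the exact procedure used in the study.

```python
import random
from collections import defaultdict


def stratified_assignment(students, seed=0):
    """Split students between two conditions, balanced within each block.

    `students` is a list of dicts with hypothetical keys "no" (student number),
    "vr_block" and "pk_block" (VR-experience and previous-knowledge blocks).
    Within every (vr_block, pk_block) stratum the two group sizes differ by at most one.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for s in students:
        strata[(s["vr_block"], s["pk_block"])].append(s["no"])

    conditions = ("real plant", "VR")
    assignment = {}
    for key in sorted(strata):
        members = strata[key]
        rng.shuffle(members)          # random order within the stratum
        start = rng.randrange(2)      # randomise which condition gets any odd one out
        for i, no in enumerate(members):
            assignment[no] = conditions[(start + i) % 2]
    return assignment


# Hypothetical cohort: 20 students spread over 2 x 2 blocks
cohort = [{"no": n, "vr_block": n % 2, "pk_block": (n // 2) % 2} for n in range(1, 21)]
allocation = stratified_assignment(cohort)
```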

Table A1. Complete individual results (Real plant group).

Table A2. Complete individual results (VR group).

Appendix 3. Complete results – Questionnaire

Self-efficacy and students’ opinion about VR.

Table A3. Group A (real plant).

Table A4. Group B (VR).

Table A5. Adverse effects – results (part 1).

Adverse health effects

1. General discomfort

2. Fatigue

3. Drowsiness

4. Headache

5. Dizziness

6. Difficulty concentrating

7. Nausea

8. Tired Eyes

9. Sore/aching eyes

10. Eyestrain

11. Blurred vision

12. Difficulty focusing eyes

Table A5 shows student numbers (column ‘No’) and whether each student reported each adverse effect (numbered as in the list above). The first five students are not included because they did not perform the VR exercise.
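To summarise tables such as Table A5, the per-student reports can be tallied by symptom. The sketch below assumes a hypothetical data layout (each student number mapped to the set of symptom numbers that student reported) and uses invented data; it does not reproduce the study’s results.

```python
# Symptom numbering as in the adverse health effects list above
SYMPTOMS = {
    1: "General discomfort", 2: "Fatigue", 3: "Drowsiness", 4: "Headache",
    5: "Dizziness", 6: "Difficulty concentrating", 7: "Nausea",
    8: "Tired Eyes", 9: "Sore/aching eyes", 10: "Eyestrain",
    11: "Blurred vision", 12: "Difficulty focusing eyes",
}


def tally_adverse_effects(reports):
    """Count how many students reported each symptom.

    `reports` maps a student number to the set of symptom numbers reported;
    students who did not use VR are simply left out of the mapping.
    """
    counts = {name: 0 for name in SYMPTOMS.values()}
    for reported in reports.values():
        for number in reported:
            counts[SYMPTOMS[number]] += 1
    # Most frequently reported first; sorted() is stable, so ties keep list order
    return sorted(counts.items(), key=lambda item: -item[1])


# Hypothetical reports for four VR participants (students 6 to 9)
reports = {6: {8, 10}, 7: {10}, 8: set(), 9: {1, 10, 11}}
ranking = tally_adverse_effects(reports)
```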

Table A6. Adverse effects – results (part 2).