ABSTRACT

Self and peer assessments have been identified as effective strategies to develop a deeper understanding of complex concepts, enhance meta-cognitive capacity, and support learner self-efficacy. This study examines data related to peer and self-assessment exercises completed within a university engineering programme (n=61). Data related to peer-assessment scores, self-assessment scores, and expert assessor scores are analysed. Quality of feedback generated during the peer assessment activity is also considered. An open data set is included within the manuscript and hosted on the Open Science Framework. The results of this study suggest relatively high peer-assessment reliability when compared to expert assessments, although peer scoring tends to be consistently overestimated. In contrast, self-assessment scores demonstrate relatively low reliability when compared with expert assessor scores. The quality of feedback provided during the peer assessment exercise demonstrated a strong relationship with student performance. Recommendations for further refinement and implementation with larger cohorts are discussed.

Introduction

Peer assessment is a pedagogical strategy that requires students to evaluate their peers’ efforts within an educational context, with a view to supporting their future learning. While this can be summative in nature, where a student simply awards a grade based on a set of criteria, it is more frequently used in a formative manner so that it generates feedback that can support future efforts. While a wide range of factors can impact the effectiveness of this strategy, the vast majority of research suggests that it has considerable benefits and significant impacts on student learning (H. Li et al. 2020; van Zundert, Sluijsmans, and van Merriënboer 2010). In their meta-analysis examining over 50 studies, Double, McGrane, and Hopfenbeck (2020, 500) conclude that ‘Overall, the results suggest that there is a positive effect of peer assessment on academic performance in primary, secondary, and tertiary students’.

Self-assessment is frequently used alongside peer assessment as a pedagogical strategy, and this is reflected in the research literature where the two techniques are often evaluated within individual studies. This is due to the similar nature of the activity, required student capacities, and supporting systems. Self-assessment also requires students to evaluate work based on set criteria, but is complicated by interactions with self-theories. How individuals perceive themselves in terms of ability can impact their capacity for self-evaluation. In this manner, an individual could exhibit a reliable capacity for assessing others, but a lower capacity for self-assessment because how they view themselves within a specific context influences this process (Ehrlinger 2008). Beliefs regarding oneself, and their resultant impacts on behaviours or capacities, are considered self-theories (Dweck 2013). In their meta-analysis, Panadero, Jonsson, and Botella (2017) identify the effects of engaging in self-assessment on students’ self-regulated learning and self-efficacy. This additional complexity is reflected in studies that consider the reliability of self and peer assessments relative to expert assessment. The majority of research suggests significantly lower reliability when students self-assess, but these studies also note the increased potential benefits for motivational factors such as self-efficacy (Andrade 2019; Panadero, Brown, and Strijbos 2016).

Factors impacting peer assessment effectiveness

With appropriate supports, peer assessments are comparable in terms of reliability to those of a lecturer; however, variances exist across disciplines (Double, McGrane, and Hopfenbeck 2020; Magin 2001). When utilised effectively, peer assessment benefits students through increased feedback, improved comprehension of performance criteria, a more developed understanding of their own learning process, and ultimately increased performance (Ballantyne, Hughes, and Mylonas 2002). However, these benefits should be considered in light of the additional time demands placed on students by such strategies (Sung et al. 2003). The use of technology to facilitate peer assessment can enhance the effectiveness of a peer assessment strategy (Nicol and Milligan 2006). Such technologies are particularly useful for co-ordinating the peer assessment process and for ensuring peer level anonymity, which has been identified as a prerequisite for high-quality peer evaluations (Li et al. 2016; L. Li 2017; Lin, Liu, and Yuan 2001). Drawbacks to peer assessment can include students’ distrust (Topping 2010) and poor assessment performance (Walvoord et al. 2008). These issues can be mitigated by explicitly defining performance criteria, peer assessment training (van Zundert, Sluijsmans, and van Merriënboer 2010), and through quality assurance systems implemented by the educator (Planas Lladó et al. 2014). Panadero and Alqassab (2019), in their review of the role of anonymity in peer assessment and peer feedback, conclude that suitably deployed systems that facilitate anonymity can effectively mitigate many of the shortcomings of peer review. They suggest that suitable levels of anonymity enhanced the criticality of feedback, resulted in students perceiving feedback as more valuable, and led to higher reviewer performance as well as positive impacts on social/team dynamics within learning environments.
Additional supporting resources, such as rubrics, have also been linked to strategy effectiveness.

Rubrics and peer/self-assessment

Rubrics are explicit communications of performance criteria for a given activity. They typically provide outlines/descriptions for each criterion at a given level of performance (Reddy and Andrade 2010). As such, these detailed scoring guides serve to enhance the reliability of assessment in a traditional sense where an educator reviews student work, but they can also support numerous formative strategies including peer and self-assessments (Wiliam 2011). Panadero and Jonsson (2013), in their review of rubric use in supporting peer and self-assessment, suggest that rubrics can play a considerable role in enhancing both summative and formative activities. They found that rubric use enhanced transparency, reduced anxiety, improved self-efficacy, and ultimately supported student self-regulation. As educators attempt to scale approaches in order to support larger classes, the many positive impacts of rubrics in terms of student autonomy should be strongly considered when deploying self or peer assessment. However, the effectiveness of rubrics can be subject to moderation from parallel strategies or other factors (Panadero and Jonsson 2013). While parallel strategies, such as self-assessment or meta-cognition, can be seen as potentially confounding from a research methodology perspective, they are complementary in nature when considered from a pedagogical perspective, and as such tend to be studied in combination with rubric use (Reddy and Andrade 2010). This interplay has been highlighted by some researchers as contributing to the null findings of some research examining rubric use (Halonen et al. 2003), while others highlight the vast differences in outcomes based on domain, approach, and supporting systems (Topping 1998; van Zundert, Sluijsmans, and van Merriënboer 2010).

Peer assessment in blended and digital learning

As a response to demands for more flexible learning paths, and more recently the COVID-19 pandemic, educators have expedited their adoption of blended and digital learning systems. These systems offer several advantages over traditional approaches including increased flexibility and autonomy for students. However, they have also been linked to several negative outcomes including reduced student motivation, reports of isolation, reduced peer communication, and ultimately student disengagement (Muljana and Luo 2019). In their review of 40 studies published between 2010 and 2018, Muljana and Luo (2019, 20) identified ‘institutional support, the level difficulty of the programmes, promotion of a sense of belonging, facilitation of learning, course design, student behavioural characteristics, and demographic variables along with other personal variables’ as the primary factors associated with low retention. The review concludes that ‘high-quality instructional feedback and strategies, [and] guidance to foster positive behavioural characteristics’ are suitable strategies to tackle retention. The link between retention and feedback within digital learning environments was also explored by Panigrahi, Srivastava, and Sharma (2018) who stated ‘the feedback mechanism which is an antecedent of students’ continuation intention has a lot of scope to be studied in the virtual community context’. Self and peer assessments have the potential to mitigate some of the negative impacts of digital learning. They facilitate increased peer interactions that can generate useful feedback (Ballantyne, Hughes, and Mylonas 2002; Nicol and Milligan 2006; Planas Lladó et al. 2014). These interactions can enhance engagement within online learning systems and build online learner readiness (Hunt et al. 2022; J. Power et al. 2022).
The integration of self-assessment prior to peer assessment allows for reviewer capacity assessment and enhances meta-cognitive capacity (Seifert and Feliks 2019). Peer assessment has also been identified as suitable for large class sizes, generating feedback that would otherwise be resource intensive, while simultaneously increasing student motivation (Pintrich and Zusho 2002; Zimmerman and Schunk 2001). Considering a strategy’s impact on non-cognitive factors, such as motivation or self-efficacy, is essential as these factors have been repeatedly linked with retention and performance (J. Power, Lynch, and McGarr 2019).

In their meta-analysis of 54 studies, Double, McGrane, and Hopfenbeck (2020) noted a marked robustness in terms of the positive impacts of peer assessment across a range of settings, systems, and disciplines. Notably, they also highlighted a positive impact for the reviewer, as well as the reviewee (Topping 1998; van Zundert, Sluijsmans, and van Merriënboer 2010). These benefits focus on developing the assessors’ understanding of performance criteria as well as supporting broader meta-cognitive advances (Seifert and Feliks 2019). This can have later positive impacts on their learning, but does require careful planning of when the peer assessment is deployed in order to give individuals suitable opportunity to utilise their increased knowledge of performance criteria and peer quality. Particularly relevant to the current study, and engineering education faculties, Double, McGrane, and Hopfenbeck (2020, 501) concluded that ‘peer grading was beneficial for tertiary students but not beneficial for primary or secondary school students’. This is possibly due to older students demonstrating an increased capacity to engage with performance criteria and associated critical analysis. Boud and Falchikov (2006) outline the many benefits for the assessor that can result from engagement with peer assessment, including increased collaborative capacity, communication of knowledge, management of one’s own learning, and improved research skills.

Peer assessment in engineering education

Research on the use of peer assessment within engineering education is relatively scarce. In examples where engineering students are part of the larger sample group, they are frequently studied in broader domains such as cross-faculty writing classes (Zou et al. 2018). While these studies can inform applications of peer assessment within engineering education, research examining its utility within an engineering-specific context would likely further enhance adoption and effectiveness.

Although limited, there are some engineering-specific evaluations of peer and self-assessment. Rafiq and Fullerton (1996) examined the use of peer assessment within civil engineering in combination with group-based learning. They conclude that the use of peer assessment enhanced the effectiveness of group-based learning. Similarly, Hersam, Luna, and Light (2004) report on the implementation and design of a programme that complements group-based learning with peer assessment. Willey and Freeman (2006) also examined peer assessment with a focus on desirable engineering graduate attributes and team dynamics. They conclude that their application resulted in a lower administrative load on the educators, while also enhancing student collaboration, communication, and satisfaction. Although peer assessment research that explicitly targets knowledge and skills that may be unique to engineering is relatively scarce, there is some evidence that peer assessment has positive impacts on domain-specific skills and that experience of peer assessment results in positive student affect towards it (van Zundert, Sluijsmans, and van Merriënboer 2010).

Aim of the study

Taking into consideration the various challenges related to successfully implementing peer and self-assessment, this study aims to examine the relationships between peer, self, and expert assessment outcomes and in doing so provide further supporting evidence for engineering educators who may wish to adopt this approach in the future. In order to achieve this aim the study has been designed around the following research questions:

What is the nature of the relationship between peer and expert assessment scores?

What is the nature of the relationship between self and expert assessment scores?

What is the nature of the relationship between expert assessment scores and the quality of peer formative feedback?

Context of the study

Students in their final year of an engineering technology degree completed a 12-week module worth 6 ECTS credits. The associated learning outcomes and professional knowledge requirements were relatively advanced and appropriate to the final year of an undergraduate degree. The topic was focused on industrial manufacturing and design. As part of the assessment procedure, students wrote a technical report and engaged in a peer/self-assessment activity. Students were provided with a 1-week period to complete this task beginning in week 9 of the 12-week semester. As part of the module, students were required to take part in the self/peer assessment activity. However, they could choose whether to grant permission for the associated data to be used for research purposes. This was on an opt-in, informed-consent basis. All potential participants were provided with information sheets and afforded independent time to consider, and further questioning time was also provided. Independent alternative contacts and later opt-out options were clearly communicated within approved information sheets and verbal briefings. Ethical clearance for the current project was granted by the relevant faculty ethics committee.

Method

Students submitted their reports through the University Learning Management System, which is based on Sakai. Reports followed outlined structures, which aligned with the performance rubrics. Rubrics were issued at the start of the module and students were advised as to their purpose and utility. This briefing occurred within a scheduled lecture slot and included a Q&A session. The Learning Management System was used to facilitate self-assessment and later anonymous peer assessment. Students first engaged in self-assessment and subsequently completed the peer assessment exercise. For both self and peer assessment, students were required to utilise a specified rubric: https://osf.io/t3vxw/?view_only=ebf58543a8ff42d4a6db80931df7f2a0. When engaging in peer assessment each individual was assigned three reports. The ‘peer’ score referred to throughout the document is a mean of these three scores. In addition to scoring based on the rubric, students were required to provide formative feedback in order to support further development of student work.

For the purposes of comparing scores on assessed work, the ‘expert’ score is considered a baseline. However, ‘expert’ scores should not be considered as devoid of variance. Rather, they are presented as a best approximation: variances associated with ‘expert’ scores are acknowledged, but these scores are typically more reliable than either peer or self-assessments (Li et al. 2016). T-tests are used when considering differences between ‘expert’ and ‘peer’ or ‘self’ scores. Associated effect sizes are also calculated on this basis. A Bland-Altman graph is presented in order to explore the differences between ‘expert’ and ‘self’ scores within defined limits (Giavarina 2015). Due to sample size, tests have been selected based on suitability for use within limited data sets.

The module co-ordinator independently scored all student assignments, which are referred to as ‘expert’ scores in the later analyses. All scores and peer comments were reviewed by the module co-ordinator prior to circulation. The quality of peer feedback was subsequently evaluated by the module co-ordinator. The criteria and scoring guide are available through the OSF link above. In alignment with J. R. Power (2021), the authors value the role of open data and broader Open Science principles. Anonymised data collected during the current study, and analysed below, is also available through the Open Science Framework for the purpose of review or reuse, subject to Creative Commons Attribution 4.0 International Public License: https://osf.io/t3vxw/?view_only=ebf58543a8ff42d4a6db80931df7f2a0

Results

Results are presented relative to the previously outlined research questions.

Overall means related to expert, peer, and self-assessments are presented in Table 1. Peer and self-assessments consistently resulted in higher scores relative to expert scores. Self-assessment scores also demonstrated the largest standard deviation.

Table 1. Means of expert, peer, and self-assessment scores.

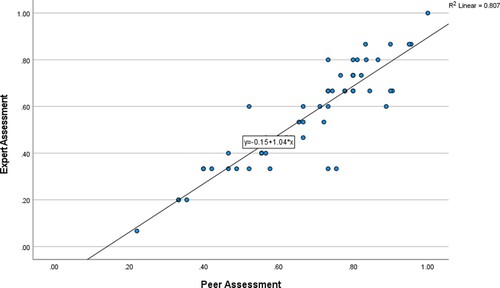

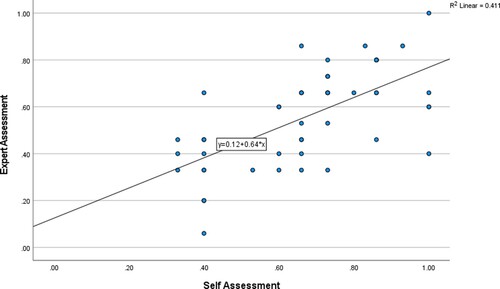

Table 2 outlines the correlations between self, peer, and expert scores. Peer scores are considered as a mean of the three scores collected for each individual assignment that was subject to peer assessment. Peer/expert shows the highest shared variance (R² = 0.806) when compared to self/expert (R² = 0.23) and self/peer (R² = 0.108). These results suggest that peer and expert scores share considerably more variance than either self and peer or self and expert scores.

Table 2. Correlation matrix of expert, peer and self-assessment scores.
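The shared-variance figures above are squared Pearson correlations, so R² = 0.806 corresponds to r of roughly 0.90. The following is a minimal sketch of that calculation, including the averaging of three peer scores into a single ‘peer’ score. The score values and variable names are hypothetical and are not drawn from the study's data set.

```python
import math
import statistics

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical data: one expert score and three peer scores per report.
expert = [0.40, 0.55, 0.62, 0.70, 0.85]
peer_triplets = [
    (0.50, 0.60, 0.55),
    (0.60, 0.70, 0.65),
    (0.70, 0.75, 0.80),
    (0.75, 0.80, 0.85),
    (0.85, 0.90, 0.95),
]

# The 'peer' score for each report is the mean of its three peer scores.
peer_mean = [statistics.mean(scores) for scores in peer_triplets]

r = pearson_r(expert, peer_mean)
r_squared = r ** 2  # proportion of variance shared by the two sets of scores
print(f"r = {r:.3f}, R^2 = {r_squared:.3f}")
```

Averaging three peer scores before correlating tends to reduce rater-level noise, which is one reason the peer/expert and self/expert figures are not directly comparable.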

What is the nature of the relationship between peer and expert assessment scores?

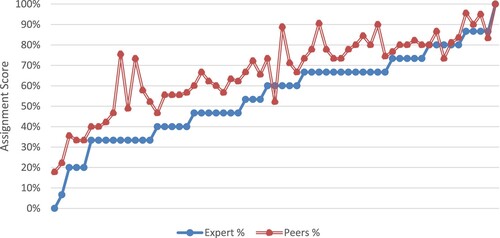

A paired samples t-test identified a significant difference between expert (M = .561, SD = .203) and peer assessment scores (M = .68, SD = .175; t(59) = 10.258, p < .001). The related Cohen’s d of 1.324 suggests a large inflationary effect of peer assessment. This is reflected in the accompanying figure, which shows consistent inflation of peer scores compared to expert scores. The difference between peer and expert scores is greatest in the mid-range and reduced in the higher-scoring items.
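The reported effect size is consistent with Cohen's d for paired samples computed as the mean difference divided by the standard deviation of the differences (often written d_z), since t/√n = 10.258/√60 ≈ 1.324. The sketch below illustrates that relationship under this assumption. The function name and the scores are hypothetical, and the p-value is omitted because it requires a t-distribution routine outside the standard library.

```python
import math
import statistics

def paired_t_and_dz(baseline, comparison):
    """Return (t, df, d_z) for paired samples, using comparison - baseline."""
    if len(baseline) != len(comparison) or len(baseline) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    diffs = [c - b for b, c in zip(baseline, comparison)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)          # sample SD (n - 1 denominator)
    t = mean_diff / (sd_diff / math.sqrt(n))   # paired samples t statistic
    d_z = mean_diff / sd_diff                  # equals t / sqrt(n)
    return t, n - 1, d_z

# Hypothetical expert and mean peer scores for five reports.
expert = [0.40, 0.55, 0.62, 0.70, 0.85]
peer = [0.58, 0.66, 0.75, 0.78, 0.88]

t, df, d_z = paired_t_and_dz(expert, peer)
print(f"t({df}) = {t:.3f}, d_z = {d_z:.3f}")
```

Other definitions of Cohen's d, such as those that divide by a pooled standard deviation, would give a different value from the same data, so the choice of definition matters when comparing effect sizes across studies.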

An intraclass correlation of .888 was observed between peer and expert scores, which is considered high inter-rater reliability (κ = .888, 95% CI .820 to .932, p < .0005; Fleiss 1986). This suggests that when assessing peer work participants are demonstrating a considerable understanding of the performance criteria and are closely aligned with expert assessments. When evaluated in conjunction with the previously calculated effect size, this suggests that while peer assessments are reliable, they also consistently overestimate peer performance.
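The combination of high reliability and consistent inflation can be illustrated with a two-way intraclass correlation. The form of the coefficient used in the study is not stated, so the sketch below is an assumption: it computes the single-rater absolute-agreement form, ICC(2,1), and the single-rater consistency form, ICC(3,1), from the standard ANOVA mean squares. The function name and data are hypothetical.

```python
def icc_single(ratings):
    """ratings: one row per assignment, one column per rater.

    Returns (agreement, consistency), i.e. ICC(2,1) and ICC(3,1).
    """
    n = len(ratings)       # number of assignments
    k = len(ratings[0])    # number of raters
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_err = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))

    agreement = (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    )
    consistency = (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err)
    return agreement, consistency

# Hypothetical case: the second rater adds a constant 0.10 to every expert score.
expert = [0.40, 0.55, 0.62, 0.70, 0.85]
inflated = [[e, e + 0.10] for e in expert]

icc_agree, icc_consist = icc_single(inflated)
print(f"agreement = {icc_agree:.3f}, consistency = {icc_consist:.3f}")
```

In this constructed case the consistency coefficient is effectively 1 while the agreement coefficient is lower, which shows how raters can rank work almost identically to an expert and still award systematically higher scores.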

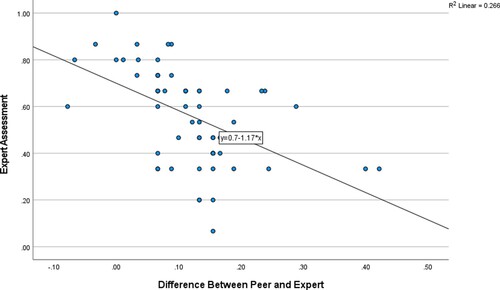

The accompanying results consider differences between peer and expert scores relative to individuals’ expert scores. They suggest that peer assessment scores deviated from expert scores to a greater degree in lower-scored assignments and primarily in a positive direction.

What is the nature of the relationship between self and expert assessment scores?

An intraclass correlation of .463 was observed between self and expert scores (κ = .463, 95% CI .225 to .649, p < .0005), which is considered poor inter-rater reliability (Fleiss 1986). These results suggest participants were less accurate in their evaluations of their own performance than in their evaluations of their peers’ performance.

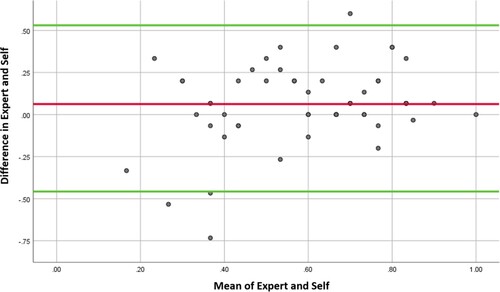

The accompanying figure shows a Bland-Altman graph, containing mean and confidence intervals, that examines the differences in expert and self-assessment scores. A subsequent linear regression was statistically significant (R² = .08, F(1, 52) = 4.726, p = .034), indicating proportional bias. Results suggest lower-scoring students, based on expert assessor scores, have a greater tendency to overestimate the quality of their own work.
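A Bland-Altman analysis of this kind can be sketched as follows. The sketch computes the mean difference (bias), approximate 95% limits of agreement (bias ± 1.96 SD), and the slope of a least-squares regression of the differences on the pairwise means as a check for proportional bias. Regressing on the pairwise means is the conventional choice and is an assumption here, as the predictor used in the study may instead have been the expert score. All data and names are hypothetical.

```python
import statistics

def bland_altman(reference, comparison):
    """Return (bias, limits_of_agreement, slope, intercept).

    Differences are comparison - reference; the slope comes from an ordinary
    least-squares regression of the differences on the pairwise means.
    """
    diffs = [c - r for r, c in zip(reference, comparison)]
    means = [(r + c) / 2 for r, c in zip(reference, comparison)]

    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)
    limits = (bias - 1.96 * sd, bias + 1.96 * sd)

    mean_x = statistics.mean(means)
    sxx = sum((x - mean_x) ** 2 for x in means)
    sxy = sum((x - mean_x) * (d - bias) for x, d in zip(means, diffs))
    slope = sxy / sxx
    intercept = bias - slope * mean_x
    return bias, limits, slope, intercept

# Hypothetical case: weaker reports are overestimated more by their authors.
expert = [0.30, 0.45, 0.60, 0.75, 0.90]
self_scores = [0.60, 0.65, 0.70, 0.80, 0.90]

bias, limits, slope, intercept = bland_altman(expert, self_scores)
print(f"bias = {bias:.3f}, limits = ({limits[0]:.3f}, {limits[1]:.3f})")
print(f"slope = {slope:.3f}")
```

A negative slope in this setup means that the gap between self and expert scores shrinks as scores rise, which is the pattern described as proportional bias above.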

What is the nature of the relationship between expert assessment scores and quality of peer formative feedback?

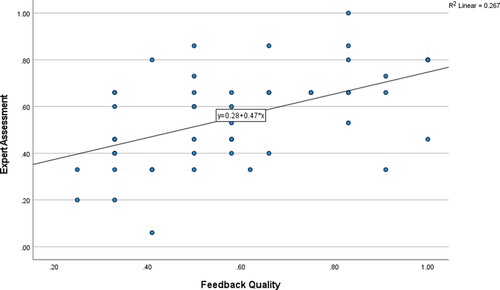

A significant correlation was observed between expert assessments of student performance and the quality of feedback that these students produced within the peer assessment exercise (r = .516, p < .001).

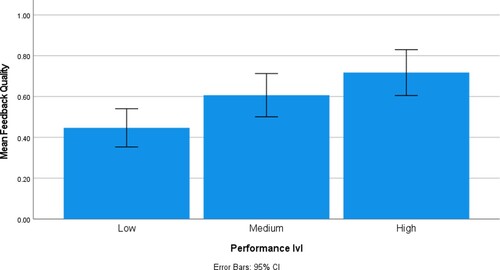

The accompanying scatter plot outlines the relationship between the performance of students, as defined by the expert assessment score of their work, and the quality of feedback that they provided in the peer assessment exercise. These results suggest that students who demonstrate greater academic performance are likely to provide higher-quality feedback to peers. When participants are grouped based on their academic performance (Low, Medium, and High), this pattern is more easily observed.

A subsequent one-way ANOVA confirms statistically significant differences between quality of feedback provided within the peer assessment exercise when compared across groups based on their performance within the module (F(2,46) = 7.65, p < .001).
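The F statistic for a one-way ANOVA of this kind is the ratio of the between-group mean square to the within-group mean square. The reported degrees of freedom, F(2, 46), imply three groups and 49 scored participants. The following minimal sketch shows the calculation on hypothetical feedback-quality scores. The group values and function name are illustrative only, and the p-value is omitted because it requires an F-distribution routine outside the standard library.

```python
import statistics

def one_way_anova(groups):
    """Return (F, (df_between, df_within)) for a list of groups of scores."""
    all_values = [x for g in groups for x in g]
    grand_mean = statistics.mean(all_values)
    k = len(groups)
    n_total = len(all_values)

    ss_between = sum(
        len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups
    )
    ss_within = sum(
        (x - statistics.mean(g)) ** 2 for g in groups for x in g
    )

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, (df_between, df_within)

# Hypothetical feedback-quality scores for three performance groups.
low = [1, 2, 3]
medium = [2, 3, 4]
high = [4, 5, 6]

f_stat, dof = one_way_anova([low, medium, high])
print(f"F({dof[0]}, {dof[1]}) = {f_stat:.2f}")
```

A significant F indicates only that at least one group mean differs, so follow-up comparisons would be needed to say which performance groups differ in feedback quality.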

Results summary

Peer-based assessment is highly aligned with expert assessment scores. Greater alignment is observed at the extreme ends of work quality. Although peer assessments are consistently overestimated, they demonstrate discriminant validity that is comparable to expert assessments. Self-assessment scores have comparatively poor reliability. The results suggest a proportional bias, with students who received lower expert assessment scores more likely to overestimate their own performance.

Discussion

The results of this study suggest that peer assessment is a suitable strategy for use within engineering education and has the potential to meet many of the challenges associated with university level engineering education. Specifically, peer assessment demonstrates considerable potential for generating student feedback at a time when increasing class sizes and diminishing resources are making this increasingly challenging. Peer assessment and expert scores demonstrated suitable levels of reliability. However, a consistent positive inflation was observed when comparing peer with expert scores. The results of this study represent a higher degree of reliability between expert and peer assessment scores than observed in many other studies (Double, McGrane, and Hopfenbeck 2020; Magin 2001). This is potentially due to the use of a rubric, or the complementary nature of self-assessment. While further research is required in order to explain the causal mechanism that resulted in relatively high reliability, from a functional perspective, the findings of this study suggest that peer assessment is suitable for use within this specific engineering education context and warrants further development.

A key benefit of this approach is increased feedback for students with a comparably reduced workload for the educator. This highlights the scalability of this strategy. A key factor that influences this scalability is the manner in which peer assessment is facilitated. Numerous studies have found that digital peer assessment systems demonstrate a greater benefit to learners than comparable in-person mediums (Li et al. 2020). Related research focusing on the impact of anonymity identifies the relatively easy implementation within online learning systems as a factor that influences this increased effectiveness (Lin, Liu, and Yuan 2001). Online systems also facilitate more efficient review of feedback quality as well as the capacity to include supporting systems such as rubrics (Panadero and Jonsson 2013; Reddy and Andrade 2010). It is worth noting that the benefits of this process go beyond the valuable feedback provided. Numerous studies highlight the positive impact that engaging in peer assessment can have for the assessor in terms of meta-cognitive capacities and perceptions regarding value, as well as motivational benefits (Li et al. 2020; Panadero and Alqassab 2019).

Although the self-assessment data presented within this study demonstrates comparatively poor reliability relative to peer assessments, it is markedly higher than that reported in contemporary findings (Brown, Andrade, and Chen 2015; Lew, Alwis, and Schmidt 2010). This is possibly due to the nature of engineering outcomes and the relatively definitive conclusions that can be drawn when compared to other fields where subjective interpretations play a greater role in self-assessment (Panadero, Jonsson, and Botella 2017). A meta-analysis by Panadero, Jonsson, and Botella (2017) highlights the value of self-assessment as a means of improving students’ use of learning strategies and the positive impacts it can have on motivational factors such as self-efficacy. The design of the current study required participants to complete self-assessments prior to engaging in peer assessments. It is possible that the self-assessment exercise enhanced later peer assessment as it required participants to engage with the same performance criteria and systems. Familiarity with criteria, systems, and general practice has been shown to enhance both self (Panadero, Jonsson, and Botella 2017) and peer (Li et al. 2020) assessment performance.

Self-assessment and expert scores demonstrated poorer levels of reliability. However, it should be noted that the peer assessment scores used within this comparison are based on a mean score of three peers. This likely reduced variability when compared to self-assessment scores. The data also indicated proportional positive bias when self-assessing. This suggests that lower-performing students, as defined by expert score, were more likely to overestimate the quality of their work when compared to their higher-performing peers. This aligns with the conclusions of Lew, Alwis, and Schmidt (2010), although it is worth noting that reliability and proportional bias in this study were markedly lower. This is potentially due to meta-cognitive attributes that facilitate an understanding of performance criteria (Panadero, Jonsson, and Botella 2017). Students who cannot accurately evaluate their own work in light of these criteria are predictably unlikely to be able to evaluate the work of others using the same criteria. Compounding this issue is the potential role of self-perceptions which are likely to play a part in this reduced capacity for self-evaluation (Brown, Andrade, and Chen 2015). Future sequential designs would provide valuable additional data as to whether first being exposed to peer assessment could improve self-assessment performance.

When considering the quality of feedback generated within the peer assessment exercise, a distinct relationship between the academic performance of the student assessor and the quality of the resultant feedback is evident. Previous research has linked academic performance to the capacity to provide quality feedback (Double, McGrane, and Hopfenbeck 2020; Li et al. 2020). It is possible that an understanding of performance criteria is partially responsible, as it would impact performance in both areas. Pellegrino (2002) outlines that self and peer assessments offer valuable feedback data in the broader movement towards increased formative assessment. Armed with this information, educators can modify practice and provide additional targeted supports where needed. In the context of the current study, these resources could yield increased returns if peer assessment participants who tend to demonstrate low reliability are specifically targeted. This could both increase the value of the activity and develop lower-performing students’ understanding of performance criteria, ultimately increasing their performance within the relevant education structures. The mechanism by which this potential improvement could be achieved is based within meta-cognition. By supporting lower-performing students in developing their understanding of performance criteria, educators are essentially developing a capacity for meta-cognition. This is a capacity that has been linked to increased performance in a wide range of domains (de Boer et al. 2018). The data analysed within this paper suggest a link between an understanding of these criteria and academic performance. However, the nature of this relationship and any claims to causality are beyond the scope of the current research project.

Conclusions

The results of this study suggest that this combination of peer and self-assessment is suitable for scaling to large cohorts in engineering disciplines. However, the success of such a system at a greater scale will be largely dependent on a suitable Learning Management System that can facilitate anonymous peer assessment, suitable supporting rubrics as well as development opportunities for students in order to support capacity for reliable use (van Zundert, Sluijsmans, and van Merriënboer 2010). One particular finding from the current study that should interest those intending to deploy similar methods at scale is the relationship between expert scores, self-assessment scores, and validity of formative feedback provided to peers. The data within this study suggests that students who demonstrate the largest differences in their self-assigned score and that of the expert assessor are less likely to provide high-quality formative feedback and demonstrate reduced reliability when scoring peers. While research on this facet of peer assessment is limited, academic performance has been previously linked to peer assessment capability (van Zundert, Sluijsmans, and van Merriënboer 2010). If an educator wished to focus their quality assurance of peer feedback, it may prove fruitful to identify students with the greatest discrepancies between self and expert scores. It is likely that this type of variable could be built into algorithms in the future for Online Learning Management systems and is already being utilised within experimental systems that employ machine learning (Rico-Juan, Gallego, and Calvo-Zaragoza 2019). As a pedagogical strategy, peer and self-assessments are supported by a strong evidence base, demonstrate considerable benefits to learning outcomes, positively impact motivational factors, and show potential for further development within engineering education.

Limitations and recommendations for future research

While the sample size of the current study limits the veracity and generalisability of findings, this study does highlight unique relationships between peer, self, and expert assessments that have the potential to inform future practice and research. The sample size also influenced the selection of statistical methods and prevented the use of more advanced modelling techniques such as structural equation modelling. The strength and significance of these relationships highlight the potential value of more refined research approaches and the utility of peer assessment in university engineering education environments.

Future practice could be informed by sequential designs that examine the reliability of peer assessment over multiple experiences and potential training effects. In the current study, participants completed self-assessment prior to peer assessment. It is probable that this had a positive impact on peer assessment performance. A control condition, or an alternating sequence, could provide further insight into this potential training effect and the likely increase in system familiarity. Existing research highlights the variability in rubric effectiveness, but also the role that familiarity with these systems plays in their effectiveness (Panadero and Jonsson Citation2013; Reddy and Andrade Citation2010). This approach has the potential to provide further clarity and inform practitioners regarding appropriate student supports in order to maximise reliability. This could be further strengthened through a mixed methods approach that has the potential to shed light on the factors that lead to peer assessment inflation.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Notes on contributors

Jason Richard Power

Dr. Jason Richard Power is a lecturer within the School of Education at the University of Limerick, Ireland. His research interests are focused on affective and cognitive factors that are associated with student performance in STEM education environments. He currently leads an international project supporting evidence-based practice in engineering education.

David Tanner

Prof. David Tanner is a faculty member in the School of Engineering where he lectures undergraduate students in manufacturing process technology, researches and implements best pedagogic practice, and is an active researcher in manufacturing processes including metal casting and additive manufacturing techniques.

References

- Andrade, H. L. 2019. “A Critical Review of Research on Student Self-Assessment.” Frontiers in Education 4. doi:10.3389/feduc.2019.00087.

- Ballantyne, R., K. Hughes, and A. Mylonas. 2002. “Developing Procedures for Implementing Peer Assessment in Large Classes Using an Action Research Process.” Assessment & Evaluation in Higher Education 27 (5): 427–441. doi:10.1080/0260293022000009302.

- Boud, D., and N. Falchikov. 2006. “Aligning Assessment with Long-Term Learning.” Assessment & Evaluation in Higher Education 31 (4): 399–413. doi:10.1080/02602930600679050.

- Brown, G. T. L., H. L. Andrade, and F. Chen. 2015. “Accuracy in Student Self-Assessment: Directions and Cautions for Research.” Assessment in Education: Principles, Policy & Practice 22 (4): 444–457. doi:10.1080/0969594X.2014.996523.

- de Boer, H., A. S. Donker, D. D. N. M. Kostons, and G. P. C. van der Werf. 2018. “Long-term Effects of Metacognitive Strategy Instruction on Student Academic Performance: A Meta-Analysis.” Educational Research Review 24: 98–115. doi:10.1016/j.edurev.2018.03.002.

- Double, K. S., J. A. McGrane, and T. N. Hopfenbeck. 2020. “The Impact of Peer Assessment on Academic Performance: A Meta-Analysis of Control Group Studies.” Educational Psychology Review 32 (2): 481–509. doi:10.1007/s10648-019-09510-3.

- Dweck, C. S. 2013. Self-Theories: Their Role in Motivation, Personality, and Development. New York: Psychology Press.

- Ehrlinger, J. 2008. “Skill Level, Self-Views and Self-Theories as Sources of Error in Self-Assessment.” Social and Personality Psychology Compass 2 (1): 382–398. doi:10.1111/j.1751-9004.2007.00047.x.

- Fleiss, J. L. 1986. “Reliability of Measurement.” In The Design and Analysis of Clinical Experiments, 1–32. doi:10.1002/9781118032923.

- Giavarina, D. 2015. “Understanding Bland Altman Analysis.” Biochem Med (Zagreb) 25 (2): 141–151. doi:10.11613/bm.2015.015.

- Halonen, J. S., T. Bosack, S. Clay, M. McCarthy, D. S. Dunn, G. W. Hill IV, … K. Whitlock. 2003. “A Rubric for Learning, Teaching, and Assessing Scientific Inquiry in Psychology.” Teaching of Psychology 30 (3): 196–208. doi:10.1207/S15328023TOP3003_01.

- Hersam, M. C., M. Luna, and G. Light. 2004. “Implementation of Interdisciplinary Group Learning and Peer Assessment in a Nanotechnology Engineering Course.” Journal of Engineering Education 93 (1): 49–57. doi:10.1002/j.2168-9830.2004.tb00787.x.

- Hunt, I., J. Power, K. Young, and A. Ryan. 2022. “Optimising Industry Learners’ Online Experiences – Lessons for a Post-Pandemic World.” European Journal of Engineering Education, 1–16. doi:10.1080/03043797.2022.2112553.

- Lew, M. D. N., W. A. M. Alwis, and H. G. Schmidt. 2010. “Accuracy of Students’ Self-Assessment and Their Beliefs About Its Utility.” Assessment & Evaluation in Higher Education 35 (2): 135–156. doi:10.1080/02602930802687737.

- Li, L. 2017. “The Role of Anonymity in Peer Assessment.” Assessment & Evaluation in Higher Education 42 (4): 645–656. doi:10.1080/02602938.2016.1174766.

- Li, H., Y. Xiong, C. V. Hunter, X. Guo, and R. Tywoniw. 2020. “Does Peer Assessment Promote Student Learning? A Meta-Analysis.” Assessment & Evaluation in Higher Education 45 (2): 193–211. doi:10.1080/02602938.2019.1620679.

- Li, H., Y. Xiong, X. Zang, M. L. Kornhaber, Y. Lyu, K. S. Chung, and H. K. Suen. 2016. “Peer Assessment in the Digital Age: A Meta-Analysis Comparing Peer and Teacher Ratings.” Assessment & Evaluation in Higher Education 41 (2): 245–264. doi:10.1080/02602938.2014.999746.

- Lin, S. S., E. Z.-F. Liu, and S.-M. Yuan. 2001. “Web-Based Peer Assessment: Feedback for Students with Various Thinking-Styles.” Journal of Computer Assisted Learning 17 (4): 420–432. doi:10.1046/j.0266-4909.2001.00198.x.

- Magin, D. J. 2001. “A Novel Technique for Comparing the Reliability of Multiple Peer Assessments with That of Single Teacher Assessments of Group Process Work.” Assessment & Evaluation in Higher Education 26 (2): 139–152. doi:10.1080/02602930020018971.

- Muljana, P. S., and T. Luo. 2019. “Factors Contributing to Student Retention in Online Learning and Recommended Strategies for Improvement: A Systematic Literature Review.” Journal of Information Technology Education: Research 18: 19–57. doi:10.28945/4182.

- Nicol, D. J., and C. Milligan. 2006. “Rethinking Technology-Supported Assessment in Terms of the Seven Principles of Good Feedback Practice.” In Innovative Assessment in Higher Education, edited by C. Bryan and K. Clegg, 84–98. London: Taylor and Francis Group Ltd.

- Panadero, E., and M. Alqassab. 2019. “An Empirical Review of Anonymity Effects in Peer Assessment, Peer Feedback, Peer Review, Peer Evaluation and Peer Grading.” Assessment & Evaluation in Higher Education 44 (8): 1253–1278. doi:10.1080/02602938.2019.1600186.

- Panadero, E., G. T. L. Brown, and J.-W. Strijbos. 2016. “The Future of Student Self-Assessment: A Review of Known Unknowns and Potential Directions.” Educational Psychology Review 28 (4): 803–830. doi:10.1007/s10648-015-9350-2.

- Panadero, E., and A. Jonsson. 2013. “The Use of Scoring Rubrics for Formative Assessment Purposes Revisited: A Review.” Educational Research Review 9: 129–144. doi:10.1016/j.edurev.2013.01.002.

- Panadero, E., A. Jonsson, and J. Botella. 2017. “Effects of Self-Assessment on Self-Regulated Learning and Self-Efficacy: Four Meta-Analyses.” Educational Research Review 22: 74–98. doi:10.1016/j.edurev.2017.08.004.

- Panigrahi, R., P. R. Srivastava, and D. Sharma. 2018. “Online Learning: Adoption, Continuance, and Learning Outcome—A Review of Literature.” International Journal of Information Management 43: 1–14. doi:10.1016/j.ijinfomgt.2018.05.005.

- Pellegrino, J. W. 2002. “Knowing What Students Know.” Issues in Science and Technology 19: 48–52.

- Pintrich, P. R., and A. Zusho. 2002. “Student Motivation and Self-Regulated Learning in the College Classroom.” In Higher Education: Handbook of Theory and Research (Vol. 17), edited by J. C. Smart and W. G. Tierney, 55–128. Dordrecht: Springer.

- Planas Lladó, A., L. F. Soley, R. M. Fraguell Sansbelló, G. A. Pujolras, J. P. Planella, N. Roura-Pascual, L. M. Moreno. 2014. “Student Perceptions of Peer Assessment: An Interdisciplinary Study.” Assessment & Evaluation in Higher Education 39 (5): 592–610. doi:10.1080/02602938.2013.860077.

- Power, J. R. 2021. “Enhancing Engineering Education Through the Integration of Open Science Principles: A Strategic Approach to Systematic Reviews.” Journal of Engineering Education 1 (6): 509–514. doi:10.1002/jee.20413.

- Power, J., P. Conway, C. Ó Gallchóir, A.-M. Young, and M. Hayes. 2022. “Illusions of Online Readiness: The Counter-Intuitive Impact of Rapid Immersion in Digital Learning due to COVID-19.” Irish Educational Studies, 1–18. doi:10.1080/03323315.2022.2061565.

- Power, J., R. Lynch, and O. McGarr. 2019. “Difficulty and Self-Efficacy: An Exploratory Study.” British Journal of Educational Technology 51: 281–296. doi:10.1111/bjet.12755.

- Rafiq, Y., and H. Fullerton. 1996. “Peer Assessment of Group Projects in Civil Engineering.” Assessment & Evaluation in Higher Education 21 (1): 69–81. doi:10.1080/0260293960210106.

- Reddy, Y. M., and H. Andrade. 2010. “A Review of Rubric Use in Higher Education.” Assessment & Evaluation in Higher Education 35 (4): 435–448. doi:10.1080/02602930902862859.

- Rico-Juan, J. R., A.-J. Gallego, and J. Calvo-Zaragoza. 2019. “Automatic Detection of Inconsistencies Between Numerical Scores and Textual Feedback in Peer-Assessment Processes with Machine Learning.” Computers & Education 140: 103609. doi:10.1016/j.compedu.2019.103609.

- Seifert, T., and O. Feliks. 2019. “Online Self-Assessment and Peer-Assessment as a Tool to Enhance Student-Teachers’ Assessment Skills.” Assessment & Evaluation in Higher Education 44 (2): 169–185. doi:10.1080/02602938.2018.1487023.

- Sung, Y.-T., C.-S. Lin, C.-L. Lee, and K.-E. Chang. 2003. “Evaluating Proposals for Experiments: An Application of Web-Based Self-Assessment and Peer-Assessment.” Teaching of Psychology 30 (4): 331–334. doi:10.1207/S15328023TOP3004_06.

- Topping, K. 1998. “Peer Assessment Between Students in Colleges and Universities.” Review of Educational Research 68 (3): 249–276. doi:10.3102/00346543068003249.

- Topping, K. 2010. “Methodological Quandaries in Studying Process and Outcomes in Peer Assessment.” Learning and Instruction 20 (4): 339–343. doi:10.1016/j.learninstruc.2009.08.003.

- van Zundert, M., D. Sluijsmans, and J. van Merriënboer. 2010. “Effective Peer Assessment Processes: Research Findings and Future Directions.” Learning and Instruction 20 (4): 270–279. doi:10.1016/j.learninstruc.2009.08.004.

- Walvoord, M. E., M. H. Hoefnagels, D. D. Gaffin, M. M. Chumchal, and D. A. Long. 2008. “An Analysis of Calibrated Peer Review (CPR) in a Science Lecture Classroom.” Journal of College Science Teaching 37: 66–72.

- Wiliam, D. 2011. “What is Assessment for Learning?” Studies in Educational Evaluation 37 (1): 3–14. doi:10.1016/j.stueduc.2011.03.001.

- Willey, K., and M. Freeman. 2006. “Improving Teamwork and Engagement: The Case for Self and Peer Assessment.” Australasian Journal of Engineering Education February: 2–19. doi:10.3316/aeipt.157674. https://search.informit.org/doi/10.3316aeipt.157674.

- Zimmerman, B. J., and D. H. Schunk. 2001. “Reflections on Theories of Self-Regulated Learning and Academic Achievement.” In Self-Regulated Learning and Academic Achievement: Theoretical Perspectives, edited by B. J. Zimmerman and D. H. Schunk, 289–307. New York.

- Zou, Y., C. D. Schunn, Y. Wang, and F. Zhang. 2018. “Student Attitudes That Predict Participation in Peer Assessment.” Assessment & Evaluation in Higher Education 43 (5): 800–811. doi:10.1080/02602938.2017.1409872.