ABSTRACT

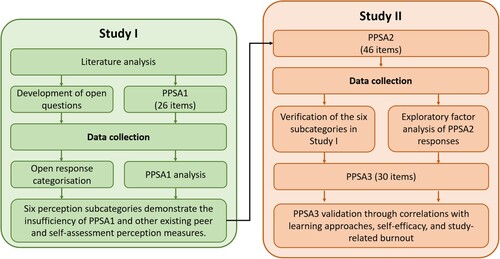

This study explores Finnish chemical engineering students’ peer and self-assessment perceptions. It is divided into two substudies. In Study I, we investigate students’ perceptions of peer and self-assessment based on their responses to open questions and Likert-items adopted from existing literature. We identify six perception dimensions from these open responses: Learning and reflection, Motivation and participation, Self-efficacy, Exhaustion and workload, Cynicism, and Self-criticism. In Study II, we develop a new questionnaire for engineering students’ peer and self-assessment perceptions, as pre-existing questionnaires fail to adequately capture these dimensions. Exploratory factor analysis is used to investigate how student perceptions form larger factors, and how they relate to theoretically connected pedagogical measures like study-related burnout, self-efficacy, and learning approaches. We find that negative perceptions correlate with study-related burnout and an unreflective approach to learning. Meanwhile, positive perceptions of self-efficacy and learning in peer and self-assessment are associated with the deep approach, underlining the importance of facilitating positive assessment experiences in students.

Introduction

The rapid pace of development in engineering has underlined the importance of training our graduates to become lifelong learners (Guest 2006). To be lifelong learners, students should be able to employ deep learning strategies and self-regulate their learning and motivation (Malan and Stegmann 2018). Consequently, engineering educators should adopt teaching and assessment strategies that facilitate both learning and developing such skills, including peer and self-assessment (Nieminen, Asikainen, and Rämö 2021; Wanner and Palmer 2018).

In general, any assessment decision includes two steps: identifying assessment criteria and determining how well the assessed work meets these criteria (Boud and Falchikov 1989). Self-assessment involves learners in judging their own learning, especially their learning outcomes or achievements (Boud and Falchikov 1989). Meanwhile, in peer assessment students make these evaluations of peers of similar status (Topping 1998). These general definitions encapsulate assessment practices including peer and self-review, peer and self-evaluation, peer and self-grading, and peer feedback (Panadero and Alqassab 2019). They do not, however, delineate the respective roles the teachers and students play in determining the assessment criteria or processes.

Peer and self-assessment have been employed in engineering education to assess tasks including oral presentations (Liow 2008; Nikolic, Stirling, and Ros 2018), written reports (Andersson and Weurlander 2019; Rafiq and Fullerton 1996; Van Hattum-Janssen, Pacheco, and Vasconcelos 2004), and mathematical problems (Boud and Holmes 1981; O’moore and Baldock 2007). While the validity and benefits of peer and self-assessment have largely been established by previous research (Ashenafi 2017; Falchikov and Boud 1989; Falchikov and Goldfinch 2000; Hadzhikoleva, Hadzhikolev, and Kasakliev 2019; Topping 1998; Wanner and Palmer 2018; Yan and Brown 2017), the literature on students’ peer and self-assessment perceptions is scarcer (Andrade and Du 2007; Asikainen et al. 2014; Li, Huang, and Cheng 2022; Planas Lladó et al. 2014; Wanner and Palmer 2018).

Students’ perceptions of peer and self-assessment depend on how the assessment is implemented (Planas Lladó et al. 2014) and might serve as important mediators for the benefits associated with these practices. Consequently, carefully constructed questionnaires of student perceptions are necessary to identify successful practices, as well as the specific advantages of a given practice. Unfortunately, engineering education researchers, and the peer and self-assessment research community in general, have typically utilised self-developed surveys to gauge student impressions with little statistical consideration for their reliability or the underlying conceptual structures (Andersson and Weurlander 2019; Boud and Holmes 1981; Chang and de Lemos Coutinho 2022; González De Sande and Godino-Llorente 2014; McGarr and Clifford 2013; Nikolic, Stirling, and Ros 2018; O’moore and Baldock 2007; Planas Lladó et al. 2014; Van Hattum-Janssen and Lourenço 2008; Willey and Gardner 2010). When such analyses have been conducted, the Cronbach’s α (Cronbach 1951) reliabilities of the emergent subscales have been as low as 0.28 (Moneypenny, Evans, and Kraha 2018), which raises concern over poor interrelatedness or an insufficient number of factor items (Taber 2018). Thus, in this study we examine chemical engineering students’ perceptions of peer and self-assessment to develop a carefully validated perception questionnaire. The article is divided into two substudies: Study I focuses on the qualitative investigation of students’ peer and self-assessment perceptions and Study II on the development of our Perceptions of peer and self-assessment (PPSA) questionnaire.

Study I – exploring students’ peer and self-assessment perceptions

In a recent review, Andrade (2019) called for more research on the cognitive and affective mechanisms of self-assessment. To answer this call and to provide groundwork for a carefully validated measure of student perceptions on peer and self-assessment, we investigated these perceptions in a chemical engineering course. Our key research questions in Study I are ‘What kinds of perceptions do students describe after participation in peer and self-assessment?’, and ‘Do these perceptions form broader categories with underlying commonalities?’

In general, research suggests that students wish to be involved in the assessment process and that their perceptions of peer assessment skew towards the positive (Andersson and Weurlander 2019; Bazvand and Rasooli 2022; Carvalho 2013; Mulder et al. 2014; Nikolic, Stirling, and Ros 2018; Vickerman 2009; Wen and Tsai 2006). For example, students maintain that participation in peer assessment increases their understanding of the assessment criteria (Boud and Holmes 1981; Ndoye 2017; Wanner and Palmer 2018), helps them learn better (Asikainen et al. 2014; Moneypenny, Evans, and Kraha 2018; Mulder et al. 2014; Mulder, Pearce, and Baik 2014; Partanen 2020), develops critical thinking skills (Andersson and Weurlander 2019), improves motivation (Hanrahan and Isaacs 2001; Planas Lladó et al. 2014; Van Hattum-Janssen and Lourenço 2008), and enhances communication skills and autonomy (Moneypenny, Evans, and Kraha 2018; Shen, Bai, and Xue 2020). However, students can also find peer assessment time consuming and intimidating (Hanrahan and Isaacs 2001; Moneypenny, Evans, and Kraha 2018; Sluijsmans et al. 2001) and concern over the validity and fairness of peer assessment is commonplace (Andersson and Weurlander 2019; Carvalho 2013; Kaufman and Schunn 2011; Patton 2012; Van Zundert, Sluijsmans, and van Merriënboer 2010; Wanner and Palmer 2018). Some further worry about the impartiality and competence of their peer-assessors (Carvalho 2013; Kaufman and Schunn 2011; Mulder, Pearce, and Baik 2014; Willey and Gardner 2010) or their own competence as assessors (Van Hattum-Janssen and Lourenço 2008). Accordingly, students can be more supportive of peer assessment as a formative rather than a summative exercise (Patton 2012), although some feel that they should not be forced to do the teacher’s job (Van Hattum-Janssen and Lourenço 2008; Willey and Gardner 2010).

Perceptions of peer assessment are impacted by its implementation (Planas Lladó et al. 2014), the field of study (McGarr and Clifford 2013), and the assessor’s gender (Moneypenny, Evans, and Kraha 2018; Wen and Tsai 2006). Participation in peer assessment seems to improve students’ attitudes towards it (Mulder et al. 2014; Van Zundert, Sluijsmans, and van Merriënboer 2010), plausibly due to improvements in assessment efficacy. However, some studies have obtained conflicting results, with students’ attitudes becoming more negative or more polarised after peer assessment (Mulder, Pearce, and Baik 2014; Planas Lladó et al. 2014). Kaufman and Schunn (2011) suggest that students’ perceptions of fairness are chiefly determined by whether peer assessment was perceived as useful and positive. Overall, reservations over fairness and validity may be mitigated with measures such as anonymity, individualised assessment of group work, inclusion of instructor assessment, and sufficient training and guidelines (Hanrahan and Isaacs 2001; Kaufman and Schunn 2011; Planas Lladó et al. 2014; Sridharan, Muttakin, and Mihret 2018; Vickerman 2009).

Though scarcer, the self-assessment literature similarly indicates that students tend to possess positive perceptions towards self-assessment (Andrade and Du 2007; Hanrahan and Isaacs 2001; Micán and Medina 2017; Wanner and Palmer 2018), especially if they are actively engaged in formative self-assessment (Andrade 2019). University students also feel that self-assessment helps them take responsibility for learning (Bourke 2014; Lopez and Kossack 2007; Ndoye 2017) and apply newfound skills (Murakami, Valvona, and Broudy 2012). According to students, it additionally guides evaluation and revision of work (Micán and Medina 2017), fosters self-regulated learning and reflection (Wang 2017), and facilitates critical thinking (Siow 2015; van Helvoort 2012). Furthermore, self-assessment practices can increase students’ intrinsic motivation by supporting autonomy (Gholami 2016). On the negative side, some experience self-assessment as frustrating and difficult when the evaluation criteria are not clear (Andrade and Du 2007; Hanrahan and Isaacs 2001). However, as in peer assessment, students’ attitudes tend to improve as they gain more self-assessment experience (Andrade and Du 2007).

To summarise, there appear to be many parallels between student perceptions of peer and self-assessment. Indeed, in some learning contexts, such as peer and self-assessment of contributions to a group task, it might not even make sense to separate the two. Consequently, past studies have often examined peer and self-assessment simultaneously (Hanrahan and Isaacs 2001; Ndoye 2017; Nikolic, Stirling, and Ros 2018; Van Hattum-Janssen and Lourenço 2008; Wanner and Palmer 2018; Willey and Gardner 2010). In Study I, we seek to understand how these literature trends are reflected in chemical engineering students’ perceptions of peer and self-assessment and whether these perceptions form broader underlying categories.

Methods

Course background

The peer and self-assessment perception data were collected from a bachelor’s level Chemical thermodynamics course at Aalto University in Finland in 2019. The course was an obligatory part of the chemical engineering curriculum. Although some courses at the university utilise peer and self-assessment, it is still a relatively novel and infrequently encountered teaching tool for the chemical engineering students.

Six sets of course problems were graded through peer and self-assessment, which constituted 10% of the course grade. The peer and self-assessment scheme shared elements with the Peer Assessment Learning Sessions proposed by O’moore and Baldock (2007): The problem deadlines were spread throughout the course and relayed to the students from the start. The actual problems were published at least a week before the deadline. During this time, students could participate in up to three walk-in study halls where they could work on the problems collaboratively, with teaching staff at hand to provide aid. Each problem set typically consisted of 2–3 problems with subtasks. These subtasks spanned the second and third knowledge categories and third through fifth cognitive processing categories of the Revised Bloom’s taxonomy (Anderson and Krathwohl 2001).

The students submitted their solutions digitally to the course’s online learning platform where they also performed the peer and self-assessment. Each student assessed their own solutions and those of two peers. All peer assessments were conducted anonymously. Clear assessment instructions, an assessment rubric, and model solutions were available to guide the assessment process. These were created by the course instructor, so the students did not participate in the shaping of the assessment criteria or practices. The peer and self-assessments combined grading with a formative component: In addition to numerical marks, students were obligated to provide written feedback and justifications for their evaluation in an open response field. For example, when students deducted marks, they were instructed to include an explanation of these deductions that was based on the assessment rubric. The open response fields were only automatically checked for whether they contained text or not. The content or quality of student feedback was not examined.

The final mark was calculated as an average of the three assessments students received. If the minimum and maximum marks differed substantially, a course instructor provided the ultimate verdict. Students received credit based both on the number and quality of their numerical assessments. The teacher also provided general feedback based on trends observed in the solutions.
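The aggregation rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `final_mark`, the mark scale, and the `max_spread` threshold are hypothetical, because the course description does not specify what counted as a substantial difference between the lowest and highest mark.

```python
from statistics import mean


def final_mark(self_mark, peer_marks, max_spread=2.0):
    """Average one self-assessment and two peer assessments.

    Returns the averaged mark and a flag telling whether the spread
    between the lowest and highest mark exceeds `max_spread`, in which
    case an instructor would decide the mark instead.
    `max_spread` is an assumed value, not taken from the course.
    """
    marks = [float(self_mark)] + [float(m) for m in peer_marks]
    if len(marks) != 3:
        raise ValueError("expected one self-assessment and two peer assessments")
    needs_instructor = (max(marks) - min(marks)) > max_spread
    return mean(marks), needs_instructor


# Hypothetical marks on an assumed 0-10 scale.
agreeing = final_mark(8, [7, 9])      # small spread, average is used
diverging = final_mark(10, [4, 9])    # large spread, flagged for review
```

In this sketch the flagged case still returns the average so that an instructor can see it, but the flag signals that the average should not be used as the final mark.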

Data collection

An outline of Study I is provided in Figure 1. The data were gathered from an online survey that the students responded to at the end of the course. It included two open questions and the first version of the Perceptions of peer and self-assessment (PPSA1) questionnaire. The questionnaire contained 26 items on a five-point Likert scale with higher values indicating more agreement. Participants received a small amount of credit, irrespective of whether they provided research permission. They were also informed that they could withdraw research permission at any time with no detrimental effects on their course performance. The course homepage contained detailed information on the research. Based on national guidelines from the Finnish Advisory Board on Research Integrity, the research did not need a prior ethical review statement from the ethical board as it did not utilise sensitive personal information, participants were legally competent adults, and the research did not risk the physical integrity or cause mental harm or threats of safety to the participants beyond everyday life.

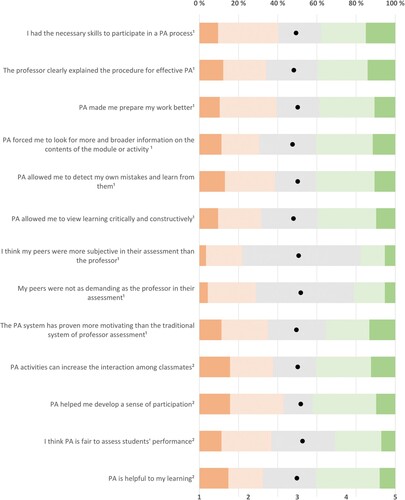

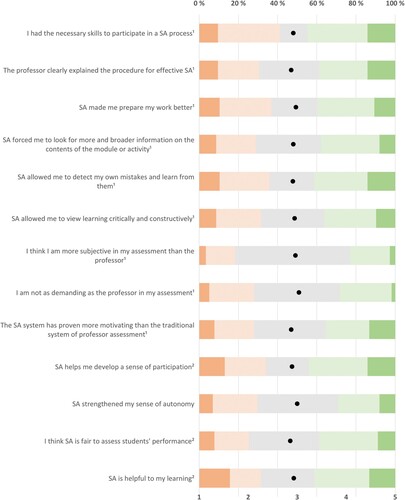

The PPSA1 items are shown in Figures 2 and 3. They were modified from existing peer assessment questionnaires (Planas Lladó et al. 2014; Wen and Tsai 2006), although we added one additional item regarding autonomy to the self-assessment part. The open questions included ‘What kind of thoughts and emotions did peer assessment and self-assessment evoke in you?’ and ‘How have peer and self-assessment affected your studying and learning?’

Figure 2. Percentages of different Likert-responses for perceptions of peer assessment (PA) in the PPSA1 and the mean values for each question. The response range at the bottom of the figure is from Strongly disagree (= 1) to Strongly agree (= 5). Meanwhile, the superscripts after the items on the left indicate their source with 1 = Planas Lladó et al. (2014) and 2 = Wen and Tsai (2006).

Figure 3. Percentages of different Likert-responses for perceptions of self-assessment (SA) in the PPSA1 and the mean values for each question. The response range at the bottom of the figure is from Strongly disagree (= 1) to Strongly agree (= 5). Meanwhile, the superscripts after the items on the left indicate their source with 1 = Planas Lladó et al. (2014) and 2 = Wen and Tsai (2006).

Of the 149 enrolled students, 134 (90%) provided research permission. Meanwhile, 114 (96%) of the 119 students who did not drop out during the course responded to the end-of-course survey. Of these, 103 provided open responses. Half (52%) of the participants were females. Their mean age was 21.4, with a range of 19–35 years. Most (78%) were in their second year.

Analysis

The data were analysed through abductive content analysis, which implies a shift in analysis between inductive and deductive approaches (Graneheim, Lindgren, and Lundman 2017). In our case, the categories emerged from the data but theory was also used in the categorisation process. All responses were independently read through by two of the authors to identify common characteristics. Whenever a characteristic was recognised, that text segment was coded, and the segments showing similar characteristics were grouped. One response could potentially include multiple characteristics.

Results

Students’ perceptions of peer and self-assessment in the open responses

Initially, students’ perceptions of peer and self-assessment were categorised as positive (35%), negative (34%), both positive and negative (27%), or neutral (4%). Neutral responses were typically short and noncommittal, such as ‘It was ok’ or ‘I have no opinion.’ Next, three subcategories of positive and negative experiences were identified: The first positive category was Learning and reflection, which included participants’ expressions of peer or self-assessment improving learning. The second positive category, Motivation and participation, consisted of expressions showing motivation, inspiration, and increased involvement. The third positive category, Self-efficacy, described expectations of capability and success in assessment. Meanwhile, the first negative category, Exhaustion and workload, contained perceptions of assessment taking too much time while being exhausting. The second negative category, Cynicism, comprised expressions of not seeing value in peer and self-assessment. The third negative category, Self-criticism, covered descriptions of assessment being difficult. Students in this category also doubted their abilities to assess or felt uncomfortable exposing their solutions to peers. Table 1 displays examples of both peer and self-assessment perceptions in all categories.

Table 1. Examples of characteristic responses in different open response categories for peer and self-assessment in the questionnaires.

Questionnaire results

Student responses to the 26 questions of the PPSA1 are summarised in Figures 2 and 3, which show the percentages of the five Likert categories from Strongly Disagree (= 1) to Strongly Agree (= 5) and the mean for each question as a black dot. In accordance with the qualitative results, student experiences of peer and self-assessment varied widely, with all the means in the middle quintile. Meanwhile, the Strongly Disagree and Strongly Agree categories typically constituted less than 15% of the responses. The Neither Agree nor Disagree category was particularly prevalent for the two questions related to the subjectivity and demandingness of the assessor for both peer and self-assessment. An exploratory factor analysis of the PPSA1 results failed to produce a reasonable factor structure for the questionnaire.

Discussion

In Study I, we investigated chemical engineering students’ perceptions of peer and self-assessment. These perceptions were initially categorised as either positive, negative, or neutral. Then, three subcategories were identified for both positive and negative perceptions.

The positive perception subcategories were Learning and reflection, Motivation and participation, and Self-efficacy. Student responses in these categories highlighted how peer and self-assessment increased motivation and understanding of the assessment criteria. Many also described how peer and self-assessment improved learning by facilitating reflection, critical thinking, and review. These positive perceptions closely echoed the ones found in the literature review at the beginning of this study. For example, civil engineering students in Van Hattum-Janssen and Lourenço’s (2008) study also felt that peer assessment increased their motivation to study. Meanwhile, electrical engineering students in Andersson and Weurlander’s (2019) study described how seeing others’ work benefitted their learning, similarly to the students in this study.

The negative perception subcategories were Exhaustion and workload, Cynicism, and Self-criticism. Perceptions in these categories included concerns over increased workload, their own or peers’ ability to assess, and the impartiality of both peer and self-assessment. These were also in close agreement with the existing literature on student peer and self-assessment perceptions. For instance, akin to the engineering students in Willey and Gardner’s (2010) study, several students questioned the competence of their peer-assessors. Meanwhile, others doubted their own capability to assess, in line with Van Hattum-Janssen and Lourenço’s (2008) results.

Even though there was evidence of all six identified perception subcategories in the literature, existing measures of students’ peer and self-assessment perceptions miss some of them. For example, the questionnaire by Wen and Tsai (2006) seemingly fails to tap into the self-efficacy and self-criticism aspects of student perceptions. On the other hand, both the interdisciplinary questionnaire by Planas Lladó et al. (2014) and our PPSA1 lack questions in the Exhaustion and workload category. There might also be some structural issues with these questionnaires, as a recent study (Moneypenny, Evans, and Kraha 2018) showed Cronbach’s α reliabilities below 0.4 for two of the four subscales in the questionnaire by Wen and Tsai (2006). Even though Cronbach’s α reliabilities are sample-specific (Taber 2018) and Wen and Tsai themselves report values above 0.6 for all subscales, this raises concerns over poor interrelatedness or an insufficient number of factor items in the questionnaire (Taber 2018). These shortcomings in existing questionnaires combined with the failure of PPSA1 to produce a reasonable factor structure necessitated an overhaul of the PPSA1 based on the perception subcategories.

Study II – developing the perceptions of peer and self-assessment questionnaire

In Study I, we found that current instruments failed to capture key aspects of chemical engineering students’ peer and self-assessment perceptions. These results underscored the need for a carefully constructed and validated student perception questionnaire that includes all six of the student perception subcategories identified in Study I. In Study II, we aim to develop such a questionnaire. We validate our questionnaire by looking at the connections between peer and self-assessment perceptions, learning approaches, self-efficacy, and study-related burnout.

Students’ predispositions towards a particular learning approach colour their perceptions of assessment (Struyven, Dochy, and Janssens 2005). Two learning approaches have dominated the literature: the deep approach and the unreflective approach (Entwistle 2009; Entwistle and McCune 2004; Lindblom-Ylänne, Parpala, and Postareff 2019). Students high in the deep approach tend to be intrinsically motivated to understand the topic, focus on critical thinking, and seek to connect new material with pre-existing knowledge. Meanwhile, students displaying the unreflective approach tend to utilise repetitive learning strategies to memorise key aspects of the course, which results in a fragmented knowledge base.

The connection between peer and self-assessment and the deep approach appears to be bidirectional in the sense that the mere participation in peer and self-assessment fosters a deeper approach (Lynch, McNamara, and Seery 2012; Nieminen, Asikainen, and Rämö 2021). Students applying the deep approach also tend to perceive the teaching-learning environment more positively, whereas students applying the unreflective approach hold more negative views (Parpala et al. 2010). This implies that positive perceptions of peer and self-assessment are more likely associated with the deep approach and negative perceptions with the unreflective approach. Students applying the deep approach also seem to benefit more from peer and self-assessment (Yang and Tsai 2010) and are likely better equipped to recognise the benefits associated with peer and self-assessment, which may further catalyse positive attitudes toward these activities.

In addition to impacting students’ learning approaches (Scouller 1998; Struyven, Dochy, and Janssens 2005), assessment practices like peer and self-assessment can impact students’ self-efficacy (Bandura 1997; Van Dinther, Dochy, and Segers 2011). Self-efficacy is defined as a person’s perception of their capability to organise and execute required actions to achieve a desired outcome (Bandura 1997). It is one of the most central non-intellective determinants of academic performance (e.g. Richardson, Abraham, and Bond 2012; Schneider and Preckel 2017). Nieminen, Asikainen, and Rämö (2021) demonstrated that summative self-assessment can promote self-efficacy in addition to facilitating a deep approach to learning. In general, self-assessment practices seem to improve particularly female students’ self-efficacy, although the effect size depends on the implementation (Panadero, Jonsson, and Botella 2017).

In peer and self-assessment, self-efficacy can be impacted through experiences of successful performance or through seeing others perform (Bandura 1997; Van Dinther, Dochy, and Segers 2011). Indeed, some students report that peer assessment improves learning because it lets them see alternative solutions, while others think seeing these solutions helps them better estimate their own level of understanding (Partanen 2020). Based on the results of Study I, students seem to possess a sense of peer and self-assessment efficacy, as exemplified by their perceptions of increased understanding of the assessment criteria and fears over their ability to evaluate others. This narrow sense of peer and self-assessment efficacy is likely correlated with the more general sense of self-efficacy.

As perceived workload is intimately connected to feelings of exhaustion, students’ perceptions of peer and self-assessment as time-consuming (Hanrahan and Isaacs 2001; Moneypenny, Evans, and Kraha 2018) likely contribute to study-related burnout. Inexperience, fear of hurting others, and the fear of being hurt can also make peer and self-assessment more stressful than traditional assessment, further contributing to feelings of burnout, especially for female students (Pope 2005). Study-related burnout is defined as a syndrome with three key components: emotional exhaustion that is the outcome of high perceived study demands, the development of a cynical and detached attitude towards one’s studies, and feelings of inadequacy (Salmela-Aro et al. 2009). These components bear a striking similarity to the negative subcategories of Exhaustion and workload, Cynicism, and Self-criticism identified from student perceptions in Study I. Thus, while peer and self-assessment can lead to improved performance, for students with negative perceptions it might also contribute to study-related burnout, especially for students applying an unreflective approach (Asikainen et al. 2020).

Based on the above literature review, we hypothesise first that negative experiences of peer and self-assessment are linked to the unreflective approach to learning and all three components of study-related burnout. Second, positive experiences should be connected to the deep approach to learning and high self-efficacy. We use these hypotheses to provide convergent validity for our questionnaire. Therefore, our central research questions in Study II are: ‘Do students’ peer and self-assessment perceptions form theoretically relevant factors?’ and ‘Do these factors relate to existing measures of learning approaches, self-efficacy, and study-related burnout in a reasonable way?’

Methods

Data collection

An outline of Study II can be found in . The data were collected from a bachelor’s level Chemical thermodynamics course at Aalto University in Finland in 2020. The data collection practices and the peer and self-assessment tasks were similar to the ones described in Study I. One notable difference between the years was that in 2020, each problem set was subdivided into smaller assessment tasks so that students did not have to assess problems they had not attempted.

The students responded to a pre-survey at the beginning of the course, and a post-survey at the end of the course. The pre-survey contained a 5-item measure for general self-efficacy utilising a five-point Likert scale, which originated from the Motivated Strategies for Learning Questionnaire (MSLQ; Pintrich 1991). The post-survey included a 12-item measure for learning approaches with a five-point Likert scale (Parpala and Lindblom-Ylänne 2012), and a 9-item study-related burnout measure with a six-point scale (Salmela-Aro et al. 2009; Salmela-Aro and Read 2017). It also included the second version of the Perceptions of peer and self-assessment questionnaire (PPSA2). The PPSA2 contained 46 items with a five-point Likert scale and was based on the six open response subcategories of Study I. It is shown in Appendix 1. In all measures, higher values on the Likert scale indicate more agreement. The post-survey also included the open questions from Study I, but the questions were asked separately for peer and self-assessment.

Of the 155 enrolled students, 145 (94%) provided research permission. Meanwhile, 124 (83%) of the 149 students who did not drop out during the course responded to both surveys. Of these, 68 and 48 provided open responses on their peer and self-assessment perceptions, respectively. Most (79%) of the participants were in their second year, and half (49%) were females. The mean age was 21.9, with a range of 19–43 years.

Analysis

The open response data from 2020 were abductively content analysed to verify the categorisation that had emerged in Study I. After this, the broader structures emerging from the PPSA2 were investigated with exploratory factor analyses (EFAs). The data included the 124 respondents from the 2020 post-survey. There were no missing values. The factorability of the data was investigated using Bartlett’s test for sphericity (Bartlett 1954) and the Kaiser-Meyer-Olkin (KMO) measure for sampling adequacy (Kaiser 1974). We used both parallel analysis and Velicer’s minimum average partial (VMAP) criterion to deduce the optimal number of factors to extract, as recommended by recent reviews on EFA (Gaskin and Happell 2014; Howard 2016; Watkins 2018). The final analysis utilised the principal axis method with direct oblimin (quartimin) rotation using polychoric correlations (Howard 2016; Watkins 2018).
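As a rough illustration of Bartlett’s test mentioned above, the following Python sketch computes the test statistic and its degrees of freedom from a correlation matrix using the standard formula χ² = −[(n − 1) − (2p + 5)/6] · ln|R|, with p(p − 1)/2 degrees of freedom. It is not the analysis code of this study: the function names and the small example matrix are hypothetical, and the study used polychoric correlations, which this sketch does not estimate. The p-value is left out because the Python standard library offers no chi-square distribution.

```python
import math


def determinant(matrix):
    """Determinant via Gaussian elimination with partial pivoting."""
    a = [list(map(float, row)) for row in matrix]
    n = len(a)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if a[pivot][col] == 0.0:
            return 0.0
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det                      # a row swap flips the sign
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def bartlett_sphericity(corr, n_obs):
    """Return (chi-square statistic, degrees of freedom) for Bartlett's test."""
    p = len(corr)
    det = determinant(corr)
    if det <= 0.0:
        raise ValueError("correlation matrix must be positive definite")
    chi_square = -((n_obs - 1) - (2 * p + 5) / 6) * math.log(det)
    dof = p * (p - 1) // 2
    return chi_square, dof


# Hypothetical 3-item correlation matrix; n_obs = 124 matches the sample size.
example_corr = [
    [1.0, 0.5, 0.4],
    [0.5, 1.0, 0.3],
    [0.4, 0.3, 1.0],
]
chi_square, dof = bartlett_sphericity(example_corr, n_obs=124)
```

A large statistic relative to the chi-square distribution with `dof` degrees of freedom indicates that the correlation matrix differs from an identity matrix, which is the precondition for factor analysis that the test is meant to check.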

After identifying the optimal number of factors, the number of scale items was reduced by removing items with weak loadings or with substantial loadings on multiple factors. The resulting scales were further refined based on the Cronbach’s α (Cronbach 1951) and McDonald’s ωt (McDonald 1999) values of the individual factors and the test as a whole. McDonald’s ωt indices were employed due to the multiple issues associated with the use of Cronbach’s α as a measure of reliability (Dunn, Baguley, and Brunsden 2014). A minimum of 3 items was required per factor, as recommended by Watkins (2018). Sum scales were formed based on the factor solutions, and Pearson correlations were used to explore the relationships between these scales, learning approaches, self-efficacy, and study-related burnout.
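As an illustration of the reliability step, the sketch below computes Cronbach’s α from raw item responses using the usual formula α = k/(k − 1) · (1 − Σ item variances / variance of the sum score), where k is the number of items. The function name and the toy responses are hypothetical and serve only to show the calculation; McDonald’s ωt requires a fitted factor model and is not covered here.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents.

    responses: list of rows, one per respondent, each row a list of
    item scores belonging to the same scale (at least two items).
    """
    k = len(responses[0])
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of the sum score across respondents.
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical responses of five students to a three-item scale
# on a five-point Likert scale (illustration only).
toy_responses = [
    [4, 5, 4],
    [2, 2, 3],
    [5, 4, 5],
    [3, 3, 2],
    [1, 2, 1],
]
print(f"alpha = {cronbach_alpha(toy_responses):.2f}")
```

Because α assumes that all items contribute equally to the scale, it can misestimate reliability when loadings differ, which is one of the reasons given above for reporting ωt alongside it.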

Results

PPSA2 factor analysis

The statistical indicators for the EFAs are summarised in Table 2. Prior to the EFA, the PPSA2 included 24 items for peer assessment and 22 for self-assessment. The statistically significant values (i.e. below p = 0.05) on Bartlett’s test, combined with KMO values higher than 0.7 in Table 2, demonstrate that the sample was suitable for factor analysis (Watkins 2018). Meanwhile, the parallel analysis and VMAP values show that the optimal number of factors was three. After the number of scale items was reduced, the final PPSA3 contained 15 items each for peer and self-assessment. The Cronbach’s α values were 0.88 for peer assessment and 0.90 for self-assessment, while the McDonald’s ωt indices were 0.92 and 0.94, respectively.

Table 2. The relevant statistical EFA indicators for the PPSA2 and the PPSA3.

The final three-factor solutions were similar for peer and self-assessment experiences: Of the original six PPSA2 subcategories, the positive experience subcategories of Learning and reflection, and Motivation and interest collapsed into a single factor in PPSA3, as did all three negative experience subcategories. The factors for peer assessment were named Peer assessment as supporting learning (PF1), Self-efficacy in peer assessment (PF2), and Negative experiences of peer assessment (PF3). Analogous names and labels were used for self-assessment. The factor loadings, factor means, standard deviations, and McDonald’s ωt reliabilities are reported in Table 3 for peer assessment and in Table 4 for self-assessment. For both peer and self-assessment perceptions, the emerging factors possessed ωt values above 0.82.

Table 3. The factor structure of the peer assessment items in PPSA3, together with the means, standard deviations, and McDonald’s omega values of the factors.

Table 4. The factor structure of the self-assessment items in PPSA3, together with the means, standard deviations, and McDonald’s omega values of the factors.

Correlations between PPSA3 factors and other pedagogical measures

Correlations between the PPSA3 factors and learning approaches, self-efficacy, and the three components of study-related burnout are reported in Table 5. As hypothesised, negative perceptions of both peer and self-assessment were positively associated with the unreflective learning approach. We also observed statistically significant connections between negative perceptions of both peer and self-assessment and the different components of study-related burnout, except for PF3 and exhaustion.

Table 5. Correlations between the peer and self-assessment factors in PPSA3 and the measures of learning approaches, study-related burnout, and general self-efficacy.

On the positive side, self-efficacies in both peer and self-assessment were linked to general self-efficacy, as expected. In addition, self-efficacy in self-assessment was positively correlated with the deep approach and negatively correlated with both the unreflective approach and the cynicism component of study-related burnout. Meanwhile, self-efficacy in peer assessment correlated positively with the deep approach but did not correlate with the unreflective approach. Almost no correlations were observed for the supporting learning factors: Only PF1 showed positive correlations with exhaustion and inadequacy. While statistically significant, all observed correlations were small.
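The remark that correlations can be statistically significant yet small follows from how the significance of a Pearson correlation depends on sample size. The sketch below is a rough illustration: it converts a correlation r into a t statistic with n − 2 degrees of freedom, t = r · sqrt((n − 2) / (1 − r²)), and inverts the relation to find the smallest |r| that reaches a given critical t. The critical value of about 1.98 (two-sided 5% level at 122 degrees of freedom) is our approximation, and we assume that all 124 respondents enter each correlation; the paper does not state which test was used.

```python
import math


def t_statistic(r, n):
    """t statistic for a Pearson correlation r based on n paired observations."""
    return r * math.sqrt((n - 2) / (1 - r ** 2))


def critical_r(n, t_crit):
    """Smallest |r| whose t statistic reaches t_crit with n observations."""
    df = n - 2
    return t_crit / math.sqrt(t_crit ** 2 + df)


# Assumed values: n = 124 respondents, t_crit of about 1.98 (approximation).
threshold = critical_r(n=124, t_crit=1.98)
print(f"smallest significant |r| is about {threshold:.2f}")
```

Under these assumptions the threshold lies a little below 0.2, so a correlation explaining only a few percent of shared variance can still pass the significance test. This is why effect size and significance are best read side by side.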

Discussion

In Study II, a new version of the PPSA (PPSA2) was developed based on the results from Study I. Exploratory factor analysis was then used to reduce this 46-item PPSA2 into the final 30-item PPSA3. Three similar factors emerged from the peer and self-assessment perception data: The first factor had to do with peer or self-assessment as supporting learning. It combined items in the open response categories of Learning and reflection and Motivation and participation from Study I. The second factor was analogous to the self-efficacy open response category for both peer and self-assessment. The third factor subsumed all items in the three negative experience open response subcategories. For both peer and self-assessment perceptions, the emerging factors showed ωt values greater than 0.82 and contained substantial loadings only from PPSA3 items that were a part of that factor, underscoring the reliability of the instrument. At the same time, the ωt values were not extremely close to one, which is good, as such values could indicate excessive redundancies between factor items. As expected from the open responses of Study I, a two-factor solution further collapsed the supporting learning and self-efficacy factors into a single positive experience factor, recovering the positive versus negative perception dichotomy that formed the basis of the open response categorisation in Study I.

To investigate the convergent validity of the PPSA3, we studied correlations between the identified factors and learning approaches, study-related burnout, and self-efficacy. As expected, based on the finding that self-assessment can promote self-efficacy (Nieminen, Asikainen, and Rämö 2021; Panadero, Jonsson, and Botella 2017), the self-efficacy factors in both peer and self-assessment were correlated with general self-efficacy. Our results also aligned with Parpala et al. (2010), in that students with the deep approach tended to perceive the teaching and learning environment more positively, as evidenced by the positive correlation between the deep approach and the self-efficacy factors for peer and self-assessment. At the same time, the unreflective approach was positively correlated with negative views of peer and self-assessment and negatively correlated with perceptions of self-efficacy in self-assessment.

Surprisingly, the self-assessment as supporting learning factor showed no correlations at all, while the corresponding peer assessment factor showed weak positive correlations with the measures of exhaustion and inadequacy. These correlations are small, so they might just be a statistical artefact caused by the relatively small sample size. However, many students praised peer and self-assessment as supporting learning while simultaneously berating them for the extra workload in their open responses in Study I. Perhaps this phenomenon is visible here, as it is the more work-intensive peer assessment that is connected to the study-related burnout variables.

Besides their connections to the unreflective approach, negative experiences of both peer and self-assessment were associated with the exhaustion, cynicism, and inadequacy facets of study-related burnout. These connections are in line with the hypotheses drawn from the literature, as students applying the unreflective approach tend to experience more study-related burnout symptoms than those applying the deep approach (Asikainen et al. 2020). The strongest correlations were observed between students’ negative experiences of self-assessment and the measures of inadequacy, underlining how negative perceptions of one’s ability to succeed in one’s studies are tied to negative perceptions of self-assessment. This stands to reason, given that the PPSA3 included items from the Self-criticism category of the PPSA2, and self-criticism in general is closely related to both depression and burnout (Hashem and Zeinoun 2020; López, Sanderman, and Schroevers 2018).

Limitations

There were several limitations in our study: First, the numbers of open responses on peer and self-assessment perceptions were relatively small. A second limitation is that we only used self-reports in this study, although this seems appropriate given our aim of exploring students’ perceptions. Third, our data consisted solely of students assessing course problems in one course at one university. Consequently, the PPSA needs to be further tested with other types of tasks, in other contexts, and with larger populations.

Conclusions and practical implications

This study explored Finnish chemical engineering students’ perceptions of peer and self-assessment. In the students’ view, peer and self-assessment possess both shared and unique advantages, with different students preferring and benefitting more from one or the other activity. Negative perceptions of peer and self-assessment seem to be generally linked to students’ doubts in their abilities, unreflective studying, and lack of well-being. Meanwhile, positive experiences of assessing one’s own or others’ work may enhance self-efficacy and well-being and facilitate the adoption of a deep approach to learning. Teachers should thus work towards creating positive assessment experiences for their students. Future studies should also carefully consider whether treating peer and self-assessment as two different but complementary assessment practices rather than a single entity makes sense in their learning context. Based on our literature review, peer assessment currently appears somewhat more prevalent in engineering education than self-assessment. Therefore, teachers should consider implementing self-assessment activities together with peer assessment to facilitate learning.

We developed the PPSA questionnaire based on previous literature and our qualitative investigation of students’ perceptions of peer and self-assessment in Study I. In Study II, we showed that the PPSA measures student peer and self-assessment perceptions in higher education chemical engineering courses both reliably and validly. Next, the PPSA should be tested with larger populations and in different learning contexts. However, due to the relatively large number of items within the PPSA, we recommend that teachers add a time estimate at the beginning of the questionnaire.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Notes on contributors

Lauri J. Partanen

Dr. Lauri J. Partanen is a university lecturer in physical chemistry at Aalto University in the School of Chemical Engineering. His research interests span computational and theoretical chemistry, particularly in the fields of quantum mechanics and thermodynamics, but his principal focus is on chemistry education, learning, and assessment.

Viivi Virtanen

Dr. Viivi Virtanen is a principal research scientist in the EDU-research unit at the Häme University of Applied Sciences in Finland. She also has a docentship/adjunct professorship at the University of Helsinki. Her research interests broadly include teaching and learning in higher education, with specific interests in well-being and in researcher education and careers, especially in STEM fields.

Henna Asikainen

Dr. Henna Asikainen is a senior university lecturer in university pedagogy at the University of Helsinki in the Centre for University Teaching and Learning and a research director in the WELLS-project. She has the title of docent in university pedagogy. Her research interests comprise student and teacher wellbeing and its relation to learning and assessment in learning.

Liisa Myyry

Dr. Liisa Myyry is a senior university lecturer in university pedagogy at the University of Helsinki in the Centre for University Teaching and Learning. She has the title of docent in social psychology. Her research interests are moral and adult development, personal values, digitalization of teaching and learning and assessment practices in higher education.

References

- Anderson, L. W., and D. R. Krathwohl. 2001. A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom's Taxonomy of Educational Objectives. New York, NY: Longman.

- Andersson, M., and M. Weurlander. 2019. “Peer Review of Laboratory Reports for Engineering Students.” European Journal of Engineering Education 44 (3): 417–428. https://doi.org/10.1080/03043797.2018.1538322.

- Andrade, H. L. 2019. “A Critical Review of Research on Student Self-Assessment.” Frontiers in Education 4 (87): 1–13.

- Andrade, H., and Y. Du. 2007. “Student Responses to Criteria-Referenced Self-Assessment.” Assessment & Evaluation in Higher Education 32 (2): 159–181. https://doi.org/10.1080/02602930600801928.

- Ashenafi, M. M. 2017. “Peer-assessment in Higher Education – Twenty-First Century Practices, Challenges and the Way Forward.” Assessment & Evaluation in Higher Education 42 (2): 226–251. https://doi.org/10.1080/02602938.2015.1100711.

- Asikainen, H., K. Salmela-Aro, A. Parpala, and N. Katajavuori. 2020. “Learning Profiles and Their Relation to Study-Related Burnout and Academic Achievement among University Students.” Learning and Individual Differences 78: 101781. https://doi.org/10.1016/j.lindif.2019.101781.

- Asikainen, H., V. Virtanen, L. Postareff, and P. Heino. 2014. “The Validity and Students’ Experiences of Peer Assessment in a Large Introductory Class of Gene Technology.” Studies in Educational Evaluation 43: 197–205. https://doi.org/10.1016/j.stueduc.2014.07.002.

- Bandura, A. 1997. Self-efficacy: The Exercise of Control. New York, NY: W H Freeman and Company.

- Bartlett, M. S. 1954. “A Note on the Multiplying Factors for Various Chi-Square Approximations.” Journal of the Royal Statistical Society, Series B 16: 296–298.

- Bazvand, A. D., and A. Rasooli. 2022. “Students’ Experiences of Fairness in Summative Assessment: A Study in a Higher Education Context.” Studies in Educational Evaluation 72: 101118. https://doi.org/10.1016/j.stueduc.2021.101118.

- Boud, D., and N. Falchikov. 1989. “Quantitative Studies of Student Self-Assessment in Higher Education: A Critical Analysis of Findings.” Higher Education 18 (5): 529–549. https://doi.org/10.1007/BF00138746.

- Boud, D. J., and W. H. Holmes. 1981. “Self and Peer Marking in an Undergraduate Engineering Course.” IEEE Transactions on Education 24 (4): 267–274. https://doi.org/10.1109/TE.1981.4321508.

- Bourke, R. 2014. “Self-assessment in Professional Programmes within Tertiary Institutions.” Teaching in Higher Education 19 (8): 908–918. https://doi.org/10.1080/13562517.2014.934353.

- Carvalho, A. 2013. “Students’ Perceptions of Fairness in Peer Assessment: Evidence from a Problem-Based Learning Course.” Teaching in Higher Education 18 (5): 491–505. https://doi.org/10.1080/13562517.2012.753051.

- Chang, K., and L. de Lemos Coutinho. 2022. “Administering Homework Self-Grading for an Engineering Course.” College Teaching 70 (3): 387–395. https://doi.org/10.1080/87567555.2021.1957666.

- Cronbach, L. J. 1951. “Coefficient Alpha and the Internal Structure of Tests.” Psychometrika 16 (3): 297–334. https://doi.org/10.1007/BF02310555.

- Dunn, T. J., T. Baguley, and V. Brunsden. 2014. “From Alpha to Omega: A Practical Solution to the Pervasive Problem of Internal Consistency Estimation.” British Journal of Psychology 105 (3): 399–412. https://doi.org/10.1111/bjop.12046.

- Entwistle, N. 2009. Teaching for Understanding at University: Deep Approaches and Distinctive Ways of Thinking: Universities into the 21st Century. Basingstoke, Hampshire: Palgrave Macmillan.

- Entwistle, N., and V. McCune. 2004. “The Conceptual Bases of Study Strategy Inventories.” Educational Psychology Review 16 (4): 325–345. https://doi.org/10.1007/s10648-004-0003-0.

- Falchikov, N., and D. Boud. 1989. “Student Self-Assessment in Higher Education: A Meta-Analysis.” Review of Educational Research 59 (4): 395–430. https://doi.org/10.3102/00346543059004395.

- Falchikov, N., and J. Goldfinch. 2000. “Student Peer Assessment in Higher Education: A Meta-Analysis Comparing Peer and Teacher Marks.” Review of Educational Research 70 (3): 287–322. https://doi.org/10.3102/00346543070003287.

- Gaskin, C. J., and B. Happell. 2014. “On Exploratory Factor Analysis: A Review of Recent Evidence, an Assessment of Current Practice, and Recommendations for Future use.” International Journal of Nursing Studies 51 (3): 511–521. https://doi.org/10.1016/j.ijnurstu.2013.10.005.

- Gholami, H. 2016. “Self Assessment and Learner Autonomy.” Theory and Practice in Language Studies 6 (1): 46–51. https://doi.org/10.17507/tpls.0601.06.

- González De Sande, J. C., and J. I. Godino-Llorente. 2014. “Peer Assessment and Self-Assessment: Effective Learning Tools in Higher Education.” International Journal of Engineering Education 30 (3): 711–721.

- Graneheim, U. H., B.-M. Lindgren, and B. Lundman. 2017. “Methodological Challenges in Qualitative Content Analysis: A Discussion Paper.” Nurse Education Today 56: 29–34. https://doi.org/10.1016/j.nedt.2017.06.002.

- Guest, G. 2006. “Lifelong Learning for Engineers: A Global Perspective.” European Journal of Engineering Education 31 (3): 273–281. https://doi.org/10.1080/03043790600644396.

- Hadzhikoleva, S., E. Hadzhikolev, and N. Kasakliev. 2019. “Using Peer Assessment to Enhance Higher Order Thinking Skills.” TEM Journal 8 (1): 242–247.

- Hanrahan, S. J., and G. Isaacs. 2001. “Assessing Self- and Peer-Assessment: The Students’ Views.” Higher Education Research & Development 20 (1): 53–70. https://doi.org/10.1080/07294360123776.

- Hashem, Z., and P. Zeinoun. 2020. “Self-compassion Explains Less Burnout among Healthcare Professionals.” Mindfulness 11: 2542–2551. https://doi.org/10.1007/s12671-020-01469-5.

- Howard, M. C. 2016. “A Review of Exploratory Factor Analysis Decisions and Overview of Current Practices: What We are Doing and how Can We Improve?” International Journal of Human-Computer Interaction 32 (1): 51–62. https://doi.org/10.1080/10447318.2015.1087664.

- Kaiser, H. F. 1974. “An Index of Factorial Simplicity.” Psychometrika 39 (1): 31–36. https://doi.org/10.1007/BF02291575.

- Kaufman, J. H., and C. D. Schunn. 2011. “Students’ Perceptions about Peer Assessment for Writing: Their Origin and Impact on Revision Work.” Instructional Science 39: 387–406. https://doi.org/10.1007/s11251-010-9133-6.

- Li, J., J. Huang, and S. Cheng. 2022. “The Reliability, Effectiveness, and Benefits of Peer Assessment in College EFL Speaking Classrooms: Student and Teacher Perspectives.” Studies in Educational Evaluation 72: 101120. https://doi.org/10.1016/j.stueduc.2021.101120.

- Lindblom-Ylänne, S., A. Parpala, and L. Postareff. 2019. “What Constitutes the Surface Approach to Learning in the Light of New Empirical Evidence?” Studies in Higher Education 44 (12): 2183–2195. https://doi.org/10.1080/03075079.2018.1482267.

- Liow, J.-L. 2008. “Peer Assessment in Thesis Oral Presentation.” European Journal of Engineering Education 33 (5–6): 525–537.

- Lopez, R., and S. Kossack. 2007. “Effects of Recurring Use of Self-Assessment in University Courses.” The International Journal of Learning 14 (4): 203–216.

- López, A., R. Sanderman, and M. J. Schroevers. 2018. “A Close Examination of the Relationship between Self-Compassion and Depressive Symptoms.” Mindfulness 9 (5): 1470–1478. https://doi.org/10.1007/s12671-018-0891-6.

- Lynch, R., P. M. McNamara, and N. Seery. 2012. “Promoting Deep Learning in a Teacher Education Programme through Self- and Peer-Assessment and Feedback.” European Journal of Teacher Education 35 (2): 179–197. https://doi.org/10.1080/02619768.2011.643396.

- Malan, M., and N. Stegmann. 2018. “Accounting Students’ Experiences of Peer Assessment: A Tool to Develop Lifelong Learning.” South African Journal of Accounting Research 32 (2–3): 205–224. https://doi.org/10.1080/10291954.2018.1487503.

- McDonald, R. P. 1999. Test Theory: A Unified Treatment. Mahwah, NJ: Lawrence Erlbaum.

- McGarr, O., and A. M. Clifford. 2013. “‘Just Enough to Make You Take it Seriously’: Exploring Students’ Attitudes towards Peer Assessment.” Higher Education 65: 677–693. https://doi.org/10.1007/s10734-012-9570-z.

- Micán, D. A., and C. L. Medina. 2017. “Boosting Vocabulary Learning through Self-Assessment in an English Language Teaching Context.” Assessment & Evaluation in Higher Education 42 (3): 398–414. https://doi.org/10.1080/02602938.2015.1118433.

- Moneypenny, D. B., M. Evans, and A. Kraha. 2018. “Student Perceptions of and Attitudes toward Peer Review.” American Journal of Distance Education 32 (4): 236–247. https://doi.org/10.1080/08923647.2018.1509425.

- Mulder, R., C. Baik, R. Naylor, and J. Pearce. 2014. “How Does Student Peer Review Influence Perceptions, Engagement and Academic Outcomes? A Case Study.” Assessment & Evaluation in Higher Education 39 (6): 657–677. https://doi.org/10.1080/02602938.2013.860421.

- Mulder, R. A., J. M. Pearce, and C. Baik. 2014. “Peer Review in Higher Education: Student Perceptions Before and After Participation.” Active Learning in Higher Education 15 (2): 157–171. https://doi.org/10.1177/1469787414527391.

- Murakami, C., C. Valvona, and D. Broudy. 2012. “Turning Apathy into Activeness in Oral Communication Classes: Regular Self- and Peer-Assessment in a TBLT Programme.” System 40 (3): 407–420. https://doi.org/10.1016/j.system.2012.07.003.

- Ndoye, A. 2017. “Peer/Self Assessment and Student Learning.” International Journal of Teaching and Learning in Higher Education 29 (2): 255–269.

- Nieminen, J. H., H. Asikainen, and J. Rämö. 2021. “Promoting Deep Approach to Learning and Self-Efficacy by Changing the Purpose of Self-Assessment: A Comparison of Summative and Formative Models.” Studies in Higher Education 46 (7): 1296–1311. https://doi.org/10.1080/03075079.2019.1688282.

- Nikolic, S., D. Stirling, and M. Ros. 2018. “Formative Assessment to Develop Oral Communication Competency Using YouTube: Self- and Peer Assessment in Engineering.” European Journal of Engineering Education 43 (4): 538–551. https://doi.org/10.1080/03043797.2017.1298569.

- O’Moore, L. M., and T. E. Baldock. 2007. “Peer Assessment Learning Sessions (PALS): An Innovative Feedback Technique for Large Engineering Classes.” European Journal of Engineering Education 32 (1): 43–55. https://doi.org/10.1080/03043790601055576.

- Panadero, E., and M. Alqassab. 2019. “An Empirical Review of Anonymity Effects in Peer Assessment, Peer Feedback, Peer Review, Peer Evaluation and Peer Grading.” Assessment & Evaluation in Higher Education 44 (8): 1253–1278. https://doi.org/10.1080/02602938.2019.1600186.

- Panadero, E., A. Jonsson, and J. Botella. 2017. “Effects of Self-Assessment on Self-Regulated Learning and Self-Efficacy: Four Meta-Analyses.” Educational Research Review 22: 74–98. https://doi.org/10.1016/j.edurev.2017.08.004.

- Parpala, A., and S. Lindblom-Ylänne. 2012. “Using a Research Instrument for Developing Quality at the University.” Quality in Higher Education 18 (3): 313–328. https://doi.org/10.1080/13538322.2012.733493.

- Parpala, A., S. Lindblom-Ylänne, E. Komulainen, T. Litmanen, and L. Hirsto. 2010. “Students’ Approaches to Learning and Their Experiences of the Teaching-Learning Environment in Different Disciplines.” British Journal of Educational Psychology 80 (2): 269–282. https://doi.org/10.1348/000709909X476946.

- Partanen, L. 2020. “How Student-Centred Teaching in Quantum Chemistry Affects Students’ Experiences of Learning and Motivation – A Self-Determination Theory Perspective.” Chemistry Education Research and Practice 21: 79–94. https://doi.org/10.1039/C9RP00036D.

- Patton, C. 2012. “‘Some Kind of Weird, Evil Experiment’: Student Perceptions of Peer Assessment.” Assessment & Evaluation in Higher Education 37 (6): 719–731. https://doi.org/10.1080/02602938.2011.563281.

- Pintrich, P. R. 1991. “A Manual for the Use of the Motivated Strategies for Learning Questionnaire (MSLQ).”

- Planas Lladó, A., L. Feliu Soley, R. M. Fraguell Sansbelló, G. Arbat Pujolras, J. Pujol Planella, N. Roura-Pascual, J. J. Suñol Martínez, and L. Montoro Moreno. 2014. “Student Perceptions of Peer Assessment: An Interdisciplinary Study.” Assessment & Evaluation in Higher Education 39 (5): 592–610. https://doi.org/10.1080/02602938.2013.860077.

- Pope, N. K. L. 2005. “The Impact of Stress in Self- and Peer Assessment.” Assessment & Evaluation in Higher Education 30 (1): 51–63. https://doi.org/10.1080/0260293042003243896.

- Rafiq, Y., and H. Fullerton. 1996. “Peer Assessment of Group Projects in Civil Engineering.” Assessment & Evaluation in Higher Education 21 (1): 69–81. https://doi.org/10.1080/0260293960210106.

- Richardson, M., C. Abraham, and R. Bond. 2012. “Psychological Correlates of University Students’ Academic Performance: A Systematic Review and Meta-Analysis.” Psychological Bulletin 138 (2): 353–387. https://doi.org/10.1037/a0026838.

- Salmela-Aro, K., N. Kiuru, E. Leskinen, and J.-E. Nurmi. 2009. “School Burnout Inventory (SBI) Reliability and Validity.” European Journal of Psychological Assessment 25 (1): 48–57. https://doi.org/10.1027/1015-5759.25.1.48.

- Salmela-Aro, K., and S. Read. 2017. “Study Engagement and Burnout Profiles among Finnish Higher Education Students.” Burnout Research 7: 21–28. https://doi.org/10.1016/j.burn.2017.11.001.

- Schneider, M., and F. Preckel. 2017. “Variables Associated with Achievement in Higher Education: A Systematic Review of Meta-Analyses.” Psychological Bulletin 143 (6): 565–600. https://doi.org/10.1037/bul0000098.

- Scouller, K. 1998. “The Influence of Assessment Method on Students’ Learning Approaches: Multiple Choice Question Examination versus Assignment Essay.” Higher Education 35: 453–472. https://doi.org/10.1023/A:1003196224280.

- Shen, B., B. Bai, and W. Xue. 2020. “The Effects of Peer Assessment on Learner Autonomy: An Empirical Study in a Chinese College English Writing Class.” Studies in Educational Evaluation 64: 100821. https://doi.org/10.1016/j.stueduc.2019.100821.

- Siow, L.-F. 2015. “Students’ Perceptions on Self- and Peer-Assessment in Enhancing Learning Experience.” Malaysian Online Journal of Educational Sciences 3 (2): 21–35.

- Sluijsmans, D. M. A., G. Moerkerke, J. J. G. Van Merrienboer, and F. J. R. Dochy. 2001. “Peer Assessment in Problem Based Learning.” Studies in Educational Evaluation 27 (2): 153–173. https://doi.org/10.1016/S0191-491X(01)00019-0.

- Sridharan, B., M. B. Muttakin, and D. G. Mihret. 2018. “Students’ Perceptions of Peer Assessment Effectiveness: An Explorative Study.” Accounting Education 27 (3): 259–285. https://doi.org/10.1080/09639284.2018.1476894.

- Struyven, K., F. Dochy, and S. Janssens. 2005. “Students’ Perceptions about Evaluation and Assessment in Higher Education: A Review.” Assessment & Evaluation in Higher Education 30 (4): 325–341. https://doi.org/10.1080/02602930500099102.

- Taber, K. S. 2018. “The Use of Cronbach’s Alpha when Developing and Reporting Research Instruments in Science Education.” Research in Science Education 48: 1273–1296. https://doi.org/10.1007/s11165-016-9602-2.

- Topping, K. 1998. “Peer Assessment between Students in Colleges and Universities.” Review of Educational Research 68 (3): 249–276. https://doi.org/10.3102/00346543068003249.

- Van Dinther, M., F. Dochy, and M. Segers. 2011. “Factors Affecting Students’ Self-Efficacy in Higher Education.” Educational Research Review 6 (2): 95–108. https://doi.org/10.1016/j.edurev.2010.10.003.

- Van Hattum-Janssen, N., and J. M. Lourenço. 2008. “Peer and Self-Assessment for First-Year Students as a Tool to Improve Learning.” Journal of Professional Issues in Engineering Education and Practice 134 (4): 346–352. https://doi.org/10.1061/(ASCE)1052-3928(2008)134:4(346).

- Van Hattum-Janssen, N., J. A. Pacheco, and R. M. Vasconcelos. 2004. “The Accuracy of Student Grading in First-Year Engineering Courses.” European Journal of Engineering Education 29 (2): 291–298. https://doi.org/10.1080/0304379032000157259.

- van Helvoort, A. A. J. 2012. “How Adult Students in Information Studies Use a Scoring Rubric for the Development of Their Information Literacy Skills.” The Journal of Academic Librarianship 38 (3): 165–171. https://doi.org/10.1016/j.acalib.2012.03.016.

- Van Zundert, M., D. Sluijsmans, and J. van Merriënboer. 2010. “Effective Peer Assessment Processes: Research Findings and Future Directions.” Learning and Instruction 20 (4): 270–279. https://doi.org/10.1016/j.learninstruc.2009.08.004.

- Vickerman, P. 2009. “Student Perspectives on Formative Peer Assessment: An Attempt to Deepen Learning?” Assessment & Evaluation in Higher Education 34 (2): 221–230. https://doi.org/10.1080/02602930801955986.

- Wang, W. 2017. “Using Rubrics in Student Self-Assessment: Student Perceptions in the English as a Foreign Language Writing Context.” Assessment & Evaluation in Higher Education 42 (8): 1280–1292. https://doi.org/10.1080/02602938.2016.1261993.

- Wanner, T., and E. Palmer. 2018. “Formative Self-and Peer Assessment for Improved Student Learning: The Crucial Factors of Design, Teacher Participation and Feedback.” Assessment & Evaluation in Higher Education 43 (7): 1032–1047. https://doi.org/10.1080/02602938.2018.1427698.

- Watkins, M. W. 2018. “Exploratory Factor Analysis: A Guide to Best Practice.” Journal of Black Psychology 44 (3): 219–246. https://doi.org/10.1177/0095798418771807.

- Wen, M. L., and C.-C. Tsai. 2006. “University Students’ Perceptions of and Attitudes toward (Online) Peer Assessment.” Higher Education 51: 27–44. https://doi.org/10.1007/s10734-004-6375-8.

- Willey, K., and A. Gardner. 2010. “Investigating the Capacity of Self and Peer Assessment Activities to Engage Students and Promote Learning.” European Journal of Engineering Education 35 (4): 429–443. https://doi.org/10.1080/03043797.2010.490577.

- Yan, Z., and G. T. L. Brown. 2017. “A Cyclical Self-Assessment Process: Towards a Model of how Students Engage in Self-Assessment.” Assessment & Evaluation in Higher Education 42 (8): 1247–1262. https://doi.org/10.1080/02602938.2016.1260091.

- Yang, Y.-F., and C.-C. Tsai. 2010. “Conceptions of and Approaches to Learning through Online Peer Assessment.” Learning and Instruction 20 (1): 72–83. https://doi.org/10.1016/j.learninstruc.2009.01.003.