ABSTRACT

In this literature review, we survey student naïve ideas (frequently referred to as ‘misconceptions’) that plausibly relate, at least in part, to difficulty in understanding probability. We collected diverse naïve ideas from a range of topics in physics: Non-linear Dynamics; Cosmology; Thermal Physics; Atomic, Nuclear, and Particle Physics; Elementary Particle Physics; Quantum Physics; and Measurements and Uncertainties. With rare exceptions, the literature treats these naïve ideas as topic-specific. For example, the idea that ‘only one measurement is needed because successive measurements will always yield the same result’ is treated as a misconception in Measurements and Uncertainties. In our review, however, we raise the possibility that these diverse naïve ideas have something in common: they are enabled, to varying degrees, by the stance that ‘random is incompatible with predictions and laws’ that researchers in mathematics education have documented. This is important, as it may inform instruction. Namely, it may be more effective to treat this underlying cause of student difficulty than to treat the individual naïve ideas themselves.

Introduction

To date, the field of physics education research has published findings of the existence and resilience of thousands of students’ naïve ideas spanning virtually every topic of physics. (Duit, Citation2009; Flores et al., Citation2014; McDermott & Redish, Citation1999) Naïve ideas tend to be described within a single physics topic, without discussion of similar ideas in other topics. This is likely due in part to how physics courses themselves are structured. Due to the breadth of material covered by the field of physics and regarded as important by physicists and physics teachers alike, many students take several physics classes, with content separated between the classes. For example, at the college level, mechanics is typically taught in a different course than electromagnetism. Within a given course, content is further segmented into topics such as kinematics, dynamics, energy, momentum, torque, etc. In this literature review, we present publications on naïve ideas from diverse physics topics that seem to share a common feature: they plausibly stem, at least in part, from student difficulty in understanding the concept of probability. The notion that these naïve ideas might have a common origin is relevant for educators. Namely, if it turns out that this is indeed the case, it would imply that effective instruction for students with these naïve ideas would involve focusing on the underlying cause, instead of trying to treat many symptoms as though they are unrelated.

Literature, particularly from mathematics education, has documented the existence of myriad student difficulties in understanding probability and randomness (e.g. Barragués et al., Citation2006; Batanero et al., Citation2016; Buechter et al., Citation2005; Döhrmann, Citation2005; Green, Citation1983; Kahneman & Tversky, Citation1973; Shaughnessy & Ciancetta, Citation2002; Shaughnessy & Zawojewski, Citation1999; D. Stavrou et al., Citation2003). Perhaps because of the emphasis given in the classroom to coins, dice, and cards for teaching probability, students often assume that every outcome must be equally probable (1 out of 52 for drawing a particular card, for example) for a process to qualify as ‘random’. (Buechter et al., Citation2005) A well-known naïve idea is the gambler’s fallacy, the idea that, for example, if a roulette wheel has landed on black several times in a row, then it is more likely to land on red with the next spin. (Buechter et al., Citation2005; Green, Citation1983) The opposite naïve idea has also been documented: when a streak of good luck is broken, people search for an explanation (instead of understanding that it is regression to the mean). (Kahneman & Tversky, Citation1973) These two naïve ideas suggest that people hesitate to accept the idea that things can actually be random. In the case of the roulette wheel, for example, it is as though there is a belief in an invisible but intentional agent at work ensuring that the next spin will be red to ‘compensate’ for all the prior black results. Consistent with this is the idea that things only appear random because there is insufficient information to know for sure what will happen. (Barragués et al., Citation2006; Döhrmann, Citation2005; D. Stavrou et al., Citation2003) Plausibly indicating this view, when asked what would be necessary to be able to predict where a ball travelling down a plinko board (a vertically hanging board with many pegs protruding from it) would land at the bottom, few students described the approach of repeating the experiment many times to see which outcome is the most likely. Rather, students attempted to remove the aspect of randomness by saying that one should get more details, for example, about the material that the ball and pegs are made out of.Footnote1 (Barragués et al., Citation2006) The idea that a prediction cannot be made from situations where randomness is involved has been described for other situations as well (e.g. Stavrou & Duit, Citation2014).
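The approach that few students described, repeating the experiment many times to see which outcome is most likely, can be illustrated with a minimal simulation sketch. The board used here is an assumption made purely for illustration (ten rows of pegs, with an equal chance of bouncing left or right at each peg) and is not taken from the cited study; the names `drop_ball` and `simulate` are likewise hypothetical.

```python
import random
from collections import Counter


def drop_ball(rows, rng):
    """Return the bin a ball lands in: the number of rightward bounces.

    Assumption: at every peg the ball bounces left or right with
    equal probability, independently of earlier bounces.
    """
    return sum(rng.random() < 0.5 for _ in range(rows))


def simulate(n_balls=10_000, rows=10, seed=0):
    """Drop n_balls balls and count how many land in each bin (0..rows)."""
    rng = random.Random(seed)
    return Counter(drop_ball(rows, rng) for _ in range(n_balls))


counts = simulate()
most_likely_bin = max(counts, key=counts.get)

# No single drop can be predicted, but the distribution over many drops can.
for bin_index in range(11):
    print(f"bin {bin_index:2d}: {counts[bin_index]:5d} balls")
print("most frequent bin:", most_likely_bin)
```

Under these assumptions, the landing bin of any one ball remains unpredictable, while the histogram over many balls concentrates around the central bins, which is the sense in which a random process can still support a prediction.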

It seems that for many students, ‘random’ is not compatible with ‘lawful/predictable’ (Buechter et al., Citation2005; Gougis et al., Citation2017; Stavrou et al., Citation2003) in the sense that things can either be predicted in their entirety with the governing laws or that there are no rules and nothing is certain, making predictions useless. However, much of physics concerns making predictions about phenomena that are intrinsically uncertain but nevertheless are governed by laws. In a sealed gas, for example, the force an individual molecule exerts on a wall at a given moment is considered random. Nevertheless, we predict that the pressure of the gas will increase as the container is compressed, in accordance with the ideal gas law. This literature review concerns itself with physics topics in which ideas about randomness and probability are relevant. We find it plausible that many of the seemingly disparate naïve ideas discussed in this paper are related to each other, in that they stem from this stance of ‘random is incompatible with predictable’. Despite this common feature, of the 70 articles reviewed (15 in Non-linear Systems, 2 in Cosmology, 34 in Measurements and Uncertainties, 9 in Thermal Physics, and 10 from the ‘Structure of Matter’ heading) only 9 of them mentioned student difficulties in multiple fields, and then only briefly.

Many of the naïve ideas that we review here (for example, that students often conflate heat and temperature) are likely to be familiar to the reader, and so we will not provide unnecessarily detailed descriptions of the ideas. We do, of course, provide references to the works we cite so that interested readers can find fuller descriptions of the naïve ideas and the studies that documented them. We will, however, interpret these ideas from the perspective that they may in part be the result of the stance that ‘random is incompatible with predictable’. To assist our interpretation, we will first briefly discuss the ontologies framework, which lends credence to our hypothesis that the naïve ideas we review, despite being in disparate physics topics, could be due to a common cause.

The ontologies framework

In describing misconceptionsFootnote2 and conceptual change, Chi discussed how misconceptions can vary in how resilient they are. A ‘false belief’, for example, that the heart oxygenates blood, can often be easily corrected just by telling students that the lungs oxygenate blood. Other times, however, students will have ‘an organized collection of individual beliefs’ that, despite being normatively incorrect, are nevertheless consistent with each other. (Chi, Citation2013) At times, these collections of beliefs can be expansive and coherent to the extent that they can be referred to as ‘theories’. (e.g. Carey, Citation2009; Lattery, Citation2016; Strike & Posner, Citation1982; Vosniadou & Skopeliti, Citation2014) For example, students learning mechanics are often consistent in their views that a moving object carries a force propelling it in the direction of motion, which is comparable in some ways to the ‘impetus theory’ of Galileo. (e.g. Lattery, Citation2016) Chi argues that the misconceptions that are most resistant to correction are those resulting from learners assigning the relevant physics concept into the wrong ontology, that is, the wrong mental category into which an individual divides the world. (Keil, Citation1979) Two examples of ontologies are ‘entities’ (with which people categorise things like sharks and water) and ‘process’ (like a baseball game or evolution). A ‘shark’ is not only a distinctly different thing than a ‘baseball game’; it is a distinctly different type of thing. Evidence for this comes from the different predicates used to describe entities in the two ontologies. For example, whereas a ‘process’ has the attribute of ‘having certain duration’, ‘entities’ do not: although a ‘baseball game’ can be ‘two hours long’, it is nonsensical to talk about a ‘shark’ in this way. Chi et al. argue that many concepts such as electric current, heat, and light are difficult for students to learn because, whereas the scientific understanding assigns these concepts into the ontology of ‘process’, learners tend instead to see them as ‘entities’ (termed ‘substances’ or ‘matter’ in earlier work). (Chi, Citation2013; Chi & Slotta, Citation1993; Chi et al., Citation1994; Reiner et al., Citation2000; Slotta & Chi, Citation2006; Slotta et al., Citation1995)

Within each ontology are nested sub-ontologies. Although Chi et al. specify that they are ‘not committed to this exact hierarchy’ (Chi & Slotta, Citation1993), they provide an example hierarchy in which ‘event’ is nested within ‘process’ and ‘random’ is nested as a type of ‘event’. In light of the findings discussed above that learners find ‘random’ things to be incompatible with predictions, we find it plausible that an additional sub-ontology with which people categorise ‘events’ is one that attributes lawfulness and predictability to the event. We will call this hypothetical ontology the ‘deterministic’ ontology.

The ‘deterministic’ ontology is a mental category people use to classify events they perceive to be governed by rules, demonstrate a pattern, and lead to predictable outcomes. Events classified in this ontology do not exhibit randomness. The motion of a projectile in an introductory physics class can be assigned into this ontology, and it is thus appropriate to use kinematic equations to predict the trajectory.

The ‘random’ ontology is a mental category people use to classify events they perceive to be lawless, patternless, and with unpredictable outcomes. For these events, equations and other models are not useful.Footnote3 Chi and Slotta give the example of ‘mutation’ for this ontology. (Chi & Slotta, Citation1993) The time at which a radioactive nucleus decays can also be assigned into this ontology.

The ontologies framework seems promising to characterise the wide range of naïve ideas reviewed in this paper for two reasons. First, whereas a naïve idea or theory is specific to a given physics topic (for example, a naïve idea about ‘force’ is specific to the topic of mechanics), an ontology spans across topics, which is a necessary criterion for describing the underlying probability-related difficulty we hypothesise to be behind the naïve ideas we review. That is, Chi et al. argued that disparate naïve ideas relating to electricity, thermodynamics, and light have a shared cause of learners assigning the phenomena incorrectly into the ‘entities’ ontology. We likewise argue that a variety of probability-related naïve ideas may have a shared cause of learners assigning the phenomena incorrectly into either the ‘random’ or ‘deterministic’ ontologies. The second reason why this framework seems promising is the mutual exclusiveness of the ontologies. Just as it is senseless to talk about a ‘shark’ (a type of ‘entity’) being ‘two hours long’ (a predicate for a ‘process’), so sub-ontologies are mutually exclusive (it is senseless to talk about a ‘shark’ that ‘wilts’ or a ‘flower’ that ‘bites’). In the same way, ‘deterministic’ events are incommensurate with randomness. Other events, assigned into the ‘random’ ontology, are forbidden to have deterministic properties. We find that this framework can thus account for a stance of ‘random is incompatible with predictable’. As a result of this mutual exclusiveness, the ontology framework argues that trying to help students learn a concept located in one ontology by drawing on their ideas in a different ontology would likely only lead to confusion. (Chi & Slotta, Citation1993; Slotta & Chi, Citation2006) For example, Slotta and Chi write:

Teachers should not try to “bridge the gap” between students’ misconceptions and the target instructional material, as there is no tenable pathway between distinct ontological conceptions. For example, students who understand “force” as a property of an object cannot come gradually to shift this conception until it is thought of as a process of interaction between two objects. Indeed, students’ learning may actually be hindered if they are required to relate scientifically normative instruction to their existing conceptualizations. (p. 286 of Slotta & Chi, Citation2006)

Methodology

This paper is a literature review of naïve ideas published in papers in physics topics related to probability. The topics investigated were decided via a Delphi study, which we will now describe. Following that, we will describe how we selected the naïve ideas within each topic to include in this literature review.

Delphi study

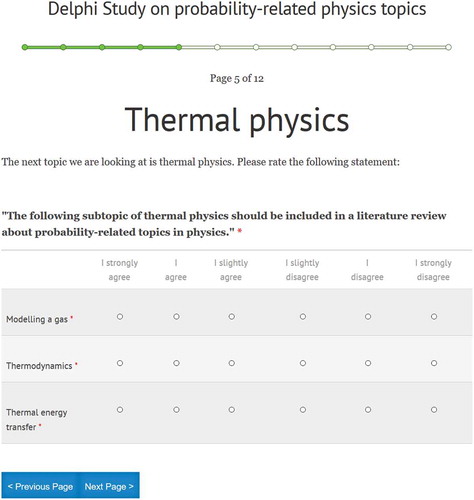

A Delphi study is a means of interviewing a group, often of experts, via several rounds of surveys. Unlike group discussions conducted in person, this method enables participants to remain anonymous. This ensures, for example, that more introverted experts have space to voice their opinions without the discussion being dominated by extroverted experts. The second author conducted a Delphi study with the goal of building consensus among the participating experts (Häder, Citation2009) via a website on which respondents rated different topics according to their relevance to probability. The authors primarily utilised the physics section of the International Baccalaureate Curriculum (IBO, Citation2014) compilation of topics and associated subtopics for the study. Several subtopics from the compilation were not included in the study, as they were deemed to be mathematical without a specified physical context (e.g. ‘Vectors and Scalars’), historical in nature (e.g. ‘The Beginnings of Relativity’), or specific applications of physics topics (e.g. ‘Medical Imaging’). In addition, we added two topics, Non-linear Systems and Elementary Particle Physics, as we were interested in how respondents would perceive the relationship of these two topics to probability. This resulted in eleven topics, each consisting of two to six subtopics, as shown in Table 1. The website instructed the participants of this study to rate these subtopics according to their relevance to a literature review on probability-related physics topics. Figure 1 shows an example item.

Table 1. The topics and subtopics of the Delphi study. Red shading indicates consensus (at least 8 out of 10 in agreement) after the first round that the subtopic should not be included. Green shading indicates consensus that it should be included.

Since our Delphi study intended to answer the question ‘Which physics topics are relevant in terms of probability?’, we needed experts with a broad knowledge of physics. We chose physicists at CERN as our target population. Although one might think at first that only particle physicists work at CERN, a large number of employees actually specialise in theoretical physics, accelerator physics, thermal physics, nuclear physics, and technical physics. Prior to the main study, the second author invited three physicists and three physics educators to post responses to the website as a pilot study. The participants needed about 10 minutes to finish. All respondents found the topic selection complete and well structured. Following the successful pilot study, the second author contacted twelve CERN physicists for the field study, of whom ten completed and submitted the form.

In Delphi studies, a so-called ‘definition of consensus’ of 80% is common. (Diamond et al., Citation2014) In the case of this study, that meant that if eight or more of the ten experts voted for or against the relevance of a subtopic, then the experts had reached consensus, and no follow-up voting on that subtopic was necessary. We determined consensus by collapsing the six-point scale into a two-point scale. If an expert responded to the statement that a subtopic should be included in the literature analysis with ‘I strongly agree’, ‘I agree’ or ‘I agree slightly’, we coded the response as ‘vote to include’. Otherwise, the response was coded as ‘vote to NOT include’. In the first round, experts reached consensus on 27 of the 43 subtopics, as shown in Table 1, and so we removed these subtopics from the second round.
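The collapsing and consensus rule just described can be sketched in a few lines. This is an illustrative sketch rather than the procedure actually run for the study: the three agreeing labels come from the text, whereas the wording of the three disagreeing labels, the function names, and the example votes are assumptions.

```python
# The three agreeing options quoted in the text.
AGREE = {"I strongly agree", "I agree", "I agree slightly"}
# Assumption: the remaining three options of the six-point scale are
# worded something like "I disagree slightly", "I disagree" and
# "I strongly disagree"; any response not in AGREE counts as "not include".


def collapse(response):
    """Collapse a six-point response into a two-point vote."""
    return "include" if response in AGREE else "not include"


def consensus(responses, threshold=0.8):
    """Return the consensus vote, or None if neither side reaches the threshold."""
    votes = [collapse(r) for r in responses]
    n = len(votes)
    n_include = votes.count("include")
    if n_include / n >= threshold:
        return "include"
    if (n - n_include) / n >= threshold:
        return "not include"
    return None  # no consensus: the subtopic goes to the next round


# Hypothetical first-round votes of ten experts on one subtopic.
example = ["I strongly agree"] * 3 + ["I agree"] * 4 + ["I agree slightly"] + ["I disagree"] * 2
print(consensus(example))
```

With ten experts and a threshold of 80%, eight matching votes on either side are enough to settle a subtopic; anything between three and seven votes to include leaves it open for the second round.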

Following the first round, the second author informed the experts about the 27 subtopics for which consensus had been obtained, and asked them a second time to judge whether or not the remaining 16 subtopics should be included in the literature review. She also encouraged the experts to provide comments. After this round, experts agreed not to include the subtopics ‘Motion’, ‘Forces’, and ‘Momentum and Impulse’, implying that the topic of ‘Mechanics’ should not be included in the literature review. Correspondingly, we do not discuss naïve ideas in mechanics below. In the same way, experts agreed that the topics of ‘Electricity and Magnetism’, ‘Relativity’, and ‘Engineering Physics’ should not be included. On the other hand, experts agreed that the following topics should be included in a literature review concerning probability, and we discuss them below:

Measurements and Uncertainties

Thermal Physics

Atomic, Nuclear and Particle Physics

Non-linear systems

Elementary Particle Physics

Finally, while experts agreed that the subtopics of ‘Single Slit Diffraction’ and ‘Interference’ within the topic of ‘Wave Phenomena’ should be included, they also agreed that the subtopics of ‘Doppler Effect’ and ‘Simple Harmonic Motion’ should not be included. From the comments of the experts, it became clear that the reason for inclusion of ‘Single Slit Diffraction’ and ‘Interference’ is that the experts saw a connection to quantum physics and therefore considered the topics as related to probability. We accounted for this by including in our literature review naïve ideas regarding quantum physics. Finally, out of the subtopics in Astrophysics, experts reached consensus on only one, ‘Cosmology’, that it should be included. Consequently, we have a section of our literature review examining naïve ideas in cosmology.

Collection of naïve ideas

When possible, we utilised two databases of student naïve ideas, Ideas Previas (Flores et al., Citation2014) and the database compiled by Duit. (Duit, Citation2009) The Ideas Previas website, under various headings like ‘Structure of Matter’ (which contains naïve ideas related to Quantum Physics; Atomic, Nuclear and Particle Physics; and Elementary Particle Physics), catalogues more than 1,000 naïve ideas documented in physics education research. Each naïve idea has its own short descriptive title like ‘The half-life of a radioactive isotope is the period in which [it] loses its radioactivity. During this period [it] is dangerous’, together with a citation for the source which documented the idea. In addition to this ‘Structure of Matter’ heading, we also considered the lists of naïve ideas under the headings ‘Thermodynamics’ and ‘Astronomy’ (from which we found naïve ideas related to cosmology). We will describe below how we decided which naïve ideas from these lists to include in this literature review. As the Ideas Previas website does not contain naïve ideas relating to Non-linear Systems and Measurements and Uncertainties, we took a different approach for these topics. Namely, for Non-linear Systems, we used the main source for the Ideas Previas website, Duit’s database of articles documenting naïve ideas. (Duit, Citation2009) The Duit database contains 23 journal articles related to student naïve ideas about Non-linear Systems. All of these articles were read by either the first author (if the articles were in English) or the second author (if the articles were in German), and naïve ideas from the articles were gleaned for inclusion in this literature review. Since even the Duit database does not contain articles related to student naïve ideas about Measurements and Uncertainties, we utilised yet a third method. Here, we sought out recent publications by researchers prominent for their studies of naïve ideas in the field of measurement in English (Saalih Allie) and in German (Susanne Heinecke). We then read the citations of these papers that 1) were either in English or in German and that 2) seemed relevant in that they potentially related to student difficulty with probability. We then read the citations of those cited papers, and so on, until no additional citations met these two criteria.
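The third method amounts to following citations outward from a set of seed papers until nothing new qualifies. A minimal sketch of that procedure is given below; the paper identifiers, the toy citation graph, the relevance flags, and the names `snowball` and `meets_criteria` are all hypothetical, and in the actual review the relevance judgement was made by the authors rather than by a function.

```python
from collections import deque


def snowball(seeds, references, meets_criteria):
    """Follow citations breadth-first from the seed papers.

    references: dict mapping a paper to the list of papers it cites.
    meets_criteria: function deciding whether a cited paper is followed up.
    """
    included = []
    seen = set(seeds)
    queue = deque(seeds)
    while queue:
        paper = queue.popleft()
        included.append(paper)
        for cited in references.get(paper, []):
            if cited in seen:
                continue  # already judged once
            seen.add(cited)
            if meets_criteria(cited):
                queue.append(cited)
    return included


# Hypothetical citation graph and metadata (language, judged relevant?).
references = {
    "seed_A": ["p1", "p2"],
    "seed_B": ["p2", "p3"],
    "p1": ["p4"],
    "p3": ["p5"],
}
metadata = {
    "p1": ("en", True),
    "p2": ("de", True),
    "p3": ("es", True),   # relevant, but neither English nor German
    "p4": ("en", False),  # readable, but judged not relevant
    "p5": ("en", True),   # only reachable through the excluded p3
}


def meets_criteria(paper):
    language, relevant = metadata[paper]
    return language in {"en", "de"} and relevant


result = snowball(["seed_A", "seed_B"], references, meets_criteria)
print(result)
```

One consequence of this design, visible in the toy data, is that a qualifying paper cited only by excluded papers is never reached, which is one reason such a search cannot be assumed to be exhaustive.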

Selection of naïve ideas from Ideas Previas

All three authors coded all naïve ideas on the Ideas Previas website under the headings of ‘Structure of Matter’ and ‘Thermodynamics’ for their potential relevance to this literature review. The coding scheme was initially generated by examining a small subset of the naïve ideas taken from various physics topics from the Ideas Previas website. After discussing this small subset to calibrate coding, all three authors then individually coded all the naïve ideas under the heading of ‘Thermodynamics’ for whether we felt that the idea relates to probability. With duplicates and correct ideas removed, this totalled 273 naïve ideas coded. In our coding, we decided to err on the side of having too many articles rather than too few. As such, we would read all articles that, after discussion, at least one of us thought might be relevant to our study, and so we coded for whether or not we thought the naïve idea should definitely not be included in the study. There were 174 disagreements, meaning that one or two authors had coded ‘no’ that the idea does not relate to probability, but not all three authors. This corresponds to 38.5% agreement (κ = 0.18). After discussion of these disagreements, we reached 100% agreement regarding which naïve ideas to definitely exclude from the literature review. Furthermore, we reached a high level of consensus, 97.4% (κ = 0.97), regarding which naïve ideas definitely should be included. During this discussion, we decided to include any naïve ideas that consider both a microscopic and macroscopic view, as they would reflect probability-related confusion. In the previous case of the pressure increasing with the compression of an ideal gas, for example, we could envision a student with the naïve idea that each molecule would always exert an increased force on the container walls. Such an approach of attributing properties of the collective whole (here, pressure) to the individuals making up that whole would indicate a failure to understand the probabilistic description of the gas. This heuristic (that the naïve idea is to be included if it considers both a microscopic and macroscopic picture) led to the inclusion of many naïve ideas that did not explicitly discuss probability, such as ‘At a very high altitude over the sea level air molecules are far less numerous, therefore pressure at such altitude is considerable less than pressure at the sea level’ [sic]. We likewise decided that naïve ideas which do not consider both views (like ‘The hot water is heavier because its volume expanded’) are to be excluded.
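For readers unfamiliar with agreement statistics for three coders, the following sketch shows how a percentage agreement and a chance-corrected κ can be computed from yes/no codes. It rests on two assumptions: the text does not say which κ statistic was used, so Fleiss' κ (a common choice for more than two raters) is shown, and the four coded items are invented toy data rather than codes from this study.

```python
def percent_agreement(codes):
    """Fraction of items on which all raters gave the same code."""
    unanimous = sum(len(set(item)) == 1 for item in codes)
    return unanimous / len(codes)


def fleiss_kappa(codes, categories=("yes", "no")):
    """Fleiss' kappa for items that were each coded by the same number of raters."""
    n_items = len(codes)
    n_raters = len(codes[0])
    # counts[i][j]: number of raters who put item i into category j
    counts = [[list(item).count(c) for c in categories] for item in codes]
    # Observed agreement, averaged over items
    p_items = [
        (sum(n * n for n in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_observed = sum(p_items) / n_items
    # Agreement expected by chance from the overall category proportions
    proportions = [
        sum(row[j] for row in counts) / (n_items * n_raters)
        for j in range(len(categories))
    ]
    p_expected = sum(p * p for p in proportions)
    return (p_observed - p_expected) / (1 - p_expected)


# Toy data: one tuple per naive idea, one code per author.
toy_codes = [
    ("yes", "yes", "yes"),
    ("no", "no", "no"),
    ("yes", "no", "no"),
    ("yes", "yes", "no"),
]
print(f"agreement: {percent_agreement(toy_codes):.1%}")
print(f"Fleiss' kappa: {fleiss_kappa(toy_codes):.2f}")
```

The sketch counts an item as agreed only when all three codes match, mirroring the definition of a disagreement given above; κ then discounts the share of such matches that would be expected by chance alone.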

After coding the thermodynamics naïve ideas, we repeated the process with the 127 naïve ideas (again removing duplicates and ideas marked as ‘correct’) listed under the ‘Structure of Matter’ heading on the Ideas Previas website. In this second round, all three authors again coded each idea independently for whether we felt that the idea should definitely be excluded from this literature review. In doing so, we bore in mind the two conditions discussed from the coding of thermodynamics-related naïve ideas (all naïve ideas considering both a microscopic and a macroscopic view are to be included; those which do not consider both views are to be excluded). Agreement (meaning that all three authors coded ‘no, the idea should definitely not be included’) was initially quite low, at 29.9% (κ = 0.07). After a prolonged discussion, we decided that naïve ideas pertaining to safety (like ‘X-rays machine is based in that radioactive rays or waves go through a body without harm, and it projects an image of internal hard parts.’ [sic]) do not necessarily relate to student reasoning about probability. As such, merely relating to safety was not a sufficient criterion for inclusion. We also decided that, in the minds of students, radiation is not necessarily at a smaller level than the object with which it interacts. Consequently, we decided to exclude statements like ‘If a radioactive substance is near wood. It would get into the wood, some things are affected more than others.’ [sic] As a result of these and other smaller points of discussion, agreement rose to 100% regarding which naïve ideas should definitely be excluded. We also reached a high level of consensus, 96.8% (κ = 0.96), regarding which naïve ideas should definitely be included.

In summary, the three of us reached agreement that, out of the 273 naïve ideas in thermodynamics, 186 do not satisfy the criteria described above, and hence are not to be included. Twenty-one of the remaining 87 naïve ideas were cited as coming from a total of 5 papers written in Spanish, and this language barrier prevented us from reading them. We then read the journal articles from which the remaining 66 naïve ideas were taken. For 26 of these 66 ideas, we discovered a discrepancy between the categorisation of Ideas Previas and the articles corresponding to the ideas. Namely, either the article did not discuss the idea reported by Ideas Previas at all, or the article used the idea as an example of an answer considered to be correct. For example, one of the 66 ideas from Ideas Previas that we decided meets our criteria was ‘Heat makes the molecules that are in [the material] … to move faster … causing more pressure because they bump against each other.’ (Hewson and Hamlyn, Citation1984) The article, however, uses this student statement to demonstrate part of the (canonically correct) kinetic gas theory. Since it is not used in the article as an example of a naïve idea, we do not include it in this literature review either. The remaining 40 naïve ideas that are included in this literature review are listed in the Appendix. Out of the 124 naïve ideas in Structure of Matter, the three of us agreed that 67 are not relevant, and 15 of the remaining 57 are from articles written in Spanish. We read the journal articles from which the remaining 42 naïve ideas were taken. Here again there were inconsistencies between the categorisation of Ideas Previas and the descriptions within the corresponding articles, and this led to the removal of 14 ideas. The remaining 28 naïve ideas that are included in this literature review are listed in the Appendix.
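The successive exclusions in this paragraph can be tallied as a simple bookkeeping check. The counts below are the ones stated in the text; the function name `funnel` and the short step labels are illustrative only.

```python
def funnel(start, exclusions):
    """Apply successive exclusions to a starting count and report each step."""
    remaining = start
    for reason, removed in exclusions:
        remaining -= removed
        print(f"  minus {removed:3d} ({reason}) -> {remaining}")
    return remaining


print("Thermodynamics: 273 coded ideas")
thermodynamics = funnel(273, [
    ("do not satisfy the criteria", 186),
    ("from papers written in Spanish", 21),
    ("discrepancy with the source article", 26),
])

print("Structure of Matter: 124 coded ideas")
structure_of_matter = funnel(124, [
    ("judged not relevant", 67),
    ("from articles written in Spanish", 15),
    ("inconsistent with the source article", 14),
])
```

Running the tally reproduces the 40 thermodynamics ideas and the 28 Structure of Matter ideas reported as included.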

Ideas Previas catalogues naïve ideas related to Cosmology under the heading of ‘Astronomy’. In total, there are 118 naïve ideas under this heading. After individual coding and discussion similar to that just described, we decided that 102 of them are not related to Cosmology, as they do not explicitly relate to the formation of heavenly bodies (e.g. ‘The night occurs because the Sun goes behind hill.’ [sic]).Footnote4 Of the 16 cosmology-related ideas, we decided that 8 would not be relevant for this paper, as they do not plausibly relate to probability in some way. The remaining 8 naïve ideas are presented in the Appendix.

To recap, with the exception of the topic Measurements and Uncertainties, the papers we analysed came exclusively from Ideas Previas and the Duit database. As these catalogues only span until 2007, our literature review for these topics does not include more recent sources. As such, we cannot claim that our review is exhaustive. That is not, however, necessary for our purpose of illustrating that many naïve ideas in diverse fields of physics are due, to varying extent, to student difficulty with probability. As we will elaborate upon in the Discussion section below, we are in fact aware of additional naïve ideas that our methodology did not uncover, but that nevertheless could be included in a more exhaustive review of probability-related naïve ideas.

While not necessary for our argument, we did nevertheless hope that our literature review would be representative of the range of probability-related naïve ideas in physics. We felt justified in the decision to use Ideas Previas and the Duit database with the hypothesis that the topics within had been extensively researched by the point at which the databases stopped collecting data, and that findings had approached saturation. We checked this hypothesis by the following means: first, we searched Google Scholar with the Boolean string ‘allintitle: thermodynamics student OR students OR conception OR misconception’ and the requirement that the year be 2008 or later. This yielded 151 articles. The first and second authors read the titles of the articles and the corresponding abstracts. In total, there were 53 articles that at least one of us felt might potentially contain naïve ideas that are related to probability. Thirteen of these articles were not readily accessible, but the first author randomly chose and read ten articles from the remaining 40. Of these ten, three contained naïve ideas that plausibly were enabled by student difficulty with probability: Sriyansyah et al. found that, given two processes, one in which much work is done and one in which little work is done to reach the same ending state, students think that the internal energy is different, depending on the process. (Sriyansyah et al., Citation2015) Saricayir et al. discussed how students struggle with recognising the role that mass plays in the heating and cooling of objects. Students conflate heat capacity and specific heat, or think that a glass of hot water has more internal energy than the ocean. The researchers also noted student difficulty with heat transfer between two objects, thinking that equilibrium is when the internal energies of the two bodies are equal (as opposed to the two temperatures). (Saricayir et al., Citation2016) We see the naïve ideas from these two papers as being consistent with difficulty in distinguishing heat from temperature, which we found in the Ideas Previas database and which we discuss below. Finally, Bowen et al. documented how some students try to use the ideal gas law or the first law of thermodynamics to reason about a single molecule getting hit by a moving piston in a sealed box. (Bowen et al., Citation2015) This naïve idea is rather distinct from what we discuss in this literature review. In summary, from our random sampling of recent papers in Thermal Physics, we found only one naïve idea not captured by what we review below, consistent with our hypothesis that our review is representative of probability-related naïve ideas.

Results

Here we present the results of our literature search. We begin with a review of naïve ideas relating to Non-linear Systems from articles listed in the Duit database. (Duit, Citation2009) We then turn our attention to naïve ideas in Thermal Physics; Atomic, Nuclear and Particle Physics; Elementary Particle Physics; Quantum Physics; and Cosmology from articles listed in Ideas Previas. (Flores et al., Citation2014) We end with a review of articles pertaining to naïve ideas in Measurements and Uncertainties.

Non-linear systems

Although much formal science education involves linear systems (where doubling x results in doubling y), most everyday experiences are non-linear in nature. Many non-linear systems, despite being deterministic, are nevertheless unpredictable because infinitesimally small perturbations to the initial state give rise to dramatically different outcomes. (Boeing, Citation2016) The classic example is to consider the flapping of a butterfly’s wings giving rise to a hurricane on the other side of the world. Meteorologists do not expect to account for the flapping of every butterfly’s wings. Nevertheless, they are able to forecast the weather by running simulations that combine a range of potential perturbations, treated as being random effects (in the sense that they cannot be predicted), with deterministic laws of how air will move when faced with temperature and pressure gradients. Randomness must be combined with things which are predictable in diverse contexts ranging from the migration of birds, to the build-up of traffic jams, to the response of the public to a new government regulation.
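The claim that a deterministic rule can still be unpredictable can be illustrated with a short numerical sketch. The logistic map used here is a standard textbook example of chaos and is our own choice for illustration; it is not drawn from the reviewed studies, and the starting value, the perturbation size, and the parameter `r = 4.0` are likewise assumptions.

```python
def logistic_trajectory(x0, r=4.0, steps=50):
    """Iterate the deterministic rule x -> r * x * (1 - x) and return all states."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


# Two starting states that differ by one part in ten billion (assumed values).
a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2 + 1e-10)

# Gap between the two runs at every step.
gaps = [abs(x - y) for x, y in zip(a, b)]
print("gap after 5 steps: ", gaps[5])
print("largest gap in run:", max(gaps))
```

Rerunning either trajectory from exactly the same starting value reproduces it exactly, which is the deterministic part. The gap between the two runs nevertheless grows from negligible to a sizeable fraction of the full range, which is the unpredictable part.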

Although relatively little research has been conducted on student understanding of non-linear systems, the studies that have been published suggest that people have a tendency to either think that things happen by chance or that they are predictable, and not that deterministic laws could lead to unpredictable outcomes, depending sensitively upon initial conditions (e.g. Stavrou & Duit, Citation2014). Some students, for example, display so-called ‘Laplace determinism’, the view that all processes are deterministic. (Buecker et al., Citation1999; Komorek, Citation1998; Stavrou et al., Citation2003, Citation2005; Stehlik & Pade, Citation2006) Several researchers observed student difficulty with various typical chaotic systems, such as a chaotic pendulum, and found the student view that if a situation can be predicted with outcomes that vary only slightly, then the situation is deterministic. If not, it is random. (Komorek, Citation1998; Stavrou et al., Citation2008) Some students assume that if the starting conditions are similar, then the results must be similar as well or, conversely, that similar effects must have similar causes. (Buecker et al., Citation1999) In looking at dendrite formation, students either expect the same number of branches to form the second time because they think there is no randomness in science, or they think that the structure is entirely random, and they are then surprised to see that it is always dendritic. (Komorek et al., Citation1999, Citation2001) Similarly, in situations where both static and dynamic descriptions of a situation coexist, such as in modelling population stability, students have a tendency to think exclusively in a static way. (Bell & Euler, Citation2001) These naïve ideas can be described well with the ontologies framework. Namely, we can account for them with the argument that students assign some events (where outcomes of repeated trials vary only slightly, for example) into the ‘deterministic’ ontology. 
As such, these events could not have characteristics of randomness. ‘Laplace determinism’, for example, could be described as the learner assigning every process into this ontology. Other events (where the outcomes vary more dramatically), assigned into the ‘random’ ontology, would be forbidden to have predictable properties.

Non-linear systems are often visualised with fractal images. One type of fractal image that can be found approximately in nature, for example, in fern leaves, is formed by repeating a system rule over and over again (e.g. the Sierpinski triangle follows the rule of starting with a triangle and recursively subdividing into smaller equilateral triangles). As one might expect, students find the process of fractal formation un-intuitive at first. As such, when shown the first three steps in the formation of the Koch curve and asked to draw the fourth step, some students draw a star emerging from the line. Likewise, when shown a cylindrical electrode and asked to predict what shape of precipitate will grow on the electrode, students predict a cylindrical structure instead of the dendritic shape. (Komorek et al., Citation1999) When given images of ferns, etc. and asked to organise them into groups that make sense to them, students who have not yet learned about fractals do not focus on the aspect of self-similarity. At best, they pick up on the characteristic of being ‘branched’ or of being ‘branched repeatedly’. (Komorek et al., Citation2001) Thinking in terms of the ‘deterministic’ and ‘random’ ontologies, perhaps the circular electrode, being of a geometry frequently encountered in science and maths classes that prioritise problems posed as having a definite solution, promotes assignment of phenomena into the ‘deterministic’ ontology. From within this viewpoint, it is natural that one would expect that a pattern should emerge which exhibits circular symmetry.
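To make the idea of ‘repeating a system rule over and over again’ concrete, the following sketch applies the usual Koch rule (replace each segment with four segments, each one third as long) to a straight line. Counting the straight line as step 0 and the helper names `koch_step`, `koch_curve`, and `curve_length` are our own assumptions for illustration; the reviewed studies may number the steps differently.

```python
import math


def koch_step(points):
    """Apply the Koch rule once: every segment becomes four shorter segments."""
    cos60, sin60 = math.cos(math.pi / 3), math.sin(math.pi / 3)
    out = [points[0]]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        dx, dy = (x1 - x0) / 3.0, (y1 - y0) / 3.0
        p1 = (x0 + dx, y0 + dy)            # end of the first third
        p3 = (x0 + 2 * dx, y0 + 2 * dy)    # start of the last third
        # Apex of the bump: the middle third rotated by 60 degrees about p1.
        p2 = (p1[0] + cos60 * dx - sin60 * dy, p1[1] + sin60 * dx + cos60 * dy)
        out.extend([p1, p2, p3, (x1, y1)])
    return out


def koch_curve(n):
    """Return the vertices of the curve after n applications of the rule."""
    points = [(0.0, 0.0), (1.0, 0.0)]
    for _ in range(n):
        points = koch_step(points)
    return points


def curve_length(points):
    """Total length of the polyline through the given vertices."""
    return sum(math.hypot(x1 - x0, y1 - y0)
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


for n in range(5):
    pts = koch_curve(n)
    print(n, len(pts) - 1, round(curve_length(pts), 4))
```

Each application multiplies the number of segments by four and the total length by 4/3, so the same small bump reappears at every scale. This self-similarity is the feature that students in the studies above tend not to notice.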

Some research concerns instruction aiming to equip students with general thinking strategies for reasoning about non-linear systems, independent of the specific situation. However, students tend to focus on superficial features instead of building awareness of the key ideas, such as that a minor perturbation can give rise to vastly different outcomes. (Bell & Euler, Citation2001; Wilbers & Duit, Citation2001) When asked to describe the motion of a chaotic pendulum with two magnets, for example, students explained the deviation of the pendulum from the plane of symmetry as arising from the rotation of the Earth, likening it to Foucault’s pendulum, seemingly ignoring the fact that sometimes the pendulum ends up near one magnet and sometimes the other. (Duit et al., Citation1998) Some researchers have argued that part of the difficulty is a linguistic one. Apparently, for some students, the phrase ‘chaotic system’ is an oxymoron, as ‘system’ denotes a sense of order, which therefore cannot be chaotic. (Roth & Duit, Citation2003) Students generally associate the word ‘order’ with things that are static, like a clean room or a balanced see-saw (Bell, Citation2007) or with things that are predictable. (Stehlik & Pade, Citation2006) Although students are familiar with the word ‘feedback’ from music, they do not associate it with the dynamic balance found prevalently in chaotic systems. (Bell, Citation2007) It is also plausible that prior education in maths and physics classes, where topics that are deterministic and well-ordered are the focus, makes it difficult for students to grasp chaotic systems. In the case of fractals, Vacc wrote that children who had previously studied geometry had a harder time describing the shapes of fractal-shaped objects like popcorn and kale leaves. 
(Vacc, Citation1999) In terms of the ontologies framework, these naïve ideas can also be explained with the argument that students assign some things (like the pendulum in the anecdote above) into the ‘deterministic’ ontology and other things into the ‘random’ ontology. This dichotomous categorisation scheme is encouraged by language use and typical classroom experiences and it makes the core ideas of non-linear systems untenable.

Cosmology

We will next review naïve ideas discussed in literature specific to the field of Cosmology, continuing to utilise the ontologies perspective. As with Non-linear Systems, randomness is intertwined with predictable outcomes in Cosmology. The normative view utilises the nebular theory (that is, that most heavenly bodies form from the coalescing of gas and dust). At a microscopic level, it is considered random which areas of space will contain what concentrations of cosmic dust. However, it is nevertheless predictable that, given a critical mass of this dust in a given location, coalescence will occur. Education researchers have documented several student naïve ideas in this field, and we again find the ontologies framework productive for thinking about their commonality.

Marques and Thompson found that many students think that nothing existed before the Earth and that, for example, it was formed immediately after the Big Bang. Other students attribute spiritual significance to the beginning of the Earth, thinking, for example, that there was ‘something like the Garden of Eden’ before the Earth was formed. Other students in their study, reflecting exposure either formally or informally to scientific ideas on the subject, described the presence of ‘vacuum’ with a few small particles and ‘fire’ before the Earth was formed. (Marques & Thompson, Citation1997) Some students describe the Earth originating by the collision of planets (Marques & Thompson, Citation1997) or rocks. (Sharp, Citation1996) Students attribute collisions, for example, of the Sun with ‘something’, to the formation of other planets as well. (Sharp, Citation1996) Sharp found that some students, in discussing the Big Bang, described everything in the universe ‘whooshing out’ when the gases inside exploded. (Sharp, Citation1996) From the perspective of the ontologies framework, we can account for at least some of these naïve ideas by noting how they deviate from the nebular theory. A student assigning the formation of a stellar body into the ‘deterministic’ ontology would be expected to reject the idea of dust accumulation, as it inherently involves randomness. Instead, we would expect such a student to attribute intentional (for example, spiritual) design to the Earth’s creation or to assume that it was always present. Given exposure to the idea of the Big Bang, we might think such a student would imagine the Earth to be pre-formed inside the ‘big lump’ to come rushing out when the gases exploded.

Atomic, nuclear, and particle physics; Elementary particle physics; And quantum physics

Although the time at which an individual radioactive nucleus decays is taken to be random, when enough of these nuclei are collected, one can predict how long it will take for half of them to decay (the ‘half-life’). Such a phenomenon would not be conceptually accessible for a student who is assigning the process into the ‘deterministic’ ontology. We should expect, instead, that such a student would think that it is also predictable when individual nuclei will decay – for example, that all atoms will have decayed after one half-life. This is consistent with research findings on student naïve ideas, for example, that the material will be safe and no longer radioactive after one half-life. (Lijnse et al., Citation1990) It could be the case that students similarly conflate radioactive material and radiation (Alsop, Citation2001; Millar & Gill, Citation1996) as a result of assigning the effects of radiation on the human body into the ‘deterministic’ ontology. The normative view is to treat it as random whether or not a given particle or photon of ionising radiation will interact with DNA, and it is further considered random whether or not that ionisation event will lead to cancer. When many of these individual events take place, however, we can predict the outcome: sufficient over-exposure to ionising radiation leads to death. Such a two-level picture where randomness prevails at the microscopic level is likely difficult for students to come to terms with. It is easier to label the ionising radiation as ‘bad’ or ‘dangerous’ and, by extension, the radioactive material likewise. Using the ‘deterministic’ ontology, learners may group radiation and radioactive material together as a ‘poison’ in this way. An additional result of this conflation could be decreased importance given in the minds of learners to the origin of this ‘poison’. 
Rather than recognising nuclear energy as arising from interactions between constituents of the nucleus, students instead describe it as being released as a result of collisions between nuclei, atoms, molecules, or ‘particles’. (Cros et al., Citation1988)
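The two-level picture of the half-life discussed above (random for each nucleus, predictable for the collection) can be sketched with a small simulation. This is a minimal illustration under our own assumptions: time is advanced in whole half-lives, each surviving nucleus is given an independent one-in-two chance of decaying during each half-life, and the function name `surviving_counts` and the sample sizes are hypothetical.

```python
import random


def surviving_counts(n_nuclei, half_lives, rng):
    """Track how many nuclei remain undecayed after each successive half-life."""
    counts = [n_nuclei]
    alive = n_nuclei
    for _ in range(half_lives):
        # Every surviving nucleus independently survives with probability 1/2.
        alive = sum(1 for _ in range(alive) if rng.random() < 0.5)
        counts.append(alive)
    return counts


rng = random.Random(0)  # fixed seed so that the run is repeatable
print("10 nuclei:     ", surviving_counts(10, 5, rng))
print("100000 nuclei: ", surviving_counts(100_000, 5, rng))
```

With only ten nuclei the counts wander noticeably from run to run, whereas with a hundred thousand the surviving fraction after each half-life stays very close to one half of the previous count. In neither case does the sample become fully inactive after one half-life, in contrast with the naïve idea documented by Lijnse et al.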

As just discussed, students attribute characteristics of the whole to the constituents comprising that whole when reasoning about radioactivity (for example, that an individual atom has a half-life, implying that all the material will lose its radioactivity at the same time). (Lijnse et al., Citation1990) Education researchers have found the same tendency to attribute the macroscopic characteristics to the microscopic level in other areas of atomic physics as well. For example, when asked to describe the internal structure of metal, many students described it as looking like lamina or little stones, things that one might expect to see if one would cut the metal in half and look with the naked eye. Although such a description could arise from students not understanding what was meant by ‘internal structure’, other students described the atoms of a metal as being packed more closely together than they are for glass because some space must exist in the glass for light to be able to pass through. (De Posada, Citation1997) Other students have described the atoms of solids as being harder than the atoms of liquids. (Harrison & Treagust, Citation1996)

In using the analogy of an electron ‘shell’, we attempt to explain to students that, although it is random where the electron in an atom will be found should a position measurement be made, the energy will predictably be that corresponding to its shell. A student who either 1) focuses on the predictable results of energy measurements and assigns the atomic configuration into the ‘deterministic’ ontology, or 2) focuses on the random location of the electron and hence uses the ‘random’ ontology is likely to have difficulty comprehending this analogy and using it effectively. Education research has indeed found naïve ideas regarding this and other analogies. For some students, the metaphor conjures an image of protection. They hence think that electron shells protect the nucleus in much the same way that egg shells protect the egg. (Harrison & Treagust, Citation1996) Other students think of the shell as having a fixed radius that confines the electrons, and that it is this rigidity that prevents electrons from falling into the nucleus under the electrostatic attraction. (Fischler & Lichtfeldt, Citation1992) Similarly, when learning about an electron ‘cloud’, students think of a material within which raindrops are embedded and attribute a material-like property to the electron cloud itself, as a sort of stuff within which electrons are embedded. Accordingly, students attribute to the electrons in this cloud the property of being very small (like a raindrop). In fact, although electrons exhibit point-like behaviour when outside of an atom (for example, when boiled off an electrode in an electron gun), within an atomic electron cloud, it is more appropriate to model the electron as being a charge distribution. A dramatic example of metaphor and language-related difficulties is students thinking that the nucleus of an atom contains instructions for the atom to follow and is responsible for the division of the atom. 
Apparently, these students are confusing the nucleus of an atom with the nucleus of a cell. (Harrison & Treagust, Citation1996) Many students think that atoms look like the figures used to represent them. (Griffiths & Preston, Citation1992; Hogan & Fisherkeller, Citation1996) When asked to ‘sketch what you would see if you could take an atom and look at it under a microscope so powerful that all the details of the atom could be seen’, for example, many students drew a sphere with components inside. (Griffiths & Preston, Citation1992)

Taber found that students struggle in understanding the electrostatic forces involved in keeping electrons inside of atoms. For example, some students claimed that each electron in an atom, regardless of its distance from the nucleus, would experience the same force pulling it towards the centre. Some of these students further explained that the nucleus has a fixed amount of force with which it can attract all of the electrons, and that the electrons need to share this force equally. If one electron is removed in an ionisation event, it follows that the remaining electrons will each have a larger share of the force on them. This faulty model is used even by students who demonstrate mastery of the use of Coulomb’s law on traditional physics questions with stationary charges. (Taber, Citation1998) We find it plausible that one reason why the student model is popular in this context is that students are assigning the situation into the ‘deterministic’ ontology. Namely, faced with predictable outcomes of various changes (ionisation energy increases with successive ionisation events, atomic radius generally increases as the number of electrons does), students create a simple and predictable scheme leading to those results. At the same time, we speculate that Coulomb’s law is relatively inaccessible to students in this context, because it relies upon positions of charges, which have inherent randomness here. For a student assigning the physical location of the electrons into the ‘random’ ontology, Coulomb’s law, which is conceptualised as a tool to make predictions, would not be applicable.

Thermal physics

Above, we discussed the naïve idea that transparent solids have atoms that are more spread out so as to allow the light to pass through. (De Posada, Citation1997) An analogous naïve idea in the field of Thermal Physics has been documented, that materials in which heat travels faster have atoms that are less densely packed. (Hewson & Hamlyn, Citation1984) We find it plausible that both naïve ideas are fostered by assigning the process (light/heat passing through) into the ‘deterministic’ ontology. We can imagine a student argument such as ‘we know that heat will predictably travel better from one end to the other if it is metal than if it is wood, so there must be some difference inside that allows for that to happen. Maybe it is like water flowing in a pipe – the less clogged the pipe is, the better the flow. The only thing inside the material is atoms, so less clogged means that the atoms are less tightly packed together. The atoms predictably are located at a greater distance from each other.’ As with this example, many naïve ideas in Thermal Physics involve students erroneously attributing an aspect of predictability found in the collective to the individual elements that comprise that collective.

Temperature and heat transfer

Life is full of everyday experiences of warming and cooling. If we imagine a learner assigning these processes into the ‘deterministic’ ontology, we would imagine an account like the following: ‘Some objects are hot and others are cold, and they interact with each other. If a hot object has some structure (is made up of parts), then those parts are hot as well, and likewise for cold objects.’ For this user of the ‘deterministic’ ontology, the idea that some parts of the hot object are randomly less ‘hot’ than other parts does not seem tenable, as processes assigned into the ‘deterministic’ ontology do not have attributes of randomness. Students with this view are unlikely to see a need to distinguish between heat and temperature (Erickson, Citation1979; Hewson & Hamlyn, Citation1984; Kesidou & Duit, Citation1993; Rozier & Viennot, Citation1991; Summers, Citation1983), as they think of heat (or cold for that matter (Erickson, Citation1979)) as being a substance (fumes or bubbles (Erickson, Citation1979) or warm wavy-air from a fire (Hewson & Hamlyn, Citation1984), for example) contained within and exchanged between objects. Within this (caloric) view of objects containing heat, researchers have found students explaining things like metal balls swelling when heated in terms of the ball holding more heat (Hewson & Hamlyn, Citation1984) and cooling as taking place when fumes previously contained within the object are released. (Erickson, Citation1979)

Learning a particle description seems to be insufficient for learners to relax this ontological assignment. Some students, for example, say that temperature is the speed of particles, which suggests that the particles have a uniform speed as opposed to a random distribution. (Kesidou & Duit, Citation1993) Other students continue to conflate heat and temperature, thinking, for example, that heat is the movement of particles (Kesidou & Duit, Citation1993) or the energy of moving particles. (Summers, Citation1983) We would expect students using the ‘deterministic’ ontology to argue that, if hot objects give heat to cold objects and both are made up of particles, then it must be the case that hot particles give heat to cold particles. Indeed, this naïve idea has been documented by researchers. (Kesidou & Duit, Citation1993)
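The difference between ‘temperature is the speed of particles’ and a distribution of speeds can be made concrete with a rough sketch. It assumes an ideal gas whose velocity components are normally distributed, in arbitrary units where the Boltzmann constant and the particle mass both equal 1; the two temperatures, the sample size, and the function name `kinetic_energies` are hypothetical choices for illustration only.

```python
import random


def kinetic_energies(temperature, n, rng):
    """Sample n particle kinetic energies for an ideal gas (units with k_B = m = 1)."""
    sigma = temperature ** 0.5  # each velocity component has variance k_B * T / m
    return [0.5 * sum(rng.gauss(0.0, sigma) ** 2 for _ in range(3))
            for _ in range(n)]


rng = random.Random(42)  # fixed seed so that the run is repeatable
hot = kinetic_energies(350.0, 20_000, rng)
cold = kinetic_energies(300.0, 20_000, rng)

mean_hot = sum(hot) / len(hot)
mean_cold = sum(cold) / len(cold)

# Pair each particle of the hot object with one of the cold object and count
# how often the 'cold' particle is actually the more energetic of the two.
cold_wins = sum(c > h for h, c in zip(hot, cold)) / len(hot)

print("mean energy, hot object: ", round(mean_hot, 1))
print("mean energy, cold object:", round(mean_cold, 1))
print("fraction of pairs where the cold particle has more energy:", round(cold_wins, 3))
```

The hot object has the larger mean energy, yet in a large share of random pairings the particle from the cold object is the more energetic one. A picture in which every ‘hot particle’ gives heat to every ‘cold particle’ therefore cannot hold at the level of individual collisions, even though the net transfer is predictable.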

Although it is admittedly a more tenuous claim, it may be the case that assigning heat transfer into the ‘deterministic’ ontology contributes to the naïve idea that, if heat makes metal increase its size, then it follows that the individual particles are increasing as well, either in size or number. (Hewson & Hamlyn, Citation1984; Kesidou & Duit, Citation1993) Similarly, if heating is thought to predictably destroy an object (like burning wood, for example), then it may follow for some students assigning the process into the ‘deterministic’ ontology that, in the case of heating metal, the inner composition is being destroyed. (Hewson & Hamlyn, Citation1984)

Phase transitions

Although heat transfer is, in fact, predictable (we can assume an overall heat transfer from hotter objects to colder objects), it is driven by stochastic processes (in the case of conduction, for example, molecules of the hot object collide with molecules of the cold object, usually losing but sometimes gaining kinetic energy). Phase transitions in thermodynamics similarly are predictable on the macroscopic scale but stochastic on the microscopic scale, and we can similarly consider that students who assign these processes into either the ‘deterministic’ or ‘random’ ontologies might exhibit naïve ideas here as well. Research has indeed documented naïve ideas pertaining to phases and their transitions. While some students may succeed in reciting that condensation on the inside of a sealed bottle containing a bit of water is driven by a temperature difference without being able to justify how that happens (Chang, Citation1999), others may mis-memorise the statement as being due to a pressure difference or argue that condensation arises when something encounters heat. (Chang, Citation1999) It has been found that some students think condensation is a process of air (instead of water vapour) turning to liquid water (Chang, Citation1999; Ure & Colinvaux, Citation1989) and vice versa for evaporation. (Ure & Colinvaux, Citation1989) Regarding condensation on the outside of a glass of cold water, some students think that this water comes out through the glass walls and still others think that the water comes from within the glass itself and is extruded as sweat. (Ure & Colinvaux, Citation1989)

Research has found other naïve ideas from learners that can perhaps be considered to be more advanced in their learning. Many learners explain that the phase of an object depends only on temperature because the particles decrease in motion (which cannot explain why a solid glass and liquid water within it can be at the same temperature). (Rozier & Viennot, Citation1991) Some students attribute an important role to the air above an open bottle of water as facilitating the evaporation of the water molecules by allowing them to combine with the air molecules (Chang, Citation1999) and some think that solute particles evaporate together with the water. (Hwang & Hwang, Citation1990) A student assigning phase transitions into the ‘deterministic’ ontology, for example, might be imagined to reason ‘if there is less salt water than there was before, then the salt water must have turned into a gas’. Lacking from the argument is the microscopic picture of water molecules that are bonded together less tightly than salt molecules. It is plausible that one reason why such a microscopic picture is lacking is that the microscopic picture is random in nature, and hence does not belong with a predictable phenomenon like water evaporating.

Ideal gases

In the previous section, we conjectured that the stance ‘random is incompatible with predictable’ might be part of the cause for students failing to map the microscopic stochastic picture of particles colliding and bonding with the macroscopic predictable picture of changes of state. If this conjecture is correct, then we should expect to find additional naïve ideas in the context of ideal gases, where several such mappings must take place simultaneously. Rozier and Viennot documented naïve ideas in this topic. In an adiabatic (that is, no heat exchanged) compression of an ideal gas, students explained that the pressure increases because the volume is decreasing, resulting in a greater collision density. Although it is indeed true that the collision density is increasing, what these students fail to discuss is that the temperature of the gas is also increasing during the compression (this is due to the work done on the gas: gas molecules that collide with the moving lid will rebound with a greater kinetic energy than if the lid were stationary, in which case the collision is elastic). When asked specifically about the temperature of the gas increasing, rather than the correct explanation (of work being done by the lid), students use the increasing pressure as an intermediate step in their explanation. For example, they argue that there is ‘an increase of temperature because there are more collisions between particles [greater pressure] and more energy is produced due to friction [increasing the temperature].’ (Rozier & Viennot, Citation1991) This naïve idea was also observed by Manthei. (Manthei, Citation1980) The fallacy of this argument, as Rozier and Viennot point out, is that the ideal gas model assumes elastic collisions between molecules. Indeed, the authors argue, if such collisions produced heat, then a gas kept in an adiabatic container would soon explode – steady-state conditions would be impossible. 
Related to this is the naïve idea that the temperature of a gas in a container being heated increases less when the box is bigger and the (same number of) particles are hence more diffuse (Rozier & Viennot, Citation1991) – students with this idea seem to be conflating pressure (and the corresponding collisions between particles) with temperature. An additional example of failing to bear in mind multiple macroscopic quantities at a time while reasoning with a particulate picture is the idea that the pressure decreases as altitude increases as a result of there being fewer air molecules and hence fewer collisions (the failure is again in not noting that the temperature also drops with altitude). (Rozier & Viennot, Citation1991)
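The point that an adiabatic compression raises the pressure through two effects at once (greater particle density and higher temperature) can be checked with a short calculation. The sketch below assumes a reversible adiabatic process in a monatomic ideal gas (adiabatic index 5/3) and a halving of the volume; these specifics, the starting values, and the function name `adiabatic_compression` are our own illustrative assumptions and are not taken from Rozier and Viennot.

```python
def adiabatic_compression(p1, t1, volume_ratio, gamma=5.0 / 3.0):
    """Final pressure and temperature for a reversible adiabatic change.

    volume_ratio is V2 / V1. Uses P * V**gamma = const and
    T * V**(gamma - 1) = const for an ideal gas.
    """
    p2 = p1 * volume_ratio ** (-gamma)
    t2 = t1 * volume_ratio ** (1.0 - gamma)
    return p2, t2


p1, t1 = 1.0, 300.0  # assumed starting pressure (arbitrary units) and temperature (K)
p2, t2 = adiabatic_compression(p1, t1, volume_ratio=0.5)

density_factor = 1.0 / 0.5      # pressure factor from the higher particle density alone
temperature_factor = t2 / t1    # additional factor from the temperature rise

print("final temperature (K):", round(t2, 1))
print("pressure factor:      ", round(p2 / p1, 3))
print("density factor alone: ", density_factor)
print("temperature factor:   ", round(temperature_factor, 3))
```

Halving the volume doubles the collision density, but the pressure rises by a factor of about 3.17 because the temperature also rises, from 300 K to about 476 K. An explanation based only on ‘more collisions’ accounts for the factor of two and misses the rest.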

Summary: student use of ontologies in reasoning about Thermal Physics

Situations where a stochastic description must be combined with a predictable description abound in Thermal Physics. One situation discussed was heat conduction, regarding which researchers have found the naïve idea that hot particles give heat to cold particles. (Kesidou & Duit, Citation1993) This naïve idea is a particularly clear example of our argument, that students struggle in part because of assigning a given process into either the ‘deterministic’ or ‘random’ ontology. In this case, if learners assign heat conduction into the ‘deterministic’ ontology, then we should not be surprised that the idea that it is random whether an individual atom gains or loses energy in a collision does not make sense. It is plausible, we argue, that assignment into these two ontologies causes difficulties for students in less obvious situations where a macroscopic description must be connected with a microscopic description as well. For example, we can envision naïve ideas such as ‘condensation of water on the surface of glass occurs as a result of a pressure difference’ (Chang, Citation1999) to be the result of a student who tried to memorise by rote and ended up memorising incorrect information. Although a student might attempt to memorise without understanding for a number of reasons, we would expect it to be more common in situations where the explanation (in this case, ‘the motion of water molecules is random, but they have a distribution that changes in a predictable way when the water is heated or cooled’) is untenable to the student. This would surely be the case for a student who thinks that things are either predictable OR random, but NOT BOTH at the same time.

To be clear, we do not claim that categorising with ontologies is the ONLY cause of the naïve ideas discussed above. With the naïve idea that heat is better conducted in metal than wood because the atoms are more spread out (Hewson & Hamlyn, Citation1984), for example, a correct explanation would require understanding that heat is conducted in metal via free electrons scattering off impurities and lattice defects, whereas in wood it is through collisions between adjoining molecules. Our argument is that, if we envision a student thinking of heat conduction from within the ‘deterministic’ ontology, we find it unsurprising that the student would propose the model that atoms inside of a metal must be more spread out to allow the heat to flow unimpeded.

Measurements and uncertainties

As with science in general, a key aspect of physics is its empirical nature, and hence measurement plays a paramount role. Physics teachers often assume that students will automatically come to understand the measurement and data analysis process in carrying out labs, but education research has shown that this is largely not the case. Even after having a number of physical science laboratory experiences, myriad naïve ideas remain. As with the previous sections, we will consider the utility of the ontologies framework in understanding these naïve ideas, specifically in service to the hypothesis that naïve ideas are enabled by students assigning phenomena into either the ‘deterministic’ ontology or the ‘random’ ontology.

Multiple measurements and taking an arithmetic mean

Education researchers looking at student difficulties with measurement have commonly documented students who do not see the need to take multiple measurements, unless, of course, the instructor asks them to do so, or something obviously went wrong with the first measurement. (Abbott, Citation2003; Allie et al., Citation1998; Buffler et al., Citation2001; Davidowitz et al., Citation2001; Gott & Duggan, Citation1995; Hackling & Garnett, Citation1992; Heinicke, Citation2012; Lubben & Millar, Citation1996; Millar et al., Citation1996; Millar & Lubben, Citation1996; Rollnick et al., Citation2001; Sere et al., Citation1993) As an example, when Cauzinille-Marmeche et al. had children test to see if container shape affects the time for a covered candle to extinguish itself, the children saw with the trial of two different containers that there was a difference in time. They did not repeat the experiment, however, as they did not expect that there could be a difference in time if the same container were used twice. (Cauzinille-Marmeche et al., Citation1985) A similar phenomenon is observed when students are presented with data. Given a single trial of a robot arm throwing ball A and ball B with the result that ball A travelled farther, third graders were confident that, the next time the balls were thrown, ball A would again travel farther. (Masnick & Morris, Citation2002) Students such as these do not see a need to repeat their measurement because they expect the results to be identical, and are perplexed when the results are not. (Cauzinille-Marmeche et al., Citation1985; Coelho & Sere, Citation1998) Generally, research has documented students collecting observations or making measurements with no apparent awareness of the uncertainty associated with the measurement process. 
(Deardorff, Citation2001; Ibrahim, Citation2006; Lubben & Millar, Citation1996; Warwick et al., Citation1999) This is exactly what one would expect if students are assigning the process of measurement into the ‘deterministic’ ontology. Namely, such a student might argue that measuring is a thing that predictably tells you information about the object being measured. Since it is predictable, it is not possible for there to be randomness involved, and hence every measurement would produce the same result.

A slightly preferable version of assigning measurement into the ‘deterministic’ ontology is the view that a measurement should (assuming all goes as expected) predictably yield the same (true) property of the object being measured. We might expect students with this view to recognise that readings will vary from measurement to measurement, and to attribute this variance to ‘error’. However, when asked to identify such potential sources of error, we would not be surprised if these students tend to exclusively think about mistakes that they themselves might have made (a naïve idea documented by Goedhart and Verdonk (Citation1991)), as they see the variation in the data as being due to personal mistakes (a view documented by Heinicke (Citation2012), Leach et al. (Citation1998), Pillay et al. (Citation2008), and Rollnick et al. (Citation2001)). We might further expect that such students would consequently repeat the experiment to get closer to a ‘true value’, thinking that they are increasing accuracy (whereas they are actually only increasing precision) (a naïve idea documented by Allie et al. (Citation1998), Evangelinos et al. (Citation2002), and Sere et al. (Citation1993)). We might expect these students to thus report only one result despite taking many trials or to use only one data point to make a conclusion, an expectation confirmed by research. (Austin et al., Citation1991; Deardorff, Citation2001; Gott & Duggan, Citation1995; Lubben & Millar, Citation1994; Millar et al., Citation1994) Further aligned with what we would expect from students assigning measurement into the ‘deterministic’ ontology in this way, research has documented students who report only the last result, since that came after the most ‘practice’, or who report the result that is a repeated value, since that is most likely to be the ‘true value’. (Allie et al., Citation1998; Buffler et al., Citation2001; Davidowitz et al., Citation2001; Ibrahim, Citation2006; Lubben & Millar, Citation1994, Citation1996; Millar & Lubben, Citation1996; Pillay et al., Citation2008; Rollnick et al., Citation2001; Sere et al., Citation2001, Citation1993; Warwick et al., Citation1999) Research has shown that, even when presented with a data set and asked ‘what value to report as the final result’, students answer with a single value (as opposed to reporting a range). (Buffler et al., Citation2001; Volkwyn et al., Citation2008)
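
The distinction between accuracy and precision drawn above can be made concrete with a short simulation. The following Python sketch is our own illustration and is not taken from the reviewed studies; the true value, the systematic offset, the size of the random scatter, and the function name are all hypothetical. It assumes each reading is the true value plus a fixed offset plus normally distributed scatter.

```python
import random
import statistics


def simulate_readings(true_value, bias, noise_sd, n, seed=0):
    """Return n simulated readings: true value + fixed bias + random scatter."""
    rng = random.Random(seed)
    return [true_value + bias + rng.gauss(0.0, noise_sd) for _ in range(n)]


# All numbers below are hypothetical and chosen only for illustration.
TRUE_LENGTH_MM = 423.7   # assumed true value of the measured distance
BIAS_MM = 0.5            # assumed systematic offset (e.g. a worn ruler end)
NOISE_SD_MM = 0.3        # assumed random scatter of a single reading

for n in (5, 50, 5000):
    readings = simulate_readings(TRUE_LENGTH_MM, BIAS_MM, NOISE_SD_MM, n)
    mean = statistics.fmean(readings)
    # Standard error of the mean: shrinks as more readings are taken.
    sem = statistics.stdev(readings) / n ** 0.5
    print(f"n={n:5d}  mean={mean:.3f} mm  standard error={sem:.3f} mm  "
          f"offset from true value={mean - TRUE_LENGTH_MM:+.3f} mm")
```

Under these assumptions, the standard error shrinks as the number of readings grows (precision improves), while the mean settles near the true value plus the offset rather than the true value itself (accuracy does not improve).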

Consistent with the student inclination to consider ‘error’ as comprised exclusively of experimenter mistakes, research has documented the student view that, given precise enough tools, a measurement with zero uncertainty can be obtained. (Deardorff, Citation2001; Heinicke, Citation2012; Ibrahim, Citation2006; Pillay et al., Citation2008) One student, for example, wrote ‘The distance is exactly 423.7 mm, since electronic things always make no mistakes.’ (Pillay et al., Citation2008) Perhaps because of the perception that less human judgement is required, Coelho and Sere found students with the view that if the distance measured lines up with the marking on the ruler, then it is a more precise measurement. On the other hand, they described other students who thought that the true value can be obtained in a measurement that is sufficiently COARSE, again, perhaps, because less human judgement is involved. (Coelho & Sere, Citation1998)

Still other students have been found who, despite taking multiple measurements, choose the first measurement (Lubben & Millar, Citation1996; Sere et al., Citation1993), for example, ‘because the equipment was clean’. (Lubben & Millar, Citation1996) This raises the question of why the student bothered to take multiple trials at all, with the likely answer being that students are taking multiple trials because they know that more measurements lead to a better result, but they are unsure about what exactly is better. (Lubben & Millar, Citation1994; Sere et al., Citation1993) One student, for example, explained the reason for repeating measurements to be ‘you are meant to do an experiment three times’ without feeling a need for further elaboration. (Lubben & Millar, Citation1996)

One reason to take multiple data points is to calculate an arithmetic mean. This is advantageous, as the arithmetic mean is generally more representative of the object being measured than an individual data point. If the object were to be measured an additional time, that measurement is more likely to be close to the mean than to, for example, a measurement that happens to have repeated. Researchers have found students who understand that it is important to calculate an arithmetic mean, but who do not understand why. For example, some students say that an experiment should be repeated with different parameters before an average is taken. Lubben and Millar write that this ‘seems to arise from the student’s attempt to reconcile the taught routine of repeating measurements to obtain a spread of values which can then be averaged, with the belief that repeating exactly the same measurement will yield exactly the same result.’ (Lubben & Millar, Citation1996) Using the ontologies framework, it seems likely that these students, despite being aware of the importance of calculating an arithmetic mean, have failed to make sense of the tool because of their assignment of measurement into the ‘deterministic’ ontology. If taking a measurement predictably yields the desired information about the object being measured, then it makes no sense to utilise the arithmetic mean, as it is a tool designed to minimise the effects of randomness. Similar symptoms of students trying to memorise protocol without understanding are the misuse of the word ‘average’ (for example, to mean choosing a value that is repeated twice) and describing the correct procedure to find the arithmetic mean, but then abandoning that approach when presented with actual data that includes recurring values. (Lubben et al., Citation2001; Lubben & Millar, Citation1994) Finally, there are students who calculate the mean but then choose the data point closest to that mean to report their value, as demonstrated in the following quote: ‘They should not take the average because this does not refer to a result obtained from one of the experiments.’ (Buffler et al., Citation2001) Students seem to have a strong attachment to the idea that the true value is one of the measurements. (Ibrahim, Citation2006; Leach et al., Citation1998)
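
The claim that the arithmetic mean is more representative than an individual data point can be illustrated numerically. The Python sketch below is our own and rests on stated assumptions: readings are modelled as normally distributed about a hypothetical true value, and the function name and all parameter values are invented for illustration. It repeats a five-reading experiment many times and compares how much the first reading scatters with how much the mean of the five readings scatters.

```python
import random
import statistics


def spread_of_single_vs_mean(n_readings=5, n_experiments=2000,
                             true_value=10.0, noise_sd=1.0, seed=0):
    """Compare the scatter of single readings with the scatter of means.

    Returns (standard deviation of the first reading across experiments,
             standard deviation of the mean across experiments).
    """
    rng = random.Random(seed)
    first_readings = []
    means = []
    for _ in range(n_experiments):
        readings = [rng.gauss(true_value, noise_sd) for _ in range(n_readings)]
        first_readings.append(readings[0])
        means.append(statistics.fmean(readings))
    return statistics.stdev(first_readings), statistics.stdev(means)


single_sd, mean_sd = spread_of_single_vs_mean()
print(f"scatter of a single reading:      {single_sd:.3f}")
print(f"scatter of the mean of 5 readings: {mean_sd:.3f}")
```

Under these assumptions the mean scatters noticeably less than a single reading (by a factor of roughly the square root of the number of readings), which is one way of stating why the mean, rather than any one of the recorded values, is reported.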

For some students, the importance of taking multiple measurements and taking an arithmetic mean is context-dependent. For example, although a student might not think to repeat a distance measurement, they might do so for time measurements (Allie et al., Citation1998; Heinicke, Citation2012) with an argument like ‘it is tricky to measure time accurately with a stopwatch, so I reckon that you should take more than 2 readings. More readings would eliminate human error in stopping and starting the stopwatch when the average is taken.’ (Allie et al., Citation1998) Although these students do seem to have an understanding of the benefit of the arithmetic mean, their decision to only repeat measurements where ‘human error’ is salient reflects the view, again, that a single measurement should (assuming all goes well) give you the true value of the object being measured.

Using data spread

Consistent with the naïve ideas just discussed, research has found students who ignore data spread after taking multiple trials and, when asked to provide a confidence interval, report only the precision of the measuring instrument. Some students will hence report this ‘confidence interval’ for each trial. (Sere et al., Citation1993) When given two sets of data with identical means but different spreads and asked which set of data is better, many students answer that they are equally good because the averages are the same (e.g. Lubben & Millar, Citation1994) or because ‘results are never wrong’. (Rollnick et al., Citation2001) This latter view in particular is consistent with assignment of measurement into the ‘deterministic’ ontology. In addition, some students report that a larger spread represents a better measurement set (Lubben & Millar, Citation1994; Sere et al., Citation2001), which is consistent with the idea discussed above that different parameters should be used before taking the mean. The naïve idea that both sets are equally untrustworthy because ‘every time you do it you will get a different answer’ (Lubben & Millar, Citation1996) has also been documented. This may reflect students assigning measurement into the ‘random’ ontology. Namely, they might be thinking ‘when I take a measurement it is random what value I get. Things that are random are not predictable. Hence, neither set of data helps me in predicting what the next measurement will yield/what the true property of the object being measured is.’
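
To make the comparison task concrete, the following Python sketch summarises two data sets that share a mean but differ in spread. It is our own illustration: the data values are invented, not taken from any of the cited studies, and the helper name `summarise` is hypothetical.

```python
import math
import statistics


def summarise(data):
    """Return (mean, sample standard deviation, standard error of the mean)."""
    mean = statistics.fmean(data)
    sd = statistics.stdev(data)           # uses N - 1 in the denominator
    sem = sd / math.sqrt(len(data))
    return mean, sd, sem


# Hypothetical readings: both sets average to 10.0, but set_b scatters more.
set_a = [9.8, 10.0, 10.1, 9.9, 10.2]
set_b = [8.5, 11.4, 10.0, 9.1, 11.0]

for name, data in (("A", set_a), ("B", set_b)):
    mean, sd, sem = summarise(data)
    print(f"set {name}: mean={mean:.2f}  spread (sd)={sd:.2f}  "
          f"standard error of mean={sem:.2f}")
```

Although the two means coincide, the standard error of the mean for the second set is several times larger, so the second set constrains the quantity being measured far less tightly. This is the information lost when only the averages are compared.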

Just as students seem capable of taking multiple measurements and calculating an arithmetic mean without understanding why, so too can they make decisions involving data spread with a lack of conceptual understanding. When presented with the two data sets just described (same means, different spreads), many students report correctly that the set with the smaller spread is better. In spite of that, many of these students do NOT answer that way when the means of the two sets are different. Instead, they now ignore the scatter of data and express dependence on a ‘true value’ or the uncertainty of individual measurements. (Heinicke, Citation2012; Lubben & Millar, Citation1996; Sere et al., Citation2001) Similarly, when asked whether two data sets with different means but overlapping spreads agree with each other or not, many of these students ignore data scatter and just use the arithmetic means (Abbott, Citation2003; Allie et al., Citation1998; Cauzinille-Marmeche et al., Citation1985; Davidowitz et al., Citation2001; Deardorff, Citation2001; Ibrahim, Citation2006; Leach et al., Citation1998; Lubben et al., Citation2001; Lubben & Millar, Citation1996; Millar & Lubben, Citation1996; Pillay et al., Citation2008; Rollnick et al., Citation2001; Sere et al., Citation2001, Citation1993) or individual data points. (Allie et al., Citation1998; Buffler et al., Citation2001; Davidowitz et al., Citation2001; Deardorff, Citation2001; Heinicke, Citation2012; Ibrahim, Citation2006; Rollnick et al., Citation2001; Sere et al., Citation2001; Volkwyn et al., Citation2008) This suggests that the correct answer on the original question with identical means may have come about for some reason other than deep conceptual understanding about spread. Students who do consider data spread in deciding whether two sets of data agree with each other or not will often say that the data agree if the size of the spread is the same, whether there is overlap or not. (Allie et al., Citation1998; Buffler et al., Citation2001; Coelho & Sere, Citation1998; Deardorff, Citation2001; Kung, Citation2005) Finally, even when students do compare by looking at data overlap, many do not consider the extent of overlap (Cauzinille-Marmeche et al., Citation1985), for example, in comparison to data spread within a single set of data. (Masnick & Morris, Citation2002) Regarding combining two sets of data with different spreads, research has documented the attitude that ‘an average of numbers, obtained with different uncertainties, would have no sense.’ (Sere et al., Citation1993) We can interpret these naïve ideas using the ontologies framework. Although we cannot predict exactly what value a measurement will yield, the spread of values obtained allows us to be more confident that the next measurement will lie somewhere within that spread. In this sense, we can predict the extent of the randomness inherent in measurement. It seems plausible that students (even those who make correct decisions regarding spread in some situations!) struggle with this conceptually because, if they are assigning measurement into the ‘deterministic’ ontology, then the idea of randomness inherent in the measurement is not sensible. Conversely, if they are assigning measurement into the ‘random’ ontology, then the benefits of data spread would not be recognisable.
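
One way an expert might decide whether two data sets agree is sketched below in Python. This is our own illustration and not a procedure prescribed by the reviewed studies. It assumes a common convention: two means are taken to agree if they differ by no more than a chosen multiple (here 2, the hypothetical `coverage_factor`) of their combined standard uncertainty. The data values and function names are likewise invented.

```python
import math
import statistics


def mean_and_sem(data):
    """Return the mean and the standard error of the mean of a data set."""
    sem = statistics.stdev(data) / math.sqrt(len(data))
    return statistics.fmean(data), sem


def agree(data_1, data_2, coverage_factor=2.0):
    """Judge agreement by comparing the difference of the means with the
    combined standard uncertainty (an assumed convention, not the only one)."""
    mean_1, sem_1 = mean_and_sem(data_1)
    mean_2, sem_2 = mean_and_sem(data_2)
    combined = math.hypot(sem_1, sem_2)   # uncertainties added in quadrature
    return abs(mean_1 - mean_2) <= coverage_factor * combined


# Hypothetical readings from three groups measuring the same quantity.
group_1 = [10.1, 9.7, 10.4, 9.9, 10.3]
group_2 = [10.4, 10.0, 10.6, 10.2, 10.5]   # different mean, overlapping spread
group_3 = [11.0, 10.8, 11.1, 10.9, 11.2]   # different mean, no overlap

print("group 1 vs group 2 agree:", agree(group_1, group_2))
print("group 1 vs group 3 agree:", agree(group_1, group_3))
```

The point of the sketch is that the decision depends on both the difference between the means and the scatter within each set. Comparing only the means, only individual points, or only the sizes of the spreads discards part of that information.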

More advanced statistics

As discussed above, many researchers have documented a sense of carrying out learned procedures without an understanding of the meaning behind them. (Deardorff, Citation2001; Evangelinos et al., Citation2002; Lippmann, Citation2003; Lubben & Millar, Citation1996; Millar et al., Citation1994; Sere et al., Citation1993; Volkwyn et al., Citation2008) This issue of lacking conceptual knowledge behind the procedural tools used is present with more advanced tools as well. (Abbott, Citation2003; Garratt et al., Citation2010; Holmes, Citation2011; Sere et al., Citation1993) For example, the mistake of trying to calculate standard deviation by putting N-1 in the numerator has been documented. This mistake suggests ‘that the student did not understand that the formula expresses that increasing the number of measurements improves the precision.’ (Sere et al., Citation1993) Abbott discussed students who repeatedly measured the mass of a paperclip (approximately 0.3 g) on a scale with a 0.1 g resolution in order to calculate a standard deviation, despite recording only identical values. (Abbott, Citation2003)
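
For reference, the conventional calculations can be written out explicitly. The Python sketch below is our own illustration, assuming the standard textbook definitions: the sample standard deviation has N-1 in the denominator, and the standard error of the mean divides that result by the square root of N. The function names and the example readings are hypothetical.

```python
import math
import statistics


def sample_std(data):
    """Sample standard deviation, with N - 1 in the denominator."""
    n = len(data)
    mean = sum(data) / n
    return math.sqrt(sum((x - mean) ** 2 for x in data) / (n - 1))


def standard_error(data):
    """Standard error of the mean: sample standard deviation over sqrt(N)."""
    return sample_std(data) / math.sqrt(len(data))


# Hypothetical scattered readings.
readings = [2.0, 4.0, 4.0, 5.0]
print("hand-written formula:", sample_std(readings))
print("statistics.stdev:    ", statistics.stdev(readings))
print("standard error:      ", standard_error(readings))

# Identical readings, as when a light object is weighed on a coarse scale:
# the computed scatter is zero, although the scale's resolution still limits
# how well the mass is known.
paperclip_g = [0.3, 0.3, 0.3, 0.3, 0.3]
print("paperclip sd:        ", sample_std(paperclip_g))
```

With N-1 in the denominator and a further division by the square root of N, the uncertainty of the mean falls as more readings are taken. In the identical-readings case the formula returns zero, which says nothing about the instrument's resolution and so is not a meaningful estimate of the uncertainty.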

The confidence interval is the range that contains the value to be measured with a certain probability, which is specified by the corresponding confidence level. For example, for 100 tosses of a fair penny, the number of heads will lie within the interval of 40–60 with a confidence level of approximately 95%. Research has documented students having trouble understanding confidence intervals. (Garratt et al., Citation2010; Goedhart & Verdonk, Citation1991) For example, some students think that the real result MUST lie within one standard deviation of the mean value (in fact, for normally distributed values, the confidence level is only about 68%). (Goedhart & Verdonk, Citation1991) This view that the standard deviation sets bounds within which the true value must lie is consistent with students assigning measurement into the ‘deterministic’ ontology. With such a viewpoint, the idea that the true value need not lie within those bounds is not sensible, because it implies randomness.
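
The arithmetic behind such statements can be checked directly. The following Python sketch is our own illustration rather than part of the reviewed studies, and its function names are hypothetical. It computes the exact probability that a fair coin lands heads a number of times within a given range, and the fraction of normally distributed values that lie within k standard deviations of the mean.

```python
import math


def prob_heads_between(lo, hi, n_tosses=100):
    """Exact probability that a fair coin shows between lo and hi heads
    (inclusive) in n_tosses tosses, from the binomial distribution."""
    favourable = sum(math.comb(n_tosses, k) for k in range(lo, hi + 1))
    return favourable / 2 ** n_tosses


def normal_coverage(k):
    """Probability that a normally distributed value lies within
    k standard deviations of its mean."""
    return math.erf(k / math.sqrt(2))


# A wide range and, for comparison, a narrower one.
for lo, hi in ((40, 60), (46, 54)):
    print(f"P({lo} <= heads <= {hi}) = {prob_heads_between(lo, hi):.3f}")

for k in (1, 2, 3):
    print(f"within {k} standard deviation(s): {normal_coverage(k):.3f}")
```

Under these assumptions, the range 40–60 heads covers roughly 96% of outcomes, and one standard deviation of a normal distribution covers roughly 68%. In neither case is the value guaranteed to fall inside the stated bounds.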