ABSTRACT

There remain barriers to securing robust and complete datasets from counselling embedded in Higher Education (HE). This study aimed to provide the first step towards developing a national dataset of student counselling outcomes drawn from differing outcome measures, platforms and reporting on all clients. Data from four counselling services using two clinical outcome measures and two platforms were pooled and analysed. Students presented to counselling with low levels of wellbeing and functioning, and high levels of depression, anxiety, academic distress and trauma. Counselling was particularly effective for improving depression, anxiety, wellbeing, hostility, social anxiety and academic distress. Results demonstrate value in pooling complete data from HE counselling services and we argue for developing a national dataset of university counselling data.

Introduction

Concerns about the annual growth in students presenting to counselling with complex needs have been widely discussed over the past two decades and are ongoing (Auerbach et al., 2016; Royal College of Psychiatrists, 2011). Research suggests that this rise in numbers is a growing, global problem, placing additional demands on student mental health services and further strain on both academic and therapeutic staff (Advance HE, 2018; Byrom, 2015; Thorley, 2017). Universities have been under pressure to respond and to demonstrate the effectiveness of their mental health services (Randall & Bewick, 2016). However, accurately measuring student mental health is challenging. Counselling data can be difficult to access, and services have not necessarily been developed with data usage as an integral component of their systems (Barden & Caleb, 2019; Broglia, Millings, & Barkham, 2017). Efforts to collect data from the general student population have, at times, outweighed attention to students accessing counselling, and have produced a vast array of survey data. For example, Trendence UK (2019) found that 45.5% of students reported “mental health” as a main concern, while another survey of 38,000 students found that 20% reported a diagnosed mental health problem (The Insight Network & Dig-In, 2019). Taken together, survey findings suggest a high level of emotional distress and increasing experience of mental health problems among university students (Equality Challenge Unit, 2017).

Though surveys are necessary for the sector and provide useful observations, they are subject to limitations. Barkham et al. (2019) emphasised that surveys tend to use single-item questions with inconsistent terminology, which leads to ambiguous results and prevents comparison with other reports. Surveys also typically capture small samples of self-selecting students who are not necessarily representative of the wider student population (Sills & Song, 2002). Even surveys with a large overall sample can have limited generalisability at the institution level, for example when only a few students from each institution participate. The same issue arises for subgroups of students: data from one institution would not be large enough to explore the mental health of minority groups (e.g. postgraduate students, students with a disability, or black, Asian and minority ethnic/BAME students), and attempting to do so would risk identifying individuals (Cokley, Hall-Clark, & Hicks, 2011). It is therefore important to obtain a sufficient sample from each institution and to generate larger samples of minority groups in order to reach meaningful and representative conclusions. These considerations led Barkham et al. (2019) to call for a more coordinated approach to data collection and for a minimum national dataset for higher education (HE) services. Adopting such a minimum dataset would yield high-quality data from multiple institutions.

The benefits of pooling data have been demonstrated in the UK’s National Health Service (NHS) by the Improving Access to Psychological Therapies (IAPT) service, which collects routine patient data and uses these data to improve services (NHS Digital, 2019). A similar initiative in the HE sector would allow a more coordinated approach between HE mental health services and the NHS. This, in turn, would support government aims to improve access to psychological therapies for young people (NHS, 2019) and would improve referral pathways between services. Pooling data from student counselling services therefore appears to be a credible way of building an evidence base to guide policies and strategies for improving student mental health. It is not without challenges, however, perhaps the biggest being to ensure that data are collected on all students referred to counselling services rather than only on those who complete the intervention. Such datasets would provide representative samples to shape policy decisions on inclusive services and interventions (Center for Collegiate Mental Health, 2019), and analyses of specific dimensions within outcome measures (e.g. anxiety) would enable profiling of students’ presenting issues and of how these change over the course of counselling. Such analyses would shift the focus from the question “Is counselling effective?” to “What does counselling effectively address?”

The culture and practice of collecting routine data vary across student counselling services; although this is not unique to HE, such variability hampers the analysis of outcomes (Boswell, Kraus, Miller, & Lambert, 2015). It is important to recognise the reasons for this variability and to acknowledge that institutions adapt their therapeutic offer in response to students’ needs and available funding. Using measures requires adequate resources and training, as well as time and space to complete them. Even with sufficient resources, services must decide which measure to use, recognising that some measures do not tap into the unique experience and context of students (Broglia et al., 2017).

A survey of 113 higher education institutions (HEIs) found that the most common measure used in HE is the Clinical Outcomes in Routine Evaluation-Outcome Measure (CORE-OM; Evans et al., 2002) or its shorter 10-item version (CORE-10; Barkham et al., 2013), with a minority of services using the Patient Health Questionnaire (PHQ-9; Spitzer, Kroenke, & Williams, 1999) or the Counseling Center Assessment of Psychological Symptoms (CCAPS; Locke et al., 2011) (see Broglia, Millings, & Barkham, 2018). Multiple computer platforms are also available to administer the forms, and some services may only have the option of using paper forms. This varied use of outcome measures and platforms reflects a history of choice and independence in UK services, and it raises a significant question: is it feasible to pool data collected with different measures and platforms? There is value in establishing procedures for doing so, so that institutions can continue to make these decisions while being part of a larger, coordinated national effort.

Despite the challenges associated with creating a national dataset, the Center for Collegiate Mental Health (CCMH) in the United States (US) has shown that this can be achieved (Castonguay, Locke, & Hayes, 2011). The CCMH currently collects routine clinical data across 550 college and university counselling services and has produced a standardised database of student mental health data, making it possible to anticipate outcomes that might be observed in a UK national dataset. However, the CCMH project uses a single outcome measure designed specifically for this population, namely the CCAPS (Locke et al., 2011). Whilst adopting a single common outcome measure has advantages for implementation, it leaves individual services no choice of preferred measure. It can be argued that adoption of outcome measures would be greater if services could choose from a selection of bona fide measures, provided it can be shown that data from differing measures collected through different IT platforms can be pooled and that the relationship between the measures is understood (i.e. that there is a common currency for agreeing on reliable improvement).

Key findings from CCMH reports show that counselling services are effective in reducing mental health distress, that depression and anxiety are the most common student concerns, and that student uptake of counselling has been increasing (CCMH, 2019). Consistent with these findings, Connell, Barkham, and Mellor-Clark (2008) found UK student counselling services to be effective, with 70% of students showing reliable improvement on the CORE-OM post-counselling. However, that study was based only on students who completed counselling. In addition, UK students have more recently been found to experience higher levels of distress than US students, suggesting that UK students may delay entering counselling until their needs are more severe (Broglia et al., 2018). These UK studies provide valuable information on the similarities and differences between UK and US students, but a UK national dataset would enable regular, detailed comparisons, including assessment of trends in student mental health over time.

In light of this context, the overall aim of the current study was to provide the first step towards informing the development of a national dataset of student counselling outcomes drawn from differing outcome measures and different IT platforms and, most importantly, reporting on all students in receipt of counselling. Specifically, it aimed to (1) profile student mental health issues and presenting conditions from a small cluster of HE counselling services that used different outcome measures; and (2) determine the effectiveness of in-house student counselling based on the total sample of students in the service.

Method

Design and setting

Data were pooled from four university counselling services that were part of a research–practice consortium exploring Student Counselling Outcomes, Research and Evaluation (SCORE; see http://www.scoreconsortium.group.shef.ac.uk/). The universities represented small and large city, town and rural campuses across different regions. The student population registered at each university ranged from 11,000 to 28,000, and approximately 8%–10% of the student population attended the counselling service. Each institution contributed retrospective, pseudonymised service data from the 2017/18 academic year for the purpose of service evaluation, as detailed in the service client contract. Ethical approval was received from each participating institution to transfer pseudonymised data to the research team at the British Association for Counselling and Psychotherapy (BACP). Service data were merged to enable analysis of the aggregate data. This work took place over approximately 12 months during 2018–2019.

Participants

Participants were 5,568 students registered at one of four UK universities, with a mean age of 25 years (SD = 6.42, min = 17, max = 76). Table 1 provides a breakdown of sample size and percentages across students’ demographic characteristics recorded upon registration, for students who were referred to and attended counselling and for students referred to alternative services (e.g. groupwork or long-term therapy). Across services, students referred to counselling received short-term, one-to-one, face-to-face in-house counselling. Referral data collected by services varied, so the sub-sample referred to alternative services includes any student who provided demographic information during registration but had no clinical outcome data. Across all samples, most students were studying in their home/birth country, were undergraduates, were female, described their ethnicity as white, and either did not declare a disability or were not registered with the disability service.

Table 1. Breakdown of sample size and percentages across students’ demographic characteristics recorded at the initial assessment for counselling.

Counselling intervention

Services were embedded in UK universities, and counselling was delivered by professional staff with training and experience of working in the HE context. Staff were predominantly accredited by the British Association for Counselling and Psychotherapy or the United Kingdom Council for Psychotherapy. Across the services, staff were trained in humanistic, cognitive behavioural and psychodynamic therapies, and were integrative in their thinking. The data included in the present study represent clinical outcomes from short-term, ongoing, face-to-face counselling in which students received a minimum of two counselling sessions.

Measures

The four institutions used clinical outcome measures within their counselling services as part of routine practice. Two services used the Clinical Outcomes in Routine Evaluation-Outcome Measure (CORE-OM; Evans et al., 2002) and two used the Counseling Center Assessment of Psychological Symptoms (CCAPS; Locke et al., 2011). The measures were administered electronically using computer systems commonly used by university counselling services: two services used CORE Net (http://www.coreims.co.uk) and two used the Titanium Schedule® (http://www.titaniumschedule.com). All services administered measures when clients first visited the service (i.e. at initial assessment or triage) and at the start of every counselling session.

Intake and demographic information

Services collected different intake (e.g. presenting issues) and demographic (e.g. gender) data and there were further differences between the categories used within each data field. The intake data that were sufficiently similar across the services included: home status (e.g. home/EU), faculty (e.g. humanities) and level of study (e.g. undergraduate). The only demographic data fields that could be compared across the services were: gender, ethnicity, and disability status.
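Because the services coded intake and demographic fields differently, pooling required each service’s categories to be mapped onto a shared set before analysis. The sketch below shows one way this could be done in Python; the service names, raw labels and mapping tables are hypothetical placeholders, not the categories the four services actually used. Unmapped or blank values are deliberately treated as missing rather than guessed, so that missing-data counts remain honest.

```python
from typing import Dict, List, Optional

# Hypothetical per-service coding schemes for "level of study".
# Real mapping tables would have to be agreed with each service.
LEVEL_MAPS: Dict[str, Dict[str, str]] = {
    "service_a": {"UG": "undergraduate", "PGT": "postgraduate", "PGR": "postgraduate"},
    "service_b": {
        "Undergraduate": "undergraduate",
        "Postgraduate taught": "postgraduate",
        "Postgraduate research": "postgraduate",
    },
}


def harmonise_level(service: str, raw: Optional[str]) -> Optional[str]:
    """Map a service-specific label to a shared category; None if blank or unmapped."""
    if raw is None or not raw.strip():
        return None
    return LEVEL_MAPS.get(service, {}).get(raw.strip())


def pool_records(records: List[dict]) -> List[dict]:
    """Return pooled records that carry only the harmonised fields."""
    return [
        {
            "client_id": r["client_id"],  # assumed already pseudonymised by the service
            "service": r["service"],
            "level": harmonise_level(r["service"], r.get("level")),
        }
        for r in records
    ]


def percent_missing(pooled: List[dict], field: str) -> float:
    """Percentage of pooled records with no usable value for `field`."""
    missing = sum(1 for r in pooled if r[field] is None)
    return 100 * missing / len(pooled)
```

Keeping the mapping tables as data rather than code means a new service could join the pool by supplying its own table, which fits the aim of letting services retain their existing systems.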

CORE-OM (Clinical Outcomes in Routine Evaluation-Outcome Measure)/CORE-10

The CORE-OM is a 34-item measure of psychological functioning (Evans et al., 2002) that is completed at the start and end of therapy (pre–post). It asks about clients’ experiences over the previous week, with specific attention to depression, anxiety, physical problems, social relationships, close relationships, general functioning, wellbeing, trauma, and risk to self or others. Items are rated on a 5-point Likert scale (0 = not at all, 4 = most or all of the time), with higher scores indicating greater severity. Scores are reported as clinical scores (the mean item score multiplied by 10), which range from 0 to 40, with a clinical cut-off of 10. Psychometrically, the measure has strong internal reliability, convergent validity, test–retest stability and sensitivity to change (for a summary, see Barkham et al., 2010). The CORE-OM has been used in the general population and has been validated in student populations (Connell et al., 2008). Services using the CORE-OM also administered the shorter 10-item version, the CORE-10, at the start of every session (Barkham et al., 2013). When a client had an unplanned ending, responses from the last available CORE-10 were recorded as the post-counselling measure.
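The 0–40 range follows from reporting CORE scores as clinical scores, i.e. the mean item score multiplied by 10; for the CORE-10 this is the same as the simple item total. A minimal Python sketch of that scoring follows. It assumes complete responses (the CORE system’s rules for prorating missing items are not covered) and assumes that a score at or above the cut-off counts as clinical.

```python
from statistics import mean
from typing import Sequence

CORE_OM_ITEMS = 34
CORE_10_ITEMS = 10
CORE_OM_CUTOFF = 10.0  # clinical cut-off for the CORE-OM given in the text


def core_clinical_score(items: Sequence[int], expected_items: int) -> float:
    """Clinical score = mean item score x 10, giving a 0-40 range.

    Items are rated 0 (not at all) to 4 (most or all of the time).
    Assumes complete data; prorating of missing items is not handled.
    """
    if len(items) != expected_items:
        raise ValueError(f"expected {expected_items} items, got {len(items)}")
    if any(not 0 <= x <= 4 for x in items):
        raise ValueError("CORE items must be rated 0-4")
    return float(mean(items) * 10)


def is_clinical(score: float, cutoff: float = CORE_OM_CUTOFF) -> bool:
    """Assumption: a score at or above the cut-off counts as clinical."""
    return score >= cutoff
```

For example, a CORE-OM on which every item is rated 1 gives a clinical score of 10.0, exactly at the cut-off, while a CORE-10 with five items rated 2 and five rated 1 gives 15.0, the same as its item total.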

CCAPS (Counseling Center Assessment of Psychological Symptoms)

The CCAPS has a 62-item and a 34-item version that can be used interchangeably to measure psychological experiences specific to the student population (Locke et al., 2011). The CCAPS-62 is typically completed at the start of counselling and the shorter CCAPS-34 at the start of every session thereafter. Items refer to the previous two weeks and monitor symptom change across depression, generalised anxiety, social anxiety, academic distress, eating concerns, hostility, substance use, family distress and suicidal ideation. The CCAPS-34 monitors change in the same areas, except that family distress is omitted and substance use is replaced by alcohol use. Items are rated on a 5-point Likert scale (0 = not at all like me, 4 = extremely like me), with higher scores indicating greater severity. Clinical cut-offs within each scale can be used to classify low and elevated clinical severity for each domain, as well as for an overall mean distress score. The CCAPS is a psychometrically robust measure that correlates with domain-specific measures of alcohol use, depression, anxiety, social phobia and eating concerns (see Locke et al., 2012). It has also been used with UK student counselling samples and has been validated against the CORE-10 (see Broglia et al., 2017, 2018).

Collating counselling service data

Feasibility work involved identifying the skills and procedures needed to access and process data from services that used different measures and IT platforms and had varying needs and resources, in order to inform the development of a national dataset of student counselling outcomes. It also involved identifying overlaps and inconsistencies in the intake and demographic data collected routinely across the pilot services.

Final dataset

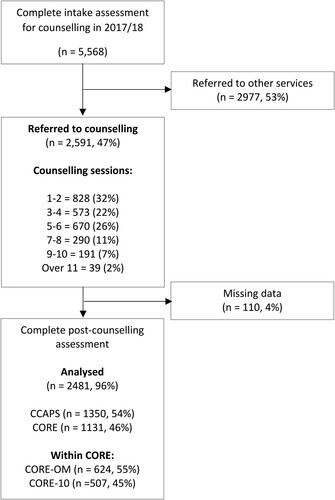

The pooled sample comprised 5,568 clients from four university student counselling services (per-service min = 652, max = 2,391); the breakdown of data processing is provided in Figure 1. Of the 5,568 clients who completed the initial assessment, 2,591 (47%) were referred to counselling, and 2,481 (96%) of these had complete pre- and post-counselling data on either the CCAPS (1,350, 54%) or the CORE-OM/10 (1,131, 46%). Within the CORE-OM/10 data, 624 (55%) students had planned endings and completed the end-of-therapy CORE-OM, whereas 507 (45%) had unplanned endings, for whom data from the last available CORE-10 were used. Intake and demographic data were available for 3,569–5,040 (64%–91%) of clients across the data fields; the largest amount of missing data due to service inconsistencies, for any demographic field, was for ethnicity (missing = 528, 9.5%).
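The rule used for the CORE services (end-of-therapy CORE-OM for planned endings, otherwise the last available CORE-10) can be expressed as a small selection function. This is a sketch under assumptions: the `Administration` record, the convention that session 0 is the initial assessment, and the inference that a client without an end-of-therapy CORE-OM had an unplanned ending are illustrative, not the services’ actual data model.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Administration:
    session: int   # hypothetical convention: 0 = initial assessment/triage
    measure: str   # "CORE-OM" or "CORE-10"
    score: float   # clinical score (0-40)


def select_pre_post(
    admins: List[Administration],
) -> Optional[Tuple[Administration, Administration, str]]:
    """Return (pre, post, ending) for one client, or None if pre-post data are incomplete.

    pre  = CORE-OM at the initial assessment
    post = latest CORE-OM after intake (treated as a planned ending) if one exists,
           otherwise the latest CORE-10 after intake (treated as an unplanned ending)
    """
    ordered = sorted(admins, key=lambda a: a.session)
    intake = [a for a in ordered if a.session == 0 and a.measure == "CORE-OM"]
    if not intake:
        return None
    later = [a for a in ordered if a.session > 0]
    end_core_om = [a for a in later if a.measure == "CORE-OM"]
    if end_core_om:
        return intake[0], end_core_om[-1], "planned"
    core_10 = [a for a in later if a.measure == "CORE-10"]
    if core_10:
        return intake[0], core_10[-1], "unplanned"
    return None
```

Clients for whom the function returns None would correspond to those referred to counselling but lacking complete pre–post data.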

Primary outcomes: mental health profiles and outcomes

The primary outcomes concerned the psychological factors in the final pooled dataset comprising: (1) average waiting times and counselling duration; (2) baseline mental health profiles of students accessing counselling; and (3) outcomes of student counselling determined by indices of reliable improvement and also by reliable and clinically significant improvement (Jacobson & Truax, 1999).

Secondary outcomes: feasibility of pooling service data across different measures and platforms

The secondary outcomes were a series of factors relating to the feasibility of accessing, processing and pooling data collected routinely from university counselling services. The feasibility outcomes were: (1) use of intake and demographic information, (2) use of outcome measures, and (3) data pooling procedures and challenges.

Analysis

Analyses were performed on the aggregate service data using the SPSS Statistics package (version 25). Descriptive statistics (e.g. mean, standard deviation) were computed for the baseline CORE-OM and CCAPS-62 subscales to characterise students’ mental health, psychological profile and symptom severity. Descriptive statistics were also used to identify the average number of working days students waited to receive counselling and the overall duration of counselling. Service effectiveness was determined by calculating the pre–post effect size (ES) between the clinical measure completed at the start of counselling (pre) and at the last available counselling session (post). For the CORE-OM, the last available CORE-10 was used if an end-of-therapy CORE-OM was not available. For ESs and simple pre–post change, we combined CORE-OM and CORE-10 scores without adjustment. By contrast, when we applied categorical criteria to the extent of change (severity bandings, or reliable and clinically significant improvement), we used adjusted criteria to account for the differential responsiveness of the CORE-10, whose 10 items are more frequently endorsed than the full set of 34 CORE-OM items. For the CORE-OM, a change of 5 points defined reliable improvement, and the more stringent criterion of reliable and clinically significant improvement required both a change of 5 points and a move from above the cut-off of 10 pre-counselling to below it post-counselling. These values have been used in previous publications (see Connell, Barkham, & Mellor-Clark, 2007). Where the CORE-10 provided the last available data, the equivalent values were 6 for reliable change and a cut-off of 11 (Barkham et al., 2013). The reliable improvement criterion for the CCAPS Distress Index was 0.8; no equivalent cut-off score was applied. The difference between pre- and post-counselling clinical scores was calculated for all clients, irrespective of the severity of their intake scores, to determine reliable improvement.
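The change criteria described above can be gathered into a single classifier. This Python sketch applies the thresholds stated in this section: 5 points and a cut-off of 10 for the CORE-OM; 6 points and a cut-off of 11 where the CORE-10 supplied the post-counselling score; and 0.8 with no cut-off for the CCAPS Distress Index. It assumes that higher scores mean greater severity, that a change exactly equal to the criterion counts as reliable, and that clinically significant improvement requires a pre-counselling score at or above the cut-off and a post-counselling score below it. These boundary conventions and the measure key names are assumptions, not taken from the source.

```python
from dataclasses import dataclass
from typing import Optional

EPS = 1e-9  # tolerance so that, e.g., 2.3 - 1.5 is not lost to floating-point error


@dataclass(frozen=True)
class Criteria:
    reliable_change: float
    cutoff: Optional[float]  # None where no cut-off is applied


CRITERIA = {
    "CORE-OM": Criteria(reliable_change=5.0, cutoff=10.0),
    "CORE-10": Criteria(reliable_change=6.0, cutoff=11.0),  # adjusted criteria
    "CCAPS-DI": Criteria(reliable_change=0.8, cutoff=None),
}


def classify_change(pre: float, post: float, measure: str) -> str:
    """Classify pre-post change; `measure` names the instrument supplying the post score."""
    c = CRITERIA[measure]
    improvement = pre - post  # positive when severity falls
    if improvement >= c.reliable_change - EPS:
        if c.cutoff is not None and pre >= c.cutoff and post < c.cutoff:
            return "reliable and clinically significant improvement"
        return "reliable improvement only"
    if -improvement >= c.reliable_change - EPS:
        return "reliable deterioration"
    return "no reliable change"
```

Because the CCAPS Distress Index has no cut-off here, CCAPS clients can only be classed as showing reliable improvement, reliable deterioration or no reliable change.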

Results

Pathways to receipt of counselling

Attended counselling sessions and waiting times

Excluding the initial assessment or triage appointment, students across the sample attended an average of 4.46 counselling sessions (SD = 3.03, min = 1, max = 26). On average, students attended 2.6 more sessions at services using the CCAPS than at services using the CORE-OM/10 (CCAPS: mean = 5.9, SD = 2.9; CORE-OM/10: mean = 3.3, SD = 2.8). The largest group of students attended 1–2 sessions (32%) and the smallest attended 11 or more sessions (2%). The average duration of counselling from start to finish was 13.24 weeks (SD = 11.2, median = 10.1, mode = 5, min = 0, max = 84.3). Across the services, the median wait for assessment was 14 days (10 working days; SD = 28).
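Waiting times are reported in both calendar and working days; a wait of 14 calendar days spans exactly 10 working days when it covers two whole weeks. A minimal Python sketch of the working-day count follows. It assumes that Monday to Friday are working days, that the referral date is counted and the assessment date is not, and it ignores bank holidays and university closure days, which a real service calendar would need to exclude.

```python
from datetime import date, timedelta
from statistics import median
from typing import Iterable, Tuple


def working_days_between(start: date, end: date) -> int:
    """Count Monday-Friday days from `start` (inclusive) to `end` (exclusive).

    Bank holidays and closure days are not excluded in this sketch.
    """
    if end < start:
        raise ValueError("end date precedes start date")
    count = 0
    day = start
    while day < end:
        if day.weekday() < 5:  # Monday=0 ... Friday=4
            count += 1
        day += timedelta(days=1)
    return count


def median_wait(pairs: Iterable[Tuple[date, date]]) -> Tuple[float, float]:
    """Median wait in (calendar days, working days) over (referral, assessment) pairs."""
    pairs = list(pairs)
    calendar = [(end - start).days for start, end in pairs]
    working = [working_days_between(start, end) for start, end in pairs]
    return median(calendar), median(working)
```

For instance, a referral on Monday 1 October 2018 followed by an assessment on Monday 15 October 2018 is a 14-day wait, of which 10 are working days.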

Psychological profiles: intake

CORE-OM

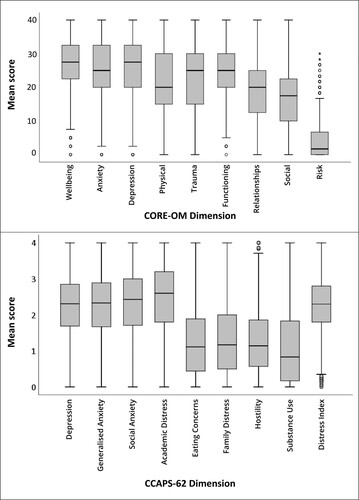

Before counselling, 99.7% of students scored above the CORE-OM clinical cut-off. Table 2 shows that pre-counselling distress on the CORE-OM was highest for wellbeing and lowest for risk. Scores on the CORE-OM fell into two symptom clusters, with wellbeing, depression, anxiety, functioning and trauma forming the higher cluster (scores above 20).

Table 2. Means together with their ranks and SDs of the scores at the start and end of counselling for CORE-OM/10 and CCAPS-62/34.

CCAPS-62

Students’ pre-counselling scores on the CCAPS-62 were the highest for academic distress and the lowest for substance use (). further shows two clusters on the CCAPS-62 with higher academic distress, social anxiety, generalised anxiety and depression. Combined observations across the CORE-OM and CCAPS-62 show that students' scores were noticeably elevated on wellbeing, functioning, academic distress, general anxiety, social anxiety and depression.

Psychological profiles: improvement

Table 2 presents the means (SDs) and ranks of pre- and post-counselling scores for all clients, together with the pre–post ESs.

Effect sizes

Inspection of Table 2 shows that, for all students, the ESs for CORE and CCAPS were 0.87 and 0.65 respectively, indicating medium-to-large effects. Importantly, these data include clients who had unplanned endings. The equivalent ESs for students above clinical threshold at intake were 1.12 and 1.47 for CORE and CCAPS respectively. Both values denote large effects, but it is worth noting that the extent of change was virtually identical on the two measures: 5.8 on CORE and 0.59 on CCAPS, which is equivalent to 5.9 on the same scaling. The difference in ESs is therefore a function of the smaller SD on CCAPS (0.40, i.e. 4.0 on the same scaling) compared with 5.2 for CORE. Data for unplanned endings were identifiable in the CORE dataset and showed poorer outcomes, with ESs of 0.49 and 0.67 for all clients and for those scoring above clinical threshold, respectively. Planned and unplanned endings could not be differentiated in the CCAPS data.
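The point that the two measures produced similar change but different ESs can be checked arithmetically. Assuming the ES is the mean pre–post change divided by a standard deviation (here the SDs quoted above; which SD was used is not stated, so treat this as an assumption), the figures reproduce the reported ESs, and rescaling the CCAPS by 10 leaves its ES unchanged because an ES is unit-free. The `effect_size` helper for raw paired scores, which divides by the sample SD of pre-counselling scores, is a hypothetical illustration rather than the authors’ SPSS procedure.

```python
from statistics import mean, stdev
from typing import Sequence


def es_from_summary(mean_change: float, sd: float) -> float:
    """Pre-post ES as mean change divided by an SD (assumed definition)."""
    return mean_change / sd


def effect_size(pre: Sequence[float], post: Sequence[float]) -> float:
    """ES from paired raw scores: mean(pre - post) / sample SD of pre scores (assumption)."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two paired scores")
    changes = [a - b for a, b in zip(pre, post)]
    return mean(changes) / stdev(pre)


core = es_from_summary(5.8, 5.2)        # about 1.115, reported as 1.12
ccaps = es_from_summary(0.59, 0.40)     # about 1.475, reported as 1.47
ccaps_x10 = es_from_summary(5.9, 4.0)   # same ES after rescaling CCAPS by 10
```

The comparison makes the argument concrete: with almost equal change (5.8 vs 5.9 on a common scale), the larger CCAPS ES comes entirely from its smaller denominator.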

At the level of specific psychological conditions, for clients meeting clinical criteria, the ESs for depression on the CORE-OM and CCAPS were substantial and broadly similar (1.40 and 1.21 respectively), with the slight difference likely due to the CCAPS data including clients with unplanned endings. Apart from depression, and from anxiety (ES = 1.40), general functioning (ES = 1.07) and wellbeing as measured by the CORE-OM in the clinical sample, all other ESs derived from the CORE-OM and CCAPS were below 1.0.

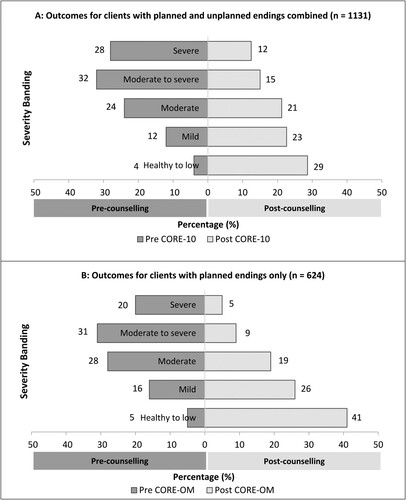

Change in severity bandings

Figures 3 and 4 show improvement rates in terms of changes in severity bandings for the CORE-OM and for the CCAPS bandings of low and high clinical levels. To permit analysis of all client outcomes on the CORE-OM, irrespective of whether clients had planned (i.e. CORE-OM) or unplanned (i.e. CORE-10) endings, the CORE-10 items embedded in the CORE-OM were extracted for planned endings and combined with the CORE-10 items from unplanned endings. For clients with planned endings, severity bandings from the full CORE-OM were also compared with those from its embedded CORE-10 items, to check whether differences were attributable to the items or version of the measure used. There was no difference in outcomes between the CORE-OM and the embedded 10 items. This analysis was repeated using adjusted, more stringent bandings (with cut-offs of 10, 16, 20 and 26), and differences were negligible. Supplementary tables are available on request.

Figure 3. Percentage breakdown of students’ scores across the CORE-OM/10 severity bandings for all clients and clients with planned endings only before and after counselling.

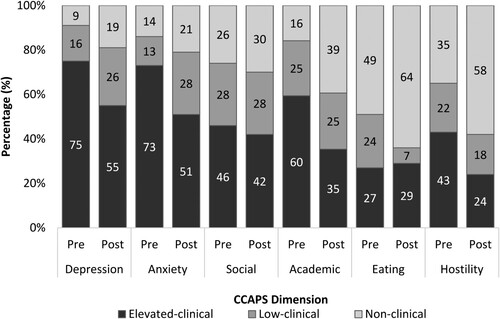

Figure 4. Percentage breakdown of students’ scores across the CCAPS clinical bandings and dimensions for all clients before and after counselling.

Figure 3(a) shows that, for all clients, the combined rate of severe and moderately severe distress fell from 60% pre-counselling to 27% post-counselling. Similarly, the combined rate of healthy or low distress (collectively defined as non-clinical) rose from 4% pre-counselling to 29% post-counselling. Because the CORE-OM data permitted this distinction, clients with planned endings were also examined separately. Figure 3(b) shows that these clients improved more: severe and moderately severe distress fell from 52% pre-counselling to 15% post-counselling, and healthy/low distress rose from 4% pre-counselling to 40% post-counselling. For the CCAPS, Figure 4 shows that academic distress had the largest pre- to post-counselling fall in the proportion of clients categorised as elevated-clinical, from 60% to 35%. Other domains with large falls were depression (20%) and anxiety (22%). Clients with planned endings could not be separated from those with unplanned endings in the CCAPS data, so CCAPS outcomes (Figure 4) combine both groups and are therefore comparable to Figure 3(a).
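The combined percentages above come from assigning each score to a severity band and then summing bands. The Python sketch below assumes the commonly used CORE-OM bands (healthy below 6, low 6 to under 10, mild 10 to under 15, moderate 15 to under 20, moderately severe 20 to under 25, severe 25 and above); the band edges are a parameter so that alternative cut-offs could be substituted. The scores in the example are invented for illustration and are not study data.

```python
from collections import Counter
from typing import Iterable, Sequence, Set, Tuple

# Assumed CORE-OM severity bands as (lower bound, label), highest first.
CORE_OM_BANDS: Sequence[Tuple[float, str]] = (
    (25.0, "severe"),
    (20.0, "moderately severe"),
    (15.0, "moderate"),
    (10.0, "mild"),
    (6.0, "low"),
    (0.0, "healthy"),
)


def band(score: float, bands: Sequence[Tuple[float, str]] = CORE_OM_BANDS) -> str:
    """Return the label of the highest band whose lower bound the score reaches."""
    for lower, label in bands:
        if score >= lower:
            return label
    raise ValueError(f"score {score} is below the lowest band")


def percent_in_bands(scores: Iterable[float], labels: Set[str],
                     bands: Sequence[Tuple[float, str]] = CORE_OM_BANDS) -> float:
    """Percentage of scores falling in any of the given bands."""
    counts = Counter(band(s, bands) for s in scores)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no scores supplied")
    return 100 * sum(counts[label] for label in labels) / total


# Illustrative (invented) scores, not study data.
pre = [27.0, 22.5, 18.0, 12.0, 5.0]
post = [19.0, 12.5, 9.0, 6.5, 3.0]
severe_pre = percent_in_bands(pre, {"severe", "moderately severe"})  # 40.0
non_clinical_post = percent_in_bands(post, {"healthy", "low"})       # 60.0
```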

Reliable change & reliable and clinically significant change

Based on all clients, the rate of reliable improvement was 21% for the CCAPS Distress Index (Table 3, column A) and 44% for the CORE-OM/CORE-10 (Table 3, column B). For clients who scored above clinical threshold at intake and had a planned ending, 36% met the criterion for reliable and clinically significant improvement on the CORE-OM and a further 30% met the criterion for reliable improvement only, giving a total of 66% showing reliable improvement (Table 3, column C). To determine whether the CORE-10 would yield outcomes equivalent to the CORE-OM, rates were also calculated from the CORE-10 items embedded in the CORE-OM for planned endings (Table 3, column D); using the adjusted CORE-10 criteria, these were nearly identical to the CORE-OM rates. For clients with unplanned endings on the CORE-10, 14% showed reliable and clinically significant improvement and a further 18% showed reliable improvement only (Table 3, column E). Reliable deterioration rates are also reported in Table 3, which presents the full results.

Table 3. Percentages of reliable change after counselling according to the CCAPS and CORE-OM.

Discussion

The objectives of this study were to determine the current profile of problems experienced by students presenting to university counselling services and establish the effectiveness of in-house services using different assessment and outcome measures, and reporting data on all clients. These objectives were in service of an overall aim of determining the viability of establishing a national database of university counselling based on the principles of services making their own choice of preferred outcome measure and the provision of complete data.

Previous reports of UK student counselling service data have invariably drawn on single-service data and, consequently, on data from a single measure (Biasi, Patrizi, Mosca, & De Vincenzo, 2017; Murphy, Rashleigh, & Timulak, 2012). The only other attempt to collate data from multiple sources was by Connell et al. (2007), who reported CORE-OM data from seven services and 846 clients, but with pre–post CORE-OM data available for fewer than 40% of clients. Accordingly, the present study represents a tangible advance: it draws together evidence from two major outcome measures, via different IT platforms, with data that were representative of the services because they covered all clients, yielding a dataset of substantial size. As such, it is, in effect, a proof of concept that such a task is feasible.

The present study showed that depression and anxiety dominated in terms of severity, along with social anxiety and academic distress. However, the CCAPS showed academic distress to be the area of highest concern, confirming findings reported by McKenzie, Murray, Murray, and Richelieu (2015) that clients reporting academic concerns returned higher CORE-OM scores. This finding supports the argument that academic distress needs to be included in any assessment battery for student counselling and wellbeing. It is not an additional area; it may be the key area of expertise that makes embedded university counselling services uniquely placed to support students.

In terms of effectiveness, our findings inform both assessment and outcomes. Regarding assessment, the pre-counselling CORE-OM/10 score in the present study was a full 2 points higher than the mean of 17.8 reported by Connell et al. (2007). A similar trend emerged for the CCAPS when the present data are compared with data from 2015/16 reported by Broglia et al. (2017): mean values in the present study (range 2.29–2.34) were higher than the values of 1.93–2.08 that Broglia et al. (2017) found in two UK counselling services. Again, the rise in CCAPS mean values observed in UK counselling services is most apparent for depression, generalised anxiety, social anxiety and overall distress. If the current data are representative of the severity experienced by UK students, they suggest an appreciable rise in students’ psychological distress over the past decade, which would logically place greater strain on university counselling services.

In terms of outcomes, we reported metrics at the overall and cluster/domain levels, and for clients with planned and unplanned endings on the CORE-OM/10. Effect sizes provide the most appropriate index of the magnitude of change and, regardless of the measure used, showed the services to be effective. For student clients with planned endings, the ES in the current CORE-OM sample (1.45) was similar to that reported by Connell et al. (2007) (1.57). The rate of reliable improvement for CORE-OM data with planned endings was lower than that reported by Connell et al. (2007): 66% in the current dataset vs. 76.1%. However, recall that intake scores in the current sample were 2 CORE-OM points higher. This would also account for the lower rate of reliable and clinically significant improvement in the current study.

The reported data show the broad effectiveness of services but also some nuanced differences. However, we are cautious about interpreting any difference between the two measures, as measure is fully confounded with service; recall that clients of the services using the CCAPS received, on average, nearly twice as many sessions as those of the CORE services. In addition, the two measures cover different time frames: one week for the CORE and two weeks for the CCAPS. Notwithstanding these points, the current study demonstrated the added value of exploring dimensions within the clinical measures, which enabled profiling of students' psychological needs. On the CORE-OM, counselling was particularly effective for improving depression, anxiety and wellbeing. According to the CCAPS, counselling reliably reduced experiences of depression, distress and hostility and led to improvements in social anxiety, eating concerns and academic distress. Both measures indicate that counselling was particularly effective for depression, which is noteworthy given that students received, on average, 4 counselling sessions. By comparison, the UK's Improving Access to Psychological Therapies programme reports national improvement rates of 50.8% for clients following an average of 6.9 sessions (NHS Digital, 2019).

Lessons from the SCORE collaborative

Services within the present consortium instilled a positive culture across therapeutic teams for routinely using outcome measures. This practice resulted in high-quality service data from bona fide measures that were predominantly complete. The methods to access, export and process data were relatively simple, albeit time-consuming, and relied on a dedicated member of staff. All services administered measures at the start of every counselling session, which not only made the data more complete but also ensured that outcomes of clients with unplanned endings could be explored. Importantly, this practice enabled analyses of clients with unplanned endings on the CORE-10, whose outcomes were noticeably poorer than those of clients with planned endings; this underlines the importance of practitioners emphasising the benefit of working towards an agreed planned ending with clients.

However, the level of detail available for clients with unplanned endings differed between the two measures. For clients with unplanned endings in services that used the CORE-OM, data came from the last available session, which used the CORE-10 and provides a broad indication of the overall level of distress. By contrast, services that used the CCAPS-34 every session gained additional information on clients' academic distress, alcohol misuse, eating concerns, general anxiety, social anxiety and depression at the point at which they dropped out. The measures also differed in how clients with unplanned endings could be identified. Not all services in the current sample recorded whether client endings were planned or unplanned, and this was true of services using both the CORE-OM and the CCAPS. However, clients with unplanned endings were easy to identify by extracting those whose post-counselling score came from the CORE-10. The current study highlights the importance of exploring outcomes for clients with both planned and unplanned endings, and services would benefit from ensuring that ending type is recorded.
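The identification rule described above can be sketched as follows. The sketch assumes session-level records in which the CORE-OM is administered at the first session and at a planned final session, the CORE-10 at every session in between, one score per session, and both measures expressed on a shared 0–40 scale. Under those assumptions, a client whose last recorded measure is a CORE-10 is treated as having an unplanned ending, and that last available score is used as the post-counselling score. The record layout and the `summarise_endings` function are hypothetical.

```python
from collections import defaultdict


def summarise_endings(records):
    """Return {client_id: (pre_score, post_score, ending_type)}.

    records: iterable of (client_id, session_number, measure, score) tuples,
    assumed to hold one score per session. Clients with a single session are
    skipped because no post-counselling score is available.
    """
    by_client = defaultdict(list)
    for client_id, session, measure, score in records:
        by_client[client_id].append((session, measure, score))

    summary = {}
    for client_id, rows in by_client.items():
        rows.sort(key=lambda row: row[0])  # order by session number
        if len(rows) < 2:
            continue
        first, last = rows[0], rows[-1]
        # Assumption: a planned ending closes with a CORE-OM; a final CORE-10
        # means the client stopped attending (unplanned ending).
        ending = "planned" if last[1] == "CORE-OM" else "unplanned"
        summary[client_id] = (first[2], last[2], ending)
    return summary


records = [  # hypothetical session-level data
    ("A", 1, "CORE-OM", 22.0), ("A", 2, "CORE-10", 18.0), ("A", 3, "CORE-OM", 9.0),
    ("B", 1, "CORE-OM", 19.0), ("B", 2, "CORE-10", 17.0),
    ("C", 1, "CORE-OM", 25.0),
]
print(summarise_endings(records))
```

Because every session carries a score, client B still contributes a pre-post pair even though the ending was never formally recorded, which is the practical benefit of session-by-session measurement noted in the text.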

Despite the potential benefits of using the CCAPS as an assessment measure, it is longer than the CORE-OM and CORE-10 combined, which may discourage services from adopting it, particularly if they are using measures for the first time. In addition, the CCAPS appeared to be less sensitive to change on the Distress Index, a consequence of its reliable change index of 0.8 compared with an equivalent index of 5 on the CORE-OM clinical score, which equates to 0.5 on the CORE-OM item-mean scale. However, the current study did not aim to prescribe a specific measure for services. Rather, supporting the wider practice of using a validated measure is more relevant, and providing services with evidence to inform such decisions encourages the sector to be critical of the data being collected (Lambert, 2010). Such adoption is needed across the sector to ensure that services are evaluated using appropriate metrics and that this leads to evidence-based interventions that improve student outcomes. Service development has often been driven by the need to reduce waiting times and, whilst this is important, service models have adapted by offering fewer counselling sessions in order to see more students (Barden & Caleb, 2019). Rather than judging services on rates of reliable and clinically significant improvement, the most stringent criterion, it may be more appropriate to judge them on whether they reduce the level of severity sufficiently to enable academic learning to continue.
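The arithmetic behind the reliable change comparison above can be checked in a few lines. The sketch assumes that the CORE-OM clinical score is the mean item score multiplied by 10 and that both the CORE-OM and the CCAPS Distress Index are scored as item means on a 0–4 scale; the constant and function names are illustrative.

```python
# Assumptions: CORE-OM clinical score = mean item score x 10, and both the
# CORE-OM and the CCAPS Distress Index use item means on a 0-4 scale.
ITEM_SCALE_MAX = 4.0
CORE_OM_RCI_CLINICAL = 5.0  # reliable change index on the CORE-OM clinical score
CCAPS_DI_RCI = 0.8          # reliable change index on the CCAPS Distress Index


def clinical_to_item_mean(clinical_score):
    """Convert a CORE-OM clinical score (0-40) to the item-mean scale (0-4)."""
    return clinical_score / 10.0


def share_of_range(rci_item_mean):
    """Express a reliable change threshold as a fraction of the 0-4 scale."""
    return rci_item_mean / ITEM_SCALE_MAX


def is_reliable(reduction_item_mean, rci_item_mean):
    """True if a reduction in the item mean meets the reliable change threshold."""
    return reduction_item_mean >= rci_item_mean


core_rci = clinical_to_item_mean(CORE_OM_RCI_CLINICAL)
print(share_of_range(core_rci), share_of_range(CCAPS_DI_RCI))
# The same 0.6-point drop in item mean meets one threshold but not the other.
print(is_reliable(0.6, core_rci), is_reliable(0.6, CCAPS_DI_RCI))
```

Under these assumptions the CCAPS threshold spans a fifth of the item scale against an eighth for the CORE-OM, so a given reduction is less likely to register as reliable change on the Distress Index.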

Towards a national dataset of student counselling outcomes

The feasibility of pooling service data was established with the support of a practice-research consortium that brought together practitioners from varied counselling services. The research activity was led by practitioners, supported by researchers and focused on service evaluation and development. These methods support a wider movement to embed research into practice so that it becomes routine and sustainable rather than one-off or reliant on research funding. As a model, it builds evidence from clinical practice, contributes positively to the culture of using measures, cascades skills across the sector, and provides a representative picture to inform service and policy decisions (Barkham & Mellor-Clark, 2003; Bartholomew, Pérez-Rojas, Lockard, & Locke, 2017; Castonguay et al., 2010).

Working with varied services revealed organisational differences and introduced early barriers, particularly when linking services with ethics committees. Some services needed to establish these links for the first time, and streamlining the ethics approval route for institutional services will support service-led research. These methods align with practice-based evidence and evidence-based practice, which have built the evidence base for psychotherapeutic practice, and provide an exemplar for evaluating therapeutic practice in the context of student mental health (Barkham et al., 2001; Green & Latchford, 2012; Margison et al., 2000). Moreover, encouraging collaboration between mental health services and academics offers evidence for varied interventions and client groups that are not typically targeted by clinical trials. In the present study, these methods produced larger sub-samples of international, postgraduate, male and medical students than would typically be recorded in a standalone university counselling service.

Limitations and future directions

Standardised minimum datasets (SMDs) have been adopted in many disciplines (e.g. Castonguay et al., 2010; Johnson, 1997; Lutomski et al., 2013, 2015; NHS Digital, 2019; Palmer et al., 2013; Wheeler et al., 2015). Developing an SMD for student mental health and wellbeing would encourage institutions to review the quality and type of data being collected on students and would inform the broader debate about using appropriate metrics to evaluate interventions. There are known data inconsistencies across institutions, as well as challenges for counselling services in implementing routine outcome measures (Broglia et al., 2017). The current study identified further gaps in the demographic, intake and referral data collected by institutions. These inconsistencies led to missing data at the aggregate level, which prevented detailed comparisons of outcomes for different student groups.
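One way to make the data gaps described above visible is to define a minimum dataset as an explicit record type and report the share of missing values per field across pooled services. The sketch below is purely illustrative: the `ClientRecord` type, its field names and the example records are hypothetical and are not a proposed SCORE specification.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class ClientRecord:
    """Hypothetical minimum-dataset record; field names are illustrative only."""
    service_id: str
    client_id: str
    age_band: Optional[str] = None
    gender: Optional[str] = None
    study_level: Optional[str] = None     # e.g. undergraduate / postgraduate
    fee_status: Optional[str] = None      # e.g. home / international
    referral_route: Optional[str] = None
    intake_score: Optional[float] = None
    ending_type: Optional[str] = None     # "planned" / "unplanned"


REQUIRED = {"service_id", "client_id"}


def missing_rates(records):
    """Share of pooled records with no value for each optional field."""
    optional = [f.name for f in fields(ClientRecord) if f.name not in REQUIRED]
    n = len(records)
    return {
        name: sum(getattr(r, name) is None for r in records) / n
        for name in optional
    }


pooled = [  # hypothetical records from two services
    ClientRecord("S1", "001", age_band="18-20", intake_score=21.0, ending_type="planned"),
    ClientRecord("S2", "002", gender="female", study_level="postgraduate"),
]
for field_name, rate in missing_rates(pooled).items():
    print(f"{field_name}: {rate:.0%} missing")
```

A per-field missingness report of this kind shows at a glance which student characteristics cannot yet support subgroup comparisons once data from different services are pooled.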

Developing a standardised minimum dataset that characterises the unique demographic profile of students would address these issues. Such an SMD would benefit both HE providers and the NHS as the two sectors align to deliver the NHS Long Term Plan (NHS, 2019). This closer alignment provides a necessary opportunity to clarify the role of in-house counselling services in supporting students' short-term mental health needs and helping them progress successfully through university. By contrast, NHS services are designed to support long-term mental health needs and to help clients achieve sustained mental wellness. Aligning these practices would provide robust and diverse data on student mental health and inform the development of interventions that lead to improved outcomes for all students.

Acknowledgements

We would like to thank the services and therapeutic teams that processed and contributed data on behalf of SCORE members, in particular: Rob Barnsley, Deborah Ceccarelli, and Stephanie Griffiths. Our thanks also to Alex Curtis Jenkins and John Mellor-Clark for providing support and advice for extracting service data. We thank Dr Naomi Moller for supporting the development of the SCORE consortium, the British Association for Counselling and Psychotherapy (BACP) for supporting and funding this research, and the United Kingdom Council for Psychotherapy (UKCP) for supporting training.

Disclosure statement

EB declares that she is employed by the British Association for Counselling and Psychotherapy (BACP), which supports this research in the form of staff time. MB declares that he was involved in the development of the CORE-OM and CORE-10 measures, and that there have been no financial gains associated with any outputs linked to this work. All other authors have no conflicts to declare.

Additional information

Funding

Notes on contributors

Emma Broglia

Emma Broglia is a postdoctoral researcher working across the Department of Psychology, University of Sheffield and the Research Department at the British Association for Counselling and Psychotherapy. She is the project manager of the SCORE consortium, and oversees a longitudinal project measuring student mental health (https://www.smarten.org.uk/longitudinal-studies.html#).

Gemma Ryan

Gemma Ryan is a doctoral student at City, University of London, studying developmental psychology and health interventions. She previously researched mental health interventions for children and young people at BACP and her current work is in communication interventions for autistic children.

Charlotte Williams

Charlotte Williams is an Organisational Consultant, Counsellor, and expert advisor for SCORE.

Mark Fudge

Mark Fudge is Head of Counselling and Mental Health Support at Keele University, UK and is a practitioner member of SCORE.

Louise Knowles

Louise Knowles is Head of Mental Health and Psychological Therapy Services at the University of Sheffield Counselling Service, UK, and Deputy Chair of SCORE.

Afra Turner

Afra Turner is a Cognitive Behaviour Psychotherapist in the Student Support & Wellbeing Services at King's College London, UK. She is also a PhD student at King's College London and Chair of SCORE.

Géraldine Dufour

Géraldine Dufour is Head of Counselling at Cambridge University, UK, and a visiting professor at Tongji University. Géraldine is a practitioner member of SCORE.

Alan Percy

Alan Percy is Head of Counselling at the University of Oxford, UK and a visiting Professor of Psychology at Fudan University, Shanghai. Alan is a practitioner member of SCORE.

Michael Barkham

Michael Barkham is a Professor of Clinical Psychology and works out of the PEARLS Research Lab (https://pearlsresearchlab.group.shef.ac.uk) in the Department of Psychology at The University of Sheffield. A common thread in his work is the use of the complementary methodologies of practice-based evidence (large routine datasets) and pragmatic randomised controlled trials in the psychological therapies, together with a commitment to student mental health. Michael is an expert advisor for SCORE.

The Student Counselling Outcomes Research and Evaluation (SCORE) consortium is a practice-research network that aims to create a shared routine outcomes database to improve service delivery and provide evidence on counselling embedded into Higher Education (http://www.scoreconsortium.group.shef.ac.uk/).

References

- Advance HE. (2018). Equality and higher education: Students statistical report 2018. Retrieved from www.advance-he.ac.uk/newsand-views/Equality-in-higher-education-statistical-report-2018

- Auerbach, R. P., Alonso, J., Axinn, W. G., Cuijpers, P., Ebert, D. D., Green, J. G., … Nock, M. K. (2016). Mental disorders among college students in the World Health Organization world mental health surveys. Psychological Medicine, 46, 2955–2970. doi:10.1017/S0033291716001665

- Barden, N., & Caleb, R. (2019). Student mental health and wellbeing in higher education: A practical guide. London: SAGE Publications Limited.

- Barkham, M., Bewick, B., Mullin, T., Gilbody, S., Connell, J., Cahill, J., … Evans, C. (2013). The CORE-10: A short measure of psychological distress for routine use in the psychological therapies. Counselling and Psychotherapy Research, 13, 3–13. doi:10.1080/14733145.2012.729069

- Barkham, M., Broglia, E., Dufour, G., Fudge, M., Knowles, L., Percy, A., … SCORE Consortium. (2019). Towards an evidence-base for student wellbeing and mental health: Definitions, developmental transitions and data sets. Counselling and Psychotherapy Research, 19, 351–357. doi:10.1002/capr.12227

- Barkham, M., Margison, F., Leach, C., Lucock, M., Mellor-Clark, J., Evans, C., … McGrath, G. (2001). Service profiling and outcomes benchmarking using the CORE-OM: Toward practice-based evidence in the psychological therapies. Journal of Consulting and Clinical Psychology, 69, 184. doi:10.1037//0022-006x.69.2.184

- Barkham, M., & Mellor-Clark, J. (2003). Bridging evidence-based practice and practice-based evidence: Developing a rigorous and relevant knowledge for the psychological therapies. Clinical Psychology & Psychotherapy, 10, 319–327. doi:10.1002/cpp.379

- Bartholomew, T. T., Pérez-Rojas, A. E., Lockard, A. J., & Locke, B. D. (2017). “Research doesn’t fit in a 50-minute hour”: The phenomenology of therapists’ involvement in research at a university counseling center. Counselling Psychology Quarterly, 30(3), 255–273. doi:10.1080/09515070.2016.1275525

- Biasi, V., Patrizi, N., Mosca, M., & De Vincenzo, C. (2017). The effectiveness of university counselling for improving academic outcomes and well-being. British Journal of Guidance & Counselling, 45(3), 248–257. doi:10.1080/03069885.2016.1263826

- Boswell, J. F., Kraus, D. R., Miller, S. D., & Lambert, M. J. (2015). Implementing routine outcome monitoring in clinical practice: Benefits, challenges, and solutions. Psychotherapy Research, 25(1), 6–19. doi:10.1080/10503307.2013.817696

- Broglia, E., Millings, A., & Barkham, M. (2017). The Counseling Center Assessment of Psychological Symptoms (CCAPS-62): Acceptance, feasibility, and initial psychometric properties in a UK student population. Clinical Psychology & Psychotherapy, 24(5), 1178–1188. doi:10.1002/cpp.2070

- Broglia, E., Millings, A., & Barkham, M. (2018). Challenges in addressing student mental health in embedded counselling services: A survey of UK higher and further education institutions. British Journal of Guidance and Counselling, 46, 441–455. doi:10.1080/03069885.2017.1370695

- Byrom, N. (2015). A summary of the report to HEFCE: Understanding provision for students with mental health problems and intensive support needs. Retrieved from www.studentminds.org.uk/uploads/3/7/8/4/3784584/summary_of_the_hefce_report.pdf

- Castonguay, L. G., Locke, B. D., & Hayes, J. A. (2011). The center for collegiate mental health: An example of a practice-research network in university counseling centers. Journal of College Student Psychotherapy, 25, 105–119. doi:10.1080/87568225.2011.556929

- Castonguay, L. G., Nelson, D. L., Boutselis, M. A., Chiswick, N. R., Damer, D. D., Hemmelstein, N. A., … Spayd, C. (2010). Psychotherapists, researchers, or both? A qualitative analysis of psychotherapists’ experiences in a practice research network. Psychotherapy: Theory, Research, Practice, Training, 47(3), 345–354. doi:10.1037/a0021165

- Center for Collegiate Mental Health. (2019, January). 2018 Annual Report. Retrieved from https://sites.psu.edu/ccmh/files/2019/01/2018-Annual-Report-1.30.19-ziytkb.pdf

- Cokley, K., Hall-Clark, B., & Hicks, D. (2011). Ethnic minority-majority status and mental health: The mediating role of perceived discrimination. Journal of Mental Health Counseling, 33(3), 243–263. doi:10.17744/mehc.33.3.u1n011t020783086

- Connell, J., Barkham, M., & Mellor-Clark, J. (2007). CORE-OM mental health norms of students attending university counselling services benchmarked against an age-matched primary care sample. British Journal of Guidance & Counselling, 35, 41–57.

- Connell, J., Barkham, M., & Mellor-Clark, J. (2008). The effectiveness of UK student counselling services: An analysis using the CORE System. British Journal of Guidance & Counselling, 36(1), 1–18. doi:10.1080/03069880701715655

- Equality Challenge Unit. (2017). Equality in higher education: Students statistical report 2017. Retrieved from www.ecu.ac.uk/publiccations/equality-in-higher-education-statistical-report-2017/.

- Evans, C., Connell, J., Barkham, M., Margison, F., McGrath, G., Mellor-Clark, J., & Audin, K. (2002). Towards a standardised brief outcome measure: Psychometric properties and utility of the CORE–OM. British Journal of Psychiatry, 180(1), 51–60. doi:10.1192/bjp.180.1.51

- Green, D., & Latchford, G. (2012). Maximising the benefits of psychotherapy: A practice-based evidence approach. John Wiley & Sons. doi:10.1002/9781119967590

- Jacobson, N. S., & Truax, P. (1992). Clinical significance: A statistical approach to defining meaningful change in psychotherapy research. Journal of Consulting and Clinical Psychology, 59, 12–19.

- Johnson, A. (1997). Follow up studies: A case for a standard minimum data set. Archives of Disease in Childhood - Fetal and Neonatal Edition, 76(1), F61–F63. doi:10.1136/fn.76.1.f61

- Lambert, M. J. (2010). Prevention of treatment failure: The use of measuring, monitoring, and feedback in clinical practice. American Psychological Association. doi:10.1037/12141-000

- Locke, B. D., Buzolitz, J. S., Lei, P.-W., Boswell, J. F., McAleavey, A. A., Sevig, T. D., & Hayes, J. A. (2011). Development of the counseling center assessment of psychological symptoms-62 (CCAPS-62). Journal of Counseling Psychology, 58, 97–109. doi:10.1037/a0021282

- Lutomski, J. E., Baars, M. A., Schalk, B. W., Boter, H., Buurman, B. M., den Elzen, W. P., … Rikkert, M. G. O. (2013). The development of the Older Persons and Informal Caregivers Survey Minimum DataSet (TOPICS-MDS): A large-scale data sharing initiative. PloS One, 8(12), e81673. doi:10.1371/journal.pone.0081673

- Lutomski, J. E., van Exel, N. J. A., Kempen, G. I. J. M., van Charante, E. M., den Elzen, W. P. J., Jansen, A. P. D., … Melis, R. J. F. (2015). Validation of the care-related quality of life instrument in different study settings: Findings from The Older Persons and Informal Caregivers Survey Minimum DataSet (TOPICS-MDS). Quality of Life Research, 24(5), 1281–1293. doi:10.1007/s11136-014-0841-2

- Margison, F. R., Barkham, M., Evans, C., McGrath, G., Clark, J. M., Audin, K., & Connell, J. (2000). Measurement and psychotherapy. British Journal of Psychiatry, 177(2), 123–130. doi:10.1192/bjp.177.2.123

- McKenzie, K., Murray, K. R., Murray, A. L., & Richelieu, M. (2015). The effectiveness of university counselling for students with academic issues. Counselling and Psychotherapy Research, 15, 284–288.

- Murphy, K. P., Rashleigh, C. M., & Timulak, L. (2012). The relationship between progress feedback and therapeutic outcome in student counselling: A randomised control trial. Counselling Psychology Quarterly, 25(1), 1–18. doi:10.1080/09515070.2012.662349

- NHS. (2019). The NHS long term plan. Retrieved from https://www.longtermplan.nhs.uk/

- NHS Digital. (2019). Retrieved from https://digital.nhs.uk/data-and-information/data-collections-and-datasets/data-sets/improving-access-to-psychological-therapies-data-set

- Palmer, C. S., Davey, T. M., Mok, M. T., McClure, R. J., Farrow, N. C., Gruen, R. L., & Pollard, C. W. (2013). Standardising trauma monitoring: The development of a minimum dataset for trauma registries in Australia and New Zealand. Injury, 44(6), 834–841. doi:10.1016/j.injury.2012.11.022

- Randall, E. M., & Bewick, B. M. (2016). Exploration of counsellors’ perceptions of the redesigned service pathways: A qualitative study of a UK university student counselling service. British Journal of Guidance & Counselling, 44, 86–98. doi:10.1080/03069885.2015.1017801

- Royal College of Psychiatrists. (2011). Mental health of students in higher education. College Report CR166. London: Royal College of Psychiatrists.

- Sills, S. J., & Song, C. (2002). Innovations in survey research: An application of web-based surveys. Social Science Computer Review, 20(1), 22–30. doi:10.1177/089443930202000103

- Spitzer, R. L., Kroenke, K., & Williams, J. B. W. (1999). Validation and utility of a self-report version of PRIME-MD: The PHQ primary care study. JAMA, 282, 1737–1744. doi:10.1001/jama.282.18.1737

- The Insight Network & Dig-In. (2019). University student mental health survey 2018: A large scale study into the prevalence of student mental illness within UK universities. Retrieved from https://assets.websitefiles.com/561110743bc7e45e78292140/5c7d4b5d314d163fecdc3706_Mental%20Health%20Report%202018.pdf

- Thorley, C. (2017). Not by degrees: Improving student mental health in the UK’s Universities. Institute for Public Policy Research. Retrieved from http://www.ippr.org/research/publications/not-by-degrees.

- Trendence UK. (2019). Only the lonely – Loneliness, student activities and mental wellbeing at university. Retrieved from https://wonkhe.com/blogs/only-the-lonely-loneliness-student-activities-and-mentalwellbeing/

- Wheeler, R., Steeds, R., Rana, B., Wharton, G., Smith, N., Allen, J., … Sharma, V. (2015). A minimum dataset for a standard transoesophageal echocardiogram: A guideline protocol from the British Society of Echocardiography. Echo Research and Practice, 2(4), G29–G45. doi:10.1530/ERP-15-0024