ABSTRACT

This study investigates the influence of generative artificial intelligence (GAI), specifically AI text generators (here, ChatGPT), on the critical thinking skills of UK postgraduate business school students. Using Bloom’s taxonomy as the theoretical underpinning, we adopt a mixed-methods design with a sample of 107 participants to investigate both the influence and the challenges of these technologies in higher education. Our findings reveal that the most significant improvements occurred at the lower levels of Bloom’s taxonomy. We also identify concerns relating to the reliability, accuracy, and potential ethical implications of applying these tools in higher education. The significance of this paper spans pedagogy, policy and practice, offering insights into the complex relationship between AI technologies and critical thinking skills. While highlighting the multifaceted impact of AI in education, this article serves as a guide for educators and policymakers, stressing the importance of a comprehensive approach to fostering critical thinking and other transferable skills in the higher education landscape.

1. Introduction

In an era marked by rapid digital innovation and widespread data availability, integrating technology with critical thinking skills in higher education (HE) settings has become increasingly important (Calma and Davies 2021; Lincoln and Kearney 2019). Critical thinking, defined as the ability to evaluate information, challenge assumptions, and generate innovative solutions (Curzon-Hobson 2003), is fundamental in business education. Recent studies indicate a shift in the focus of university education from discipline-specific knowledge towards developing critical thinking and problem-solving abilities, addressing concerns that graduates may lack these crucial skills (Bartunek and Ren 2022; Garcia-Chitiva and Correa 2023).

In recent years, the digital transformation of HE has been driven by technological advancement (De Nito et al. 2023; Peres et al. 2023). The use of AI to reshape traditional teaching methods represents a major shift in educational practice, merging technology, teaching, and learning (O’Dea and O’Dea 2023). This evolution introduces novel challenges and opportunities for developing critical thinking skills. GAI, used to create various content types including audio, text, images, and videos (Davenport and Mittal 2022; McKinsey 2023), is increasingly prominent in HE. Text generators such as ChatGPT demonstrate the potential of GAI to transform education by enhancing learning experiences and skill development (Cano, Venuti, and Martinez 2023; Rudolph, Tan, and Tan 2023). Concurrently, the use of chatbots and other AI tools in HE is gaining traction, offering innovative approaches to learning and skill development (Hwang and Chang 2023). Such tools can not only provide diverse perspectives but also foster creativity and rigorous analysis among students (Bearman, Ryan, and Ajjawi 2023). Furthermore, AI can enhance problem-solving and creative thinking through various forms of learning exploration (Hooda et al. 2022).

However, the rapid integration of these technologies raises questions about their impact on conventional learning processes. Although discussions of the educational impact of GAI are increasing (O’Dea and O’Dea 2023; Peres et al. 2023) and concerns about academic integrity persist (Ashour 2020; Cotton, Cotton, and Shipway 2023), empirical evidence of AI’s impact on critical thinking in business education remains scarce (Lincoln and Kearney 2019). To fill this gap, we address the following research questions: (1) What is the impact of AI text generators on the critical thinking skills of UK business school postgraduate students? and (2) What are the potential drawbacks of using AI text generators to develop critical thinking skills? To answer these questions, we examined a sample of 107 postgraduate students drawn from UK business schools and randomly divided into two groups: (1) a control group and (2) an experimental (intervention) group using ChatGPT as a supplementary tool. Our study makes two contributions. First, it presents a harmonised approach to examining how AI helps to build critical thinking skills. Prior studies suggest the utility of AI in personalised learning and the automation of administrative tasks (Dwivedi et al. 2021; Xue et al. 2022), and recent policy documents set out principles and approaches for using AI text generators responsibly and ethically (JISC 2023; QAA 2023). Our study offers a perspective on the complex relationship between AI text generators and critical thinking that can inform policy decisions and teaching strategies for integrating these tools into postgraduate business education in the UK. Second, we provide insights that can help educators, policymakers, and technologists harness AI text generators to improve learning outcomes, especially critical thinking skills.

The paper is structured as follows: Section 2 reviews relevant literature, Section 3 explains our research methodology, Section 4 presents results and analysis, Section 5 presents the discussion while Section 6 concludes with key insights and recommendations for future research.

2. AI and critical thinking skills: challenges and gaps

2.1. AI in education and the emergence of GAI

The growing integration of AI in education is ushering in a transformative era (Moscardini, Strachan, and Vlasova 2022). This technology has brought personalised learning, predictive analytics, and automated administrative tasks to the forefront of educational digitisation. As a specific branch of AI, GAI has attracted academic interest through its ability to generate diverse forms of content (Davenport and Mittal 2022). The literature has explored the potential of GAI to reshape education: for instance, studies have examined the role of GAI in HE (Olga et al. 2023) and its impact on teaching and learning (Lodge, Thompson, and Corrin 2023), while Leiker et al. (2023) suggest GAI as a viable alternative to traditional learning practices.

On the promise of GAI, Peres et al. (2023) found a strong connection between the perceived value of GAI in education and the intention to use it, which points to widespread acceptance of, and readiness for, its transformative role in education. The literature offers a comprehensive and continually evolving view of the potential of GAI to revolutionise education, underscoring the capacity of these technologies to improve educational processes, outcomes, and experiences.

2.2. AI text generators in education and critical thinking skills

Critical thinking has been extensively studied, with scholars defining it as ‘reflective thinking aimed at decision-making’ (Ennis 1993) and as the conceptualisation, application, analysis, synthesis, and evaluation of information gathered from observation, experience, reflection, reasoning, or communication (Bukoye and Oyelere 2019). Recent studies describe critical thinking as a controlled and measured thinking process (Calma and Davies 2021). The growing importance of nurturing critical thinking across academic disciplines such as business education has been well documented (Murawski 2014; Shaw et al. 2020), including its potential impact on employability (Penkauskienė, Railienė, and Cruz 2019).

To understand how AI text generators can enhance critical thinking skills, Bloom's taxonomy offers a relevant framework for evaluating educational learning objectives (Schoepp 2019; Calma and Davies 2021). It categorises cognitive skills into six levels: remembering, understanding, applying, analysing, evaluating, and creating (Bloom et al. 1956; Krathwohl 2002), reflecting the progressive complexity inherent in critical thinking. In line with this taxonomy, GAI can support education by creating complex scenarios for students to analyse and evaluate (JISC 2023). Moreover, interacting with AI text generators can encourage students to generate innovative solutions, stimulating the highest cognitive level in Bloom's taxonomy, creating (Rudolph, Tan, and Tan 2023).

2.3. Challenges of AI text generators in education

The introduction of AI text generators in education presents unique challenges, highlighting the complexity of integrating them into current teaching methods (Lancaster 2023; Mucharraz y Cano and Venuti 2023). A major challenge concerns prompting: users often find it difficult to write relevant and detailed prompts that align with their questions. This is due to various factors, including limitations in AI models, difficulties in understanding user intent, and the trade-off between generating equal and diverse text (Moscardini, Strachan, and Vlasova 2022). Crafting appropriate and targeted prompts is therefore crucial for making the most of AI text generators to enhance students’ critical thinking (Moscardini, Strachan, and Vlasova 2022). Another challenge is the accuracy of AI-generated content: nonsensical responses are a common experience, indicating that AI sometimes fails to grasp context correctly and raising doubts about its accuracy. Ensuring the reliability of AI tools requires robust fact-checking and ongoing review mechanisms (Leiker et al. 2023).

Ethical considerations also come into play, with the ‘hallucination effect’ becoming a well-known problem of ChatGPT. This refers to its tendency to create convincing text outputs that are extremely difficult to identify as AI-generated (O’Dea and O’Dea 2023). In addition, there are concerns that misuse of AI text generators could undermine academic integrity: Cotton, Cotton, and Shipway (2023) suggest that students might use these tools to complete assignments without the intellectual effort traditionally associated with learning. Lastly, a lack of digital literacy among educators and students poses a significant barrier (Lincoln and Kearney 2019).

2.4. Gaps in the current literature

There is a dearth of empirical research investigating the impact of AI text generators on critical thinking skills, particularly in postgraduate business education (Lincoln and Kearney 2019). While the broader role of AI in education has been extensively studied (Essien, Chukwukelu, and Essien 2021; Lim et al. 2023), the specific focus on critical thinking in HE has been largely overlooked (QAA 2023). This presents a compelling opportunity for further research, and addressing the gap is all the more important given the value of critical thinking skills in a constantly evolving business world.

3. Methodology

3.1. Research design

This study employs a quasi-experimental design with pre-test and post-test assessments to measure the development of critical thinking skills. Participants were drawn from a population of UK business school postgraduate students and randomly divided into control and experimental groups, with the experimental group using ChatGPT as a supplementary learning tool. Both groups first completed a pre-test to assess their baseline critical thinking abilities. In the intervention phase that followed, the experimental group used ChatGPT to aid their learning, while the control group did not. To gain a comprehensive understanding of participants’ experiences and perceptions of using AI in learning, we collected both qualitative and quantitative data through a single survey instrument (Footnote 1). After the intervention, we administered a post-test to the experimental group only, to evaluate the impact of AI tools on the development of their critical thinking skills.

3.1.1. Pre-test design and validation

In designing the pre-test, we developed questions aligned with the distinct levels of Bloom's taxonomy (Krathwohl 2002). Each question was crafted to target specific cognitive skills, ranging from basic recall to complex creative thinking. To ensure the validity of these questions, we first consulted subject matter experts in business education and cognitive assessment during question development, a vital step in creating effective educational assessments (Suskie 2018). We then conducted a pilot study with a smaller cohort of students to test and refine the assessment tools.

Feedback from the pilot study led to iterative refinements of the questions, ensuring that each aligned accurately with its intended cognitive level in Bloom's taxonomy. We also ensured that the questions covered a range of scenarios and applications pertinent to contemporary business education and higher-order thinking skills (Brookhart 2010).

3.2. Sampling approach

The study employed purposeful random sampling to ensure that the participants were postgraduate students in a business school setting, in line with the research questions (Etikan, Musa, and Alkassim 2016). Participants were drawn from a population of UK business school postgraduate students. A total of 107 postgraduate students were sampled, with 56 allocated to the control group and 51 to the experimental group. The allocation took account of factors such as age, gender, and prior exposure to AI technologies to ensure balanced representation.

3.3. Data collection

Data were collected using a single survey instrument (Footnote 2) that integrated quantitative and qualitative elements. The quantitative section comprised multiple-choice and Likert-scale questions designed to gauge participants’ current level of critical thinking skills, the frequency and manner of their AI text generator use, and the perceived effects of the technology on their skills. The qualitative section comprised open-ended questions (see Table 1 for sample questions) designed to provide a deep dive into students’ personal experiences and perspectives on integrating AI text generators into their learning. Our mixed-methods approach follows the rationale of Creswell and Creswell (2017), who set out the advantages of combining quantitative and qualitative data: it supports numerical analysis while also capturing rich, contextual insights from participants.

Table 1. Sample open-ended questions.

3.4. Data analysis

We used IBM SPSS Statistics, version 28, for the analyses in our study. The quantitative analysis combined descriptive and inferential statistical techniques to evaluate the impact of the intervention on UK business school postgraduate students. Descriptive statistics were used to characterise the demographic profile of the participants, including age distribution and familiarity with AI text generators. Pre-test and post-test mean scores and standard deviations were also analysed to assess changes in participants’ cognitive performance across the levels of Bloom's taxonomy. Inferential statistical methods, such as paired-sample t-tests and independent-sample t-tests, were used to determine the significance of differences within and between groups.
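To make the descriptive step concrete, the sketch below computes a per-question mean and sample standard deviation, the two statistics reported for each pre-test and post-test item. It is a minimal standard-library Python illustration, not the SPSS procedure used in the study; the helper name `describe` is illustrative, and the scores are hypothetical 1–5 Likert responses rather than study data.

```python
from statistics import mean, stdev


def describe(scores):
    """Return (mean, sample SD) for one question's scores."""
    if len(scores) < 2:
        raise ValueError("need at least two scores for a sample SD")
    return mean(scores), stdev(scores)  # stdev uses the n - 1 denominator


# Hypothetical 1-5 Likert responses for one question (not the study's data).
pre = [3, 2, 4, 3, 5, 3]
post = [4, 4, 5, 4, 5, 4]
for label, scores in (("pre-test", pre), ("post-test", post)):
    m, sd = describe(scores)
    print(f"{label}: M = {m:.2f}, SD = {sd:.2f}")
```

`statistics.stdev` returns the sample standard deviation (dividing by n − 1), which is the usual choice when the respondents are treated as a sample from a wider student population.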

A total of 107 participants answered the open-ended questions. Their answers were analysed by two members of the author team using Braun and Clarke’s six-step thematic analysis (Braun and Clarke 2006). This method is widely adopted in the social sciences and is an iterative process comprising the following steps: 1) familiarising yourself with your data; 2) generating initial codes; 3) searching for themes; 4) reviewing themes; 5) defining and naming themes; and 6) producing the report. Prior to analysis, participants’ comments were extracted into a spreadsheet to enable easy comparison and coding.

The two authors first familiarised themselves with the data by reading the participants’ comments carefully and repeatedly. At the end of this step, each author independently colour coded the responses according to their similarity, and both authors then compared and checked the coding jointly. This yielded seven categories, and initial codes were generated for each category. Next, both authors worked together to search for themes across the codes, and a thematic map was developed. To ensure the quality and rigour of the research (Gioia, Corley, and Hamilton 2013), the other two authors in the team then reviewed and checked the themes independently. The themes were then finalised and clustered into two levels based on their similarity. Individual themes are explained in detail in the findings below.

3.5. Reliability and validity

To ensure reliability, we took several steps. First, the survey instrument was pilot-tested with a smaller sample of students outside the main study, and we used their feedback to refine questions and improve clarity. This minimised potential ambiguities and supported consistent interpretation of responses. Second, for the qualitative analysis, dual coding by two authors further enhanced reliability. This strategy, known as investigator triangulation, helps ensure that the identified themes genuinely reflect participants’ perspectives rather than the biases of a single researcher (Archibald 2016).

Content validity was ensured by drafting survey questions that were relevant and directly related to the research objectives. Several experts in educational research and AI were consulted to verify the content of the survey instrument, further strengthening its validity (Haynes, Richard, and Kubany 1995). Construct validity was also considered in the design of the study: the quantitative pre-test and post-test assessments in our instrument were anchored in Bloom's taxonomy, a widely recognised framework in educational psychology for measuring cognitive abilities (Krathwohl 2002).

4. Findings

4.1. Quantitative results of the postgraduate student profiles

Among the UK business school postgraduate students in our study, the largest cohort was aged 25–34 years (56%), followed by students aged 18–24 years (36%) and 35–44 years (8.5%) (see Table 2). This age diversity offers varied perspectives on AI text generators across age groups.

Table 2. Overview of quantitative survey respondents (n = 107).

Familiarity with AI text generators varied: 40% reported being ‘Somewhat familiar’, 25% ‘Very familiar’, and 36% ‘Not familiar at all’. Notably, 60% had prior experience using AI text generators. These demographic and familiarity data set the stage for a nuanced examination of responses to AI text generators.

Table 3 presents the mean scores and standard deviations for both the pre-test and post-test assessments across the cognitive categories of Bloom's taxonomy. For instance, within the ‘Remember’ category, participants displayed notable improvement in factual recall, as seen in Q9, where the mean score rose from 3.15 (SD = 1.21) in the pre-test to 4.76 (SD = 0.06) post-intervention. Similarly, in the ‘Apply’ category, Q15 showed a substantial increase in mean score, from 3.18 (SD = 1.36) to 4.52 (SD = 0.13). These observations highlight the effectiveness of the intervention in enhancing participants’ memory retention and practical application of knowledge. Questions in the ‘Create’ category also showed a positive shift: for instance, the mean score for Q20 rose from 2.98 (SD = 1.31) to 3.72 (SD = 0.19), suggesting that the intervention also played a role in fostering creative thinking abilities.

Table 3. Pre-test and post-test mean scores with standard deviation.

4.2. Inferential statistics

4.2.1. Paired-sample t-test for evaluating the impact of the intervention

We used paired-sample t-tests to assess the efficacy of the intervention on respondents’ cognitive performance. This method compares the means of pre-test and post-test scores, here across the six levels of Bloom's taxonomy. A total of 12 paired-sample t-tests were conducted for the intervention group, each examining the difference between pre-test and post-test scores for a specific question.
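The paired comparison for a single question can be sketched as follows. This is a hedged, standard-library Python illustration of the textbook paired-sample t statistic, not a reproduction of the study's SPSS output; `paired_t` is a hypothetical helper and the example scores are invented. Differences are taken as pre − post, a convention consistent with the negative t-values reported in this section when scores rise after the intervention.

```python
import math
from statistics import mean, stdev


def paired_t(pre, post):
    """Return (t, df) for a paired-sample t-test on matched score lists.

    Differences are pre - post, so higher post-test scores give a negative t.
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length lists with at least two pairs")
    diffs = [a - b for a, b in zip(pre, post)]
    n = len(diffs)
    sd = stdev(diffs)  # sample SD of the differences (n - 1 denominator)
    if sd == 0:
        raise ValueError("identical differences: t is undefined")
    t = mean(diffs) / (sd / math.sqrt(n))
    return t, n - 1


# Hypothetical scores for six students on one question (not the study's data).
pre = [3, 2, 4, 3, 5, 3]
post = [4, 4, 5, 4, 5, 4]
t, df = paired_t(pre, post)
print(f"t({df}) = {t:.2f}")
```

The p-value needs the tail probability of the t distribution; if SciPy is available, `scipy.stats.ttest_rel(pre, post)` computes the same statistic (it also differences the first argument minus the second) together with a two-sided p-value.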

Hypothesis statement 1

For each of the 12 questions investigated in this study, the following hypotheses are formulated:

H0: There is no difference between the pre-test and post-test mean scores within the intervention group.

H1: The pre-test and post-test mean scores within the intervention group differ.

We set the significance level at α = 0.05, the conventional threshold for assessing statistical significance, in line with standard scientific practice.
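Stated formally for a single question pair, and assuming a two-sided test (the hypotheses above do not specify a direction), the test concerns the population mean of the paired differences D_i, where n is the number of students with both a pre-test and a post-test response:

```latex
\begin{aligned}
D_i &= x_i^{\mathrm{pre}} - x_i^{\mathrm{post}}, \qquad H_0 : \mu_D = 0, \qquad H_1 : \mu_D \neq 0, \\
t &= \frac{\bar{D}}{s_D / \sqrt{n}}, \qquad df = n - 1, \qquad \text{reject } H_0 \text{ if } p < \alpha = 0.05.
\end{aligned}
```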

Table 4 presents the paired-sample t-test outcomes assessing the impact of the intervention on participants’ cognitive performance across Bloom's taxonomy. For each test pair, which consists of a pre-intervention question (e.g. Q9) and a corresponding post-intervention question (e.g. Q23), the table reports the mean scores before and after the intervention, t-values, degrees of freedom (df), and p-values. To illuminate the implications of these findings, we analyse specific test pairs from Table 4 below.

Table 4. Paired-Sample t-test results for intervention impact on Bloom's taxonomy levels.

The intervention significantly increased participants’ mean scores on Remember-level questions (from 3.15 to 4.07; t = −7.36, p < .001), showcasing its strong impact on factual recall. Understand-level questions also improved markedly (from 3.19 to 4.07; t = −6.76, p < .001), indicating the intervention's important role in enhancing comprehension.

Apply-level questions showed a moderate improvement (from 4.15 to 4.42), with a statistically significant difference (t = −2.72, p = .008). Notably, the effect size is smaller than at the Remember and Understand levels, suggesting a more modest impact on application. Create-level questions showed a significant but smaller increase (from 2.98 to 3.28; t = −2.40, p = .018), implying a relatively milder impact on creative thinking skills.
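Because t-values also grow with sample size, the comparison of effect sizes above can be made explicit with a standardised effect size for paired data. A common choice, assumed here rather than taken from the study, is d_z = mean(D) / sd(D), which equals t / √n because t = mean(D) / (sd(D) / √n). The Python sketch below applies it to the t-values reported in this section under the further assumption that all 51 intervention-group participants contributed to every pair; the per-test n is not stated here, and `cohens_dz` is a hypothetical helper.

```python
import math


def cohens_dz(t, n):
    """Paired-design effect size d_z = mean(D) / sd(D), recovered as t / sqrt(n)."""
    if n < 2:
        raise ValueError("need at least two paired observations")
    return t / math.sqrt(n)


# t-values as reported in the text; n = 51 is an ASSUMPTION (intervention group size).
reported_t = {"Remember": -7.36, "Understand": -6.76, "Apply": -2.72, "Create": -2.40}
for level, t in reported_t.items():
    print(f"{level}: d_z = {cohens_dz(t, 51):.2f}")
```

The sign only reflects the pre − post ordering; it is the magnitudes that are compared across Bloom's levels.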

Taken together, the findings in Table 4 underscore the multifaceted impact of the intervention across levels of cognitive learning. The results show substantial improvements in participants’ abilities to remember facts, comprehend concepts, and apply knowledge. However, the extent of these improvements varies across Bloom's taxonomy levels, with a more pronounced impact at the lower-order cognitive levels (remember and understand) than at the higher-order levels (apply, analyse, evaluate, and create). This insight is crucial for educators and curriculum developers tailoring strategies to specific cognitive domains.

4.2.2. Independent sample t-test for group comparisons

In this phase of the analysis, we used independent-sample t-tests to compare the pre-test scores of the control and intervention groups on each of the 12 questions. This examination verifies whether the two groups were comparable before the intervention was implemented.

The independent variable is group membership (control group or intervention group), and the dependent variable is the score on each of the 12 questions in the pre-test assessment. A series of 12 independent-sample t-tests was conducted, one per question, to assess differences in pre-test scores between the two groups. Together, these tests establish whether the groups were equivalent at baseline.

Hypothesis statement 2

For each of the 12 questions in our investigation, we formulate the following hypotheses:

H0: There is no difference in mean pre-test scores between the control and intervention groups.

H1: The mean pre-test scores of the control and intervention groups differ.

To ensure statistical rigour, we adopted a predetermined significance level of α = 0.05. Table 5 summarises the results of the independent-sample t-tests comparing pre-test scores between the control and intervention groups, reporting the mean scores, standard deviations (SD), t-values, degrees of freedom (df), and p-values for each question.
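Each baseline comparison can be approximated from the summary statistics alone. The standard-library Python sketch below computes Student's independent-samples t with a pooled variance (equal variances assumed); whether the reported tests assumed equal variances is not stated, so this is an assumption, and `pooled_t_from_stats` is a hypothetical helper. It is applied to the Q9 (Remember) pre-test figures reported in this section, with the control group entered first so that the sign matches the reported t.

```python
import math


def pooled_t_from_stats(m1, sd1, n1, m2, sd2, n2):
    """Student's independent-samples t (equal variances assumed) from summary stats.

    Returns (t, df); t is positive when group 1 has the higher mean.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# Q9 pre-test summaries as reported: control (n = 56) first, intervention (n = 51) second.
t, df = pooled_t_from_stats(2.82, 1.21, 56, 2.90, 1.20, 51)
print(f"t({df}) = {t:.2f}")
```

With these rounded means and SDs the sketch gives t ≈ −0.34 on 105 degrees of freedom, close to the reported t = −0.33; the small gap plausibly reflects rounding of the inputs. If SciPy is available, `scipy.stats.ttest_ind_from_stats(2.82, 1.21, 56, 2.90, 1.20, 51, equal_var=True)` performs the same calculation and also returns a p-value.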

Table 5. Results of independent sample t-test for pre-test scores between control and intervention groups.

In the Remember-level analysis (Q9), both the control group (M = 2.82, SD = 1.21) and the intervention group (M = 2.90, SD = 1.20) demonstrated comparable factual knowledge recall, as indicated by a non-significant t-test result (t = −0.33, p = .74). This underscores the initial equivalence of the groups in basic memory-based tasks. Moving to the Understand category (Q11), both groups exhibited a similar understanding of conceptual content, with the control group scoring a mean of 2.94 (SD = 1.39) and the intervention group scoring 3.10 (SD = 1.46). The t-test showed a non-significant difference (t = −0.56, p = .58), reaffirming the initial comparability of the groups in grasping fundamental concepts.

At the Apply level (Q13), however, a significant difference emerged: the control group scored a mean of 4.02 (SD = 1.22) and the intervention group 4.50 (SD = 0.86), a statistically significant difference (t = −2.27, p = .03). Because these are pre-test scores, this indicates that the intervention group already had a stronger ability to apply learned concepts to real-world scenarios before the intervention began, rather than reflecting an effect of the intervention. In the Evaluate category (Q18), both groups demonstrated a similar capacity for critical evaluation: the control group obtained a mean score of 3.20 (SD = 1.31) and the intervention group 3.08 (SD = 1.41), with a non-significant difference (t = 0.44, p = .66), confirming initial comparability in evaluative skills. Overall, the results in Table 5 affirm that the two groups were generally comparable in their baseline abilities, with the notable exception of the application of knowledge, where the intervention group held a significant advantage.

4.3. Thematic analysis of qualitative results

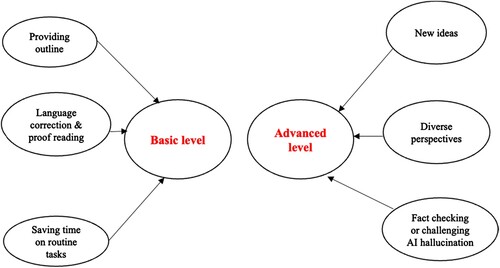

The participants’ comments fall into two main themes: advanced level and basic level. With regard to Bloom’s taxonomy, the basic level is associated with remembering, understanding, and applying, while the advanced level is associated with analysing and evaluating.
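The mapping between Bloom’s levels and the two qualitative theme levels can be written down as a small lookup table. In this hedged Python sketch, the enum and dictionary names are illustrative; the assignments follow this section, and Create is left unmapped because, as reported later in this section, no participant commented on using ChatGPT to produce new and original work.

```python
from enum import IntEnum


class Bloom(IntEnum):
    """Revised Bloom's taxonomy levels, ordered from lowest (1) to highest (6)."""
    REMEMBER = 1
    UNDERSTAND = 2
    APPLY = 3
    ANALYSE = 4
    EVALUATE = 5
    CREATE = 6


# Theme level assigned to each Bloom category in the qualitative analysis.
# CREATE is intentionally absent: no participant comment was coded to it.
THEME_LEVEL = {
    Bloom.REMEMBER: "basic",
    Bloom.UNDERSTAND: "basic",
    Bloom.APPLY: "basic",
    Bloom.ANALYSE: "advanced",
    Bloom.EVALUATE: "advanced",
}


def theme_level(level):
    """Return 'basic' or 'advanced', or None if no comments mapped to this level."""
    return THEME_LEVEL.get(level)


for level in Bloom:
    print(level.name, theme_level(level))
```

Using an `IntEnum` keeps the hierarchy orderable (for example `Bloom.EVALUATE > Bloom.APPLY`), which matches the progressive complexity the taxonomy describes.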

Figure 2. Key themes.

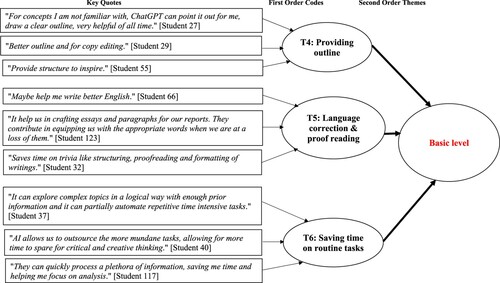

4.3.1. Basic level

This theme is most closely connected with three categories of Bloom’s taxonomy: remembering, understanding, and applying. These refer to the more fundamental stages of learning, such as memorising facts, explaining ideas, and using learned knowledge in new situations and contexts. The students’ comments show that some participants (33 out of 105) used ChatGPT not to develop their critical thinking skills but as a utility tool to streamline their research process and speed up routine tasks. For example, a small number of these participants (5 out of 33) used ChatGPT as a proofreading tool to check grammar and typos. According to Student (33), ‘[ChatGPT helps me] save time on trivia like structuring, proofreading and formatting of writings.’ Student (26) likewise emphasised this use: ‘[ChatGPT is] good for me, can help me with proof reading my work’.

For some participants (16 out of 33), ChatGPT offered useful assistance in creating an outline for their dissertation, as several students emphasised: ‘For concepts I am not familiar with, ChatGPT can point it out for me, draw a clear outline, very helpful of all time’ (Student 27) and ‘I normally use it to develop outline [for my assignments]’ (Student 27). Structuring an academic assignment, in particular a dissertation or a capstone project, can be a daunting task for postgraduate students because of their status as ‘novice researchers’.

ChatGPT is trained on a very large body of text, which enables it to respond to many types of queries, to give different responses to the same query, and to respond in different languages if required. For this reason, some participants (12 out of 33) used it to save time on routine tasks, such as summarising literature for a review. Student (40) pointed out that ‘AI allows us to outsource the more mundane tasks, allowing for more time to spare for critical and creative thinking.’ Likewise, Student (107) added: ‘They can quickly process a plethora of information, saving me time and helping me focus on analysis.’

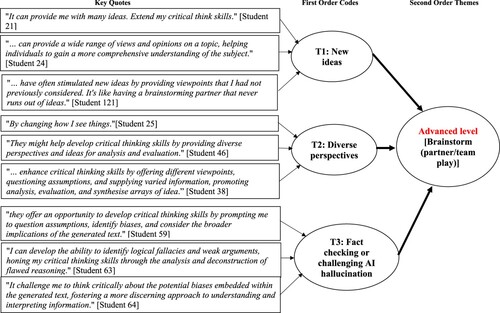

4.3.2. Advanced level

The comments of most participants (72 out of 105) indicate that they believed ChatGPT had a positive impact on their critical thinking skills. Analysing and evaluating are considered more advanced stages of learning, referring to students’ ability to examine ideas, find connections between them, and appraise a decision or situation based on their existing knowledge and understanding. This theme corresponds to these two learning categories and describes students’ self-initiated use of ChatGPT as a learning partner or assistant to help develop or enhance their critical thinking skills. It is divided into three sub-themes: new perspectives, new ideas, and challenging AI hallucination. Regarding new perspectives, most of these participants (53 out of 72) reported that using ChatGPT exposed them to different viewpoints in a time-saving manner, prompting them to think outside the box and evaluate viewpoints through different lenses. As Student (38) put it: ‘AI text generators enhance critical thinking skills by offering different viewpoints, questioning assumptions, and supplying varied information, promoting analysis, evaluation, and synthesising arrays of ideas.’

Some participants (10 out of 72) also commented that they used ChatGPT to brainstorm new ideas which they could potentially use as a foundation for their academic work. Student (57) mentioned: ‘I can expose myself to a wide range of viewpoints and arguments, which challenges my thinking and forces me to critically evaluate different perspectives.’ This was corroborated by Student (21), who added: ‘AI text generators have often stimulated new ideas by providing viewpoints that I had not previously considered. It's like having a brainstorming partner that never runs out of ideas.’

Challenging AI hallucination is the third sub-theme. The data show that some participants (9 out of 72) were particularly aware of this issue and commented on the importance of fact-checking when conducting their academic research. In support, Student (12) emphasised that: ‘The use of AI text generators compels me to validate the information it provides critically. It's like a constant fact-checking process that sharpens my critical thinking skills.’

While our empirical data support the impact of ChatGPT on students’ ability to remember, understand and apply (basic level) as well as to analyse and evaluate (advanced level), it is important to note that no participants commented on using ChatGPT to produce new and original work, which is the highest order of learning according to Bloom’s Taxonomy (Krathwohl Citation2002). Despite this gap, our findings indicate that participants are still able to demonstrate critical thinking skills: they can infer conclusions from AI-generated text but not create new knowledge. Next, we explore the challenges involved in using AI text generators to develop critical thinking skills.

4.4. Challenges of using AI text generators for developing critical thinking skills

The thematic analysis conducted in our study identified four primary themes, which are discussed below.

4.4.1. Reliability and accuracy

The study participants raised concerns about the reliability and accuracy of answers provided by AI text generators. The very foundation of critical thinking rests upon accurate and comprehensive information (Lincoln and Kearney Citation2019), yet these generators can sometimes provide incomplete or misleading outputs. For instance, participants mentioned the risk of ‘relying on inaccurate or biased information’ (Student 71). This challenge is further emphasised by another observation that: ‘The AI might not be updated with the latest information or research on a topic, providing outdated perspectives’ (Student 75). In some instances, as one respondent noted, ‘The text generator occasionally misinterprets my inquiries, leading me to research irrelevant materials, thereby straying my thought process’ (Student 17).

4.4.2. Ethical concerns

From an ethical standpoint, the use of AI text generators raises concerns about removing the human connection from the process of reading and understanding information. According to Student (59), ‘The impersonal nature of automated tools may hinder the development of empathy and understanding,’ both of which are pivotal to critical thinking. Furthermore, there is a risk that the tool confirms rather than challenges students’ views, as another participant shared: ‘It seems like the AI just reinforces what I already believe, rather than challenging my preconceptions’ (Student 21). This could stifle the ability to think critically.

4.4.3. Digital literacy

A high degree of digital literacy is needed to navigate the complexities of AI text generators effectively, and a shortfall can affect the development of critical thinking skills, especially among postgraduate students in UK business schools. This complexity was echoed by Student (66), who remarked: ‘It is hard to understand how it works.’ Another participant pointed out that inaccuracies can arise because AI generators sometimes ‘give information that is not align[ed] with the input I have provided’ (Student 22).

4.4.4. Bias

The perceived objectivity of AI text generators is often praised, but it can be a double-edged sword. A significant concern is that AI systems such as text generators, if trained on biased data, may perpetuate those biases (Manyika and Silberg Citation2019). Our findings echo this concern, with participants highlighting bias as a potential drawback to applying AI text generators to critical thinking. Specifically, as Student (63) pointed out, machine-generated responses ‘may not always reflect the complexity and nuances of real-world situations’. Overreliance on automated tools might also ‘result in a narrow focus on the generated content, overlooking the value of multidisciplinary approaches’ (Student 64), which can limit the breadth of one's critical thinking.

5. Discussion

5.1. The impact of AI text generators on developing critical thinking skills

Our study aimed to explore the role of AI text generators in developing critical thinking skills among UK business school postgraduate students. The findings suggest that AI text generators (specifically ChatGPT) have the potential to influence critical thinking skill development, which aligns with existing studies (e.g. Moscardini, Strachan, and Vlasova Citation2022; QAA Citation2023). Specifically, ChatGPT was found to significantly aid the development of lower-level cognitive skills, such as understanding and application, as outlined in Bloom's Taxonomy. This aligns with recent studies suggesting the utility of AI in personalised learning and administrative task automation (Cano, Venuti, and Martinez Citation2023; Dwivedi et al. Citation2021; Xue et al. Citation2022). Building on this literature, we argue that GAI can transform education by facilitating personalised learning experiences, predictive analytics, and the automation of administrative tasks. Our findings extend this by showing that ChatGPT contributes to a personalised, student-centric approach in higher education. This is consistent with Tlili et al. (Citation2023), who illustrated that ChatGPT serves as a clear indicator of a paradigm shift in the education domain. Their study underscored the association between the perceived value of GAI in education and the intention to incorporate it, indicating a readiness for pedagogical transformation driven by GAI.

Our findings reveal that ChatGPT enables students to engage more deeply with complex scenarios, thereby enhancing their analytical and evaluative skills. This echoes the narrative of JISC (Citation2023), which emphasises the role of AI in creating learning environments that challenge and extend student capabilities. Our analysis indicates that AI text generators play a significant role in reinforcing foundational cognitive skills, in line with existing studies (Cano, Venuti, and Martinez Citation2023; Dwivedi et al. Citation2021; Lim et al. Citation2023). However, their effectiveness in cultivating higher-order cognitive abilities (corresponding to the advanced level), such as critical analysis and creative problem-solving, is less straightforward. This suggests that while AI tools may be beneficial in educational settings, their integration needs to be carefully calibrated so that they complement rather than replace traditional learning methods, supporting comprehensive cognitive development that spans both basic and advanced skills (JISC Citation2023; Xue et al. Citation2022).

In this evolving AI-enabled education landscape, AI text generators (i.e. ChatGPT) play a dual role. They enhance basic skills and also promote advanced cognitive abilities, transitioning from simple learning aids to catalysts for deeper, more engaged learning experiences. This reflects the potential of AI text generators to foster a cognitive environment that effectively nurtures both fundamental and higher-order skills (JISC Citation2023). In fact, AI’s impact on critical thinking introduces a new dimension of complexity and potential to educational technology, moving beyond basic ‘question-answer’ functions to stimulate more profound, meaningful engagement with learning materials and concepts. By automating tasks such as summarisation and proofreading, these GAI tools (e.g. ChatGPT) can provide a ‘cognitive dividend,’ freeing mental resources for higher-level thinking, thus acting as a ‘cognitive amplifier.’ This balanced cognitive ecology allows foundational and advanced skills to reinforce each other. Our findings corroborate the observations from JISC (Citation2023), demonstrating that ChatGPT can help students analyse complex business scenarios, thereby enhancing their critical thinking skills.

In our study, two main themes emerged when categorising our findings on the impact of AI text generators (i.e. ChatGPT) on critical thinking skills: (i) basic level and (ii) advanced level. Both are framed within Bloom's Taxonomy.

5.1.1. Basic level (remember, understand, apply)

The findings from our empirical data suggest significant improvements in the basic levels of critical thinking when using AI text generators, such as the ability to recall information (Remember) and understand complex concepts (Understand). Although the ‘Apply’ category also showed progress, the study highlighted that ChatGPT was used mainly for practical application and task efficiency rather than for critical thinking per se. At the basic cognitive level, participants found ChatGPT useful for streamlining research processes and mundane tasks such as proofreading and structuring academic assignments. While these activities primarily fall under task efficiency, they indirectly support critical thinking by freeing up time and cognitive resources that can be allocated to more complex reasoning tasks.

5.1.2. Advanced level (analyse, evaluate, create)

The analysis showed moderate (yet significant) improvements in advanced aspects of critical thinking, although no statistically significant impact was found on the highest level (i.e. ‘Create’ level) concerning the development of entirely new and original work. A majority of participants reported that ChatGPT helped them in the ‘analyse’ and ‘evaluate’ components of critical thinking. Specifically, ChatGPT exposed them to new perspectives, helped in brainstorming new ideas, and even instigated a heightened awareness of the necessity for fact-checking due to the potential for ‘AI hallucination.’

The cross-level interactions in this study provide an intriguing illustration of how technological tools, such as ChatGPT, can function not just as a utility but as a comprehensive facilitator of cognitive growth (QAA Citation2023). Traditionally, the cognitive activities described in Bloom’s Taxonomy are treated as hierarchical stages, each building on the one before it. However, the cross-level interactions suggest a more dynamic, symbiotic relationship between the basic and advanced levels of critical thinking when mediated by technology. For example, lower-level activities such as proofreading, summarising, and structuring often act as prerequisites to more advanced intellectual endeavours such as analysing, evaluating, and creating. These are not isolated tasks but interdependent steps in the learning and research process. What the study highlights is that GAI, via a tool such as ChatGPT, can streamline this interconnected cognitive landscape.

In the fast-paced academic environment, time is a scarce resource. By saving time on basic tasks, GAI tools can give students the luxury of time, which can be the deciding factor in whether higher-order thinking occurs at all. ChatGPT therefore serves as a cognitive ‘force multiplier’: it does not merely add to students’ capabilities at different levels but multiplies their efficiency and effectiveness across the board. This has potentially profound implications for educational practices and may reshape how we think about the role of AI in learning and critical thinking development.

5.2. Challenges in delivering critical thinking skills using AI text generators

While AI-driven tools can undoubtedly enrich the learning experience, they are not without their limitations, particularly when fostering critical thinking skills. These challenges range from reliability issues to ethical concerns, each of which merits careful consideration. Four major challenges emerged from our findings, and we discuss these against the existing literature to draw parallels and conclusions.

First, the reliability and accuracy of AI text generators can be problematic (Essien, Chukwukelu, and Essien Citation2021; Lim et al. Citation2023). Our study did observe the ‘hallucination effect’, in which ChatGPT occasionally produced contextually inappropriate responses. However, this limitation had a silver lining: it led students to exercise extra caution, thereby inadvertently fostering their evaluative skills. Second, Cotton, Cotton, and Shipway (Citation2023) highlighted ethical concerns, particularly regarding academic integrity. In our study, however, none of the participants reported using ChatGPT for plagiaristic activities, suggesting that clear ethical guidelines may help prevent misuse of AI text generators.

Third, digital literacy barriers to integrating AI in education are widely reported in the literature (Lincoln and Kearney Citation2019), and they were also evident in our study. However, the initial difficulties students faced when using ChatGPT lessened with repeated use. Fourth, bias remains a critical issue for AI text generators such as ChatGPT, which are trained on extensive datasets that may contain inherent cultural, racial, or gender biases. Educators and students must be acutely aware of these biases when using AI tools. Biased AI outputs can reinforce stereotypes and misrepresentations, particularly affecting marginalised groups. In educational settings, such biases may skew perspectives, limiting students’ exposure and potentially shaping their beliefs and attitudes.

6. Conclusions

Our study offers insights into how AI text generators might affect the development of critical thinking skills in UK higher education, particularly in business schools. It underscores AI's potential to reshape teaching strategies, inspire the creation of more sophisticated educational tools, and even influence the development of responsible AI-related educational policies. The integration of AI text generators in education could enhance both basic and advanced levels of critical thinking, as conceptualised within Bloom's Taxonomy. While our study provides indicative insights into how AI text generators might influence critical thinking skills development, it is important to recognise that critical thinking is a complex and multifaceted skill influenced by various factors beyond AI integration in education.

With the increasing prevalence of AI in education, teachers' roles are likely to evolve from traditional information deliverers to guides who facilitate responsible AI use. This transformation raises ethical considerations, highlighting the importance of educators teaching not only ‘how’ but also ‘why’ ethical AI use matters. Overall, our findings suggest that integrating AI-driven learning can promote critical thinking skills, and we encourage educators to explore these possibilities and rethink their teaching methods for a holistic learning experience.

6.1. Theoretical implications

Our study has two major theoretical implications. First, it adds to the existing understanding of AI text generators within the context of critical thinking skills development. While prior studies have focused on various applications of AI in education (Davenport and Mittal Citation2022; Michel-Villarreal et al. Citation2023), our study provides a deeper perspective by exploring the impact and challenges of AI text generators for critical thinking skills (QAA Citation2023). In so doing, we extend prior studies on AI text generators (Ashour Citation2020; Cano, Venuti, and Martinez Citation2023) by examining their role in fostering critical thinking across different business schools within the UK HE sector.

Second, our study contributes to the dialogue around the practical application of AI text generators in academia. It identifies how AI text generators, specifically ChatGPT, can be integrated into educational strategies to enhance student engagement and learning outcomes. This aspect of our study builds upon existing literature (e.g. Ashour Citation2020; Cano, Venuti, and Martinez Citation2023) by providing concrete analyses of AI utility in educational settings, highlighting its potential to revolutionise the way critical thinking skills are taught and learned in business education. Extant studies on AI, and on AI text generators in particular, have extensively investigated the principles and tools for drafting official policies and approaches to using AI text generators (JISC Citation2023; QAA Citation2023). Furthermore, we present four major challenges that emerged from our findings, namely: reliability and accuracy, ethical concerns, digital literacy and bias.

6.2. Practical implications

The adoption of GAI and AI text generators, particularly ChatGPT, in the educational landscape can bring significant time savings. Students, and educators alike, can use these tools to streamline research and mundane tasks, thereby freeing up valuable time for more in-depth cognitive activities. Furthermore, our findings show that ChatGPT can also serve as a tool for fostering critical thinking skills (Lacka, Wong, and Haddoud Citation2021; Lim et al. Citation2023), a skill set increasingly necessary in today's fast-paced, information-saturated world. This offers educational institutions a strong incentive to integrate these AI-driven tools into their learning systems to facilitate students’ engagement (Kremantzis Citation2023).

Given the increasing relevance of critical thinking in modern HE curricula, educational policymakers should seriously consider how to integrate AI text generators effectively into the learning environment. The potential benefits are manifold, from nurturing advanced cognitive skills, such as evaluation and analysis, to streamlining research and administrative tasks. As AI becomes more sophisticated and capable, it is important for policymakers to get ahead of the curve and establish guidelines for the ethical and effective use of AI in higher education settings (Moscardini, Strachan, and Vlasova Citation2022).

Integrating AI into the curriculum should not simply be about adopting the latest technology but about preparing students for a future in which AI is likely to be a ubiquitous part of daily life. Policymakers should therefore begin discussing policies that reflect not only the immediate educational benefits but also longer-term life skills, such as digital literacy and ethical considerations when interacting with AI. This includes a policy framework that ensures equitable access to these technologies, so that all students, regardless of socio-economic background, can benefit from the enhanced learning experiences that tools like ChatGPT can provide.

6.3. Limitations and future research

Our selected research approach and theoretical framing have limitations. First, future studies could expand the scope of our research to include more students and conduct longitudinal analyses (e.g. Moscardini, Strachan, and Vlasova Citation2022) for deeper insights into AI's long-term impact on critical thinking and academic performance. Future research could also examine the ethical and social implications (e.g. QAA Citation2023) in greater depth, considering factors such as accessibility and the digital divide. Moreover, while we employed Bloom's taxonomy to structure our analysis of critical thinking skills, it may not fully capture the diversity of skills required for a comprehensive examination; future research could integrate broader competency frameworks for a more nuanced understanding. Importantly, extending our study to business schools elsewhere in Europe and globally could show how distinct socio-economic and cultural factors may shape student experiences differently from those in the UK. This would permit comparative analysis, offering insights into region-specific challenges and opportunities in HE.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Archibald, M. M. 2016. “Investigator Triangulation: A Collaborative Strategy with Potential for Mixed Methods Research.” Journal of Mixed Methods Research 10 (3): 228–50. https://doi.org/10.1177/1558689815570092.

- Ashour, S. 2020. “How Technology has Shaped University Students’ Perceptions and Expectations Around Higher Education: An Exploratory Study of the United Arab Emirates.” Studies in Higher Education 45 (12): 2513–25. https://doi.org/10.1080/03075079.2019.1617683.

- Bartunek, J. M., and I. Y. Ren. 2022. “Curriculum Isn’t Enough: What Relevant Teaching Means, How It Feels, Why It Matters, and What It Requires.” Academy of Management Learning & Education 21 (3): 503–16. https://doi.org/10.5465/amle.2021.0305.

- Bearman, M., J. Ryan, and R. Ajjawi. 2023. “Discourses of Artificial Intelligence in Higher Education: A Critical Literature Review.” Higher Education 86 (2): 369–85. https://doi.org/10.1007/s10734-022-00937-2.

- Bloom, B. S., M. D. Engelhart, E. J. Furst, W. H. Hill, and D. R. Krathwohl. 1956. Taxonomy of Educational Objectives, Handbook I: The Cognitive Domain. New York: David McKay Co.

- Braun, V., and V. Clarke. 2006. “Using Thematic Analysis in Psychology.” Qualitative Research in Psychology 3 (2): 77–101. https://doi.org/10.1191/1478088706qp063oa.

- Brookhart, S. M. 2010. How to Assess Higher-Order Thinking Skills in Your Classroom. Virginia, USA: ASCD.

- Bukoye, T., and M. Oyelere. 2019. “Assessing the Value of Capstone Unit in Developing Critical Thinking Skills in MSc Students.” In BAM 2019 Conference, Aston University, Birmingham, UK. British Academy of Management.

- Calma, A., and M. Davies. 2021. “Critical Thinking in Business Education: Current Outlook and Future Prospects.” Studies in Higher Education 46 (11): 2279–95. https://doi.org/10.1080/03075079.2020.1716324.

- Cano, Y. M., F. Venuti, and R. H. Martinez. 2023. ChatGPT and AI Text Generators: Should Academia Adapt or Resist? Massachusetts, USA: Harvard Business Publishing Education.

- Cotton, D. R. E., P. A. Cotton, and J. R. Shipway. 2023. “Chatting and Cheating: Ensuring Academic Integrity in the era of ChatGPT.” Innovations in Education and Teaching International, 1–12. https://doi.org/10.1080/14703297.2023.2190148.

- Creswell, J. W., and J. D. Creswell. 2017. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches. California, USA: Sage Publications.

- Curzon-Hobson, A. 2003. “Higher Learning and the Critical Stance.” Studies in Higher Education 28 (2): 201–12. https://doi.org/10.1080/0307507032000058091.

- Davenport, T., and N. Mittal. 2022. “How Generative AI Is Changing Creative Work.” Harvard Business Review. Accessed March 28, 2023. https://hbr.org/2022/11/how-generative-ai-is-changing-creative-work.

- De Nito, E., T. A. Rita Gentile, T. Köhler, M. Misuraca, and R. Reina. 2023. “E-learning Experiences in Tertiary Education: Patterns and Trends in Research Over the Last 20 Years.” Studies in Higher Education 48 (4): 595–615. https://doi.org/10.1080/03075079.2022.2153246.

- Dwivedi, Y. K., L. Hughes, E. Ismagilova, G. Aarts, C. Coombs, T. Crick, Y. Duan, R. Dwivedi, J. Edwards, and A. Eirug. 2021. “Artificial Intelligence (AI): Multidisciplinary Perspectives on Emerging Challenges, Opportunities, and Agenda for Research, Practice and Policy.” International Journal of Information Management 57: 101994. https://doi.org/10.1016/j.ijinfomgt.2019.08.002.

- Ennis, R. H. 1993. “Critical Thinking Assessment.” Theory Into Practice 32 (3): 179–86. https://doi.org/10.1080/00405849309543594.

- Essien, A., G. Chukwukelu, and V. Essien. 2021. “Opportunities and Challenges of Adopting Artificial Intelligence for Learning and Teaching in Higher Education.” In Fostering Communication and Learning With Underutilized Technologies in Higher Education, 67–78. IGI Global.

- Etikan, I., S. A. Musa, and R. S. Alkassim. 2016. “Comparison of Convenience Sampling and Purposive Sampling.” American Journal of Theoretical and Applied Statistics 5 (1): 1–4. https://doi.org/10.11648/j.ajtas.20160501.11.

- Garcia-Chitiva, M. del P., and J. C. Correa. 2023. “Soft Skills Centrality in Graduate Studies Offerings.” Studies in Higher Education, 1–25.

- Gioia, D. A., K. G. Corley, and A. L. Hamilton. 2013. “Seeking Qualitative Rigor in Inductive Research: Notes on the Gioia Methodology.” Organizational Research Methods 16 (1): 15–31. https://doi.org/10.1177/1094428112452151.

- Haynes, S. N., D. Richard, and E. S. Kubany. 1995. “Content Validity in Psychological Assessment: A Functional Approach to Concepts and Methods.” Psychological Assessment 7 (3): 238. https://doi.org/10.1037/1040-3590.7.3.238.

- Hooda, M., C. Rana, O. Dahiya, A. Rizwan, and M. S. Hossain. 2022. “Artificial Intelligence for Assessment and Feedback to Enhance Student Success in Higher Education.” Mathematical Problems in Engineering 2022.

- Hwang, G.-J., and C.-Y. Chang. 2023. “A Review of Opportunities and Challenges of Chatbots in Education.” Interactive Learning Environments 31 (7): 4099–112. https://doi.org/10.1080/10494820.2021.1952615.

- JISC. 2023. “Does ChatGPT Mean the End of the Essay as an Assessment Tool?” Accessed March 28, 2023. https://www.jisc.ac.uk/news/does-chatgpt-mean-the-end-of-the-essay-as-an-assessment-tool-10-jan-2023.

- Krathwohl, D. R. 2002. “A Revision of Bloom’s Taxonomy: An Overview.” Theory Into Practice 41 (4): 212–8. https://doi.org/10.1207/s15430421tip4104_2.

- Kremantzis, M. D. 2023. Employability and Student Engagement from a Business Analytics Perspective.

- Lacka, E., T. C. Wong, and M. Y. Haddoud. 2021. “Can Digital Technologies Improve Students’ Efficiency? Exploring the Role of Virtual Learning Environment and Social Media use in Higher Education.” Computers & Education 163:104099. https://doi.org/10.1016/j.compedu.2020.104099.

- Lancaster, T. 2023. “Artificial Intelligence, Text Generation Tools and ChatGPT–Does Digital Watermarking Offer a Solution?” International Journal for Educational Integrity 19:10. https://doi.org/10.1007/s40979-023-00131-6.

- Leiker, D., A. R. Gyllen, I. Eldesouky, and M. Cukurova. 2023. “Generative AI for Learning: Investigating the Potential of Synthetic Learning Videos.” arXiv preprint arXiv:2304.03784.

- Lim, W. M., A. Gunasekara, J. L. Pallant, J. I. Pallant, and E. Pechenkina. 2023. “Generative AI and the Future of Education: Ragnarök or Reformation? A Paradoxical Perspective from Management Educators.” The International Journal of Management Education 21 (2): 100790. https://doi.org/10.1016/j.ijme.2023.100790.

- Lincoln, D., and M.-L. Kearney. 2019. “Promoting Critical Thinking in Higher Education.” Studies in Higher Education 44 (5): 799–800. https://doi.org/10.1080/03075079.2019.1586322.

- Lodge, J. M., K. Thompson, and L. Corrin. 2023. “Mapping Out a Research Agenda for Generative Artificial Intelligence in Tertiary Education.” Australasian Journal of Educational Technology 39: 1–8. https://doi.org/10.14742/ajet.8695.

- Manyika, J., and J. Silberg. 2019. “What Do We Do About the Biases in AI?” Harvard Business Review. Accessed September 20, 2023. https://hbr.org/2019/10/what-do-we-do-about-the-biases-in-ai.

- McKinsey. 2023. “What Is ChatGPT, DALL-E, and Generative AI?” Accessed March 28, 2023. https://www.mckinsey.com/featured-insights/mckinsey-explainers/what-is-generative-ai.

- Michel-Villarreal, R., E. Vilalta-Perdomo, D. E. Salinas-Navarro, R. Thierry-Aguilera, and F. S. Gerardou. 2023. “Challenges and Opportunities of Generative AI for Higher Education as Explained by ChatGPT.” Education Sciences 13: 856. https://doi.org/10.3390/educsci13090856.

- Moscardini, A. O., R. Strachan, and T. Vlasova. 2022. “The Role of Universities in Modern Society.” Studies in Higher Education 47 (4): 812–30. https://doi.org/10.1080/03075079.2020.1807493.

- Mucharraz y Cano, Y., and F. Venuti. 2023. “ChatGPT and AI Text Generators: Should Academia Adapt or Resist?” Harvard Business Publishing Education. Accessed September 20, 2023. https://hbsp.harvard.edu/inspiring-minds/chatgpt-and-ai-text-generators-should-academia-adapt-or-resist.

- Murawski, L. M. 2014. “Critical Thinking in the Classroom … and Beyond.” Journal of Learning in Higher Education 10: 25–30.

- O’Dea, X. C., and M. O’Dea. 2023. “Is Artificial Intelligence Really the Next Big Thing in Learning and Teaching in Higher Education? A Conceptual Paper.” Journal of University Teaching & Learning Practice 20 (5): 1–19.

- Olga, A., A. Saini, G. Zapata, D. Searsmith, B. Cope, M. Kalantzis, V. Castro, T. Kourkoulou, J. Jones, and R. A. da Silva. 2023. “Generative AI: Implications and Applications for Education.” arXiv preprint arXiv:2305.07605.

- Penkauskienė, D., A. Railienė, and G. Cruz. 2019. “How is Critical Thinking Valued by the Labour Market? Employer Perspectives from Different European Countries.” Studies in Higher Education 44 (5): 804–15. https://doi.org/10.1080/03075079.2019.1586323.

- Peres, R., M. Schreier, D. Schweidel, and A. Sorescu. 2023. “On ChatGPT and Beyond: How Generative Artificial Intelligence may Affect Research, Teaching, and Practice.” International Journal of Research in Marketing 40 (2): 269–75. https://doi.org/10.1016/j.ijresmar.2023.03.001.

- QAA. 2023. Maintaining Quality and Standards in the ChatGPT Era: QAA Advice on the Opportunities and Challenges Posed by Generative Artificial Intelligence. Accessed September 20, 2023. https://www.qaa.ac.uk/docs/qaa/members/maintaining-quality-and-standards-in-the-chatgpt-era.pdf.

- Rudolph, J., S. Tan, and S. Tan. 2023. “ChatGPT: Bullshit Spewer or the End of Traditional Assessments in Higher Education?” Journal of Applied Learning and Teaching 6 (1): 342–63. https://doi.org/10.37074/jalt.2023.6.1.9.

- Schoepp, K. 2019. “The State of Course Learning Outcomes at Leading Universities.” Studies in Higher Education 44 (4): 615–27.

- Shaw, A., O. L. Liu, L. Gu, E. Kardonova, I. Chirikov, G. Li, S. Hu, N. Yu, L. Ma, and F. Guo. 2020. “Thinking Critically About Critical Thinking: Validating the Russian HEIghten® Critical Thinking Assessment.” Studies in Higher Education 45 (9): 1933–48. https://doi.org/10.1080/03075079.2019.1672640.

- Suskie, L. 2018. Assessing Student Learning: A Common Sense Guide. San Francisco: John Wiley & Sons.

- Tlili, A., B. Shehata, M. A. Adarkwah, A. Bozkurt, D. T. Hickey, R. Huang, and B. Agyemang. 2023. “What if the Devil is my Guardian Angel: ChatGPT as a Case Study of Using Chatbots in Education.” Smart Learning Environments 10: 15. https://doi.org/10.1186/s40561-023-00237-x.

- Xue, M., X. Cao, X. Feng, B. Gu, and Y. Zhang. 2022. “Is College Education Less Necessary with AI? Evidence from Firm-Level Labor Structure Changes.” Journal of Management Information Systems 39 (3): 865–905. https://doi.org/10.1080/07421222.2022.2096542.