
ABSTRACT

The public release of ChatGPT in November 2022 brought excitement and concerns regarding students’ use of language models in higher education. However, little research has empirically investigated students’ intention to adopt ChatGPT. This study developed a theoretical model based on the Unified Theory of Acceptance and Use of Technology (UTAUT) with an additional construct, anxiety, to investigate university students’ adoption intention of ChatGPT in two higher education contexts: the UK and Nepal. In total, 239 questionnaires from Nepal and 226 from the UK were deemed suitable for data analysis. We utilised the structural equation modelling technique to test the hypotheses. Our results reveal that performance expectancy, effort expectancy and social influence significantly impacted the adoption intention of ChatGPT in both countries. However, anxiety's impact varied between Nepal and the UK. Integrating UTAUT with a cross-country comparative approach provides insights into how ChatGPT's reception diverges between different higher education contexts. Our results also have implications for technology companies aiming to expand language models’ availability worldwide.

Introduction

This study investigates university students’ adoption intention of ChatGPT in two higher education contexts – Nepal and the UK – through the Unified Theory of Acceptance and Use of Technology (UTAUT) (Venkatesh et al. Citation2003).

Students are key higher education stakeholders who may wish to avoid adopting tools like ChatGPT in their learning (Strzelecki Citation2023). Prior research (see Table 1) indicates that the higher education context shapes how students intend to adopt such tools. Table 1 shows, for instance, that ‘Personal Innovativeness’ was not equally significant in higher education in Poland and Malaysia (Foroughi et al. Citation2023; Strzelecki Citation2023). Also, educators and higher education institutions oppose tools such as ChatGPT, arguing that such tools escalate plagiarism and cheating among students (Strzelecki and ElArabawy Citation2024). Accordingly, a multitude of challenges and considerations contribute to the understanding of the adoption of tools like ChatGPT in higher education (Strzelecki and ElArabawy Citation2024). Such challenges and considerations can be understood only by studying ChatGPT adoption in different higher education contexts.

Table 1. Studies employing UTAUT to study ChatGPT adoption in higher education.

The UK is a developed country, while Nepal is a least developed country. Comparing ChatGPT adoption among students in both countries will provide interesting insights regarding behavioural intention and subsequent usage behaviour due to differences in technological access and infrastructure, cultural attitudes, economic impacts, education systems, and regulatory approaches. Accordingly, adopting a tool like ChatGPT merits further study in Nepal and the UK. The two countries have different cultural contexts. While English is the official language in the UK, English in Nepal is spoken with significant differences in dialect, accent, and usage. The two countries also have varied technological infrastructures (Department for Business and Trade Citation2024; Mannuru et al. Citation2023) and differ in AI-related regulations. While the UK has issued related guidelines for using tools such as ChatGPT in education (Department for Education Citation2023), Nepal has yet to issue such guidelines (Jha and Yadav Citation2022).

Other studies (e.g. Strzelecki and ElArabawy Citation2024) have recognised the significance of differences in higher education contexts when adopting tools like ChatGPT. We also reasoned that students in the UK are more aware of the appropriate and expected use of GenAI than students in Nepal due to differences in the higher education systems (Department for Education Citation2023). We wanted to examine how two drastically different contexts shape the relationship between anxiety, the UTAUT constructs, and the adoption of ChatGPT.

UTAUT is an appropriate theoretical lens to study the voluntary use and acceptance of new technologies such as ChatGPT. It examines the relationship between the perceived usefulness of new technologies, their perceived ease of use, social influence, and the facilitating conditions for adopting them (Venkatesh et al. Citation2003).

This study contributes to theory, practice, and methodology. At the theory level, it extends the UTAUT theory with the anxiety construct (students experience anxiety when adopting and using tools such as ChatGPT). The analysis reveals that performance expectancy, effort expectancy, and social influence significantly influence ChatGPT adoption intention in the context of higher education in both countries. However, the impact of anxiety on ChatGPT adoption intention varies between Nepal and the UK. For practice, this study draws the attention of educators, developers, regulators, and policymakers to the emotional dimension (anxiety) of adopting ChatGPT. The results hold implications for technology companies seeking to expand the global availability of language models. On a methodological level, the study adapts an existing framework to study emerging technologies like ChatGPT and urges future studies to consider extending the framework with other constructs, such as trust.

Literature review

Generative artificial intelligence (GenAI), a group of machine learning algorithms, generates content from the large volume of data it has been trained on when responding to a prompt (Chan and Hu Citation2023). One example is ChatGPT, a large language model (Chan and Hu Citation2023).

ChatGPT is ‘an artificial intelligence (AI) powered chatbot that creates surprisingly intelligent-sounding text in response to user prompts, including homework assignments and exam-style questions’ (Stokel-Walker Citation2022, para 1). ChatGPT predicts a highly probable next word when generating text; thus, unlike humans, it has no stake in and takes no responsibility for the generated content (Lindebaum and Fleming Citation2023).

Accordingly, GenAI helps with information processing (Alavi, Leidner, and Mousavi Citation2024) and reduces cognitive load (Zirar Citation2023) when developing knowledge in the AI era. Such tools can analyse vast amounts of data when generating outputs. However, they also introduce known risks, such as over-reliance, as well as risks that are yet to be identified (Alavi, Leidner, and Mousavi Citation2024; Zirar Citation2023). Knowledge development, therefore, suggests a balancing act between human-derived and AI-derived knowledge creation (Alavi, Leidner, and Mousavi Citation2024). In this balancing act, the usefulness of GenAI tools such as ChatGPT depends on continuously improving the quality of the output (Camilleri Citation2024); the impact of GenAI tools gradually unfolds as they are improved further and as various stakeholders interact with them in various contexts (Sabherwal and Grover Citation2024).

In higher education, one concern is students’ use of ChatGPT to generate assessed work (Bin-Nashwan, Sadallah, and Bouteraa Citation2023). However, ChatGPT lacks intelligence and understanding and relies on pattern recognition over vast data (Dwivedi et al. Citation2023). The material generated by ChatGPT, in terms of knowledge and accuracy, is questionable unless checked (Dwivedi et al. Citation2023). Therefore, text generation solely through ChatGPT-like tools is incompatible with the principles of scientific responsibility (Lindebaum and Fleming Citation2023).

As key stakeholders in higher education, students can potentially engage with such tools to ask questions, receive feedback, and prepare for assignments. These use cases include ‘ideation and feedback, writing, background research, data analysis, coding, and mathematical derivations.’ (Korinek Citation2023, 1281) ChatGPT can be a complementary teaching-learning aid for students (Ali et al. Citation2024). However, such uses depend on further improving the quality of responses from tools such as ChatGPT to reduce misinformation, bias, hallucination and adversarial prompts (Camilleri Citation2024).

How students use tools like ChatGPT is debatable. Solely using ChatGPT to generate content is incompatible with scientific responsibility, as such tools bear no responsibility for the content they generate (Lindebaum and Fleming Citation2023). They also undermine the purpose of assessment in student learning (Newton and Xiromeriti Citation2024). They likely improve student learning outcomes in the short term, but once the novelty effect wears off, their expected impact on student learning outcomes will diminish in the longer term (Wu and Yu Citation2024). However, a counterargument is that tools such as ChatGPT increase productivity and decrease inequality between individuals (Noy and Zhang Citation2023). Therefore, if students are prevented from using such tools, they will miss out on essential skills, such as prompt writing, that help them use such tools to become more productive later in the workplace. Rather than prohibiting students from using such tools, this line of research suggests increasing student awareness and training them to use such tools (Strzelecki and ElArabawy Citation2024). Also, higher education institutions need to adopt ‘technological explicitness’ in their academic integrity policies (Perkins and Roe Citation2023). Therefore, these tools will likely gradually ‘play a specific and defined role’ in student learning (Zirar Citation2023).

However, a recent study suggests that higher education institutions (HEIs) had yet to revise their academic integrity policies to address students’ use of GenAI tools, such as ChatGPT, six months after the public release of ChatGPT (Perkins and Roe Citation2023). Instead, HEIs have focused on issuing guidance documents that take a positive view of GenAI tools while advising caution when students use them (Perkins and Roe Citation2023). Perkins and Roe (Citation2023) suggest that HEIs must introduce ‘technological explicitness’ in academic integrity policies to increase student awareness. ‘Technological explicitness’ in academic integrity policies requires higher education institutions and policymakers to debate the impact, consequences and opportunities of GenAI, considering the speed at which such technologies advance (Zirar Citation2023).

This discussion indicates that research on how students intend to adopt and subsequently use language models is developing; therefore, further research is needed to understand students’ behavioural intentions to use such tools to augment their abilities.

In this study, the UTAUT is employed to understand factors influencing the intention to use language models, such as ChatGPT. There are alternatives to UTAUT to investigate technology adoption, such as the Technology Acceptance Model (TAM) (Davis Citation1989) and the Diffusion of Innovations (DOI) (Rogers Citation2003). However, UTAUT has a higher predictive power and is more comprehensive than the alternatives (Venkatesh et al. Citation2003). For example, UTAUT outperforms TAM in predicting user adoption behaviour in various contexts by considering more factors (Venkatesh et al. Citation2003). Further, the DOI focuses on how innovations spread through social networks (Rogers Citation2003). The DOI has been influential, but unlike UTAUT, it focuses on the characteristics of innovation.

UTAUT has attracted criticism, for instance, for not considering hedonic factors of attractiveness and enjoyment (Abadie, Chowdhury, and Mangla Citation2024). On the other hand, UTAUT is well-established (Gulati et al. Citation2024; Strzelecki Citation2023), synthesises preexisting models (Strzelecki and ElArabawy Citation2024), and has been found to be a reliable measure of higher education students’ adoption of technology (Gulati et al. Citation2024; Strzelecki and ElArabawy Citation2024). UTAUT is employed to investigate the adoption of new technologies in higher education (Gulati et al. Citation2024; Strzelecki Citation2023); it helps explain the acceptance process and considers individual differences (Strzelecki and ElArabawy Citation2024).

Further, UTAUT has recently been employed in higher education (see Table 1) to understand the factors influencing the intention to use ChatGPT for educational purposes (Foroughi et al. Citation2023). Foroughi et al. (Citation2023) found that performance expectancy, effort expectancy, hedonic motivation, and learning value significantly influenced the intention to use ChatGPT. Strzelecki (Citation2023) found that habit was the main predictor of behavioural intention, followed by performance expectancy and hedonic motivation. For use behaviour, the principal determinant was behavioural intention, followed by personal innovativeness (Strzelecki Citation2023).

However, the existing understanding is still developing and requires further research. Existing literature (e.g. Dwivedi et al. Citation2023; Strzelecki Citation2023) emphasises the need for further research on adopting language models in higher education. Further, Table 1 suggests that context influences behavioural intention and subsequent usage behaviour.

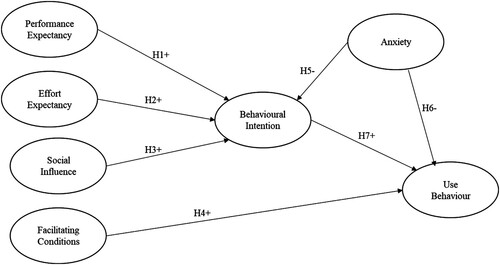

Accordingly, this study developed a theoretical model from the four components of the UTAUT: performance expectancy, effort expectancy, social influence, and facilitating conditions. The additional construct of anxiety was added to the model, as the adoption of ChatGPT for students is also likely to be impacted by this construct (Bin-Nashwan, Sadallah, and Bouteraa Citation2023). Other studies have added constructs to or removed constructs from UTAUT to reflect GenAI tools like ChatGPT. For instance, Strzelecki (Citation2023) removed ‘Price Value’ from UTAUT, reasoning that ChatGPT offers a free version to everyone, and added ‘Personal Innovativeness’ to investigate whether willingness to change and learn new things also predicts ChatGPT adoption among students. Following previous studies, we extended the UTAUT model with an additional construct, anxiety. Recent publications (e.g. Cotton, Cotton, and Reuben Shipway Citation2023; Dwivedi et al. Citation2023; Strzelecki and ElArabawy Citation2024) suggest that ‘anxiety’, such as ‘feeling of unfair advantage (false negative)’, ‘feeling of being caught’, ‘feeling of being monitored closely’, ‘feeling of being accused wrongly (false positive)’, also predicts students’ behavioural intention and subsequent usage behaviour of ChatGPT.

Hypotheses

Performance expectancy

Performance expectancy refers to the degree to which an individual considers that using a particular technology improves their ability to carry out specific tasks or achieve goals (Venkatesh et al. Citation2003). It impacts individuals’ behavioural intention to adopt a particular technology (Kumar and Bervell Citation2019). Performance expectancy is critical to determine whether a particular technology is adopted in academic contexts (Martín-García, Martínez-Abad, and Reyes-González Citation2019). In this study, performance expectancy refers to the extent to which students in Nepal and UK higher education believe using ChatGPT will improve their academic achievement and productivity. Therefore, we hypothesise:

H1. Performance expectancy positively and significantly impacts the behavioural intention to use ChatGPT.

Effort expectancy

Effort expectancy refers to the degree to which an individual expects that using a particular technology is simple and requires little effort (Venkatesh et al. Citation2003). It impacts individuals’ behavioural intention to adopt a particular technology (Hu, Laxman, and Lee Citation2020). In this study, effort expectancy depicts the degree to which Nepal and UK higher education students believe ChatGPT is simple and requires little effort. Therefore, we hypothesise:

H2. Effort expectancy positively and significantly impacts the behavioural intention to use ChatGPT.

Social influence

Social influence refers to the degree to which an individual believes that people who are important to them think they should use a particular technology (Venkatesh et al. Citation2003). In this study, social influence refers to how much students in Nepal and UK higher education believe their colleagues, teachers, and other influential people in their social network encourage them to utilise ChatGPT. Therefore, we hypothesise:

H3. Social influence positively and significantly impacts the behavioural intention to use ChatGPT.

Facilitating conditions

‘Facilitating conditions’ refers to the degree to which individuals perceive they have the required resources and support to effectively use a specific technology (Venkatesh et al. Citation2003). Research has demonstrated that facilitating conditions determine students’ behavioural intention to use new technologies (e.g. Kumar and Bervell Citation2019; Nikolopoulou, Gialamas, and Lavidas Citation2020; Samsudeen and Mohamed Citation2019). In this study, facilitating conditions would refer to how much students believe they have adequate access to ChatGPT (free and paid versions) and the necessary knowledge and resources to use ChatGPT effectively in their learning. Therefore, we hypothesise:

H4. Facilitating conditions have a positive and significant impact on the use behaviour of ChatGPT.

Manifestations of anxiety in UTAUT

Students experience anxiety when faced with new technologies (Meinhardt-Injac and Skowronek Citation2022). ‘Anxiety’ refers to apprehension and fear of using new and unfamiliar technologies (Simonson et al. Citation1987). Students with low confidence in their task abilities will likely resort to ChatGPT and experience anxiety about being caught (Bin-Nashwan, Sadallah, and Bouteraa Citation2023). Therefore, anxiety is likely to impact students’ adoption of ChatGPT. Thus, we hypothesise:

H5. Anxiety negatively and significantly impacts the behavioural intention to use ChatGPT.

H6. Anxiety has a negative and significant impact on the use behaviour of ChatGPT.

Behavioural intention

Behavioural intention has a positive, direct, and significant impact on the use behaviour of technology (see e.g. Hu, Laxman, and Lee Citation2020; Kumar and Bervell Citation2019; Nikolopoulou, Gialamas, and Lavidas Citation2020). For example, a study on teachers’ behavioural intention and usage behaviour of information technology (IT) in lectures found that performance expectancy and effort expectancy directly affected teachers’ behavioural intention, which later influenced their use behaviour (Pham et al. Citation2020). This study proposes that performance expectancy, effort expectancy, and social influence directly and positively impact behavioural intention and subsequently positively impact use behaviour. Therefore, we hypothesise:

H7. Behavioural intention has a positive and significant impact on the use behaviour of ChatGPT.

Figure 1 illustrates the theoretical framework.

Research method

Measurement instruments

Measurement scales were primarily adapted from earlier studies and modified to fit ChatGPT adoption in Nepal and the UK context. The items for all the seven latent variables, namely, PE, EE, SI, FC, ANX, BI and UB were derived from the widely accepted UTAUT model developed by Venkatesh et al. (Citation2003). The scale items of the independent variables were measured by a five-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree). Two academics pre-tested the survey questionnaire to assess its face and content validity. Based on their comments, we revised some of the items. The revised version of the questionnaire was pilot tested with 40 students. The Cronbach’s alpha values of all constructs exceeded the threshold (i.e. α > 0.6–0.7) recommended by Ursachi, Horodnic, and Zait (Citation2015).
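As an illustration of the reliability check described above, the following minimal sketch computes Cronbach's alpha from item-level responses using the standard formula. The function name `cronbach_alpha` and the `pilot` responses are hypothetical and are not the study's data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's summed score.
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical five-point Likert answers to three items of one construct.
pilot = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
    [3, 4, 3],
]
alpha = cronbach_alpha(pilot)
print(f"alpha = {alpha:.3f}")
```

A construct would pass the check described in the text if its alpha exceeds the 0.6–0.7 range.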

Data collection

The data was collected through a Qualtrics online survey in Nepal and UK higher education institutions between May and September 2023. We only used a questionnaire in English because students from Nepal studied in English medium and had sufficient command of the English language to fill out the survey. Convenience sampling was employed to collect the required data. Survey questionnaires were distributed to 1400 students from both countries, and 510 responses were received, a response rate of 36.43%. A total of 45 responses were discarded due to missing values. As a result, the number of usable questionnaires in this study was 465. The respondents were university students, and the sample characteristics are summarised in Table 2.
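The sample arithmetic can be checked with a few lines. This is only a worked example using the counts reported in the text; the variable names are illustrative.

```python
distributed = 1400      # questionnaires sent out
received = 510          # responses returned
discarded = 45          # responses dropped for missing values

response_rate = received / distributed * 100   # about 36.4%
usable = received - discarded

print(f"response rate: {response_rate:.2f}%")
print(f"usable questionnaires: {usable}")

# The usable total matches the country split reported in the abstract.
nepal, uk = 239, 226
assert usable == nepal + uk
```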

Table 2. Sample characteristics.

Results

We used IBM SPSS AMOS (v. 28) statistical software to perform the structural equation modelling (SEM).

Measurement model

We assess the measurement properties of the constructs using confirmatory factor analyses (CFA). As shown in Table 3, the results of model goodness-of-fit indicate that both models achieve a good overall fit. In particular, the Chi-Square value relative to the degrees of freedom (CMIN/DF) is less than the cut-off value of 3.00, suggesting an acceptable fit (Schermelleh-Engel, Moosbrugger, and Müller Citation2003). In addition, the comparative fit indices (CFIs) of 0.921 and 0.922 indicate a good fit (Hu and Bentler Citation1999). Tucker–Lewis index (TLI) values are above the threshold level of 0.90, indicating a good fit. Finally, the root mean square error of approximation (RMSEA) also indicates an acceptable fit for both the Nepal and the UK models since their RMSEA values are below the threshold of 0.08 (Hu and Bentler Citation1999).
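The cut-off logic in this paragraph can be sketched as a small lookup. The CMIN/DF, TLI and RMSEA cut-offs follow the text; the CFI cut-off of 0.90 is an assumption, since the text reports CFI values without stating a threshold. Apart from the CFI of 0.921, the index values in `example` are hypothetical.

```python
# ("max", x): value must be below x; ("min", x): value must be above x.
CUTOFFS = {
    "CMIN/DF": ("max", 3.00),
    "CFI": ("min", 0.90),    # assumed cut-off, not stated in the text
    "TLI": ("min", 0.90),
    "RMSEA": ("max", 0.08),
}


def check_fit(indices):
    """Return {index name: True/False} for each reported fit index."""
    result = {}
    for name, value in indices.items():
        kind, cutoff = CUTOFFS[name]
        result[name] = value < cutoff if kind == "max" else value > cutoff
    return result


# Only the CFI value comes from the text; the others are hypothetical.
example = {"CMIN/DF": 2.10, "CFI": 0.921, "TLI": 0.910, "RMSEA": 0.065}
report = check_fit(example)
print(report)
```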

Table 3. Fit-Indices of the measurement models.

Hair et al. (Citation2010) argued that all standardised factor loadings should be at least 0.5; our constructs meet this threshold, and all loadings are statistically significant (p < 0.001). A generally accepted rule is that a Cronbach’s alpha of 0.6–0.7 indicates an acceptable level of reliability (Ursachi, Horodnic, and Zait Citation2015). All our constructs meet this criterion, which means that they have good internal consistency (cf. Table 4).

Table 4. Reliability and validity.

The average variance extracted (AVE) and composite reliability (CR) were calculated to verify convergent validity. Generally, an AVE of 0.5 or greater (Hair et al. Citation2010) and a CR of 0.6 or greater are desirable (Fornell and Larcker Citation1981). As shown in Table 4, the CR for all the latent constructs (except the PE construct for Nepal) was greater than the recommended value. Likewise, the AVEs of the seven latent constructs ranged from 0.681 to 0.863, above the recommended value; thus, convergent validity was confirmed.
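For readers unfamiliar with these two statistics, the sketch below computes them from standardised factor loadings using the usual Fornell and Larcker formulas, assuming uncorrelated measurement errors. The loadings are hypothetical, not values from Table 4.

```python
def average_variance_extracted(loadings):
    """AVE: mean of the squared standardised loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR: (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(loadings)
    # Error variance of a standardised indicator is 1 - loading^2.
    error = sum(1 - l * l for l in loadings)
    return total ** 2 / (total ** 2 + error)


# Hypothetical standardised loadings for one four-item construct.
loadings = [0.82, 0.85, 0.79, 0.88]
ave = average_variance_extracted(loadings)
cr = composite_reliability(loadings)
print(f"AVE = {ave:.3f}, CR = {cr:.3f}")
```

With these loadings, both statistics clear the 0.5 and 0.6 guidelines cited in the text.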

To verify discriminant validity, the square root of the AVE and the correlation coefficients with the other variables were compared. If the square root of the AVE is greater than the correlations with other variables, discriminant validity holds (Fornell and Larcker Citation1981). As shown in Table 5, all the latent variables met this condition, thus confirming discriminant validity.
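The comparison described here can be expressed as a short check. This is a minimal sketch; the AVE values and correlations below are hypothetical and only three constructs are shown.

```python
from math import sqrt


def fornell_larcker(ave_by_construct, correlations):
    """True for a construct if sqrt(AVE) exceeds all of its absolute correlations."""
    result = {}
    for name, ave_value in ave_by_construct.items():
        related = [abs(r) for pair, r in correlations.items() if name in pair]
        result[name] = all(sqrt(ave_value) > r for r in related)
    return result


# Hypothetical AVEs and inter-construct correlations.
aves = {"PE": 0.70, "EE": 0.72, "ANX": 0.68}
corrs = {("PE", "EE"): 0.61, ("PE", "ANX"): -0.25, ("EE", "ANX"): -0.30}
validity = fornell_larcker(aves, corrs)
print(validity)
```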

Table 5. Discriminant validity results.

In addition, Harman’s (Citation1967) single factor test was conducted to check for the existence of common method variance. We found that a single factor explains only 27.259% (less than the 50 percent threshold) of the total variation. Thus, no common factor emerged (Podsakoff, MacKenzie, and Podsakoff Citation2012). To check for possible multicollinearity issues, the variance inflation factor (VIF) was analysed. The VIF values of all constructs were less than 5 (see Appendix A), suggesting that our model does not suffer from multicollinearity (Kock and Lynn Citation2012).
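As a reminder of what the VIF threshold means, the sketch below uses the standard definition VIF = 1 / (1 − R²), where R² comes from regressing one predictor on all the others. The R² values are hypothetical; the study's own VIFs are in its Appendix A.

```python
def vif(r_squared):
    """Variance inflation factor for a predictor with the given auxiliary R^2."""
    if not 0 <= r_squared < 1:
        raise ValueError("R^2 must lie in [0, 1)")
    return 1 / (1 - r_squared)


# Hypothetical auxiliary R^2 values for three predictors.
for name, r2 in {"PE": 0.45, "EE": 0.52, "SI": 0.30}.items():
    value = vif(r2)
    print(f"{name}: VIF = {value:.2f}, below 5: {value < 5}")
```

Under this definition, a VIF of 5 corresponds to an auxiliary R² of 0.8, meaning the other predictors explain 80% of the variance in the predictor in question.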

Structural model

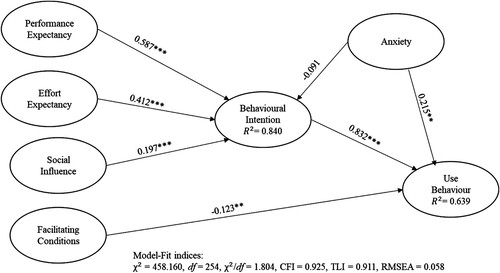

To test the proposed hypotheses, path analysis was used to assess the relationships among the constructs of the structural models for the two nations, Nepal and the UK; the outcomes of the hypothesis tests are presented in Table 6. The results show that the relationships between PE and BI (β = 0.587, t = 5.765, p < .01), EE and BI (β = 0.412, t = 5.151, p < .01), SI and BI (β = 0.197, t = 3.607, p < .01), and BI and UB (β = 0.832, t = 8.031, p < .01) were significant. Thus, H1, H2, H3 and H7 were supported for Nepal. However, unexpectedly, the ANX → UB path was insignificant (p > 0.05) in the Nepal context. Figure 2 shows that the R² value of 0.840 for BI indicates the good explanatory power of the proposed model. Moreover, an excellent R² value of 0.639 was documented for use behaviour, which further supports the explanatory power of the proposed model.

Table 6. Hypotheses testing.

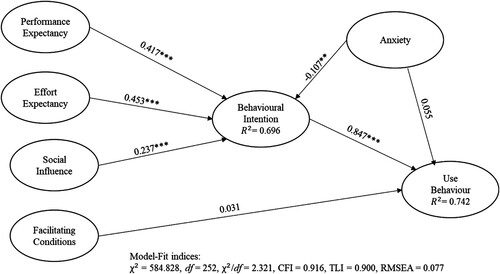

Figure 3 shows the results of the structural analysis in the UK. The results indicate that the associations between PE and BI (β = 0.417, t = 6.201, p < .01), EE and BI (β = 0.453, t = 6.880, p < .01), SI and BI (β = 0.237, t = 4.502, p < .01), ANX and BI (β = −0.107, t = −2.050, p < .05) and BI and UB (β = 0.847, t = 8.918, p < .01) were significant. Therefore, H1, H2, H3, H5 and H7 were supported; however, surprisingly, the FC → UB and ANX → UB paths were insignificant (p > 0.05). Thus, H4 and H6 were not supported in the context of the UK. Figure 3 also shows that the R² values of 0.696 for behavioural intention and 0.742 for actual use behaviour indicate the explanatory power of the current study model.
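The significance levels attached to the path coefficients can be approximated from the reported t values. The sketch below assumes the t values behave as critical ratios that are approximately standard normal, which is a simplifying assumption given samples of more than 200 per country, and not a description of the software's exact computation. The function name `two_tailed_p` is illustrative.

```python
from math import erfc, sqrt


def two_tailed_p(critical_ratio):
    """Two-tailed p-value under a standard normal approximation."""
    return erfc(abs(critical_ratio) / sqrt(2))


# t values taken from the reported results.
paths = {
    "UK ANX -> BI": -2.050,
    "UK SI -> BI": 4.502,
    "Nepal SI -> BI": 3.607,
}
for label, t in paths.items():
    print(f"{label}: t = {t}, p = {two_tailed_p(t):.4f}")
```

Under this approximation, the UK ANX → BI path falls between the .01 and .05 levels, in line with the reported p < .05, while the other two paths fall below .01.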

Discussion and implications

This study aimed to identify the predictors of behavioural intention and use behaviour towards ChatGPT among students in the UK and Nepal. The findings indicate that in both Nepal and the UK, Performance Expectancy (PE), Effort Expectancy (EE), and Social Influence (SI) had a significant direct effect on students’ intent to use ChatGPT. Additionally, Anxiety (ANX) was found to significantly but adversely predict behavioural intention in the UK. However, no significant relationships were found between Facilitating Conditions (FC) and Use Behaviour (UB) in either country.

In this study, PE has the greatest effect on the behavioural intent to use ChatGPT in Nepal. This is consistent with previous studies that examined the intent to adopt AI-based technology among students using the UTAUT model (Bin-Nashwan, Sadallah, and Bouteraa Citation2023; Kwak, Seo, and Ahn Citation2022).

This emphasises that a higher perception of benefits compared to costs is linked to increased adoption intentions. This is in line with the findings of recent research that has emphasised two significant positive drivers of ChatGPT adoption in academia: its time-saving features and its perceived capacity to enhance academic efficiency and reduce stress among users (Bin-Nashwan, Sadallah, and Bouteraa Citation2023), and improve skills, ultimately leading to increased productivity (Dwivedi et al. Citation2023).

In the UK, EE emerged as the strongest predictor of the intent to use ChatGPT. Effort expectancy refers to users’ perceived level of effort required for a particular task and the ease of the task (Venkatesh, Thong, and Xu Citation2012). Multiple studies have documented that effort expectancy predicts intent to use and attitude toward technology (El-Masri and Tarhini Citation2017; Kwak, Seo, and Ahn Citation2022).

The significance of SI in academic technology adoption is supported by multiple studies (El-Masri and Tarhini Citation2017; Maican et al. Citation2019). Moreover, the positive links between behavioural intention and usage behaviour in both the UK and Nepal affirm the pivotal role of intention in shaping actual use. Strong intention corresponds to heightened engagement. This aligns with earlier studies highlighting intention's significance in technology adoption (Šumak and Šorgo Citation2016).

Anxiety's impact varied between Nepal and the UK. In Nepal, anxiety lacked a significant impact on usage behaviour, whereas in the UK, it negatively affected behavioural intention but not usage behaviour. This suggests that UK students might hesitate due to anxiety, yet this does not necessarily impede actual use, potentially due to other factors like perceived usefulness and social encouragement outweighing anxiety-induced hesitation. This finding extends prior research acknowledging the psychological dimension of technology adoption (Meinhardt-Injac and Skowronek Citation2022).

Anxiety's relevance in technology acceptance is particularly notable in voluntary technology use contexts (Khechine and Lakhal Citation2018). Existing evidence establishes anxiety's direct negative effect on adoption intention (Holtzman, Pereira, and Yeung Citation2018; Maican et al. Citation2019), while other studies confirm its role in predicting usage behaviour (Khechine and Lakhal Citation2018). Recent research indicates that incoming undergraduates at a UK university demonstrated confidence in their grasp of academic integrity, as observed by Newton (Citation2016). The heightened awareness of academic integrity and the consequential penalties for violations among UK students could potentially elucidate the apprehension associated with utilising ChatGPT. Bin-Nashwan, Sadallah, and Bouteraa (Citation2023) point out the impact of academic integrity on the adoption of ChatGPT by academics. Their findings reveal an inverse relationship between academic integrity and the utilisation of ChatGPT, suggesting that higher levels of academic integrity correspond to lower usage of the AI tool. However, academic integrity also serves as a positive moderator for other factors influencing ChatGPT usage. This highlights the necessity for collaborative efforts among stakeholders to effectively address these concerns. Shelton and Hill (Citation1969) establish a link between anxiety and increased instances of student cheating. Their research of how anxiety and awareness of peer performance shape academic dishonesty attributes cheating to the negative emotions associated with the fear of failure.

The non-significant influence of facilitating conditions on usage behaviour in both contexts enriches discussions on resource and institutional support roles. This aligns with prior examinations of facilitating conditions in technology adoption (Kumar and Bervell Citation2019; Nikolopoulou, Gialamas, and Lavidas Citation2020). This insignificance implies that while technology access and institutional support matter, they might not be primary drivers for ChatGPT use. Notably, the FC to BI relationship, unverified in the original UTAUT, is validated in UTAUT2 (Venkatesh, Thong, and Xu Citation2012) and corroborated by other studies (El-Masri and Tarhini Citation2017; Maican et al. Citation2019).

The research findings carry significant implications. The divergent impact of anxiety in the two countries underscores the necessity of tailoring technology adoption approaches due to cultural and contextual influences on emotional responses. Emphasising ChatGPT's role in academic enhancement (PE), user-friendliness (EE), and social support (SI) can incentivise students to embrace and utilise the technology. Although facilitating conditions had an insignificant effect on usage, institutions must still allocate resources to create an environment conducive to seamless technology integration, irrespective of facilitating conditions and anxiety levels. Addressing technology-related anxiety, particularly in the UK, through awareness initiatives can enhance students’ comfort with ChatGPT. The findings regarding facilitating conditions and anxiety call for further studies, aligning with the demand for nuanced investigations.

This research makes theoretical, practical, and methodological contributions to understanding AI acceptance, especially in student settings. Theoretically, it integrates technology anxiety into the UTAUT model, an expansion that acknowledges the emotional dimension in addition to the cognitive aspects of technology adoption. By identifying links between technology anxiety and key UTAUT predictors, the study offers practical insights for educators, developers, and regulators/policy makers seeking to enhance AI adoption in education. Furthermore, the research contributes methodologically by showcasing the importance of adapting existing frameworks to accommodate emerging technologies like ChatGPT, urging future studies to consider emotional dimensions in technology acceptance models for a more comprehensive understanding of user behaviour. The findings on anxiety's influence on technology adoption behaviour underline the importance of a nuanced approach to teaching, learning, and knowledge development within Higher Education Institutions (HEIs). Educators in HEIs need to tailor their pedagogical approaches to accommodate the diverse cultural backgrounds and psychological dispositions of students. For example, in Nepal, where anxiety has less impact on technology usage, educators can focus on highlighting the practical benefits and social encouragement of technology adoption. Conversely, in the UK, where anxiety negatively affects behavioural intention, educators should consider implementing additional support mechanisms to alleviate student apprehensions and promote effective technology utilisation.

Furthermore, HEIs should reassess their technology integration strategies, incorporate anxiety-related modules into curricula, provide professional development opportunities for staff to address psychological dimensions of technology adoption, and foster collaborative initiatives among stakeholders to tackle the complex interplay between anxiety, technology adoption, and academic integrity. The study also highlights the necessity of adapting adoption theories to different contexts while acknowledging cultural disparities and barriers. Methodologically, it establishes variable validity and reliability, providing a framework for studying ChatGPT adoption in universities within the UK and Nepal.

Conclusions, limitations and future research

This study aimed to examine university students’ adoption of ChatGPT in Nepal and the UK, employing the Unified Theory of Acceptance and Use of Technology. It contributes to the literature by investigating how factors such as technological access and cultural differences affect adoption intention and usage behaviour. Furthermore, the study extends the UTAUT theory by integrating anxiety, highlighting the emotional aspect of technology adoption.

Results indicated that performance expectancy, effort expectancy, and social influence significantly impacted intention to use in both countries, with anxiety negatively affecting intention in the UK but not in Nepal. Facilitating conditions did not predict usage behaviour in either country. In Nepal, perceived benefits (performance expectancy) were the strongest predictor, while ease of use (effort expectancy) was most influential in the UK.

The study highlights the importance of addressing technology-related anxiety, particularly in the UK, and suggests tailoring technology adoption strategies to cultural nuances, while emphasising benefits, user-friendliness, and social support. It also emphasises the need to consider emotional dimensions in technology acceptance models and recommends integrating anxiety-related modules into curricula. Overall, this research enhances our understanding of AI tool adoption in higher education and provides valuable insights for both theory and practice.

While the study provides valuable insights into the adoption of ChatGPT in higher education, it is essential to acknowledge several limitations. Firstly, the research was confined to the UK and Nepal, potentially limiting the generalisability of its findings to other contexts. Secondly, the reliance on self-reported data and a snapshot approach may introduce biases and overlook long-term changes in adoption behaviour. Thirdly, while the UTAUT framework is robust, it may not encompass all relevant factors influencing ChatGPT adoption. Lastly, the quantitative focus of the study may overlook qualitative aspects of students’ experiences with ChatGPT.

To address these limitations, further research is needed to enhance our understanding of ChatGPT adoption. This could involve expanding to diverse educational contexts beyond the UK and Nepal, employing longitudinal and qualitative research methods to capture nuanced changes over time, exploring additional factors such as trust and perceived risks associated with ChatGPT, and investigating the roles of educators and institutions in facilitating or hindering adoption. Such endeavours would provide deeper insights into the complex dynamics surrounding ChatGPT adoption in higher education.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Abadie, Amelie, Soumyadeb Chowdhury, and Sachin Kumar Mangla. 2024. “A Shared Journey: Experiential Perspective and Empirical Evidence of Virtual Social Robot ChatGPT's Priori Acceptance.” Technological Forecasting and Social Change 201:123202. https://doi.org/10.1016/j.techfore.2023.123202

- Alavi, Maryam, Dorothy E. Leidner, and Reza Mousavi. 2024. “A Knowledge Management Perspective of Generative Artificial Intelligence.” Journal of the Association for Information Systems 25 (1): 1–12. https://doi.org/10.17705/1jais.00859

- Ali, Omar, Peter A. Murray, Mujtaba Momin, Yogesh K. Dwivedi, and Tegwen Malik. 2024. “The Effects of Artificial Intelligence Applications in Educational Settings: Challenges and Strategies.” Technological Forecasting and Social Change 199:123076. https://doi.org/10.1016/j.techfore.2023.123076

- Bin-Nashwan, Saeed Awadh, Mouad Sadallah, and Mohamed Bouteraa. 2023. “Use of ChatGPT in Academia: Academic Integrity Hangs in the Balance.” Technology in Society 75 (November): 102370. https://doi.org/10.1016/j.techsoc.2023.102370

- Camilleri, M. A. 2024. “Factors Affecting Performance Expectancy and Intentions to use ChatGPT: Using SmartPLS to Advance an Information Technology Acceptance Framework.” Technological Forecasting and Social Change 201:123247. https://doi.org/10.1016/j.techfore.2024.123247

- Chan, Cecilia Ka Yuk, and Wenjie Hu. 2023. “Students’ Voices on Generative AI: Perceptions, Benefits, and Challenges in Higher Education.” International Journal of Educational Technology in Higher Education 20 (1): 43. https://doi.org/10.1186/s41239-023-00411-8

- Cotton, Debby R. E., Peter A. Cotton, and J. Reuben Shipway. 2023. “Chatting and Cheating: Ensuring Academic Integrity in the era of ChatGPT.” Innovations in Education and Teaching International 61 (2): 228–239.

- Davis, Fred D. 1989. “Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology.” MIS Quarterly 13 (3): 319–340. https://doi.org/10.2307/249008

- Department for Business and Trade. 2024. “UK infrastructure – great.gov.uk international.” Accessed 12 March 2024. https://www.great.gov.uk/international/content/investment/why-invest-in-the-uk/uk-infrastructure/.

- Department for Education. 2023. “Generative Artificial Intelligence (AI) in Education. Education, Training and Skills.” Accessed 24 February 2024. https://www.gov.uk/government/publications/generative-artificial-intelligence-in-education.

- Dwivedi, Yogesh K., Nir Kshetri, Laurie Hughes, Emma Louise Slade, Anand Jeyaraj, Arpan Kumar Kar, Abdullah M. Baabdullah, et al. 2023. “‘So What If ChatGPT Wrote It?’ Multidisciplinary Perspectives on Opportunities, Challenges and Implications of Generative Conversational AI for Research, Practice and Policy.” International Journal of Information Management 71 (August): 102642. https://doi.org/10.1016/j.ijinfomgt.2023.102642.

- El-Masri, Mazen, and Ali Tarhini. 2017. “Factors Affecting the Adoption of E-learning Systems in Qatar and USA: Extending the Unified Theory of Acceptance and Use of Technology 2 (UTAUT2).” Educational Technology Research and Development 65 (3): 743–763. https://doi.org/10.1007/s11423-016-9508-8

- Fornell, Claes, and David F. Larcker. 1981. “Structural Equation Models with Unobservable Variables and Measurement Error: Algebra and Statistics.” Journal of Marketing Research 18 (3): 382–388. https://doi.org/10.1177/002224378101800313

- Foroughi, Behzad, Madugoda Gunaratnege Senali, Mohammad Iranmanesh, Ahmad Khanfar, Morteza Ghobakhloo, Nagaletchimee Annamalai, and Bita Naghmeh-Abbaspour. 2023. “Determinants of Intention to Use ChatGPT for Educational Purposes: Findings from PLS-SEM and fsQCA.” International Journal of Human–Computer Interaction, 1–20.

- Gulati, Anmol, Harish Saini, Sultan Singh, and Vinod Kumar. 2024. “Enhancing Learning Potential: Investigating Marketing Students’ Behavioral Intentions to Adopt ChatGPT.” Marketing Education Review, 1–34. https://doi.org/10.1080/10528008.2023.2300139

- Hair, Joseph F., Mary Celsi, David J. Ortinau, and Robert P. Bush. 2010. Essentials of Marketing Research. New York: McGraw-Hill.

- Harman, D. 1967. “A Single Factor Test of Common Method Variance.” Journal of Psychology 35:359–378.

- Holtzman, Adam L., Deidre B. Pereira, and Anamaria R. Yeung. 2018. “Implementation of Depression and Anxiety Screening in Patients Undergoing Radiotherapy.” BMJ Open Quality 7 (2): e000034.

- Hu, Li-tze, and Peter M. Bentler. 1999. “Cutoff Criteria for Fit Indexes in Covariance Structure Analysis: Conventional Criteria versus New Alternatives.” Structural Equation Modeling: A Multidisciplinary Journal 6 (1): 1–55. https://doi.org/10.1080/10705519909540118

- Hu, Sailong, Kumar Laxman, and Kerry Lee. 2020. “Exploring Factors Affecting Academics’ Adoption of Emerging Mobile Technologies-an Extended UTAUT Perspective.” Education and Information Technologies 25 (5): 4615–4635. https://doi.org/10.1007/s10639-020-10171-x

- Jha, Jivesh, and Alok Kumar Yadav. 2022. “How is Artificial Intelligence Changing the World? What Nepal should do to Tap it?” Nepal Live Today. Accessed 12 March 2024. https://www.nepallivetoday.com/2022/02/10/how-is-artificial-intelligence-changing-the-world-what-nepal-should-do-to-tap-it/.

- Khechine, Hager, and Sawsen Lakhal. 2018. “Technology as a Double-edged Sword: From Behavior Prediction with UTAUT to Students’ Outcomes Considering Personal Characteristics.” Journal of Information Technology Education: Research 17 (April): 63–102. https://doi.org/10.28945/4022

- Kock, Ned, and Gary Lynn. 2012. “Lateral Collinearity and Misleading Results in Variance-Based SEM: An Illustration and Recommendations.” Journal of the Association for Information Systems 13 (7): 546–580. https://doi.org/10.17705/1jais.00302

- Korinek, A. 2023. “Generative AI for Economic Research: Use Cases and Implications for Economists.” Journal of Economic Literature 61 (4): 1281–1317. https://doi.org/10.1257/jel.20231736

- Kumar, Jeya Amantha, and Brandford Bervell. 2019. “Google Classroom for Mobile Learning in Higher Education: Modelling the Initial Perceptions of Students.” Education and Information Technologies 24 (2): 1793–1817. https://doi.org/10.1007/s10639-018-09858-z

- Kwak, Yeunhee, Yon Hee Seo, and Jung-Won Ahn. 2022. “Nursing Students’ Intent to use AI-based Healthcare Technology: Path Analysis Using the Unified Theory of Acceptance and Use of Technology.” Nurse Education Today 119:105541. https://doi.org/10.1016/j.nedt.2022.105541

- Lindebaum, D., and P. Fleming. 2023. “ChatGPT Undermines Human Reflexivity, Scientific Responsibility and Responsible Management Research.” British Journal of Management, 1–12.

- Maican, Catalin Ioan, Ana-Maria Cazan, Radu Constantin Lixandroiu, and Lavinia Dovleac. 2019. “A Study on Academic Staff Personality and Technology Acceptance: The Case of Communication and Collaboration Applications.” Computers & Education 128 (January): 113–131. https://doi.org/10.1016/j.compedu.2018.09.010.

- Mannuru, Nishith Reddy, Sakib Shahriar, Zoë A. Teel, Ting Wang, Brady D. Lund, Solomon Tijani, Chalermchai Oak Pohboon, et al. 2023. “Artificial Intelligence in Developing Countries: The Impact of Generative Artificial Intelligence (AI) Technologies for Development.” Information Development, 1–19.

- Martín-García, Antonio Víctor, Fernando Martínez-Abad, and David Reyes-González. 2019. “TAM and Stages of Adoption of Blended Learning in Higher Education by Application of Data Mining Techniques.” British Journal of Educational Technology 50 (5): 2484–2500. https://doi.org/10.1111/bjet.12831

- Meinhardt-Injac, Bozana, and Carina Skowronek. 2022. “Computer Self-efficacy and Computer Anxiety in Social Work Students: Implications for Social Work Education.” Nordic Social Work Research 12 (3): 392–405. https://doi.org/10.1080/2156857X.2022.2041073.

- Newton, P. 2016. “Academic Integrity: A Quantitative Study of Confidence and Understanding in Students at the Start of their Higher Education.” Assessment & Evaluation in Higher Education 41 (3): 482–497. https://doi.org/10.1080/02602938.2015.1024199

- Newton, Philip, and Maira Xiromeriti. 2024. “ChatGPT Performance on Multiple Choice Question Examinations in Higher Education. A Pragmatic Scoping Review.” Assessment & Evaluation in Higher Education, 1–18.

- Nikolopoulou, Kleopatra, Vasilis Gialamas, and Konstantinos Lavidas. 2020. “Acceptance of Mobile Phone by University Students for their Studies: An Investigation Applying UTAUT2 Model.” Education and Information Technologies 25 (5): 4139–4155. https://doi.org/10.1007/s10639-020-10157-9

- Noy, Shakked, and Whitney Zhang. 2023. “Experimental Evidence on the Productivity Effects of Generative Artificial Intelligence.” Science 381 (6654): 187–192. https://doi.org/10.1126/science.adh2586

- Perkins, Mike, and Jasper Roe. 2023. “Decoding Academic Integrity Policies: A Corpus Linguistics Investigation of AI and Other Technological Threats.” 1–20.

- Pham, Thi Bich Thu, Lan Anh Dang, Thi Minh Hue Le, and Thi Hong Le. 2020. “Factors Affecting Teachers’ Behavioral Intention of Using Information Technology in Lecturing-Economic Universities.” Management Science Letters, 2665–2672. https://doi.org/10.5267/j.msl.2020.3.026.

- Podsakoff, Philip M., Scott B. MacKenzie, and Nathan P. Podsakoff. 2012. “Sources of Method Bias in Social Science Research and Recommendations on How to Control it.” Annual Review of Psychology 63 (1): 539–569. https://doi.org/10.1146/annurev-psych-120710-100452

- Rogers, Everett M. 2003. Diffusion of Innovations. 5th ed. New York, NY, USA: Free Press.

- Sabherwal, R., and V. Grover. 2024. “The Societal Impacts of Generative Artificial Intelligence: A Balanced Perspective.” Journal of the Association for Information Systems 25 (1): 13–22. https://doi.org/10.17705/1jais.00860

- Samsudeen, Sabraz Nawaz, and Rusith Mohamed. 2019. “University Students’ Intention to Use e-Learning Systems: A Study of Higher Educational Institutions in Sri Lanka.” Interactive Technology and Smart Education 16 (3): 219–238. https://doi.org/10.1108/ITSE-11-2018-0092

- Schermelleh-Engel, Karin, Helfried Moosbrugger, and Hans Müller. 2003. “Evaluating the fit of Structural Equation Models: Tests of Significance and Descriptive Goodness-of-fit Measures.” Methods of Psychological Research Online 8 (2): 23–74.

- Shelton, Jev, and John P. Hill. 1969. “Effects on Cheating of Achievement Anxiety and Knowledge of Peer Performance.” Developmental Psychology 1:449–455. https://doi.org/10.1037/h0028010

- Simonson, Michael R., Matthew Maurer, Mary Montag-Torardi, and Mary Whitaker. 1987. “Development of a Standardized Test of Computer Literacy and a Computer Anxiety Index.” Journal of Educational Computing Research 3 (2): 231–247. https://doi.org/10.2190/7CHY-5CM0-4D00-6JCG.

- Stokel-Walker, C. 2022. “AI Bot ChatGPT Writes Smart Essays — Should Professors Worry?” Nature (December). https://doi.org/10.1038/d41586-022-04397-7.

- Strzelecki, A. 2023. “To Use or Not to Use ChatGPT in Higher Education? A Study of Students’ Acceptance and Use of Technology.” Interactive Learning Environments, 1–14. https://doi.org/10.1080/10494820.2023.2209881

- Strzelecki, A., and S. ElArabawy. 2024. “Investigation of the Moderation Effect of Gender and Study Level on the Acceptance and use of Generative AI by Higher Education Students: Comparative Evidence from Poland and Egypt.” British Journal of Educational Technology, 1–22.

- Šumak, Boštjan, and Andrej Šorgo. 2016. “The Acceptance and Use of Interactive Whiteboards among Teachers: Differences in UTAUT Determinants between Pre- and Post-adopters.” Computers in Human Behavior 64 (November): 602–620.

- Ursachi, George, Ioana Alexandra Horodnic, and Adriana Zait. 2015. “How Reliable are Measurement Scales? External Factors with Indirect Influence on Reliability Estimators.” Procedia Economics and Finance 20:679–686. https://doi.org/10.1016/S2212-5671(15)00123-9

- Venkatesh, Viswanath, Michael G. Morris, Gordon B. Davis, and Fred D. Davis. 2003. “User Acceptance of Information Technology: Toward a Unified View.” MIS Quarterly 27 (3): 425–478. https://doi.org/10.2307/30036540

- Venkatesh, Viswanath, James Y. L. Thong, and Xin Xu. 2012. “Consumer Acceptance and Use of Information Technology: Extending the Unified Theory of Acceptance and Use of Technology.” MIS Quarterly 36 (1): 157–78. https://doi.org/10.2307/41410412

- Wu, Rong, and Zhonggen Yu. 2024. “Do AI Chatbots Improve Students Learning Outcomes? Evidence from a Meta-analysis.” British Journal of Educational Technology 55 (1): 10–33. https://doi.org/10.1111/bjet.13334

- Zirar, A. 2023. “Exploring the Impact of Language Models, Such as ChatGPT, on Student Learning and Assessment.” Review of Education 11 (3): e3433. https://doi.org/10.1002/rev3.3433