Abstract

After suffering heavy losses from a string of natural disasters in the early 1990s, the US (re)insurance sector embraced a new technology called catastrophe, or ‘cat’, models to help correct pervasive underestimations of risk. These first models succeeded in crafting consensus about how risks for future disasters should be spread via (re)insurance, but as extreme weather begins to diverge from historical records, cat-modelling firms have struggled to integrate new information about climate change and climate variability into their forecasts. Initial attempts to upgrade models have generated contentious reactions from homeowners and regulators, casting doubt on modellers’ position as privileged arbiters of risk. This case thus shows how efforts to incorporate knowledge about climate impacts into routine economic processes, such as insurance pricing, trigger broader political disputes about how these risks should be socially distributed. These disputes are decoupled, to an extent, from the epistemological validity of how risks are actually calculated.

Introduction

In December 2005, members of the National Association of Insurance Commissioners (NAIC) convened at the Sheraton Hotel in Chicago to discuss the fallout from two record-breaking hurricane seasons. Between 2004 and 2005, 25 hurricanes and tropical storms had pummelled US coastal communities, causing hundreds of billions of dollars in damages and claiming the lives of nearly 2,000 Americans. The NAIC, made up of state-level US regulators responsible for overseeing local insurance markets, was intent on making sure that insurers met their obligations to help homeowners rebuild. But the NAIC was also concerned by another issue: that the spate of recent storms was a harbinger of worse things to come. A growing body of science pointed to the exacerbating influence of climate change on extreme weather events, and the officials wanted to know whether this was true and what, if anything, they should do about it.

Among those addressing the group was Robert Muir-Wood, Chief Research Officer of the catastrophe-modelling firm Risk Management Solutions (RMS). In his presentation at the Sheraton, Muir-Wood suggested that the computerized weather simulations insurers used to forecast hurricane risk – like those designed by RMS – were no longer adequate for creating accurate damage estimates. As he put it: ‘We’ve gotten to the point where climate change needs to be included when thinking about modelling for the near future’ (NAIC, 2006, p. 89). According to Muir-Wood, bringing climate change into these models, known as catastrophe, or ‘cat’, models, would allow signals about ‘climate risk’ to begin working their way into insurance markets. This would shift how individuals made decisions about where to build and purchase future homes, helping to protect them from making bad choices while also reducing the underlying exposure of the industry as a whole.

In fact, Muir-Wood told the audience, RMS was already working on a solution. Just a couple of months before the NAIC meeting, the company had ‘convened a session involving three key climatologists that represent different perspectives on planet change, with considerable depth of knowledge of all facets of hurricane activity [and] hurricane climatology’. The result of this ‘expert elicitation’ was that ‘we actually got them to arrive at a consensus on what was going to be the activity of hurricanes at landfall as well as in the basins over the next five years’ (NAIC, 2006, p. 93).

Cat models shape perceptions of risk, and these perceptions structure conversations between insurers, reinsurers, regulators, brokers, rating agencies and consumer advocates about how to set homeowner premiums, establish capital adequacy requirements, make decisions about purchasing reinsurance, and evaluate the financial health of insurance companies. In the United States, the setting of premium rates involves annual negotiations between insurers and the very individuals gathered in Chicago listening to Muir-Wood’s presentation.Footnote1 When RMS finally released its new hurricane estimates, however, it became clear that incorporating climate risk into homeowner insurance would lead to major premium increases along the Gulf and Atlantic seaboards.Footnote2 As a result, rather than forging a new consensus about natural disaster risk, the climate-enhanced model provoked strong dissent from those most affected – consumer advocates, homeowners and the public officials who represented them. Loath to raise rates on their constituents, regulators rejected applying the model to future windstorm premiums, betting (and hoping) that storm activity would remain more or less unchanged (Interview with regulator from Florida Office of Insurance, telephone, 7 August, 2019).

This failure to achieve agreement on model updates sheds light on how insurance, and the often invisible and mundane practices of risk assessment, serve as an increasingly crucial terrain over which people are struggling to define how and when climate change should be institutionally taken into account. RMS’ effort to improve its models was driven by a credible scientific concern that future weather could no longer be inferred from past trends. The underlying assumption of a baseline climate around which weather varies in a statistically dependable fashion – called ‘stationarity’ – seemed less and less valid with respect to hurricanes. But the elicitation of expert opinion about the behaviour of future storms represented a radical shift in how catastrophe-modelling firms measured hurricane activity.Footnote3 And a ‘non-stationary’ approach to event simulation threatened to unravel a web of embedded social assumptions about the relationship between insurance pricing, real estate development, economic growth and the American Dream of homeownership in places such as Florida, South Carolina and Louisiana.Footnote4

Drawing on this extended episode in the life of a catastrophe model, this paper examines the uneven consequences of efforts to make climate change information meaningful for realms of economic decision-making. Situating cat models within a broader genealogy of insurance technologies, the paper builds on perspectives from the sociology of risk showing that insurance operates not as a singular form of knowing, but through ‘combinations’ (Bougen, 2003; Ewald, 1991) or ‘assemblages’ (Collier, 2008; Dean, 1998) of practices that simultaneously assess and assign responsibility for risk (Ericson & Doyle, 2004a). Cat models, I argue, overcome conditions of actuarial uncertainty by creatively translating different modes of calculation – what Ian Hacking (1992) calls ‘styles of reasoning’ – into the singular idiom of statistical probabilities. This epistemological creativity, however, is constrained by cat modellers’ need to maintain the institutional legitimacy of their risk evaluation techniques, pitting experts’ scientifically plausible approaches to forecasting future storm conditions against the problematic political effects these forecasts have on how risk is socially distributed.

Additionally, this paper focuses on a particular set of (re)insurance actors – catastrophe modelling firms – who are deliberately trying to assess climate science in an effort to reconcile forecasts of change with the profit-making imperatives of insurers. In doing so, I want to turn attention away from the oft-analysed cases of corporate denialism and obfuscation of climate change (Brulle, 2014; Farrell, 2016; Jacques et al., 2008), toward private sector actors who embrace the science. Unlike Oreskes and Conway’s (2010) merchants of doubt, who are in the business of delegitimating climate science for capital gain, cat modellers are trying to edge out their competition by legitimating the science. Thus, the paper extends previous analysis in STS of the role climate modelling plays in shaping public agendas of environmental governance (Edwards, 2010; Heymann et al., 2017; Howe, 2014) to look at how climate-related knowledge is being woven into private technologies of risk assessment to influence mundane economic activities, such as the underwriting of homeowner insurance.

The paper proceeds in five parts: (i) a consideration of the problem of climate change in the context of other sociological accounts of insurance (understood as an institutional blend of expertise and political rationalities that mutually shape the governance of risk); (ii) a brief presentation of the paper’s data and methods; (iii) a history of the development of cat models and their initial success in creating social consensus around catastrophe loss estimates; (iv) an analysis of the 2004/2005 North Atlantic hurricane seasons as a pivotal moment in which the introduction of ‘non-stationary’ modelling practices disrupts the shared acceptability of model outputs; and (v) a concluding discussion of how expert efforts to institutionalize climate risks into insurance pricing presage a growing category of political struggles over how to distribute responsibility for the increasing burdens of climate change.

Catastrophes, climate change and the question of insurability

Sociologists have long been interested in understanding how insurance structures social and economic attitudes toward the future (Ewald, 1991; Giddens, 1990; Reith, 2004). Prominent among early studies was Ulrich Beck’s Risk society (1992a), in which he identifies insurance, and its ability to spread the negative consequences of specific events across a population of uncorrelated individuals, as a key motor of modernity. For Beck, insurance serves to socialize risk, softening in particular the dangers that industrialization posed to the health and safety of workers and citizens: ‘Modernity, which brings uncertainty to every niche of existence, finds its counter-principle’, as Beck writes, ‘in a social compact against industrially produced hazards and damages, stitched together out of public and private insurance agreements’ (Beck, 1992b, p. 100; emphasis in the original).Footnote5

Yet, according to Beck, the same technological and economic progress associated with early modernity also paradoxically threatens society with new classes of ‘post-industrial’ hazards – e.g. nuclear accidents, technologically accelerated epidemics and rampant genetic modification. Not only is it difficult to estimate the likelihood of these catastrophic events (due to their extreme rarity), but it is also difficult to socialize any subsequent damages if they do occur (because of their systemic nature). Thus, post-industrial catastrophes undermine the political function of insurance as a means of providing security against modern life’s random but calculable hazards.

Climate change, for Beck, serves as a quintessential case of this new class of hazards since it results from the very burning of fossil fuels that provided the basis for industrialization in the first place (Beck, 2008). In addition, the impacts of climate change are difficult to predict within the scales of time and space typically involved in existing frameworks for distributing risks (Bulkeley, 2001; Levin et al., 2012). For Beck, this twin problem of the ‘incalculability’ and ‘unspreadability’ of damages from post-industrial hazards anticipates the demise of traditional risk mitigation mechanisms, such as private insurance. In this context, climate change points to a new landscape of ‘conflicts of accountability’ as social disputes erupt over how the consequences of new ‘risk(s) can be distributed, averted, controlled and legitimated’ (Beck, 1996, p. 28).

While Beck put his finger on theoretically salient problems, recent research in the sociology of risk suggests that actuarial techniques for assessing and transferring risks are more adaptable than he initially supposed (Collier, 2008; Dean, 1998; Johnson, 2014; O’Malley, 2004). For instance, computer simulations, packaged commercially as catastrophe models, have allowed insurers to extend their markets even in the face of growing uncertainty (Bougen, 2003). These devices operate as alternative forms of calculation that expand the instruments and instances through which risk – particularly property and casualty risks – can be economized. As Stephen Collier (2008) has noted, simulation technologies represent a different assemblage of calculative techniques than the predominantly probabilistic frameworks that Beck considered indissociable from private insurance. By exchanging one ‘style of reasoning’ (what Collier calls ‘statistical archival’ reasoning) for another (what Collier calls ‘enactment’), simulations help render more manageable the uncertainties heralded by extreme forms of risk. In doing so, they extend the social compact of private insurance beyond the limits initially proposed by Beck (Bougen, 2003; Ericson & Doyle, 2004b).

Cat models, for instance, reinforce traditional forms of risk transfer by expanding the role of reinsurance contracts and securitized forms of property risk called catastrophe bonds within local insurance markets (Jarzabkowski et al., 2015; Johnson, 2014). Yet, as cat modellers (under pressure from these higher-order risk aggregators) try to come to terms with growing evidence that past data on losses is no longer predictive of future disasters, their adjusted hazard assessments are prone to cause major dislocations at the level of domestic policyholders. By increasing perceptions of risk in the private markets of property and casualty insurance, cat modellers participate in a mode of governing risk that further individualizes the responsibility of homeowners to bear the burden of climate risks (O’Malley, 1996). This is despite the fact that, for many individuals, the decision to live on the coast was both encouraged and supported by the very system of private insurance now attempting to hold them accountable for a choice that might have been made long in the past.Footnote6

The conflict that ensues between actors operating at different scales of the insurance field serves as a case study in how the institutionalization of climate risk depends not just on the accuracy of future damage estimates, but on addressing concerns about who should bear the burden of increased perceptions of risk. In other words, returning to Beck, technical advances in risk assessment do not address the underlying problems climate change poses to current social arrangements of insurance: How, in a world where the evaluation of risks grows to encompass horizons of choice that stretch backward and forward in time, do institutions of risk governance adjudicate the responsibilities tied to those decisions?

Methods and data

The underlying analysis in this paper draws on 27 semi-structured interviews with key informants from the (re)insurance field (conducted between January 2017 and August 2019). Semi-structured interviews were used as a means to develop a dense and descriptive mapping of the historical development of cat modelling techniques within US insurance markets. Interviews also served to triangulate some of the tensions and positioning of the different actors engaged in pricing homeowner premiums along the Gulf and Atlantic coasts following the 2004/2005 hurricane seasons. Individuals were selected for interviews because of their involvement in model development and their role in assessing catastrophe risk and managing the use of cat models in actuarial decisions, reinsurance purchasing and ratemaking. Since the specific identity of interviewees is unimportant to my analysis, information attributed to these interviews is anonymized.Footnote7

Additional data include primary sources related to the development of the first catastrophe models as well as the specific events surrounding the 2005 model updates. These documents encompass testimony and regulatory filings from hurricane modellers to the Florida Commission on Hurricane Loss Projection Methodology (FCHLPM);Footnote8 public hearings before the National Association of Insurance Commissioners (NAIC) and select Congressional committees; letters from national consumer advocate associations to the NAIC; notes to clients on model updates from catastrophe modellers, insurance brokerage firms and rating agencies; scientific publications authored by catastrophe modelling employees that accompanied the release of model updates; and local media coverage of these events.

Institutionalizing risk metrics for ‘small-number’ hazards

To appreciate how climate change upends current forms of estimating catastrophe losses, we must understand how catastrophe models succeeded in creating legitimacy around a particular set of risk assessment techniques in the first place. Prior to cat models, property and casualty insurers largely avoided underwriting for natural disasters (e.g. floods, earthquakes and hurricanes). Because of their rarity, these extreme events do not conform to what statisticians call the ‘law of large numbers’, the type of regularly occurring event sets that create the conditions for probabilistic analysis (Desrosières, 1998). But in the late 1980s, techniques emerged that offered insurers a relatively standard framework for quantifying catastrophes through computer simulation, making natural disasters and other improbable but devastating events subject to more traditional methods of actuarial pricing (Grossi & Kunreuther, 2005).

Synthetic storms and statistical norms

The idea that computer simulations could be leveraged to fill in blank spots in insurers’ knowledge of natural disasters was proposed as early as the 1960s by Don Friedman (1975), Director of Research at Travelers Insurance. Building on Friedman’s work, Karen Clark, a former research engineer with the US branch of British Commercial Union, presented ‘A formal approach to catastrophe risk assessment and management’ at the 1986 annual meeting of the Casualty Actuarial Society.Footnote9 Sensing the potential for a new business service, Clark went on to launch the first cat modelling firm – Applied Insurance Research (AIR), based in Boston (Jahnke, 2010). Almost simultaneously on the West Coast, Stanford Business School graduate Hemant Shah and a doctoral student in civil engineering, Weimin Dong, created Risk Management Solutions (RMS), a cat modelling firm that initially specialized in forecasting earthquake risk (commercializing research conducted by Shah’s father and Dong’s PhD advisor, Stanford Engineering Professor Haresh Shah) (Muir-Wood, 2016). AIR, meanwhile, focused on improving estimates of North Atlantic hurricane risk.

Beginning with the sparse record of landfalling hurricanes maintained by the National Oceanic and Atmospheric Administration (NOAA) – called HURDAT – Clark proposed using numerical simulations of past storms to augment the number of possible hurricane events that might strike an insurer’s portfolio of properties. By stochastically sampling from the historical storms’ primary parameters (e.g. minimum central pressure, radius of maximum winds, forward speed and track direction of the storm) and recombining them using Monte Carlo techniques, Clark produced tens of thousands of plausible, ‘synthetic’ storm events. In doing so, she leveraged a ‘small-number’ data set into a large population of computer-generated storms, translating rare weather events into the idiom of the ‘law of large numbers’ and the framework of probabilism that governs the majority of insurable risks (Collier, 2008; Ericson & Doyle, 2004a).Footnote10
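To make the logic of ‘recombining’ parameters more concrete, the following is a minimal sketch of how a handful of historical storms can be resampled into a larger synthetic catalogue. It illustrates the general technique under stated assumptions and should not be read as Clark’s or AIR’s actual method. The five ‘historical’ storms are invented values, not HURDAT records, and the names `HISTORICAL_STORMS` and `synthetic_catalogue` are hypothetical. Each parameter is also resampled independently, whereas a production model would more plausibly fit smoothed distributions and account for correlations between parameters.

```python
import random

# Hypothetical "historical" storms: (central pressure hPa, radius of maximum
# winds km, forward speed km/h, track heading in degrees). Invented values for
# illustration only; these are NOT HURDAT records.
HISTORICAL_STORMS = [
    (950, 30, 18, 300),
    (935, 25, 22, 315),
    (965, 40, 15, 290),
    (920, 20, 25, 330),
    (975, 45, 12, 280),
]

PARAMS = ("central_pressure", "radius_max_winds", "forward_speed", "heading")


def synthetic_catalogue(history, n_storms, seed=0):
    """Build synthetic storms by Monte Carlo resampling of each parameter.

    Each parameter is drawn with replacement from its own historical values,
    so one synthetic storm may combine the pressure of one past storm with the
    track of another. Independent resampling is a simplifying assumption.
    """
    rng = random.Random(seed)      # seeded so the catalogue is reproducible
    columns = list(zip(*history))  # one tuple of observed values per parameter
    catalogue = []
    for _ in range(n_storms):
        storm = {name: rng.choice(col) for name, col in zip(PARAMS, columns)}
        catalogue.append(storm)
    return catalogue


storms = synthetic_catalogue(HISTORICAL_STORMS, n_storms=10_000)
distinct = {tuple(s.values()) for s in storms}
print(len(storms), "synthetic storms,", len(distinct), "distinct combinations")
```

Five invented storms with four parameters already yield up to 5^4 = 625 distinct parameter combinations. This is the sense in which a small-number record can be leveraged into a much larger population of computer-generated events.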

By drawing on the entire record of past storms, Clark premised her analysis on what I call ‘stochastic impartiality’. Her simulations relied, like many probabilistic calculations in the insurance industry, on long-term averages and an indifference to year-on-year variation. As other scholars have pointed out, developing impartial techniques of risk assessment has been a fundamental condition for the success of insurance as an institution. Significant innovations in both health insurance and fire insurance, for instance, were driven by efforts to provide contentious consumers and doubtful regulators with evidence that premiums were not based on arbitrary or biased calculations (Bühlmann & Lengwiler, 2016; Porter, 2000; Schneiberg & Bartley, 2001). The cat models performed a similar function, fulfilling the conditions for what the historian of science Ted Porter (1995) calls a ‘trust in numbers’. Like insurance technologies before them, they proposed a ‘shift away from informal expert judgment toward reliance on quantifiable objects’ that promoted ‘public standards over private skills’ (Porter, 2000, p. 226).Footnote11

Social compact under stress

Despite cat models’ sophistication, insurers were initially unconvinced of the problems the models claimed to solve – i.e. that underwriters were overexposed to catastrophe risks. The situation changed rather dramatically after a string of major natural disasters at the end of the twentieth century caught insurers’ attention (Hurricane Hugo, 1989; Hurricane Andrew, 1992; the Northridge Earthquake, 1994; and the Kobe Earthquake, 1995). Hurricane Andrew, in particular, caused a panic after a group of 11 insurers in Florida went bankrupt, having underestimated their exposure to wind loss from the Category 5 storm (Jahnke, 2010; McChristian, 2012).Footnote12 As a result, roughly 16,000 homeowners were left with $465 million in unpaid insurance claims that the state eventually had to step in and cover (Wilson, 1992). As public officials worked to hold companies accountable for outstanding contracts, insurance companies debated whether to continue underwriting in the state at all. This put additional pressure on officials to find ways to coax still-solvent insurers to renew homeowner policies for the coming hurricane season (Trigaux, 1993; Wilson, 1993).

Contemporaneous newspaper stories capture local Floridians’ worries about the repercussions of a world without hurricane insurance. ‘Coastal residents, or potential coastal residents’, according to one article, ‘may have trouble financing their homes because lending institutions require the windstorm coverage’. As a result, ‘Residents, coastal officials, local bankers, insurance agents and builders [are concerned] that new insurance restrictions will destroy the county’s coastal residential market’ (Thompson, 1993). These press accounts capture the network of local commitments and individual aspirations held together by the ‘social compact’ of insurance (Beck, 1992b), as well as the threat of its potential undoing – the collapse of local property values. As one Republican legislator from Tampa quipped to a regional newspaper, ‘We all recognize that insurance is not a luxury. It is very much a necessity’ (Moss, 1993).

Cat models arrived as a response to multiplying concerns, providing a set of stable representations of catastrophe risk that helped convince numerous stakeholders of the need for premium hikes. AIR’s model, which had estimated $13 billion in potential damages before Hurricane Andrew struck land, tracked tightly with actual losses and quickly emerged as an ‘obligatory passage point’ (Callon, 1986) not just for anxious actuaries but for the entire field of (re)insurance (Grossi & Kunreuther, 2005). By impartially sampling across the full historical data set of storms, cat models promised to smooth out the knee-jerk premium increases that had plagued the industry following previous cycles of catastrophes, building future price increases into current premium rates by averaging losses across long scales of time (Interview with former Gulf state Insurance Commissioner, telephone, 26 October, 2017). As a result, the models forged a new consensus about rate increases not only in Florida, but across all US coastal property markets.

Perceptual unification and field reconfiguration

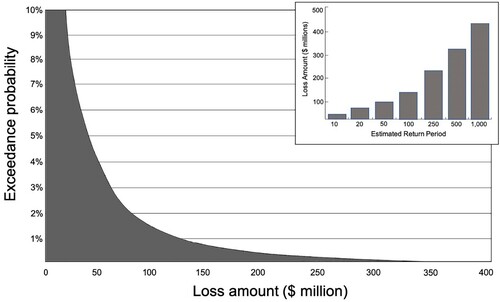

The integration of simulation techniques into the regulatory circuitry of insurance pricing rapidly reconfigured discussions in the property and casualty sector around the central cat model output – the Exceedance Probability (EP) curve. A simple, right-facing, downward-sloping line (see the figure above), the EP curve synthesizes the risk of future disaster and shapes negotiations about the price of property insurance between regulators, insurers, reinsurers, capital markets and other stakeholders (Jarzabkowski et al., 2015). The EP curve gives this constellation of actors an aggregated view of risk, called the average annual loss (AAL), i.e. the expected loss per year averaged over the full set of simulated years, as well as an idea of the probable maximum loss (PML) for a specific event in any given year (say the loss from an event with a 0.4 per cent chance of occurring, what is colloquially called a 1-in-250-year event).
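The relationship between the EP curve, the AAL and a 1-in-250-year PML can be sketched in a few lines. The example below is a hypothetical illustration, not the method of any commercial model. Its year loss table is generated from invented assumptions: a 30 per cent annual chance of a single damaging landfall, with lognormal losses in millions of dollars. The function names are likewise illustrative. Because each simulated year contains at most one event, the aggregate and per-event (occurrence) exceedance curves coincide here, a simplification real models do not make.

```python
import random


def simulate_annual_losses(n_years, seed=1):
    """Hypothetical year loss table: one aggregate loss per simulated year.

    Illustrative assumptions only: each year has a 30% chance of a single
    damaging landfall, whose loss (in $ millions) is lognormally distributed.
    """
    rng = random.Random(seed)
    losses = []
    for _ in range(n_years):
        if rng.random() < 0.30:
            losses.append(rng.lognormvariate(5.0, 1.5))
        else:
            losses.append(0.0)
    return losses


def ep_curve(annual_losses):
    """Return (loss, exceedance probability) pairs, largest loss first."""
    ranked = sorted(annual_losses, reverse=True)
    n = len(ranked)
    # The loss of rank k (1-based) is equalled or exceeded in at least k of n years.
    return [(loss, rank / n) for rank, loss in enumerate(ranked, start=1)]


def average_annual_loss(annual_losses):
    """AAL: mean loss per year over the whole simulated record."""
    return sum(annual_losses) / len(annual_losses)


def pml(annual_losses, return_period):
    """Loss equalled or exceeded with annual probability 1 / return_period."""
    target = 1.0 / return_period  # e.g. 1-in-250 -> 0.004, i.e. 0.4 per cent
    for loss, prob in ep_curve(annual_losses):
        if prob >= target:
            return loss
    raise ValueError("empty loss table or return period shorter than one year")


losses = simulate_annual_losses(100_000)
print(f"AAL: ${average_annual_loss(losses):,.1f}m")
print(f"1-in-250 PML: ${pml(losses, 250):,.1f}m")
print(f"1-in-10 PML: ${pml(losses, 10):,.1f}m")
```

The 1-in-250 threshold is an annual exceedance probability of 1/250 = 0.004, the 0.4 per cent cited above. The AAL, by contrast, condenses the whole curve into a single average, which is why it maps naturally onto premiums, while the PML speaks to capital and reinsurance needs.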

One of the principal reasons that so many insurers went under after Hurricane Andrew was that they lacked sufficient reinsurance. Reinsurers provide insurers access to capital (at a price) in case of large, catastrophic losses – an insurance policy for insurers. Following Andrew, reinsurers were understandably reluctant to offer significant new ‘capacity’ to the Florida market (Stark, 1993). The cat model’s ability to provide a PML, however, helped pave the way for increased participation from the reinsurance sector across the Gulf coast and Eastern seaboard as risk perceptions of insurers, reinsurers and regulators converged around the same probability curves.Footnote13 By transforming the question of ‘how do we prevent the collapse of the insurance sector?’ into ‘what does the EP curve say about the loss potential for an aggregated set of exposures?’, the cat models successfully translated new uncertainties about hurricanes into the traditional insurance logic of pooled risk spreading, albeit with a larger role for both private and public reinsurance.Footnote14

This influence, however, would prove to be double-edged, as new concerns – brought on by another record-breaking season of storms, as well as emerging research on climate variability and climate change – challenged cat modelling firms to account for a world where hurricane risk was no longer assumed to be constrained by a historical baseline. As cat modellers navigated this new and uncertain knowledge, their response to the notion that the past is no longer predictive of the future threatened to undermine their hard-earned legitimacy as trustworthy purveyors of disaster loss estimates. If stochastic simulations overcame the problem of small-number hazards by translating them into the risk idiom of probabilism, climate change troubled assumptions around stochasticity and, with it, the tenuous political settlements achieved by the ‘stationary view’ of hurricane risk.

Unravelling of catastrophe consensus-pricing

To everything there is a season (or two)

The twin North Atlantic hurricane seasons of 2004 and 2005 upset the general consensus that had emerged about how to price catastrophe risk after Hurricane Andrew. The two seasons brought record-breaking levels of damage, with nine landfalling hurricanes in 2004 and an unprecedented 16 in 2005. Four major hurricanes (Charley, Frances, Ivan and Jeanne) struck the US states of Florida and Alabama in 2004, causing roughly $57 billion in economic damages, and another three (Katrina, Rita and Wilma) hit Louisiana, Mississippi and Texas in 2005, causing a staggering $148 billion in total damages (Blake et al., 2011).Footnote15 Hurricane Katrina itself was responsible for roughly $108 billion in damages and over 1,200 fatalities, making it the most destructive natural disaster in US history (Blake et al., 2011).Footnote16

The effect on the (re)insurance sector in the United States was also significant. On the one hand, the models seemed to have done their job. Even though insured losses for 2004/2005 totalled a record $80 billion in 2005 dollars ($22 billion in 2004 and $58 billion in 2005), there was no string of bankruptcies, unlike in 1992 (Guy Carpenter, 2014). In the decade after Hurricane Andrew, the arrival of event catalogues and EP curves pushed regulators, underwriters, homeowner advocates and homebuilder associations to agree to distribute risk via higher coastal premiums, tighter building codes, hurricane deductibles, and enhanced purchasing of reinsurance and other alternative risk transfer mechanisms (e.g. catastrophe bonds).Footnote17 This mix of precautionary logics and logics of speculation (Ericson & Doyle, 2004a) had kept losses within expected model distributions.

On the other hand, the models underperformed in their estimation of what is called ‘demand surge’ (i.e. the increase in costs of construction materials and repair work following multiple and repeated loss events in adjacent territories), and did poorly at anticipating specific kinds of property damage, missing roughly 30–60 per cent of losses in some of the coastal counties where the storms hit because of poor exposure data (Guy Carpenter, 2014). Beyond these problems in the models’ damage estimates, the back-to-back, record-breaking seasons sent tremors across the industry about a more basic set of questions: how variable was hurricane activity? Was this variability properly reflected in the cat models? And if not, by how much could insurers be under-pricing future exposure? To top it all off, these doubts were further amplified by a growing number of scientific studies linking extreme weather to climate change.

Here come the warm jets: Climate cycles versus climate change

Cat modellers faced mounting pressure from their insurance and reinsurance clients to respond to the 2004/2005 seasons. In an analysis produced after Katrina, Munich Re, the largest reinsurance company, argued: ‘There is no doubt that the models used to simulate the hurricane risk in the North Atlantic need adjusting’ (Munich Re, 2006, p. 4). While reinsurers covered about one-third of the insured losses from 2004 (roughly what reinsurers expect in a severe year), they ended up carrying two-thirds of losses in 2005, a major over-extension of their capital reserves (Nutter, 2006).

Partly as a result of reinsurer discontent, the cat-modelling firm RMS made a decision to fundamentally change the way it analysed and presented catastrophe risk from hurricanes (Author’s interviews with RMS staff, telephone, 11 September, 2019). Rather than limiting itself to improving its standard annual estimate of hurricane risk, RMS began developing what it called a Medium-Term Rate (MTR) view of risk – a five-year, forward-looking forecast attuned to evidence that hurricane activity was, in fact, not a stationary phenomenon that could be measured through annualized historical averages. In doing so, RMS was leveraging two rival but largely complementary agendas in the scientific community of hurricane researchers – one linked to advances in knowledge about climatological cycles and the other linked to advances in knowledge about climate change.

Embracing this new knowledge would mean discarding old assumptions. For instance, use of the full HURDAT dataset was a methodological convention that had morphed into a de facto best practice in the sector since Karen Clark first introduced it in 1986. But in the early 2000s, researchers at NOAA’s National Hurricane Center, the scientists responsible for managing and maintaining HURDAT, published an influential paper in the journal Science (Goldenberg et al., 2001) suggesting there was significant variability in the decadal frequency of tropical cyclones in the North Atlantic. The new research seemed to justify not using the full dataset when estimating annual loss from future hurricanes.

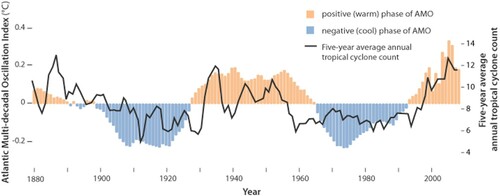

Their article called attention in particular to the influence of the El Niño Southern Oscillation (ENSO) and of something they called the ‘Atlantic multi-decadal mode’ – now identified as the Atlantic Multi-decadal Oscillation, or AMO – on Atlantic hurricane activity (see endnote 18). Rather than occurring in a steady stream of annual events, hurricanes appeared to arrive in decadal waves, with more storms occurring between the 1930s and the 1960s, followed by a significant decline from the 1970s to the 1990s (see the figure above). ‘Because these changes exhibit a multi-decadal time scale’, they argued, ‘the present high level of hurricane activity is likely to persist for an additional ∼10 to 40 years. The shift in climate calls for a re-evaluation of preparedness and mitigation strategies’ (Goldenberg et al., 2001, p. 474, emphasis added).

In short, the National Hurricane Center’s lead scientists were proposing that, in the years following Andrew, the North Atlantic was moving into a period of heightened storm activity. From a (re)insurer’s perspective, if the frequency of hurricanes actually fluctuated on the scale of decades, then the sector was potentially heading into a stormy period with a drastic increase in loss events. While Goldenberg et al. neither mention climate change in their article nor attribute any shifts in hurricane activity to it – indeed, none of them are climate scientists – a separate cohort of climate researchers, using much of the same data, began to analyse, and detect, a potential influence of anthropogenic climate change layered onto the ‘natural’ climatological cycles.

In a prophetic article published in the journal Nature just weeks before Hurricane Katrina made landfall, MIT climate scientist Kerry Emanuel argued that while there was minimal evidence that climate change was driving any increase in the frequency of hurricanes, a gradual warming of average sea surface temperatures did appear to be driving an increase in the intensity of storms. ‘My results suggest that future warming may lead to an upward trend in the tropical cyclone destructive potential, and – taking into account an increasing coastal population – a substantial increase in hurricane-related losses in the twenty-first century’ (Emanuel, 2005, p. 686). In a subsequent article co-authored with the climatologist Michael Mann, Emanuel stated the case even more bluntly: ‘anthropogenic factors are likely responsible for long-term trends in tropical Atlantic warmth and tropical cyclone activity’ (Mann & Emanuel, 2006, p. 233). Discounting the effects of the AMO on sea surface temperatures, Mann and Emanuel explicitly linked sea-surface warming to rising concentrations of greenhouse gases in the atmosphere.

Eliciting the future

Citing both of these communities of scientists, RMS modellers began developing a new approach to simulating hurricanes that abandoned the use of the long-term average of hurricane activity as their ‘primary-view’ of risk. As they argued in a scientific publication rolling out their new model:

The assumption of using the average of the historical record to represent the risk over the next few years holds as long as there are neither trends in activity or evidence that we are currently in an extended period of heightened or reduced activity. The record shows however that there have been prolonged periods of rates significantly different from the long-term average and a clear demonstration that we are now within one of these elevated periods. (Lonfat et al., 2007, p. 500)
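The assumption at stake in this passage can be illustrated with a toy calculation. The sketch below is hypothetical throughout: the yearly landfall counts are invented (they are not HURDAT values), and it assumes landfalls arrive as a Poisson process with a constant annual rate, a common simplification rather than a description of RMS’ model. It simply contrasts a rate averaged over the whole record with one averaged over a recent elevated period.

```python
import math

# Hypothetical yearly landfall counts, oldest first: 20 quiet years
# followed by 10 elevated years. Invented for illustration, not HURDAT data.
RECORD = [1] * 20 + [2] * 10


def annual_rate(counts):
    """Mean number of landfalls per year over the given years."""
    return sum(counts) / len(counts)


def prob_at_least_one(rate, years=1):
    """Probability of one or more landfalls in `years` years,
    assuming Poisson arrivals at a constant annual `rate`."""
    return 1.0 - math.exp(-rate * years)


long_term = annual_rate(RECORD)          # full-record ("stationary") view
medium_term = annual_rate(RECORD[-10:])  # recent elevated period only

for label, rate in (("long-term", long_term), ("medium-term", medium_term)):
    print(f"{label}: rate={rate:.2f}/yr, "
          f"P(>=1 in 1 yr)={prob_at_least_one(rate):.3f}, "
          f"P(>=1 in 5 yrs)={prob_at_least_one(rate, 5):.3f}")
```

With these invented counts the elevated-period rate is 1.5 times the full-record rate; the arithmetic is identical in both cases, and only the choice of averaging window differs.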

In addition to changing how they sampled from the historical record, RMS’ modellers also turned their attention to the problem of climate change. Unlike general circulation models of climate change, catastrophe models are not based on fundamental physical equations of atmospheric behaviour. Dynamically simulating phenomena like hurricane activity is computationally demanding and remains an expensive and commercially untested modelling approach (Author interviews with both AIR and RMS cat modelling engineers, telephone, 11 September, 2018 and 16 April, 2019). Including information about climate change in catastrophe risk estimates therefore required new forms of calculation that went beyond the ‘statistical archival’ and ‘enactment’ idioms of risk that the models had already creatively brought together. So, instead of building an event catalogue of synthetic storms based solely on sampling parameters from the historical record, RMS turned to expert elicitation to select and weight the importance of different climatological factors in storm generation.

This elicitation process, called the ‘Delphi method’, was pioneered in the 1950s by the RAND Corporation (Andersson, 2018) and uses expert judgment to compensate for a lack of empirical data in decision-making. It is frequently applied to assess risk in situations of deep uncertainty, including, infamously, estimates of which American cities were the likeliest targets of a Soviet nuclear strike (Dalkey & Helmer, 1951). While considered standard operating procedure in certain realms of military planning, corporate strategy and even the pricing of commercial insurance against terrorist attacks, Delphi methods were new territory for insurance-related risk assessments of extreme weather. They layered an additional mode of calculation onto the already complex causal logic of cat models.

Based neither on empirical data nor on historical records, the elicitation consisted of asking climate experts to weight 13 different parameters that drive hurricane activity (e.g. ENSO, AMO, wind shear predictors). Iterative rounds of weighting allowed experts to adjust their rankings of which parameters they thought would be most influential over the coming five years until the process reached ‘a group consensus on the distribution of alternative interpretations’ of future storm activity (Lonfat et al., 2007; Author interview with RMS cat modeller, telephone, 18 March, 2020; see endnote 19). The weightings were inserted upstream of the cat model’s simulation process, modifying the Monte Carlo results by giving extra credence to specific climatological drivers of hurricane behaviour.
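The logic of iterative weighting can be sketched in a few lines. This is a hypothetical illustration, not RMS’ procedure: the three ‘views’, their implied annual landfall rates, the expert weights and the revision rule (each expert moves halfway toward the group mean after every round) are all assumptions made for the example, and the blended rate stands in, very loosely, for the way weights modify a simulation upstream.

```python
from statistics import mean, pstdev

# Hypothetical inputs. Each "view" stands for one climatological driver or
# forecasting approach that experts are asked to weight; each implies an
# annual landfall rate. Neither the views nor the numbers come from RMS.
VIEW_RATES = [1.7, 2.1, 2.3]
EXPERT_WEIGHTS = [
    [0.2, 0.5, 0.3],
    [0.6, 0.3, 0.1],
    [0.1, 0.4, 0.5],
]


def normalise(weights):
    """Rescale non-negative weights so they sum to 1."""
    total = sum(weights)
    return [w / total for w in weights]


def delphi_rounds(expert_weights, pull=0.5, tol=0.01, max_rounds=20):
    """Repeat anonymised feedback rounds until expert weights converge.

    After each round every expert sees the group mean and moves a fraction
    `pull` of the way toward it (an assumed stand-in for real revisions).
    Stops when the largest across-expert standard deviation of any weight
    drops below `tol`. Returns (consensus weights, rounds used).
    """
    current = [normalise(w) for w in expert_weights]
    for round_no in range(1, max_rounds + 1):
        columns = list(zip(*current))  # one tuple of weights per view
        group_mean = [mean(col) for col in columns]
        if max(pstdev(col) for col in columns) < tol:
            return normalise(group_mean), round_no
        current = [
            normalise([w + pull * (g - w) for w, g in zip(ws, group_mean)])
            for ws in current
        ]
    return normalise([mean(col) for col in zip(*current)]), max_rounds


def blended_rate(view_rates, weights):
    """Weighted average of the annual rates implied by each view."""
    return sum(r * w for r, w in zip(view_rates, weights))


consensus, rounds = delphi_rounds(EXPERT_WEIGHTS)
print("consensus weights:", [round(w, 3) for w in consensus])
print("rounds:", rounds)
print("blended annual rate:", round(blended_rate(VIEW_RATES, consensus), 3))
```

Because this toy revision rule leaves the group mean unchanged, the panel converges on its initial average weights; real Delphi panels can shift their collective view between rounds, which is where expert judgment enters the estimate.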

This new calculative pluralism departed radically from the ‘stochastic impartiality’ that characterized the success of the initial cat models in structuring social agreement about insurance prices. Compared to the previous styles of reasoning that cat models had so artfully combined – both premised on a stationary understanding of hurricane activity – elicitation proposed a very different epistemological basis from which to simulate weather-based catastrophes. Estimates were tied explicitly to experts’ professional concerns about the influence of climate change on future storms. Their convergence, as reported by RMS, was the solution that Robert Muir-Wood had hinted at in his 2005 Chicago presentation to US insurance regulators.

Forecasting hurricane activity for the period between 2006 and 2010, RMS’s first MTR model estimated a 21–40 per cent increase in risk from the hurricane peril for the Atlantic and Gulf Coast states (Begos, 2007a; Lonfat et al., 2007). Increased risk meant the need for increased insurance premiums, an argument with obvious appeal to insurance companies. Within the year, the other main cat modellers, AIR and EQECAT, followed with their own versions of the new Medium-Term Rate model. RMS submitted the new model to the Florida Commission on Hurricane Loss Projection Methodology (FCHLPM, see endnote 8) for validation in the summer of 2006, but the model was already having a major impact on negotiations around insurance policy renewals for 2006 (St. John, 2010; see endnote 20).

Five years forward, two steps back

As insurers began submitting rate filings to regulators for higher premiums in line with the MTR assessments, they were met with strong reactions from homeowner associations, state legislators and consumer advocates, who demanded an outright rejection of the new RMS model (Begos, 2007b). Despite RMS’ scientific publications and presentations about this new method (Jewson et al., 2009; Lonfat et al., 2007; NAIC, 2006), its approach brought intense scrutiny to the position of cat modelling firms in the ecosystem of homeowner insurance pricing. The Consumer Federation of America and the Center for Economic Justice, two national consumer advocacy organizations, expressed deep scepticism in a joint letter to the President of the National Association of Insurance Commissioners (see endnote 21). Accusing RMS of colluding with the insurance industry to raise premium rates, they called into question the status of cat modellers as independent, third-party certifiers of risk. But they also claimed that insurers were violating their initial promises to homeowner organizations about the integrity of the models.

This [5-year] approach is the complete opposite of that promised by insurers when these models were first introduced. Consumers were told that, after the big price increases in the wake of Hurricane Andrew, they would see price stability. This was because the projections were not based on short-term weather history, as they had been in the past, but on very long-term data from 10,000 to 100,000 years of projected experience. (Hunter & Birnbaum, 2006, p. 2)

Consumer groups were not alone in their consternation with the Medium-Term Rate models – experts also spoke out. One of the modelling specialists reviewing the model for the Florida Commission told reporters that RMS ‘wasn’t able to satisfactorily answer some questions about the model’, especially concerning how the company ‘used a small group of experts to justify the five-year forecast’ (Begos, 2007c). And Mark Frankel, the Director of the Science and Ethics Programme at the American Association for the Advancement of Science, responding in an article in the Tampa Tribune, simply asked: ‘Is there any sound science to believe in this model? That seems to me very much up in the air’ (Begos, 2007b).

Politicians in Florida also began mobilizing against rate increases. While Louisiana suffered the brunt of the 2005 season and its losses, it was the risk that a similar event might hit Florida that really concerned insurers. The models suggested (and continue to suggest) that a direct hit from a category 5 hurricane on Miami could see total losses of $300 billion (in 2017 dollars) and insured losses upwards of $180 billion (Swiss Re, 2017). Such an event could cripple the entire sector and thrust the state’s real-estate-based economy into a downward spiral (Weinkle, 2015). RMS’ MTR model increased the odds of this kind of event happening in the next five years, but Florida politicians refused to allow yet another round of major premium hikes on homeowner policies (Ubert, 2017).

RMS, meanwhile, argued that its new models were correcting an actuarially unsound pool of risk and protecting the general taxpayer from footing future disaster bills for under-insured coastal property owners (NAIC, 2006). As Hemant Shah, the CEO of RMS, told the Sarasota, FL-based Herald-Tribune, ‘How are you going to incent people to mitigate their homes if you don't have the right kind of signaling on what risk really is?’ (St. John, 2010). Expert elicitation, from RMS’ perspective, was a critical tool for governing and distributing the meteorological uncertainties of climate-fuelled catastrophes.

While these battles played out in the press and public forums, insurers had to wait for approval from the Florida Commission before they could actually use the MTR model to set homeowner premiums. But other (re)insurance actors, not subject to state regulation, were rapidly incorporating the five-year perspective into their risk assessments, putting a squeeze on primary insurers. Both reinsurers and rating agencies hardened their views on underwriting in the region (Guy Carpenter, 2014; Munich Re, 2006). Applying RMS’ MTR model, Moody’s, S&P and AM Best determined that insurers across the southern Atlantic states, but particularly in Florida, faced an $82 billion shortfall in their capacity to cover the increased loss estimates of the MTR model (St. John, 2010). This negative assessment of capital adequacy added pressure from investors on insurers to raise premiums or risk a decline in their share prices.

The dynamics of insurance regulation made finding common ground between insurers and coastal state officials extremely difficult, with shareholders pressing for higher rates and citizens endorsing political candidates who promised no significant price hikes. As a result, many insurance firms opted to reduce coverage across the entire region. To slow the exodus, Florida state legislators passed a series of tough exit laws, but they also moved to protect homeowners from losing their insurance by expanding what was known as the ‘residual’ market, a state-run insurance fund called Citizens Property Insurance Corporation (Ubert, 2017). Intended as an insurer of last resort, Citizens quickly ballooned from representing a ‘residual’ pool of homeowner risk to become the largest insurer of residential property in the state (Weinkle, 2015; see endnote 22).

If Florida played a particularly visible role in how this overall dynamic played out, it was far from alone. Nearly every Gulf and Atlantic coastal state was affected by the MTR model changes, and almost all took cues from the Florida model review commission on whether or not to approve the use of the new models in ratemaking. After a series of unfavourable presentations to the commission, RMS withdrew the MTR model from the validation process in 2007 (Begos, 2007c; Author interview with FLOIR staff, telephone, 18 July, 2019). This did not stop many states from eventually passing their own pre-emptive bans on the new assessment techniques. The South Carolina Department of Insurance’s 2014 law on ‘The Use of Hurricane Models in Property Insurance Ratemaking’ is exemplary. It specifies that the Department does not ‘permit the use of any of the following model variations: “short-term”, “near-term”, “medium-term”, “warm-phase”, “warm-water”, “warm sea surface temperature”, or any other variation that, although different in the title than the aforementioned examples, has a similar effect of limiting the modelled period. Only the long-term variation of catastrophe models is permitted’ (South Carolina DOI, 2014, p. 3).

What had been an informal best practice based on the combination of two risk idioms – ‘simulated’ event catalogues based on the full ‘archival’ record of hurricanes – became a regulated standard. Previously an implicit norm, ‘stochastic impartiality’ was codified as part of the models’ legal status. It was now an official source of their legitimacy.

Reclassification and retreat

Despite withdrawing from the Florida hurricane commission review in 2007, RMS continued to conduct annual elicitations through 2008 and to promote the MTR to clients as its ‘primary-view’ of risk. Other cat modellers shifted tactics earlier. According to a team of AIR scientists, the medium-term approach (what they called their ‘near-term model’) depended on too many leaps of causality to justify its use in rate setting; each additional leap ‘introduced too much uncertainty into the determination of hurricane activity’ (Dailey et al., 2009). In their own publication in the peer-reviewed Journal of Applied Meteorology and Climatology, the AIR team argued that even if the warmer sea surface temperatures identified with a phase of the AMO are a good proxy for hurricane activity, warmer temperatures alone do not indicate more landfalling hurricanes, because of other intervening climate patterns, including El Niño and a differential pressure system called the Bermuda High. Each step of logic in the five-year perspective, beginning especially with the explicit deployment of human judgment, presented additional challenges to translating risk estimates into the idiom of statistical probability that was the cornerstone of the models’ initial success.

As their technical problems mounted, AIR modellers pivoted in 2007 and, rather than offering a five-year forward-looking model, introduced what they call their ‘warm sea surface temperature’ (WSST) catalogue. They make no claims about capturing any data regarding climate change in their model, but emphasize the correlation between the conditions of warming sea surfaces in the Atlantic Basin and increased hurricane intensity. They sell the model as a ‘supplemental’ view of risk, rather than their ‘primary’ view. In other words, where cat modellers initially emerged after Hurricane Andrew as guarantors of a ‘stochastically impartial’ view of catastrophic risk, they were now offering a proliferation of risk perspectives, asking their different audiences to pick and choose from multiple assessment offerings.

Of all the actors in the insurance constellation, (re)insurance brokers perhaps benefited most from this move toward multi-modal modelling. It increased their value as agents who can help their clients ‘own their view of risk’ by ‘blending’, ‘morphing’ and ‘fusing’ outputs from multiple models into bespoke views of risk (Guy Carpenter, 2014; Major, 2013). But the move toward climate-conditioned catalogues also heralded the end of a particular logic in cat modelling, in which the occurrence of natural disasters, despite its randomness, was nonetheless characterized by an underlying ‘stationarity’.
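What ‘blending’ can mean in practice is sketched below under stated assumptions. The two schemes shown, often described as frequency blending (weighting exceedance probabilities at a given loss) and severity blending (weighting losses at a given return period), are generic techniques rather than any particular broker’s method, and the model outputs are invented numbers for two hypothetical models.

```python
# Hypothetical outputs from two cat models, "A" and "B", for one portfolio.
# Annual exceedance probabilities keyed by loss threshold ($bn), and losses
# ($bn) keyed by return period (years). Invented numbers for illustration.
EP_A = {10: 0.10, 20: 0.04, 50: 0.01}
EP_B = {10: 0.15, 20: 0.06, 50: 0.02}
LOSS_A = {100: 40.0, 250: 70.0}
LOSS_B = {100: 55.0, 250: 90.0}


def frequency_blend(ep_a, ep_b, w_a):
    """Weight the exceedance probabilities at each loss threshold.

    Assumes both models report the same thresholds; `w_a` is the weight
    given to model A and (1 - w_a) the weight given to model B.
    """
    return {loss: w_a * ep_a[loss] + (1 - w_a) * ep_b[loss] for loss in ep_a}


def severity_blend(loss_a, loss_b, w_a):
    """Weight the modelled losses at each return period.

    Assumes both models report the same return periods.
    """
    return {rp: w_a * loss_a[rp] + (1 - w_a) * loss_b[rp] for rp in loss_a}


print(frequency_blend(EP_A, EP_B, 0.5))
print(severity_blend(LOSS_A, LOSS_B, 0.5))
```

For non-linear curves the two schemes generally yield different blended results from the same weights, so the choice of scheme is itself a modelling judgment.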

Perhaps the most significant challenge to the Medium-Term perspective, however, came from the hurricanes themselves. Instead of bearing out the dire expectations of increased damages, storm activity shifted drastically across the entire basin: there was not a single major hurricane landfall in the United States for the entire period between 2006 and 2010 (see endnote 23). The scholar Roger Pielke Jr. (who also served as an expert in one of RMS’ later elicitations) wrote a harsh commentary judging the ‘skillfullness’ of the MTR models in forecasting future hurricane seasons to be roughly equal to that of a ‘room full of monkeys’ (Pielke, 2009). Whether or not such derision was deserved, until the devastating 2017 season the southern Atlantic coast of the United States experienced a roughly 13-year ‘drought’ of major hurricanes – the longest such period in NOAA’s historical record.

Already facing strong headwinds of doubt, compounded by problematic updates in 2011 to its inland wind field simulations (Willis Re, 2012), RMS quietly reverted to the long-term historical view of risk for many of its key services and clients. The company continues to offer the MTR perspective (as does AIR), but its current efforts have focused on reanalysis and bootstrapping of the decadal cyclone and sea-surface temperature datasets rather than on addressing questions of climate change directly (Bonazzi et al., 2014; Caron et al., 2018). Despite cat modellers’ efforts to push damage estimates into a world where the statistical analysis of hurricanes is decoupled from their past behaviour, dissent from homeowner advocates, regulators, politicians and, eventually, the storms themselves produced a broader loss of consensus around climate-enhanced cat models.

Conclusion: Multiplication of risk perspectives

This paper examines how expert understandings of peril from severe tropical storms in the North Atlantic shifted away from a ‘stationary’ view toward a ‘non-stationary’ view of weather-related risk. The effort to embrace non-stationarity in risk estimates caused one of the principal cat modelling firms, Risk Management Solutions, to dramatically alter the way it projected future hurricane losses, and thus how insurers, reinsurers, rating agencies and regulators negotiated the value of insurance premiums. The shift in expert perception was driven largely by the unprecedented severity of the 2004/2005 hurricane seasons and by the (re)insurance sector’s engagement with a broader scientific debate about the influence of climate cycles and climate change on the underlying stochastic nature of extreme weather. If cat models initially served to create consensus about how to price the risk to property from rare events, the model revisions pursued by RMS after 2005 triggered new disputes about the acceptable role of expertise in determining the affordability of insurance.

RMS’s initial approach to the problem of non-stationarity was to combine new empirical sampling techniques based on climate variability with expert elicitation in the selection of models believed to be most predictive of the coming five years of storm conditions. The former technique addressed the influence of natural climate cycles on hurricane formation (such as the Atlantic Multidecadal Oscillation), whereas the latter targeted expert understandings of the influences of climate change. The overall impact was an increase in the model’s disaster loss estimates from hurricanes of 21–40 per cent across the Atlantic and Gulf coasts compared to the long-term, stationary view (Lonfat et al., 2007).

In mixing statistical, simulative and subjective ‘styles of reasoning’ – three different idioms of risk identification – RMS was, in some ways, merely extending the combinatorial approach that made the models a success in the first place. Delphi-style assessment techniques, however, proved one bridge too far for stakeholders in the field of homeowner insurance to cross. Although widely used in areas of strategic planning, as well as by cat modellers in assessing commercial insurance for terrorist attacks and nuclear accidents, expert elicitation is generally at odds with the kind of ‘trust in numbers’ that has arisen in the more actuarially strict world of homeowner pricing. Its inclusion in the simulated environment of hurricane models introduced a troubling element of epistemological hybridity that threatened the status of the models, revealing the frontiers of translatability between different idioms of risk.

Yet even when elicitation was finally renounced, selective sampling of the HURDAT record continued to upset previous political agreements about how to assess hurricane risk based on ‘stochastic impartiality’, prompting coastal states to officially exclude new modelling techniques from ratemaking. Rather than promoting a single view of hazard risk, modellers now offer multiple visions of risk (a primary and a supplemental), with multiple time horizons (one to five years). The multiplication of risk perspectives means that differently situated actors can calibrate the perspective that best corresponds to their position and interests within the constellation of insurance-related actors: capital markets, reinsurers and rating agencies prefer more conservative models; regulators, consumer advocates and legislators prefer models that prevent price hikes on their constituents; and insurers, caught between a rock and a hard place, try to navigate between the two.

The net result is that model outputs now require more interpretive work from their clients and create even less of a shared scientific basis for agreement about prices than they did in the past (Weinkle & Pielke, 2017). While MTR, WSST and other models are not directly allowed into ratemaking for homeowner premiums, they still have a significant impact on insurance prices, since reinsurers and capital markets use them to assess the cost of reinsurance, a cost that is passed on to customers. In the coastal markets of Florida, Louisiana and Texas, the reinsurance portion of the premium (which covers catastrophe risk) can be upwards of one-third of the entire premium (Gilway, 2017; Interview with Head actuary of Gulf state department of insurance, telephone, 16 September, 2019).

One of the most remarkable developments since 2005, however, is that despite the selective use of these models in certain corners of (re)insurance, premium prices have not spiked as many feared. The decade-long absence of major disasters, and the entry of alternative risk transfer capital (enabled by the models), have contributed to keeping coastal (re)insurance prices lower than predicted when the alternative models were first released. Beginning in 2017, a new spate of devastating storms has hit the US, but it remains to be seen how these storms will affect risk assessment practices going forward. By denying direct use of the Medium-Term models in ratemaking and extending subsidized forms of public insurance, coastal state regulators seem, for the moment at least, intent on shoring up the existing system of risk distribution. In doing so, they are also dodging a fuller accounting of how to address the ongoing and worsening impacts of climate change in their regions, particularly regarding how the inexorable creep of sea level rise will complicate (and potentially render moot) the question of windstorm underwriting along the coasts.

As of this writing, many questions remain about the influence of climate variability and climate change on hurricane formation. A growing body of science suggests that the frequency of North Atlantic hurricanes might actually decrease (due to the countervailing effect of simultaneously warmer sea surface temperatures in the Indo-Pacific, which promote wind shear), but that storms may become more intense, move more slowly overland and produce more rain (Kossin et al., 2020; Sobel et al., 2016; Trenberth et al., 2018). One thing is certain, however: the issue of non-stationarity is not going away. Nor is it a matter isolated to hurricane risks, or to US Gulf states. Similar dynamics are already at play for other perils such as fire, drought, heatwaves and rain-induced flooding around the globe. The assessment of hurricane risk analysed here serves as a bellwether of the political disputes about the extent to which climate-influenced risks should be assessed as problems of individual property-holders and framed in the context of insurance, or instead require a new social compact of risk sharing. Pressure will continue to build for loss-estimate tools that can account for a dynamic, non-stationary world of weather, but new techniques will not foreclose the deeper questions of how to fairly distribute the escalating economic burdens of a changing climate.

Acknowledgements

The author wishes to thank Stephen Collier, Stefan Timmermans, Eve Chiapello, Jayme Walenta, Ed Walker and Hannah Landecker as well as participants from the University of Hamburg workshop on ‘Finance as a response to the Ecological Crisis?’, the New School for Social Research workshop on ‘Climate Change and Insurance’, and the Max Planck Institute for the History of Science’s ‘Knowledge of and about the Anthropocene’ seminar for feedback on earlier drafts of this paper.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Ian Gray

Ian Gray is a PhD candidate in the Department of Sociology at UCLA and a Visiting Predoctoral Fellow at the Max Planck Institute for the History of Science. His work focuses on institutional responses to coping with climate change and the social consequences of these responses.

Notes

1 The US insurance market, through a quirk of federalism, is regulated at the state level, creating 50 different insurance markets. Individual state insurance regulators are often political positions: in 11 states these are elected positions, while in 19 states the governor appoints the regulator (cf. Lehmann, 2018). As a result, US property and casualty markets tend to be more politicized than other mature insurance markets such as in Europe or Japan.

2 Cat models have evolved to cover a wide range of perils. This paper focuses specifically on efforts to model the ‘wind’ peril from hurricanes; flood damages from storm surge are also modelled, but are not subject to inclusion in ratemaking, since flood risk in the United States is currently assessed by federally determined Flood Insurance Rate Maps (cf. Elliott, 2017), although this is beginning to change and cat modelling is playing an increasing role in shifting the calculation of flood hazard toward parcel-level assessments.

3 Elicitation is a widely used tool of risk assessment for catastrophic events with little to no record of occurrences (e.g. nuclear reactor meltdowns or terrorist attacks), but it is ‘radical’ in the context of evaluating the price of homeowner insurance.

4 Paul Pierson (2004) argues that institutions often persist because they draw legitimacy from complementary sets of supporting institutions. It is fruitful to think about US homeownership in this context of embedded and interdependent institutional relations.

5 Despite the notion of ‘counter-principle’, the development of insurance should not be read as something that happens in contra-distinction to capitalism: ‘The socialization of risk does not seek to undermine capitalist inequality, precisely the opposite. It is a means of treating the effects of that inequality’ (Dean, 1998, p. 31).

6 What I mean by this is that for many individuals, the ‘choice’ to live on the coast was potentially influenced by decisions made generations ago by a grandparent or earlier ancestor, made perhaps with the hope of being able to pass along property to future descendants. When these initial choices were made, there was no inkling of the problems of climate change, either for homeowners or for the insurance industry. Other individuals, however, are making these decisions contemporaneously, even in the face of widely publicized information about future coastal impacts from climate change. Cat models, like the rest of the private insurance market, do not differentiate between the temporality of ‘choices’ to live on the coast. For the ethics of risk temporalities see Elliott (this issue).

7 Interview participants included individuals from all three major catastrophe modelling firms (RMS, AIR and CoreLogic), two of the largest global reinsurance companies, two major (re)insurance brokerage firms, a large commercial property owner, a major US domestic insurance company, a national US consumer advocacy group, a former US Gulf-coast insurance regulator and a West-coast insurance regulator, two hedge funds involved in developing and investing in catastrophe bonds, and professional staff from the Departments of Insurance of the two most hurricane-prone Gulf states.

8 The FCHLPM is an advisory commission to the Florida Office of Insurance Regulation (FLOIR) composed of experts in the field of meteorology, weather modelling, engineering and finance that reviews the models used for ratemaking in the state of Florida. The FCHLPM is currently the only state-level commission in the United States with the technical capacity or public mandate to review the scientific assumptions of catastrophe models. The Commission does not pass regulations itself, but advises the FLOIR on which models meet approved standards for the purposes of ratemaking. Almost every other coastal state insurance office in the US follows the lead of the FCHLPM. As a result, it acts, for all intents and purposes, as the sole forum for scrutinizing cat models and holding them publicly accountable.

9 Clark’s initial cat modelling scheme was itself deeply reflective of the combinatorial creativity that Ewald (1991) and Bougen (2003) note as constitutive of insurance technologies. Clark borrowed extensively from Friedman’s computational structure, particularly with regard to modelling exposure of the built environment (what Friedman called the ‘vulnerability module’ of the model). But in a fruitful act of bricolage, she enhanced Friedman’s deterministic simulation of storm events (the ‘hazard module’) by drawing on the work of Canadian wind engineer Alan Davenport and his collaborators, who had introduced stochastic, Monte Carlo simulations of hurricanes to statistically characterize the full range and distribution of wind events that might affect building projects for which Davenport’s firm served as structural engineering consultant (Georgiou et al., 1983).

10 At the time of Clark’s (1986) article, HURDAT covered roughly 151 landfalling hurricanes going back to 1900; in the past 30 years that number has grown by 49 storms, or fewer than two per year. Not a large number. The HURDAT dataset is available at http://www.aoml.noaa.gov/hrd/hurdat/All_U.S._Hurricanes.html (last accessed 04/09/2019).

11 This is not to suggest that the models erase informal or tacit knowledge in the process of assessing and insuring cat risks. Ericson and Doyle (2004a) argue convincingly that such practices are an irreducible part of how insurance functions, since decisions about risk also depend on aesthetic, emotional and experiential judgments (see also Jarzabkowski et al., 2015; O’Malley, 2004). Following Porter, what the models achieve is a displacement and re-embedding of discretion into denser structures of formal standards and professional norms, although others (particularly Weinkle & Pielke, 2017) have questioned the accountability of these standards, since significant portions of the models remain proprietary and closed to scrutiny by the public or its representatives.

12 Insurance companies had more or less pegged the upper end of catastrophic loss in Florida at $4 billion, based on the previous record-setting losses from Hurricane Hugo. With Andrew, they were off by a factor of four (McChristian, 2012).

13 While I have used Hurricane Andrew to analyse the impact of cat models on hurricane insurance, the metrics of probable maximum loss (PML) and average annual loss (AAL) have become institutionalized across a wide range of global catastrophe perils. The PML figures prominently in negotiations between reinsurers and local insurance companies over triggers or attachment points for reinsurance contracts. Discussions between insurers and regulators, by contrast, tend to focus on the AAL, which helps create consensus about the minimum premiums primary insurers need to collect to maintain adequate reserves against not just ‘cat’ losses but also losses from smaller storms (known in the industry as ‘kittens’).
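The two metrics can be illustrated with a toy Monte Carlo calculation. The Python sketch below is not how RMS, AIR or CoreLogic compute them; it assumes, purely for illustration, that annual landfall counts follow a Poisson distribution and that each event's loss is lognormally distributed, and every function name and parameter value in it is hypothetical. The AAL is taken as the mean simulated annual loss, and a 1-in-100-year PML as the annual loss exceeded in roughly 1 per cent of simulated years.

```python
import math
import random


def _poisson(rng: random.Random, lam: float) -> int:
    """Draw a Poisson-distributed event count (Knuth's multiplication method)."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def simulate_annual_losses(n_years, event_rate, mu, sigma, seed=0):
    """Monte Carlo simulation of aggregate annual losses (hypothetical toy model).

    Assumption: each year has Poisson(event_rate) landfalls, and each
    landfall's loss is lognormal(mu, sigma). Years with no events lose 0.
    """
    rng = random.Random(seed)
    losses = []
    for _ in range(n_years):
        n_events = _poisson(rng, event_rate)
        year_loss = sum((rng.lognormvariate(mu, sigma) for _ in range(n_events)), 0.0)
        losses.append(year_loss)
    return losses


def average_annual_loss(annual_losses):
    """AAL: the mean annual loss across all simulated years."""
    if not annual_losses:
        raise ValueError("need at least one simulated year")
    return sum(annual_losses) / len(annual_losses)


def probable_maximum_loss(annual_losses, return_period):
    """PML: the annual loss exceeded on average once every `return_period` years.

    With n simulated years this is approximately the (n / return_period)-th
    largest annual loss.
    """
    n = len(annual_losses)
    if return_period < 1 or n < return_period:
        raise ValueError("need at least `return_period` simulated years")
    ranked = sorted(annual_losses, reverse=True)
    k = int(n // return_period)
    return ranked[k - 1]


if __name__ == "__main__":
    # Hypothetical inputs: about 1.7 landfalls per year and a median
    # event loss of e**20 (roughly $485 million).
    losses = simulate_annual_losses(10_000, event_rate=1.7, mu=20.0, sigma=1.5, seed=42)
    print(f"AAL:             ${average_annual_loss(losses):,.0f}")
    print(f"1-in-100 PML:    ${probable_maximum_loss(losses, 100):,.0f}")
```

Ranking simulated years keeps the PML estimate simple, but it is only as stable as the number of simulated years: with 10,000 years, a 1-in-100 PML rests on the 100th largest annual loss. The sketch computes PML on aggregate annual losses; occurrence-based variants (largest single event per year) are also used in practice.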

14 In addition to bolstering the position of reinsurance companies in cat risk markets, Andrew also pushed exposed states to strengthen residual insurance markets by creating public reinsurance schemes. In 1993, the Florida legislature created the Florida Hurricane Catastrophe Fund, a state trust that offers additional risk capital to primary insurers at reduced cost because of its tax-exempt status. The state-administered residual homeowner insurance market, which became known as Citizens Property Insurance, is a separate entity and is discussed further below.

15 A major hurricane is any storm rated Category 3 to 5 on the Saffir-Simpson hurricane scale, that is, any storm with sustained winds of at least 111 miles per hour (178 km/h). The monetary figures are not adjusted for inflation.

16 The 2017 hurricane season recently surpassed 2005 as the costliest year of natural disasters on record, with three major hurricanes and aggregated losses in excess of $300 billion. But Katrina, when adjusted for inflation, remains the single most destructive storm in US history.

17 Catastrophe bonds belong to a niche but growing financial sector called ‘insurance-linked securities’ (ILS), which also relies on information from cat models (Bougen, 2003). While ILS proponents laud the ability of cat bonds to expand catastrophe coverage, some analysts see them as destabilizing traditional reinsurance by taking business away from reinsurers (Jarzabkowski et al., 2015) or, worse, as opening new and exploitative avenues of disaster-based capital accumulation (Johnson, 2014). Despite the importance of ILS to questions of insuring climate risks, I bracket them here in order to stay with the cat models.

18 The AMO is characterized as a macro-climatological system linked to the thermohaline circulation, which transfers warm water from the Indian Ocean to the North Atlantic along a giant underwater ‘conveyor belt’ of ocean currents. The AMO shifts North Atlantic sea surface temperatures between cooler and warmer phases, and its warmer phases appear to coincide with periods in which more hurricanes form.

19 In the first elicitation, the four climate experts involved were Kerry Emanuel from MIT, James Elsner from Florida State University, Tom Knutson from NOAA’s Geophysical Fluid Dynamics Laboratory and Mark Saunders from University College London. According to an interview with one of these participants, none of the experts understood the exact goal of the exercise until after the fact (see also Begos, 2007a). Additional context comes from the author’s interview with an RMS executive involved in the elicitation (09/13/18).

20 Homeowner insurance is largely bought and sold on an annual basis; the seasonality of the renewal market is driven principally by the reinsurance sector, which finalizes the vast majority of catastrophe contracts on 1 January each year.

21 The letter was also sent to all the Insurance Commissioners of the Atlantic and Gulf states, as well as to the Attorneys General of these states.

22 The changes to the Florida insurance markets following 2004/2005 are a connected but separate story from the innovations in cat modelling. They are covered extensively in Ubert (2017), Weinkle (2015) and Johnson (2011) (particularly chapter 6). Because Citizens was barred from increasing premiums beyond a set percentage per year (currently 10 per cent), it effectively subsidized prices for coastal properties, even for owners who could otherwise afford actuarially prudent insurance. Its solvency is ‘guaranteed’ by a legislatively granted authority to levy a premium increase on all ratepayers across the state should a disaster strike and Citizens’ surplus prove inadequate to pay its claims. The political and financial consequences of this guarantee have not yet been tested.

23 The year 2010 actually saw a very high number of hurricanes and tropical storms (19), but none of its hurricanes made landfall in the United States. The 2011 and 2012 seasons continued in the same vein: most storms remained relatively weak, and although two hurricanes struck the United States (Irene in 2011 and Sandy in 2012), neither hit the Gulf coast.

References

- Andersson, J. (2018). The future of the world: Futurology, futurists, and the struggle for the post-cold war imagination. Oxford University Press.

- Beck, U. (1992a). Risk society: Towards a new modernity. Sage Publications.

- Beck, U. (1992b). From industrial society to the risk society: Questions of survival, social structure and ecological enlightenment. Theory, Culture & Society, 9(1), 97–123.

- Beck, U. (1996). Risk society and the provident state. In S. Lash, B. Szerszynski & B. Wynne (Eds.), Risk, environment and modernity: Towards a new ecology (pp. 27–43). Sage Publications.

- Beck, U. (2008). World at risk. Polity.

- Begos, K. (2007a, January 7). Insurance risk forecast called faulty. Tampa Tribune.

- Begos, K. (2007b, March 4). Insurance modeling review is tricky. Tampa Tribune.

- Begos, K. (2007c, May 16). Storm model fizzles. Tampa Tribune.

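- Blake, E. S., Landsea, C. W. & Gibney, E. J. (2011). The deadliest, costliest, and most intense United States tropical cyclones from 1851 to 2010 (and other frequently requested hurricane facts) (NOAA Technical Memorandum NWS NHC-6). 49 pp.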

- Bonazzi, A., Dobbin, A. L., Turner, J. K., Wilson, P. S., Mitas, C. & Bellone, E. (2014). A simulation approach for estimating hurricane risk over a 5-yr horizon. Weather, Climate, and Society, 6(1), 77–90.

- Bougen, P. D. (2003). Catastrophe risk. Economy and Society, 32(2), 253–274.

- Brulle, R. J. (2014). Institutionalizing delay: Foundation funding and the creation of US climate change counter-movement organizations. Climatic Change, 122(4), 681–694.

- Bühlmann, H. & Lengwiler, M. (2016). Calculating the unpredictable: History of actuarial theories and practices in reinsurance. In N. V. Haueter & G. Jones (Eds.), Managing risk in reinsurance (pp. 118–143). Oxford University Press.

- Bulkeley, H. (2001). Governing climate change: The politics of risk society? Transactions of the Institute of British Geographers, 26(4), 430–447.

- Callon, M. (1986). Some elements of a sociology of translation: Domestication of the scallops and the fishermen of St Brieuc Bay. In J. Law (Ed.), Power, action, and belief: A new sociology of knowledge? (pp. 196–223). Routledge.

- Caron, L.-P., Hermanson, L., Dobbin, A., Imbers, J., Lledó, L. & Vecchi, G. A. (2018). How skillful are the multiannual forecasts of Atlantic hurricane activity? Bulletin of the American Meteorological Society, 99(2), 403–413.

- Clark, K. M. (1986). A formal approach to catastrophe risk assessment and management. Proceedings of the Casualty Actuarial Society, 73, 69–92.

- Clark, K. M. (2002). The use of computer modeling in estimating and managing future catastrophe losses. Geneva Papers on Risk and Insurance – Issues and Practice, 27(2), 181–195.

- Collier, S. J. (2008). Enacting catastrophe: Preparedness, insurance, budgetary rationalization. Economy and Society, 37(2), 224–250.

- Dailey, P. S., Zuba, G., Ljung, G., Dima, I. M. & Guin, J. (2009). On the relationship between North Atlantic sea surface temperatures and US hurricane landfall risk. Journal of Applied Meteorology and Climatology, 48(1), 111–129.

- Dalkey, N. & Helmer, O. (1951). The use of experts for the estimation of bombing requirements: A project Delphi experiment. RAND Corporation.

- Dean, M. (1998). Risk, calculable and incalculable. Soziale Welt, 49(1), 25–42.

- Desrosières, A. (1998). The politics of large numbers: A history of statistical reasoning. Harvard University Press.

- Edwards, P. N. (2010). A vast machine: Computer models, climate data, and the politics of global warming. MIT Press.

- Elliott, R. (2017). Who pays for the next wave? The American welfare state and responsibility for flood risk. Politics & Society, 45(3), 415–440.

- Emanuel, K. (2005). Increasing destructiveness of tropical cyclones over the past 30 years. Nature, 436(7051), 686–688.

- Ericson, R. V. & Doyle, A. (2004a). Uncertain business: Risk, insurance, and the limits of knowledge. University of Toronto Press.

- Ericson, R. V. & Doyle, A. (2004b). Catastrophe risk, insurance and terrorism. Economy and Society, 33(2), 135–173.

- Ewald, F. (1991). Insurance and risk. In G. Burchell, C. Gordon & P. Miller (Eds.), The Foucault effect (pp. 197–210). University of Chicago Press.

- Farrell, J. (2016). Corporate funding and ideological polarization about climate change. Proceedings of the National Academy of Sciences, 113(1), 92–97.

- Friedman, D. G. (1975). Computer simulation in natural hazard assessment. University of Colorado Press.

- Georgiou, P. N., Davenport, A. G. & Vickery, B. J. (1983). Design wind speeds in regions dominated by tropical cyclones. Journal of Wind Engineering and Industrial Aerodynamics, 13(1), 139–152.

- Giddens, A. (1990). The consequences of modernity. Stanford University Press.

- Gilway, B. (2017). Citizens Property Insurance Corporation 2018 public rate hearing. CEO testimony at the Florida Office of Insurance Regulation, August 27, North Miami, FL. Retrieved from https://www.floir.com/Sections/PandC/ProductReview/CitizensPublicRateHearing2017.aspx [Accessed 19 October 2019]

- Goldenberg, S. B., Landsea, C. W., Mestas-Nunez, A. M. & Gray, W. M. (2001). The recent increase in Atlantic hurricane activity: Causes and implications. Science, 293(5529), 474–479.

- Grossi, P. & Kunreuther, H. (Eds.). (2005). Catastrophe modeling: A new approach to managing risk (Vol. 25). Kluwer Academic Publishers.

- Guy Carpenter. (2014). Ten-year retrospective of the 2004 and 2005 Atlantic hurricane seasons Part I and II. Guy Carpenter.

- Hacking, I. (1992). ‘Style’ for historians and philosophers. Studies in History and Philosophy of Science Part A, 23(1), 1–20.

- Heymann, M., Gramelsberger, G. & Mahony, M. (Eds.). (2017). Cultures of prediction in atmospheric and climate science: Epistemic and cultural shifts in computer-based modelling and simulation. Routledge.

- Howe, J. P. (2014). Behind the curve: Science and the politics of global warming. University of Washington Press.

- Hunter, J. R. & Birnbaum, B. (2006, March 27). Letter to President of NAIC from the Consumer Federation of America and the Center for Economic Justice.

- Jacques, P. J., Dunlap, R. E. & Freeman, M. (2008). The organisation of denial: Conservative think tanks and environmental scepticism. Environmental Politics, 17(3), 349–385.

- Jahnke, A. (2010). The Acts of God algorithm: Why the insurance industry is headed for the perfect storm. Bostonia: The alumni magazine of Boston University, (Summer), 29–33.

- Jarzabkowski, P., Bednarek, R. & Spee, P. (2015). Making a market for acts of god: The practice of risk trading in the global reinsurance industry. Oxford University Press.

- Jewson, S., Bellone, E., Khare, S., Laepple, T., Lonfat, M., Nzerem, K., … Coughlin, K. (2009). 5 year prediction of the number of hurricanes which make US landfall. In J. B. Elsner & T. H. Jagger (Eds.), Hurricanes and climate change (pp. 73–99). Springer.

- Johnson, L. (2014). Geographies of securitized catastrophe risk and the implications of climate change. Economic Geography, 90(2), 155–185.

- Johnson, L. T. (2011). Insuring climate change? Science, fear, and value in reinsurance markets (Doctoral dissertation, Geography). University of California, Berkeley.

- Kossin, J., Knapp, K., Olander, T. & Velden, C. (2020). Global increase in major tropical cyclone exceedance probability over the past four decades. Proceedings of the National Academy of Sciences, 117(22), 11975–11980.

- Landsea, C. W., Vecchi, G. A., Bengtsson, L. & Knutson, T. R. (2010). Impact of duration thresholds on Atlantic tropical cyclone counts. Journal of Climate, 23(10), 2508–2519.

- Lehmann, R. J. (2018). 2018 insurance regulation report card (R Street Policy Study No. 163). R Street.

- Levin, K., Cashore, B., Bernstein, S. & Auld, G. (2012). Overcoming the tragedy of super wicked problems: Constraining our future selves to ameliorate global climate change. Policy Sciences, 45(2), 123–152.