ABSTRACT

The performance of a university depends on departmental activities within a network framework: the university is efficient only if its departments operate efficiently. The connections between departments are inherently complex and should be scrutinised to identify ways of improving performance. However, to our knowledge, no study has assessed the performance of South African universities in a network structure while accounting for the effects of exogenous factors on the overall and structural efficiencies. Our study employs the network-based DEA method to examine the performance of South African higher education institutions in a network structure of teaching and research for the period 2009/10–2016/17. The findings reveal that the efficiency of teaching activities is 0.942, while the efficiency of research operations is 0.782. The network-based performance of South African universities is 0.844, strongly associated with research efficiency. The findings also reveal that the percentages of staff with PhD and Master’s degrees and of professional staff, student fees, personnel grant, and government funding influence the efficiency of research activities. Government organisations and other higher education stakeholders should incorporate these findings into strategies for meeting the National Development Plan 2030 targets.

1. Introduction

The development of tertiary education systems over the last few decades has drawn considerable attention to the topic of ‘efficiency’ from both policymakers and economists (Agasisti Citation2017; Lee and Johnes Citation2021). Efficiency refers to the ability of higher education institutions (HEI) to produce the maximum amount of educational output (e.g. graduates and publications) with given resources (e.g. academic staff and budget) (Agasisti Citation2017; Johnes Citation2015). Following the 2008 Global Financial Crisis and, more recently, the impact of COVID-19 on many governments’ budgets, there is a drive to improve the efficiency of universities. Policymakers are interested in improving the efficiency of public universities because of the amount of funding universities receive from government and the objective of ensuring that this funding achieves value for public money (Agasisti Citation2017). As observed by Johnes (Citation2015), interest in this area has tripled in recent times, owing to the increase in the cost of education, changes in funding mechanisms and advances in the methodologies used to quantify educational performance (see De Witte and López-Torres Citation2017 for a comprehensive review).

In South Africa, following the end of the apartheid regime in 1994, the new government has pursued several policies aimed at promoting efficiency, equity, and effectiveness in higher education (Ministry of Education Citation2003). Higher education policies, structures, and systems in South Africa seek to redress apartheid-era structural deficits in the education system, make education more accessible, and address the system’s inherent inequalities (Menon Citation2015). Through the New Funding Framework (NFF), a performance-based funding (PBF) scheme, the government distributes grants to universities in line with the National Plan on Higher Education and institutional plans (Steyn and De Villiers Citation2007). The NFF scheme comprises block grants – funding provided to universities based on their performance to cover their operational costs on teaching and research-related services, as well as earmarked grants – which are designated for specific purposes (Steyn and De Villiers Citation2007; DHET Citation2019). Block grants account for up to 70% of the total state budget for universities (DHET Citation2019). Through the block grant system, universities are required, as part of public accountability, to report to the Ministry of Higher Education and Training on the efficient and effective spending of the funding, what they have achieved with the resources, and how they have contributed towards national policy and priorities (DHET Citation2019, 4). The reported university outputs are used to determine a university’s share of funded units in each of the four funded categories – teaching inputs, institutional factors, actual teaching outputs and actual research outputs.

Even though the NFF programme has been in existence for years and is a key source of funding for public universities in South Africa, little is known about its impact on institutions’ teaching and research performance. Available evidence is limited to government publications, which report partial measures of productivity and efficiency such as throughput rates (i.e. the number of students going through the university per year), dropout rates and graduation rates. Similarly, the academic research is predominantly qualitative (e.g. Steyn and De Villiers Citation2007). The lack of empirical (quantitative) investigation is somewhat surprising given that block grants were developed with the expectation that they would influence the behaviour of universities in ways that increase their research and teaching efficiency.

According to CHE (Citation2009), significant progress has been made in South Africa in terms of equity and effectiveness of higher education, but little is known about efficiency. Thus, the motivation of this study is to assess the efficiency of South African higher education institutions while taking into consideration the effect of government funding on teaching and research efficiency. In the context of our study, efficiency refers to the ability of a public university to generate maximum output with a given technology and set of inputs. Specifically, we want to understand how teaching and research efficiency affect the performance of higher education institutions. This study draws inspiration from a growing number of empirical studies assessing the influence of performance-based funding (PBF) schemes on higher education performance (e.g. Lee and Worthington Citation2016; Yang, Fukuyama, and Song Citation2018; Cattaneo, Meoli, and Signori Citation2016). We investigate the performance of South African higher education in a network DEA (NDEA) structure with two core nodes, teaching and research, which together establish the overall efficiency of universities. In doing so, we provide the first application of the NDEA approach to the South African higher education literature and an indirect evaluation of the influence of the PBF scheme on HEI performance in South Africa.

In addition, this paper examines the influences of exogenous factors on the efficiency score of each node (teaching and research activities) and the overall efficiency at the university level. Previous HEI efficiency studies in South Africa, apart from Marire (Citation2017) and Temoso and Myeki (Citation2023), have not investigated determinants of efficiency in HEI (Taylor and Harris Citation2004; Myeki and Temoso Citation2019). As discussed by Lee and Worthington (Citation2016), analysing the sources of inefficiency for both teaching and research nodes can provide important insights to both policymakers and management on the HEI weaknesses and directions for future performance improvement. Therefore, this is the first paper that estimates efficiency and its determinants for South African public universities using NDEA.

The remainder of the paper proceeds as follows. The upcoming section deals with the background of higher education in South Africa as well as a review of existing literature with special emphasis on studies conducted through application of stochastic frontier and DEA methodologies. Section 3 discusses the methodology employed in the paper focusing on empirical models, specification of their functional forms, data description and estimation procedure. The empirical findings are presented in Section 4 followed by Section 5 with discussion. Section 6 ends with conclusion and policy application.

2. Higher education in South Africa: a brief literature review

2.1. Background of higher education in South Africa

Higher education in South Africa is intended to meet individual self-development goals, provide high-level labour-market skills, generate knowledge of social and economic value, and develop critical citizens (Department of Education Citation1997). South African HEI are mandated to achieve the following broad objectives to address inherent societal challenges such as inequalities and inefficiencies in the education system: (1) increase and broaden participation; (2) be responsive to social interest and needs; (3) cooperate and be a partner in governance; and (4) generate funding to sustain the institutions (Republic of South Africa [RSA, Citation1997]). Most universities, particularly technology and comprehensive universities, focus on teaching to produce graduates who are skilled and knowledgeable in the labour market and contribute to economic development (Tewari and Ilesanmi Citation2020; CHE Citation2009). Traditional universities, on the other hand, produce a large proportion of research outputs in South Africa, as well as innovative outputs such as patent data, in addition to teaching (CHE Citation2009). South Africa is a scientific output leader on the African continent, owing to both the quality (in terms of research) of a significant number of its universities and the relative sophistication of its economy, which has a much greater potential for innovation than elsewhere on the continent (Pillay Citation2015).

To determine whether the higher education system is meeting its objectives, it is necessary to define success and which indicators best reflect success or failure. However, measuring the success of HEI is difficult due to their multiple competing goals and the fact that appropriate indicators are not always readily available, particularly in a developing country like South Africa (CHE Citation2009). The purpose of this study is to assess the efficiency of South African HEI, which is defined as the ability of a decision-making unit (for example, a university) to produce maximum outputs with the existing levels of inputs (Farrell Citation1957; Fried et al. Citation2008). Higher education efficiency is directly related to quality measures (CHE Citation2009), so quality is a product of several variables, including the size and quality of academic staff in universities, as well as staff-student ratios (Temoso and Myeki Citation2023; Tewari and Ilesanmi Citation2020).

In higher education, Kenny (Citation2008) discovered that efficiency is not the same as effectiveness, and that efficiency measures how well a university does what it does, whereas effectiveness relates to performing the correct activity. Furthermore, Lookheed and Hanushek (Citation1994) stated that effectiveness is unrelated to resource utilisation, so what is effective is not always what is most efficient. According to Kenny (Citation2008), the drive for efficiency is reducing the effectiveness and quality of learning and teaching in Australia through staff reduction and the adoption of new technology. This would affect equity of learners. The most effective or efficient policy may not be always the optimal policy for society (Lookheed and Hanushek Citation1994). In other words, to some extent, efficiency and effectiveness may not go the same direction. As a result, Menon (Citation2015) contends that because HEI are responsible for teaching and learning, identifying the causes of poor performance, such as low graduation rates, is critical. This can be investigated from the standpoint of efficiency, which is the focus of our research. Some issues concerning effectiveness and equity, on the other hand, are beyond the institutions’ control and may necessitate interventions at the educational system level in terms of strengthening school systems and reconceptualising education (Menon Citation2015). In South Africa, for example, most first-year students are unprepared for university due to a mismatch between high school and university teaching and learning (Tewari and Ilesanmi Citation2020). Similarly, there is a problem with declining funding for secondary and technical colleges, which feed into the higher education system (Menon Citation2015). Both issues may have a long-term impact on higher education performance.

In this paper, we focus on the efficiency of South African universities to understand how well they use their resources to provide educational services given their existing quality. We focus on efficiency analysis because methodologies and data indicators for measuring it are well established in higher education literature, and such data are available in South Africa, covering most universities and multiple time periods. While indicators of effectiveness and equity are important, there is a scarcity of comprehensive data in our case to conduct those analyses. Given our efficiency findings, future empirical studies should look into the effectiveness and equity of HEI.

2.2. Empirical literature on South African higher education

Regarding university efficiency, there is an extensive literature that deals with the measurement of HEI efficiency (De Witte and López-Torres Citation2017). A large number of these studies tend to use non-parametric approaches such as Data Envelopment Analysis (DEA) (see Thanassoulis et al. [Citation2016] for an overview), and several contributions have applied parametric methods including stochastic frontier analysis (SFA) (see Gralka [Citation2018] for an overview). The choice of the methodological approach is typically driven by the research question and availability of data.

In the South African higher education context, both DEA and SFA approaches have been applied (Taylor and Harris Citation2002; Taylor and Harris Citation2004; Marire Citation2017; Myeki and Temoso Citation2019; Temoso and Myeki Citation2023). Taylor and Harris (Citation2002, Citation2004) were the first to apply frontier approaches (i.e. DEA) in the South African HEI performance context. However, these studies are limited in scope because only a few (10) universities were included in the analysis, the data are outdated, and some current universities and policies did not exist when the studies were conducted (Myeki and Temoso Citation2019). Marire (Citation2017) used an SFA approach to assess the cost-efficiency of public universities in South Africa and estimated that, on average, public universities were 12.7% cost inefficient. However, the use of SFA in analysing HEI performance has been criticised in the literature because it relies on restrictive functional forms (Gralka Citation2018).

Recent studies (Myeki and Temoso Citation2019; Temoso and Myeki Citation2023) applied the standard DEA approaches to measure technical efficiency and total factor productivity of public universities in South Africa, covering the post separation of HEI Department from the then ministry of education (2009 – 2016). These studies consider both research and teaching as outputs by aggregating them using a single-stage DEA model. However, as argued by Monfared and Safi (Citation2013), the main limitation of the standard ‘black box’ DEA approach is that it ignores the internal structures and linkages of activities within the production process of universities, which makes it difficult to accurately evaluate the impact of teaching and research activities on the overall efficiency of the university. Hence, the use of standard DEA models may not be appropriate in modelling the complex production process of universities. This observation is supported by Lee and Worthington (Citation2016) who concluded that the use of a single DEA approach overstated the efficiency levels of universities in Australia.

Given the limitations of a single DEA approach, a new DEA approach (network DEA) has been proposed (Fare and Grosskopf Citation1996b, Citation2000; Tone and Tsutsui Citation2009). This approach accounts for both departmental (nodes) efficiencies and overall efficiency. Since its development, several studies have applied NDEA in the higher education context (e.g. Johnes Citation2013; Aviles-Sacoto et al. Citation2015; Lee and Worthington Citation2016; Monfared and Safi Citation2013; Yang, Fukuyama, and Song Citation2018; Despotis, Koronakos, and Sotiros Citation2015, amongst others). For example, Aviles-Sacoto et al. (Citation2015) applied the approach to 37 business schools from 37 universities across the United States and Mexico. Lee and Worthington (Citation2016) used it to investigate the research performance of Australian universities. Focussing on academic departments within a university in Iran, Monfared and Safi (Citation2013) concluded that NDEA provides a superior picture of inefficiencies as compared to traditional single-stage DEA models. Despotis, Koronakos, and Sotiros (Citation2015) used NDEA approach to assess the efficiency of academic research activity by estimating relative efficiency of individual academics with respect to their research activity. The model assumed the first stage as a representation of individual research productivity, whilst the second stage assessed the impact of their research work.

Recently, Lee and Johnes (Citation2021) investigated the teaching quality of higher education in England by incorporating qualitative and quantitative data in the NDEA approach. To capture the sources of inefficiencies, the study applied the fractional regression model (FRM) approach proposed by Ramalho, Ramalho, and Henriques (Citation2010). They selected the FRM approach rather than commonly used second-stage regression approaches such as ordinary least squares (OLS) and Tobit models because of its ability to handle bounded and proportional responses such as DEA scores (Ramalho, Ramalho, and Henriques Citation2010).

Although NDEA approach has been widely applied in HEI efficiency analysis, it has been limited to developed economies, whilst emerging countries such as South Africa have not been investigated. To the best of our knowledge, NDEA approach has not been applied to measure the efficiency of HEI in South Africa and generally in Africa. Hence, this study aims to fill this gap by measuring the teaching and research efficiency of public universities in South Africa using an NDEA approach. Moreover, this study assesses the sources of inefficiency in the South African HEI, an area less exploited, which should provide important evidence for enhancing the sector’s performance.

3. Method of analysis

This section presents the empirical models used to estimate the overall, teaching and research efficiencies and to investigate the effect of exogenous variables on each of them. The data and data sources are also presented in this section.

3.1. Network-based-data envelopment analysis (NDEA)

The literature of efficiency reveals that DEA is extensively employed in measuring the performance of organisations. However, the fundamental DEA method does not examine the internal structure of an organisation. An extended DEA was developed to address this problem (Färe Citation1991; Färe and Grosskopf Citation1996a; Färe and Grosskopf Citation2000; Lewis and Sexton Citation2004). Tone and Tsutsui (Citation2009) proposed a slack-based network DEA to capture divisional efficiency of a departmental structure of an organisation.
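To make the notion of a ‘black box’ efficiency score concrete, the following is a minimal sketch for the simplest possible case: one input, one output and constant returns to scale, where a unit's DEA score reduces to its output-to-input ratio divided by the best ratio in the sample. It is not the slacks-based network model used in this paper, and the unit names and figures are hypothetical.

```python
# Hypothetical (input, output) pairs per university; not taken from the paper.
universities = {
    "A": (100.0, 80.0),
    "B": (200.0, 120.0),
    "C": (150.0, 150.0),
}


def crs_efficiency(units):
    """Single-input, single-output efficiency under constant returns to scale.

    Each unit's output/input ratio is divided by the best ratio observed,
    so the best-practice unit scores 1 and all others score below 1.
    """
    ratios = {name: out / inp for name, (inp, out) in units.items()}
    best = max(ratios.values())
    return {name: ratio / best for name, ratio in ratios.items()}


scores = crs_efficiency(universities)
for name, score in sorted(scores.items()):
    print(f"University {name}: efficiency = {score:.3f}")
```

A single score of this kind says nothing about which internal activity drives the shortfall, which is the limitation the network models are designed to address.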

In higher education, some studies (e.g. Tran and Villano Citation2018, Citation2021; Lee and Worthington Citation2016; Ding et al. Citation2020; Monfared and Safi Citation2013, among others) have implemented the network DEA in temporal periods with different divisions/nodes fitted with their research contexts. However, to our knowledge, very little is known about the performance of South African universities in a network structure accounting for the effects of exogenous factors on the overall and structural efficiencies. Our paper comes to fill the gap in the efficiency literature of the South African higher education sector.

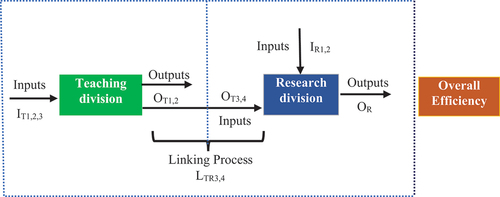

The network DEA slacks-based model (Tone and Tsutsui Citation2009) is employed in this paper to estimate the performance of South African universities in a network structure. It should be noted that, in addition to teaching and research, South African universities generate a wide range of other outputs, including intellectual property and community and industry engagement. However, due to a lack of comprehensive data covering all time periods and universities, those outputs are not included in our analysis. The NDEA model in this study has the advantage of assessing the contribution of teaching and research, the most important tasks of higher education institutions, to the performance of each university. This is helpful for constructing a best practice of academic performance in the presence of exogenous factors that are beyond the control of universities. Regardless of administrative divisions, teaching and research play a crucial role in the academic operations of universities: how efficiently universities teach and conduct research contributes to their reputation and sustainable development, given the quality of training. Accordingly, these two divisions are chosen to represent the performance of South African universities and are investigated in a structural network. We have adapted the model of Tone and Tsutsui (Citation2009) to investigate the performance of universities; the theoretical model is set out in Appendix A1. Figure 1 illustrates the network model in a university structure for undertaking teaching and research operations.

The university teaching division is intended to train graduates and provide them with high-level labour-market skills, generate knowledge of social and economic value, and develop critical citizens (Department of Education Citation1997). This is accomplished by teaching undergraduate and postgraduate students and supervising higher degree by research (HDR) students, who contribute to research and development through theses and journal publications, among other things. This stage supplies knowledge resources and staff workload to the research division. The primary target of the research division, on the other hand, is to provide research outputs for universities (e.g. publications, research grants and PhD theses). Both divisions are vital to the academic operations of universities and together constitute university performance.

Our approach is similar to previous NDEA-based studies on higher education efficiency. For example, Monfared and Safi (Citation2013) estimated the network DEA efficiency of academic colleges at Alzahra University in Iran using the same model with two nodes, teaching and research. The authors discovered that in both the single stage and network models, overall teaching quality outperforms research productivity. In the same vein, Tran and Villano (Citation2021) discovered that the academic teaching node was more efficient than the finance node and was strongly correlated to the overall network of Vietnamese universities using the network DEA with two nodes, finance, and academic teaching. Lee and Worthington (Citation2016) found that Australian universities are more efficient in producing publications than in winning grants when they investigated the efficiency of research and grant application in a network DEA framework. The authors hypothesised, however, that another endogenous factor could influence the outcome of total research grants. The preceding literature indicates that teaching and research are important enhancers of overall university performance and should be investigated in a network structure rather than a ‘black box’ DEA.

Regarding inputs and outputs in the network DEA model, as shown in Table 1, three input variables are used for the teaching division: the number of undergraduate enrolments, the number of postgraduate (masters, postgraduate diploma, and PhD) enrolments, and total expenditure. Total expenditure includes all expenses for academic operations at individual universities, including staff costs; thus, to avoid overlapping and double counting, we did not include the number of staff (professional, technical, and other support staff) in the model. Recent studies (e.g. Nkohla et al. Citation2021; Myeki and Temoso Citation2019; Temoso and Myeki Citation2023) on the efficiency of South African universities used total expenditure, academic staff, and non-academic staff as inputs in the DEA model without separating staff costs from the total expenditure of universities. This double counts inputs and thus biases the efficiency estimates. The teaching division is linked to the research division through the provision of research capacity, which the research division combines with additional resources such as academic staff and research grants.

Table 1. Description of variables used in the empirical NDEA model.

For the research division, we have two main input variables: academic staff, who are responsible for research activities, and the research grant provided to individual universities from the government block grant. Previous studies (e.g. Lee and Worthington Citation2016; Monfared and Safi Citation2013) used academic staff or PhD students as inputs for the research node in the network structure. The key output of the research division is total university research output, which includes Master’s and PhD theses as well as publications by academic staff in external refereed journals that can be cited and added to university research indexes. While recent research such as Nkohla et al. (Citation2021) and Myeki and Temoso (Citation2019) used weighted research outputs, our paper uses actual numbers of publications to reflect the nature of the research activities of universities.

The numbers of Master’s by research and PhD completions (graduates) are used as the two linking variables from the teaching node to the research node. Master’s by research and PhD graduates can contribute to the research activities of universities by producing papers for publication from their theses. In other words, the numbers of Master’s by research and PhD graduates are treated as mediating variables that contribute to the research outputs of universities. In addition, we assume that a university’s teaching and research activities are equally important. Accordingly, each division is assigned a weight of 0.5.
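The equal weighting described above can be illustrated with a short sketch. It assumes that the overall score is the weighted arithmetic mean of the two node scores, which is how the input-oriented slacks-based network model aggregates divisional efficiencies; the aggregation actually used here is the one in the appendix and may differ. The function name is hypothetical, and the example inputs are the period averages reported in Section 4.1.

```python
def overall_efficiency(teaching, research, w_teaching=0.5):
    """Weighted arithmetic mean of the two node efficiency scores.

    Assumption: the weights sum to one, so the research weight is
    1 - w_teaching. Equal weights (0.5 each) follow the text above.
    """
    if not 0.0 <= w_teaching <= 1.0:
        raise ValueError("w_teaching must lie in [0, 1]")
    w_research = 1.0 - w_teaching
    return w_teaching * teaching + w_research * research


# Period-average node scores as reported in Section 4.1.
overall = overall_efficiency(teaching=0.942, research=0.844)
gap_to_frontier = 1.0 - overall

print(f"overall efficiency: {overall:.3f}")
print(f"gap to full efficiency: {gap_to_frontier:.3f}")
```

Under equal weights, a shortfall in either node lowers the overall score by half as much, so the lower-scoring node accounts for most of the gap to the frontier.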

3.2. The second-stage DEA analysis: a fractional regression model

In the second-stage DEA analysis, we examine whether contextual factors affect teaching, research and overall efficiencies of universities. There is no agreement in the literature on the choice of regression model for this second stage. For instance, the ordinary least squares (OLS) model is considered unsuitable since the predicted values of the dependent variable may lie outside the unit interval. Employing a two-limit Tobit model with limits at zero and unity to model DEA scores is also debatable, because the accumulation of observations at unity is not the result of censoring (Tran and Villano Citation2018). In addition, DEA efficiency scores of zero are not observed, so the domain of the two-limit Tobit model differs from that of the data-generating process (DGP) of DEA scores (Ramalho, Ramalho, and Henriques Citation2010; Simar and Wilson Citation2007).

Simar and Wilson (Citation2007) have suggested a coherent DGP via providing a set of assumptions so that the use of estimates rather than true efficiency scores does not impact on the consistency of the second-stage regression parameters. On the other hand, Banker and Natarajan (Citation2008) proposed a formal statistical foundation for the two-stage DEA analysis to generate consistent estimators. However, their DGP is less restrictive than that of Simar and Wilson (Citation2007) and the distributional assumptions about the error term of the second stage are required to re-estimate efficiency scores because the dependent variable is the logarithm, rather than the level of the DEA scores. On top of that, Ramalho, Ramalho, and Henriques (Citation2010) proposed fractional regression models in the second stage using simple statistical tests. Their method could help test influences of contextual factors on both inefficient and efficient decision-making units if the proportion of the full frontier values (equal to one) is sufficiently large. What is more, the regression analysis with the robust variances is a valid inference in their framework. In this paper, we used the fractional regression model to examine the influences of external factors on the performance of South African universities.

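The fractional regression model is presented as

$$E(y \mid x) = G(x\theta),$$

in which $y$ is the efficiency score, $x$ is the vector of contextual factors, $\theta$ is the vector of parameters to be estimated, and $G(\cdot)$ is a nonlinear function satisfying

$$0 \le G(\cdot) \le 1$$

(see details in Ramalho, Ramalho, and Henriques Citation2010).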
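As an illustration of how such a model can be estimated, the sketch below fits a fractional logit, one common choice in which the bounded function is the logistic function, by Bernoulli quasi-maximum likelihood with Newton-Raphson steps. It is a toy version with a single regressor and an intercept; the data are hypothetical, and the specification is an assumption for illustration rather than the one estimated in this paper.

```python
import math


def logistic(z):
    """Logistic link: maps any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_fractional_logit(x, y, iterations=50, tol=1e-10):
    """Bernoulli quasi-ML fit of E(y|x) = logistic(b0 + b1 * x).

    y may take any value in [0, 1], including scores equal to one.
    Newton-Raphson is used with an explicit 2x2 solve.
    """
    b0, b1 = 0.0, 0.0
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            p = logistic(b0 + b1 * xi)
            resid = yi - p            # contribution to the score vector
            weight = p * (1.0 - p)    # contribution to the negative Hessian
            g0 += resid
            g1 += resid * xi
            h00 += weight
            h01 += weight * xi
            h11 += weight * xi * xi
        det = h00 * h11 - h01 * h01
        step0 = (h11 * g0 - h01 * g1) / det
        step1 = (h00 * g1 - h01 * g0) / det
        b0 += step0
        b1 += step1
        if abs(step0) + abs(step1) < tol:
            break
    return b0, b1


# Hypothetical data: share of staff with a PhD (x) and efficiency scores (y).
x = [0.2, 0.3, 0.4, 0.5, 0.6, 0.7]
y = [0.70, 0.78, 0.85, 0.88, 1.00, 1.00]

b0, b1 = fit_fractional_logit(x, y)
predictions = [logistic(b0 + b1 * xi) for xi in x]
print(f"intercept = {b0:.3f}, slope = {b1:.3f}")
```

Because the fitted values pass through the logistic function, they stay inside the unit interval by construction, which is the property that OLS lacks; fully efficient units with a score of one remain in the sample rather than being treated as censored.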

The contextual factors that may impact teaching, research and overall efficiencies of universities include the proportion of staff with PhD and Masters’ degrees, the proportions of budget surplus, the proportion of government funding, the proportion of students’ fees, the proportion of private income, the proportion of professional support staff, and the proportion of technical and other staff. Most of these factors were used in Marire (Citation2017) and Temoso and Myeki (Citation2023) to examine the influences of external factors on cost-efficiency and total factor productivity in South African higher education, respectively. They are expected to have a positive relationship with teaching, research, and overall efficiencies of universities. In addition, the time variable is added into the model to capture the change in efficiency scores over time at teaching and research divisions and the whole system.

3.3. Data and data source

The data for this paper are drawn from the Centre for Higher Education Trust (CHET), Department of Higher Education and Training (DHET) and Higher Education Data Analyser (HEDA) portals over the period 2009/10 to 2016/17. In total, there are 26 universities in the South African higher education system. However, after screening the data for a panel framework, our dataset includes 22 public South African universities for the 8-year period in a pooled structure of analysis. The summary statistics of the input, output and contextual variables are presented in Table 2.

Table 2. Definitions and summary statistics of variables, 2014/15–2018/19 (n = 79).

4. Empirical results

4.1. Teaching, research and overall efficiencies of universities

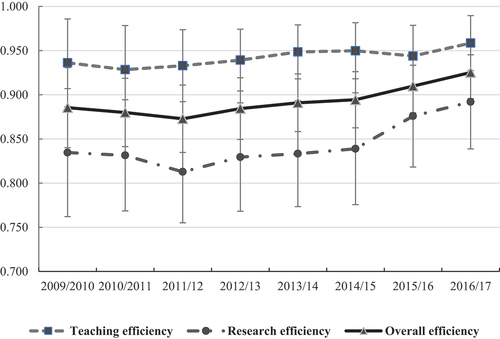

The findings reveal that the average teaching efficiency, despite fluctuating slightly from 0.928 to 0.959 during the reported period, is relatively high. The average teaching efficiency score of South African universities is 0.942 for the whole period. This implies that while universities perform admirably, they could improve their performance by 0.058 to achieve full efficiency in their academic activities. Regarding this node, the number of fully efficient universities (score equal to one) varies across years, ranging from 8 to 13, accounting for 36% to 59% of total universities investigated. The mean teaching efficiency score of South African universities is approximately equal to the mean efficiency score of Tanzanian universities at 0.94 (Bangi et al. Citation2014; Kipesha and Msigwa Citation2013), and slightly higher than the mean teaching efficiency score of African universities as found in Kiwanuka (Citation2015), 0.936 and 0.90, respectively.

Table 3. Network efficiency of South African universities, 2009/10–2015/16.

Regarding the research division, the research efficiency score of South African universities varies by 0.137 over the 8 years, averaging 0.844 over the whole period. These scores are lower than the teaching efficiency scores, implying that South African universities need to improve their research performance by 0.156 to achieve full efficiency. The Hotelling test indicates that changes in the teaching and research efficiency scores across the 8 years are significant at the 1% level of significance. The average research efficiency of South African universities is lower than the average research efficiency of African universities (Kiwanuka 2015), 0.844 versus 0.971, respectively. Abe and Mugobo (2021) revealed that ‘heavy workload, career ambiguity, poaching, staffing, sabbatical leave policy, large student numbers, unawareness of incentives, poor retention strategies, institutional history, understanding of research mandate, clarity of policies and procedures could emerge as the contributing factors to low research output’. Managers of South African universities should address these concerns to improve research performance.

Table 3 shows that the overall efficiency of South African universities has an average of 0.893 over the reported period. This suggests that South African universities could improve by 10.7% to reach unit efficiency in their academic operations. The Hotelling F-test shows that the changes in overall efficiency of South African universities are significant at the 1% level of significance over the surveyed period. Moreover, the Hotelling F-test also shows that the difference between teaching, research and overall efficiency scores is significant at the 1% level of significance for each year and across the reported period.
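The improvement margins quoted above follow from subtracting each mean score from one. A minimal sketch of that arithmetic is given below. The equal node weights of 0.5 are an assumption made purely for illustration, since the weights used in the estimation are not reported in this section.

```python
# Mean efficiency scores reported in the text for the whole period.
scores = {"teaching": 0.942, "research": 0.844, "overall": 0.893}

# Shortfall from the frontier: one minus the efficiency score.
gaps = {name: round(1.0 - value, 3) for name, value in scores.items()}

# ASSUMPTION: equal node weights; these are not stated in the paper.
weights = {"teaching": 0.5, "research": 0.5}
weighted_mean = sum(weights[k] * scores[k] for k in weights)

print(gaps)
print(round(weighted_mean, 3))
```

Under that assumed weighting, the weighted mean of the two node scores coincides with the reported overall mean of 0.893.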

As can be observed, the overall efficiency score obtained in this paper is lower than the average efficiency score found in previous studies using the traditional DEA approach (e.g. Nkohla et al. 2021; Myeki and Temoso 2019; Taylor and Harris 2002, 2004). This means that the network DEA model offers a tighter assessment, because a university is rated fully efficient overall only if it reaches unit efficiency at both the teaching and research nodes.

We conducted Spearman’s rank test to investigate the correlation among teaching, research and overall performance. Table 4 shows that there is a significant association between the efficiency of the teaching division and the overall efficiency of universities. Similarly, the performance of research divisions has contributed significantly to the overall performance of universities. Overall performance is more strongly correlated with research efficiency than with teaching efficiency, 0.847 versus 0.513, at the 1% level of significance. This implies that research activities, rather than teaching activities, play a dominant role in the operations of South African universities. By contrast, in a recent study by Tran and Villano (2018), who investigated the performance of Vietnamese universities via the dynamic network DEA method, teaching activities rather than financial efficiency contributed to the efficiency score of Vietnamese universities. Educational managers in South African higher education should therefore be more concerned about the research activities of universities and adopt more appropriate strategies to improve research performance.

Table 4. Pairwise Pearson correlation between teaching, research and overall efficiency based on the network DEA model.
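To make the correlation comparison concrete, the sketch below computes a rank correlation between node scores and overall scores in plain Python. The five score vectors are hypothetical values invented for illustration and are not taken from the dataset; the helper names `ranks`, `pearson` and `spearman` are likewise illustrative.

```python
def ranks(values):
    """Return 1-based ranks, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = mean_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman correlation: the Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


# HYPOTHETICAL efficiency scores for five universities (illustration only).
teaching = [1.00, 0.95, 0.90, 0.98, 0.85]
research = [0.70, 0.90, 0.60, 1.00, 0.80]
overall = [0.85, 0.925, 0.75, 0.99, 0.825]

rho_teaching = spearman(teaching, overall)
rho_research = spearman(research, overall)
print(round(rho_teaching, 3), round(rho_research, 3))
```

In this toy example the overall ranking follows the research ranking more closely than the teaching ranking, which is the same qualitative pattern as the coefficients reported in the text.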

Figure 2 illustrates the variation of university efficiency using the network DEA model for each division and the overall performance of universities for the 8-year period. The teaching, research and overall efficiencies demonstrate an increasing trend over the reported period. The research efficiency scores are lower than both the teaching and overall efficiency scores. The confidence intervals for the teaching, research and overall efficiencies have been constructed at the 5% level of significance. The confidence intervals of the research efficiency are considerably wider than those of the teaching and overall efficiencies, implying that the research capacity of individual universities varies widely across the surveyed period. This finding gives university managers and policymakers a basis for adopting more appropriate strategies to improve research capacity. Meanwhile, the teaching capacity is relatively adequate across years, which would help improve the overall efficiency of South African universities.
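The link between score dispersion and interval width can be sketched as follows. This is a minimal illustration that assumes a normal-approximation interval for the mean; the text does not state how the intervals were constructed, and the score lists are hypothetical.

```python
from statistics import NormalDist, mean, stdev


def mean_ci(scores, level=0.95):
    """Normal-approximation confidence interval for the mean score."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    centre = mean(scores)
    half_width = z * stdev(scores) / len(scores) ** 0.5
    return centre - half_width, centre + half_width


# HYPOTHETICAL yearly scores for six universities (illustration only).
teaching = [1.00, 0.97, 0.93, 0.95, 0.90, 1.00]
research = [1.00, 0.60, 0.85, 0.70, 0.95, 0.80]

t_low, t_high = mean_ci(teaching)
r_low, r_high = mean_ci(research)
print(round(t_high - t_low, 3), round(r_high - r_low, 3))
```

The more dispersed research scores produce the wider interval. With only a handful of universities per year, a t-based or bootstrap interval may be more appropriate than the normal approximation used here.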

Figure 2. The average network efficiency of South African universities over 2009/10–2016/17.

Table 5. Fractional regression results of teaching, research and overall efficiency, 2009/10–2015/16 (n = 22 universities).

4.2. The influences of external variables on teaching, research and overall efficiencies

As can be seen from Table 5, the teaching efficiency of South African universities increased over time, although the estimated coefficient of the time trend is not significant at the 5% level of significance. Academic staff with a Master’s qualification contributed significantly to teaching efficiency at the 5% level of significance. Despite having a positive sign, the estimated coefficient of staff with a PhD is not statistically significant for teaching efficiency. This result may be explained by the fact that these academic staff tend to spend more time on research activities than on teaching. The budget surplus, personnel grant, and proportions of professional, technical and other staff all contributed significantly to the teaching efficiency of universities.

Regarding research efficiency, the proportion of staff with a PhD degree is significantly and positively correlated with research efficiency, whereas the relationship between research efficiency and staff with a Master’s degree is negative at the 5% significance level. This is in line with our expectation that PhD staff would contribute to the research activities of universities, while Master’s staff would focus on teaching operations. In a recent study, Marire (2017) found that an increase in the proportion of PhD staff would result in a rise in cost inefficiency, implying that staff expenses would inevitably increase. However, our paper demonstrates that PhD staff contribute to the efficiency of research. Interestingly, personnel grants decreased research efficiency while improving teaching efficiency. A possible explanation is that universities receive personnel grants to support disadvantaged students rather than research activities. On the other hand, government funding, student fees, private income, and the proportions of professional, technical, and other staff all contribute significantly to the research efficiency of universities, whereas the time trend variable does not. Staff with a Master’s degree and personnel grants have had a negative impact on the research efficiency of universities. Educational leaders and policymakers should take this into account when designing research strategies for universities.

The overall fractional regression models show the integrated influences of external variables on overall performance, which is drawn from the divisional efficiencies of teaching and research. Except for the time trend and Master’s staff variables, the remaining variables are significantly correlated with the overall efficiency of universities, with signs consistent with the estimated coefficients from either the teaching or the research fractional model. Personnel grant has a negative sign and is statistically significant at the 5% level of significance, as in the research fractional model, indicating that the overall efficiency of South African universities has been driven by research efficiency rather than teaching efficiency. This result is consistent with the pairwise correlation results in Table 4. In addition, the financial management of universities through the budget surplus, government funding, and the various sources of income and grant, as well as the professional, technical, and other staff, contribute effectively to universities’ overall performance. However, while the estimated coefficient of staff with Master’s degrees is not statistically significant, it carries a negative sign that may have a long-term impact on the overall efficiency of universities. This should be pursued further to find better ways of improving the research capacity of staff with Master’s degrees (e.g. further study towards a PhD degree, research grants, research training, reduced teaching load, etc.), thereby increasing research outputs for universities.
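For readers unfamiliar with fractional regression, the sketch below fits a fractional logit by Bernoulli quasi-likelihood using Newton-Raphson in plain Python. It is an illustration under stated assumptions, not the specification estimated in this paper: the logit link, the single regressor (a hypothetical share of PhD staff), the data-generating coefficients and the function name `fit_fractional_logit` are all assumptions.

```python
import math


def logistic(v):
    """Logistic function mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-v))


def fit_fractional_logit(x, y, iterations=50):
    """Fit E[y | x] = logistic(b0 + b1 * x) for y in [0, 1].

    Maximises the Bernoulli quasi-likelihood with Newton-Raphson steps.
    """
    b0, b1 = 0.0, 0.0
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            mu = logistic(b0 + b1 * xi)
            w = mu * (1.0 - mu)
            g0 += yi - mu           # score for the intercept
            g1 += (yi - mu) * xi    # score for the slope
            h00 += w                # entries of X'WX
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1


# HYPOTHETICAL data: share of PhD staff and noise-free efficiency scores
# generated from assumed coefficients (0.5, 2.0) so the fit can be checked.
phd_share = [0.1, 0.3, 0.5, 0.7, 0.9]
efficiency = [logistic(0.5 + 2.0 * s) for s in phd_share]

b0_hat, b1_hat = fit_fractional_logit(phd_share, efficiency)
print(round(b0_hat, 4), round(b1_hat, 4))
```

Because the fitted mean passes through the logistic function, predicted efficiency always stays strictly between zero and one, which a linear regression on DEA scores does not guarantee.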

5. Discussions

The network DEA model proposed by Tone and Tsutsui (2009) is useful for estimating the efficiency of individual divisions, which can then be used to determine the overall efficiency of an organisation. Although each university’s organisational structure varies, the teaching and research divisions are regarded as core departments supporting the sector’s academic activities (Monfared and Safi 2013). The teaching division is responsible for providing training services to students and conducting administrative work (Tran and Villano 2018, 2018b), while the research division is responsible for conducting research activities that improve universities’ research capacity, innovation, and international ranking (Lee and Worthington 2016).

In a network structure, although the efficiencies of teaching and research operations establish the overall efficiency of each university, universities can merge or split these nodes into sub-divisions to uncover their academic performance in more depth. For example, Yang, Fukuyama, and Song (2018) combined teaching and research into one division and science, technology, and achievements into another. Lee and Worthington (2016), by contrast, investigated a network structure of a research division and a grant application division to estimate Australian universities’ research efficiency. Ding et al. (2020), on the other hand, examined a network structure of faculty research and student research for 38 departments at a Chinese university. Lee and Worthington (2016) acknowledged that investigating a network structure of teaching and research would be beneficial for improving resource allocation once inefficiencies were identified. Thus, in this paper, we have focused on the teaching and research divisions based on their importance in the whole production process of South African universities. Estimating the efficiency of each division and testing their relationships to each other and to the overall performance of universities is therefore critical for designing more appropriate policies, particularly during the recession caused by the COVID-19 pandemic.

The results of the internal structure model show that the teaching performance of South African universities is quite good at 0.942, which is comparable to the teaching efficiency of Iranian universities (Monfared and Safi 2013). To put it differently, they can improve their performance by 0.058 to reach full frontier efficiency. However, South African universities should be concerned about student access, higher education quality, and developing ways to improve student experience (Akoojee and Nkomo 2007; Moloi, Mkwanazi, and Bojabotseha 2014; Bhagwan 2017).

In terms of research performance, universities’ research efficiency is found to be on average 0.844, implying a further improvement of 0.156 to achieve full efficiency. Research is the most important of the three functions of academics in South African universities, which include research, teaching and community engagement. Accordingly, research publication serves as a significant indicator of academic achievement, influencing the strength and funding of universities through the research outputs produced. However, research inefficiency in South African universities can be attributed to overwork in teaching and concern about whether labour contracts will be terminated (Moosa 2018). Moreover, the movement of academic staff to other industries has put significant strain on the existing staff in terms of professional services and research delivery (Cloete, Maassen, and Bailey 2015). These difficulties affect academic researchers’ research capacity, reducing research efficiency and effectiveness. Educational administrators should therefore consider these constraints carefully when seeking to improve their institutions’ research activities.

The overall performance of South African universities is more strongly linked to research efficiency than to teaching efficiency, with correlation coefficients of 0.847 and 0.513, respectively. This demonstrates the importance of research activities in strengthening the university’s position (Abe and Mugobo 2021). Furthermore, our findings revealed that the efficiency relationship between the teaching and research divisions is not significant. Moreover, we discovered that the majority of universities are efficient in the teaching node but inefficient in the research node, with only one university being fully efficient in both divisions. As a result, there are no public universities that are efficient for the entire reported period.

From an institutional perspective, universities can only be fully efficient if they are efficient in all internal operations such as teaching, research and community engagement (Abe and Mugobo 2021). As a result, universities could pursue a policy of output expansion by increasing access, equity and participation while reducing their use of input resources (Bunting 2004; Boulton and Lucas 2008; Tran and Villano 2018a).

From a policy perspective, pursuing global education standards should be considered for the purpose of increasing equal access to standardised education programmes in South African universities. This implies that the overall performance of universities should be normalised to improve access and generate broader participation. In addition, an inclusive higher education environment should be offered to everyone from different social backgrounds, irrespective of their race and living standards (Mzangwa 2019).

The influence of external variables on the teaching, research and overall efficiencies of public universities reveals that the proportion of staff with a PhD degree is important in research activities and South African university performance. It is widely considered that research achievement is an influential factor of university ranking (Chipeta and Nyambe 2012; Masaiti and Mwale 2017). As a result, educational administrators should devise better strategies to entice academics to participate in research activities.

In addition, the government funding and various sources of income (e.g. student fees, private income) and personnel grants contributed significantly to the overall performance by increasing incomes for research activities. That is, increased government support and income from various sources could contribute to financial resources, thereby improving the effectiveness of education, including teaching and research as desired. To facilitate this, the government should monitor, support and regulate the processes and policies used in universities (Mzangwa 2019). In addition, professional, technical and other support staff, along with academics, were found to significantly contribute to the efficiency of universities. This suggests that both academics and non-academic staff are important contributors to improving university performance (Noe et al. 2017; Tran, Battese, and Villano 2020).

6. Conclusions

Our paper aimed to examine the overall performance of South African universities as well as the impact of determinants over an 8-year period, 2009/10–2016/17. The network DEA model was used to examine the internal structure of each university, which included two divisions: teaching and research. Thus, the study contributes to a better understanding of the performance of South African universities by analysing operational efficiency in a network structure.

The key findings obtained from this paper are as follows. First, the network efficiency score of South African universities fluctuates over the reported period, with an increasing trend. These changes are statistically significant at the 1% significance level. A closer look at the internal structure results reveals that the teaching efficiency is relatively high on average at 0.942, indicating that South African universities are operating efficiently; however, their efficiency score could be marginally increased by 0.058 to reach the full efficiency score. This is a positive indicator of university teaching performance. However, while research efficiency has increased over the 8 years studied, the average score is 0.844, indicating that there is still room for improvement. Second, the overall performance of universities is more strongly linked to research efficiency than to teaching efficiency, with correlation coefficients of 0.847 and 0.513, respectively. Moreover, the research efficiency is lower than the teaching efficiency. Further research on this subject is needed to develop appropriate solutions to move universities forward.

Finally, the second-stage DEA analysis using the fractional regression model showed that government funding and various sources of income and grant play a significant role in improving the efficiency of universities. However, the role of staff qualifications was not beneficial in improving university research activities. These findings should be pursued further in a qualitative analysis to obtain more in-depth information.

Although the results of our paper are informative, there is still room for future research on the efficiency of South African universities. First, additional inputs and outputs (e.g. scholarly outputs, educational quality, etc.) should be included in the models for a more robust assessment of university performance. Second, in addition to research and teaching nodes, additional nodes such as student services and financial services could be considered. Third, rather than using the time trend in the second-stage fractional regression model, future studies could capture changes in the efficiency of each division and overall performance using the dynamic model, which would be useful to compare with our findings. Finally, efficiency in relation to effectiveness and equity should be investigated further to provide more insights to policymakers in designing public policies for the South African higher education sector.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Abe, I., and V. Mugobo. 2021. “Low Research Productivity: Transformation, Institutional and Leadership Concern at a South African University.” Perspectives in Education 39 (2): 113–127.

- Agasisti, T. 2017. “Management of Higher Education Institutions and the Evaluation of Their Efficiency and Performance.” Tertiary Education and Management 23 (3): 187–190. doi:10.1080/13583883.2017.1336250.

- Akoojee, S., and M. Nkomo. 2007. “Access and Quality in South African Higher Education: The Twin Challenges of Transformation.” South African Journal of Higher Education 21 (3): 385–399.

- Aviles-Sacoto, S., W. D. Cook, R. Imanirad, and J. Zhu. 2015. “Two-Stage Network DEA: When Intermediate Measures Can Be Treated as Outputs from the Second Stage.” Journal of the Operational Research Society 66 (11): 1868–1877. doi:10.1057/jors.2015.14.

- Bangi, Y., A. Sahay, G. N. Ncr, and U. Pradesh. 2014. “Efficiency Assessment of the Tanzanian Universities.” Journal of Education and Practice 5 (14): 130–143.

- Banker, R. D., and R. Natarajan. 2008. “Evaluating Contextual Variables Affecting Productivity Using Data Envelopment Analysis.” Operations Research 56 (1): 48–58. doi:10.1287/opre.1070.0460.

- Bhagwan, R. 2017. “Towards a Conceptual Understanding of Community Engagement in Higher Education in South Africa.” Perspectives in Education 35 (1): 171–185. doi:10.18820/2519593X/pie.v35i1.13.

- Boulton, G., and C. Lucas. 2008. What are Universities For? Amsterdam: League of European Research.

- Bunting, I. 2004. “The Higher Education Landscape under Apartheid.” In Transformation in Higher Education: Global Pressures and Local Realities in South Africa, edited by N. Cloete, P. Maassen, R. Fehnel, T. Moja, H. Perold, and T. Gibbon, 35–52. 2nd Revised ed. Dordrecht, The Netherlands: Kluwer Academic Publishers.

- Cattaneo, M., M. Meoli, and A. Signori. 2016. “Performance-Based Funding and University Research Productivity: The Moderating Effect of University Legitimacy.” The Journal of Technology Transfer 41 (1): 85–104. doi:10.1007/s10961-014-9379-2.

- CHE (Council on Higher Education). 2009. “Higher Education Monitor- The State of Higher Education in South Africa.” A Report of the CHE Advice and Monitoring Directorate. No. 8, October.

- Chipeta, J., and I. A. Nyambe. 2012. “Publish or Perish: Ushering in UNZA-JABS.” Journal of Agricultural and Biomedical Sciences 1 (1): 1–4.

- Cloete, N., P. Maassen, and T. Bailey. 2015. Knowledge Production and Contradictory Functions in African Higher Education. Cape Town: African Minds.

- De Witte, K., and L. López-Torres. 2017. “Efficiency in Education: A Review of Literature and a Way Forward.” Journal of the Operational Research Society 68 (4): 339–363. doi:10.1057/jors.2015.92.

- Despotis, D. K., G. Koronakos, and D. Sotiros. 2015. “A Multi-Objective Programming Approach to Network DEA with an Application to the Assessment of the Academic Research Activity.” Procedia Computer Science 55: 370–379. doi:10.1016/j.procs.2015.07.070.

- DHET (Department of Higher Education and Training). 2019. Ministerial Statement on University Funding: 2021/22 and 2022/23. Pretoria, South Africa: Pretoria.

- Ding, T., J. Yang, H. Wu, Y. Wen, C. Tan, and L. Liang. 2020. “Research Performance Evaluation of Chinese University: A Non-Homogeneous Network DEA Approach.” Journal of Management Science and Engineering. (in press). doi:10.1016/j.jmse.2020.10.003.

- Färe, R. 1991. “Measuring Farrell Efficiency for a Firm with Intermediate Inputs.” Academia Economic Papers 19: 329–340.

- Färe, R., and S. Grosskopf. 1996a. “Productivity and Intermediate Products: A Frontier Approach.” Economics Letters 50 (1): 65–70. doi:10.1016/0165-1765(95)00729-6.

- Färe, R., and S. Grosskopf. 1996b. Intertemporal Production Frontiers: With Dynamic DEA. Boston, MA, US: Kluwer Academic.

- Färe, R., and S. Grosskopf. 2000. “Network DEA.” Socio-Economic Planning Sciences 34 (1): 35–49. doi:10.1016/S0038-0121(99)00012-9.

- Farrell, M. J. 1957. “The Measurement of Productive Efficiency.” Journal of the Royal Statistical Society: Series A (General) 120 (3): 253–281. doi:10.2307/2343100.

- Fried, H. O., C. K. Lovell, and S. S. Schmidt, eds. 2008. The Measurement of Productive Efficiency and Productivity Growth. New York, US: Oxford University Press.

- Gralka, S. 2018. “Stochastic Frontier Analysis in Higher Education: A Systematic Review.” CEPIE Working Paper, No. 05/18, Dresden: Technische Universität Dresden, Center of Public and International Economics (CEPIE). http://nbn-resolving.de/urn:nbn:de:bsz:14-qucosa2-324599

- Johnes, G. 2013. “Efficiency in English HE Institutions Revisited: A Network Approach.” Economics Bulletin 33: 2698–2706.

- Johnes, J. 2015. “Operational Research in Education.” European Journal of Operational Research 243 (3): 683–696.

- Kenny, J. 2008. “Efficiency and Effectiveness in Higher Education: Who Is Accountable for What?” Australian Universities’ Review, The 50 (1): 11–19.

- Kipesha, E. F., and R. Msigwa. 2013. “Efficiency of Higher Learning Institutions: Evidence from Public Universities in Tanzania.” Journal of Education and Practice 4 (7): 63–73.

- Kiwanuka, N. R. 2015. “Technical Efficiency and Total Factor Productivity Growth of Selected Public Universities in Africa: 2000-2007.” PhD diss., Africa: Makerere University.

- Lee, B. L., and J. Johnes. 2021. “Using Network DEA to Inform Policy: The Case of the Teaching Quality of Higher Education in England.” Higher Education Quarterly. (in press). doi:10.1111/hequ.12307.

- Lee, B. L., and A. C. Worthington. 2016. “A Network DEA Quantity and Quality-Orientated Production Model: An Application to Australian University Research Services.” Omega 60: 26–33. doi:10.1016/j.omega.2015.05.014.

- Lewis, H. F., and T. R. Sexton. 2004. “Network DEA: Efficiency Analysis of Organisations with Complex Internal Structure.” Computers & Operations Research 31 (9): 1365–1410. doi:10.1016/S0305-0548(03)00095-9.

- Lockheed, M. E., and E. A. Hanushek. 1994. Concepts of Educational Efficiency and Effectiveness. Washington, DC: World Bank.

- Marire, J. 2017. “Are South African Public Universities Economically Efficient? Reflection Amidst Higher Education Crises.” South African Journal of Higher Education 31 (3): 116–137. doi:10.20853/31-3-1037.

- Masaiti, G., and N. Mwale. 2017. “University of Zambia: Contextualization and Contribution to Flagship Status in Zambia.” In Flagship Universities in Africa, edited by D. Teferra, 467–505. Switzerland: Palgrave Macmillan.

- Menon, K. 2015. “Supply and Demand in South Africa”. In Higher Education in the BRICS Countries, edited by Schwartzman, Simon, Rómulo Pinheiro, and Pundy Pillay, 171–190. Dordrecht: Springer.

- Ministry of Education. 2003. “Funding of Public Higher Education.” Government Notice No. 2002. November. Pretoria.

- Moloi, K. C., T. S. Mkwanazi, and T. P. Bojabotseha. 2014. “Higher Education in South Africa at the Crossroads.” Mediterranean Journal of Social Sciences 5 (2): 469.

- Monfared, M. A. S., and M. Safi. 2013. “Network DEA: An Application to Analysis of Academic Performance.” Journal of Industrial Engineering International 9 (1): 1–10.

- Moosa, R. 2018. “World University Rankings: Reflections on Teaching and Learning as the Cinderella Function in the South African Higher Education System.” African Journal of Business Ethics 12 (1): 38–59. doi:10.15249/12-1-165.

- Myeki, L. W., and O. Temoso. 2019. “Efficiency Assessment of Public Universities in South Africa, 2009-2013: Panel Data Evidence.” South African Journal of Higher Education 33 (5): 264–280. doi:10.20853/33-5-3582.

- Mzangwa, S. T. 2019. “The Effects of Higher Education Policy on Transformation in Post-Apartheid South Africa.” Cogent Education 6 (1): 1592737. doi:10.1080/2331186X.2019.1592737.

- Nkohla, T. V., S. Munacinga, N. Marwa, and R. Ncwadi. 2021. “A non-parametric Assessment of Efficiency of South African Public Universities.” South African Journal of Higher Education 35 (2): 158‒187. doi:10.20853/35-2-3950.

- Noe, R. A., J. R. Hollenbeck, B. Gerhart, and P. M. Wright. 2017. Human Resource Management: Gaining a Competitive Advantage. New York: McGraw-Hill Education.

- Pillay, P. 2015. “Research and Innovation in South Africa”. In Higher Education in the BRICS Countries, edited by Schwartzman, Simon, Rómulo Pinheiro, and Pundy Pillay, 463–485. Dordrecht: Springer.

- Ramalho, E. A., J. J. Ramalho, and P. D. Henriques. 2010. “Fractional Regression Models for Second Stage DEA Efficiency Analyses.” Journal of Productivity Analysis 34 (3): 239–255. doi:10.1007/s11123-010-0184-0.

- Republic of South Africa (RSA). 1997. “Higher Education Act No. 101 of 1997.” Republic of South Africa Government Gazette. https://www.gov.za/documents/higher-education-act

- Simar, L., and P. W. Wilson. 2007. “Estimation and Inference in Two-Stage, Semi-Parametric Models of Production Processes.” Journal of Econometrics 136 (1): 31–64. doi:10.1016/j.jeconom.2005.07.009.

- Steyn, A. G. W., and A. P. De Villiers. 2007. “Public Funding of Higher Education in South Africa by Means of Formulae.” In Council on Higher Education (Ed). Review of Higher Education in South Africa, edited by Council on Higher Education, 11–51. Pretoria: Council on Higher Education. Selected Themes.

- Taylor, B., and G. Harris. 2002. “The Efficiency of South African Universities: A Study Based on the Analytical Review Technique.” South African Journal of Higher Education 16 (2): 183–192. doi:10.4314/sajhe.v16i2.25258.

- Taylor, B., and G. Harris. 2004. “Relative Efficiency among South African Universities: A Data Envelopment Analysis.” Higher Education 47 (1): 73–89. doi:10.1023/B:HIGH.0000009805.98400.4d.

- Temoso, O., and L. W. Myeki. 2023. “Estimating South African Higher Education Productivity and Its Determinants Using Färe-Primont Index: Are Historically Disadvantaged Universities Catching Up?” Research in Higher Education 64 (2): 206–227. doi:10.1007/s11162-022-09699-3.

- Tewari, D. D., and K. D. Ilesanmi. 2020. “Teaching and Learning Interaction in South Africa’s Higher Education: Some Weak Links.” Cogent Social Sciences 6 (1): 1740519. doi:10.1080/23311886.2020.1740519.

- Thanassoulis, E., K. De Witte, J. Johnes, G. Johnes, G. Karagiannis, and M. Portela. 2016. Applications of DEA in Education. Boston, MA: Springer.

- Tone, K., and M. Tsutsui. 2009. “Network DEA: A Slacks-Based Measure Approach.” European Journal of Operational Research 197 (1): 243–252. doi:10.1016/j.ejor.2008.05.027.

- Tran, C. D., G. E. Battese, and R. A. Villano. 2020. “Administrative Capacity Assessment in Higher Education: The Case of Universities in Vietnam.” International Journal of Educational Development 77: 102198. doi:10.1016/j.ijedudev.2020.102198.

- Tran, C. D. T., and R. A. Villano. 2018. “Measuring Efficiency of Vietnamese Public Colleges: An Application of the DEA‐Based Dynamic Network Approach.” International Transactions in Operational Research 25 (2): 683–703. doi:10.1111/itor.12212.

- Tran, C. D. T. T., and R. A. Villano. 2021. “Financial Efficiencies of Vietnamese Public Universities: A Second-Stage Dynamic Network Data Envelopment Analysis Approach.” Singapore Economic Review 66 (5): 1421–1442. doi:10.1142/S0217590818500133.

- Yang, G. L., H. Fukuyama, and Y. Y. Song. 2018. “Measuring the Inefficiency of Chinese Research Universities Based on a Two-Stage Network DEA Model.” Journal of Informetrics 12 (1): 10–30. doi:10.1016/j.joi.2017.11.002.

Appendix

A1. The network-based-DEA approach applied in South African universities

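Let $n$ be the number of universities (UNI) ($j = 1, \ldots, n$), $K$ be the number of nodes ($k = 1, \ldots, K$), and $m_k$ and $r_k$ be the numbers of inputs and outputs to node $k$, in that order. The connection from node $k$ to node $h$ is denoted by $(k, h)$ and the set of links by $L$. Following the notation of Tone and Tsutsui (2009), the inputs, outputs and linking variables are described as follows:

(1) $x_j^k \in \mathbb{R}_+^{m_k}$ is the input vector of UNI$_j$ at node $k$; (2) $y_j^k \in \mathbb{R}_+^{r_k}$ is the output vector of UNI$_j$ at node $k$; (3) $z_j^{(k,h)} \in \mathbb{R}_+^{t_{(k,h)}}$ is the vector of linking intermediate products of UNI$_j$ from node $k$ to node $h$ in a given period, where $t_{(k,h)}$ is the number of items in the link from $k$ to $h$.

Regarding the objective function, input-output constraints and linking constraints, the production possibility set $\{(x^k, y^k, z^{(k,h)})\}$ is defined by

$$
\begin{aligned}
x^k &\ge \sum_{j=1}^{n} x_j^k \lambda_j^k \quad (k = 1, \ldots, K), \\
y^k &\le \sum_{j=1}^{n} y_j^k \lambda_j^k \quad (k = 1, \ldots, K), \\
z^{(k,h)} &= \sum_{j=1}^{n} z_j^{(k,h)} \lambda_j^k \quad \forall (k,h) \ \text{(as outputs from node } k\text{)}, \\
z^{(k,h)} &= \sum_{j=1}^{n} z_j^{(k,h)} \lambda_j^h \quad \forall (k,h) \ \text{(as inputs to node } h\text{)}, \\
\sum_{j=1}^{n} \lambda_j^k &= 1 \ (\forall k), \qquad \lambda_j^k \ge 0 \ (\forall j, k),
\end{aligned}
$$

where $\lambda^k \in \mathbb{R}_+^{n}$ is the intensity vector corresponding to node $k$.

There are two nodes, teaching and research, in the performance model of South African universities. It is assumed that there are no linking inputs to starting nodes and no linking outputs from terminal nodes. The variable-returns-to-scale approach is employed to account for the influences of environmental factors on university performance.

For UNI$_o$ ($o = 1, \ldots, n$), input and output constraints can be expressed by

$$
\begin{aligned}
x_o^k &= X^k \lambda^k + s^{k-} \quad (k = 1, \ldots, K), \\
y_o^k &= Y^k \lambda^k - s^{k+} \quad (k = 1, \ldots, K), \\
e\lambda^k &= 1, \qquad \lambda^k \ge 0, \quad s^{k-} \ge 0, \quad s^{k+} \ge 0 \quad (\forall k),
\end{aligned}
$$

where $X^k = (x_1^k, \ldots, x_n^k) \in \mathbb{R}^{m_k \times n}$ and $Y^k = (y_1^k, \ldots, y_n^k) \in \mathbb{R}^{r_k \times n}$ are input and output matrices, and $s^{k-}$ and $s^{k+}$ are, respectively, input and output slacks.

Regarding the linking constraints, a fixed link value is applied in this setting, implying that linking activities are kept unchanged (nondiscretionary) between the two nodes. Accordingly, the linking constraint is expressed as:

$$
z_o^{(k,h)} = Z^{(k,h)} \lambda^h, \qquad z_o^{(k,h)} = Z^{(k,h)} \lambda^k \quad \forall (k,h),
$$

where $Z^{(k,h)} = (z_1^{(k,h)}, \ldots, z_n^{(k,h)}) \in \mathbb{R}^{t_{(k,h)} \times n}$.

The input-orientated approach is employed to measure the efficiency score of universities since the objective of universities is to minimise inputs to obtain their existing teaching and research outputs.

The overall and divisional efficiencies are therefore depicted by the following formulae:

Input-orientated overall efficiency

$$
\theta_o^* = \min_{\lambda^k,\, s^{k-}} \sum_{k=1}^{K} w^k \left[ 1 - \frac{1}{m_k} \sum_{i=1}^{m_k} \frac{s_i^{k-}}{x_{io}^k} \right],
$$

where $\sum_{k=1}^{K} w^k = 1$, $w^k \ge 0$ ($\forall k$), and $w^k$ is the relative weight of node $k$, which is determined with respect to its importance, subject to the input-output constraints and linking constraint mentioned above.

Input-orientated divisional efficiency

$$
\theta_k = 1 - \frac{1}{m_k} \sum_{i=1}^{m_k} \frac{s_i^{k-*}}{x_{io}^k} \quad (k = 1, \ldots, K),
$$

where $s^{k-*}$ denotes the optimal input slacks for the input-orientated overall efficiency.

$\theta_k$ is the divisional efficiency index which optimises the overall efficiency $\theta_o^*$. If $\theta_k = 1$, then UNI$_o$ is called input-efficient for node $k$. The overall input-orientated efficiency score is the weighted arithmetic mean of the divisional scores:

$$
\theta_o^* = \sum_{k=1}^{K} w^k \theta_k .
$$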
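The final aggregation step can be illustrated numerically. The sketch below assumes the slacks-based form of Tone and Tsutsui (2009), in which a node's input-orientated score is one minus the mean ratio of optimal input slack to observed input, and the overall score is the weighted mean of the node scores. The input levels, slacks and equal weights are hypothetical; in practice the slacks come from solving the linear programme, which is not done here.

```python
def divisional_efficiency(inputs, slacks):
    """Node score: 1 minus the mean of (optimal input slack / observed input)."""
    m = len(inputs)
    return 1.0 - sum(s / x for s, x in zip(slacks, inputs)) / m


def overall_efficiency(node_scores, weights):
    """Overall score: weighted arithmetic mean of the node scores."""
    return sum(weights[k] * node_scores[k] for k in node_scores)


# HYPOTHETICAL observed inputs and optimal input slacks for one university.
teaching_score = divisional_efficiency(inputs=[100.0, 50.0], slacks=[10.0, 0.0])
research_score = divisional_efficiency(inputs=[80.0, 40.0], slacks=[16.0, 8.0])

# ASSUMPTION: equal node weights that sum to one.
weights = {"teaching": 0.5, "research": 0.5}
scores = {"teaching": teaching_score, "research": research_score}
overall = overall_efficiency(scores, weights)

print(round(teaching_score, 3), round(research_score, 3), round(overall, 3))
```

A node with zero input slacks scores exactly one, so a university reaches an overall score of one only when every node with positive weight is input-efficient.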