ABSTRACT

Internationally, second-level curriculum policy for STEM education is concentrating its efforts on promoting curriculum-making pedagogies, with enquiry-based teaching and learning at the forefront of this change. Policy aspirations have not translated well into practice, as evidenced by science practical lessons consistently being delivered as recipes to be followed. In recognition of this, national STEM policies are calling for quality pedagogical resources that can support teachers to teach practical work through scientific enquiry. This article describes how Design-Based Research was used as a methodology to create and evaluate a resource, the Structured Enquiry Observation Schedule (SEOS), as a tool to identify student achievement of procedural (enquiry and laboratory) skills in practical biology lessons. Data collected using the SEOS was triangulated with interview, video and audio data over three iterative research cycles. Findings indicated that the SEOS provides a lens to compare and align policy intentions with classroom enactment of practical work. It does so by identifying the basic procedural skills that should underpin any enquiry-based practical lesson and by highlighting the importance of student attainment of those skills. If used by practising teachers, it has the potential to answer calls for quality resources to support the transition to enquiry-based science pedagogy.

Introduction

The focus of this paper is on curriculum innovation in science education, with an emphasis on practical activities in the science classroom, taking practical activities to mean ‘any teaching and learning activity which at some point involves the students in observing or manipulating the objects or materials they are studying’ (Millar Citation2004, 2). Biology was chosen for this research because of its unique status as the most popular STEM subject at upper secondary level (State Exams Commission Citation2021). This gives it the greatest potential to provide teachers and students with an understanding of what it means to engage in enquiry-based pedagogies.

Irish senior cycle STEM curricula are currently under review after a series of reports deemed learner participation in STEM education ‘less than satisfactory’ (DES Citation2017). Unsurprisingly, one of the main problems is an over-emphasis on propositional knowledge and an under-emphasis on epistemic and procedural knowledge (NCCA Citation2019). This paper locates the source of this issue in the gap between policy and practice at every level of the STEM curriculum, from the global to the local. Contradictions between policy and practice worldwide have resulted in practical activities being taught by recipe and examined by rote (Burns et al. Citation2018; Hyland Citation2014). At the root of the issue is what O’Neill (Citation2022) terms the ‘enquiry vacuum’: the unoccupied educational and pedagogical space between two epistemologies (recipe and enquiry) that makes transitioning between them almost impossible.

The development of ‘quality’ resources to bridge this space between recipe teaching and enquiry teaching has been identified as essential in Ireland and across international STEM policy (Australian Government Citation2015; Costello et al. Citation2020; DES Citation2017; McLoughlin et al. Citation2020; Tasiopoulou et al. Citation2022). The purpose of this paper is to present how one such resource, the Structured Enquiry Observation Schedule (SEOS), was constructed from policy documents for practical work in upper secondary biology. Developed as a Design-Based Research (DBR) artefact, it is designed to document the extent to which students are using procedural (enquiry and laboratory) skills in Irish practical biology lessons. Within the DBR methodology, part of the construction of any artefact is an evaluation of it in its target setting through iterative design cycles. This evaluation is made against four quality criteria: relevance, consistency, practicality and effectiveness (Nieveen and Folmer Citation2013). Here, the SEOS is evaluated as a quality pedagogical tool by trialling it in practical biology lessons across three design cycles. Each cycle is more enquiry-based than the last, and each evaluates how well the SEOS serves its purpose of documenting the procedural skills in the lesson. This investigation therefore first describes the construction of the SEOS. It then consolidates the design through evaluative research cycles that determine its ability to accurately record students' use of procedural skills in practical lessons. The final design cycle presents a summative evaluation of the SEOS according to the four quality criteria.

The research question addressed here is:

How can procedural knowledge of scientific enquiry be identified and included in enacted practical biology lessons at upper secondary level?

The problem of teaching practical enquiry – an examination of STEM policy doublespeak and its impact on classroom enactment of practical lessons (Cycle 1)

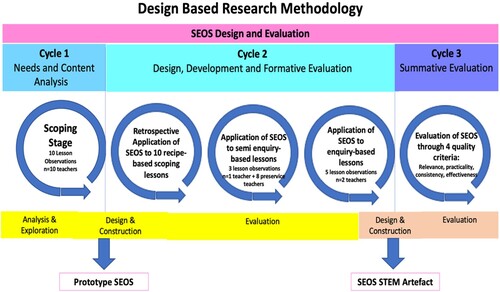

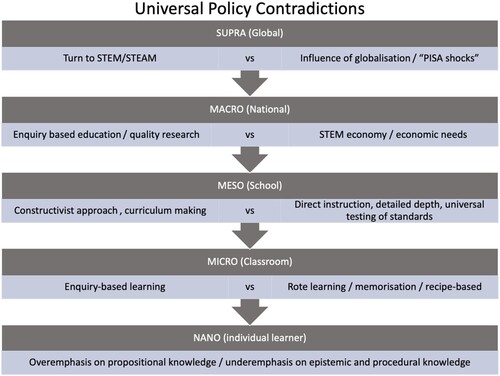

Figure 1 is adapted from a view of curriculum as occupying five levels (Van den Akker et al. Citation2006). It provides an overview of how contradictions within STEM policy at the top three levels of the curriculum (Supra, Macro and Meso) have a knock-on effect on the teaching and learning of scientific enquiry at the lower two levels (Micro and Nano).

Figure 1. Universal policy contradictions in STEM education.

Figure 2. The three stages of a Design-Based Research project.

At the Supra level, within international common frameworks of reference, a global push for STEM education is underway, with a glut of recent publications examining STEM practices across Europe (see for example Costello et al. Citation2020; McLoughlin et al. Citation2020; Nistor et al. Citation2018; Scientix Citation2018; Tasiopoulou et al. Citation2022). These reports share four ways to increase the quality of STEM education:

- develop high-quality teachers and teaching resources;
- integrate core competences into the curriculum;
- promote enquiry-based learning as an appropriate pedagogy;
- promote STEM careers through collaboration with industry partners.

There is historical evidence that these aspirations can be overturned in the face of ‘PISA shocks’. Such shocks prompt swift alterations to national education policies, particularly for science and mathematics, resulting in a return to more traditional forms of teaching (Freeman, Marginson, and Tytler Citation2019). Notable reversals of curriculum policy occurred following PISA shocks in Germany (Bank Citation2012) and Japan (Ishikawa, Moehle, and Fujii Citation2015). These reversals illustrate the contradiction between universal testing and curriculum innovation in STEM.

At the Macro (national) level, policies reflect the trends in global publications, promoting enquiry-based pedagogies to increase the quality of teaching (Australian Government Citation2015; DES Citation2017; NRC Citation2012). What policy does not seem able to reconcile is the washback effect that national assessment procedures are having on the type of teaching and learning happening in science classrooms (Burns et al. Citation2018). Lynch (Citation2021) has criticised national STEM policies aimed at educational improvement for their human-capital-led, terminal-assessment-based frame of education: ‘making oneself productive and entrepreneurial … the goal is to outcompete others, personally and professionally’ (Lynch Citation2021, 211). This view exposes the economic purposes of STEM education policies. In many countries, these policies emphasise meeting the needs of dwindling STEM workforces in order to maintain a competitive edge over other economies (Carter Citation2005; English National Curriculum for Science Citation2013). That emphasis is concealed among aspirations to be ‘the best’ at STEM education (DES Citation2017). Metrics such as international PISA scores or the Leaving Certificate points system in Ireland are used to quantify a person's educational value. When this happens, the emphasis on being productive leaves no time for exploring, knowing and understanding alternative epistemologies (such as scientific enquiry). As a result, competitive individualism and traditional teaching methods remain stubbornly unchanged in science classrooms (Lynch Citation2021).

At the Meso level within schools, curriculum reforms have led to an emphasis on curriculum making (as opposed to curriculum delivering). Loosely defined learning outcomes and an underpinning social constructivist ethos are replacing traditional prescribed syllabi (Priestley et al. Citation2021). This change in policy has not been easy to implement in classrooms. Recent reforms to the Irish lower secondary science curriculum in favour of this constructivist, enquiry-based approach have been roundly criticised by science teachers, union leaders and other stakeholders. These groups are pushing for a more clearly defined depth of treatment in the curriculum ahead of similar impending changes to upper secondary science curricula (DES Citation2023). In the United States, Short (Citation2021) makes the point that teachers who engaged in curriculum making in the wake of policy reforms did so out of the need to begin implementing the new science standards, because commercial publishers of curriculum materials were too slow in doing so. Short also notes that these curriculum-making efforts failed to meet the rigour of the new standards. This suggests that policy makers do not adequately recognise that curriculum making is an alien epistemology for many science teachers. Disappointing reports provide evidence to this effect: enquiry-based instructional reform in American classrooms remains ‘enigmatic and rare’ (Crawford Citation2014), with similar reports from England (Abrahams and Millar Citation2008), Australia (Kidman Citation2012), Singapore (Kim and Tan Citation2011) and Europe (OECD Citation2016). Sophisticated policy reforms internationally are not having the effect in practice that policy makers had hoped for, and enquiry-based learning is described as ‘misunderstood and badly used’ across the board (Osborne Citation2015).
This has led to the recognition in emerging Irish policy that enquiry-based learning, while worthwhile, poses challenges to STEM education provision (DES Citation2017; Citation2023).

The effects of the policy contradictions at the Supra, Macro and Meso levels of the curriculum become concentrated at the lower two levels. At the Micro (classroom instruction) and Nano (student experience) levels, the policy for enquiry-based practical work does not translate well into classroom practice. This is particularly true as students advance towards upper secondary level, where practical lessons tend to be recipe-based: students follow a list of instructions to produce a predetermined phenomenon (O’Neill Citation2022; Scientix Citation2018; Sharpe and Abrahams Citation2020). Abrahams and Millar (Citation2008) describe the learning that comes from recipe-style practical lessons as ‘hands-on’ but not ‘minds-on’. In such lessons the focus is on the substantive science content, and the sole aim is to produce the phenomenon. O’Neill (Citation2022) argues that Irish practical lessons could be described as ‘hands-off and minds-off’ because of the dearth of scientific enquiry and laboratory (procedural) skills incorporated into them. Many assume, incorrectly, that explanatory conceptual ideas will ‘emerge’ from observation if students produce the correct phenomenon (Abrahams and Reiss Citation2012). This assumption persists despite the wealth of academic evidence attesting to the incongruence of recipe-based teaching with conceptual understanding of scientific principles and ideas (Abrahams and Millar Citation2008; Dillon Citation2008; Hodson Citation2014; Millar Citation2009). Aspects of scientific enquiry, scientific skills and conceptual understanding written into policy documents are not a feature of practical work in senior cycle biology in Ireland or internationally (Capps and Crawford Citation2013; Millar Citation2009; O’Neill Citation2022). For students at the Nano level, the resulting experience is stultifying.
Students may be able to recall what they did during a practical lesson, but they do not know why they did it because they cannot connect the experiment to its underpinning concept (O’Neill Citation2022).

In summary, enquiry-based teaching and learning is promoted at all levels of the curriculum as a pedagogical method that enhances teaching and learning, but for the reasons outlined above it does not translate into practice. It may never be possible to establish genuine enquiry-based teaching and learning if STEM ambitions for productivity and competitiveness are written into policy. One direct result of such policy is a reluctance to let go of terminal high-stakes examinations, which maintain the narrative of competitiveness. This reluctance persists despite clear evidence that the influence of these examinations on classroom practice has narrowed the taught curriculum to what may be assessed in the terminal examination, including the part of the curriculum relevant to practical activities. The resulting teaching approaches primarily promote recall and repetition rather than scientific thinking, reasoning and creativity (Childs and Baird Citation2020). Teachers’ practice has been reduced to delivering recipe-based practical lessons because they are working within an epistemology that rewards memorisation and knowledge transmission (Burns et al. Citation2018). For scientific enquiry to work, there needs to be a balanced focus on propositional, procedural and epistemic knowledge; yet in the Irish system, only propositional knowledge is evident (NCCA Citation2019).

The other major issue is that the policy documents available to teachers contain no clear definition of enquiry-based teaching and learning. This has led to widespread misinterpretation of what enquiry looks like in the science classroom, with enquiry loosely interpreted as ‘hands-on’ practical experiences, which are generally not enquiry-based (OECD Citation2016; Shiel Citation2016). This masks an even deeper issue within science education that O’Neill (Citation2022) terms the ‘enquiry vacuum’. Teachers do not know how to transition from recipe teaching to enquiry teaching because no educational or pedagogical tools are available to support them. Irish policy reforms superficially acknowledge these problems. They refer to supporting teachers to embrace their changing role as curriculum makers through ‘a range of quality professional learning experiences’ (Department of Education and Skills Citation2023), but it remains unclear how teachers will be supported to make these changes. The Irish government is hoping for changes to STEM teaching in science classrooms that have already failed in other countries. Those reforms failed because policy consistently falls short of providing teachers with the professional development and pedagogical tools necessary to effect change (Loughran, Berry, and Mulhall Citation2012). The SEOS is designed as a ‘quality resource’ (Department of Education and Skills Citation2023). It has value as a pedagogical tool that can reintroduce procedural skills into practical lessons, thus initiating engagement in enquiry-based pedagogy. The next section outlines the theoretical frame within which the SEOS sits. This is followed by a description of how the SEOS was constructed from Irish biology curriculum documents and evaluated using DBR.

Theoretical framework

The construction of the SEOS is anchored by a theoretical framework grounded in Dewey's theory of knowledge. This provides a lens through which enquiry-based policy and recipe-based practice can be identified as two different epistemologies. Dewey's view of knowledge offers insights into the epistemological origins of recipe teaching. It also explains why recipe teaching persists today: what is taught

…is thought of as essentially static. It is taught as a finished product, with little regard either to the ways in which it was originally built up or to changes that will surely occur in the future ([Citation1938] 2015, 19)

Genuine science is impossible as long as the object esteemed for its own intrinsic qualities is taken as the object of knowledge. Its completeness, its immanent meaning, defeats its use as indicating and implying (Dewey Citation[1925] 1958, 130).

To know, means that men have become willing to turn away from precious possessions, willing to let drop what they own, however precious, in[sic] behalf of a grasp of objects that they do not as yet own ([1925] 1958, 131).

The following section describes how the procedural skills in the SEOS were identified from policy documents for practical work in biology.

Another important feature of the SEOS is its emphasis on the student (rather than the teacher) using the skills that are set out in it. Teaching through enquiry changes the role of the teacher from one who shares facts to be learned and recited to one who teaches students how to think intelligently as part of a community of scholars. It is the teacher's responsibility to see that the student can take advantage of a learning occasion by exercising his/her intelligence through the skills of enquiry.

Materials and methods

Research methodology

This study adopted a Design-Based Research (DBR) methodology because DBR characteristically engineers pragmatic interventions in the form of educational artefacts. These artefacts bridge the ‘credibility gap’ between educational research (including policy) and educational practice by fulfilling the ‘need for new research approaches that speak directly to problems of practice’ (Design-Based Research Collective Citation2003, 5). DBR involves the creation of innovative design tools that can advance ‘our knowledge about the characteristics of interventions and the processes of designing and developing them’ (Nieveen and Folmer Citation2013, 153). The SEOS is a design tool that was developed to track and evaluate the procedural enquiry skills achieved by students in practical biology lessons, and the process of designing and developing it is documented here.

While there is no rigid structure to the methodology, there are three generally agreed research cycles to any DBR project in an educational setting (McKenney and Reeves Citation2018; Plomp Citation2013; Thijs and Van den Akker Citation2009):

Needs and content analysis – a review of literature, scoping stage classroom observations, identification of the problem.

Design, development and formative evaluation of the artefact.

Semi-summative evaluation according to quality criteria.

Cycle 2 – design of the artefact

This section documents the process of designing the SEOS from Irish policy documents for practical work. Within the existing biology curriculum there is a clear emphasis on the process of scientific enquiry, on learning practical skills and on the application of the scientific method. It is recognised here that the scientific method is seen as outdated in many jurisdictions because of the linear way in which it presents scientific investigation (Cullinane et al. Citation2023; Erduran et al. Citation2021; Ioannidou and Erduran Citation2021). However, it is written into Irish curriculum documents and is therefore included in this research.

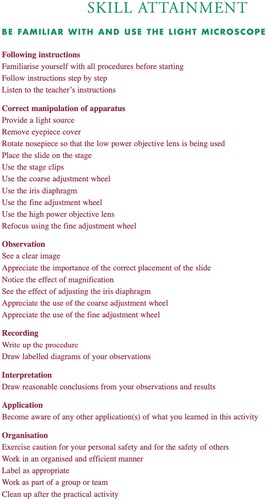

Irish biology teachers have three policy documents available to guide their practical pedagogy: the Biology Syllabus (Government of Ireland (GOI) Citation2001), the Guidelines for Teachers document (GOI Citation2002) and the Biology Support Materials Handbook (GOI Citation2003). All these documents emphasise scientific enquiry as the preferred method of teaching practical work. However, the Biology Support Materials Handbook (GOI Citation2003), the main document used by teachers to teach practical work, prescribes the step-by-step set-up and experimental method for each of the 22 mandatory experiments on the Leaving Certificate syllabus. This indicates a knowledge-as-transmission approach to practical teaching. The handbook also emphasises that ‘the main focus of these activities for students is the attainment of practical skills’ (GOI Citation2003, 2). These skills are referred to as procedural skills in this paper. The specific procedural skills to be developed are: ‘manipulation of apparatus, following instructions, observation, recording, interpretation and observation of results, practical enquiry and application of results’ (GOI Citation2003, 4). The SEOS was developed to document the presence of these specific procedural skills, so they form the backbone of its design. Every practical activity prescribed in the handbook has a list of these skills divided into sub-skills specific to the activity (see Figure 3 for an example).

Figure 3. An example of the skill attainment section of one Leaving Certificate mandatory activity as outlined in the Teacher's Handbook for Laboratory Work (GOI Citation2003, 24).

The sub-skills listed under the headings Following Instructions, Interpretation, Application and Organisation are identical across activities. Interpretation and Application are the ‘minds-on’, end-in-view enquiry skills. Yet in the handbook they are reduced to vague, identical one-line statements across all experiments, evidencing how enquiry is misrepresented in policy (Figure 3). The only sub-skills that change between activities are Correct Manipulation of Apparatus, Observation and Recording. These are the hands-on skills that Abrahams and Millar (Citation2008) identified as the main skills evident in practical work. When they alone are present (without Interpretation, Application or Practical Enquiry), practical lessons tend to be recipe-based, however the sub-skills within them change between experiments. This is because the learning that comes from them is reduced to knowledge as an end-in-itself. Figure 4 shows how the sub-skills for Interpretation, Application and Practical Enquiry listed in the SEOS were derived directly from their description in the introduction to the handbook, which proposes them as end-in-view (GOI Citation2003, 4). They were not derived from the vague description in the skills section of each experiment.

Figure 4. The reference to interpretation, application and practical enquiry subskills in practical policy (GOI Citation2003, 4).

An additional section at the end of the SEOS evaluates the use of the scientific method, since the handbook claims that ‘the study of biology is incomplete without the study and application of the scientific method’ (GOI Citation2003, 3).

Following Lankshear and Knobel’s (Citation2004) recommendations for observation schedules, the SEOS was tightly planned and detailed, and included a checklist of scientific skills that align with policy recommendations. These skills are listed in the left-hand column of the SEOS. Table 1 shows an example from one practical microscopy activity taught by an in-service teacher.

Table 1. An example of the SEOS for a Microscopy practical lesson.

The handbook also clearly stipulates the role of the student as central to practical work. In the recipe-based view of knowledge, the teacher presents the student with ‘ready-made intellectual pabulum to be accepted and swallowed’ (Dewey Citation[1910] 2012, 198). In enquiry-based lessons, it is essential that the student is included in the learning. In the SEOS this is documented by recording the extent to which students engage with each practical skill. Fradd et al. (Citation2001) developed a science enquiry matrix for classifying the level of enquiry in a practical lesson. Using a Likert scale, the matrix documents whether the teacher or the student carried out each of the main elements of enquiry teaching: questioning, planning, implementing, concluding, reporting and applying. Their matrix was adapted for the SEOS here. A similar scale is used to determine the extent to which students are engaged in the different skills listed in the SEOS:

0 = This skill is a feature of the syllabus recommendations but not evident in this lesson

1 = Teacher completes this skill with no input from students (>95% teacher input)

2 = Teacher mostly completes this skill with a little input from students (>75% teacher input)

3 = Most students complete this skill with some assistance from teacher (50% each)

4 = Most students complete this skill with a little assistance from teacher (>75% student input)

5 = Most students complete this skill without assistance from teacher (>95% student input)
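Each SEOS rating is anchored to an approximate share of teacher versus student input, so the scale can be expressed as a simple lookup. The Python sketch below is a hypothetical illustration and is not part of the published SEOS. It assumes that an observer has already estimated the percentage of student input for a skill. The scale publishes only anchor values (>95%, >75%, 50%) and leaves the ranges between them undefined, so the cut-points used here are assumptions. The function names `seos_score` and `band` are also invented for this example. The three bands are one possible grouping that mirrors how scores are read in the results:

- 0: absent skills;
- 1 to 2: teacher-led skills;
- 3 to 5: student-led skills.

```python
from typing import Optional

# Descriptors for each SEOS score, paraphrasing the scale above.
SEOS_SCALE = {
    0: "Feature of the syllabus recommendations but not evident in this lesson",
    1: "Teacher completes this skill with no input from students",
    2: "Teacher mostly completes this skill with a little input from students",
    3: "Most students complete this skill with some assistance from teacher",
    4: "Most students complete this skill with a little assistance from teacher",
    5: "Most students complete this skill without assistance from teacher",
}


def seos_score(student_share: Optional[float]) -> int:
    """Map an estimated share of student input (0-100 %) to a SEOS score.

    None means the skill was not evident in the lesson (score 0).
    The cut-points between the published anchors are ASSUMPTIONS.
    """
    if student_share is None:
        return 0
    if not 0 <= student_share <= 100:
        raise ValueError("student_share must be between 0 and 100")
    if student_share < 5:      # more than 95 % teacher input
        return 1
    if student_share < 25:     # more than 75 % teacher input
        return 2
    if student_share <= 75:    # assumed band around the 50/50 anchor
        return 3
    if student_share <= 95:    # more than 75 % student input
        return 4
    return 5                   # more than 95 % student input


def band(score: int) -> str:
    """Hypothetical grouping: absent (0), teacher-led (1-2), student-led (3-5)."""
    if score not in SEOS_SCALE:
        raise ValueError("score must be an integer from 0 to 5")
    if score == 0:
        return "absent"
    return "teacher-led" if score <= 2 else "student-led"
```

Under these assumptions, a skill where students contributed roughly 60% of the work scores 3 and falls in the student-led band. Moving the assumed cut-points would change only the ratings of borderline cases.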

Participants and sample size

The SEOS was used over three DBR research cycles as outlined in . It was retrospectively applied in cycle 2 (sub-cycle 1) to 10 scoping stage lessons taught by 6 in-service biology teachers (). Interviews with 6 in-service teachers and 16 students were conducted. Cycle 2 (sub-cycle 2) further evaluated the functionality of the SEOS in one in-service teacher setting. It was also used in 2 pre-service teacher settings (n = 8), with audio recordings used to corroborate the data gathered (). In the third sub-cycle, the SEOS was trialled in 5 enquiry-based lessons taught by two in-service teachers. These lessons were video- and audio-recorded, and the SEOS was retrospectively applied to each lesson (). Interview data from two teachers and four students triangulated the SEOS data from this sub-cycle. Table 3 provides the location and a description of the schools in the sample. All schools were mixed-sex.

Table 2. The sample of participants that took part in each stage of the research.

Table 3. Location and description of schools in the sample studied.

Ethical approval for this research was granted as a part of the doctoral process.

Triangulation of the SEOS with other data collection methods

Data collected with the SEOS is triangulated here with unstructured observations, interview, audio and video evidence. How each of these methods was used is described below. All data collection was conducted by one doctoral researcher.

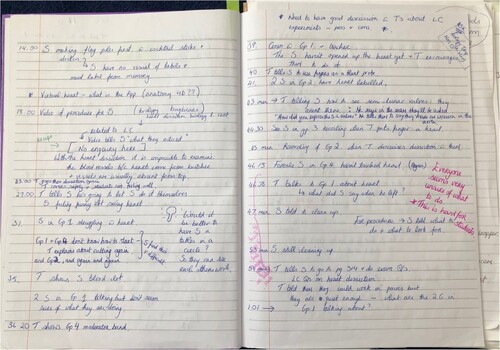

Unstructured observations were used to gain insights into how students and teachers perceived events in practical lessons (Simpson and Tuson Citation2003). Descriptive field notes were taken, which allowed a broad and flexible log of the sequence of events in each practical lesson observed (Simpson and Tuson Citation2003). Following Spradley's guidelines (Citation2016), the field notes included:

- a record of the physical space;
- a chronological, time-stamped log of the actions, activities and events in the situation;
- a record of the materials used.

In addition, relevant dialogue was recorded, and instances where students displayed signs of uncertainty or confusion were noted. The figure above shows a sample of field notes taken during a heart dissection practical lesson; note how they indicate the recipe-based nature of this lesson.

Informed by the literature review, the field notes were used to identify the lack of enquiry skills in Irish practical biology lessons. This observation was then investigated by applying the SEOS retrospectively to video recordings of the lessons.

Semi-structured interviews were used to corroborate the observation that practical work did not include scientific enquiry skills. Mason's (Citation2017) guidelines for planning interview questions were followed. ‘Big’ questions, informed by the literature review, were documented first. For example: do the syllabus requirements and classroom enactment of practical work align? (Abrahams and Reiss Citation2012; Government of Ireland Citation2003; Government of Ireland Citation2002; Government of Ireland Citation2001; Grunwald and Hartman Citation2010; Hofstein and Lunetta Citation2004; Kind Citation2011; Lunetta, Hofstein and Clough Citation2013; Llewellyn Citation2013; National Research Council Citation2012). ‘Mini’ questions were then developed from the big questions. These allowed flexibility to examine relevant topics in greater depth while also allowing unforeseen topics to be explored (Mason Citation2017). For example, the big question above was broken into mini questions asking teachers and students whether each of the following was present in practical lessons:

- observation of a phenomenon;
- formulation of hypotheses;
- interpretation of data;
- application of scientific concepts to new ideas and experiments.

These mini questions drew on Grunwald and Hartman (Citation2010), Hofstein and Lunetta (Citation2004), Llewellyn (Citation2013) and the National Research Council (Citation2012).

In each practical lesson that was observed, a Dictaphone recorded the conversations of one group of students. The purpose of this was to check whether the SEOS accurately documented student involvement in the enquiry skills evident in the lesson. For example, the only way to know whether students were able to formulate a hypothesis was to listen to the way in which they discussed it.

Transcripts of interview and audio data were analysed using Template Analysis because it occupies a position that bridges inductive and deductive analysis of data (Brooks et al. Citation2015; King Citation2012). King (Citation2012) advocates defining initial higher-level a priori themes, from which lower-level themes can emerge inductively. In this case there was one a priori theme: policy vs practice. The bulk of the data analysis was a bottom-up reading of the interview and audio transcripts to code all relevant areas of interest and ensure nothing was overlooked.

Results

Cycle 2 – formative evaluation of the SEOS

In DBR, design and formative evaluation of educational artefacts go hand in hand (Nieveen and Folmer Citation2013). The development of the SEOS as a quality tool depends on its ability to evaluate the procedural skills used by students in practical lessons. It is therefore trialled in three increasingly enquiry-based settings, documented in this section as three evaluative sub-cycles. This formative evaluation corroborates data collected using the SEOS with interview and audio data, strengthening the claims made here about the SEOS as a quality tool for examining practical procedural skills.

Sub-cycle 1

Field notes recorded from 10 unstructured scoping observations in cycle 1 reported the absence of enquiry in all lessons (see ). Each lesson began with the teacher asking a series of oral or written questions, followed by an explanation of the experiment as a step-by-step process, after which students followed the procedure to produce a phenomenon. Whether the phenomenon was produced or not, the teacher wrote the results on the board, and to conclude, students finished their laboratory reports. These lessons were identified as recipe-style only.

The SEOS was retrospectively applied to each of the 10 experiments observed in the scoping cycle to evaluate the procedural skills evident (Table 4). The only skill consistently achieved by students was the hands-on skill Following Instructions, as indicated by scores of between 3 and 5 in Table 4. Scores for Correct Manipulation of Apparatus, which is associated with laboratory skills, revealed an inconsistent approach to teaching laboratory skills across all lessons. The field notes indicated little evidence that laboratory skills such as measurement or accuracy were being taught to students.

Table 4. Scoping stage observations of 10 practical lessons using the SEOS.

Under the Observation section, for the sub-skill ‘complete observation of the phenomenon under study’, the SEOS indicated that students did not produce any phenomenon in seven of the ten lessons. Interview evidence confirmed this to be the case for much of the practical work students undertook:

At the moment with practical work, what are the major barriers to your learning?

We don't really understand it (practical work) in the first place to actually understand what's going on as we’re doing it like

That it doesn't really work as well, like maybe if it worked, we’d understand why it worked. Whereas when it doesn't work, you’re just like ‘alright, it didn't work’ and we don't know why it didn't work or how we could change it to make it work.

And it's not even like, say like, ours didn't work but somebody else's worked, that did, so you could compare, like, it's just, nobody's did.

Student interview 1 – Cycle 1

In terms of recording and presenting data, in all but three lessons students placed no emphasis on any kind of data representation, such as graphs or diagrams (scores of 0 or 1).

The minds-on skills associated with enquiry (Interpretation, Application and Practical Enquiry), where present at all, were carried out by the teacher (scores of 1). No lesson included hypothesis formation or application of ideas. Any conclusions were drawn by the teacher (scores of 1) without any student involvement in the process.

The bottom of Table 4 shows that no aspect of the scientific method was used to teach practical work in these biology classrooms, even though curriculum documents stipulate it as the pedagogy of choice. Teacher and student interviews provided supporting evidence that participants were aware that practical lessons did not incorporate it:

Every experiment was supposed to incorporate the scientific method. Would you say that happens?

No

Ever?

Definitely not, no.

Mr. Jones interview – Cycle 1

Ok. You’ve studied the scientific method?

Yeah

Ok. Do you ever use the scientific method to do an experiment? So, do you ever pose a hypothesis? Do you ever ask a question? Do you ever collect data and analyse your data?

We should be

But we’re not

Is that a part of Leaving Cert biology?

No

Student interview 1 – Cycle 1

Sub-cycle 2

The second design cycle trialled the SEOS in two settings (Table 5): one in-service teacher lesson and two pre-service teacher lessons (n = 8). All participants were asked to teach an enquiry-based laboratory lesson that focused on one laboratory skill for teachers (preparation of chemical solutions), one laboratory skill for students (correct and accurate use of laboratory equipment) and one enquiry skill (formation of a hypothesis). Audio recordings of these lessons were used to support the SEOS data.

Table 5. Data collected from the second design cycle using the SEOS.

According to the SEOS, the focus on laboratory skills was evident in the in-service teacher lesson, because students observed the phenomenon they were intended to observe (see Correct Manipulation of Apparatus in Table 5). The pre-service teachers' lessons also emphasised laboratory skills, although the phenomenon was produced in only one of the two lessons. Across all three lessons, student attainment of the physical manipulative skills improved compared with the previous sub-cycle. There was no marked improvement in enquiry skills (interpretation, application, practical enquiry). It should be noted, however, that this was the first time students were asked for a hypothesis in an in-service teacher (IST in Table 5) lesson, albeit with more input from the teacher than from the students, hence a rating of 2 beside ‘forming an hypothesis’ towards the bottom of Table 5:

Oh, I’ll ask one question. What was our hypothesis from yesterday?

That we would be able to extract DNA

To find DNA

Why?

Ok bio-? [No answer] It's a something kind of biomolecule?

Stable

Stable biomolecule. It is a stable … right lads off you go

05:40

I’m so bad at coming up with a hypothesis

I don't know what a hypothesis is

It's a statement and then you have to pick a side

I feel like I don't even know what a hypothesis is about

Well, I was never taught, like, “this is a hypothesis”

I think it's a part of the scientific method –

-yeah, but like, that was never tested, yeah –

-but like, who actually gives a **** about the scientific method?

09:40

Sub-cycle 3

In the final design cycle, the SEOS documented procedural skills in genuine enquiry-based lessons. Two in-service teachers underwent enquiry-based professional development in the form of five three-hour workshops (O’Neill 2022). They then taught five enquiry-based lessons to their fifth-year biology classes. The SEOS in Table 6 documents the procedural skills in these lessons:

Table 6. Data collected from the third design cycle using the SEOS.

Table 6 shows that the SEOS was sensitive enough to capture the shift in how practical work was taught between this sub-cycle and the previous two. The ratings for Correct Manipulation of Apparatus showed a marked shift towards independent student work. The Observation ratings documented that the practical activities ‘worked’, with students observing the correct aspect of the phenomenon without assistance from the teacher; there was no evidence of this in the scoping stages. The Recording ratings showed that in each of the five lessons it was the students who decided how to record, calculate, tabulate and graphically represent their results, which again was not evident in the scoping stages.

In terms of enquiry skills, the difference between this stage and the scoping stage was substantial. Students drew their own conclusions from their own experimental data and were able to relate those conclusions back to the hypotheses they had developed. In interview, the teachers commented on this shift from knowledge as an end-in-itself to knowledge as an end-in-view:

Before I was like … “What enzyme are we using?”, “What substrate are we using?”

I know we were only using recall on the bottom of Blooms [taxonomy]

But I was of the opinion that if I asked them at least 100 times they would write it down in an exam, [laughter] you know I just thought like, the more I ask the more it might actually eventually go into their head.

You were approaching your classes as an educator more so than a scientist like, and that was the question, where you want people that were going to do well in exams or people who were going to be scientists like?

Yeah, to think for themselves.

Teacher interview – Cycle 3

If you’re just given a list of instructions, like, I feel like it wouldn't even be beneficial because you’re literally just getting told what to do and you’re not being allowed the opportunity to bring your own opinions and what you think might work or won't work.

Student interview 1 – Cycle 4

Discussion

Cycle 3 – summative evaluation

The SEOS is discussed here in light of four quality criteria to which any DBR artefact must be subject: practicality, relevance, consistency and effectiveness (Kelly 2013). Practicality refers to usability by target users in the target setting. In the pragmatic spirit of DBR, the SEOS offered a practical way to show clearly the presence or absence of procedural skills in biology practical lessons and the extent to which they were achieved by students (rather than teachers). Irish policy for STEM implementation identifies engagement of teachers in communities of practice (CoPs) as one way to enhance teacher skills (DES 2017). Wenger (1999, 58) describes how CoPs need material objects that create ‘points of focus around which negotiation of meaning becomes organised’, a role the SEOS could potentially play. However, the sole user of the SEOS in this study was a doctoral researcher, so future research is essential to examine whether the SEOS can fulfil the policy ambition of supporting in-service and pre-service teachers to self- and peer-assess their practical lessons.

In terms of relevance, the SEOS answers international calls at the macro, meso and micro curriculum levels for quality resources to assist teachers with the development of enquiry-based pedagogies (DES 2017). While there are many STEM frameworks for teaching enquiry in the literature, such as those of Pedaste et al. (2015) and Bybee (2014), there is a gap in the research around whether those frameworks align curriculum policy with practice for practical activities. The simplicity of the SEOS lies in the direct translation of curriculum enquiry skills into an artefact that can be understood and used by any practising teacher. It creates awareness of the curriculum requirements for practical work and, as this paper shows, documents improvements in students' practical enquiry and laboratory skills. It thus responds to Deng’s (2017) contention that curriculum policy and classroom practice operate in different arenas of reality, by grounding classroom practice in the skills set out in the policy documents.

If the SEOS can be used repeatedly in the same manner, it can be considered to fulfil the third quality criterion: consistency (Kelly 2013). The SEOS tracked changes to teacher and student practices through three iterative cycles of increasingly enquiry-based practical lessons. Its underlying theoretical framework, which distinguishes between recipe-based knowledge and enquiry-based ways of knowing, supported its consistent use in revealing the extent to which students independently attained the procedural enquiry skills stipulated in policy.

The final quality criterion, effectiveness, required the SEOS to document procedural skills in practical lessons effectively; evaluating it against this criterion answers the research question posed at the beginning of the paper. It has been shown here how the SEOS highlighted patterns within practical lessons in each sub-cycle. The first sub-cycle identified an almost complete absence of student attainment of procedural skills, beyond following instructions, across 10 lessons, and the SEOS proved to be a valuable indicator of the wider misalignment of policy and practice for practical work. In the second sub-cycle, the SEOS documented that laboratory skills (hands-on) were easier to improve than scientific enquiry skills (minds-on), which again pointed to the epistemic divide between recipe and enquiry and evidenced the enquiry vacuum (O’Neill 2022). Only in the third sub-cycle did the SEOS record clear evidence of student attainment of enquiry and laboratory skills. The lessons in this sub-cycle were taught by teachers who had been trained to teach through genuine enquiry, and the SEOS captured this epistemic shift.

Therefore, if procedural knowledge of scientific enquiry is made explicit in the manner of the SEOS, it may be more likely to be included in enacted practical lessons, provided that the SEOS is used as a reflective tool: that is, completed by the teacher and/or students at the end of each practical lesson. If patterns emerge that indicate a consistent failure of students to attain a skill, this could prompt changes to how practical work is taught.

The SEOS has a place in curriculum change because it focuses attention on including currently absent procedural skills such as interpretation of data, application of new knowledge, and practical enquiry (forming an hypothesis, designing a new activity, using controls, identifying variables). Including these skills in practical work answers the State Examinations Commission (SEC) call to strike a balance between propositional knowledge, currently overrepresented at senior cycle, and procedural and epistemic knowledge, which are currently underrepresented (SEC 2013, 18). Highlighting procedural knowledge and skills in the curriculum is seen here as the first step towards bridging the epistemic divide between recipe and enquiry. Zohar and Hipkins (2018, 44) have written about this epistemic divide in terms of the difficulties arising from curriculum reforms that do not provide ‘clear criteria for teaching, assessing and designing interventions that include a focus on knowledge-building practices in the disciplines’. This study has shown how to design, implement and evaluate an effective intervention for building knowledge around procedural skills in the curriculum.

The SEOS is not limited to use in senior cycle biology classrooms; because it is grounded in the alignment of policy and practice, it is potentially transferable across the spectrum of STEM education. It answers calls in international STEM policy for quality resources to assist teachers to make changes to their classroom practice (DES 2017).

Limitations of the research

While some research asserts that piloting research instruments is important to increase their trustworthiness (Bassey 1999), piloting is not considered essential in small-scale exploratory projects whose focus is on determining whether the research is suitable for upscaling (Malmqvist et al. 2019). This research project was of that kind, which points to a future direction for this research: refining the SEOS with practising teachers, as a precursor to a larger study that situates and assesses it as a tool for teachers as Irish senior cycle STEM curricula transition to more modern enquiry-based scientific practices (DES 2023).

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

The data sets in each SEOS presented in this paper are derived from the PhD research of the author and can be found at the following link: https://mural.maynoothuniversity.ie/17278/1/NON%20PhD%20Thesis%20Complete.pdf

Additional information

Funding

Notes on contributors

Natalie O’Neill

Natalie O’Neill is an Assistant Professor in Education at DCU and a former science teacher. In 2022 she completed a doctoral study researching, characterising and providing solutions to the epistemic divide between policy and practice in the second level biology curriculum. Her research interests are in science education, and she is the ESAI Biology Special Interest Group Lead, working with a group of researchers across Ireland and Northern Ireland to effect changes in policy regarding biology education. She is also a member of CASTeL at DCU.

References

- Abrahams, Ian, and Robin Millar. 2008. “Does Practical Work Really Work? A Study of the Effectiveness of Practical Work as a Teaching and Learning Method in School Science.” International Journal of Science Education 30 (14): 1945–1969. https://doi.org/10.1080/09500690701749305

- Abrahams, Ian, and Michael J. Reiss. 2012. “Practical Work: Its Effectiveness in Primary and Secondary Schools in England.” Journal of Research in Science Teaching 49 (8): 1035–1055. https://doi.org/10.1002/tea.21036

- Australian Government. 2015. “National STEM School Education Strategy, 2016–2026.”

- Bank, Volker. 2012. “On OECD Policies and the Pitfalls in Economy-Driven Education: The Case of Germany.” Journal of Curriculum Studies 44 (2): 193–210. https://doi.org/10.1080/00220272.2011.639903

- Bassey, Michael. 1999. Case Study Research in Educational Settings. Buckingham: Open University Press.

- Brooks, Joanna, Serena McCluskey, Emma Turley, and Nigel King. 2015. “The Utility of Template Analysis in Qualitative Psychology Research.” Qualitative Research in Psychology 12 (2): 202–222. https://doi.org/10.1080/14780887.2014.955224

- Burns, Denise, Ann Devitt, Gerry McNamara, Joe O'Hara, and Martin Brown. 2018. “Is it all Memory Recall? An Empirical Investigation of Intellectual Skill Requirements in Leaving Certificate Examination Papers in Ireland.” Irish Educational Studies 37 (3): 351–372. https://doi.org/10.1080/03323315.2018.1484300

- Bybee, Rodger W. 2014. “The BSCS 5E Instructional Model: Personal Reflections and Contemporary Implications.” Science and Children 51 (8): 10–13.

- Capps, Daniel K., and Barbara A. Crawford. 2013. “Inquiry-based Instruction and Teaching About Nature of Science: Are They Happening?” Journal of Science Teacher Education 24 (3): 497–526. https://doi.org/10.1007/s10972-012-9314-z

- Carter, Lynn. 2005. “Globalisation and Science Education: Rethinking Science Education Reforms.” Journal of Research in Science Teaching: The Official Journal of the National Association for Research in Science Teaching 42 (5): 561–580.

- Childs, Ann, and Jo-Anne Baird. 2020. “General Certificate of Secondary Education (GCSE) and the Assessment of Science Practical Work: An Historical Review of Assessment Policy.” The Curriculum Journal 31 (3): 357–378. https://doi.org/10.1002/curj.20

- Costello, E., P. Girme, M. McKnight, M. Brown, E. McLoughlin, and S. Kaya. 2020. “Government Responses to the Challenge of STEM Education: Case Studies from Europe.” ATS STEM Report #2. Dublin: Dublin City University. https://doi.org/10.5281/zenodo.3673600

- Crawford, Barbara A. 2014. “From Inquiry to Scientific Practices in the Science Classroom.” In Handbook of Research on Science Education, Volume II, edited by Norman Lederman and Sandra Abell, 529–556. London: Routledge.

- Cullinane, Alison, Judith Hillier, Ann Childs, and Sibel Erduran. 2023. “Teachers’ Perceptions of Brandon’s Matrix as a Framework for the Teaching and Assessment of Scientific Methods in School Science.” Research in Science Education 53 (1): 193–212. https://doi.org/10.1007/s11165-022-10044-y

- Deng, Zongyi. 2017. “Rethinking Curriculum and Teaching.” In Oxford Research Encyclopedia of Education, edited by G. W. Noblit, 1–25. New York: Oxford University Press.

- Department of Education and Skills. 2017. “STEM Education Policy Statement.” Retrieved June 2023. https://www.gov.ie/en/policy-information/4d40d5-stem-education-policy/#stem-education-policy-statement-2017-2026.

- Department of Education and Skills. 2023. “STEM Education: Implementation Plan to 2026.” Retrieved June 2023. https://www.gov.ie/pdf/?file=https://assets.gov.ie/249002/3a904fe0-8fcf-4e69-ab31-987babd41ccc.pdf#page=null

- DES. 2023. “STEM Education Policy Focus Group Consultation Report.” March 2023.

- Design-Based Research Collective. 2003. “Design-based Research: An Emerging Paradigm for Educational Inquiry.” Educational Researcher 32 (1): 5–8. https://doi.org/10.3102/0013189X032001005

- Dewey, John. [1910] 2012. How We Think. Mansfield Centre, Connecticut: Martino Publishing.

- Dewey, John. [1925] 1958. Experience and Nature. Vol. 471. New York: Dover Publications.

- Dewey, John. [1938] 2015. “Experience and Education.” The Educational Forum 50 (3): 241–252. https://doi.org/10.1080/00131728609335764

- Dillon, Justin. 2008. “A Review of the Research on Practical Work in School Science.” King’s College, London: 1–9.

- English National Curriculum for Science. 2013. Accessed May 2023. https://www.gov.uk/government/publications/national-curriculum-in-england-science-programmes-of-study/national-curriculum-in-england-science-programmes-of-study#contents.

- Erduran, Sibel, Ebru Kaya, Aysegul Cilekrenkli, Selin Akgun, and Busra Aksoz. 2021. “Perceptions of Nature of Science Emerging in Group Discussions: A Comparative Account of pre-Service Teachers from Turkey and England.” International Journal of Science and Mathematics Education 19 (7): 1375–1396. https://doi.org/10.1007/s10763-020-10110-9

- Fradd, Sandra H., Okhee Lee, Francis X. Sutman, and M. Kim Saxton. 2001. “Promoting Science Literacy with English Language Learners Through Instructional Materials Development: A Case Study.” Bilingual Research Journal 25 (4): 479–501. https://doi.org/10.1080/15235882.2001.11074464

- Freeman, Brigid, Simon Marginson, and Russell Tytler. 2019. “An International View of STEM Education.” In STEM Education 2.0: Myths and Truths–What has K-12 STEM Education Research Taught us?, edited by A. Sahin, and M. Mohr-Schroeder, 350–363. Leiden, The Netherlands: Brill.

- Freire, Paulo. 1996. Pedagogy of the Oppressed (Revised). New York: Continuum.

- Government of Ireland. 2001. Leaving Certificate Biology Syllabus. Dublin: The Stationery Office.

- Government of Ireland. 2002. Biology, Guidelines for Teachers. Dublin: Government Publications.

- Government of Ireland. 2003. Biology Support Materials Laboratory Handbook For Teachers. Dublin: Government Publications.

- Grunwald, Sandra, and Andrew Hartman. 2010. “A Case-Based Approach Improves Science Students’ Experimental Variable Identification Skills.” Journal of College Science Teaching 39 (3).

- Hodson, Derek. 2014. “Learning Science, Learning About Science, Doing Science: Different Goals Demand Different Learning Methods.” International Journal of Science Education 36 (15): 2534–2553. https://doi.org/10.1080/09500693.2014.899722

- Hofstein, Avi, and V. N. Lunetta. 2004. “The Laboratory in Science Education: Foundations for the Twenty-First Century.” Science Education 88 (1): 28–54. https://doi.org/10.1002/sce.10106

- Hyland, Aine. 2014. “The Design of Leaving Certificate Science Syllabi in Ireland: An International Comparison.” Irish Science Teachers’ Association.

- Ioannidou, Olga, and Sibel Erduran. 2021. “Beyond Hypothesis Testing: Investigating the Diversity of Scientific Methods in Science Teachers’ Understanding.” Science & Education 30 (2): 345–364. https://doi.org/10.1007/s11191-020-00185-9

- Ishikawa, Mayumi, Ashlyn Moehle, and Shota Fujii. 2015. “Japan: Restoring Faith in Science Through Competitive STEM Strategy.” In The Age of STEM, edited by B. Freeman, S. Marginson, and R. Tytler, 81–101. Oxon: Routledge.

- Kelly, Anthony E. 2013. In Educational Design Research, edited by Tjeerd Plomp and Nienke Nieveen, 135–150. Enschede: SLO.

- Kidman, Gillian. 2012. “Australia at the Crossroads: A Review of School Science Practical Work.” Eurasia Journal of Mathematics, Science and Technology Education 8 (1): 35–47.

- Kim, Mijung, and Aik-Ling Tan. 2011. “Rethinking Difficulties of Teaching Inquiry-Based Practical Work: Stories from Elementary pre-Service Teachers.” International Journal of Science Education 33 (4): 465–486. https://doi.org/10.1080/09500691003639913

- Kind, Per Morton, et al. 2011. “Peer Argumentation in the School Science Laboratory—Exploring Effects of Task Features.” International Journal of Science Education 33 (18): 2527–2558. https://doi.org/10.1080/09500693.2010.550952

- King, Nigel. 2012. “Doing Template Analysis.” In Qualitative Organizational Research: Core Methods and Current Challenges, edited by Gillian Symon and Cathy Cassell, 426–449. Los Angeles: Sage.

- Lankshear, Colin, and Michele Knobel. 2004. A Handbook for Teacher Research. Berkshire: Open University Press.

- Llewellyn, Douglas. 2013. Teaching High School Science Through Inquiry and Argumentation. California: Corwin Press.

- Loughran, John, Amanda Berry, and Pamela Mulhall. 2012. Understanding and Developing Science Teachers’ Pedagogical Content Knowledge. Vol. 12. Rotterdam: Sense Publishers.

- Lunetta, Vincent N., Avi Hofstein, and Michael P. Clough. 2013. “Learning and Teaching in the School Science Laboratory: An Analysis of Research, Theory, and Practice.” In Handbook of Research on Science Education, edited by Norman Lederman and Sandra Abell, 393–441. Mahwah, NJ: Routledge.

- Lynch, Kathleen. 2021. Care and Capitalism. Cambridge: John Wiley & Sons.

- Malmqvist, Johan, Kristina Hellberg, Gunvie Möllås, Richard Rose, and Michael Shevlin. 2019. “Conducting the Pilot Study: A Neglected Part of the Research Process? Methodological Findings Supporting the Importance of Piloting in Qualitative Research Studies.” International Journal of Qualitative Methods 18: 1609406919878341. https://doi.org/10.1177/1609406919878341

- Mason, Jennifer. 2017. Qualitative Researching. California: Sage.

- McKenney, Susan, and Thomas C. Reeves. 2018. Conducting Educational Design Research. New York: Routledge.

- McLoughlin, Eilish, et al. 2020. “STEM Education in Schools: What Can We Learn from the Research?” ATS STEM Report #1. Ireland: Dublin City University. https://doi.org/10.5281/zenodo.3673728

- Millar, Robin. 2004. “The Role of Practical Work in the Teaching and Learning of Science.” Commissioned Paper-Committee on High School Science Laboratories: Role and Vision. Washington DC: National Academy of Sciences, 308.

- Millar, Robin. 2009. “Analysing Practical Activities to Assess and Improve Effectiveness: The Practical Activity Analysis Inventory (PAAI).” York: Centre for Innovation and Research in Science Education, University of York.

- National Research Council. 2012. A Framework for K-12 Science Education: Practices, Crosscutting Concepts, and Core Ideas. Washington: National Academies Press.

- NCCA. 2019. Accessed May 2023. https://ncca.ie/media/5387/bp-lc-pcb-sep-2019.pdf.

- Nieveen, Nienke, and Elvira Folmer. 2013. “Formative Evaluation in Educational Design Research.” Design Research 153: 152–169.

- Nistor, A., A. Gras-Velazquez, N. Billon, and G. Mihai. 2018. “Science, Technology, Engineering and Mathematics Education Practices in Europe” [Scientix Observatory Report]. Brussels: European Schoolnet. http://www.scientix.eu/observatory.

- OECD. 2016. PISA 2015 Results: Policies and Practices for Successful Schools (Vol. 2). Paris: OECD Publishing.

- O’Neill, Natalie. 2022. “Bridging the Epistemic Divide: A Design Based Research Project on Laboratory Work at Upper Second Level in Ireland.” PhD diss., National University of Ireland Maynooth.

- Osborne, Jonathan. 2015. “Practical Work in Science: Misunderstood and Badly Used?” School Science Review 96 (357): 16–24.

- Pedaste, Margus, Mario Mäeots, Leo A. Siiman, Ton De Jong, Siswa AN Van Riesen, Ellen T. Kamp, Constantinos C. Manoli, Zacharias C. Zacharia, and Eleftheria Tsourlidaki. 2015. “Phases of Inquiry-Based Learning: Definitions and the Inquiry Cycle.” Educational Research Review 14: 47–61. https://doi.org/10.1016/j.edurev.2015.02.003

- Plomp, Tjeerd. 2013. “Educational Design Research: An Introduction.” In Educational Design Research, edited by Tjeerd Plomp and Nienke Nieveen, 11–50. Enschede: SLO.

- Priestley, Mark, Daniel Alvunger, Stavroula Philippou, and Tina Soini, eds. 2021. Curriculum Making in Europe: Policy and Practice Within and Across Diverse Contexts. Bingley: Emerald Publishing Limited.

- Scientix. 2018. Education Practices in Europe. Retrieved June 2023. http://www.scientix.eu/documents/10137/782005/STEM-Edu-Practices_DEF_WEB.pdf/b4847c2d-2fa8-438c-b080-3793fe26d0c8.

- Sharpe, Rachael, and Ian Abrahams. 2020. “Secondary School Students’ Attitudes to Practical Work in Biology, Chemistry and Physics in England.” Research in Science & Technological Education 38 (1): 84–104. https://doi.org/10.1080/02635143.2019.1597696

- Shiel, Gerry, et al. 2016. “Ireland on Science, Reading Literacy and Mathematics in PISA 2015.”

- Short, James. 2021. “Commentary: Making Progress on Curriculum Reform in Science Education Through Purposes, Policies, Programs, and Practices.” Journal of Science Teacher Education 32 (7): 830–835. https://doi.org/10.1080/1046560X.2021.1927309

- Simpson, Mary, and Jennifer Tuson. 2003. Using Observations in Small-Scale Research: A Beginner’s Guide. Rev. ed. Edinburgh: SCRE Centre, University of Glasgow (SCRE Publication no. 130).

- Spradley, James. 2016. Doing Participant Observation. Long Grove, IL: Waveland Press.

- State Examinations Commission. 2021. Accessed August 2022. https://www.examinations.ie/statistics/?l=en&mc=st&sc=r21

- State Examinations Commission [SEC]. 2013. Leaving Certificate Examination 2013, Chief Examiner’s Report, Physics. Retrieved May 2023 from https://www.examinations.ie/archive/examiners_reports/Chief_Examiner_Report_Physics_2013.pdf.

- Tasiopoulou, Evita, et al. 2022. “European Integrated STEM Teaching Framework October 2022”, European Schoolnet, Brussels.

- Thijs, Annette, and Jan Van Den Akker. 2009. Curriculum in Development. Enschede: Slo, Netherlands Institute for Curriculum Development.

- Van den Akker, Jan, et al., eds. 2006. Educational Design Research. London: Routledge.

- Wenger, Etienne. 1999. Communities of Practice: Learning, Meaning, and Identity. Cambridge: Cambridge University Press.

- Yoon, Hye-Gyoung, Yong Jae Joung, and Mijung Kim. 2012. “The Challenges of Science Inquiry Teaching for pre-Service Teachers in Elementary Classrooms: Difficulties on and Under the Scene.” Research in Science Education 42 (3): 589–608. https://doi.org/10.1007/s11165-011-9212-y

- Zohar, Anat, and Rosemary Hipkins. 2018. “How “Tight/Loose” Curriculum Dynamics Impact the Treatment of Knowledge in two National Contexts.” Curriculum Matters 14: 31–47. https://doi.org/10.18296/cm.0028