?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

For high-dimensional linear regression models, we review and compare several estimators of variances and

of the random slopes and errors, respectively. These variances relate directly to ridge regression penalty λ and heritability index h2, often used in genetics. Several estimators of these, either based on cross-validation (CV) or maximum marginal likelihood (MML), are also discussed. The comparisons include several cases of the high-dimensional covariate matrix such as multi-collinear covariates and data-derived ones. Moreover, we study robustness against model misspecifications such as sparse instead of dense effects and non-Gaussian errors. An example on weight gain data with genomic covariates confirms the good performance of MML compared to CV. Several extensions are presented. First, to the high-dimensional linear mixed effects model, with REML as an alternative to MML. Second, to the conjugate Bayesian setting, shown to be a good alternative. Third, and most prominently, to generalized linear models for which we derive a computationally efficient MML estimator by re-writing the marginal likelihood as an n-dimensional integral. For Poisson and Binomial ridge regression, we demonstrate the superior accuracy of the resulting MML estimator of λ as compared to CV. Software is provided to enable reproduction of all results.

1. Introduction

Estimation of hyper-parameters is an essential part of fitting high-dimensional Gaussian random effect regression models, also known as ridge regression. These models are widely applied in genomics and genetics applications, where often the number of variables p is much larger than the number of samples n, i.e. .

We initially focus on the linear model. The goal is to estimate error variance and random effects variance

or functions thereof, in particular the ridge penalty parameter,

, or heritability index,

. Here, the ridge penalty is used in classical ridge regression to shrink the regression coefficients towards zero (Hoerl and Kennard Citation1970), whereas heritability measures the fraction of variation between individuals within a population that is due to their genotypes (Visscher, Hill, and Wray Citation2008). The estimators of

and

can be used to estimate λ or h2, or for statistical testing (Kang et al. Citation2008). We review several estimators, based on maximum marginal likelihood (MML), moment equations, (generalized) cross-validation, dimension reduction, and for degrees-of-freedom adjustment. Some of these estimators are classical, while others have recently been introduced.

We systematically review and compare the estimators in a broad variety of high-dimensional settings. For estimation of λ in low-dimensional settings, we refer to Muniz and Kibria (Citation2009); Månsson and Shukur (Citation2011); Kibria and Banik (Citation2016). We address the effect of multi-collinearity and robustness against model misspecifications, such as sparsity and non-Gaussian errors. The comparisons are extended to the linear mixed effects model, with fixed effects added to the model and to Bayesian linear regression. The linear model part is concluded by a genomics data application to weight gain prediction after kidney transplantation.

The observed good performance of MML-estimation in the linear model setting was a stimulus to consider MML for high-dimensional generalized linear models (GLM). MML is more involved here than in the linear model, because of the non-conjugacy of the likelihood and prior. Therefore, approximations are required, such as Laplace ones. While these have been addressed by others (Heisterkam, van Houwelingen, and Downs Citation1999; Wood Citation2011), we derive an estimator which is computationally efficient for settings. For Poisson and Binomial ridge regression, we demonstrate the superior accuracy of MML estimation of λ as compared to cross-validation.

Our software enables reproduction of all results. In addition, it allows comparisons for one’s own high-dimensional data matrix by simulating the response conditional on this matrix, as we do for two cancer genomics examples. Computational shortcuts and considerations are discussed throughout the paper, and detailed at the end, including computing times.

1.1. The model

We initially focus on high-dimensional linear regression with random effects. Variables are denoted by and samples by

. Then:

(1)

(1)

Here, is the vector of responses,

corresponds to the random effects and

is a vector of Gaussian errors. Furthermore, X is a fixed n × p matrix:

with

.

1.2. Estimation methods

We distinguish three categories of estimation methods:

Estimation of functions of

, in particular: i)

(Golub, Heath, and Wahba Citation1979), used in ridge regression to minimize

; and ii) heritability

(Bonnet, Gassiat, and Lévy-Leduc Citation2015).

Separate estimation of

(Cule, Vineis, and De Iorio Citation2011; Cule and De Iorio Citation2012), possibly followed by plug-in estimation of

.

Joint estimation of

and

.

Below, we discuss several methods for each of these categories. They have several matrices and matrix computations in common, which we therefore introduce first.

1.3. Notation and matrix computations

Throughout the paper, we will use the following notation:(2)

(2)

Many of the estimators below require calculations on potentially very large matrices. The following two well-known equalities can highly alleviate the computational burden.

First, , and hence also

and H, can be efficiently computed by using singular value decomposition (SVD). Decompose

by SVD, and denote

. Then,

(3)

(3)

The latter requires inversion of an n × n matrix only. Second, the following efficient trace computation for matrix products applies to

(4)

(4)

2. Methods

2.1. Estimating functions of

and

and

2.1.1. Estimating λ by K-fold CV

A benchmark method that is used extensively to estimate is cross-validation. Here, we use K-fold CV, as implemented in the popular R-package glmnet (Friedman, Hastie, and Tibshirani Citation2010). Let f(i) denote the set of samples left out for testing at the same fold as sample i. Then, CV-based estimation of λ pertains to minimizing the cross-validated prediction error:

(5)

(5) where

denotes the estimate of

based on training samples

and penalty λ. Note that for leave-one-out-cross-validation (n-fold CV) the analytical solution of (5) is the PRESS statistic (Allen Citation1974).

2.1.2. Estimating λ by generalized cross validation

Generalized Cross Validation (GCV) is a rotation-invariant form of the PRESS statistic. It is more robust than the latter to (near-diagonal) hat matrices (Golub, Heath, and Wahba Citation1979). For the linear model, the criterion is (Hastie, Tibshirani, and Friedman Citation2008):

(6)

(6) where the trace of

can be computed efficiently by (4). Then,

.

2.1.3. Estimating heritability by HiLMM

Heritability is defined by . A recent method which estimates heritability directly using maximum likelihood is proposed by Bonnet, Gassiat, and Lévy-Leduc (Citation2015). Analogously to EquationEq. (12)

(12)

(12) , it is based on writing:

(7)

(7) where

and

. Now, apply an eigen-decomposition to R:

. Then, heritability is estimated by Bonnet, Gassiat, and Lévy-Leduc (Citation2015):

(8)

(8) with

and

the ith element of L and

, respectively. The authors provide rigorous consistency results for their estimator, as well as theoretical confidence bounds, also for mixed models and sparse settings.

2.2. Estimation of

The two methods below rely on an estimate , where

is estimated by (G)CV. Then

is estimated conditional on

If desired,

may then be estimated by

2.2.1. Basic estimate

A basic estimate of , and often used in practice, is given by (Hastie and Tibshirani Citation1990):

(9)

(9) which is the residual mean square error. Here, the residual effective degrees of freedom (Hastie and Tibshirani Citation1990) equals

, with H as in (2). We also considered (9) with

, as in Hellton and Hjort (Citation2018), which rendered similar, slightly inferior results.

2.2.2. PCR-based estimate

The estimator for may also be based on Principal Component Regression (PCR). PCR is based on the eigen-decomposition

. Denoting

and

, we have

. Then, Z is reduced from p columns to

principal components, a crucial step (Cule and De Iorio Citation2012). Using the reduced model,

is estimated by the residual mean square error (Cule and De Iorio Citation2012):

(10)

(10)

2.3. Joint estimation of

and

and

2.3.1. MML

An Empirical Bayes estimate of and

is obtained by maximizing the marginal likelihood (MML), also referred to as model evidence in machine learning (Murphy Citation2012). This corresponds to:

(11)

(11)

Since is simply derived from the convolution of Gaussian random variables, implying

, and

, so

(12)

(12)

This is easily maximized over and

. Note that after computing

(12) requires operations on n × n matrices only.

2.3.2. Method of moments (MoM)

An alternative to MML is to match the empirical second moments of y to their theoretical counterparts. From (12) we observe that the covariances depend on only. Hence, we obtain an estimator of

by equating the sum of

to that of the theoretical covariances,

, with

as in (12). Then, with

, an estimator for

is obtained by substituting

and equating the sum of

to the sum of theoretical variances,

:

(13)

(13)

These equations also hold for non-Gaussian error terms, which could be an advantage over MML. Moreover, no optimization over and

is required, so MoM is computationally very attractive.

3. Comparisons

For the linear random effects model (ridge regression) we study the following settings:

and

generated from model (1), independent X

or

generated from non-Gaussian distributions, independent X

and

from model (1), multicollinear X

and

from model (1), data-based X.

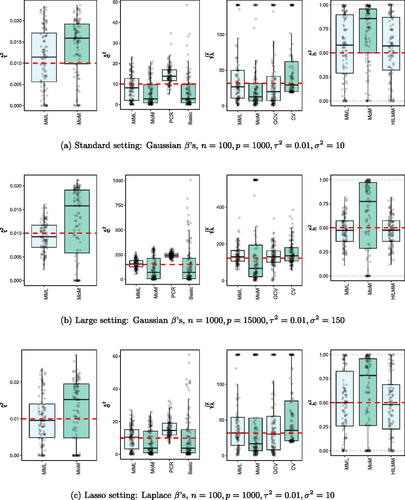

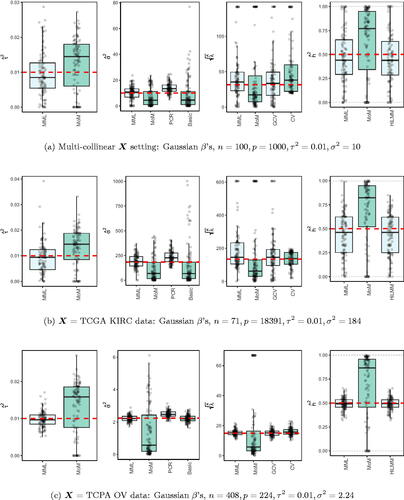

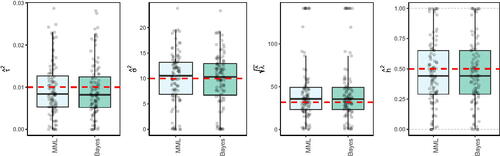

As is common for real data, the variables, i.e. the rows of X, were always standardized for the L2-penalty to have the same effect on all variables. All the results are based on 100 simulated data sets. Cross-validation is applied on 10 folds. Results from n-fold CV (leave-one-out) were generally fairly similar. We focus on the high-dimensional setting with , with excursions to larger data sets and dimensions of real data. In all visualizations below the red dotted lines indicate true values. Moreover, values larger than 20 times the true value were truncated and slightly jittered. Discussion of all results is postponed to Sec. 3.4.

3.1. Independent X

In correspondence to model (1) we sample:(14)

(14)

display the results for and for a large data setting

(which both imply

).

3.2. Departures from a normal effect size distribution

We study the robustness of the methods against (sparse) non-Gaussian effect size distribution or error distribution. In sparse settings, many variables do not have an effect. To mimic this, we simulated the β’s from a mixture distribution with a ‘spike’ and a Gaussian ‘slab’:(15)

(15)

Here, we set which implies

, as in the Gaussian βj setting. Moreover, we also considered:

where again the parameters are chosen such that

and

Apart from

all other quantities are simulated as in (14). Results are displayed for

in for the Laplace (= lasso) effect size distribution and in Supplementary Figure 3 for the spike-and-slab and uniform effect size distribution.

Moreover, we considered heavy-tailed errors by samplingwhere the scalar is chosen such that

, as in the Gaussian error setting. Apart from

, all other quantities are simulated as in (14). Results are displayed in Supplementary Figure 3c.

3.3. Multicollinear X

3.3.1. Simulated X

Next, the design matrix X is sampled using block-wise correlation. We replace the sampling of X in simulation model (14) by:(16)

(16) where

is a unit variance covariance matrix with blocks of size

with correlations ρ on the off-diagonal. shows the results for

.

3.3.2. Real data X

Finally, we consider the estimation of and

in a high- and medium-dimensional setting where X are real data, with likely collinear columns. The first data set (TCGA KIRC) concerns gene expression data of p = 18, 391 genes for n = 71 kidney tumors. The second data set (TCPA OV) holds expression data of p = 224 proteins for n = 408 ovarian tumor samples. Details on both data sets are supplied in the Supplementary Information. To generate response y we use model (14) with X given by the data. Here,

and

is set such that

show the results.

3.4. Discussion of results

3.4.1. MML vs MoM, basic and PCR

and and Supplementary Figure 3 clearly show superior performance of MML compared to MoM: both the bias and variability are much smaller for MML. Generally, MML also outperforms the Basic and PCR estimators of . The PCR estimator approaches the performance of MML for the KIRC and TCPA data (), and the Basic estimator performs reasonably well for the latter (p < n) data set. For other settings, the Basic estimator performs equally inferior as MoM. The results highlight the importance of joint estimation of

and

in high-dimensional settings, because of their delicate interplay.

3.4.2. MML vs GCV and CV

For the estimation of λ MML seems slightly superior to GCV and CV. GCV shows more estimates that deviate towards too small values of λ (e.g. and , i.e. the large p settings), whereas CV tends to render somewhat more skewed results, either to the right ( and ), or to the left (). For the spike-and-slab and uniform effects sizes and the t4 errors the right-skewness of the CV-results is more pronounced (Supplementary Figure 3), indicating that minimization of the cross-validated prediction error (5) is more vulnerable to non-Gaussian y than MML and GCV. Note that the Laplace setting () relates directly to the lasso prior with scale parameter (Tibshirani Citation1996). The results indicate that MML with Gaussian prior could be useful to find the lasso penalty, or serve as a fast initial estimate by simply setting the lasso penalty

, which follows from the variance of the lasso prior.

3.4.3. MML vs HiLMM

For the estimation of heritability h2 and and Supplementary Figure 3 show very comparable performance of MML and HiLMM. This similar performance is not surprising given that both methods are likelihood-based. Hence, while reparametrizing the likelihood (7) is certainly useful to study it as function of h2 (Bonnet, Gassiat, and Lévy-Leduc Citation2015), the reparametrization seems not beneficial for the purpose of estimating h2. In addition, unlike HiLMM, MML also returns estimates of and

. Finally, comparing we observe that both MML and HiLMM clearly benefit from the larger n and p.

4. Data example

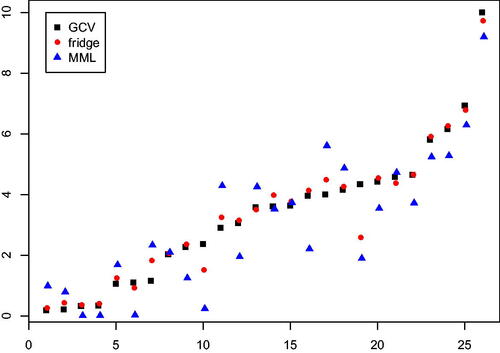

We re-analyse the weight gain data, recently discussed in Hellton and Hjort (Citation2018). Details on the data are presented there, we provide a summary. The data consists of expression profiles of n = 26 individuals with kidney transplants, where profiles consists of 28,869 genes as measured by Affymetrix Human Gene 1.0 ST arrays. The data is available in the EMBL-EBI ArrayExpress database (www.ebi.ac.uk/arrayexpress) under accession number E-GEOD-33070. It is known that kidney transplantation may lead to weight gain, and the study by Cashion et al. (Citation2013) investigates whether gene expression can be used to predict this. Such a prediction can be used to decide upon additional measures to prevent excessive weight gains. We reproduced the analysis by Hellton and Hjort (Citation2018) as much as possible, including their prior selection of 1000 genes. Details on minor discrepancies, and an alternative analysis that accounts for the gene selection are discussed in the Supplementary Material. These did not affect the comparison qualitatively.

In Hellton and Hjort (Citation2018), the authors illustrate their focused ridge (fridge) method and compare it with conventional ridge. In short, fridge estimates sample-specific ridge penalties, based on minimizing a per sample mean squared error (MSE) criterion on the level of the linear predictor . Since

is not known, it is replaced by an initial ridge estimate,

Their sample specific penalty then depends on

, and also on both

and

. The authors use GCV (6) to obtain λ, and a slight variation of (9) to estimate

. They show that fridge improves upon GCV-based ridge estimation. We wish to investigate whether i) MML estimation of

also improves the performance of GCV-based ridge regression; and ii) whether MML estimation further boosts the performance of the fridge estimator. Here, predictive performance is measured by the mean squared prediction error (MSPE) using leave-one-out cross-validation (loocv).

The estimates of MML differ markedly from those of GCV: , while

. Using

instead of

for the estimation of

substantially reduced the mean squared prediction error:

while

, a relative decrease of 12.1%. Using

, as in Hellton and Hjort (Citation2018), fridge also reduced the MSPE, but to a lesser extent:

a relative decrease of 3.5% with respect to

Application of fridge using

did not further decrease

, nor did it increase it. Possibly, the already fairly small value of

left little room for improvement. displays absolute prediction errors per sample and illustrates the improved prediction by ridge using

(and to a lesser extent by fridge) with respect to ridge using

.

5. Extensions

5.1. Extension 1: mixed effects model

A natural extension of the high-dimensional random effects model (1) is the mixed effects model:(17)

(17) where we assume that the n × m design matrix for the fixed effects,

, is of low-rank, so

, as opposed to the random effects design matrix

. Restricted maximum likelihood (REML) deals with the fixed effects by contrasting them out. For the error contrast vector

with

, the marginal likelihood for the variance components equals (see e.g. Zhang Citation2015):

(18)

(18) with

In addition to maximizing (18) as a function of

, we attempted solving the set of two estimation equations suggested by Jiang (Citation2007), but this rendered instable results inferior to maximizing (18) directly.

Alternatively, MML may be used, but it has to be adjusted to also estimate the fixed effects in the model. This implies replacing 0 in Gaussian likelihood (11) by , and optimizing (11) with respect to

parameters, where m is the number of fixed parameters. The mixed model simulation setting is as follows:

(19)

(19) where

(implying variance

for generating random effects) and

(implying variance

for generating fixed effects). Note that we focused on a fairly sparse setting for the random effects and larger prior variance of fixed effects than of random effects, which enables a stronger impact of the small number of fixed effects. shows the results of REML, MML and CV (by glmnet, using penalty factor 0 for the fixed effects) for the estimation of

and h2.

From we observe that REML indeed improves MML in terms of bias, however at the cost of increased variability. For the estimation of λ, CV is fairly competitive to REML and MML, although it renders markedly more over-penalization.

5.2. Extension 2: Bayesian linear regression

So far, we focused on classical methods. Bayesian methods may be a good alternative. We applied the standard Bayesian linear regression model, i.e. the conjugate model with i.i.d. priors , with

fixed and

endowed with a vague inverse-gamma prior (see Supplementary Material for details). For this model the maximum marginal likelihood estimator for

is still analytical (Karabatsos Citation2018), and so is the posterior mode estimate of

. shows the results in comparison to MML, i.e. maximization of (12), for the random effects case with multi-collinear X, as in Sec. 3.3.1. Results for other settings were in essence very similar.

From the results we conclude that the conjugate Bayes estimates are very close to those of MML. This is in line with the fact that both estimators maximize a marginal likelihood and the conjugate model with prior variance is known to render posterior mean estimates of

that equal the λ-penalized ridge regression estimates.

The conjugate Bayesian model is scale-invariant, because the β prior contains the error variance . Recently, it was criticized for its non-robustness against misspecification of the fixed

when estimating

(Moran, Rockova, and George Citation2018). However, in practice one needs to estimate

by either empirical Bayes (e.g. maximum marginal likelihood) or full Bayes. We repeated the simulation by Moran, Rockova, and George (Citation2018) (see Supplementary Material). The results show that the estimates of

are much better when estimating

by empirical Bayes instead of fixing it, and in fact very competitive to alternatives proposed by Moran, Rockova, and George (Citation2018).

5.3. Extension 3: generalized linear models

5.3.1. Setting

Motivated by the good results for MML in the linear setting, we wish to extend MML estimation to the high-dimensional generalized linear model (GLM) setting, where the likelihood depends on the regression parameter only via the linear predictor,

. Hence, likelihood

is defined by a density

(e.g. Poisson), where

is mapped to μ by a link function (e.g.

). As before, we a priori assume i.i.d.

, here equivalent to an L2 penalty

when estimating

by penalized likelihood. In Heisterkam, van Houwelingen, and Downs (Citation1999) an iterative algorithm to estimate λ is derived which alternates estimation of

by maximization w.r.t. λ, requiring the computation of the trace of a Hessian of a

matrix. Here, the estimation of

itself is much slower than in the linear case, because it is not analytic and requires iterative weighted least squares approximation. Below we show how to substantially alleviate the computational burden in the

setting by re-parameterizing the marginal likelihood implying computations in

instead of

.

5.3.2. Method

We have for the marginal likelihood:(20)

(20) where

denotes the normal density with mean μ and variance

. Now a crucial observation is that for GLM:

(21)

(21) because the likelihood depends on

only via the linear predictor

. Here,

is the implied n-dimensional prior distribution of

. This is a multivariate normal:

. Therefore, we have:

(22)

(22)

Hence, the p-dimensional integral may be replaced by an n-dimensional one, with obvious computational advantages when . Moreover, the use of (22) allows applying implemented Laplace approximations, which tend to be more accurate in lower dimensions. The Laplace approximation requires

. We emphasize that this does generally not equal

where

: the maximum of the commonly used L2 penalized (log)-likelihood. However,

can be computed by noting that

(23)

(23)

In other words, this is the penalized log-likelihood when regressing Y on the identity design matrix using an L2 smoothing penalty matrix

. The latter fits conveniently into the set-up of Wood (Citation2011), as implemented in the R-package mgcv. This also facilitates MML estimation of λ by maximizing

, with

as in (23). If the columns of X are standardized (common in high-dimensional studies),

has rank n – 1 instead of n, implying that

does not exist and should be replaced by a pseudo-inverse

, such as the Moore-Penrose inverse.

In a full Bayesian linear model setting, dimension reduction is also discussed by Bernardo et al. (Citation2003), where is substituted by a n-dimensional factor analytic representation, which requires an SVD of X. In addition, there it is not used for hyper-parameter estimation by marginal likelihood, but instead for specifying (hierarchical) priors for the factors.

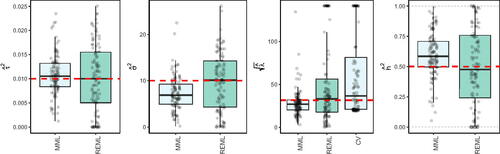

5.3.3. Results

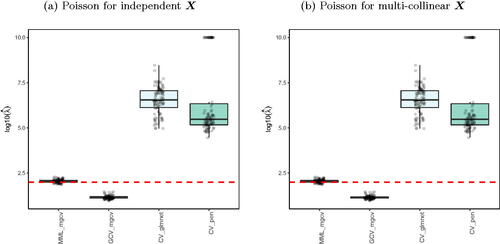

R packages like glmnet (Friedman, Hastie, and Tibshirani Citation2010) and penalized (Goeman Citation2010) estimate λ by cross-validation, and also mgcv allows, next to the MML estimation, (generalized) CV estimation (Wood Citation2011). show the results for Poisson ridge regression, with generated as in (14), and X generated as in (14) and (16), which denote the independent X and multi-collinear X setting, respectively.

clearly shows the superior performance of MML based on (22) over CV. In particular, glmnet and penalized render strongly upward biased values. The mgcv GCV values are still inferior to MML based ones, but much better than the latter two, which may be due to the different regression estimators used (Laplace approximation versus iterative weighted least squares). We should stress that CV does not target for the estimation of λ as such, but merely for minimizing prediction error. Nevertheless, the difference is remarkably larger than in the corresponding linear case (see and ).

The Supplementary Material shows the results for Binomial ridge regression. While the differences in performance are less dramatic than for the Poisson setting, MML still renders much better estimates of λ than CV-based approaches.

6. Computational aspects and software

All methods and simulations presented here are implemented in a few wrapper R scripts: one for the linear random effects model (which includes the conjugate Bayes estimator), one for the linear mixed effects model, and one for Poisson and Binomial ridge regression. Parallel computations are supported. The scripts allow exact reproduction of the results in this manuscript as well as comparisons for other simulation or user-specific real data X cases. In addition, a script is supplied to produce the box-plots as in this manuscript.

HiLMM, PCR and CV implementations are provided by the R-packages HiLMM, v1.1 (Bonnet, Gassiat, and Lévy-Leduc Citation2015), ridge, v1.8-16 (Cule and De Iorio Citation2012) (code slightly adapted for computational efficiency) and glmnet, v2.0-16 (Friedman, Hastie, and Tibshirani Citation2010). The methods MML, REML, Bayes, MoM, Basic and GCV were implemented by us for the linear random and mixed effects models. For Poisson and Binomial ridge regression we applied mgcv, v1.8-16 (Wood Citation2011) after our re-parametrization (22) to obtain MML and GCV results, while for CV glmnet and penalized, v0.9-50 (Goeman Citation2010) were applied. For all methods that required optimization the R routine optim was used, with default settings. CV was based on 10 folds.

Computing times of the various methods largely depend on n and p, much less so on the exact simulation setting. These are displayed for n = 100, 500 and in , based on computations with one CPU of an Intel®Xeon®CPUE5 - 2660v3@2.60 GHz server. For Poisson ridge regression, we only report the computing times of MML and GCV, because, as reported in , the performance of CV-based methods was very inferior.

Table 1. Computing times for hyper-parameter estimation for linear and Poisson ridge regression.

From we conclude that MML is also computationally very attractive. Its efficiency is explained by the fact that, unlike many of other methods, it does not require an SVD or other matrix decomposition of X. Moreover, the only computation that involves dimension p is the product .

7. Discussion

We compared several estimators in a large variety of high-dimensional settings. The results showed that plain maximum marginal likelihood works well in many settings. MML is generally superior to methods that aim to separately estimate (9, 10). Apparently, the estimates of

and

are so intrinsically linked in the high-dimensional setting that separate estimation is sub-optimal. The moment estimator (MoM) is generally not competitive to MML. It may, however, be useful in large systems with multiple hyper-parameters to estimate relative penalties, which are less sensitive to scaling issues than the global penalty parameter (Van de Wiel et al. Citation2016). MoM may also be a useful initial estimator for more complex estimators that are based on optimization, such as MML.

Possibly somewhat surprising is the good performance of MML for estimating λ and h2, as these are functions of and

. For the estimation of λ it is generally better than or competitive to (generalized) CV, an observation also made for the low-dimensional setting (Wood Citation2011). The inferior performance of the basic estimator of

(9) implies that alternative estimators of λ that use

as a plug-in are unlikely to perform well in high-dimensional settings. Such estimators, including the original one by Hoerl and Kennard (Citation1970), are compared by Muniz and Kibria (Citation2009); Kibria and Banik (Citation2016), who show that some do perform well in the low-dimensional setting. For Poisson ridge regression, similar estimators of λ are available (Månsson and Shukur Citation2011), but these rely on an initial maximum likelihood estimator of

, and hence do not apply to the high-dimensional setting. For estimating h2 it should be noticed that HiLMM (Bonnet, Gassiat, and Lévy-Leduc Citation2015) aims to compute a confidence interval for h2 as well. For that purpose their direct estimator (8) is likely more useful than MML on the pair

. We also used Esther (Bonnet et al. Citation2018), which precedes HiLMM by sure independence screening. It did not improve HiLMM in our (semi-)sparse settings, and requires manual steps. However, it likely improves HiLMM results in very sparse settings (Bonnet et al. Citation2018).

For mixed effect models with a small number of fixed effects, MML compares fairly well to REML, with a larger bias, but smaller variance. Probably the potential advantage of contrasting out the fixed effects is small when the number of random effects is large. REML may have a larger advantage in very sparse settings (Jiang et al. Citation2016) or when the number of fixed effects is large with respect to n. Estimates from the conjugate Bayes model are very similar to those by MML. We show that estimating along with

highly improves the

estimates presented by Moran, Rockova, and George (Citation2018), where a fixed value of

is used. In the case of many variance components or multiple similar regression equations, Bayesian extensions that shrink the estimates by a common prior are appealing, in particular in combination with efficient posterior approximations such as variational Bayes (Leday et al. Citation2017).

Our model (1) implies a dense setting, but we have demonstrated that the MML and REML estimators of and

are fairly robust against moderate sparsity, which corroborates the results by Jiang et al. (Citation2016). Nevertheless, true sparse models may be preferable when variable selection is desired, which depends on accurate estimation of

. On the other hand, post-hoc selection procedures can be rather competitive (Bondell and Reich Citation2012). Moreover, the sparsity assumption is questionable for several applications. For example in genetics, it was suggested that many complex traits (such as height or cholesterol levels) are not even polygenic, but instead ‘omnigenic’ (Boyle, Li, and Pritchard Citation2017).

The extension of MML to high-dimensional GLM settings (22) is promising given its computational efficiency and performance for Poisson and Binomial regression. A special case of the latter, logistic regression, requires further research, because the Laplace approximations of the marginal likelihood are less accurate here (Wood Citation2011). Extension to survival is a promising avenue, because Cox regression is directly linked to Poisson regression (Cai and Betensky Citation2003). Alternatively, parametric survival models may be pursued. To what extent the estimates of hyper-parameters impact predictions depends on the sensitivity of the likelihood to these parameters. For the linear setting, a re-analysis of the weight-gain data showed that predictions based on improved those based on

. Karabatsos (Citation2018) shows that MML estimation also performs well compared to GCV for linear power ridge regression, which extends ridge regression by multiplying λ by

.

The MML estimator can be extended to estimation of multiple variance components or penalty parameters, which was addressed by iterative likelihood minorization (Zhou et al. Citation2015) and by parameter-based moment estimation (Van de Wiel et al. Citation2016). The latter extends to non-Gaussian response such as survival or binary. Further comparison of these methods with multi-parameter MML, both in terms of performance and computational efficiency, is left for future research. Finally, in particular in genetics applications, extensions of estimation of variance components by MML to non-independent individuals can be implemented by use of a well-structured between-individual covariance matrix (Kang et al. Citation2008).

Although our simulations cover a fairly broad spectrum of settings, many other variations could be of interest. We therefore supply fully annotated R scripts https://github.com/markvdwiel/Hyperpar that allow i) comparison of all algorithms discussed here, also for one’s ‘own’ real covariate set X; and ii) reproduction of all results presented here.

lssp_a_1646760_sm0546.pdf

Download PDF (597.7 KB)Acknowledgment

Gwenaël Leday was supported by the Medical Research Council, grant number MR/M004421. We thank Jiming Jiang and Can Yang for their correspondence, input and software for the MM algorithm. In addition, we thank Kristoffer Hellton for providing the fridge software and data. Finally, Iuliana Ciocǎnea-Teodorescu is acknowledged for preparing the TCGA KIRC data.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Allen, D. 1974. The relationship between variable selection and data agumentation and a method for prediction. Technometrics 16 (1):125–7. doi:https://doi.org/10.1080/00401706.1974.10489157.

- Bernardo, J. M., M. J. Bayarri, J. O. Berger, and A. P. Dawid. 2003. Bayesian factor regression models in the “large p, small n” paradigm. Bayesian Statistics 7:733–42.

- Bondell, H., and B. Reich. 2012. Consistent high-dimensional Bayesian variable selection via penalized credible regions. Journal of the American Statistical Association 107 (500):1610–24. doi:https://doi.org/10.1080/01621459.2012.716344.

- Bonnet, A., E. Gassiat, and C. Lévy-Leduc. 2015. Heritability estimation in high dimensional sparse linear mixed models. Electronic Journal of Statistics 9 (2):2099–129. doi:https://doi.org/10.1214/15-EJS1069.

- Bonnet, A., C. Lévy-Leduc, E. Gassiat, R. Toro, and T. Bourgeron. 2018. Improving heritability estimation by a variable selection approach in sparse high dimensional linear mixed models. Journal of the Royal Statistical Society: Series C (Applied Statistics) 67 (4):813–39. doi:https://doi.org/10.1111/rssc.12261.

- Boyle, E. A., Y. I. Li, and J. K. Pritchard. 2017. An expanded view of complex traits: From polygenic to omnigenic. Cell 169 (7):1177–86. doi:https://doi.org/10.1016/j.cell.2017.05.038.

- Cai, T., and R. Betensky. 2003. Hazard regression for interval-censored data with penalized spline. Biometrics 59 (3):570–9. doi:https://doi.org/10.1111/1541-0420.00067.

- Cashion, A., A. Stanfill, F. Thomas, L. Xu, T. Sutter, J. Eason, M. Ensell, and R. Homayouni. 2013. Expression levels of obesity-related genes are associated with weight change in kidney transplant recipients. PLoS One 8 (3):e59962. doi:https://doi.org/10.1371/journal.pone.0059962.

- Cule, E., and M. De Iorio. 2012. A semi-automatic method to guide the choice of ridge parameter in ridge regression. arXiv preprint, arXiv:1205.0686.

- Cule, E., P. Vineis, and M. De Iorio. 2011. Significance testing in ridge regression for genetic data. BMC Bioinformatics 12:372. doi:https://doi.org/10.1186/1471-2105-12-372.

- Friedman, J., T. Hastie, and R. Tibshirani. 2010. Regularization paths for generalized linear models via coordinate descent. Journal of Statistical Software 33 (1):1–22.

- Goeman, J. 2010. L1 penalized estimation in the Cox proportional hazards model. Biometrical Journal 52 (1):70–84. doi:https://doi.org/10.1002/bimj.200900028.

- Golub, G. H., M. Heath, and G. Wahba. 1979. Generalized cross-validation as a method for choosing a good ridge parameter. Technometrics 21 (2):215–23. doi:https://doi.org/10.1080/00401706.1979.10489751.

- Hastie, T., and R. Tibshirani. 1990. Generalized additive models. Boca Raton, FL: CRC Press.

- Hastie, T., R. Tibshirani, and J. H. Friedman. 2008. The elements of statistical learning. 2nd ed. New York, NY: Springer.

- Heisterkam, S. H., J. C. van Houwelingen, and A. M. Downs. 1999. Empirical Bayesian estimators for a Poisson process propagated in time. Biometrical Journal 41 (4):385–400. doi:https://doi.org/10.1002/(SICI)1521-4036(199907)41:4<385::AID-BIMJ385>3.0.CO;2-Z.

- Hellton, K. H., and N. L. Hjort. 2018. Fridge: Focused fine-tuning of ridge regression for personalized predictions. Statistics in Medicine 37 (8):1290–303. doi:https://doi.org/10.1002/sim.7576.

- Hoerl, A. E., and R. W. Kennard. 1970. Ridge regression: Biased estimation for nonorthogonal problems. Technometrics 12 (1):55–67. doi:https://doi.org/10.1080/00401706.1970.10488634.

- Jiang, J. 2007. Linear and generalized linear mixed models and their applications. New York, NY: Springer Science & Business Media.

- Jiang, J., C. Li, D. Paul, C. Yang, and H. Zhao. 2016. On high-dimensional misspecified mixed model analysis in genome-wide association study. The Annals of Statistics 44 (5):2127–60. doi:https://doi.org/10.1214/15-AOS1421.

- Kang, H. M., N. A. Zaitlen, C. M. Wade, A. Kirby, D. Heckerman, M. J. Daly, and E. Eskin. 2008. Efficient control of population structure in model organism association mapping. Genetics 178 (3):1709–23. doi:https://doi.org/10.1534/genetics.107.080101.

- Karabatsos, G. 2018. Marginal maximum likelihood estimation methods for the tuning parameters of ridge, power ridge, and generalized ridge regression. Communications in Statistics - Simulation and Computation 47 (6):1632–51. doi:https://doi.org/10.1080/03610918.2017.1321119.

- Kibria, B., and S. Banik. 2016. Some ridge regression estimators and their performances. Journal of Modern Applied Statistical Methods 15:12.

- Leday, G. G. R., M. C. M. de Gunst, G. B. Kpogbezan, A. W. van der Vaart, W. N. van Wieringen, and M. A. van de Wiel. 2017. Gene network reconstruction using global-local shrinkage priors. The Annals of Applied Statistics 11 (1):41–68. doi:https://doi.org/10.1214/16-AOAS990.

- Månsson, K., and G. Shukur. 2011. A Poisson ridge regression estimator. Economic Modelling 28 (4):1475–81. doi:https://doi.org/10.1016/j.econmod.2011.02.030.

- Moran, G. E., V. Rockova, and E. I. George. 2018. On variance estimation for Bayesian variable selection. arXiv preprint, arXiv:1801.03019.

- Muniz, G., and B. M. G. Kibria. 2009. On some ridge regression estimators: An empirical comparisons. Communications in Statistics - Simulation and Computation 38 (3):621–30. doi:https://doi.org/10.1080/03610910802592838.

- Murphy, K. 2012. Machine learning, a probabilistic perspective. Cambridge, MA: The MIT Press.

- Tibshirani, R. 1996. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society. Series B 58 (1):267–88.

- van de Wiel, M. A., T. G. Lien, W. Verlaat, W. N. van Wieringen, and S. M. Wilting. 2016. Better prediction by use of co-data: Adaptive group-regularized ridge regression. Statistics in Medicine 35 (3):368–81. doi:https://doi.org/10.1002/sim.6732.

- Visscher, P. M., W. G. Hill, and N. R. Wray. 2008. Heritability in the genomics era – Concepts and misconceptions. Nature Reviews Genetics 9 (4):255–66. doi:https://doi.org/10.1038/nrg2322.

- Wood, S. N. 2011. Fast stable restricted maximum likelihood and marginal likelihood estimation of semiparametric generalized linear models. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 73 (1):3–36. doi:https://doi.org/10.1111/j.1467-9868.2010.00749.x.

- Zhang, X. 2015. A tutorial on restricted maximum likelihood estimation in linear regression and linear mixed-effects model. http://statdb1.uos.ac.kr/teaching/multi-grad/ReML.pdf.

- Zhou, H., L. Hu, J. Zhou, and K. Lange. 2015. MM algorithms for variance components models. arXiv preprint, arXiv:1509.07426.