?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

We study two Bayesian (Reference Intrinsic and Jeffreys prior), two frequentist (MLE and PWM) approaches and the nonparametric Hill estimator for the Pareto and related distributions. Three of these approaches are compared in a simulation study and all four to investigate how much equity risk capital banks subject to Basel II banking regulations must hold. The Reference Intrinsic approach, which is invariant under one-to-one transformations of the data and parameter, performs better when fitting a generalized Pareto distribution to data simulated from a Pareto distribution and is competitive in the case study on equity capital requirements.

1. The Pareto and related distributions

The generalized Pareto distribution (GPD) is widely used in engineering, environmental science and finance to model low probability events. Typically, the GPD is used to estimate extreme percentiles such as the 99th percentile for a specific event. This might be used for setting the height of flood wall defenses or estimating how much capital banks might hold for specific market risks.

We say that the positive quantity x follows a GPD if it has probability density function (PDF)

(1)

(1)

with

The support of the distribution

if

while

if

thus, κ is a shape and σ is scale parameter. The mean,

exist iff

and

respectively.

The GPD is related to several distributions. It clearly has an exponential distribution with mean σ as a special case when κ = 0. Further, follows a Pareto distribution,

if

where

(2)

(2)

and an inverted-Pareto,

if

where

(3)

(3)

In the latter case, distributes uniformly on

follows a Beta distribution,

and

follows a location (or shifted) Exponential distribution,

where

If the shape is fixed,

follows an Exponential distribution with rate α,

Similarly, if y follows a

then

follows a

(Diebolt et al. Citation2005). If a sample,

from the Pareto distribution is available,

is sufficient, with

and

No such sufficient statistics exist for the GPD.

One key feature of this family of distributions is their so-called lack of memory, a property at the core of their prominence in extreme value theory, related to peaks-over-threshold theory described in Section 1.1. Specifically, let and consider

it is immediate to prove that

with

hence

which is commonly used to graphically check model fit (Davidson and Smith Citation1990). It is straightforward to check that if x follows a

then

provided

and thus, a similar graphical model fit check can be carried out (Akhundjanov and Chamberlain Citation2019).

In this article, we consider the Bayesian Reference Intrinsic (BRI) approach (Bernardo and Juárez Citation2003; Bernardo and Rueda Citation2002; Bernardo Citation2007) for calibrating this family of distributions, and compare it with four alternative approaches, maximum likelihood (ML), probability weighted moments (PWM), the Hill estimator and a Bayesian approach using a Jeffreys prior, implemented using Markov chain Monte Carlo (MCMC). A simulation study is carried out comparing the average mean square error of three of the four approaches when calibrated to synthetic data from a Pareto distribution. These methods are then applied to some equity return data in a case study and the results compared.

1.1. The GPD and extremes

The GPD was first introduced by Pickands (Citation1975) in the extreme value framework as a distribution of sample excesses over a sufficiently high threshold (de Zea Bermudez and Kotz Citation2010a, Citation2010b). Two key theories of the extreme value framework the GPD arose from are summarized below – we use the second theory in the case study in Section 5.

1.1.1. Extreme value theory 1

Let Xn be a sequence of iid random variables. If these are divided into blocks of size k, and

(i.e., the largest value in each block), then the Mj follow a generalized extreme value (GEV) distribution, with cumulative distribution function (CDF)

with

the scale parameter and κ the shape parameter (McNeil, Frey, and Embrechts Citation2005, 265). Thus, the distribution of the maxima of blocks of data from almost any probability distribution follows a GEV with some shape parameter κ.

1.1.2. Extreme value theory 2 (Picklands-Balkema-de Haan)

Let X > 0 be a random quantity with CDF F. The excess over threshold u has CDF

for

where

is the upper bound of the support of F.

There is a positive measurable function B(u) such that

where κ is the shape parameter of the GPD and B(u) is the scale parameter, which is a function of the threshold (McNeil, Frey, and Embrechts Citation2005, 277). This means that while the scale parameter changes as the threshold changes, the shape parameter stays the same. The distributions for which the block maxima converge to a GEV distribution constitute a set of distributions for which the excess distribution converges to the GPD as the threshold is raised. The shape parameter of the GPD of the excesses is the same as the shape parameter of the GEV of block maxima. This means that the excess above a threshold can effectively be modeled by a GPD (almost) regardless of the distribution of the full data set as long as the threshold is high enough. This feature is used in a case study in Section 5.2, where the GPD is calibrated to just the tail of the data.

Characterizing the GPD and deriving probabilistic and statistical results are extensively addressed in the literature (e.g., see Galambos Citation1987; Leadbetter, Lindgren, and Rootzen Citation1983; Beirlant et al. Citation2005; Beirlant, Teugels, and Vynckier Citation1996; Embrechts, Klüppelberg, and Mikosch Citation1997; Coles Citation2001; Kotz and Nadarajah Citation2000; Castillo and Hadi Citation1997; de Haan and Ferreira Citation2006, and references therein). Several approaches have been proposed to calibrate the GPD mainly focusing on the MLE, PWM, or Method of Moments (MoM) (see e.g., de Zea Bermudez and Kotz Citation2010a, Citation2010b, and references therein). More recently, Bayesian approaches have been investigated (see e.g., Lima et al. Citation2016; Ragulina and Reitan Citation2017; Juárez Citation2005; Tancredi, Anderson, and O’Hagan Citation2006; de Zea Bermudez and Turkman Citation2003). Gilleland, Ribatet, and Stephenson (Citation2013) review available software for estimation. We refer the reader to de Zea Bermudez and Kotz (Citation2010a, Citation2010b), which include summary tables of papers describing how the GPD is calibrated to a wide range of data sets, reproduced in in Appendix A to show some of the extensive literature covering calibration of the GPD.

1.2. The Pareto principle: The Lorenz curve and Gini index

The “80-20 rule” or Pareto principle has reached popular culture through books such as Koch (Citation2007). It is a way of more easily explaining the calibration of a Pareto or GPD. An example of this is the 80–20 rule identified by V. Pareto in 1897 that 20% of the population had 80% of the wealth (Persky Citation1992). It is possible to use the calibration of the GPD to identify the Pareto principle parameters through the Lorenz curve and Gini index. The Lorenz curve,

where F(x) is the CDF of the random quantity x and μ its expected value, describes precisely this relationship. In case the distribution of the size is homogenous, i.e., “u% of the population accumulates u% of the income,” then L(u) = u. This motivates some measures of inequality, such as the Gini index,

which is the relative area under L(u), with respect to the straight line: the closer G to 0(1), the more(less) egalitarian the distribution. For the GPD,

depend only on the shape parameter; thus, inference on L and/or G is tantamount to inference on this parameter, which is explored in Section 5.

2. Intrinsic estimation

The Bayesian reference-intrinsic (BRI) approach (Bernardo and Juárez Citation2003; Bernardo and Rueda Citation2002) provides a non subjective Bayesian alternative to point estimation, based on the reference prior (Berger, Bernardo, and Sun Citation2009, Citation2015) and an intrinsic loss function (Robert Citation1996). Formally, the probability model is assumed to describe the probabilistic behavior of the observables x, and suppose that a point estimator,

of the parameter θ is required. From a Bayesian decision standpoint, the optimal estimate,

minimizes the expected loss,

where

is a loss function measuring the consequences of estimating θ by θe and

is the decision-maker posterior distribution. The BRI approach argues that in fact one is interested in using

as a proxy of

and thus, the loss function should reflect this. It advocates the use of the Kullback–Leibler (KL) divergence as an appropriate measure of discrepancy between two distributions. The KL (or directed logarithmic) divergence,

is non negative and nought if and only if

and it is invariant under one-to-one transformations of either x or θ. However, the KL divergence is not symmetric and it diverges if the support of

is a strict subset of the support of

To simultaneously address these two unwelcome features Bernardo and Juárez (Citation2003) propose to use the intrinsic discrepancy,

(4)

(4)

a symmetrized version of the KL divergence. This is taken as the quantity of interest, for which a reference posterior is derived. The intrinsic estimator can then be obtained.

Definition 1

(B RI estimator). Let be a family of probability models for some observable data x, where the sample space,

may possibly depend on the parameter value. The BRI estimator,

where

is the intrinsic expected loss and

is the reference posterior for the intrinsic discrepancy,

as defined in (Equation4

(4)

(4) ).

Within the same methodology one can also obtain interval estimates, i.e., credible regions.

Definition 2

(B RI interval). Let and

be as in Definition 1. A BRI interval,

of probability

is a subset of the parameter space Θ such that

for all and

BRI credible regions are typically unique and, since they are based in the invariant intrinsic discrepancy loss, they are also invariant under one-to-one transformations (Bernardo Citation2007).

2.1. BRI estimation for the Pareto family of distributions

In Section 1.1, we highlighted the relationship between the GPD and the Pareto and Inverse Pareto distributions. The main characteristic we will exploit here is that the GPD shape parameter remains invariant to any of those transformations, while the GPD scale parameter is linearly transformed. Given that the support of the inverted Pareto is bounded, it is easier to calibrate directly than the Pareto or the GPD. For this reason, we choose to work with this parameterization and apply the results to the GPD parameters by the above simple transformations.

Let be a random sample from an

using (Equation3

(3)

(3) ), the likelihood is

are jointly sufficient, with

Moreover, given

the MLE,

(5)

(5)

are conditionally independent, with sampling distributions

and

where the latter is an inverted Gamma distribution (Malik Citation1970; Rytgaard Citation1990).

The conjugate prior is a Pareto-Gamma distribution,

(6)

(6)

which yields a Gamma marginal posterior

with

and

For any choice of prior parameters, the posterior is asymptotically Gaussian and will converge to a mass point at

a.s. as

From (4), the intrinsic discrepancy for the inverted Pareto distribution, when the parameter of interest is the shape, can be written as

(7)

(7)

with

which does not depend on σ. Following Juárez (Citation2005), given that (7) is a (piecewise) one-to-one function of κ, we can use the reference prior

a liming case of (Equation6

(6)

(6) ), which yields the marginal posterior

for n > 1. The intrinsic expected loss,

is defined for all n > 2 and can be calculated numerically. Due to the asymptotic Gaussianity of the posterior, the approximations

and

work well even for moderate sample sizes.

The intrinsic discrepancy when the scale is the parameter of interest is

where

The reference prior is

or in terms of the original parameterization,

which is not a limiting case of the Pareto-Gamma family.

In this case, the loss function depends on both parameters and thus

(8)

(8)

with

(9)

(9)

and

The corresponding Bayes rule can be calculated numerically. An analytical approximation, which works well even for moderate sample sizes, can be obtained by substituting the shape parameter with a consistent estimator in (Equation9(9)

(9) ), carrying out the one-dimensional integration and then solving (Equation8

(8)

(8) ). Using the MLE,

yields

The Uniform and location-Exponential models are particular cases of the inverted Pareto (see Section 1). For the former, we have and in this case, the intrinsic discrepancy is

and the corresponding reference prior is

which yields a

posterior, with

the MLE. The expected intrinsic discrepancy has a simple analytical form,

where

It is immediate to prove that the BRI estimator,

is the median of the posterior, highlighting its invariance under one-to-one transformations. Indeed, the BRI estimator of the parameter in the location-Exponential model,

where

i.e., the distribution obtained by letting

is

3. Alternative approaches

We briefly describe three alternative frequentist approaches: maximum-likelihood estimate (MLE), the Hill estimator, and probability weighted moments (PWM). We also describe a Bayesian approach that uses a Jeffreys prior, which yields a proper posterior for any sample size. The posterior has no analytical form, so we implement an MCMC strategy to sample from it.

3.1. Jeffreys prior

From a Bayesian perspective, Diebolt et al. (Citation2005) propose a quasi-conjugate prior for the GPD, avoiding prior elicitation by setting its parameters using empirical Bayes. The posterior is then explored through Gibbs sampling. Vilar-Zanón and Lozano-Colomer (Citation2007) propose a generalized inverse Gaussian conjugate prior and discuss whether to use a non informative parameter setting or use prior knowledge on the first two moments of the shape parameter for elicitation.

Here, we use the independent Jeffreys prior,

which, despite being improper, yields a proper posterior for any sample size (Castellanos and Cabras Citation2007). The posterior,

is not analytical, so we device an MCMC scheme to carry out inference. Our strategy is a Metropolis-within-Gibbs algorithm with full conditionals

To set the proposal distributions, one must bear in mind the parameter space depends on the sample space; specifically, if

Hence, for the shape parameter, κ, we propose from a truncated Gaussian with mode at the MLE, and upper bound at

where σc is the current state of the shape; we use the free parameter to control the acceptance rate. For the scale, σ, if the current state of the shape,

we use a Gamma proposal with mode at σc and use the free parameter to control the acceptance rate; otherwise, we propose from a truncated Gaussian with lower bound at

mode at σc, and use the free parameter to control the acceptance rate. We implement the sampler in R (R Core Team Citation2021), the code is available under request from the corresponding author.

3.2. Maximum likelihood

Due to its invariance under bijective transformations, the MLE for the Pareto parameterization can be obtained from (Equation5(5)

(5) ). For a sample

from a

the log-likelihood can be expressed as

The MLE exist only for and is typically found using numerical methods. If

its sampling distribution is asymptotically Gaussian with covariance matrix (de Zea Bermudez and Kotz Citation2010a),

3.3. Probability weighted moments

Probability weighted moments (PWM), or L-moments,

characterize the distribution function F of a random quantity X and are exploited as a robust alternative to the method of moments for point estimation (Greenwood et al. Citation1979). Particularly for the GPD, Diebolt, Guillou, and Worms (Citation2003), suggest using

from which

The corresponding PWM estimators are obtained by substituting μ0 and μ1 by the estimators with

the j-th order statistic. Various expressions are available for

in the sequel we use

with

and c = 0 as in de Zea Bermudez and Kotz (Citation2010a). For large sample sizes and if

the PWM estimators are asymptotically Gaussian (Diebolt, Guillou, and Worms Citation2003) with covariance matrix

3.4. Hill estimator

The Hill estimator (Hill Citation1975) of the shape parameter κ,

where

is the l-th order statistic and is a nonparametric alternative based on the shape of the complementary CDF of the Pareto family of distributions. It is consistent and asymptotically Gaussian (Beirlant et al. Citation2005) with variance

for

Due to its simplicity, it is still often used in practice. However, it is not invariant to linear transformations of the data and can yield very different estimates, depending on the choice of k (Charras-Garrido and Lezaud Citation2013).

4. Synthetic data and comparison

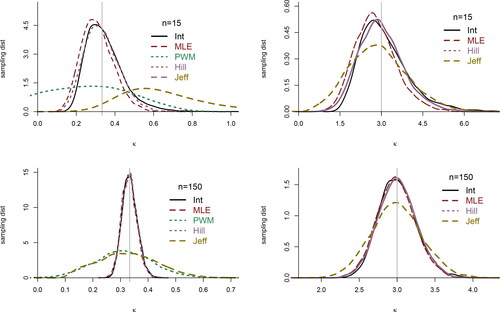

We carry out a simulation study to compare the calibration efficiency of the BRI, the ML and PWM estimators and present results on the shape parameter only for brevity. As the MHA is itself a time-consuming simulation process, it has been left out of this comparison. We generate 10,000 samples of size n = 15, 50, 100, from a Pareto distribution, – which is linked to the GPD as described in Section 1 – with σ = 4 and

The parameters are calibrated using the BRI, ML, and PWM approaches, their sampling distribution are estimated, and their bias and mean-squared error (MSE) used as efficiency measures, illustrated in .

Table 1. Comparing estimators of the shape parameter. First four columns show the relative MSE to the MLE and the rest the bias of the ML, PWM, BRI, Jeffreys, and Hill estimators of the shape parameter, κ, from 5,000 samples of sizes n = 15, 50, 150 and with scale σ = 4 in all cases.

Given that PWM works well only if (at least) the first two moments of the distribution exist it is not striking to confirm its poor performance for values of (Hosking, Wallis, and Wood Citation1985), regardless of the sample size. In contrast, both MLE and BRI estimators yield relatively low bias and MSE, even for moderate sample sizes, with their sampling distributions becoming increasingly similar as the sample size grows (). Both estimators are invariant under one-to-one transformations, while PWM is not; further, BRI credible intervals are invariant, a feature we will exploit in the sequel.

Figure 1. Sampling distributions of the BRI, ML, PWM, and Jeffreys posterior median estimators of the shape parameter, from 5,000 simulations of sample sizes n = 15 (top), and n = 150 (bottom). Right panels display the case κ = 3 and left when (marked by the vertical line). The right panels do not display PWM due to its poor performance. Note the MLE, BRI, and Hill estimators have relatively similar sampling distributions and are almost indistinguishable for large n.

5. Bank equity capital requirements

We apply the calibration approaches described above to equity risk capital that banks are required to hold in the banking Basel II regulations. In the Basel II regulations in pghs.700 and 718 (LXXVI)Footnote1 a value-at-risk () approach is required for a 99th percentile one-sided confidence interval on 10-day equity returns. This means a bank will estimate what it thinks the 99th percentile 10-day equity returns can fall by (e.g., it might estimate this as a 10% fall in its equities market value) and it is then required to hold at least this amount as a monetary capital amount on its balance sheet to demonstrate the bank can withstand a 99th percentile fall in the value of its equities.

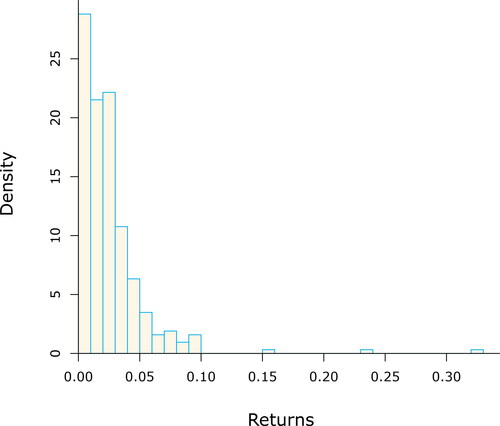

The regulations mention a number of approaches are possible to calculate this VAR and in this case study, a GPD is fitted to an historic time series of an equity index of 10-day returns. The equity index used here is the FTSE 100 index taken from yahoo finance 2/4/1984—26/7/2013Footnote2.

The raw data have been pre-processed to convert the daily index values into 10-day returns, yt (i.e., the percentage change in value of the index over non overlapping 10-day periods). A common question is either to use simple returns, where xt is the index value at time t, or log-returns,

While the difference between these two definitions is not crucial for a 10-day period, the log returns have been used in this case study. This is because the left tail is of interest and is unbounded for the log return, but bounded at −100% for the simple return. An unbounded domain is potentially more appropriate for the GPD calibration. When the 99th percentile has been calibrated in log returns, this needs to be converted back to a simple return for the VAR value. For example, if an −11% fall in equity values is the log-return 99th percentile, this is a

simple return fall in equity market value.

5.1. Exploratory data analysis

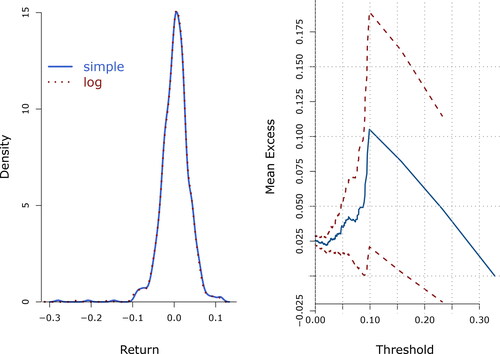

We explore some basic features of the data, presented for both simple and log returns on the left panel of .

Figure 2. On the left panel, smoothed histograms of simple and log 10-day returns of the FTSE100 index from 2/4/84–26/7/13. Both distributions are almost identical, left skew with heavy tails, and present a small secondary mode on the left hand tail. On the right panel, mean excess function of the negative 10-day returns of the FTS100 (solid) with 95% confidence intervals (segmented). The positive slope suggests a heavy tail, amenable to a power distribution.

We would like to highlight that the distribution of the returns is fat tailed – it has a higher frequency of extreme events compared with a Gaussian distribution – as measured by its kurtosis (that of a Gaussian distribution is 3). It is also negatively skewed – a higher proportion on events are on the left-hand side of the mean, which emphasizes the underlying financial risks. Plotting the Mean Excess (ME) function, is often used to explore whether the data have power tails (Ghosh and Resnick Citation2010). A characteristic of a fat-tailed GPD type distribution with negative shape parameter is an increasing straight line, while a decreasing line indicates thin tails; a horizontal line suggests exponential tails. The right panel in shows the mean excess plot of the absolute value of the negative log returns, which displays a positive slope (up to losses of about 10%), suggesting a power distribution is appropriate for this data. Combining this features with suggest a GPD with a negative shape parameter may be appropriate to model the negative returns of this dataset.

5.2. Peaks over threshold

We now apply the calibration approaches described in Sections 2.1 and 3 to the left tail of the 10-day FTSE100 log returns, using the peaks over threshold approach (McNeil, Frey, and Embrechts Citation2005, with theory as in Section 1.1.2), which allows the focus to be on the percentiles of interest.

We do not discuss how to set the threshold, but refer the reader to de Zea Bermudez and Kotz (Citation2010b). McNeil, Frey, and Embrechts (Citation2005, 280) suggest using the ME plot as a guide to threshold setting. We subjectively pick −5% as the approximate point on the mean excess plot beyond which the slope appears to increase faster and end up with n = 33. It is noted there are still enough points beyond this level for reasonable calibration and the threshold is expected to be sufficiently high for the Picklands-Balkema-de Haan theorem to apply.

Relying on the fact that if then

we use the methods in Section 2.1 to obtain point and interval estimates of the shape parameter. The MLE is straightforward to obtain,

where

is the geometric mean and

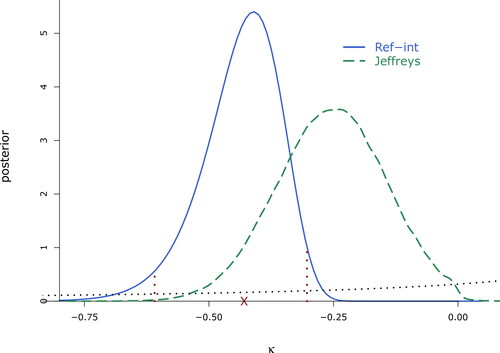

is the MLE of the shape. The marginal posterior distribution of the shape parameter is

and the BRI point estimate

and interval

are illustrated in . In particular, notice the intrinsic interval is different from the HPD,

highlights the fact that HPDs are not invariant under transformations, while the intrinsic is. Given that the scale parameter typically is a nuisance parameter, we use the analytical approximation to the BRI estimator,

Figure 4. Marginal posterior distribution of the shape parameter from the FTSE100 data, thresholded at –5%. Marked are the BRI estimate, and interval,

The (proper) marginal Jeffreys prior is represented by the dotted line, and the corresponding equally tailed interval of probability 0.95 is

We use quasi-Newton (Fletcher and Reeves Citation1964) to maximize the likelihood for the GPD, yielding MLEs and

The confidence intervals are found from the observed covariance matrix, which gives a standard errors for

of 0.228; thus, an approximate 95% confidence interval for the shape is

Using PWM, one gets

and

with

a CI of approximate 95% for κ. Exploiting the invariance of the BRI estimator,

and

the BRI interval of probability 0.95. To fit the Bayesian model with Jeffreys prior, we generated chains of length 106, dropped the first 104 as burn-in and thinned every fifth draw, ending up with samples of size 198,000 for inference, the marginal posterior distributions are illustrated in . The posterior mean and median of the shape are −0.254 and −0.252, respectively, and the equally tailed interval of posterior probability 0.95 is

Note both frequentist CIs include 0, suggesting an exponential tail behavior, while the Bayesian alternatives strongly support heavy tails; also notice the intrinsic posterior has a smaller variance; hence, the BRI interval is shorter than the equally tailed from Jeffreys prior.

The Pareto principle and Gini index discussed in Section 1.2 depend only on the shape parameter, so we can use the invariance of the BRI and MLE approaches to calculate point and interval estimates shown in . As the calibration of the GPD is based on the tail of the data, we exploit its lack of memory to calculate

where u = 0.05 is the threshold and

is empirically estimated as the proportion of data points above the threshold relative to total number of data points (McNeil, Frey, and Embrechts Citation2005, 283); in our case

Table 2. Point and interval estimates for the FTSE100 returns data, from the four approaches. The middle point in the intervals is the corresponding point estimate. Confidence intervals are of approximate 95%, and Bayesian credible intervals of probability 0.95. The point estimate from Jeffreys prior is the posterior median.

It is worth noticing both frequentist approaches do not rule out κ = 0, while the Bayesian alternatives strongly suggest all point estimates are negative, nonetheless. The posterior distribution from the intrinsic approach is shifted to the right, compared with the Jeffreys alternative and has a smaller variance. Frequentist confidence intervals are wider than the Bayesian credible counterparts.

This case study shows a practical example of how all four approaches can be used to calibrate the GPD. While there are some differences between the results from the four approaches, all point estimates of VaR0.99 are roughly similar, suggesting a level between 9% and 10% for regulatory equity capital might appropriately meet the Basel II regulations.

The BRI yields the highest VAR0.99 of the four methods, which would in practice mean holding more capital, but has the tightest intervals. Hence, although the point estimate may be seem marginally more costly, the level of uncertainty around it is much smaller, indeed reducing the risk associated with loss protection.

Point estimates of the shape and scale parameters (not shown) are roughly similar for each calibration approach, barring Jeffreys, which shrinks the estimate toward the origin; however, the length of the interval Bayesian estimates is shorter than their frequentist counterparts. Moreover, frequentist interval estimates for VaR0.99 are difficult to get and rely on asymptotic approximations, while those from BRI are immediate to obtain due to its invariance and those from Jeffreys prior are straightforward from the MCMC output.

6. Final remarks

We have illustrated how the BRI approach can be used to calibrate the GPD by using a transformation from the inverted-Pareto distribution. Four different approaches to calibrating the GPD have been presented. Three of the approaches were compared in a simulation study of simulated data from a Pareto distribution. All four approaches were then compared for similarities and differences in a case study.

From the simulation study, it is apparent that the repeated sampling behavior of the PWM estimator is poor in general and some modification is needed if it is to work in practice (see e.g., Chen et al. Citation2017). The results also indicate the BRI estimator has a similar MSE to the MLE even for moderated sample size, and its sampling distribution is asymptotically Gaussian ( and ).

Combined with its invariance under one-to-one transformations, it is a compelling alternative for calibration. One limitation of this simulation study was that it only simulated data from a Pareto distribution. An extension might be to compare the calibration approaches for data simulated from other distributions.

The BRI approach has produced lower mean-squared errors in the simulation studies, suggesting it to be more accurate for parameter estimation when the data are indeed from a Pareto distribution. The MLE is relatively simple to understand and implement; however, there are questions over the convergence of the numerical optimization methods, especially with fewer data points (de Zea Bermudez and Kotz Citation2010a). The PWM is likewise simple to understand and implement, but is efficient only for a subset of the parameter space and it could produce estimates with a likelihood of zero.

The MHA was left out of the simulation study as it is computationally intense. The results in Section 5.2 were obtained by running one million simulations. It would not be possible to run to this level of accuracy and carry out an outer layer 1000 simulation analysis in a reasonable time period or without much greater computer power. Also, implementing the sampler has a number of practical issues that are different for each dataset, which may take time to resolve and ensure the MHA converges in a reasonable time period. However, our implementation is robust and may be used as an off-the-shelf option.

One area of interest that could be the subject of further work is how sensitive the results are to the threshold used for each calibration method. A study might repeat the analysis looking at various different thresholds and how that impacts the shape, scale, and for each threshold.

Another area of potential interest is how the time period of each data point impacts the shape parameter and GPD calibrations. For example, if the case study looked at equity returns over 1 day, 5 days, 1 year, etc. What would the impact be on the GPD calibration? Clearly, a large time step for each data point would be expected to give higher values for the 99th percentile, but would the Gini index remain invariant to other size time steps as for the 10-day period investigated in this case study?

Notes

References

- Akhundjanov, S. B., and L. Chamberlain. 2019. The power-law distribution of agricultural land size. Journal of Applied Statistics 46 (16):3044–56. doi:10.1080/02664763.2019.1624695.

- Behrens, C. N., H. F. Lopes, and G. Gamerman. 2004. Bayesian analysis of extreme events with threshold estimation. Statistical Modelling 4 (3):227–44. doi:10.1191/1471082X04st075oa.

- Beirlant, J., Y. Goegebeur, J. Teugels, and J. Segers. 2005. Statistics of Extremes: Theory and Applications. Chichester: Springer.

- Beirlant, J., J. Teugels, and P. Vynckier. 1996. Practical analysis of extreme values. Leuven: University Press.

- Berger, J., J. M. Bernardo, and D. Sun. 2009. The formal definition of reference priors. The Annals of Statistics 37 (2):905–38. doi:10.1214/07-AOS587.

- Berger, J., J. M. Bernardo, and D. Sun. 2015. Overall objective priors. Bayesian Analysis 10 (1):189–221. doi:10.1214/14-BA915.

- Bernardo, J. M. 2007. Objective Bayesian point and region estimation in location-scale models. Statistics and Operations Research Transactions 31:3–44.

- Bernardo, J. M., and M. A. Juárez. 2003. Intrinsic estimation. Bayesian Statistics 7, eds. J. M. Bernardo, M. J. Bayarri, J. O. Berger, A. P. Dawid, D. Heckerman, A. F. M. Smith, and M. West, 465–76. Oxford: Oxford University Press.

- Bernardo, J. M., and R. Rueda. 2002. Bayesian hypothesis testing: A reference approach. International Statistical Review 70 (3):351–72. doi:10.1111/j.1751-5823.2002.tb00175.x.

- Castellanos, M. E., and S. Cabras. 2007. A default Bayesian procedure for the generalized Pareto distribution. Journal of Statistical Planning and Inference 137 (2):473–83. doi:10.1016/j.jspi.2006.01.006.

- Castillo, E., and A. S. Hadi. 1997. Fitting the generalized Pareto distribution to data. Journal of the American Statistical Association 92 (440):1609–20. doi:10.1080/01621459.1997.10473683.

- Castillo, E., A. S. Hadi, N. Balakrishnan, and J. M. Sarabia. 2004. Extreme value and related models with applications in engineering and science. New Jersey: Wiley.

- Charras-Garrido, M., and P. Lezaud. 2013. Extreme value analysis: An introduction. Journal de la Société Française de Statistique 154:66–97.

- Chen, H., W. Cheng, J. Zhao, and X. Zhao. 2017. Parameter estimation for generalized Pareto distribution by generalized probability weighted moment-equations. Communications in Statistics - Simulation and Computation 46 (10):7761–76. doi:10.1080/03610918.2016.1249884.

- Coles, S. 2001. An introduction to statistical modeling of extreme values. London: Springer-Verlag.

- Coles, S., L. R. Pericchi, and S. Sisson. 2003. A fully probabilistic approach to extreme rainfall modeling. Journal of Hydrology 273 (1–4):35–50. doi:10.1016/S0022-1694(02)00353-0.

- Davidson, A. C., and R. L. Smith. 1990. Models for exceedances over high thresholds. Journal of the Royal Statistical Society B 52:393–442.

- Diebolt, J., A. Guillou, and R. Worms. 2003. Asymptotic behaviour of the probability weighted moments and penultimate approximation. ESAIM: Probability and Statistics 7:219–38. doi:10.1051/ps:2003010.

- Diebolt, J., M. A. El-Aroui, M. Garrido, and S. Girard. 2005. Quasi-conjugate Bayes estimates for GPD parameters and application to heavy tails modelling. Extremes 8 (1–2):57–78. doi:10.1007/s10687-005-4860-9.

- Dupuis, D., and M. Tsao. 1998. A hybrid estimator for generalized Pareto and extreme-value distributions. Communications in Statistics - Theory and Methods 27 (4):925–41. doi:10.1080/03610929808832136.

- Embrechts, P., C. Klüppelberg, and T. Mikosch. 1997. Modelling extremal events. Berlin: Springer-Verlag.

- Engeland, K., H. Hisdal, and A. Frigessi. 2004. Practical extreme value modelling of hydrological floods and droughts: A case study. Extremes 7 (1):5–30. doi:10.1007/s10687-004-4727-5.

- Fawcett, L., and D. Walshaw. 2006. A hierarchical model for extreme wind speeds. Journal of the Royal Statistical Society: Series C (Applied Statistics) 55 (5):631–46. doi:10.1111/j.1467-9876.2006.00557.x.

- Fletcher, R., and C. M. Reeves. 1964. Function minimization by conjugate gradients. The Computer Journal 7 (2):149–54. doi:10.1093/comjnl/7.2.149.

- Frigessi, A., O. Haug, and H. Rue. 2002. A dynamic mixture model for unsupervised tail estimation without threshold selection. Extremes 5 (3):219–35. doi:10.1023/A:1024072610684.

- Galambos, J. 1987. The asymptotic theory of extreme order statistics, 2nd edn. New York: Wiley.

- Ghosh, S., and S. Resnick. 2010. A discussion on mean excess plots. Stochastic Processes and Their Applications 120 (8):1492–517. doi:10.1016/j.spa.2010.04.002.

- Gilleland, E., M. Ribatet, and A. C. Stephenson. 2013. A software review for extreme value analysis. Extremes 16 (1):103–19. doi:10.1007/s10687-012-0155-0.

- Goldstein, M. L., S. A. Morris, and G. G. Yen. 2004. Problems with fitting to the power-law distribution. The European Physical Journal B 41 (2):255–8. doi:10.1140/epjb/e2004-00316-5.

- Greenwood, J. A., J. M. Landwehr, N. C. Matalas, and J. R. Wallis. 1979. Probability weighted moments: Definition and relation to parameters of several distributions expressable in inverse form. Water Resources Research 15 (5):1049–54. doi:10.1029/WR015i005p01049.

- de Haan, L., and A. F. Ferreira. 2006. Extreme value theory: An introduction. New York: Springer.

- Hill, B. M. 1975. A simple general approach to inference about the tail of a distribution. The Annals of Statistics 3 (5):1163–74. doi:10.1214/aos/1176343247.

- Holmes, J., and W. Moriarty. 1999. Application of the generalized Pareto distribution to extreme value analysis in wind engineering. Journal of Wind Engineering and Industrial Aerodynamics 83 (1–3):1–10. doi:10.1016/S0167-6105(99)00056-2.

- Hosking, J. R. M., J. R. Wallis, and E. F. Wood. 1985. Estimation of the generalized extreme value distribution by the method of probability weighted moments. Technometrics 27 (3):251–61. doi:10.1080/00401706.1985.10488049.

- Jagger, T. H., and J. B. Elsner. 2006. Climatology models for extreme hurricane winds near the United States. Journal of Climate 19 (13):3220–36. doi:10.1175/JCLI3913.1.

- Juárez, S. F., and W. R. Schucany. 2004. Robust and efficient estimation for the generalized Pareto distribution. Extremes 7 (3):237–51. doi:10.1007/s10687-005-6475-6.

- Juárez, M. A. 2005. Objective Bayes estimation and hypothesis testing: The reference-intrinsic approach on non-regular models. CRISM Working Paper 05–14.

- Keylock, C. 2005. An alternative form for the statistical distribution of extreme avalanche runout distances. Cold Regions Science and Technology 42 (3):185–93. doi:10.1016/j.coldregions.2005.01.004.

- Koch, R. 2007. The 80/20 principle: The secret of achieving more with less. London: Nicholas Brealey Publishing.

- Kotz, S., and S. Nadarajah. 2000. Extreme value distributions: Theory and applications. London: Imperial College Press.

- Krehbiel, T., and L. C. Adkins. 2008. Extreme daily changes in U.S. dollar London inter-bank offer rates. International Review of Economics & Finance 17 (3):397–411. doi:10.1016/j.iref.2006.08.009.

- La Cour, B. R. 2004. Statistical characterization of active sonar reverberation using extreme value theory. IEEE Journal of Oceanic Engineering 29 (2):310–6. doi:10.1109/JOE.2004.826897.

- Lana, X., A. Burgueño, M. Martínez, and C. Serra. 2006. Statistical distributions and sampling strategies for the analysis of extreme dry spells in Catalonia (NE Spain). Journal of Hydrology 324 (1–4):94–114. doi:10.1016/j.jhydrol.2005.09.013.

- Leadbetter, M. R., G. Lindgren, and H. Rootzen. 1983. Extremes and related properties of random sequences and processes. New York: Springer.

- Lima, C. H. R., U. Lall, T. Troy, and N. Devineni. 2016. A hierarchical Bayesian GEV model for improving local and regional flood quantile estimates. Journal of Hydrology 541:816–23. doi:10.1016/j.jhydrol.2016.07.042.

- Malik, H. J. 1970. Estimation of the parameters of a Pareto distribution. Metrika 15 (1):126–32. doi:10.1007/BF02613565.

- McNeil, A. J., R. Frey, and P. Embrechts. 2005. Quantitative risk management: Concepts, techniques and tools. Princeton: University Press.

- McNeil, A. J. 1997. Estimating the tails of loss severity distributions using extreme value theory. ASTIN Bulletin 27 (1):117–37. doi:10.2143/AST.27.1.563210.

- Mendes, J. M., P. C. de Zea Bermudez, J. Pereira, K. F. Turkman, and M. J. P. Vasconcelos. 2010. Spatial extremes of wildfire sizes: Bayesian hierarchical models for extremes. Environmental and Ecological Statistics 17 (1):1–28. doi:10.1007/s10651-008-0099-3.

- Moharram, S. H., A. K. Gosain, and P. N. Kapoor. 1993. A comparative study for the estimators of the generalized Pareto distribution. Journal of Hydrology 150 (1):169–85. doi:10.1016/0022-1694(93)90160-B.

- Moisello, U. 2007. On the use of partial probability weighted moments in the analysis of hydrological extremes. Hydrological Processes 21 (10):1265–79. doi:10.1002/hyp.6310.

- Öztekin, T. 2005. Comparison of parameter estimation methods for the three-parameter generalized Pareto distribution. Turkish Journal of Agriculture and Forestry 29:419–28.

- Pandey, M. D., P. H. A. J. M. Van Gelder, and J. K. Vrijling. 2001. The estimation of extreme quantiles of wind velocity using L-moments in the peaks-over-threshold approach. Structural Safety 23 (2):179–92. doi:10.1016/S0167-4730(01)00012-1.

- Pandey, M. D., P. H. A. J. M. Van Gelder, and J. K. Vrijling. 2004. Dutch case studies of the estimation of extreme quantiles and associated uncertainty by bootstrap simulations. Environmetrics 15 (7):687–99. doi:10.1002/env.656.

- Peng, L., and A. Welsh. 2001. Robust estimation of the generalized Pareto distribution. Extremes 4 (1):53–65. doi:10.1023/A:1012233423407.

- Persky, J. 1992. Retrospectives: Pareto’s law. Journal of Economic Perspectives 6 (2):181–92. doi:10.1257/jep.6.2.181.

- Pickands, J. 1975. Statistical inference using extreme order statistics. The Annals of Statistics 3:119–31.

- Pisarenko, V. F., and D. Sornette. 2003. Characterization of the frequency of extreme earthquake events by the generalized Pareto distribution. Pure and Applied Geophysics 160 (12):2343–64. doi:10.1007/s00024-003-2397-x.

- R Core Team. 2021. R: A language and environment for statistical computing. Vienna: R Foundation for Statistical Computing. https://www.R-project.org/

- Ragulina, G., and T. Reitan. 2017. Generalized extreme value shape parameter and its nature for extreme precipitation using long time series and the Bayesian approach. Hydrological Sciences Journal 62 (6):863–79. doi:10.1080/02626667.2016.1260134.

- Robert, C. P. 1996. Intrinsic losses. Theory and Decision 40 (2):191–214. doi:10.1007/BF00133173.

- Rootzén, H., and N. Tajvidi. 1997. Extreme value statistics and wind storm losses: A case study. Scandinavian Actuarial Journal 1997 (1):70–94. doi:10.1080/03461238.1997.10413979.

- Rytgaard, M. 1990. Estimation in the Pareto distribution. ASTIN Bulletin 20 (2):201–16. doi:10.2143/AST.20.2.2005443.

- Shi, G., H. Atkinson, C. Sellars, and C. Anderson. 1999. Application of the generalized Pareto distribution to the estimation of the size of the maximum inclusion in clean steels. Acta Materialia 47 (5):1455–68. doi:10.1016/S1359-6454(99)00034-8.

- Tancredi, A., C. Anderson, and A. O’Hagan. 2006. Accounting for threshold uncertainty in extreme value estimation. Extremes 9 (2):87–106. doi:10.1007/s10687-006-0009-8.

- Vilar-Zanón, J. L., and C. Lozano-Colomer. 2007. On Pareto conjugate priors and their application to large claims reinsurance premium calculation. ASTIN Bulletin 37 (2):405–28. doi:10.1017/S0515036100014938.

- White, E. P., B. J. Enquist, and J. L. Green. 2008. On estimating the exponent of power-law frequency distributions. Ecology 89:905–12. doi:10.1890/07-1288.1.

- Zagorski, M., and M. Wnek. 2007. Analysis of the turbine steady-state data by means of generalized Pareto distribution. Mechanical Systems and Signal Processing 21 (6):2546–59. doi:10.1016/j.ymssp.2007.01.002.

- de Zea Bermudez, P., and S. Kotz. 2010a. Parameter estimation of the generalized Pareto distribution–Part I. Journal of Statistical Planning and Inference 140 (6):1353–73. doi:10.1016/j.jspi.2008.11.019.

- de Zea Bermudez, P., and S. Kotz. 2010b. Parameter estimation of the generalized Pareto distribution–Part II. Journal of Statistical Planning and Inference 140 (6):1374–88. doi:10.1016/j.jspi.2008.11.020.

- de Zea Bermudez, P., J. Mendes, J. M. C. Pereira, K. F. Turkman, and M. J. P. Vasconcelos. 2009. Spatial and temporal extremes of wildfire sizes in Portugal (1984–2004). International Journal of Wildland Fire 18 (8):983–91. doi:10.1071/WF07044.

- de Zea Bermudez, P., and M. A. A. Turkman. 2003. Bayesian approach to parameter estimation of the generalized Pareto distribution. Test 12 (1):259–77. doi:10.1007/BF02595822.

Appendix A:

A literature on GPD estimation and its applications

Table A1. Some literature on GPD calibration approaches to datasets. Abbreviations are Maximum Literature (MLE), Method of Moments (MoM), Method of Medians (MM), Probability Weighted Moments (PWM), Elemental Percentile Method (EPM), Optimal Bias Robust Estimator (OBRE), Least Squares (LS), Maximum Entropy (ME), Minimum density power divergence estimator (MDPD).