Abstract

We consider balanced one-way, two-way, and three-way ANOVA models to test the hypothesis that the fixed factor A has no effect. The other factors are fixed or random. We determine the noncentrality parameter for the exact F-test, describe its minimal value by a sharp lower bound, and thus we can guarantee the worst-case power for the F-test. These results allow us to compute the minimal sample size, i.e. the minimal number of experiments needed. We also provide a structural result for the minimum sample size, proving a conjecture on the optimal experimental design.

1. Introduction

Consider a balanced one-way, two-way or three-way ANOVA model with fixed factor A to test the null hypothesis H0 that A has no effect, that is, all levels of A have the same effect. The other factors are denoted B, C (crossed with or nested in A) or U, V (factors that A is nested in). They can be fixed factors (printed in normal font) or random factors (printed in bold). As usual in ANOVA, we assume identifiability, normality, independence, homogeneity, and compound symmetry (Scheffé 1959; Maxwell, Delaney, and Kelley 2017). In particular, the fixed effects are identifiable, and the random effects and errors are normally distributed with mean zero and mutually independent. By A × B we denote crossed factors with interaction, and by A ≻ B we denote that B is nested in A. Practical examples modeled by crossed, nested, and mixed classifications can be found, for example, in Canavos and Koutrouvelis (2009), Doncaster and Davey (2007), Jiang (2007), Montgomery (2017), Rasch (1971), Rasch et al. (2011), Rasch, Spangl, and Wang (2012), Rasch and Schott (2018), and Rasch, Verdooren, and Pilz (2020). The number of levels of A (B, C, U, V) is denoted by a (b, c, u, v, respectively). The effects are denoted by Greek letters. For example, the effects of the fixed factor A in the one-way model A, the two-way nested model

and the three-way nested model

read

(1)

The numbers of levels (excluding a) and the number of replicates n will be called parameters in this article.

In this article, we derive the noncentrality parameter in detail and show how to obtain the minimum sample size for a large family of ANOVA models:

- We derive the details of the noncentrality parameter (Theorem 2.1).
- We derive the worst-case noncentrality parameter (Theorem 2.4), which is required to obtain the guaranteed power of an ANOVA experiment.
- We show how to determine the minimal experimental size for ANOVA experiments by a new structural result that we call the “pivot” effect (Theorem 2.7). The “pivot” effect means that one of the parameters (the “pivot” parameter) is more power-effective than the others. Taking this “pivot” effect into account is not only helpful for planning experiments but is indeed necessary in certain models; see Remark 2.3(ii).

Our main results thus concern the noncentrality parameter of the exact F-test, the power, and the determination of the minimum sample size; see Section 2. In Section 3, we include two exceptional models that do not have an exact F-test. In Section 4, we discuss the distinction between real and integer parameters for some of our results. The proofs are in Appendix A.

2. Main results

Consider a balanced one-way, two-way or three-way ANOVA model, with the notation above, to test the null hypothesis H0 that the fixed factor A has no effect. For most of these models an exact F-test exists under the usual assumptions mentioned above. The test statistic is a ratio whose numerator is the mean square (MS) of the fixed factor A, denoted by $MS_A$. The denominator depends on the model. The test statistic has an F-distribution (central under H0, noncentral in general). We denote its parameters by the numerator d.f. $\nu_1$, the denominator d.f. $\nu_2$, and the noncentrality parameter λ. The abbreviation d.f. stands for degrees of freedom.

By we denote the total variance, that is, the sum of the variance components, such as

(the variance component of the factor B) and the error term variance

2.1. The noncentrality parameter

Our first main result lists the degrees of freedom and the exact form of the noncentrality parameter λ. Our expressions for λ show in detail how the variance components enter. This exact form of λ is the key to a reliable power analysis, which is essential for the design of experiments.

Theorem 2.1.

Consider a balanced one-way, two-way or three-way ANOVA model, with the assumptions of identifiability, normality, independence, homogeneity, and compound symmetry. We test the null hypothesis H0 that the fixed factor A has no effect. Then, under the assumption that an exact F-test exists, the test statistic has an F-distribution (central under H0, noncentral in general) with numerator d.f. , denominator d.f.

, and noncentrality parameter

obtained from Table 1.

Table 1. List of one-way, two-way, and three-way ANOVA models with fixed factor A, for use in Theorem 2.1, etc.

The proof of Theorem 2.1 is in Appendix A.

Example 2.2.

For the model Theorem 2.1 states that the test statistic

has an F-distribution (central under H0, noncentral in general) with numerator d.f.

denominator d.f.

and noncentrality parameter

Remark 2.3.

(i) The models

and

are excluded from Table 1, since an exact F-test does not exist for them; see Section 3. We also exclude the nesting of crossed factors into other factors, such as

(ii) Inspecting the expression for λ in Example 2.2 yields the following somewhat surprising observation. If n increases, then λ clearly increases, but in the limit $n \to \infty$ we do not obtain $\lambda \to \infty$.

This means that increasing the number of replicates n increases the power, but the power cannot be raised beyond a certain limit if only n is increased. This observation affects each model in Table 1 whose T consists of more than one term. These are exactly the models that do not have the parameter n in the “pivot” column of Table 1. In fact, the “pivot” effect (Theorem 2.7) shows that for these models a parameter other than n should be increased to achieve any given prespecified power.
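The saturation can be checked numerically. The sketch below is ours and assumes the mixed model A × B with B random and the textbook expected mean squares of the unrestricted model (Example 2.2 may concern a different model), which give $\lambda = bn\sum_i\alpha_i^2/(n\sigma_{AB}^2+\sigma^2) = b\sum_i\alpha_i^2/(\sigma_{AB}^2+\sigma^2/n)$. With b fixed, λ tends to $b\sum_i\alpha_i^2/\sigma_{AB}^2$ as n → ∞, whereas λ grows linearly in b. The function name `lambda_axb` and all numbers are hypothetical.

```python
def lambda_axb(b: int, n: int, sum_alpha_sq: float, var_ab: float, var_e: float) -> float:
    """Noncentrality parameter for the mixed model A x B with B random (assumed),
    from E(MS_A) - E(MS_AB) = b*n*sum_alpha_sq/(a-1) and E(MS_AB) = var_e + n*var_ab."""
    return b * sum_alpha_sq / (var_ab + var_e / n)


if __name__ == "__main__":
    s, var_ab, var_e = 2.0, 0.5, 1.0              # hypothetical values
    for n in (2, 10, 100, 10_000):                # more replicates, b fixed at 4
        print(f"b=4, n={n}: lambda = {lambda_axb(4, n, s, var_ab, var_e):.3f}")
    print("limit n -> infinity:", 4 * s / var_ab)  # 16.0
    for b in (4, 8, 16):                          # more levels of B, n fixed at 2
        print(f"b={b}, n=2: lambda = {lambda_axb(b, 2, s, var_ab, var_e):.3f}")
```

With these values λ rises from 8 toward 16 as n grows but never reaches 16, while each doubling of b doubles λ.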

2.2. Least favorable case noncentrality parameter

For an exact F-test, the computation of the power is immediate: given the type I risk α, obtain the type II risk β by solving

$$F(\nu_1, \nu_2, 0 \mid 1-\alpha) = F(\nu_1, \nu_2, \lambda \mid \beta), \tag{2}$$

where $F(\nu_1, \nu_2, \lambda \mid \gamma)$ denotes the γ-quantile of the F-distribution with degrees of freedom $\nu_1$ and $\nu_2$ and noncentrality parameter λ. Then $1-\beta$ is the power of the test. The next theorem is our second main result: we determine the noncentrality parameter

in the least favorable case, that is, the sharp lower bound in

Using

in (2) yields the guaranteed power

of the test.
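Equation (2) can be evaluated with any implementation of the noncentral F-distribution; in R this is what `pf` and `qf` with the `ncp` argument provide, and in Python `scipy.stats.ncf`. To keep the example self-contained, the following minimal sketch uses only the Python standard library: it writes the noncentral F-distribution function as the usual Poisson mixture of regularized incomplete beta functions and finds the central quantile by bisection. It is our own illustration, not the authors' R code, and the helper names (`betainc`, `ncf_cdf`, `f_ppf`, `power`) are hypothetical.

```python
import math


def _betacf(a: float, b: float, x: float, maxit: int = 1000, eps: float = 1e-15) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    c, d = 1.0, 1.0 - (a + b) * x / (a + 1.0)
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, maxit + 1):
        # even and odd coefficients of the continued fraction for this m
        for aa in (m * (b - m) * x / ((a - 1.0 + 2 * m) * (a + 2 * m)),
                   -(a + m) * (a + b + m) * x / ((a + 2 * m) * (a + 1.0 + 2 * m))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:
            break
    return h


def betainc(a: float, b: float, x: float) -> float:
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def ncf_cdf(x: float, nu1: float, nu2: float, lam: float = 0.0) -> float:
    """CDF of the F-distribution with nu1, nu2 d.f. and noncentrality lam,
    written as a Poisson(lam/2) mixture of regularized incomplete beta functions."""
    if x <= 0.0:
        return 0.0
    y = nu1 * x / (nu1 * x + nu2)
    if lam == 0.0:
        return betainc(nu1 / 2.0, nu2 / 2.0, y)
    half = lam / 2.0
    total = 0.0
    for j in range(int(half + 12.0 * math.sqrt(half) + 50) + 1):
        weight = math.exp(-half + j * math.log(half) - math.lgamma(j + 1))
        total += weight * betainc(nu1 / 2.0 + j, nu2 / 2.0, y)
    return total


def f_ppf(q: float, nu1: float, nu2: float) -> float:
    """q-quantile of the central F-distribution, found by bisection."""
    lo, hi = 0.0, 1.0
    while ncf_cdf(hi, nu1, nu2) < q:
        hi *= 2.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if ncf_cdf(mid, nu1, nu2) < q:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def power(nu1: float, nu2: float, lam: float, alpha: float = 0.05) -> float:
    """Power 1 - beta, where beta solves equation (2)."""
    critical = f_ppf(1.0 - alpha, nu1, nu2)      # (1 - alpha)-quantile of central F
    return 1.0 - ncf_cdf(critical, nu1, nu2, lam)  # beta = P(F_lambda <= critical)


if __name__ == "__main__":
    for lam in (0.0, 5.0, 10.0, 20.0):           # hypothetical noncentrality values
        print(f"nu1=5, nu2=25, lambda={lam:5.1f}: power = {power(5, 25, lam):.4f}")
```

For λ = 0 the computed power equals α, and it increases with λ, in line with Lemma A.3. Plugging the least favorable λ of Theorem 2.4 into `power` gives the guaranteed power.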

Let δ denote the minimum difference to be detected between the smallest and the largest treatment effects, i.e. between the minimum and the maximum

of the set of the main effects of the fixed factor A,

(3)

We assume the standard condition to ensure identifiability of parameters, which is that α has zero mean in all directions (Fox 2015, pp. 157, 169, 178; Rasch et al. 2011, Section 3.3.1.1; Rasch and Schott 2018, Section 5; Rasch, Verdooren, and Pilz 2020, Section 5; Scheffé 1959, Section 4.1, p. 92; Searle and Gruber 2017, p. 415, Section 7.2.i). That is, exemplified for three models,

(4)

Theorem 2.4.

We have the following lower bounds for the noncentrality parameter λ.

(i) With the parameter or product of parameters denoted R in Table 1, we have

(ii) For the models in Table 1 that involve a factor V that A is nested in, let

. Then the lower bound in (i) can be raised to

(iii) For the models in Table 1 that involve the factors U, V that A is nested in, let

denote a, u, v sorted from least to greatest. Then the lower bound in (i) can be raised to

The proof of Theorem 2.4 is in Appendix A.
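As a worked illustration of part (i), the sketch below treats a single model under our own assumptions: the mixed model A × B with B random, $\lambda = b\sum_i\alpha_i^2/(\sigma_{AB}^2 + \sigma^2/n)$ with n ≥ 1 (so R = b), and a total variance $\sigma_{\mathrm{tot}}^2 \ge \sigma_{AB}^2 + \sigma^2$ (our notation). The Szőkefalvi-Nagy inequality cited in Appendix A gives $\sum_i(\alpha_i - \bar\alpha)^2 \ge \delta^2/2$, with equality for the effects δ/2, −δ/2 and zeros otherwise, and the denominator of λ is at most $\sigma_{\mathrm{tot}}^2$; together this yields $\lambda \ge b\delta^2/(2\sigma_{\mathrm{tot}}^2)$ for this model. We do not claim that this reproduces Theorem 2.4(i) verbatim for other rows of Table 1; all function names are hypothetical.

```python
import random


def centered_sum_sq(effects):
    """Sum of squared deviations of the effects from their mean."""
    m = sum(effects) / len(effects)
    return sum((e - m) ** 2 for e in effects)


def least_favorable_effects(a, delta):
    """Zero-mean effects with range delta and minimal sum of squares:
    +delta/2, -delta/2 and a - 2 zeros (equality case of Szokefalvi-Nagy)."""
    return [delta / 2.0, -delta / 2.0] + [0.0] * (a - 2)


def lambda_axb(b, n, sum_alpha_sq, var_ab, var_e):
    """Assumed model A x B (B random): lambda = b * sum_alpha_sq / (var_ab + var_e / n)."""
    return b * sum_alpha_sq / (var_ab + var_e / n)


def worst_case_lambda_axb(b, delta, var_total):
    """Lower bound b * delta**2 / (2 * var_total), assuming var_ab + var_e <= var_total."""
    return b * delta ** 2 / (2.0 * var_total)


if __name__ == "__main__":
    a, b, delta, var_total = 6, 10, 5.0, 4.0      # hypothetical values
    print("minimal sum of squares:", centered_sum_sq(least_favorable_effects(a, delta)))  # 12.5
    print("worst-case lambda:", worst_case_lambda_axb(b, delta, var_total))               # 31.25
    rng = random.Random(0)
    for _ in range(1000):
        raw = [rng.uniform(-1.0, 1.0) for _ in range(a)]
        spread = max(raw) - min(raw)
        effects = [x * delta / spread for x in raw]      # rescale to range delta
        s = centered_sum_sq(effects)
        assert s >= delta ** 2 / 2.0 - 1e-9              # Szokefalvi-Nagy bound
        w, n = rng.random(), rng.randint(1, 10)          # random variance split and n
        lam = lambda_axb(b, n, s, w * var_total, (1.0 - w) * var_total)
        assert lam >= worst_case_lambda_axb(b, delta, var_total) - 1e-9
```

The bound is attained when the effects are least favorable and the whole total variance sits in $\sigma_{AB}^2$, matching the description of the extremal case in Remark 2.5.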

Remark 2.5.

The importance of a lower bound for the noncentrality parameter λ lies in its use for the power analysis, which is required for the design of experiments. Theorem 2.4 establishes such a bound. The difference from the previous literature (Rasch et al. 2011; Rasch, Spangl, and Wang 2012) is that we use the correct, detailed form of the noncentrality parameter λ from Theorem 2.1 and the new, sharp bound for the sum of squared effects from Kaiblinger and Spangl (2020).

The bounds in Theorem 2.4 are sharp. The extremal case (minimal λ) occurs if the main effects (1) of the factor A are least favorable, while satisfying (3) and (4), and the variance components are also least favorable, while their sum does not exceed

For the extremal

configurations we refer to Kaiblinger and Spangl (2020). The least favorable splitting of

is that the total variance is consumed entirely by the first term of T in Table 1; see the worst cases in Examples 2.6(i) and 2.6(ii).

If in a model there are “inactive” variance components (i.e., some components of the model do not occur in T), then the most favorable splitting of

is that the total variance tends to be consumed entirely by inactive components. In these cases, λ goes to infinity,

See the best case in Example 2.6(i).

If in a model all variance components are “active” (i.e., all components of the model also occur in T), then the most favorable splitting of

is that the total variance is consumed entirely by the last term of T. See the best case in Example 2.6(ii).

Example 2.6.

(i) For the model

from Table 1 we have

Since R = b, by Theorem 2.4 we obtain for the noncentrality parameter λ,

Since the first term of T is and the inactive components are

we obtain by Remark 2.5 that the extremal total variance

splittings are

(ii) For the model

from Table 1 we have

Since R = b, by Theorem 2.4 we obtain for the noncentrality parameter λ,

In this model there are no “inactive” variance components, and by Remark 2.5 we obtain

2.3. Minimal sample size

The size of the experiment is the product of the numbers of levels of the factors that occur in the model and the number n of replicates. For prespecified power requirements, the minimal sample size can be determined by Theorem 2.4. Compute

and thus obtain the guaranteed power

for each set of parameters that belongs to a given size, increasing the size until the power P0 is reached.
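The search can be sketched as follows, again for the hypothetical case A × B with B random (our assumption, not a row copied from Table 1), where under our assumptions ν1 = a − 1, ν2 = (a − 1)(b − 1), and the worst-case noncentrality parameter is $b\delta^2/(2\sigma_{\mathrm{tot}}^2)$. Since a is fixed, minimizing the size abn amounts to minimizing bn. The code increases bn step by step, tries every factorization (b, n) with b ≥ 2 and n ≥ 2 (the lower limit `n_min = 2` is our choice), and returns the first design whose guaranteed power reaches P0. The distribution helpers are compact standard-library stand-ins for a statistics package; every name is hypothetical.

```python
import math


def _betacf(a, b, x, maxit=1000, eps=1e-15):
    # Continued fraction for the incomplete beta function (modified Lentz method).
    tiny = 1e-300
    c, d = 1.0, 1.0 - (a + b) * x / (a + 1.0)
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, maxit + 1):
        for aa in (m * (b - m) * x / ((a - 1.0 + 2 * m) * (a + 2 * m)),
                   -(a + m) * (a + b + m) * x / ((a + 2 * m) * (a + 1.0 + 2 * m))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:
            break
    return h


def betainc(a, b, x):
    # Regularized incomplete beta function I_x(a, b).
    if x <= 0.0 or x >= 1.0:
        return 0.0 if x <= 0.0 else 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def ncf_cdf(x, nu1, nu2, lam=0.0):
    # Noncentral F CDF as a Poisson(lam/2) mixture of incomplete beta functions.
    if x <= 0.0:
        return 0.0
    y = nu1 * x / (nu1 * x + nu2)
    if lam == 0.0:
        return betainc(nu1 / 2.0, nu2 / 2.0, y)
    half = lam / 2.0
    return sum(math.exp(-half + j * math.log(half) - math.lgamma(j + 1))
               * betainc(nu1 / 2.0 + j, nu2 / 2.0, y)
               for j in range(int(half + 12.0 * math.sqrt(half) + 50) + 1))


def power(nu1, nu2, lam, alpha):
    # 1 - beta from equation (2); the critical value is found by bisection.
    lo, hi = 0.0, 1.0
    while ncf_cdf(hi, nu1, nu2) < 1.0 - alpha:
        hi *= 2.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if ncf_cdf(mid, nu1, nu2) < 1.0 - alpha else (lo, mid)
    return 1.0 - ncf_cdf(0.5 * (lo + hi), nu1, nu2, lam)


def guaranteed_power_axb(a, b, delta, var_total, alpha):
    # Assumed model A x B (B random): nu1 = a - 1, nu2 = (a - 1)(b - 1),
    # worst-case lambda = b * delta**2 / (2 * var_total); none of them depends on n.
    return power(a - 1, (a - 1) * (b - 1), b * delta ** 2 / (2.0 * var_total), alpha)


def minimal_design_axb(a, delta, var_total, alpha, p0, n_min=2, max_bn=10_000):
    # Increase the size b*n (a is fixed) and try every factorization (b, n)
    # with b >= 2 and n >= n_min; return the first design reaching power p0.
    for size in range(2 * n_min, max_bn + 1):
        for n in range(n_min, size // 2 + 1):
            if size % n == 0:
                b = size // n
                p = guaranteed_power_axb(a, b, delta, var_total, alpha)
                if p >= p0:
                    return b, n, p
    raise ValueError("power requirement not reached within max_bn")


if __name__ == "__main__":
    a = 6                                          # hypothetical values throughout
    b, n, p = minimal_design_axb(a, delta=5.0, var_total=4.0, alpha=0.05, p0=0.9)
    print(f"b = {b}, n = {n}, size = {a * b * n}, guaranteed power = {p:.4f}")
```

Because neither ν2 nor the worst-case λ depends on n in this assumed model, the returned design always has n = 2 and only b changes, which is the kind of behavior that Theorem 2.7 formalizes.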

The next theorem is the main structural result of our article. We show that for given power requirements the minimal sample size can be obtained by varying only one parameter, which we call the “pivot” parameter, while keeping the other parameters minimal. We thus prove and generalize suggestions in Rasch et al. (2011); see Remark 2.9(ii). Part (i) of the next theorem describes the key property of the “pivot” parameter, part (ii) is an intermediate result, and part (iii) is the minimum sample size result.

Theorem 2.7.

Denote by “pivot” parameter the parameter in the second column of Table 1. Then the following hold.

(i) If one parameter is increased, the power increases most if it is the “pivot” parameter.

(ii) For fixed size, if we allow the parameters to be real numbers, then the maximal power occurs if the “pivot” parameter varies and the other parameters are minimal.

(iii) For fixed power, if we allow the parameters to be real numbers, then the minimum size occurs if the “pivot” parameter varies and the other parameters are minimal.

The proof of Theorem 2.7 is in Appendix A.
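A numeric illustration of part (i), under our own assumptions: for the fully nested model A ≻ B ≻ C with B and C random, textbook expected mean squares give ν1 = a − 1, ν2 = a(b − 1), and $\lambda = bcn\sum_i\alpha_i^2/(cn\sigma_B^2 + n\sigma_C^2 + \sigma^2)$. Doubling b, c, or n in turn shows that b raises λ the most and is the only one of the three that raises ν2, which by Lemma A.3 means the largest power increase. We have not checked this model against Table 1; `nested_abc` and `effect_of_doubling` are hypothetical names.

```python
def nested_abc(a, b, c, n, sum_alpha_sq, var_b, var_c, var_e):
    """(nu1, nu2, lambda) for A > B > C with A fixed and B, C random, assuming
    E(MS_A) - E(MS_B(A)) = b*c*n*sum_alpha_sq/(a-1) and
    E(MS_B(A)) = var_e + n*var_c + c*n*var_b."""
    nu1 = a - 1
    nu2 = a * (b - 1)
    lam = b * c * n * sum_alpha_sq / (c * n * var_b + n * var_c + var_e)
    return nu1, nu2, lam


def effect_of_doubling(base, **variances):
    """Return {parameter: (nu2, lambda)} after doubling each of b, c, n in turn."""
    out = {}
    for name in ("b", "c", "n"):
        params = dict(base)
        params[name] *= 2
        _, nu2, lam = nested_abc(**params, **variances)
        out[name] = (nu2, lam)
    return out


if __name__ == "__main__":
    base = {"a": 4, "b": 3, "c": 3, "n": 2}       # hypothetical design
    variances = {"sum_alpha_sq": 2.0, "var_b": 1.0, "var_c": 1.0, "var_e": 1.0}
    print("base:", nested_abc(**base, **variances))  # (3, 8, 4.0)
    for name, (nu2, lam) in effect_of_doubling(base, **variances).items():
        print(f"double {name}: nu2 = {nu2}, lambda = {lam:.3f}")
```

All three parameters enter the numerator bcn in the same way, but c and n also enlarge the denominator, which is the argument spelled out in Example A.5.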

Example 2.8.

For the model we have the following. For given power requirements

the minimal sample size is obtained by varying the parameter b, keeping c and n minimal. For this and two other examples, see Table 2.

Table 2. Exemplifying the “pivot” effect (Theorem 2.7) for three models.

Remark 2.9.

(i) The “pivot” parameter in Theorem 2.7, defined in the second column of Table 1, can also be identified directly from the model formula in the first column of the table. That is, the “pivot” parameter is the number of levels of the random factor nearest to A, if we include the number n of replicates as a virtual random factor and exclude the factors that A is nested in (labeled U, V). For example, in

the random factor B is nearer to A than the random factor C or the virtual random factor of replicates, and indeed the “pivot” parameter is b. Inspired by related comments in Doncaster and Davey (2007, p. 23), we interpret this heuristic observation as a correlation between higher power effect and higher organizational level.

(ii) In Rasch et al. (2011, p. 73), it is observed that for the two-way model

only the parameter b should vary, but n should be chosen as small as possible, to achieve the minimum sample size. For the model

it is conjectured (Rasch et al. 2011, p. 78) that only n should vary, but v should be as small as possible, to achieve the minimal sample size. These suggestions are motivated by inspecting the effect of the parameters on the denominator d.f.

By Theorem 2.7(iii), we prove the conjecture and generalize these observations. In fact, by Table 1 the “pivot” parameter for

is b, and the “pivot” parameter for

is n. Our proof works by inspecting the effect of the parameters not only on

and

but also on the noncentrality parameter λ. Note that we assume the parameters to be real numbers; for the subtleties of the transition to integer parameters, see Section 4.

The next example illustrates the minimal sample size computation for ANOVA models, based on our main results.

Example 2.10.

(i) Consider the model

Let

let a = 6, let

and consider the power requirement

From Theorem 2.7, we observe that the minimal design has n = 2 and only the “pivot” parameter b is relevant. By Theorems 2.1 and 2.4, we obtain that to achieve

the minimal design is

with size abcn = 420 and power P = 0.909083.

(ii) Consider the model

and assume

This model is equivalent to the exact F-test models

and

cf. Lemma 3.1 below. Let

let a = 6, let

and consider the power requirement

By Theorem 2.7, we obtain that the minimal design has

and only the “pivot” parameter b is relevant. By Theorems 2.1 and 2.4, we obtain that to achieve

the minimal design is

with size abcn = 840 and power P = 0.909083.

Remark 2.11.

In Example 2.10, the power P = 0.909083 for in (ii) is the same as the power for

in (i). This coincidence is due to the fact that (i) and (ii) have the same d.f. and that in the worst case of both (i) and (ii) the total variance is consumed entirely by

cf. Remark 2.5(ii).

3. Models with approximate F-test

For the two models

(5)

an exact F-test does not exist. Approximate F-tests can be obtained by Satterthwaite’s approximation, which goes back to Behrens (1929) and Welch (1938, 1947) and was generalized by Satterthwaite (1946); see Sahai and Ageel (2000, Appendix K). The details of the approximate F-tests for the models in (5) are given in Rasch et al. (2011, Sections 3.4.1.3 and 3.4.4.5). Satterthwaite’s approximation, in a similar or different form, also occurs, for example, in Davenport and Webster (1972, 1973), Doncaster and Davey (2007, pp. 40–41), Hudson and Krutchkoff (1968), Lorenzen and Anderson (2019), Rasch, Spangl, and Wang (2012), and Wang, Rasch, and Verdooren (2005); the resulting test is also called the quasi-F-test (Myers and Well 2010).
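Satterthwaite’s approximation assigns to a linear combination $\sum_i c_i MS_i$ of independent mean squares with $\nu_i$ d.f. the approximate d.f. $(\sum_i c_i MS_i)^2 / \sum_i (c_i MS_i)^2/\nu_i$. A minimal sketch follows; the function name and the mean square values are hypothetical, and the combination $MS_{AB} + MS_{AC} - MS_{ABC}$ (the textbook quasi-F denominator for a fixed A crossed with random B and C) is our illustration, not necessarily the exact variant used by Rasch et al. (2011).

```python
def satterthwaite_df(coefs, mean_squares, dfs):
    """Approximate d.f. of sum_i coefs[i] * mean_squares[i] for independent
    mean squares with dfs[i] degrees of freedom (Satterthwaite 1946)."""
    combo = sum(c * ms for c, ms in zip(coefs, mean_squares))
    denom = sum((c * ms) ** 2 / df for c, ms, df in zip(coefs, mean_squares, dfs))
    return combo ** 2 / denom


if __name__ == "__main__":
    # Hypothetical: a = 6, b = c = 4, so df_AB = df_AC = 15 and df_ABC = 45.
    ms_ab, ms_ac, ms_abc = 3.0, 2.5, 1.5
    nu = satterthwaite_df([1.0, 1.0, -1.0], [ms_ab, ms_ac, ms_abc], [15, 15, 45])
    print(f"denominator MS = {ms_ab + ms_ac - ms_abc}, approx. d.f. = {nu:.2f}")  # 15.00
```

Since the mean squares are random, so is the approximate d.f., which is why the power of such tests is obtained by simulation.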

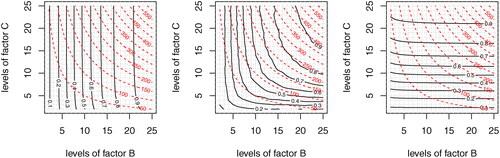

The d.f. of the approximate F-test involve the observed mean squares, so the power of the test has to be simulated: simulate data such that H0 is false and compute the rejection rate, which approximates the power of the test. The middle plot of Figure 1 gives an example of the power behavior for an approximate F-test model from (5). The plot shows that the “pivot” effect for exact F-tests (Theorem 2.7) does not generalize to approximate F-tests.

Figure 1. Power and size for the mixed model for a = 6,

δ = 5, and three variance component assignments

from left to right. Each contour plot shows the guaranteed power

(solid curves) overlaid with the size factor

(red, dashed hyperbolas) as functions of

for fixed n = 2. By Lemma 3.1(ii) the left model is equivalent to

so that by Theorem 2.7 the “pivot” parameter is b. The middle plot shows an approximate F-test model (the power is approximated by 10,000 simulations); there is no “pivot” effect. The right model is equivalent to

so the “pivot” parameter is c.

The next lemma rephrases observations in Rasch et al. (2011) and Rasch, Spangl, and Wang (2012). It allowed us to avoid approximations and to use exact F-test computations for the left and the right plots of Figure 1.

Lemma 3.1.

The following special cases of (5) are equivalent to exact F-test models, in the sense of identical d.f. and noncentrality parameters.

(i) If in the model

we have

, then it is equivalent to

and

(ii) If in the model

we have

, then it is equivalent to

and

; while if

, then it is equivalent to

Proof.

The equivalences follow from inspecting the d.f. and the noncentrality parameter. □

Remark 3.2.

To look up in the first case of Lemma 3.1(ii), swap the factor names first.

4. Real versus integer parameters

The “pivot” effect for the minimum sample size described in Theorem 2.7(iii) is formulated under the assumption that the parameters are real numbers. The effect also occurs in most practical examples, where the parameters are integers. However, the following constructed example shows that for integer parameters the “pivot” effect is not guaranteed.

Example 4.1.

Consider the two-way model with a = 15,

δ = 7,

and required power

Then for real

the minimum sample size obtained by Theorem 2.7(iii) occurs for

where P = 0.9. For integers

the minimum sample size occurs for

where P = 0.902873. Thus, in this example the “pivot” effect is obstructed if we switch from real numbers to integers. In more realistic examples this obstruction does not occur.

Remark 4.2.

While Example 4.1 shows that the transition to integers can obstruct the “pivot” effect (albeit in an unrealistic example), the obstruction is limited: the real number computation has the following valid implication for the integer result. The real number minimum, readily computed by Theorem 2.7(iii), immediately implies that the integer minimum size occurs at (b, n) with

between

and

that is,

in fact in the example

A similar implication holds for all models in Table 1.

5. Conclusions

We determine the noncentrality parameter of the exact F-test for balanced factorial ANOVA models. From a sharp lower bound for the noncentrality parameter, we obtain the power that can be guaranteed in the least favorable case. These results allow us to compute the minimal sample size, and we also provide a structural result for the minimal sample size. The structural result is formulated as a “pivot” effect, which means that one of the parameters is more relevant than the others for the power and thus for the minimum sample size.

Acknowledgments

The authors are grateful to Karl Moder for helpful discussions and comments. We also thank the reviewer for useful comments.

References

- Alpargu, G., and G. P. H. Styan. 2000. Some comments and a bibliography on the Frucht-Kantorovich and Wielandt inequalities. In Innovations in multivariate statistical analysis, ed. R. D. H. Heijmans, D. S. G. Pollock, and A. Satorra, 1–38. Dordrecht, Netherlands: Springer. doi: 10.1007/978-1-4615-4603-0.

- Behrens, W. V. 1929. Ein Beitrag zur Fehlerberechnung bei wenigen Beobachtungen (in German). Landwirtschaftliche Jahrbücher 68:807–37.

- Bhattacharya, P. K., and P. Burman. 2016. Theory and methods of statistics. London: Elsevier. ISBN: 9780128041239. https://www.elsevier.com/books/theory-and-methods-of-statistics/bhattacharya/978-0-12-802440-9.

- Brauer, A., and A. C. Mewborn. 1959. The greatest distance between two characteristic roots of a matrix. Duke Mathematical Journal 26 (4):653–61. doi: 10.1215/S0012-7094-59-02663-8.

- Canavos, G., and I. Koutrouvelis. 2009. An introduction to the design & analysis of experiments. London: Pearson. ISBN: 978-0136158639. https://www.pearson.com/us/higher-education/program/Canavos-Introduction-to-the-Design-Analysis-of-Experiments/PGM295527.html.

- Davenport, J. M., and J. T. Webster. 1972. Type-I error and power of a test involving a Satterthwaite’s approximate F-statistic. Technometrics 14 (3):555–69. doi: 10.1080/00401706.1972.10488945.

- Davenport, J. M., and J. T. Webster. 1973. A comparison of some approximate F-tests. Technometrics 15 (4):779–89. doi: 10.1080/00401706.1973.10489111.

- Doncaster, C. P., and A. J. H. Davey. 2007. Analysis of variance and covariance: How to choose and construct models for the life sciences. Cambridge, UK: Cambridge University Press. doi: 10.1017/CBO9780511611377.

- Finner, H., and M. Roters. 1997. Log-concavity and inequalities for chi-square, F and beta distributions with applications in multiple comparisons. Statistica Sinica 7 (3):771–87.

- Fox, J. 2015. Applied regression analysis and generalized linear models. 3rd ed. Thousand Oaks, CA: SAGE Publications. ISBN: 9781452205663. https://us.sagepub.com/en-us/nam/applied-regression-analysis-and-generalized-linear-models/book237254.

- Ghosh, B. K. 1973. Some monotonicity theorems for χ², F and t distributions with applications. Journal of the Royal Statistical Society: Series B 35 (3):480–92.

- Gutman, I., K. C. Das, B. Furtula, E. Milovanović, and I. Milovanović. 2017. Generalizations of Szőkefalvi Nagy and Chebyshev inequalities with applications in spectral graph theory. Applied Mathematics and Computation 313:235–44. doi: 10.1016/j.amc.2017.05.064.

- Hocking, R. R. 2003. Methods and applications of linear models: Regression and the analysis of variance. New York: Wiley. doi: 10.1002/0471434159.

- Hudson, J. D., Jr., and R. G. Krutchkoff. 1968. A Monte Carlo investigation of the size and power of tests employing Satterthwaite’s synthetic mean squares. Biometrika 55 (2):431–3. doi: 10.2307/2334890.

- Jiang, J. 2007. Linear and generalized linear mixed models and their applications. New York: Springer. doi: 10.1007/978-0-387-47946-0.

- Johnson, N. L., S. Kotz, and N. Balakrishnan. 1995. Continuous univariate distributions. 2nd ed., vol. 2. New York: Wiley. ISBN: 9780471584940. https://www.wiley.com/en-us/Continuous+Univariate+Distributions%2C+Volume+2%2C+2nd+Edition-p-9780471584940.

- Kaiblinger, N., and B. Spangl. 2020. An inequality for the analysis of variance. Mathematical Inequalities & Applications 23 (3):961–9. doi: 10.7153/mia-2020-23-74.

- Lindman, H. R. 1992. Analysis of variance in experimental design. New York: Springer. doi: 10.1007/978-1-4613-9722-9.

- Lorenzen, T., and V. Anderson. 2019. Design of experiments: A No-Name approach. Boca Raton, FL: CRC Press. ISBN: 9780367402327. https://www.crcpress.com/Design-of-Experiments-A-No-Name-Approach/Lorenzen-Anderson/p/book/9780367402327.

- Maxwell, S. E., H. D. Delaney, and K. Kelley. 2017. Designing experiments and analyzing data. New York: Routledge. ISBN: 9781138892286. https://www.routledge.com/Designing-Experiments-and-Analyzing-Data-A-Model-Comparison-Perspective/Maxwell-Delaney-Kelley/p/book/9781138892286.

- Montgomery, D. 2017. Design and analysis of experiments. New York: Wiley. ISBN: 978-1-119-32093-7. https://www.wiley.com/Design+and+Analysis+of+Experiments%2C+9th+Edition-p-9781119320937.

- Myers, J. L., and A. D. Well. 2010. Research design and statistical analysis. New York: Taylor & Francis. doi: 10.4324/9780203726631.

- Rasch, D. 1971. Gemischte Klassifikation der dreifachen Varianzanalyse (in German). Biometrische Zeitschrift 13 (1):1–20. doi: 10.1002/bimj.19710130102.

- Rasch, D., J. Pilz, R. Verdooren, and A. Gebhardt. 2011. Optimal experimental design with R. London: Chapman & Hall. doi: 10.1007/s00362-012-0473-y.

- Rasch, D., and D. Schott. 2018. Mathematical statistics. Hoboken, NJ: Wiley. doi: 10.1002/9781119385295.

- Rasch, D., B. Spangl, and M. Wang. 2012. Minimal experimental size in the three way ANOVA cross classification model with approximate F-tests. Communications in Statistics – Simulation and Computation 41 (7):1120–30. doi: 10.1080/03610918.2012.625832.

- Rasch, D., and R. Verdooren. 2020. Determination of minimum and maximum experimental size in one-, two- and three-way ANOVA with fixed and mixed models by R. Journal of Statistical Theory and Practice 14 (4):57. doi: 10.1007/s42519-020-00088-6.

- Rasch, D., R. Verdooren, and J. Pilz. 2020. Applied statistics. New York: Wiley. doi: 10.1002/9781119551584.

- Sahai, H., and M. I. Ageel. 2000. The analysis of variance. Basel, Switzerland: Birkhäuser. doi: 10.1007/978-1-4612-1344-4.

- Satterthwaite, F. 1946. An approximate distribution of estimates of the variance components. Biometrics Bulletin 2 (6):110–4. doi: 10.2307/3002019.

- Scheffé, H. 1959. The analysis of variance. New York: Wiley. ISBN: 9780471345053. https://www.wiley.com/en-us/The+Analysis+of+Variance-p-9780471345053.

- Searle, S. R., and M. H. J. Gruber. 2017. Linear models. 2nd ed. New York: Wiley. ISBN: 9781118952856. https://www.wiley.com/en-us/Linear+Models%2C+2nd+Edition-p-9781118952832.

- Sharma, R., M. Gupta, and G. Kapoor. 2010. Some better bounds on the variance with applications. Journal of Mathematical Inequalities 4 (3):355–63. doi: 10.7153/jmi-04-32.

- Szőkefalvi-Nagy, J. 1918. Über algebraische Gleichungen mit lauter reellen Wurzeln (in German). Jahresbericht der Deutschen Mathematiker-Vereinigung 27:37–43.

- Wang, M., D. Rasch, and R. Verdooren. 2005. Determination of the size of a balanced experiment in mixed ANOVA models using the modified approximate F-test. Journal of Statistical Planning and Inference 132 (1–2):183–201. doi: 10.1016/j.jspi.2004.06.022.

- Welch, B. L. 1938. The significance of the difference between two means when the population variances are unequal. Biometrika 29 (3–4):350–62. doi: 10.1093/biomet/29.3-4.350.

- Welch, B. L. 1947. The generalization of ‘Student’s’ problem when several different population variances are involved. Biometrika 34 (1-2):28–35. doi: 10.1093/biomet/34.1-2.28.

- Witting, H. 1985. Mathematische Statistik I (in German). New York: Springer. doi: 10.1007/978-3-322-90150-7.

Appendix A: Proofs

We include a short proof of the formula for the noncentrality parameter in Lindman (1992, p. 151), formulated here in a more general form.

Lemma A.1.

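Let a test statistic F have a noncentral F-distribution with numerator and denominator d.f. $df_1$ and $df_2$, respectively, written as $F = MS_1/MS_2$, with

$$MS_1 \sim \frac{\kappa}{df_1}\,\chi^2_{df_1,\lambda} \quad\text{and}\quad MS_2 \sim \frac{\kappa}{df_2}\,\chi^2_{df_2}$$

stochastically independent, for some scale $\kappa > 0$. Then the noncentrality parameter λ satisfies

$$\lambda = df_1\,\frac{E(MS_1) - E(MS_2)}{E(MS_2)}.$$

Proof.

Since $E(\chi^2_{df_1,\lambda}) = df_1 + \lambda$ and $E(\chi^2_{df_2}) = df_2$, we obtain $E(MS_1) = \kappa\,(df_1 + \lambda)/df_1$ and $E(MS_2) = \kappa$. Hence,

$$\frac{E(MS_1)}{E(MS_2)} = 1 + \frac{\lambda}{df_1}, \tag{A.1}$$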

which implies the expression for λ in the lemma. □
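Lemma A.1 turns expected mean squares into λ via $\lambda = df_1\,(E(MS_{\mathrm{num}}) - E(MS_{\mathrm{den}}))/E(MS_{\mathrm{den}})$, because a noncentral χ² variable with df d.f. and noncentrality λ has mean df + λ. The small Monte Carlo sketch below is ours, not from the article: it checks the formula for the mixed model A × B with B random, using the unrestricted model and the textbook expected mean squares $E(MS_A) = \sigma^2 + n\sigma_{AB}^2 + bn\sum_i\alpha_i^2/(a-1)$ and $E(MS_{AB}) = \sigma^2 + n\sigma_{AB}^2$. With α = (1, −0.5, 0, −0.5), b = 5, n = 3, $\sigma_{AB}^2 = 0.5$, and $\sigma^2 = 1$, the exact value is λ = 3 · (10 − 2.5)/2.5 = 9. All names and numbers are hypothetical.

```python
import random


def lambda_from_ems(e_num, e_den, df1):
    """Noncentrality parameter from expected mean squares (form of Lemma A.1)."""
    return df1 * (e_num - e_den) / e_den


def simulate_mean_ms(alpha, b, n, var_ab, var_e, reps=3000, seed=1):
    """Average MS_A and MS_AB over `reps` simulated balanced data sets of the
    unrestricted mixed model y_ijk = alpha_i + B_j + (AB)_ij + e_ijk.
    B_j has variance 1 here; it cancels in both MS_A and MS_AB."""
    rng = random.Random(seed)
    a = len(alpha)
    sd_ab, sd_e = var_ab ** 0.5, var_e ** 0.5
    tot_a = tot_ab = 0.0
    for _ in range(reps):
        b_eff = [rng.gauss(0.0, 1.0) for _ in range(b)]
        # cell means: fixed + random effects + mean of n replicate errors
        cell = [[alpha[i] + b_eff[j] + rng.gauss(0.0, sd_ab)
                 + sum(rng.gauss(0.0, sd_e) for _ in range(n)) / n
                 for j in range(b)] for i in range(a)]
        row = [sum(cell[i]) / b for i in range(a)]
        col = [sum(cell[i][j] for i in range(a)) / a for j in range(b)]
        grand = sum(row) / a
        tot_a += b * n * sum((r - grand) ** 2 for r in row) / (a - 1)
        tot_ab += n * sum((cell[i][j] - row[i] - col[j] + grand) ** 2
                          for i in range(a) for j in range(b)) / ((a - 1) * (b - 1))
    return tot_a / reps, tot_ab / reps


if __name__ == "__main__":
    alpha, b, n, var_ab, var_e = [1.0, -0.5, 0.0, -0.5], 5, 3, 0.5, 1.0
    e_den = var_e + n * var_ab                                          # 2.5
    e_num = e_den + b * n * sum(x * x for x in alpha) / (len(alpha) - 1)  # 10.0
    print("exact lambda:", lambda_from_ems(e_num, e_den, len(alpha) - 1))  # 9.0
    m_a, m_ab = simulate_mean_ms(alpha, b, n, var_ab, var_e)
    print("simulated lambda:", round(lambda_from_ems(m_a, m_ab, len(alpha) - 1), 2))
```

The simulated value fluctuates around 9; plugging sample averages into the formula is only a check of the expectations, not an estimator recommended by the article.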

Remark A.2.

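Jensen’s inequality implies that E(F) exceeds the ratio of the expected numerator and denominator mean squares, since the two are independent and $E(1/X) > 1/E(X)$ for a positive, nondegenerate random variable X. For the mean $E(F) = \dfrac{df_2\,(df_1 + \lambda)}{df_1\,(df_2 - 2)}$, $df_2 > 2$, see Johnson, Kotz, and Balakrishnan (1995, formula (30.3a)).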

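The next lemma summarizes monotonicity properties of the noncentral F-distribution from Ghosh (1973), listed in Hocking (2003, Section 16.4.2); see also Finner and Roters (1997, Theorem 4.3) for a sharper statement. Recall that for $0 < \gamma < 1$ we let $F(df_1, df_2, 0 \mid \gamma)$ denote the γ-quantile of the central F-distribution with $df_1$ and $df_2$ degrees of freedom.

Lemma A.3.

Let F be distributed according to the noncentral F-distribution with $df_1$ and $df_2$ degrees of freedom and noncentrality parameter λ. Then, referring to the probability

$$\pi = P\big(F > F(df_1, df_2, 0 \mid 1-\alpha)\big)$$

as power, the power increases if $df_1$ decreases and $df_2$, λ increase. That is, we have the implication

$$df_1' \le df_1,\ df_2' \ge df_2,\ \lambda' \ge \lambda \;\Longrightarrow\; \pi' \ge \pi,$$

where π and π′ denote the power for $(df_1, df_2, \lambda)$ and $(df_1', df_2', \lambda')$, respectively.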

Proof.

For varying $df_1$, see Ghosh (1973, Theorem 6). For varying $df_2$, apply Ghosh (1973, Theorem 5) with

For varying λ, see Witting (1985, p. 219, Satz 2.36(b)) or Bhattacharya and Burman (2016, p. 53, Exercise 2.9). □

Proof of Theorem 2.1.

We prove the result only for one model; the proofs for the other models are analogous. In the expected mean squares table (Rasch et al. 2011, p. 100, Table 3.15), the two expressions

(A.2)

are equal under the null hypothesis H0 of no A-effects. Hence, H0 can be tested by the exact F-test

(A.3)

which under H0 is central F-distributed, in general noncentral F-distributed. From the ANOVA table (Rasch et al. Citation2011, p. 91, Table 3.10) the numerator and denominator d.f. are

and

respectively. By Lemma A.1 the noncentrality parameter λ is thus

(A.4)

□

Remark A.4.

Formula (A.4) allows us to point out the difference between our results and the previous literature (Rasch et al. 2011, pp. 58–59). In fact, the expression bcn in the numerator at the left-hand side of (A.4) coincides with the expression C in Rasch et al. (2011, Table 3.2), but note that the denominator is distinct. The exact expression for λ in (A.4) has the sum of variance components

replaced by the linear combination

see also Rasch and Verdooren (2020). The fourth author and Rob Verdooren have acknowledged our results and are updating their available R programs accordingly; note that in Rasch and Verdooren (2020) some citation numbers have been mixed up. R code to reproduce the examples of the present paper is available from the first author.

The transformation from the left-hand side to the right-hand side in (A.4) shifts the attention from the product of parameters bcn to the single parameter b. This observation is the key to our general “pivot” effect result (Theorem 2.7).

To verify the details of Table 1, note that the expected mean squares table entries used in the proof of Theorem 2.1 depend on whether the factors are fixed or random.

Proof of Theorem 2.4.

As above we prove the result for the model

i. Since

(A.5)

and the Szőkefalvi-Nagy inequality (Szőkefalvi-Nagy 1918; Brauer and Mewborn 1959; Alpargu and Styan 2000, p. 11; Sharma, Gupta, and Kapoor 2010; Gutman et al. 2017; Kaiblinger and Spangl 2020) states that

(A.7)

ii, iii. By Kaiblinger and Spangl (2020) we have for the

matrix

and for the

array

(A.8)

Proof of Theorem 2.7.

i. We consider the parameters as competitors in

(A.9)

For each model in Table 1, we analyze the effect of the parameters on

and λ, using the arguments illustrated in Example A.5 below. The inspection yields that for each model there is a sole winner, which we call the “pivot” parameter. We exemplify the scoring for four models:

Table

Since by Lemma A.3 the lead in (A.9) also means the lead in power increase, we obtain that the “pivot” parameter yields the maximal power increase.

ii. Start with minimal parameters and apply (i).

iii. This part is equivalent to (ii). □

Example A.5.

We illustrate the proof of Theorem 2.7(i) by showing the typical argument for most increase in and the typical argument for most increase in λ.

In the model

the parameter n is more effective than b or c in increasing

(A.10)

For the model

the parameter b is more effective than c or n in increasing λ,

(A.11)

since b, c, and n equally increase the numerator of (A.11), but only b does not increase the denominator.