ABSTRACT

Does exposure to an unfamiliar migrant community change implicit biases? We conducted an experimental study by exposing Malaysians to a few hours of volunteering with Rohingya refugees, and we examined the effect of this treatment on their attitudes through a textual analysis. We measured changes in attitude through pure valence and multiple measures of implicit bias, including linguistic intergroup bias. We found that the volunteers became markedly more positive, and this change was statistically significant. Our results suggest that brief exposure to refugees may be a cost-effective policy lever for changing local perceptions of refugees.

Introduction

Forced displacement is one of the most serious issues of our time. The latest data from the United Nations Refugee Agency (abbreviated UNHCR from here onwardsFootnote1) indicate that there are approximately 79.5 million individuals who are forcibly displaced worldwide; this number includes refugees, internally displaced persons (IDPs), and asylum seekers.Footnote2 The UNHCR defines refugees as ‘people who have fled war, violence, conflict, or persecution and have crossed an international border to find safety in another country.’Footnote3 Refugees are a protected group under international law; specifically, their rights are outlined in the 1951 Convention and Protocol Relating to the Status of Refugees and the 1967 Protocol, which have both been signed by 142 countries worldwide.Footnote4 Before formally acquiring refugee status, individuals fleeing war, violence, conflict, or persecution are granted asylum-seeker status through the UNHCR, following the trajectory depicted in Figure 1 (Dunstan, Citation1998; Hathaway, Citation1988; Pine & Drachman, Citation2005). Figure 1 also depicts two crucial points in a forced migration journey: the point at which displaced individuals become asylum-seekers, and the point at which asylum-seekers become refugees. The first point happens after the asylum paperwork has been processed, and the second point happens at some time while the individual is in the intermediate host country, once UNHCR has approved their refugee paperwork and granted them a physical card to prove their status.

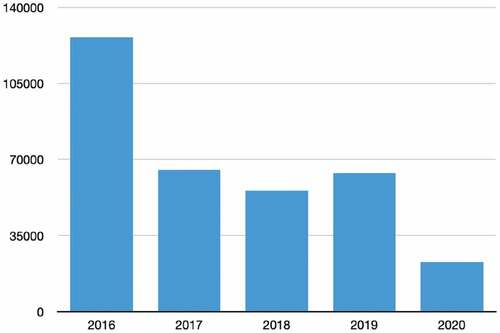

Under President Trump, the U.S. refugee resettlement program (the largest in the world) was dramatically curtailed.Footnote5 The U.S. only admitted 22,491 refugees in 2018, half of its target admissions ceiling of 45,000.Footnote6 The country further reduced its admissions ceiling to 30,000 for 2019 and announced that it planned to admit a maximum of 18,000 refugees in 2020; this is the lowest number of refugees resettled by the U.S. since 1980, when the resettlement program was created.Footnote7 In many countries, a major factor in this policy shift is growing hostility toward refugees among the host population; for example, Bruneau et al. (Citation2018) found ‘blatant dehumanization of Muslim refugees’ in large samples in four European countries (the Czech Republic, Hungary, Spain, and Greece). Since many final host country destinations have restricted or reduced their admissions, refugees have recently been accumulating in countries that have traditionally served as stopovers in the resettlement process (Barcus et al., Citation2017; Rashid, Citation2020; Stein, Citation1986). Globally, fewer than 1% of the world’s refugees are ever resettled; furthermore, approximately 85% are hosted in developing countries, including countries such as Malaysia, where we conducted this study.Footnote8 In fact, in the past 4 years (i.e., since 2016), global departures (i.e., the total number of refugees resettled to final host countries) have been on a steady decline, as depicted in Figure 2.

Figure 2. Global departures of resettled refugees. Data are from the UNHCR resettlement data portal (https://rsq.unhcr.org).

Much of the focus in addressing refugee issues has been on policy changes. A Nobel Laureate in Economics once proposed, for example, that richer countries should pay poorer countries to take in and resettle refugees (Bubb et al., Citation2011). However, we want to focus on another facet of the issue: the plasticity of host population attitudes. We choose to focus on host population attitudes as previous studies have shown that they may be a critical factor in determining refugee outcomes (Nungsari et al., Citation2020b). Thus, we examine the effectiveness of an intervention to improve host population perceptions of refugees, specifically brief, direct exposure in a volunteer setting. We investigate Malaysian citizens’ perceptions before and after volunteering for several hours in a Malay language training program for Rohingya refugees in Kuala Lumpur, the nation’s capital. The Malaysian citizens in our study had no prior direct interactions with refugees.

Malaysia is home to more than 178,000 refugees and asylum seekers, with roughly half of them being urbanites located in the Kuala Lumpur metropolitan area.Footnote9 Approximately 57% of this population is Rohingya, a stateless Muslim refugee population from the western coastal state of Rakhine in the Buddhist-majority nation of Myanmar. Most of the Rohingya population in Malaysia entered the country during the mass exodus from Rakhine State in 2015, but it is not uncommon to meet Rohingya people who have been in Malaysia for decades.Footnote10 Since Malaysia has not signed the 1951 Convention Relating to the Status of Refugees, refugees in Malaysia are not granted legal status by the government (Stange et al., Citation2019). They cannot legally work and are constrained in the type and nature of jobs that they can hold in the country, regardless of the amount of education and training that they have (Lego, Citation2018; Nungsari et al., Citation2020a). To make money to sustain themselves and their families, refugees generally either work informally for local companies and organizations in industries such as construction, cleaning, or landscaping, complete ad hoc jobs for small amounts of money, or operate as small-time entrepreneurs by running their own business (Buscher & Heller, Citation2010; Salim, Citation2019; Sullivan, Citation2016). Given that all of these lines of work involve interfacing with members of the host country, it is crucial that refugees searching for work have at least a basic grasp of the local language (Alba & Nee, Citation1997; Chiswick & Miller, Citation2001; Remennick, Citation2003). The ability to contact locals to make a living is particularly important for the Rohingya because they mainly obtain jobs through social networks (Wahba & Zenou, Citation2005).

While the sort of brief intervention described in our study is less likely to be effective in a society where most citizens have strong, longstanding opinions about refugees, we hypothesize that it would be very effective in countries where refugees have not historically been permanently resettled. In fact, as mentioned, none of the participants in our study had ever interacted with a refugee before, and many had little knowledge of the Rohingya or refugee policy in Malaysia. Potentially, by providing a positive first impression of the Rohingya, such an intervention could pre-empt and later attenuate negative shocks to perceptions that tend to emerge as the refugee population increases; these perceptions relate to acrimonious debates about access to social services and negative news stories about crimes committed by refugees. In particular, our findings show that host citizen attitudes toward Rohingya refugees – both measured directly through the valence of the emotion conveyed through the language used (i.e., positive or negative) and indirectly through measures of implicit bias – were substantially and positively affected by short-term volunteering. Although stereotypes and prejudice are very difficult to gauge (Fazio et al., Citation1995), implicit bias measures enable researchers to study a person’s underlying biases, which cannot be easily masked. This is important because individuals may be pressured to verbalize positive sentiments about vulnerable groups, such as the Rohingya, in public group settings. Furthermore, our results are robust to three different measures of implicit bias (i.e., linguistic intergroup bias, stereotype explanatory bias, and negation bias) and not merely explicit bias as established through valence. We explain these biases in more detail in the methodology section.

The paper proceeds as follows: Section 2 presents the literature review, Section 3 describes the methodology and data, Section 4 covers the results, and Section 5 contains the conclusion. Section 6 then presents the list of references, and Section 7 contains the Appendix.

Literature review

The literature on prejudice and bias is vast. It includes studies on discrimination based on demographic characteristics (such as gender and age), statistical discrimination, and discrimination targeted at members of the out-group relative to the in-group, such as refugees and asylum-seekers. In the following literature review, we outline these separate bodies of literature, as our paper combines elements from multiple strands. To begin, it is important to note that discrimination and bias can manifest in two general ways: explicit bias, in which individuals are aware of the bias and operate on biased assumptions consciously, and implicit bias, in which associations and reactions are automatic and often occur without awareness or realization upon encountering an individual or ‘stimulus’ (Daumeyer et al., Citation2019). This paper focuses on implicit bias and on volunteering as an intervention to help mitigate its effects, as implicit bias and attitudes may contribute to larger systemic and institutional issues (Bayer & Rouse, Citation2016).

Literature on racial bias

The existence of prejudice and bias has been well documented in the economic and sociology literature, with clear evidence of racial discrimination in the labor, housing, and product markets (Carlsson & Rooth, Citation2007; Kaas & Manger, Citation2012; Pager et al., Citation2009; Riach & Rich, Citation2002). For example, (Bertrand & Mullainathan, Citation2004) found, through an experimental study done in the U.S., that applicants with white-sounding names receive 50% more callbacks for interviews. (Carlsson & Rooth, Citation2007) and (Kaas & Manger, Citation2012) found similar evidence in the European labor market, where individuals with German and Swedish names also received a higher number of callbacks compared to individuals with Arabic or Turkish names.

(List, Citation2004) documented that there is a strong tendency for racial minorities to receive inferior initial and final job offers compared to those received by non-minorities, indicating statistical discrimination (i.e., discrimination in the form of inferring statistical information on the group to which an individual belongs). Discrimination and bias based on other traits, such as gender, have also been well studied. (Neumark et al., Citation1996) found that female job applicants had a lower probability of receiving a job offer than men. Women have also been discriminated against when purchasing cars at dealerships (Ayres & Siegelman, Citation1995). (Pager & Quillian, Citation2005) found that this problem could potentially be exacerbated because employers who indicated a greater likelihood of hiring individuals from particular groups (such as former convicts) were no more likely to actually hire them. Bias is also not only a ‘low-skilled’ problem; (Moss-Racusin et al., Citation2012) found cases of discrimination against female student workers by university faculty, stating that female students were less likely to be hired than male students because they were viewed as less competent. Evidence of discrimination and bias can be detected at very young ages; (Bian et al., Citation2017) found evidence of gender stereotyping in children as young as 6, and that these stereotypes affected the children’s interests and activities. Specifically, their findings imply that “6-year-old girls are less likely than boys to believe that members of their gender are ‘truly, truly smart’” and that girls at this age also “begin to avoid activities said to be for children who are ‘truly, truly smart.’”

Literature on prejudice towards refugees and migrants

A meta-analysis by (Cowling et al., Citation2019) identified a variety of antecedents of refugee-specific prejudice and found that demographic factors mattered. Specifically, factors such as ‘being male, religious, nationally identified, politically conservative, and less educated were associated with negative attitudes.’ (Anderson, Citation2019) further found, by studying attitudes and prejudice towards asylum-seekers, that ‘self-presentation concerns (of individuals) result in the deliberate attenuation of reported explicit attitudes.’

Evidence of prejudice towards refugees and migrants exists from all around the world. (Fraser & Murakami, Citation2021) found that in Japan, humanitarianism helps shape attitudes towards refugees – specifically, it ‘predicts public support for admitting refugees more strongly than it predicts support for economic migrants.’ (Koçak, Citation2021) studied Turkey and found that certain factors, such as religiosity, socioeconomic status, satisfaction with life, and threat perceptions towards refugees, predict the social distance towards this group, which is typically seen in areas where host citizens and refugees live in close proximity. In the Netherlands, (Onraet et al., Citation2021) found that respondents high in right-wing authoritarianism were unwilling to help asylum-seekers and were more likely to perceive them as being economic migrants (and therefore, ‘less legitimate’ than ‘true’ asylum-seekers). (Smith & Minescu, Citation2021) found that in Ireland, family, peer, and school norms are significant predictors of how schoolchildren form their inter-ethnic attitudes (and hence, also, prejudices).

The context surrounding bias and prejudice towards ‘outsiders’ in Malaysia, a multi-ethnic and multi-religious country where Islam is the official state religion, has also been explored in fair detail. (Missbach & Stange, Citation2021) found that in Southeast Asia, where refugee protection is the weakest in the world outside of the Middle East, a notion of ‘Muslim solidarity’ towards refugees is primarily a symbolic rhetoric directed at domestic (voting) audiences, and ‘fails to render effective protection to refugees.’ This was a similar finding to (Hoffstaedter, Citation2017), who found that Muslim solidarity towards refugees was tempered by prevalent racism in Malaysia against people from the Indian subcontinent, which would include Rohingya people from Myanmar who live on the border with Bangladesh or in the Cox’s Bazar refugee camp in Bangladesh. (Deslandes & Anderson, Citation2019) found that religious affiliation was related to negative attitudes towards migrants in Malaysia, and that this effect was strong towards target groups who are refugees rather than migrants. (Cowling & Anderson, Citation2019) specifically found that this negative effect in attitudes towards migrants is stronger amongst Muslims than amongst Christians. (Aminnuddin & Wakefield, Citation2020) found that ethnic differences in discriminating against others were very significant in Malaysia, with Malays displaying higher means of not wanting neighbors of a different race or religion compared to Chinese.

It has also been shown that economic development is a strong predictor of opposition to receiving increasing numbers of Sub-Saharan African migrants in Europe (Buehler, Fabbe and Han, Citation2020). Assimilation – either by speaking the local language or by adopting local cultural practices – has been shown to help reduce American citizens’ prejudice toward Syrian refugees (Koc & Anderson, Citation2018). Information has also played a large role in combating prejudice. (Getmansky et al., Citation2018) found that different messages about the possible effects of hosting refugees can change locals’ perceptions and attitudes toward refugees in Turkey. (Facchini et al., Citation2016) found that information campaigns were highly pivotal in decreasing public opposition to immigration by highlighting its key positive impacts. The refugees included in our study are Rohingya people, who by and large share the same faith as the majority ethnoreligious group in Malaysia, the Malays. Thus, we expected this would also have a taming effect on bias, as (Lazarev & Sharma, Citation2017) indicated that shared religion typically reduces outgroup prejudice in the context of Syrian refugees in the Middle East. Finally, the volunteering intervention was designed to be short but mindful in the spirit of (Adida et al., Citation2018; Broockman & Kalla, Citation2016; Pons, Citation2018). (Pons, Citation2018) demonstrated that just a five-minute discussion on the candidate was able to increase votes for Francois Hollande in the 2012 French presidential election, with the effect of the visits persisting in later elections, suggesting a lasting ‘persuasion effect’. (Adida et al., Citation2018) found that short and interactive ‘perspective-taking’ exercises caused Americans who completed them to write in support of Syrian refugees to the White House, implying that interactive activities could potentially have very large impacts.

Literature on interventions to reduce bias

Many studies have examined different types of interventions that can help reduce bias. (Goldin & Rouse, Citation2000) found that blind auditions for orchestra players “foster impartiality in hiring and increase the proportion of women in symphony orchestras.” A meta-study conducted by (Axt & Lai, Citation2019) indicated that “a novel intervention that both asked participants to avoid favoring a certain group and required them to take more time when making judgments” improved bias and noise in decision-making.

Many of these interventions involve interpersonal relationships and interactions. For example, numerous studies have looked at the impact of having role models and relationships with similar individuals. Role models have been beneficial across multiple populations. (Rask & Bailey, Citation2002) proposed benefits of having role models in the context of faculty members and students for women, minorities, and men. (Sonnert et al., Citation2007) found that percentages of female students in particular majors are strongly associated with the proportions of female faculty members in the same fields, and (Bettinger & Long, Citation2005) found that female instructors positively influence course selections and choice of major in some disciplines (although this effect may be contingent on whether or not the field is male-dominated). (Hoffmann & Oreopoulos, Citation2009) demonstrated that same-sex instructors increase average gender performance differences by up to 5% and decrease drop-out rates from a course by 1.2 percentage points. (Porter & Serra, Citation2020) indicated that “female students exposed to successful and charismatic women who majored in economics at the same university significantly impacted female students’ enrollment in further economics classes”. (Behncke et al., Citation2010) studied this in the context of social caseworkers and found that job placements of unemployed people improve if the caseworker belongs to the same social group. By studying male-female pairs in labwork in chemistry courses, (Fairlie et al., Citation2020) “did not find evidence that female students are negatively affected by male peers in intensive, long-term pairwise interactions in their course grades or future STEM course taking.”

Another class of interventions involves providing access to better information. Many authors have studied and found that the communication of information about particular groups can help reduce bias and prejudice against them (Bromme & Beelmann, Citation2018; Espinosa & Stoop, Citation2021; Reynolds et al., Citation2020). This has been studied across many social science fields, but also within the health sciences with regard to improving decision-making. For example, (Clarke et al., Citation2020) examined health warning labels on products and found that they have the potential to ‘reduce [the] selection of energy-dense snacks’ in an online setting, implying that communicating better information can improve final outcomes. (Bayer et al., Citation2019) showed that careful messaging in welcome information emails that highlights the diversity of research and researchers in economics increases the likelihood that students will complete an economics course in their first semester of college by almost 20% of the base rate. Other interventions that have been proven to make a difference in reducing bias include workplace diversity training and media training (Paluck & Green, Citation2009). (Paluck, Citation2009) studied the impact of an informative radio soap opera and found that it was able to change listeners’ perceptions of social norms and behaviors with respect to intermarriage, open dissent, trust, empathy, cooperation, and trauma healing but had little to no impact on their personal beliefs. Livelihood interventions (providing more or better access) have also been found to reduce stigma toward HIV-positive populations.

Literature on short-term interventions and volunteering

This paper specifically focuses on the implicit bias of citizens toward non-citizens (forced migrants, i.e., refugees) and whether a short, interpersonal interaction has the ability to positively impact this bias. The intervention used consists of short-term, half-day, one-time volunteering projects, as it has been shown that intergroup contact has the ability to reduce intergroup prejudice (Pettigrew & Tropp, Citation2006). In the literature, volunteering is often defined as ‘any activity in which time is given freely to benefit another person, group or cause’ (Wilson, Citation2000). Volunteering and its impact on the economic, social, and mental well-being of volunteers has been widely investigated. For example, a number of studies have found positive effects of volunteering on a variety of measures such as life satisfaction, self-esteem, self-rated health, educational and occupational achievement, functional ability, and mortality (Eccles & Barber, Citation1999; Wilson, Citation2000). However, relying on self-report survey data is problematic since respondents may overstate the effects of volunteering, as they are cognizant that they are being studied as volunteers. Nonetheless, the empirical evidence on the positive effects of volunteering is overwhelming. For example, (Wilson, Citation2000) found that ‘studies of youth suggest that volunteering reduces the likelihood of engaging in problem behaviors such as school truancy and drug abuse.’ (Eccles & Barber, Citation1999) explored extracurricular activities for adolescents and indicated that ‘involvement in prosocial activities was linked to positive education trajectories and low rates of involvement in risky behaviors.’ Using the collapse of East Germany and its infrastructure of volunteering, (Meier & Stutzer, Citation2008) found a causal link between volunteering and increased life satisfaction.

Many studies have also looked at the differences in benefits from volunteering across different age groups and have found that, in general, older volunteers experience more positive emotional impacts than younger volunteers (Morrow-Howell et al., Citation2003; Van Willigen, Citation2000). Volunteering also seems to be correlated with better social integration (Wilson & Musick, Citation1999).

Finally, (Broockman & Kalla, Citation2016) examined the reduction of intergroup prejudice in the context of door-to-door canvassing on anti-transgender prejudice in South Florida and found that ‘a single approximately 10-minute conversation encouraging actively taking the perspective of others can markedly reduce prejudice for at least 3 months.’ Thus, our study implements a short, interactive intervention in a citizen versus migrant context to determine its effect on short-term implicit bias.

Methodology and data

Types of implicit biases measured in this study

The first measure of implicit bias that we utilized is linguistic intergroup bias (LIB), a widely studied systematic bias in language use that contributes to the perpetuation of stereotypes (Dragojevic et al., Citation2017; Karpinski & Von Hippel, Citation1996; Maass, Citation1999). The idea behind LIB is that people maintain stereotypes about individuals in their minds, and that the language employed to describe said individuals can often predict what the stereotype is. LIB typically considers statements made about ingroup members (in our context, Malaysian citizens) and outgroup members (Rohingya refugees); for this paper, however, we only considered changes in statements made about members of the outgroup. In the LIB model, information contradicting the valence of the stereotype is framed more concretely, where actions are described rather than the intent or mental state (i.e., positive, rather than normative, statements are made). There are two types of concrete statements. The most concrete ones are made using descriptive action verbs (DAV), which are highly specific and value neutral, with a clear ‘beginning’ and ‘end.’ The less concrete ones are statements with interpretive action verbs (IAV), which are less specific and normative, but still have a clear ‘beginning’ and ‘end.’ For example, let us say that Hamid, a Rohingya boy, is seen pushing another child. A DAV-based statement on this occurrence could be ‘Hamid pushed the boy’ and an IAV statement could be ‘Hamid hurt the boy.’ On the other hand, information supporting the valence of the existing stereotype is framed more abstractly. There are also two types of abstract statements. The most abstract ones are made using adjectives (ADJ), which describe an attribute or character. In contrast, less abstract statements are made using state verbs (SV), which have no clear ‘beginning’ or ‘end’ and often describe mental states. A statement using an SV would be ‘Hamid hates the boy’, and a statement using ADJ would be ‘Hamid is a bad child.’ In this paper, we conducted a textual analysis using the LIB model by employing a team of three researchers to categorize each statement made in pre- and postvolunteering focus groups by each volunteer first into a relevant or irrelevant statement and then into a positive or negative statement. Finally, the level of abstractness was categorized for each statement made (i.e., in levels of increasing abstractness: DAV, IAV, SV, and ADJ).
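The ordering of the four LIB categories can be illustrated with a minimal Python sketch. The numeric scores (1 to 4), the dictionary layout, and the function name `mean_abstraction` are assumptions made for illustration only; the text above specifies only the ordering DAV, IAV, SV, ADJ, not a numeric scale or an aggregation rule.

```python
from statistics import mean

# Ordinal abstraction scores following the order given in the text
# (DAV < IAV < SV < ADJ). The 1-4 values are an illustrative assumption.
ABSTRACTION_SCORE = {"DAV": 1, "IAV": 2, "SV": 3, "ADJ": 4}


def mean_abstraction(statements, valence):
    """Return the mean abstraction score of statements with the given valence.

    Returns None when no statement has that valence.
    """
    scores = [
        ABSTRACTION_SCORE[s["level"]]
        for s in statements
        if s["valence"] == valence
    ]
    return mean(scores) if scores else None


# The four example statements about Hamid from the text, all negative.
examples = [
    {"text": "Hamid pushed the boy", "level": "DAV", "valence": "negative"},
    {"text": "Hamid hurt the boy", "level": "IAV", "valence": "negative"},
    {"text": "Hamid hates the boy", "level": "SV", "valence": "negative"},
    {"text": "Hamid is a bad child", "level": "ADJ", "valence": "negative"},
]

print(mean_abstraction(examples, "negative"))
```

Under the LIB logic described above, a lower mean abstraction for negative statements about the outgroup after volunteering would be read as less stereotype-consistent framing; how the study itself aggregates the coded levels may differ from this sketch.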

The second measure of implicit bias is stereotype explanatory bias (SEB), which emerges when an individual is ‘more likely to provide explanations for behaviors that are inconsistent with expectancies than for behaviors that are consistent with expectancies’ (Sekaquaptewa et al., Citation2003). SEB has been widely used to study the use of stereotypes in processing information (Brewer, Citation1996; Hastie, Citation1984). Specifically, information contradicting the valence of a stereotype is qualified, and information supporting the valence of a stereotype is stated directly. For example, if an individual watches a movie, expects it to be good, and it turns out to be good, he/she might say, ‘The movie was good’, whereas if he/she expects the movie to be bad, but it turns out to be good, he/she might say, ‘The movie was actually good; the director is improving’.

The third measure of implicit bias is negation bias (NB), where information contradicting the valence of the existing stereotype is framed as a negation of the stereotype (e.g., ‘The movie wasn’t bad’), and information supporting the valence of the stereotype is stated directly (e.g., ‘The movie was good’). Social psychological studies have shown that ‘by using negations people implicitly communicate stereotypic expectancies, and that negations play a subtle but powerful role in stereotype maintenance’ (Beukeboom et al., Citation2010). That said, for reasons described in Sections 4, 5, and 6, we drop the SEB and NB measurements from the dataset; we only consider them in checking for robustness. Our main finding is that using the LIB model, the estimated change in bias is 25–40% of the maximum possible measured change, which is an enormous and statistically significant effect after just a few hours of exposure to a ‘stranger’ group.

Background on the language training program and volunteers

The language training program where volunteers were placed was run once a week for 6 weeks, ending in August 2018, and focused on Rohingya adults who had been in the country for fewer than 5 years. The weekly classes were held in two blocks of 1.5 hours, with a 20–30 minute break in between, and ended with lunch afterward. Classes were taught using a language curriculum targeted toward practical, day-to-day conversational usage for adult refugees, and covered topics such as greetings, safety and law enforcement, currency, and buying basic essentials. The topics were delivered using a combination of both lecture and nonlecture methods. The lecture mode was used to teach or introduce keywords or key sentences, supported by heavy use of role-play and simulation, practice with host country volunteers (i.e., native speakers), and videos. Pictures were also heavily used to provide visual cues during language training, especially to support participants who were illiterate. Classes were taught by teachers who were not involved in the research program.

To aid in the execution of the classes, volunteers for the language program were sourced from a large, local, public university. This particular university had a mandatory community service requirement, whereby students and faculty members were expected to spend a significant amount of time doing volunteer work. We recruited volunteers for three purposes: (1) to help in the classroom, as native speakers, by talking to the refugees and helping them practice their language; (2) to provide childcare at the training center for participants who had children; and (3) to assist with logistical issues during the classes, such as packing food for the refugees or moving furniture. Volunteers underwent a very short, 10-minute ‘training session’ immediately before the day began and spent an average of 1.5 hours directly interfacing with the refugee students throughout the day. Because of this mandatory requirement by their university, all the volunteers in our study volunteered only to receive a formal certificate of completion to satisfy this requirement; specifically, they had no ties or connections to Rohingya refugees, and had only read or heard about these refugees through the media. Thus, there were no obvious self-selection issues in the volunteer group; that is, we did not select for individuals who were more likely to volunteer and could thus potentially be more open-minded than those who did not volunteer.Footnote11

Data collection and description

We derived the data from pre- and posttreatment semi-structured focus groups held with 34 volunteers (six pairs of discussions, for 12 focus groups in total). The volunteers were asked to come to the training center at 8:30 am, and the pretreatment focus group was held for approximately one hour using the three questions listed below. Then, at 9:30 am, the classes with the refugees started and went on until approximately 12:30 pm, at which point the classes ended and lunch was served. At approximately 1:30 pm, all volunteers sat down for the posttreatment focus group, which lasted for about an hour. All focus groups were held in English, and all volunteers had a native or near-native command of English. Each focus group was audio-recorded, and a research assistant later transcribed it verbatim. Table 1 displays the number of volunteers for each focus group, alongside their genders and ages. All 12 focus groups were semi-structured and conducted by the same research assistant, who was not a member of the ingroup or outgroup of the study,Footnote12 with the following (same) three questions asked in each focus group:

What do you know about the Rohingya?

Can Rohingya integrate well with the Malaysian community?

Would Malaysians hire Rohingya to do jobs for them?

Table 1. Number of volunteers for each focus group alongside age and gender

Before using these three questions in the field, we tested them for comprehension. We designed the questions to be as broad as possible, but also to nudge the volunteers to consider the ‘fit’ of refugees with Malaysians and to consider their access to livelihoods. We did this to address the fact that the main issue surrounding refugee difficulties in Malaysia relates to a lack of access to sustainable, legal means of earning an income (Nungsari et al., Citation2020a).

The head coder (henceforth ‘Coder 1’) then performed preliminary processing of the textual data. This included parsing the transcript into a list of sentences and, for each sentence, identifying pre- and posttreatment status, the volunteer ID,Footnote13 the focus group ID, and which question was being responded to. The dataset included 843 sentences at this stage. Coder 1 also developed the categories for the theme, subject, and context (described below). Then, Coder 1, along with two additional coders, completed the coding as follows:Footnote14 (1) They identified each relevant sentence; that is, each sentence that contained a claim or assessment about the Rohingya or a Rohingya individual with positive or negative valence. (2) For each sentence deemed relevant, they enumerated every relevant (i.e., valenced and containing a verb or adjective) atomic statement they observed. An example of a relevant sentence from the sample is the following: ‘They (the Rohingya) are hardworking, adapt more easily in a known environment, and are actually sincere with their bosses.’ The three atomic statements are ‘They are hardworking’, ‘They find it easier to adapt in known environments’ and ‘They are actually sincere with their bosses’. (3) Finally, for each relevant atomic statement, the coders coded the valence, abstraction level based on the type of verb or adjective, subject,Footnote15 theme, context, and dummies for negation bias and stereotype explanatory bias. Some of the coders identified some statements as ‘neutral’; we dropped these statements from the main analysis. One coder identified some statements as ‘questionably positive’ or ‘questionably negative’; these were recoded as positive and negative, respectively. Descriptive statistics for the included atomic statements are given in Table 2, and the distribution of themes is given in Table 3. Descriptive statistics by group are given in the Appendix.

Table 2. Descriptive statistics for atomic statements, pre and post. Valence is coded 1 for negative and 2 for positive. Experience is coded 1 for yes and 0 for no

Table 3. Themes for atomic statements, pre and post

The theme variable describes the broad topic of the sentence and consists of the following categories: language, children, parenting, work, culture, persecution, and crime. The subject variable describes the subject of the sentence and consists of the following categories: ingroup (Malays), ingroup (Chinese, Indians, other Malaysians), outgroup (Rohingya), and outgroup (non-Rohingya refugees). The context variable describes the speaker’s relationship to the information he/she conveys, and consists of the following categories: happened while volunteering (i.e., an encounter during language training or non-language training, such as over lunch), happened outside volunteering, and third-party mediation (e.g., an anecdote from a friend or information from the press).

We then compared the data across the coders. For 63 sentences, all three coders agreed that the sentence was relevant. However, many sentences were coded as relevant by only one or two coders. We dropped every sentence deemed relevant by one or fewer coders, leaving 83 additional sentences that two coders deemed relevant, while the other did not. In each of these cases, we asked the coder who had deemed it irrelevant to reconsider the sentence and to code the atomic statements if they thought it relevant.Footnote16 In 67 of these cases, the coder changed their assessment to relevant, yielding 130 unanimously relevant sentences. We restricted the analysis to these sentences to avoid attenuation bias from measurement error.
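The relevance filter described above can be sketched in a few lines. This is only an illustration of the rule as stated in the text, not the procedure as the authors implemented it; the function name `triage_sentence`, the vote encoding, and the example votes are assumptions made for the example.

```python
from collections import Counter


def triage_sentence(votes):
    """Classify one sentence from three coders' relevance votes (True = relevant)."""
    n_relevant = sum(votes)
    if n_relevant == 3:
        return "unanimous"    # kept directly
    if n_relevant == 2:
        return "reconsider"   # the dissenting coder is asked to look again
    return "drop"             # deemed relevant by one or fewer coders


# Hypothetical votes for four sentences (not data from the study).
example_votes = [
    (True, True, True),
    (True, True, False),
    (True, False, False),
    (False, False, False),
]
outcomes = Counter(triage_sentence(v) for v in example_votes)

# Counts reported in the text: 63 sentences were unanimous at first, and 67 of
# the 83 two-coder sentences became unanimous after reconsideration.
unanimous_initial = 63
upgraded_after_reconsideration = 67
final_relevant = unanimous_initial + upgraded_after_reconsideration

print(dict(outcomes))
print(final_relevant)
```

The last two lines of arithmetic simply reproduce the count of unanimously relevant sentences reported in the paragraph above.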

Finally, we evaluated the consistency of coding across the coders. Valence was almost perfectly correlated across the coders, but in some cases, coded levels of abstractness differed greatly. Each coder identified relevant atomic statements (or equivalently, relevant verbs and adjectives) independently, so one source of heterogeneity was different assessments of which words were valenced and thus relevant. Additionally, the three categories of verbs (DAV, IAV, and SV) can be difficult to differentiate, and we could not provide the coders with an exhaustive list of verbs that fit in each category, especially since some verbs can fit more than one category depending on their use. Because the different coders identified different atomic statements, we had to compare abstractness at the sentence level, so we used the median level of abstraction across all atomic statements in a sentence. We then calculated the standard deviation between all three coders for every unanimously relevant sentence, and identified 16 sentences with a standard deviation over 1. We asked the coders to reconsider these sentences to see if there were any atomic statements they wished to add or remove, and whether they wanted to change their abstractness coding for any of the atomic statements. We observed that confusion between adjectives and adverbs accounted for several highly inconsistent sentences, so we provided the coders with specific guidance to treat a verb combined with an adverb as a verb rather than an adjective.
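The sentence-level consistency check can be illustrated as follows. This is a minimal sketch under stated assumptions: the text does not say whether a sample or population standard deviation was used, so the sample version (`statistics.stdev`) is assumed here, and the function names, the `"ADJ"` label, and the example codings are hypothetical.

```python
from statistics import median, stdev

# Abstraction scale from the text: DAV = 1, IAV = 2, SV = 3, adjectives = 4.
ABSTRACTION = {"DAV": 1, "IAV": 2, "SV": 3, "ADJ": 4}


def sentence_level(codes):
    """Median abstraction across the atomic statements one coder found in a sentence."""
    return median(ABSTRACTION[c] for c in codes)


def flag_inconsistent(sentences, threshold=1.0):
    """Return IDs of sentences whose between-coder standard deviation exceeds threshold.

    `sentences` maps a sentence ID to a list with one entry per coder; each entry
    is that coder's list of word-category codes for the sentence.
    """
    flagged = []
    for sentence_id, by_coder in sentences.items():
        medians = [sentence_level(codes) for codes in by_coder]
        if stdev(medians) > threshold:  # assumption: sample standard deviation
            flagged.append(sentence_id)
    return flagged


# Hypothetical codings by three coders (not data from the study).
example = {
    "s1": [["DAV", "ADJ"], ["ADJ"], ["ADJ", "SV"]],
    "s2": [["DAV"], ["ADJ"], ["SV"]],
    "s3": [["IAV"], ["IAV"], ["SV"]],
}
print(flag_inconsistent(example))
```

Comparing medians rather than individual codes reflects the constraint noted above: coders did not necessarily identify the same atomic statements, so only sentence-level summaries are comparable.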

Once the coders had completed this task, we collected their responses as our final dataset. We found high consistency in measures of valence and moderate consistency in measures of abstractness. Using the median level of abstraction for each sentence, we found that Spearman’s ρ was between 0.5 and 0.6 in pairwise comparisons between each of the coders, as seen in Table 4. Using the median level of valence, we found Spearman’s ρ values to be above 0.9 for every pair, as seen in Table 5. Due to the lack of consistency in measured abstractness, we expected some degree of attenuation bias due to measurement error.
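For readers who want to reproduce this kind of pairwise comparison, the sketch below computes Spearman’s ρ as the Pearson correlation of average ranks, which handles the ties that are unavoidable on a four-point scale. It uses only the Python standard library; the function names and the example values are assumptions for illustration and are not the study’s data or code.

```python
from itertools import combinations
from math import sqrt


def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; undefined (division by zero) if either input is constant."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def spearman(x, y):
    return pearson(average_ranks(x), average_ranks(y))


def pairwise_spearman(by_coder):
    """Spearman's rho for every pair of coders, keyed by (coder_a, coder_b)."""
    return {
        (a, b): spearman(by_coder[a], by_coder[b])
        for a, b in combinations(sorted(by_coder), 2)
    }


# Hypothetical sentence-level medians for three coders (not data from the study).
medians = {
    "coder1": [1, 2, 3, 4, 2.5, 4],
    "coder2": [1, 3, 3, 4, 2, 4],
    "coder3": [2, 2, 4, 3, 3, 4],
}
for pair, rho in pairwise_spearman(medians).items():
    print(pair, round(rho, 3))
```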

Table 4. Correlations across abstractness measures (Spearman’s ρ)

Table 5. Correlations across valence measures (Spearman’s ρ)

Analysis and regression model

Before discussing our methodology, it is useful to develop an idealized benchmark to answer our research question. Ideally, we would have evaluated the LIB by presenting a fixed set of positive and negative valence prompts involving the Rohingya to the participants. These prompts could have been short videos or illustrations showing activities such as gift giving or hostile behavior. The participants would have given a written description of the prompt, and their choice of words would have been assessed using the scale described earlier. LIB theory suggests that events that conform to existing stereotypes are typically described more abstractly, while events that contradict stereotypes are described more concretely. Thus, the more positive the stereotype, the more abstract the language used to describe positive events and the more concrete the language used to describe negative events. Stereotype valence, or bias, would therefore be proxied by the difference in abstractness between positive and negative valence prompts. Additionally, volunteers would have been randomly assigned to two groups; one set of volunteers would have been evaluated before treatment, while the other would have been assessed after treatment to preclude any priming effect from the pretreatment assessment. The change in bias would have been measured by the difference in measured bias pre and post (i.e., the difference-in-difference of valence and treatment status).
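The benchmark measure in the preceding paragraph can be written out as a small calculation. This is a sketch only: the record layout, the function names, and the example values are hypothetical, and bias is taken here as positive-statement abstraction minus negative-statement abstraction, so a regression that defines the contrast the other way round would report the same quantity with the opposite sign.

```python
from statistics import mean


def cell_mean(rows, post, positive):
    """Mean abstraction for one cell of the 2 x 2 (treatment status x valence) table."""
    return mean(
        r["abstraction"]
        for r in rows
        if r["post"] == post and r["positive"] == positive
    )


def bias(rows, post):
    """Proxy for stereotype valence: abstraction of positive minus negative statements."""
    return cell_mean(rows, post, True) - cell_mean(rows, post, False)


def change_in_bias(rows):
    """Difference-in-difference: posttreatment bias minus pretreatment bias."""
    return bias(rows, True) - bias(rows, False)


# Hypothetical atomic statements on the 1-4 abstraction scale (not study data).
rows = [
    {"post": False, "positive": True, "abstraction": 2},
    {"post": False, "positive": True, "abstraction": 2},
    {"post": False, "positive": False, "abstraction": 3},
    {"post": False, "positive": False, "abstraction": 3},
    {"post": True, "positive": True, "abstraction": 3},
    {"post": True, "positive": True, "abstraction": 4},
    {"post": True, "positive": False, "abstraction": 2},
    {"post": True, "positive": False, "abstraction": 2},
]
print(bias(rows, False), bias(rows, True), change_in_bias(rows))
```

In this made-up example, positive statements become more abstract and negative statements more concrete after treatment, so the measured bias moves in the positive direction.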

We collected our data incidentally – not with the intention of running a randomized controlled trial on volunteer attitudes. However, controlling for the shortcomings of our dataset relative to this benchmark motivated the major methodological choices that we made. As described in the previous section, our textual data came from focus groups with the volunteers before and after treatment, where the volunteers responded to several open-ended questions about the Rohingya. Therefore, relative to the ideal above, we did not experimentally control the valence or abstractness of the ‘prompts’ (i.e., topics) that the participants responded to, but relied on them to facilitate a discussion of positive and negative information and observations. This threatened the validity of our study by potentially introducing an imbalance between the distribution of topics in the pretreatment session and the distribution of topics in the posttreatment session. For example, the participants may have been more likely to discuss Rohingya children after babysitting them, and, relative to other topics, this topic may have been intrinsically more abstract since relations between children and parents are often seen as durable over time and more positive because children are valued by most people. This correlation between abstractness and positivity may have caused us to identify a spurious increase in stereotype valence, solely because children were discussed more after the session.Footnote17 If higher valence topics were discussed posttreatment than pretreatment, this would simply change the distribution of positive and negative observations; if higher abstraction topics were discussed posttreatment, this would be controlled for by distinguishing between the positive and negative observations.

Therefore, we added topic fixed effects to the model, which interacted with valence. For example, we included a dummy for discussions of Rohingya children in both negative and positive contexts. Finally, as with the theme fixed effects, we intended to include context fixed effects, describing whether the event under discussion was gleaned from a third party (e.g., a news report), from this volunteering experience, from another volunteering experience, or from some other context. Unfortunately, only the first two categories had significant representation in our sample, and were overwhelmingly collinear with treatment status; before treatment, the participants talked about the news, and after treatment, they talked about their experiences. Thus, we dropped this control from the analysis.

We were not able to exclude a possible priming effect by asking the same set of questions before and after treatment, so we proceeded with that proviso. Our initial research design focused not only on LIBs but also on NBs and SEBs for robustness. Coders identified instances of each form of bias for each sentence, and our intent was to use the same comparison of positive and negative valence statements between pre- and posttreatment. While we collected these data, we only identified NB in 8.1% of relevant atomic statements across all three coders and SEB in 25.8% of relevant atomic statements. Because of this limited variation in a binary dependent variable, combined with the small sample size, the 2 × 2 matrix of valence and treatment status cases to be estimated, and the fact that these forms of bias are based on sentence structure rather than individual words and thus generated only one observation per sentence (rather than 1–5), we did not have sufficient power to identify changes in bias along these dimensions. We ran baseline versions of the model for these dependent variables, but did not focus on them due to their statistical insignificance.

Our baseline model was then

$$A_i = \beta_0 + \beta_1\,\mathrm{Post}_i + \beta_2\,\mathrm{Pos}_i + \beta_3\,(\mathrm{Post}_i \times \mathrm{Pos}_i) + \sum_g \gamma_g\,G_{gi} + \sum_t \delta_t\,T_{ti} + \varepsilon_i$$

where $A_i$ is the abstractness of atomic statement $i$, coded from 1 to 4 for DAV, IAV, SV, and adjectives, respectively. $\mathrm{Post}_i$ is a dummy variable for posttreatment status, $\mathrm{Pos}_i$ is a dummy for positive valence, $\mathrm{Post}_i \times \mathrm{Pos}_i$ is the interaction term for $\mathrm{Post}_i$ and $\mathrm{Pos}_i$, $G_{gi}$ are dummy variables for group IDsFootnote18 that interacted with valence, and $T_{ti}$ are dummies for themes that interacted with valence. We clustered standard errors by group wherever possibleFootnote19 to account for potential dependence caused by the group nature of the discussion. Where it was not possible, we used robust standard errors. In several cases, we reported unadjusted standard errors to ensure F-statistics could be reported for all specifications.Footnote20 We report the unclustered results with the proviso that they likely underestimate their respective p-values. We estimated this model, as well as permutations on the set of fixed effects. We focused on the set of sentences that were deemed relevant by all coders to minimize attenuation bias due to measurement error. We also excluded volunteers who had previous volunteering experience with refugees, as the treatment effect would likely have been attenuated for them. For robustness, we estimated a model including experienced volunteers and estimated an ordered logit model, since the OLS treatment of abstractness as a cardinal measure is not formally correct. The ordered logit results were extremely similar across model specifications, so we focused on OLS for ease of interpretation. In every specification, we report unstandardized coefficients.

We estimated this model separately for all three coders, as well as for the aggregate of all three. For the aggregate dataset, we matched atomic statements across coders and took the median of abstractness and the mode of valence and theme, breaking ties randomly when they occurred.
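The aggregation rule for matched atomic statements can be sketched as below. This is an illustration under assumptions: the record layout and function names are hypothetical, the random generator is seeded only to make the example reproducible, and the source does not say how ties were randomized in practice.

```python
import random
from statistics import median, multimode


def mode_with_random_tie(values, rng):
    """Most common value; if several values tie, pick one of them at random."""
    return rng.choice(sorted(multimode(values)))


def aggregate_statement(codings, rng):
    """Combine the coders' codings of one matched atomic statement.

    Abstractness is aggregated by the median; valence and theme by the mode.
    """
    return {
        "abstraction": median(c["abstraction"] for c in codings),
        "valence": mode_with_random_tie([c["valence"] for c in codings], rng),
        "theme": mode_with_random_tie([c["theme"] for c in codings], rng),
    }


rng = random.Random(0)  # seeded for a reproducible illustration

# Hypothetical codings of one atomic statement by three coders (not study data).
codings = [
    {"abstraction": 4, "valence": 2, "theme": "work"},
    {"abstraction": 3, "valence": 2, "theme": "culture"},
    {"abstraction": 1, "valence": 1, "theme": "work"},
]
print(aggregate_statement(codings, rng))
```

With three coders the median is always one of the coded values, so random tie-breaking only matters for the mode, for instance when all three coders assign different themes.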

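We also estimated the direct effect of the treatment on valence using the specification

$$\mathrm{Pos}_i = \alpha_0 + \alpha_1\,\mathrm{Post}_i + \sum_g \lambda_g\,G_{gi} + \sum_t \mu_t\,T_{ti} + u_i$$

where $\mathrm{Pos}_i$ is the dummy for positive valence, $\mathrm{Post}_i$ is the dummy for posttreatment status, and the group and theme fixed effects ($G_{gi}$ and $T_{ti}$) did not interact with valence.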

Results

Across a variety of specifications and coders, we found statistically significant evidence of LIB; the interaction term for posttreatment and positive valence was uniformly negative. In most of the core specifications, the effect was significant at the α = 0.05 level across coders (–A9), and in only one of twelve specifications was it insignificant at the α = 0.1 level. Further, this term was significant at the α = 0.05 level across all specifications in the aggregate sample. This term can be interpreted as follows. If the pre/posttreatment effect on abstraction is measured separately for positive and negative valence statements, the interaction term is the negative treatment effect minus the positive treatment effect. In other words, this coefficient gauges the increase in abstraction due to treatment for negative valence statements relative to the increase in abstraction for positive valence statements. A negative coefficient means that abstraction fell for negative statements relative to positive statements after treatment. In fact, interpretation of the regression coefficients indicates that, for most specifications, the abstraction of positive valence statements rises with exposure to refugees, while the abstraction of negative statements falls. In LIB theory, this corresponds to an increase in stereotype valence: A more positive attitude toward the Rohingya leads positive statements to be framed as more abstract, general, and permanent, while negative statements are framed as concrete and implicitly atypical.

The magnitude of this effect is notable. Across the wide range of specifications and coders, the coefficient estimate ranged from −0.3 to −2.2, but the coefficients derived from regressing on the aggregated-across-coders dataset yielded a narrower range of −1.0 to −1.5. For reference, the largest possible effect (possible only if the volunteers used only DAV pretreatment and adjectives posttreatment for positive valence statements, and the opposite for negative valence statements) would have a coefficient of −6. Realistically, one would not exclusively use adjectives, no matter how abstractly one views a statement or claim of a given valence, nor would one exclusively use descriptive action verbs. In some cases, a speaker has great discretion to choose between abstract and concrete framings of a statement, but in other cases, it may be challenging or awkward to construct sentences with certain kinds of verbs or adjectives. We therefore conservatively hypothesized a maximum realistic effect of −4. As such, our estimated change in bias was 25–40% of the maximum realistic change, which is an enormous effect after just a few hours of exposure to a group. We should not overinterpret this measure, as OLS is not formally valid given the ordinal nature of our abstractness variable, and we were not able to cluster standard errors for every specification. However, this result is highly suggestive of the magnitude of the effect. This is even more notable due to the likely attenuation bias: The significant variability in sentence-level abstractness measured between coders signals that abstractness is gauged with significant error, so these coefficients may underestimate the true effect.
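The benchmark figures in this paragraph follow from simple arithmetic on the 1–4 abstraction scale. The sketch below works through them; it reads the interaction coefficient as the negative-statement change minus the positive-statement change, following the interpretation given in the Results, and the variable names are illustrative only.

```python
# Abstraction scale from the text: DAV = 1, IAV = 2, SV = 3, adjectives = 4.
SCALE = {"DAV": 1, "IAV": 2, "SV": 3, "ADJ": 4}

# Extreme case described in the text: positive statements move from DAV
# (pretreatment) to adjectives (posttreatment); negative statements do the opposite.
positive_change = SCALE["ADJ"] - SCALE["DAV"]   # +3
negative_change = SCALE["DAV"] - SCALE["ADJ"]   # -3
largest_possible = negative_change - positive_change

# The authors' hypothesized maximum realistic effect and the range of estimates
# reported for the aggregated-across-coders dataset.
realistic_max = -4
aggregate_estimates = (-1.0, -1.5)
shares = [estimate / realistic_max for estimate in aggregate_estimates]

print(largest_possible)
print(shares)
```

The resulting shares of 0.25 and 0.375 are broadly in line with the 25–40% range quoted above.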

We focused on an OLS specification for simplicity, but the results were largely equivalent using ordered logit. In the specification without fixed effects, the interaction coefficient was significant for all three coders; other specifications performed similarly. Adding volunteers with previous experience yielded largely similar results but with slightly lower effect sizes and t-statistics, as one would expect given that those with previous experience are less likely to have a marked change in perception due to treatment. The alternative measures of bias that we studied (stereotype explanatory bias and negation bias) showed the predicted signs, but were generally not significant across specifications. This is likely because we measured them at a lower level of granularity (sentence vs. atomic statement for LIB) and because they are binary variables with few positive observations.

We did not focus on the direct measure of bias (valence), as a statistically significant, positive effect would not credibly demonstrate a change in attitudes. It could just as easily indicate an attempt to please the surveyor, or the volunteer’s desire to appear enlightened by the experience. However, we estimated a simple model predicting valence based on treatment status. The effect size was substantial: an increase in the proportion of positive statements of between 10% and 23%, depending on the specification. Its magnitude was limited, however, by the fact that the participants were largely positive even before the treatment; while we found large changes in the relative abstractness of positive and negative statements, negative statements were relatively rare. This is likely due to volunteers having felt pressure to speak positively about the Rohingya given the volunteering context.

Though we did not conduct a qualitative analysis of our dataset, it is important to note that the data we collected were very rich, and could potentially be used for a different type of content or thematic analysis, in the style of Braun and Clarke (Citation2012). To illustrate this, we present a small number of examples from the dataset below. The following statements were made before the volunteering session (i.e., pre-intervention):

‘Most of the input or the perception that I got is that most of them (the Rohingya refugees) are quite uneducated, and some say that they are lazy because the ladies are just staying at home, they are not used to going to work.’

‘In my opinion, they have a lack of knowledge, a lack of skills … but I believe that they have a lot of spirit and this will encourage us as Malaysians to work hard because we will realize that we should be grateful with what we have, because we can compare with their country (that) is lacking and we can take them as a comparison to be grateful.’

Finally, the following is a selection of statements that were made after the volunteering session (i.e., post-intervention):

‘I think that they can actually be integrated well into the society if, like today’s session, they looked like they are very keen to being our friends and they are actually more receptive than what we thought … they actually would like to be part of the society.’

‘It is also surprising that the females are here because I thought the women are not allowed to go outside the house because they don’t see education as important … for females especially and they are not allowed to be seen by others.’

Conclusion

We studied the effect of volunteering with Rohingya refugees on local Malaysians, and using textual analysis of focus groups conducted before and after the volunteering session, we found large shifts toward positive attitudes, as measured by linguistic intergroup bias. We also observed a large increase in the proportion of positive comments about the Rohingya relative to negative comments, although this change was not statistically significant. This is striking since these volunteers spent only a few hours with refugees. We hypothesized that the large effect can be attributed to the volunteers’ lack of knowledge of the Rohingya. Most had no significant knowledge of them before the program and joined because they were required by their university to complete a volunteer activity. This illustrates how a small, short, cost-effective intervention exposing local citizens to refugees could potentially generate major changes in attitudes; this is of great relevance to policymakers and aid groups involved in refugee issues, especially in a country such as Malaysia, where refugees have not yet become a divisive political issue.

Moving forward, the next step in this research agenda is to study this phenomenon on a larger scale. While the effect sizes were large enough to identify changes in bias even with the small sample size available, the precision of our estimates was poor, and the shortcomings of our study relative to an ideal experimental design (outlined in the methodology section) should be addressed more robustly. Further, we were only able to gauge changes in attitude immediately after treatment. Ideally, we would follow up several months later to measure the persistence of these changes relative to an untreated control group, but without a control group or contact information for the participants, this was impossible for the current study. Finally, it would be useful to characterize the differential effect of different types of interactions with refugees. In the language training program that we investigated, the volunteers spent some time babysitting the children while their parents studied, and sometimes acted as practice partners in the Malay language class. The former activity places the volunteer in the role of an assistant, while the latter positions him/her as an instructor and expert. Activities that place volunteers in positions of authority over refugees (a common feature of many volunteer programs) may reinforce feelings of superiority, thereby undermining positive shifts in attitude, while more supportive, subordinate activities may lead to greater respect for refugees. Unfortunately, our dataset was too small to answer this question, and we did not create treatment arms for different types of activities. To our knowledge, there is no experimental evidence on this question, but it is of vital importance in deciding how to structure this sort of intervention.

Acknowledgements

We would like to thank the Asia School of Business, the World Bank Research Group in Kuala Lumpur, the United Nations High Commissioner for Refugees (UNHCR), and our research assistants (Dania Yahya Hamed Ibrahim Ahmed Alshershaby, Amelia Foong, Fiona Sloan, Khadijah Shamsul, Yizhen Fung, and Chuah Hui Yin) for their help and support. The Malay language training program underlying the study published in this paper was funded by the UNHCR office in Malaysia in 2018. All mistakes are our own.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes

1 The UN Refugee Agency used to be known as the United Nations High Commissioner for Refugees (UNHCR), hence the abbreviation.

2 Retrieved from https://www.unhcr.org/figures-at-a-glance.html. Accessed on June 8th, 2021.

3 Retrieved from https://www.unhcr.org/what-is-a-refugee.html. Accessed on June 8th, 2021.

4 Retrieved from https://www.unhcr.org/protect/PROTECTION/3b73b0d63.pdf. Accessed on June 8th, 2021.

5 Resettlement Data from UNHCR: https://www.unhcr.org/resettlement-data.html. Accessed on June 8th, 2021.

6 Data from the U.S. Department of State (https://www.wrapsnet.org/admissions-and-arrivals/) and the Pew Research Center (https://pewrsr.ch/2s7TFHC).

7 Data from the Pew Research Center, retrieved from https://www.pewresearch.org/fact-tank/2019/10/07/key-facts-about-refugees-to-the-u-s/. Accessed on August 18th, 2020.

8 Retrieved from https://www.unhcr.org/figures-at-a-glance.html. Accessed on August 18th, 2020.

9 Retrieved from https://www.unhcr.org/en-my/figures-at-a-glance-in-Malaysia.html. Accessed on August 18th, 2020.

11 That said, our study still suffers from the typical selection issue of having an educated sample, since the participants were sourced from a university.

12 She is Egyptian.

13 Volunteers were given a numerical ID and instructed to identify themselves when speaking to facilitate the coding.

14 All coders were trained in how to code the transcribed texts, and were asked to read and discuss two journal articles on LIB (Dragojevic et al., Citation2017; Maass, Citation1999) to learn the relevant definitions and coding standards.

15 We only included observations focusing on the Rohingya in the final analysis.

16 We asked the coders not to confer with one another about specific sentences throughout the coding process in order to maintain complete independence.

17 Note that, because we differentiated valence, it was only the correlation between valence and abstractness that posed a problem to identification.

18 We collected data on the volunteer ID of each sentence, but when this set of dummies interacted with valence, a very large set of controls emerged, most of which had no associated observations. Hence, we controlled for the less granular group ID.

19 The large number of dummies in many specifications makes accurately calculating the covariance matrix for clustered errors impossible. We identified such pathological cases with the reg_sandwich package (Tyszler et al., Citation2017). For cases where clustering could be addressed, we utilized the boottest package (Roodman et al., Citation2019) to generate wild bootstrap estimates of the error distributions and p-values.

20 The large number of dummies in a few specifications also undermines calculation of the covariance matrix for robust standard errors. When possible, we dropped dummies with singleton observations to resolve this, but in several cases there were a large number of problematic dummies.

References

- Adida, C. L., Lo, A., & Platas, M. R. (2018). Perspective taking can promote short-term inclusionary behavior toward Syrian refugees. Proceedings of the National Academy of Sciences, 115(38), 9521–9526. https://doi.org/https://doi.org/10.1073/pnas.1804002115

- Alba, R., & Nee, V. (1997). Rethinking assimilation theory for a new era of immigration. The International Migration Review, [Center for Migration Studies of New York, Inc., Wiley], 31(4), 826–874. https://doi.org/https://doi.org/10.2307/2547416.

- Aminnuddin, N. A., & Wakefield, J. (2020). Ethnic differences and predictors of racial and religious discriminations among Malaysian Malays and Chinese. Cogent Psychology, 7(1), 1766737. https://doi.org/https://doi.org/10.1080/23311908.2020.1766737

- Anderson, J. R. (2019). The moderating role of socially desirable responding in implicit–explicit attitudes toward asylum seekers. International Journal of Psychology, 54(1), 1–7. https://doi.org/https://doi.org/10.1002/ijop.12439

- Axt, J. R., & Lai, C. K. (2019). Reducing discrimination: A bias versus noise perspective. Journal of Personality and Social Psychology, 117(1), 26. https://doi.org/https://doi.org/10.1037/pspa0000153

- Ayres, I., & Siegelman, P. (1995, June). Race and gender discrimination in bargaining for a new car. The American Economic Review, 85(3), 304–321. https://www.jstor.org/stable/2118176

- Barcus, H. R., Halfacree, K., & Nepp, A. (2017). An introduction to population geographies: Lives across space. Routledge.

- Bayer, A., Bhanot, S. P., & Lozano, F. (2019). Does simple information provision lead to more diverse classrooms? Evidence from a field experiment on undergraduate economics. In AEA Papers and Proceedings (Vol.109, pp. 110–114). American Economic Association.

- Bayer, A., & Rouse, C. E. (2016). Diversity in the economics profession: A new attack on an old problem. Journal of Economic Perspectives, 30(4), 221–242. https://doi.org/https://doi.org/10.1257/jep.30.4.221

- Behncke, S., Frölich, M., & Lechner, M. (2010). A caseworker like me – Does the similarity between the unemployed and their caseworkers increase job placements?. The Economic Journal, 120(549), 1430–1459. https://doi.org/https://doi.org/10.1111/j.1468-0297.2010.02382.x

- Bertrand, M., & Mullainathan, S. (2004). Are Emily and Greg more employable than Lakisha and Jamal? A field experiment on labor market discrimination. American Economic Review, 94(4), 991–1013. https://doi.org/https://doi.org/10.1257/0002828042002561

- Bettinger, E. P., & Long, B. T. (2005). Do faculty serve as role models? The impact of instructor gender on female students. American Economic Review, 95(2), 152–157. https://doi.org/https://doi.org/10.1257/000282805774670149

- Beukeboom, C., Finkenauer, C., & Wigboldus, D. (2010). The negation bias: When negations signal stereotypic expectancies. Journal of Personality and Social Psychology, 99(6), 978–992. https://doi.org/https://doi.org/10.1037/a0020861

- Bian, L., Leslie, S. J., & Cimpian, A. (2017). Gender stereotypes about intellectual ability emerge early and influence children’s interests. Science, 355(6323), 389–391. https://doi.org/https://doi.org/10.1126/science.aah6524

- Braun, V., & Clarke, V. (2012). Thematic analysis. In H. Cooper, P. M. Camic, D. L. Long, A. T. Panter, D. Rindskopf, & K. J. Sher (Eds.), APA handbook of research methods in psychology, vol. 2: Research designs: Quantitative, qualitative, neuropsychological, and biological (pp. 57–71). Washington, DC: American Psychological Association.

- Brewer, M. B. (1996). When stereotypes lead to stereotyping: The use of stereotypes in person perception. In C. N. Macrae, C. Stangor, & M. Hewstone (Eds.), Stereotypes and stereotyping (pp. 254–275). The Guilford Press.

- Bromme, R., & Beelmann, A. (2018). Transfer entails communication: The public understanding of (social) science as a stage and a play for implementing evidence-based prevention knowledge and programs. Prevention Science, 19(3), 347–357. https://doi.org/10.1007/s11121-016-0686-8

- Broockman, D., & Kalla, J. (2016). Durably reducing transphobia: A field experiment on door-to-door canvassing. Science, 352(6282), 220–224. https://doi.org/10.1126/science.aad9713

- Bruneau, E., Kteily, N., & Laustsen, L. (2018). The unique effects of blatant dehumanization on attitudes and behavior towards Muslim refugees during the European ‘refugee crisis’ across four countries. European Journal of Social Psychology, 48(5), 645–662. https://doi.org/10.1002/ejsp.2357

- Bubb, R., Kremer, M., & Levine, D. I. (2011). The economics of international refugee law. The Journal of Legal Studies, 40(2), 367–404. https://doi.org/10.1086/661185

- Buehler, M., Fabbe, K. E., & Han, K. J. (2020). Community-level postmaterialism and anti-migrant attitudes: An original survey on opposition to Sub-Saharan African Migrants in the Middle East. International Studies Quarterly, 64(3), 669–683. https://doi.org/10.1093/isq/sqaa029

- Buscher, D., & Heller, L. (2010). Desperate lives: Urban refugee women in Malaysia and Egypt. Forced Migration Review, 34, 20.

- Carlsson, M., & Rooth, D.-O. (2007). Evidence of ethnic discrimination in the Swedish labor market using experimental data. Labour Economics, 14(4), 716–729. https://doi.org/10.1016/j.labeco.2007.05.001

- Chiswick, B. R., & Miller, P. W. (2001). A model of destination-language acquisition: Application to male immigrants in Canada. Demography, 38(3), 391–409. https://doi.org/10.2307/3088354

- Clarke, N., Pechey, E., Mantzari, E., Blackwell, A. K. M., De-Loyde, K., Morris, R. W., Munafò, M. R., Marteau, T. M., & Hollands, G. J. (2020). Impact of health warning labels on snack selection: An online experimental study. Appetite, 154, 104744. https://doi.org/10.1016/j.appet.2020.104744

- Cowling, M. M., Anderson, J. R., & Ferguson, R. (2019). Prejudice-relevant correlates of attitudes towards refugees: A meta-analysis. Journal of Refugee Studies, 32(3), 502–524. https://doi.org/10.1093/jrs/fey062

- Cowling, M. M., & Anderson, J. R. (2019). The role of Christianity and Islam in explaining prejudice against asylum seekers: Evidence from Malaysia. The International Journal for the Psychology of Religion, 29(2), 108–127. https://doi.org/10.1080/10508619.2019.1567242

- Daumeyer, N. M., Onyeador, I. N., Brown, X., & Richeson, J. A. (2019). Consequences of attributing discrimination to implicit vs. explicit bias. Journal of Experimental Social Psychology, 84, 103812. https://doi.org/10.1016/j.jesp.2019.04.010

- Deslandes, C., & Anderson, J. R. (2019). Religion and prejudice toward immigrants and refugees: A meta-analytic review. The International Journal for the Psychology of Religion, 29(2), 128–145. https://doi.org/10.1080/10508619.2019.1570814

- Dragojevic, M., Sink, A., & Mastro, D. (2017). Evidence of linguistic intergroup bias in U.S. print news coverage of immigration. Journal of Language and Social Psychology, 36(4), 462–472. https://doi.org/10.1177/0261927X16666884

- Dunstan, R. (1998). United Kingdom: Breaches of Article 31 of the 1951 Refugee Convention. International Journal of Refugee Law, 10(1–2), 205. https://doi.org/10.1093/ijrl/10.1-2.205

- Eccles, J. S., & Barber, B. L. (1999). Student council, volunteering, basketball, or marching band: What kind of extracurricular involvement matters? Journal of Adolescent Research, 14(1), 10–43. https://doi.org/10.1177/0743558499141003

- Espinosa, R., & Stoop, J. (2021). Do people really want to be informed? Ex-ante evaluations of information-campaign effectiveness. Experimental Economics, 24, 1–25. https://doi.org/10.1007/s10683-020-09692-6

- Facchini, G., Margalit, Y., & Nakata, H. (2016). Countering public opposition to immigration: The impact of information campaigns. European Economic Review, 141, 103959.

- Fairlie, R., Millhauser, G., Oliver, D., Roland, R., & Origo, F. M. (2020). The effects of male peers on the educational outcomes of female college students in STEM: Experimental evidence from partnerships in Chemistry courses. PLOS One, 15(7), e0235383. https://doi.org/10.1371/journal.pone.0235383

- Fazio, R. H., Jackson, J. R., Dunton, B. C., & Williams, C. J. (1995). Variability in automatic activation as an unobtrusive measure of racial attitudes: A bona fide pipeline? Journal of Personality and Social Psychology, 69(6), 1013–1027. https://doi.org/10.1037/0022-3514.69.6.1013

- Fraser, N. A., & Murakami, G. (2021). The role of humanitarianism in shaping public attitudes toward refugees. Political Psychology. https://doi.org/10.1111/pops.12751

- Getmansky, A., Sınmazdemir, T., & Zeitzoff, T. (2018). Refugees, xenophobia, and domestic conflict: Evidence from a survey experiment in Turkey. Journal of Peace Research, 55(4), 491–507. https://doi.org/10.1177/0022343317748719

- Goldin, C., & Rouse, C. (2000). Orchestrating impartiality: The impact of ‘blind’ auditions on female musicians. American Economic Review, 90(4), 715–741. https://doi.org/10.1257/aer.90.4.715

- Hastie, R. (1984). Causes and effects of causal attribution. Journal of Personality and Social Psychology, 46(1), 44–56. https://doi.org/10.1037/0022-3514.46.1.44

- Hathaway, J. C. (1988). Postscript – selective concern: An overview of refugee law in Canada. McGill Law Journal, 34, 354. https://lawjournal.mcgill.ca/wp-content/uploads/pdf/2807646-Hathaway.pdf

- Hoffmann, F., & Oreopoulos, P. (2009). A professor like me: The influence of instructor gender on college achievement. Journal of Human Resources, 44(2), 479–494. https://doi.org/10.1353/jhr.2009.0024

- Hoffstaedter, G. (2017). Refugees, Islam, and the state: The role of religion in providing sanctuary in Malaysia. Journal of Immigrant & Refugee Studies, 15(3), 287–304. https://doi.org/10.1080/15562948.2017.1302033

- Kaas, L., & Manger, C. (2012). Ethnic discrimination in Germany’s labour market: A field experiment. German Economic Review, 13(1), 1–20. https://doi.org/10.1111/j.1468-0475.2011.00538.x

- Karpinski, A., & Von Hippel, W. (1996). The role of the linguistic intergroup bias in expectancy maintenance. Social Cognition, 14(2), 141–163. https://doi.org/10.1521/soco.1996.14.2.141

- Koc, Y., & Anderson, J. R. (2018). Social distance toward Syrian refugees: The role of intergroup anxiety in facilitating positive relations. Journal of Social Issues, 74(4), 790–811. https://doi.org/10.1111/josi.12299

- Koçak, O. (2021). The effects of religiosity and socioeconomic status on social distance towards refugees and the serial mediating role of satisfaction with life and perceived threat. Religions, 12(9), 737. https://doi.org/10.3390/rel12090737

- Lazarev, E., & Sharma, K. (2017). Brother or burden: An experiment on reducing prejudice toward Syrian refugees in Turkey. Political Science Research and Methods, 5(2), 201–219. https://doi.org/10.1017/psrm.2015.57

- Lego, J. (2018). Making refugees (dis)appear: Identifying refugees and asylum seekers in Thailand and Malaysia. Austrian Journal of South-East Asian Studies, 11(2), 183–198. https://doi.org/10.14764/10.ASEAS-0002

- List, J. A. (2004). The nature and extent of discrimination in the marketplace: Evidence from the field. The Quarterly Journal of Economics, 119(1), 49–89. https://doi.org/10.1162/003355304772839524

- Maass, A. (1999). Linguistic intergroup bias: Stereotype perpetuation through language. Advances in Experimental Social Psychology, 31, 79–121. https://doi.org/10.1016/S0065-2601(08)60272-5

- Meier, S., & Stutzer, A. (2008). Is volunteering rewarding in itself? Economica, 75(297), 39–59. https://doi.org/10.1111/j.1468-0335.2007.00597.x

- Missbach, A., & Stange, G. (2021). Muslim solidarity and the lack of effective protection for Rohingya refugees in Southeast Asia. Social Sciences, 10(5), 166. https://doi.org/10.3390/socsci10050166

- Morrow-Howell, N., Hinterlong, J., Rozario, P. A., & Tang, F. (2003). Effects of volunteering on the well-being of older adults. The Journals of Gerontology. Series B, Psychological Sciences and Social Sciences, 58(3), S137–S145. https://doi.org/10.1093/geronb/58.3.S137

- Moss-Racusin, C. A., Dovidio, J. F., Brescoll, V. L., Graham, M. J., & Handelsman, J. (2012). Science faculty’s subtle gender biases favor male students. Proceedings of the National Academy of Sciences, 109(41), 16474–16479. https://doi.org/10.1073/pnas.1211286109

- Neumark, D., Bank, R. J., & Van Nort, K. D. (1996). Sex discrimination in restaurant hiring: An audit study. The Quarterly Journal of Economics, 111(3), 915–941. https://doi.org/10.2307/2946676

- Nungsari, M., Flanders, S., & Chuah, H. Y. (2020a). Poverty and precarious employment: The case of Rohingya refugee construction workers in Peninsular Malaysia. Humanities and Social Sciences Communications, 7(1), 1–11. https://doi.org/10.1057/s41599-020-00606-8

- Nungsari, M., Flanders, S., & Chuah, H. Y. (2020b). Refugee issues in Southeast Asia: Narrowing the gaps between theory, policy, and reality. Refugee Review (Emerging Issues in Forced Migration), 4, 129–246. https://www.researchgate.net/publication/342232338_Refugee_Issues_in_Southeast_Asia_Narrowing_the_Gaps_between_Theory_Policy_and_Reality/stats

- Onraet, E., Van Hiel, A., Valcke, B., & Assche, J. V. (2021). Reactions towards asylum seekers in the Netherlands: Associations with right-wing ideological attitudes, threat and perceptions of asylum seekers as legitimate and economic. Journal of Refugee Studies, 34(2), 1695–1712. https://doi.org/10.1093/jrs/fez103

- Pager, D., Bonikowski, B., & Western, B. (2009). Discrimination in a low-wage labor market: A field experiment. American Sociological Review, 74(5), 777–799. https://doi.org/10.1177/000312240907400505

- Pager, D., & Quillian, L. (2005). Walking the talk? What employers say versus what they do. American Sociological Review, 70(3), 355–380. https://doi.org/10.1177/000312240507000301

- Paluck, E. L., & Green, D. P. (2009). Prejudice reduction: What works? A review and assessment of research and practice. Annual Review of Psychology, 60(1), 339–367. https://doi.org/10.1146/annurev.psych.60.110707.163607

- Paluck, E. L. (2009). Reducing intergroup prejudice and conflict using the media: A field experiment in Rwanda. Journal of Personality and Social Psychology, 96(3), 574. https://doi.org/10.1037/a0011989

- Pettigrew, T. F., & Tropp, L. R. (2006). A meta-analytic test of intergroup contact theory. Journal of Personality and Social Psychology, 90(5), 751. https://doi.org/10.1037/0022-3514.90.5.751

- Pine, B. A., & Drachman, D. (2005). Effective child welfare practice with immigrant and refugee children and their families. Child Welfare, 84, 5. https://pubmed.ncbi.nlm.nih.gov/16435650/

- Pons, V. (2018). Will a five-minute discussion change your mind? A countrywide experiment on voter choice in France. American Economic Review, 108(6), 1322–1363. https://doi.org/10.1257/aer.20160524

- Porter, C., & Serra, D. (2020). Gender differences in the choice of major: The importance of female role models. American Economic Journal: Applied Economics, 12(3), 226–254. https://doi.org/10.1257/app.20180426

- Rashid, S. R. (2020). Finding a durable solution to Bangladesh’s Rohingya refugee problem: Policies, prospects and politics. Asian Journal of Comparative Politics, 5(2), 174–189. https://doi.org/10.1177/2057891119883700

- Rask, K. N., & Bailey, E. M. (2002). Are faculty role models? Evidence from major choice in an undergraduate institution. The Journal of Economic Education, 33(2), 99–124. https://doi.org/10.1080/00220480209596461

- Remennick, L. (2003). Language acquisition as the main vehicle of social integration: Russian immigrants of the 1990s in Israel. International Journal of the Sociology of Language, 2003(164), 83–105. https://doi.org/10.1515/ijsl.2003.057

- Reynolds, J. P., Stautz, K., Pilling, M., van der Linden, S., & Marteau, T. M. (2020). Communicating the effectiveness and ineffectiveness of government policies and their impact on public support: A systematic review with meta-analysis. Royal Society Open Science, 7(1), 190522. https://doi.org/10.1098/rsos.190522

- Riach, P. A., & Rich, J. (2002). Field experiments of discrimination in the market place. The Economic Journal, 112(483), F480–F518. https://doi.org/10.1111/1468-0297.00080

- Roodman, D., Nielsen, M. Ø., MacKinnon, J. G., & Webb, M. D. (2019). Fast and wild: Bootstrap inference in Stata using boottest. The Stata Journal, 19(1), 4–60. https://doi.org/10.1177/1536867X19830877

- Salim, Y. (2019). Livelihood sustainability among Rohingya refugees: A case study in Taman Senangin, Seberang Perai, Penang. Asia Proceedings of Social Sciences, 4(2), 46–48. https://doi.org/10.31580/apss.v4i2.719

- Sekaquaptewa, D., Espinoza, P., Thompson, M., Vargas, P., & Von Hippel, W. (2003). Stereotypic explanatory bias: Implicit stereotyping as a predictor of discrimination. Journal of Experimental Social Psychology, 39(1), 75–82. https://doi.org/10.1016/S0022-1031(02)00512-7

- Smith, E. M., & Minescu, A. (2021). Comparing normative influence from multiple groups: Beyond family, religious ingroup norms predict children’s prejudice towards refugees. International Journal of Intercultural Relations, 81, 54–67. https://doi.org/10.1016/j.ijintrel.2020.12.010

- Sonnert, G., Fox, M. F., & Adkins, K. (2007). Undergraduate women in science and engineering: Effects of faculty, fields, and institutions over time. Social Science Quarterly, 88(5), 1333–1356. https://doi.org/10.1111/j.1540-6237.2007.00505.x

- Stange, G., Kourek, M., Sakdapolrak, P., & Sasiwongsaroj, K. (2019). Forced migration in Southeast Asia. Austrian Journal of South-East Asian Studies, 12(2), 249–265. https://doi.org/10.14764/10.ASEAS-0024

- Stein, B. N. (1986). Durable solutions for developing country refugees. International Migration Review, 20(2), 264–282. https://doi.org/10.1177/019791838602000208