ABSTRACT

As individuals make belief decisions on truths and falsehoods, a systematic organization of (mis)information emerges. In this study, we employ a network approach to illustrate how a sample of Americans share a cognitive network of false and true statements related to COVID-19. Moreover, we examine what factors are associated with the formation of misbeliefs. Findings from our US-based rolling cross-sectional survey data indicate that conservative groups exhibit a greater tendency to mix up false and true information than liberal groups. This tendency is preserved across different time points, revealing more homogeneously structured information networks of conservative groups compared to liberal groups. The benefits of a cognitive network framework that integrates structural perspectives into theories are discussed.

In the digital age, characterized by an overwhelming flood of information, the ability to distinguish truth from falsehood has become a daily challenge for individuals (Scheufele & Krause, 2019). The act of believing one piece of information over another is not an isolated endeavor. Rather, people’s decisions to believe certain information are intricately connected to one another, forming a complex network that reveals the interconnected relationships between different pieces of information.

Studying the cognitive and social elements of misinformation has recently garnered considerable attention as the public is more frequently exposed to, and more likely to believe, misinformation (Carnahan et al., 2022; Garrett & Bond, 2021; van der Linden et al., 2023; Walter & Murphy, 2018). This rising trend underscores the urgent need to comprehend how the public processes and organizes information and to recognize its vulnerability to misinformation. The conjunction error is one of the cognitive processes that make individuals vulnerable to misinformation (van der Linden et al., 2023). This error arises when people erroneously connect unrelated pieces of information, like associating vaccines with autism. While psychological training can help mitigate conjunction errors (Stall & Petrocelli, 2023), there is still much to uncover about how these erroneous connections arise when individuals are confronted with a mixture of truths and falsehoods, as well as which social groups are more susceptible to such errors. Answering these questions requires a comprehensive examination of how individuals organize and structure information.

The adoption of a network analysis approach has proven to be a valuable tool for studying the structure and organization of complex systems. In recent years, scholars from various fields have embraced network analysis to examine psychological systems, including political belief systems (Boutyline & Vaisey, 2017; Brandt et al., 2019) and epistemic networks (Shaffer et al., 2016). Network analysis, with its focus on relational patterns, enables the examination of how different elements of (mis)information are interconnected and which local and global structures¹ emerge from these interconnections. For example, by analyzing local structures through cluster analysis, we can identify the types of (mis)information that are commonly grouped together, revealing specific pieces of (mis)information that individuals are more likely to believe concurrently. Furthermore, by comparing the global structures of these networks, we can understand the variations in (mis)information networks across different social groups.

This study uses network analysis with two goals. First, this study investigates the structure of truth and falsehood in (mis)information networks, which are constructed based on the extent of shared beliefs in information of varying veracity. Given the highly politicized nature of COVID-19 in the United States (US), we conducted a comparative analysis of the aggregate-level (mis)information networks between individuals identifying as liberals and conservatives. Second, the study assesses the impact of external factors such as information veracity and individual characteristics (i.e., political orientation, information avoidance, and information seeking on social media) on misbeliefs. In this study, we define misbeliefs as erroneous beliefs that contradict the best available scientific knowledge (Nyhan & Reifler, 2010). We used datasets from a US-based 17-wave rolling cross-sectional survey conducted during the early stages of the COVID-19 pandemic, spanning from June to October 2020, a period characterized by significant information uncertainty.

This study contributes to the theoretical understanding of (mis)information by using a network perspective. Traditional approaches often treat belief decisions about information as isolated events, neglecting the interconnected nature of these decisions. By conceptualizing belief in (mis)information as a network, our study captures the relational patterns and structures that emerge from the interactions among multiple pieces of information. This network approach allows us to examine how people systematically organize and structure information, offering a more comprehensive understanding of the belief formation process. Furthermore, our study investigates the role of political orientation in shaping (mis)information networks, contributing to the ideological asymmetry hypothesis by demonstrating how cognitive tendencies associated with political orientation manifest in the structure and evolution of these networks.

Network approach to unravel organization of (mis)information at aggregate level

A cognitive system is formed through intricate and ever-changing interactions among its various cognitive elements. Utilizing a network approach to studying such systems is useful, as it enables us to tap into information concerning the relationships among cognitive elements. This approach entails scrutinizing the pattern of interconnections among cognitive elements, acknowledging that these interrelations constitute the fundamental framework of the system. Through adopting this structural perspective, the network approach facilitates exploration into the organization and interactions of these elements within systems.

The network approach has advanced our understanding of cognitive systems, including political belief systems (Boutyline & Vaisey, 2017; Brandt et al., 2019) and epistemic networks (Shaffer et al., 2009). Evidence from these studies strongly supports the value of the network approach in revealing emergent structural patterns such as centrality and density. For instance, using the network approach, previous studies identified that ideological identity (i.e., conservatism-liberalism) is positioned at the center of a political belief system, whereas beliefs in political issues (e.g., beliefs in abortion, gay rights, or buying guns) are at the periphery (Boutyline & Vaisey, 2017; Brandt et al., 2019). Moreover, a study found the interconnections of knowledge elements in an epistemic network become more densely connected as individuals become more knowledgeable through learning (Shaffer et al., 2009).

Within cognitive networks, nodes symbolize ideas, while edges represent the interactions or interconnections between these ideas. For example, in a political belief system, nodes represent beliefs related to political issues or identity and connections are drawn based on correlations of these beliefs (e.g., Boutyline & Vaisey, 2017; Brandt et al., 2019; Fishman & Davis, 2022). In our study, we define nodes as statements, each containing either true or false information related to the COVID-19 pandemic. The degree of interaction between nodes, also known as edge strength, is expressed as the fraction of individuals who concurrently believe a pair of statements to be true. That is, the stronger the connection is, the more people believe both connected statements are true.

Estimating cognitive systems at the individual level would be ideal for investigating how individuals structure cognitive systems. However, individual-level approaches have not yet been established in the field and present several methodological challenges, such as the requirement for intensive resources (e.g., intensive longitudinal panel surveys; Brandt, 2022). Instead, the aggregate-level approach, which represents relationships of cognitive elements based on aggregated individual data (e.g., correlations, the number of co-occurrences), has been more widely adopted in cognitive network research (e.g., Boutyline & Vaisey, 2017; Brandt et al., 2019; Fishman & Davis, 2022). Drawing on the practices of these earlier studies, this research constructs (mis)information networks at the collective level. This approach, although not representing the (mis)information network at the individual level, potentially portrays shared cognition within a social group by demonstrating which statements are more commonly co-believed among its members. The key concepts as well as their conceptualizations and operationalizations in the study are summarized in Table 1.

Table 1. Conceptualizations and operationalizations of core concepts.

Although individuals generally aim for accuracy in their beliefs (Kunda, 1990), discerning truth from falsehood is complex, influenced by several factors such as cognitive biases, existing beliefs, and the information environment (Kruglanski & Ajzen, 1983; Lewandowsky et al., 2012). Our study examines the (mis)information network, focusing on connection strength and information veracity. We define connection strength as the degree to which two statements are co-believed in a society or group, offering insights into prevalent belief patterns. Information veracity refers to a statement’s truthfulness. It differs from perceived truthfulness in that information veracity is a relatively objective measure of the accuracy and correctness of the information, while perceived truthfulness is a subjective assessment of how believable or credible the information appears to an individual. It is crucial to acknowledge that belief in a statement’s truth is not solely based on its actual truthfulness (Ecker et al., 2022). Factors like source credibility, alignment with pre-existing beliefs (Nan et al., 2022, 2023), and dominant social ideologies can significantly impact belief formation. This complexity may result in varied connection strengths within the network. Hence, our study sets out to descriptively explore the (mis)information network through research question 1 (RQ1).

RQ1: How are connection strengths and patterns of veracity characterized within the COVID-19 misinformation network, particularly in terms of the interconnectivity among true and false statements?

Role of political orientation in organizing (mis)information

The impact of individual traits, such as subjective knowledge, cognitive styles, and political orientations, on people’s responses to misinformation has been well-established (Nan et al., 2022, 2023). Among these factors, political orientation is increasingly recognized as a significant factor that influences misbeliefs and disengagement from preventive behaviors during the COVID-19 pandemic (Lee et al., 2021; Miller, 2020). Numerous studies have revealed that conservatives are more susceptible to misinformation compared to liberals (Calvillo et al., 2020; Garrett & Bond, 2021; Miller, 2020; Nan et al., 2022, 2023).

Why are conservatives more susceptible to misinformation than liberals? Recent systematic reviews by Nan et al. (2022, 2023) highlight that identity-motivated and directionally-motivated reasoning play significant roles in this context. In other words, an individual’s susceptibility to misinformation is heavily influenced by the salient characteristics of one’s personal or social identity, and the desire to align information with pre-existing beliefs and values. This understanding aligns with the ideological asymmetry hypothesis, which posits that conservatives tend to exhibit cognitive rigidity, closure, and dogmatism to a greater extent than liberals (Jost, 2017). Such cognitive styles, characterized by a preference for simplicity and a strong need for closure, might lead conservatives to rely more on their intuition in assessing information’s veracity, rather than rigorously scrutinizing the evidence (Garrett & Weeks, 2017). Consequently, these cognitive tendencies, coupled with identity-motivated reasoning, could contribute to lower sensitivity among conservatives in distinguishing truth from falsehood (Garrett & Bond, 2021).

The susceptibility of conservative individuals to misinformation can also be explained by their information environment. Notably, misinformation tends to circulate more prolifically in information environments of conservatives compared to those of liberals (Garrett & Bond, 2021). Empirical evidence suggests that online misinformation disproportionately promoted conservative issues and candidates during the 2016 presidential election (Allcott & Gentzkow, 2017). Similarly, the spread of misinformation was facilitated by some prominent conservatives on social media such as Twitter during the COVID-19 pandemic (Gruzd & Mai, 2020). Extensive exposure to such an environment, combined with the tendency for confirmation bias – selective interpretation of facts that align with one’s pre-existing beliefs and group identity (Nan et al., 2022, 2023) – may make conservatives more vulnerable to misinformation.

Given the significant impact of political orientation on the inclination to endorse or reject information, whether true or false, it is plausible that distinct (mis)information networks may emerge between liberal and conservative groups (Maglić et al., 2021). We propose that the disassortative mixing pattern (Newman, 2003) may be more common in conservative information networks compared to liberal ones. In this context, disassortative mixing refers to a situation where information of dissimilar veracity (i.e., true and false information) is more likely to be linked together. Stronger connections between true and false information can be observed within conservative information networks, as conservatives tend to more frequently believe a mix of both truths and falsehoods. Additionally, we aim to investigate if clustering patterns of (mis)information differ between political groups, considering political leanings’ impact on information categorization (Bronstein et al., 2019). This clustering approach may provide additional evidence of conservatives’ susceptibility to misinformation, showing divergent patterns of clustering truths and falsehoods between conservative and liberal groups.

H1a-b: A conservative group is more likely to (a) form clusters mixing true and false statements and (b) display disassortative mixing patterns than a liberal group.

The COVID-19 pandemic has posed dramatic circumstances which have potentially affected the structure of (mis)information networks. In the early phases of the pandemic, COVID-19 was veiled in profound uncertainty, primarily due to the scarcity of scientific evidence, obstructing the formulation of evidence-based guidelines. This escalated uncertainty precipitated the spread of disinformation and erroneous information (Salvi et al., 2021). Additionally, as the pandemic turned into a matter of political contention in the US, public perceptions and behaviors concerning COVID-19 became notably polarized (Bruine de Bruin et al., 2020; Lee et al., 2021). It is likely that (mis)information networks were affected not only by these detrimental circumstances but also by constructive factors such as the proactive communication efforts led by public health authorities and the availability of reliable information from trustworthy sources.

The dynamic nature of the COVID-19 pandemic offers a unique opportunity to investigate how (mis)information networks transform and adapt in response to changing situations. Existing research suggests that liberals and conservatives might differ in their cognitive network structuring, influenced by a phenomenon known as “elective affinity” (Jost et al., 2003, 2009). Specifically, conservatives might develop rigid cognitive networks, driven by their preference for certainty, simplicity, and security, potentially resulting in more homogeneous cognitive networks among their members (Jost, 2017). On the other hand, liberals might form flexible cognitive networks, accommodating a higher degree of uncertainty, complexity, and diversity, possibly resulting in more diverse cognitive networks among their members (Jost, 2017). This individual-level cognitive tendency could manifest in group-level (mis)information networks, potentially leading to a more homogeneous structure of (mis)information networks within a conservative group compared to their liberal counterparts. Given the lack of empirical evidence to substantiate our speculation regarding the uniformity of (mis)information network structures within a conservative group, we formulate our speculation into a research question rather than a hypothesis.

RQ2: Do the structures of COVID-19 (mis)information networks exhibit greater similarity across time within a conservative group compared to a liberal group?

Influences of exogenous factors on misbelief formation at individual level

(Mis)information networks at the aggregate level necessitate a basis of understanding at the individual level. Considering that each person’s belief decisions serve as foundational components of a collective-level (mis)information network, a deeper comprehension of this network can be attained by scrutinizing the processes behind an individual’s belief decisions. By utilizing individual-level networks, which detail a series of personal belief decisions toward a set of statements, we can examine the relationship between external influences and belief formation.

This study focuses on misbeliefs, which arise when individuals believe in misinformation or reject the truth. Recognizing the significant societal risks posed by misbeliefs, such as exacerbating disease spread (Bridgman et al., 2020; Vitriol & Marsh, 2021), there is a growing emphasis on thorough research in this area (Vitriol & Marsh, 2021). Previous studies have explored factors leading to misbeliefs using regression analysis (e.g., Bridgman et al., 2020). However, this approach fails to acknowledge the interrelated nature of misbelief formation, which implies that one misbelief could lead to another. In contrast to regression analysis, which relies on the independence assumption, our network approach appreciates the interconnectedness of (mis)beliefs in an individual’s cognitive landscape. We use this network perspective to examine how factors, including information veracity, individual political orientation, and individual information behaviors, contribute to misbelief formation.

In highly uncertain situations like the early pandemic, people are more prone to believe misinformation, especially if it offers hope or definitive answers (Stone & Marsh, 2023). The human need for cognitive closure in such times may drive individuals toward false, yet seemingly conclusive, explanations (Kruglanski, 1989). Under such circumstances, seeking information becomes a way to reduce anxiety and uncertainty (Huang & Yang, 2020), leading to a heightened risk of accepting false information which provides comforting, though inaccurate, explanations. Additionally, uncertain conditions favor the rapid and wide spread of false information over truth (Vosoughi et al., 2018), making such misinformation seem more legitimate due to its prevalence (Dechêne et al., 2010). Given the intense uncertainty of the pandemic’s initial phase, people were more likely to adopt misbeliefs from false information, as it falsely presented a sense of certainty in an otherwise complex and rapidly changing scenario (van Prooijen & Douglas, 2017).

H2: People are more likely to form COVID-19 misbeliefs when exposed to false information (i.e., believing in false information) compared to true information (i.e., disbelieving in true information).

H3: People with a more conservative orientation are more likely to form COVID-19 misbeliefs relative to people with a more liberal orientation.

Seeking information on social media also plays a role in misbelief development. While social media platforms are public spaces for information-sharing, they are also potential breeding grounds for false and misleading content. Past research has shown that misinformation spreads faster and wider on social media than factual information (Vosoughi et al., 2018). Therefore, people who rely heavily on social media for information may be more vulnerable to misinformation compared to those using a variety of sources. In the COVID-19 context, social media exposure has been linked to pandemic misconceptions, whereas traditional media exposure has been associated with an accurate understanding of the issue (Bridgman et al., 2020). From these observations, we propose the following hypotheses.

H4a-b: People who (a) practice information avoidance and (b) rely on social media for information seeking are more likely to form COVID-19 misbeliefs than those who do not.

Method

Sampling strategy and sample description

This study utilized data from a National Science Foundation (NSF) funded project on the social dynamic of COVID-19. All procedures were approved by the Institutional Review Board of Michigan State University (Study ID: 00004287). The project adopted a rolling cross-sectional survey design to probe the dynamic social phenomena during the pandemic’s early stages.² This study accounted for state-level variation (e.g., COVID-19 policies) across waves by selecting participants from 20 predetermined states, based on the prevalence of confirmed COVID-19 cases as of 5 May 2020. Ten states with the highest confirmed cases were classified as the first tier, while the remaining 40 states were evenly split into the second and third tiers. From these latter tiers, five states were randomly selected from each.³

Using the rolling cross-sectional survey method, 17 independent datasets were collected on a weekly basis from 22 June to 18 October 2020. Participants were drawn from the national Qualtrics panel, employing quota sampling techniques based on age, sex, race, and education level, as informed by US Census data. In each wave, approximately 25 individuals aged 18 years or older were recruited from each state. Oversampling was employed to ensure all quotas were met, yielding a total response from 8778 individuals. After excluding cases with missing values for the study variables, a valid sample of 8288 respondents was obtained. The sample slightly favored female participants (51%) over males (49%), with the majority identifying as White (70%). The participants’ mean age was around 46 years (SD = 17.7), and less than half had attended college for a bachelor’s degree or higher (40%). Supplemental Table 1 in the supplemental document presents a comparison between the original sample (N = 8778) and the census data, suggesting a sample reflective of the US population.

Survey measurement

Information veracity

Information veracity refers to whether a statement is true or false at the time of the survey. For the purpose of this study, 15 statements concerning COVID-19, comprising five true and ten false statements, were sourced from the official website of Johns Hopkins Medicine (Johns Hopkins Medicine, n.d.). The 15 statements about COVID-19 were carefully chosen to encompass a wide array of topics, ranging from the virus’s origin and prevention methods to prevailing myths and potential cures. Additionally, these particular statements were selected because of their widespread circulation among the public at the time of the survey, which underscores the significant impact they can have on society. Table 2 presents each statement along with its respective veracity. For a detailed rationale behind the veracity assessment for certain less obvious statements (i.e., VACCINEAVAIL and DELIBCREATED), see the supplementary document.

Table 2. Percentage of misbelief by statement.

Beliefs and misbeliefs

In the survey, participants were instructed to indicate whether they believed each of the 15 statements related to COVID-19 to be true or false. Belief was measured by assigning 1 if a participant believed a statement to be true, regardless of the veracity of the statement itself, and 0 otherwise. The measurement of belief was used to construct a (mis)information network. Misbelief was measured by assigning a value of 1 if a participant held an incorrect belief about a statement, either by believing true information to be false or false information to be true, and 0 otherwise. The measurement of misbelief was used to construct a misbelief network. On average, participants held misbeliefs about approximately two out of 15 statements (M = 2.23, SD = 1.91). Table 2 presents a summary of the percentage of participants who held misbeliefs for each statement.
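The two codings described above can be illustrated with a minimal Python sketch. The statement labels, the veracity key, and the helper name `code_misbeliefs` are hypothetical and are not taken from the survey instrument; the sketch only shows how a misbelief indicator can be derived from a 0/1 belief response and a statement's veracity.

```python
# Hypothetical veracity key: True means the statement is factually true.
VERACITY = {"S1": True, "S2": False, "S3": False}


def code_misbeliefs(beliefs, veracity):
    """Return 1 where a 0/1 belief response contradicts the statement's veracity, else 0."""
    return {s: int(bool(b) != veracity[s]) for s, b in beliefs.items()}


# One illustrative respondent: believes S1 (true) and S2 (false), rejects S3 (false).
respondent = {"S1": 1, "S2": 1, "S3": 0}
misbeliefs = code_misbeliefs(respondent, VERACITY)

# Number of statements on which this respondent holds a misbelief.
misbelief_count = sum(misbeliefs.values())
```

In this made-up case the only misbelief is on S2, a false statement that the respondent believes to be true.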

Political orientation and political group

Political orientation was measured with an item asking to what extent a respondent is liberal or conservative on an 8-point Likert scale (from 1 = extremely liberal to 8 = extremely conservative, M = 4.73, SD = 1.96). Participants whose political orientation scores were between 1 and 4 were assigned to the liberal group, while those with scores between 5 and 8 were assigned to the conservative group. There are more participants in the conservative group (58%, n = 4826) than in the liberal group (42%, n = 3462) in the sample.

Information avoidance

Information avoidance was measured with an item asking to what extent a respondent ignored information about COVID-19 on a 0–100 scale with higher scores indicating greater avoidance (M = 18.02, SD = 25.46).

Information seeking on social media

Information seeking on social media was measured with an item asking how often, if ever, a respondent seeks information about COVID-19 on social media on a 5-point Likert scale (from 1 = never to 5 = almost all the time, M = 2.90, SD = 1.42).

Controls

The study also took into account control variables including demographic attributes such as age, sex, race, and education, along with objective COVID-19 risk indicators. Respondents reported their birth year to determine their age. Sex was categorized as either male (0) or female (1), and race was recoded into a dichotomous variable, white (0) and non-white (1), for simplicity. Education was measured on an ordinal scale ranging from 1 (less than high school degree/high school graduate) to 4 (graduate degree). Objective risk indicators were also employed, representing the percentage change in cumulative confirmed COVID-19 cases and COVID-19 deaths per week compared to the previous week on a state-by-state basis. These indicators were calculated using data sourced from the Centers for Disease Control and Prevention (Centers for Disease Control and Prevention, 2020).
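As a rough illustration of the objective risk indicators, the sketch below computes a week-over-week percentage change from a series of cumulative counts for one state. It reflects one plausible reading of the description above; the function name `weekly_pct_change` and the numbers are made up for illustration and do not come from the CDC data.

```python
def weekly_pct_change(cumulative):
    """Percentage change of each week's cumulative count relative to the previous week.

    Returns None for a week whose preceding count is zero (change undefined).
    """
    changes = []
    for prev, curr in zip(cumulative, cumulative[1:]):
        changes.append(None if prev == 0 else 100.0 * (curr - prev) / prev)
    return changes


# Made-up cumulative confirmed cases for one state over three consecutive weeks.
cases = [1000, 1100, 1320]
changes = weekly_pct_change(cases)  # one value per week after the first
```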

Construction and measurement of (mis)information network at aggregate level

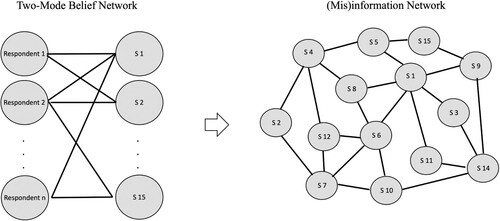

The process of constructing a (mis)information network involved two steps. First, we built a two-mode belief network consisting of two types of nodes, namely respondents and statements. Edges in this network represented the respondents’ beliefs in the statements (refer to Supplemental Table 2 in the supplemental document for the topology of the two-mode belief network). Subsequently, we derived the (mis)information network by multiplying the transposed matrix of the two-mode network by the two-mode network matrix itself. Figure 1 conceptually illustrates the transformation from a two-mode network to a (mis)information network.

Figure 1. Conversion of two-mode belief network to (mis)information network.

Note: S = Statement. Edges in the two-mode belief network occur when respondents believe statements to be true, regardless of their veracity. The two-mode belief network is transformed into a (mis)information network through a process known as projection. The strength of the edges in the (mis)information network is assigned based on the proportion of respondents who simultaneously believe in two distinct statements.

A (mis)information network consists of nodes and ties. In this study, there are 15 nodes, each corresponding to one of the 15 COVID-19 statements. The strength of the tie between a pair of nodes is operationalized as the proportion of participants who co-believe those statements. For example, let us consider two statements A and B. If 50% of participants concurrently believe both A and B to be true, then the strength of the tie between nodes A and B is 0.5. However, if no one believes both statements to be true, there will be no tie between A and B.
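The projection and the tie-strength definition can be sketched in a few lines of Python. This is an illustrative sketch rather than the authors' code: the function name `project`, the three statement labels, and the four respondents are hypothetical. Each co-belief count corresponds to an off-diagonal entry of the product of the transposed two-mode matrix with itself, divided by the number of respondents.

```python
from itertools import combinations


def project(belief_rows, statements):
    """Project a respondent-by-statement 0/1 belief matrix onto statements.

    Returns {(a, b): share of respondents who believe both a and b}.
    Pairs that nobody co-believes are omitted, i.e. they have no tie.
    """
    n = len(belief_rows)
    ties = {}
    for i, j in combinations(range(len(statements)), 2):
        # Co-belief count: entry (i, j) of B-transposed times B.
        co = sum(row[i] * row[j] for row in belief_rows)
        if co > 0:
            ties[(statements[i], statements[j])] = co / n
    return ties


statements = ["A", "B", "C"]
rows = [  # one row per hypothetical respondent
    [1, 1, 0],
    [1, 1, 0],
    [1, 0, 0],
    [0, 0, 1],
]
ties = project(rows, statements)
```

Here two of the four respondents believe both A and B, so the A–B tie has strength 0.5, while no respondent co-believes C with another statement, so C has no ties.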

To address RQ1, we constructed a (mis)information network by aggregating all datasets. For H1a, we constructed separate (mis)information networks for the liberal and conservative groups. Lastly, to address H1b and RQ2, we further segmented these (mis)information networks by waves, yielding a total of 34 networks (2 political groups × 17 waves = 34 networks).

Construction and measurement of two-mode misbelief network at individual level

The construction of a two-mode misbelief network at the individual level for each of the 17 waves was achieved as follows: each edge within the network represents a participant’s misbelief concerning a given statement. If a participant holds a misbelief, an edge exists between that participant and the respective statement.⁴ If, on the other hand, a participant holds an accurate belief about a statement – namely, they correctly identify true information as true, or false information as false – no edge exists between the participant and that statement.

Analytic plans

Analytic plans for RQ1, H1, and RQ2

To address RQ1, the strength of connections among statements is descriptively reported. The connection strength between two statements is calculated by measuring the proportion of respondents who hold concurrent beliefs in both. The metric ranges from 0 (no respondents co-believe a pair of statements) to 1 (all respondents co-believe them).

To address H1, the (mis)information networks of the liberal and conservative groups are compared in several dimensions. First, the strength of connections, represented by the proportion of individuals who co-believe statements, is compared between liberals and conservatives through descriptive analysis. Second, the fast greedy modularity optimization algorithm (Clauset et al., 2004), which identifies clusters based on the strength of connections, is utilized to extract clusters from the (mis)information networks of both groups, and the derived clusters between liberals and conservatives are compared. Third, a negative binomial regression is conducted to investigate whether the connection strength between true and false statements for conservative groups is higher than for liberal groups. For this analysis, we grouped the statement pairs into three conditions based on veracity: true-true, false-true, and false-false conditions. The regression model takes the veracity type pair condition as the independent variable, political group (liberal or conservative) as the moderator, and connection strength as the dependent variable. The negative binomial regression is chosen because the strength of connectivity is heavily skewed to the right, violating the assumptions of linear regression (Gardner et al., 1995). To increase the statistical power, we obtain the strength of connectivity from networks segmented by political group and wave (i.e., 34 networks), resulting in 3570 data points (2 political groups × 17 waves × 105 statement pairs).
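The count of 105 statement pairs and their split across the three veracity conditions follow directly from having five true and ten false statements. The short Python sketch below enumerates the pairs; the labels T1 to T5 and F1 to F10 are placeholders rather than the study's statement names.

```python
from collections import Counter
from itertools import combinations

# Five true and ten false statements, as in the study; labels are placeholders.
veracity = {f"T{i}": True for i in range(1, 6)}
veracity.update({f"F{i}": False for i in range(1, 11)})


def pair_condition(a, b):
    """Classify an unordered statement pair by how many of its members are true."""
    n_true = veracity[a] + veracity[b]
    return {2: "true-true", 1: "false-true", 0: "false-false"}[n_true]


pair_counts = Counter(pair_condition(a, b) for a, b in combinations(veracity, 2))
total_pairs = sum(pair_counts.values())

# 2 political groups x 17 waves x number of statement pairs.
data_points = 2 * 17 * total_pairs
```

Under these counts there are 10 true-true, 50 false-true, and 45 false-false pairs, which sum to the 105 pairs and the 3570 data points reported above.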

To address RQ2, the graph diffusion distance score is utilized, which quantifies the degree of structural heterogeneity between two weighted networks with the same number of nodes (Hammond et al., Citation2013). The graph diffusion distance is based on the premise that two networks with the same set of nodes may have different structures if the diffusion patterns of something that flows through these networks vary. This method involves simulating and examining the variation in these flow patterns to measure the degree of difference between the networks. For instance, the algorithm performs diffusion simulations by initiating heat from node A and observing how the heat diffuses throughout the entire network. By repeating the heat diffusion simulation across multiple nodes and running the same simulation on the comparison network, this algorithm allows us to compare the structure of two networks (Hammond et al., Citation2013). The graph diffusion distance values can extend from 0, indicating completely identical networks, to an indefinite maximum, representing extreme dissimilarity. The NetworkDistance R package is used to compute the graph diffusion distance score (v0.3.4; You, Citation2021). These scores are calculated for all pairs of (mis)information networks across the 17 waves for each group.
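For readers who prefer a formula, the graph diffusion distance is commonly written as below. The notation is introduced here for illustration and is not taken from the present study: $A_1$ and $A_2$ are the weighted adjacency matrices of the two networks, $D_k$ is the diagonal matrix of weighted degrees, $L_k$ is the graph Laplacian, and $t$ is the diffusion time. Hammond et al. (2013) should be consulted for the exact formulation.

$$
L_k = D_k - A_k, \qquad
\xi(A_1, A_2; t) = \left\lVert e^{-tL_1} - e^{-tL_2} \right\rVert_F^2, \qquad
d_{\mathrm{gdd}}(A_1, A_2) = \max_{t} \sqrt{\xi(A_1, A_2; t)}
$$

The matrix $e^{-tL_k}$ describes how heat placed on each node has spread after time $t$, so the distance is 0 when the two networks diffuse heat identically and grows as their diffusion patterns diverge.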

Analytic plans for H2, H3, and H4

To address H2, H3, and H4, an inferential network model, the Exponential Random Graph Model (ERGM), was applied to each of the two-mode misbelief networks. We used the statnet R package for this purpose (v2019.6; Hunter et al., Citation2008). ERGM can be compared to binary logistic regression, as it investigates the influences of various factors on the presence of binary-coded ties (e.g., 0 = no tie, 1 = tie). However, ERGM offers advantages over binary logistic regression as it assumes interdependency, reflecting the theoretical notion that beliefs are interconnected within one’s mind. Additionally, ERGM, when applied to a two-mode network, allows researchers to easily examine the influences of attributes associated with information (e.g., veracity type), which can be more complex to test with binary logistic regression.
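The analogy with logistic regression can be made explicit with the general ERGM form. The symbols below are standard textbook notation, introduced here for illustration; they do not describe the specific terms fitted in this study. $Y$ is the random network, $y$ is the observed network, $g(y)$ is a vector of network statistics, $\theta$ is the coefficient vector, and $\kappa(\theta)$ is the normalizing constant.

$$
P_\theta(Y = y) = \frac{\exp\{\theta^{\top} g(y)\}}{\kappa(\theta)}, \qquad
\operatorname{logit} P_\theta\left(Y_{ij} = 1 \mid Y_{-ij} = y_{-ij}\right) = \theta^{\top} \delta_{ij}(y)
$$

Here $\delta_{ij}(y)$ is the change in $g(y)$ when the tie between participant $i$ and statement $j$ is switched from 0 to 1, holding the rest of the network fixed. When every statistic in $g(y)$ treats ties as independent, the second expression reduces to an ordinary logistic regression on the ties.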

Results

Overall (mis)information network (RQ1)

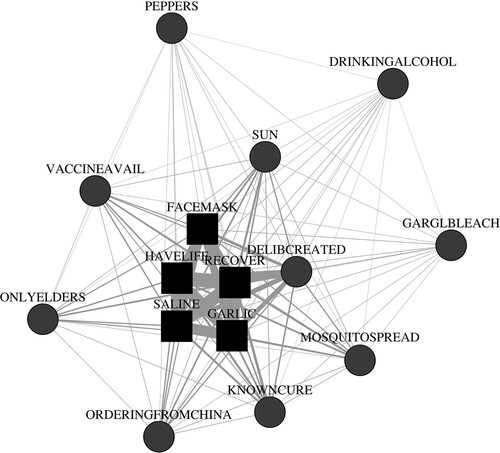

Figure 2 demonstrates the overall (mis)information network aggregated over the entire study period. The (mis)information network comprised five true statements and ten false statements. Strong connections existed between true statements (M = 0.64, SD = 0.16), whereas weak connections existed between the rest of the statement combinations (M = 0.06, SD = 0.07).

Figure 2. Overall (mis)information network.

Note: The shape of a node indicates the veracity of statements. Square-shaped nodes represent true statements, while round-shaped nodes represent false statements. The thickness of the line indicates the degree of connection between statements. The thicker the line between a pair of statements, the more participants co-believe them.

Four true statements out of the five were strongly interconnected with each other, namely HAVELIFE (i.e., “Getting COVID-19 doesn’t mean that you will have it for life”), RECOVER (i.e., “You can recover from COVID-19”), SALINE (i.e., “There is no evidence that rinsing your nose with saline protects against COVID-19”), and GARLIC (i.e., “Eating garlic does not help prevent COVID-19”) (M = 0.75, SD = 0.07). For instance, 86% of participants co-believed HAVELIFE and RECOVER to be true, and 80% of participants co-believed RECOVER and SALINE to be true. Although FACEMASK (i.e., “A face mask will protect you from COVID-19”) was a true statement, its connection with other true statements was relatively moderate (M = 0.46, SD = 0.07).

False statements had weak connections with each other (M = 0.03, SD = 0.02) as well as with true statements (M = 0.09, SD = 0.08). This weak connection of false statements with other statements was expected, given that many of these false statements included obtrusive false information. Nevertheless, some false statements tended to be co-believed with true statements. For instance, approximately 30% of respondents co-believed DELIBCREATED (i.e., “COVID-19 was deliberately created or released by people”) with other true statements (M = 0.30, SD = 0.08). Although not strongly connected, other false statements such as KNOWNCURE (i.e., “There is a known cure for COVID-19”), SUN (i.e., “Exposure to the sun prevents COVID-19”), MOSQUITOSPREAD (i.e., “Mosquitos help spread COVID-19”) had some degree of connection with true statements (MKNOWNCURE = 0.13, SDKNOWNCURE = 0.04; MSUN = 0.10, SDSUN = 0.03; MMOSQUITOSPREAD = 0.10, SDMOSQUITOSPREAD = 0.03). Supplemental Table 3 provides the adjacency matrix for this overall (mis)information network.

(Mis)information networks between liberals and conservatives (H1a and b)

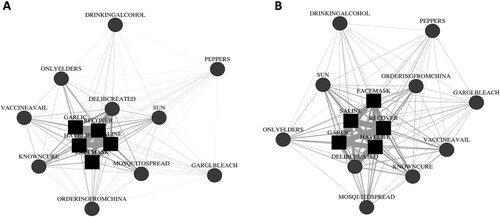

Figure 3 demonstrates the (mis)information networks of the liberal and conservative groups. For both groups, strong connections exist between true statements (MLiberal = 0.66, SDLiberal = 0.15; MConservative = 0.62, SDConservative = 0.17). However, while there were strong connections among HAVELIFE, RECOVER, SALINE, and GARLIC in both the liberal (M = 0.76, SD = 0.07) and conservative (mis)information networks (M = 0.74, SD = 0.07), the conservative group tended to co-believe FACEMASK less with other true statements (M = 0.43, SD = 0.05) than the liberal group (M = 0.50, SD = 0.05). Supplemental Tables 4 and 5 provide the adjacency matrices for liberal and conservative (mis)information networks, respectively.

Figure 3. (A) Liberal and (B) Conservative (mis)information network.

Note: The shape of a node indicates the veracity of statements. Square-shaped nodes represent true statements, while round-shaped nodes represent false statements. The thickness of the line indicates the degree of connection between statements. The thicker the line between a pair of statements, the more participants co-believe them.

Two distinct clusters emerged in the COVID-19 (mis)information networks from the liberal and conservative groups. The cluster analysis confirmed the presence of an erroneous connection between the false statement (i.e., DELIBCREATED) and true statements in the conservatives’ (mis)information network. The clusters were mostly driven by the veracity of statements. True statements tended to cluster with true statements, whereas false statements tended to cluster with false statements. This pattern was mostly evident for both groups, with one exception. The false statement DELIBCREATED was clustered with true statements in the (mis)information network of the conservative group. The result indicated that the conservative group treated this false statement as if it were true in their (mis)information network. On the other hand, the liberal group did not mix true and false statements in their (mis)information network. Figure 4 visualizes the cluster analysis of the liberal and conservative (mis)information networks.

Figure 4. Cluster analysis results of (A) Liberal and (B) Conservative (mis)information network.

Note: The shape of a node indicates the veracity of statements. Square-shaped nodes represent true statements, while round-shaped nodes represent false statements. The color and shade of nodes indicate cluster membership. Nodes in blue color are clustered in one group, while nodes in orange color are clustered in another group. Edges between nodes are omitted for visual simplicity.

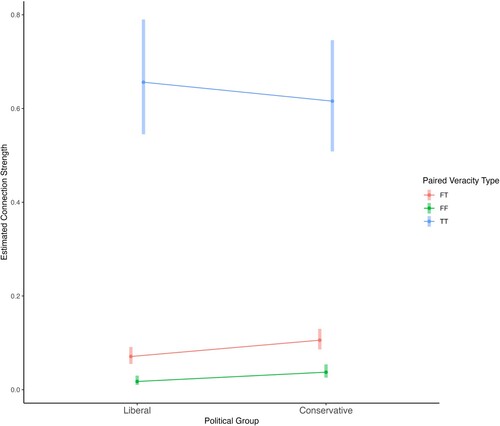

The connectivity patterns between the liberal and conservative groups were examined. Figure 5 illustrates the results. The findings confirmed that both groups developed (mis)information networks consisting of strong connections between true information, but the conservative group was more likely to mix false and true information in their (mis)information network compared to the liberal group (b = −0.46, p < .05). Specifically, the level of connectivity for true-true statements did not differ between the liberal group (estimated strength of connection (estimation, hereafter) = 0.66, SE = 0.06, CI 95% = [0.55, 0.79]) and the conservative group (estimation = 0.62, SE = 0.06, CI 95% = [0.51, 0.75]). However, the level of connectivity between false-true statements tended to be higher in the conservative (mis)information network (estimation = 0.11, SE = 0.01, CI 95% = [0.09, 0.13]) than in the liberal (mis)information network (estimation = 0.07, SE = 0.01, CI 95% = [0.06, 0.09]).

Figure 5. Disassortative and assortative patterns between liberal and conservative groups.

Note: Pairs of statements are categorized into three conditions based on veracity. If both statements are true, the pair is assigned to the true-true condition. If both statements are false, the pair is assigned to the false-false condition. If the veracity of statements is mixed, the pair is assigned to the false-true condition.

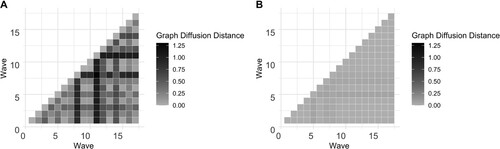

Structural heterogeneity and homogeneity of (mis)information network (RQ2)

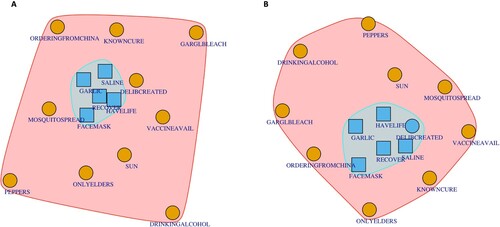

The average score of graph diffusion distance was greater for the liberal group (M = 0.44, SD = 0.32) than for the conservative group (M = 0.02, SD = 0.02), and such difference was statistically significant, t(135.9) = 15.65, p < .001. This result indicated that the (mis)information networks of the liberal group were more structurally heterogeneous and dynamic than those of the conservative group across different time points. Put differently, the conservative group developed (mis)information networks that were structurally homogenous and rigid. This implies that within the conservative group, the patterns of connectivity, arrangement, and other structural elements of their (mis)information networks remained remarkably consistent across different time points.

Figure 6 illustrates the overall distribution of graph diffusion distance scores between the liberal and conservative groups. This figure clearly showed that there was more dynamism in the liberal group than in the conservative group. It demonstrated that the liberal group developed heterogeneous (mis)information networks whose structures changed across different samples and settings. In contrast, the flattened distribution of the light gray color scales demonstrated that the conservative group developed extremely invariant (mis)information networks, which were characterized by low graph diffusion distance scores and little variation. In other words, the conservative group developed (mis)information networks that were homogeneous across different samples and settings.

Figure 6. Bivariate graph diffusion distances of (A) the liberal group and (B) the conservative group.

What network structures might enable the conservative group to have homogeneous networks? Post hoc analysis, presented in Supplemental Table 6 and Supplemental Figure 2, investigated the density of networks, operationally defined as the sum of connectivity. The results demonstrate that across 17 waves, the conservative group (M = 13.12, SD = 1.29) exhibits denser (mis)information networks compared to the liberal group (M = 10.89, SD = 0.48), t(21.64) = 6.89, p < .001.
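The density measure used in this post hoc analysis can be sketched as follows. The connection strengths and statement labels are invented for illustration, and `network_density` is a hypothetical helper name.

```python
from statistics import mean

# Hypothetical connection strengths for two waves of one political group;
# keys are statement pairs, values are co-belief proportions.
waves = [
    {("A", "B"): 0.5, ("A", "C"): 0.25, ("B", "C"): 0.25},
    {("A", "B"): 0.75, ("A", "C"): 0.25, ("B", "C"): 0.5},
]

def network_density(strengths):
    """Density, defined as the sum of connection strengths over all pairs."""
    return sum(strengths.values())

densities = [network_density(wave) for wave in waves]
print(densities)        # [1.0, 1.5]
print(mean(densities))  # 1.25
```

Averaging the per-wave densities within each group yields group means of the kind compared in the paragraph above.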

Formation of misbeliefs: influences of statement veracity (H2), political orientation (H3), information behaviors (H4a–c)

The associations between statement veracity, political orientation, information behaviors, and misbelief were examined through ERGM with a two-mode misbelief network per wave. Supplemental Table 7 presents the overall results, including detailed statistical information.

Unexpectedly, the results showed that true statements were positively associated with being mistakenly believed as false. In other words, people were more likely to incorrectly believe true statements to be false rather than false statements to be true. This association persisted across all 17 waves, indicating that the data were not consistent with H2. Conservative orientation was significantly associated with the formation of misbelief throughout all 17 waves, supporting H3. Specifically, individuals with conservative views were more likely to form misbeliefs regarding COVID-19 statements. The data also supported H4a and H4b, which proposed that information avoidance and social media use were positively related to misbelief. The associations remained significant across all 17 waves.

Discussion

The present study employs a novel approach, integrating the network perspective with rolling cross-sectional survey datasets containing binary-based belief responses to COVID-19 (mis)information. This research constructed (mis)information networks to investigate the relationships and structures of (mis)information at the aggregate level. In the second part of the study, two-mode misbelief networks were built at the individual level to explore the associations between misbelief, information veracity, political orientation, and information behaviors. Although this study was conducted within a specific context, the cognitive network framework employed here offers a promising approach that may be adapted and applied to a variety of research settings, contexts, and data, subject to further validation and exploration.

Overall, this study contributes to enhancing our understanding of how (mis)information networks are systematically organized in a highly uncertain situation. Moreover, this study contributes to advancing theories such as the ideological asymmetry hypothesis, by demonstrating that the cognitive rigidity associated with conservatism and the cognitive flexibility associated with liberalism manifest not only at the individual level but also in the structure and evolution of (mis)information networks at the group level. By employing the network approach, this study enriches our understanding of how political orientation shapes the way individuals and groups process and organize information, providing a comprehensive view of the belief formation process.

Network perspectives reflect systematic nature of information organization

Our findings highlight that an organized structure of information emerges from the aggregation of individual belief decisions about information. This may suggest that the way individuals or society organize information is not random but meaningful and systematic. This is consistent with well-established psychological constructs like schema (Graesser & Nakamura, Citation1982), and supported by empirical evidence from psychology and neuroscience research (Ahn et al., Citation1992; Ghosh & Gilboa, Citation2014).

Incorporating a network approach into (mis)information research offers a holistic view of how individuals in a group systematically organize an array of (mis)information. Through constructing a (mis)information network at an aggregate level in this study, we found that society tends to form a constructive network where true statements are often believed and placed centrally, while false statements are largely disbelieved and positioned at the periphery. This global view is informative as it illustrates how belief decisions about information can be structured through local and global interactions among pieces of information.

Further, the network approach enhances our understanding of belief decisions about specific information relative to other information. While it is generally observed that true statements are strongly interconnected, closer examination reveals that certain true statements are weakly connected with others. For instance, the true statement, “FACEMASK,” had weaker connections with other true statements. This could be due to the high uncertainty surrounding the effectiveness of face masks during the early stages of the pandemic (Peeples, Citation2020) and the period when this study took place. As a result, people might have been less confident about believing the FACEMASK statement as true compared to other true statements, leading to its weak connections.

Our findings also show that certain false information, such as the claim that the virus was deliberately created (“DELIBCREATED”), tends to be co-believed and clustered with true information within the conservative group. This finding aligns with previous literature suggesting a higher susceptibility to misinformation among conservatives compared to liberals (Calvillo et al., Citation2020; Garrett & Bond, Citation2021; Miller, Citation2020; Nan et al., Citation2022, Citation2023). DELIBCREATED appears to be a piece of misleading information that resonates widely within conservative circles. This trend could be attributed to the influence of political values and identities, which drive individuals toward embracing misinformation that aligns with their political values and affiliations (Nan et al., Citation2022, Citation2023). Future research is needed to integrate the network approach to investigate whether interventions designed to counteract belief in misinformation could effectively restructure the (mis)information network. Such research would be instrumental in understanding whether these interventions can weaken the connections between falsehoods and truths within these networks.

Network perspectives foster research with fresh insights

Over the past decade, we have witnessed considerable advances in network perspectives and metrics, offering a robust methodological foundation for scholars researching cognitive networks. For instance, a previous study used the concept of network centrality to identify the central political belief in a political belief system (Boutyline & Vaisey, Citation2017). By conceptualizing the organization of (mis)information as a network, we can incorporate a variety of network perspectives and metrics into this research domain.

In our study, we used several network metrics, such as edge strength, and network algorithms like modularity and graph diffusion distance. We examined edge strength descriptively to show how participants, grouped by their political orientation, organize true and false information. We utilized modularity to examine how political groups cluster their (mis)information networks. The graph diffusion distance algorithm was employed to investigate the structural heterogeneity of (mis)information networks within each political group across different time points. These network metrics and algorithms enable us to shed light on the organization and evolution of (mis)information networks of two political groups, aspects that would remain unexplored with traditional analytic approaches and perspectives.

The network analysis techniques used here are just a few examples among many. Researchers are encouraged to consider a variety of network perspectives relevant to their research questions. For example, we can utilize a global-level network property like density, which represents network cohesiveness (i.e., the sum of edge strengths) (Brandt & Sleegers, Citation2021; Dalege & van der Does, Citation2022) to examine whether a (mis)information network with high density is more resilient to change or external interventions than a network with low density. The findings from our post-hoc analysis offer additional insights into why the conservative group is consistently susceptible to misinformation throughout the study period. The conservative group might resist change and maintain the status quo of their (mis)information networks as their (mis)information networks are more cohesive and firmly established than those of the liberal group. Likewise, applying network perspectives could offer numerous opportunities to enhance the theoretical and methodological aspects of (mis)information research.

Ideological asymmetry in (mis)information networks

A cognitive network is conceived as both dynamic and enduringly stable (Quackenbush, Citation1989). The current research is among the first studies to investigate the structural changes of (mis)information networks within liberal and conservative groups during the high-stakes, unpredictable context of the pandemic. Our findings are consistent with the ideological asymmetry hypothesis, which proposes a contrast between the rigid thinking of conservative individuals and the more adaptable mindset of liberal individuals (Jost, Citation2017; Jost et al., Citation2003, Citation2009). This research builds upon the existing body of knowledge by affirming that this ideological asymmetry prevails even at the group level. The cognitive rigidity intrinsic to conservative individuals may be manifested in their collective cognition, thereby fostering an intense homogeneity within their (mis)information networks across various temporal phases and environments. Conversely, the cognitive fluidity inherent in liberal individuals may potentially steer their (mis)information networks toward a notably diversified state, exhibiting varied characteristics across distinct temporal dimensions and contexts.

The results of our study carry significant implications for public health and misinformation. In our analysis, we observed a notable consistency within the conservative group regarding their response to certain types of misinformation. This observed consistency implies a pattern where certain misbeliefs may circulate more persistently and become more deeply ingrained within the information networks of this group. It is important to highlight that this consistency does not suggest a lack of diversity in thought or opinion among conservatives but rather points to specific trends in how misinformation is processed and retained. Once misinformation becomes entrenched and is systematically integrated with other information, the conservative group tends to show less inclination toward updating their information networks compared to the liberal group. Our results may shed light on the complexities involved in rectifying misbeliefs among conservatives, and why attempts at correction can, in certain situations, prove to be counterproductive (Nyhan & Reifler, Citation2010). Challenges may also arise within the liberal group. While their cognitive flexibility may grant them greater capacity for rectifying misbeliefs, this adaptability may simultaneously render them more susceptible to misleading information. Although this aspect was not formally presented in our study, mapping the (mis)information networks of the liberal group by wave in Supplemental Figure 1 indicates their vulnerability to a broad spectrum of misleading information.

Misbelief formation

Our study offers several practical insights for public health communication researchers and practitioners. First, the significant association between information veracity and individuals’ decision to accept misbeliefs indicates that in shaping people’s beliefs around COVID-19, misbeliefs around the true information (e.g., not believing the facts) are more prominent than misbeliefs around the false information (e.g., believing falsehoods). In other words, despite the escalating concern over the spread of fake news and mis- and disinformation, the bulk of misbeliefs originate from exposure to incomplete factual information rather than counterfeit or fabricated information. Consequently, in addressing emergent misbeliefs during health crises, the primary emphasis of public health communication should be on disseminating comprehensive, truthful information, coupled with efforts to counteract misinformation (Walter et al., Citation2021; Walter & Murphy, Citation2018; Walter & Tukachinsky, Citation2020).

Second, our findings elucidate the role of predispositions such as conservatism and information management behaviors (i.e., information avoidance, information seeking on social media) in the formation of misbeliefs. These insights provide valuable guidance on possible target audiences for public health messages. Empirical evidence reveals that people who lean more conservative are more susceptible to misbeliefs than people who lean more liberal (Miller, Citation2020), not only in the realm of political issues but also in health and scientific matters. Therefore, it is important to understand the (mis)information networks unique to conservatives and target communication accordingly. Furthermore, individuals who strategically evade information or seek information exclusively on social media platforms present a challenge in terms of reachability. Therefore, understanding their patterns of information consumption and media habits can assist in reaching such elusive audiences.

Limitations

The present study has several limitations. First, the execution of this study during the early stages of the COVID-19 pandemic in the US introduces limitations regarding the applicability of our findings to broader contexts of health misinformation and different populations. The initial phase of the pandemic was marked by heightened uncertainty and widespread misinformation, creating a unique environment. Additionally, the absence of a COVID-19 vaccine at that time may have amplified individual anxiety, potentially increasing susceptibility to misinformation. This specific context raises questions about the extent to which our findings can be generalized to other health misinformation topics. Furthermore, the generalizability of our results to populations outside the US is uncertain, considering the high degree of politicization of COVID-19 (Bruine de Bruin et al., Citation2020; Lee et al., Citation2021) and the relative public distrust in healthcare systems in the US (Armstrong et al., Citation2006). Therefore, future research employing a similar framework in varied contexts is essential to affirm the broader applicability of our study’s findings.

Second, the study’s reliance on a rolling cross-sectional survey design is a limitation, as it maps (mis)information networks across various samples but cannot track changes within the same group over time, unlike a panel survey. The COVID-19 pandemic, with its rapidly changing information, influences individuals’ beliefs about misinformation. Our method, focusing on aggregated trends, misses these individual-level dynamics, making it crucial to interpret findings at the group level and avoid ecological fallacy. Future research should combine panel surveys and individual-level network approaches to better understand individual changes in (mis)information networks during dynamic events like pandemics.

Third, the aggregated approach precludes investigating the association of (mis)information networks with individual-level variables due to a disparity in the units of analysis. To overcome this limitation, recent research has proposed a method to measure cognitive networks at an individual level (Brandt, Citation2022). Considering that an individual’s cognitive network is likely to be shaped by a multitude of personal traits, including demographics, political orientation, and various perceptions, constructing individual-level information networks, and correlating them with individual-level variables could provide a more nuanced understanding of the origin and structure of (mis)information.

Conclusion

The present study applies a network perspective to investigate (mis)information networks at an aggregate level and misbelief networks at an individual level. While society broadly constructs a beneficial network, conservative groups tend to conflate false and true information more than their liberal counterparts. The structures of (mis)information networks exhibit a pronounced homogeneity within conservative groups, while those within liberal groups are markedly heterogeneous. The results derived from misbelief networks corroborate that political orientation serves as a significant determinant of misbeliefs. Additionally, the veracity of information and individual information behaviors also exhibit associations with misbeliefs. By integrating a network perspective, this approach potentially holds the promise to enrich theories and methodologies pertinent to (mis)information and belief research.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

The data and code will be shared upon request.

Notes

1 Local structures refer to the specific configurations or patterns of nodes and edges found within a localized segment of a network. These local structures include, but are not limited to, the strength of connections between specific nodes, clustering, and centrality, which highlight the relational patterns or arrangements of individual nodes and their neighboring nodes. In contrast, global structures pertain to the broad-scale patterns, configurations, and traits that define the overarching organization and behavior of a network. These global structures include, but are not limited to, density and disassortativity, which respectively refer to the degree of connectivity in a network and the tendency of nodes within a network to connect with dissimilar others.

2 A rolling cross-sectional survey differs from a panel survey as it collects an independent sample at each wave to represent the population for that timeline (Yee & Niemeier, Citation1996). Compared to the panel survey design, the rolling cross-sectional survey design has several advantages such as avoidance of participant dropout issues and better reflection of the community changes.

3 The first-tier states comprised California, Connecticut, Florida, Illinois, Massachusetts, Michigan, New Jersey, New York, Pennsylvania, and Texas. The second-tier states included Colorado, Mississippi, North Carolina, South Carolina, and Tennessee, while the third-tier states were Arizona, the District of Columbia, Idaho, Kansas, and Kentucky.

4 Refer to Supplemental Table 2 in the supplemental documents for a representation of the topology of the two-mode misbelief network.

References

- Ahn, W., Brewer, W. F., & Mooney, R. J. (1992). Schema acquisition from a single example. Journal of Experimental Psychology: Learning, Memory, and Cognition, 18(2), 391–412. https://doi.org/10.1037/0278-7393.18.2.391

- Allcott, H., & Gentzkow, M. (2017). Social media and fake news in the 2016 election. Journal of Economic Perspectives, 31(2), 211–236. https://doi.org/10.1257/jep.31.2.211

- Armstrong, K., Rose, A., Peters, N., Long, J. A., McMurphy, S., & Shea, J. A. (2006). Distrust of the health care system and self-reported health in the United States. Journal of General Internal Medicine, 21(4), 292–297. https://doi.org/10.1111/j.1525-1497.2006.00396.x

- Boutyline, A., & Vaisey, S. (2017). Belief network analysis: A relational approach to understanding the structure of attitudes. American Journal of Sociology, 122(5), 1371–1447. https://doi.org/10.1086/691274

- Brandt, M. J. (2022). Measuring the belief system of a person. Journal of Personality and Social Psychology, 123(4), 830–853. https://doi.org/10.1037/pspp0000416

- Brandt, M. J., Sibley, C. G., & Osborne, D. (2019). What is central to political belief system networks? Personality and Social Psychology Bulletin, 45(9), 1352–1364. https://doi.org/10.1177/0146167218824354

- Brandt, M. J., & Sleegers, W. W. A. (2021). Evaluating belief system networks as a theory of political belief system dynamics. Personality and Social Psychology Review, 25(2), 159–185. https://doi.org/10.1177/1088868321993751

- Bridgman, A., Merkley, E., Loewen, P. J., Owen, T., Ruths, D., Teichmann, L., & Zhilin, O. (2020). The causes and consequences of COVID-19 misperceptions: Understanding the role of news and social media. Harvard Kennedy School Misinformation Review, 1(3). https://doi.org/10.37016/mr-2020-028

- Bronstein, M. V., Pennycook, G., Bear, A., Rand, D. G., & Cannon, T. D. (2019). Belief in fake news is associated with delusionality, dogmatism, religious fundamentalism, and reduced analytic thinking. Journal of Applied Research in Memory and Cognition, 8(1), 108–117. https://doi.org/10.1037/h0101832

- Bruine de Bruin, W., Saw, H.-W., & Goldman, D. P. (2020). Political polarization in US residents’ COVID-19 risk perceptions, policy preferences, and protective behaviors. Journal of Risk and Uncertainty, 61(2), 177–194. https://doi.org/10.1007/s11166-020-09336-3

- Calvillo, D. P., Ross, B. J., Garcia, R. J. B., Smelter, T. J., & Rutchick, A. M. (2020). Political ideology predicts perceptions of the threat of COVID-19 (and susceptibility to fake news about it). Social Psychological and Personality Science, 11(8), 1119–1128. https://doi.org/10.1177/1948550620940539

- Carnahan, D., Ulusoy, E., Barry, R., McGraw, J., Virtue, I., & Bergan, D. E. (2022). What should I believe? A conjoint analysis of the influence of message characteristics on belief in, perceived credibility of, and intent to share political posts. Journal of Communication, 72(5), 592–603. https://doi.org/10.1093/joc/jqac023

- Centers for Disease Control and Prevention. (2020). United States COVID-19 cases and deaths by state over time. https://data.cdc.gov/Case-Surveillance/United-States-COVID-19-Cases-and-Deaths-by-State-o/9mfq-cb36

- Clauset, A., Newman, M. E. J., & Moore, C. (2004). Finding community structure in very large networks. Physical Review E, 70(6), 066111. https://doi.org/10.1103/PhysRevE.70.066111

- Dalege, J., & van der Does, T. (2022). Using a cognitive network model of moral and social beliefs to explain belief change. Science Advances, 8(33), eabm0137. https://doi.org/10.1126/sciadv.abm0137

- Damstra, A., Vliegenthart, R., Boomgaarden, H., Glüer, K., Lindgren, E., Strömbäck, J., & Tsfati, Y. (2023). Knowledge and the news: An investigation of the relation between news use, news avoidance, and the presence of (mis)beliefs. The International Journal of Press/Politics, 28(1), 29–48. https://doi.org/10.1177/19401612211031457

- Dechêne, A., Stahl, C., Hansen, J., & Wänke, M. (2010). The truth about the truth: A meta-analytic review of the truth effect. Personality and Social Psychology Review, 14(2), 238–257. https://doi.org/10.1177/1088868309352251

- Ecker, U. K. H., Lewandowsky, S., Cook, J., Schmid, P., Fazio, L. K., Brashier, N., Kendeou, P., Vraga, E. K., & Amazeen, M. A. (2022). The psychological drivers of misinformation belief and its resistance to correction. Nature Reviews Psychology, 1(1), 13–29. https://doi.org/10.1038/s44159-021-00006-y

- Fishman, N., & Davis, N. T. (2022). Change we can believe in: Structural and content dynamics within belief networks. American Journal of Political Science, 66(3), 648–663. https://doi.org/10.1111/ajps.12626

- Gardner, W., Mulvey, E. P., & Shaw, E. C. (1995). Regression analyses of counts and rates: Poisson, overdispersed Poisson, and negative binomial models. Psychological Bulletin, 118(3), 392–404. https://doi.org/10.1037/0033-2909.118.3.392

- Garrett, R. K., & Bond, R. M. (2021). Conservatives’ susceptibility to political misperceptions. Science Advances, 7(23), eabf1234. https://doi.org/10.1126/sciadv.abf1234

- Garrett, R. K., & Weeks, B. E. (2017). Epistemic beliefs’ role in promoting misperceptions and conspiracist ideation. PLoS ONE, 12(9), e0184733. https://doi.org/10.1371/journal.pone.0184733

- Ghosh, V. E., & Gilboa, A. (2014). What is a memory schema? A historical perspective on current neuroscience literature. Neuropsychologia, 53, 104–114. https://doi.org/10.1016/j.neuropsychologia.2013.11.010

- Graesser, A. C., & Nakamura, G. V. (1982). The impact of a schema on comprehension and memory. In G. H. Bower (Ed.), Psychology of learning and motivation (Vol. 16, pp. 59–109). Academic Press. https://doi.org/10.1016/S0079-7421(08)60547-2

- Gruzd, A., & Mai, P. (2020). Going viral: How a single tweet spawned a COVID-19 conspiracy theory on Twitter. Big Data & Society, 7(2). https://doi.org/10.1177/2053951720938405

- Hammond, D. K., Gur, Y., & Johnson, C. R. (2013). Graph diffusion distance: A difference measure for weighted graphs based on the graph Laplacian exponential kernel. Proceedings of IEEE GlobalSIP, 419–422. https://doi.org/10.1109/GlobalSIP.2013.6736904

- Huang, Y., & Yang, C. (2020). A metacognitive approach to reconsidering risk perceptions and uncertainty: Understand information seeking during COVID-19. Science Communication, 42(5), 616–642. https://doi.org/10.1177/1075547020959818

- Hunter, D. R., Handcock, M. S., Butts, C. T., Goodreau, S. M., & Morris, M. (2008). ergm: A package to fit, simulate and diagnose exponential-family models for networks. Journal of Statistical Software, 24(3). https://doi.org/10.18637/jss.v024.i03

- Johns Hopkins Medicine. (n.d.). COVID-19 – myth versus fact. https://www.hopkinsmedicine.org/health/conditions-and-diseases/coronavirus/2019-novel-coronavirus-myth-versus-fact

- Jost, J. T. (2017). Ideological asymmetries and the essence of political psychology. Political Psychology, 38(2), 167–208. https://doi.org/10.1111/pops.12407

- Jost, J. T., Federico, C. M., & Napier, J. L. (2009). Political ideology: Its structure, functions, and elective affinities. Annual Review of Psychology, 60(1), 307–337. https://doi.org/10.1146/annurev.psych.60.110707.163600

- Jost, J. T., Glaser, J., Kruglanski, A. W., & Sulloway, F. J. (2003). Political conservatism as motivated social cognition. Psychological Bulletin, 129(3), 339–375. https://doi.org/10.1037/0033-2909.129.3.339

- Kruglanski, A. W. (1989). The psychology of being “right”: The problem of accuracy in social perception and cognition. Psychological Bulletin, 106(3), 395–409. https://doi.org/10.1037/0033-2909.106.3.395

- Kruglanski, A. W., & Ajzen, I. (1983). Bias and error in human judgment. European Journal of Social Psychology, 13(1), 1–44. https://doi.org/10.1002/ejsp.2420130102

- Kunda, Z. (1990). The case for motivated reasoning. Psychological Bulletin, 108(3), 480–498. https://doi.org/10.1037/0033-2909.108.3.480

- Lee, S., Peng, T.-Q., Lapinski, M. K., Turner, M. M., Jang, Y., & Schaaf, A. (2021). Too stringent or too lenient: Antecedents and consequences of perceived stringency of COVID-19 policies in the United States. Health Policy OPEN, 2, 100047. https://doi.org/10.1016/j.hpopen.2021.100047

- Lewandowsky, S., Ecker, U. K. H., Seifert, C. M., Schwarz, N., & Cook, J. (2012). Misinformation and its correction: Continued influence and successful debiasing. Psychological Science in the Public Interest, 13(3), 106–131. https://doi.org/10.1177/1529100612451018

- Maglić, M., Pavlović, T., & Franc, R. (2021). Analytic thinking and political orientation in the corona crisis. Frontiers in Psychology, 12, 631800. https://doi.org/10.3389/fpsyg.2021.631800

- Miller, J. M. (2020). Psychological, political, and situational factors combine to boost COVID-19 conspiracy theory beliefs. Canadian Journal of Political Science, 53(2), 327–334. https://doi.org/10.1017/S000842392000058X

- Nan, X., Thier, K., & Wang, Y. (2023). Health misinformation: What it is, why people believe it, how to counter it. Annals of the International Communication Association, 47(4), 381–410. https://doi.org/10.1080/23808985.2023.2225489

- Nan, X., Wang, Y., & Thier, K. (2022). Why do people believe health misinformation and who is at risk? A systematic review of individual differences in susceptibility to health misinformation. Social Science & Medicine, 314, 115398. https://doi.org/10.1016/j.socscimed.2022.115398

- Newman, M. E. J. (2003). Mixing patterns in networks. Physical Review E, 67(2), 026126. https://doi.org/10.1103/PhysRevE.67.026126

- Nyhan, B., & Reifler, J. (2010). When corrections fail: The persistence of political misperceptions. Political Behavior, 32(2), 303–330. https://doi.org/10.1007/s11109-010-9112-2

- Peeples, L. (2020). What the data say about wearing face masks. Nature, 586(7828), 186–189. https://doi.org/10.1038/d41586-020-02801-8

- Quackenbush, R. L. (1989). Comparison and contrast between belief system theory and cognitive theory. The Journal of Psychology, 123(4), 315–328. https://doi.org/10.1080/00223980.1989.10542988

- Salvi, C., Iannello, P., Cancer, A., McClay, M., Rago, S., Dunsmoor, J. E., & Antonietti, A. (2021). Going viral: How fear, socio-cognitive polarization and problem-solving influence fake news detection and proliferation during COVID-19 pandemic. Frontiers in Communication, 5, 562588. https://doi.org/10.3389/fcomm.2020.562588

- Scheufele, D. A., & Krause, N. M. (2019). Science audiences, misinformation, and fake news. Proceedings of the National Academy of Sciences, 116(16), 7662–7669. https://doi.org/10.1073/pnas.1805871115

- Shaffer, D. W., Collier, W., & Ruis, A. R. (2016). A tutorial on epistemic network analysis: Analyzing the structure of connections in cognitive, social, and interaction data. Journal of Learning Analytics, 3(3), 9–45. https://doi.org/10.18608/jla.2016.33.3

- Shaffer, D. W., Hatfield, D., Svarovsky, G. N., Nash, P., Nulty, A., Bagley, E., Frank, K., Rupp, A. A., & Mislevy, R. (2009). Epistemic network analysis: A prototype for 21st-century assessment of learning. International Journal of Learning and Media, 1(2), 33–53. https://doi.org/10.1162/ijlm.2009.0013

- Stall, L. M., & Petrocelli, J. V. (2023). Countering conspiracy theory beliefs: Understanding the conjunction fallacy and considering disconfirming evidence. Applied Cognitive Psychology, 37(2), 266–276. https://doi.org/10.1002/acp.3998

- Stone, A. R., & Marsh, E. J. (2023). Belief in COVID-19 misinformation: Hopeful claims are rated as truer. Applied Cognitive Psychology, 37(2), 399–408. https://doi.org/10.1002/acp.4042

- van der Linden, S., Thompson, B., & Roozenbeek, J. (2023). Editorial – the truth is out there: The psychology of conspiracy theories and how to counter them. Applied Cognitive Psychology, 37(2), 252–255. https://doi.org/10.1002/acp.4054

- van Prooijen, J.-W., & Douglas, K. M. (2017). Conspiracy theories as part of history: The role of societal crisis situations. Memory Studies, 10(3), 323–333. https://doi.org/10.1177/1750698017701615

- Vitriol, J. A., & Marsh, J. K. (2021). A pandemic of misbelief: How beliefs promote or undermine COVID-19 mitigation. Frontiers in Political Science, 3, 648082. https://doi.org/10.3389/fpos.2021.648082

- Vosoughi, S., Roy, D., & Aral, S. (2018). The spread of true and false news online. Science, 359(6380), 1146–1151. https://doi.org/10.1126/science.aap9559

- Walter, N., Brooks, J. J., Saucier, C. J., & Suresh, S. (2021). Evaluating the impact of attempts to correct health misinformation on social media: A meta-analysis. Health Communication, 36(13), 1776–1784. https://doi.org/10.1080/10410236.2020.1794553

- Walter, N., & Murphy, S. T. (2018). How to unring the bell: A meta-analytic approach to correction of misinformation. Communication Monographs, 85(3), 423–441. https://doi.org/10.1080/03637751.2018.1467564

- Walter, N., & Tukachinsky, R. (2020). A meta-analytic examination of the continued influence of misinformation in the face of correction: How powerful is it, why does it happen, and how to stop it? Communication Research, 47(2), 155–177. https://doi.org/10.1177/0093650219854600

- Yee, J. L., & Niemeier, D. (1996). Advantages and disadvantages: Longitudinal vs. repeated cross-section surveys (dot:13793). https://rosap.ntl.bts.gov/view/dot/13793

- You, K. (2021). NetworkDistance: Distance measures for networks [Computer software]. https://CRAN.R-project.org/package=NetworkDistance