ABSTRACT

This article proposes a new model to support the use of evidence by decision-makers. There has been increased emphasis over the last 15 years on the use of evidence to inform decision-making at policy and practice levels, but conceptual thinking has not kept pace with practical developments in the field. We have developed a new demand-side model with multiple dimensions to conceptualise support for the use of evidence in decision-making. This model emphasises the need for multiple levels of engagement, a combination of interventions, a spectrum of outcomes and a detailed consideration of context.

1. Introduction

There has been increased emphasis over the last 15 years on the use of evidence to inform decision-making for development within public administrations at both policy and practice levels. This drive toward evidence-based or evidence-informed policy or practice is argued for on social, political and economic grounds, and is increasingly promoted as a key development initiative (Newman et al., 2012).

From a social perspective, designing development policies and programmes on the basis of ‘theories unsupported by reliable empirical evidence’ is irresponsible, as it leaves as much potential to do harm as to do good (Chalmers, 2005: 229). There are many examples of well-intended development policies resulting in negative outcomes, for example the Scared Straight programme to reduce juvenile delinquency (Petrosino et al., 2003) or, more recently, the Virtual Infant Parenting programme to reduce teenage pregnancy (Brinkman et al., 2016). Such cases justify the social and ethical imperative to consider evidence during policy design and implementation.

From a political perspective, the use of evidence in development decisions increases transparency and accountability in the policy-making process (Jones et al., 2012; Rutter & Gold, 2015). Being explicit about what factors influenced policy-making and how different factors (e.g. research evidence; interest and activist groups; government-commissioned reports) were considered can increase the defensibility of policies and the public’s trust in policy proposals and public programmes (Shaxson, 2014).

From an economic perspective, the use of evidence can reduce the waste of scarce public resources. In the health sector, it has been estimated that as much as 85% of research, to a total value of $200 billion in 2010 alone, is wasted, that is, not used to inform decision-making (Chalmers & Glasziou, 2009). Given that public resources fund most research, this waste is a threat to development. The amount of wasted resources is likely to be even higher, considering that, as a result of this non-use of evidence, public policies and programmes that might not achieve their assumed outcomes are introduced at scale. This argument carries particular weight in Southern African countries, in which the public sector assumes a direct developmental mandate (Stewart, 2014; Goldman et al., 2015; Dayal, 2016).

Over the last 15 years, the arguments above have translated into increased support for and adoption of the evidence-based policy-making paradigm (Dayal, 2016). This development originated, and has seen its strongest growth, in the health sector, where evidence-based policy-making has become the norm in policy and practice settings, with established systems for the production and integration of research into policies, guidelines and standard setting (Chalmers, 2005). However, other areas of policy-making (e.g. social care, development, crime) have begun to experience similar developments (Stewart, 2015; Breckon & Dodson, 2016; Langer et al., 2016). Evidence-based policy-making has also received a particular wave of support in African governments that have driven innovation regarding the institutionalisation of evidence use, with notable examples from South Africa and Uganda (Gaarder & Briceño, 2010; Dayal, 2016).

Despite this, general support for the use of evidence has not been translated into a systematic and structured practice of evidence-based policy-making. For example, under the two Obama administrations, a mere 1% of government funding was informed by evidence (Bridgeland & Orszag, 2015). A similar paradox is observed in the UK policy-making context, which has arguably gone furthest in institutionalising evidence-based policy-making through the creation of the What Works Network, Scientific Advisory Posts and clearing houses such as the National Institute for Health and Clinical Excellence (NICE). Despite these efforts, a recent inquiry into the ‘Missing Evidence’ (Sedley, 2016) found that, although the government spends £2.5 billion on research per year, only 4 out of 21 government departments could account for the status and whereabouts of their commissioned research evidence (let alone use it). Closer to home, a survey of senior decision-makers in South Africa found that while 45% hoped to use evidence during decision-making, only 9% reported being able to translate this intention into practice (Paine Cronin, 2015).

It seems that the use of evidence to inform decision-making is challenged by a disconnect between the support for it in principle (which is widespread) and its practical application. This disconnect has led to wide-ranging research interest, largely focused on empirical analysis to explain and overcome this phenomenon. Barriers to evidence use have been explored in detail (Uneke et al., 2011; Oliver et al., 2014), as has the effectiveness of interventions aiming to bridge this disconnect (Walter et al., 2005; Langer et al., 2016). This article proposes a fourth explanation: that the lack of an explicit theoretical foundation hampers the design of applied interventions aiming to support the use of evidence during decision-making. That is, our current models for conceptualising evidence-based policy-making, in particular from a demand-side perspective, can be improved to reflect more accurately the realities of decision-makers and the contexts in which they aim to use evidence.

This article proposes five important elements to consider for those supporting the use of evidence in decision-making for development. These five elements have emerged from many years of experience of providing support to public administrations, and have been integrated within one model.

1.1. Current models

The majority of proposed models conceptualise evidence-based policy-making as an interplay between the supply of and demand for evidence. This body of scholarship originates in Weiss’s (1979) seven-stage model of research use and Caplan’s (1979) two-community model of research use. Notable refinements have since been added through the linkages and exchange model (CHSRF, 2000; Lomas, 2000); the context, evidence and links model (Crewe & Young, 2002); the knowledge to action framework (Graham & Tetroe, 2009); and many others. The model proposed in this article focuses only on the demand side of evidence use. That is, it concerns only the activities and mechanisms that support demand-side actors (i.e. policy-makers and practitioners) in their use of evidence. It is based on many years of work in this field within Southern Africa.

This focus on the demand side of evidence use seems justified given the relative lack of research in evidence-based policy-making explicitly focusing on decision-makers (Newman et al., 2013), as well as the well-established limitations of research professionals pushing evidence into policy and practice contexts (Lavis et al., 2003; Nutley et al., 2007; Milne et al., 2014). There is increased decision-maker interest in understanding what works to make policy and practice processes more receptive to the use of evidence. In 2014, the UK Department for International Development funded a £13 million programme to pilot different approaches to increasing decision-makers’ capacity to use research evidence, including initiatives across Southern Africa. However, as with many development initiatives, while this investment in demand-side mechanisms is welcome, there is a need to investigate and evaluate how best to conceptualise what is essential for these mechanisms of change to be effective.

1.2. Our experience

We have experienced first-hand how this lack of a theoretical foundation affects the design of demand-side evidence-based policy-making interventions. Working across sectors in Southern African contexts, and targeting a combination of different interventions at different levels of public administrations, we found little theoretical guidance available. Consequently, our underlying theory of change has mainly been informed by the literature from the health care sector; we had to consult different bodies of knowledge for each intervention applied, and lacked a meta-framework to guide us on the likely effects of the combined intervention and its pathway to change (Stewart, 2015). With the notable exception of Newman et al. (2012), our theoretical foundation was informed by literature that was not explicitly focused on building demand for evidence and was not tailored to our decision-making context. Nevertheless, we developed an approach to support the use of evidence in decision-making in governments that integrated different interventions for awareness raising, capacity-building and evidence use at multiple levels, starting with support to individuals as an entry point (see Box 1). However, we have repeatedly been asked to describe our approach in terms of the interventions we offer, and have found the available options too narrow to describe our activities. To some extent, these options are in line with the current literature, which asks us to position ourselves either as providing training, which is seen as essential, or as building relationships and networks, which is seen as secondary. Likewise, we have repeatedly been asked to define the one level of decision-making at which we work, or to choose between, and separate the impacts of, the different interventions we use. There has been an expectation that the outcomes of our activities will be visible in changes to policies rather than in changes to practice.

It seems that the lack of an overall model for demand-side interventions to increase the use of evidence undermines an understanding of the spectrum of outcomes, interventions and levels of decision-making required for this type of engagement. We have therefore concluded that we need to share and integrate the elements that we have experienced as key when conceptualising support to increase the use of evidence, particularly by public administrations.

2. Methods

In order to develop this new set of elements, we have drawn on 20 years of experience in this field, and specifically on 3 years of empirical data from the University of Johannesburg-based programme to Build Capacity to Use Research Evidence (UJ-BCURE). Our empirical data sources have included: monitoring and evaluation data from all our workshops and mentorships; engagement data from the Africa Evidence Network, including records of those who attend our events and a survey of members; outcome diaries in which we record instances of incremental change; and reports documenting reflections developed in partnership with evidence users. We have also held a number of reflection meetings, including a programme-wide stakeholder event and a mentorship-specific reflection workshop, as well as consultations with our advisors and steering group. We have also drawn out lessons from our research and capacity-building, which have informed the identification of key elements. Having pulled together preliminary ideas, we held a writing workshop and team reflection meetings to develop and test our ideas further.

3. Results

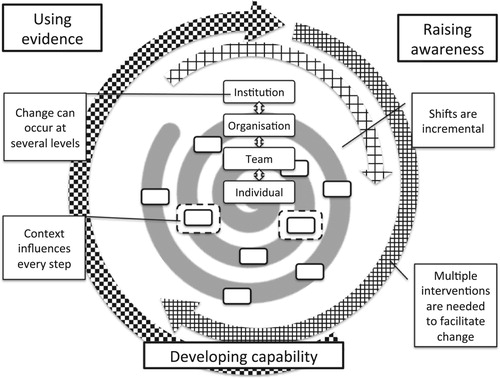

We have identified five important elements for consideration by those supporting the use of evidence in decision-making for development in Southern Africa, and further afield. We have integrated these to form five dimensions within one model. Each dimension is listed and then expanded in turn below.

Outcomes: Decision-makers move from awareness of evidence-informed decision-making for development, to capability, to actual evidence use, and back around to awareness again.

Entry points: Decision-makers can enter the process according to their need based on a combination of two parameters: their individual position within an institution and their starting point on the awareness – capability – use cycle.

Outputs: What can be achieved increases and changes as individuals move around the cycle.

Contexts: Contextual factors are hugely influential in defining the nature and significance of the shifts that take place.

Interventions: More than one approach is needed to facilitate movement around the cycle.

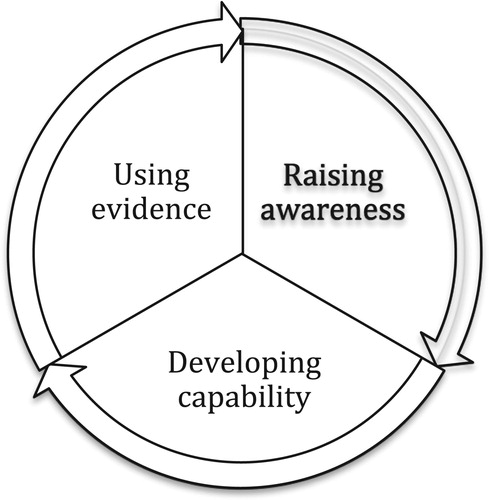

3.1. Dimension 1: outcomes

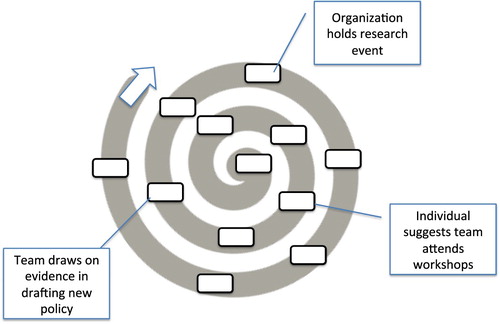

Decision-makers move from awareness of evidence-informed decision-making, to capability to use evidence in decision-making, to actual use of evidence, and back around to increased awareness again (Figure 1). These changes happen on a continuous cycle on which awareness, capability and evidence use are not necessarily discrete outcomes, and on which people can move around in small incremental steps. All three phases are important and relate to one another in a fluid rather than linear fashion. This model rejects the idea that evidence-informed decision-making is only achieved when research influences a policy. When we work with groups of people, they might be starting at different points on the cycle; they do not all have to start at the same place. Individuals’ own awareness, as well as demand within their specific government sector, plays a role in determining their starting point. To facilitate change, we therefore aim to see shifts around the cycle, no matter how incremental they seem.

Figure 1. A model in which the outcomes awareness, capability and use of evidence are a cyclical continuum not linear steps.

Individuals have attended our workshops and taken part in our mentorships with a wide range of starting points: some had very little awareness of evidence-informed decision-making and came to learn more, whilst others were already aware and skilled and came to explore opportunities to send colleagues along and/or to gain mentorship support. A government researcher in South Africa was new to the evidence-based approach and attended to find out what it was all about. In Malawi, monitoring and evaluation officers in the districts in which we worked knew about monitoring and evaluation but had not heard of evidence-informed decision-making. Others were unclear on how to use monitoring and evaluation data in decision-making and wanted to learn more. A Director in South Africa was not new to the use of evidence but came to our workshops looking for support and guidance in implementing tools in her practice. These examples illustrate the variety of outcomes that individuals sought and how they progressed around the cycle.

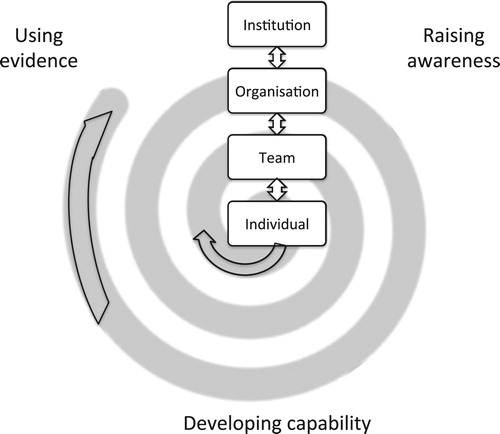

3.2. Dimension 2: entry points

The model caters for multiple entry points for decision-makers (Figure 2). When supporting the use of evidence, people start at different points, not only in their engagement with the approach as described above, but also in their professional position as individuals and within teams and organisations. The level at which decision-makers engage with evidence-informed decision-making needs to be taken into account when designing a programme to support it. Even if the support to use evidence is aimed at the individual level, no individual works in a vacuum. If the support is needs-led, with a focus on real issues faced in professional practice, then the levels of ‘team’ and ‘organisation’ inevitably get drawn in. Engagement with evidence can move up to higher levels of organisational structure; it can also be driven by senior leadership sending their teams or individuals along (the arrows go both ways). As such, this model can be applied to programmes that focus on high-level engagement, to those that start with individuals as an entry point, and to the full spectrum in between. Furthermore, such programmes need a certain degree of flexibility: as no level operates in isolation, a programme needs to be able to adapt as other levels engage with it.

Figure 2. A model that combines multiple levels in an institution with the continuous cycle between awareness, capability and use of evidence.

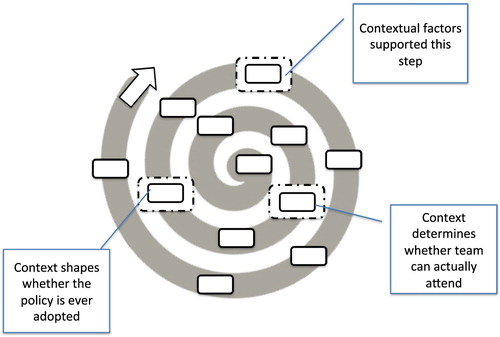

Note that as we start to engage with evidence-informed decision-making at different levels (individual, team, organisation and institution), it becomes a spiral, enabling movement from awareness to capability to evidence use (dimension 1), as well as building from individual engagement to team engagement to organisational engagement, and indeed organisational influence on teams and on individuals (dimension 2).

This movement between levels of entry and around the cycle was clearly evident in our activities. In both South Africa and Malawi, some individuals started by attending the workshops, building their awareness and knowledge, and then moved on to mentorships to embed their learning, develop their capability and begin to apply what they had learned. In South Africa, as these individuals were promoted in their institutions, they sent their staff along for training. Some people who were already well aware of the approach and wanted more support came to the workshops to explore what we could offer (a different aspect of awareness), then sent their team for training and negotiated a team mentorship to ensure team-level and/or organisational-level implementation of evidence-informed decision-making (EIDM). For example, in South Africa, an individual with awareness and capability who was already working to implement EIDM sent her team along to awareness-raising workshops, whilst another requested individual mentorships for his already-aware team.

3.3. Dimension 3: outputs

What can be achieved increases and changes as you move around the spiral. We reject the idea that the introduction of a new evidence-informed policy is the only indicator of success; rather, many small shifts occur, and these are also important. In addition, critics point out that even where an evidence-informed policy is introduced, its implementation and acceptance are not a given (Nutley et al., 2007; Langer et al., 2016). The decision itself is not an endpoint. We argue further that the many incremental shifts that occur as you move around the spiral are all important. We recognise that big changes are the result of multiple small steps, and that larger changes can take many years to accumulate. A single programme taking credit for a change in policy fails to recognise the many small steps that led to that change. Similarly, judging a programme on the presence or absence of a policy change fails to recognise the importance of the many increments that may have taken place. Our spiral provides scope for small changes to be recorded, whether they involve influencing a future leader or introducing a new idea into someone’s thinking. We have seen this play out in practice within our programme.

We experienced a wide range of incremental shifts related to evidence use, as the following examples illustrate. An attendee at our workshops commented that they now understood evidence better. One Director talked about how their team now searches differently and is more mindful of using and considering evidence in its tasks. A national department is discussing how to amend the paperwork that accompanies new policy drafts so that it indicates the evidence on which each draft is based. A team has discussed how it might encourage the institutionalisation of systematic evidence maps to inform new white papers, having conducted one map as part of a UJ-BCURE team mentorship. In Malawi, monitoring and evaluation (M&E) officers at district level first became aware of EIDM and gained more general EIDM skills; when some were then mentored individually, they were able to apply EIDM skills concretely to developmental problems in their communities, with immediate impact on decision-making and the improvement of service delivery.

3.4. Dimension 4: contexts

Contextual factors are hugely influential in defining the nature and significance of the shifts that take place. The spiral does not operate in a vacuum; people do not work in isolation, and programmes to support evidence use are always building on and interacting with other related initiatives. Contextual factors can present both barriers and facilitators to change. There is, therefore, a need to continually engage with, reflect on and respond to the environment. This needs to incorporate efforts to understand the environment, analysis of issues as they arise and, where feasible, strategies to overcome barriers or maximise facilitators. We have seen this play out in practice within our programme.

Throughout our programme of activities, contextual factors have shaped what we are able to achieve. For example, we have conducted landscape reviews to understand the contexts in which we are working before starting our interventions, and have sought to update our understanding of context throughout our activities. These efforts have been highly significant. Our engagement with one department was delayed because a national drought required the attention of those we were working with; our response was to wait until they were ready to engage with us. In one case, many months and years of investment in relationship-building have led to a trusting partnership that is now making significant impacts on the use of evidence in decision-making. Differences between government departments and contestation over remits have led to some of our initiatives gaining limited traction; our response has been to invest more in building cross-government relationships. Working across two countries, we have noticed particular contextual differences in each. For example, Malawi has a new M&E system and therefore a focus on the use of M&E data, whereas South Africa, which has a more established M&E system, has seen a greater focus on the use of evaluations and evidence syntheses. More practical factors also shape the potential outcomes of evidence-use initiatives, for example limited access to computers and the internet in Malawi, as well as requests for per diems.

3.5. Dimension 5: interventions

If, as proposed above, the role players, their levels of engagement, the incremental changes and the contexts all vary, then it follows that more than one approach is needed to facilitate movement around the cycle. It is implausible that a single intervention could raise awareness, build capacity and support applied learning, or support the full range of shifts that need to accumulate to produce bigger changes. Furthermore, individuals and their contexts change over time, requiring a responsive and flexible approach.

In our programme, we found that we needed more than one approach to support change, and that those approaches needed to be flexible in their design to respond to needs (Stewart, 2015). For example, our workshops provided a starting point for our mentorships, increasing awareness and capability amongst colleagues, who then took up the opportunity for mentorship to help them consolidate their learning and increase their use of evidence. In one example, a workshop participant used monitoring and evaluation data to support improvements in antenatal attendance at local clinics. In this case, evidence was used to understand the problem better and to improve service delivery, not to change policy per se. In some cases, the workshops provided a means for individuals and teams who were already aware of EIDM to establish trust in what we do; having built up that trust, they engaged in the mentorship programme. In a few cases, individuals took up mentorships after being referred by others who had taken part in our workshops, rather than attending the workshops themselves. Even in these cases, the combination of interventions was key to the referral. We are aware that, together, our workshop and mentorship programmes have achieved more than either intervention could have achieved on its own. In Malawi, where the concept of EIDM was new, workshops and group mentoring were first needed for individuals to acquire EIDM skills before any individual mentoring could take place. This group orientation created an environment with greater acceptance of the value and practice of EIDM, and therefore made individual mentoring possible.

4. Discussion

As illustrated in Figure 5, we have identified five key elements relating to the provision of support for evidence use for development in Southern Africa. These have been integrated into a demand-side model with five dimensions to conceptualise support for the use of evidence in decision-making. This model emphasises the need for multiple levels of engagement, a combination of interventions, a spectrum of outcomes and a detailed consideration of context. It builds on and contributes to the literature on evidence-informed decision-making in a number of ways.

Figure 5. A model for increasing the use of evidence by decision-makers at multiple levels, raising their awareness, building capacity and supporting evidence use.

Unlike most conceptual scholarship on evidence-based policy-making for development, this model starts with the evidence user. In fact, it focuses exclusively on the evidence user and on interventions aiming to increase that user’s use of evidence. It thereby hopes to shift attention and currency away from the producers of evidence and to zoom in on the actors positioned to consider evidence during decision-making. If evidence-based policy-making, as it claims, concerns the making of policy and practice decisions informed by the best available evidence, then something is missing in the models that have largely focused on the supply of evidence or on the interplay between supply and demand. A model of evidence-based policy-making that does not consider and centre on the act of decision-making (or the actor as a decision-maker) risks losing legitimacy and relevance for the very institutions and individuals it aims to serve: the decision-makers. We therefore use the term evidence-informed decision-making and position this model as an attempt to conceptualise efforts to improve the demand side of evidence use. This builds in particular on the research by Newman et al. (2012), who laid out initial components required to stimulate demand for research evidence. Our contribution therefore aims to expand these components and to provide a coherent framework to map their interrelations.

While our concern here is exclusively with conceptualising demand-side interventions for evidence use by public administrations, we acknowledge the continued need for supply-side programmes and for overall meta-frameworks of the evidence use system. We hope to echo existing calls for frameworks to become less linear and to reflect more closely the realities and perspectives of all actors in the evidence-based policy-making process (Nutley et al., 2007; Best & Holmes, 2010; Stewart & Oliver, 2012).

This article aims to unpack and formalise the demand side of research use, providing a structured approach to combine and analyse a range of different interventions (e.g. training, mentoring, organisational change, co-production) in terms of their contribution to decision-makers’ use of evidence for development. If, as our model proposes, there is variation in who, what, when and how changes occur around the cycle, then it follows that more than one approach is needed to facilitate movement around it. There is clearly a need for more than one approach, and for flexibility in how these approaches are employed. Our model therefore attempts to conceptualise different demand-side mechanisms and interventions as mutually reinforcing. This is consistent with findings of systematic reviews on the impact of interventions aiming to improve the use of research evidence (Gray et al., 2013; Langer et al., 2016), which indicate, for example, that the effects of evidence-informed decision-making training programmes can be enhanced by a complementary change in management structures. Our model thus advocates increasingly understanding demand-side interventions as complementary and studying their combined effects. Such a holistic structure for, and study of, demand-side interventions might reduce the need to consult different bodies of literature and epistemic communities, as we had to when conceptualising our own capacity-building programme. Newman et al. (2012) identify six different approaches to capacity-building alone, while Langer et al. (2016) encountered 91 interventions in their systematic review of what works to increase evidence use. An overall approach to structuring and analysing these diverse interventions is therefore required.

This article highlights that interventions to encourage the use of evidence need to focus on the many stages of the cycle. By using overlapping interventions, we are able to have an impact along the dual continuums of awareness-capability-evidence use and of individuals-teams-organisations (Stewart, 2015; Stewart et al., 2018). As multiple levels are considered, the term ‘evidence-based policy-making’ becomes too narrow. For this reason, we advocate the broader, more inclusive and more realistic term ‘evidence-informed decision-making’ (EIDM, rather than EBPM). The model encourages the careful tailoring of demand-side interventions to the levels of decision-making and their associated evidence-informed decision-making needs. The model thereby allows for consideration of multiple aspects of an individual’s work: their own position on evidence-informed decision-making, their individual, team and organisational roles, and their incremental learning. It does not put them in a box; that is, it unpacks the ‘user’ to provide a more disaggregated and nuanced understanding of the different types of decision-makers working in development.

Our approach conceives of the use of evidence by decision-makers as a continuum. Just as this refutes the conception of separate and discrete interventions, it also applies to the outcomes of evidence use. By positioning evidence use as a continuum, this article attempts to counter both a strict separation of evidence-informed decision-making awareness, capacity and decision-making processes, and an equation of each of them with evidence use. This evidence use continuum builds on Newman et al.’s spectrum of evidence-informed decision-making capacity, which runs from intangible shifts in attitudes to tangible shifts in practical skills. Thus an intangible shift, such as decision-makers incorporating the question ‘where is the evidence?’ into their professional vocabulary (Dayal, 2016), is valued as much as a decision-maker searching an academic database. We also side with Newman et al. in emphasising that evidence use is not tied to the content of the final decision at practice or policy level. For instance, if during policy development decision-makers did consider rigorous evidence but opted not to formulate policy in line with its results, the policy should nevertheless be regarded as evidence-informed. Such a conception of evidence use emphasises the value of incremental changes, all of which are important and should be targeted by demand-side interventions. We thereby challenge the misconception that a new policy or a policy change is the single goal of programmes to facilitate the use of evidence and should be the measure by which their success is evaluated.

Our model leaves much room for adaptation and is in direct conversation with a number of different bodies of research. We recognise that it does not specifically include citizen evidence, nor the role of citizens in decision-making, although we would argue that it does not exclude citizens either as generators of evidence or as actors in decision-making. We hope our model may serve as an adaptable platform that others can develop further and integrate their ideas within; and, equally important, that our five elements might be integrated with existing evidence-use frameworks and encourage others to build on these. In terms of further development, there is a strong overlap between our conception of outcomes and Michie et al.'s (2011) Capability, Opportunity, Motivation–Behaviour (COM-B) framework of behaviour change. We have deliberately avoided the term 'motivation' to prevent confusion. A recent systematic review used Michie's COM-B framework to conceptualise evidence-use outcomes (Langer et al., 2016), and we would anticipate that further research could integrate this framework more closely with our five elements. The same applies to the scholarship of French et al. (2009), who have developed a detailed model of the user capacities required for the adoption of evidence-based practices in health care. Their circle of capacities sets out 15 different capacities that could be directly integrated into our proposed model.

An additional body of highly relevant research is that led by the Overseas Development Institute (ODI) and the International Network for the Availability of Scientific Publications (INASP) on how context affects evidence use. A range of recent publications (Shaxson et al., 2015; INASP, 2016; Weyrauch, 2016) has added a wealth of knowledge on the role and importance of context in the interaction between evidence and decision-making. Moreover, going beyond the mere acknowledgement that context matters greatly, as in our model, this research and capacity-building increasingly provide active advice and guidance on how to move from an analysis of contextual factors to their active integration and embedding in evidence-use interventions. Lastly, we also point to the body of scholarship on co-production and user engagement in research (Stewart & Liabo, 2012; Oliver et al., 2015; Sharples, 2015). While we have not explored this in detail in our own research and capacity-building, we would be curious to see the analytical and conceptual relevance of our model for evidence-informed decision-making interventions that foster co-production and engagement between evidence producers and users.

Naturally, this article is subject to some limitations. It is based on 20 years of experience in the development field in Southern Africa, and in particular on recent large-scale research and capacity-building conducted in two countries (Malawi and South Africa) involving a total of 14 government departments across a wide range of sectors. It also draws on the wider experience of the authors, but there is nevertheless a risk that the model may be bound by the contexts in which it was developed. In addition, as indicated above, the model is exclusively concerned with the demand for evidence and its use; the supply of evidence is treated as exogenous. We assume other scholarship to be better placed to provide a meta-framework for evidence use (Greenhalgh et al., 2004) and position our contribution as conceptualising a subset of such frameworks. In our experience, the proposed model may also be better suited to explaining how and why evidence use occurs, and what active interventions can bring it about. As a diagnostic tool to explain an existing lack of evidence use, however, the model seems to be less relevant.

Our article is also firmly anchored in the normative assumption that the use of evidence during decision-making is desirable and beneficial for development. This assumption is implicit throughout the article, and others may not share it (Hammersley, 2005). While these debates are important, we do not perceive them to have direct implications for the relevance and validity of our model.

5. Conclusion

In conclusion, we reflect on the implications of our article for future efforts to facilitate evidence use for development. We propose that future programmes should consider multiple interventions that can be combined to meet needs as individuals, teams and organisations seek to increase their awareness, capability and implementation of evidence-informed decision-making. Importantly, we highlight the need to rethink what we define as 'success' so that it is broader than just the production of an evidence-informed policy: it should extend back to all the steps between initial awareness and a decision, incorporate other kinds of decisions (such as not introducing a policy at all or not changing an existing one), and follow any decisions forward to implementation. As a consequence, we highlight the need for new approaches to the monitoring and evaluation (M&E) of interventions that aim to increase evidence use, including new approaches to evaluating their impact. We encourage others to test our model in their research and capacity-building and to continue to reflect upon and improve our understanding of how we can engage with decision-makers to increase the use of evidence.

Acknowledgements

This work builds on 20 years of experience in the field with a number of colleagues and across numerous projects. We are grateful for all we have learnt along the way. In particular, this work was supported by UK Aid from the UK Government under Grant PO 6123.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Best, A & Holmes, B, 2010. Systems thinking, knowledge and action: towards better models and methods. Evidence & Policy: A Journal of Research, Debate and Practice 6(2), 145–159. doi: 10.1332/174426410X502284

- Breckon, J & Dodson, J, 2016. Using evidence, what works? A discussion paper. Alliance for Useful Evidence, London.

- Bridgeland, J & Orszag, P, 2015. Can government play Moneyball? The Atlantic. http://www.theatlantic.com/magazine/archive/2013/07/can-government-play-moneyball/309389/

- Brinkman, SA, Johnson, SE, Codde, JP, Hart, MB, Straton, JA, Mittinty, MN & Silburn, SR, 2016. Efficacy of infant simulator programmes to prevent teenage pregnancy: a school-based cluster randomised controlled trial in Western Australia. The Lancet 388(10057), 2264–2271. doi: 10.1016/S0140-6736(16)30384-1

- Canadian Health Services Research Foundation (CHSRF), 2000. Health services research and evidence-based decision-making. CHSRF, Ottawa, CA.

- Caplan, N, 1979. The two-communities theory and knowledge utilization. American Behavioral Scientist 22(3), 459–470. doi: 10.1177/000276427902200308

- Chalmers, I, 2005. If evidence-informed policy works in practice, does it matter if it doesn't work in theory? Evidence & Policy 1(2), 227–242. doi: 10.1332/1744264053730806

- Chalmers, I & Glasziou, P, 2009. Avoidable waste in the production and reporting of research evidence. Obstetrics & Gynecology 114(6), 1341–1345. doi: 10.1097/AOG.0b013e3181c3020d

- Crewe, E & Young, MJ, 2002. Bridging research and policy: context, evidence and links. Overseas Development Institute, London.

- Dayal, H, 2016. Using evidence to reflect on South Africa's 20 years of democracy: insights from within the policy space. Knowledge Sector Initiative Working Paper 7. http://www.ksi-indonesia.org/index.php/publications/2016/03/23/82/using-evidence-to-reflect-on-south-africa-s-20-years-of-democracy.html

- French, B, Thomas, LH, Baker, P, Burton, CR, Pennington, L & Roddam, H, 2009. What can management theories offer evidence-based practice? A comparative analysis of measurement tools for organisational context. Implementation Science 4, 28. doi: 10.1186/1748-5908-4-28

- Gaarder, MM & Briceño, B, 2010. Institutionalisation of government evaluation: balancing trade-offs. Journal of Development Effectiveness 2(3), 289–309. doi: 10.1080/19439342.2010.505027

- Goldman, I, Mathe, JE, Jacob, C, Hercules, A, Amisi, M, Buthelezi, T, Narsee, H, Ntakumba, S & Sadan, M, 2015. Developing South Africa’s national evaluation policy and system: first lessons learned. African Evaluation Journal 3(1), 1–9.

- Graham, ID & Tetroe, JM, 2009. Getting evidence into policy and practice: perspective of a health research funder. Journal of the Canadian Academy of Child and Adolescent Psychiatry 18, 46–50.

- Gray, M, Joy, E, Plath, D & Webb, SA, 2013. Implementing evidence-based practice: A review of the empirical research literature. Research on Social Work Practice 23(2), 157–166. doi: 10.1177/1049731512467072

- Greenhalgh, T, Robert, G, Macfarlane, F, Bate, P & Kyriakidou, O, 2004. Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Quarterly 82(4), 581–629. doi: 10.1111/j.0887-378X.2004.00325.x

- Hammersley, M, 2005. Is the evidence-based practice movement doing more good than harm? Reflections on Iain Chalmers' case for research-based policy making and practice. Evidence & Policy 1(1), 85–100. doi: 10.1332/1744264052703203

- INASP, 2016. Evidence-informed policy-making tool kit. INASP, Oxford. http://www.inasp.info/uploads/filer_public/cc/4b/cc4bd494-645e-4bc0-b07e-2261213a3ac2/introduction_to_the_toolkit.pdf

- Jones, H, Jones, N, Shaxson, L & Walker, D, 2012. Knowledge, policy and power in international development: a practical guide. Policy Press, London.

- Langer, L, Tripney, J & Gough, D, 2016. The science of using science: researching the use of research evidence in decision-making. EPPI-Centre, Social Science Research Unit, UCL Institute of Education, University College London, London.

- Lavis, JN, Robertson, D, Woodside, JM, McLeod, CB & Abelson, J, 2003. How can research organisations more effectively transfer knowledge to decision makers? Milbank Quarterly 81(2), 221–248. doi: 10.1111/1468-0009.t01-1-00052

- Lomas, J, 2000. Using linkage and exchange to move research into policy at a Canadian Foundation. Health Affairs 19(3), 236–240. doi: 10.1377/hlthaff.19.3.236

- Michie, S, van Stralen, MM & West, R, 2011. The behaviour change wheel: A new method for characterising and designing behaviour change interventions. Implementation Science 6, 42. doi: 10.1186/1748-5908-6-42

- Milne, BJ, Lay-Yee, R, McLay, J, Tobias, M, Tuohy, P, Armstrong, A, Lynn, R, Pearson, J, Mannion, O & Davies, P, 2014. A collaborative approach to bridging the research-policy gap through the development of policy advice software. Evidence & Policy 10(1), 127–136. doi: 10.1332/174426413X672210

- Newman, K, Fisher, C & Shaxson, L, 2012. Stimulating demand for research evidence: what role for capacity building. IDS Bulletin 43(5), 17–24. doi: 10.1111/j.1759-5436.2012.00358.x

- Newman, K, Capillo, A, Famurewa, A, Nath, C & Siyanbola, W, 2013. What is the evidence on evidence informed policymaking? Lessons from the International Conference on Evidence Informed Policy Making, International Network for the Availability of Scientific Publications (INASP), Oxford.

- Nutley, SM, Walter, I & Davies, HTO, 2007. Using evidence: How research can inform public services. Policy Press, Oxford.

- Oliver, K, Innvar, S, Lorenc, T, Woodman, J & Thomas, J, 2014. A systematic review of barriers to and facilitators of the use of evidence by policymakers. BMC Health Services Research 14, 2. doi: 10.1186/1472-6963-14-2

- Oliver, K, Rees, R, Brady, LM, Kavanagh, J, Oliver, S & Thomas, J, 2015. Broadening public participation in systematic reviews: A case example involving young people in two configurative reviews. Research Synthesis Methods 6(2), 206–217. doi: 10.1002/jrsm.1145

- Paine Cronin, G & Sadan, M, 2015. Use of evidence in policy making in South Africa: An exploratory study of attitudes of senior government officials. African Evaluation Journal 3(1), 1–10. doi: 10.4102/aej.v3i1.145

- Petrosino, A, Turpin-Petrosino, C & Buehler, J, 2003. Scared straight and other juvenile awareness programs for preventing juvenile delinquency: A systematic review of the randomized experimental evidence. The Annals of the American Academy of Political and Social Science 589(1), 41–62. doi: 10.1177/0002716203254693

- Rutter, J & Gold, J, 2015. Show your workings: Assessing how government uses evidence to make policy. Institute for Government, London.

- Sedley, S, 2016. Missing evidence: an inquiry into the delayed publication of government-commissioned research. https://researchinquiry.org/inquiry-report/

- Sharples, J, 2015. Developing an evidence-informed support service for schools – reflections on a UK model. Evidence & Policy 11(4), 577–587. doi: 10.1332/174426415X14222958889404

- Shaxson, L, 2014. Investing in evidence. Lessons from the UK Department for Environment, Food and Rural Affairs. http://www.ksi-indonesia.org/files/1421384737$1$QBTM0U$.pdf

- Shaxson, L, Datta, A, Matomela, B & Tshangela, M, 2015. Supporting evidence approaching evidence: Understanding the organisational context for an evidence-informed policymaking. DEA and VakaYiko, Pretoria.

- Stewart, R, 2014. Changing the world one systematic review at a time: A new development methodology for making a difference. Development Southern Africa 31(4), 581–590. doi: 10.1080/0376835X.2014.907537

- Stewart, R, 2015. A theory of change for capacity building for the use of research evidence by decision makers in Southern Africa. Evidence & Policy 11(4), 547–557. doi: 10.1332/174426414X1417545274793

- Stewart, R, Langer, L, Wildemann, R, Maluwa, L, Erasmus, Y, Jordaan, S, Lötter, D, Mitchell, J & Motha, P, 2018. Building capacity for evidence-informed decision-making: an example from South Africa. Evidence & Policy 14(2), 241–258. doi: 10.1332/174426417X14890741484716

- Stewart, R & Liabo, K, 2012. Involvement in research without compromising research quality. Journal of Health Services Research and Policy 17(4), 248–251. doi: 10.1258/jhsrp.2012.011086

- Stewart, R & Oliver, S, 2012. Making a difference with systematic reviews. In Gough, D, Oliver, S & Thomas, J (eds), An introduction to systematic reviews. Sage, London.

- Uneke, CJ, Ezeoha, AE, Ndukwe, CD, Oyibo, PG, Onwe, F, Igbinedion, EB & Chukwu, PN, 2011. Individual and organisational capacity for evidence use in policy making in Nigeria: an exploratory study of the perceptions of Nigeria health policy makers. Evidence & Policy 7(3), 251–276. doi: 10.1332/174426411X591744

- Walter, I, Nutley, S & Davies, H, 2005. What works to promote evidence-based practice? A cross-sector review. Evidence & Policy 1(3), 335–364. doi: 10.1332/1744264054851612

- Weiss, CH, 1979. The many meanings of research utilization. Public Administration Review 39(5), 426–431. doi: 10.2307/3109916

- Weyrauch, V, 2016. Knowledge into policy: Going beyond context matters. http://www.politicsandideas.org/wp-content/uploads/2016/07/Going-beyond-context-matters-Framework_PI.compressed.pdf