?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

We propose a novel variational Bayes approach to estimate high-dimensional Vector Autoregressive (VAR) models with hierarchical shrinkage priors. Our approach does not rely on a conventional structural representation of the parameter space for posterior inference. Instead, we elicit hierarchical shrinkage priors directly on the matrix of regression coefficients so that (a) the prior structure maps into posterior inference on the reduced-form transition matrix and (b) posterior estimates are more robust to variables permutation. An extensive simulation study provides evidence that our approach compares favorably against existing linear and nonlinear Markov chain Monte Carlo and variational Bayes methods. We investigate the statistical and economic value of the forecasts from our variational inference approach for a mean-variance investor allocating her wealth to different industry portfolios. The results show that more accurate estimates translate into substantial out-of-sample gains across hierarchical shrinkage priors and model dimensions.

1 Introduction

Hierarchical shrinkage priors have been shown to represent an effective regularization technique when estimating large Vector Autoregressive (VAR) models. The use of these priors often relies on a Cholesky decomposition of the residuals covariance matrix so that a large system of equations is reduced to a sequence of univariate regressions. This allows for more efficient computations as priors can be elicited on the structural VAR representation implied by the Cholesky factorization, and posterior inference is carried out equation-by-equation.

Such a conventional approach has two important implications for posterior inference: first, priors are not order-invariant, meaning that posterior inference is sensitive to permutations of the endogenous variables for a given prior specification. This is particularly relevant in high dimensions whereby logical orders of the variables of interest might be unclear, or a full search among all possible ordering combinations might be unfeasible (see, e.g., Chan, Koop, and Yu Citation2023). Second, imposing a shrinkage prior to the structural VAR formulation might not help to pin down reduced-form VAR parameters. That is, priors are not translation-invariant. This is especially relevant in forecasting applications whereby the main objective is to accurately identify predictive relationships across variables rather than to identify structural shocks.

In this article, we take a different approach toward posterior inference with hierarchical shrinkage priors in large VAR models. Specifically, we propose a novel variational Bayes estimation approach which allows for a fast and accurate estimation of the reduced-form VAR without leveraging on a conventional structural VAR representation. This allows us to elicit shrinkage priors directly on the matrix of regression coefficients so that (a) the prior structure directly maps into the posterior inference of the reduced-form transition matrix and (b) posterior estimates are less sensitive to variable permutation. We also account for the effect of “exogenous” predictors and stochastic volatility in the residuals.

The key idea is that by abstracting from the linearity constraints implied by a structural VAR formulation, one can provide a direct identification of the reduced-form VAR parameters. This could have important implications for forecasting, especially in large-scale models where the set of regression coefficients may be sparse (see, e.g., Bernardi, Bianchi, and Bianco Citation2023). Our approach is computationally more efficient than comparable Markov chain Monte Carlo (MCMC) methods while maintaining a high accuracy in posterior estimates.

We investigate the estimation accuracy using an extensive simulation study for different model dimensions and variable permutations. As benchmarks, we consider a variety of established estimation approaches developed for large Bayesian VAR models, such as the linearized MCMC proposed by Chan and Eisenstat (Citation2018) and Cross, Hou, and Poon (Citation2020) and its variational Bayes counterpart proposed by Chan and Yu (Citation2022) and Gefang, Koop, and Poon (Citation2023). Both approaches are built upon a structural VAR formulation. In addition, we compare our variational inference method against the MCMC algorithm developed by Gruber and Kastner (Citation2022), which is not constrained by a Cholesky factorization for parameter identification similar to our approach. We test each estimation method for different hierarchical priors, such as the adaptive-Lasso of Leng, Tran, and Nott (Citation2014), an adaptive version of the Normal-Gamma of Griffin and Brown (Citation2010), and the Horseshoe of Carvalho, Polson, and Scott (Citation2010).

Overall, the simulation results show that our variational Bayes algorithm represents the best tradeoff between estimation accuracy and computational efficiency. Specifically, posterior inference from our variational Bayes method is as accurate as nonlinear MCMC methods (see, e.g., Gruber and Kastner Citation2022) but is considerably more efficient. At the same time, our approach is as efficient as conventional MCMC and variational Bayes methods based on a structural VAR formulation but is significantly more accurate and less sensitive to variable permutation.

Our approach toward posterior inference in large VARs is guided by the principle that more accurate identification of the reduced-form transition matrix should ultimately lead to better out-of-sample forecasts and financial decision-making. To test this assumption, we investigate the statistical and economic value of the forecasts from our model in the context of a mean-variance investor who allocates her wealth between an industry portfolio and a risk-free asset based on lagged cross-industry returns and macroeconomic predictors.

Although the model is general and can be applied to any type of financial returns, as far as data are stationary, our focus on different industry portfolios is motivated by keen interest from researchers (see, e.g., Fama and French Citation1997; Hou and Robinson Citation2006) and practitioners alike. Indeed, the implications of industry returns predictability are arguably far from trivial. If all industries are unpredictable, the market return, a weighted average of the industry portfolios, should also be unpredictable. As a result, the abundant evidence of aggregate market return predictability (see, e.g., Rapach and Zhou Citation2013) implies that at least some industry portfolio returns should be predictable.

The main results show that our variational inference approach fares better than competing methods regarding out-of-sample point and density forecasts. More accurate forecasts translate into larger economic gains as measured by certainty equivalent return spreads vis-á-vis a naive investor who makes investment decisions based on the sample mean and variance of the returns. This holds across different hierarchical prior specifications. Overall, the empirical results support our view that by accurately identifying weak correlations between predictors and portfolio returns, one can significantly improve—statistically and economically—the out-of-sample performance of large-scale VAR models.

Our article connects to a growing literature exploring the use of Bayesian methods to estimate high-dimensional VAR models, such as Chan and Eisenstat (Citation2018), Carriero, Clark, and Marcellino (Citation2019), Huber and Feldkircher (Citation2019), Chan and Yu (Citation2022), Cross, Hou, and Poon (Citation2020), Kastner and Huber (Citation2020), Chan, Koop, and Yu (Citation2023), Chan (Citation2021), Gruber and Kastner (Citation2022), and Gefang, Koop, and Poon (Citation2023), among others. We contribute to this literature by providing a fast and accurate variational inference method which generalizes posterior inference with hierarchical shrinkage priors by abstracting from a conventional structural VAR representation.

A second strand of literature we contribute to is related to the predictability of stock returns. Specifically, we contribute to the ongoing struggle to capture the dynamics of risk premiums by looking at industry-based portfolios. Early exceptions are Ferson and Harvey (Citation1991), Ferson and Korajczyk (Citation1995), Ferson and Harvey (Citation1999) and Avramov (Citation2004). As highlighted by Lewellen, Nagel, and Shanken (Citation2010), the sample variation of industry portfolios is particularly elusive to model since conventional risk factors do not seem to capture significant comovements. We contribute to this literature by investigating the out-of-sample predictability of industry portfolios through the lens of a novel estimation method for large Bayesian VAR models.

2 The Choice of Model Parameterization

Let be a multivariate normal random variable and denote by

a vector of covariates at time t. A vector autoregressive model with exogenous covariates and stochastic volatility is defined in compact form as

(1)

(1) with

and

consistently partitioned, where

is the transition matrix containing the autoregression coefficients and

is the matrix of regression parameters for the exogenous predictors. Here,

is a sequence of uncorrelated innovation terms such that

with

and

being a symmetric and positive-definite time-varying precision matrix. A modified Cholesky factorization of

can be conveniently exploited to re-write the model with orthogonal innovations (see, e.g., Rothman, Levina, and Zhu Citation2010).

Let , where

is unit-lower-triangular and

is diagonal with time-varying elements

. By multiplying both sides of (1) by

one can obtain two alternative re-parameterizations of the same model:

(2a)

(2a)

(2b)

(2b) where

, and B has a strict-lower-triangular structure with elements

for

and

. The key difference is that (2a) is nonlinear in the parameters, while (2b) is linear. The latter is the structural VAR representation, widely used in existing MCMC and variational Bayes estimation methods for high-dimensional VAR models (see, e.g., Chan and Eisenstat Citation2018; Chan and Yu Citation2022; Gefang, Koop, and Poon Citation2023). Instead, (2a) is the reduced-form parameterization at the core of our variational inference approach. This has been used in the context of MCMC algorithms for smaller dimensions (see, e.g., Huber and Feldkircher Citation2019; Gruber and Kastner Citation2022).

From (2a)–(2b) one can obtain two alternative equation-by-equation representations in which the jth component of is defined as

(3a)

(3a)

(3b)

(3b) for all

and

, where

is a row vector containing the non-null elements in the jth row of B, and

denote the jth row of

and A, respectively. For any

, let

denotes the vector of residuals up to the

th regression, with

and

the sub-matrix containing the first j–1 rows of

. We follow Gefang, Koop, and Poon (Citation2023) and Chan and Yu (Citation2022) and model the time variation in

assuming a log-volatility process

with

, where the initial state

, is unknown.

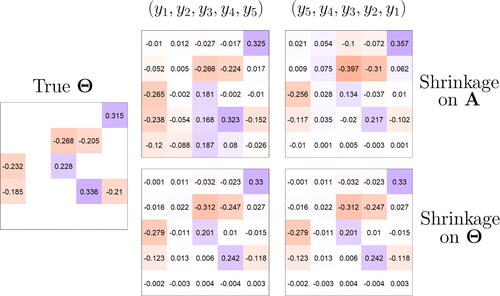

A discussion on variable permutation

Existing Bayesian approaches for large VAR models often rely on the structural representation in (2b), and therefore consider the elements in A as the parameters of interest. This simplifies the implementation of MCMC (see, e.g., Chan and Eisenstat Citation2018) and variational Bayes algorithms (see, e.g., Gefang, Koop, and Poon Citation2023). Under the re-parameterization , each element

–which denotes the (i, j)-entry of

–is a linear combination

, where

and

are the (i, j)-entry of A and

, respectively.

This raises two main issues: first, does not imply

, that is a shrinkage prior on A does not preserve the structure of

. Second, the estimate

for a given prior is sensitive to variables permutation due to its dependence on the Cholesky factorization (see, e.g., Chan, Koop, and Yu Citation2023). provides a visual representation of this issue by comparing the estimates obtained from a horseshoe prior on (2a) versus (2b), for two different permutations of

.

Fig. 1 Comparison between the posterior inference obtained from (first row) and the original parameterization

(second row), for two different permutations of

.

This simple example suggests that the estimates diverge from the true

and are sensitive to variable permutation. Instead, inference based on (2a) provides a more accurate identification of

, less sensitive to variable permutation.

3 Variational Bayes Inference

A variational approach to Bayesian inference requires to minimize the Kullback-Leibler () divergence between an approximating density

and the true posterior density

, where

denotes the set of parameters of interest. Ormerod and Wand (Citation2010) show that minimizing the

divergence can be equivalently stated as the maximization of the “effective lower bound” (ELBO) denoted by

:

(4)

(4) where

represents the optimal variational density and

is a space of density functions. Depending on the assumption on

, one falls into different variational paradigms. For instance, given a partition of the parameters vector

, a mean-field variational Bayes (MFVB) approach assumes a factorization of the form

. A closed-form expression for each optimal variational density

can be defined as

(5)

(5)

where the expectation is taken with respect to the joint approximating density with the jth element of the partition removed

. This allows the implementation of an efficient iterative algorithm to estimate the optimal density

. However, some components

may remain too complex to handle and further restrictions are needed. If we assume that

belongs to a pre-specified parametric family of distributions, the MFVB outlined above is sometimes labeled as semi-parametric (see Rohde and Wand Citation2016).

3.1 Optimal Variational Densities

We present a factorization of the variational density for the model in (2a). As a baseline, we consider a non-informative Normal prior for each entry of

; that is,

, for

and

. In addition, let

for

, and

, for

and

. Here,

denotes the Inverse-Gamma distribution, and

and

are the related hyper-parameters.

Let be the set of parameters of interest, the corresponding variational density can be factorized as

, where:

(6)

(6)

For ease of exposition, we summarize in the main text the optimal variatonal density for the main parameters of interest for the baseline non-informative prior and three alternative hierarchical shrinkage priors. The full derivations of the optimal variational densities

, and

are reported in Appendix B as Proposition B.1.1, B.1.7, and B.1.4, respectively. We leave to Proposition B.1.3 in Appendix B the derivations for the constant volatility case with

and

for

, where

denotes the gamma distribution, and

. Appendix B also provides the analytical form of the lower bound for each set of parameters.

Proposition 3.1 provides the optimal variational density for the jth row of under the Normal prior specification

. The proof and analytical derivations are reported in Appendix B.1.

Proposition 3.1.

The optimal variational density for is

with hyper-parameters:

(7)

(7) where

and

denotes the jth row of

Note that although the multivariate model is reduced to a sequence of univariate regressions, the analytical form of the variational means in Proposition 3.1 depends on all the other rows through

. As a result, the estimates of

explicitly depend on all of the other

. This addresses the issue in the MCMC algorithm of Carriero, Clark, and Marcellino (Citation2019), which has been highlighted by Bognanni (Citation2022) and corrected by Carriero et al. (Citation2022).

Bayesian adaptive-Lasso

The Bayesian adaptive-Lasso of Leng, Tran, and Nott (Citation2014) extends the original work of Park and Casella (Citation2008) by assuming a different shrinkage for each regression parameter based on a Laplace distribution with an individual scaling parameter , for

and

. The latter can be represented as a scale mixture of Normal distributions with an exponential mixing density,

. The scaling parameters

are not fixed but are inferred from the data by assuming a common hyper-prior distribution

, where

.

Let be the vector

augmented with the adaptive-Lasso prior parameters. The distribution

can be factorized as,

(8)

(8)

Proposition 3.2 provides the optimal variational density for the jth row of under the Bayesian adaptive-Lasso prior specification

, and

. The proof and the analytical derivations are reported in Appendix B.2.

Proposition 3.2.

The optimal variational density for is

with

, where

is a diagonal matrix with elements

. The parameters

and

are as in Proposition 3.1. The optimal variational densities of the scaling parameters are

with

defined in (B.20), and

with

defined in (B.19).

Adaptive Normal-Gamma

We expand the original Normal-Gamma prior in Griffin and Brown (Citation2010) by assuming that each regression coefficient has a different shrinkage parameter. The hierarchical specification requires that , and

for

and

. Note that by restricting

one obtains the adaptive-Lasso prior. The marginalization over the variance

leads to

which corresponds to a Variance-Gamma distribution. The hyper-parameters ηj and

are not fixed but are inferred from the data by assuming two common hyper-priors

and

, where

for l = 1, 2, 3.

Let be the vector

augmented with the parameters of the adaptive Normal-Gamma prior. The joint distribution

can be factorized as,

(9)

(9)

Proposition 3.3 provides the optimal variational density for the jth row of under an adaptive Normal-Gamma specification

and

. The proof and analytical derivations are reported in Appendix B.3.

Proposition 3.3.

The optimal variational density for is

with

, where

is a diagonal matrix with elements

. The parameters

and

are as in Proposition 3.1. The optimal variational densities of the scaling parameters are

with

defined in (B.24), and

is a generalized inverse normal distribution with

defined in (B.23).

The optimal density for the parameter ηj is not a known distribution function. Proposition B.3.3 in Appendix B.3 provides an analytical approximation of its moments so that the optimal density can be calculated via numerical integration.

Horseshoe prior

We consider the Horseshoe prior as proposed by Carvalho, Polson, and Scott (Citation2009, Citation2010). This is based on the hierarchical specification , where

denotes the standard half-Cauchy distribution with probability density function equal to

. The Horseshoe is a global-local prior that implies an aggressive shrinkage of weak signals without affecting the strong ones (see, e.g., Polson and Scott Citation2011). We follow Wand et al. (Citation2011) and leverage on a scale mixture representation of the half-Cauchy distribution as,

(10)

(10) where the local and global shrinkage parameters are

and

, respectively.

Let be the vector

augmented with the parameters of the Horseshoe prior. The joint distribution

can be factorized as,

(11)

(11)

Proposition 3.4 provides the optimal variational density for the jth row of under the Horseshoe prior outlined in (10). The proof and analytical derivations are reported in Appendix B.4.

Proposition 3.4.

The optimal variational density for is

with

, where

is a diagonal matrix with elements

. The parameters

and

are as in Proposition 3.1. The optimal variational densities for the global shrinkage is

with

defined in (B.33), and

with

defined in (B.35). The optimal variational densities for the local shrinkage parameters are

and

, with

and

defined in (B.32) and (B.34), respectively.

3.2 From Shrinkage to Sparsity

In addition to computational tractability, shrinking rather than selecting is a defining feature of the hierarchical priors outlined in Section 3.1. Yet, posterior estimates of are non-sparse and thus can not provide exact differentiation between significant versus nonsignificant predictors. The latter is particularly relevant since we ultimately want to assess the accuracy of our variational inference approach—versus existing MCMC and variational Bayes algorithms—in identifying the exact structure of

.

To address this issue, we build upon Ray and Bhattacharya (Citation2018) and implement a Signal Adaptive Variable Selector (SAVS) algorithm to induce sparsity in , conditional on a given prior. The SAVS is a post-processing algorithm which divides signals and nulls on the basis of the point estimates of the regression coefficients (see, e.g., Hauzenberger, Huber, and Onorante Citation2021). Specifically, let

be the posterior estimate of

and

be the associated vector of covariates. If

we set

, where

denotes the Euclidean norm.

The reason why we rely on the SAVS post-processing to induce sparsity in the posterior estimates is threefold. First, as highlighted by Ray and Bhattacharya (Citation2018), the SAVS represents an automatic procedure in which the sparsity-inducing property directly depends on the effectiveness of the shrinkage performed on . This refers to the precision of the posterior mean estimates; that is, the more accurate is

, the more precise is the identification of the nonzero elements in

. Second, the SAVS is “agnostic” with respect to the shrinkage prior or estimation approach adopted, so it represents a natural tool to compare different estimation methods. Third, it is decision-theoretically motivated as it grounds on the idea of minimizing the posterior expected loss (see, e.g., Huber, Koop, and Onorante Citation2021).

In addition to SAVS, we expand on Hahn and Carvalho (Citation2015) and provide a multivariate extension to their least-angle regression originally built for univariate regressions. Appendix D.2 provides the full derivation as well as a complete discussion of the drawbacks compared to SAVS. In addition, Appendix D.2 reports the results of a direct comparison between the SAVS and our multivariate extension to Hahn and Carvalho (Citation2015) based on simulated data.

3.3 Variational Predictive Density

Consider the posterior distribution given the information set

and the conditional likelihood

. A standard predictive density takes the form,

(12)

(12)

Given an optimal variational density that approximates

, we follow Gunawan, Kohn, and Nott (Citation2020) and obtain the variational predictive distribution

(13)

(13)

Although an analytical expression for (13) is not available, a simulation-based estimator for can be obtained through Monte Carlo integration by averaging

over the draws

, such that

. Note that a complete characterization of the optimal variational predictive density entails

with

. Proposition 3.5 shows that conditional on L and

, the optimal distribution of

can be approximated by a d-dimensional Wishart distribution

, where δt and

are the degrees of freedom parameter and the scaling matrix, respectively.

Proposition 3.5.

The approximate distribution of

is

, where the scaling matrix is given by

and

can be obtained numerically as the solution of a convex optimization problem.

The complete proof is available in Appendix C.1 and is based on the Expectation Propagation (EP) approach proposed by Minka (Citation2001). In order to implement this approach, there is no need to know , but it is sufficient to be able to compute

. The latter can be reconstructed based on the optimal variational densities of the Cholesky factor

–and therefore for L–and of

. The simulation results in Appendix C.1 show that the proposed Wishart distribution provides an accurate approximation of

for both small and large dimensional models.

Based on Proposition 3.5, we can further simplify (13) by integrating such that

(14)

(14) where

denotes the probability density function of a multivariate Student-t distribution

with mean

, scaling matrix

, and degrees of freedom parameter

. As a result, the predictive distribution can be more efficiently approximated by averaging the density of the multivariate Student-t

over the draws

, for

, such that

.

Note that the main advantage of the approximation obtained from Proposition 3.5 is to allow for a considerably faster computation of the variational predictive density, compared to using and

as stationary distributions to sample

, similar to an MCMC. This is because the scaling matrix of the Wishart distribution is available in closed form, and the computation of degrees of freedom requires only a one-dimensional optimization. In Appendix C.2, we discuss a further simplification that minimizes the KL divergence between the multivariate Student-t and a multivariate Normal distribution.

4 Simulation Study

In this section, we report the results of an extensive simulation study designed to compare the properties of our estimation approach against both MCMC and variational Bayes methods for large VAR models. To begin, we compare our VB algorithm against the MCMC approach of Chan and Eisenstat (Citation2018) and Cross, Hou, and Poon (Citation2020) and the variational inference algorithm proposed by Chan and Yu (Citation2022) and Gefang, Koop, and Poon (Citation2023). Both approaches are built upon the structural VAR representation in (2b). In addition, we also compare our VB method against the MCMC approach developed by Huber and Feldkircher (Citation2019) and Gruber and Kastner (Citation2022), which is based upon a nonlinear parameterization as in (2a).

For comparability with Gruber and Kastner (Citation2022) and Gefang, Koop, and Poon (Citation2023), which do not consider the presence of exogenous predictors, we consider a standard VAR(1) as a data generating process. Consistent with the empirical implementations, we set T = 360 and d = 30, 49. The choice of d is due to the two alternative industry classifications explored in the main empirical analysis. We assume either a moderate—50% of zeros—or a high—90% of zeros—level of sparsity in the true matrix . The latter is generated as follows: we fix to zero

entries at random, with

and d = 30, 49, and the remaining nonzero coefficients are sampled from a mixture of two normal distributions with means equal to ±0.08 and standard deviation 0.1. Appendix D provides additional details on the data-generating process and additional simulation results for d = 15.

4.1 Estimation Accuracy

As a measure of point estimation accuracy, we first look at the Frobenius norm . The latter measures the difference between the true

observed at each simulation and its estimate

. In addition, we compare the ability of each estimation method to identify the nonzero elements in the true

based on the F1 score. This is expressed as a function of counts of true positives (tp), false positives (fp) and false negatives (fn),

The F1 score takes value one if identification is perfect, that is, no false positives and no false negatives, and zero if there are no true positives. We compute both measures of estimation accuracy on N = 100 replications and compare each estimation method and prior specification. The estimates from the MCMC specifications are based on 5000 posterior simulations after discarding the first 5000 as a burn-in sample.

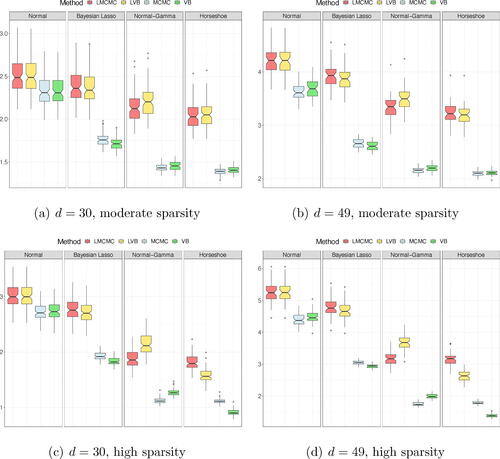

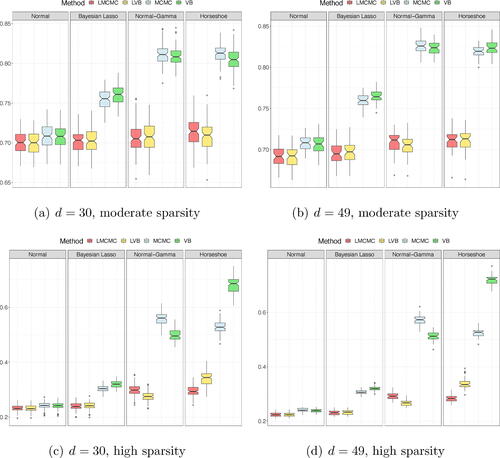

Point estimates

shows the box charts summarizing the Frobenius norm across N = 100 replications. We label the linearized MCMC and variational methods with LMCMC and LVB, respectively, with MCMC the nonlinear method of Gruber and Kastner (Citation2022) and with VB our variational inference method. To increase readability, we separate the results by prior and color-coding the four estimation methods. For instance, for a given subplot, we report the results for the Normal, adaptive-Lasso, adaptive Normal-Gamma and Horseshoe priors from the left to the right panel. Within each panel, the simulation results for the LMCMC, LVB, MCMC, and VB estimates are reported in red, yellow, light blue, and green, respectively.

Fig. 2 Frobenius norm of across N = 100 replications, for different shrinkage priors and different inference methods.

Beginning with the moderate sparsity case (top panels), the simulation results show that LMCMC and LVB approaches tend to perform equally across different shrinkage priors, with the only exception of the Normal-Gamma prior, whereby LMCMC slightly outperforms LVB. However, the discrepancy between the two structural VAR representation methods increases when sparsity becomes more pervasive (see bottom panels).

Overall, the simulation results support our view that by eliciting shrinkage priors directly on —as per the parameterization in (2a)—the accuracy of the posterior estimates improves. The mean squared errors obtained from MCMC and VB are lower compared to both LMCMC and LVB. This holds for all priors and model dimensions. The accuracy with d = 30 of the MCMC and VB is virtually the same. Yet, with d = 49, our VB is slightly more accurate than MCMC for the adaptive-Lasso and the Horseshoe prior.

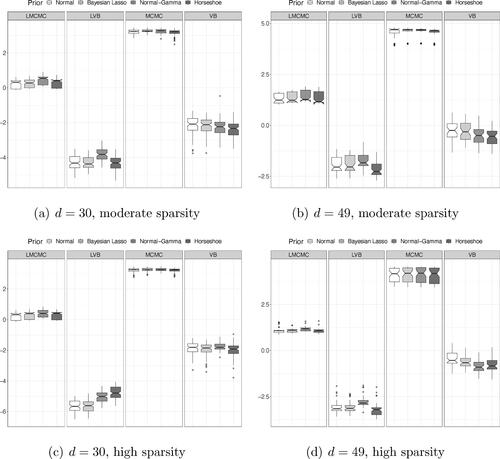

Sparsity identification

shows the box charts of F1 scores across N = 100 simulations. The labeling is the same as in . Both LMCMC and LVB produce a rather dismal identification of the nonzero elements in across prior and model dimensions. This is due to the fact that

in (2b) so that a sparse

does not translate into a sparse

, and thus is less accurate in identifying the nonzero coefficients in the true

. When the level of sparsity increases, so does the divergence between A and

.

Fig. 3 F1 score computed across N = 100 replications by looking at the true non-null parameters in and the non-null parameters estimated based on

.

Consistent with our argument in favor of the parameterization in (2a), both the MCMC and VB approaches produce a more accurate identification of the nonzero coefficients in , as shown by the F1 score. The gap between LMCMC, LVB, versus MCMC and VB becomes larger for higher levels of sparsity. This result holds across different hierarchical shrinkage priors and for different model dimensions. Yet, our VB approach turns out to be more accurate than MCMC under the adaptive-Lasso and Horseshoe priors for higher sparsity levels.

Note that sparsity in the posterior estimates for for different hierarchical shrinkage priors is induced in the simulation results by using the SAVS algorithm of Ray and Bhattacharya (Citation2018). Appendix D.2 provides additional simulation results obtained by implementing a multivariate version of the post-processing method proposed by Hahn and Carvalho (Citation2015) as an alternative to the SAVS. The F1 scores are largely the same across methods; in fact, the evidence is even more in favor of our VB, compared to its MCMC counterpart when using the extended Hahn and Carvalho (Citation2015) approach: our VB is more accurate than MCMC with a Normal-Gamma prior.

Computational efficiency

reports the computational time—expressed in a log-minute scale—required by each competing estimation approach under different shrinkage priors. To highlight the performance for a given prior, we separate the results by estimation methods and color-coding the four different shrinkage priors. For instance, for a given subplot, we report the results for the LMCMC, LVB, MCMC, and VB estimates from left to right panel. Within each panel, the Normal, adaptive-Lasso, adaptive Normal-Gamma, and Horseshoe priors are colored in shades of gray from light (left) to dark (right) gray, respectively. To guarantee more reliable comparability, we re-coded all competing methods in Rcpp and used the same 2.5 GHz Intel Xeon W-2175 with 32GB of RAM for all implementations. This allows us to compare all methods on an equal footing.

Fig. 4 Computational time required by each estimation approach for different hierarchical shrinkage priors. The time is expressed on a log-minute scale.

The results highlight that our VB approach has a clear computational advantage compared to linear and nonlinear MCMC methods. For instance, for d = 30, our VB is more than 100 times faster than the MCMC of Gruber and Kastner (Citation2022) and more than 10 times faster than the LMCMC of Cross, Hou, and Poon (Citation2020), respectively. The gap in favor of our VB method increases in larger dimensions; for d = 49, the MCMC approach takes on average almost 60 min to generate posterior estimates which are comparably accurate to our VB, which instead takes on average between 30–40 sec for the estimate. Such an efficiency gap has profound implications for a practical forecasting implementation, especially within the context of recursive predictions with higher frequency data such as stock returns (see Section 5.2). Perhaps not surprisingly, the LVB approach of Chan and Yu (Citation2022) and Gefang, Koop, and Poon (Citation2023) is highly competitive in terms of computational efficiency. However, being built on a structural VAR formulation, and show that the computational efficiency of the LVB approach comes at the cost of substantially lower estimation accuracy.

Appendix E.1 provides a further qualitative discussion on the computational costs of some of the existing MCMC approaches. We review some of the results reported in the original papers and show that these largely align with our own findings. In addition, we also discuss some of the limitations of the nonlinear MCMC for the recursive forecasting implementation.

Robustness to variable permutation

At the paper’s outset, we argue that a conventional structural VAR formulation potentially generates posterior estimates sensitive to variable permutation. That is posterior estimates of depend on the ordering imposed on the target variables

, conditional on a given prior. To highlight this issue, in Appendix D, we report additional simulation results for all estimation methods and shrinkage priors under variable permutation. The results show that the accuracy of the posterior estimates from both LMCMC and LVB changes once the variable’s ordering is reversed (see ). This is especially evident for the Normal-Gamma and Horseshoe priors and when zero coefficients in

are more pervasive. On the other hand, the estimation accuracy of both the MCMC approach of Gruber and Kastner (Citation2022) and our VB method do not substantially deteriorate by arbitrarily changing the ordering of the target variables.

Note that although our approach provides estimates and point predictions that are robust with respect to variable ordering, density forecasts might differ. To address this issue, Arias, Rubio-Ramirez, and (Citation2023) propose a time-varying correlation matrix model based on the parameterization of Archakov and Hansen (Citation2021). Still, the latter is computationally intensive and may not be suitable for large datasets. As shown by Chan, Koop, and Yu (Citation2023), a decomposition , where L is an unrestricted square matrix rather than lower-triangular, represent a valid alternative toward permutation invariance in high-dimensional settings.

5 A Empirical Study of Industry Returns Predictability

We investigate both the statistical and economic value of our variational Bayes approach within the context of US industry returns predictability. To expand the scope of the empirical exercise, we consider two alternative industry aggregations: d = 30 industry portfolios from July 1926 to May 2020 and a larger cross-section of d = 49 industry portfolios from July 1969 to May 2020. The size of the cross sections changes due to a different industry classification. At the end of June in year t, each NYSE, AMEX, and NASDAQ stock is assigned to an industry portfolio based on its four-digit SIC code. Thus, the returns on a given value-weighted portfolio are computed from July of t to June of t + 1. The sample periods cover major events, from the great depression to the Covid-19 outbreak.

In addition to cross-industry portfolio returns, we consider a variety of predictors, such as the returns on the market portfolio (mkt), and the returns on four alternative long-short investment strategies based on market capitalization (smb), book-to-market ratios (hml), operating profitability (rmw) and firm investments (cma) (see Fama and French Citation2015). We also consider a set of additional macroeconomic predictors from Goyal and Welch (Citation2008), such as the log price-dividend ratio (pd), the difference between the long term yield on government bonds and the T-bill (term), the BAA-AAA bond yields difference (credit), the monthly log change in the CPI (infl), the aggregate market book-to-market ratio (bm), the net-equity issuing activity (ntis) and the corporate bond returns (corpr).

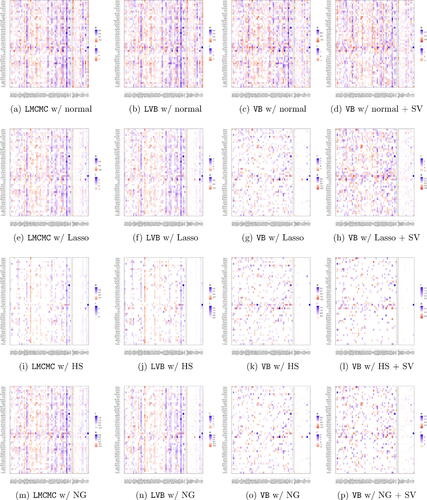

5.1 In-Sample Parameter Estimates

In order to highlight some of the main properties of each method, we first report the in-sample estimates of for the d = 49 industry case for all priors. compares

based on the full sample obtained from the LMCMC and the LVB with constant volatility, and our VB with and without stochastic volatility. Appendix E.3 reports the additional in-sample estimates for d = 30 industry portfolios.

Fig. 5 Variational Bayes estimates of the regression coefficients for different estimation methods. We report the estimates for the d = 49 industry case obtained for all priors. We report the results for VB with and without stochastic volatility.

The in-sample estimates highlight three key results. First, there are visible differences across shrinkage priors. For instance, the Horseshoe tend to shrink parameters more aggressively toward zero so that is more sparse than, for example, the adaptive Normal-Gamma. Second, consistent with Gefang, Koop, and Poon (Citation2023), the estimates of the LMCMC and LVB tend to be closely related. Yet, these in-sample estimates are substantially different compared to our VB approach. This is due to the parameterization

in (2b). Third, with the exception of the adaptive-Lasso prior, the estimates

from VB are remarkably stable between constant versus stochastic volatility specifications.

5.2 Out-of-Sample Forecasting Accuracy

Intuitively, different estimates of should be reflected in different conditional forecasts. To test this intuition, we now compare the LMCMC, LVB, and the VB estimation approaches with and without stochastic volatility. For completeness, we also consider a series of univariate model specifications (U henceforth), which is akin to assuming conditional independence across industry portfolios. We consider a 360-month rolling window period for each model estimation; for instance, for the 30-industry classification, the out-of-sample period is from July 1957 to May 2020.

Given the recursive nature of the empirical implementation and the extensive out-of-sample period, we do not consider the MCMC approach of Gruber and Kastner (Citation2022). This is because the computational cost would make such implementation prohibitive in practice, as discussed in the simulation study based on . For instance, on a 2.5 GHz Intel Xeon W-2175 with 32GB of RAM and 14 cores, it would take min, or 42 days, to recursively implement the MCMC approach for the 30 industry portfolios with constant volatility. The computational cost would be even larger when adding stochastic volatility or for the 49 industry portfolios. Appendix E.1 provides a complete discussion of the computational costs of some of the existing MCMC approaches and the key relevance for a higher-frequency forecasting implementation, such as ours.

Point forecasts

We begin by inspecting the accuracy of point forecasts for each industry based on the out-of-sample predictive R2 (see, e.g., Goyal and Welch Citation2008),

where t0 is the date of the first prediction,

is the naive forecast from the recursive mean–using the same rolling window of observations–and

is the conditional mean returns for industry

for a given estimation method

.

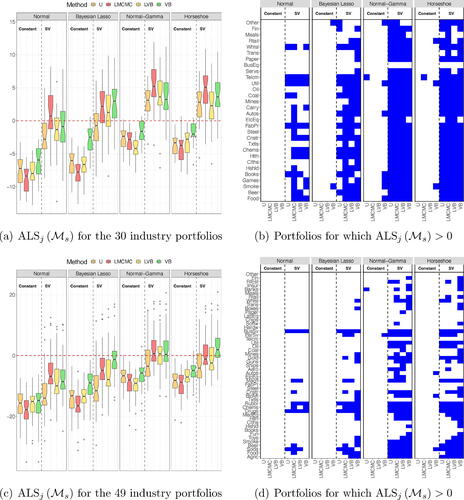

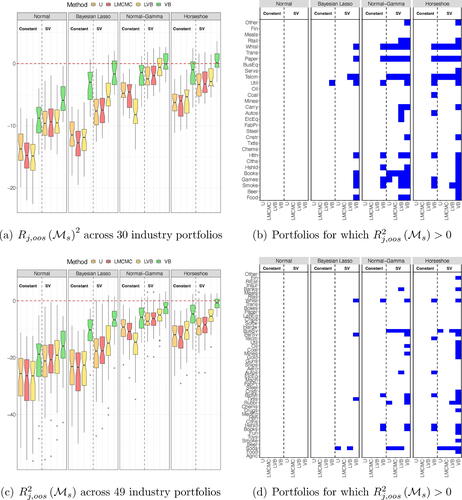

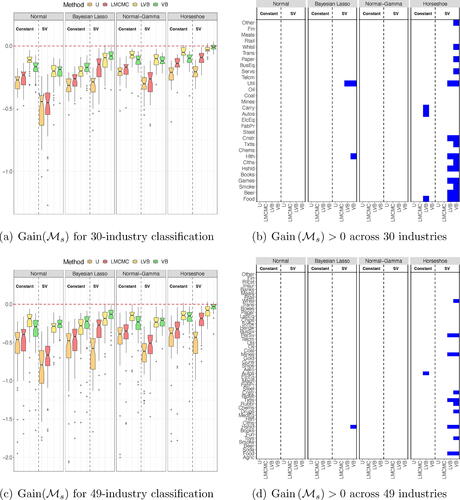

The left panels of show the box charts with the distribution of the over the

industries. For a given subplot, the results for the Normal, Bayesian Lasso, Normal-Gamma and Horseshoe priors are reported from the left to the right. Within each panel of a subplot, the forecasting results for the U, LMCMC, LVB, and VB estimates are color-coded in orange, red, yellow, and green (from left to right), respectively. The vertical dashed line within each panel separates between constant and stochastic volatility specifications. Based on the same separation across methods and priors, the right panels of report a breakdown of the industries for which

.

Fig. 6 Left panels report the (in %) across industry portfolios. Right panels report the industries for which a given model can generate

. The top (bottom) panels report the results for 30 (49) industry portfolios.

The out-of-sample tends to be mostly negative across estimation methods and shrinkage priors. This is consistent with the existing evidence on stock returns predictability: a simple naive forecast based on a rolling sample mean represents a challenging benchmark to beat (see, e.g., Campbell and Thompson Citation2007). However, our variational inference approach substantially improves upon univariate regressions and the LMCMC, LVB methods, which are both based on a structural VAR representation. For instance, our VB with stochastic volatility generates a positive

for more than half of the 30 industry portfolios based on the adaptive Normal-Gamma and the Horseshoe. This compares to 4 (adaptive Normal-Gamma) and 3 (Horseshoe) positive

obtained from LMCMC with stochastic volatility. The gap further increases for the 49-industry classification; our VB method is virtually the only approach that can systematically generate positive

across industries. Although more concentrated on the Horseshoe prior, the out-performance of our method relative to both LMCMC and VB holds across different priors.

Density forecasts

We follow Fisher et al. (Citation2020) and assess the accuracy of the density forecasts across priors and estimation methods based on the average log-score (ALS) differential with respect to a “no-predictability” benchmark,

(15)

(15) where

denotes the log-score at time t for industry j obtained by evaluating a Normal density with the conditional mean and variance forecast from the model

. Consistent with the rationale of the no-predictability benchmark in

, the log-score for

is constructed by evaluating a Normal density based on recursive mean and variance.

reports the results. The labeling is the same as in . The results show that by adding stochastic volatility, the accuracy of density forecasts substantially improves across priors and estimation methods. For instance, our VB method with stochastic volatility generates positive log-score differentials for almost all of the portfolios for the 30 industry classification and for more than half of the 49 industry portfolios. Interestingly, when it comes to density forecasts rather than modeling expected returns, the Gefang, Koop, and Poon (Citation2023) variational method built on a structural VAR representation performs on par with our VB method. This is likely due to stochastic volatility alone since our VB still stands out within the constant volatility specifications. Overall, our VB approach outperforms the competing estimation methods under all prior specifications.

Returns predictability over the business cycle

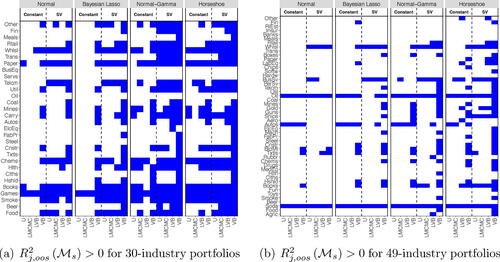

Existing literature suggests that expected returns are counter-cyclical and that returns predictability is more pronounced during periods of economic contractions versus expansions (see, e.g., Rapach, Strauss, and Zhou Citation2010). Thus, in the following, we investigate if the forecasting performance of our estimation framework changes over the business cycle. More precisely, we split the data into recession and expansionary periods using the NBER dates of peaks and troughs. This information is considered ex-post and is not used at any time in the estimation and/or forecasting process. We compute the corresponding for the recession periods only.

reports the industries for which for both the 30 (left panel) and the 49 (right panel) industry classification. The corresponding cross-sectional distribution of the

and the relative log-scores are reported in Appendix E.2. The labeling of is the same as in . By comparing with the full sample, the results suggest that the accuracy of the predictions substantially improves across methods and priors. Nevertheless, our VB method outperforms the naive forecast from the rolling mean for a larger fraction of industry portfolios compared to other methods, in particular when stochastic volatility is added to the model. The difference between the recession and the full-sample performance persists when considering the 49 industry classification, especially for the adaptive Normal-Gamma and the Horseshoe prior.

5.3 Economic Evaluation

A positive predictive performance does not necessarily translate into economic value. However, in practice, an investor is keenly interested in the economic value of returns predictability, perhaps even more than the statistical performance. Hence, it is of paramount importance to evaluate the extent to which apparent gains in predictive accuracy translate into better investment performances.

Following existing literature (see, e.g., Goyal and Welch Citation2008; Rapach, Strauss, and Zhou Citation2010), we consider a representative investor with a single-period horizon and mean-variance preferences who allocates her wealth between an industry portfolio and a risk-free asset. Thus, the investor optimal allocation to stocks for period t + 1 based on information at time t is given by , where

represents the expected return for industry

and

the corresponding volatility forecast at time t. We also constrain the weights for each industry to

to prevent extreme short sales and leveraged positions. We assume a risk aversion coefficient of γ = 5.

reports the average utility gain—in monthly %—obtained by using a given forecast instead of the recursive sample mean

. The average utility for a given model is calculated as

where

and

represent the sample mean and variance, respectively, of the portfolio return

realized over the forecasting period for the industry

under a given prior specification and estimation method. The utility gain is calculated by subtracting from the average utility

the average utility obtained by using the naive forecast from the recursive mean and variance to calculate wjt. A positive value for the utility gain indicates the fee a risk-averse investor is willing to pay to access the investment strategy implied by

.

Fig. 9 The left panel reports the cross-sectional distribution of the average utility gain across industry portfolios. The right panel reports the industries for which the utility gain is positive. The top (bottom) panels report the results for the 30-industry (49-industry) classification.

The out-of-sample economic significance largely confirms the statistical performance across methods. From a purely economic standpoint, the forecast from a recursive mean is quite challenging to beat: we observe that the average utility gain is mostly negative, with the only exception of those provided by VB with a Horseshoe prior specification. The results show that a representative investor with mean-variance utility is willing to pay, on average, a monthly fee of almost 15 basis points to access the strategy based on our variational estimation of a large VAR with stochastic volatility. In addition, the right panels of show that the positive economic value obtained from our VB is more broadly spread across industries than alternative methods. This holds especially true for the 30-industry classification but applies to the more granular 49-industry classification.

6 Concluding Remarks

We propose a novel variational Bayes inference method for large-scale VAR with exogenous predictors and stochastic volatility. Different from most existing estimation methods for high-dimensional VAR models, our approach does not rely on a structural form representation. This allows fast and accurate estimation of the model parameters without leveraging on a standard Cholesky-based transformation of the parameter space. We show both in simulation and empirically that our estimation approach outperforms across different prior specifications, both statistically and economically, forecasts from existing benchmark estimation strategies, such as equivalent, nonlinear MCMC algorithms (see, e.g., Gruber and Kastner Citation2022) linearized MCMC (see, e.g., Cross, Hou, and Poon Citation2020) and linearized variational inference methods (see, e.g., Gefang, Koop, and Poon Citation2023).

Supplementary Materials

The supplementary material contains the proof and derivations of all propositions and theoretical results in the paper. The supplementary material contains also additional simulation and empirical results. These additional results have also been briefly discussed in the main text of the paper. The R code pertaining to the variational inference scheme developed in the paper can be found at this link: https://github.com/whitenoise8/Variational-inference-for-large-Bayesian-vector-autoregressions.

OnlineAppendix.pdf

Download PDF (8.1 MB)Acknowledgments

We are thankful to Andrea Carriero and seminar participants at the 2021 Virtual NBER-NSF SBIES, the 2021 European Summer Meeting of the Econometric Society, the 2nd Workshop on Dimensionality Reduction and Inference in High-Dimensional Time Series at Maastricht University, the 2023 Summer Forum Workshop on Macroeconomics and Policy Evaluation at the Barcelona School of Economics, and the 3rd International Conference on Econometrics and Business Analytics in Tashkent, for their helpful comments and suggestions.

Disclosure Statement

The authors report there are no competing interests to declare.

Additional information

Funding

References

- Archakov, I., and Hansen, P. R. (2021), “A New Parametrization of Correlation Matrices,” Econometrica, 89, 1699–1715. DOI: 10.3982/ECTA16910.

- Arias, J. E., Rubio-Ramirez, J. F., and Shin, M. (2023), “Macroeconomic Forecasting and Variable Ordering in Multivariate Stochastic Volatility Models,” Journal of Econometrics, 235, 1054–1086. DOI: 10.1016/j.jeconom.2022.04.013.

- Avramov, D. (2004), “Stock Return Predictability and Asset Pricing Models,” Review of Financial Studies, 17, 699–738. DOI: 10.1093/rfs/hhg059.

- Bernardi, M., Bianchi, D., and Bianco, N. (2023), “Dynamic Variable Selection in High-Dimensional Predictive Regressions,” working paper.

- Bognanni, M. (2022), “Comment on “Large Bayesian Vector Autoregressions with Stochastic Volatility and Non-conjugate Priors,” Journal of Econometrics, 227, 498–505. DOI: 10.1016/j.jeconom.2021.10.008.

- Campbell, J. Y., and Thompson, S. B. (2007), “Predicting Excess Stock Returns Out of Sample: Can Anything Beat the Historical Average?” The Review of Financial Studies, 21, 1509–1531. DOI: 10.1093/rfs/hhm055.

- Carriero, A., Clark, T. E., and Marcellino, M. (2019), “Large Bayesian Vector Autoregressions with Stochastic Volatility and Non-conjugate Priors,” Journal of Econometrics, 212, 137–154. DOI: 10.1016/j.jeconom.2019.04.024.

- Carriero, A., Chan, J., Clark, T. E., and Marcellino, M. (2022), “Corrigendum to “Large Bayesian Vector Autoregressions with Stochastic Volatility and Non-conjugate Priors” [j. econometrics 212 (1)(2019) 137–154],” Journal of Econometrics, 227, 506–512. DOI: 10.1016/j.jeconom.2019.04.024.

- Carvalho, C. M., Polson, N. G., and Scott, J. G. (2009), “Handling Sparsity via the Horseshoe,” in Proceedings of the 12th International Conference on Artificial Intelligence and Statistics, April 16–18, 2009 (Vol. 5), pp. 73–80.

- Carvalho, C. M., Polson, N. G., and Scott, J. G. (2010), “The Horseshoe Estimator for Sparse Signals,” Biometrika, 97, 465–480. DOI: 10.1093/biomet/asq017.

- Chan, J. C. (2021), “Minnesota-Type Adaptive Hierarchical Priors for Large Bayesian Vars,” International Journal of Forecasting, 37, 1212–1226. DOI: 10.1016/j.ijforecast.2021.01.002.

- Chan, J. C., and Eisenstat, E. (2018), “Bayesian Model Comparison for Time-Varying Parameter Vars with Stochastic Volatility,” Journal of Applied Econometrics, 33, 509–532. DOI: 10.1002/jae.2617.

- Chan, J. C., and Yu, X. (2022), “Fast and Accurate Variational Inference for Large Bayesian VARs with Stochastic Volatility,” Journal of Economic Dynamics and Control, 143, 104505. DOI: 10.1016/j.jedc.2022.104505.

- Chan, J. C., Koop, G., and Yu, X. (2023), “Large Order-Invariant Bayesian VARs with Stochastic Volatility,” Journal of Business & Economic Statistics, 1–13. DOI: 10.1080/07350015.2023.2252039.

- Cross, J. L., Hou, C., and Poon, A. (2020), “Macroeconomic Forecasting with Large Bayesian VARs: Global-Local Priors and the Illusion of Sparsity,” International Journal of Forecasting, 36, 899–915. DOI: 10.1016/j.ijforecast.2019.10.002.

- Fama, E. F., and French, K. R. (1997), “Industry Costs of Equity,” Journal of Financial Economics, 43, 153–193. DOI: 10.1016/S0304-405X(96)00896-3.

- ———(2015), “A Five-Factor Asset Pricing Model,” Journal of Financial Economics, 116, 1–22.

- Ferson, W. E., and Harvey, C. R. (1991), “The Variation of Economic Risk Premiums,” Journal of Political Economy, 99, 385–415. DOI: 10.1086/261755.

- ———(1999), “Conditioning Variables and the Cross Section of Stock Returns,” The Journal of Finance, 54, 1325–1360.

- Ferson, W. E., and Korajczyk, R. A. (1995), “Do Arbitrage Pricing Models Explain the Predictability of Stock Returns?” Journal of Business, 68, 309–349. DOI: 10.1086/296667.

- Fisher, J. D., Pettenuzzo, D., and Carvalho, C. M. (2020), “Optimal Asset Allocation with Multivariate Bayesian Dynamic Linear Models,” Annals of Applied Statistics, 14, 299–338.

- Gefang, D., Koop, G., and Poon, A. (2023), “Forecasting Using Variational Bayesian Inference in Large Vector Autoregressions with Hierarchical Shrinkage,” International Journal of Forecasting, 39, 346–363. DOI: 10.1016/j.ijforecast.2021.11.012.

- Goyal, A., and Welch, I. (2008), “A Comprehensive Look at the Empirical Performance of Equity Premium Prediction,” The Review of Financial Studies, 21, 1455–1508. DOI: 10.1093/rfs/hhm014.

- Griffin, J. E., and Brown, P. J. (2010), “Inference with Normal-Gamma Prior Distributions in Regression Problems,” Bayesian Analysis, 5, 171–188. DOI: 10.1214/10-BA507.

- Gruber, L., and Kastner, G. (2022), “Forecasting Macroeconomic Data with Bayesian VARs: Sparse or Dense? It depends!,” arXiv preprint arXiv:2206.04902.

- Gunawan, D., Kohn, R., and Nott, D. (2020), “Variational Approximation of Factor Stochastic Volatility Models,” arXiv e-prints, art. arXiv:2010.06738.

- Hahn, P. R., and Carvalho, C. M. (2015), “Decoupling Shrinkage and Selection in Bayesian Linear Models: A Posterior Summary Perspective,” Journal of the American Statistical Association, 110, 435–448. DOI: 10.1080/01621459.2014.993077.

- Hauzenberger, N., Huber, F., and Onorante, L. (2021), “Combining Shrinkage and Sparsity in Conjugate Vector Autoregressive Models,” Journal of Applied Econometrics, 36, 304–327. DOI: 10.1002/jae.2807.

- Hou, K., and Robinson, D. T. (2006), “Industry Concentration and Average Stock Returns,” The Journal of Finance, 61, 1927–1956. DOI: 10.1111/j.1540-6261.2006.00893.x.

- Huber, F., and Feldkircher, M. (2019), “Adaptive Shrinkage in Bayesian Vector Autoregressive Models,” Journal of Business & Economic Statistics, 37, 27–39. DOI: 10.1080/07350015.2016.1256217.

- Huber, F., Koop, G., and Onorante, L. (2021), “Inducing Sparsity and Shrinkage in Time-Varying Parameter Models,” Journal of Business & Economic Statistics, 39, 669–683. DOI: 10.1080/07350015.2020.1713796.

- Kastner, G., and Huber, F. (2020), “Sparse Bayesian Vector Autoregressions in Huge Dimensions,” Journal of Forecasting, 39, 1142–1165. DOI: 10.1002/for.2680.

- Leng, C., Tran, M. N., and Nott, D. (2014), “Bayesian Adaptive Lasso,” Annals of the Institute of Statistical Mathematics, 66, 221–244. DOI: 10.1007/s10463-013-0429-6.

- Lewellen, J., Nagel, S., and Shanken, J. (2010), “A Skeptical Appraisal of Asset Pricing Tests,” Journal of Financial Economics, 96, 175–194. DOI: 10.1016/j.jfineco.2009.09.001.

- Minka, T. P. (2001), “Expectation Propagation for Approximate Bayesian Inference,” in Proceedings of the Seventeenth Conference on Uncertainty in Artificial Intelligence, pp. 362–369.

- Ormerod, J. T., and Wand, M. P. (2010), “Explaining Variational Approximations,” The American Statistician, 64, 140–153. DOI: 10.1198/tast.2010.09058.

- Park, T., and Casella, G. (2008), “The Bayesian Lasso, Journal of the American Statistical Association, 103, 681–686. DOI: 10.1198/016214508000000337.

- Polson, N. G., and Scott, J. G. (2011), “Shrink Globally, Act Locally: Sparse Bayesian Regularization and Prediction,” in Bayesian Statistics, 9, 501–538.

- Rapach, D., and Zhou, G. (2013), “Forecasting Stock Returns,” in Handbook of Economic Forecasting (Vol. 2), eds. G. Elliott and A. Timmermann, pp. 328–383, Amsterdam: Elsevier.

- Rapach, D. E., Strauss, J. K., and Zhou, G. (2010), “Out-of-Sample Equity Premium Prediction: Combination Forecasts and Links to the Real Economy,” The Review of Financial Studies, 23, 821–862. DOI: 10.1093/rfs/hhp063.

- Ray, P., and Bhattacharya, A. (2018), “Signal Adaptive Variable Selector for the Horseshoe Prior,” arXiv: Methodology.

- Rohde, D., and Wand, M. P. (2016), “Semiparametric Mean Field Variational Bayes: General Principles and Nmerical Issues,” The Journal of Machine Learning Research, 17, 5975–6021.

- Rothman, A. J., Levina, E., and Zhu, J. (2010), “A New Approach to Cholesky-based Covariance Regularization in High Dimensions,” Biometrika, 97, 539–550. DOI: 10.1093/biomet/asq022.

- Wand, M. P., Ormerod, J. T., Padoan, S. A., and Frührwirth, R. (2011), “Mean Field Variational Bayes for Elaborate Distributions,” Bayesian Analysis, 6, 847–900. DOI: 10.1214/11-BA631.