?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract–

This paper fills the limited statistical understanding of Shapley values as a variable importance measure from a nonparametric (or smoothing) perspective. We introduce population-level Shapley curves to measure the true variable importance, determined by the conditional expectation function and the distribution of covariates. Having defined the estimand, we derive minimax convergence rates and asymptotic normality under general conditions for the two leading estimation strategies. For finite sample inference, we propose a novel version of the wild bootstrap procedure tailored for capturing lower-order terms in the estimation of Shapley curves. Numerical studies confirm our theoretical findings, and an empirical application analyzes the determining factors of vehicle prices.

Disclaimer

As a service to authors and researchers we are providing this version of an accepted manuscript (AM). Copyediting, typesetting, and review of the resulting proofs will be undertaken on this manuscript before final publication of the Version of Record (VoR). During production and pre-press, errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal relate to these versions also.1 Introduction

Modern data science techniques, such as deep neural networks and ensemble methods like gradient boosting, are renowned for their high predictive accuracy. However, these methods often operate as black boxes, offering limited interpretability. To address this challenge, the Shapley value has gained popularity as a measure of variable importance in recent years. Originally introduced in game theory, Shapley values provide a unique solution to cooperative games (Shapley, 1953). In the realm of machine learning, they enable model explanation by quantifying the contribution of each variable to the prediction. This is achieved by calculating the difference between a prediction for a subset of variables and the same subset additional with the variable of interest. This difference is then weighted and averaged over all possible subset combinations, yielding the respective Shapley values. For example, Lundberg and Lee (2017) assume variable independence and introduce an approximation method known as KernelSHAP, which aims to explain the predictions of the conditional mean. Other researchers have proposed variants of Shapley values based on different predictiveness measures, such as the variance of the conditional mean (Owen and Prieur, 2017; Bénard et al., 2022a).

A major portion of the existing literature is predominantly focused on practical approximations of Shapley values, given that their computational complexity grows exponentially with the number of variables (Chen et al., 2023). However, the theoretical understanding of Shapley values, especially concerning uncertainty quantification, remains somewhat limited. It is crucial to distinguish between uncertainty due to the computational approximation on the one hand and estimation uncertainty on the other. To address the former, Covert and Lee (2021) study the asymptotic properties of KernelSHAP, a weighted least squares approximation for Shapley values. While these findings confirm the effectiveness of the approximation, their relevance for statistical inference is unclear, as they offer no insight into estimation uncertainty. A low level of approximation uncertainty cannot mitigate the issues of a large error in the function estimation. Another strand of the literature is concerned with estimation uncertainty, which is the key interest of our paper. Fryer et al. (2020) focus on estimation uncertainty for the Shapley values by assuming an underlying linear model, hence the Shapley values are defined as a function of the coefficient of determination, R2, instead of the conditional mean. Johnsen et al. (2021) propose bootstrap inference for the SAGE estimator, which is a global measure, but do not prove its asymptotic validity. Additionally, there are other closely related publications from a statistical standpoint that include asymptotic arguments. Williamson and Feng (2020) define Shapley effects as a global measure of predictiveness and prove their asymptotic normality. Bénard et al. (2022a) establish consistency for Shapley effects, utilizing the variance of the conditional mean with a random forest estimator. While these two papers are similar in spirit, they differ from our analysis, as they focus on global measures for variable importance based on predictive measures. In contrast, our research is centered on providing asymptotics for local measures within the conditional mean framework of Shapley values.

The purpose of this paper is to conduct a rigorous asymptotic analysis of the Shapley values, adopting a fully nonparametric approach. In doing so, we establish both consistency results and asymptotic normality. It is crucial to first define the estimand, which we call the (population level) Shapley curves. With the term ‘population level’ we want to highlight that Shapley curves are d-dimensional functions characterizing the true variable importance of a given variable at any point in the support of the covariates, which are uniquely determined by the true conditional expectation function and by the joint distribution of covariates. The perspective in this paper is therefore different from most existing work on Shapley values specifically, and from variable importance measures more broadly. Rather than merely ‘explain’ a prediction, our goal is to precisely estimate the true (population level) variable importance.

To study the asymptotics of the estimated Shapley curves, we analyze two types of plug-in estimators previously discussed in the literature. First, the component-based approach requires the direct estimation of all components in the Shapley decomposition. This is achieved by having separate regression equations for all subsets of variables (see e.g., Štrumbelj et al. (2009) and Williamson and Feng (2020)). Second, the integration-based approach requires only a single estimate of the full regression model. The estimates of the lower-dimensional components in the Shapley decomposition are obtained by integrating out the variables not in the given subset. Our asymptotic analysis reveals that this latter approach is closely related to the literature on the marginal integration estimator of additive models (Tjøstheim and Auestad, 1994; Linton and Nielsen, 1995). However, in the Shapley context, this idea has been explored by Frye et al. (2020), Aas et al. (2021b), Covert et al. (2020), and Chen et al. (2023), among others. By relying on asymptotic arguments proven in the nonparametric literature, we bridge the existing gap of asymptotic developments of this estimator within the field of explainability. In the case of dependent features, the integration-based approach relies on an estimate of the conditional densities of the variables. Various estimation strategies, such as the assumption of Gaussian distributions or the application of copula methods, are discussed by Aas et al. (2021a). Additionally, Lin et al. (2024) present theoretical findings on the robustness of the integration-based approach concerning variable omission.

As we consider a fully nonparametric model setup with general assumptions, we rely on local linear estimation (Fan, 1993). We demonstrate that both the component-based and integration-based approaches to estimation achieve the minimax rate of convergence. Furthermore, we establish the asymptotic normality of these estimates. Notably, we prove that the asymptotic distributions of the two estimators differ only in their bias, not in their asymptotic variance. Specifically, the integration-based approach has a larger bias. This finding is not unique to local linear estimation; reliance on a d-dimensional pilot estimator will typically lead to oversmoothing of the lower-dimensional components. This oversmoothing effect has implications for other modern smoothing techniques that rely on hyperparameters to balance the bias-variance trade-off, including regression tree ensembles and neural networks. Similarly, using the integration-based approach will result in an inflated bias.

While inference based on the asymptotic normal distribution is possible, it often leads to unsatisfactory performance in finite sample scenarios. To achieve better coverage in finite samples, we introduce a consistent wild bootstrap procedure (Mammen, 1992; Härdle and Mammen, 1993) specifically designed for the construction of Shapley curves. To the best of our knowledge, our wild bootstrap procedure, also referred to as the multiplier bootstrap, is the first in the context of estimation uncertainty for local Shapley measures that is proven to be consistent. By generating bootstrap versions of the lower-order terms, we effectively mimic the variance of the estimator counterpart. An extensive simulations study confirms our theoretical results, highlighting the strong coverage performance of our bootstrap procedure.

Our contributions to the field are two-fold. The first contribution is of a conceptual nature. By considering population-level Shapley curves we argue for a perspective that is different than the majority of the existing literature. Instead of merely viewing Shapley values as an ‘explanation’ of a given prediction method (Lundberg and Lee, 2017; Covert et al., 2020), our goal is to provide accurate estimates of the true variable importance as determined by the distribution of the data. This is a prerequisite for our second, more technical contribution, which is the rigorous statistical analysis of the two predominant estimation techniques for Shapley curves. By proving that both estimators achieve the minimax rate of convergence in the class of smooth functions and establishing their asymptotic normality, we enhance the theoretical understanding of Shapley values.

This paper contributes to the rapidly growing body of literature on variable importance measures. A general overview of state-of-the-art algorithms to estimate the Shapley value variable attributions is given in Covert et al. (2021) and, more recently, in Chen et al. (2023). Further research has focused on improving the computational efficiency of Shapley value estimation, such as the FastSHAP (Jethani et al., 2022). Unlike local methods that explain predictions at the level of individual observations, there is also considerable interest in global explanations that apply to the entire sample, such as SAGE (Covert et al., 2020) and Shapley Effects (Owen, 2014; Song et al., 2016; Owen and Prieur, 2017). Other methods provide algorithm-specific approximations instead of model agnostic, such as decision tree ensembles (Lundberg et al., 2020; Muschalik et al., 2024).

The structure of this paper is as follows. First, we introduce the nonparametric setting and notation of our work in Section 2. The Shapley curves are defined on population level and examples are given for building the intuition of the reader. In Section 3 we propose two estimation approaches for Shapley curves. Our main theorems regarding asymptotics are given and we elaborate on their heuristics. Additionally, we detail the novel implementation of our wild bootstrap methodology. In Section 4, through simulations, we demonstrate that prediction accuracy improves with increasing sample size, and our wild bootstrap procedure achieves good coverage. Section 5 applies our methodology to estimate Shapley curves for vehicle prices based on vehicle characteristics. The estimated confidence intervals for the Shapley curves enable practitioners to conduct statistical inference. The paper concludes with Section 6, summarizing our findings and contributions.

2 A Smoothing Perspective on Shapley Values

2.1 Model Setup and Notation

Consider a vector of covariates and a scalar response variable

. Let F denote the cumulative distribution function (cdf) of X with continuous density (pdf), f. Let

be a sample drawn from a joint distribution function

. Consider the following nonparametric regression setting,

(1)

(1)

with and

, where

is a rich class of functions. Consequently,

is the conditional expectation. Let

, and let

denote the power set of N. For a set

, Xs

denotes a vector consisting of elements of X with indices in s. Correspondingly,

denotes a vector consisting of elements of X not in s. We write

to denote functions which ignore arguments with index not in s,

. Similarly, we write

for functions which ignore arguments in s. Finally, we write

for the conditional density functions of

given Xs

= xs

. The indicator function

is equal to 1 if

and 0 otherwise. The sign function

takes the value 1 if

and –1 otherwise.

2.2 Population Shapley Curves

We now define the population-level Shapley curves, which are functions, measuring the local variable importance of a variable j at a given point

. They are uniquely determined by m(x) defined in (1) and the joint distribution function of the covariates, F. Our focus differs from the prevalent perspective in the literature, as we aim not merely to interpret a given prediction but rather to estimate and make inference on the true Shapley curves. As a consequence, looking at variable importance on the population level is agnostic of the specific form a corresponding estimator might take.

Originally proposed in the game theory framework, Shapley values measure the difference of the resulting payoff for a coalition of players and the same coalition including an additional player (Shapley, 1953). By keeping a player fixed, this difference is calculated across all possible coalitions of players and averaged with a combinatorial weight. From a statistical point of view, each player is represented by a variable and the payoff is therefore a measure of contribution for the corresponding subset of variables. In this work, this measure is set to be the conditional mean. Finally, let us define , as follows,

(2)

(2)

for , where the components are defined as

(3)

(3)

for . Note that

represents the full nonparametric model and

is the unconditional mean of the response variable. A point

in the Shapley curve measures the difference in the conditional mean of Y from including the variable Xj

, averaged through the combinatorial weight over all possible subsets.

The Shapley decomposition satisfies several convenient properties, namely additivity, symmetry, null feature and linearity. These properties have been initially proved for cooperative games and subsequently transferred to feature attribution methods. Most importantly, our definition of Shapley curves (2) satisfies the crucial additivity property, . Namely, the conditional expectation of the full nonparametric model (1) subtracted by the unconditional mean of the dependent variable,

, can be recovered exactly as a sum of Shapley curves of variables Xj

evaluated at the point x. The remaining properties are discussed in Covert et al. (2021).

In contrast to the Shapley-based variable importance measures of Williamson and Feng (2020) and Bénard et al. (2022a), Shapley curves provide a local instead of a global assessment of the importance of a variable. Consequently, the Shapley curve will take different values for different points on the d-dimensional support of the covariates. It might be the case that a variable is redundant in certain areas of

, but indispensable in other areas. Local measures are thus able to paint a more nuanced picture.

We want to highlight the important role that the dependency in X plays in (3), and consequently in the definition of the Shapley curves. For a set represents the conditional expected value of the dependent variable, with variables not in s integrated out with respect to the conditional density

. In the special case of independent covariates, the expression for the conditional density simplifies to a product of (unconditional) marginal densities. In general, however, the component (3), and consequently

, will depend crucially on the dependency structure of X. In particular, the difference in the conditional expected value for a given set s,

, can be a result of the direct effect of variable Xj

on Y via the functional relationship described by

, or it might be due to the dependence of Xj

with another variable Xk

, which in turn has a direct effect on Y. This can be seen as a bias caused by the endogeneity of Xj

. It is therefore crucial to understand, that Shapley curves, even if they are defined on the population, are a predictive measure of variable importance and not a causal measure. We will demonstrate the role of dependence in a few examples in the next subsection.

2.3 Examples

Let us have a look at Shapley curves in a few interesting scenarios. We consider different settings for the regression function, m(x), as well as for the dependence structure of X. In particular, we are interested in the difference between the case of independent and dependent regressors.

Example 1: Additive Interaction Model

We first consider the following additive interaction model, with mean-dependent covariates X1 and X2,

This model was discussed in Sperlich et al. (2002), Chastaing et al. (2012), and more recently in Hiabu et al. (2020) in the context of a random forest variant. It is used thoroughly in our simulation studies. The Shapley curve on population level for variable X1 is given by

By symmetry, can be defined in a similar way. It is important to understand how the Shapley curve is affected (i) by the interaction effect, and (ii) by the dependence across the covariates. In the case of non-zero interaction but under mean independence of covariates, and under the following identification assumptions,

, the expression simplifies to

The simplified expression consists of the main effect and variable X1’s share of the interaction effect. On the other hand, assuming zero interaction, i.e. , but allowing for mean-dependent covariates, we can isolate the effect of dependence,

Finally, in the absence of any interaction and dependence, the Shapley curve simplifies to the partial dependence function of an additive model, i.e. .

Example 2: Threshold Regression Model

In our second example, we consider the threshold regression model (Dagenais, 1969), a well-known econometric model in which the effects of the variables enter non-linearly. A notable economic application is the multiple equilibria growth model (Durlauf and Johnson, 1995; Hansen, 2000). Empirical analyses suggest the existence of a regime change in GDP growth depending on whether the initial endowment of a country exceeds a certain threshold. More precisely, take the conditional expectation of Y given x as

where are scalar parameters. Under the assumption of mean-independence among X1 and X2, the population-level Shapley curves for both variables are

where is the marginal cdf of X2. The Shapley curve for variable X1 will take a larger value in absolute values whenever the coordinate x1 is far away from the unconditional expectation,

. Similarly, the Shapley curve for the threshold variable X2 will take a large value if the difference

is large, i.e., in situations in which the effect of the variable is not well captured by its unconditional expectation.

3 Estimators and Asymptotics

In this section, we discuss the two common approaches for estimating Shapley curves. By considering curves instead of a value of importance, we gain insights on the whole support of the covariates. The goal is to establish consistent and asymptotically normal estimation of these curves in a general nonparametric setting. This is crucial because otherwise, we are not able to determine whether the estimate on the sample level is meaningful in any way. Indeed, Scornet (2023) and Bénard et al. (2022b) demonstrate that a variety of measures for variable importance in random forests only have a meaningful population-level target in the restrictive case of independence of regressors and no interactions. Both approaches discussed in this chapter are plug-in estimators using the Shapley formula in (2). The first approach is based on separate estimators of all component functions, for all subsets s. We call this the component-based approach. Alternatively, one can obtain estimators of the component using a pilot estimator for the full model, i.e., an estimator of m(x), and integrate out the variables not contained in the set s with respect to the estimated conditional densities using (3). This method we denote as the integration-based approach.

3.1 Component-Based Approach

The component-based approach for estimating Shapley curves involves the separate estimation of each component. For each subset , we use a nonparametric smoothing technique to regress Yi

on

, i.e., those regressors contained in s. This yields in total

regression equations,

(4)

(4)

with and ms

is defined as in equation (3). Given an estimator for the components, we obtain a plug-in estimator for the Shapley curve of variable j using (2). As a result, the estimated Shapley curve follows as

(5)

(5)

Since ms

is not specified parametrically, we employ local linear estimators (Fan, 1993) for the component functions. Let and

, where k is a one-dimensional kernel function and hs

is the bandwidth. Then we have,

(6)

(6)

and the local linear estimator is . The idea is to fit a linear model locally by using a weighted least squares procedure which puts more weight on the observations close to the point xs

via the kernel function.

We are interested in the asymptotic behavior in terms of the global rate of convergence and Gaussianity. This has at least two important implications. First, it demonstrates that the estimation of Shapley curves on the sample level targets the correct quantities. Second, it allows us to construct confidence intervals and conduct hypothesis tests on the estimated curves. For this purpose, we impose the following regularity assumptions.

Assumption 1. (i) The support of X is . (ii) The density f of X is bounded, bounded away from zero, and twice continuously differentiable on

. (iii)

for all

. (iv)

for some

.

Assumption 2. Assume m(x) belongs to , the space of d-dimensional twice continuously differentiable functions.

Assumption 3. Assume is a univariate twice continuously differentiable probability density function symmetric about zero and

and

for some

.

The following theorem shows the consistency of the component-based estimator in the mean integrated squared error (MISE) sense.

Proposition 1. Let be the component-based estimator with components estimated via the local linear method with bandwidths

. Then we have under Assumptions 1, 2 and 3, as n goes to infinity,

The proof of Proposition 1 can be found in the supplementary material A.1. The main idea is to write the difference between the estimator and the true Shapley curve in terms of a weighted sum, (7)

(7)

where the weights are defined as (8)

(8)

for all . We show that the leading term in the MISE of the component-based estimator depends on the MISE of the local linear estimator for the full model, and since the corresponding weight is defined as

,

Ultimately, the convergence rate is determined by the slowest rate of the components, i.e. the convergence rate of the full model, which is known to be . Since

also belongs to

, the class of twice continuously differentiable functions,

is a minimax-optimal estimator for

by Stone (1982).

The following theorem establishes the point-wise asymptotic normality of the component-based estimator and the corresponding proof is given in the supplementary material A.2.

Theorem 2. Let the conditions of Proposition 1 hold and let denote the optimal bandwidth of the full model. Then we have, for a point x in the interior of

, as n goes to infinity,

where the asymptotic bias and the asymptotic variance are given as

respectively and denotes the squared L2 norm of k.

Interestingly, the asymptotic distribution of the component-based estimator is the same for all variables, , as neither bias nor variance are variable-specific. The estimators for the bias and the variance,

and

respectively, can be obtained via plug-in estimates. This allows us to construct asymptotically valid confidence intervals around the estimated Shapley curves.

It is well known that bootstrap sampling, particularly the wild bootstrap (Mammen, 1992), yields improved finite sample coverage compared to the direct estimation of the confidence intervals relying on the asymptotic normal distribution (Härdle and Marron, 1991; Härdle and Mammen, 1993). Bootstrap methods in the Shapley context were previously studied by Covert and Lee (2021) and Johnsen et al. (2021). However, the former are interested in the quantification of uncertainty caused by computational approximation methods while our focus is on the estimation uncertainty. The latter also focus on estimation uncertainty but do not provide any theoretical justification for their bootstrap procedure. We propose Algorithm 1, a tailored wild bootstrap procedure to construct asymptotically valid confidence intervals around the component-based estimator.

Algorithm 1 Wild bootstrap procedure for the component-based estimator

1: Estimate on

, with the optimal bandwidth hs

for

and calculate

.

2: Estimate on

, with bandwidth gs

such that

as

for all

and calculate

.

3: Bootstrap sampling

(a) Generate bootstrap residuals , where

for all

. Following Mammen (1993), the random variable Vi

is

with probability

and

with probability

1)

.

(b) Construct for

and for all

.

(c) Estimate based on the bootstrap version

with bandwidths hs

and calculate

.

4: Iteration

Repeat Step 3(a) - 3(c) for bootstrap iterations.

5: Construct confidence intervals

Construct confidence intervals , where α is the significance level and

and

are the empirical quantiles of the bootstrap distribution of

.

The novelty of our proposed bootstrap procedure is the creation of subset-specific bootstrap observations , and the inclusion of the lower-order components. By incorporating the subset-specific bootstrap data, the variance of the bootstrap version better mimics the variance of the estimator in finite samples, as shown in Section A.4 in the supplementary material. Even though the lower-order components are irrelevant to the asymptotic distribution, they do matter in finite samples. Further, it is crucial to choose a different bandwidth gs

such that it oversmooths the data to correctly adjust the bias in the bootstrap version of the Shapley curves (Härdle and Marron, 1991). The following proposition presents the consistency of our bootstrap procedure. For this result, we have to assume that the third moment of the error term is bounded.

Assumption 4. Assume that the conditional variance is twice continuously differentiable and

.

Proposition 3. Let Assumptions 1-4 hold and let denote the conditional distribution and

denote the bootstrap distribution. Then we have, for a point x in the interior of

and

, as n goes to infinity

The underlying function class in Proposition 1 and Theorem 2 is quite large, leading to a prohibitively slow convergence rate in a setting with high-dimensional covariates due to the curse of dimensionality. However, we want to emphasize that it is possible to obtain convergence rate results for function classes more suited for such higher-dimensional settings. A relevant example is the function class considered in Schmidt-Hieber (2020), containing elements that are compositions of lower-dimensional functions with dimensions up to , which can be much smaller than the original covariate dimension d. Deep neural networks with ReLU activation functions can estimate such functions with the rate

, assuming that the underlying lower-dimensional functions are twice continuously differentiable. In the case of independent covariates, this favorable convergence rate can also be achieved for the estimation of Shapley curves when deep learning is used in the estimation of the components in (5). The result of Schmidt-Hieber (2020) can directly be applied to Proposition 1. As a downside, while we know the rate of convergence, asymptotic normality results as in Theorem 2 are much harder to obtain and are still an open research problem.

Remark 4. The component-based estimator for the Shapley curves, , requires the estimation of

conditional mean functions,

, for all

. This task is particularly cumbersome when d is large and the chosen estimator is computationally intensive. The KernelSHAP approximation mitigates this issue by subsampling the subsets

. This subsampling idea can be incorporated into a constrained weighted least-squares problem, whose solution offers an approximation to the usual analytical formula. Recently, Jethani et al. (2022) extended this idea to FastSHAP, an online method for estimating Shapley values based on stochastic gradient descent. We refer to Chen et al. (2023) for a comprehensive overview of approximation methods. Since our focus is to carefully derive the main asymptotic properties of the introduced estimators, we do not provide new solutions to computational issues. However, it is straightforward to incorporate these approximation methods into our statistical analysis. The reason is that as the number of sampled subsets goes to infinity reasonably fast, the approximation error is of smaller order compared with the estimation error.

3.2 Integration-Based Approach

In contrast to the component-based method, the integration-based approach requires the estimation of only one regression function, namely that of the full model. This pilot estimator is obtained by local linear estimation and is thus identical to the estimator for the full model in the component-based approach, . To estimate the regression functions for the subsets

, the variables not contained in s are integrated out using the pilot estimator and an estimate for the conditional density,

(9)

(9)

In the case of mean-independent regressors, there is no need to estimate conditional densities. In this case, a simplified estimator can be used, which averages over the observations, (10)

(10)

This estimator is well-known in the literature as the marginal integration estimator for additive models (Linton and Nielsen, 1995; Tjøstheim and Auestad, 1994) and is discussed in a more general setting by Fan et al. (1998). However, the assumption of mean-independent regressors is not plausible in general, and the estimator described in (10) will lead to inconsistent estimates for the true component, . In this setting, the estimation of the conditional densities is a necessity and one needs to use the estimator (9).

The estimation of the conditional density is somewhat cumbersome in practice. For example, Aas et al. (2021b) use a similar definition of the conditional mean as what we call integration-based approach (see equation 3). However, they do not focus on the resulting asymptotic properties but rather provide practical approaches to tackle the estimation problem of the conditional density. These approaches range from assuming variable independence, a Gaussian distribution, or vine copula structures. Going further, Chen et al. (2023) provide a widespread summary of approximation techniques to the same estimation problem.

To study the asymptotic behavior of the integration-based estimator for Shapley curves, we assume the simplified setting of known conditional densities. Thus, we consider the following estimator for the component function of subset s, (11)

(11)

In analogy to the component-based estimated Shapley curve (5), we obtain the integration-based estimated Shapley curve as a plug-in estimate using

.

The following theorem shows the global convergence rate and the asymptotic distribution of the integration-based estimator.

Theorem 5. Under Assumptions 1, 2 and 3, let be the integration-based estimator with known density and a pilot estimator based on local linear estimation with bandwidth

. Then we have as n goes to infinity,

(12)

(12)

Further, we have, for a point x in the interior of , as n goes to infinity the asymptotic distribution of the integration-based estimator,

where the asymptotic bias term is (13)

(13)

and the asymptotic variance term is

The proof of Theorem 5 is given in the supplementary material A.5. Notice that the rate is identical to the convergence rate of the component-based approach and it is also optimal in the minimax sense. The reason is that, once again, the (pilot) estimator determines the convergence rate of

.

The asymptotic variance is identical to that of the component-based approach. The difference in the asymptotic distribution is solely due to the bias. Compared with the component-based approach, the bias term defined in (13) is now a sum over elements, instead of a single term. As a consequence, the bias is inflated. This fact becomes clear when looking at the bias and the variance of the estimated components. Following Linton and Nielsen (1995), we get the following expression for the bias and the variance of

,

The bandwidth of the pilot estimator is chosen as , which balances the squared bias and variance only for the component associated with the full model. However, all components are based on this pilot estimator and thus rely on the same bandwidth. For all other subsets s, we have

which leads to oversmoothing,

As a consequence, the bias of the lower-dimensional components does not vanish in the bias of the integration-based estimator, as it is of the same order for all the components, namely . While the order of the bias term in the integration-based estimator is still identical to that of the component-based approach, it might be substantially larger in finite samples. However, the advantage of the integration-based curve is that the pilot estimator only needs to be estimated once. Essentially, this constitutes a trade-off between computational complexity and accuracy in the estimation.

Remark 6. While the focus in this Section lies on the local linear estimator and the class of twice-continuously differentiable functions, the bias problem of the integration-based approach presumably also arises in other contexts. This is due to the pilot estimator, which typically involves the selection of hyperparameters governing the bias-variance trade-off. For random forests, this could be the depth of the individual trees, for neural networks, the number of layers, and number of nodes. There is no reason to believe that the optimal choice of hyperparameters for the pilot estimator, which is based on the set of all regressors, is optimal for the estimation of the components associated with the lower-dimensional subsets. These components are less complex than the full model, which the integration-based approach cannot accommodate, while the component-based approach can.

Remark 7. The result of Theorem 5 relies on the knowledge of the true conditional densities for the integration-based estimation of the components. In practice, these densities have to be estimated, introducing another term in the asymptotic expression. For example, a Gaussian distribution is a reasonable assumption (Aas et al., 2021a), leading to a smaller order term of rate . Besides, practitioners could assume a hierarchical dependence structure of the variables and estimate the conditional density via parametric or nonparametric vine copula approaches (Aas et al., 2021b). For a kernel-density estimator, this additional estimation error is of order

. This is a further disadvantage of the integration-based method of estimating Shapley curves compared with the component-based approach.

The previous theorems are affected by the curse of dimensionality. Additive models are known to bypass this problem by imposing structure on the true process. They are renowned for offering a good balance between model flexibility and ease of interpretation. For example, Scornet et al. (2015) assume an additive structure on the true process for random forest estimation. Under the assumption of additivity and independence of the explanatory variables, we know that the Shapley curve simplifies to the corresponding partial dependence function, as explained in Example 1.

Assumption 5. Assume the regression function m(x) follows an additive structure, s.t. with

for

and the explanatory variables are independent.

It follows directly by Stone (1985) that the partial dependence function, and thus the corresponding Shapley curve, can be estimated with a one-dimensional rate, see Corollary 8. The estimation of the partial dependence function is sufficient to obtain an estimator for . The bias and the variance of the estimated Shapley curves follow by the established asymptotic results for the estimator of the partial dependence function for variable j (Linton and Nielsen, 1995; Nielsen and Linton, 1998). Note that recent results relying on lower-order terms in a functional decomposition of the regression function such as Hiabu et al. (2023), can directly be applied to the Shapely curves. In that case, the order of approximation determines the convergence rate.

Corollary 8. Let the partial dependence function be an estimator for

obtained by marginal integration or backfitting. Under Assumptions 1, 2, 3 and 5 we have that

4 Numerical Studies

In this section, we conduct simulation studies to validate the previous asymptotic results. First, we empirically demonstrate the consistent estimation of both the component and integration-based estimation techniques. Next, we use the proposed bootstrap approach to show empirical coverage.

Let the regressors be zero mean Gaussian with variance and correlation ρ, which is either 0 or 0.8 for different setups. The corresponding density functions are assumed to be known. The first data-generating process (DGP) is an additive model and the second one includes interactions:

The error terms follow . To investigate for robustness, we also draw the error terms from the Student’s t-distribution with 5 degrees of freedom,

, which reduces the signal-to-noise ratio. The local linear estimator is used to obtain component-based and integration-based Shapley curves for all three variables. The bandwidths

differ in each direction and are chosen via leave-one-out cross-validation in each j-direction. We use the second-order Gaussian kernel. To evaluate the global performance of both estimators, we calculate the MISE for DGP 1 and DGP 2 based on 6000 Monte Carlo replications of the same experiment. The estimated as well as the population-level Shapley curve are illustrated as heatmaps in Figure S1 in the supplementary material, together with the squared error.

The simulation results are displayed in Table 1 for both DGPs and Gaussian error terms. Recall that and

are denoted as the estimated integration-based Shapley curve and the estimated component-based Shapley curve for variable j, respectively. First, we observe that the MISE is shrinking for both estimators as the sample size increases. This result empirically confirms Proposition 1 and the first part of Theorem 5. Second, the component-based approach (almost) always results in a smaller MISE than the integration-based approach. This aligns with the asymptotic bias and asymptotic variance comparison of both estimators in Chapter 3. Since we oversmooth within the integration-based estimator, the bias of

accumulates over each component. In contrast, the bias of

is only determined by a single component, namely the one associated with the full d-dimensional model. Since the asymptotic variance of

and

is equal, the bias is the crucial part for the better performance of the component-based estimator. Further, Table 1 underlines that the bias term is more prominent the more complex the true process is.

The Shapley curves for the third variable result in better performance when the integration-based estimation is applied. This observation might seem counterintuitive at first. As the pilot estimator oversmooths in each dimension, the bandwidth of the third variable, h3, is tuned to take the whole support of X3. Computationally, this happens in every iteration of our Monte Carlo simulation. As a consequence, a linear model is fitted in the X3 direction, which takes the whole support for estimation. This reduces the variance as more observations are available for the fit. In contrast, the component-based estimation is not able to tune h3 such that it captures the whole support in

, for each subset that contains the third variable.

Note that we do not assume knowledge of the true process during the estimation of Shapley curves. If we conduct the estimation knowing that the first DGP is of additive structure, we can use backfitting or marginal integration. In this case, the optimal one-dimensional bandwidth for dimension j would be used, instead of the full model bandwidth. Following Corollary 8 a faster decrease of the MISE will result since the convergence is one-dimensional.

Further, we conclude that the estimation procedure is robust to a lower signal-to-noise ratio as we obtain reasonable results for in Table S1 the supplementary material. Both tables show that including dependence between the variables in the form of correlation introduces indirect effects as described in Example 1. This leads to an increase in the MISE for (almost) all considered sample sizes. We have included further simulation results for higher dimensional settings up to dimension d = 9 in Table S3 in the supplementary material C. These results are accompanied by computational run times, illustrating the practical feasibility. The run time increases reasonably as we increase sample size and number of variables. Further, with higher dimension, the local linear estimator leads to a lower estimation accuracy due to the curse of dimensionality.

In the subsequent paragraphs, we are going to investigate the empirical coverage of the confidence intervals for the component-based Shapley curves. Due to the better convergence performance as well as the smaller asymptotic bias, we set our focus merely on the component-based Shapley curves. The proposed wild bootstrap procedure from Section 3.1 is applied. Let the true process be a non-linear function of X1 and X2: (14)

(14)

The empirical coverage for confidence intervals of different significance levels are reported in Table 2 for the component-based estimation at the point and B = 1000 bootstrap replications. The error terms are set to be Gaussian and t-distributed. It demonstrates that our bootstrap procedure works well in finite samples since we obtain the desired coverage ratios. This means that the confidence intervals are well calibrated. Further, the estimation is robust to increased noise in the true process. As commonly observed in nonparametric procedures, there is a slight under-coverage in finite samples. Further, Figure S2 in the supplementary material illustrates the estimated curve, the population curve as well as the bootstrap confidence intervals.

5 Empirical Application

This empirical application illustrates our previous results in a real-world situation of vehicle price setting. Vehicle manufacturers typically rely on price-setting approaches that involve teardown data, surveying, or indirect cost multiplier adjustments for calculating a markup. In contrast, we apply a data-driven approach as a potential alternative. The sample consists of extensive vehicle price data for the U.S. provided by Moawad et al. (2021) and collected by the Argonne National Laboratory. Our data set includes the 20 most important variables identified by the latter authors out of a larger pool of characteristics for the prediction of 38, 435 vehicle prices ranging from the year 2001 to the year 2020. However, our motivation differs in the sense that we are interested in the visualization of Shapley curves over the whole support of the variables at hand, instead of being restricted to have a variable importance measure only for the observations in the sample. Our goal is not to obtain the most precise prediction of vehicle prices, but rather to provide a nuanced interpretation approach. For this matter, we make use of the three most important variables of the data set, which are horsepower, vehicle weight (in pounds), and vehicle length (in inches). The data is divided into three time intervals, ranging from 2001–2007 (12,230), 2008–2013 (11,955), and 2014–2020 (14,250). The pooled summary statistics can be found in Table S2 in the supplementary material. In the following, the vehicle price is reported in 1000 USD.

It is of empirical interest to consider Shapley curves as proposed in this work for several reasons. First, we are able to decompose the estimated conditional expectation locally at any point vector of interest. This type of investigation can contribute to an empirical understanding of prices for U.S. vehicle companies. Second, we obtain asymptotically valid confidence intervals around the estimated Shapley curves. This enables the price setter to differentiate between significant and non-significant support sections of the covariates. In this application, we use the component-based Shapley curves as proposed in the previous chapters. The bandwidth choice is motivated by a dual objective, on the one hand, to get a good fit to the data, and on the other hand to get interpretable and smooth curves.

To graphically illustrate the Shapley curves in dependence of two variables, we fix the third variable at the median. The surface plot for the first variable, horsepower (hp), is illustrated in Figure S3 in the supplementary material in dependence of horsepower and vehicle weight for the pooled data from years 2001–2020. This plot allows us to illustrate the interactive contribution on the price prediction between the variables. As we see, very light cars with high horsepower such as sports cars, are resulting in the highest increase in the price prediction. The lighter the car as well as the higher the horsepower, the higher the contribution to the price.

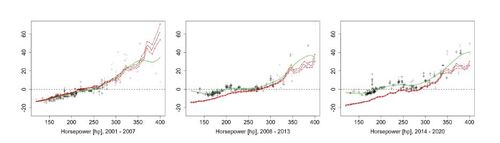

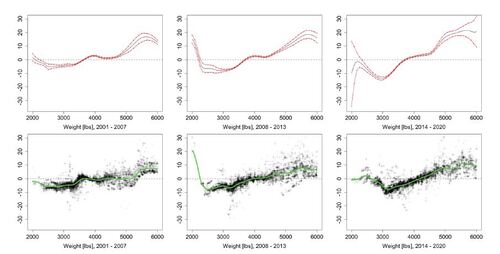

Next, we illustrate the estimated confidence intervals for the Shapley Curves over the support of variable j. Therefore we fix the remaining variables at the median. The results of this exercise are shown in Figure 2 for vehicle weight, in Figure 1 for horsepower, and in Figure S4 in the supplementary material for vehicle length. This type of analysis is not to be mistaken with the interpretation of confidence bands. For each of these slice plots we illustrate against Xj

. Figure 1 leads to the following economics insight. Across the time domain, we infer that horsepower, in general, is contributing less to an increased price as we move from the first to the second and third time interval. This effect is especially prominent as we compare the first and second time interval. The reason is that as technology develops over time, it is less costly for car manufacturers to produce vehicles with higher horsepower.

Further, we include estimated KernelSHAP values, as well as a smoothed curve of these values in Figure 1. KernelSHAP assumes independence between the variables and does not include subsampling of subsets in the sense of a computational approximation. As an estimator, we use XGBoost with default hyperparameter settings. To make the comparison between Shapley curves and smoothed KernelSHAP values as fair as possible, we estimate KernelSHAP for Horsepower, only on observations with realizations of weight and length lying in an interval around the median. It shows that the Shapley curve for horsepower and the smoothed SHAP values result in different curves in such a scenario. This is not surprising, as the assumption of variable independence can be practically misleading. Further, the smoothed SHAP curves are not accompanied by confidence intervals. Note that we could run KernelSHAP using estimation methods that account for variable dependence, as discussed by, for example, Aas et al. (2021a) or Frye et al. (2020).

The first row of Figure 2 includes the Shapley curve for vehicle weight. It shows that a lighter weight contributes to a price decrease, accompanied by stagnation at around 4000 lbs and an increase shortly after. Further, the Shapley curve is stretched apart on the tails across the time intervals. The estimated confidence intervals have a larger spread as we move to the tails of the variables. This is intuitive as we have more variation in price for the observations in this area. For example, a very heavy car with more than 5500 lbs can either be a Nissan NV Cargo Van or a Cadillac Escalade SUV. On the left tail, we observe insignificance for the third period.

The second row in Figure 2 includes the KernelSHAP values estimated for each observation in the respective time interval. In addition, we fit a smoother on these values, to make a comparison to our Shapley curves. In contrast to Figure 1, we are estimating the KernelSHAP for all observations.

6 Conclusion

This paper analyzes Shapley curves as a local measure of variable importance in a nonparametric framework. We give a rigorous definition of Shapley curves on the population level. As for estimation, we discuss two estimation strategies, the component-based approach, and the integration-based approach. We prove that both estimators globally converge with the nonparametric rate of Stone (1982) to the population counterpart. Asymptotic normality is obtained for both estimators. We show that the asymptotic variance is identical for both approaches. However, the integration-based approach is accompanied by an inflated asymptotic bias. In our simulation exercise, this difference is visible in the results for the MISE. The advantage of the integration-based estimator is that only the pilot estimator is required to estimate the components. We proposed a tailored wild bootstrap procedure, which we proved to be consistent. Empirically, it results in good finite sample coverage. Under the assumption of an additive model a one-dimensional convergence rate results for Shapley curves by Stone (1985). For an extensive vehicle price data set we show that Shapley curves are a useful tool for the practitioner to gain insight into pricing and the importance of vehicle characteristics. The estimated confidence intervals enable us to distinguish between significant and non-significant sections of the variables. Building on our results, empirical researchers as well as practitioners can conduct statistical inference for Shapley curves.

Acknowledgements

The authors are grateful to the editor, associate editor, and two anonymous referees for their insightful comments and constructive suggestions. This research is supported by the German Academic Scholarship Foundation; Deutsche Forschungsgemeinschaft via SFB 1294, KI-FOR 5363 DeSBI and IRTG 1792, Humboldt University of Berlin; the Yushan Fellowship, National Yang Ming Chiao Tung University, Taiwan; the Czech Science Foundation’s grant no. 19-28231X and the Institute for Digital Assets (IDA), Academy of Economic Sciences, Romania.

Disclosure Statement

The authors report there are no competing interests to declare

Table 1. MISE of Shapley Curves for component-based estimator and integration-based estimator

for each variable. The additive and interactive DGP is simulated with variable correlations of ρ = 0 and

. The error terms follow

.

Table 2. Empirical coverage for component-based Shapley curves for and

and significance levels

with M = 1000 Monte Carlo replications.

Figure 1. Estimated component-based Shapley curves for horsepower in dependence of horsepower (in hp) for a vehicle length of 190 inches and vehicle weight of 3500 pounds (black line), confidence intervals (red lines), estimated SHAP values (black crosses) and smoothed curve based on these values (green line).

Figure 2. Estimated component-based Shapley curves for vehicle weight in dependence of weight (in lbs) for a vehicle length of 190 inches and 190 horsepower (black line), confidence intervals (red lines), estimated KernelSHAP values (black crosses) and smoothed curve based on these values (green curve).

supplement_authors.zip

Download Zip (698.2 KB)References

- Aas, K., Jullum, M., and Løland, A. (2021a). Explaining individual predictions when features are dependent: More accurate approximations to Shapley values. Artificial Intelligence, 298:103502.

- Aas, K., Nagler, T., Jullum, M., and Løland, A. (2021b). Explaining predictive models using Shapley values and non-parametric vine copulas. Dependence Modeling, 9(1):62–81.

- Bénard, C., Biau, G., Da Veiga, S., and Scornet, E. (2022a). SHAFF: Fast and consistent SHApley eFfect estimates via random Forests. In International Conference on Artificial Intelligence and Statistics, pages 5563–5582. PMLR.

- Bénard, C., Da Veiga, S., and Scornet, E. (2022b). Mean decrease accuracy for random forests: inconsistency, and a practical solution via the Sobol-MDA. Biometrika.

- Chastaing, G., Gamboa, F., and Prieur, C. (2012). Generalized Hoeffding-Sobol decomposition for dependent variables-application to sensitivity analysis. Electronic Journal of Statistics, 6:2420–2448.

- Chen, H., Covert, I. C., Lundberg, S. M., and Lee, S.-I. (2023). Algorithms to estimate Shapley value feature attributions. Nature Machine Intelligence, pages 1–12.

- Covert, I. and Lee, S.-I. (2021). Improving kernelshap: Practical Shapley value estimation using linear regression. In International Conference on Artificial Intelligence and Statistics, pages 3457–3465. PMLR.

- Covert, I., Lundberg, S., and Lee, S.-I. (2021). Explaining by removing: A unified framework for model explanation. Journal of Machine Learning Research, 22(209):1–90.

- Covert, I., Lundberg, S. M., and Lee, S.-I. (2020). Understanding global feature contributions with additive importance measures. Advances in Neural Information Processing Systems, 33:17212–17223.

- Dagenais, M. G. (1969). A threshold regression model. Econometrica, 37(2):193–203.

- Durlauf, S. N. and Johnson, P. A. (1995). Multiple regimes and cross-country growth behaviour. Journal of Applied Econometrics, 10(4):365–384.

- Fan, J. (1993). Local linear regression smoothers and their minimax efficiencies. The Annals of Statistics, pages 196–216.

- Fan, J., Härdle, W., and Mammen, E. (1998). Direct estimation of low-dimensional components in additive models. The Annals of Statistics, 26(3):943–971.

- Frye, C., de Mijolla, D., Begley, T., Cowton, L., Stanley, M., and Feige, I. (2020). Shapley explainability on the data manifold. In International Conference on Learning Representations.

- Fryer, D., Strümke, I., and Nguyen, H. (2020). Shapley value confidence intervals for attributing variance explained. Frontiers in Applied Mathematics and Statistics, 6:587199.

- Hansen, B. E. (2000). Sample splitting and threshold estimation. Econometrica, 68(3):575–603.

- Härdle, W. and Mammen, E. (1993). Comparing nonparametric versus parametric regression fits. The Annals of Statistics, pages 1926–1947.

- Härdle, W. and Marron, J. S. (1991). Bootstrap simultaneous error bars for nonparametric regression. The Annals of Statistics, pages 778–796.

- Hiabu, M., Mammen, E., and Meyer, J. T. (2020). Random planted forest: a directly interpretable tree ensemble. arXiv preprint arXiv:2012.14563.

- Hiabu, M., Meyer, J. T., and Wright, M. N. (2023). Unifying local and global model explanations by functional decomposition of low dimensional structures. In International Conference on Artificial Intelligence and Statistics, pages 7040–7060. PMLR.

- Jethani, N., Sudarshan, M., Covert, I., Lee, S.-I., and Ranganath, R. (2022). Fastshap: Real-time shapley value estimation. ICLR 2022.

- Johnsen, P. V., Strümke, I., Riemer-Sørensen, S., DeWan, A. T., and Langaas, M. (2021). Inferring feature importance with uncertainties in high-dimensional data. arXiv preprint arXiv:2109.00855.

- Lin, C., Covert, I., and Lee, S.-I. (2024). On the robustness of removal-based feature attributions. Advances in Neural Information Processing Systems, 36.

- Linton, O. and Nielsen, J. P. (1995). A kernel method of estimating structured nonparametric regression based on marginal integration. Biometrika, pages 93–100.

- Lundberg, S. M., Erion, G., Chen, H., DeGrave, A., Prutkin, J. M., Nair, B., Katz, R., Himmelfarb, J., Bansal, N., and Lee, S.-I. (2020). From local explanations to global understanding with explainable AI for trees. Nature Machine Intelligence, 2(1):56–67.

- Lundberg, S. M. and Lee, S.-I. (2017). A unified approach to interpreting model predictions. Advances in Neural Information Processing Systems, 30.

- Mammen, E. (1992). Bootstrap, wild bootstrap, and asymptotic normality. Probability Theory and Related Fields, 93(4):439–455.

- Mammen, E. (1993). Bootstrap and wild bootstrap for high dimensional linear models. The Annals of Statistics, 21(1):255–285.

- Moawad, A., Islam, E., Kim, N., Vijayagopal, R., Rousseau, A., and Wu, W. B. (2021). Explainable AI for a no-teardown vehicle component cost estimation: A top-down approach. IEEE Transactions on Artificial Intelligence, 2(2):185–199.

- Muschalik, M., Fumagalli, F., Hammer, B., and Hüllermeier, E. (2024). Beyond treeSHAP: Efficient computation of any-order Shapley interactions for tree ensembles. arXiv preprint arXiv:2401.12069.

- Nielsen, J. P. and Linton, O. (1998). An optimization interpretation of integration and back-fitting estimators for separable nonparametric models. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 60(1):217–222.

- Owen, A. B. (2014). Sobol’indices and Shapley value. SIAM/ASA Journal on Uncertainty Quantification, 2(1):245–251.

- Owen, A. B. and Prieur, C. (2017). On Shapley value for measuring importance of dependent inputs. SIAM/ASA Journal on Uncertainty Quantification, 5(1):986–1002.

- Schmidt-Hieber, J. (2020). Nonparametric regression using deep neural networks with ReLU activation function. The Annals of Statistics, 48(4):1875–1897.

- Scornet, E. (2023). Trees, forests, and impurity-based variable importance in regression. Annales de l’Institut Henri Poincare (B) Probabilites et statistiques, 59(1):21–52.

- Scornet, E., Biau, G., and Vert, J.-P. (2015). Consistency of random forests. The Annals of Statistics, 43(4):1716 – 1741.

- Shapley, L. S. (1953). A value for n-person games. Contributions to the Theory of Games, 28(2):307–317.

- Song, E., Nelson, B. L., and Staum, J. (2016). Shapley effects for global sensitivity analysis: Theory and computation. SIAM/ASA Journal on Uncertainty Quantification, 4(1):1060–1083.

- Sperlich, S., Tjøstheim, D., and Yang, L. (2002). Nonparametric estimation and testing of interaction in additive models. Econometric Theory, 18(2):197–251.

- Stone, C. J. (1982). Optimal global rates of convergence for nonparametric regression. The Annals of Statistics, pages 1040–1053.

- Stone, C. J. (1985). Additive regression and other nonparametric models. The Annals of Statistics, pages 689–705.

- Štrumbelj, E., Kononenko, I., and Šikonja, M. R. (2009). Explaining instance classifications with interactions of subsets of feature values. Data & Knowledge Engineering, 68(10):886–904.

- Tjøstheim, D. and Auestad, B. H. (1994). Nonparametric identification of nonlinear time series: projections. Journal of the American Statistical Association, 89(428):1398–1409.

- Williamson, B. and Feng, J. (2020). Efficient nonparametric statistical inference on population feature importance using Shapley values. In International Conference on Machine Learning, pages 10282–10291. PMLR.