ABSTRACT

Informed by theoretical perspectives on working memory demands and on devices’ potential to “prime” different types of cognitive processing, this paper investigates whether we tend to think “faster,” more intuitively, and with less reflection when we use a smartphone instead of a personal computer (PC) or notebook. Three complementary experimental studies with a total of 823 participants reveal that device differences surface only when participants can select the smartphone as their preferred device. Controlling for potential confounding variables reveals no evidence of general differences between devices. Our findings caution against overemphasizing the importance of the type of device in thinking slow or fast and establish self-selection bias as an important factor in explaining such differences. This study contributes to clarifying the psychology of smartphone screens and how humans make choices when they use these devices.

Introduction

The primary medium for information retrieval and decision-making has shifted from the physical to the digital sphere. Recent years have witnessed a rapid “shift-to-mobile” [Citation142, p. 1], driven by advancing miniaturization, broad network coverage, and high Internet speeds. Smartphones connect us with the digitalized world and our social networks and extend our cognitive capabilities, as these devices are constantly available as external stores of knowledge and information [Citation53]. People already spend half of their online time on smartphones, and smartphones have surpassed computers as the most commonly used devices [Citation117]. Businesses and organizations would therefore benefit from more insight into users’ cognitive processing, in particular into how their decisions differ depending on the type of device they use. Put simply, do we think differently when we use a smartphone instead of a personal computer (PC)?

Despite smartphones’ near omnipresence, only a few studies have examined their effects on cognition [Citation111, Citation153], and particularly on decision-making, an important subfield of cognition [Citation123]. Research on decision-making has reported device-dependent differences in various areas, such as online reviews [e.g., Citation86, Citation104, Citation106, Citation157], consumer decisions [e.g., Citation61, Citation107, Citation117], social media posts [e.g., Citation85], and interactions with fake news [e.g., Citation93]. Evidence has also shown that smartphones’ small screens complicate information-seeking [Citation22, Citation53] and information-processing [Citation31, Citation64], both of which form the basis of rational decision-making.

While research on cognitive performance based on the type of device used is inconclusive, even less is known about whether different types of devices “prime” different types of cognitive processing. In other words, we do not know whether smartphones systematically predispose users to think faster and more intuitively and to be less reflective. This type of cognitive processing is commonly known as “Type 1,” as compared to the reflective, slow, and deliberate “Type 2.” While Type 1 processing requires minimal attention or cognitive effort, such as that required in adding 2 and 2 or reading a simple sentence, reflective Type 2 processing is required, for example, to fill out a tax form or compare multiple products in making a consumer decision [Citation62]. Other authors have referred to “System 1” and “System 2” processing [e.g., Citation28, Citation67, Citation89, Citation90], but we follow Evans and Stanovich’s [Citation36] suggestion to use “Type 1” and “Type 2” processing because “System 1” and “System 2” could be misinterpreted as two separate areas of the brain.

A review of the extant research reveals three particularly noteworthy gaps. First, even though research has indicated that people use more cognitive reflection when working on a computer than when working on paper [Citation14], priming effects for Type 1 versus Type 2 processing when one uses a smartphone versus a PC have not been investigated systematically. In priming, an environmental stimulus influences thoughts, feelings, and behaviors unintentionally and without the person’s awareness [Citation9]. The prime, in this case a particular device, would activate an associated type of cognitive processing based on previous experience and without conscious effort. Since the omnipresence of smartphones means that decision processes are increasingly shaped by these devices, it would be valuable to know whether merely using a smartphone shifts the type of cognitive processing.

The second gap is that research comparing devices has focused on cognitive performance when reflective Type 2 processing is used [Citation24, Citation140, Citation142] and on subjective thinking styles. However, these measures are less well suited to predicting rational decision-making on smartphones, for example, in decisions that involve more or less risk or that require choosing between larger delayed rewards and smaller immediate ones [Citation38]. Research should therefore focus on measuring cognitive reflection versus intuition [Citation105, Citation136], which goes “beyond measures of cognitive ability by examining the depth of processing that is actually used” [Citation133, p. 99].

The third gap is that research has not clarified the role that self-selection plays in decisions or specific user behaviors on smartphones. Research in survey science [Citation78], e-commerce [Citation74], and personnel testing [Citation5, Citation140] has argued that the experimental setup (self-selection versus random assignment of participants to devices) may account for contradictory findings on device differences. Addressing such selection bias is necessary to clarify the causal relationship between the type of device and decision-making.

These shortcomings point to the following research question: Does using a smartphone, compared with using a PC, systematically prime more or less use of intuitive “Type 1” processing versus reflective “Type 2” processing? We address this question in three experiments in which participants performed domain-independent cognitive tasks (adapted verbal and numerical cognitive reflection test items in a multiple-choice format) on a smartphone or a PC. An experimental investigation can isolate the effect of using a particular type of device on cognitive reflection and rule out alternative explanations. For example, differences in how devices are used could also stem from higher search costs on smartphones compared to PCs because of differences in navigation [Citation41, Citation107] and mobile-adapted applications [Citation97]. In addition, research on device differences has suffered from a variety of limitations, such as participants often completing assessments on a mobile device in distracting surroundings and assessment webpages not being optimized for mobile display [Citation57], which leads to longer completion times and lower user satisfaction with the device. Furthermore, user interface elements may render unpredictably if this is not planned for (e.g., drop-down menus are rendered as spinner lists on Android but as picker wheels on Apple devices) [Citation3]. Thus, prior research has often failed to isolate the features that are indispensable to the perception of a smartphone as a holistic concept, such as a touch interface or small screen size [Citation117], and has instead allowed many contextual factors to confound results, making it difficult to separate the pure device effect. Finally, when participants are not assigned to either a smartphone or a PC, selection bias is likely to emerge, and observed differences might stem from a third variable.
To disentangle the effect of self-selection and shed light on contradictory findings, the present study examines the effect of smartphones in a between-subjects design with self-selection, a randomized between-subjects design without self-selection, and a within-subjects design that controls for a large variety of confounding variables. Our study design aims to separate the features that most typically distinguish smartphones from PCs, such as the touch-sensitive user interface or the smaller screen size, from other contextual factors that may be more common in combination with smartphones but are not inseparably linked to them, such as screen clutter or distraction.
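The self-selection argument above can be made concrete with a small simulation. The sketch below is purely illustrative and rests on hypothetical assumptions that are not part of our studies: each simulated participant has a latent reflectiveness trait, the test score depends only on that trait (so the true device effect is zero by construction), and, under self-selection, less reflective participants are more likely to choose the smartphone. The function name `device_gap` and the logistic slope of 2 are illustrative choices.

```python
import math
import random
from statistics import fmean


def device_gap(n: int, self_select: bool, seed: int = 0) -> float:
    """Return mean(PC score) - mean(smartphone score) in a simulated sample.

    Hypothetical assumptions, for illustration only:
    - each participant has a latent 'reflectiveness' trait ~ N(0, 1);
    - the score depends on that trait plus noise, NOT on the device,
      so the true device effect is zero by construction;
    - under self-selection, less reflective participants are more likely
      to choose the smartphone (logistic link with slope 2).
    """
    rng = random.Random(seed)
    phone_scores, pc_scores = [], []
    for _ in range(n):
        reflectiveness = rng.gauss(0.0, 1.0)
        if self_select:
            # Probability of picking the smartphone falls as reflectiveness rises.
            p_phone = 1.0 / (1.0 + math.exp(2.0 * reflectiveness))
        else:
            p_phone = 0.5  # random assignment ignores the trait
        score = reflectiveness + rng.gauss(0.0, 1.0)  # no device term
        if rng.random() < p_phone:
            phone_scores.append(score)
        else:
            pc_scores.append(score)
    return fmean(pc_scores) - fmean(phone_scores)


if __name__ == "__main__":
    print(f"self-selected: {device_gap(20_000, self_select=True):.2f}")
    print(f"randomized:    {device_gap(20_000, self_select=False):.2f}")
```

Under these assumptions, the self-selected sample shows a sizable PC advantage even though device type has no causal effect, whereas random assignment yields a gap close to zero. Comparing a self-selection design with a randomized design is meant to detect exactly this kind of pattern.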

Our research results extend current theories on smartphones and cognition and contribute to our understanding of what influences our ability to think effectively and make good decisions on smartphones. The results also have implications for areas like online reviews [e.g., Citation86, Citation104, Citation106, Citation157], consumer decisions [e.g., Citation61, Citation107, Citation117], posting on social media [e.g., Citation85], and interaction with fake news [e.g., Citation93]. Finally, they will also be of high practical relevance to designers of mobile applications.

Theoretical background

Smartphones and cognition

The popular media portray smartphones as a threat to our cognition, and evidence shows that multitasking with devices has adverse effects on attention [Citation153], particularly when individuals seek immediate gratification, such as a new “like” on social media, or perform tasks they do not find rewarding. Mobile phones can interfere with everyday behaviors like walking and real-life social interactions, as smartphones occupy valuable attentional resources. Research has also found that the addictive nature of phone-related “self-interruptions” may lower work and study performance [Citation81].

Research on using smartphones as a form of “extended cognition” has suggested that “more frequent smartphone usage would lead to less reliance on higher cognition” [Citation39, p. 1057]. Even the mere presence of a smartphone can have cognitive costs, reduce cognitive capacity, and impair task performance [Citation39, Citation134, Citation146]. Hartmann et al. [Citation54] explained this smartphone effect using the “affordances” for interaction that a smartphone offers and reported smartphone dependency as a moderator of the effect of a smartphone’s presence but found no overall effect of smartphone presence on memory performance. Recent experimental studies have also demonstrated adverse long-term effects of smartphones on cognition, although these effects are not stable over time. Heavy smartphone use (over 5 hours a day) leads to a “diminished ability to interpret and analyze the deeper meaning of information” [Citation39, p. 1], although this effect disappeared after a few weeks.

Despite the prevalence of smartphones in everyday life, we know little about how they influence cognitive information-processing and decision-making [Citation111, Citation153]. Users worldwide spend an average of about three hours a day on their smartphones [Citation127]. Half of the time people spend on the Internet is already spent on smartphones, as much as on desktops/PCs [Citation45], and during this time users make decision after decision. In the context of information acquisition and related decision-making, people under age 35 use smartphones to read news more often than desktop devices and traditional news sources such as print, radio, and TV [Citation94], a trend that has progressed quickly since news apps began to gain acceptance in 2015 [Citation13]. In addition, the share of online reviews written on smartphones has risen sharply relative to those written on PCs [Citation80]. These changing patterns of device use make it important to determine whether users make equally informed decisions on smartphones and on PCs.

Type 1 and Type 2 cognitive processing and their measurement

Cognition is defined as the “mental activity of processing information and using that information in judgment” [Citation59], whereas decision-making can entail both choices that depend on taste and preference and inference decisions that are based on decision criteria [Citation43]. Research on human decision-making has long recognized that normative models of decision-making, that is, models of how rational decision-makers should decide based on reasoning and weighting of decision options to achieve maximum expected utility, do not match descriptive accounts of how decisions are actually made [Citation123]. Bounded rationality, a term Simon [Citation118] coined to acknowledge the cognitive limitations of decision-makers, is also reflected in “satisficing” [Citation119], which occurs when individuals “make a decision that satisfies and suffices for the purpose” [Citation18, p. 1241], but which may not be optimal or fully rational.

Dual-process theories distinguish two types of cognitive processing in decision-making [Citation98]. Type 1 processing (“intuitive” processing), which humans often use when deciding spontaneously in everyday life, is fast, automatic, and efficient in routine, repetitive decisions and is associated with systematic cognitive biases and heuristics (rules of thumb), as it is executed without much reflection. Gigerenzer and Brighton [Citation42] and Hertwig and Gigerenzer [Citation55] further refined the understanding of Type 1 processing by investigating which heuristics people use and when such heuristics are useful, may even be ecologically rational, and might offer a better accuracy-effort trade-off.

In contrast, Type 2 processing (“reflective” processing) is rational, slow, and deliberate. Dual-process theories were discussed as early as the 1970s [Citation147] and, following popular works by Kahneman [Citation62] and Stanovich and West [Citation126], have been applied extensively in more recent research. People are cognitive misers by nature [Citation125]: they tend to prefer comparatively effortless Type 1 processing over Type 2 processing, which requires conscious thought, effort, and costly analytical reflection. Type 2 processing is characterized by “cognitive decoupling and hypothetical thinking—and by its strong loading on the working memory resources that this requires” [Citation36, p. 226]. Recently, neuropsychological studies using electroencephalography [Citation90] have also revealed evidence of dual processing, distinguishing Type 1 and Type 2 processing during problem-solving tasks involving cognitive biases [Citation8] and while participants read fake news posts on social media [Citation90].

In general, cognitive reflection, or the lack thereof, predicts many decisions and beliefs in every aspect of life, from falling for conspiracy theories to moral decisions [Citation100] to problematic use of social networks [Citation141]. As a result, increasing attention has been paid to the measurement of cognitive reflection. For broad use on large samples, the cognitive reflection test provides an objective measure of cognitive reflection; Frederick’s [Citation38] classic version has three items. Cognitive reflection tests require respondents to “override intuitively appealing but incorrect answers” [Citation88, p. 246], as the following example question from Sirota et al. [Citation120, p. 327] demonstrates: “If you were running a race, and you passed the person in second place, what place would you be in now?” The incorrect, “intuitive” Type 1 response is first place, but the correct, “reflective” Type 2 response is second place. Performance on cognitive reflection tests cannot be fully explained by numerical or cognitive abilities; rather, these tests capture miserly cognitive processing [Citation137]. Recent mouse movement analyses show that intuitive responses are initially activated in such tests and must be suppressed to arrive at a correct answer [Citation139]. Moreover, stimulation of the brain areas responsible for inhibitory control has been shown to lower participants’ ability to suppress incorrect intuitive responses in cognitive reflection tests [Citation32, Citation96]. Because of its established validity and its ability to predict people’s reasoning and decision-making skills in other situations [Citation120], the cognitive reflection test has been used in hundreds of studies. In fact, a 2015 meta-study of the cognitive reflection test [Citation14] drew on 118 publications using the original version.
More recent research has shown, for instance, that performance on the cognitive reflection test is negatively correlated with the perceived accuracy of fake news and positively correlated with the ability to distinguish fake news from real news, even for headlines that align with the individual’s political ideology [Citation101].
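As an illustration of how responses to a multiple-choice cognitive reflection item can be coded, the following sketch labels each answer as reflective (correct, Type 2), intuitive (the appealing lure, Type 1), or other. The `CRTItem` structure, the function names, and the distractor option "third place" are hypothetical; only the question and its intuitive and correct answers come from the Sirota et al. example quoted above.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class CRTItem:
    """A multiple-choice cognitive reflection item (hypothetical structure)."""
    prompt: str
    options: tuple[str, ...]
    reflective: str  # correct answer, indicative of Type 2 processing
    intuitive: str   # appealing but incorrect lure, indicative of Type 1 processing


def classify(item: CRTItem, answer: str) -> str:
    """Label one answer as 'reflective', 'intuitive', or 'other'."""
    if answer not in item.options:
        raise ValueError(f"{answer!r} is not an option of this item")
    if answer == item.reflective:
        return "reflective"
    if answer == item.intuitive:
        return "intuitive"
    return "other"


def tally(items: list[CRTItem], answers: list[str]) -> Counter:
    """Count reflective, intuitive, and other answers for one participant."""
    if len(items) != len(answers):
        raise ValueError("need exactly one answer per item")
    return Counter(classify(item, answer) for item, answer in zip(items, answers))


# Example item from the text; "third place" is an assumed distractor.
RACE = CRTItem(
    prompt=("If you were running a race, and you passed the person in second "
            "place, what place would you be in now?"),
    options=("first place", "second place", "third place"),
    reflective="second place",
    intuitive="first place",
)
```

Keeping intuitive answers separate from other errors is one reasonable design choice: an incorrect answer is most informative about Type 1 processing when it is the intuitive lure rather than an arbitrary mistake.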

Following Novak and Hoffman [Citation95], we conceptualize cognitive processing types as a situation-specific state instead of as an individual characteristic or trait. In other words, we posit that any person’s use of Type 1 or 2 processing may depend on the device and the specific situation.

Literature review

Although research has not directly investigated whether Type 1 or Type 2 processing occurs more often on smartphones than on PCs, many of the findings on differences between devices point to an answer. Work on such differences is scattered across domains like online and mobile shopping [e.g., Citation76, Citation97, Citation107], online reviews, respondents’ behavior in online surveys [e.g., Citation24, Citation70, Citation83], psychological testing for personnel assessment [e.g., Citation6, Citation17, Citation57], user behavior on social media [e.g., Citation22, Citation64, Citation86], and news consumption [e.g., Citation30, Citation31]. To obtain a clearer picture of agreement and disagreement among these studies’ results, we carried out a systematic literature analysis of 79 studies and coded their major results according to determinants of rational decision-making and their relationships to intuitive Type 1 versus reflective Type 2 processing. (For details on the 79 studies, see online Appendix A.)

To contextualize research on differences between devices, we adapted a model of human information-processing [Citation150, Citation151] that has been applied successfully in the context of device differences [Citation5]. We refined the model to include the concepts relevant to our literature review: information-seeking, thinking styles, and Type 1 versus Type 2 processing, which, together with perception, are the main foci of the review. The conceptual model is a simplified abstraction of cognitive processes and does not include, for instance, cognitive control mechanisms. As illustrated in the model, recent neuropsychological studies have shown that attention, working memory, and long-term memory play roles in Type 1 and Type 2 processing, albeit to different degrees [Citation152].

Figure 1. Contextualization of the determinants of rational decision-making, adapted from [Citation150, Citation151]. Newly included concepts in the information-processing model by [Citation150, Citation151] are printed in bold, and the main foci of the literature review are highlighted in light gray.


In the literature review, five concepts emerged: information-seeking about decision alternatives, which is a phase in many models of the decision process [Citation124]; device-dependent attention to and perception of information; thinking styles as a predisposition for Type 1 and Type 2 processing; cognitive performance of reflective Type 2 processing; and indicators of the cognitive effort invested in decision-making and of the use of Type 1 vs. Type 2 processing. From a motivational perspective, variables such as time taken and the characteristics of written texts could indicate the decision process used and the cognitive effort invested on different devices. Such process variables could also be associated with less effortful elaboration in the context of Type 1 and Type 2 processing. However, apart from these indirect measurements, research has not directly measured Type 1 and Type 2 processing across devices, which is a clear research gap.

Information search and information acquisition on decision alternatives

Numerous studies have indicated that users search for additional information less often on a smartphone than on a PC. In the context of multichannel customer management, Sohn and Groß [Citation122] described factors on the customer side that prevent customers from shopping on mobile devices, such as the perceived effort required to evaluate and select products. These results are in line with Raphaeli et al. [Citation107], who showed that consumer behavior differs on smartphones and PCs, as smartphone users show more task-oriented browsing than exploratory behavior. Session duration in online shopping is reported to be shorter on smartphones than on PCs [Citation107], and customer journeys on smartphones include fewer clicks [Citation61]. These results are consistent with observations that casual browsing for products prompted by newsletters or social media is more prevalent on PCs, whereas smartphone users are more likely to click sponsored search results or visit familiar online stores, neither of which requires extensive information search [Citation61]. In addition, in the domain of news-reading, findings have indicated that consumers who read news on social media are less likely to click on related links when they use smartphones than when they use PCs, which reduces in-depth engagement [Citation22].

As a consequence of the greater search costs associated with mobile devices, smartphone users tend to be more receptive to recommendations, as demonstrated, for instance, by more clicks on and views of recommended products on smartphones than on PCs [Citation74]. While evidence of a stronger ranking bias on smartphones than on PCs (i.e., a greater tendency to click on the search results displayed first) is still debated [Citation155], research has demonstrated that top-ranked links are more likely to be clicked on mobile devices than on PCs [Citation41]. Lower levels of information acquisition on smartphones have also been demonstrated by less switching between windows [Citation49] and lower performance when study participants are asked to search online for answers to a quiz [Citation53] or to identify relevant documents for a search query [Citation130].

Perception and cognitive elaboration of information

Smartphones affect how users perceive and process information [Citation111]. Ross and Campbell’s [Citation111, p. 150] review of smartphones’ effects on cognition and emotion argued that smartphones “appear to suppress deeper information processing in favor of quick and convenient extractions of information” and that “mobile interfaces and usage contexts generally favor shallower levels of information processing” [Citation111, p. 156]. For example, an eye-tracking study showed that social media news posts attract less visual attention to both images and text, and less cognitive engagement, on smartphones than on PCs [Citation64]. Users also spend less time reading news on a smartphone than on a PC [Citation30]. Dunaway and Soroka [Citation31] also reported, based on psychophysiological responses, lower cognitive attention to video news on smartphones. Large amounts of information (e.g., excessive numbers of consumer reviews) are more likely to induce cognitive overload (i.e., a “higher load imposed on an individual’s working memory” [Citation143]) on smartphones than on PCs and to lower purchase intention [Citation40].

Thinking styles and decision-making in preferential choices

Outcomes of consumer decisions can indicate how consumers evaluated decision alternatives on different devices and whether Type 1 or Type 2 processing was involved. When discussing smartphone and PC differences, some authors have referred to theories from various research streams that can be subsumed under the umbrella term of dual processing [Citation113], even if they do not directly refer to Type 1 and 2 processing [Citation36]. While thinking styles or dispositions should not be confused with Type 1 and Type 2 processing, such dispositions can “determine the probability that a response primed by Type 1 processing will be expressed” [Citation36, p. 230].

For instance, Liu and Wang [Citation76] investigated whether devices trigger different “consumer decision systems” in the context of choosing a hotel on a smartphone or a PC. They concluded that PCs and laptops evoke a logic-based (related to Type 2 processing) instead of a feeling-based (related to Type 1 processing) decision system, the latter of which is more likely to be used on smartphones. These results were supported by Kaatz et al. [Citation61], who reported, based on clickstream data from a fashion retailer, that cognitive components of the customer experience (related to Type 2 processing) are more relevant to purchase decisions made on PCs, while affective components (related to Type 1 processing) have more influence on decisions made on smartphones. Regarding thinking styles in purchase decisions, research has also reported that smartphones evoke an intuitive-experiential (related to Type 1 processing) thinking style, while PCs evoke an analytical-rational (related to Type 2 processing) style.

Indicators of the cognitive effort invested in decision-making and the use of Type 1 versus Type 2 processing

Time spent

Authors have argued that a number of dependent variables that differ across devices, such as the time spent on similar decisions or tasks, can be explained by lower cognitive effort invested on smartphones than on PCs. In survey science and in studies comparing devices for psychological measurement, the impact of smartphones on completion times for web surveys [Citation2, Citation23], for example, has long been debated; overall, however, most of the evidence suggests that respondents take longer to fill out questionnaires on smartphones than on PCs. Both a meta-study in survey science [Citation23] and one in personnel testing [Citation5], for instance, found that most studies reported longer completion times on smartphones. However, some of the earlier studies might be outdated. As Schlosser and Mays [Citation116] noted in 2017, answer times are reduced when a fast Internet connection and a current smartphone are used. More recent studies [Citation50, Citation83, Citation108, Citation148] found similar completion times for closed-ended questions on smartphones and PCs, whereas shorter completion times on smartphones than on PCs, which might indicate less cognitive effort expended on smartphones, are rarely reported [Citation50].

Satisficing, response bias, and break-off rates

Peytchev and Hill [Citation103] were among the first to argue that responding to a survey on a smartphone in a distracting situation can lead to “peripheral” processing (related to Type 1 processing) instead of “central” processing (related to Type 2 processing) of questions, and to less attention and effort invested in the task at hand than when responding on a PC. While the distinction between the central and peripheral routes is based on the elaboration likelihood model [Citation102] and has typically been used in the literature on persuasion and attitude change, it has also been characterized as a dual-processing theory [Citation113]. Response patterns in online surveys, such as straight-lining (selecting the same answer option for a series of questions) or choosing the first answer option (primacy effect), may indicate satisficing, in which respondents “shortcut the cognitive processes necessary for generating optimal answers” [Citation71, p. 29]. Mixed results were found for differences in the incidence of straight-lining between smartphones and PCs [Citation66, Citation78, Citation138]. Another study showed that using smartphones did not lead to more motivated underreporting (i.e., selecting answers to filter questions that reduce effort) than using PCs [Citation25]. Some authors reported higher break-off rates [Citation66, Citation73] or more missing items [Citation50, Citation66, Citation78, Citation108] for participants who used smartphones, but others found no noticeable differences [Citation116]. Nor were higher acquiescence rates [Citation66, Citation75] or midpoint bias [Citation66] found for smartphones. In addition, results showing stronger primacy effects on smartphones [Citation78, Citation108] were contradicted by other studies [Citation84, Citation138, Citation149].
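The two response patterns described above can be operationalized in many ways; the sketch below shows one simple, hypothetical version. It flags a respondent as straight-lining when every item of a question grid receives the same option, and it computes the share of answers that select the first listed option as a rough primacy indicator. Answers are assumed to be coded as option indices (0 = first option), and the `min_items` cut-off is an illustrative assumption, not a standard taken from the cited studies.

```python
from __future__ import annotations

from collections.abc import Sequence


def is_straightlining(grid_answers: Sequence[int], min_items: int = 3) -> bool:
    """Flag a respondent who chose the same option for every item of a grid.

    grid_answers holds the chosen option index (0 = first option) for each
    item of one question grid; min_items is a hypothetical cut-off below
    which the check is not applied.
    """
    return len(grid_answers) >= min_items and len(set(grid_answers)) == 1


def first_option_share(answers: Sequence[int]) -> float:
    """Share of answers that picked the first listed option (primacy indicator)."""
    if not answers:
        raise ValueError("no answers given")
    return sum(1 for a in answers if a == 0) / len(answers)
```

Identical answers can also be genuine, so such flags are best treated as indicators of possible satisficing rather than proof of it.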
In areas beyond surveys, where real-world emotions play a role, research findings suggest that using smartphones may have polarization effects, that is, more comments at the extreme poles of opinion. Online reviews written on smartphones have more variance and tend to be more extreme than those written on desktops [Citation19, Citation80, Citation85]. Moreover, smartphones may amplify negative emotions, increasing the intensity of online complaints [Citation156].

Shorter user-generated texts

Several authors concluded from empirical data that user-generated texts created on smartphones, whether answers to open-ended survey questions [Citation78, Citation109, Citation149] or online reviews [Citation19, Citation80, Citation85, Citation157], are shorter than those created on PCs, which they explain by the greater effort required to write longer texts [Citation85, Citation157]. These results alone cannot be clearly linked to lower cognitive effort, as they could stem directly from the greater effort of typing on a smartphone, which lacks a physical keyboard. However, linguistic analyses of texts written on different devices have indicated that Type 2 processing is more common on PCs than on smartphones. Ransbotham et al. [Citation106] found that restaurant reviews written on smartphones contain less “cognitive content” than those written on PCs, where cognitive content was measured “through words reflecting insight, causation, and discrepancy.” Melumad and Meyer [Citation86] reported that tweets written on smartphones have a less “analytical” style than those written on a PC [Citation34, Citation158].

Cognitive performance of reflective Type 2 processing

Studies on device-dependent performance in cognitive decision-making tasks, which typically require Type 2 thinking [Citation125], have reported that scores on general mental ability tests, such as IQ tests, are lower when participants use a smartphone to complete the test [Citation4, Citation69]. However, other studies found no device differences in general mental ability test scores [Citation6, Citation17, Citation140]. Tzur and Fink [Citation142] found evidence that users perform better on cognitive tasks on PCs than on smartphones, but only when the experimental setting imposes a high cognitive load. The interaction between device type and additional cognitive load was evident both for intrinsic cognitive load, varied through task difficulty in a within-subjects design, and for extrinsic cognitive load, manipulated by presenting the information repetitively with additional sentences in the test items.

Other studies that examined deductive reasoning performance (e.g., using tasks that resembled work-related activities and required the application of rules) have likewise produced mixed results, with one reporting differences between devices [Citation114] and another reporting none [Citation47]. Small screens also lower the quality of investors’ judgments when they read firm disclosures on a smartphone screen rather than on a larger PC screen or in a smartphone-adapted version that requires less scrolling [Citation46].

Another finding is that fake news generates more engagement and user interaction on smartphones than on PCs [Citation93]. Other studies have clearly linked whether readers believe fake news to whether they use Type 1 or Type 2 processing [Citation91, Citation101].

Explanations for differences between devices and hypothesis development

Having laid out empirically identified differences between the results of using a smartphone and using a PC, we turn to the underlying reasons for differences in information-processing and decision-making on devices as a basis on which to advance our hypotheses on the effects of devices on Type 1 and Type 2 processing.

One widely accepted theory that explains cognitive performance in the context of differences between devices is the structural characteristics/information processing framework for “psychologically conceptualizing the effect of UIT [unproctored Internet-based testing] device type on assessment scores” [Citation5, p. 1]. The framework explains why scores on Internet-based personality tests are similar for participants who use smartphones and those who use PCs, whereas performance on IQ tests is significantly lower when smartphones are used, with several authors reporting large effect sizes for this difference [Citation4]. Arthur et al. [Citation5] argue that desktops add the least cognitive load to the task itself, notebooks and tablets add a moderate load, and smartphones add the most.

When we compare smartphones and PCs, several differences stand out. A small screen increases the demand on working memory, and higher perceptual speed and acuity are needed to cope with high screen clutter (a large amount of visual information relative to the screen size). The greater cognitive load caused by a small screen and visual clutter on a mobile display lowers, for instance, the accuracy of consumers’ decisions, measured by their consistency with users’ preferences [Citation97]. Another structural characteristic of a device is its interface (e.g., touchscreens and virtual keyboards vs. physical keyboards), which relates to psychomotor skills. Because users carry their smartphones at almost all times, smartphones are used in more diverse situations (higher situation variability) than desktop PCs and laptops are [Citation115]. In their framework, Arthur et al. [Citation5] labeled this factor “permissibility” in the context of personnel assessment tests because it reflects test-takers’ freedom to take a test anytime and anywhere, which increases the potential for distraction and therefore requires greater selective attention to focus on the task [Citation5].

Several studies have used the structural characteristics/information-processing framework to examine the effect of smaller screen size on working memory demands [Citation6] and to test the role of distracting environments [Citation140]. In an experimental study that randomly assigned participants to devices in a laboratory environment, however, not all of the model’s predictions could be confirmed, as the study found no differences in general mental ability scores between devices [Citation6]. Nevertheless, the results suggested that smartphones place higher demands on working memory than PCs do (e.g., because of their smaller screens) [Citation6]. Arthur et al. [Citation5] argued that distractions arising when participants can freely choose where to take a test are a particularly important determinant of lower cognitive performance on smartphones, since laboratory studies, which eliminate the higher demands on selective attention, were less likely to show differences in cognitive performance between smartphones and PCs. Given the finding that device-related differences in cognitive performance occurred primarily when people were allowed to choose their own test environment, Traylor et al. [Citation140] investigated the interaction effect between the device and the environment in which a cognitive test is completed. Their underlying hypothesis was that, when a quiet test environment is not predetermined, participants often choose test situations with potential for distraction.

Following the line of reasoning presented in the structural characteristics/information-processing framework [Citation5], we conclude that distraction and higher cognitive load because of smaller screens and screen clutter would lead to a higher degree of intuitive Type 1 processing since Type 2 processing depends in part on working memory [Citation35, Citation99]. However, such an effect should disappear if apps optimized for display on a mobile device (making the elements more readable, reducing scrolling) are used and the participant is dedicated to completing a task on the smartphone without distractions or multitasking.

Beyond the structural characteristics/information-processing framework [Citation5], other literature streams have discussed additional differences between devices that are relevant to the effects of smartphones on cognitive intuition and reflection. Our research focuses on reasons other than working memory demands for differences between devices: priming effects based on devices’ typical use patterns, priming through touchscreen interaction with smartphones, and self-selection effects.

Priming effect of smartphones and associations with smartphones

In a distracting environment, such as when one uses a smartphone in public or while walking, users are aware that their full concentration is not available, but smartphones may also influence cognition in more subtle ways. The possibility that merely using a smartphone puts users in a different mood or lowers their motivation for cognitive effort, in the sense of a priming effect, is likely to be far less transparent to them than the environment is. Research has not yet addressed whether the device on which one works, or on which a cognitive reflection test is administered, has a priming effect, although a meta-analysis of 118 cognitive reflection studies found, without further theorizing, that solving cognitive reflection tasks on a computer yields more reflective scores than completing them on paper [Citation14]. Moreover, a priming effect of smartphones toward Type 1 processing may also be “ecologically” rational and provide a better accuracy-effort trade-off [Citation42, Citation55] since, for example, searching for further information is more cumbersome on smartphones than on PCs.

Use-based priming effects of smartphones

Liu and Wang [Citation76, p. 447] stated that “one potentially underestimated factor is the nature of the device and the concepts the device represents.” Researchers have studied users’ associations with smartphones to find out which concepts a smartphone represents [Citation63, Citation117], for example, by asking participants to complete word fragments or by using implicit association tests. Kardos et al. [Citation63, p. 84] argued that smartphones can induce different user behaviors through priming, observing that “as an important personal and cultural object, the mobile phone may carry meanings that can be activated by its presence or mere concept, which in turn influences behavior.”

For example, smartphones are often characterized as being used mainly for leisure activities and are often associated with fun, evoking a for-fun mindset. Smartphone users respond faster when fun-related words such as “entertain” are paired with pictures of a smartphone than when non-fun-related words such as “study” and “task” are [Citation117]. We also associate smartphones with relationship-related concepts [Citation63], as smartphones are likely to prime the notion of the close personal relationships that people cultivate with their help. Users’ most frequent smartphone activities reflect this notion: users spend most of their smartphone time on social networking, texting, and telephoning [Citation39]. Furthermore, automated linguistic analyses of large Twitter corpora and TripAdvisor reviews reveal that users refer to friends and family more often when they tweet or write reviews on smartphones than when they use a PC [Citation86]. Online reviews written on smartphones are more emotional and disclose more personal information than those written on PCs [Citation82, Citation85, Citation86, Citation106]. Therefore, smartphones are likely to induce intuitive Type 1 cognitive processing, because social sharing and empathy are positively related to intuitive thinking but negatively related to reflective, deliberative Type 2 processing [Citation16, Citation129].

Conversely, because of their typical work context, PCs and laptops may trigger utilitarian, instrumental, and functional (shopping) associations and a logic-based thinking system that relies less on feelings and impulses [Citation76]. The associations of smartphones with fun and PCs with work heighten the probability that a smartphone user will choose hedonic products over utilitarian products and rate them more positively than a PC user will [Citation117]. At the same time, PCs and laptops are perceived more as utilitarian objects from the work context and should be more readily associated with deeper and more reflective Type 2 thinking. In addition, smartphones have been reported to have a more calming and pacifying effect than PCs due to properties such as being a personal possession and promoting a sense of privacy [Citation87]. Since positive mood is related to intuitive processing, while negative mood leads to reflective processing [Citation27], this characteristic of smartphones may also induce Type 1 processing to a higher extent than PCs.

Smartphones’ touchscreen-based priming effects

Another stream of literature discusses the differences between the use of smartphones and PCs based on their interface modalities, with a focus on touch. The influence of sensorimotor experiences and touch on cognition can be explained in the context of embodied cognition since “the mind must be understood in the context of its relationship to a physical body that interacts with the world” [Citation51, p. 343]. For example, Halali et al. [Citation51] demonstrated that the positive “priming” effect on cognitive control evoked by touching cold objects is similar to that from viewing icy, snowy landscape images.

Research in consumer psychology has focused on the differences between interacting with a smartphone’s touchscreen and interacting with a mouse on a PC [Citation21, Citation145, Citation158]. Brasel and Gips [Citation15, p. 537] posited that customers might be “especially susceptible to biases in their online search and purchasing behavior” on touchscreen devices and are likely to exhibit a “bias toward sensory information over abstract information” and to focus on tangible product attributes in product-related decisions [Citation15, p. 535]. In a study on hotel selection, the relevance customers attributed to their “gut feel and instinct,” relative to information in user reviews, was higher when they used a touchscreen device than when they used a mouse [Citation15]. Wang et al. [Citation145] observed that touch surfaces could reinforce existing opinions about products, polarizing them in both positive and negative directions.

Hypothesis on priming effect

Since we associate smartphones, which are primarily communication devices, with relational concepts [Citation63, Citation117], and since social sharing and empathy are related to intuitive thinking [Citation16, Citation129], we contend that smartphones are more likely than PCs to appeal to intuitive Type 1 processing. Our central hypothesis is that smartphones activate Type 1 cognitive processing not only because of distractions or small screens but also through a priming effect related to their typical use patterns and associations and to the touch interaction they involve [Citation15, Citation21, Citation145, Citation158]. Therefore, we state the following general hypothesis:

Hypothesis 1 (H1): Using a smartphone primes for intuitive Type 1 processing, while using a PC primes for reflective Type 2 processing.

Self-selection effects

Frequent smartphone use and a greater share of daily computing time spent on smartphones rather than PCs correlate positively with intuitive thinking, measured with the cognitive reflection test [Citation11, Citation144]. This correlation could arise because increased smartphone use leads to more intuitive Type 1 thinking, or because people inclined toward Type 1 thinking use their smartphones to reduce cognitive effort, for example, by looking up information in the calendar or asking questions whose answers they could easily remember or learn [Citation11]. In the same vein, previously reported correlations, such as those between frequent smartphone use and various measures of key cognitive abilities like attention, memory, and executive functioning [Citation153], are likely not causal but instead reflect some people’s inclination toward immediate gratification. Thus, self-selection may explain why “cognitive misers” who tend to think intuitively in cognitive tasks (i.e., use Type 1 processing more often than Type 2) also tend to depend on their smartphones in everyday life [Citation11]. As Pennycook et al. [Citation100, p. 430] pointed out, “smartphones may serve as a ‘second brain’ to which those inclined to avoid analytic thought offload their thinking.”

Users who score high in smartphone addiction have also been found to be impulsive and to choose immediate monetary rewards over later rewards, which Tang et al. characterized as a “higher tendency to make irrational decisions” [Citation132, p. 3]. Impulsive individuals have also been found to use the mobile Internet frequently for work and leisure activities and, as measured via eye-tracking, to exhibit attentional patterns consistent with risky security decisions, such as choosing public Wi-Fi networks and considering fewer security-related details on their smartphones [Citation58]. Research in various application domains, such as online shopping, has also found that the mobile smartphone channel attracts different customers or user groups than the PC channel does. Field data on omni-channel use in e-commerce has shown that “impulsive individuals use relatively more mobile devices compared to online devices than do low impulsive individuals” [Citation110, p. 469]. Customers who tend to make spontaneous impulse purchases expect to have more fun shopping on smartphones, whereas customers who value convenience and want to spend less time shopping do not expect to enjoy shopping on a smartphone, probably because searching for products is more challenging and therefore more time-consuming on a smartphone than on a PC [Citation48].

Survey research has identified several demographic characteristics on which participants typically differ depending on the device they select [see, e.g., Citation66]. Discussing survey research that yielded contradictory results on differences between devices, Lugtig and Toepoel [Citation78, p. 92] argued that “the measurement error differences that we find between the devices should not be attributed to the device being used, but rather to the respondents. Those respondents who are likely to respond with more measurement error in surveys are also more likely to use tablets or smartphones to complete questionnaires.” Researchers in personnel testing have noticed that, while some comparison studies have shown apparently clear evidence of lower cognitive performance scores on mobile smartphones than on non-mobile desktops, other lab-based studies have repeatedly found no such differences [Citation5, Citation140]. Traylor et al. [Citation140] suggested self-selection versus random assignment to devices as a likely reason for this inconsistency, and Brown and Grossenbacher [Citation17, p. 68] observed that “once selection bias is eliminated as a threat to validity, we expect that differences between mobile and non-mobile test scores will be much weaker, if not equivalent.”

Field data on differences between smartphone and PC use in e-commerce are rare because such data are difficult to obtain (e.g., doing so requires cooperation with analytics companies) and because real data sets typically rely on users choosing their own device and offer no opportunity to randomize the device treatment [Citation74]. In this context, Lee et al. [Citation74, p. 894] noted that “it is possible that mobile users are fundamentally ‘different’ from PC users, and therefore any results … could be attributable to these unobserved differences.” Our literature review revealed that only 3 of 63 research articles contained both data in which participants selected their devices and a randomized between-subjects design [Citation85, Citation86, Citation156], thus allowing the role of self-selection to be assessed. Beyond these few articles, the research gap persists, because comparing studies with and without self-selection across different papers, as compiled in literature reviews [e.g., Citation5, Citation23], makes it exceedingly difficult to isolate the role of self-selection.

Hypothesis on self-selection effects

Self-selection may play a large role in many of the differences between devices identified in empirical datasets [Citation153], so potential device effects should be investigated independently of device choice. Studies have suggested clear associations between smartphone use and more intuitive cognitive processing when users choose their device [Citation11, Citation144]. Moreover, demographic variables such as gender, for which meta-analytic evidence has identified robust differences in cognitive reflection performance [Citation14], typically differ between device groups when participants select their device [Citation66]. Therefore, we posit:

Hypothesis 2 (H2): The difference between smartphones and PCs in the use of intuitive Type 1 and reflective Type 2 processing is larger when participants self-select their device than when they are randomly assigned to a device.

Research method

Research design

To account for the typical limitations in prior experimental settings, we conducted three consecutive online experiments that differed in their research designs: (1) a between-subjects design with self-selection to groups, (2) a between-subjects design with randomized groups, and (3) a within-subjects design. The studies’ underlying rationale was to have participants answer a cognitive reflection test using either a smartphone or a PC so as to measure the Type 1 and Type 2 processing that occurs when using these devices.

The first between-subjects study used a quasi-experimental design so that we could investigate the effect of the device when participants self-selected a smartphone or a PC. We did not require participants to use either a smartphone or a desktop computer; instead, the questionnaire recorded the device each participant chose to complete it on.

In the second between-subjects study, participants were randomly assigned to use a smartphone or a PC via an email invitation.

In the third study, the device served as a within-subjects factor to allow for more robust comparisons “because each test-taker completes the assessment on both device types and thus can make an intrasubject comparison” [Citation5, p. 6]. The research design was similar to that of Brown and Grossenbacher [Citation17], who used parallel versions of the Wonderlic Test, first testing all participants on a PC and then randomizing PC, smartphone, and tablet for the administration of a second, parallel version of the test. In contrast, we used a fully factorial design with device order and parallel test version order as two counterbalanced between-subjects factors, resulting in four between-subjects experimental groups (). Thus, each participant answered half of the questions on one device and the other half on the other device.
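The counterbalancing logic of the within-subjects study can be illustrated with a short Python sketch. It crosses the two between-subjects factors named above (device order and parallel test version order) into the four experimental groups and shows which device and test version a participant in each group meets in each block. How participants were allocated to the groups is not described here, so the block randomization in the hypothetical `assign_groups` function is an assumption for illustration, not the procedure used in the study.

```python
import random
from itertools import product

# The two counterbalanced between-subjects factors of the within-subjects study.
DEVICE_ORDERS = [("PC", "smartphone"), ("smartphone", "PC")]
VERSION_ORDERS = [("A", "B"), ("B", "A")]

# Fully crossing both factors yields the four experimental groups.
GROUPS = list(product(DEVICE_ORDERS, VERSION_ORDERS))


def session_plan(group: int) -> list[tuple[str, str]]:
    """Return the (device, test version) pair for the first and the second block."""
    device_order, version_order = GROUPS[group]
    return list(zip(device_order, version_order))


def assign_groups(participant_ids: list[str], seed: int = 0) -> dict[str, int]:
    """Hypothetical block randomization: each run of four participants fills every group once."""
    rng = random.Random(seed)
    assignment: dict[str, int] = {}
    block: list[int] = []
    for pid in participant_ids:
        if not block:  # start a new, freshly shuffled block of group indices
            block = list(range(len(GROUPS)))
            rng.shuffle(block)
        assignment[pid] = block.pop()
    return assignment


if __name__ == "__main__":
    for g in range(len(GROUPS)):
        print(g, session_plan(g))
```

Every group answers one version on each device, so device and version are never confounded within a participant, while their order is balanced across groups.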

Because of the many differences between PCs and smartphones, we designed the studies to focus on particular differences between the devices while keeping the influence of other factors low or constant (Table 1).

Table 1. Focus of the studies.

Measurement of constructs

Measurement of the dependent variable: Development of a cognitive reflection test

We needed more cognitive reflection tasks than the traditional 3-item test [Citation38] contains to measure Type 1 and Type 2 cognitive processing reliably [see, e.g., Citation137] and to fulfill the assumptions of the statistical tests used to compare the devices. We adapted 6 of the 7 items from the multiple-choice version of the cognitive reflection test [Citation121] and 12 items from the verbal cognitive reflection test [Citation120], whose questions were inspired by quizzes and brainteasers from the Internet. Instead of the open-text format used in the original cognitive reflection test [Citation38], we chose a multiple-choice format answered via radio buttons. This reduced the influence of psychomotor abilities on the comparison between PCs and smartphones that would otherwise arise from typing on a virtual versus a physical keyboard [Citation68]. Thus, we created multiple-choice response options for the verbal cognitive reflection tasks. To align the response format of the verbal and numerical parts of the questionnaire, we added the response option “Other, please specify” to the multiple-choice options of each numerical item, resulting in five response options for each cognitive reflection task. An example of a verbal cognitive reflection task adapted from Sirota et al. [Citation120] is “How many of each animal did Moses put on the ark?” The incorrect, “intuitive” Type 1 response is 2, while the correct, “reflective” Type 2 response is “Other: None/It was Noah”; the wrong, distracting options were 1 and 7. An example of a numerical task is “Jerry received both the 15th highest and the 15th lowest mark in the class. How many students are in the class?” The incorrect, “intuitive” Type 1 response is 30 students, while the correct, “reflective” Type 2 response is 29 students; the wrong, distracting options were 1 student and 15 students.

To measure Type 2 processing, we employed the standard scoring technique based on the number of correct (reflective) responses, expressed as a percentage of all 18 items for ease of interpretation [Citation99]. We measured intuitive (Type 1) processing using the percentage of intuitive responses, which is also a common approach when scoring cognitive reflection tests [e.g., Citation16, Citation121].
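The two scoring rules can be made concrete with a minimal Python sketch that uses the two example tasks quoted above. The names `CRTItem` and `score_crt` and the exact option labels are illustrative assumptions; the sketch only assumes that each task has one reflective (correct) option and one intuitive (lure) option, as described in the text, and that every other choice counts as an other incorrect response.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CRTItem:
    """One cognitive reflection task with its two key response options."""
    reflective: str  # correct answer, indicating Type 2 processing
    intuitive: str   # intuitive lure, indicating Type 1 processing


def score_crt(items: list[CRTItem], responses: list[str]) -> dict[str, float]:
    """Return reflective, intuitive, and other incorrect responses as percentages of all items."""
    if not items or len(items) != len(responses):
        raise ValueError("expected exactly one response per item")
    n = len(items)
    reflective = sum(r == item.reflective for item, r in zip(items, responses))
    intuitive = sum(r == item.intuitive for item, r in zip(items, responses))
    other = n - reflective - intuitive  # distractors and free-text answers
    return {
        "reflective_pct": 100 * reflective / n,
        "intuitive_pct": 100 * intuitive / n,
        "other_pct": 100 * other / n,
    }


# The two example tasks quoted in the text (option labels are illustrative).
MOSES = CRTItem(reflective="Other: None/It was Noah", intuitive="2")
JERRY = CRTItem(reflective="29", intuitive="30")

if __name__ == "__main__":
    print(score_crt([MOSES, JERRY], ["2", "29"]))
```

The responses “7” and “30” yield 0 percent reflective and 50 percent intuitive responses, whereas “2” and “30” yield the same reflective score but 100 percent intuitive responses, which illustrates why the reflective score alone cannot separate intuitive answers from other incorrect ones.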

The test items were translated into German, the participants’ native language, and proofread by two study assistants. For the within-subjects design, we constructed two parallel test versions, A and B, each containing half of the 18 items, based on 467 data points for each cognitive reflection item. Each parallel version included three adapted items from the numerical multiple-choice version of the cognitive reflection test [Citation121, p. 2511] and six items from the verbal cognitive reflection test [Citation120], for a total of nine items. We ensured that the two versions were approximately equally difficult for both reflective responses (version A: 25 percent, version B: 27 percent) and intuitive responses (version A: 58 percent, version B: 60 percent). The internal consistency of the 18 cognitive reflection test items, shown in Table 2, demonstrates adequate reliability [Citation44].
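Cronbach’s alpha, the coefficient reported in Table 2, compares the sum of the item variances with the variance of the total score: alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores) for k items. The following Python sketch computes it from a participants × items matrix; coding each item as 1 for a reflective response and 0 otherwise is an assumption for illustration, and the toy data below are invented, not taken from the study. For such dichotomous items, the coefficient is equivalent to KR-20.

```python
from statistics import variance


def cronbach_alpha(rows: list[list[float]]) -> float:
    """Cronbach's alpha for a participants x items score matrix (one row per participant)."""
    if len(rows) < 2:
        raise ValueError("need at least two participants")
    k = len(rows[0])
    if k < 2:
        raise ValueError("need at least two items")
    # Sample variance of each item (column) and of each participant's total score.
    item_variances = [variance(column) for column in zip(*rows)]
    total_variance = variance([sum(row) for row in rows])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


if __name__ == "__main__":
    # Invented toy data: 4 participants x 3 items, 1 = reflective response.
    toy = [[1, 1, 0], [1, 0, 0], [0, 0, 0], [1, 1, 1]]
    print(round(cronbach_alpha(toy), 3))
```

Using the sample variance for both the items and the total keeps the ratio consistent; mixing sample and population variances would bias the coefficient.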

Table 2. Cronbach’s alpha coefficient for the cognitive reflection tasks.

Visual design of the cognitive reflection tasks

In choosing the questionnaire’s visual design, an important goal was that the choice tasks in the online questionnaire look the same on both devices, regardless of screen size. We chose a survey layout for smartphones and mobile devices provided by soscisurvey.com, which is optimized for presenting questionnaires on small screens and displays questions in appropriately large, easy-to-read fonts. This layout limits the questionnaire’s width to 310 px on every device, so larger screens are not fully used and the display on a PC resembles a smartphone screen. This design choice made the visual displays more comparable and reduced the influence of confounding variables related to readability (Figure 3). Because layout may influence information processing and readers’ attitudes toward the material [Citation31, Citation46, Citation64, Citation79, Citation92, Citation97], keeping the design constant across experimental groups was necessary to avoid confounding such effects with the pure priming effect of the device.

Figure 3. Visualization of a cognitive reflection task on both devices: Desktop (left) and smartphone (right).

Since the questionnaire was optimized for mobile phones, all information was visible on one screen; as a result, participants did not have to hold several screens in working memory or scroll to answer a question. We ensured that the short question stems and answers were easily readable even on small devices and that the font size was nearly identical across devices at typical default screen resolutions.

Procedure

The cognitive reflection test was not proctored, but participants were asked to read and sign a consent form before answering the questionnaire. Before and after the cognitive reflection tasks and between the parallel test versions, we used filler tasks unrelated to the cognitive reflection tasks. To limit order effects, participants could not move back and forth between cognitive reflection tasks but had to answer them one after the other in a pre-specified order, which was the same for all participants. Thus, all participants answered the same 18 cognitive reflection tasks in the same sequence, alternating between verbal and numerical tasks. However, the order of the response options was randomized for each participant and recorded for use as a control variable.
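The combination of a fixed item sequence with per-participant shuffling of the response options can be sketched as follows. The seeded generator, the function names, and the placeholder item `v1` are hypothetical; the Jerry item uses the five response options listed in the measurement section. Recording the screen position of an option, for example the intuitive lure, yields the kind of control variable described in the text.

```python
import random


def present_items(
    item_order: list[str],
    options: dict[str, list[str]],
    participant_seed: int,
) -> list[tuple[str, list[str]]]:
    """Keep the item sequence fixed but shuffle each item's response options per participant."""
    rng = random.Random(participant_seed)
    presented: list[tuple[str, list[str]]] = []
    for item_id in item_order:  # same pre-specified order for every participant
        shuffled = list(options[item_id])  # copy so the master option list stays untouched
        rng.shuffle(shuffled)
        presented.append((item_id, shuffled))
    return presented


def option_position(presented: list[tuple[str, list[str]]], item_id: str, option: str) -> int:
    """1-based screen position of an option, e.g. the intuitive lure, for use as a control variable."""
    for pid, opts in presented:
        if pid == item_id:
            return opts.index(option) + 1
    raise KeyError(item_id)


if __name__ == "__main__":
    opts = {
        "jerry": ["29", "30", "1", "15", "Other, please specify"],
        "v1": ["a", "b", "c", "d", "e"],  # placeholder item
    }
    shown = present_items(["jerry", "v1"], opts, participant_seed=42)
    print(shown, option_position(shown, "jerry", "30"))
```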

Measurement of the independent variable: Device

As the primary independent variable in our study was the type of device, we recorded the device that participants used to complete the online questionnaires. In the randomized between-subjects study, the questionnaire first checked the assigned device based on the link the participant used to open it, and the survey could be continued only if the participant was answering it on the correct device. In the within-subjects study, it was essential to verify that participants followed the procedure and answered each part of the questionnaire on the correct device.
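The device check in the randomized study can be sketched as a comparison between the device encoded in the invitation link and the device type detected by the survey platform. The query parameter `device`, the example URLs, and the function name are hypothetical; the detected labels follow the device categories SoSci Survey records (e.g., “computer” and “mobile phone”), but how the platform exposes them to a questionnaire is not described here.

```python
from urllib.parse import parse_qs, urlparse

# Hypothetical mapping from the device named in the invitation link to the
# device-type label recorded by the survey platform.
EXPECTED_PLATFORM_TYPE = {"smartphone": "mobile phone", "pc": "computer"}


def may_continue(invitation_url: str, detected_type: str) -> bool:
    """Allow the participant to proceed only on the device assigned via the link."""
    query = parse_qs(urlparse(invitation_url).query)
    assigned = query.get("device", [""])[0].lower()
    expected = EXPECTED_PLATFORM_TYPE.get(assigned)
    # A missing or unknown assignment never passes the check.
    return expected is not None and detected_type.strip().lower() == expected


if __name__ == "__main__":
    print(may_continue("https://example.org/survey?device=smartphone", "mobile phone"))
```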

All three studies accessed device-specific information stored by the questionnaire platform, SoSci Survey, such as the type of device (computer, TV device, tablet, mobile phone, unknown) and display information (width × height), as presented in Table 3.

Table 3. Display size of smartphone and PC screens.

In line with Arthur et al. [Citation5], we argue that desktops’ characteristics are so similar to those of laptops and notebooks that similar results can be expected in cognitive testing. Therefore, we used a binary variable (PC vs. smartphone) to distinguish between devices. The section “Sample description” details how we used data on the interaction mode each user employed to distinguish the various types of devices in the PC category.
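The binary coding can be expressed as a small mapping from the platform’s device-type labels to the PC/smartphone variable. This is a minimal sketch under the assumption that “computer” maps to PC and “mobile phone” to smartphone, with tablets, TV devices, and unknown devices left uncoded; the study’s actual handling of these cases, which draws on the interaction-mode data, is described in its “Sample description” section and may differ.

```python
from enum import Enum
from typing import Optional


class Device(Enum):
    PC = 0          # desktops, laptops, and notebooks
    SMARTPHONE = 1


# Assumed mapping; tablets, TV devices, and unknown devices stay uncoded (None).
PLATFORM_TO_DEVICE = {"computer": Device.PC, "mobile phone": Device.SMARTPHONE}


def code_device(platform_type: str) -> Optional[Device]:
    """Map a platform device-type label to the binary device variable."""
    return PLATFORM_TO_DEVICE.get(platform_type.strip().lower())


def device_dummy(platform_type: str) -> Optional[int]:
    """0 = PC, 1 = smartphone, None = not codable as either."""
    device = code_device(platform_type)
    return None if device is None else device.value


if __name__ == "__main__":
    for label in ["computer", "mobile phone", "tablet"]:
        print(label, device_dummy(label))
```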

Measurement of control variables in the questionnaire

User interaction mode with device

In addition to device-specific variables stored by the questionnaire platform, the survey included self-reported questions about which input device(s) participants used to answer the questionnaire: mouse, touchscreen, touchpad, pen, keyboard, and an open-text field for other input devices. Because we could not prevent participants from switching to landscape orientation, we asked them whether they had rotated their screens while completing the questionnaire so that we could interpret the screen-size variables correctly.

Environmental context and distraction

Our research design aimed to keep distraction similar across device groups, so we also asked participants to report possible distractions caused by the environment while they completed the questionnaire. Because completing the questionnaire in a public or private environment could affect participants’ responses, we asked them to select “at home” or to enter a different location in a free-text box [Citation135, Citation142]. We also asked participants to report the number of activities they carried out while answering the questionnaire, such as checking emails, watching TV, or eating and drinking.

Device usability

In the within-subjects study, we measured comfort with device interaction twice, once for the smartphone and once for the PC. We used 7 of the original 13 items of the device assessment questionnaire from Douglas et al. [Citation29], which was designed to evaluate subjective perceptions of comfort and the ease of a device’s interaction with input devices like a touchpad, a mouse, or a touchscreen. All items were measured on 5-point scales with various verbal anchors (e.g., “The physical effort required for operation was (1: too low - 5: too high).”) We recoded three items so higher scores for all items represented more comfort, and we calculated an average score for comfort with the device’s interaction with the input device. The Cronbach’s alpha for the instrument was .86 for smartphones and .83 for PCs.
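The recoding step amounts to mirroring each reversed item on the 5-point scale (new score = 6 - old score) before averaging. The Python sketch below assumes, purely for illustration, that the first three of the seven items are the reversed ones; which items were reversed is not stated here, and the function names are hypothetical.

```python
SCALE_MIN, SCALE_MAX = 1, 5


def reverse_code(score: int) -> int:
    """Mirror a score on the 1-5 scale (1 <-> 5, 2 <-> 4, 3 stays 3)."""
    return SCALE_MIN + SCALE_MAX - score


def comfort_score(responses: list[int], reversed_items: set[int]) -> float:
    """Mean comfort after reverse-coding the items at the given 0-based positions."""
    if not responses:
        raise ValueError("no responses")
    for r in responses:
        if not SCALE_MIN <= r <= SCALE_MAX:
            raise ValueError(f"response {r} is outside the {SCALE_MIN}-{SCALE_MAX} scale")
    recoded = [reverse_code(r) if i in reversed_items else r for i, r in enumerate(responses)]
    return sum(recoded) / len(recoded)


# Hypothetical: items 1-3 (positions 0-2) are the reversed ones.
REVERSED = {0, 1, 2}

if __name__ == "__main__":
    print(comfort_score([1, 2, 3, 4, 4, 4, 4], REVERSED))  # 4.0
```

After recoding, a higher average consistently means more comfort, so the score can be compared directly between the smartphone and PC measurements.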

Typing test

To control for ease of typing on the devices, we developed a typing test for the within-subjects study that was administered twice, once on the smartphone and once on the PC. We asked participants to enter a selection of ten words as quickly and as accurately as possible: the five most common nouns and the five most common verbs in German, the participants’ native language [Citation29].
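How the typing test was scored is not specified in this section. One plausible scoring, sketched below as an assumption, records the share of target words typed exactly and the number of correctly typed words per minute; the comparison is case-sensitive because capitalization is part of German spelling, and surrounding whitespace is ignored. The function name and the example words are placeholders, not the words used in the study.

```python
def typing_score(targets: list[str], typed: list[str], seconds: float) -> dict[str, float]:
    """Accuracy and correctly typed words per minute for one typing-test run.

    Missing entries count as errors because accuracy is relative to all target words.
    """
    if not targets:
        raise ValueError("no target words")
    if seconds <= 0:
        raise ValueError("duration must be positive")
    # Case-sensitive exact match after trimming surrounding whitespace.
    correct = sum(t == w.strip() for t, w in zip(targets, typed))
    return {
        "accuracy": correct / len(targets),
        "correct_per_minute": correct * 60 / seconds,
    }


if __name__ == "__main__":
    # Placeholder words; "Gehen" is wrong because of its capital letter.
    print(typing_score(["Haus", "gehen"], ["Haus ", "Gehen"], seconds=30))
```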

Demographics

We collected the participants’ demographic data for gender, age, level of education, current field of study (as all participants were students), and, if applicable, current employment area, working hours, and job title.

Rational and intuitive decision styles

To measure participants’ predisposition toward using Type 1 or Type 2 processing, we used the rational and intuitive decision styles scale [Citation52], with five items for each decision style, answered on a 5-point Likert scale (from “strongly disagree” to “strongly agree”). For example, for the rational scale, we used the item “Investigating the facts is an important part of my decision-making process,” and for the intuitive scale, we used the item “When making decisions, I rely mainly on my gut feelings.” Reliability analysis demonstrated internal consistency for the rational and intuitive decision styles scales in our dataset (Cronbach’s α = 0.86 and α = 0.82 in the between-subjects sample with self-selection; α = 0.82 and α = 0.78 in the randomized between-subjects sample; and α = 0.88 and α = 0.85 in the within-subjects sample).

Online Appendices B and C provide additional analyses with the control variables.

Operational hypotheses

Operational hypothesis: Choice between intuitive and reflective answers

We derived operational hypotheses from our general hypothesis, H1. Because our study used a cognitive reflection test, our operational hypotheses rely on two typical scoring techniques for cognitive reflection tasks: the number of intuitive (incorrect) responses and the number of reflective (correct) responses [Citation100]. The intuitive score primarily measures the "lack of willingness or ability to engage in analytic reasoning to question the default answer" [Citation100, p. 346] and the "trust or faith that a person has in his or her 'gut feelings,'" while the reflective score serves as a proxy for the "ability to reflect upon and ultimately override the intuitive responses" [Citation100, p. 342]. Although the intuitive and reflective scores are structurally interdependent, because the answer options are mutually exclusive and therefore negatively correlated [Citation16, Citation99], we report results for both measures as dependent variables, since the reflective score alone does not distinguish between intuitive and other incorrect responses. Our first operational hypothesis proposes that, if smartphones lead to (intuitive) Type 1 thinking, the share of intuitive responses relative to other responses should increase, so intuitive responses should be chosen significantly more often on a smartphone than on a PC (H1a). Conversely, using a PC is expected to trigger more (reflective) Type 2 processing than using a smartphone does (H1b).

H1a/b (operational): Using a smartphone leads to more intuitive answers (H1a) and fewer reflective answers (H1b) than using a PC.
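The two scoring techniques can be made concrete with a short sketch that labels each answer as reflective, intuitive, or other incorrect and converts the counts into percentages. The three items are the classic tasks from Frederick's original cognitive reflection test and serve only as an illustration; the items used in this study may differ, and the `Task` class and `scores` function are hypothetical names.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass(frozen=True)
class Task:
    reflective: str  # correct answer, reached by overriding the default
    intuitive: str   # tempting "default" answer that is incorrect

def classify(task: Task, answer: str) -> str:
    """Label one answer as 'reflective', 'intuitive', or 'other' (any other incorrect answer)."""
    if answer == task.reflective:
        return "reflective"
    if answer == task.intuitive:
        return "intuitive"
    return "other"

def scores(tasks: list[Task], answers: list[str]) -> dict[str, float]:
    """Percentages of reflective, intuitive, and other answers for one participant."""
    labels = [classify(t, a) for t, a in zip(tasks, answers)]
    n = len(labels)
    return {label: 100 * labels.count(label) / n
            for label in ("reflective", "intuitive", "other")}

# Classic items, for illustration only (answers in cents, minutes, and days)
CRT = [Task("5", "10"),    # bat and ball
       Task("5", "100"),   # widgets
       Task("47", "24")]   # lily pads

print(scores(CRT, ["5", "100", "30"]))
```

Because the three categories are mutually exclusive, the percentages always sum to 100. This is why the intuitive and reflective scores are structurally interdependent, and why the "other" category is needed to tell them apart.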

Operational hypothesis: Response times for intuitive answers

In the time before an answer for a cognitive reflection task is chosen and submitted in an online test, several information processing steps take place: perceiving the stimulus, making the decision, choosing an answer option, and entering the answer [Citation5].

Although the dichotomy of dual processing types is often described as thinking "fast or slow" [Citation26, Citation62], Type 2 processing cannot be measured directly in terms of longer response times [Citation7, Citation128, Citation139]. Still, response times can offer valuable insights for interpreting answer choices. Moreover, speeded tasks are a typical experimental manipulation for disabling Type 2 processing and ensuring that decisions are based on Type 1 processing [Citation36]. Evans and Stanovich [Citation36, p. 225] describe the dichotomy of "fast" versus "slow" as a "typical correlate" of Type 1 versus Type 2 processing but not as a "defining feature." Therefore, we extend H1a, which proposes that more intuitive answers will be given on a smartphone, by considering the response times of these intuitive responses in H1c. Short response times for incorrect answers in cognitive reflection tests result from either a "detection failure" or a lack of "mindware," such as mathematical skills [Citation125]. When response options other than the intuitive and reflective responses are available, we believe that participants who lack the relevant mindware are more likely to select one of these other incorrect responses than to settle on the intuitive response. Thus, fast, intuitive responses should primarily reflect a detection failure and an absence of Type 2 processing.

Although Type 1 processing does not inevitably lead to an intuitive answer [Citation131] (e.g., when the answer to a cognitive reflection task is already known and can be retrieved from memory without cognitive effort), we argue that fast, intuitive answers indicate miserly processing better than the choice of answer alone does. Since the response time for an intuitive response can indicate whether it was actually caused by Type 1 thinking, we hypothesize the following:

H1c (operational): Using a smartphone leads to shorter response times for intuitive answers than using a PC.

We do not posit a device-dependent hypothesis about response times for reflective responses because these times are likely to be driven by factors that are not directly related to smartphone use. A very short response time could reflect guessing or prior knowledge of a reflective task, and a very long response time could reflect task interruption or greater effort, depending on the individual's mindware [Citation125].

Operational hypothesis: Self-selection

H2 proposes that the difference in using Type 1 intuitive processing and Type 2 reflective processing between smartphones and PCs is more pronounced when participants choose the device themselves. To test this hypothesis, we compared the results of the between-subjects design with self-selection with those of the other two research designs in the study, as summarized in operational H2:

H2 (operational): The difference in the use of Type 1 and Type 2 processing between smartphones and PCs is more pronounced in the between-subjects design with self-selection than in the between-subjects design with randomization and the within-subjects design.

Sample selection and study invitation

In line with other studies, such as Tzur and Fink [Citation142, p. 6], we used student participants to increase "control over individual differences in cognitive expertise." Three relatively homogeneous samples drawn from the same population of students at a German-speaking university facilitate the comparison of cognitive test scores. For the between-subjects study with self-selection, we sent study invitations through the university's internal email service, and participants were entered into a prize drawing with five chances to win €50. To ensure sufficient motivation in the between-subjects study with randomization and the within-subjects study, we recruited undergraduate business students by offering 5 percent course credit in an information systems course for participation. Potential participants were informed that their performance would not affect their course credit because questionnaire responses would be anonymous. We offered no further incentives for correct answers in the cognitive reflection test because such incentives have been reported to encourage cheating in unproctored online cognitive reflection tests [Citation77] and to distort answer times [Citation33].

We asked participants not to rotate the smartphone into the horizontal (landscape) position, to avoid confounding variation in screen orientation. (See, e.g., Sanchez and Branaghan [Citation114] for the effect of screen orientation on reasoning performance.)

We used a block-structured randomization procedure for the second between-subjects study. We invited by email 297 students who were enrolled in an introduction to information systems course to participate in the study. We crafted two emails, one instructing recipients to answer the questionnaire on a smartphone (in vertical format) and the other instructing them to answer it on a PC (or laptop/notebook). Each email contained a unique questionnaire link for its device condition. To ensure that each device condition would be answered by an equal proportion of men and women, we grouped the students' email addresses by gender prior to randomization.
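Grouping addresses by gender before randomizing amounts to randomizing within gender strata. A minimal sketch of one way to do this is shown below; the text does not describe the exact algorithm, so shuffling within each stratum and then alternating conditions is an assumption, and `assign_devices` is a hypothetical name.

```python
from __future__ import annotations
import random

def assign_devices(emails_by_gender: dict[str, list[str]],
                   seed: int | None = None) -> dict[str, str]:
    """Assign each address to 'smartphone' or 'pc', balanced within each gender stratum."""
    rng = random.Random(seed)
    assignment: dict[str, str] = {}
    for stratum, emails in emails_by_gender.items():
        shuffled = list(emails)
        rng.shuffle(shuffled)            # random order within the stratum
        conditions = ["smartphone", "pc"]
        rng.shuffle(conditions)          # random start, so odd-sized strata are not biased
        for i, email in enumerate(shuffled):
            # alternating conditions keeps the split within 1 person per stratum
            assignment[email] = conditions[i % 2]
    return assignment
```

Alternating after a shuffle guarantees near-equal group sizes within each stratum, which simple coin-flip assignment would not.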

In the within-subjects design, we provided two links to the questionnaire and asked participants to open one link on a computer and the other on a smartphone. To link the two questionnaires, a random number was generated each time a questionnaire web page was called, and respondents entered this number on the other device. The procedure worked well, as shown by the small number of questionnaires that had to be excluded because instructions were not followed (3 cases).
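The linking step can be sketched as a lookup from the number generated on one device to the number typed into the other. The record fields (`device`, `generated_id`, `entered_id`) and the function name are hypothetical, and the text does not say whether numbers were entered in one or both directions; the sketch handles either case.

```python
from __future__ import annotations

def link_questionnaires(records: list[dict]) -> tuple[list[tuple[dict, dict]], list[dict]]:
    """Pair PC and smartphone questionnaires via the random number shown on one
    device and typed into the other. Returns (linked pairs, unlinked records)."""
    by_generated = {r["generated_id"]: r for r in records}
    pairs: list[tuple[dict, dict]] = []
    linked_ids: set = set()
    for r in records:
        partner = by_generated.get(r.get("entered_id"))
        if partner is None or partner is r:
            continue  # no valid number entered on this device
        if {r["device"], partner["device"]} != {"pc", "smartphone"}:
            continue  # e.g., both parts completed on a PC
        if r["generated_id"] in linked_ids or partner["generated_id"] in linked_ids:
            continue  # each questionnaire belongs to at most one pair
        linked_ids.update((r["generated_id"], partner["generated_id"]))
        pairs.append((r, partner))
    unlinked = [r for r in records if r["generated_id"] not in linked_ids]
    return pairs, unlinked
```

Unlinked records correspond to the exclusion cases described in the sample description: questionnaires completed on only one device, with an incorrect identification number, or with both parts on a PC.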

Sample description

We excluded nine participants from the between-subjects dataset with self-selection and four from the randomized between-subjects dataset because they did not complete the questionnaire in a quiet environment (at home or in a learning facility at the university) but instead did so on a bus or train or while walking. In the randomized between-subjects design, we excluded thirteen participants because they indicated that they had taken part in the study in an earlier semester. In the within-subjects dataset, we also excluded 22 questionnaires that were completed on only one device, in which participants did not enter correct identification numbers, or whose two parts were both completed on a PC (3 cases). We excluded all questionnaires completed on end devices in the PC category if the recorded height was larger than the width (7 in the between-subjects design with self-selection, 6 in the randomized between-subjects design, 3 in the within-subjects design), as such rare devices blurred the line between a smartphone and a PC. Similarly, we discarded questionnaires from participants who indicated that they had rotated the screen (3 in the between-subjects design with self-selection, 2 in the randomized between-subjects design, 1 in the within-subjects design). Thus, we ensured that all smartphone screens were used in vertical orientation and all PC screens in horizontal orientation. The final between-subjects sample with self-selection consisted of 342 students (125 men, 215 women, and 2 other; mean age 22.79 years, range 18 to 39 years), the randomized between-subjects sample consisted of 171 students (90 men and 81 women; mean age 22.36 years, range 18 to 32 years), and the within-subjects sample consisted of 310 students (146 men, 159 women, and 5 unknown; mean age 21.77 years, range 19 to 43 years), as summarized in Table 4. Table 5 shows that, in the within-subjects sample, the order in which the devices were used and the order of the parallel test versions were balanced.
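The two orientation-based exclusion rules can be expressed as a single filter. This is a sketch under stated assumptions: the parameter names are hypothetical, and the text does not say whether the recorded dimensions refer to the physical screen or the browser window.

```python
def keep_response(device_category: str, width: int, height: int,
                  reported_rotation: bool) -> bool:
    """Apply the orientation-based exclusion rules to one questionnaire."""
    if reported_rotation:
        return False  # participant reported rotating the screen
    if device_category == "pc" and height > width:
        return False  # PC-category device with a portrait-shaped screen
    return True
```

Smartphones are checked only through the self-reported rotation, mirroring the description above; recorded dimensions are used only to screen out portrait-shaped PC-category devices.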

Table 4. Sample size and place of execution.

Table 5. Sample distribution of experimental groups in the within-subjects design.

We conducted an outlier analysis before analyzing the relationship between device and response time because some time values (e.g., 6,219 seconds) could only have been caused by a prolonged interruption, not by extended contemplation of the task. Using the standardized z-values of the individual recorded times and the strict criterion -1.96 ≤ z ≤ 1.96, we excluded as outliers 135 of 6,457 time recordings (2 percent) in the between-subjects design with self-selection, 91 of 2,990 time recordings (3 percent) in the randomized between-subjects design, and 148 of 5,480 time recordings (3 percent) in the within-subjects design. We identified outliers separately for wrong, reflective, and intuitive answers because their response times differ systematically.
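The exclusion rule can be sketched as a z-score filter applied separately to each answer type. This is a minimal illustration that assumes times are pooled within each answer type only (not, for example, per task or per device) and standardized with the sample standard deviation; `drop_outliers` is a hypothetical name.

```python
from __future__ import annotations
from statistics import mean, stdev

def drop_outliers(times_by_type: dict[str, list[float]],
                  z_crit: float = 1.96) -> dict[str, list[float]]:
    """Keep response times whose z-value lies within [-z_crit, z_crit], per answer type."""
    kept: dict[str, list[float]] = {}
    for answer_type, times in times_by_type.items():
        m, s = mean(times), stdev(times)  # sample standard deviation
        if s == 0:
            kept[answer_type] = list(times)  # no spread, nothing to flag
            continue
        kept[answer_type] = [t for t in times if -z_crit <= (t - m) / s <= z_crit]
    return kept
```

A z-based rule needs enough observations per group: with the sample standard deviation, no value can exceed |z| = (n - 1)/√n, so with four or fewer recordings no time could ever be flagged at 1.96.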

Results

Choice between reflective and intuitive answers

We used analysis of variance (ANOVA) to examine the data collected on the participant level and linear mixed models to analyze the data on the item level.

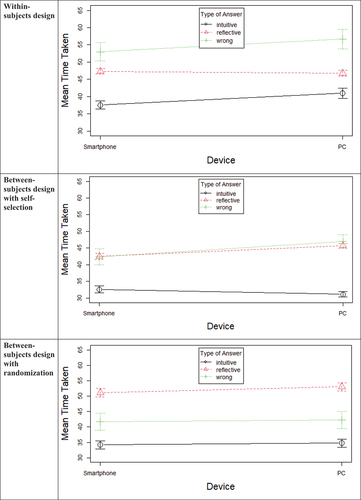

Within-subjects dataset

Data for the within-subjects sample were analyzed with mixed-design ANOVAs using the GLM module of SPSS 25, with device (PC, smartphone) as the within-subjects factor and two between-subjects control factors with two levels each (parallel test version: version A on the PC and version B on the smartphone vs. version B on the PC and version A on the smartphone; device order: PC first and smartphone second vs. smartphone first and PC second). The percentage of intuitive answers and the percentage of reflective answers were used as dependent variables in separate ANOVAs. Participants solved 59.50 percent (SD = 24.46) of tasks correctly on the PC and 59.18 percent (SD = 24.40) on the smartphone, while the incorrect intuitive answer option was chosen 28.42 percent (SD = 20.21) of the time on the PC and 28.71 percent (SD = 24.40) of the time on the smartphone. The strikingly similar solution percentages demonstrate that the test's parallel versions were of comparable difficulty. presents the mean choice of answer options across devices separately for each parallel test version per device, while controlling for gender.

Both the within-subjects effect of device for intuitive answers, F(1,307) = .92, p = .761, and the within-subjects effect of device for reflective answers, F(1,307) = .077, p = .782, were non-significant. Table 6, which provides detailed statistics, shows that neither a between-subjects effect nor any interaction effect was significant (all p > .162). However, the intuitive decision style was a significant predictor of the percentage of reflective answers and a marginally significant predictor of the percentage of intuitive answers, and gender was a significant predictor of the percentage of intuitive answers.

Table 6. ANOVA results of the within-subjects design dataset.

Between-subjects dataset (self-selection)

In the first model for the between-subjects sample, two between-subjects ANOVAs were calculated, one with the percentage of intuitive answers and the other with the percentage of reflective answers as the dependent variable, both with device (PC, smartphone) as the independent variable. Table 7 shows that, without any control variables, the device's effect on reflective responses was significant, F(1,340) = 8.46, p = .004, with a small effect size (η2 = .024). Participants in the smartphone group correctly solved an average of 52.74 percent (SD = 24.95) of the tasks, while those in the PC group solved 60.36 percent (SD = 22.83). In addition, participants in the smartphone group selected the intuitive response options more often (34.16 percent, SD = 19.35) than participants in the PC group did (28.58 percent, SD = 18.21), F(1,340) = 7.30, p = .007, η2 = .021, again a small effect.
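For a one-way ANOVA, eta squared can be recovered from the reported F statistic and its degrees of freedom. Since F = (SS_between/df_effect)/(SS_within/df_error) and η² = SS_between/(SS_between + SS_within), it follows that η² = F·df_effect/(F·df_effect + df_error). The short sketch below (a hypothetical helper, not part of the authors' SPSS output) lets readers check the reported values.

```python
def eta_squared_from_f(f: float, df_effect: int, df_error: int) -> float:
    """Eta squared for a one-way ANOVA effect, recovered from its F statistic."""
    return (f * df_effect) / (f * df_effect + df_error)

print(round(eta_squared_from_f(8.46, 1, 340), 3))  # 0.024 (reflective answers)
print(round(eta_squared_from_f(7.30, 1, 340), 3))  # 0.021 (intuitive answers)
```

In a one-way design, eta squared and partial eta squared coincide, so the same formula applies to either label.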

Table 7. ANOVA results of the between-subjects design with self-selection dataset.