?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

We introduce a new class of nonlinear autoregressive models from their representation as linear autoregressive models with time-varying coefficients. The parameter updating scheme is subsequently based on the score of the predictive likelihood function at each point in time. We study in detail the information theoretic optimality properties of this updating scheme and establish the asymptotic theory for the maximum likelihood estimator of the static parameters of the model. We compare the dynamic properties of the new model with those of well-known nonlinear dynamic models such as the threshold and smooth transition autoregressive models. Finally, we study the model’s performance in a Monte Carlo study and in an empirical out-of-sample forecasting analysis for U.S. macroeconomic time series.

1. Introduction

Many empirically relevant phenomena in fields such as biology, medicine, engineering, finance and economics exhibit nonlinear dynamics; see the discussion in Teräsvirta et al. (Citation2010). In economics, for example, economic agents typically interact nonlinearly as implied by capital or capacity constraints, asymmetric information problems, and habit formation. Various nonlinear dynamic models have been proposed in the literature to describe such phenomena. Important examples include the threshold AR (TAR) model of Tong (Citation1983) and the smooth transition AR (STAR) model of Chan and Tong (Citation1986) and Teräsvirta (Citation1994).

Consider a nonlinear AR model with additive innovations of the form

(1)

(1)

for an observed process

and a sequence of zero-mean independent innovations

with density

where ψ is a function of the vector

We allow the data generating process (DGP) for

to be general and potentially nonparametric in nature. In particular, we only impose high-level conditions on

such as strict stationarity, ergodicity and bounded moments. We then focus on how to best ‘fit’ a potentially misspecified dynamic parametric model to the observed data

where T denotes the sample size. The statistical model thus adopts a specific parametric and possibly misspecified functional form for ψ. This approach of allowing for a discrepancy between the DGP and the statistical model follows the literature on misspecified parametric models dating back to White (Citation1980, Citation1981, Citation1982) and Domowitz and White (Citation1982), and also including the work of Maasoumi (Citation1990) on the effects of misspecification for forecasting based on econometric models.

Given that the model is allowed to be misspecified from the outset, our main focus lies on finding good formulations for the parametric dynamic model that we use to ‘fit’ the data. We argue that despite the current general setting for the DGP, we still find some (misspecified) parametric models more suitable than others. In order to formulate our argument, we first note that (1) admits an autoregressive representation with time-varying autoregressive coefficient,

(2)

(2)

where ft is the time-varying autoregressive parameter, which can be written as a measurable function

of the infinite past

We use the representations in (Equation1

(1)

(1) ) and (Equation2

(2)

(2) ) interchangeably by setting

The parameter ft in (Equation2

(2)

(2) ) implies the autoregressive function ψ and vice-versa. We then use the representation in (Equation2

(2)

(2) ) and appeal to the results in Blasques et al. (Citation2015) to obtain a parametric functional form for our model that is locally optimal in an information theoretic sense.

While the nonlinear autoregressive representation in (Equation1(1)

(1) ) is more commonly used, the time-varying parameter representation in (Equation2

(2)

(2) ) has the advantage of revealing the changing dependence in the data more clearly through the time-varying parameter ft. For example, in econometric applications, major economic events such as the burst of the dotcom bubble in 2000, the 2008 global financial crisis, or the 2010–2011 European sovereign debt crisis can lead to temporary changes in the dependence structure of economic time series and thus lead to time-variation in the coefficients of standard linear time series models. The representation in (2) reveals these changes directly.

Some earlier contributions have also considered time-varying parameters in (vector) autoregressive models. Doan et al. (Citation1984) explored the estimation of time-varying coefficients in AR models via the model’s representation in state space form and the application of the Kalman filter. More elaborate Markov chain Monte Carlo methods were explored by, for instance, Kadiyala and Karlsson (Citation1993) and Clark and McCracken (Citation2010).

Here we adopt the time-varying parameter representation in (2) to find a nonlinear specification for the nonlinear AR model in (1) that possesses particular optimality properties. We do so by studying how to select the function Specifically, we extend the results in Blasques et al. (Citation2015) and Creal et al. (Citation2018) to dynamic autoregressive models. This allows us to find a parametric functional form for ψ that at each time point t is guaranteed to improve the local Kullback-Leibler divergence between the true unknown conditional density of yt and the conditional density implied by the fitted parametric model. The notion of optimality we work with is thus information-theoretic in nature. The original results in Blasques et al. (Citation2015) do not cover our current setting, as they do not allow for yt to depend on

conditional on ft. The parameters of our time-varying autoregressive parameter model can be estimated by maximum likelihood (ML), and we formulate conditions under which the ML estimator (MLE) has the usual asymptotic properties, such as consistency and asymptotic normality. We also analyze the finite-sample performance of the model and its ability to recover the time-varying AR coefficient ft in a Monte Carlo study. Our results show that the model performs well.

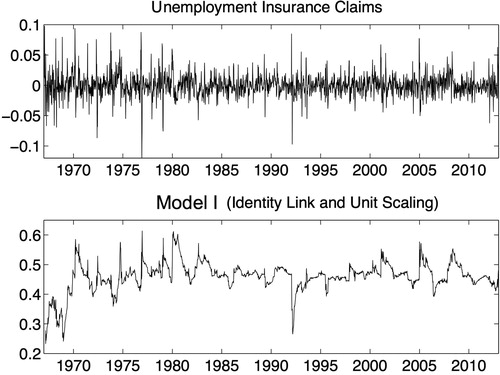

We illustrate the model empirically in two ways. First, we model the growth rate of U.S. unemployment insurance claims, which is an often used leading indicator for U.S. gross domestic production growth. We show how temporal dependence in this series varies over time. Second, we illustrate that our model provides better out-of-sample forecasts than most direct competitors for three important macroeconomic time series observed at different frequencies: the weekly growth rate of U.S. unemployment insurance claims, the monthly growth rate in industrial production, and the quarterly growth rate of money velocity.

The remainder of this paper is organized as follows. Section 2 introduces the model and establishes its information theoretic optimality properties, regardless of whether the model is correctly specified or not. Section 3 discusses the reduced form dynamics of the model and compares these with the properties of well-known alternatives. Section 4 establishes the asymptotic properties of the MLE. Section 5 provides our empirical analysis. Section 6 concludes. In the Supplementary Appendix, we gather supplementary material including technical proofs and extensions to the theoretical optimality results of Section 2.

2. Score driven time-varying AR coefficient

2.1. The model

We consider a generalization of the time-varying AR coefficient model in EquationEq. (2)(2)

(2) ,

(3)

(3)

where yt denotes the observation, and

is a bijective link function. Obvious choices for

are

as in EquationEq. (2)

(2)

(2) ,

to rule out negative temporal dependence, or

to rule out unit-root behavior. Other appropriate link functions can be thought of as well. If we allow

to be equal to or even exceed 1 from time to time, we can endow

with ‘transient’ unit-root or explosive behavior during specific time periods. This does not rule out that

is strictly stationary and ergodic (SE); see Bougerol (Citation1993) as well as the discussions below. All results derived in this paper extend trivially to the autoregressive model with intercept

as given by

For simplicity, we set a = 0 and treat the case of the de-meaned sequence of data

We specify the time-varying parameter ft as an observation driven process as formally defined by Cox (Citation1981). In particular, ft is a function of past observations i.e.,

Observation driven models are essentially ‘filters’ for the unobserved

They update the parameter ft using the information provided by the most recent observations of the process

In general, they take the form

(4)

(4)

where

is a vector of unknown static parameters. EquationEq. (4)

(4)

(4) implies that

is a function of all past observations. Any function

can be considered for updating ft to

such as the constant function, but also the threshold or smooth transition autoregressive specifications as used in Tong (Citation1983), Chan and Tong (Citation1986) and Teräsvirta (Citation1994), amongst others. Petruccelli (Citation1992) argues that many time series models of interest can be approximated by the threshold model and therefore our theoretical results below may have wider implications.

The parameter update function in (4) can lead to both linear and nonlinear dynamic specifications. For example, if and

is given by

we obtain the autoregressive moving average model

where ω and α are static unknown parameters. For a discrete update function

of the type

we obtain the self-exciting threshold autoregression (SETAR) of Tong and Lim (Citation1980),

In the next subsection, we introduce alternative formulations of that lead to empirically relevant nonlinear AR models with information theoretic optimality properties.

2.2. Information theoretic optimality

As stressed in the introduction, it is important for our analysis to clearly distinguish between the data generating process (DGP) and the postulated parametric statistical model. The DGP is typically unknown and potentially highly complex. For expositional purposes, we assume the DGP is the nonlinear AR process from EquationEq. (1)(1)

(1) with

of unknown form. The analysis below, however, still applies if the DGP falls outside this very general class of nonlinear time series models and is only characterized by its (unknown) conditional density.

The unknown DGP in (1) gives rise to a true, unobserved time-varying parameter where

denotes the inverse function of

Next to ft, we distinguish the filtered time-varying parameter

as obtained from the possibly misspecified statistical model (3). The parameter

is based on the updating Equationequation (4)

(4)

(4) , i.e.,

where the link function

used in the model may also depend on

i.e.,

The difference between ft and

is similar to the difference between innovations and regression residuals. While

has properties that are directly implied by the DGP, the filtered sequence

only achieves those properties in the ideal setting of correct model specification, true values for the static parameters, and correct initialization of the time-varying parameter

Furthermore, while

stretches to the infinite past and depends on the entire time series

the filtered path

is initialized at time t = 1 and depends only on the observed sequence

with T increasing as more data become available.

We write the true unknown joint density of the vector as

This density can be factorized as

where

denotes the true, unknown conditional density of yt given its infinite past

We write the filtered conditional density based on the statistical model as

where

and

for t > 1, with

being a function of the first observation y1 and the fixed starting value for the filter

The conditional model density

will typically differ from the conditional true density pt.

To estimate the static parameters we use the scaled log likelihood function

(5)

(5)

which naturally depends on the filtered parameter sequence

and thus on

and on the initialization

since

Our notation is summarized in .

Table 1. Notation.

We now proceed by showing that an update function in (4) is only optimal in an information theoretic sense if it is based on the score of the predictive log-density for yt, that is on

Such an update locally results in an expected decrease in the Kullback-Leibler (KL) divergence between the true conditional density pt and the conditional model density

KL divergence is an important and widely applied measure of statistical divergence in various fields; see, for example, Ullah (Citation1996, Citation2002). The results we derive extend the results of Blasques et al. (Citation2015) and Creal et al. (Citation2018) to the context of autoregressive models with time-varying dependence parameters.

The optimality properties below hold whether or not the statistical model is correctly specified. We first define the notions of expected KL variation and expected KL optimality.

Definition 1.

(EKL Optimality) Let denote the KL divergence between

and pt, i.e.,

then a parameter update from

to

with Expected KL (EKL) variation

(6)

(6)

is EKL optimal if and only if

for every true density pt. The nonlinear autoregressive model (2) with time-varying dependence parameter as in (3) is said to be EKL optimal if it admits an EKL optimal parameter update.

The EKL variation in (6) measures the change in KL divergence between the true conditional density and the conditional model densities

As depends on yt, it is a random variable given the information up to time t – 1 only. Therefore, the EKL variation concentrates on whether the KL divergence reduces in expectation. Any individual step from

to

may incidentally increase the KL divergence, but for an update to be EKL the steps should reduce the KL divergence on average, whatever the true unobserved density pt.

For a general update function the parameter update from

to

does not necessarily have this property. For a general

the update steps may leave

farther away on average from the true conditional density

The surprising feature of our analysis is that despite the generality of the current set-up, we can still show that a local EKL optimal time-varying parameter update actually exists. In particular, we show that only a score update (or a locally topologically equivalent of that) ensures that the observation yt is incorporated in such a way that the parameter update provides a better approximation to the conditional density of yt in an expected KL divergence sense. This does not hold for any other updating mechanism.

The optimality property leads to a nonlinear autoregressive model formulation that takes the form of a score driven time-varying parameter model as introduced by Creal et al. (Citation2011, Citation2013) and Harvey (2013). The score driven model is defined as

(7)

(7)

where ω, α and β are unknown coefficients included in

and

(8)

(8)

is the scaled score of the predictive density, where

(9)

(9)

and with

some scaling function. For our current purposes it suffices to consider the simplified setting with

The update Equationequation (7)

(7)

(7) formulates a possibly highly nonlinear function for

in terms of the past observations

The functional form is partly determined by the postulated model density

while the impact of past observations on

is also determined by the coefficients ω, α and β.

To show that the update in (7) satisfies EKL optimality properties, we make the following assumptions.

Assumption 1.

The filtering density

is twice continuously differentiable in y and

and satisfies the moment conditions

The filtering density is misspecified in the sense that

and

The proofs of Lemmas 1 and 2 below are easily obtained by extending the proofs of Propositions 1–5 in Blasques et al. (Citation2015) and Proposition 2 in Creal et al. (Citation2018) so as to allow to enter the conditioning sets of both pt and

The proofs can be found in the Supplementary Appendix.

Lemma 1 shows that the score update of ft is locally EKL optimal.

Lemma 1.

Let Assumption 1 hold and let . Then, for α sufficiently small, the score update for ft is EKL optimal given

and

Lemma 2 shows that only a ‘score-equivalent’ update can have this optimality property. An update is said to be ‘score-equivalent’ if it is proportional to the score as a function of yt.

Definition 2.

(Score-equivalent updates) An observation driven parameter update is ‘score-equivalent’ if and only if

for every

We make the following additional assumption.

Assumption 2.

The score as a function of yt changes sign at least once for every

Assumption 2 is intuitive. The score update should be such that the fitered time-varying parameter can go up as well as down for particular (possibly extreme) realizations of yt. Otherwise, updates will always be in one direction only. We now have the following result.

Lemma 2.

Let Assumptions 1 and 2 hold. For any given pt, a parameter update is locally EKL optimal if and only if the parameter update is score-equivalent.

The optimality properties above can be further extended to settings where and/or

see Blasques et al. (Citation2015) for examples of this in a slightly different set-up. Such results apply as long as the ‘forces away’ from the optimal direction at

as determined by the autoregressive component

are weaker than the ‘forces toward’ the optimal direction as determined by the score component

Concluding, we find that the score updates have firm foundations from the perspective of information theoretic criteria (Kullback-Leibler). In fact, in the current general set-up only score updates possess such desirabe properties.

2.3. Illustrations

We present three illustrations to provide more intuition for the main results derived in Section 2.2.

2.3.1. Model I: Affine gaussian updating

Consider the statistical model with and conditional model density

equal to a normal with mean zero and variance

where

The score function is given by

For the case of unit scaling

we obtain the update

(10)

(10)

The update of responds to the model’s prediction error

multiplied by the scaled leverage of the observation

The score pushes the update up (down) if yt lies above (below) its predicted mean

i.e., if

(versus

). The strength of this effect is determined both by α and

When

is large, the update sizes are mitigated because the predition errors

are noisy signals of where

is located. The score update balances all these features in an optimal manner.

If β = 1 and ω = 0, the score is the only determinant of the parameter update. For the updating mechanism becomes more complex and the signal from the score has to off-set the autoregressive step

toward the long-term unconditional mean of

that is toward

2.3.2. Model II: Logistic updating

Consider the same setting as Model I but with link function which allows for transient (near) unit-root dynamics in yt, but rules out negative dependence and explosive behavior. The parameter update becomes

(11)

(11)

The intuition for this update is similar to Model I, with the exception that the size of the update is mitigated if is large, i.e., when

is close to zero or one.

2.3.3. Model III: Robust updating

Robustness to outliers and influential observations can be obtained by making alternative assumptions for the conditional model density For example, we can assume that

is fat-tailed as in Harvey and Luati (Citation2014). Consider the same setting as Model I, but with

a Student’s t distribution with zero mean, scale parameter σ, and degrees of freedom parameter λ, i.e.,

where

As a result, the score update becomes

(12)

(12)

The update is now less sensitive to large values of

compared to the Gaussian case (

). In particular, the robust score update in (12) is a bounded function of

The intuition is as follows. When the conditional model density is fat-tailed, large realizations of

can be attributed to either an increase of the true, unobserved conditional mean

or to the fat-tailed nature of the prediction errors. The score update again balances these two competing explanations in an information theoretic optimal way. For the limiting case

we recover EquationEq. (10)

(10)

(10) .

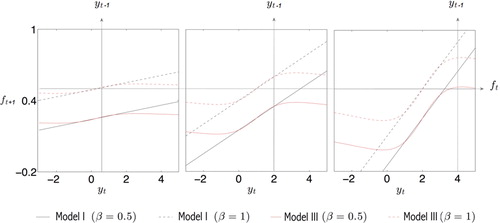

compares the different updating functions for for Models I and III. For the Student’s t distribution (Model III), the impact of large prediction erorrs on

is clearly bounded, in contrast to the updates for Model I. The reactions are steeper if yt is persistently away from the zero unconditional mean, i.e., if both

and yt are substantially positive. For extremely large prediction errors, the update tends to zero again as the KL perspective attributes such observations to the fat-tailedness of the model distribution rather than to shifts in the conditional mean. The parameter updates with

tend to bring

faster to its unconditional mean compared to β = 1.

Figure 1. Shape of Normal (black) and Student’s t (red) updating functions. The updated parameter is plotted as a function of yt for given ft = 0.5 and given low initial state

(left) high initial state

(middle) and very high initial state

(right). All plots are obtained with ω = 0 and

Solid lines have

and dashed lines have β = 1.

further reveals how the updating function uses the value as a crucial guidance mechanism to distinguish between changes in observed data that provide information about the conditional expectation and those that do not. For example, if the new observation is very close to its zero unconditional mean (left graph), then there is no reason to strongly update the conditional expectation, regardless of whether the realization yt is large or small: the observation yt does not contain much information about the dependence of the process as the mean-reverting mechanism is almost inactive in this case. By contrast, if

is large, the observed yt carries more information about

Consider the case where

(right graph). Then, if yt is also large, these observations provide strong evidence that the process has strong dependence and hence that ft is large, resulting in an upward drift of

On the other hand, if yt is close to zero, mean reversion apparently is fast and causes a downward pressure on

2.4. Estimation and forecasting

Maximum likelihood (ML) estimation of the parameter vector in the score driven AR(1) model (7) is similar to ML estimation for autoregressive moving average (ARMA) models. The conditional likelihood function (5) is known in closed-form given the explicit expressions for both the updating equation for

and the score function st. The maximization of the log-likelihood function (5) with respect to

is typically carried out using a quasi-Newton optimization method. The prediction errors

evaluated at the maximum likelihood estimate

of

can be used for diagnostic checking.

Forecasting with the score driven time-varying AR model is also straightforward. The forecast for can be based directly on (3) with

computed by (7) given the value for yT. Given the nonlinearity of the model, multi-step-ahead forecasts can only be obtained via simulation. For example, to forecast

one simulates values of

using

and simulated values of

Each simulated value of

can be used to obtain a simulated value of

which in turn can be combined with a simulated value of

to produce a simulated value of

A series of simulated realizations

can be used to construct the mean or median or quantile forecasts of

The computations are simple, fast, and can be carried out in parallel for large simulation sizes to achieve accuracy and efficiency. Forecasts of

for

can be obtained similarly.

3. Nonlinear AR model representations

3.1. Reduced form of time-varying AR coefficient model

It may appear difficult to compare the nonlinear autoregressive model from Section 2 with other nonlinear models such as the TAR and STAR models that are discussed in Section 1. The TAR and STAR models use lags of the dependent variable yt itself as state variables to make the autoregressive coefficient of the AR(1) model time-varying. The score driven approach from Section 2 treats the time-varying autoregressive parameter as a time series process with innovations that are also functions of past observations. The commonalities become apparent when we consider the reduced form of the score driven model.

To obtain the reduced form, we first write EquationEq. (3)(3)

(3) as

which is valid almost surely. Here we suppress the dependence of functions on

We also use ft rather than

as we treat the model here as the true data generating process rather than as the filter. Using

as the inverse of the link function h, we obtain

Substituting this expression into (3), we obtain

which reduces the model to a nonlinear ARMA model with two lags of yt and one lag of ut, that is a nonlinear ARMA(2, 1). This formulation of the score driven time-varying AR(1) model as a nonlinear ARMA(2, 1) model facilitates a direct comparison with the TAR and STAR models.

For Model I from Section 2.3, we have and

The nonlinear ARMA(2, 1) specification then becomes

Similarly, for Model III we obtain the nonlinear ARMA representation

These highly nonlinear ARMA representations originate from a linear AR(1) model with a time-varying autoregressive coefficient based on the update function While the original model is relatively simple, it implies a complex but parsimonious nonlinear ARMA model. We emphasize that the current reduced form of the score driven model is only used for studying the nonlinearity of the model compared to competing model specifications, and not for the actual implementation of the model in simulations or empirical estimation. For such purposes we use the specification as presented in Section 2.

In case of Model II, the score expressions are slightly more complicated due to the chain rule for the nonidentity link function with

The corresponding score function is

with

since

The updating equation becomes

(13)

(13)

and we obtain the nonlinear ARMA model representation as

We conclude that any score driven model can be represented in reduced form as a nonlinear ARMA model. To provide an intuition for the dynamic patterns described by these representations, we now compare the dynamic patterns of our model with those of TAR and STAR models.

3.2. Comparison with other nonlinear AR models

Two well-known nonlinear AR models are the threshold AR (TAR) model of Tong (Citation1983) and the smooth transition AR (STAR) model of Chan and Tong (Citation1986) and Teräsvirta (Citation1994). We relate our nonlinear dynamic model with the basic versions of these two competing nonlinear AR models. We already have shown in Section 2 that our model has information theoretic optimality properties. Such optimality properties are not available for other models, including the TAR and STAR models.

We consider a standard TAR model of the following nonlinear autoregressive form,

where

is an indicator function that takes the value one if the condition in the argument holds, and zero otherwise. The AR(1) coefficient switches between γ1 and

depending on whether

is smaller or larger than γ3. The model can be generalized in various ways.

A standard STAR model is given by

where the AR(1) coefficient switches smoothly from γ4 to

depending on the value of

Both the TAR and STAR models are nonlinear ARMA(2, 0) models and have the same number of parameters as Models I and II from Section 2.3.

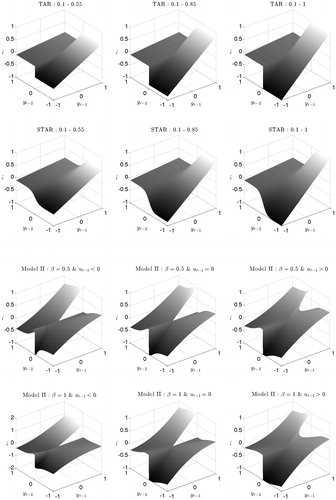

We visualize the differences between the models in where each panel presents the response of yt to different values of and

for the TAR and STAR models, and for Model II with specific values of

In this visualization, the nonlinear response functions appear similar in many respects. The similarities hold even though TAR and STAR models are nonlinear AR(2) models and Model II is a nonlinear ARMA(2, 1) model.

Figure 2 Response functions for TAR, STAR, and Model II. Response functions for the TAR and STAR model (top 2 rows) are presented for different slopes in each regime. For example, in the top-left panel the TAR switches AR(1) coefficient from 0.1 to 0.55 depending on whether is positive or negative. The response functions for Model II (bottom 2 rows) are presented for ω = 0,

different values of β (0.5 or 1.0), different values for the innovations

(-0.5, 0 and 0.5).

In all cases, we can distinguish two separate regimes. For the STAR model, one regime occurs for positive values of and has a large slope in the

direction. Another regime with a small slope in the

direction occurs for negative values of

In both the TAR and STAR models these regimes are linear in

and hence, in each regime, the slope is constant over

The cross-section over the

axis, however, shows the difference between the TAR and STAR models: the transition from one regime to the other is discontinuous for the TAR model, whereas the transition is smooth for the STAR model.

The response of Model II is similar to the TAR model given that the transition is abrupt from negative to positive values of Model II is also similar to the STAR model because the response functions in

vary continuously with the values of

in a similar way, within each regime. The response functions for Model II are nonlinear in

whether we have a positive or negative

This feature makes the nonlinearity of Model II markedly different. In particular, for negative values of

we observe an increasing slope in

while for positive values of

the response function has a decreasing slope in

In Section 5, we investigate whether these differences improve the forecasting performance of the score driven model. The Supplementary Appendix moreover contains simulation evidence that the score driven model succeeds in uncovering the dynamics of the true ft by the filtered

in cases where the model is severely misspecified.

4. Statistical properties

4.1. Stochastic properties of the filter

The elements of the log-likelihood function (5) depend on both the data

and the filter

Even if the data

are well-behaved, the stochastic properties of the likelihood function cannot be obtained without first establishing the stochastic properties of the filter

for the unobserved time-varying parameter

In particular, to derive the asymptotic properties of the ML estimator (MLE) for the score driven time-varying AR parameter model of Section 2, we need to establish strict stationarity, ergodicity and bounded moments of the filter

uniformly on the parameter space Θ, and we need to ensure that the filter is Near Epoch Dependent (NED) on a mixing sequence at

where

is the true parameter; see the treatments of Gallant and White (Citation1988) and Pötscher and Prucha (Citation1997) for precise definitions. The stationarity and ergodicity (SE) property and bounded moments are required to obtain the consistency of the MLE. The additional NED property is used to establish asymptotic normality.

As mentioned in the introduction, we allow the data generating process to be general and nonparametric in nature. As such we only impose high-level conditions on the data and obtain the properties of the filter and the MLE allowing for misspecification of our parametric model. In this sense, we follow the classical M-estimation literature in deriving the MLE asymptotics while imposing only high-level conditions on the data such as stationarity, fading memory and moments; see e.g. Domowitz and White (Citation1982), Gallant and White (Citation1988), White (Citation1994), and Pötscher and Prucha (Citation1997). If one wishes to work under an axiom of correct specification, then additional work should be carried out to show that the data generated by the model satisfies the desired properties.

For notational simplicity, we define the score update as

and the supremum as

In many cases of interest, this supremum will prove to be bounded. We notice that is a random variable due to its dependence on yt and

Whenever convenient, we make the dependence of the filtered parameter

on the initialization

the data

and the parameter vector

explicit in our notation, for example,

(14)

(14)

for all

To establish the asymptotic properties of the MLE, we require the dependence of the filter on the initial condition

to vanish in the limit. Theorem 1 below is a slight adaptation of Blasques et al. (Citation2014) and formulates these conditions more precisely. Apart from requiring the existence of appropriate moments, the main requirements are conditions (ii), (iv), and (v), which state that (14) is contracting on average in an appropriate sense. Below we let

be a function satisfying

for

and

for x > 1. Additionally,

is used to denote independence between random variables.

Theorem 1

(Blasques et al., Citation2014). Let be convex, Θ be compact,

be SE,

and assume there exists a nonrandom

such that

; and

Then converges exponentially fast almost surely (e.a.s.) to the limit SE process

; i.e. we have

for some c > 1 as

. If furthermore

such that

; and either

; or

and

For more details on e.a.s. convergence, we refer to Straumann and Mikosch (Citation2006). The limiting sequence in Theorem 1 does not depend on the initialization condition

Whereas condition (ii) is key in ensuring that the initialized sequence

converges to its stationary and ergodic (SE) limit, conditions (iv) and (v) are essential for establishing the existence of an appropriate number of unconditional moments of the SE limiting sequence.

The verification of the conditions in Theorem 1 is often straightforward. Consider Model II from Section 2.3 with its updating Equationequation (11)(11)

(11) . If

is SE and satisfies

then the SE condition (ii) reduces to

(16)

(16)

with

The parameter space over which (16) is satisfied can now easily be computed, either numerically or by using upper bounds on the constituents of (16). For example, if

has some bounded moment, it is easy to see that there exists a parameter space Θ with

and α sufficiently close to zero for every

such that (16) is satisfied for all points in the parameter space.

The presence of the supremum over in all of the expressions in Theorem 1 guarantees that we do not only obtain pointwise convergence, but that we also establish the convergence of the sequence

with random elements taking values in the Banach space

for every

to a limiting sequence

where

denotes the supremum norm on Θ. This more abstract convergence result in a function space allows us to relax some smoothness requirements for the likelihood in Section 4.2. In particular, we only need to put appropriate conditions on the second rather than on the third order derivatives of the likelihood; compare Straumann and Mikosch (Citation2006) and Blasques et al. (Citation2014).

Following Pötscher and Prucha (Citation1997), Theorem 2 below shows that, under appropriate conditions, the NED properties of the data can be ‘inherited’ by the filtered sequence

This additional property is needed to establish the asymptotic normality of the MLE.

Theorem 2.

Let be SE, have two bounded moments

and be NED of size – q on some sequence

. Furthermore, assume that

with

and

. Then

is NED of size – q on

for every initialization

Theorem 2 imposes that the score is bounded by a linear function in

and bounded by a contracting linear function in f. This condition is slightly more restrictive than its counterpart in Theorem 1. We use the NED property to establish asymptotic normality of the MLE for our model under misspecification: the result of the theorem allows us to use a central limit theorem for the score of the log-likelihood function.

The results in this section do not require the statistical model to be correctly specified. As the optimality results from Section 2.2 already indicate, the score based updates are optimal even if the model is severely misspecified. The Supplementary Appendix presents a number of simulated examples that demonstrate the usefulness and stability of the filter in such cases. The results of those simulations show that the score based track well the dynamics the time-varying ft if the later varies sufficiently slowly over time. For a highly volatile ft process, the data may not be sufficiently informative to allow for an accurate local estimation of the time-varying autoregressive parameter.

4.2. Asymptotic properties of MLE

To establish the strong consistency of the MLE,

(17)

(17)

with

as defined in EquationEq. (5)

(5)

(5) , we make the following three assumptions.

Assumption 3.

is a measurable space and Θ is a compact set. Furthermore,

and

are continuously differentiable in their arguments.

Assumption 4.

such that

and either

or

and

Definition 3.

(Moment Preserving Maps) A function is said to be n/m-moment preserving, denoted as

if and only if

implies

Assumption 5.

and

with

for

Assumption 3 ensures the existence of the MLE as a well-defined random variable, while Assumptions 4 and 5 ensure the SE properties of the filter and the existence of the correct number of moments of the likelihood function, respectively. Moments are ensured via the notion of moment preserving maps; see Blasques et al. (Citation2014). Products and sums satisfy all the intuitive moment preservation properties via triangle and Minkowski inequalities.

Assumption 4 is easy to verify for the robust update Model III introduced in Section 2.4. The moment bound for the score in Assumption 4(i) and the contraction condition in Assumption 4(ii) hold on a non-degenerate parameter space Θ since the score function

where λ is an element of

is uniformly bounded and Lipschitz continuous.

The following theorem now establishes the consistency of the MLE. Below, denotes the limit likelihood function.

Theorem 3.

(Consistency) Let be an SE sequence satisfying

for some ny > 0 and assume that Assumptions 3, 4 and 5 hold. Then the MLE (17) exists. Furthermore, let

be the unique maximizer of

on the parameter space Θ. Then the MLE satisfies

as

for every

Blasques et al. (Citation2015, Theorem 4.9) offer global identification conditions for well-specified score models which ensure that the limit log likelihood has a unique maximum The assumption of a unique

may however be too restrictive in the case of a misspecified model; see also Freedman and Diaconis (Citation1982) for failure of this assumption in a simple location problem with iid data and Kabaila (1983) in the context of ARMA models. Remark 1 below follows Pötscher and Prucha (Citation1997, Lemma 4.2) and highlights that if the restrictive identifiable uniqueness condition fails, then we can still show that the MLE

converges to the set of maximizers of the limit loglikelihood function

In other words, we can avoid the assumption of uniqueness of

stated in Theorem 3, and obtain the a set-consistency result. A simple regularity condition is required which states that the level sets of the limit loglikelihood function

are regular (see Definition 4.1 in Pötscher and Prucha, Citation1997). The regularity of the level sets is trivially satisfied in our case.

Remark 1.

Let the conditions of Theorem 3 hold. Suppose that is maximized at a set of points. Then the MLE converges

converges to that set as

for every

see Lemma 4.2 Pötscher and Prucha (Citation1997).

Assumption 6 below imposes the conditions used in Theorem 2 to ensure that the filter inherits the NED properties of the data. It also states conditions that are used to ensure that the likelihood score

inherits the NED properties of the vector

with

and

Assumption 6.

For every , it holds that

with

for all

with

and

Conditions (i) and (ii) of Assumption 6 can be verified for the robust update Model III since, for λ bounded away from zero, the ML score function is Lipschitz continuous on w and the updating score function s is Lipschitz continuous on f and

Condition (ii) in Assumption 6 allows for simple and clear results and is the same contraction condition as used in Pötscher and Prucha (Citation1997) and Davidson (1994). A less restrictive condition can be used that allows for random coefficient autoregressive updates; see Hansen (Citation1991).

Using Assumption 6, we obtain the asymptotic normality of the MLE in Theorem 4 by assuming that is NED on an α-mixing sequence. To ease the exposition, we imposed moment bounds in Assumption 6 directly on the derivatives of the likelihood function; see also Straumann and Mikosch (Citation2006). Alternatively, these bounds could have been derived in a similar way as in Theorem 3 from primitive conditions concerning the moment preserving properties of h and pu; see the Supplementary Appendix.

Theorem 4.

(Asymptotic Normality) Let be an SE sequence that is NED of size –1 on the

-mixing process

of size

, and let Assumptions 3, 4, 5 and 6 hold. Furthermore, let

for some

and

be the unique maximizer of

on Θ. Then the ML estimator

satisfies

where

and

are the Fisher information matrix and the expected outer product of gradients, respectively, both evaluated at

5. Empirical application

5.1. Time-varying temporal dependence in U.S. insurance claims

We illustrate the empirical relevance of our nonlinear autoregressive model by analyzing weekly observations of U.S. unemployment insurance claims (UIC). The empirical analysis of the time series of UIC based on dynamic macroeconomic models has received much attention in the literature; see for example McMurrer and Chasanov (Citation1995), Meyer (Citation1995), Anderson and Meyer (Citation1997, Citation2000), Hopenhayn and Nicolini (Citation1997), and Ashenfelter et al. (Citation2005). The importance of forecasting weekly UIC time series data has been highlighted by Gavin and Kliesen (Citation2002) who show that UIC is a highly effective leading indicator for labor market conditions and hence for forecasting gross domestic product growth rates. Our sample consists of weekly continuously compounded growth rates of the seasonally adjusted (four-week moving) average initial unemployment insurance claims observed from 1960 to 2013, as included in the Conference Board Leading Economic Index.

We only present the estimation results for Model I. The results for Model II are very similar. We find that the nonlinearity of the model sometimes poses challenges to the numerical optimization of the likelihood function, and that one has to use different starting values to ensure convergence to the proper maximum. If the nonlinearity of the model is combined with a density that is not log-concave, such as the Student’s t distribution in Model III, multiple local optima occur more often. Several of these local optima are not stable if the parameters are perturbed around the optimum. For example, in Model III we obtain a maximum of the likelihood function close to the one reported for Model I below, as well as a second higher local maximum for a negative value of α. A negative α does not satisfy the optimality theory developed in Section 2. The likelihood function near this second maximum is very peaked and disappears if the degrees of freedom parameter in Model III is perturbed to somewhat higher levels. Combining the properties of the different specifications, Model I presented below provides the best compromise in terms of (i) the stability of the optimum under perturbations of the parameter and the empirical interpretability of the filtered path, (ii) the optimality restriction from Section 2.2, that is and (iii) in-sample fit in terms of corrected Akaike’s information criterion (AICc) of Hurvich and Tsai (Citation1991).

presents the UIC data together with the filtered estimates of the time-varying autoregressive parameter that fluctuate considerably over time. The parameter reaches a minimum of roughly 0.2 in the late 1960s, indicating that UIC has little temporal dependence during this time period. In the 1980s, the parameter climbs to about 0.6, indicating that the UIC may deviate persistently from its unconditional mean over an extended number of weeks. During the financial crisis of 2008 and its aftermath in 2009, we again see a rise in the level of persistence of claims, followed by a steady decline until the end of the sample.

5.2. Forecasting comparison for three U.S. economic time series

We consider the one-step ahead forecasting performance of Model I and three benchmark models. We consider the weekly unemployment insurance claims series from Section 5.1 and two additional series: the U.S. monthly industrial production index from 1947 to 2013, and the U.S. quarterly money velocity M2 from 1919 to 2013. All three time series are in log-differences such that we focus on forecasting growth rates. The three series have three different seasonal frequencies: weekly, monthly and quarterly. The parameter estimates are obtained from the in-sample analysis.

compares the forecast precision of Model I with the forecast precision of the TAR, STAR and linear AR(p) models for all three series. The order of the AR model p is chosen by the general-to-specific methodology that selects the lag length based on the minimum AICc; the optimal order is denoted by We find that for all three macroeconomic time series Model I, the TAR, and the STAR model outperform the linear AR model in terms of root mean squared forecast error by a wide margin. Also, for all three time series, the score driven Model I has the lowest root mean squared forecast error out of the models considered. These results are consistent with the likelihood-based results: Model I also outperforms the TAR, STAR, and AR(

) models in terms of the log-likelihood value and AICc.

Table 2. Out-of-sample forecast comparisons for three U.S. macroeconomic time series

We conclude that the score driven Model I produces relatively accurate out-of-sample forecasts for the three U.S. macroeconomic time series. The reported F-RMSEs of Model I are considerably lower than those of the AR() models. The nonlinear adaptation to the serial dependence parameter in Model I is therefore potentially an important feature for the forecasting of such key economic time series.

6. Conclusions

We have shown that updating the parameters in an autoregressive model by the score of the predictive likelihood results in local improvements of the expected Kullback-Leibler divergence, and thus in nonlinear autoregressive models with information theoretic optimality properties. The reduced form of the resulting model can be written as a nonlinear ARMA model that can be compared to alternative nonlinear autoregressive models such as the threshold and smooth transition autoregressive models. Estimation of the static parameters in the new model is straightforward, and the maximum likelhood estimator can be shown to be consistent and asymptotically normal. In our empirical illustration for U.S. unemployment insurance claims, and for two other key U.S. macroeconomic time series, our most basic nonlinear dynamic model outperforms well-known alternatives such as the threshold and smooth transition autoregressive models.

Additional information

Funding

References

- Anderson, P. M., Meyer, B. D. (1997). Unemployment insurance takeup rates and the after-tax value of benefits. The Quarterly Journal of Economics 112(3):913–937. doi:10.1162/003355397555389

- Anderson, P. M., Meyer, B. D. (2000). The effects of the unemployment insurance payroll tax on wages, employment, claims and denials. Journal of Public Economics 78(1–2):81–106. doi:10.1016/S0047-2727(99)00112-7

- Ashenfelter, O., Ashmore, D., Deschenes, O. (2005). Do unemployment insurance recipients actively seek work? Evidence from randomized trials in four US states. Journal of Econometrics 125(1–2):53–75. doi:10.1016/j.jeconom.2004.04.003

- Blasques, F., Koopman, S. J., Lucas, A. (2014). Maximum likelihood estimation for generalized autoregressive score models. Discussion Paper 14-029/III, Tinbergen Institute.

- Blasques, F., Koopman, S. J., Lucas, A. (2015). Information theoretic optimality of observation driven time series models with continuous responses. Biometrika 102(2):325–343. doi:10.1093/biomet/asu076

- Bougerol, P. (1993). Kalman filtering with random coefficients and contractions. SIAM Journal on Control and Optimization 31(4):942–959. doi:10.1137/0331041

- Chan, K. S., Tong, H. (1986). On estimating thresholds in autoregressive models. Journal of Time Series Analysis 7(3):179–190. doi:10.1111/j.1467-9892.1986.tb00501.x

- Clark, T. E., McCracken, M. W. (2010). Averaging forecasts from VARs with uncertain instabilities. Journal of Applied Econometrics 25(1):5–29. doi:10.1002/jae.1127

- Cox, D. R. (1981). Statistical analysis of time series: Some recent developments. Scandinavian Journal of Statistics 8:93–115.

- Creal, D., Koopman, S. J., Lucas, A. (2011). A dynamic multivariate heavy-tailed model for time-varying volatilities and correlations. Journal of Business & Economic Statistics 29(4):552–563. doi:10.1198/jbes.2011.10070

- Creal, D., Koopman, S. J., Lucas, A. (2013). Generalized autoregressive score models with applications. Journal of Applied Econometrics 28(5):777–795. doi:10.1002/jae.1279

- Creal, D., Koopman, S. J., Lucas, A., Zamojski, M. (2018). Generalized autoregressive method of moments. Discussion Paper 15-138/III, Tinbergen Institute.

- Davidson, J. (1994). Stochastic Limit Theory. Advanced Texts in Econometrics. Oxford: Oxford University Press.

- Doan, T., Litterman, R. B., Sims, C. A. (1984). Forecasting and conditional projection using realistic prior distributions. Econometric Reviews 3(1):1–144. doi:10.1080/07474938408800053

- Domowitz, I., White, H. (1982). Misspecified models with dependent observations. Journal of Econometrics Econometrics 20(1):35–58. doi:10.1016/0304-4076(82)90102-6

- Freedman, D., Diaconis, P. (1982). On inconsistent M-estimators. The Annals of Statistics 10(2):454–461. doi:10.1214/aos/1176345786

- Gallant, R., White, H. (1988). A Unified Theory of Estimation and Inference for Nonlinear Dynamic Models. Cambridge: Cambridge University Press.

- Gavin, W. T., Kliesen, K. L. (2002). Unemployment insurance claims and economic activity. Review 84:15–28. doi:10.20955/r.84.15-28

- Hansen, B. E. (1991). GARCH(1,1) processes are near epoch dependent. Economics Letters 36(2):181–186. doi:10.1016/0165-1765(91)90186-O

- Harvey, A. C. (2013). Dynamic Models for Volatility and Heavy Tails. Cambridge: Cambridge University Press.

- Harvey, A. C., Luati, A. (2014). Filtering with heavy tails. Journal of the American Statistical Association 109(507):1112–1122. doi:10.1080/01621459.2014.887011

- Hopenhayn, H. A., Nicolini, J. P. (1997). Optimal unemployment insurance. Journal of Political Economy 105(2):412–438. doi:10.1086/262078

- Hurvich, C. M., Tsai, C. (1991). Bias of the corrected AIC criterion for underfitted regression and time series models. Biometrika 78(3):499–509. doi:10.2307/2337019

- Kabaila, P. (1983). On the asymptotic efficiency of estimators of the parameters of an ARMA process. Journal of Time Series Analysis 4(1):37–47 (doi:10.1111/j.1467-9892.1983.tb00355.x

- Kadiyala, K. R., Karlsson, S. (1993). Forecasting with generalized Bayesian vector autoregressions. Journal of Forecasting 12:365–378. doi:10.1002/for.3980120314

- Maasoumi, E. (1990). How to live with misspecification if you must. Journal of Econometrics 44(1-2):67–86. doi:10.1016/0304-4076(90)90073-3

- McMurrer, D., Chasanov, A. (1995). Trends in unemployment insurance benefits. Monthly Labor Review 118(9):30–39.

- Meyer, B. D. (1995). Natural and quasi-experiments in economics. Journal of Business & Economic Statistics 13(2):151–161. doi:10.2307/1392369

- Petruccelli, J. (1992). On the approximation of time series by threshold autoregressive models. Sankhya, Series B 54:54–61.

- Pötscher, B. M., Prucha, I. R. (1997). Dynamic Nonlinear Econometric Models: Asymptotic Theory. New York: Springer-Verlag.

- Rao, R. R. (1962). Relations between weak and uniform convergence of measures with applications. The Annals of Mathematical Statistics 33(2):659–680. doi:10.1214/aoms/1177704588

- Straumann, D., Mikosch, T. (2006). Quasi-maximum-likelihood estimation in conditionally heteroeskedastic time series: a stochastic recurrence equations approach. The Annals of Statistics 34(5):2449–2495. doi:10.1214/009053606000000803

- Teräsvirta, T. (1994). Specification, estimation, and evaluation of smooth transition autoregressive models. Journal of the American Statistical Association 89:208–218. doi:10.1080/01621459.1994.10476462

- Teräsvirta, T., Tjostheim, D., Granger, C. W. J. (2010). Modelling Nonlinear Economic Time Series. Oxford: Oxford University Press.

- Tong, H. (1983). Threshold Models in Non-Linear Time Series Analysis. New York: Springer-Verlag.

- Tong, H., Lim, K. S. (1980). On the effects of non-normality on the distribution of the sample product-moment correlation coefficient. Journal of the Royal Statistical Society: Series B (Methodological) 42(3):245–292. doi:10.1111/j.2517-6161.1980.tb01126.x

- Ullah, A. (1996). Entropy, divergence and distance measures with econometric applications. Journal of Statistical Planning and Inference 69:137–162. doi:10.1016/0378-3758(95)00034-8

- Ullah, A. (2002). Uses of entropy and divergence measures for evaluating econometric approximations and inference. Journal of Econometrics 107(1-2):313–326. doi:10.1016/S0304-4076(01)00126-9

- White, H. (1980). Using least squares to approximate unknown regression functions. International Economic Review Review 21(1):149–170. doi:10.2307/2526245

- White, H. (1981). Consequences and detection of misspecified nonlinear regression models. Journal of the American Statistical Association 76(374):419–433. doi:10.1080/01621459.1981.10477663

- White, H. (1982). Maximum likelihood estimation of misspecified models. Econometrica 50(1):1–25. doi:10.2307/1912526

- White, H. (1994). Estimation, Inference and Specification Analysis. Cambridge: Cambridge Books; Cambridge University Press.