Abstract

This article presents a learning technique for modeling nonlinear systems. Our belief–desire–intention (BDI) algorithm for neural networks identifies the parameters most relevant to a model and adjusts its weights, neurons, and layers online. We explain each component of the proposed agent in detail and apply the model to describe the lateral forces on a tire under a range of test conditions. The model output is compared with test data and with the output of an existing neural network model. Our results demonstrate that the BDI agent is reliable and applicable to nonlinear modeling, and that it outperforms a backpropagation neural network on this task.

INTRODUCTION

Today, the simulation of logic and emotions is a major challenge for artificial intelligence (AI) systems. Driven by the need to respond to human behavior, interest in multiagent systems (MASs) is growing rapidly, as shown by the increasing number of publications in the literature (Vasile 2009; Kumar, Sharma, and Kumar 2009; Lurgi and Robertson 2011; Wang, Chen, and Jiang 2012; Zhong et al. 2012; Nourafza, Setayeshi, and Khadem-Zadeh 2012).

As one of the main agent architectures used in MASs, the belief–desire–intention (BDI) model has been investigated as a way to elicit more complex mental attitudes in AI systems (Mikic Fonte, Burguillo, and Nistal 2012; Tsai and Pan 2011; Castanedo et al. 2011; Mukun and Kiang 2012).

The BDI approach can be applied in rapidly changing environments in which problems have traditionally been handled by humans. This is due to three factors. First, a BDI agent can appear to have an emotional state that matches that of a human in the same situation (Steunebrink, Dastani, and Meyer 2012; Sardina and Padgham 2011). Second, the model's logic can express complex mental attitudes and the interrelations acquired through interactions with the external environment (Wu et al. 2012; Wilges et al. 2012; Casali, Godo, and Sierra 2011). Third, there is evidence that the BDI mechanisms allow intelligent and rational behavior in complex environments with limited resources (Yadav et al. 2010; Bosse, Memon, and Treur 2011; Alechina et al. 2011).

Over the last few decades, artificial neural networks (ANNs) have been developed to model the underlying nonlinearities and complexities of artificial and physical systems. ANNs and related computational-intelligence methods have been applied to many different problems, such as central force optimization (Formato 2009), laser solid freeform fabrication (Mozaffari and Fathi 2012), nonlinear system identification (Chen et al. 2009), peak ground acceleration prediction (Derras et al. 2012), semantic similarity measurement (Li, Raskin, and Goodchild 2012), and transient flow fields (Cohen et al. 2012). However, considerably less effort has been devoted to modeling high-level cognitive tasks with ANNs and to studying how ANNs represent and reason about their acquired knowledge. Recently, AI techniques for the online training of neural networks (NNs) have attracted considerable attention (Ludermir, de Souto, and de Oliveira 2009; Chang, Chen, and Chang 2012). However, these approaches adjust only the weights, leaving the numbers of neurons and layers fixed.

To improve the expressivity of ANNs and their overall utility as learning and generalization tools, we propose a new BDI model. The basic idea of this study is to develop a framework that integrates the BDI agent architecture with NNs. The result of this integration is a module built to the specifications of the proposed framework, whose beliefs are modeled using NN theory.

In this article, we translate agent theory into an ANN and train the network online using a dataset of lateral forces applied to a tire. The main goal is to demonstrate the reliability and applicability of the BDI approach for accurate nonlinear modeling. The performance of our model is compared with that of a backpropagation (BP) NN.

METHODS

BDI Agent

The history or makeup of an agent leads it to develop a set of desires. The agent's history also matters in terms of the information obtained previously, which is stored as a form of memory in a set of beliefs. Some of these beliefs, which may be acquired through observation, form the agent's reasons to pursue a particular course of action.

Four assumptions govern the agent. First, when an action is performed, it is assumed that the agent intended it. Second, an agent that intends to perform an action will execute it if an opportunity occurs in the external world (or in the agent's own internal state). Third, every intention is assumed to be based on a desire. An agent can desire some state of the world as well as some action to be performed. When the agent has a set of desires, it can choose which of them to pursue. A chosen desire leads to an intention to engage in an action only if an additional reason is present; thus, for each intended action there is both a reason and a desire. Fourth, if the desire is present and the agent believes that the reason to pursue it is present, then the intention to perform the action is generated. The relations between the intentional state properties are depicted on the right-hand side of .
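The four assumptions can be made concrete with a toy rule-based agent. This is a minimal sketch, not the authors' model: the class SimpleBDIAgent is hypothetical, desires and beliefs are reduced to sets of action labels, and "believing that a reason is present" is simplified to the action label appearing in the belief set.

```python
from dataclasses import dataclass, field


@dataclass
class SimpleBDIAgent:
    """Hypothetical toy agent illustrating the four assumptions."""
    desires: set = field(default_factory=set)     # actions the agent desires
    beliefs: set = field(default_factory=set)     # actions it believes it has a reason for
    intentions: set = field(default_factory=set)  # actions it intends to perform

    def deliberate(self):
        # Assumptions 3 and 4: an intention is generated exactly when the agent
        # both desires the action and believes a reason to pursue it is present.
        self.intentions = {a for a in self.desires if a in self.beliefs}
        return self.intentions

    def act(self, opportunities):
        # Assumption 2: an intended action is executed when an opportunity occurs.
        # Assumption 1 follows: every executed action is an intended one.
        return sorted(a for a in self.intentions if a in opportunities)


agent = SimpleBDIAgent(desires={"brake", "steer"}, beliefs={"steer", "wait"})
print(agent.deliberate())                  # {'steer'}
print(agent.act({"steer", "accelerate"}))  # ['steer']
```

Here "brake" is desired but has no believed reason, so it never becomes an intention, and "wait" has a reason but no desire behind it.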

Neural Networks

A typical NN is modeled by weighted-sum-and-threshold neurons, as described by Equations (1) and (2):

where uj are the inputs, Wj are the synaptic weights of the neuron, h is the activation of the neuron, v controls the shape of the sigmoid function, and y is the output. Most training algorithms adjust only the weights Wj, and the sigmoid transfer function could also be replaced by other functions. The numbers of neurons and layers are usually fixed before training. In the proposed approach, they are instead adjusted during online learning, which differs significantly from previous methods.
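Because the symbols above fully describe a single neuron, a short sketch helps fix the roles of uj, Wj, h, v, and y. The exact form of Equations (1) and (2) is assumed here: h is taken as the weighted input sum minus an optional threshold theta (a hypothetical parameter), and v is assumed to divide the activation inside the sigmoid, so that a smaller v gives a steeper curve.

```python
import math


def neuron_output(u, w, v=1.0, theta=0.0):
    """Weighted-sum-and-threshold neuron with a sigmoid transfer function.

    Assumed form (the article's Equations (1) and (2) may differ):
        h = sum_j W_j * u_j - theta
        y = 1 / (1 + exp(-h / v))
    """
    if len(u) != len(w):
        raise ValueError("inputs and weights must have the same length")
    h = sum(wj * uj for wj, uj in zip(w, u)) - theta  # activation of the neuron
    return 1.0 / (1.0 + math.exp(-h / v))             # smaller v -> steeper sigmoid


print(neuron_output([1.0, 1.0], [0.5, -0.5]))  # 0.5, since h = 0
```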

BDI Agent with NN

We define a BDI agent as a computational entity with a certain lifecycle embedded in an NN. The agent runs a serial cycle of processes in pursuit of its optimization goals. First, through the process of observation (or “See” in ), the agent senses the external environment. Next, through the “opt” process, the agent forms a new intention. Desires and Belief rules are modified by the “filter” and “bmp” processes, respectively. Finally, the intention is executed by “exe.”
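The lifecycle described above can be summarized as a serial loop. The sketch below is hypothetical: run_agent_cycle and its callback signatures are not from the article, and the order See, bmp, opt, filter, exe follows the more detailed descriptions of these processes given later (bmp revises the Belief rules from the new visual state, opt forms the Intention, and filter then revises the Desires). In a real implementation each callback would wrap the corresponding NN operation.

```python
from typing import Any, Callable


def run_agent_cycle(observe: Callable[[], Any],
                    bmp: Callable[[Any, Any], Any],
                    opt: Callable[[Any, Any, Any], Any],
                    filter_: Callable[[Any, Any, Any], Any],
                    exe: Callable[[Any], Any],
                    beliefs: Any, desires: Any, steps: int):
    """Hypothetical serial See -> bmp -> opt -> filter -> exe loop."""
    outputs = []
    for _ in range(steps):
        percept = observe()                             # See: sense the environment
        beliefs = bmp(beliefs, percept)                 # bmp: revise the Belief rules
        intention = opt(beliefs, desires, percept)      # opt: form an executable Intention
        desires = filter_(beliefs, desires, intention)  # filter: revise the Desires
        outputs.append(exe(intention))                  # exe: execute the Intention
    return beliefs, desires, outputs
```

The trailing underscore in filter_ only avoids shadowing Python's built-in filter.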

The mathematical expression of the agent is given as follows:

In this formulation, Aid distinguishes different agent entities, and P is the visual state set, such that each p ∈ P is the reflection, inside the agent, of certain external states after they are sensed. A, the set of determined programs, is a subset of the set of all possible behaviors. Likewise, the determined Belief set is a subset of “Bel,” the set of all possible Belief rules; the determined Desire set is a subset of “Des,” the set of all possible Desires of the agent; and the determined Intention set is a subset of “Int,” the set of all possible Intentions of the agent.
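The set definitions above can be mirrored in a small data structure. In this sketch the class name AgentSpec, its field names, and the use of string labels for programs, Belief rules, Desires, and Intentions are all hypothetical; the only property it encodes from the text is that each determined set must be drawn from the corresponding set of all possibilities.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class AgentSpec:
    """Hypothetical container for one agent's determined sets."""
    aid: str                     # identifier distinguishing agent entities
    programs: FrozenSet[str]     # A, the determined programs
    beliefs: FrozenSet[str]      # determined Belief set
    desires: FrozenSet[str]      # determined Desire set
    intentions: FrozenSet[str]   # determined Intention set

    def is_consistent(self, all_behaviours, bel, des, int_):
        # Each determined set must be a subset of the corresponding universe.
        return (self.programs <= all_behaviours and self.beliefs <= bel
                and self.desires <= des and self.intentions <= int_)


spec = AgentSpec("agent-1", frozenset({"fit"}), frozenset({"b1"}),
                 frozenset({"d1"}), frozenset({"i1"}))
print(spec.is_consistent({"fit", "stop"}, {"b1", "b2"}, {"d1"}, {"i1"}))  # True
```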

From these definitions, the internal behavior of the agent can be expressed as the following projection:

The See process introduces the input vector (S, D) into the network, where S = x(t) is the input to the input layer of the neural network and D = y(t) is the test data.

This can be normalized as follows:
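A common choice for this kind of normalization, assumed here because the exact formula is not given in the text, is min-max scaling of each variable to [0, 1], which matches the output range of the sigmoid. The helper names below are hypothetical; the inverse mapping shows how network outputs could be returned to physical units, for example when exe produces the final output.

```python
def minmax_normalize(values, lo=0.0, hi=1.0):
    """Scale values linearly into [lo, hi]; return scaled values and (vmin, vmax)."""
    vmin, vmax = min(values), max(values)
    if vmax == vmin:
        raise ValueError("cannot normalize a constant series")
    scale = (hi - lo) / (vmax - vmin)
    return [lo + (x - vmin) * scale for x in values], (vmin, vmax)


def minmax_denormalize(scaled, bounds, lo=0.0, hi=1.0):
    """Map values in [lo, hi] back to the original units using the stored bounds."""
    vmin, vmax = bounds
    return [vmin + (s - lo) * (vmax - vmin) / (hi - lo) for s in scaled]


scaled, bounds = minmax_normalize([2.0, 4.0, 6.0])
print(scaled)                               # [0.0, 0.5, 1.0]
print(minmax_denormalize(scaled, bounds))   # [2.0, 4.0, 6.0]
```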

The bmp process modifies the original Belief rules, based on the new visual state, to form rules that are coordinated with the current environment. In this sense, bmp is an optimization process for the NN structure, in which P is the input to the input layer of the NN, I is the output of the output layer, w denotes the weights between the input and hidden layers, and v denotes the weights between the hidden and output layers. If we assume that this network has an (m − n) × (s − r) structure, the input to the hidden-layer neurons can be expressed as

Here, are the input values of the jth neuron of the dth hidden layer, and

are the linking weights between the input layer and hidden layer. The input and output of the hidden layer are related by the sigmoid function, so the neuronal output of the hidden layer can be expressed as follows:

Here, is the output of the jth neuron of the hidden layer and f[ψ] = 1/[1+exp(−ψ)]. The neuronal input function of the output layer can be expressed as follows:

where vjl are the linking weights between the hidden layer and output layer. The relationship between the input and output of the output layer can be expressed by the following sigmoid function:

where is the lth neuronal output of the output layer.
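The layer-by-layer computation above can be sketched as a plain-Python forward pass. In this sketch each neuron subtracts a threshold from its weighted input sum before applying f[ψ] = 1/[1+exp(−ψ)]; the threshold term, the random initialization range, and the helper names init_layers and forward are assumptions for illustration. The first weight matrix plays the role of w and the last the role of v, and the same loop handles any number of hidden layers, such as the 4-12-16-14-1 structure reported in the application.

```python
import math
import random


def sigmoid(psi):
    # f[psi] = 1 / (1 + exp(-psi)), as defined in the text
    return 1.0 / (1.0 + math.exp(-psi))


def init_layers(sizes, seed=0):
    """Random weights and thresholds for layer sizes such as [4, 12, 16, 14, 1]."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
        thresholds = [rng.uniform(-0.5, 0.5) for _ in range(n_out)]
        layers.append((weights, thresholds))
    return layers


def forward(x, layers):
    """Propagate one input vector through sigmoid hidden and output layers."""
    a = list(x)
    for weights, thresholds in layers:
        # Net input of each neuron: weighted sum of the previous layer minus its threshold.
        a = [sigmoid(sum(w * ai for w, ai in zip(row, a)) - t)
             for row, t in zip(weights, thresholds)]
    return a


net = init_layers([4, 12, 16, 14, 1])
print(forward([0.1, 0.2, 0.3, 0.4], net))  # one output value in (0, 1)
```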

The opt process represents the agent's regulation of its current executable Intentions. This regulation is based on the original Desires and abides by the modified Belief rules in order to coordinate with the current environment. The executable Intention of the NN is to minimize the target function by adjusting the NN weights and modifying the input to the controlled target. In other words, the agent seeks to minimize the average error:

Here, L is the number of training sample pairs, P is the number of output Intention variables, r(k) is the expected output of Intention, and y(k) is the actual value of Intention.
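Because the average-error expression itself does not appear in this text, the sketch below assumes a common form, E = (1/L) Σ_k Σ_p ½ (r_p(k) − y_p(k))², consistent with the symbols L, P, r(k), and y(k) defined above. The ½ factor and averaging over samples only are assumptions; other normalizations (for example, also dividing by P) rescale E without moving its minimum.

```python
def average_error(targets, outputs):
    """Assumed form: E = (1 / L) * sum_k sum_p 0.5 * (r_p(k) - y_p(k)) ** 2.

    targets, outputs: L samples, each a list of P Intention variables.
    """
    if len(targets) != len(outputs) or not targets:
        raise ValueError("need the same, non-zero number of target and output samples")
    total = 0.0
    for r, y in zip(targets, outputs):
        total += sum(0.5 * (rp - yp) ** 2 for rp, yp in zip(r, y))
    return total / len(targets)


print(average_error([[1.0], [0.0]], [[0.0], [0.0]]))  # 0.25
```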

The filter process modifies the original Desire based on the updated executable Intention, in accordance with the Belief rules modified for the current environment. This is a continuously evolving process: the agent repeatedly modifies Intention and Desire in turn in order to reach the optimization goal. The process can be realized as follows: for a given p ∈ P, if

, B is the coordinating Belief rule, and the Broyden–Fletcher–Goldfarb–Shanno (BFGS) optimization method is employed as the core method. The basic idea is to replace the inverse of the Hessian matrix with a symmetric positive-definite approximation that is updated at each iteration, yielding successively better descent directions without computing second-order partial derivatives or inverting matrices. An iterative function for this procedure is as follows:

The Newton-inverse function is , and

is the inverse Hessian matrix. The search direction can be determined using the following formula:

Here, H(n) is the current approximation of the inverse Hessian matrix, g(n) is the current gradient vector, and β(n) approximates H(n) and g(n). Let X(k) be the network parameter vector (the weights and threshold values of the hidden layer, and the numbers of neurons and hidden layers) at the kth iteration, and let ΔX(k) be the search direction of the kth iteration.
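The BFGS step can be illustrated with a textbook version of the method. The sketch below is a minimal illustration under stated assumptions rather than the authors' implementation: it minimizes a generic differentiable function of a continuous parameter vector (a hypothetical toy quadratic stands in for the average error), uses an Armijo backtracking line search (a choice made here; the text does not specify a step-size rule), and applies the standard inverse-Hessian update. It does not handle the discrete parts of the article's parameter vector, such as the numbers of neurons and hidden layers.

```python
import math


def bfgs_minimize(f, grad, x0, tol=1e-8, max_iter=200):
    """Minimize f with BFGS, keeping an approximation H of the inverse Hessian.

    H starts as the identity and is updated from s = x_new - x and
    y = g_new - g, so no second derivatives or matrix inversions are needed.
    """
    n = len(x0)
    x = [float(v) for v in x0]
    H = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    g = grad(x)
    for _ in range(max_iter):
        if math.sqrt(sum(gi * gi for gi in g)) < tol:
            break
        # Search direction d = -H g (a descent direction while H is positive definite).
        d = [-sum(H[i][j] * g[j] for j in range(n)) for i in range(n)]
        # Backtracking line search enforcing the Armijo sufficient-decrease condition.
        fx = f(x)
        slope = sum(gi * di for gi, di in zip(g, d))
        alpha = 1.0
        while alpha > 1e-12 and f([xi + alpha * di for xi, di in zip(x, d)]) > fx + 1e-4 * alpha * slope:
            alpha *= 0.5
        s = [alpha * di for di in d]
        x_new = [xi + si for xi, si in zip(x, s)]
        g_new = grad(x_new)
        y = [gn - go for gn, go in zip(g_new, g)]
        sy = sum(si * yi for si, yi in zip(s, y))
        if sy > 1e-12:  # update only when the curvature s^T y is safely positive
            rho = 1.0 / sy
            Hy = [sum(H[i][j] * y[j] for j in range(n)) for i in range(n)]
            yHy = sum(yi * hyi for yi, hyi in zip(y, Hy))
            # H <- (I - rho s y^T) H (I - rho y s^T) + rho s s^T, expanded elementwise.
            for i in range(n):
                for j in range(n):
                    H[i][j] += (-rho * (Hy[i] * s[j] + s[i] * Hy[j])
                                + (rho * rho * yHy + rho) * s[i] * s[j])
        x, g = x_new, g_new
    return x


# Hypothetical toy objective with its minimum at (1, -2).
def quad(x):
    return (x[0] - 1.0) ** 2 + 10.0 * (x[1] + 2.0) ** 2


def quad_grad(x):
    return [2.0 * (x[0] - 1.0), 20.0 * (x[1] + 2.0)]


print(bfgs_minimize(quad, quad_grad, [0.0, 0.0]))  # close to [1.0, -2.0]
```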

The Intention regulated by the agent in the opt process is then passed to exe, where it is normalized to produce the output Program Set A as follows:

APPLICATION

The lateral-slip characteristics of tires represent a highly complex nonlinear problem and are extremely difficult to model (). Building a real-time multifactor model has long been one of the most important and challenging tasks for vehicle engineers. The relevant factors include the lateral angle, vertical load, inflation pressure, structure and material of the tire, and even the road conditions. In addition, the lateral forces on the tire often exhibit complex nonlinear features. An adaptive tire model based on a radial basis function (RBF) NN is well suited to the tire dataset ().

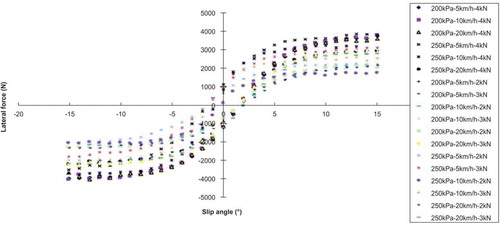

The lateral-slip characteristics of a tire under 18 working conditions () were obtained using the experimental setup shown in . The 558 data points given by this experiment are plotted in .

TABLE 1 Parameters of Testing Conditions

Comparing these results with those of a typical BP algorithm, we find that the decreasing trends of the average error for the BP and agent-based algorithms largely agree, but the agent-based network approximation is more accurate than that of the BP algorithm (). Although the network structure is determined by adaptive selection, our method does not slow convergence; it converges at approximately the 400th generation. The two oscillations in the average error result from increases in the number of hidden layers (two hidden layers are added during training). Because selecting the network structure is time-consuming, even a seemingly insignificant reduction in the number of iterations improves the overall efficiency of the algorithm. When training is complete, the network structure obtained by this algorithm is 4-12-16-14-1, that is, two more hidden layers than the 4-40-1 structure used in the BP algorithm. Nevertheless, both accuracy and convergence speed are improved (). In this manner, the adaptive tire model based on the proposed agent can adaptively determine a suitable network structure, which saves computational resources and may reduce overfitting.
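The two reported structures can be compared by counting trainable parameters. The sketch assumes fully connected layers with one threshold (bias) per non-input neuron, which the text does not state explicitly; the function name count_parameters is hypothetical. The count reflects network size only, not the cost of the structure search or the accuracy of either model.

```python
def count_parameters(sizes):
    """Weights plus one threshold per non-input neuron in a fully connected network."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes[:-1], sizes[1:]))


print(count_parameters([4, 12, 16, 14, 1]))  # 521
print(count_parameters([4, 40, 1]))          # 241
```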

TABLE 2 Average Error of Neural Network

The forecast performances of the BDI-ANN and BP-ANN models were compared (). As shown in the figure, the adaptive BDI-ANN model fits the experimental data better. In the case of BP-ANN, because the basis function displays trigonometric characteristics, the fitting curve cannot match the experimental data, and the approximation of the lateral force is distorted.

shows the experimental results for the NN learning rate as a function of the number of epochs. The Y-axis indicates the number of training runs required for the result to converge. When the learning rate exceeds 0.6, the epoch setting does not affect the number of training runs required. In addition, when the learning rate is below 0.5, the number of training runs for 3000 epochs decreases much faster than for 5000 or 9000 epochs. This means that the designed NN is most sensitive to changes in learning rate when the epoch number is set to 3000.

CONCLUSIONS

In this article, a novel BDI agent algorithm was derived for an ANN. Its ability to learn effectively and forecast accurately was demonstrated through a case study of the lateral forces applied to a tire. For comparison, a standard BP-ANN was also trained; the results indicate that the proposed BDI-agent network outperformed the BP method and produced significantly better forecasts, and that the proposed online learning strategy is suitable for application to NNs. In future work, we will enable information exchange between agents to improve the Belief rules.

REFERENCES

- Alechina, N., M. Dastani, B. Logan, and J. J. Meyer. 2011. Reasoning about plan revision in BDI agent programs. Theoretical Computer Science 412:6115–34. doi:10.1016/j.tcs.2011.05.052.

- Bosse, T., Z. A. Memon, and J. Treur. 2011. A recursive BDI agent model for theory of mind and its applications. Applied Artificial Intelligence 25:1–44. doi:10.1080/08839514.2010.529259.

- Casali, A., L. Godo, and C. Sierra. 2011. A graded BDI agent model to represent and reason about preferences. Artificial Intelligence 175:1468–78. doi:10.1016/j.artint.2010.12.006.

- Castanedo, F., J. García, M. A. Patricio, and J. M. Molina. 2011. A multi-agent architecture based on the BDI model for data fusion in visual sensor networks. Journal of Intelligent & Robotic Systems 62:299–328. doi:10.1007/s10846-010-9448-1.

- Chang, L.-C., P.-A. Chen, and F.-J. Chang. 2012. Reinforced two-step-ahead weight adjustment technique for online training of recurrent neural networks. IEEE Transactions on Neural Networks and Learning Systems 23:1269–78. doi:10.1109/TNNLS.2012.2200695.

- Chen, S., X. Hong, B. L. Luk, and C. J. Harris. 2009. Non-linear system identification using particle swarm optimisation tuned radial basis function models. International Journal of Bio-Inspired Computation 1:246–58. doi:10.1504/IJBIC.2009.024723.

- Cohen, K., S. Siegel, J. Seidel, S. Aradag, and T. McLaughlin. 2012. Nonlinear estimation of transient flow field low dimensional states using artificial neural nets. Expert Systems with Applications 39:1264–72. doi:10.1016/j.eswa.2011.07.135.

- Derras, B., P.-Y. Bard, F. Cotton, and A. Bekkouche. 2012. Adapting the neural network approach to PGA prediction: An example based on the KiK-net data. Bulletin of the Seismological Society of America 102:1446–61. doi:10.1785/0120110088.

- Formato, R. A. 2009. Central force optimisation: A new gradient-like metaheuristic for multidimensional search and optimisation. International Journal of Bio-Inspired Computation 1:217–38. doi:10.1504/IJBIC.2009.024721.

- Kumar, R., D. Sharma, and A. Kumar. 2009. A new hybrid multi-agent-based particle swarm optimisation technique. International Journal of Bio-Inspired Computation 1:259–69. doi:10.1504/IJBIC.2009.024724.

- Li, W., R. Raskin, and M. F. Goodchild. 2012. Semantic similarity measurement based on knowledge mining: An artificial neural net approach. International Journal of Geographical Information Science 26:1415–35. doi:10.1080/13658816.2011.635595.

- Ludermir, T. B., M. C. P. de Souto, and W. R. de Oliveira. 2009. On a hybrid weightless neural system. International Journal of Bio-Inspired Computation 1:93–104. doi:10.1504/IJBIC.2009.022778.

- Lurgi, M., and D. Robertson. 2011. Evolution in ecological agent systems. International Journal of Bio-Inspired Computation 3:331–45. doi:10.1504/IJBIC.2011.043622.

- Mikic Fonte, A., J. C. Burguillo, and M. L. Nistal. 2012. An intelligent tutoring module controlled by BDI agents for an e-learning platform. Expert Systems with Applications 39:7546–54. doi:10.1016/j.eswa.2012.01.161.

- Mozaffari, A., and A. Fathi. 2012. Identifying the behaviour of laser solid freeform fabrication system using aggregated neural network and the great salmon run optimisation algorithm. International Journal of Bio-Inspired Computation 4:330–43. doi:10.1504/IJBIC.2012.049901.

- Mukun, C., and M. Y. Kiang. 2012. BDI agent architecture for multi-strategy selection in automated negotiation. Journal of Universal Computer Science 18:1379–404.

- Nourafza, N., S. Setayeshi, and A. Khadem-Zadeh. 2012. A novel approach to accelerate the convergence speed of a stochastic multi-agent system using recurrent neural nets. Neural Computing and Applications 21:2015–21. doi:10.1007/s00521-011-0624-4.

- Sardina, S., and L. Padgham. 2011. A BDI agent programming language with failure handling, declarative goals, and planning. Autonomous Agents and Multi-Agent Systems 23:18–70. doi:10.1007/s10458-010-9130-9.

- Steunebrink, B. R., M. Dastani, and J. J. Meyer. 2012. A formal model of emotion triggers: An approach for BDI agents. Synthese 185:83–129. doi:10.1007/s11229-011-0004-8.

- Tsai, M.-S., and Y.-T. Pan. 2011. Application of BDI-based intelligent multi-agent systems for distribution system service restoration planning. European Transactions on Electrical Power 21:1783–801. doi:10.1002/etep.v21.5.

- Vasile, M. 2009. A memetic multi-agent collaborative search for space trajectory optimisation. International Journal of Bio-Inspired Computation 1:186–97. doi:10.1504/IJBIC.2009.023814.

- Wang, R.-C., L. Chen, and H.-B. Jiang. 2012. Integrated control of semi-active suspension and electric power steering based on multi-agent system. International Journal of Bio-Inspired Computation 4:73–78. doi:10.1504/IJBIC.2012.047175.

- Wilges, B., G. P. Mateus, S. M. Nassar, and R. C. Bastos. 2012. Integration of BDI agent with fuzzy knowledge logic in a virtual learning environment. IEEE Latin America Transactions 10:1370–76.

- Wu, L., K. Su, A. Sattar, Q. Chen, J. Su, and W. Wu. 2012. A complete first-order temporal BDI logic for forest multi-agent systems. Knowledge-Based Systems 27:343–51. doi:10.1016/j.knosys.2011.11.006.

- Yadav, N., C. Zhou, S. Sardina, and R. Rönnquist. 2010. A BDI agent system for the cow herding domain. Annals of Mathematics and Artificial Intelligence 59:313–33. doi:10.1007/s10472-010-9182-1.

- Zhong, Y., L. Wang, C. Wang, and H. Zhang. 2012. Multi-agent simulated annealing algorithm based on differential evolution algorithm. International Journal of Bio-Inspired Computation 4:217–28. doi:10.1504/IJBIC.2012.048062.