ABSTRACT

In this article, Zernike moments are used for the invariant recognition of Gurumukhi characters. Zernike moments belong to a class of continuous orthogonal moments defined over a unit circle. For a square image, computing Zernike moments therefore requires a square-to-circle mapping that maps the pixel coordinates into the unit circle. Two forms of this mapping are used in the literature. Here, a computational framework is proposed for calculating Zernike moments with both mapping techniques, and their performance is compared through a series of extensive experiments.

Introduction

Optical character recognition (OCR) is one of the most important areas of pattern recognition because of its many practical and commercial applications in banks, post offices, reservation counters, libraries, and publishing houses, among others (Pal, Jayadevan, and Sharma 2012). Every character recognition system takes a character image as input and extracts from it a set of numerical attributes that uniquely represent the character. A character recognition system for a given script is usually designed for specific types of character images, for example, images of a particular font, size, or orientation, and its performance degrades on images outside this scope. The main reason is the lack of knowledge about the source, type, or quality of the character images in the database being processed. There is therefore always a possibility that the character images are degraded, for example, rotated, slanted, or unevenly sized, and recognizing such images is different from, and more difficult than, recognizing nondegraded images. A great deal of research has been conducted worldwide to find an approach robust enough to cope with all such variations, so that the change they cause in the extracted feature values is insignificant and does not hamper recognition performance. One of the techniques proposed is the use of orthogonal moments. The literature shows that orthogonal moment-based techniques are popular because they are invariant to rotation, scaling, and translation and robust to noise.

A number of orthogonal moments have been proposed; among them, Zernike moments (ZMs) (Teague 1980) are the most popular and most frequently used in image-processing applications. ZMs are selected here because they are rotation invariant, i.e., the magnitude of a Zernike moment does not change when the image is rotated; hence, ZM magnitudes can be used as rotation-invariant features for image representation. ZMs can also be made scale and translation invariant by applying simple geometric transformations (Wee and Paramesran 2007). Another important property of ZMs is the ease of image reconstruction: because the Zernike polynomials are orthogonal, the contribution of each moment order to the reconstruction can be separated, and simply adding these individual contributions yields the reconstructed image. Teh and Chin (1988) examined the noise sensitivity and information redundancy of Zernike moments along with five other moments. They concluded that higher order moments are more sensitive to noise, and showed that orthogonal moments, including ZMs, are better than other types of moments in terms of information redundancy and image representation. These properties have led to the use of ZMs in a wide range of applications, such as pattern recognition (Abu-Mostafa and Psaltis 1984), image reconstruction (Pawlak 1992), image segmentation (Ghosal and Mehrotra 1993), edge detection (Ghosal and Mehrotra 1992), watermarking (Xin, Liao, and Pawlak 2004), face recognition (Haddadnia, Ahmadi, and Raahemifar 2003), content-based image retrieval (Kim and Kim 1998), and palm print verification (Pang et al. 2003).

Computing ZMs requires mapping the discrete image function, which is normally square or rectangular, onto Zernike polynomials defined over a unit disk. This image-to-disk mapping can be carried out in two ways. In the first, the center of the image is placed at the center of the unit disk (i.e., the origin), and the pixel coordinates are normalized to the range of the unit disk. This is commonly referred to as inner circle mapping. Its drawback is a loss of information: the corner pixels fall outside the disk and are not included in the computation of moments. In the second form, the entire discrete image is bounded inside the unit disk, i.e., all pixels of the image are mapped inside the unit disk and hence included in the moment computation. This ensures that no pixels are lost, so the entire image information is preserved during moment computation. This is commonly referred to as outer circle mapping.

Many authors have applied ZMs to the recognition of characters in different languages (Broumandnia and Shanbehzadeh 2007; Bailey and Srinath 1996; Kan and Srinath 2002; Khotanzad and Hong 1990; Patil and Sontakke 2007; Ramteke and Mehrotra 2006; Singh, Walia, and Mittal 2011; Trier, Jain, and Taxt 1996). Khotanzad and Hong (1990) were perhaps the first to introduce ZM features to character recognition. They observed that ZM features are very effective for recognizing not only printed characters but also handwritten alphanumeric Roman characters. Bailey and Srinath (1996) performed an exhaustive analysis of the performance of ZMs and pseudo-Zernike moments (PZMs) on handwritten characters using different classifiers. Their experiments on large databases of unconstrained handwritten numerals revealed that the variations due to writing style, shape, stroke, and orientation can be handled successfully by the proposed features. Kan and Srinath (2002) conducted extensive experiments on handwritten characters using ZMs and orthogonal Fourier–Mellin moments (OFMMs) as features and observed that OFMMs provide better recognition performance for small character images. Ramteke and Mehrotra (2006) evaluated various moment-invariant techniques on handwritten Devanagari numerals and observed that ZM features perform far better than the other invariants. Patil and Sontakke (2007) used ZM features with a fuzzy neural network classifier to recognize Devanagari numerals, achieving a very high recognition rate.

In this article, a computational framework for the invariant recognition of handwritten Gurumukhi characters using both inner and outer circle mapping is presented, along with a comparison of the two techniques. Based on the experimental results, the outer circle mapping technique is shown to be superior to the inner circle technique for character recognition. The rest of the article is organized as follows. The next section describes Zernike moments and their invariant properties. “Computational Framework for Computation of Invariant Zernike Moments” presents a framework for computing ZMs using both mapping techniques. “Experimental Study” presents experiments analyzing the performance of ZMs with both mapping techniques on a Gurumukhi character dataset. Concluding remarks are given in the final section.

Zernike Moments

Zernike moments (Teague 1980) are derived from a set of complex polynomials called Zernike polynomials, which form a complete orthogonal set over the interior of the unit circle, i.e., $x^2 + y^2 \le 1$. Let the set of these polynomials be denoted by $\{V_{pq}(x, y)\}$. The Zernike polynomials are defined as products:

$$V_{pq}(x, y) = V_{pq}(r, \theta) = R_{pq}(r)\, e^{jq\theta}, \tag{1}$$

where $j = \sqrt{-1}$; $p$ is a positive integer or zero, i.e., $p \ge 0$; $q$ is a positive or negative integer subject to the constraints $|q| \le p$ and $p - |q|$ even; $r = \sqrt{x^2 + y^2}$ is the length of the vector from the origin to pixel $(x, y)$; $\theta$ is the angle between vector $r$ and the $x$-axis in the counter-clockwise direction, given by $\theta = \tan^{-1}(y/x)$; and $R_{pq}(r)$ is the radial part of the polynomial.

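The radial polynomial is defined as

$$R_{pq}(r) = \sum_{s=0}^{(p-|q|)/2} \frac{(-1)^s\,(p-s)!}{s!\,\left(\frac{p+|q|}{2}-s\right)!\,\left(\frac{p-|q|}{2}-s\right)!}\, r^{p-2s}. \tag{2}$$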
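As a small sketch (our own illustration, not the authors' code), the radial polynomial can be evaluated directly from its explicit factorial sum; the function name `radial_poly` is hypothetical. The explicit sum is adequate for the moderate orders used in this article, although recursive formulations are commonly preferred at high orders for numerical stability.

```python
from math import factorial


def radial_poly(p: int, q: int, r: float) -> float:
    """Radial polynomial R_pq(r) evaluated with the explicit factorial sum."""
    q = abs(q)  # R_pq depends only on |q|
    if p < 0 or q > p or (p - q) % 2 != 0:
        raise ValueError("require p >= 0, |q| <= p and p - |q| even")
    total = 0.0
    for s in range((p - q) // 2 + 1):
        coeff = (-1) ** s * factorial(p - s) / (
            factorial(s) * factorial((p + q) // 2 - s) * factorial((p - q) // 2 - s)
        )
        total += coeff * r ** (p - 2 * s)
    return total


print(radial_poly(2, 0, 0.5))  # R_20(r) = 2r^2 - 1, so -0.5
print(radial_poly(4, 0, 1.0))  # every valid R_pq satisfies R_pq(1) = 1, so 1.0
```

For example, the sum gives $R_{20}(r) = 2r^2 - 1$, $R_{31}(r) = 3r^3 - 2r$, and $R_{40}(r) = 6r^4 - 6r^2 + 1$.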

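The Zernike polynomials satisfy the orthogonality relation

$$\iint_{x^2+y^2 \le 1} V_{pq}^{*}(x, y)\, V_{p'q'}(x, y)\, dx\, dy = \frac{\pi}{p+1}\, \delta_{pp'}\, \delta_{qq'}, \tag{3}$$

where the asterisk (*) in Equation (3) denotes the complex conjugate and $\delta_{ab}$ is the Kronecker delta, given by

$$\delta_{ab} = \begin{cases} 1, & a = b, \\ 0, & \text{otherwise}. \end{cases} \tag{4}$$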
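Equation (3) can be checked numerically. The sketch below (our own illustration; the helper names are hypothetical) approximates the integral in polar coordinates, where $dx\,dy = r\,dr\,d\theta$, using a midpoint rule in $r$ and uniform samples in $\theta$. The grid sizes are arbitrary choices that make the quadrature error small for low orders.

```python
import numpy as np
from math import factorial


def radial_poly(p, q, r):
    """R_pq(r) evaluated elementwise on a NumPy array r."""
    q = abs(q)
    r = np.asarray(r, dtype=float)
    total = np.zeros_like(r)
    for s in range((p - q) // 2 + 1):
        coeff = (-1) ** s * factorial(p - s) / (
            factorial(s) * factorial((p + q) // 2 - s) * factorial((p - q) // 2 - s)
        )
        total = total + coeff * r ** (p - 2 * s)
    return total


def zernike_inner_product(p, q, p2, q2, n_r=2000, n_theta=256):
    """Quadrature approximation of the left side of Equation (3)."""
    dr = 1.0 / n_r
    dtheta = 2.0 * np.pi / n_theta
    r = (np.arange(n_r) + 0.5) * dr            # midpoints in the radial direction
    theta = np.arange(n_theta) * dtheta
    rr, tt = np.meshgrid(r, theta, indexing="ij")
    v1 = radial_poly(p, q, rr) * np.exp(1j * q * tt)
    v2 = radial_poly(p2, q2, rr) * np.exp(1j * q2 * tt)
    # the extra factor rr is the polar area element r dr dtheta
    return np.sum(np.conj(v1) * v2 * rr) * dr * dtheta


print(zernike_inner_product(2, 0, 2, 0), np.pi / 3)  # both approximately pi/(p+1)
print(abs(zernike_inner_product(2, 0, 4, 0)))         # approximately 0
```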

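ZMs are the features extracted by projecting the input image onto the complex orthogonal Zernike polynomials (basis functions). The Zernike moment of order $p$ with repetition $q$ for a continuous image function $f(x, y)$ is given by

$$Z_{pq} = \frac{p+1}{\pi} \iint_{x^2+y^2 \le 1} f(x, y)\, V_{pq}^{*}(x, y)\, dx\, dy. \tag{5}$$

For a digital image, the integrals are replaced by summations to obtain

$$Z_{pq} = \frac{p+1}{\pi} \sum_{x} \sum_{y} f(x, y)\, V_{pq}^{*}(x, y), \qquad x^2 + y^2 \le 1. \tag{6}$$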

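To compute the Zernike moments of a given image, the center of the image is taken as the origin, and the pixel coordinates are mapped to the range of the unit circle, i.e., $x^2 + y^2 \le 1$. Also note that $V_{pq}^{*}(x, y) = V_{p,-q}(x, y)$; hence, for a real-valued image,

$$Z_{p,-q} = Z_{pq}^{*}, \tag{7}$$

so one need only consider the ZMs $Z_{pq}$ with $q \ge 0$.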

Suppose $\theta_0$ is the rotation angle, and $Z_{pq}$ and $Z_{pq}^{r}$ represent the ZMs of the original and rotated image, respectively. We have

$$Z_{pq} = |Z_{pq}|\, e^{j\psi_{pq}}, \tag{8}$$

$$Z_{pq}^{r} = Z_{pq}\, e^{-jq\theta_0} = |Z_{pq}|\, e^{j(\psi_{pq} - q\theta_0)}, \tag{9}$$

where $|Z_{pq}|$ and $\psi_{pq}$ represent the magnitude and phase of the Zernike moment, respectively. Equations (8) and (9) show that the magnitude of the ZMs remains unchanged under image rotation, whereas the phase shifts by $-q\theta_0$ (Khotanzad and Hong 1990); the rotation invariance of ZM magnitudes is discussed in more detail in a later section. For this reason, many applications use ZM magnitudes alone as rotation-invariant image features.
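Equations (8) and (9) can be checked with a minimal NumPy sketch (our own illustration, not the authors' code). It uses a discrete ZM in the form of Equation (14), presented later, with the helper names `radial_poly` and `zernike_moment` as hypothetical choices. A 90° rotation via `np.rot90` is used because it maps the pixel grid exactly onto itself; with the convention chosen in the code (array axis 0 along $x$, axis 1 along $y$), this rotation corresponds to $\theta_0 = \pi/2$.

```python
import numpy as np
from math import factorial


def radial_poly(p, q, r):
    q = abs(q)
    total = np.zeros_like(r, dtype=float)
    for s in range((p - q) // 2 + 1):
        coeff = (-1) ** s * factorial(p - s) / (
            factorial(s) * factorial((p + q) // 2 - s) * factorial((p - q) // 2 - s)
        )
        total = total + coeff * r ** (p - 2 * s)
    return total


def zernike_moment(img, p, q, D):
    """Discrete ZM of an N x N image; D = N is inner, D = N*sqrt(2) outer mapping."""
    N = img.shape[0]
    c = (2 * np.arange(N) + 1 - N) / D            # pixel-center coordinates
    x, y = np.meshgrid(c, c, indexing="ij")       # axis 0 -> x, axis 1 -> y
    r, theta = np.hypot(x, y), np.arctan2(y, x)
    inside = r <= 1.0
    v_conj = radial_poly(p, q, r) * np.exp(-1j * q * theta)
    return 4 * (p + 1) / (np.pi * D**2) * np.sum(img[inside] * v_conj[inside])


rng = np.random.default_rng(0)
img = rng.random((32, 32))        # hypothetical test image
rotated = np.rot90(img)           # exact 90-degree rotation of the pixel grid
for p, q in [(2, 2), (3, 1), (4, 2), (5, 3)]:
    z, zr = zernike_moment(img, p, q, 32), zernike_moment(rotated, p, q, 32)
    print(p, q, abs(z), abs(zr))  # magnitudes agree; zr equals z * exp(-1j*q*pi/2)
```

For arbitrary angles, rotating a digital image requires interpolation and resampling, so the magnitudes then agree only approximately.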

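With knowledge of all ZMs of an image $f(x, y)$ up to a given order $p_{\max}$, one can reconstruct an image $\hat{f}(x, y)$ given by

$$\hat{f}(x, y) = \sum_{p=0}^{p_{\max}} \sum_{q} Z_{pq}\, V_{pq}(x, y), \tag{10}$$

where the inner sum runs over all $q$ with $|q| \le p$ and $p - |q|$ even.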
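The reconstruction formula above can be sketched as follows (our own illustration; `reconstruct` and the other helpers are hypothetical names). For a real image, the term with repetition $-q$ is the complex conjugate of the term with $+q$, so each pair contributes twice the real part. The sketch evaluates the sum at the pixel centers under inner circle mapping ($D = N$) and sets pixels outside the unit disk to zero.

```python
import numpy as np
from math import factorial


def radial_poly(p, q, r):
    q = abs(q)
    total = np.zeros_like(r, dtype=float)
    for s in range((p - q) // 2 + 1):
        coeff = (-1) ** s * factorial(p - s) / (
            factorial(s) * factorial((p + q) // 2 - s) * factorial((p - q) // 2 - s)
        )
        total = total + coeff * r ** (p - 2 * s)
    return total


def grid(N, D):
    c = (2 * np.arange(N) + 1 - N) / D
    x, y = np.meshgrid(c, c, indexing="ij")
    return np.hypot(x, y), np.arctan2(y, x)


def zernike_moment(img, p, q, D):
    r, theta = grid(img.shape[0], D)
    inside = r <= 1.0
    v_conj = radial_poly(p, q, r) * np.exp(-1j * q * theta)
    return 4 * (p + 1) / (np.pi * D**2) * np.sum(img[inside] * v_conj[inside])


def reconstruct(img, p_max, D):
    """Approximate the image from its ZMs up to order p_max."""
    N = img.shape[0]
    r, theta = grid(N, D)
    rec = np.zeros((N, N))
    for p in range(p_max + 1):
        for q in range(p % 2, p + 1, 2):          # q >= 0 with p - q even
            term = zernike_moment(img, p, q, D) * radial_poly(p, q, r) * np.exp(1j * q * theta)
            # for a real image, Z_{p,-q} V_{p,-q} = conj(Z_pq V_pq),
            # so the pair (q, -q) with q > 0 contributes 2 * Re(Z_pq V_pq)
            rec += term.real if q == 0 else 2.0 * term.real
    rec[r > 1.0] = 0.0                             # undefined outside the unit disk
    return rec
```

As a usage example, reconstructing a smooth radial blob at orders 2 and 10 should show the error inside the disk shrinking as more orders are added.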

Computational Framework for Computation of Invariant Zernike Moments

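For a digital image $f(i, k)$ of size $N \times N$, the ZMs of order $p$ and repetition $q$ computed over a unit disk are given by

$$Z_{pq} = \frac{p+1}{\pi} \sum_{i=0}^{N-1} \sum_{k=0}^{N-1} f(i, k)\, V_{pq}^{*}(x_i, y_k)\, \Delta x_i\, \Delta y_k, \qquad x_i^2 + y_k^2 \le 1. \tag{11}$$

The mapped coordinates $(x_i, y_k)$ of the center of pixel $(i, k)$, which occupies the area $\Delta x_i \times \Delta y_k$, are given by

$$x_i = \frac{2i + 1 - N}{D}, \qquad y_k = \frac{2k + 1 - N}{D}, \qquad i, k = 0, 1, \ldots, N-1, \tag{12}$$

where

$$\Delta x_i = \Delta y_k = \frac{2}{D}. \tag{13}$$

Rewriting Equation (11) using Equations (12) and (13), we get

$$Z_{pq} = \frac{4(p+1)}{\pi D^2} \sum_{i=0}^{N-1} \sum_{k=0}^{N-1} f(i, k)\, V_{pq}^{*}(x_i, y_k), \qquad x_i^2 + y_k^2 \le 1. \tag{14}$$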

The value of D depends on the technique used to map the square image onto the circular unit disk. The two mapping approaches are described in the following subsections.
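A minimal NumPy sketch of Equations (12) to (14), with D as a parameter, is given below; it is our own illustration rather than the authors' implementation, and the names `radial_poly` and `zernike_moment` are hypothetical. The pixel index `i` runs along array axis 0 and is mapped to $x_i$, and `k` runs along axis 1 and is mapped to $y_k$. Pixels whose centers fall outside the unit disk are skipped, which is exactly the constraint in Equation (14).

```python
import numpy as np
from math import factorial


def radial_poly(p, q, r):
    """R_pq(r) evaluated elementwise on a NumPy array r."""
    q = abs(q)
    total = np.zeros_like(r, dtype=float)
    for s in range((p - q) // 2 + 1):
        coeff = (-1) ** s * factorial(p - s) / (
            factorial(s) * factorial((p + q) // 2 - s) * factorial((p - q) // 2 - s)
        )
        total = total + coeff * r ** (p - 2 * s)
    return total


def zernike_moment(img, p, q, D):
    """Z_pq of an N x N image following Equation (14), for mapping diameter D."""
    N = img.shape[0]
    c = (2 * np.arange(N) + 1 - N) / D              # Equation (12)
    x, y = np.meshgrid(c, c, indexing="ij")         # x_i along axis 0, y_k along axis 1
    r, theta = np.hypot(x, y), np.arctan2(y, x)
    inside = r <= 1.0                               # keep pixels with x_i^2 + y_k^2 <= 1
    v_conj = radial_poly(p, q, r) * np.exp(-1j * q * theta)
    return 4 * (p + 1) / (np.pi * D**2) * np.sum(img[inside] * v_conj[inside])


rng = np.random.default_rng(1)
f = rng.random((32, 32))                            # hypothetical test image
N = f.shape[0]
z_inner = zernike_moment(f, 4, 2, D=N)                 # inner circle mapping
z_outer = zernike_moment(f, 4, 2, D=N * np.sqrt(2))    # outer circle mapping
print(abs(z_inner), abs(z_outer))
```

A quick sanity check: for a constant image $f = 1$, Equation (14) gives $Z_{00} = 2/\pi$ under outer mapping (the unit-coordinate area of the square is 2), and approximately 1 under inner mapping (the area of the disk divided by $\pi$).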

Inner Circle Mapping Approach

In this approach, the square image is mapped onto the circular disk as shown in Figure 1, such that the center of the image is transformed to the origin and the pixel coordinates are normalized within the range of the unit disk.

The drawback of this mapping is a loss of information in the form of corner pixels that are not included in the computation of moments. This is caused by the constraint $x_i^2 + y_k^2 \le 1$ imposed in the calculation of ZMs: only pixels whose centers fall inside the unit circle take part in the moment calculation, and pixels whose centers do not satisfy this condition are left out. Thus, a perfect mapping of the square domain onto a circular domain is not achieved, and the circular boundary of the unit circle is approximated by a zig-zag pattern, as represented in Figure 2. For this mapping, the value of D is N, i.e., the diameter of the inscribed circle is equal to the side of the square image.

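Therefore, for the inner circle mapping approach, Equation (14) can be written as

$$Z_{pq} = \frac{4(p+1)}{\pi N^2} \sum_{i=0}^{N-1} \sum_{k=0}^{N-1} f(i, k)\, V_{pq}^{*}(x_i, y_k), \qquad x_i^2 + y_k^2 \le 1, \tag{15}$$

where

$$x_i = \frac{2i + 1 - N}{N}, \qquad y_k = \frac{2k + 1 - N}{N}. \tag{16}$$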

Outer Circle Mapping Approach

In this mapping, the entire square image is mapped inside the unit disk, as shown in Figure 3, ensuring that no pixels are lost during the computation of moments and that all information in the square image is preserved. For this mapping, the value of D is $N\sqrt{2}$, i.e., the diameter of the circumscribing circle is equal to the diagonal of the square image.

Figure 3. Outer circle mapping approach in which an image is mapped entirely within the unit circular disk.

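Therefore, for the outer circle mapping approach, Equation (14) can be written as

$$Z_{pq} = \frac{2(p+1)}{\pi N^2} \sum_{i=0}^{N-1} \sum_{k=0}^{N-1} f(i, k)\, V_{pq}^{*}(x_i, y_k), \tag{17}$$

where

$$x_i = \frac{2i + 1 - N}{N\sqrt{2}}, \qquad y_k = \frac{2k + 1 - N}{N\sqrt{2}}. \tag{18}$$

Here, every pixel satisfies $x_i^2 + y_k^2 \le 1$, so no pixel is excluded from the computation.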
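The difference in pixel coverage between the two mappings can be counted directly. In the sketch below (our own illustration; `pixels_used` is a hypothetical name), pixel centers follow Equation (12) with $D = N$ for inner and $D = N\sqrt{2}$ for outer mapping. Under inner mapping, roughly $1 - \pi/4 \approx 21\%$ of the pixels fall outside the disk for large N; under outer mapping, none do.

```python
import numpy as np


def pixels_used(N, D):
    """Number of pixels whose centers satisfy x_i^2 + y_k^2 <= 1."""
    c = (2 * np.arange(N) + 1 - N) / D
    x, y = np.meshgrid(c, c, indexing="ij")
    return int(np.count_nonzero(x**2 + y**2 <= 1.0))


for N in (28, 32, 64):
    inner = pixels_used(N, N)                   # D = N
    outer = pixels_used(N, N * np.sqrt(2))      # D = N * sqrt(2)
    print(f"N={N}: inner uses {inner}/{N * N} pixels, outer uses {outer}/{N * N}")
```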

Because of this square-to-circle mapping (whether inner or outer circle mapping), the magnitudes of ZMs become scale invariant. Regardless of the size of the image whose ZMs are being calculated, its pixel coordinates are mapped within the range of the unit disk, i.e., $x_i^2 + y_k^2 \le 1$, and the factor $4/(\pi D^2)$ in Equation (14) normalizes the sum by the pixel area. So, whether an image is of size $N_1 \times N_1$ or $N_2 \times N_2$, all its pixels are normalized within the unit circle, and the ZMs become scale invariant, up to discretization effects.
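The scale argument can be illustrated with a sketch (our own illustration, with hypothetical helper names and a hypothetical test shape). A 64 × 64 binary rectangle is enlarged to 128 × 128 by 2× nearest-neighbour replication (`np.kron`), which is an assumed scaling method; interpolating resizers will introduce additional differences. Under outer circle mapping the low-order ZM magnitudes of both images nearly coincide, and $|Z_{00}|$ is identical because both sums measure the same normalized area.

```python
import numpy as np
from math import factorial


def radial_poly(p, q, r):
    q = abs(q)
    total = np.zeros_like(r, dtype=float)
    for s in range((p - q) // 2 + 1):
        coeff = (-1) ** s * factorial(p - s) / (
            factorial(s) * factorial((p + q) // 2 - s) * factorial((p - q) // 2 - s)
        )
        total = total + coeff * r ** (p - 2 * s)
    return total


def zernike_moment(img, p, q, D):
    N = img.shape[0]
    c = (2 * np.arange(N) + 1 - N) / D
    x, y = np.meshgrid(c, c, indexing="ij")
    r, theta = np.hypot(x, y), np.arctan2(y, x)
    inside = r <= 1.0
    v_conj = radial_poly(p, q, r) * np.exp(-1j * q * theta)
    return 4 * (p + 1) / (np.pi * D**2) * np.sum(img[inside] * v_conj[inside])


def zm_magnitudes(img, max_order):
    """|Z_pq| for 0 <= q <= p <= max_order under outer circle mapping."""
    D = img.shape[0] * np.sqrt(2)
    return np.array([abs(zernike_moment(img, p, q, D))
                     for p in range(max_order + 1)
                     for q in range(p % 2, p + 1, 2)])


img = np.zeros((64, 64))
img[10:40, 20:50] = 1.0                        # hypothetical off-center rectangle
big = np.kron(img, np.ones((2, 2)))            # 2x nearest-neighbour enlargement
m_small, m_big = zm_magnitudes(img, 4), zm_magnitudes(big, 4)
print(np.max(np.abs(m_small - m_big)))         # small compared with the magnitudes
```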

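The magnitudes of ZMs are rotation invariant as well. Assume that the input image $f(r, \theta)$ is rotated by an angle $\alpha$, and let the rotated image be represented by $f^{r}(r, \theta)$. Rotation moves the point $(r, \theta)$ to $(r', \theta')$ with

$$r' = r, \qquad \theta' = \theta + \alpha, \tag{19}$$

so the relationship between the original and the rotated image in polar coordinates is

$$f^{r}(r, \theta) = f(r, \theta - \alpha). \tag{20}$$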

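Using Equation (6), the ZMs of the image $f(r, \theta)$ and of the rotated image $f^{r}(r, \theta)$ can be written in polar form as

$$Z_{pq} = \frac{p+1}{\pi} \sum_{r} \sum_{\theta} f(r, \theta)\, R_{pq}(r)\, e^{-jq\theta}, \qquad r \le 1, \tag{21}$$

$$Z_{pq}^{r} = \frac{p+1}{\pi} \sum_{r} \sum_{\theta} f^{r}(r, \theta)\, R_{pq}(r)\, e^{-jq\theta}, \qquad r \le 1. \tag{22}$$

Using Equation (20) and substituting $\theta' = \theta - \alpha$, we can rewrite Equation (22) as

$$Z_{pq}^{r} = \frac{p+1}{\pi} \sum_{r} \sum_{\theta'} f(r, \theta')\, R_{pq}(r)\, e^{-jq(\theta' + \alpha)} = Z_{pq}\, e^{-jq\alpha}, \tag{23}$$

$$|Z_{pq}^{r}| = |Z_{pq}|. \tag{24}$$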

Equations (23) and (24) show that, on rotation, ZMs merely acquire a phase shift, while their magnitudes remain unchanged. Hence, the magnitudes can be used as image-representing features. The following section provides experimental results that compare the inner and outer circle mapping techniques and demonstrate the superiority of outer circle mapping.

Experimental Study

To evaluate the effect of the mapping techniques on the performance of ZMs, several experiments are performed on a Gurumukhi character dataset. Two forms of this dataset are used: (1) the complete dataset, comprising 7,000 presegmented images of 35 different Gurumukhi characters (200 images per character), and (2) a subset of the complete dataset with only 50 images of each character, i.e., 1,750 images in total. We refer to these datasets as GC-f and GC-s, respectively. Sample images of different Gurumukhi characters from these datasets are shown in Figure 4. Both GC-f and GC-s consist of binary images of Gurumukhi characters of size 32 × 32.

In the first stage, the GC-s dataset is used to test the effectiveness of both mapping schemes with multiple similarity measures. In the second stage, the larger GC-f dataset is used to further evaluate ZMs computed with each mapping technique on different character sizes (obtained by resizing the original GC-f images), using the best similarity measure found in the first stage. At every stage, experiments are performed on both binary and grayscale images; the grayscale images are obtained by applying a 2 × 2 mean filter 10 times (Shi et al. 2002). In the third and final stage, the performance of ZMs is tested with a more powerful classifier, the support vector machine (SVM), on both binary and grayscale images.
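The binary-to-grayscale conversion can be sketched as follows. Border handling and window placement are not specified here, so both are assumptions of this sketch (our own illustration, not necessarily the procedure of Shi et al. 2002): borders are handled by edge replication, and the 2 × 2 window alternates between a forward and a backward anchor on successive passes, so that ten passes of an even-sized window do not shift the character by several pixels. The function names are hypothetical.

```python
import numpy as np


def mean2x2(img, forward=True):
    """One pass of a 2 x 2 mean filter with edge replication at the border."""
    if forward:   # window covers (i, j), (i+1, j), (i, j+1), (i+1, j+1)
        p = np.pad(img, ((0, 1), (0, 1)), mode="edge")
    else:         # window covers (i-1, j-1), (i, j-1), (i-1, j), (i, j)
        p = np.pad(img, ((1, 0), (1, 0)), mode="edge")
    return (p[:-1, :-1] + p[1:, :-1] + p[:-1, 1:] + p[1:, 1:]) / 4.0


def binary_to_grayscale(img, passes=10):
    """Apply the 2 x 2 mean filter `passes` times, alternating the window anchor."""
    out = img.astype(float)
    for k in range(passes):
        out = mean2x2(out, forward=(k % 2 == 0))
    return out


binary = np.zeros((32, 32))
binary[8:24, 14:18] = 1.0                 # hypothetical vertical stroke
gray = binary_to_grayscale(binary)
print(gray.min(), gray.max(), len(np.unique(gray)))
```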

Effect of Mapping Approaches

We began by testing ZMs computed with both mapping approaches on the GC-s dataset (1,750 images of 35 characters, 50 samples each), using five different similarity measures: Euclidean distance, Bray–Curtis, Squared Chord, and Chi-Square (for these, see Kokare, Chatterji, and Biswas 2003), and Extended Canberra (Liu and Yang 2013).

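Let x and y be the n-dimensional feature vectors of a database image and a query image, respectively. The similarity measures are then defined as follows.

The Euclidean distance:

$$d(x, y) = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2} \tag{25}$$

The Bray–Curtis distance:

$$d(x, y) = \frac{\sum_{i=1}^{n} |x_i - y_i|}{\sum_{i=1}^{n} (x_i + y_i)} \tag{26}$$

The Squared Chord distance:

$$d(x, y) = \sum_{i=1}^{n} \left(\sqrt{x_i} - \sqrt{y_i}\right)^2 \tag{27}$$

The Chi-Square distance:

$$d(x, y) = \sum_{i=1}^{n} \frac{(x_i - y_i)^2}{x_i + y_i} \tag{28}$$

The Extended Canberra distance:

$$d(x, y) = \sum_{i=1}^{n} \frac{|x_i - y_i|}{|x_i + u_x| + |y_i + u_y|}, \qquad u_x = \frac{1}{n}\sum_{i=1}^{n} x_i, \quad u_y = \frac{1}{n}\sum_{i=1}^{n} y_i \tag{29}$$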
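The five measures can be sketched in NumPy as below, together with a 1-nearest-neighbour labelling helper (our own illustration; all function names are hypothetical). The features are assumed to be nonnegative ZM magnitudes, which keeps the square roots in the Squared Chord distance real. The Chi-Square form with $x_i + y_i$ in the denominator is one of several common variants; variants that differ by a constant factor rank neighbours identically. Skipping Chi-Square terms where $x_i + y_i = 0$ is an added assumption.

```python
import numpy as np


def euclidean(x, y):
    return float(np.sqrt(np.sum((x - y) ** 2)))


def bray_curtis(x, y):
    return float(np.sum(np.abs(x - y)) / np.sum(x + y))


def squared_chord(x, y):
    # assumes nonnegative features such as ZM magnitudes
    return float(np.sum((np.sqrt(x) - np.sqrt(y)) ** 2))


def chi_square(x, y):
    s = x + y
    nz = s > 0                       # assumption: skip terms with x_i + y_i = 0
    return float(np.sum((x[nz] - y[nz]) ** 2 / s[nz]))


def extended_canberra(x, y):
    ux, uy = np.mean(x), np.mean(y)
    return float(np.sum(np.abs(x - y) / (np.abs(x + ux) + np.abs(y + uy))))


def nearest_label(query, database, labels, dist=euclidean):
    """Label of the database vector closest to `query` (1-nearest neighbour)."""
    distances = [dist(row, query) for row in database]
    return labels[int(np.argmin(distances))]


db = np.array([[0.9, 0.1, 0.3], [0.2, 0.8, 0.5]])   # hypothetical feature vectors
labels = ["class_0", "class_1"]
query = np.array([0.85, 0.15, 0.35])
print(nearest_label(query, db, labels))              # class_0
```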

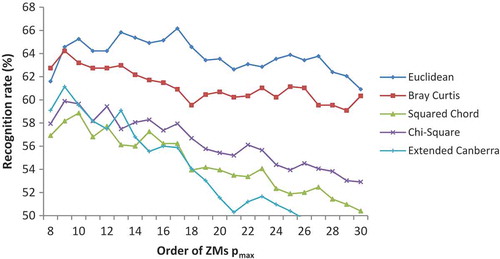

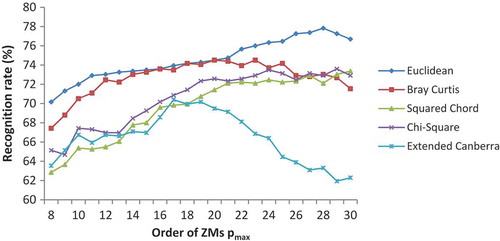

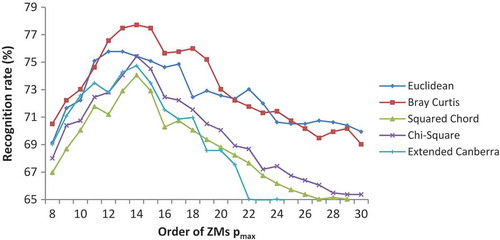

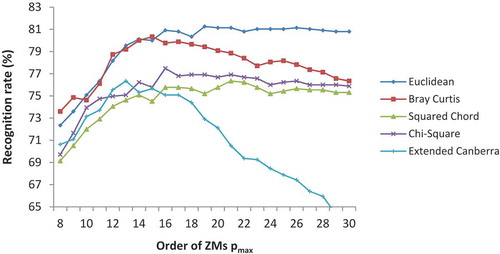

ZMs up to order 30 are calculated for both binary and grayscale images of the GC-s dataset, using each mapping approach separately, and recognition rates are obtained at each order with all five similarity measures. Table 1 shows the recognition rates obtained on the GC-s dataset using both mapping techniques on binary and grayscale images.

Table 1. Recognition rates (%) obtained on GC-s dataset using both mapping techniques.

Table 1 shows that outer circle mapping increases the recognition rate over inner circle mapping by about 9%–12% for binary images and about 3%–6% for grayscale images. Euclidean distance also gives the best recognition rate in three of the four cases, so we use it as our base similarity measure in further experiments.

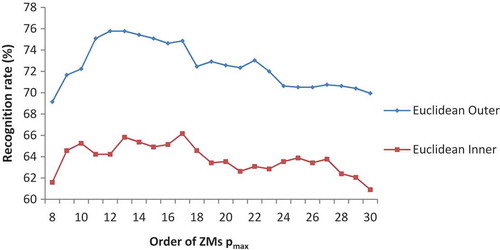

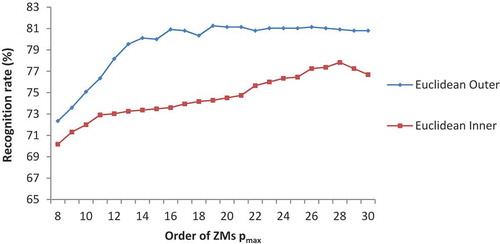

Figures 5–8 show the recognition rate graphs for these four cases on the GC-s dataset, obtained with the different similarity measures at different orders of ZMs.

Figure 5. Recognition rate (%) versus moment order for binary images on GC-s dataset, using inner circle mapping approach.

Figure 6. Recognition rate (%) versus moment order for grayscale images on GC-s dataset, using inner circle mapping approach.

Figure 7. Recognition rate (%) versus moment order for binary images on GC-s dataset, using outer circle mapping approach.

Figure 8. Recognition rate (%) versus moment order for grayscale images on GC-s dataset, using outer circle mapping approach.

Figures 9 and 10 compare the performance of both mapping techniques for binary and grayscale images, using Euclidean distance as the base similarity measure.

Figure 9. Performance comparison of inner circle mapping versus outer circle mapping approach for binary images on GC-s dataset.

Figure 10. Performance comparison of inner circle versus outer circle mapping approach for grayscale images on GC-s dataset.

We now extend the experiments to the larger GC-f dataset of 7,000 Gurumukhi character images. In this set of experiments, we again compare ZMs computed with both mapping techniques, but on images of different sizes. Only Euclidean distance is used as the similarity measure, because it gave the best results in three of the four cases in the earlier experiments. The original images are of size 32 × 32; to perform experiments at other sizes, we resized them to 28 × 28, 36 × 36, 48 × 48, and 64 × 64. This gives five datasets: four resized variants of GC-f and the original GC-f. Table 2 shows the recognition rates obtained on these five datasets using both mapping techniques.

Table 2. Recognition rates (%) obtained at different image sizes using both inner circle and outer circle mapping schemes.

Table 2 shows that the outer circle mapping approach outperforms inner circle mapping at every image size. The recognition rates of the two techniques differ by about 9%–11% on binary images and about 5%–6% on grayscale images, and in an application such as character recognition, a difference of this size would seriously affect the performance of the overall system.

In the final set of experiments, we tested the computed ZMs with a powerful classifier, the SVM. An SVM classifier is trained on a set of training data to build a model, which is then used to classify the test data. A multiclass classification problem is handled by decomposing it into multiple binary classification problems and combining the resulting binary SVM classifiers. Our problem is multiclass: the classifier has to distinguish between 35 classes of Gurumukhi characters. We used the multiclass SVM classifier provided by LIBSVM (A Library for Support Vector Machines; Chang and Lin 2014). A practical guide to SVMs and their implementation is also available (Chang and Lin 2015; Hsu, Chang, and Lin 2015). Different kernels are used in the SVM classifier, depending on how the samples can be separated into classes with an appropriate margin. Commonly used kernels are the linear kernel, the polynomial kernel, the Gaussian radial basis function (RBF) kernel, and the sigmoid (hyperbolic tangent) kernel. We selected the RBF kernel for our experiments.
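For reference, the four kernel types can be written in NumPy using the parameterization documented for LIBSVM, with $\gamma$ (`gamma`), $r$ (`coef0`), and $d$ (`degree`). This is a sketch of the kernel functions only, not of the training procedure; the C and $\gamma$ values used in the experiments are not stated in this section. In Python, scikit-learn's `SVC` class is built on LIBSVM and exposes the same kernel parameters, and the practical guide cited above recommends scaling the features and selecting C and $\gamma$ by cross-validated grid search.

```python
import numpy as np


def linear_kernel(u, v):
    return float(np.dot(u, v))                                   # u'v


def polynomial_kernel(u, v, gamma, coef0, degree):
    return float((gamma * np.dot(u, v) + coef0) ** degree)       # (gamma u'v + r)^d


def rbf_kernel(u, v, gamma):
    return float(np.exp(-gamma * np.sum((u - v) ** 2)))          # exp(-gamma |u - v|^2)


def sigmoid_kernel(u, v, gamma, coef0):
    return float(np.tanh(gamma * np.dot(u, v) + coef0))          # tanh(gamma u'v + r)


u, v = np.array([1.0, 2.0]), np.array([3.0, 4.0])
print(linear_kernel(u, v), rbf_kernel(u, v, gamma=0.5))
```

The RBF kernel equals 1 for identical feature vectors and decays toward 0 as the squared Euclidean distance between them grows, with $\gamma$ controlling how quickly.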

The ZMs computed on the GC-f dataset with image size 32 × 32 are classified with the SVM classifier. Table 3 shows the recognition rates obtained by SVM using both mapping techniques and compares them with those obtained earlier with Euclidean distance on binary and grayscale images.

Table 3. Comparison of recognition rates (%) obtained by Euclidean distance and SVM for binary and grayscale images, using both mapping techniques.

Table 3 shows that using SVM as the classifier increases the recognition rate by around 5%–7% over Euclidean distance.

Across all experiments, ZMs computed with the outer circle mapping approach significantly outperform those computed with inner circle mapping, by approximately 5%–10% in recognition rate, irrespective of the dataset or similarity measure used.

Conclusions

In this article, the mapping techniques used to compute Zernike moments are described and compared in terms of the performance of the resulting ZMs in recognizing Gurumukhi characters. Two mapping schemes are illustrated: outer circle and inner circle mapping. A series of rigorous experiments on a Gurumukhi character dataset, using multiple similarity measures (Euclidean distance, Chi-Square distance, Squared Chord distance, etc.) as well as an SVM classifier, showed that the outer circle mapping approach significantly outperforms the inner circle approach, by a margin of 5%–10% in recognition rate on both binary and grayscale images. Robustness to image rotation and scaling is the main strength of Zernike moments, which makes them strong candidates for wider use as image descriptors in place of existing descriptors that are invariant only to rotation, only to scaling, or to neither.

Funding

The authors are grateful to the University Grants Commission (UGC), New Delhi, India, for providing financial grants for the Major Research Project entitled “Development of Efficient Techniques for Feature Extraction and Classification for Invariant Pattern Matching and Computer Vision Applications,” vide its File No. 43-275/2014(SR). The financial grant provided by the UGC, New Delhi, India, to one of the authors (Ashutosh Aggarwal) under the SAP-III programme is also gratefully acknowledged.


References

- Abu-Mostafa, Y. S., and D. Psaltis. 1984. Recognitive aspects of moment invariants. IEEE Transactions on Pattern Analysis and Machine Intelligence 6:698–706. doi:10.1109/TPAMI.1984.4767594.

- Bailey, R. R., and M. Srinath. 1996. Orthogonal moment features for use with parametric and non parametric classifiers. IEEE Transactions on Pattern Analysis and Machine Intelligence 18:389–99. doi:10.1109/34.491620.

- Broumandnia, A., and J. Shanbehzadeh. 2007. Fast Zernike wavelet moments for Farsi character recognition. Image and Vision Computing 25:717–26. doi:10.1016/j.imavis.2006.05.014.

- Chang, C. C., and C. J. Lin. 2014. LIBSVM: A library for support vector machines. Software available at http://www.csie.ntu.edu.tw/~cjlin/libsvm (accessed December 5, 2014).

- Chang, C. C., and C. J. Lin. 2015. LIBSVM: A library for support vector machines. http://www.csie.ntu.edu.tw/~cjlin/papers/libsvm.pdf (accessed January 20, 2015).

- Ghosal, S., and R. Mehrotra. 1992. Edge detection using orthogonal moment based operators. In Proceedings of the 11th IAPR International Conference on Pattern Recognition, Vol. III: Image, Speech and Signal Analysis, 413–16. IAPR.

- Ghosal, S., and R. Mehrotra. 1993. Segmentation of range images: An orthogonal moment-based integrated approach. IEEE Transactions on Robotics and Automation 9:385–99. doi:10.1109/70.246050.

- Haddadnia, J., M. Ahmadi, and K. Raahemifar. 2003. An effective feature extraction method for face recognition. In Proceedings of the IEEE International Conference on Image Processing, Barcelona, Spain, 3:917–20.

- Hsu, C. W., C. C. Chang, and C. J. Lin. 2015. A practical guide to support vector classification. http://www.csie.ntu.edu.tw/~cjlin/papers/guide/guide.pdf (accessed January 20, 2015).

- Kan, C., and M. D. Srinath. 2002. Invariant character recognition with Zernike and orthogonal Fourier-Mellin moments. Pattern Recognition 35:143–54. doi:10.1016/S0031-3203(00)00179-5.

- Khotanzad, A., and Y. H. Hong. 1990. Invariant image recognition by Zernike moments. IEEE Transactions on Pattern Analysis and Machine Intelligence 12:489–97. doi:10.1109/34.55109.

- Kim, Y. S., and W. Y. Kim. 1998. Content-based trademark retrieval system using a visually salient feature. Image and Vision Computing 16:931–39. doi:10.1016/S0262-8856(98)00060-2.

- Kokare, M., B. N. Chatterji, and P. K. Biswas. 2003. Comparison of similarity metrics for texture image retrieval. In Proceedings of the Conference on Convergent Technologies for the Asia-Pacific Region (TENCON), 2:571–75.

- Liu, G.-H., and J.-Y. Yang. 2013. Content based image retrieval using color difference histogram. Pattern Recognition 46:188–98. doi:10.1016/j.patcog.2012.06.001.

- Pal, U., R. Jayadevan, and N. Sharma. 2012. Handwriting recognition in Indian regional scripts: A survey of offline techniques. ACM Transactions on Asian Language Information Processing 11:1–35. doi:10.1145/2090176.

- Pang, Y. H., T. B. J. Andrew, N. C. L. David, and F. S. Hiew. 2003. Palm print verification with moments. Journal of WSCG 12:1–3.

- Patil, P. M., and T. R. Sontakke. 2007. Rotation, scale and translation invariant Devanagari numeral character recognition using general fuzzy neural network. Pattern Recognition 40:2110–17. doi:10.1016/j.patcog.2006.12.018.

- Pawlak, M. 1992. On the reconstruction aspect of moment descriptors. IEEE Transactions on Information Theory 38:1698–708. doi:10.1109/18.165444.

- Ramteke, R. J., and S. C. Mehrotra. 2006. Feature extraction based on moment invariants for handwriting recognition. In Proceedings of the IEEE Conference on Cybernetics and Intelligent Systems, 1–6.

- Shi, M., Y. Fujisawa, T. Wakabayashi, and F. Kimura. 2002. Handwritten numeral recognition using gradient and curvature of gray scale image. Pattern Recognition 35:2051–59. doi:10.1016/S0031-3203(01)00203-5.

- Singh, C., E. Walia, and N. Mittal. 2011. Rotation invariant complex Zernike moments features and their applications to human face and character recognition. IET Computer Vision 5:255–66. doi:10.1049/iet-cvi.2010.0020.

- Teague, M. R. 1980. Image analysis via the general theory of moments. Journal of the Optical Society of America 70:920–30. doi:10.1364/JOSA.70.000920.

- Teh, C.-H., and R. T. Chin. 1988. On image analysis by the methods of moments. IEEE Transactions on Pattern Analysis and Machine Intelligence 10:496–513. doi:10.1109/34.3913.

- Trier, O. D., A. K. Jain, and T. Taxt. 1996. Feature extraction methods for character recognition—A survey. Pattern Recognition 29:641–62. doi:10.1016/0031-3203(95)00118-2.

- Wee, C. Y., and R. Paramesran. 2007. On the computational aspects of Zernike moments. Image and Vision Computing 25:967–80. doi:10.1016/j.imavis.2006.07.010.

- Xin, Y., S. Liao, and M. Pawlak. 2004. Geometrically robust image watermark via pseudo Zernike moments. In Proceedings of the Canadian Conference on Electrical and Computer Engineering, 2:939–42.