ABSTRACT

Pests in plants are a major challenge in the agriculture sector. Hence, early and accurate detection and classification of pests could support precautionary measures while substantially reducing economic losses. Recent developments in deep convolutional neural networks (CNNs) have drastically improved the accuracy of image recognition systems. In this paper, we present a transfer learning framework based on pre-trained deep CNNs for the classification of pests in tomato plants. The dataset for this study was collected from online sources and consists of 859 images categorized into 10 classes. This study is the first of its kind in which (i) a dataset with 10 classes of tomato pests is used, and (ii) an exhaustive comparison of the performance of 15 pre-trained deep CNN models on tomato pest classification is presented. The experimental results show that the highest classification accuracy, 88.83%, was obtained with the DenseNet169 model. Further, the encouraging results of the transfer learning-based models demonstrate the effectiveness of transfer learning in pest detection and classification tasks.

Introduction

The role of agriculture is an important indicator of a country's economy. However, agriculture faces various challenges, such as rising demand due to population growth, climate change, insufficient resources, and plant diseases. One of the major hindrances to crop cultivation is pests (Manoja and Rajalakshmi 2014). It is estimated that approximately 18% of crop production is lost every year to animal pests (Oerke 2006). Pests cause substantial losses to farmers and threaten food security (Food and Agriculture Organization of the United Nations 2017). Thus, efficient pest management strategies are urgently needed. Pests may be controlled by physical (cultivation, mechanical weeding), biological (cultivar choice, crop rotation, antagonists, predators), and chemical (pesticides) measures (Ehler 2006). The integration of biological and chemical control gave rise to the concept of Integrated Pest Management (IPM), which combines multiple tactics to control all classes of pests (Ehler 2006). There are also a few traditional methods of pest control, such as blacklight traps (Mutwiwa and Tantau 2005) and sticky traps (Pinto-Zevallos and Vänninen 2013). Because blacklight traps and sticky traps must be replaced at regular intervals, they are uneconomical and less effective. Spraying pesticides is another solution, but excessive and indiscriminate use of pesticides poses health hazards to humans who consume the food (Prathibha et al. 2014). Therefore, early detection and classification of pests play a vital role in crop management, but this is a challenging task that needs special attention. With advances in digital technology, image processing and artificial intelligence can play a significant role in agricultural research, which has motivated researchers to tackle the pest detection and classification problem.

Along this line, various methods for image-based pest detection and classification on different crops have been presented in the literature. These include greenhouse crops such as rose (Boissard, Martin, and Moisan 2008) and field crops such as rice (Faithpraise et al. 2013), cotton (He et al. 2013), maize (Sena Jr et al. 2003), soybean (Souza et al. 2011), and tea (Samanta and Ghosh 2012). Image processing techniques based on morphological characteristics have been used to detect insects such as honey bees (Cho et al. 2007) and wasps (Watson, O’Neill, and Kitching 2004). Three common pests, namely whiteflies, thrips, and aphids, were identified using thresholding in the YUV color space (Cho et al. 2007). In Qing et al. (2014), an approach was presented for counting rice planthoppers using Haar and Histogram of Oriented Gradients (HOG) features with AdaBoost and Support Vector Machine (SVM) classifiers.
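As a rough illustration of the color-thresholding idea mentioned above, the sketch below converts RGB pixels to YUV using the common BT.601 analog coefficients and keeps pixels whose luma is high and whose chroma is close to zero (for example, a pale insect against a green leaf). The helper names and both threshold values (`y_min`, `max_chroma`) are hypothetical placeholders; they are not the values or the exact procedure of Cho et al. (2007).

```python
def rgb_to_yuv(r, g, b):
    """Convert one RGB pixel (0-255 per channel) to YUV with BT.601 analog coefficients."""
    y = 0.299 * r + 0.587 * g + 0.114 * b  # luma
    u = 0.492 * (b - y)                    # blue-difference chroma
    v = 0.877 * (r - y)                    # red-difference chroma
    return y, u, v


def threshold_mask(pixels, y_min=180.0, max_chroma=20.0):
    """Mark pixels that are bright (Y >= y_min) and nearly colorless.

    y_min and max_chroma are hypothetical thresholds chosen for illustration only.
    """
    mask = []
    for r, g, b in pixels:
        y, u, v = rgb_to_yuv(r, g, b)
        mask.append(y >= y_min and abs(u) <= max_chroma and abs(v) <= max_chroma)
    return mask


# A near-white pixel, a leaf-green pixel, and a dark pixel.
print(threshold_mask([(250, 250, 245), (40, 140, 40), (20, 20, 20)]))  # [True, False, False]
```

Working in YUV separates brightness from color, so a single box of thresholds can isolate pale objects regardless of small hue shifts; real systems would follow this with morphological filtering.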

This paper focuses on the classification of pests in tomato plants. Tomato is one of the most important vegetable crops worldwide. The major tomato-producing countries are China, the European Union, India, the USA, and Turkey (Atherton and Rudich 2012). India ranks second in both the area under tomato cultivation and tomato production (Rupanagudi et al. 2015). Further, tomato ranks third in priority after potato and onion in India, and second after potato in the world (Atherton and Rudich 2012). However, tomato cultivation has faced a crisis due to pest attacks, and only a handful of research works on tomato pest classification have been presented in the literature. Prathibha et al. (2014) presented an approach that segments the tomato region from captured images using thresholding and morphological techniques and then counts the borer (Helicoverpa armigera) insects. Similarly, Rupanagudi et al. (2015) detected the borer insect using k-means clustering and morphological techniques. Recently, deep learning (Gautam and Singh 2020) has attracted researchers because of its superior performance in image recognition tasks, including pest detection on tomato plants. Fuentes et al. (2017) presented a deep learning-based approach for the detection and classification of tomato plant diseases and pests. They experimented with three architectures, namely the faster region-based convolutional neural network (Faster R-CNN), the region-based fully convolutional network (R-FCN), and the single shot multibox detector (SSD), combined with various CNN-based feature extractors such as the Visual Geometry Group network (VGGNet) and the Residual Network (ResNet). The best average precision, 85.98%, was reported for R-FCN with ResNet-50.
Shijie, Peiyi, and Siping (2017) presented a transfer learning approach using VGG16 for the detection and classification of tomato plant diseases and pests. They also experimented with VGG16 as a feature extractor combined with an SVM classifier. The transfer learning approach using VGG16 performed better than the VGG16 + SVM approach, with an average accuracy of 89%. Nieuwenhuizen, Hemming, and Suh (2018) proposed an approach to detect the tomato whitefly and its predatory bugs using a deep CNN model, and compared the results with manual counts of insects on yellow sticky traps. The average classification accuracy was 87.40%. Gutierrez et al. (2019) presented a comparative study of KNN (K-Nearest Neighbor), SVM, MLP (Multilayer Perceptron), Faster R-CNN, and SSD classifiers for distinguishing between the eggs of the tomato pests Bemisia tabaci and Trialeurodes vaporariorum. The best classification accuracy, 82.51%, was reported for Faster R-CNN. Brahimi, Boukhalfa, and Moussaoui (2017) used transfer learning with the pre-trained AlexNet and GoogLeNet models to classify nine tomato diseases, and reported that these deep models were more accurate than shallow models such as SVM and Random Forest. The following observations emerge from the literature review on tomato pest detection and classification: (i) only a handful of research works exist, so image-based tomato pest classification needs further exploration; (ii) the datasets used in most works mix tomato plant diseases and pests, which may not yield a robust and reliable model for tomato pest classification; and (iii) the number of pest classes in the datasets used in the literature is limited to 2–4 and needs to be increased.

In this paper, a transfer learning-based approach is presented for the classification of tomato pests, with the objective of addressing the aforementioned limitations. A fundamental problem with deep neural networks is that efficient training requires a large dataset. One promising solution to this problem is transfer learning, in which a model pre-trained on a large dataset is reused. Here, we explore 15 pre-trained deep CNN models and present an exhaustive comparison of their performance on tomato pest classification. The experiments were performed on 859 tomato pest images belonging to 10 classes. This study is the first of its kind in which 10 classes of tomato pests are involved.

The rest of the paper is organized as follows. Section 2 describes the methodology, which consists of the dataset description, transfer learning, and the pre-trained deep CNN models. Section 3 presents the experimental results, followed by a discussion in Section 4. Finally, Section 5 concludes our work.

Methodology

Dataset Description

The dataset used in this study was downloaded from online sources (Flickr 2018; Insect Images 2018; IPM Images 2018; The National Bureau of Agricultural Insect Resources (NBAIR) 2013; The Tamil Nadu Agricultural University [TNAU] 2019). It consists of 859 tomato pest images belonging to 10 classes, all in the RGB color space. Details of the dataset are provided in Table 1. The first class, Bactrocera latifrons (Shimizu et al. 2007), is a damaging insect that often affects tomato as well as other plants such as brinjal, bell pepper, and cucurbits; however, the damage it causes is comparatively small. Bemisia tabaci (An assignment on Pests of tomato 2012) is another pest whose occurrence is difficult to predict because of the large area it affects. Chrysodeixis chalcites (Chrysodeixis Chalcites n.d.) is an extremely polyphagous pest that feeds on many fruits, vegetables, and ornamental crops in addition to tomato. Epilachna vigintioctopunctata (Rajagopal and Trivedi 1989) and Spodoptera litura (An assignment on Pests of tomato 2012) are major pests of solanaceous plants such as tomato and potato. The fruit borer Helicoverpa armigera (An assignment on Pests of tomato 2012) bores into fruits, causing them to rot. Icerya aegyptiaca (Meena et al. 2012) is a pest with a wide host range; it sucks cell sap from the leaves and the soft upper portion of the plant, damaging the leaves. Liriomyza trifolii (An assignment on Pests of tomato 2012) is another hazardous pest that reduces production through the damage it causes. Tuta absoluta (Desneux et al. 2010), the tomato leaf miner, is one of the most devastating tomato pests and affects tomato at all growth stages; its life cycle comprises egg, larva, pupa, and adult stages. In contrast, Nesidiocoris tenuis (Pérez-Hedo, Arias-Sanguine, and Urbaneja 2018) is a beneficial insect that can induce plant defenses in tomato through its phytophagous behavior.

Table 5. Benchmarking of our approach with literature on tomato pest classification.

Table 1. Details of pest dataset.

Transfer Learning

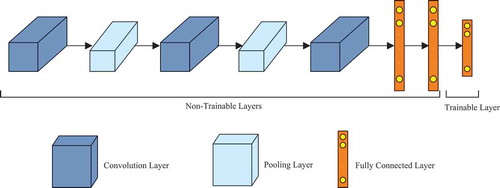

Deep learning models, especially CNNs, have shown tremendous success in image classification. However, training a deep CNN from scratch usually requires a large amount of data and high computational power. Collecting a large dataset is not always possible, particularly in domains such as agriculture, owing to local weather conditions, the difficulty of discriminating between insect pests, and uncontrolled field conditions for invasive species. Moreover, a deep CNN trained on a small dataset is prone to overfitting (Skalski 2018). Transfer learning (Talo et al. 2019) offers a solution to this problem: the model reuses the knowledge gained while training on a relatively large dataset. The concept of transfer learning is depicted in Figure 1, which shows a generic deep CNN pre-trained on a large dataset. During training, all layers of this model are frozen (non-trainable) except the last layer (trainable). As a result, only the weights of the last layer are updated, which reduces the computational cost while still achieving adequate performance.
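The freezing scheme described above can be illustrated without any deep learning library. In the minimal sketch below, a fixed linear-plus-ReLU layer with hypothetical weights (`FROZEN_W`) stands in for the pre-trained convolutional layers, and only a softmax output layer is trained. For simplicity it uses full-batch gradient descent with an illustrative learning rate instead of the Adam optimizer and mini-batches of 8 used in the experiments, and the two-class toy data are invented for the example.

```python
import math

# Hypothetical "pre-trained" weights. The training loop never modifies them,
# mirroring the non-trainable (frozen) layers of the pre-trained CNN.
FROZEN_W = ((1.0, -1.0), (-1.0, 1.0), (0.5, 0.5))


def backbone(x):
    """Frozen feature extractor: one fixed linear layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_W]


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def head_probs(W, b, f):
    """Class probabilities from the trainable last layer."""
    return softmax([sum(wj * fj for wj, fj in zip(row, f)) + bk for row, bk in zip(W, b)])


def train_head(data, num_classes, lr=0.5, epochs=50):
    """Full-batch gradient descent on the cross-entropy loss of the last layer only."""
    dim = len(FROZEN_W)
    W = [[0.0] * dim for _ in range(num_classes)]
    b = [0.0] * num_classes
    feats = [(backbone(x), y) for x, y in data]  # computed once: the backbone is frozen
    n = len(feats)
    for _ in range(epochs):
        gW = [[0.0] * dim for _ in range(num_classes)]
        gb = [0.0] * num_classes
        for f, y in feats:
            p = head_probs(W, b, f)
            for k in range(num_classes):
                err = p[k] - (1.0 if k == y else 0.0)  # d(loss)/d(logit_k)
                gb[k] += err / n
                for j in range(dim):
                    gW[k][j] += err * f[j] / n
        for k in range(num_classes):  # update only the head parameters
            b[k] -= lr * gb[k]
            for j in range(dim):
                W[k][j] -= lr * gW[k][j]
    return W, b


def cross_entropy(W, b, data):
    return -sum(math.log(head_probs(W, b, backbone(x))[y]) for x, y in data) / len(data)


def accuracy(W, b, data):
    correct = 0
    for x, y in data:
        p = head_probs(W, b, backbone(x))
        correct += int(max(range(len(p)), key=p.__getitem__) == y)
    return correct / len(data)


# Toy task: class 1 if the first input exceeds the second, else class 0.
grid = [i / 4 for i in range(5)]
toy_data = [((a, c), 1 if a > c else 0) for a in grid for c in grid if a != c]
W, b = train_head(toy_data, num_classes=2)
print(accuracy(W, b, toy_data))  # 1.0 on this separable toy set
```

Because the backbone never changes, its features can be computed once and reused in every epoch, and no gradients flow into it; this is where the computational saving of freezing comes from.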

Pre-trained Deep CNN Model

Here, we explore 15 pre-trained deep CNN models: VGG16 (Simonyan and Zisserman 2014), VGG19 (Simonyan and Zisserman 2014), ResNet50V2 (He et al. 2016), ResNet101V2 (He et al. 2016), ResNet152V2 (He et al. 2016), InceptionV3 (Szegedy et al. 2016), Xception (Chollet 2017), InceptionResNetV2 (Szegedy et al. 2017), MobileNet (Howard et al. 2017), DenseNet121 (Huang et al. 2017), DenseNet169 (Huang et al. 2017), DenseNet201 (Huang et al. 2017), NASNetMobile (Zoph et al. 2018), NASNetLarge (Zoph et al. 2018), and MobileNetV2 (Sandler et al. 2018). All of these models were trained on the ImageNet dataset (Krizhevsky, Sutskever, and Hinton 2012), which has 1.2 million images belonging to 1,000 categories. Each model has some unique characteristics. VGG16 and VGG19 are sequential CNNs built from 3 × 3 convolution filters, with max-pooling performed over a 2 × 2 pixel window with a stride of 2. After each max-pooling layer, the number of convolution filters doubles until it reaches 512. As the names indicate, VGG16 has 16 weight layers and VGG19 has 19. The ResNet models (ResNet50V2, ResNet101V2, and ResNet152V2) add skip connections from earlier layers alongside the direct connection from the immediately preceding layer. InceptionV3 is organized in blocks, each containing parallel convolution filters and pooling layers, which makes more efficient use of computing resources. InceptionResNetV2 combines the Inception architecture with residual connections: the output of each Inception-style block is added to its input through a shortcut. Xception is built from depthwise separable convolutions, which completely separate spatial convolution from cross-channel convolution. MobileNet is likewise built from depthwise separable convolutions, which were also used in Inception models to reduce the computation in the first few layers.
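The saving that motivates depthwise separable convolutions in Xception and MobileNet can be seen by counting weights. The sketch below ignores biases, batch normalization, and MobileNet's width multiplier, and the layer sizes (3 × 3 kernels, 32 input and 64 output channels) are arbitrary illustrative choices rather than values taken from any of the 15 models.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of a k x k convolution mapping c_in to c_out channels (biases ignored)."""
    return k * k * c_in * c_out


def separable_conv_params(k, c_in, c_out):
    """Depthwise k x k filter per input channel, then a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in  # spatial filtering, one filter per channel
    pointwise = c_in * c_out  # cross-channel mixing
    return depthwise + pointwise


std = standard_conv_params(3, 32, 64)
sep = separable_conv_params(3, 32, 64)
print(std, sep, round(sep / std, 4))  # 18432 2336 0.1267
```

In general the ratio of the two counts is 1/c_out + 1/k², so with 3 × 3 kernels a separable layer needs roughly 8 to 9 times fewer weights once the number of output channels is large.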
Another model, MobileNetV2, is based on an inverted residual structure in which the shortcut connections are between the thin bottleneck layers. In DenseNet, each layer is connected to every other layer in a feed-forward fashion, so a network with $L$ layers has $L(L+1)/2$ direct connections instead of the $L$ connections of a traditional network. NASNet introduced a regularization technique called ScheduledDropPath, which improves generalization. Further details of all 15 pre-trained models are provided in Table 2. The non-trainable and trainable parameters are discussed in Section 3.1.
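The dense connectivity pattern, and its effect on the size of the replaced classifier, can be made concrete with a little arithmetic. The sketch below assumes the published DenseNet-BC configuration of Huang et al. (2017): growth rate 32, 64 initial feature maps, compression 0.5 in the transition layers, and dense blocks of 6, 12, 32, and 32 layers for DenseNet169. It also assumes that the new 10-class head is a single fully connected layer on the globally pooled features; the trainable-parameter counts reported in Table 2 may differ depending on how the head was actually built. The helper names are hypothetical.

```python
def dense_connections(num_layers):
    """Direct connections in a densely connected stack of L layers: L(L+1)/2."""
    # Layer l receives the stack input plus the outputs of layers 1..l-1, i.e. l inputs.
    return sum(range(1, num_layers + 1))


def densenet_feature_width(block_config, growth_rate=32, init_features=64, compression=0.5):
    """Number of channels that reach the classifier of a DenseNet-BC model."""
    channels = init_features
    for i, num_layers in enumerate(block_config):
        channels += num_layers * growth_rate  # each layer concatenates growth_rate new maps
        if i < len(block_config) - 1:
            channels = int(channels * compression)  # transition layer compresses the width
    return channels


def dense_head_params(in_features, num_classes):
    """Weights plus biases of a fully connected classification layer."""
    return in_features * num_classes + num_classes


DENSENET169_BLOCKS = (6, 12, 32, 32)
width = densenet_feature_width(DENSENET169_BLOCKS)
print(width)                           # 1664
print(dense_head_params(width, 10))    # 16650 (new tomato pest head)
print(dense_head_params(width, 1000))  # 1665000 (original ImageNet head)
```

Under these assumptions the only trainable weights are the roughly 16.7 thousand parameters of the new head, a small fraction of the model's total.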

Table 2. Details of pre-trained deep CNN models.

Results

Experimental Setup

In this subsection, we describe the experimental setup used to train the 15 pre-trained models for tomato pest classification. As Table 2 shows, the input shape varies across models. Therefore, we resized the tomato pest images to the input shape required by each model; for example, the images were resized to 224 × 224 × 3 for the VGG16 model and to 299 × 299 × 3 for the Inception model. Second, the last fully connected layer, which consists of 1,000 neurons, was replaced with a fully connected layer of 10 neurons. This is necessary because all 15 pre-trained models were trained on the ImageNet dataset, which has 1,000 classes, so their last layer has 1,000 neurons, whereas the tomato pest dataset used in this study has 10 classes. Further, following the concept of transfer learning shown in Figure 1, all layers except the last were frozen during training; i.e., the weights learned on ImageNet remained intact while training on the tomato pest dataset, and only the weights of the last layer were updated. Consequently, the number of trainable parameters was drastically reduced, as shown in Table 2. We then randomly partitioned the tomato pest dataset into a 70% training set, a 10% validation set, and a 20% test set. Each model was trained for 100 epochs with a mini-batch size of 8 and a learning rate of 0.01, using the Adam (Adaptive Moment Estimation) optimizer. Moreover, we ran each model for five trials (T) to reduce the variability in classification accuracy caused by the random partitioning into training, validation, and test sets. The overall accuracy (OA) was calculated by averaging the accuracy over the five trials. In addition, we report the standard deviation (STD) of accuracy over the five trials, which indicates the robustness of the model. All experiments were performed in Python 3.6 using the Keras framework with a TensorFlow backend. Simulations were carried out on Google Colaboratory, which provides an Intel(R) Xeon(R) CPU @ 2.30 GHz, 13 GB RAM, and an NVIDIA Tesla K80 GPU.
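The evaluation protocol above (a fresh random 70/10/20 partition in each of five trials, with OA as the mean and STD as the spread of the per-trial test accuracies) can be sketched with the Python standard library. The helper names `split_indices` and `summarize_trials`, the floor rounding of split sizes, the per-trial seeds, and the use of the population standard deviation are all assumptions, since the paper does not state them. Under these assumptions each trial uses 601 training, 85 validation, and 173 test images, i.e. 76 mini-batches of 8 per epoch if the last partial batch is kept. The accuracies passed to `summarize_trials` in the last line are placeholders, not results from Table 3.

```python
import math
import random
import statistics


def split_indices(n, seed, train_frac=0.7, val_frac=0.1):
    """Shuffle indices 0..n-1 and cut them into train/validation/test lists."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)  # a different seed per trial gives a new partition
    n_train = int(n * train_frac)
    n_val = int(n * val_frac)
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


def summarize_trials(accuracies):
    """Overall accuracy (mean over trials) and population standard deviation."""
    return statistics.mean(accuracies), statistics.pstdev(accuracies)


NUM_IMAGES, BATCH_SIZE, EPOCHS, TRIALS = 859, 8, 100, 5

for trial in range(TRIALS):
    train, val, test = split_indices(NUM_IMAGES, seed=trial)
    steps = math.ceil(len(train) / BATCH_SIZE)  # mini-batches per epoch, last one partial
    print(trial, len(train), len(val), len(test), steps * EPOCHS)
    # Train the frozen-backbone model on `train`, select it on `val`,
    # and record its accuracy on `test` for this trial.

print(summarize_trials([0.80, 0.85, 0.90]))  # placeholder accuracies, not paper results
```

If the sample standard deviation was intended instead, `statistics.stdev` gives a slightly larger value for five trials.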

Experimental Results

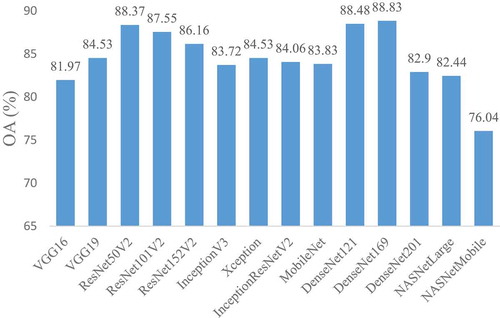

The classification accuracy obtained with the 15 pre-trained models on the test set of the tomato pest dataset is shown in Table 3, which reports the accuracy of each trial along with the OA and STD over the five trials. The highest OA, 88.83% with an STD of 1.48%, was obtained with the DenseNet169 model. Further, Figure 2 gives a graphical comparison of the performance of the 15 pre-trained models on the tomato pest dataset. For a more detailed analysis, we calculated the following parameters for the DenseNet169 model, since it produced the highest OA: class-wise accuracy, precision, sensitivity, specificity, and F1 score (Table 4).
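The per-class parameters listed above can be derived from a confusion matrix in a one-vs-rest fashion. The sketch below assumes that class-wise accuracy means (TP + TN)/N for each class treated as positive against all others, and that sensitivity is recall; the paper does not spell out its definitions. The function name is hypothetical, and the 3 × 3 matrix in the usage lines is a made-up example rather than the DenseNet169 results.

```python
def per_class_metrics(cm):
    """One-vs-rest metrics from a square confusion matrix cm[true][predicted]."""
    n = len(cm)
    total = sum(map(sum, cm))
    results = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                        # class k predicted as something else
        fp = sum(cm[i][k] for i in range(n)) - tp   # other classes predicted as k
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        sensitivity = tp / (tp + fn) if tp + fn else 0.0
        specificity = tn / (tn + fp) if tn + fp else 0.0
        f1 = (2 * precision * sensitivity / (precision + sensitivity)
              if precision + sensitivity else 0.0)
        results.append({
            "accuracy": (tp + tn) / total,
            "precision": precision,
            "sensitivity": sensitivity,
            "specificity": specificity,
            "f1": f1,
        })
    return results


# Made-up 3-class example: rows are true classes, columns are predictions.
example_cm = [[5, 1, 0], [2, 3, 1], [0, 0, 4]]
for k, m in enumerate(per_class_metrics(example_cm)):
    print(k, {name: round(value, 3) for name, value in m.items()})
```

Equivalently, F1 = 2TP / (2TP + FP + FN); for class 0 in the example this is 10/13.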

Table 3. Classification results obtained using 15 pre-trained models on tomato pest dataset.

Table 4. Other performance parameters obtained using the DenseNet169 model on the tomato pest dataset.

Discussion

To highlight the performance of the transfer learning approach adopted in this paper for tomato pest classification, we benchmark our approach against the literature (Table 5). Table 5 shows that the presented transfer learning approach obtained the highest classification accuracy of 88.83% using the DenseNet169 model. Further, we observed that in these studies the models were trained and evaluated in a single trial, probably because of the high computational cost. However, a model evaluated in a single trial may not be reliable, because the classification accuracy can vary with the random partition into training, validation, and test sets. In this paper, we used a transfer learning approach in which the computational cost is drastically reduced because there are far fewer trainable parameters. Hence, we ran each model for five trials and computed the classification accuracy by averaging the accuracies of the five trials. We also computed the STD over the five trials; the low STD indicates the reliability of the system. The main advantages of the presented work are summarized as follows:

Most studies focus on the classification of tomato leaf diseases or on mixed datasets of diseases and pests. In this study, we focused on the classification of tomato pests only.

To the best of our knowledge, this is the first study where 10 tomato pest classes are involved.

An exhaustive comparison of 15 pre-trained deep CNN models for tomato pest classification has been presented.

Conclusion

Tomato pest detection and classification have been performed on images obtained from online resources using transfer learning of deep CNN models. In this study, we employed 15 pre-trained models to classify the tomato pest dataset. Our results showed that the DenseNet169 model obtained the highest classification accuracy among the 15 models, 88.83% ± 1.48%. The presented transfer learning approach shows encouraging results and demonstrates its ability to classify tomato pests. In the future, we intend to use data augmentation to generate a larger dataset and to train deep CNN models from scratch for tomato pest classification.

Declaration of Interest Statement

The authors declare no conflict of interest.

Additional information

Funding

References

- An assignment on Pests of tomato. 2012. Published on Mar 11, 2012, by Dinesh Dalvaniya. https://www.slideshare.net/DineshDalvaniya/pests-of-tomato1.

- Atherton, J., and J. Rudich, Eds. 2012. The tomato crop: A scientific basis for improvement. Springer Science & Business Media, New York, USA.

- Boissard, P., V. Martin, and S. Moisan. 2008. A cognitive vision approach to early pest detection in greenhouse crops. Computers and Electronics in Agriculture 62 (2):81–93. doi:10.1016/j.compag.2007.11.009.

- Brahimi, M., K. Boukhalfa, and A. Moussaoui. 2017. Deep learning for tomato diseases: Classification and symptoms visualization. Applied Artificial Intelligence 31 (4):299–315. doi:10.1080/08839514.2017.1315516.

- Cho, J., J. Choi, M. Qiao, C. W. Ji, H. Y. Kim, K. B. Uhm, and T. S. Chon. 2007. Automatic identification of whiteflies, aphids and thrips in greenhouse based on image analysis. J. Math. Comput. Simul. 1:46–53.

- Chollet, F. 2017. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE conference on computer vision and pattern recognition, Honolulu, Hawaii, (pp. 1251–58).

- Chrysodeixis Chalcites. (golden twin-spot moths). https://www.cabi.org/isc/datasheet/13243.

- Desneux, N., E. Wajnberg, K. A. Wyckhuys, G. Burgio, S. Arpaia, C. A. Narváez-Vasquez, and J. Pizzol. 2010. Biological invasion of European tomato crops by Tuta absoluta: Ecology, geographic expansion, and prospects for biological control. Journal of Pest Science 83 (3):197–215. doi:10.1007/s10340-010-0321-6.

- Ehler, L. E. 2006. Integrated pest management (IPM): Definition, historical development and implementation, and the other IPM. Pest Management Science 62 (9):787–89. doi:10.1002/ps.1247.

- Faithpraise, F., P. Birch, R. Young, J. Obu, B. Faithpraise, and C. Chatwin. 2013. Automatic plant pest detection and recognition using k-means clustering algorithm and correspondence filters. International Journal of Advanced Biotechnology and Research 4 (2):189–99.

- Flickr. 2018. The online photo management and sharing application in the world. Photos of pests available online. Accessed December 10, 2018. https://www.flickr.com/search/?text=helicoverpa%20armigera.

- Food and Agriculture Organization of the United Nations. 2017. Plant pests and diseases. http://www.fao.org/emergencies/emergency-types/plant-pests-and-diseases/en/.

- Fuentes, A., S. Yoon, S. Kim, and D. Park. 2017. A robust deep-learning-based detector for real-time tomato plant diseases and pests recognition. Sensors 17 (9):2022. doi:10.3390/s17092022.

- Gautam, A., and V. Singh. 2020. CNN-VSR: A deep learning architecture with validation-based stopping rule for time series classification. Applied Artificial Intelligence 34 (2):101–24. doi:10.1080/08839514.2020.1713454.

- Gutierrez, A., A. Ansuategi, L. Susperregi, C. Tubío, I. Rankić, and L. Lenža. 2019. A benchmarking of learning strategies for pest detection and identification on tomato plants for autonomous scouting robots using internal databases. Journal of Sensors 2019.

- He, K., X. Zhang, S. Ren, and J. Sun. 2016, October. Identity mappings in deep residual networks. In European conference on computer vision (pp. 630–45). Springer, Cham.

- He, Q., B. Ma, D. Qu, Q. Zhang, X. Hou, and J. Zhao. 2013. Cotton pests and diseases detection based on image processing. TELKOMNIKA Indonesian Journal of Electrical Engineering 11 (6):3445–50. doi:10.11591/telkomnika.v11i6.2721.

- Howard, A. G., M. Zhu, B. Chen, D. Kalenichenko, W. Wang, T. Weyand, and H. Adam. 2017. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv Preprint arXiv:1704.04861.

- Huang, G., Z. Liu, L. Van Der Maaten, and K. Q. Weinberger. 2017. Densely connected convolutional networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, Honolulu, Hawaii, (pp. 4700–08).

- Insect Images. 2018. The Entomological Society of America and USDA Identification Technology Program, last updated in 2018. Photos of pests available online. Accessed December 22, 2018. https://www.insectimages.org/search/action.cfm?q=spodoptera+litura.

- IPM Images. 2018. The center for invasive species and ecosystem health, last updated in 2018. Photos of pests available online. Accessed December 18, 2018. https://www.ipmimages.org/browse/Areathumb.cfm?area=63.

- Krizhevsky, A., I. Sutskever, and G. E. Hinton. 2012. Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems, 25 (pp. 1097–1105).

- Manoja, M., and J. Rajalakshmi. 2014. Early detection of pest on leaves using support vector machine. International Journal of Electrical and Electronics Research 2 (4):187–94.

- Meena, S. C., K. K. Sharma, A. Mohanasundaram, and M. Md. 2012. Icerya aegyptiaca Douglas: A new pest of Flemingia semialata and as an alternate host of Aprostocetus purpureus (Cameron) in the lac ecosystem. Indian Journal of Entomology 74 (4):404–05.

- Mutwiwa, U. N., and H. J. Tantau. 2005. Suitability of a UV lamp for trapping the greenhouse whitefly Trialeurodes vaporariorum Westwood (Hom: Aleyrodidae). Agricultural Engineering International: CIGR Journal VII:1–11.

- The National Bureau of Agricultural Insect Resources (NBAIR). 2013. Insects in Indian agro ecosystem. Photos of pests available online. Accessed December 25, 2018. http://www.nbair.res.in/insectpests/Bactrocera-latifrons.php.

- Nieuwenhuizen, A. T., J. Hemming, and H. Suh. 2018. Detection and classification of insects on stick-traps in a tomato crop using Faster R-CNN. In Proceedings of the Netherlands Conference on Computer Vision NCCV18, Netherlands, (pp. 1–4).

- Oerke, E. C. 2006. Crop losses to pests. The Journal of Agricultural Science 144 (1):31–43. doi:10.1017/S0021859605005708.

- Pérez-Hedo, M., Á. M. Arias-Sanguine, and A. Urbaneja. 2018. Induced tomato plant resistance against Tetranychus urticae triggered by the phytophagy of Nesidiocoris tenuis. Frontiers in Plant Science 9:1419. doi:10.3389/fpls.2018.01419.

- Pinto-Zevallos, D. M., and I. Vänninen. 2013. Yellow sticky traps for decision-making in whitefly management: What has been achieved? Crop Protection 47:74–84. doi:10.1016/j.cropro.2013.01.009.

- Prathibha, G. P., T. G. Goutham, M. V. Tejaswini, P. R. Rajas, and K. Balasubramani. 2014. Early pest detection in tomato plantation using image processing. International Journal of Computer Applications 96 (12): 22.

- Qing, Y., D. X. Xian, Q. J. Liu, B. J. Yang, G. Q. Diao, and J. Tang. 2014. Automated counting of rice planthoppers in paddy fields based on image processing. Journal of Integrative Agriculture 13 (8):1736–45. doi:10.1016/S2095-3119(14)60799-1.

- Rajagopal, D., and T. P. Trivedi. 1989. Status, bio ecology, and management of Epilachna beetle, Epilachna vigintioctopunctata (Fab.)(Coleoptera: Coccinellidae) on potato in India: A review. International Journal of Pest Management 35 (4):410–13.

- Rupanagudi, S. R., B. S. Ranjani, P. Nagaraj, V. G. Bhat, and G. Thippeswamy. 2015, January. A novel cloud computing-based smart farming system for early detection of borer insects in tomatoes. In 2015 International Conference on Communication, Information & Computing Technology (ICCICT), Nagpur, India, (pp. 1–6). IEEE.

- Samanta, R. K., and I. Ghosh. 2012. Tea insect pests classification based on artificial neural networks. International Journal of Computer Engineering Science (IJCES) 2 (6):1–13.

- Sandler, M., A. Howard, M. Zhu, A. Zhmoginov, and L. C. Chen. 2018. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, (pp. 4510–20).

- Sena, D. G., Jr., F. A. C. Pinto, D. M. Queiroz, and P. A. Viana. 2003. Fall armyworm damaged maize plant identification using digital images. Biosystems Engineering 85 (4):449–54. doi:10.1016/S1537-5110(03)00098-9.

- Shijie, J., J. Peiyi, and H. Siping. 2017, October. Automatic detection of tomato diseases and pests based on leaf images. In 2017 Chinese Automation Congress (CAC), Jinan, China, (pp. 2537–40). IEEE.

- Shimizu, Y., T. Kohama, T. Uesato, T. Matsuyama, and M. Yamagishi. 2007. Invasion of solanum fruit fly Bactrocera latifrons (Diptera: Tephritidae) to Yonaguni Island, Okinawa Prefecture, Japan. Applied Entomology and Zoology 42 (2):269–75. doi:10.1303/aez.2007.269.

- Simonyan, K., and A. Zisserman. 2014. Very deep convolutional networks for large-scale image recognition. arXiv Preprint arXiv:1409.1556.

- Skalski, P. 2018. Preventing deep neural network from overfitting. Mysteries of neural networks part II. Towards Data Science, Sep. 7.

- Souza, T. L., E. S. Mapa, K. Dos Santos, and D. Menotti. 2011, September. Application of complex networks for automatic classification of damaging agents in soybean leaflets. In 2011 18th IEEE International Conference on Image Processing, Brussels, Belgium, (pp. 1065–68). IEEE.

- Szegedy, C., S. Ioffe, V. Vanhoucke, and A. A. Alemi. 2017, February. Inception-v4, inception-resnet and the impact of residual connections on learning. In The Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, California, USA.

- Szegedy, C., V. Vanhoucke, S. Ioffe, J. Shlens, and Z. Wojna. 2016. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE conference on computer vision and pattern recognition, Las Vegas, NV, USA, (pp. 2818–26).

- Talo, M., O. Yildirim, U. B. Baloglu, G. Aydin, and U. R. Acharya. 2019. Convolutional neural networks for multi-class brain disease detection using MRI images. Computerized Medical Imaging and Graphics 78:101673. doi:10.1016/j.compmedimag.2019.101673.

- The Tamil Nadu Agricultural University (TNAU). 2019. Established in 1971. Pests of tomato. Accessed January 5, 2019. http://agritech.tnau.ac.in/crop_protection/crop_prot_crop_insect-veg_tomato.html.

- Watson, A. T., M. A. O’Neill, and I. J. Kitching. 2004. Automated identification of live moths (Macrolepidoptera) using a digital automated identification System (DAISY). Systematics and Biodiversity 1 (3):287–300. doi:10.1017/S1477200003001208.

- Zoph, B., V. Vasudevan, J. Shlens, and Q. V. Le. 2018. Learning transferable architectures for scalable image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, Salt Lake City, Utah, (pp. 8697–710).