
ABSTRACT

Sine-cosine algorithm (SCA) has found widespread application in various engineering optimization problems. However, SCA suffers from premature convergence and insufficient exploitation. Cylindricity error evaluation is a typical engineering optimization problem related to the quality of cylindrical parts. In this paper, a hybrid greedy sine-cosine algorithm with differential evolution (HGSCADE) is developed to solve optimization problems and evaluate cylindricity error. HGSCADE integrates SCA with opposition-based population initialization, greedy search, differential evolution (DE), success history-based parameter adaptation, and Levy flight-based local search. HGSCADE is tested on the CEC2014 benchmark functions and applied to cylindricity error evaluation. The results show that HGSCADE outperforms other state-of-the-art algorithms on both the benchmark functions and cylindricity error evaluation.

Introduction

Optimization refers to finding the optimal objective value of a given problem subject to the related constraints. Optimization problems arise in many areas of engineering and science. Meta-heuristic algorithms (MAs) have been developed to solve complex optimization problems with properties such as discontinuity, nondifferentiability, nonconvexity, nonlinearity, and multimodality (Yang 2008). Generally, MAs treat the optimization problem as a black box and search for the solution according to stochastic rules. By transferring and exchanging information among search agents, the positions of the agents are continually updated toward promising regions that contain the optimal solution.

The No Free Lunch (NFL) theorem (Wolpert and Macready 1997) states that no single algorithm can solve all optimization problems best, i.e., an algorithm that suits a given optimization problem may not suit another problem with distinct characteristics. Therefore, MAs need further study to deal with different optimization problems. Research on MAs can be categorized into proposing new algorithms, improving existing algorithms, and combining different algorithms. Well-known MAs include genetic algorithms (GAs) (Srinivas and Patnaik 1994), particle swarm optimization (PSO) (Kennedy and Eberhart 1995), differential evolution (DE) (Storn and Price 1997), ant colony optimization (ACO) (Dorigo and Di Caro 1999), harmony search (HS) (Geem, Kim, and Loganathan 2001), bacterial foraging optimization (BFO) (Passino 2002), artificial bee colony (ABC) (Karaboga and Basturk 2007), biogeography-based optimization (BBO) (Simon 2008), the gravitational search algorithm (GSA) (Rashedi, Nezamabadi-pour, and Saryazdi 2009), the gray wolf optimizer (GWO) (Mirjalili, Mirjalili, and Lewis 2014), the ant lion optimizer (ALO) (Mirjalili 2015), the whale optimization algorithm (WOA) (Mirjalili and Lewis 2016), the sine-cosine algorithm (SCA) (Mirjalili 2016), and the salp swarm algorithm (SSA) (Mirjalili et al. 2017).

As a crucial index of the machining accuracy and form error of cylindrical parts, cylindricity error is closely related to product quality and performance. The minimum zone cylindricity (MZC) evaluation method is consistent with the international standards, but no general algorithm that implements MZC has been developed. Essentially, cylindricity error evaluation can be regarded as a single-objective real-parameter numerical optimization problem. Therefore, MAs such as GA (Lai et al. 2000), PSO (Wen et al. 2010), and HS (Yang et al. 2018) have been applied to cylindricity error evaluation under the MZC principle. Improving the convergence speed and accuracy of MAs in cylindricity error evaluation is therefore very important.

SCA, proposed by Mirjalili (2016), is a population-based algorithm for solving optimization problems. SCA performs well in some cases (Das, Bhattacharya, and Chakraborty 2018; Li, Fang, and Liu 2018; Mirjalili 2016), but it suffers from premature convergence and insufficient exploitation. Therefore, SCA variants have been developed and applied to different optimization problems such as feature selection (Sindhu et al. 2017), object tracking (Nenavath and Jatoth 2018; Nenavath, Kumar Jatoth, and Das 2018), pairwise local sequence alignment (Issa et al. 2018), optimal power flow (Attia, El Sehiemy, and Hasanien 2018), and engineering design (Abd Elaziz, Oliva, and Xiong 2017; Chegini, Bagheri, and Najafi 2018; Gupta and Deep 2019; Rizk-Allah 2018).

In this study, a novel algorithm called the hybrid greedy sine-cosine algorithm with differential evolution (HGSCADE) is proposed for solving benchmark problems and evaluating cylindricity error. In HGSCADE, opposition-based learning (OBL) is applied in population initialization, and greedy search is employed to avoid discarding good solutions. Meanwhile, DE is incorporated into SCA to improve the exploitation ability and maintain population diversity, and success history-based parameter adaptation is introduced to reduce invalid search. A Levy flight-based local search is then used to escape from local optima. HGSCADE is compared with the standard SCA, other state-of-the-art MAs, and SCA variants on the CEC2014 benchmark functions. Finally, HGSCADE is applied to cylindricity error evaluation as a real engineering case. The test and experiment results demonstrate the superiority and effectiveness of the proposed algorithm.

The remainder of this paper is organized as follows. Section 2 briefly introduces SCA. Section 3 describes the proposed HGSCADE algorithm. Section 4 presents the test results and comparisons. Section 5 applies HGSCADE to cylindricity error evaluation. Finally, Section 6 draws conclusions.

Sine-Cosine Algorithm

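Similar to other MAs, the standard SCA starts with a set of random candidate agents. The agents are then updated by sine and cosine functions to create new ones:

$$X_{i,j}^{t+1} = \begin{cases} X_{i,j}^{t} + r_1 \sin(r_2)\,\lvert r_3 P_j^{t} - X_{i,j}^{t}\rvert, & r_4 < 0.5 \\ X_{i,j}^{t} + r_1 \cos(r_2)\,\lvert r_3 P_j^{t} - X_{i,j}^{t}\rvert, & r_4 \ge 0.5 \end{cases} \tag{1}$$

where $X_{i,j}^{t}$ is the position of the i-th agent in the j-th dimension at the t-th iteration, r1 is a control parameter given by Equation (2), r2 and r3 are random numbers (in the original SCA, r2 ∈ [0, 2π] and r3 ∈ [0, 2]), $P_j^{t}$ is the best position obtained so far in the j-th dimension, r4 is a random number in [0, 1], and | | denotes the absolute value.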

The variable r1 is adjusted with the number of iterations by Equation (2):

$$r_1 = a - t\,\frac{a}{T} \tag{2}$$

where a is a constant, t is the current iteration, and T is the maximum number of iterations.
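To make Equations (1) and (2) concrete, the update of a single agent can be sketched in Python. This is a minimal illustration rather than the authors' code: it assumes the ranges r2 ∈ [0, 2π] and r3 ∈ [0, 2] and the value a = 2 from the original SCA paper, redraws r2, r3, and r4 for every dimension, and the function name `sca_update` is hypothetical.

```python
import math
import random


def sca_update(x, dest, t, T, a=2.0, rng=random):
    """Return a new position for agent x using the sine-cosine rule.

    x    -- current position X_i^t (list of floats)
    dest -- best-so-far (destination) position P^t
    Assumption: r2 ~ U[0, 2*pi], r3 ~ U[0, 2], a = 2, as in the original SCA paper.
    """
    r1 = a - t * a / T  # Eq. (2): decreases linearly from a to 0
    new_x = []
    for xj, pj in zip(x, dest):
        r2 = rng.uniform(0.0, 2.0 * math.pi)
        r3 = rng.uniform(0.0, 2.0)
        r4 = rng.random()
        wave = math.sin(r2) if r4 < 0.5 else math.cos(r2)  # Eq. (1): pick the branch
        new_x.append(xj + r1 * wave * abs(r3 * pj - xj))
    return new_x
```

At t = T the amplitude r1 is 0, so the agent stops moving; with a = 2, r1 > 1 during the first half of the run, which is the stage that motivates the greedy search.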

The Proposed Algorithm

In this section, the proposed HGSCADE algorithm is introduced in detail. Although the standard SCA has found widespread application in various engineering optimization problems, it suffers from premature convergence and insufficient exploitation. To overcome these drawbacks, five strategies are integrated into SCA, as follows.

Opposition-based Population Initialization

Random population initialization may generate useless agents because it uses no systematic knowledge of the search space. Therefore, OBL is employed to form the initial population. The opposite value $\tilde{x}$ of a real number x ∈ [l, u] (l, u ∈ ℝ) is defined as (Tizhoosh 2005):

$$\tilde{x} = l + u - x \tag{3}$$

where l and u are the lower and upper limits of x, respectively.

Firstly, a population $X = \{X_1, \dots, X_N\}$ is generated from the uniform distribution. Secondly, the opposite vector $\tilde{X}_i$ of each agent is calculated component by component by Equation (3). Thirdly, the objective values of the populations $X$ and $\tilde{X}$ are computed, and the best N agents are selected from $X \cup \tilde{X}$ as the initial population.
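The three initialization steps can be sketched as follows for a minimization problem with box bounds. This is an illustrative sketch under those assumptions; the function name `opposition_init` and its parameters are hypothetical.

```python
import random


def opposition_init(objective, n, lower, upper, rng=random):
    """Opposition-based initialization (minimization).

    1) sample n agents uniformly in [lower, upper];
    2) build their opposite agents with Eq. (3), component by component;
    3) keep the n best agents of the union.
    """
    d = len(lower)
    pop = [[rng.uniform(lower[j], upper[j]) for j in range(d)] for _ in range(n)]
    opp = [[lower[j] + upper[j] - x[j] for j in range(d)] for x in pop]  # Eq. (3)
    union = pop + opp
    union.sort(key=objective)  # smaller objective value = better agent
    return union[:n]
```

For a one-dimensional objective f(x) = x on [0, 10], each pair (x, 10 − x) contains a value no larger than 5, so every selected agent lies in [0, 5].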

Greedy Search

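In the early search stage of SCA (r1 > 1), an agent can move beyond the destination, so the optimal position might be skipped over. Therefore, greedy search is employed to update the position of each agent, i.e.

$$X_i^{t+1} = \begin{cases} X_i', & f(X_i') < f(X_i^{t}) \\ X_i^{t}, & \text{otherwise} \end{cases} \tag{4}$$

where $X_i^{t}$ and $X_i'$ are the parent position and the new position of the i-th agent, respectively, and f is the objective function to be minimized.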
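A minimal sketch of the greedy acceptance rule, assuming minimization. In a real loop the parent's objective value would be cached instead of recomputed; this illustration skips that for brevity, and the name `greedy_select` is hypothetical.

```python
def greedy_select(parent, candidate, objective):
    """Eq. (4): accept the candidate only if it strictly improves the objective.

    Returns the surviving position and its objective value (minimization).
    """
    f_parent = objective(parent)
    f_cand = objective(candidate)
    if f_cand < f_parent:
        return candidate, f_cand
    return parent, f_parent
```

Combined with an SCA step, `greedy_select(x, sca_step(x), f)` (where `sca_step` stands for any implementation of Equation (1)) can never worsen an agent, which is how the greedy search prevents good solutions from being lost.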

Combined with Differential Evolution

The standard SCA uses only the knowledge of the best-so-far agent to produce new members, which may result in insufficient population diversity and premature convergence. Hence, DE is incorporated into SCA in a parallel way. Any position component that oversteps the boundary is reset to the mean of the violated bound and the corresponding component of the parent position. In each iteration, agent i is updated by the SCA method if randi < pui, where randi is a random number in [0, 1] and pui ∈ [0, 1] is the updating rate. Otherwise, agent i is updated by the DE method as follows.

Firstly, a donor (mutant) vector $V_i^{t}$ is produced from the parent vectors by Equation (5), where the indexes R1, R2, and R3 are integers generated at random from [1, N], and Fi ∈ (0, 1] is the scaling factor of agent i. $X_{pt}^{t}$ is the position of an agent picked at random from the top N × pt (pt ∈ (0, 1)) agents of the population at the t-th iteration (the agents in the population are sorted by their fitness values), and randm is a random number in [0, 1].

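Secondly, a trial vector $U_i^{t}$ is generated by crossing $V_i^{t}$ with $X_i^{t}$:

$$U_{i,j}^{t} = \begin{cases} V_{i,j}^{t}, & r_{ij} \le cr_i \ \text{or}\ j = j_0 \\ X_{i,j}^{t}, & \text{otherwise} \end{cases} \tag{6}$$

where r_ij is a random number uniformly distributed in [0, 1], j0 is a random integer generated from [1, D], and cr_i ∈ [0, 1] denotes the crossover rate.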

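Finally, the survivor is the better of $U_i^{t}$ and $X_i^{t}$:

$$X_i^{t+1} = \begin{cases} U_i^{t}, & f(U_i^{t}) \le f(X_i^{t}) \\ X_i^{t}, & \text{otherwise} \end{cases} \tag{7}$$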
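The DE branch can be sketched as a single function that performs mutation, the boundary repair described above, binomial crossover (Equation (6)), and survivor selection (Equation (7)). Because Equation (5) is not reproduced in this text, the sketch plainly substitutes SHADE's current-to-pbest/1 mutation as a stand-in; it is not necessarily the paper's exact operator. The function name `de_step` and its parameters are hypothetical, and minimization is assumed.

```python
import random


def de_step(pop, fit, i, F, cr, p_top, lower, upper, objective, rng=random):
    """One DE update of agent i (minimization).

    ASSUMPTION: the mutation is SHADE's current-to-pbest/1, used only as a
    stand-in for Eq. (5). Returns (survivor, survivor_fitness, improved).
    """
    n, d = len(pop), len(pop[0])
    x = pop[i]
    ranked = sorted(range(n), key=lambda k: fit[k])          # best agents first
    pbest = pop[rng.choice(ranked[:max(1, int(n * p_top))])]  # X_pt: one of the top N*pt
    r1, r2 = rng.sample([k for k in range(n) if k != i], 2)   # two distinct indexes != i
    v = [x[j] + F * (pbest[j] - x[j]) + F * (pop[r1][j] - pop[r2][j]) for j in range(d)]
    for j in range(d):  # boundary repair: midpoint of violated bound and parent component
        if v[j] < lower[j]:
            v[j] = (lower[j] + x[j]) / 2.0
        elif v[j] > upper[j]:
            v[j] = (upper[j] + x[j]) / 2.0
    j0 = rng.randrange(d)  # Eq. (6): dimension j0 always comes from the mutant
    u = [v[j] if (rng.random() <= cr or j == j0) else x[j] for j in range(d)]
    fu = objective(u)  # Eq. (7): keep the better of trial and parent
    if fu <= fit[i]:
        return u, fu, fu < fit[i]
    return x, fit[i], False
```

The `improved` flag marks strict improvements; those are the cases whose Fi and cri values would be recorded for the parameter adaptation described next.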

Success History-Based Parameter Adaptation

To reduce invalid search, HGSCADE adapts the critical parameters using the mechanism developed by Tanabe and Fukunaga (2013). As shown in Table 1, the historical information for the three memory parameters Mpu, MF, and Mcr is stored in H entries. At each iteration, pui, Fi, and cri for each agent i are generated as follows:

$$pu_i = \mathrm{randn}_i(M_{pu,r_i}, 0.1),\qquad F_i = \mathrm{randc}_i(M_{F,r_i}, 0.1),\qquad cr_i = \mathrm{randn}_i(M_{cr,r_i}, 0.1) \tag{8}$$

Table 1. The historical memory

where the index ri is randomly selected from [1, H], and randni(μ, σ) and randci(μ, σ) denote values drawn from a Gaussian distribution and a Cauchy distribution with location μ and scale σ, respectively. If the generated value of pui or cri lies outside [0, 1], it is set to the closest limit. Fi is truncated to 1 if Fi > 1 and regenerated if Fi ≤ 0.

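At each iteration, the values of pui, Fi, and cri that successfully generate a superior offspring are recorded in Spu, SF, and Scr. If and only if superior offspring are generated, Mpu, MF, and Mcr are updated as follows:

$$M_{pu,k}^{t+1} = \mathrm{mean}_{WA}(S_{pu}),\qquad M_{F,k}^{t+1} = \mathrm{mean}_{WL}(S_F),\qquad M_{cr,k}^{t+1} = \mathrm{mean}_{WA}(S_{cr}) \tag{9}$$

where the index k (1 ≤ k ≤ H) indicates the entry to be updated. k is initialized to 1 at the first iteration and is incremented at each iteration; when k > H, k is reset to 1. meanWA(Spu) and meanWA(Scr) are weighted arithmetic means, and meanWL(SF) is the weighted Lehmer mean:

$$\mathrm{mean}_{WA}(S) = \sum_{s=1}^{|S|} w_s S_s,\qquad \mathrm{mean}_{WL}(S_F) = \frac{\sum_{s=1}^{|S_F|} w_s S_{F,s}^{2}}{\sum_{s=1}^{|S_F|} w_s S_{F,s}} \tag{10}$$

where $w_s = \Delta f_s \big/ \sum_{l=1}^{|S|} \Delta f_l$ and $\Delta f_s = \lvert f(U_s^{t}) - f(X_s^{t})\rvert$.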
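The sampling and memory update can be sketched with the standard library only. This sketch assumes σ = 0.1 (SHADE's default), 0-based memory indexes, and equal weights as a fallback when all recorded improvements are zero; the names `sample_params` and `update_memory` are hypothetical.

```python
import math
import random


def sample_params(m_pu, m_f, m_cr, r, rng=random):
    """Eq. (8): draw (pu_i, F_i, cr_i) from memory slot r (0-based).

    sigma = 0.1 is SHADE's default and is assumed here.
    """
    pu = min(1.0, max(0.0, rng.gauss(m_pu[r], 0.1)))  # clip to [0, 1]
    cr = min(1.0, max(0.0, rng.gauss(m_cr[r], 0.1)))  # clip to [0, 1]
    while True:
        # Cauchy(m_f[r], 0.1) sample via the inverse CDF
        f = m_f[r] + 0.1 * math.tan(math.pi * (rng.random() - 0.5))
        if f > 0.0:
            return pu, min(f, 1.0), cr  # truncate F > 1 to 1; regenerate F <= 0


def update_memory(m_pu, m_f, m_cr, k, s_pu, s_f, s_cr, delta_f):
    """Eqs. (9)-(10): overwrite slot k (0-based) with weighted means of successes."""
    if not s_f:  # no superior offspring this iteration: memory unchanged
        return
    total = sum(delta_f)
    # Improvement-based weights; equal weights if all improvements are 0 (assumption)
    w = [df / total for df in delta_f] if total > 0 else [1.0 / len(delta_f)] * len(delta_f)
    m_pu[k] = sum(wi * s for wi, s in zip(w, s_pu))  # weighted arithmetic mean
    m_cr[k] = sum(wi * s for wi, s in zip(w, s_cr))  # weighted arithmetic mean
    m_f[k] = (sum(wi * s * s for wi, s in zip(w, s_f))
              / sum(wi * s for wi, s in zip(w, s_f)))  # weighted Lehmer mean
```

With successes Spu = [0.2, 0.8] and improvements [1, 3], the weights are [0.25, 0.75], so the new Mpu entry is 0.25 × 0.2 + 0.75 × 0.8 = 0.65. The Lehmer mean for F is biased toward larger successful values, which is the reason SHADE uses it instead of the arithmetic mean.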

Local Search Based on Levy Flight

SCA may stagnate if the best agent is trapped in a local optimum. Therefore, a Levy flight disturbance (Jensi and Jiji 2016) is applied to the best agent in the current population:

$$X_{cb}^{Levy} = X_{cb} + step \tag{11}$$

where step = Levy(β) ⊕ Xcb, and the symbol ⊕ denotes entry-wise multiplication. Levy(β) is derived from Equation (12):

$$Levy(\beta) = scale \times \frac{u}{\lvert v\rvert^{1/\beta}} \tag{12}$$

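where scale is a factor that controls the step size of the Levy flight, β ∈ (0, 2] is the Levy index, and u and v are drawn from Gaussian distributions, i.e.

$$u \sim N(0, \sigma_u^{2}),\quad v \sim N(0, \sigma_v^{2}),\quad \sigma_u = \left[\frac{\Gamma(1+\beta)\,\sin(\pi\beta/2)}{\Gamma\!\left(\frac{1+\beta}{2}\right)\beta\, 2^{(\beta-1)/2}}\right]^{1/\beta},\quad \sigma_v = 1 \tag{13}$$

where Γ is the standard gamma function.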

Then, the opposite vector of XcbLevy is computed by Equation (3), and Xcb is replaced by the best of the candidate positions obtained in this step.
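The Levy flight-based local search can be sketched with Mantegna's algorithm for Equations (12) and (13). Two details are assumptions of this sketch, not statements about the authors' code: out-of-bound components are repaired with the same midpoint rule used in the DE step, and Xcb itself is kept among the candidates so the step is greedy. The names `levy_step` and `levy_local_search` are hypothetical.

```python
import math
import random


def levy_step(beta, d, scale=1.0, rng=random):
    """Eqs. (12)-(13): Mantegna's algorithm for a d-dimensional Levy(beta) vector."""
    sigma_u = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
               / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    return [scale * rng.gauss(0.0, sigma_u) / abs(rng.gauss(0.0, 1.0)) ** (1 / beta)
            for _ in range(d)]


def levy_local_search(x_cb, objective, beta, scale, lower, upper, rng=random):
    """Levy disturbance of the current best agent, then an opposition check.

    Assumptions: midpoint boundary repair, and X_cb stays among the candidates.
    """
    levy = levy_step(beta, len(x_cb), scale, rng)
    x_levy = []
    for xj, lj, lo, up in zip(x_cb, levy, lower, upper):
        v = xj + lj * xj  # Eq. (11) with step = Levy(beta) (entry-wise *) X_cb
        if v < lo:
            v = (lo + xj) / 2.0
        elif v > up:
            v = (up + xj) / 2.0
        x_levy.append(v)
    x_opp = [lo + up - v for v, lo, up in zip(x_levy, lower, upper)]  # Eq. (3)
    return min([x_cb, x_levy, x_opp], key=objective)  # minimization
```

Because the step is proportional to Xcb, the disturbance shrinks as the best agent approaches the origin; the heavy tail of the Levy distribution still produces occasional long jumps that help the agent leave a local optimum.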

Overall Implementation

The overall implementation steps of HGSCADE are described in Algorithm 1. HGSCADE involves three stages. Firstly, OBL is employed to form the initial population, and the parameters are initialized. Secondly, each agent in the population is updated by either the greedy SCA method or the DE method according to the updating rate, and the success history-based mechanism adapts the critical parameters. Thirdly, the Levy flight-based local search is applied to the current best agent. The search continues until the termination condition is reached.

Algorithm 1 The HGSCADE

1. Randomly generate a population X of N agents.
2. Compute the opposite population $\tilde{X}$ by Equation (3).
3. Evaluate the fitness of X and $\tilde{X}$ by calculating the corresponding objective function values.
4. Select the best N agents from $X \cup \tilde{X}$.
5. Initialize the values of parameters a, Mpu, MF, Mcr, pt, H, β, and scale.
6. k = 1, t = 0
7. While (t < T) do
8. Update the global best position Xgb
9. Randomly select an index ri from [1, H]
10. Generate pu, F, and cr by Equation (8)
11. For (i = 1 : N) do
12. Generate a random number randi in [0, 1]
13. If (randi < pui) then
14. Update the position of the i-th agent by Equations (1) and (4)
15. Else
16. Randomly select an agent Xpt from the top N × pt (pt ∈ (0, 1)) members of the population
17. Update the position of the i-th agent by Equations (5), (6), and (7)
18. End if
19. End for
20. Evaluate the fitness of each agent by calculating the corresponding objective function value
21. Update Mpu, MF, and Mcr using Equation (9)
22. k = k + 1
23. If (k > H) then
24. k = 1
25. End if
26. Perform the Levy flight disturbance on the current best position Xcb by Equation (11)
27. Compute the opposite position of XcbLevy
28. Update the current best position Xcb
29. Update r1, r2, r3, and r4
30. t = t + 1
31. End While
32. Return the global best position Xgb

Results and Discussion

In this section, the performance of HGSCADE is evaluated on the CEC2014 benchmark functions. This test suite contains 30 functions, which can be categorized as unimodal functions (H1–H3), simple multimodal functions (H4–H16), hybrid functions (H17–H22), and composition functions (H23–H30) (Liang, Qu, and Suganthan 2013). The population size N is set to 50, and the maximum number of function evaluations is fixed at $10^4 \times D$ (D denotes the problem dimension) for all problems. Fifty-one independent runs are executed for each problem. The error value of each run (the difference between the best solution obtained and the true optimal solution) is truncated to 0 if it is smaller than $10^{-8}$. The mean and standard deviation (Std.) of the error values are reported, and the best results are highlighted in bold.
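The evaluation budget and the error statistics described above can be sketched as follows. Whether the paper reports the sample or the population standard deviation is not stated, so the use of the sample form here is an assumption; the function names are hypothetical.

```python
import statistics


def max_function_evaluations(dim):
    """Evaluation budget used for CEC2014 in this study: 10^4 x D."""
    return 10_000 * dim


def summarize_errors(best_values, f_opt, tol=1e-8):
    """Mean and Std. of the error values over independent runs.

    Errors below tol are truncated to 0. The sample standard deviation is an
    assumption; the population form would divide by n instead of n - 1.
    """
    errors = [v - f_opt for v in best_values]
    errors = [0.0 if e < tol else e for e in errors]
    return statistics.mean(errors), statistics.stdev(errors)
```

For D = 30 the budget is 300,000 function evaluations per run, and for D = 50 it is 500,000.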

Compared Algorithms

To verify the effectiveness and competitiveness of the proposed algorithm, it is compared with the standard SCA, other state-of-the-art MAs, and the variants of SCA:

SCA: a population-based optimization algorithm that updates the population using sine and cosine functions (Mirjalili 2016).

DE: a powerful and straightforward evolutionary algorithm originally designed for real-parameter optimization problems (Storn and Price 1997).

ABC: a swarm-based optimization algorithm that simulates the intelligent foraging behavior of a honeybee swarm (Karaboga and Basturk 2007).

GWO: a swarm intelligence algorithm that mimics the social behavior of gray wolves in chasing and hunting prey (Mirjalili, Mirjalili, and Lewis 2014).

WOA: a meta-heuristic algorithm inspired by the bubble-net hunting strategy of humpback whales (Mirjalili and Lewis 2016).

SSA: a bio-inspired algorithm based on the swarming behavior of salps when navigating and foraging in oceans (Mirjalili et al. 2017).

HSCAPSO: a hybrid algorithm that adds the memory-enabled behavior of PSO to the SCA approach and hybridizes SCA with PSO at the iteration level (Nenavath, Kumar Jatoth, and Das 2018).

HSCADE: a hybrid algorithm that incorporates DE operators into SCA at the iteration level and performs a greedy search (Nenavath and Jatoth 2018).

PSOSCALF: a hybrid algorithm that combines PSO with the position-updating equations of SCA and Levy flight (Chegini, Bagheri, and Najafi 2018).

m-SCA: a hybrid algorithm that adds a self-adaptive component to SCA and incorporates opposite perturbation into SCA (Gupta and Deep 2019).

The parameter settings of the existing algorithms are taken from the original references. The parameter settings of HGSCADE combine the suggested settings of SCA (a, r2, r3, r4) (Mirjalili 2016), SHADE (Mpu, MF, Mcr, pt, H) (Tanabe and Fukunaga 2013), and PSOLF (β, scale) (Jensi and Jiji 2016). The parameter values of all algorithms are listed in Table 2.

Table 2. The parameters of algorithms and their values

Test Results

The CEC2014 benchmark functions are considered with dimensions D = 30 and D = 50. Due to space constraints, only the test results for D = 30 are reported, in Table 5.

Table 3. Wilcoxon signed rank test between HGSCADE and other algorithms for the CEC2014 functions with D = 30 at a 5% level of significance

As seen in Table 5, HGSCADE provides the best mean results on all unimodal functions (H1–H3). By introducing greedy search, DE, and Levy flight-based local search, the population can exploit the neighborhood of every individual best position, which enhances the exploitation ability of HGSCADE.

For the simple multimodal functions, HGSCADE provides the best mean results on functions H4–H6, H8–H12, H15, and H16; ABC on functions H8, H13, and H14; and DE on functions H7 and H8. By inheriting the randomness of SCA and the Levy flight disturbance, the population can explore the whole search space, which enhances the exploration ability of HGSCADE.

HGSCADE provides the best mean results on all hybrid functions (H17–H22). For the composition functions, HGSCADE provides the best mean results on functions H23, H25, H26, H29, and H30; DE on functions H23 and H26–H28; ABC on functions H23 and H26; and SSA on function H26. For function H24, SCA, GWO, and m-SCA give the same best mean result. Through the success history-based adaptation of pui, each agent is automatically assigned to the SCA method or the DE method, which balances exploration and exploitation.

In addition, HGSCADE achieves the best overall standard deviation, i.e., it has the highest stability. The opposition-based population initialization, the greedy search, and the success history-based parameter adaptation improve the stability of HGSCADE.

Statistical Analysis

To account for the result of every run, the non-parametric Wilcoxon signed rank test is performed at a significance level of 5%. The calculated p-value determines whether the null hypothesis is accepted or rejected. The symbols +, ≈, and – indicate that HGSCADE is superior, similar, or inferior to the compared algorithm, respectively. N/A indicates that the two samples are identical, so the Wilcoxon signed rank test is not applicable. The test results are presented in Table 3, and the grades are summarized in the last row of the table.
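The grading procedure can be sketched with a self-contained Wilcoxon signed rank test. This sketch rests on several assumptions: it uses the normal approximation (reasonable for 51 paired runs) without a tie correction of the variance, discards zero differences, and assigns + or – by comparing the rank sums R+ and R−. In practice a statistics library would normally be used instead; the names `wilcoxon_signed_rank` and `grade` are hypothetical.

```python
import math


def wilcoxon_signed_rank(a, b):
    """Two-sided Wilcoxon signed rank test on paired samples a, b.

    Normal approximation; zero differences are dropped and tied |d| values get
    average ranks (no tie correction of the variance, for brevity).
    Returns (p_value, r_plus, r_minus), or None if all pairs are identical.
    """
    d = [x - y for x, y in zip(a, b) if x != y]
    n = len(d)
    if n == 0:
        return None
    order = sorted(range(n), key=lambda k: abs(d[k]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        for k in range(i, j + 1):  # average rank for the tie group i..j
            ranks[order[k]] = (i + j) / 2.0 + 1.0
        i = j + 1
    r_plus = sum(r for r, dk in zip(ranks, d) if dk > 0)
    r_minus = sum(r for r, dk in zip(ranks, d) if dk < 0)
    mu = n * (n + 1) / 4.0
    sigma = math.sqrt(n * (n + 1) * (2 * n + 1) / 24.0)
    z = (min(r_plus, r_minus) - mu) / sigma
    return math.erfc(abs(z) / math.sqrt(2.0)), r_plus, r_minus


def grade(errors_hgscade, errors_other, alpha=0.05):
    """Return '+', '≈', '–' or 'N/A' as defined in the text (minimization)."""
    result = wilcoxon_signed_rank(errors_hgscade, errors_other)
    if result is None:
        return "N/A"
    p, r_plus, r_minus = result
    if p >= alpha:
        return "≈"
    # d = HGSCADE - other: a larger R- means HGSCADE's errors tend to be smaller
    return "+" if r_minus > r_plus else "–"
```

Counting the "+", "≈", and "–" grades over the 30 functions gives the summary row of the test table.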

Table 4. The cylindricity error evaluation results of different algorithms

From Table 3, HGSCADE performs better than HSCAPSO, HSCADE, and PSOSCALF on all the CEC2014 benchmark functions for D = 30. HGSCADE performs better than SCA, DE, ABC, GWO, WOA, SSA, and m-SCA on 29, 22, 26, 29, 28, 26, and 28 of the 30 CEC2014 benchmark functions for D = 30, respectively. This confirms the test results: HGSCADE is the best algorithm among those compared.

Convergence Analysis

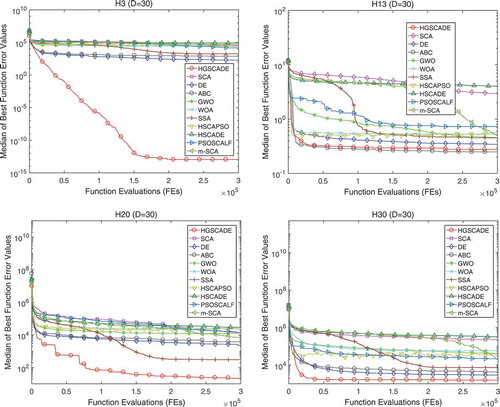

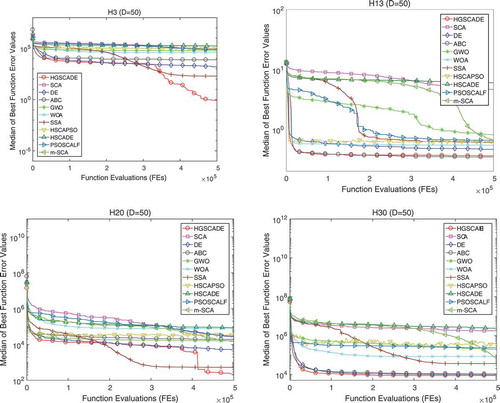

Because of the large number of functions, four functions from different categories (H3, H13, H20, and H30) are selected to exemplify the convergence behavior. The vertical axis shows the median of the best values obtained on a logarithmic scale, and the horizontal axis shows the number of function evaluations. The convergence curves for the selected functions are shown in Figures 1 and 2.

Figure 1. The convergence curves of all algorithms for certain CEC2014 functions (H3, H13, H20, and H30) with D = 30

Figure 2. The convergence curves of all algorithms for certain CEC2014 functions (H3, H13, H20, and H30) with D = 50

HGSCADE converges to an accurate solution faster than the compared algorithms on all selected functions except H13, which indicates that HGSCADE balances exploration and exploitation well. The opposition-based population initialization provides well-distributed initial solutions, and the greedy search avoids discarding good solutions; together they accelerate convergence. DE enhances the exploitation ability and maintains population diversity, the Levy flight-based local search helps avoid local optima, and the success history-based parameter adaptation strengthens stability; together they improve the solution accuracy.

Complexity Analysis

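The complexity of HGSCADE (in O notation) is the sum of the costs of its components: O(HGSCADE) = O(position initialization by OBL) + O(position updating by greedy SCA) + O(position updating by DE) + O(global best position updating) + O(current best position updating by local search), i.e.

$$O(\text{HGSCADE}) = O(2ND) + O(TND) + O(TN) + O(TD) = O(TND)$$

where N is the population size, D is the problem dimension, and T is the maximum number of iterations. The second term covers both the greedy SCA and the DE updates, because each agent is updated by exactly one of the two methods per iteration (the cost of sorting the population for the top-pt selection is neglected). Similarly, the complexity of SCA is O(TND). The opposition-based population initialization, the greedy search, and the Levy flight-based local search therefore add extra computation to the proposed algorithm, although the overall order of complexity is unchanged.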

HGSCADE for Cylindricity Error Evaluation

Mathematical Model of Cylindricity Error

The cylindricity error f is defined as the minimum radius difference between two coaxial theoretical cylinders that envelop the actual contour of a cylindrical part. Assume that the measured points in the Cartesian coordinate system are pi(xi, yi, zi) (i = 1, 2, … , n), where xi, yi, and zi are coordinate values and n is the number of measured points. Let the common axis L of the cylinders pass through the fixed point (x0, y0, z0) with directional vector [a, b, c]. Then, the parametric equation of L can be written as:

$$x = x_0 + a\lambda,\qquad y = y_0 + b\lambda,\qquad z = z_0 + c\lambda,\qquad \lambda \in \mathbb{R}$$

The distance from point pi to axis L can be calculated by:

$$r_i = \frac{\sqrt{A^2 + B^2 + C^2}}{\sqrt{a^2 + b^2 + c^2}}$$

where A = (yi − y0) × c − (zi − z0) × b, B = (zi − z0) × a − (xi − x0) × c, and C = (xi − x0) × b − (yi − y0) × a.

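Finally, the cylindricity error is defined as:

$$f = \min_{x_0,\,y_0,\,z_0,\,a,\,b,\,c}\left(\max_{1 \le i \le n} r_i - \min_{1 \le i \le n} r_i\right)$$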
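The model above translates directly into an objective function over the six variables (x0, y0, z0, a, b, c) that HGSCADE or any of the compared algorithms can minimize. This is a minimal sketch; the function names are hypothetical, and returning infinity for a zero directional vector is an assumption that keeps the objective defined on the whole search box [−1, 1] for (a, b, c).

```python
import math


def radial_distances(points, axis):
    """Distance r_i from each measured point to the axis L (cross-product form)."""
    x0, y0, z0, a, b, c = axis
    norm = math.sqrt(a * a + b * b + c * c)
    out = []
    for x, y, z in points:
        A = (y - y0) * c - (z - z0) * b
        B = (z - z0) * a - (x - x0) * c
        C = (x - x0) * b - (y - y0) * a
        out.append(math.sqrt(A * A + B * B + C * C) / norm)
    return out


def cylindricity_objective(axis, points):
    """max r_i - min r_i for a candidate axis; minimizing it over the axis gives f."""
    x0, y0, z0, a, b, c = axis
    if a == 0 and b == 0 and c == 0:
        return math.inf  # degenerate direction: no axis defined (assumption)
    r = radial_distances(points, axis)
    return max(r) - min(r)
```

For points lying exactly on a cylinder around the z-axis, the candidate axis (0, 0, 0, 0, 0, 1) gives an objective of (numerically) 0, as expected for a perfect form.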

Data Sets

Five measured data sets from the literature are used in the experiments: Data set 1 (24 samples, from Lai et al. 2000), Data set 2 (32 samples, from Yang et al. 2018), Data set 3 (80 samples, from Lei et al. 2011), Data set 4 (24 samples, from Li et al. 2009), and Data set 5 (60 samples, from Zhao, Wen, and Xu 2015).

Experiments and Analysis

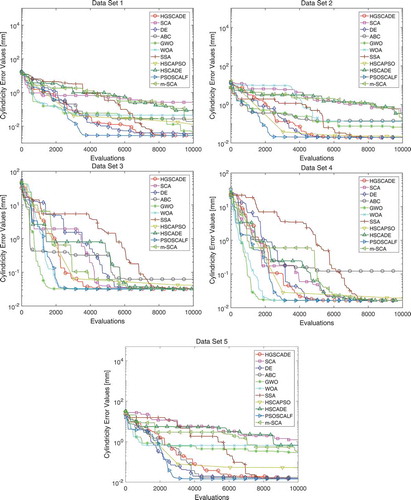

HGSCADE is applied to cylindricity error evaluation and compared with the other algorithms. For all algorithms, the population size is fixed at 30, the search space of (x0, y0, z0) is set to the range of the data, and the search space of (a, b, c) is set to [−1, 1]. The search terminates when the number of function evaluations reaches 10,000. The evaluation results (the mean of 30 independent evaluation values for each data set) of HGSCADE and the comparison algorithms are listed in Table 4. The convergence curves of HGSCADE and the comparison algorithms are shown in the figure above.

Table 5. Mean and standard deviation of 51 independent runs for all algorithms on the CEC2014 functions with D = 30

As can be observed from Table 4, the cylindricity error evaluation results of HGSCADE are much better than those of SCA, ABC, WOA, HSCAPSO, HSCADE, and LSC for all data sets. Although SSA and PSOSCALF give the same results as HGSCADE for Data sets 1 and 4, and DE and GWO give the same results as HGSCADE for Data set 4, none of them gives the best results for all data sets. Moreover, the results of HGSCADE agree well with the published results (equal for Data sets 1, 4, and 5, slightly worse for Data set 2, and slightly better for Data set 3). The convergence curves show that HGSCADE is not the fastest algorithm, but it gives the best evaluation results with reasonable efficiency. HGSCADE keeps a good balance between exploration and exploitation in cylindricity error evaluation, so satisfactory results are obtained. The experiment verifies the applicability of HGSCADE to cylindricity error evaluation.

Conclusions

In this study, a novel HGSCADE algorithm is proposed for solving optimization problems and evaluating cylindricity error. The proposed algorithm combines SCA with five strategies: (1) opposition-based learning, (2) greedy search, (3) differential evolution, (4) success history-based parameter adaptation, and (5) local search based on Levy flight. HGSCADE is compared with the standard SCA, other state-of-the-art MAs, and SCA variants on the CEC2014 benchmark functions. The comparison and analysis of the test results show that HGSCADE performs better than the other compared algorithms. In addition, the proposed algorithm is applied to the cylindricity error evaluation of five measured cylindrical parts and is verified to be an effective means of evaluating cylindricity error.

However, the test results of HGSCADE on several CEC2014 functions are worse than those of certain compared algorithms (SSA for H5, H7, and H8; DE for H23, H24, and H27; ABC for H14; SCA, GWO, WOA, and m-SCA for H24). This is consistent with the NFL theorem. Future work will concentrate on two directions: (1) applying HGSCADE to other engineering optimization problems, and (2) extending the study of SCA to more complex problems.

Disclosure Statement

The authors declare that they have no conflict of interest.


References

- Abd Elaziz, M., D. Oliva, and S. W. Xiong. 2017. An improved opposition-based sine cosine algorithm for global optimization. Expert Systems with Applications 90:484–500. doi:10.1016/j.eswa.2017.07.043.

- Attia, A. F., R. A. El Sehiemy, and H. M. Hasanien. 2018. Optimal power flow solution in power systems using a novel sine-cosine algorithm. International Journal of Electrical Power & Energy Systems 99:331–43.

- Chegini, S. N., A. Bagheri, and F. Najafi. 2018. PSOSCALF: A new hybrid PSO based on sine cosine algorithm and Levy flight for solving optimization problems. Applied Soft Computing 73:697–726.

- Das, S., A. Bhattacharya, and A. K. Chakraborty. 2018. Solution of short-term hydrothermal scheduling using sine cosine algorithm. Soft Computing 22:6409–27.

- Dorigo, M., and G. Di Caro. 1999. Ant colony optimization: A new meta-heuristic. Proceedings of the 1999 Congress on Evolutionary Computation-CEC99 (Cat. No. 99TH8406), Washington, DC, USA, 1470–77. IEEE.

- Geem, Z. W., J. H. Kim, and G. V. Loganathan. 2001. A new heuristic optimization algorithm: Harmony search. Simulation 76 (2):60–68.

- Gupta, S., and K. Deep. 2019. A hybrid self-adaptive sine cosine algorithm with opposition based learning. Expert Systems with Applications 119:210–30.

- Issa, M., A. E. Hassanien, D. Oliva, A. Helmi, I. Ziedan, and A. Alzohairy. 2018. ASCA-PSO: Adaptive sine cosine optimization algorithm integrated with particle swarm for pairwise local sequence alignment. Expert Systems with Applications 99:56–70.

- Jensi, R., and G. W. Jiji. 2016. An enhanced particle swarm optimization with levy flight for global optimization. Applied Soft Computing 43:248–61.

- Karaboga, D., and B. Basturk. 2007. A powerful and efficient algorithm for numerical function optimization: Artificial bee colony (ABC) algorithm. Journal of Global Optimization 39:459–71.

- Kennedy, J., and R. Eberhart. 1995. Particle swarm optimization. Proceedings of ICNN’95 - International Conference on Neural Networks, Perth, WA, Australia, 1942–48. IEEE.

- Lai, H. Y., W. Y. Jywe, C. K. Chen, and C. H. Liu. 2000. Precision modeling of form errors for cylindricity evaluation using genetic algorithms. Precision Engineering 24 (4):310–19.

- Lei, X. Q., H. W. Song, Y. J. Xue, J. S. Li, J. Zhou, and M. D. Duan. 2011. Method for cylindricity error evaluation using geometry optimization searching algorithm. Measurement 44 (9):1556–63.

- Li, J. S., X. Q. Lei, Y. J. Xue, and M. D. Duan. 2009. Evaluation algorithm of cylindricity error based on coordinate transformation. China Mechanical Engineering 20 (16):1983–87.

- Li, S., H. J. Fang, and X. Y. Liu. 2018. Parameter optimization of support vector regression based on sine cosine algorithm. Expert Systems with Applications 91:63–77.

- Liang, J. J., B. Y. Qu, and P. N. Suganthan. 2013. Problem definitions and evaluation criteria for the CEC 2014 special session and competition on single objective real-parameter numerical optimization. Singapore: Zhengzhou University, Zhengzhou, China and Nanyang Technological University.

- Mirjalili, S. 2015. The ant lion optimizer. Advances in Engineering Software 83:80–98.

- Mirjalili, S. 2016. SCA: A sine cosine algorithm for solving optimization problems. Knowledge-Based Systems 96:120–33.

- Mirjalili, S., A. H. Gandomi, S. Z. Mirjalili, S. Saremi, H. Faris, and S. M. Mirjalili. 2017. Salp swarm algorithm: A bio-inspired optimizer for engineering design problems. Advances in Engineering Software 114:163–91.

- Mirjalili, S., and A. Lewis. 2016. The whale optimization algorithm. Advances in Engineering Software 95:51–67.

- Mirjalili, S., S. M. Mirjalili, and A. Lewis. 2014. Grey wolf optimizer. Advances in Engineering Software 69:46–61.

- Nenavath, H., and R. K. Jatoth. 2018. Hybridizing sine cosine algorithm with differential evolution for global optimization and object tracking. Applied Soft Computing 62:1019–43.

- Nenavath, H., R. Kumar Jatoth, and S. Das. 2018. A synergy of the sine-cosine algorithm and particle swarm optimizer for improved global optimization and object tracking. Swarm and Evolutionary Computation 43:1–30.

- Passino, K. M. 2002. Biomimicry of bacterial foraging for distributed optimization and control. IEEE Control Systems Magazine 22 (3):52–67.

- Rashedi, E., H. Nezamabadi-pour, and S. Saryazdi. 2009. GSA: A gravitational search algorithm. Information Sciences 179 (13):2232–48.

- Rizk-Allah, R. M. 2018. Hybridizing sine cosine algorithm with multi-orthogonal search strategy for engineering design problems. Journal of Computational Design and Engineering 5 (2):249–73.

- Simon, D. 2008. Biogeography-based optimization. IEEE Transactions on Evolutionary Computation 12 (6):702–13.

- Sindhu, R., R. Ngadiran, Y. M. Yacob, N. A. H. Zahri, and M. Hariharan. 2017. Sine-cosine algorithm for feature selection with elitism strategy and new updating mechanism. Neural Computing and Applications 28:2947–58.

- Srinivas, M., and L. M. Patnaik. 1994. Genetic algorithms: A survey. Computer 27 (6):17–26.

- Storn, R., and K. Price. 1997. Differential evolution – A simple and efficient heuristic for global optimization over continuous spaces. Journal of Global Optimization 11:341–59.

- Tanabe, R., and A. Fukunaga. 2013. Success-history based parameter adaptation for differential evolution. Proceedings of 2013 IEEE Congress on Evolutionary Computation, Cancún, México, 71–78. IEEE.

- Tizhoosh, H. R. 2005. Opposition-based learning: A new scheme for machine intelligence. Proceedings of International Conference on Computational Intelligence for Modelling, Control and Automation and International Conference on Intelligent Agents, Web Technologies and Internet Commerce (CIMCA-IAWTIC’06), Vienna, Austria, 695–701. IEEE.

- Wen, X. L., J. C. Huang, D. H. Sheng, and F. L. Wang. 2010. Conicity and cylindricity error evaluation using particle swarm optimization. Precision Engineering 34 (2):338–44.

- Wolpert, D. H., and W. G. Macready. 1997. No free lunch theorems for optimization. IEEE Transactions on Evolutionary Computation 1 (1):67–82.

- Yang, X. S. 2008. Introduction to mathematical optimization: From linear programming to metaheuristics. Cambridge: Cambridge International Science Publishing.

- Yang, Y., M. Li, C. Wang, and Q. Y. Wei. 2018. Cylindricity error evaluation based on an improved harmony search algorithm. Scientific Programming 2:1–13.

- Zhao, Y. B., X. L. Wen, and Y. X. Xu. 2015. Cylindricity error inspection and evaluation based on CMM and QPA. China Mechanical Engineering 26 (18):2432–36.