ABSTRACT

This paper presents a novel method for texture classification and biometric authentication based on a descriptor called weighted mean-based patterns (WEM). The proposed descriptor was developed to extract texture features from a large dataset of hand images created by the authors. The method uses the distinctive features of the finger knuckle print (FKP) for hand image retrieval, which can be used in biometric identity recognition systems. It also includes a feature selection step that eliminates less important patterns and a weighted distance measure that quantifies the similarity of images, and it uses a support vector machine (SVM) in the classification stage. The proposed method was tested on the FKP image dataset to evaluate image retrieval performance, and on the Brodatz, Vistex, and Stex datasets to evaluate texture classification performance. Comparison with other methods demonstrates the higher performance of the proposed method, which is shown to be sufficiently precise for a variety of applications, including identity recognition and classification.

Introduction

With the growth in the number of digital images available on the Internet in recent years, methods for retrieving information from massive image datasets have attracted considerable interest. Image retrieval is the task of searching an image dataset for images that match a query; it has many applications in science and technology, including machine vision, information security, and biometric systems (Gao et al. 2014). In image retrieval systems, the similarity between an input image and the images of the dataset is computed by a distance criterion based on visual content such as shape, color, and texture. Of these, texture is particularly important: in content-based image retrieval (CBIR), it is prized for the useful features it provides. Despite much progress in this field, managing large image datasets for biometric identity recognition still poses many challenges. The main challenge in biometric systems is achieving a high recognition rate, especially with massive datasets. The systems initially developed for this purpose operated on color, shape, and texture; over the years, however, the need for higher retrieval performance has led to more advanced methods based on texture information. The image retrieval solution proposed in this work aims to improve image retrieval systems based on texture features.

In this study, the main goal is to improve image retrieval performance with a powerful texture descriptor for use in the hand image retrieval phase of identity recognition systems. To this end, the authors developed a texture feature extraction method called weighted mean-based patterns (WEM). In summary, this study consists of the following steps:

Devising a suitable hand imaging setup

Collecting a large set of hand images for use in image retrieval and biometric systems (Heidari and Chalechale 2020)

Proposing a method for feature extraction from finger knuckle print (FKP) to be used in hand image-based identity recognition

Developing a new texture feature extraction method and applying it to the collected hand (FKP) images and to the Brodatz, Vistex, and Stex image datasets

Using a feature selection procedure to identify and discard insignificant patterns

Developing a weighted image similarity measure and comparing it with alternatives to demonstrate its superior performance

Testing the proposed approach in a pattern recognition framework with support vector machine (SVM)

Comparing the proposed approach with some of the existing solutions in terms of retrieval precision

The rest of this paper is organized as follows. Section 2 explains the methods available for texture feature extraction. Section 3 describes features used in the proposed approach. Section 4 outlines the framework of the proposed method. Section 5 presents the results of the evaluation and Section 6 presents the conclusions and future works.

Related Works

Texture feature extraction methods are usually divided into four broad groups: statistical, structural, transform-based, and model-based methods. In statistical methods, the spatial distribution of pixels and the relationships between pixel values in the image are treated as local features. Well-known feature extraction methods based on spatial distribution include local binary patterns (LBP) (Xu et al. 2019), median binary patterns (MBP) (Ji et al. 2018), improved local binary patterns (ILBP) (Yang and Yang 2017), and local ternary patterns (LTP) (Adnan et al. 2018). LBP encodes the relationship between the central pixel value and its neighboring pixels (Zhao et al. 2013). For example, Singh, Walia, and Kaur (2018) proposed an LBP method for image retrieval that extracts patterns from color images by using a plane. In MBP, the median value of the set of pixels is taken as the threshold, and the pattern is obtained by comparing every pixel in the set with this median value. For example, Hafiane, Palaniappan, and Seetharaman (2015) used an adaptive threshold selection method to develop an adaptive MBP, while also using improved local binary patterns to reduce the effect of noise. Dan et al. (2014) proposed an improved joint LBP descriptor for texture classification, in which a Weber-like function is used to organize the local intensity differences. LTP uses a three-valued threshold, which makes it less sensitive to noise than LBP; for example, Agarwal, Singhal, and Lall (2018) used LTP to retrieve medical images.
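To make the difference between these thresholding rules concrete, the following Python sketch computes LTP and MBP codes for a single 3 × 3 patch. It is an illustration, not code from the cited works: the neighbor ordering (clockwise from the top-left corner), the LTP margin `t`, and the function names are assumptions. As is common practice, the ternary LTP code is split into an "upper" binary code (ternary value +1) and a "lower" binary code (ternary value -1), while MBP thresholds all nine pixels, including the center, against their median.

```python
import numpy as np

# Assumed clockwise order of the 8 neighbours in a 3x3 patch, starting top-left
RING = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]

def ltp_codes(patch, t):
    """Local ternary pattern split into (upper, lower) binary codes."""
    patch = np.asarray(patch, dtype=int)
    c = patch[1, 1]
    upper = lower = 0
    for i, (r, col) in enumerate(RING):
        g = patch[r, col]
        if g >= c + t:
            upper |= 1 << i      # ternary value +1
        elif g <= c - t:
            lower |= 1 << i      # ternary value -1
        # otherwise |g - c| < t: ternary value 0, no bit set
    return upper, lower

def mbp_code(patch):
    """Median binary pattern: all 9 pixels (centre included) vs. their median."""
    values = np.asarray(patch, dtype=float).ravel()
    med = np.median(values)
    return sum(1 << k for k, v in enumerate(values) if v >= med)

patch = [[10, 20, 30], [40, 50, 60], [70, 80, 90]]
print(ltp_codes(patch, 5), mbp_code(patch))
```

Because LTP ignores neighbors within `t` of the center, small intensity perturbations leave many bits unchanged, which is the reduced noise sensitivity the text attributes to it.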

Transform-based feature extraction methods analyze changes in pixel intensity and the characteristics of the image's frequency distribution. The transforms commonly used in these methods include the wavelet transform (Srivastava and Khare 2017), Fourier transform (Yang and Yu 2018), Gabor wavelet transform (Li, Huang, and Zhu 2016), Radon transform (Khatami et al. 2018), and contourlet transform (Meng et al. 2016). The wavelet transform is an analysis method based on small waveforms that can represent an image at several resolutions, each associated with a particular frequency band. For example, Srivastava and Khare (2017) proposed a multi-resolution analysis for image retrieval based on the wavelet transform, in which LBP was combined with Legendre moments at several resolutions; Legendre moments are orthogonal moments based on orthogonal polynomials. The Fourier transform converts spatial image data into the frequency domain so that its frequency information can be analyzed, and it is widely used in image analysis. For example, Yang and Yu (2018) introduced a multi-dimensional Fourier descriptor for identifying shapes that captures both their local and global characteristics. Li, Huang, and Zhu (2016) presented an approach to texture and color retrieval using a Gabor wavelet-based copula model that exploits three types of dependency: color, scale, and orientation. The Radon transform can be used to analyze an image pattern over a set of angles, so that a pattern can be extracted from the sum of pixel intensities along each orientation. Khatami et al. (2018) combined the Radon transform with local binary patterns in a hierarchical search space for image retrieval. The contourlet transform provides directional information at multiple scales; Meng et al. (2016) used a method based on it to retrieve visible and infrared images.
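The multi-resolution idea behind wavelet-based descriptors can be sketched with the simplest wavelet, the orthonormal 2-D Haar transform. This is a generic illustration, not the Srivastava and Khare method (their LBP and Legendre-moment combination is not reproduced). Each level splits the image into a half-resolution approximation and three detail sub-bands, and the mean energy of each sub-band is used here as an assumed, commonly chosen texture feature. Function names are hypothetical, and the image sides must be divisible by 2 at every level.

```python
import numpy as np

def haar_level(img):
    """One level of the orthonormal 2-D Haar transform (image sides must be even)."""
    x = np.asarray(img, dtype=float)
    a = x[0::2, 0::2]   # top-left pixel of each 2x2 block
    b = x[0::2, 1::2]   # top-right
    c = x[1::2, 0::2]   # bottom-left
    d = x[1::2, 1::2]   # bottom-right
    ll = (a + b + c + d) / 2   # coarse approximation (half resolution)
    lh = (a - b + c - d) / 2   # differences between neighbouring columns
    hl = (a + b - c - d) / 2   # differences between neighbouring rows
    hh = (a - b - c + d) / 2   # diagonal differences
    return ll, lh, hl, hh

def wavelet_energy_features(img, levels=2):
    """Mean energy of the three detail sub-bands at each level, plus the final approximation."""
    feats = []
    ll = np.asarray(img, dtype=float)
    for _ in range(levels):
        ll, lh, hl, hh = haar_level(ll)
        feats.extend(float(np.mean(band ** 2)) for band in (lh, hl, hh))
    feats.append(float(np.mean(ll ** 2)))
    return feats

print(wavelet_energy_features(np.arange(64).reshape(8, 8), levels=2))
```

Because the transform is orthonormal, the total energy of the four sub-bands equals the energy of the input, so the features describe how texture energy is distributed across scales and orientations.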

Model-based methods use empirical models of the pixels, such as Markov models and fractal models. A Markov model is a graphical model of the joint probability distribution that can be used to identify a suitable intensity distribution for image analysis (Jayech, Mahjoub, and Amara 2016). Fractal methods, in contrast, estimate texture complexity based on geometry and dimension. Lastly, structural texture feature extraction methods use autocorrelation, edge detection, and morphological operations for feature extraction (Ji et al. 2019).
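One standard way to estimate the fractal dimension that fractal texture methods rely on is box counting: cover the image with boxes of side s, count the boxes N(s) that contain structure, and fit the slope of log N(s) against log(1/s). The sketch below applies this to a square binary image; it is a generic estimator chosen for illustration, not the method of any work cited here, and gray-level textures would first need thresholding or a differential box-counting variant. The function name and box sizes are assumptions.

```python
import numpy as np

def box_counting_dimension(mask, sizes=(1, 2, 4, 8, 16)):
    """Box-counting dimension of a square, non-empty binary image."""
    mask = np.asarray(mask, dtype=bool)
    n = mask.shape[0]
    counts = []
    for s in sizes:
        m = n - n % s   # crop so the image splits evenly into s x s boxes
        boxes = mask[:m, :m].reshape(m // s, s, m // s, s)
        counts.append(np.count_nonzero(boxes.any(axis=(1, 3))))   # non-empty boxes
    # N(s) ~ s^(-D), so D is the slope of log N(s) against log(1/s)
    slope, _ = np.polyfit(np.log(1.0 / np.array(sizes)), np.log(counts), 1)
    return float(slope)

line = np.zeros((64, 64), dtype=bool)
line[0, :] = True
print(box_counting_dimension(line))  # close to 1 for a straight line
```

A filled region gives a dimension near 2 and a thin curve a dimension near 1, so intermediate values indicate how "space-filling", and hence how complex, a texture pattern is.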

Hiremath and Bhusnurmath (2017) proposed a method for texture classification based on anisotropic diffusion and local directional binary patterns, analyzing the effect of neighborhood size and radial distance on texture classification. Basu et al. (2017) studied the use of deep neural networks for the classification of image datasets and derived the size of the feature space for textural features extracted from the input dataset. Moreover, Nasser et al. (2017) suggested a super-resolution method that exploits the complementary information provided by multiple images of the same target, using gray-level co-occurrence matrix features, local binary patterns, phase congruency-based local binary patterns, histograms of oriented gradients, and the pattern lacunarity spectrum.

Aparicio, Monedero, and Engan (2018) introduced a noise-robust approach to texture analysis and classification based on a texture feature extraction step with oriented filters, followed by an interpolation step that extracts the main orientations of the texture. In addition, Lippi et al. (2019) introduced an approach based on multiple-instance learning and texture analysis, in which features are extracted from positron emission tomography (PET) images. Cruz et al. (2019) proposed an approach to classifying the tear film lipid layer that uses phylogenetic diversity indexes for feature extraction and tests various classifiers (support vector machines, random forest, naive Bayes, multilayer perceptron, random tree, and radial basis function network (RBFNet)).

Cai et al. (2019) applied texture analysis to medical images, exploring advanced texture analysis approaches for magnetic resonance imaging (MRI) image classification. Song et al. (2019) investigated the ability of computed tomography texture analysis to distinguish various hypervascular hepatic focal lesions, generating texture features for lesion classification. Condori and Bruno (2020) investigated the effect of global pooling measurements on extracting texture information from a set of activation maps using multi-layer feature extraction.

Attia et al. (2020) proposed a finger knuckle pattern recognition scheme using a multilayer deep rule-based classifier. In this scheme, binarized statistical image features and Gabor filter bank features are extracted from the input finger knuckle image. The region of interest (ROI) is extracted by Gaussian smoothing, a Canny edge detector that extracts the bottom boundary of the finger, and a second application of the Canny edge detector on the cropped sub-image to select the y-axis of the coordinate system. Ferrer, Travieso, and Alonso (2005) presented a ridge feature-based method that extracts ridge features from FKP images and evaluates their similarity using a hidden Markov model. Zeinali, Ayatollahi, and Kakooei (2014) proposed an FKP recognition system in which a directional filter bank is used to extract features and linear discriminant analysis (LDA) is applied to reduce the dimensionality of the large feature vector.

So far, none of the above methods has been able to achieve high precision in the retrieval of hand images and therefore in identity detection based on these images. This motivated the authors to develop a high-precision texture descriptor for this purpose as well as texture classification.

Texture Feature Extraction

LBP captures the spatial characteristics of images. LBP-based extraction techniques are widely used in various fields, including texture classification, image retrieval, and biometric systems.

Local Binary Patterns

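Features extracted with the LBP descriptor can be used for image retrieval. The image is first converted to grayscale, and its textural information is then captured. This operator, which is widely used in machine vision and image processing, compares a central pixel $g_c$ with its neighboring pixels $g_i$. The LBP code of the central pixel is obtained as follows:

$$LBP_{P,R} = \sum_{i=0}^{P-1} \delta(g_i - g_c)\, 2^{i}$$

where P is the number of neighbor pixels and R is the neighborhood radius. Also, δ(y) is defined as follows:

$$\delta(y) = \begin{cases} 1, & y \ge 0 \\ 0, & y < 0 \end{cases}$$

After calculating the LBP code, the LBP histogram for an input image of size M × N is determined as follows:

$$H(k) = \sum_{m=1}^{M} \sum_{n=1}^{N} f\big(LBP_{P,R}(m,n),\, k\big), \qquad k \in [0, K]$$

where

$$f(x, y) = \begin{cases} 1, & x = y \\ 0, & \text{otherwise} \end{cases}$$

and K is the maximum value of the binary code. To reduce the number of features in the LBP histogram, a simple uniform pattern (Yuan, Xia, and Shi 2018) can be formulated as follows:

$$U(LBP_{P,R}) = \left|\delta(g_{P-1} - g_c) - \delta(g_0 - g_c)\right| + \sum_{i=1}^{P-1} \left|\delta(g_i - g_c) - \delta(g_{i-1} - g_c)\right|$$

A pattern is called uniform when U ≤ 2, that is, when its circular bit string contains at most two 0/1 transitions.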
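The LBP code, histogram, and uniformity measure can be computed for a whole image with a short vectorized NumPy sketch. It assumes P = 8 and R = 1 (the eight integer-position neighbors of a 3 × 3 window, so no interpolation is needed), skips border pixels, and fixes a counter-clockwise neighbor order starting at the right-hand neighbor; the ordering and function names are illustrative assumptions, not taken from the paper.

```python
import numpy as np

# Assumed (dy, dx) offsets of g_0..g_7 for P = 8, R = 1: counter-clockwise from the right
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (1, 0), (1, 1)]

def lbp_image(img):
    """LBP_{8,1} code of every interior pixel: bit i is delta(g_i - g_c) * 2**i."""
    img = np.asarray(img, dtype=np.int32)
    h, w = img.shape
    center = img[1:-1, 1:-1]
    codes = np.zeros_like(center)
    for i, (dy, dx) in enumerate(OFFSETS):
        neighbour = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]   # shifted view, same shape
        codes |= (neighbour >= center).astype(np.int32) << i
    return codes

def lbp_histogram(codes, P=8):
    """H(k) for k = 0..K with K = 2**P - 1."""
    return np.bincount(codes.ravel(), minlength=2 ** P)

def uniformity(code, P=8):
    """U: number of 0/1 transitions in the circular P-bit pattern."""
    bits = [(code >> i) & 1 for i in range(P)]
    # for i = 0, bits[i - 1] is bits[P - 1], giving the wrap-around term
    return sum(bits[i] != bits[i - 1] for i in range(P))

codes = lbp_image([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
print(codes, uniformity(int(codes[0, 0])))
```

Mapping every pattern with U > 2 to a single shared bin is what shrinks the 256-bin histogram when uniform patterns are used.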

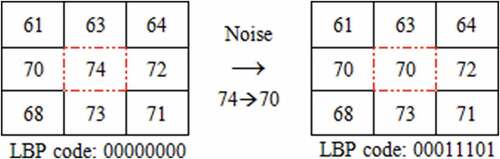

The main problem of the LBP operator is its sensitivity to noise. In the example above, a change in the central pixel from 74 to 70 changes the 8-bit LBP code from 00000000 to 00011101. Several new texture patterns have been proposed to resolve this problem.
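The effect can be reproduced with a few lines of Python. The neighbor values below are hypothetical, chosen only so that the two codes quoted above come out; they are not the values in the original figure. Bit i carries weight 2^i, so the printed string lists g_7 first and g_0 last.

```python
# Hypothetical neighbour values g0..g7 (not the values in the original figure)
NEIGHBOURS = [72, 65, 71, 73, 70, 60, 62, 68]

def lbp_code(neighbours, center):
    """LBP code: bit i is delta(g_i - g_c), weighted by 2**i."""
    return sum((1 if g >= center else 0) << i for i, g in enumerate(neighbours))

for center in (74, 70):
    print(center, format(lbp_code(NEIGHBOURS, center), "08b"))
# prints:
# 74 00000000
# 70 00011101
```

A 4-level change in a single pixel flips four of the eight bits, because every neighbor lying between 70 and 73 crosses the threshold.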

Weighted Mean-based Patterns

LBP is highly sensitive to noise because of its thresholding mechanism. To address this issue, this paper proposes an operator called weighted mean-based patterns (WEM), which uses the mean value of pixels instead of the value of the central pixel as the threshold. This operator is defined by Equations (5) and (6):

The threshold T is the weighted mean of all pixels in the neighborhood of the neighbor pixel.

where Y is the number of pixels in the u × v neighborhood, $G_{i,j}$ is the intensity of the pixel in row i and column j of the image, and $K_{i,j}$ is the importance coefficient defined for row i and column j of the kernel K. Here, u and v are both set to 3. The kernel K is defined as follows:

The thresholds $T_1$ and $T_2$ are defined as follows:

Here, M and S are the mean and standard deviation of the neighboring pixels, and NDB is the total number of images. In the proposed threshold, the binary values are generated from the information of the neighboring pixels rather than from the value of the central pixel. This reduces the effect of noise and increases the probability of producing correct binary patterns: because the threshold is averaged over several pixels, noise in any single pixel has only a diluted effect on it. The accompanying figure shows how the WEM code is computed for a binary value of 01001100.

In this figure, the pixel being processed has a value of gc (here gc = 10) and the set of neighboring pixels are g0 to g7. To compute the first bit of the 8-bit WEM code, instead of comparing g0 with gc, the weighted mean of the set of neighboring pixels, which here is T = 5, is compared with the neighbor pixel. Other bits of the WEM code are computed in the same way. The algorithm of WEM computation is provided below.
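The algorithm listing itself is not reproduced in this version of the text, so the following is only a minimal sketch of the core rule described above, under stated assumptions: the threshold is taken as T = (1/Y) Σ K_{i,j} G_{i,j} over the 3 × 3 window (Y = 9), each neighbor g_i sets bit i when g_i ≥ T, and the neighbor order is the same assumed counter-clockwise order used for LBP. The paper's actual kernel K and its thresholds T_1 and T_2 are not available here, so a uniform kernel of ones is used as a placeholder and T_1/T_2 are omitted. All function names are hypothetical.

```python
import numpy as np

# Assumed positions of g0..g7 inside a 3x3 patch: counter-clockwise from the right-hand neighbour
NEIGHBOUR_POS = [(1, 2), (0, 2), (0, 1), (0, 0), (1, 0), (2, 0), (2, 1), (2, 2)]

def weighted_mean_threshold(patch, kernel):
    """T = (1 / Y) * sum_{i,j} K[i, j] * G[i, j], with Y = u * v pixels in the window."""
    patch = np.asarray(patch, dtype=float)
    kernel = np.asarray(kernel, dtype=float)
    return float((kernel * patch).sum() / patch.size)

def wem_code(patch, kernel=None):
    """WEM-style code: each neighbour is compared with T instead of g_c."""
    patch = np.asarray(patch, dtype=float)
    if kernel is None:
        kernel = np.ones_like(patch)   # placeholder: the paper's kernel K is not reproduced here
    T = weighted_mean_threshold(patch, kernel)
    bits = [1 if patch[r, c] >= T else 0 for r, c in NEIGHBOUR_POS]
    return sum(b << i for i, b in enumerate(bits)), T

def wem_histogram(img, kernel=None):
    """256-bin histogram of WEM codes over all interior pixels (u = v = 3)."""
    img = np.asarray(img, dtype=float)
    hist = np.zeros(256, dtype=int)
    for r in range(1, img.shape[0] - 1):
        for c in range(1, img.shape[1] - 1):
            code, _ = wem_code(img[r - 1:r + 2, c - 1:c + 2], kernel)
            hist[code] += 1
    return hist

print(wem_code([[2, 2, 2], [8, 5, 2], [8, 8, 8]]))  # (240, 5.0)
```

Replacing the placeholder kernel with the paper's K changes only `weighted_mean_threshold`; the comparison and histogram steps stay the same.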

The Proposed Framework

Since hand images are used in the tests of this work, the first part of this section provides detailed information about these images. The second part describes the image retrieval scheme used to implement the tests.

Dataset

The hand image dataset was created by photographing the hands of a group of volunteers with a Canon SX 600 HS digital camera while they rested their hands on a flat wooden board (Heidari and Chalechale 2020). Because FKP can serve as a precise feature for identity recognition, these areas of the hand had to be isolated from the images, and a skin detection procedure was used for this purpose. Since this procedure operates in the YCbCr color space, the images were first converted from RGB to YCbCr. The skin detection method was based on trapezia defined in the YCb and YCr subspaces (Brancati et al. 2016). Lastly, correction rules for the combination of chrominance values were applied to finalize skin pixel identification.
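The color-space step can be sketched as follows. The Brancati et al. trapezia and correction rules are not reproduced; as a plainly simpler stand-in, this sketch uses the full-range ITU-R BT.601 (JPEG-style) RGB-to-YCbCr conversion followed by a widely used fixed chrominance box (77 ≤ Cb ≤ 127, 133 ≤ Cr ≤ 173). The box limits and function names are assumptions for illustration only.

```python
import numpy as np

def rgb_to_ycbcr(rgb):
    """Full-range ITU-R BT.601 conversion of an (..., 3) RGB array."""
    rgb = np.asarray(rgb, dtype=float)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return np.stack([y, cb, cr], axis=-1)

def skin_mask(rgb, cb_range=(77, 127), cr_range=(133, 173)):
    """Boolean skin mask from a fixed Cb/Cr box (a simple stand-in, not the trapezia rule)."""
    ycbcr = rgb_to_ycbcr(rgb)
    cb, cr = ycbcr[..., 1], ycbcr[..., 2]
    return ((cb >= cb_range[0]) & (cb <= cb_range[1])
            & (cr >= cr_range[0]) & (cr <= cr_range[1]))

pixels = np.array([[224, 172, 140], [0, 0, 255]])   # a skin-like tone and pure blue
print(skin_mask(pixels))
```

Working on Cb and Cr alone makes the rule largely independent of brightness (Y), which is the main reason skin detectors operate in YCbCr rather than RGB; the trapezia approach refines the fixed box by adapting its shape to the luminance.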

After the skin areas were identified, the finger areas had to be separated. For this purpose, the radius of the palm area was determined from the center of gravity of the hand and the boundary points closest to this center. The palm area was then separated from the wrist area with the help of square structures. Lastly, the obtained area was extracted from the image to obtain the five fingers (Heidari and Chalechale 2020).
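The palm-radius step described above can be sketched on a binary hand mask: compute the centroid (center of gravity) of the mask, find its boundary pixels, and take the distance to the closest boundary pixel as the palm radius. The 4-neighbor boundary definition and the function name are assumptions, and the subsequent "square structures" step is not reproduced.

```python
import numpy as np

def palm_center_and_radius(mask):
    """Centroid of a binary hand mask and its distance to the nearest boundary pixel."""
    mask = np.asarray(mask, dtype=bool)
    rows, cols = np.nonzero(mask)
    cy, cx = rows.mean(), cols.mean()            # centre of gravity of the hand region
    padded = np.pad(mask, 1, constant_values=False)
    # A foreground pixel is interior when all four of its 4-neighbours are foreground
    interior = (padded[:-2, 1:-1] & padded[2:, 1:-1]
                & padded[1:-1, :-2] & padded[1:-1, 2:])
    by, bx = np.nonzero(mask & ~interior)       # boundary pixels
    radius = np.hypot(by - cy, bx - cx).min()   # closest boundary point to the centroid
    return (float(cy), float(cx)), float(radius)

toy = np.zeros((31, 31), dtype=bool)
toy[5:26, 5:26] = True   # a square stand-in for a palm
print(palm_center_and_radius(toy))
```

On a real hand the nearest boundary points usually lie in the gaps between fingers or at the palm's edge, so a circle of this radius around the centroid approximates the palm region that is removed to isolate the fingers.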

Having the length, orientation, and center of gravity of each finger, the FKP areas were easily extracted. Using this process, the authors created a set of FKP images from the hand images taken from 74 men and 35 women aged 16–69 years. For each person, 5 images from the right hand and 5 from the left hand were taken. Given the lack of a similar set of hand images in the literature, this image dataset is made available to other researchers and can be accessed from the website of the university. The size of FKP areas in this set is 75 × 75 pixels.

The Proposed Image Retrieval Approach

The overall architecture of the presented image retrieval approach is illustrated in the accompanying figure. The input is a query image, whose texture feature vector is extracted by the proposed WEM descriptor. The important features of the image are then selected using random subspaces of patterns and compared with the features of the other images in the dataset. If the distance between the feature vectors of the query image and a dataset image is smaller than a defined threshold, the system identifies the two images as matching and reports the result to the image retrieval system. Different distance measures can be used in the matching process; here, image similarity is determined by a weighted similarity measure, and the set of images most similar to the query image is retrieved with the help of SVM. The proposed distance measure is defined as follows:

where $\omega_i$ denotes the similarity weighting constants, which are determined from the fitness function of the particle swarm optimization (PSO) algorithm (Heidari and Chalechale 2016) so as to achieve the highest possible precision; WEMquery(i) and WEMtarget(i) are the i-th elements of the texture feature vectors of the query image and the target image; and NF is the total number of features of the image.
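The exact form of the proposed distance is not preserved in this version of the text. The sketch below therefore assumes a weighted L1 form, d = Σ ω_i |WEMquery(i) - WEMtarget(i)| over the NF features, and uses it to rank the dataset, as in Steps 6 to 8 of the algorithm. The PSO search for the weights is not reproduced; the weights are simply passed in. Function names are hypothetical.

```python
import numpy as np

def weighted_distance(query, target, weights):
    """Assumed weighted L1 distance: sum_i w_i * |q_i - t_i| over the NF features."""
    q = np.asarray(query, dtype=float)
    t = np.asarray(target, dtype=float)
    w = np.asarray(weights, dtype=float)
    return float(np.sum(w * np.abs(q - t)))

def rank_dataset(query, database, weights, n):
    """Indices of the n dataset images closest to the query (most similar first)."""
    dists = np.array([weighted_distance(query, x, weights) for x in database])
    order = np.argsort(dists, kind="stable")   # ascending distance = descending similarity
    return order[:n].tolist()

db = [[1, 0, 0], [0, 1, 0], [1, 1, 0]]
print(rank_dataset([1, 0, 0], db, weights=[1, 0.1, 1], n=3))  # [0, 2, 1]
```

Down-weighting the second feature (ω = 0.1) moves image 2 ahead of image 1, which illustrates how tuned weights can let the less discriminative features contribute less to the ranking.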

In summary, the proposed algorithm consists of the following steps:

Input: query image

Output: The retrieved images that are similar to the input image

Step 1: Collecting a large set of hand images

Step 2: Preprocessing operations (conversion to YCbCr color space and cropping of FKP areas from images) for each image in the dataset

Step 3: Extracting the texture features by the WEM descriptor

Step 4: Selecting the important features by the use of random sub-spaces (Nanni, Brahnam, and Lumini 2010)

Step 5: Calculating the features of the query image by the proposed descriptor and identifying its important features

Step 6: Using the proposed distance measure to quantify the similarity between the input image and other images

Step 7: Sorting all images of the dataset in descending order of similarity

Step 8: Selecting the N images with the highest similarity to the query image

Step 9: First iteration: generating training instances for the dataset

Step 10: Subsequent iterations: running the SVM classifier on the training instances

Step 11: Stopping when the results are satisfactory to the user

Step 12: Finally, the N images with the highest similarity to the query image are selected and retrieved by the SVM. The accompanying pseudo-code summarizes the presented image retrieval system.
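Steps 9 to 12 amount to a relevance-feedback loop: the top-ranked images are labeled (user-marked positives as +1, all others as -1), an SVM is trained on them, and the whole dataset is re-ranked by the SVM score. The paper does not state the SVM kernel or training settings, so this minimal sketch uses a from-scratch linear SVM trained by Pegasos-style stochastic sub-gradient descent to stay dependency-free (a library classifier such as scikit-learn's `SVC` could be substituted). The default of 15 training images follows the experimental setup described in the results; all names and hyperparameters are assumptions.

```python
import numpy as np

def train_linear_svm(X, y, lam=0.01, epochs=200, seed=0):
    """Linear SVM via Pegasos-style stochastic sub-gradient descent (labels in {-1, +1})."""
    rng = np.random.default_rng(seed)
    Xb = np.hstack([np.asarray(X, dtype=float), np.ones((len(X), 1))])  # constant bias feature
    y = np.asarray(y, dtype=float)
    w = np.zeros(Xb.shape[1])
    t = 0
    for _ in range(epochs):
        for i in rng.permutation(len(Xb)):
            t += 1
            eta = 1.0 / (lam * t)
            margin = y[i] * (Xb[i] @ w)     # evaluated before the update
            w *= 1.0 - eta * lam            # shrink step from the L2 regulariser
            if margin < 1:                  # hinge loss active: move towards the sample
                w += eta * y[i] * Xb[i]
    return w

def svm_scores(w, X):
    X = np.asarray(X, dtype=float)
    return np.hstack([X, np.ones((len(X), 1))]) @ w

def feedback_round(features, ranked, positives, n_train=15, n_return=10):
    """One feedback round: label the top-ranked images, train, and re-rank everything."""
    features = np.asarray(features, dtype=float)
    train_idx = list(ranked[:n_train])
    labels = [1 if i in positives else -1 for i in train_idx]   # unmarked images are negatives
    w = train_linear_svm(features[train_idx], labels)
    scores = svm_scores(w, features)
    return np.argsort(-scores, kind="stable")[:n_return].tolist()

feats = np.array([[1, 1], [1.2, 0.9], [0.9, 1.1], [-1, -1], [-1.1, -0.9], [-0.8, -1.2]])
print(feedback_round(feats, [0, 3, 1, 4, 2, 5], {0, 1, 2}, n_train=6, n_return=3))
```

Each round needs at least one positive and one negative among the labeled images; repeating the round with the new ranking corresponds to Step 10, and stopping when the user is satisfied corresponds to Step 11.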

Results and Discussion

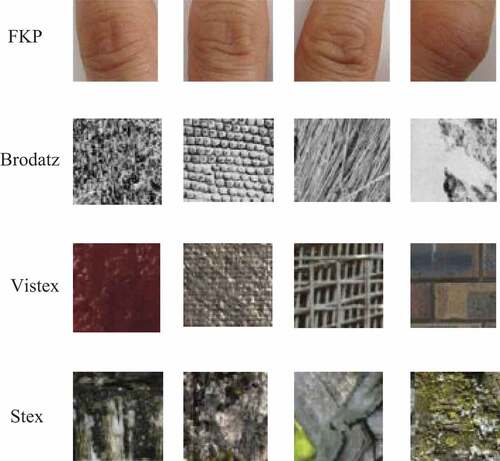

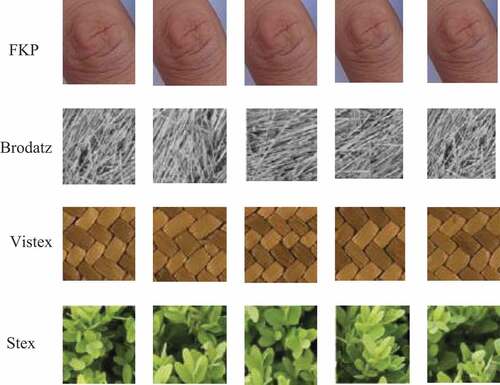

This section presents experimental results that demonstrate the effectiveness of the proposed approach. The tests were performed on the FKP, Brodatz, Vistex, and Stex datasets; example images from these datasets are shown in the accompanying figure. The FKP dataset contains 5450 images of 109 different people, with 50 images per category, produced by the image acquisition process described earlier. For the first test, 1000 of these images were randomly selected as test queries. Another test was conducted on 1744 images from the Brodatz dataset, using the generated sub-images as query images. Similar tests were also performed on the Vistex and Stex datasets, where each class contained 16 similar images.

Using the proposed WEM descriptor, the similarity between the input image and each target image was measured with the defined criterion, and the images most similar to the input image were retrieved. Example retrieval results on the different datasets, shown in the accompanying figure, indicate that the proposed descriptor is capable of retrieving similar images from these datasets.

After the texture information of the images was extracted by the proposed descriptor, a sequence of query images was randomly generated. The images of the dataset were ranked by the defined distance measure, and 15 images were tagged as the training dataset. The user only needs to mark the positive images; all other images are tagged as negative. The results of the proposed method without feedback are shown in the accompanying figure, in which the image in the top left corner is the query image and incorrectly retrieved images are marked with NR.

The results of image retrieval with SVM feedback are shown in the accompanying figure; the image retrieval system with SVM clearly outperforms the system without feedback.

Figure 8. The retrieved results after the first feedback iteration for an FKP query image (from the hand dataset)

The performance of the presented method was evaluated in terms of three criteria: precision, recall, and average normalized modified retrieval rank (ANMRR) (Chalechale, Mertins, and Naghdi 2004). After this evaluation, a comparison was made between the proposed approach and the other methods in terms of performance according to these criteria. Here, precision is the ratio of the total number of relevant images retrieved from the dataset to the total number of images retrieved from the dataset. Recall is the ratio of the total number of relevant images in retrieved images to the total number of relevant images in the dataset. ANMRR provides a ranking-based evaluation of performance (Chalechale, Mertins, and Naghdi 2004). First, the average retrieval ranking R(q) for the query image q is calculated as follows:

If Rank (i) is greater than κ, then Rank (i) = 1.25 × κ; else, Rank (i) is unchanged. The parameter κ is defined as follows:

If NR>50, then Ψ = 2, else Ψ = 4. The parameter Ω is defined as follows:

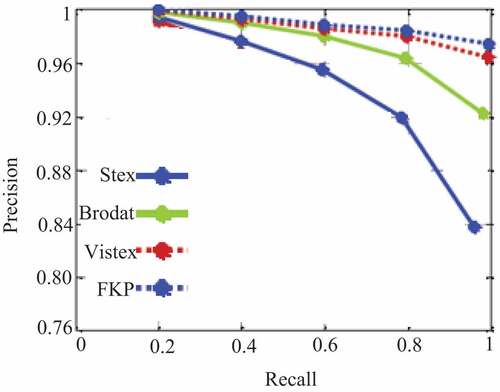

where TQ is the total number of query images. A summary of the parameters and relationships used in the presented image retrieval system is provided in Table 1. After the performance of the proposed retrieval method was measured on the FKP, Brodatz, Vistex, and Stex datasets, it was compared with the performance of other methods on the same datasets; for this comparison, several texture descriptors were implemented.
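The three evaluation criteria can be sketched as follows. The ANMRR formulas themselves are missing from this version of the text, so the sketch fills them in with the usual definitions and one labeled assumption. Ranks beyond κ are replaced by 1.25κ. The average rank is R(q) = Σ Rank(i) / NR. The modified rank is MRR = R(q) - 0.5 - NR/2, which is normalized by 1.25κ - 0.5 - NR/2 and averaged over the TQ queries. κ is assumed to be Ψ × NR with Ψ chosen as in the text (2 if NR > 50, else 4); other ANMRR formulations choose κ differently. Function names are hypothetical.

```python
def precision_recall(retrieved, relevant):
    """Precision and recall of one retrieved list against the set of relevant images."""
    retrieved = list(retrieved)
    relevant = set(relevant)
    hits = sum(1 for img in retrieved if img in relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall

def nmrr(ranking, relevant):
    """Normalised modified retrieval rank for one query (0 = ideal, 1 = worst)."""
    n_rel = len(relevant)                        # NR: number of relevant (ground-truth) images
    psi = 2 if n_rel > 50 else 4
    kappa = psi * n_rel                          # assumed reading of the paper's kappa
    position = {img: k + 1 for k, img in enumerate(ranking)}   # rank 1 = first result
    ranks = []
    for img in relevant:
        r = position.get(img, float("inf"))      # never-retrieved images count as too late
        ranks.append(r if r <= kappa else 1.25 * kappa)
    avr = sum(ranks) / n_rel                     # average retrieval rank R(q)
    mrr = avr - 0.5 - n_rel / 2                  # modified retrieval rank
    return mrr / (1.25 * kappa - 0.5 - n_rel / 2)

def anmrr(rankings, relevant_sets):
    """Average NMRR over all TQ queries."""
    return sum(nmrr(r, g) for r, g in zip(rankings, relevant_sets)) / len(rankings)

print(precision_recall(["a", "b", "c", "d"], {"a", "b", "e"}))
print(anmrr([["a", "b"], ["x", "y"]], [["a", "b"], ["a", "b"]]))  # 0.5
```

The normalization maps a perfect ranking (all relevant images first) to 0 and a ranking that misses every relevant image within κ to 1, which is why lower ANMRR values indicate better retrieval.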

Table 1. The parameters and definitions

To demonstrate the superiority of the presented method over other texture descriptors, Table 2 compares its precision on the different datasets with that of (1) local binary pattern (Pan et al. 2017), (2) median binary pattern (Hafiane, Palaniappan, and Seetharaman 2015), (3) improved local binary pattern (Kylberg and Sintorn 2013), (4) local ternary pattern (Kylberg and Sintorn 2013), (5) robust local binary pattern (Kylberg and Sintorn 2013), (6) fuzzy local binary pattern (Alfy and Binsaadoon 2017), (7) histogram of oriented gradients (Jung 2017), (8) scale-invariant feature transform (Joo and Jeon 2015), (9) wavelet transform (Busch and Boles 2002), (10) local correction pattern (Xikai et al. 2015), and (11) noise-resistant color local binary pattern (Ershah and Tajeripour 2017). As shown in this table, the proposed image retrieval system exhibits higher precision than the other texture descriptors. The precision-recall curves of the proposed method on the different datasets are presented in the accompanying figure; the proposed method outperforms the other methods in retrieving images from the FKP dataset.

Table 2. Performance comparison of the proposed approach with various approaches using average precision (%)

Table 3 shows the performance of the proposed image retrieval method on different datasets. These results demonstrate that the presented method is superior to wavelet-based image retrieval systems.

Table 3. Image retrieval performance comparisons of the proposed method

The methods of (Kwitt and Uhl 2010; Lasmar and Berthoumieu 2014) generate feature vectors based on a probability model that is separate from the maximum likelihood estimator, but the methods of (Kokare, Biswas, and Chatterji 2005; Manjunath and Ma 1996) estimate the distribution parameters through copula-based computations.

The probability density function of the copula model C is interpreted as:

where $\chi_i$ denotes the random variables and $F_i(\chi_i)$ is the marginal cumulative distribution function. Texture-based image retrieval and classification systems are typically computationally expensive because of the extensive distance calculations required during the matching phase and the search for similar images. According to the results, the proposed method is a valid choice for practical applications in image retrieval and classification, and therefore in identity recognition.

The average precision of the proposed method on the FKP, Brodatz, Vistex, and Stex databases was 98.38%, 93.62%, 95.13%, and 84.86%, respectively, and its ANMRR on these databases was 0.01, 0.2, 0.33, and 0.41, respectively. These results show that the proposed descriptor provides higher retrieval precision than the other methods tested. On the FKP dataset, the precision of the proposed method is about 5.15 percentage points higher than that of the next most precise method (ODII, 93.23%). The ANMRR of the proposed method is also low, at about 0.24 on average over the four datasets. From these results, it can be concluded that the presented method has better image retrieval and classification performance than the other descriptors. In addition, the proposed method has a relatively short feature vector compared with other methods and therefore relatively low memory consumption.

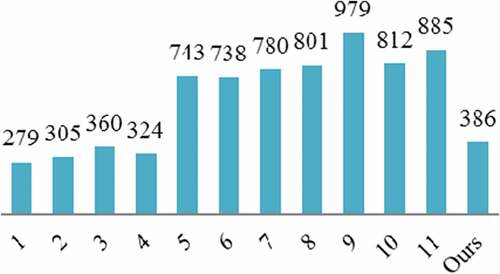

To determine the effect of the proposed similarity measure on the precision of the image retrieval system, other distance measures, including the Euclidean, cosine, Manhattan, Canberra, and square-chord measures, were also tested. The comparison showed that the choice of measure has a large impact on performance and that the proposed measure is accurate. The proposed method also had a shorter retrieval time (989 milliseconds) than the other methods, which can be attributed to its lower computational complexity. Table 4 shows the runtimes of the different steps of hand image retrieval.
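For reference, the alternative measures named above can be written in a few lines of NumPy. These are the standard textbook definitions, applied to non-negative feature vectors such as WEM histograms; the square-chord distance in particular requires non-negative inputs. The function name is hypothetical, and the paper's own weighted measure is not included because its exact form is not available here.

```python
import numpy as np

def distance_measures(p, q):
    """Standard distances between two non-negative feature vectors (e.g. histograms)."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    diff = np.abs(p - q)
    denom = np.abs(p) + np.abs(q)
    nz = denom > 0                       # Canberra: skip bins where both entries are zero
    return {
        "euclidean": float(np.sqrt(np.sum(diff ** 2))),
        "manhattan": float(np.sum(diff)),
        "cosine": float(1.0 - p @ q / (np.linalg.norm(p) * np.linalg.norm(q))),
        "canberra": float(np.sum(diff[nz] / denom[nz])),
        "square_chord": float(np.sum((np.sqrt(p) - np.sqrt(q)) ** 2)),
    }

print(distance_measures([1, 0], [0, 1]))
```

Canberra and square-chord both damp the influence of large bins (by normalizing per bin or by taking square roots), which often suits sparse texture histograms better than the Euclidean distance.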

Table 4. Times taken in different stages of hand image processing

The duration of the feature extraction phase for each implemented method is shown in the accompanying figure. The ninth method (wavelet transform) has the longest feature extraction phase among the tested methods, while the proposed method's feature extraction is shorter than that of most other methods. Thanks to its relatively short feature extraction and retrieval times, the proposed system can be used in security and real-time applications.

Classification performance is measured as the percentage of correctly classified images. Table 5 reports the classification results of the proposed method and the other schemes on the FKP, Brodatz, Vistex, and Stex image datasets using an SVM classifier. As the table shows, the proposed method outperforms the other schemes in the classification task, which makes the proposed descriptor an effective candidate for image retrieval and classification systems.

Table 5. Classification accuracy (%) for FKP, Brodatz, Vistex and Stex image datasets

In addition, Table 6 compares convolutional neural network (CNN), K-nearest neighbor (KNN), and SVM classifiers, covering six different methods on the FKP, MRI, and StructureRSMAS datasets. The proposed approach with the SVM classifier shows higher accuracy than the other methods using CNN and KNN classifiers, indicating that the proposed scheme with an SVM classifier outperforms the others on the FKP dataset.

Table 6. Classification accuracy (%) for FKP, MRI, and StructureRSMAS image datasets using CNN, KNN, and SVM classifiers

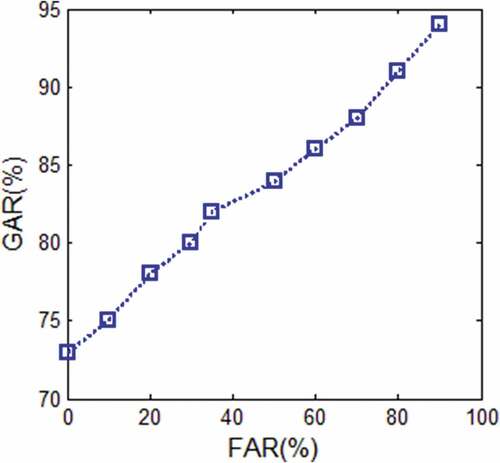

The collected FKP images have also been used in a biometric system for person identification. The performance of an FKP-based biometric system can be evaluated with a receiver operating characteristic (ROC) curve, obtained by plotting the false rejection rate (FRR) against the false acceptance rate (FAR) over all decision thresholds; equivalently, the genuine acceptance rate (GAR = 1 - FRR) can be plotted instead of FRR. The ROC curve of the proposed method on the FKP dataset, shown in the accompanying figure, confirms the effectiveness of the proposed scheme.
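The ROC construction described above can be sketched from two sets of comparison scores: distances between images of the same person (genuine) and of different people (impostor). The sketch assumes distance-like scores, so a comparison is accepted when its distance is at most the threshold, and it sweeps every distinct observed score as a threshold. Function names are hypothetical.

```python
import numpy as np

def far_frr(genuine, impostor, threshold):
    """Error rates when a comparison is accepted if its distance is <= threshold."""
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.asarray(impostor, dtype=float)
    far = float(np.mean(impostor <= threshold))   # impostors wrongly accepted
    frr = float(np.mean(genuine > threshold))     # genuine users wrongly rejected
    return far, frr

def roc_curve(genuine, impostor):
    """(FAR, GAR) pairs for every distinct score used as a threshold; GAR = 1 - FRR."""
    thresholds = np.unique(np.concatenate([np.ravel(genuine), np.ravel(impostor)]))
    points = []
    for t in thresholds:
        far, frr = far_frr(genuine, impostor, t)
        points.append((far, 1.0 - frr))
    return points

genuine, impostor = [0.1, 0.2, 0.3], [0.4, 0.5, 0.6]
print(roc_curve(genuine, impostor))
```

Raising the threshold trades a lower FRR (higher GAR) for a higher FAR; a curve that reaches GAR = 1 while FAR is still 0, as in this toy example, indicates perfectly separated genuine and impostor scores.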

Conclusions and Future Works

This paper presented a new method for texture classification, and especially for hand image classification, using finger knuckle print features. The proposed method extracts the important patterns of an image using a feature selection method based on random subspaces, quantifies image similarity with a newly defined weighted distance measure, and finally uses SVM for classification, achieving fast, high-precision image recognition. The method was tested on the FKP, Brodatz, Vistex, and Stex datasets and compared with several other methods. It achieved average precisions of 98.38%, 93.62%, 95.13%, and 84.86% on the FKP, Brodatz, Vistex, and Stex datasets, respectively, outperforming the compared methods. The proposed descriptor can be used in a variety of applications such as identity recognition and classification.

For future work, it is recommended to try combining shape, color, and texture features for image recognition. Using graphics processing units (GPUs) may also reduce the runtime of the process. Moreover, applying learning-based approaches such as CNNs for feature extraction and classification is a recommended research direction.

Disclosure Statement

We hereby confirm that there is no conflict of interest.

References

- Adnan, S., A. Irtaza, S. Aziz, M. Ullah, A. Javed, and M. Tariq. 2018. Fall detection through acoustic local ternary patterns. Applied Acoustics 140:296–300. doi:10.1016/j.apacoust.2018.06.013.

- Agarwal, M., A. Singhal, and B. Lall. 2018. 3D local ternary co-occurrence patterns for natural, texture, face and biomedical image retrieval. Neurocomputing 313:1–24. doi:10.1016/j.neucom.2018.06.027.

- Alfy, E., and A. Binsaadoon. 2017. Silhouette-based gender recognition in smart environments using fuzzy local binary patterns and support vector machines. Procedia Computer Science 109:164–71. doi:10.1016/j.procs.2017.05.313.

- Aparicio, A. G. L., R. V. Monedero, and K. Engan. 2018. Noise robust and rotation invariant framework for texture analysis and classification. Applied Mathematics and Computation 335:124–32. doi:10.1016/j.amc.2018.04.018.

- Attia, A., Z. Akhtar, N. E. Chalabi, S. Maza, and Y. Chahir. 2020. Deep rule-based classifier for finger knuckle pattern recognition system. Evolving Systems 1:1–15. doi:10.1007/s12530-020-09359-w.

- Basu, S., S. Mukhopadhyay, M. Karki, R. Dibiano, S. Ganguly, R. Nemani, and S. Gayaka. 2017. Deep neural networks for texture classification: A theoretical analysis. Neural Networks 1–28. doi:10.1016/j.neunet.2017.10.001.

- Bianconi, F., A. Fernandez, E. Gonzalez, D. Caride, and A. Calvino. 2009. Rotation-invariant colour texture classification through multilayer CCR. Pattern Recognition Letters 30 (8):765–73. doi:10.1016/j.patrec.2009.02.006.

- Brancati, N., G. Pietro, M. Frucci, and L. Gallo. 2016. Human skin detection through correlation rules between the YCb and YCr subspaces based on dynamic color clustering. Computer Vision and Image Understanding 155:1–16. doi:10.1016/j.cviu.2016.12.001.

- Busch, A., and W. Boles. 2002. Texture classification using multiple wavelet analysis. In Digital Image Computing Techniques and Applications, 1–8. Melbourne, Australia.

- Cai, J., F. Xing, A. Batra, F. Liu, G. A. Walter, K. Vandenborne, and L. Yang. 2019. Texture analysis for muscular dystrophy classification in MRI with improved class activation mapping. Pattern Recognition 86:368–75. doi:10.1016/j.patcog.2018.08.012.

- Campana, B. J. L., and E. J. Keogh. 2010. A compression-based distance measure for texture. Statistical Analysis and Data Mining 3 (6):381–98. doi:10.1002/sam.10093.

- Chalechale, A., A. Mertins, and G. Naghdi. 2004. Edge image description using angular radial partitioning. IEE Proceedings: Vision, Image and Signal Processing 151 (2):93–101. doi:10.1049/ip-vis:20040332.

- Chen, J., S. Shan, C. He, G. Zhao, M. Pietikainen, X. Chen, and W. Gao. 2010. WLD: A robust local image descriptor. IEEE Transactions on Pattern Analysis and Machine Intelligence 32 (9):1705–20. doi:10.1109/TPAMI.2009.155.

- Condori, R. H. M., and O. M. Bruno. 2020. Analysis of activation maps through global pooling measurements for texture classification. Information Sciences 1–32. doi:10.1016/j.ins.2020.09.058.

- Cruz, L. B. D., J. C. Souza, J. A. D. Sousa, A. M. Santos, A. C. D. Paiva, J. D. S. D. Almeida, A. C. Silva, G. B. Junior, and M. Gattass. 2019. Interferometer eye image classification for dry eye categorization using phylogenetic diversity indexes for texture analysis. Computer Methods and Programs in Biomedicine 1–28. doi:10.1016/j.cmpb.2019.105269.

- Dan, Z., Y. Chen, Z. Yang, and G. Wu. 2014. An improved local binary pattern for texture classification. Optik 125 (20):6320–24. doi:10.1016/j.ijleo.2014.08.003.

- Ershah, S., and F. Tajeripour. 2017. Multi-resolution and noise-resistant surface defect detection approach using new version of local binary patterns. Applied Artificial Intelligence 31:1–10. doi:10.1080/08839514.2017.1378012.

- Ferrer, M., C. Travieso, and J. Alonso. 2005. Using hand knuckle texture for biometric identification. In Proceedings of the 39th Annual International Carnahan Conference on Security Technology, 74–78. Las Palmas, Spain.

- Gao, G., J. Yang, J. Qian, and L. Zhang. 2014. Integration of multiple orientation for finger knuckle print verification. Neurocomputing 135:180–91. doi:10.1016/j.neucom.2013.12.036.

- Guha, T., and R. K. Ward. 2014. Image similarity using sparse representation and compression distance. IEEE Transactions on Multimedia 16 (4):980–87. doi:10.1109/TMM.2014.2306175.

- Guo, J., H. Prasetyo, and H. Su. 2013. Image indexing using the color and bit pattern feature fusion. Journal of Visual Communication and Image Representation 24 (8):1360–79. doi:10.1016/j.jvcir.2013.09.005.

- Guo, Z., L. Zhang, and D. Zhang. 2010. Rotation invariant texture classification using LBP variance (LBPV) with global matching. Pattern Recognition 43 (3):706–19. doi:10.1016/j.patcog.2009.08.017.

- Hafiane, A., K. Palaniappan, and G. Seetharaman. 2015. Joint adaptive median binary patterns for texture classification. Pattern Recognition 48 (8):1–12. doi:10.1016/j.patcog.2015.02.007.

- Heidari, H., and A. Chalechale. 2016. An evolutionary stochastic approach for efficient image retrieval using modified particle swarm optimization. International Journal of Advanced Computer Science and Applications 7 (7):105–12. doi:10.14569/IJACSA.2016.070715.

- Heidari, H., and A. Chalechale. 2020. A new biometric identity recognition system based on a combination of superior features in finger knuckle print images. Turkish Journal of Electrical Engineering & Computer Science 28:238–52. doi:10.3906/elk-1906-12.

- Hiremath, P. S., and R. A. Bhusnurmath. 2017. Multiresolution LDBP descriptors for texture classification using anisotropic diffusion with an application to wood texture analysis. Pattern Recognition Letters 17:1–10. doi:10.1016/j.patrec.2017.01.015.

- Hoang, M. A., J. M. Geusebroek, and A. W. M. Smeulders. 2005. Color texture measurement and segmentation. Signal Processing 85 (2):265–75. doi:10.1016/j.sigpro.2004.10.009.

- Jayech, K., M. A. Mahjoub, and N. E. B. Amara. 2016. Synchronous multi-stream hidden Markov model for offline Arabic handwriting recognition without explicit segmentation. Neurocomputing 214:958–71. doi:10.1016/j.neucom.2016.07.020.

- Ji, G., K. Li, G. Zhang, S. Li, and L. Zhang. 2019. An assessment method for shale fracability based on fractal theory and fracture toughness. Engineering Fracture Mechanics 211:282–90. doi:10.1016/j.engfracmech.2019.02.011.

- Ji, L., Y. Ren, X. Pu, and G. Liu. 2018. Median local ternary patterns optimized with rotation invariant uniform three mapping for noisy texture classification. Pattern Recognition 79:387–401. doi:10.1016/j.patcog.2018.02.009.

- Joo, H., and J. Jeon. 2015. Feature-point extraction based on an improved SIFT algorithm. In Control, Automation and Systems, 1–5. Jeju, South Korea.

- Jung, H. 2017. Analysis of reduced-set construction using image reconstruction from a HOG feature vector. IET Computer Vision 11 (8):725–32. doi:10.1049/iet-cvi.2016.0317.

- Junior, J. J., P. C. Cortez, and A. R. Backes. 2014. Color texture classification using shortest paths in graphs. IEEE Transactions on Image Processing 23 (9):3751–61. doi:10.1109/TIP.2014.2333655.

- Khatami, A., M. Babaie, H. Tizhoosh, A. Khosravi, T. Nguyen, and S. Nahavandi. 2018. A sequential search-space shrinking using CNN transfer learning and a Radon projection pool for medical image retrieval. Expert Systems with Applications 100:224–33. doi:10.1016/j.eswa.2018.01.056.

- Kokare, M., P. Biswas, and B. Chatterji. 2005. Texture image retrieval using new rotated complex wavelet filters. IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics) 35 (6):1168–78. doi:10.1109/TSMCB.2005.850176.

- Kwitt, R., and A. Uhl. 2008. Image similarity measurement by Kullback-Leibler divergences between complex wavelet subband statistics for texture retrieval. In IEEE International Conference on Image Processing, 933–36. San Diego, CA, USA.

- Kwitt, R., and A. Uhl. 2010. Lightweight probabilistic texture retrieval. IEEE Transactions on Image Processing 19 (1):241–53. doi:10.1109/TIP.2009.2032313.

- Kylberg, G., and I. Sintorn. 2013. Evaluation of noise robustness for local binary pattern descriptors in texture classification. Journal on Image and Video Processing 17:1–20.

- Lasmar, N., and Y. Berthoumieu. 2014. Gaussian copula multivariate modeling for texture image retrieval using wavelet transforms. IEEE Transactions on Image Processing 23 (5):2246–61. doi:10.1109/TIP.2014.2313232.

- Li, C., Y. Huang, and L. Zhu. 2016. Color texture image retrieval based on Gaussian copula models of Gabor wavelet. Pattern Recognition 64:1–16. doi:10.1016/j.patcog.2016.10.030.

- Lippi, M., S. Gianotti, A. Fama, M. Casali, E. Barbolini, A. Ferrari, F. Fioroni, M. Iori, S. Luminari, M. Menga, et al. 2019. Texture analysis and multiple-instance learning for the classification of malignant lymphomas. Computer Methods and Programs in Biomedicine 124–32. doi:10.1016/j.cmpb.2019.105153.

- Liu, L., Y. Long, P. W. Fieguth, S. Lao, and G. Zhao. 2014. BRINT: Binary rotation invariant and noise tolerant texture classification. IEEE Transactions on Image Processing 23 (7):3071–84. doi:10.1109/TIP.2014.2325777.

- Liu, G. H., Z. Y. Li, L. Zhang, and Y. Xu. 2011. Image retrieval based on micro-structure descriptor. Pattern Recognition 44 (9):2123–33. doi:10.1016/j.patcog.2011.02.003.

- Lowe, D. 2004. Distinctive image features from scale-invariant keypoints. International Journal of Computer Vision 60 (2):91–110. doi:10.1023/B:VISI.0000029664.99615.94.

- Manjunath, B., and W. Ma. 1996. Texture features for browsing and retrieval of image data. IEEE Transactions on Pattern Analysis and Machine Intelligence 18 (8):837–42. doi:10.1109/34.531803.

- Meng, F., M. Song, B. Guo, R. Shi, and D. Shan. 2016. Image fusion based on object region detection and non-subsampled contourlet transform. Computers & Electrical Engineering 62:1–9. doi:10.1016/j.compeleceng.2016.09.019.

- Murala, S., R. Maheshwari, and R. Balasubramanian. 2012. Local tetra patterns: A new feature descriptor for content-based image retrieval. IEEE Transactions on Image Processing 21 (5):2874–86. doi:10.1109/TIP.2012.2188809.

- Nanni, L., S. Brahnam, and A. Lumini. 2010. Selecting the best performing rotation invariant patterns in local binary/ternary patterns. In International Conference on IP, Computer Vision, and Pattern Recognition, 369–75. USA.

- Nasser, M. A., J. Melendez, A. Moreno, O. A. Omer, and D. Puig. 2017. Breast tumor classification in ultrasound images using texture analysis and super-resolution methods. Engineering Applications of Artificial Intelligence 59:84–92. doi:10.1016/j.engappai.2016.12.019.

- Pan, Z., Z. Li, H. Fan, and X. Wu. 2017. Feature based local binary pattern for rotation invariant texture classification. Expert Systems with Applications 88:238–48. doi:10.1016/j.eswa.2017.07.007.

- Paschos, G., and M. Petrou. 2003. Histogram ratio features for color texture classification. Pattern Recognition Letters 24 (1–3):309–14. doi:10.1016/S0167-8655(02)00244-1.

- Porebski, A., N. Vandenbroucke, and L. Macaire. 2008. Haralick feature extraction from LBP images for color texture classification. In Proceedings of the 1st Workshops on Image Processing Theory, Tools and Applications, 1–8. Sousse, Tunisia.

- Ramteke, R., and K. Monali. 2012. Automatic medical image classification and abnormality detection using K nearest neighbour. Advanced Computer Research 2:190–96.

- Rios, A. G., S. Tabik, J. Luengo, and A. S. M. Shihavuddin. 2019. Coral species identification with texture or structure images using a two-level classifier based on convolutional neural networks. Knowledge-Based Systems 184:1–10. doi:10.1016/j.knosys.2019.104891.

- Satpathy, A., X. Jiang, and H. L. Eng. 2014. LBP-based edge-texture features for object recognition. IEEE Transactions on Image Processing 23:1953–64. doi:10.1109/TIP.2014.2310123.

- Singh, C., E. Walia, and K. Kaur. 2018. Color texture description with novel local binary patterns for effective image retrieval. Pattern Recognition 76:50–68. doi:10.1016/j.patcog.2017.10.021.

- Song, S., Z. Li, L. Niu, X. Zhou, G. Wang, Y. Gao, J. Wang, F. Liu, Q. Sui, L. Jiao, et al. 2019. Hypervascular hepatic focal lesions on dynamic contrast-enhanced CT: Preliminary data from arterial phase scans texture analysis for classification. Clinical Radiology 74:653–71. doi:10.1016/j.crad.2019.05.010.

- Srivastava, P., and A. Khare. 2017. Integration of wavelet transform, local binary patterns and moments for content based image retrieval. Journal of Visual Communication and Image Representation 42:78–103. doi:10.1016/j.jvcir.2016.11.008.

- Subrahmanyam, M., R. Maheswari, and R. Balasubramanian. 2012. Local maximum edge binary patterns: A new descriptor for image retrieval and object tracking. Signal Processing 92 (6):1467–79. doi:10.1016/j.sigpro.2011.12.005.

- Viji, K. S. A., and D. H. Rajesh. 2020. An efficient technique to segment the tumor and abnormality detection in the brain MRI images using KNN classifier. Materials Today: Proceedings 24:1944–54. doi:10.1016/j.matpr.2020.03.622.

- Xikai, X., J. Dong, W. Wang, and T. Tan. 2015. Local correction pattern for image steganalysis. In IEEE China Summit and International Conference on Signal and Information Processing, 1–6. Chengdu, China.

- Xu, Z., Y. Jiang, Y. Wang, Y. Zhou, Q. Liao, and Q. Liao. 2019. Local polynomial contrast binary patterns for face recognition. Neurocomputing 355:1–12. doi:10.1016/j.neucom.2018.09.056.

- Yang, C., and Q. Yu. 2018. Multiscale Fourier descriptor based on triangular features for shape retrieval. Signal Processing: Image Communication 71:1–44. doi:10.1016/j.image.2018.11.004.

- Yang, C., and Y. Yang. 2017. Improved local binary pattern for real scene optical character recognition. Pattern Recognition Letters 100:14–21. doi:10.1016/j.patrec.2017.08.005.

- Yuan, F., X. Xia, and J. Shi. 2018. Mixed co-occurrence of local binary patterns and hamming distance based local binary patterns. Information Sciences 460–461:202–22. doi:10.1016/j.ins.2018.05.033.

- Zeinali, B., A. Ayatollahi, and M. Kakooei. 2014. A novel method of applying directional filter bank (DFB) for finger-knuckle-print (FKP) recognition. In 22nd Iranian Conference on Electrical Engineering, 500–04. Tehran, Iran.

- Zhang, B., Y. Gao, S. Zhao, and J. Liu. 2010. Local derivative pattern versus local binary pattern: Face recognition with high-order local pattern descriptor. IEEE Transactions on Image Processing 19 (2):533–44. doi:10.1109/TIP.2009.2035882.

- Zhao, Y., W. Jia, R. Hu, and H. Min. 2013. Completed robust local binary pattern for texture classification. Neurocomputing 106:68–76. doi:10.1016/j.neucom.2012.10.017.