?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

Semantic segmentation is the pixel-wise labeling of an image. Boosted by the extraordinary ability of convolutional neural networks (CNN) in creating semantic, high-level and hierarchical image features; several deep learning-based 2D semantic segmentation approaches have been proposed within the last decade. In this survey, we mainly focus on the recent scientific developments in semantic segmentation, specifically on deep learning-based methods using 2D images. We started with an analysis of the public image sets and leaderboards for 2D semantic segmentation, with an overview of the techniques employed in performance evaluation. In examining the evolution of the field, we chronologically categorized the approaches into three main periods, namely pre-and early deep learning era, the fully convolutional era, and the post-FCN era. We technically analyzed the solutions put forward in terms of solving the fundamental problems of the field, such as fine-grained localization and scale invariance. Before drawing our conclusions, we present a table of methods from all mentioned eras, with a summary of each approach that explains their contribution to the field. We conclude the survey by discussing the current challenges of the field and to what extent they have been solved.

Introduction

Semantic segmentation has recently become one of the fundamental problems, and accordingly, a hot topic for the fields of computer vision and machine learning. Assigning a separate class label to each pixel of an image is one of the important steps in building complex robotic systems, such as driverless cars/drones, human-friendly robots, robot-assisted surgery, and intelligent military systems. Thus, it is no wonder that in addition to scientific institutions, industry-leading companies studying artificial intelligence are now summarily confronting this problem.

The simplest problem definition for semantic segmentation is pixel-wise labeling. Because the problem is defined at the pixel level, finding only class labels that the scene includes is considered insufficient, but localizing labels at the original image pixel resolution is also a fundamental goal. Depending on the context, class labels may change. For example, in a driverless car, the pixel labels may be human, road, and car (Siam et al. Citation2017), whereas for a medical system (Jiang et al. Citation2017; Saha and Chakraborty Citation2018), they could be cancer cells, muscle tissue, aorta wall etc.

The recent increase in interest in this topic has been undeniably caused by the extraordinary success seen with convolutional neural networks (LeCun et al. Citation1989) (CNN) that have been brought to semantic segmentation. Understanding a scene at the semantic level has long been one of the main topics of computer vision, but it is only now that we have seen actual solutions to the problem.

In this paper, our primary motivation is to focus on the recent scientific developments in semantic segmentation, specifically on the evolution of deep learning-based methods using 2D images. The reason we narrowed down our survey to techniques that utilize only 2D visible imagery is that, in our opinion, the scale of the problem in the literature is so vast and widespread that it would be impractical to analyze and categorize all semantic segmentation modalities (such as 3D point clouds, hyper-spectral data, MRI, CT,Footnote1 etc.) found in journal articles to any degree of detail. In addition to analyzing, the techniques that make semantic segmentation possible and accurate, we also examine the most popular image sets created for this problem. Additionally, we review the performance measures used for evaluating the success of semantic segmentation. Most importantly, we propose a taxonomy of methods, together with a technical evolution of them, which we believe is novel in the sense that it provides insight to the existing deficiencies and suggests future directions for the field.

The remainder of the paper is organized as follows: in the following subsection, we refer to other survey studies on the subject and underline our contribution. Section 2 presents information about the different image sets, the challenges, and how to measure the performance of semantic segmentation. Starting with Section 3, we chronologically scrutinize semantic segmentation methods under three main titles, hence in three separate sections. Section 3 covers the methods of pre- and early deep convolutional neural networks era. Section 4 provides details on the fully convolutional neural networks, which we consider a milestone for the semantic segmentation literature. Section 5 covers the state-of-the-art methods on the problem and provides details on both the architectural details and the success of these methods. Before finally concluding the paper in Section 7, Section 6 provides a future scope and potential directions for the field.

Surveys on Semantic Segmentation

Very recently, driven by both academia and industry, the rapid increase of interest in semantic segmentation has inevitably led to a number of survey studies being published (Thoma Citation2016; Ahmad et al. Citation2017; Jiang et al. Citation2017; Siam et al. Citation2017; Garcia-Garcia et al. Citation2017; Saffar et al. Citation2018; Yu et al. Citation2018; Guo et al. Citation2018; Lateef and Ruichek Citation2019; Minaee et al. Citation2020).

Some of these surveys focus on a specific problem, such as comparing semantic segmentation approaches for horizon/skyline detection (Ahmad et al. Citation2017), whilst others deal with relatively broader problems related to industrial challenges, such as semantic segmentation for driverless cars (Siam et al. Citation2017) or medical systems (Jiang et al. Citation2017). These studies are useful if working on the same specific problem, but they lack an overarching vision that may ‘technically’ contribute to the future directions of the field.

Another group (Thoma Citation2016; Saffar et al. Citation2018; Yu et al. Citation2018; Guo et al. Citation2018) of survey studies on semantic segmentation have provided a general overview of the subject, but they lack the necessary depth of analysis regarding deep learning-based methods. Whilst semantic segmentation was studied for two decades prior to deep learning, actual contribution to the field has only been achieved very recently, particularly following a revolutionary paper on fully convolutional networks (FCN) (Shelhamer, Long, and Darrell Citation2017) (which has also been thoroughly analyzed in this paper). It could be said that most state-of-the-art studies are in fact extensions of that same (Shelhamer, Long, and Darrell Citation2017) study. For this reason, without scrupulous analysis of FCNs and the direction of the subsequent papers, survey studies will lack the necessary academic rigor in examining semantic segmentation using deep learning.

There are recent reviews of deep semantic segmentation, for example, by (Garcia-Garcia et al. Citation2017) and (Minaee et al. Citation2020), which provide a comprehensive survey on the subject. These survey studies cover almost all the popular semantic segmentation image sets and methods, and for all modalities, such as 2D, RGB, 2.5D, RGB-D, and 3D data. Although they are inclusive in the sense that most related material on deep semantic segmentation is included, the categorization of the methods is coarse, since the surveys attempt to cover almost everything umbrellaed under the topic of semantic segmentation literature.

A detailed categorization of the subject was provided in (Lateef and Ruichek Citation2019). Although this survey provides important details on the subcategories that cover almost all approaches in the field, discussions on how the proposed techniques are chronologically correlated are left out of their scope. Recent deep learning studies on semantic segmentation follow a number of fundamental directions and labor with tackling the varied corresponding issues. In this survey paper, we define and describe these new challenges, and present the chronological evolution of the techniques of all the studies within this proposed context. We believe our attempt to understand the evolution of semantic segmentation architectures is the main contribution of the paper. We provide a table of these related methods, and explain them briefly one after another in chronological order, with their metric performance and computational efficiency. This way, we believe that readers will better understand the evolution, current state-of-the-art, as well as the future directions seen for 2D semantic segmentation.

Image Sets, Challenges, and Performance Evaluation

Image Sets and Challenges

The level of success for any machine-learning application is undoubtedly determined by the quality and the depth of the data being used for training. When it comes to deep learning, data is even more important since most systems are termed end-to-end; thus, even the features are determined by the data, not for the data. Therefore, data is no longer the object but becomes the actual subject in the case of deep learning.

In this section, we scrutinize the most popular large-scale 2D image sets that have been utilized for the semantic segmentation problem. The image sets were categorized into two main branches, namely general-purpose image sets, with generic class labels including almost every type of object or background, and also urban street image sets, which include class labels such as car and person, and are generally created for the training of driverless car systems. There are many other unresolved 2D semantic segmentation problem domains, such as medical imaging, satellite imagery, or infrared imagery. However, urban street image is currently driving scientific development in the field because they attract more attention from the industry. Therefore, very large-scale image sets and challenges with crowded leaderboards exist, yet, only specifically for industrial users. Scientific interest for depth-based semantic segmentation is growing rapidly; however, as mentioned in the Introduction, we have excluded depth-based and 3D-based segmentation datasets from the current study in order to focus with sufficient detail on the novel categorization of recent techniques pertinent to 2D semantic segmentation.

General Purpose Semantic Segmentation Image Sets

• PASCAL Visual Object Classes (VOC) (Everingham et al. Citation2010): This image set includes image annotations not only for semantic segmentation, but for also classification, detection, action classification, and person layout tasks. The image set and annotations are regularly updated and the leaderboard of the challenge is publicFootnote2 (with more than 140 submissions just for the segmentation challenge alone). It is the most popular among the semantic segmentation challenges and is still active following its initial release in 2005. The PASCAL VOC semantic segmentation challenge image set includes 20 foreground object classes and one background class. The original data consisted of 1,464 images for the purposes of training, plus 1,449 images for validation. The 1,456 test images are kept private for the challenge. The image set includes all types of indoor and outdoor images and is generic across all categories.

The PASCAL VOC image set has a number of extension image sets, the most popular among these are PASCAL Context (Mottaghi et al. Citation2014) and PASCAL Parts (Chen et al. Citation2014b). The first (Mottaghi et al. Citation2014) is a set of additional annotations for PASCAL VOC 2010, which goes beyond the original PASCAL semantic segmentation task by providing annotations for the whole scene. The statistics section contains a full list of more than 400 labels (compared to the original 21 labels). The second (Chen e tal. Citation2014b) is also a set of additional annotations for PASCAL VOC 2010. It provides segmentation masks for each body part of the object, such as the separately labeled limbs and body of an animal. For these extensions, the training and validation set contains 10,103 images, while the test set contains 9,637 images. There are other extensions to PASCAL VOC using other functional annotations such as the Semantic Parts (PASParts) (Wang et al. Citation2015) image set and the Semantic Boundaries Dataset (SBD) (Hariharan et al. Citation2011). For example, PASParts (Wang et al. Citation2015) additionally provides ‘instance’ labels, such as two instances of an object within an image are labeled separately, rather than using a single class label. However, unlike the former two additional extensions (Chen et al. Citation2014b; Mottaghi et al. Citation2014), these further extensions (Hariharan et al. Citation2011; Wang et al. Citation2015) have proven less popular as their challenges have attracted much less attention in state-of-the-art semantic segmentation studies; thus, their leaderboards are less crowded. In , a sample object, parts and instance segmentation are depicted.

Figure 1. A sample image and its annotation for object, instance and parts segmentations separately, from left to right.

• Common Objects in Context (COCO) (Lin et al. Citation2014): With 200 K labeled images, 1.5 million object instances, and 80 object categories, COCO is a very large-scale object detection, semantic segmentation, and captioning image set, including almost every possible types of scene. COCO provides challenges not only at the instance-level and pixel-level (which they refer to as stuff) semantic segmentation, but also introduces a novel task, namely that of panoptic segmentation (Kirillov et al. Citation2018), which aims at unifying instance-level and pixel-level segmentation tasks. Their leaderboardsFootnote3 are relatively less crowded because of the scale of the data. On the other hand, for the same reason, their challenges are assessed only by the most ambitious scientific and industrial groups, and thus are considered as the state-of-the-art in their leaderboards. Due to its extensive volume, most studies partially use this image set to pre-train or fine-tune their model, before submitting to other challenges such as PASCAL VOC 2012.

• ADE20K dataset (Zhou et al. Citation2019): ADE20K contains more than 20 K scene-centric images with objects and object parts annotations. Similarly to PASCAL VOC, there is a public leaderboardFootnote4 and the benchmark is divided into 20 K images for training, 2 K images for validation, and another batch of held-out images for testing. The samples in the dataset have varying resolutions (average image size being 1.3 M pixels), which can be up to 2400 1800 pixels. There are total of 150 semantic categories included for evaluation.

• Other General Purpose Semantic Segmentation Image Sets: Although less popular than either PASCAL VOC or COCO, there are also some other image sets in the same domain. Introduced by (Prest et al. Citation2012), YouTube-Objects is a set of low-resolution (480 360) video clips with more than 10k pixel-wise annotated frames.

Similarly, SIFT-flow (Tighe and Lazebnik Citation2010) is another low-resolution (256 256) semantic segmentation image set with 33 class labels for a total of 2,688 images. These and other relatively primitive image sets have been mostly abandoned in the semantic segmentation literature due to their limited resolution and low volume.

Urban Street Semantic Segmentation Image Sets

• Cityscapes (Cordts et al. Citation2016): This is a large-scale image set with a focus on the semantic understanding of urban street scenes. It contains annotations for high-resolution images from 50 different cities, taken at different hours of the day and from all seasons of the year, and also with varying backgrounds and scene layouts. The annotations are carried out at two quality levels: fine for 5,000 images and course for 20,000 images. There are 30 different class labels, some of which also have instance annotations (vehicles, people, riders, etc.). Consequently, there are two challenges with separate public leaderboardsFootnote5: one for pixel-level semantic segmentation, and a second for instance-level semantic segmentation. There are more than 100 entries to the challenge, making it the most popular regarding semantic segmentation of urban street scenes.

• Other Urban Street Semantic Segmentation Image Sets: There are a number of alternative image sets for urban street semantic segmentation, such as Cam-Vid (Brostow, Fauqueur, and Cipolla Citation2009), KITTI (Geiger et al. Citation2013), SYNTHIA (Ros et al. Citation2016a), and IDD (Varma et al. Citation2018). These are generally overshadowed by the Cityscapes image set (Cordts et al. Citation2016) for several reasons. Principally, their scale is relatively low. Only the SYNTHIA image set (Ros et al. Citation2016a) can be considered as large scale (with more than 13k annotated images); however, it is an artificially generated image set, and this is considered a major limitation for security-critical systems like driverless cars.

Small-scale and Imbalanced Image Sets

In addition to the aforementioned large-scale image sets of different categories, there are several image sets with insufficient scale or strong imbalance such that, when applied to deep learning-based semantic segmentation models, high-level segmentation accuracies cannot be directly obtained. Most public challenges on semantic segmentation include sets of this nature such as the DSTL or RIT-18 (DSTLab; Kemker, Salvaggio, and Kanan Citation2018), just to name a few. Because of the overwhelming numbers of these types of sets, we chose to include only the details of the large-scale sets that attract the utmost attention from the field.

Nonetheless, being able to train a model that performs well on small-scale or imbalanced data is a correlated problem to ours. Besides conventional deep learning techniques, such as transfer learning or data augmentation; the problem of insufficient or imbalanced data can be attacked by using specially designed deep learning architectures such as some optimized convolution layer types (Chen et al. (Citation2018a); He et al. (Citation2015), etc.) and others that we cover in this survey paper. What is more, there are recent studies that focus on the specific problem of utilizing insufficient sets for the problem of deep learning-based semantic segmentation (Xia, Lu, and Gu Citation2019). Although we acknowledge this problem as fundamental for the semantic segmentation field, we leave the discussions on techniques to handle small-scale or imbalanced sets for semantic segmentation, beyond the scope of this survey paper.

Performance Evaluation

There are two main criteria in evaluating the performance of semantics segmentation: accuracy, or in other words, the success of an algorithm; and computation complexity in terms of speed and memory requirements. In this section, we analyze these two criteria separately.

Accuracy

Measuring the performance of segmentation can be complicated, mainly because there are two distinct values to measure. The first is classification, which is simply determining the pixel-wise class labels; and the second is localization, or finding the correct set of pixels that enclose the object. Different metrics can be found in the literature to measure one or both of these values. The following is a brief explanation of the principal measures most commonly used in evaluating semantic segmentation performance.

• ROC-AUC: ROC stands for the Receiver-Operator Characteristic curve, which summarises the trade-off between true positive rate and false-positive rate for a predictive model using different probability thresholds; whereas AUC stands for the area under this curve, which is 1 at maximum. This tool is useful in interpreting binary classification problems and is appropriate when observations are balanced between classes. However, since most semantic segmentation image sets (Everingham et al. Citation2010; Mottaghi et al. Citation2014; Chen et al. Citation2014b; Wang et al. Citation2015; Hariharan et al. Citation2011; Lin et al. Citation2014; Cordts et al. Citation2016) are not balanced between the classes, this metric is no longer used by the most popular challenges.

• Pixel Accuracy: Also known as global accuracy (Badrinarayanan, Kendall, and Cipolla Citation2015), pixel accuracy (PA) is a very simple metric which calculates the ratio between the amount of properly classified pixels and their total number. Mean pixel accuracy (mPA), is a version of this metric which computes the ratio of correct pixels on a per-class basis. mPA is also referred to as class average accuracy (Badrinarayanan, Kendall, and Cipolla Citation2015).

where is the total number of pixels both classified and labeled as class j. In other words,

corresponds to the total number of True Positives for class j.

is the total number of pixels labeled as class j.

• Intersection over Union (IoU): Also known as the Jaccard Index, IoU is a statistic used for comparing the similarity and diversity of sample sets. In semantics segmentation, it is the ratio of the intersection of the pixel-wise classification results with the ground truth, to their union.

where, is the number of pixels, which are labeled as class i, but classified as class j. In other words, they are False Positives (false alarms) for class j. Similarly,

, the total number of pixels labeled as class j, but classified as class i are the False Negatives (misses) for class j.

Two extended versions of IoU are also widely in use:

Mean Intersection over Union (mIoU): mIoU is the class-averaged IoU, as in (Equation3

(3)

(3) ).

Frequency-weighted IoU (FwIoU): This is an improved version of MIoU that weighs each class importance depending on appearance frequency by using

(the total number of pixels labeled as class j, as also defined in (1)). The formula of FwIoU is given in (Equation4

(4)

(4) ):

IoU and its extensions, compute the ratio of true positives (hits) to the sum of false positives (false alarms), false negatives (misses) and true positives (hits). Thereby, the IoU measure is more informative when compared to pixel accuracy simply because it takes false alarms into consideration, whereas PA does not. However, since false alarms and misses are summed up in the denominator, the significance between them is not measured by this metric, which is considered its primary drawback. In addition, IoU only measures the number of pixels correctly labeled without considering how accurate the segmentation boundaries are.

• Precision-Recall Curve (PRC)-based metrics: Precision (ratio of hits over a summation of hits and false alarms) and recall (ratio of hits over a summation of hits and misses) are the two axes of the PRC used to depict the trade-off between precision and recall, under a varying threshold for the task of binary classification. PRC is very similar to ROC. However, PRC is more powerful in discriminating the effects between the false positives (alarms) and false negatives (misses). That is predominantly why PRC-based metrics are commonly used for evaluating the performance of semantic segmentation. The formula for Precision (also called Specificity) and Recall (also called Sensitivity) for a given class j, are provided in (Equation5(5)

(5) ):

There are three main PRC-based metrics:

F

: Also known as the ‘dice coefficient,’ this measure is the harmonic mean of the precision and recall for a given threshold. It is a normalised measure of similarity, and ranges between 0 and 1 (Please see (Equation6

(6)

(6) )).

PRC-AuC: This is similar to the ROC-AUC metric. It is simply the area under the PRC. This metric refers to information about the precision-recall trade-off for different thresholds, but not the shape of the PR curve.

Average Precision (AP): This metric is a single value that summarizes both the shape and the AUC of PRC. In order to calculate AP, using the PRC, for uniformly sampled recall values (e.g., 0.0, 0.1, 0.2, …, 1.0), precision values are recorded. The average of these precision values is referred to as the average precision. This is the most commonly used single value metric for semantic segmentation. Similarly, mean average precision (mAP) is the mean of the AP values, calculated on a per-class basis.

• Hausdorff Distance (HD): Hausdorff Distance is used incorporating the longest distance between classified and labeled pixels as an indicator of the largest segmentation error (Jadon Citation2020; Karimi and Salcudean Citation2019), with the aim of tracking the performance of a semantic segmentation model. The unidirectional HDs as and

are presented in (Equation7

(7)

(7) ) and (Equation8

(8)

(8) ), respectively.

where, and

are the pixel sets. The

is the pixel in the segmented counter

and

is the pixel in the target counter

(Huang et al. Citation2020). The bidirectional HD between these sets is shown in (9), where the Euclidean distance is employed for (Equation7

(7)

(7) ), (Equation8

(8)

(8) ), and (Equation9

(9)

(9) ).

IoU and its variants, along with AP, are the most commonly used accuracy evaluation metrics in the most popular semantic segmentation challenges (Everingham et al. Citation2010; Mottaghi et al. Citation2014; Chen et al. Citation2014b; Wang et al. Citation2015; Hariharan et al. Citation2011; Lin et al. Citation2014; Cordts et al. Citation2016).

Computational Complexity

The burden of computation is evaluated using two main metrics: how fast the algorithm completes, and how much computational memory is demanded.

• Execution time: This is measured as the whole processing time, starting from the instant a single image is introduced to the system/algorithm right through until the pixel-wise semantic segmentation results are obtained. The performance of this metric significantly depends on the hardware utilized. Thus, for an algorithm, any execution time metric should be accompanied by a thorough description of the hardware used. There are notations such as Big-O, which provide a complexity measure independent of the implementation domain. However, these notations are highly theoretical and are predominantly not preferred for extremely complex algorithms such as deep semantic segmentation as they are simple and largely inaccurate.

For a deep learning-based algorithm, the offline (i.e., training) and online (i.e., testing) operation may last for considerably different time intervals. Technically, the execution time refers only to the online operation or academically speaking, the test duration for a single image. Although this metric is extremely important for industrial applications, academic studies refrain from publishing exact execution times, and none of the aforementioned challenges was found to have provided this metric. A recent study, (Zhao et al. Citation2018a) provided a 2D histogram of Accuracy (MIoU%) vs frames-per-second, in which some of the state-of-the-art methods with open-source codes (including their proposed structure, namely image cascade network – ICNet), were benchmarked using the Cityscapes (Cordts et al. Citation2016) image set.

• Memory Usage: Memory usage is specifically important when semantic segmentation is utilized in limited performance devices, such as smartphones, digital cameras, or when the requirements of the system are extremely restrictive. The prime examples of these would be military systems or security-critical systems, such as self-driving cars.

The usage of memory for a complex algorithm like semantic segmentation may change drastically during operation. That is why a common metric for this purpose is peak memory usage, which is simply the maximum memory required for the entire segmentation operation for a single image. The metric may apply to computer (data) memory or GPU memory depending on the hardware design.

Although critical for industrial applications, this metric is not usually made available for any of the aforementioned challenges.

Computational efficiency is a very important aspect of any algorithm that is to be implemented on a real system. A comparative assessment of the speed and capacity of various semantic segmentation algorithms is a challenging task. Although most state-of-the-art algorithms are available with open-source codes, benchmarking all of them, with their optimal hyper-parameters, seems implausible. For this purpose, we provide an inductive way of comparing the computational efficiencies of methods in the following sections. In , we categorize methods into mainly four levels of computational efficiency and discuss our categorization related to the architectural design of a given method. This table also provides a chronological evolution of the semantic segmentation methods in the literature.

Table 1. State-of-the-art semantic segmentation methods, showing the method name and reference, brief summary, problem type targeted, and refinement model (if any).

Before Fully Convolutional Networks

As mentioned in the Introduction, the utilization of FCNs is a breaking point for semantic segmentation literature. Efforts on semantic segmentation literature prior to FCNs (Shelhamer, Long, and Darrell Citation2017) can be analyzed in two separate branches, as pre-deep learning and early deep learning approaches. In this section, we briefly discuss both sets of approaches.

Pre-Deep Learning Approaches

The differentiating factor between conventional image segmentation and semantic segmentation is the utilization of semantic features in the process. Conventional methods for image segmentation such as thresholding, clustering, and region growing, etc., (please see (Zaitoun and Aqel Citation2015) for a survey on conventional image segmentation techniques) utilize handcrafted low-level features (i.e., edges and blobs) to locate object boundaries in images. Thus, in situations where the semantic information of an image is necessary for pixel-wise segmentation, such as in similar objects occluding each other, these methods usually return a poor performance.

Regarding semantic segmentation efforts prior to DCNNs becoming popular, a wide variety of approaches (Fröhlich, Rodner, and Denzler Citation2013; He and Zemel Citation2008; Krähenbühl and Koltun Citation2011; Ladický et al. Citation2009; Lempitsky, Vedaldi, and Zisserman Citation2011; Mičušĺík and Košecká Citation2009; Montillo et al. Citation2011; Rav et al. Citation2016; Shotton, Johnson, and Cipolla Citation2008; Ulusoy and Bishop Citation2005; Vezhnevets, Ferrari, and Buhmann Citation2011; Xiao and Quan Citation2009; Yao, Fidler, and Urtasun Citation2012) utilized graphical models, such as Markov Random Fields (MRF), Conditional Random Fields (CRF) or forest-based (or sometimes referred to as ‘holistic’) methods, in order to find scene labels at the pixel level. The main idea was to find an inference by observing the dependencies between neighboring pixels. In other words, these methods modeled the semantics of the image as a kind of prior information among adjacent pixels. Thanks to deep learning, today we know that image semantics require abstract exploitation of large-scale data. Initially, graph-based approaches were thought to have this potential. The so-called ‘super-pixelisation,’ which is usually the term applied in these studies, was a process of modeling abstract regions. However, a practical and feasible implementation for large-scale data processing was never achieved for these methods, while it was accomplished for DCNNs, first by (Krizhevsky et al. Citation2012) and then in many other studies.

Another group of studies, sometimes referred to as the ‘Layered models’ (Arbeláez et al. Citation2012; Ladický et al. Citation2010; Yang et al. Citation2012), used a composition of pre-trained and separate object detectors so as to extract the semantic information from the image. Because the individual object detectors failed to classify regions properly, or because the methods were limited by the finite number of object classes provided by the ‘hand-selected’ bank of detectors in general, their performance was seen as relatively low compared to today’s state-of-the-art methods.

Although the aforementioned methods of the pre-deep learning era are no longer preferred as segmentation methods, some of the graphical models, especially CRFs, are currently being utilized by the state-of-the-art methods as post-processing (refinement) layers, with the purpose of improving the semantic segmentation performance, the details of which are discussed in the following section.

Refinement Methods

Deep neural networks are powerful in extracting abstract local features. However, they lack the capability to utilize global context information, and accordingly cannot model interactions between adjacent pixel predictions (Teichmann and Cipolla Citation2018). On the other hand, the popular segmentation methods of the pre-deep learning era, the graphical models, are highly suited to this sort of task. That is why they are currently being used as a refinement layer on many DCNN-based semantic segmentation architectures.

As also mentioned in the previous section, the idea behind using graphical models for segmentation is finding an inference by observing the low-level relations between neighboring pixels. In , the effect of using a graphical model-based refinement on segmentation results can be seen. The classifier (see .b) cannot correctly segment pixels where different class labels are adjacent. In this example, a CRF-based refinement (Krähenbühl and Koltun Citation2011) is applied to improve the pixel-wise segmentation results. CRF-based methods are widely used for the refinement of deep semantic segmentation methods, although some alternative graphical model-based refinement methods also exist in the literature (Liu et al. Citation2015; Zuo and Drummond Citation2017).

CRFs (Lafferty, McCallum, and Pereira Citation2001) are a type of discriminative undirected probabilistic graphical model. They are used to encode known relationships between observations and to construct consistent interpretations. Their usage as a refinement layer comes from the fact that, unlike a discrete classifier, which does not consider the similarity of adjacent pixels, a CRF can utilize this information. The main advantage of CRFs over other graphical models (such as Hidden Markov Models) is their conditional nature and their ability to avoid the problem of label bias (Lafferty, McCallum, and Pereira Citation2001). Even though a considerable number of methods (see ) utilize CRFs for refinement, these models started to lose popularity in relatively recent approaches because they are notoriously slow and very difficult to optimize (Teichmann and Cipolla Citation2018).

Early Deep Learning Approaches

Before FCNs first appeared in 2014,Footnote6 the initial few years of deep convolutional networks saw a growing interest in the idea of utilizing the newly discovered deep features for semantic segmentation (Ciresan et al. Citation2012; Farabet et al. Citation2013; Ganin and Lempitsky Citation2014; Hariharan et al. Citation2014; Ning et al. Citation2005; Pinheiro and Collobert Citation2014). The very first approaches, which were published prior to the proposal of a Rectified Linear Unit (ReLU) layer (Krizhevsky et al. Citation2012), used activation functions such as tanh (Ning et al. Citation2005) (or similar continuous functions), which can be computationally difficult to differentiate. Thus, training such systems were not considered to be computation-friendly, or even feasible for large-scale data.

However, the first mature approaches were just simple attempts to convert classification networks such Alex-Net and VGG to segmentation networks by fine-tuning the fully connected layers (Ciresan et al. Citation2012; Ganin and Lempitsky Citation2014; Ning et al. Citation2005). They suffered from the overfitting and time-consuming nature of their fully connected layers in the training phase. Moreover, the CNNs used were not sufficiently deep so as to create abstract features, which would relate to the semantics of the image.

There were a few early deep learning studies in which the researchers declined to use fully connected layers for their decision-making. However, they utilized different structures such as a recurrent architecture (Pinheiro and Collobert Citation2014) or using labelling from a family of separately computed segmentations (Farabet et al. Citation2013). By proposing alternative solutions to fully connected layers, these early studies showed the first traces of the necessity for a structure like the FCN, and unsurprisingly they were succeeded by (Shelhamer, Long, and Darrell Citation2017).

Since their segmentation results were deemed to be unsatisfactory, these studies generally utilized a refinement process, either as a post-processing layer(Ciresan et al. Citation2012; Ganin and Lempitsky Citation2014; Hariharan et al. Citation2014; Ning et al. Citation2005) or as an alternative architecture to fully connected decision layers (Farabet et al. Citation2013; Pinheiro and Collobert Citation2014). Refinement methods varied, such as Markov random fields (Ning et al. Citation2005), nearest neighbor-based approach (Ganin and Lempitsky Citation2014), the use of a calibration layer (Ciresan et al. Citation2012), using super-pixels (Farabet et al. Citation2013; Hariharan et al. Citation2014), or a recurrent network of plain CNNs (Pinheiro and Collobert Citation2014). Refinement layers, as discussed in the previous section, are still being utilized by post-FCN methods, with the purpose of increasing the pixel-wise labelling performance around regions where class intersections occur.

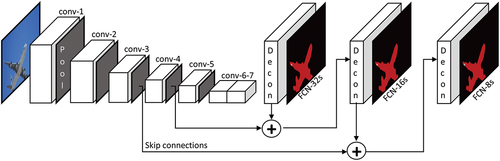

Fully Convolutional Networks for Semantic Segmentation

In (Shelhamer, Long, and Darrell Citation2017), the idea of dismantling fully connected layers from deep CNNs (DCNN) was proposed, and to imply this idea, the proposed architecture was named as ‘Fully Convolutional Networks’ (see ). The main objective was to create semantic segmentation networks by adapting classification networks such as AlexNet (Krizhevsky et al. Citation2012), VGG (Simonyan and Zisserman Citation2015), and GoogLeNet (Szegedy et al. Citation2015) into fully convolutional networks, and then transferring their learnt representations by fine-tuning. The most widely used architectures obtained from the study (Shelhamer, Long, and Darrell Citation2017) are known as ‘FCN-32s,’ ‘FCN16s,’ and ‘FCN8s,’ which are all transfer-learnt using the VGG architecture (Simonyan and Zisserman Citation2015).

Figure 3. Fully convolutional networks (FCNs) are trained end-to-end and are designed to make dense predictions for per-pixel tasks like semantic segmentation. FCNs consist of no fully connected layers.

FCN architecture was considered revolutionary in many aspects. First of all, since FCNs did not include fully connected layers, inference per image was seen to be considerably faster. This was mainly because convolutional layers when compared to fully connected layers, had a marginal number of weights. Second, and maybe more significant, the structure allowed segmentation maps to be generated for images of any resolution. In order to achieve this, FCNs used deconvolutional layers that can upsample coarse deep convolutional layer outputs to dense pixels of any desired resolution. Finally, and most importantly, they proposed the skip architecture for DCNNs.

Skip architectures (or connections) provide links between nonadjacent layers in DCNNs. Simply by summing or concatenating outputs of unconnected layers, these connections enable information to flow, which would otherwise be lost because of an architectural choice such as max-pooling layers or dropouts. The most common practice is to use skip connections preceding a max-pooling layer, which downsamples layer output by choosing the maximum value in a specific region. Pooling layers helps the architecture create feature hierarchies, but also causes loss of localized information, which could be valuable for semantic segmentation, especially at object borders. Skip connections preserve and forward this information to deeper layers by way of bypassing the pooling layers. Actually, the usage of skip connections in (Shelhamer, Long, and Darrell Citation2017) was perceived as being considerably primitive. The ‘FCN-8s’ and ‘FCN-16s’ networks included these skip connections at different layers. Denser skip connections for the same architecture, namely ‘FCN-4s’ and ‘FCN-2s,’ were also utilized for various applications (Lee et al. Citation2017; Zhong et al. Citation2016). This idea eventually evolved into the encoder-decoder structures (Badrinarayanan, Kendall, and Cipolla Citation2015; Ronneberger, Fischer, and Brox Citation2015) for semantic segmentation, which are presented in the following section.

Post-FCN Approaches

Almost all subsequent approaches on semantic segmentation followed the idea of FCNs; thus it would not be wrong to state that decision-making with fully connected layers effectively ceased to existFootnote7 following the appearance of FCNs to the issue of semantic segmentation.

On the other hand, the idea of FCNs also created new opportunities to further improve deep semantic segmentation architectures. Generally speaking, the main drawbacks of FCNs can be summarized as inefficient loss of label localization within the feature hierarchy, inability to process global context knowledge, and the lack of a mechanism for multiscale processing. Thus, most subsequent studies have been principally aimed at solving these issues through the proposal of various architectures or techniques. For the remainder of this paper, we analyze these issues under the title, ‘fine-grained localisation.’ Consequently, before presenting a list of the post-FCN state-of-the-art methods, we focus on this categorization of techniques and examine different approaches that aim at solving these main issues. In the following, we also discuss scale invariance in the semantic segmentation context and finish with object detection-based approaches, which are a new breed of solution that aim at resolving the semantic segmentation problem simultaneously with detecting object instances.

Techniques for Fine-grained Localisation

Semantic segmentation is, by definition, a dense procedure; hence, it requires fine-grained localisation of class labels at the pixel level. For example, in robotic surgery, pixel errors in semantic segmentation can lead to life or death situations. Hierarchical features created by pooling (i.e., max-pooling) layers can partially lose localisation. Moreover, due to their fully convolutional nature, FCNs do not inherently possess the ability to model global context information in an image, which is also very effective in the localisation of class labels. Thus, these two issues are intertwined in nature, and in the following, we discuss different approaches that aim at overcoming these problems and to provide finer localisation of class labels.

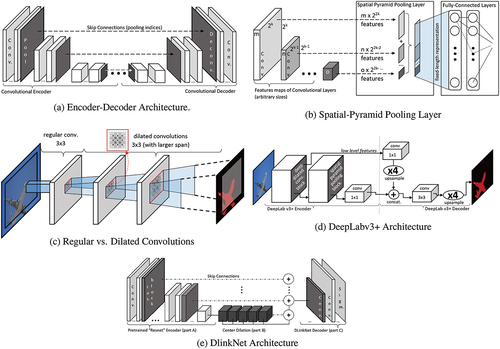

Encoder-Decoder Architecture

The so-called Encoder-Decoder (ED) architectures (also known as the U-nets, referring to the pioneering study of (Ronneberger, Fischer, and Brox Citation2015)) are comprised of two parts. Encoder gradually reduces the spatial dimension with pooling layers, whilst the decoder gradually recovers the object details and spatial dimension. Each feature map of the decoder part only directly receives the information from the feature map at the same level of the encoder part using skip connections, thus EDs can create abstract hierarchical features with fine localisation (see .a). U-Net (Ronneberger, Fischer, and Brox Citation2015) and Seg-Net (Badrinarayanan, Kendall, and Cipolla Citation2015) are very well-known examples. In this architecture, the strongly correlated semantic information, which is provided by the adjacent lower-resolution feature map of the encoder part, has to pass through additional intermediate layers in order to reach the same decoder layer. This usually results in a level of information decay. However, U-Net architectures have proven very useful for the segmentation of different applications, such as medical images (Ronneberger, Fischer, and Brox Citation2015), street view images (Badrinarayanan, Kendall, and Cipolla Citation2015), satellite images (Ulku et al. Citation2019), just to name a few. Although earlier ED architectures were designed for object segmentation tasks only, there are also modified versions such as “TernausNetV2” (Iglovikov et al. Citation2018), that provide instance segmentation capability with minor architectural changes.

Spatial Pyramid Pooling

The idea of constructing a fixed-sized spatial pyramid was first proposed by (Lazebnik, Schmid, and Ponce Citation2006), in order to prevent a Bag-of-Words system losing spatial relations among features. Later, the approach was adopted to CNNs by (He et al. Citation2015), in that, regardless of the input size, a spatial pyramid representation of deep features could be created in a Spatial Pyramid Pooling Network (SPP-Net). The most important contribution of the SPP-Net was that it allowed inputs of different sizes to be fed into CNNs. Images of different sizes fed into convolutional layers inevitably create different-sized feature maps. However, if a pooling layer, just prior to a decision layer, has stride values proportional to the input size, the feature map created by that layer would be fixed (see .b). By (Li et al. Citation2018), a modified version, namely Pyramid Attention Network (PAN) was additionally proposed. The idea of PAN was combining an SPP layer with global pooling to learn a better feature representation.

There is a common misconception that SPP-Net structure carries an inherent scale-invariance property, which is incorrect. SPP-Net allows the efficient training of images at different scales (or resolutions) by allowing different input sizes to a CNN. However, the trained CNN with SPP is scale-invariant if, and only if, the training set includes images with different scales. This fact is also true for a CNN without SPP layers.

However, similar to the original idea proposed in (Lazebnik, Schmid, and Ponce Citation2006), the SPP layer in a CNN constructs relations among the features of different hierarchies. Thus, it is quite similar to skip connections in ED structures, which also allow information flow between feature hierarchies. The most common utilization of an SPP layer for semantic segmentation is proposed in (He et al. Citation2015), such that the SPP layer is appended to the last convolutional layer and fed to the pixel-wise classifier.

Feature Concatenation

This idea is based on fusing features extracted from different sources. For example, in (Pinheiro, Collobert, and Dollar Citation2015) the so-called ‘DeepMask’ network utilizes skip connections in a feed-forward manner, so that an architecture partially similar to both SPP layer and ED is obtained. The same group extends this idea with a top-down refinement approach of the feed-forward module and propose the so-called ‘SharpMask’ network (Pinheiro et al. Citation2016), which has proven to be more efficient and accurate in segmentation performance. Another approach from this category is the so-called ‘ParseNet’ (Liu et al. Citation2015), which fuses CNN features with external global features from previous layers in order to provide context knowledge. Another approach by (Wang et al. Citation2020) is to fuse the “stage features” (i.e. deep encoder activations) with “refinement path features” (an idea similar to skip connections), using a convolutional (Feature Adaptive Fusion FAF) block. Although a novel idea in principle, feature fusion approaches (including SPP) create hybrid structures; therefore, they are relatively difficult to train.

Dilated Convolution

The idea of dilated (atrous) convolutions is actually quite simple: with contiguous convolutional filters, an effective receptive field of units can only grow linearly with layers; whereas with dilated convolution, which has gaps in the filter (see .c), the effective receptive field would grow much more quickly (Chen et al. Citation2018a). Thus, with no pooling or subsampling, a rectangular prism of convolutional layers is created. Dilated convolution is a very effective and powerful method for the detailed preservation of feature map resolutions. The negative aspect of the technique, compared to other techniques, concerns its higher demand for GPU storage and computation, since the feature map resolutions do not shrink within the feature hierarchy (He et al. Citation2016).

Conditional Random Fields

As also discussed in Section 3.1.1, CNNs naturally lack mechanisms to specifically ‘focus’ on regions where class intersections occur. Around these regions, graphical models are used to find inference by observing low-level relations between neighboring feature maps of CNN layers. Consequently, graphical models, mainly CRFs, are utilized as refinement layers in deep semantic segmentation architectures. As in (Rother, Kolmogorov, and Blake Citation2004), CRFs connect low-level interactions with output from multiclass interactions, and in this way, global context knowledge is constructed.

As a refinement layer, various methods exist that employ CRFs to DCNNs, such as the Convolutional CRFs (Teichmann and Cipolla Citation2018), the Dense CRF (Krähenbühl and Koltun Citation2011), and CRN-as-RNN (Zheng et al. Citation2015). In general, CRFs help build context knowledge and thus a finer level of localisation in class labels.

Recurrent Approaches

The ability of Recurrent Neural Networks (RNNs) to handle sequential information can help improve segmentation accuracy. For example, (Pfeuffer, Schulz, and Dietmayer Citation2019) used Conv-LSTM layers to improve their semantic segmentation results in image sequences. However, there are also methods that use recurrent structures on still images. For example, the Graph LSTM network (Liang et al. Citation2016) is a generalization of LSTM from sequential data or multidimensional data to general graph-structured data for semantic segmentation on 2D still images. Graph-RNN Shuai2016 is another example of a similar approach in which an LSTM-based network is used to fuse a deep encoder output with the original image in order to obtain a finer pixel-level segmentation. Likewise, in (Lin et al. Citation2018), the researchers utilized LSTM-chains in order to intertwine multiple scales, resulting in pixel-wise segmentation improvements. There are also hybrid approaches where CNNs and RNNs are fused. A good example of this is the so-called ReSeg model (Visin et al. Citation2016), in which the input image is fed to a VGG-like CNN encoder, and is then processed afterwards by recurrent layers (namely the ReNet architecture) in order to better localize the pixel labels. Another similar approach is the DAG-RNN (Shuai et al. Citation2016), which utilize a DAG-structured CNN+RNN network, and models long-range semantic dependencies among image units. To the best of our knowledge, no purely recurrent structures for semantic segmentation exist, mainly because semantic segmentation requires a preliminary CNN-based feature encoding scheme.

There is currently an increasing trend in one specific type of RNN, namely ‘recurrent attention modules.’ In these modules, attention (Vaswani et al. Citation2017) is technically fused in the RNN, providing a focus on certain regions of the input when predicting a certain part of the output sequence. Consequently, they are also being utilized in semantic segmentation (Li et al. Citation2019b; Zhao et al. Citation2018b; Oktay et al. Citation2018).

Scale-Invariance

Scale Invariance is, by definition, the ability of a method to process a given input, independent of the relative scale (i.e. the scale of an object to its scene) or image resolution. Although it is extremely crucial for certain applications, this ability is usually overlooked or is confused with a method’s ability to include multiscale information. A method may use multiscale information to improve its pixel-wise segmentation ability, but can still be dependent on scale or resolution. That is why we find it necessary to discuss this issue under a different title and to provide information on the techniques that provide scale and/or resolution invariance.

In computer vision, any method can become scale invariant if trained with multiple scales of the training set. Some semantic segmentation methods, such as (Eigen and Fergus Citation2014; Farabet et al. Citation2013; Lin et al. Citation2016a; Pinheiro and Collobert Citation2014; Yu and Koltun Citation2015) utilize this strategy. However, these methods do not possess an inherent scale-invariance property, which is usually obtained by normalization with a global scale factor (such as in SIFT by (Lowe Citation2004)). This approach is not usually preferred in the literature on semantic segmentation. The image sets that exist in semantic segmentation literature are extremely large in size. Thus, the methods are trained to memorize that training set, because in principle, overfitting a large-scale training set is actually tantamount to solving the entire problem space.

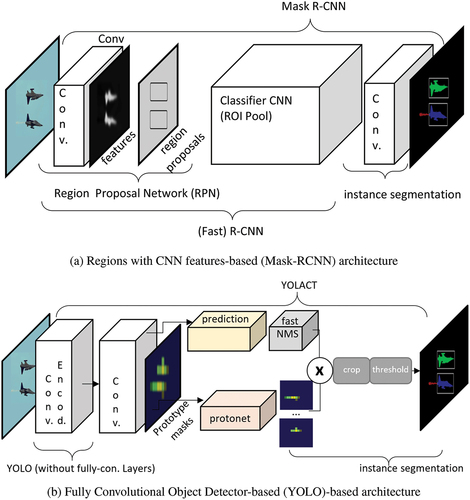

Object Detection-based Methods

There has been a recent growing trend in computer vision, which aims at specifically resolving the problem of object detection, that is, establishing a bounding box around all objects within an image. Given that the image may or may not contain any number of objects, the architectures utilized to tackle such a problem differ to the existing fully-connected/convolutional classification or segmentation models.

The pioneering study that represents this idea is the renowned ‘Regions with CNN features’ (RCNN) network (Girshick et al. Citation2013). Standard CNNs with fully convolutional and fully connected layers lack the ability to provide varying length output, which is a major flaw for an object detection algorithm that aims to detect an unknown number of objects within an image. The simplest way to resolve this problem is to take different regions of interest from the image, and then to employ a CNN in order to detect objects within each region separately. This region selection architecture is called the ‘Region Proposal Network’ (RPN) and is the fundamental structure used to construct the RCNN network (see .a). Improved versions of RCNN, namely ‘Fast-RCNN’ (Girshick et al. Citation2013) and ‘Faster-RCNN’ (Ren et al. Citation2015) were subsequently also proposed by the same research group. Because these networks allow for the separate detection of all objects within the image, the idea was easily implemented for instance segmentation, as the ‘Mask-RCNN’ (He et al. Citation2017).

The basic structure of RCNNs included the RPN, which is the combination of CNN layers and a fully connected structure in order to decide the object categories and bounding box positions. As discussed within the previous sections of this paper, due to their cumbersome structure, fully connected layers were largely abandoned with FCNs. RCNNs shared a similar fate when the ‘You-Only-Look-Once’ (YOLO) by (Redmon et al. Citation2016) and ‘Single Shot Detector’ (SSD) by (Liu et al. Citation2016) were proposed. YOLO utilizes a single convolutional network that predicts the bounding boxes and the class probabilities for these boxes. It consists of no fully connected layers, and consequently provides real-time performance. SSD proposed a similar idea, in which bounding boxes were predicted after multiple convolutional layers. Since each convolutional layer operates at a different scale, the architecture is able to detect objects of various scales. Whilst slower than YOLO, it is still considered to be faster then RCNNs. This new breed of object detection techniques was immediately applied to semantic segmentation. Similar to MaskRCNN, ‘Mask-YOLO’ (Sun Citation2019) and ‘YOLACT’ (Bolya et al. Citation2019) architectures were implementations of these object detectors to the problem of instance segmentation (see ). Similar to YOLACT, some other methods also achieve fast, real-time instance segmentation such as: ESE-Seg (Xu et al. 2019a), SOLO (Wang et al. Citation2019a), SOLOv2 (Wang et al. 2020a), DeepSnake (Peng et al. Citation2020), and CenterPoly (Perreault et al. Citation2021).

Locating objects within an image prior to segmenting them at the pixel level is both intuitive and natural, due to the fact that it is effectively how the human brain supposedly accomplishes this task (Rosenholtz Citation2016). In addition to these two-stage (detection+segmentation) methods, there are some recent studies that aim at utilizing the segmentation task to be incorporated into one-stage bounding-box detectors and result in a simple yet efficient instance segmentation framework (Xu et al. 2019a; R. Zhang et al. 2020a; Lee and Park Citation2020; Xie et al. Citation2020a). However, the latest trend is to use global-area-based methods by generating intermediate FCN feature maps and then assembling these basis features to obtain final masks (Chen et al. Citation2020; Ke, Tai, and Tang Citation2021; Kim et al. Citation2021).

In recent years, a trend of alleviating the demand for pixel-wise labels is realized mainly by employing bounding boxes, and by expanding from semantic segmentation to instance segmentation applications. In both semantic segmentation and instance segmentation methods, the category of each pixel is recognized, and the only difference is that instance segmentation also differentiates object occurrences of the same category. Therefore, weakly-supervisedinstance segmentation (WSIS) methods are also utilized for instance segmentation. The supervision of WSIS methods can use different annotation types for training, which are usually in the form of either bounding boxes (Khoreva et al. Citation2017; Hsu et al. Citation2019; Arun, Jawahar, and Kumar Citation2020; Tian et al. Citation2021; Lee et al. Citation2021; Cheng et al. 2021b) or image-level labels (Liu et al. Citation2020; Shen et al. 2021b; Zhou et al. Citation2016; Shen et al. 2021a). Hence, employing object detection-based methods for semantic segmentation is an area significantly prone to further development in near future by the time this manuscript is prepared.

Evolution of Methods

In , we present several semantic segmentation methods, each with a brief summary, explaining the fundamental idea that represents the proposed solutions, their position in available leaderboards, and a categorical level of the method’s computational efficiency. The intention is for readers to gain a better evolutionary understanding of the methods and architectures in this field, and a clearer conception of how the field may subsequently progress in the future. Regarding the brief summaries of the listed methods, please refer to the categorizations provided earlier in this section.

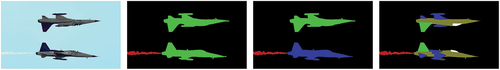

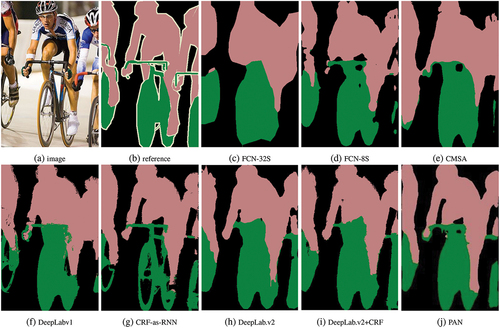

includes 34 methods spanning an eight-year period, starting with early deep learning approaches through to the most recent state-of-the-art techniques. Most of the listed studies have been quite successful and have significantly high rankings in the previously mentioned leaderboards. Whilst there are many other methods, we believe this list to be a clear depiction of the advances in deep learning-based semantic segmentation approaches. In , a sample image from the PASCAL VOC validation set, its semantic segmentation ground truth and results obtained from some of the listed studies are depicted. clearly shows the gradually growing success of different methods starting with the pioneering FCN architectures to more advanced architectures such as DeepLab (Chen et al. 2014a, Chen et al. Citation2018a) or CRF-as-RNN (Zheng et al. Citation2015).

Figure 6. (a) A sample image from the PASCAL VOC validation set, (b) its semantic segmentation ground truth, and results obtained from different studies are depicted: c) FCN-32S (Shelhamer, Long, and Darrell Citation2017), d) FCN-8S (Shelhamer, Long, and Darrell Citation2017), e) CMSA (Eigen and Fergus Citation2014), f) DeepLab-v1 (Chen et al. 2014a), g) CRF-as-RNN (Zheng et al. Citation2015), h) DeepLab-v2 (Chen et al. Citation2018a), i) DeepLab-v2 with CRF refinement (Chen et al. Citation2018a), (j) PAN (Li et al. Citation2018).

Judging by the picture it portrays, the deep evolution of the literature clearly reveals a number of important implications. First, graphical model-based refinement modules are being abandoned due to their slow nature. A good example of this trend would be the evolution of DeepLab from (Chen et al. 2014a) to (Chen et al. Citation2018b) (see ). Notably, no significant study published in 2019 and 2020 employed a CRF-based or similar module to refine their segmentation results. Second, most studies published in the past two years show no significant leap in performance rates. For this reason, researchers have tended to focus on experimental solutions such as object detection-based or Neural Architecture Search (NAS)-based approaches. Some of these very recent group of studies (Zhang et al. Citation2020b; Zoph et al. Citation2020) focus on (NAS)-based techniques, instead of hand-crafted architectures. EfficientNet-NAS (Zoph et al. Citation2020) belongs to this category and is the leading study in PASCAL VOC 2012 semantic segmentation challenge at the time the paper was prepared. We believe that the field will witness an increasing interest in NAS-based methods in the near future. In general, considering all studies of the post-FCN era, the main challenge of the field still remains to be efficiently integrating (i.e. in real-time) global context to localisation information, which still does not appear to have an off-the-shelf solution, although there are some promising techniques, such as YOLACT (Bolya et al. Citation2019).

In , the right-most column represents a categorical level of computational efficiency. We use a four-level categorization (one star to four stars) to indicate the computational efficiency of each listed method. For any assigned level of the computational efficiency of a method, we explain our reasoning in the table with solid arguments. For example, one of the four-star methods in is “YOLACT” by (Bolya et al. Citation2019), which claims to provide real-time performance (i.e. 30fps) on both PASCAL VOC 2012 and COCO image sets.

Future Scope and Potential Research Directions

Although tremendous successes have been achieved so far in the semantic segmentation field, there are still many open challenges in this field due to hard requirements time-consuming pixel-level annotations, lack of generalization ability to new domains and classes, and need for real-time performance with higher segmentation accuracies. In this section, we categorize possible future directions under different titles by providing examples of recent studies that represent that direction.

Weakly-Supervised Semantic Segmentation (WSSS)

Over the last few years, there has been an increasing research effort directed toward the approaches that are alternative to pixel-level annotations such as; unsupervised, semi-supervised (He, Yang, and Qi Citation2021) and weakly-supervised methods. Recent studies show that, WSSS methods usually perform better than the other schemes (Chan, Hosseini, and Plataniotis Citation2021) where annotations are in the form of image-level labels (Kolesnikov and Lampert Citation2016; Pathak, Krahenbuhl, and Darrell Citation2015; Pinheiro and Collobert Citation2015; Wang et al. 2020b; Ahn and Kwak Citation2018; Li et al. 2021b; Chang et al. Citation2020; Xu et al. Citation2021; Yao et al. Citation2021; Jiang et al. Citation2021), video-level labels (Zhong et al. Citation2016b), scribbles (Lin et al. Citation2016a), points (Bearman et al. Citation2016), and bounding boxes (Dai, He, and Sun Citation2015; Khoreva et al. Citation2017; Xu, Schwing, and Urtasun Citation2015). In case of image-level labels, class activation maps (CAMs) (Zhou et al. Citation2016) are used to localize the small discriminative regions, which are not suitable particularly for the large-scale objects, but can be utilized as initial seeds (pseudo-masks) (Araslanov and Roth Citation2020; Fan et al. Citation2020; Kweon et al. Citation2021; Sun et al. Citation2021).

Zero-/Few-Shot Learning

Motivated by humans’ ability to recognize new concepts in a scene by using only a few visual samples, zero-shot and/or few-shot learning methods have been introduced. Few-shot semantic segmentation (FS3) methods (Wang et al. Citation2019a; Xie et al. Citation2021) has been proposed to recognize objects from unseen classes by utilizing few annotated examples; however, these methods are limited to handling a single unseen class only. Zero-shot semantic segmentation (ZS3) methods have been developed recently to generate visual features by exploiting word embedding vectors in the case of zero training samples (Bucher et al. Citation2019; Lu et al. Citation2021; Pastore et al. Citation2021; Xian et al. Citation2019). However, the major drawback of ZS3 methods is their insufficient prediction ability to distinguish between the seen and the unseen classes even if both are included in a scene. This disadvantage is usually overcome by generalized ZS3 (GZS3), which recognizes both seen and unseen classes simultaneously. GZS3 studies mainly rely on generative-based methods. Feature extractor training is realized without considering semantic features in GZS3 adopted with generative approaches so that the bias is introduced toward the seen classes. Therefore, GZS3 methods result in performance reduction on unseen classes (Pastore et al. Citation2021). Much of the recent work on ZS3 has involved such as: exploiting joint embedding space to alleviate the seen bias problem (Baek, Oh, and Ham Citation2021), analyzing different domain performances chan2021comprehensive, and incorporating spatial information (Cheng et al. 2021a).

Domain Adaptation

Recent studies also rely on the use of synthetic large-scale image sets such as GTA5 (Richter et al. Citation2016) and SYNTHIA (Ros et al. Citation2016b) because of their capability to cope with laborious pixel-level annotations. Although these rich-labeled synthetic images have the advantage of reducing the labeling cost, they also bring about domain shift while training with unlabeled real images. Therefore, applying domain adaptation for aligning the synthetic and the real image sets is of much importance (Zhao et al. Citation2019; Kang et al. Citation2020; Wu et al. Citation2021; Wang et al.Citation2021; Shin et al. Citation2021; Fleuret et al. Citation2021). Unsupervised domain adaptation (UDA) methods are widely employed in semantic segmentation (Cheng et al. 2021c; Liu, Zhang, and Wang Citation2021; Hong et al. Citation2018; Vu et al. Citation2019; Pan et al. Citation2020; Wang et al. Citation2021; Saporta et al. Citation2021; Zheng and Yang Citation2021).

Real-Time Processing

Adopting compact and shallow model architectures (Zhao et al. 2018a; Orsic et al. Citation2019; Yu et al. 2018a; Li et al. Citation2019a; Fan et al. Citation2021) and restricting the input to be low-resolution (Marin et al. Citation2019) are brand new innovations proposed very recently to overcome the computational burden of large-scale semantic segmentation. To choose a real-time semantic segmentation strategy, all aspects of an application should be considered, as all of these strategies somehow correlate with decreasing the model’s discriminative ability and losing information of object boundaries or small objects to some extent. Some other strategies have also been proposed for the retrieval of rich contextual information in real-time applications including attention mechanisms (Ding et al. Citation2021; Hu et al. Citation2020), depth-wise separable convolutions (Chollet Citation2017; Howard et al. Citation2019), pyramid fusion (Oršić and Šegvić Citation2021; Rosas-Arias et al. Citation2021), grouped convolutions (Zhang et al. 2018b; Huang et al. Citation2018) neural architecture search (Zoph et al. Citation2018) and, pipeline parallelism (Chew, Ji, and Zhang Citation2022).

Contextual Information

Contextual information aggregation with the purpose of augmenting pixel representations in semantic segmentation architectures is another promising research direction in recent years. In this aspect, mining contextual information (Jin et al. Citation2021), exploring context information on spatial and channel dimensions (Li et al. 2021c), focusing on object-based contextual representations (Yuan, Chen, and Wang Citation2020) and capturing the global contextual information for fine-resolution remote sensing imagery (Li et al. 2021a) are some of the recent studies. Alternative methods of reducing dense pixel-level annotations in semantic segmentation have been described which are based on using pixel-wise contrastive loss (Chaitanya et al. Citation2020; Zhang et al. Citation2021; Zhao et al. Citation2021).

Conclusions

In this survey, we aimed at reviewing the current developments in the literature regarding deep learning-based 2D image semantic segmentation. We commenced with an analysis of the public image sets and leaderboards for 2D semantic segmentation and then continued by providing an overview of the techniques for performance evaluation. Following this introduction, our focus shifted to the 10-year evolution seen in this field under three chronological titles, namely the pre- and early-deep learning era, the fully convolutional era, and the post-FCN era. After a technical analysis on the approaches of each period, we presented a table of methods spanning all three eras, with a brief summary of each technique that explicates their contribution to the field.

In our review, we paid particular attention to the key technical challenges of the 2D semantic segmentation problem, the deep learning-based solutions that were proposed, and how these solutions evolved as they shaped the advancements in the field. To this end, we observed that the fine-grained localisation of pixel labels is clearly the definitive challenge to the overall problem. Although the title may imply a more ‘local’ interest, the research published in this field evidently shows that it is the global context that determines the actual performance of a method. Thus, it is eminently conceivable why the literature is rich with approaches that attempt to bridge local information with a more global context, such as graphical models, context aggregating networks, recurrent approaches, and attention-based modules. It is also clear that efforts to fulfill this local-global semantics gap at the pixel level will continue for the foreseeable future.

Another important revelation from this review has been the profound effect seen from public challenges to the field. Academic and industrial groups alike are in a constant struggle to top these public leaderboards, which has an obvious effect of accelerating development in this field. Therefore, it would be prudent to promote or even contribute to creating similar public image sets and challenges affiliated to more specific subjects of the semantic segmentation problem, such as 2D medical images.

Considering the rapid and continuing development seen in this field, there is an irrefutable need for an update on the surveys regarding the semantic segmentation problem. However, we believe that the current survey may be considered as a milestone in measuring how much the field has progressed thus far, and where the future directions possibly lie.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes

1. We consider MRI and CT essentially as 3D volume data. Although individual MRI/CT slices are 2D, when doing semantic segmentation on these types of data, neighbourhood information in all three dimensions are utilised. For this reason, medical applications are excluded from this survey.

6. FCN (Shelhamer, Long, and Darrell Citation2017) was officially published in 2017. However, the same group first shared the idea online as pre-printed literature in 2014 (Long, Shelhamer, and Darrell Citation2014).

7. Many methods utilise fully connected layers such as RCNN (Girshick Citation2015), which are discussed in the following sections. However, this and other similar methods that include fully connected layers have mostly been succeeded by fully convolutional versions for the sake of computational efficiency.

References

- Ahmad, T., P. Campr, M. Cadik, and G. Bebis (2017, May). Comparison of semantic segmentation approaches for horizon/sky line detection. 2017 International Joint Conference on Neural Networks (IJCNN) Anchorage, AK, USA.

- Ahn, J., and S. Kwak (2018). Learning pixel-level semantic affinity with image-level supervision for weakly supervised semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Salt Lake City, UT, USA, 4981–2157.

- Araslanov, N., and S. Roth (2020). Single-stage semantic segmentation from image labels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Seattle, WA, USA, 4253–62.

- Arbeláez, P., B. Hariharan, C. Gu, S. Gupta, L. Bourdev, and J. Malik (2012). Semantic segmentation using regions and parts. In Computer Vision and Pattern Recognition (CVPR), 2012 IEEE Conference on Providence, RI, USA, 3378–85. IEEE.

- Arun, A., C. Jawahar, and M. P. Kumar (2020). Weakly supervised instance segmentation by learning annotation consistent instances. In European Conference on Computer Vision Glasgow, UK (Virtual/Online), 254–70. Springer.

- Badrinarayanan, V., A. Kendall, and R. Cipolla (2015). Segnet: A deep convolutional encoder-decoder architecture for image segmentation. CoRR abs/1511.00561.

- Baek, D., Y. Oh, and B. Ham (2021). Exploiting a joint embedding space for generalized zero-shot semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision Montreal, Canada, 9536–45.

- Bearman, A., O. Russakovsky, V. Ferrari, and L. Fei-Fei (2016). What’s the point: Semantic segmentation with point supervision. In European Conference on Computer Vision Amsterdam, the Netherlands, 549–65. Springer.

- Bolya, D., C. Zhou, F. Xiao, and Y. J. Lee (2019). YOLACT: Real-time instance segmentation. CoRR abs/1904.02689.

- Brostow, G. J., J. Fauqueur, and R. Cipolla. 2009. Semantic object classes in video: A high-definition ground truth database. Pattern Recognition Letters 30:88–97. doi:10.1016/j.patrec.2008.04.005.

- Bucher, M., T.-H. Vu, M. Cord, and P. Pérez. (2019). Zero-shot semantic segmentation Conference on Neural Information Processing Systems, Vancouver, Canada vol. 32 (Curran Associates, Inc.), 468–479.

- Chaitanya, K., E. Erdil, N. Karani, and E. Konukoglu (2020). Contrastive learning of global and local features for medical image segmentation with limited annotations. arXiv:2006.10511.

- Chan, L., M. S. Hosseini, and K. N. Plataniotis. 2021. A comprehensive analysis of weakly-supervised semantic segmentation in different image domains. International Journal of Computer Vision 129 (2):361–84. doi:10.1007/s11263-020-01373-4.

- Chang, Y.-T., Q. Wang, W.-C. Hung, R. Piramuthu, Y.-H. Tsai, and M.-H. Yang (2020). Weakly-supervised semantic segmentation via sub-category exploration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Seattle, WA, USA, 8991–9000.

- Chen, X., R. Mottaghi, X. Liu, S. Fidler, R. Urtasun, and A. Yuille (2014b). Detect what you can: Detecting and representing objects using holistic models and body parts. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Columbus, OH, USA.

- Chen, L., G. Papandreou, I. Kokkinos, K. Murphy, and A. L. Yuille (2014a). Semantic image segmentation with deep convolutional nets and fully connected crfs. CoRR abs/1412.7062.

- Chen, L., G. Papandreou, I. Kokkinos, K. Murphy, and A. L. Yuille. 2018a. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Transactions on Pattern Analysis and Machine Intelligence 40(4):834–48. April. doi: 10.1109/TPAMI.2017.2699184.

- Chen, L., G. Papandreou, F. Schroff, and H. Adam. 2017. . In Rethinking Atrous Convolution for Semantic Image Segmentation. http://arxiv.org/abs/1706.05587

- Chen, H., K. Sun, Z. Tian, C. Shen, Y. Huang, and Y. Yan (2020). Blendmask: Top-down meets bottom-up for instance segmentation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition Seattle, WA, USA, 8573–81.

- Chen, L., Y. Zhu, G. Papandreou, F. Schroff, and H. Adam (2018b). Encoder-decoder with atrous separable convolution for semantic image segmentation. CoRR abs/1802.02611.

- Cheng, J., S. Nandi, P. Natarajan, and W. Abd-Almageed (2021a). Sign: Spatial-information incorporated generative network for generalized zero-shot semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision Montreal, Canada, 9556–66.

- Cheng, B., A. Schwing, and A. Kirillov. 2021b. Per-pixel classification is not all you need for semantic segmentation. In Advances in Neural Information Processing Systems, 34.

- Cheng, Y., F. Wei, J. Bao, D. Chen, F. Wen, and W. Zhang (2021c). Dual path learning for domain adaptation of semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 9082–91.

- Chew, A. W. Z., A. Ji, and L. Zhang. 2022. Large-scale 3d point-cloud semantic segmentation of urban and rural scenes using data volume decomposition coupled with pipeline parallelism. Automation in Construction 133:103995. doi:10.1016/j.autcon.2021.103995.

- Chollet, F. (2017). Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE conference on computer vision and pattern recognition Honolulu, HI, USA, 1251–58.

- Ciresan, D., A. Giusti, L. M. Gambardella, and J. Schmidhuber. 2012. Deep neural networks segment neuronal membranes in electron microscopy images. In Advances in neural information processing systems, F. Pereira, C. J. C. Burges, L. Bottou, and K. Q. Weinberger. ed., vol. 25. 2843–51. Curran Associates, Inc.