ABSTRACT

Dental images provide significant cues that are useful in disease diagnosis, treatment, and forensic examination. Many dental age and gender detection procedures have limitations, such as low accuracy and reliability. Gender identification techniques are not well studied, and their classification effectiveness and accuracy remain low. The proposed approach addresses the shortcomings of current systems. Deep learning techniques can resolve problems encountered by other classifiers. Human gender and age identification is a crucial process in forensics, anthropology, and bioarchaeology. Image pre-processing and feature extraction are performed by deep learning algorithms. Classification performance is improved by minimizing the training loss with a spiking neuron-based convolutional neural network (SN-CNN). The performance of SN-CNN is evaluated by comparing its performance metrics with those of existing state-of-the-art techniques. The SN-CNN-based classifier achieved a gender classification accuracy of 99.6%, outperforming existing techniques.

Introduction

Estimated (biological) age is distinct from chronological age, and age estimation is a major component in establishing an individual's biological identity. In most cases, a living person's chronological age corresponds to his or her calendar age. Biological or physiological age is associated with the maturation of organs and other tissues. Dental age is one of the maturity indicators in humans and is commonly used in pedodontics, orthodontics, orthopedics, and pediatric surgery. In forensic science and physical anthropology, this indicator is also used for identification Galibourg et al. (2021), Braga et al. (2005). Recent population movements have increased the need for accurate age estimation, as reported in several research studies.

Estimating chronological age is a crucial task in a variety of therapeutic processes, and teeth have been found to be useful indicators. However, the various methodologies used to calculate age from orthopantomogram (OPG) images are time-consuming, and their output is heavily influenced by the observer's subjectivity. Vila-Blanco et al. (2020) evaluated age identification procedures using OPG images of the highest radiological reliability, without relying on any dental condition features in the images. Various types of dental images are used in age identification, and dental X-rays are processed to extract important information. Panoramic, periapical, bitewing, and other types of X-ray imaging are used in dentistry.

X-ray images reveal hidden patterns in the dentition, which computer-based methods use to detect and categorize age, disease, and other relevant factors. Computational intelligence techniques such as fuzzy systems, machine learning, and optimization algorithms are used in dental age classification and gender identification; these are broad strategies for producing a required prediction from a collection of input data Tuan et al. (2018). Computer algorithms determine the age of an unknown person by comparing his or her dental, physical, and skeletal maturity Wolfart, Menzel, and Kern (2004). Dental examinations are common in dental treatment and forensics, but their evaluation faces challenges: uneven exposure and poor contrast reduce the clarity of dental images. These problems carry over into segmentation, where they lead to false delineation of tooth contours Pandey et al. (2017).

Methods for tooth segmentation and dental radiograph analysis have emerged in the last few years. One semi-automatic shape extraction system combines Bayes' rule with other projection methods to separate the teeth. Many publications have presented methods for image enhancement, region-of-interest extraction, and feature extraction Avuçlu et al. (2018) that employ the snake (active contour) technique Keshtkar et al. (2006) and morphological operations. Most of these computational techniques are fully automated and rely on adaptive and iterative thresholding Lai et al. (2008), Lin, Lai, and Huang (2010).

A variety of age and gender classification approaches have been established for the critical assessment of age and gender, and computer technology plays an important role in this determination. Various age and gender classification procedures are discussed in the next section. However, existing machine learning and optimization approaches have drawbacks such as poor performance and long computation times. To overcome these issues, an effective gender and age classification system is developed using deep learning. The SN-CNN minimizes the loss during training.

The remainder of the article is organized as follows: Section 2 surveys human age and gender classification techniques, Section 3 details the proposed deep learning-based classification methodology, Section 4 presents the results obtained with the deep learning approach, and Section 5 concludes the article with suggestions for future work.

Literature Review

In forensic odontology, determining and estimating an individual's age plays an important role. Dental images are reviewed for a variety of reasons, including diagnosis and therapy. Most researchers have focused on disease diagnosis, and these methods were later used to determine age. Classifiers such as Decision Tree (DT), Support Vector Machine (SVM), and K-Nearest Neighbor (KNN) use features extracted by ResNet and AlexNet-based neural networks to classify dental age Houssein, Mualla, and Hassan (2020). Some studies take biological factors such as bone, dental structures, face, and skeleton into account when analyzing dental images Alkaabi, Yussof, and Al-Mulla (2019).

For image segmentation, the Active Contour Model (ACM) with Jaya Optimization (JO) has been used. Successful segmentation simplifies and optimizes the classification process, since the segmented image allows quick access to and retrieval of the required elements. With the recovered features, the Modified Extreme Learning Machine with Sparse Representation Classification (MELM-SRC) is used to determine dental age Hemalatha et al. (2021). A mathematical technique and the Demirjian score, both applied to panoramic radiographs, have been used to estimate an individual's dental age Wolf et al. (2016). A varying number of orthopantomograms has been examined for gender identification Guo et al. (2020).

Image classification requires good pre-processing and segmentation, and the Gray Level Co-occurrence Matrix (GLCM) has been used in this step. The required characteristics are obtained and recorded in a matrix, which is then classified using the Random Forest (RF) method. However, the matrix representation is complicated, which makes retrieval inefficient and time-consuming Akkoç, Arslan, and Kök (2017). In another approach, the Discrete Cosine Transform (DCT) is used to select features, and the resulting relevant features are classified using RF.

The DCT-based RF classification approach uses three-dimensional dental plaster models and a small number of samples. When the amount of data is large, the DCT requires quantization, which complicates classification Esmaeilyfard, Paknahad, and Dokohaki (2021). Gender classification has also been performed on cone-beam computed tomography (CBCT) images with a hybrid Genetic Algorithm (GA) and Naive Bayes (NB) technique, in which the GA retrieves the necessary characteristics and NB classifies them. However, the GA has weak exploitation ability, takes a long time to converge, and is computationally costly Acharya et al. (2008).

As the literature above shows, the classical, machine learning, and optimization approaches used in dental age and gender identification are time-consuming, their classification is affected by inconsistency, and some algorithms achieve only low accuracy. Optimization and bio-inspired techniques also face computational difficulties, which the proposed approach takes into account. Given these limitations and drawbacks, a deep learning-based solution for gender and age identification is formulated.

Proposed Spiking Neuron CNN Classifier for Human Age and Gender Identification Using Dental Image

This section discusses the deep learning-based age and gender prediction system, in which automatic identification of age and gender passes through pre-processing, image segmentation, feature selection, and classification. The overall block diagram for human age and gender classification is depicted in the corresponding figure.

Image Acquisition

The identification of human age and gender begins with image acquisition, that is, collecting dental images from appropriate sources (see the corresponding figure). The acquired dental images are then passed to the pre-processing phase.

Pre-processing – ADF with Speckle Noise Model

The Anisotropic Diffusion Filter (ADF) is used in the pre-processing step to improve smoothing accuracy and reduce noise. ADF is a selective diffusion approach based on a probabilistic characterization of the dental image: it smooths homogeneous regions while limiting diffusion across edges, so that unwanted noise is removed from non-relevant areas while important structures remain visible. The primary goal of the ADF filter is therefore to reduce noise while preserving edge information. The speckle noise model serves as an image enhancement strategy that prevents the image degradation ADF can otherwise cause Dogra, Ahuja, and Kumar (2021).
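The edge-preserving behavior described above can be illustrated with the classic Perona-Malik anisotropic diffusion scheme. This is a minimal sketch, not the authors' ADF-with-speckle-model filter: the function names, the exponential edge-stopping function, and the parameters `kappa` (edge threshold), `lam` (step size), and `n_iter` are assumptions chosen for illustration, and the image is a plain 2D list of grey levels.

```python
import math


def edge_stop(d, kappa):
    """Perona-Malik edge-stopping function: near 1 for small d, near 0 for large d."""
    return math.exp(-((d / kappa) ** 2))


def perona_malik(img, n_iter=10, kappa=20.0, lam=0.2):
    """Explicit Perona-Malik anisotropic diffusion on a 2D list of floats.

    Assumed parameters: kappa is the edge threshold (differences much larger
    than kappa barely diffuse) and lam is the step size, kept <= 0.25 so the
    4-neighbour explicit scheme stays stable.
    """
    h, w = len(img), len(img[0])
    u = [row[:] for row in img]
    for _ in range(n_iter):
        new = [row[:] for row in u]
        for i in range(h):
            for j in range(w):
                c = u[i][j]
                # Differences to the 4 neighbours; 0 at the border (zero flux).
                diffs = [
                    u[i - 1][j] - c if i > 0 else 0.0,
                    u[i + 1][j] - c if i < h - 1 else 0.0,
                    u[i][j - 1] - c if j > 0 else 0.0,
                    u[i][j + 1] - c if j < w - 1 else 0.0,
                ]
                # Each flux is damped by the edge-stopping function, so flat
                # regions are smoothed while strong edges are preserved.
                new[i][j] = c + lam * sum(edge_stop(d, kappa) * d for d in diffs)
        u = new
    return u


if __name__ == "__main__":
    # Step edge (0 | 100) with one noisy pixel in the flat left half.
    img = [[0.0] * 3 + [100.0] * 3 for _ in range(5)]
    img[2][1] = 10.0
    out = perona_malik(img)
    print(round(out[2][1], 2), round(out[2][3], 2))
```

In the example, the noisy pixel is pulled toward its flat neighborhood, while pixels on the bright side of the edge stay at about 100 because the large cross-edge difference drives the edge-stopping factor to nearly zero.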

Segmentation – Deep CNN

CNN techniques have demonstrated promising results in segmenting sharp edges and curves, and they have been adapted for fast and precise segmentation of all required regions. For thorough segmentation of the dental image, a Deep CNN is proposed that combines an improved convolutional encoder-decoder (CED) network, a 3D fully connected conditional random field (CRF), and 3D simplex deformable modeling Maier et al. (2019).

The deep CED network is the fundamental component of the automatic segmentation process. It adapts a network topology to separate the required regions of the dental image and consists of a coupled encoder network and decoder network. The encoder network compresses the input image data while detecting its features. The popular VGG16 network is used as the encoder because it has proven to be an effective feature extraction network in CED-based medical imaging applications. Each unit layer of the VGG16 encoder consists of a convolutional layer with a configurable set of 2D filters, batch normalization (BN), a rectified linear unit (ReLU) activation, and max-pooling to reduce the data dimensions. To achieve appropriate data compression, this unit layer is repeated five times in the encoder network.
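To make the five-fold compression concrete, the sketch below tracks the feature-map size through five encoder units. It assumes the standard VGG16 filter counts (64, 128, 256, 512, 512), "same"-padded 3 × 3 convolutions that keep the spatial size, and 2 × 2 max-pooling with stride 2 that halves it (rounding down); it also treats the 275 × 158 image size from the results section as height × width. These are illustrative assumptions, not configuration details reported for this model.

```python
# Filters per encoder unit in the standard VGG16 layout (assumed here).
VGG16_FILTERS = (64, 128, 256, 512, 512)


def encoder_shapes(height, width, filters=VGG16_FILTERS):
    """Return (height, width, channels) after each conv-BN-ReLU-maxpool unit."""
    shapes = []
    for channels in filters:
        # 'Same'-padded convolutions, batch normalization and ReLU keep H x W;
        # 2x2 max-pooling with stride 2 halves each dimension (floor).
        height, width = height // 2, width // 2
        shapes.append((height, width, channels))
    return shapes


if __name__ == "__main__":
    for unit, shape in enumerate(encoder_shapes(275, 158), start=1):
        print(f"unit {unit}: {shape}")
```

Under these assumptions the fifth unit outputs an 8 × 4 × 512 feature map, which the decoder then upsamples back to the input resolution.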

In the fully connected 3D CRF process, maximum a posteriori (MAP) inference is defined over the 3D dental image volume. The unary potential of every voxel is generated from the probability outputs of the CED network, and the pairwise potentials on all pairs of voxels are calculated from the original image volume. The CRF is optimized iteratively by minimizing the Gibbs energy, defined as

$$E(\mathbf{k}) = \sum_{x} \psi_u(k_x) + \sum_{x<y} \psi_p(k_x, k_y)$$

where $k_x$ and $k_y$ are the labels assigned to voxels $x$ and $y$, and $x$ and $y$ range from 1 to the total number of voxels. The unary potential is the negative logarithm of the label probability from the softmax output, $\psi_u(k_x) = -\log P(k_x)$, and the pairwise potential between $k_x$ and $k_y$ is

$$\psi_p(k_x, k_y) = \mu(k_x, k_y)\left[\omega_1 \exp\left(-\frac{\lvert p_x - p_y\rvert^2}{2\theta_\alpha^2} - \frac{\lvert I_x - I_y\rvert^2}{2\theta_\beta^2}\right) + \omega_2 \exp\left(-\frac{\lvert p_x - p_y\rvert^2}{2\theta_\gamma^2}\right)\right]$$

where $p_x$ and $p_y$ are the voxel locations and $I_x$ and $I_y$ are the corresponding image intensities. The first exponential term, the appearance kernel, encourages voxels that are close to each other and have similar intensities to share the same label; its spatial and intensity extents are controlled by $\theta_\alpha$ and $\theta_\beta$. The second exponential term, the smoothness kernel, removes small isolated patches, and its influence on the pairwise potential is controlled by $\theta_\gamma$. $\omega_1$ and $\omega_2$ are the weights of the appearance kernel and the smoothness kernel, respectively. The Potts model is used as the compatibility function:

$$\mu(k_x, k_y) = \begin{cases} 1, & k_x \neq k_y \\ 0, & k_x = k_y \end{cases}$$

The Deep CNN technique developed in this paper makes sophisticated inference with a large number of pairwise potentials practicable in a dental image volume. As a consequence, CRF inference is linear in the number of variables and sublinear in the number of edges in the model, which increases computational efficiency throughout the dental image refinement process.
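The Gibbs energy of the fully connected CRF can be evaluated directly for a handful of voxels, which shows how the unary term, the appearance and smoothness kernels, and the Potts compatibility interact. This brute-force sketch loops over all voxel pairs, so it is quadratic in the number of voxels; it is not the efficient inference used in the paper, and the function names and the default values of ω1, ω2, θα, θβ and θγ are assumptions for illustration.

```python
import math


def pairwise_potential(kx, ky, px, py, ix, iy,
                       w1=1.0, w2=1.0, theta_a=3.0, theta_b=10.0, theta_g=1.0):
    """Dense-CRF pairwise term with a Potts compatibility function."""
    if kx == ky:
        return 0.0  # Potts model: mu(kx, ky) = 0 when the labels agree
    d2 = sum((a - b) ** 2 for a, b in zip(px, py))  # squared spatial distance
    appearance = w1 * math.exp(-d2 / (2 * theta_a ** 2)
                               - (ix - iy) ** 2 / (2 * theta_b ** 2))
    smoothness = w2 * math.exp(-d2 / (2 * theta_g ** 2))
    return appearance + smoothness


def gibbs_energy(labels, probs, positions, intensities, **params):
    """E(k) = sum_x -log P(k_x) + sum_{x<y} psi_p(k_x, k_y)."""
    n = len(labels)
    unary = sum(-math.log(probs[x][labels[x]]) for x in range(n))
    pairwise = sum(
        pairwise_potential(labels[x], labels[y], positions[x], positions[y],
                           intensities[x], intensities[y], **params)
        for x in range(n) for y in range(x + 1, n)
    )
    return unary + pairwise


if __name__ == "__main__":
    # Three voxels in a row: two dark, one bright (hypothetical values).
    positions = [(0, 0, 0), (1, 0, 0), (2, 0, 0)]
    intensities = [10.0, 12.0, 200.0]
    probs = [[0.9, 0.1], [0.6, 0.4], [0.2, 0.8]]  # softmax outputs per voxel
    for labels in ([0, 0, 1], [0, 1, 1]):
        print(labels, round(gibbs_energy(labels, probs, positions, intensities), 3))
```

With these hypothetical inputs, labeling the two similar dark voxels alike gives the lower energy, because splitting them pays a large appearance-kernel penalty. Practical fully connected CRF implementations avoid the quadratic pair loop with approximate high-dimensional filtering, which is what keeps inference roughly linear in the number of variables.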

Neuro Multi-Kernel with SVM Based Spiking Neuron CNN

The primary goal of the SVM is to maximize the margin between classes of pixels, that is, the distance between the separating hyperplane and the nearest samples. The proposed feature selection may need a different definition of similarity (an alternative kernel). Kernel functions are introduced into SVM classification to handle non-linear problems. The classification procedure in this work is based on the linear kernel and the radial basis function (RBF) kernel Krishnakumar et al. (2021). These kernels are parameterized by the constant c and the kernel variable δ, together with the support vectors.

Linear Kernel: The linear kernel assumes that the two classes are linearly separable, which means that at least one hyperplane, described by a weight vector and a bias, can be found that separates the pixels with zero error. It is the most fundamental kernel, the simplest, and the fastest to execute.

RBF Kernel: The radial basis function kernel, often known as the Gaussian kernel, is also used in the SVM. Because the external notations are random with respect to the test vector, feature selection for a test vector can explicitly use the outcomes of unconnected external notations.

Multiple kernel learning is regarded as an effective way to design a superior feature selection mechanism. It amounts to forming a convex combination of more than one kernel. The advantage of the multi-kernel functions (4) and (5) is that the inner product can be defined as the principal operation in the new virtual space. The feature vectors generated by the neuro multi-kernel SVM are passed to the SNCNN-based classification process.
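A convex combination of the linear and RBF kernels can be written in a few lines. In this minimal sketch the linear kernel is taken as $x^\top y + c$ and the RBF kernel as $\exp(-\lVert x - y\rVert^2 / 2\delta^2)$, which are common textbook forms assumed here because the original kernel equations are not reproduced; the mixing weight `beta` and the helper names are hypothetical.

```python
import math


def linear_kernel(x, y, c=0.0):
    """Linear kernel x . y + c (c >= 0 keeps the kernel positive semi-definite)."""
    return sum(a * b for a, b in zip(x, y)) + c


def rbf_kernel(x, y, delta=1.0):
    """Gaussian (RBF) kernel with width delta."""
    d2 = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-d2 / (2 * delta ** 2))


def multi_kernel(x, y, beta=0.5, c=0.0, delta=1.0):
    """Convex combination beta * linear + (1 - beta) * RBF."""
    if not 0.0 <= beta <= 1.0:
        raise ValueError("beta must lie in [0, 1] for a convex combination")
    return beta * linear_kernel(x, y, c) + (1.0 - beta) * rbf_kernel(x, y, delta)


def gram_matrix(samples, **params):
    """Kernel (Gram) matrix that an SVM solver would consume."""
    return [[multi_kernel(a, b, **params) for b in samples] for a in samples]


if __name__ == "__main__":
    feats = [[1.0, 2.0], [2.0, 0.5], [0.0, 1.0]]  # hypothetical feature vectors
    for row in gram_matrix(feats, beta=0.3):
        print([round(v, 3) for v in row])
```

Because a non-negative combination of valid kernels is itself a valid kernel, the combined Gram matrix can be passed to a standard SVM solver in place of a single-kernel matrix.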

The construction of the SNCNN architecture for image classification involves two phases. The first phase constructs a CNN-based training network using the principles of deep learning and optimizes its weights. The network is built from convolutional connections and consists of the following layers:

One or two general convolution layers: The input to a convolution layer is an image, and the output is an activation map. The convolution layer applies filters to extract high-level features from the raw pixel data, which the model then uses for classification.

One max pooling layer: Pooling layers are added to capture the semantic information of the image.

Dropout layer: A dropout layer with a regularization rate of 0.4 and a dense layer with 10 neurons, one for each target class, follow the pooling layer. The convolution and pooling layers perform feature extraction, whereas the dropout and dense layers perform classification.

Output layer: Unlike the other layers, the output layer in the CNN is a fully connected layer. The inputs from the preceding layers are flattened and passed on so that the output is transformed into the number of classes required by the network. To compute the loss and its gradient, the output layer uses softmax regression. To reduce the loss, backpropagation updates the weights and biases; one training cycle consists of a single forward and backward pass. In softmax regression, the evidence that the input belongs to a particular class is computed as a weighted sum of the pixel intensities, and this evidence is then converted into probabilities. A weight is positive if the pixel is evidence in favor of the class and negative otherwise. As a result, the softmax values for a particular image can be used as a relative indicator of the image's likelihood of belonging to each target class.

The evidence for a class $p$ given an input $I$ is:

$$\text{evidence}_p = \sum_{q} w_{p,q}\, I_q + b_p$$

where $w_p$ denotes the weights and $b_p$ the bias for class $p$, and $q$ indexes the pixels of the input image $I$.

The evidence is then converted into the predicted probability $J$ using the softmax function:

$$J = \operatorname{softmax}(\text{evidence})$$

where the softmax function for a given class $p$ is

$$\operatorname{softmax}(\text{evidence})_p = \frac{\exp(\text{evidence}_p)}{\sum_{p'} \exp(\text{evidence}_{p'})}$$

The softmax function exponentiates its inputs and normalizes the result. The softmax loss is the negative log probability of the correct output after passing through the softmax function. Because the softmax output is normalized by the sum of all exponentiated outputs, its log equals the linear classifier output minus the log of that sum. Unlike the weighted square loss, softmax loss remains stable as the number of neurons increases. Softmax loss outperforms hinge loss for large numbers of neurons, whereas hinge loss outperforms softmax loss for small numbers of neurons. The overall flowchart of the proposed model is given in the corresponding figure.

Softmax loss does not benefit from regularization when moving from rate-based neurons to spiking neurons. In terms of CPU computation time, both the softmax and hinge functions scale linearly with the number of neurons, but hinge loss consistently takes longer than softmax. Because softmax is more stable than the weighted loss and hinge loss functions in a robust environment, performs well when moving from rate-based to spike-based neurons, and is fast to compute on low-cost hardware, it is chosen as the error function for the SN-CNN model. The SN-CNN is illustrated in the corresponding figure.
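The evidence, softmax, and softmax-loss computations described in this section, together with the hinge loss they are compared against, can be sketched in plain Python. This is an illustrative sketch rather than the SN-CNN implementation: the function names are hypothetical, the hinge loss shown is the common multiclass form with margin 1 summed over the incorrect classes, and the max-subtraction in `softmax` is a standard numerical-stability step that leaves the result unchanged.

```python
import math


def evidence(weights, biases, pixels):
    """evidence_p = sum_q w[p][q] * I[q] + b[p] for every class p."""
    return [sum(w * x for w, x in zip(w_p, pixels)) + b_p
            for w_p, b_p in zip(weights, biases)]


def softmax(z):
    """Exponentiate and normalize; subtracting max(z) avoids overflow."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_loss(z, target):
    """Negative log-probability of the correct class (log-sum-exp form)."""
    m = max(z)
    log_sum = m + math.log(sum(math.exp(v - m) for v in z))
    return log_sum - z[target]


def hinge_loss(z, target, margin=1.0):
    """Multiclass hinge loss: penalize wrong classes scoring within `margin`."""
    return sum(max(0.0, v - z[target] + margin)
               for p, v in enumerate(z) if p != target)


if __name__ == "__main__":
    weights = [[0.2, -0.1, 0.4], [-0.3, 0.5, 0.1]]  # hypothetical: 2 classes x 3 pixels
    biases = [0.0, 0.1]
    pixels = [0.9, 0.2, 0.5]
    z = evidence(weights, biases, pixels)
    print(softmax(z), softmax_loss(z, 0), hinge_loss(z, 0))
```

The log of the softmax output for class p equals its evidence minus the log-sum-exp term, which is exactly how `softmax_loss` is computed; the hinge loss, by contrast, is zero once the correct class leads every other class by the margin.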

Optimizing the Weights with ADAM Optimizer

The final step in the training process is defining the optimizer. The Adaptive Moment Estimation (ADAM) optimizer is chosen because it combines the advantages of the Adaptive Gradient Algorithm (AdaGrad), which works well with sparse gradients, and Root Mean Square Propagation (RMSProp), which typically performs best in online and non-stationary settings.

Adam is a first-order gradient-based algorithm for optimizing stochastic objective functions. It is based on adaptive estimates of lower-order moments, requires little memory, and is straightforward to implement. Being computationally efficient, ADAM is well suited to problems involving large amounts of data and many parameters, and its hyperparameters have intuitive interpretations that typically require little tuning. It computes exponential moving averages of the gradient and the squared gradient, which speeds up convergence. These factors led to the choice of ADAM as the optimizer for the SN-CNN architecture. The procedure of SNCNN is given in Algorithm 1.
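The moment estimates described above correspond to the standard Adam update rule. The sketch below applies it to a toy quadratic rather than to SN-CNN weights; `adam_minimize`, the `grad` callback, and the toy objective are hypothetical, `beta1 = 0.9`, `beta2 = 0.999` and `eps = 1e-8` are the commonly used Adam defaults, and the learning rate of 0.1 is chosen only for this toy problem.

```python
import math


def adam_minimize(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimize a function with Adam, given a callable that returns its gradient."""
    x = list(x0)
    m = [0.0] * len(x)  # first moment: moving average of gradients
    v = [0.0] * len(x)  # second moment: moving average of squared gradients
    for t in range(1, steps + 1):
        g = grad(x)
        for i in range(len(x)):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] ** 2
            # Bias correction compensates for the zero initialization of m and v.
            m_hat = m[i] / (1 - beta1 ** t)
            v_hat = v[i] / (1 - beta2 ** t)
            x[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


if __name__ == "__main__":
    # Toy objective f(x, y) = (x - 3)^2 + (y + 1)^2 with minimum at (3, -1).
    def toy_grad(p):
        return [2 * (p[0] - 3), 2 * (p[1] + 1)]

    print(adam_minimize(toy_grad, [0.0, 0.0]))
```

After bias correction, the very first step moves each coordinate by almost exactly the learning rate, regardless of the gradient's magnitude; this per-parameter scaling is what makes Adam relatively insensitive to gradient scale.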


Results and Discussion

In this section, the performance of SNCNN-based classification of a person's dental age and gender is studied and compared with existing classification approaches: Random Forest (RF), Naive Bayes (NB), Convolutional Neural Network (CNN), Deep CNN, and Support Vector Machine (SVM). The database contains OPG images that are used for training and testing. The simulations are performed in MATLAB, and the training images are 275 × 158 pixels. SNCNN uses 60% of the images for training, and the remaining 40% are used for testing.
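A reproducible 60/40 split of the kind described above can be sketched as follows. The function name, the fixed seed, the image identifiers, and the use of a simple random shuffle (rather than, say, stratifying by gender or age group) are assumptions; the source does not state how its split was drawn.

```python
import random


def train_test_split(items, train_frac=0.6, seed=0):
    """Shuffle a copy of `items` reproducibly and split it into train/test lists."""
    if not 0.0 < train_frac < 1.0:
        raise ValueError("train_frac must be between 0 and 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # local RNG: global state untouched
    cut = round(train_frac * len(shuffled))
    return shuffled[:cut], shuffled[cut:]


if __name__ == "__main__":
    # Hypothetical identifiers for a set of 100 OPG images.
    images = [f"opg_{i:03d}" for i in range(100)]
    train, test = train_test_split(images)
    print(len(train), len(test))
```

Using a dedicated `random.Random(seed)` instance keeps the split reproducible without altering the module-level random state used elsewhere.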

Dataset Description

The orthopantomogram (OPG) dataset was obtained from Coimbatore’s Kovai Scan Center. The gender and dental age of a hundred healthy Indian juveniles and children aged 4 to 18 are investigated using OPG images, which are then matched to the individual’s chronological data.

Performance Metrics

The performance of the proposed SN-CNN is compared with the existing approaches, namely Random Forest (RF), Naïve Bayes (NB), Convolutional Neural Network (CNN), Deep CNN (DCNN), and Support Vector Machine (SVM). The performance metrics used to examine these approaches are accuracy, sensitivity, and specificity Monga, Li, and Eldar (2021), Foody (2008), Jeyaraj et al. (2019), Connor et al. (2016).

Accuracy

The classification accuracy for the dental images is the number of correct age and gender identifications divided by the total number of cases. Accuracy reflects the proficiency of the classification model and is measured from the true positive (TP) and true negative (TN) counts derived from the age and gender classes, together with the false positive (FP) and false negative (FN) counts. The classification algorithm with the highest accuracy is considered the most effective. Accuracy is estimated as:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

Sensitivity

Sensitivity, also called the TP rate, is the proportion of actual positive instances that are correctly classified. It measures how well the test identifies positive results; higher sensitivity typically comes at the cost of lower specificity, and vice versa. Sensitivity is assessed as:

$$\text{Sensitivity} = \frac{TP}{TP + FN}$$

Specificity

Specificity, also called the TN rate, is the proportion of actual negative instances that are correctly classified as negative. Together with sensitivity, it helps characterize accuracy over the whole classification population, and it is estimated as:

$$\text{Specificity} = \frac{TN}{TN + FP}$$
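The three formulas can be computed directly from confusion-matrix counts. The counts in the example are hypothetical and are not taken from the paper's tables; the helper name `binary_metrics` is likewise illustrative.

```python
def binary_metrics(tp, tn, fp, fn):
    """Accuracy, sensitivity (TP rate) and specificity (TN rate) from counts."""
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),  # share of actual positives found
        "specificity": tn / (tn + fp),  # share of actual negatives found
    }


if __name__ == "__main__":
    print(binary_metrics(tp=45, tn=40, fp=10, fn=5))
```

For these counts, accuracy is 85/100 = 0.85, sensitivity is 45/50 = 0.9, and specificity is 40/50 = 0.8.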

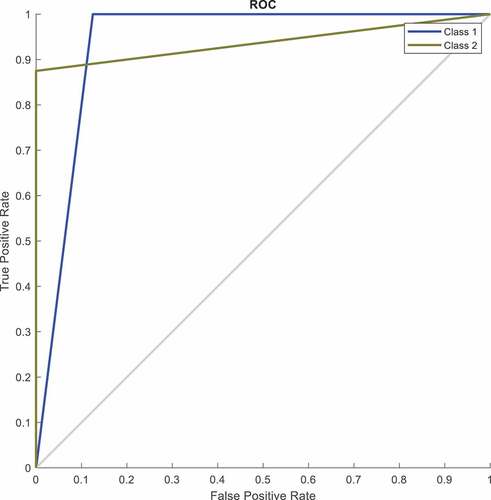

As the TP rate increases, the TN rate decreases, resulting in a trade-off across classification thresholds. The Receiver Operating Characteristic (ROC) curve Narkhede (2018) depicts this trade-off.
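The threshold sweep behind an ROC curve, and the area under it, can be sketched as follows. This is a generic illustration with hypothetical scores and labels; the helper names are assumptions, and tied scores are handled by moving to the next threshold only after all samples with the same score have been counted.

```python
def roc_points(scores, labels):
    """(false positive rate, true positive rate) pairs for a descending threshold sweep.

    labels are 1 for the positive class and 0 for the negative class.
    """
    pos = sum(labels)
    neg = len(labels) - pos
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    tp = fp = 0
    points = [(0.0, 0.0)]
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Count every sample whose score equals the current threshold.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1]:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


if __name__ == "__main__":
    scores = [0.9, 0.8, 0.3, 0.1]  # hypothetical classifier scores
    labels = [1, 0, 1, 0]
    pts = roc_points(scores, labels)
    print(pts, auc(pts))
```

In the example, one negative outranks one positive, so the area is 0.75 rather than 1.0. Each point on the curve trades a higher TP rate for a lower TN rate, which equals 1 minus the false positive rate.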

Performance Evaluation

The classification performance of SNCNN is compared with that of the existing techniques. The image is first enhanced using a pre-processing approach, the relevant features are extracted through segmentation and feature selection, and the resulting data are sent to the classification phase.

Pre-Processing

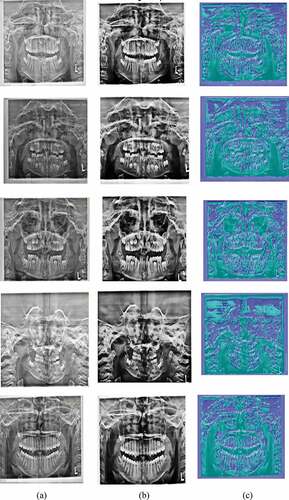

In this article, image pre-processing is performed by the Anisotropic Diffusion Filter (ADF) with a speckle filter (SF). The output of pre-processing is shown in the corresponding figure.

The input image and the pre-processed image are shown in the corresponding figure panels. Pre-processing with ADF and SF strongly preserves edge information while removing noise, producing the enhanced image shown there.

Segmentation – Deep CNN

Segmentation simplifies the image, which makes it easier to analyze. The segmentation is performed with the DCNN technique, as shown in the corresponding figure.

The corresponding figure illustrates segmentation of the dental images with the DCNN approach, which makes the classification process reliable. The first panel depicts the input image, 5(b) depicts the pre-processed image, and 5(c) depicts the segmented image.

Classification of Age

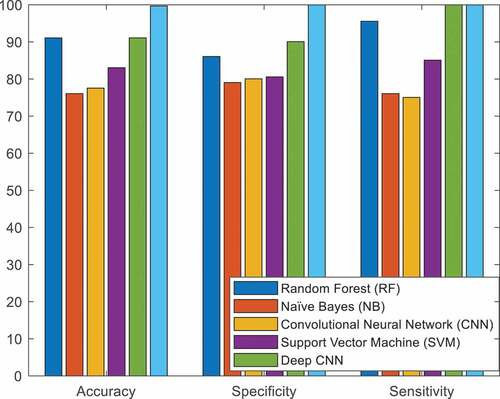

The pre-processed and segmented dental image is classified for chronological age identification using the feature vectors obtained from the neuro multi-kernel SVM. The classification performance of SN-CNN is given in the corresponding table and figure.

Table 1. Comparison of age classification

The age classification performance of the proposed SNCNN is compared with that of the existing techniques. The classification accuracy of SNCNN is higher than that of RF, NB, CNN, SVM, and DCNN by 8.6%, 23.6%, 22.1%, 16.6%, and 8.6%, respectively. Its sensitivity is higher than that of RF, NB, CNN, SVM, and DCNN by 14%, 21%, 20%, 19.5%, and 10%, respectively. Its specificity is higher than that of RF, NB, CNN, and SVM by 4.5%, 24%, 25%, and 15%, respectively, and equal to that of DCNN. Overall, the proposed SNCNN outperforms the existing techniques in age classification.

Classification of Gender

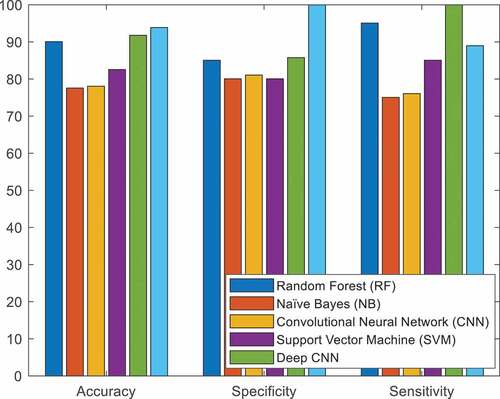

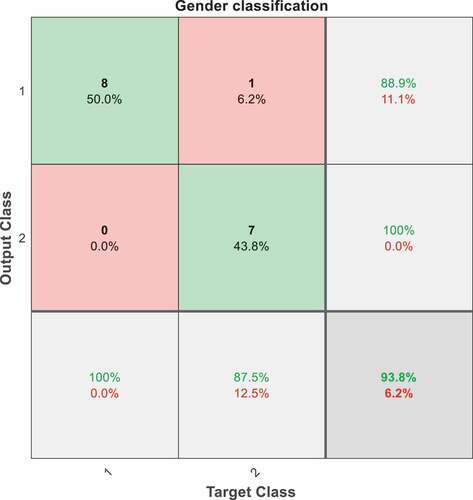

The pre-processed and segmented dental image is classified for gender identification. For gender classification, 12 images are considered (5 males and 7 females), and 11 of them are correctly classified. Gender classification uses the features obtained from the DCNN. The classification performance of SN-CNN is given in the corresponding table and figure.

Table 2. Comparison of gender classification

The gender classification performance of the proposed SNCNN is compared with that of the existing techniques. The classification accuracy of SNCNN is higher than that of RF, NB, CNN, SVM, and DCNN by 3.8%, 16.3%, 15.8%, 11.3%, and 2.1%, respectively. Its sensitivity is higher than that of RF, NB, CNN, SVM, and DCNN by 15%, 20%, 19%, 20%, and 14.3%, respectively. Its specificity is higher than that of NB, CNN, and SVM by 13.9%, 12.9%, and 3.9%, respectively. Overall, the proposed SNCNN outperforms the existing techniques in gender classification.

The corresponding figure illustrates the trade-off between the TP rate (sensitivity) and the TN rate (specificity) for the different classes.

The corresponding figure visualizes the SN-CNN classification, and a confusion matrix summarizes the predictions by giving the counts of correct and incorrect classifications for each class.
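A confusion matrix of this kind is simple to tabulate. In the sketch below, rows are true classes and columns are predicted classes; the class names and predictions are hypothetical, and the per-class recall read off each row is an added illustration rather than a quantity reported in the paper.

```python
def confusion_matrix(y_true, y_pred, classes):
    """Count predictions: rows are true classes, columns are predicted classes."""
    index = {c: k for k, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def per_class_recall(matrix):
    """Diagonal count divided by row total: share of each true class found."""
    return [row[k] / sum(row) if sum(row) else 0.0 for k, row in enumerate(matrix)]


if __name__ == "__main__":
    classes = ["male", "female"]
    y_true = ["male", "male", "female", "female", "female"]
    y_pred = ["male", "female", "female", "female", "female"]  # hypothetical
    cm = confusion_matrix(y_true, y_pred, classes)
    print(cm, per_class_recall(cm))
```

Correct classifications lie on the diagonal and every off-diagonal count is a specific kind of error, so the same table yields accuracy, sensitivity, and specificity for any class treated as positive.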

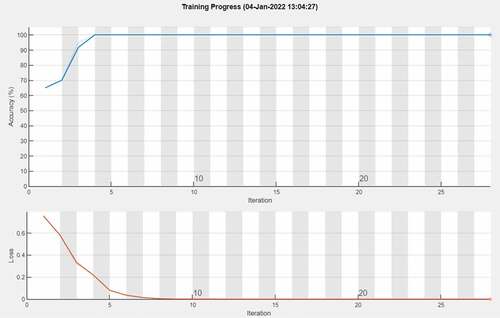

The corresponding figure presents the accuracy and the loss (error) of SN-CNN over training iterations. Iteration 2 shows an improvement in accuracy, whereas iteration 1 shows a decrease in loss. SN-CNN performance improves as the loss is reduced, and successful classification of age and gender is achieved. The accuracy of the classifier is measured, and the loss function is used to optimize performance Amirzadi, Jamkhaneh, and Deiri (2021). As the loss of SN-CNN decreases, accuracy improves with further iterations, which indicates that the classifier becomes more effective as training proceeds.

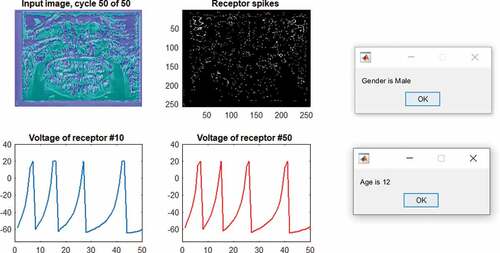

The corresponding figure depicts age and gender classification of dental images with SN-CNN, in which the identifications are distinct and accurate.

Conclusion

This article describes an automated gender and age classification approach that does not require input from specialists. The major goal of this study is to use deep learning techniques to determine the gender and age of a child or adolescent precisely. The ADF approach is used to reduce speckle noise and enhance the OPG image. DCNN-based segmentation and the neuro multi-kernel SVM for feature vector retrieval simplify the analysis and classification procedure. The processed image and feature vectors are fed into the SNCNN classifier, which classifies age and gender effectively. The proposed SNCNN achieves a gender classification accuracy of 99.6% and an age classification accuracy of 93.8%. Compared with existing state-of-the-art techniques, the proposed SNCNN performs better.

Acknowledgments

Research Supporting Project number (RSP-2021/323), King Saud University, Riyadh, Saudi Arabia.

Disclosure Statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

References

- Acharya, A. B., and S. Mainali. 2008. Sex discrimination potential of buccolingual and mesiodistal tooth dimensions. Journal of Forensic Sciences 53 (4):790–2051. doi:10.1111/j.1556-4029.2008.00778.x.

- Akkoç, B., A. Arslan, and H. Kök. 2017. Automatic gender determination from 3D digital maxillary tooth plaster models based on the random forest algorithm and discrete cosine transform. Computer Methods and Programs in Biomedicine 143:59–65. doi:10.1016/j.cmpb.2017.03.001.

- Alkaabi, S., S. Yussof, and S. Al-Mulla. 2019. Evaluation of convolutional neural network based on dental images for age estimation. In 2019 International Conference on Electrical and Computing Technologies and Applications (ICECTA), 1–5. Ras Al Khaimah, United Arab Emirates: IEEE.

- Amirzadi, A., E. B. Jamkhaneh, and E. Deiri. 2021. A comparison of estimation methods for reliability function of inverse generalized Weibull distribution under new loss function. Journal of Statistical Computation and Simulation 91 (13):2595–2622.

- Avuçlu, E., and F. Başçiftçi. 2018. Determination age and gender with developed a novel algorithm in image processing techniques by implementing to dental X-ray images. Romanian Journal of Legal Medicine 26 (4):412–18.

- Braga, J., Y. Heuze, O. Chabadel, N. K. Sonan, and A. Gueramy. 2005. Non-adult dental age assessment: Correspondence analysis and linear regression versus bayesian predictions. International Journal of Legal Medicine 119 (5):260–74. doi:10.1007/s00414-004-0494-8.

- Connor, R., and F. A. Cardillo. 2016. Quantifying the specificity of near-duplicate image classification functions. In 11th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications. Rome, Italy.

- Dogra, A., C. K. Ahuja, and S. Kumar. 2021. A multi-modality paradigm for CT and MRI fusion with applications of quantum image processing. Concurrency and Computation: Practice and Experience 21:e6610.

- Esmaeilyfard, R., M. Paknahad, and S. Dokohaki. 2021. Sex classification of first molar teeth in cone beam computed tomography images using data mining. Forensic Science International 318:110633. doi:10.1016/j.forsciint.2020.110633.

- Foody, G. M. 2008. Harshness in image classification accuracy assessment. International Journal of Remote Sensing 29 (11):3137–58. doi:10.1080/01431160701442120.

- Galibourg, A., S. Cussat-Blanc, J. Dumoncel, N. Telmon, P. Monsarrat, and D. Maret. 2021. Comparison of different machine learning approaches to predict dental age using Demirjian’s staging approach. International Journal of Legal Medicine 135 (2):665–75. doi:10.1007/s00414-020-02489-5.

- Guo, Y. C., Y. H. Wang, A. Olze, S. Schmidt, R. Schulz, H. Pfeiffer, and A. Schmeling. 2020. Dental age estimation based on the radiographic visibility of the periodontal ligament in the lower third molars: Application of a new stage classification. International Journal of Legal Medicine 134 (1):369–74. doi:10.1007/s00414-019-02178-y.

- Hemalatha, B., and N. Rajkumar. 2021. A modified machine learning classification for dental age assessment with effectual ACM-JO based segmentation. International Journal of Bio-Inspired Computation 17 (2):95–104. doi:10.1504/IJBIC.2021.114089.

- Houssein, E. H., N. Mualla, and M. R. Hassan. 2020. Dental age estimation based on X-ray images. Computers, Materials & Continua 62 (2):591–605. doi:10.32604/cmc.2020.08580.

- Jeyaraj, P. R., and E. R. S. Nadar. 2019. Computer-assisted medical image classification for early diagnosis of oral cancer employing deep learning algorithm. Journal of Cancer Research and Clinical Oncology 145 (4):829–37. doi:10.1007/s00432-018-02834-7.

- Keshtkar, F., and W. Gueaieb. 2006. Segmentation of dental radiographs using a swarm intelligence approach. In 2006 Canadian Conference on Electrical and Computer Engineering, 328–31. Ontario, Canada: IEEE.

- Krishnakumar, S., and K. Manivannan. 2021. Effective segmentation and classification of brain tumor using rough K means algorithm and multi kernel SVM in MR images. Journal of Ambient Intelligence and Humanized Computing 12 (6):6751–60. doi:10.1007/s12652-020-02300-8.

- Lai, Y. H., and P. L. Lin. 2008. Effective segmentation for dental X-ray images using texture-based fuzzy inference system. In International Conference on Advanced Concepts for Intelligent Vision Systems, 936–47. Berlin, Heidelberg: Springer.

- Lin, P. L., Y. H. Lai, and P. W. Huang. 2010. An effective classification and numbering system for dental bitewing radiographs using teeth region and contour information. Pattern Recognition 43 (4):1380–92. doi:10.1016/j.patcog.2009.10.005.

- Maier, A., C. Syben, T. Lasser, and C. Riess. 2019. A gentle introduction to deep learning in medical image processing. Zeitschrift für Medizinische Physik 29 (2):86–101. doi:10.1016/j.zemedi.2018.12.003.

- Monga, V., Y. Li, and Y. C. Eldar. 2021. Algorithm unrolling: Interpretable, efficient deep learning for signal and image processing. IEEE Signal Processing Magazine 38 (2):18–44. doi:10.1109/MSP.2020.3016905.

- Narkhede, S. 2018. Understanding auc-roc curve. Towards Data Science 26:220–27.

- Pandey, P., A. Bhan, M. K. Dutta, and C. M. Travieso. 2017. Automatic image processing based dental image analysis using automatic Gaussian fitting energy and level sets. In 2017 International Conference and Workshop on Bioinspired Intelligence (IWOBI), 1–5. Noida, India: IEEE.

- Tuan, T. M., H. Fujita, N. Dey, A. S. Ashour, V. T. N. Ngoc, and D. T. Chu. 2018. Dental diagnosis from X-ray images: An expert system based on fuzzy computing. Biomedical Signal Processing and Control 39:64–73. doi:10.1016/j.bspc.2017.07.005.

- Vila-Blanco, N., M. J. Carreira, P. Varas-Quintana, C. Balsa-Castro, and I. Tomas. 2020. Deep neural networks for chronological age estimation from OPG images. IEEE Transactions on Medical Imaging 39 (7):2374–84. doi:10.1109/TMI.2020.2968765.

- Wolf, T. G., B. Briseño-Marroquín, A. Callaway, M. Patyna, V. T. Müller, I. Willershausen, and B. Willershausen. 2016. Dental age assessment in 6-to 14-year old German children: Comparison of Cameriere and Demirjian methods. BMC Oral Health 16 (1):1–8. doi:10.1186/s12903-016-0315-8.

- Wolfart, S., H. Menzel, and M. Kern. 2004. Inability to relate tooth forms to face shape and gender. European Journal of Oral Sciences 112 (6):471–76. doi:10.1111/j.1600-0722.2004.00170.x.